The Security of IP-Based Video Surveillance Systems

Abstract

1. Introduction

- Threat Actors (Who)

- There is a set of attackers who want to exploit the functionality of surveillance systems specifically. Examples include state actors or thieves performing reconnaissance over a geographic area, and criminals planning to blackmail a victim with video footage.

- Assets (What)

- If compromised, these systems can provide an attacker with private imagery, resulting in a direct, explicit violation of privacy. These systems are also lucrative assets to botnet owners since they typically have high bandwidth (for DDoS attacks) and decent compute capabilities (for cryptomining). The features of a surveillance system change the weight of the attacker’s goals and the defender’s priorities. For example, there is more emphasis on defending against DoS and MitM attacks in surveillance systems than in other systems. Overall, the privacy violation caused by exposed video data has much stronger implications than that of data exposed from other IoTs.

- Topology (Where)

- Unlike other IoTs, surveillance systems are often centralized systems connected to a single server. They are also commonly connected to both the Internet and an internal private network, thus exposing a potential infiltration vector. It is also common for surveillance systems to have their server physically on-site, as opposed to being in the cloud. These aspects change the attack surface by encouraging the attacker to find other ways to compromise the system (e.g., via local attacks).

- Motivation (Why)

- Aside from being a stepping stone into another network, surveillance systems attract attackers with monetary motivations (such as blackmail and cryptomining) as well as military or political ones (spying). Moreover, an attacker can gain a physical advantage if the system is targeted in a DoS attack, for example, by stopping video feeds in certain geographic areas prior to an attack/theft, or as an act of cyber terrorism. Another aspect to consider is that a DoS attack on a generic IoT device or information system is a nuisance, whereas on a surveillance system it means that the attacker can remotely disable video feeds at will. For example, a VPN router in the surveillance system can be targeted remotely, causing a loss of signal to all cameras.

- Attack Vectors (How)

- Surveillance systems have unique security flaws and attacks due to their functionality. Some examples include (1) servers which accept self-signed certificates (which can lead to man-in-the-middle attacks) just to be compatible with many different camera models from various vendors, (2) their unique susceptibility to side channel attacks on encrypted traffic due to the nature of video compression algorithms, (3) video injection attacks where a clip of footage is played back in a loop to cover up a crime, and (4) data exfiltration performed via a camera’s infrared night vision sensor. Moreover, modern systems rely on machine learning algorithms to identify and track objects and people. Unlike AI on other IoTs, these AI models can be easily evaded/exploited due to their accessibility and flaws [10].

2. System Overview

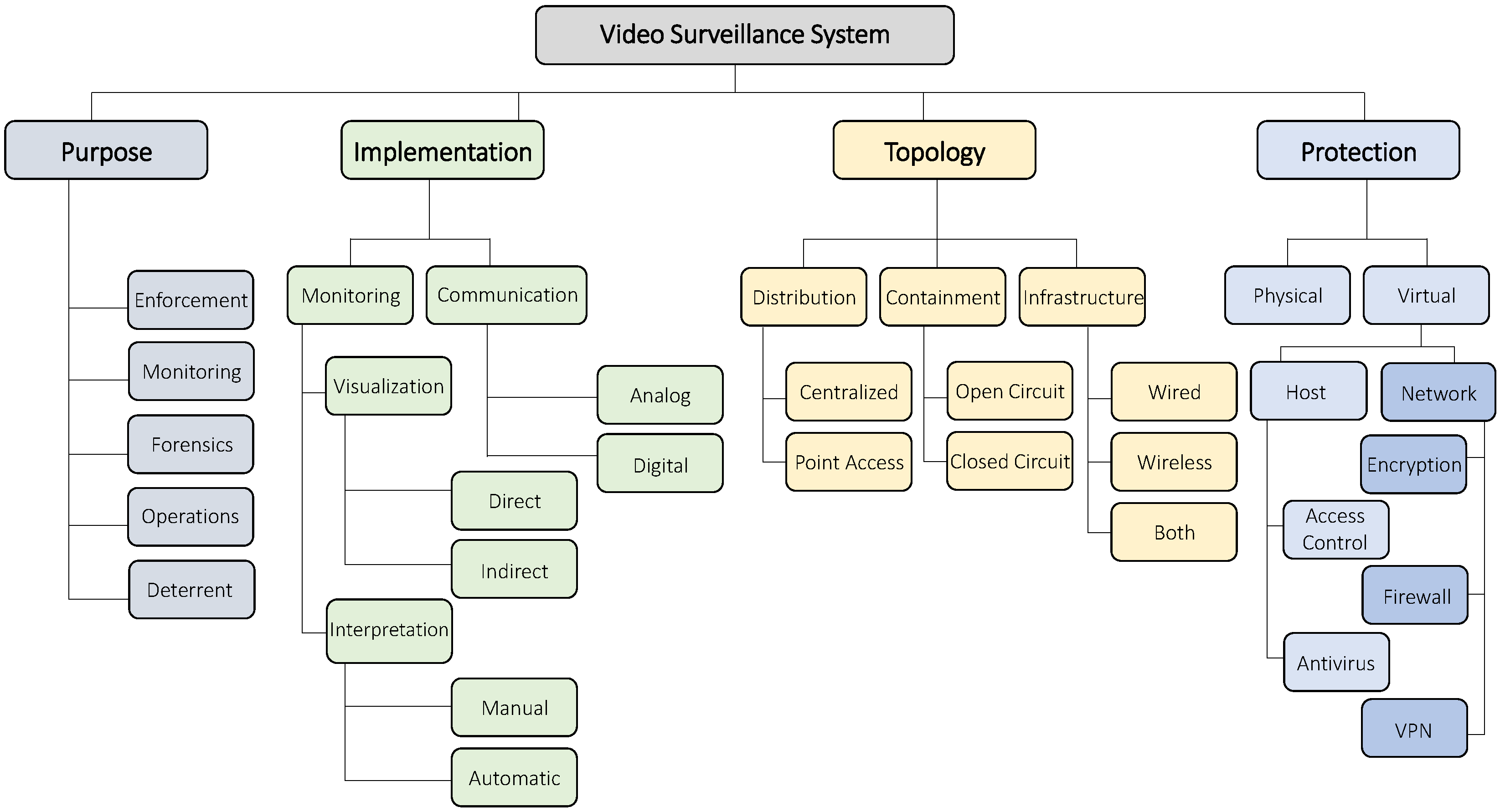

2.1. Overview

- Purpose

- The purpose of a video surveillance system depends on the user’s needs.

- Enforcement

- The user may want to send security forces or police to an area undergoing some violation of law or protocol. This is common in governments, transportation services, stores, and even workplaces.

- Monitoring

- The user may want to know what is happening in a certain location for some general purpose, or to have a sense of security—for example, home, baby, and pet monitoring.

- Forensics

- The user may want to be able to produce evidence or track down an individual.

- Operations

- The user may want to improve operations by having an overview of what is going on. For example, employees can be guided or managed more efficiently.

- Deterrent

- The user may want to have the system visually present to simply ward off potential offenders or trespassers. In some cases, the user will not even have a means of viewing the video footage.

- Implementation

- There are various ways the hardware/software of the system can be set up to collect and interpret the video footage. We divide the system’s implementation into two categories:

- Monitoring

- This concept regards how the user visualizes the video streams, and how the content is interpreted. The visualization can be provided to the user directly, such as in a closed circuit monitoring station, or indirectly via a digital video recorder (DVR) with remote access or in the cloud. The interpretation of the content can be done manually by a human user reviewing the content, or automatically via motion detection or advanced applications such as object tracking, image recognition, face detection, and event detection.

- Communication

- This refers to the means by which the system transports the video feeds [1]. With analog methods, the video is sent as an analog signal to the DVR (which is subsequently connected to the Internet). With digital methods, the video is processed, compressed, and then sent as a packet stream to the DVR via the IPv4 or IPv6 network protocol. A common approach is to compress the stream with the H.264 codec and then send it over the network with a real-time protocol such as RTP over UDP.

- Topology

- An IP-based surveillance system’s topology can be described by its distribution, containment, and infrastructure. Distribution refers to whether the cameras are located anywhere in the world or physically located in one area. Containment refers to whether the system is closed circuit (not connected to the Internet) or open circuit, in which case it relies on access control to deny users without proper credentials. Finally, infrastructure refers to how the elements of the system are connected together: wireless (e.g., Wi-Fi), wired (e.g., Ethernet via CAT6 cables), or both.

- Protection

- The protection of a surveillance system refers to how the user secures physical and virtual access to the system’s assets and services. Without physical protection, an attacker can tamper with or damage the cameras, or install his/her own equipment on the network. Virtual protection can be employed on the network hosts or on the network itself:

- Host

- Cameras, DVRs, and other devices can be protected by using proper access control mechanisms. However, like any computer, these devices are subject to the exploitation of known and unknown vulnerabilities in their software or hardware, or of simple user misconfigurations (e.g., default credentials) [4]. Protecting the hosts from attacks may involve anti-virus software or other techniques.

- Network

- Depending on the topology, access to the system’s devices may be gained via the DVR, an Internet gateway, or directly via the Internet. A user may protect the devices and the system as a whole by securing the network via encryption, firewalls, and end-to-end virtual private network (VPN) connections.
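
To make the Communication item above more concrete, the following minimal Python sketch decodes the 12-byte fixed RTP header that precedes each chunk of video payload when a stream is sent as RTP over UDP. The field layout follows the RTP specification (RFC 3550); the `RtpHeader` and `parse_rtp_header` names, the sample packet, and the use of payload type 96 (a dynamic payload type often negotiated for H.264) are assumptions made for illustration and are not taken from the survey.

```python
import struct
from dataclasses import dataclass


@dataclass
class RtpHeader:
    version: int
    padding: bool
    extension: bool
    csrc_count: int
    marker: bool
    payload_type: int
    sequence_number: int
    timestamp: int
    ssrc: int


def parse_rtp_header(packet: bytes) -> RtpHeader:
    """Decode the 12-byte fixed RTP header (RFC 3550) of a UDP payload."""
    if len(packet) < 12:
        raise ValueError("RTP packet shorter than the 12-byte fixed header")
    # Network byte order: two flag bytes, 16-bit sequence number,
    # 32-bit timestamp, 32-bit synchronization source (SSRC) identifier.
    b0, b1, seq, ts, ssrc = struct.unpack("!BBHII", packet[:12])
    return RtpHeader(
        version=b0 >> 6,
        padding=bool(b0 & 0x20),
        extension=bool(b0 & 0x10),
        csrc_count=b0 & 0x0F,
        marker=bool(b1 & 0x80),
        payload_type=b1 & 0x7F,
        sequence_number=seq,
        timestamp=ts,
        ssrc=ssrc,
    )


# Hypothetical packet: version 2, marker bit set, payload type 96.
sample = struct.pack("!BBHII", 0x80, 0x80 | 96, 4711, 90000, 0x1234ABCD) + b"payload"
header = parse_rtp_header(sample)
print(header)
```

Note that none of these header fields are encrypted in plain RTP, which is one reason the network-level protections listed above (encryption, VPN) matter for video traffic.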

2.2. Assets

- DVR—Media Server

- The digital video recorder, or other media server, which is responsible for receiving, storing, managing, and viewing the recorded/archived video feeds. DVRs are typically an application running on the user’s server, or a custom hardware Linux box. DVRs can also be a cloud based server. In a small system, there may be cameras which do not support a DVR, and require the user to connect to the camera directly (e.g., via web interface).

- Cameras

- The devices which capture the video footage. There are many types, brands, and models of IP-Cameras, and each has its own capabilities, functionalities, and vulnerabilities. For configuration, some IP cameras provide network-accessible interfaces (HTTP, Telnet, etc.) while others connect to a server in the cloud. Most cameras act as web servers which provide video content to authorized clients (e.g., the DVR will connect to the camera as a client).

- Viewing Terminal

- The device/application used to connect to the DVR or camera in order to view and manage the video content. For example, an Android application running on a smartphone or the DVR itself.

- Network Infrastructure

- The elements which connect the cameras to the DVR, and the DVR to the user’s viewing terminal (for example, routers, switches, and cables). The infrastructure also includes Virtual Private Network (VPN) equipment and links. VPNs are LANs which tunnel Layer 2 (Ethernet) traffic across the Internet, between gateways and user devices, using encryption. Site-to-site VPNs can bridge two segments of the surveillance network over the Internet. A remote-site connection tunnels traffic directly from a user’s terminal to the surveillance network.

- Video Content

- The video feeds which are being recorded or that have been archived for later viewing.

- User Credentials

- The usernames, passwords, cookies, and authentication tokens used to gain access to the DVR, cameras, and routers. The credentials are used to authenticate users and determine access permissions of video content, device configurations, and other assets.

- Network Traffic—Data in Motion

- Data being transmitted over the network infrastructure. This can be credentials, video content, system control data [12] (e.g., pan, tilt, or zoom), and the traffic of other network protocols (ARP, DNS, HTTP, SSL, TCP, UDP, etc.).

2.3. Deployments

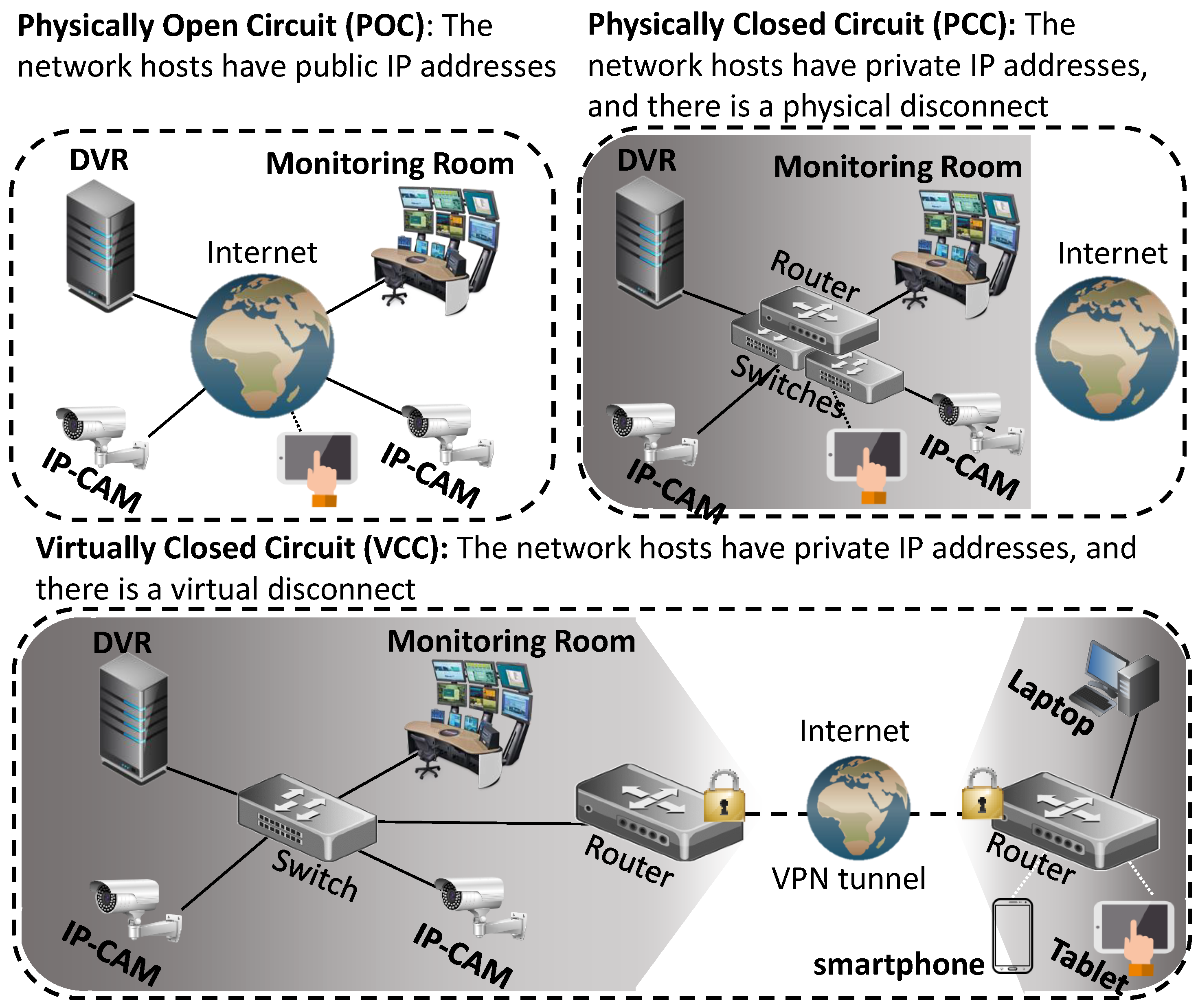

- Physically Open Circuit (POC)

- When the network hosts in the system (cameras, DVR, etc.) have public IP addresses. This means that anybody from the Internet can send packets to the devices.

- Physically Closed Circuit (PCC)

- When the network hosts in the system have private IP addresses, and there is no infrastructure which connects the network to the Internet. This means that no one from the Internet can send packets to the devices directly. These systems are also called air-gapped networks [13].

- Virtually Closed Circuit (VCC)

- When the network hosts in the system have private IP addresses, and the network is connected over the Internet using a VPN. This means that no one from the Internet can send packets to the devices directly, unless they send packets via the VPN.
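
The three deployment types above can be expressed as a small decision rule. The sketch below is only an illustration under stated assumptions: the function name `classify_deployment` and its parameters are hypothetical, and Python's `ipaddress.is_private` is used as a stand-in for "has a private IP address". Deployments that match none of the three definitions (for instance, private addresses reachable from the Internet without a VPN) are reported as unclassified rather than forced into a category.

```python
import ipaddress


def classify_deployment(host_ips, internet_connected, uses_vpn):
    """Map a deployment to POC, PCC, or VCC following the definitions above.

    host_ips: addresses of the cameras, DVR, and other hosts (strings).
    internet_connected: True if any infrastructure links the network
        to the Internet.
    uses_vpn: True if that link is made through a VPN.
    """
    hosts = [ipaddress.ip_address(ip) for ip in host_ips]
    # Physically Open Circuit: at least one host has a public address.
    if any(not h.is_private for h in hosts):
        return "POC"
    # Physically Closed Circuit: private addresses and no Internet link.
    if not internet_connected:
        return "PCC"
    # Virtually Closed Circuit: private addresses reached through a VPN.
    if uses_vpn:
        return "VCC"
    # Not covered by the three definitions (assumption of this sketch).
    return "unclassified"


print(classify_deployment(["8.8.8.8"], True, False))
print(classify_deployment(["192.168.1.10", "192.168.1.11"], False, False))
print(classify_deployment(["10.0.0.5"], True, True))
```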

2.4. Surveillance Systems vs. IoTs

2.4.1. The Technological Perspective

- Architecture.

- In security, we must consider the topology and relationship between components and not just the individual devices. Some elements only become vulnerable because of their relationship to others. In the case of IoTs, these devices often follow the same cloud-based model, whereas surveillance systems have a variety of architectures and topologies which, if disregarded, can lead to severe vulnerabilities. For example, a camera may be safe from remote attacks because its traffic is sent over a VPN, but the DVR may be exposed because of its web accessibility. Another example is that the communication channels of the cameras (wired or wireless) are often exposed and cross over publicly accessible areas, giving the attacker more opportunities to infiltrate the network than in the case of IoTs. In this survey, we exemplify the architectures unique to surveillance systems compared to the IoT cloud model, and the strategies which attackers may use to exploit them.

- Artificial Intelligence.

- In the case of IoTs, machine learning is often performed on the back-end for data-mining purposes. Surveillance systems, in contrast, are exposed to image-based attacks on their models (evasion, false evidence, DoS) that can lead to significant breaches in safety, privacy, and justice, unlike other IoTs. For example, an attacker can use adversarial markings on their clothes to evade detection, delay investigations (by planting false triggers), or be identified as legitimate personnel. Recently, researchers have shown how attackers can craft images which incur significant overhead on a model, causing the device to slow down in an effective DoS attack [14]. These attacks uniquely affect surveillance systems since the AI features provide attackers with new vectors towards accomplishing their goals and undermining the purpose of the system.

2.4.2. The Attack Surface Perspective

- How.

- Consider a DoS attack. The classic way for an attacker to perform this attack on IoTs would be to overload the device with traffic. On a surveillance system, doing so would alert the local authorities to the camera’s area because the feed would go down. Here, however, the attacker is clearly motivated to be covert so as not to alert the authorities to his or her presence. Therefore, the DoS would likely be in the form of (1) looping video content, (2) freezing the current frame, (3) targeting the DVR to manipulate the content, since the DVR may be more exposed by its web portal, or (4) using an adversarial machine learning attack to evade detection (e.g., hiding the fact that there is a person in the scene through use of an adversarial sticker). Another consideration is that IP-cameras generate massive amounts of network traffic compared to other IoTs. This makes it very easy to detect disruptions from attacks, thus challenging the attacker to compromise the devices in other ways (e.g., preferring to obtain credentials over rooting the device).

- Where.

- Consider a data theft attack. The classic way of performing this attack would be through remote social engineering attacks (e.g., phishing) to access credentials or to exploit vulnerabilities directly in the IoT device itself to gain privileged access. However, in a surveillance system that is completely VPN-based, remotely targeting the device is not possible and the social engineering attacks may not provide access (e.g., in the case of time-based rotating keys). Rather, it is more likely that the attacker will perform a physical social engineering attack (e.g., plant a USB drive) or physically tamper with the network infrastructure to plant a man in the middle device. Distinguishing the difference is critical since it will not only indicate where emphasis in security design must be made but also determine where countermeasures should be placed (e.g., placing an NIDS by the cameras and not just at the web portal or DVR) and emphasized (e.g., to bury and lock up cables, even in ‘private’ hallways inside the building).

- Why.

- Consider how the system could be leveraged. An IoT device may be targeted for the information or resources that it contains. However, there is more risk of a camera being compromised for its capabilities (observing the video footage) or to act as a stepping stone in a grander attack. For the latter case, consider data exfiltration: cameras may be used to exfiltrate data past an advanced firewall since the cameras are always sending large amounts of data in real time. Here the attacker can ride this current undetected (below the noise floor), whereas the traffic of other IoTs is typically sparse and easier to model. Another case to consider is the fact that IP-Cameras produce far more traffic (bandwidth) than other IoTs and therefore may be targeted for the purpose of creating massive DDoS attacks. We note that the risk of an attack on an IP-surveillance system is higher than for other categories of IoT because these systems (1) are widespread, (2) provide a high payoff for the attacker (stronger processors than other IoTs for Bitcoin mining, etc., and direct access to confidential information), and (3) directly impact the safety, privacy, and integrity (e.g., planting false evidence) of the user. Therefore, understanding the ‘why’ of the attack enables the defender to know where time and resources should be put into hardening the architecture, and to prevent poor design choices in topology and access control.
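
The covert DoS forms listed under "How" (looping video content or freezing the current frame) suggest a simple consistency check on the defender's side. The following sketch is not a method from the survey: it assumes access to raw frames as `bytes`, and it assumes that sensor noise makes bit-identical frames essentially impossible in genuine live footage, so an exact repeat within a recent window hints at a frozen or replayed feed. The function name `find_replayed_frames` and the default window size are hypothetical. An attacker who re-encodes or perturbs the replayed clip would evade this check.

```python
import hashlib
from collections import deque


def find_replayed_frames(frames, window=250):
    """Return indices of frames that exactly repeat a frame seen within
    the last `window` frames (a hint of freezing or looping)."""
    recent = deque()   # digests of the last `window` frames, in order
    counts = {}        # digest -> number of occurrences inside the window
    flagged = []
    for index, frame in enumerate(frames):
        digest = hashlib.sha256(frame).digest()
        if counts.get(digest, 0) > 0:
            flagged.append(index)
        recent.append(digest)
        counts[digest] = counts.get(digest, 0) + 1
        # Forget digests that fall out of the sliding window.
        if len(recent) > window:
            oldest = recent.popleft()
            counts[oldest] -= 1
            if counts[oldest] == 0:
                del counts[oldest]
    return flagged


# Toy byte strings standing in for raw frames.
looped = [b"f1", b"f2", b"f3", b"f1", b"f2", b"f3"]
frozen = [b"f1", b"f1", b"f1"]
print(find_replayed_frames(looped))
print(find_replayed_frames(frozen))
```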

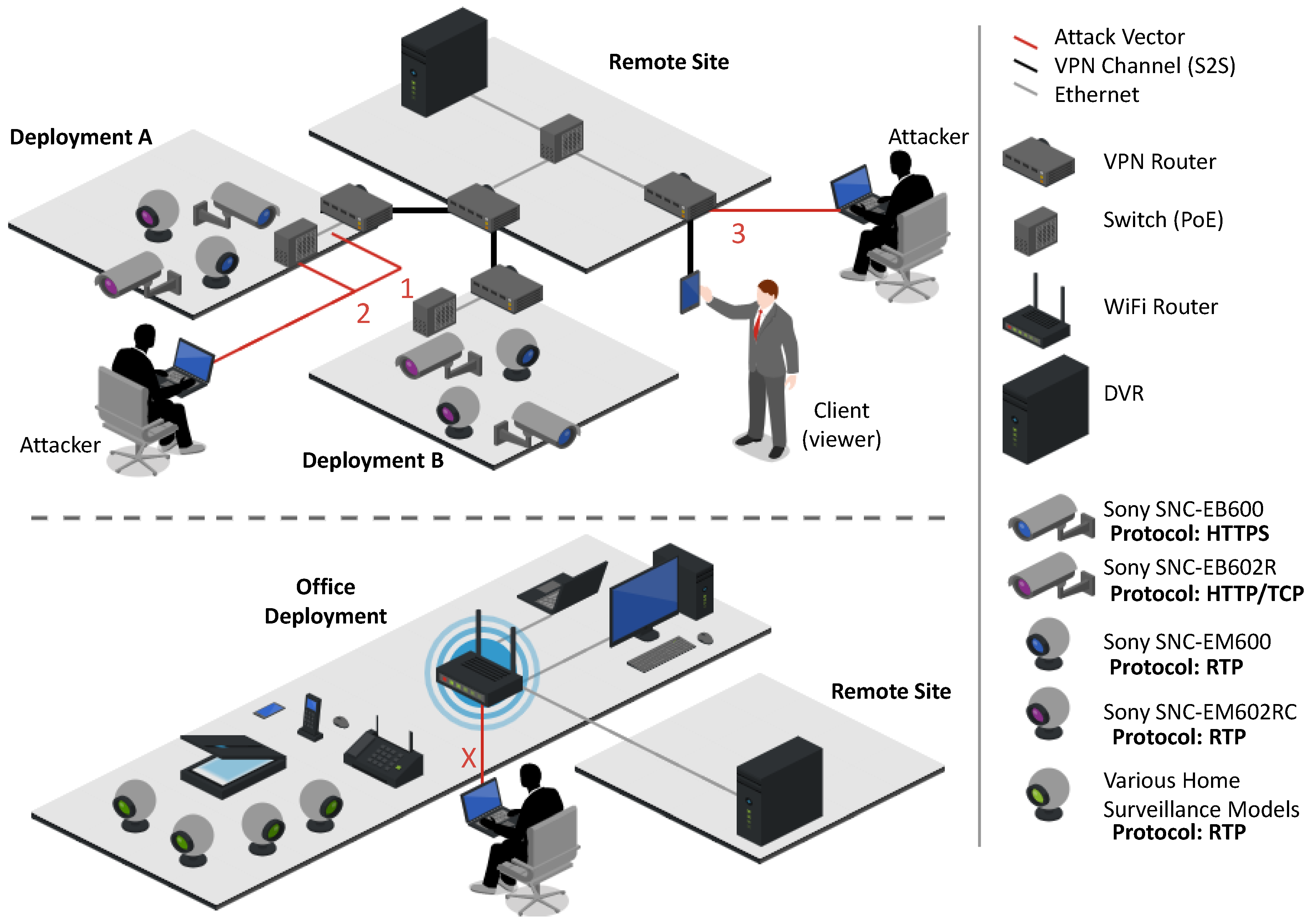

2.5. The Surveillance System Testbed

3. Security Violations

- Confidentiality Violation—the unauthorized access of video content, user credentials, or network traffic. In this case, the attacker intends to observe the video footage for his/her own nefarious purposes. As a result, this goal puts the privacy and physical security of the premises at risk.

- Integrity Violation—the manipulation of video content, or active interference with secure channels in the system (e.g., the POODLE SSL downgrade attack). In this case, the attacker intends to alter the video content (at rest or in transit). Alteration can include freezing a frame, looping an archived clip, or inserting some other content. This misinformation can lead to physical harm or theft. An attacker may also violate a system’s integrity for a goal which is not directly related to the video content. For example, the attacker may want to exploit the system’s vulnerabilities to move laterally to external assets. The system may be used as a stepping stone to gain access to the following external assets:

- (a)

- Internal network—surveillance systems (especially closed circuit systems) may be connected to the organization’s internal network for management purposes. An attacker may leverage this link in order to gain access to the organization’s internal assets.

- (b)

- Users—users of the system may be targeted by the attacker. For example, the attacker may wish to install ransomware on the viewing terminal, or to hijack a user’s personal accounts.

- (c)

- Recruiting a Botnet—A ‘bot’ is an automated process running on a compromised computer which receives commands from a hacker via a command and control (C&C) server. A collection of bots is referred to as a botnet, and is commonly used for launching DDoS attacks, mining cryptocurrencies, manipulating online services, and performing other malicious activities. An example of a botnet which infected IP-cameras and DVRs is the Mirai malware botnet. In 2016, the Mirai botnet generated a 1.1 Tbps DDoS attack against websites, webhosts, and service providers. Another example is a worm named Linux.Darlloz which targets vulnerable devices and exploits them through a PHP vulnerability (CVE-2012-1823) [16].

- Availability Violation—the denial of access to stored or live video feeds. In this case, the attacker’s goal is to (1) disable one or more camera feeds (hide activity), (2) delete stored video content (remove evidence), or (3) launch a ransomware attack (earn money)—for example, the attack on Washington DC’s surveillance system in 2017 [17].

4. Attacks

- Threat Agent/Actor.

- The person, device, or code which performs an attack step on behalf of the attacker.

- Threat Action.

- The malicious activity which an agent can perform at each step (access, misuse, modify, etc.).

- Threat Consequence/Outcome.

- What the attacker obtains at the successful completion of an attack step.

- Attack Goal.

- The ultimate outcome which the attacker is trying to achieve (at the end of the attack vector).
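
The four terms above can be read as a small data model: an attack vector is an ordered list of steps, each pairing an agent with an action and the resulting consequence, and the vector ends in a goal. The sketch below shows one possible encoding. The class names `AttackStep` and `AttackVector` are hypothetical, and the two-step chain is an illustrative combination of labels from Sections 4.1 to 4.4, not an attack vector documented in the survey.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass(frozen=True)
class AttackStep:
    agent: str        # threat agent/actor performing the step
    action: str       # threat action carried out in the step
    consequence: str  # what the attacker obtains when the step succeeds


@dataclass
class AttackVector:
    goal: str                                   # the attack goal
    steps: List[AttackStep] = field(default_factory=list)

    def add_step(self, agent: str, action: str, consequence: str) -> "AttackVector":
        self.steps.append(AttackStep(agent, action, consequence))
        return self  # allow chained calls

    def describe(self) -> str:
        lines = [
            f"{n}. {s.agent} -> {s.action} -> {s.consequence}"
            for n, s in enumerate(self.steps, start=1)
        ]
        lines.append(f"Goal: {self.goal}")
        return "\n".join(lines)


# Illustrative chain only (assumption of this sketch).
vector = (
    AttackVector(goal="Unauthorized Video Monitoring")
    .add_step("Hacker", "Performing a Brute-Force Attack", "Privilege Escalation")
    .add_step("Hacker", "Exploiting a Misconfiguration", "Access to Video Footage")
)
print(vector.describe())
```

Keeping each step as an (agent, action, consequence) triple makes it straightforward to enumerate which countermeasure would break the chain at which step.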

4.1. Threat Agents

- Hacker—An individual who is experienced at exploiting computer vulnerabilities, whose unauthorized activities violate the system’s security policies. A Hacker can be in a remote location (i.e., the Internet) or in close proximity to the physical network.

- Network Host—A computer connected to the system’s network which is executing malicious code. The computer can be an IP-camera, DVR, or any programmable device in the network. A network host can become a threat actor via local exploitation (the intentional or unintentional installation of malware through social engineering or insiders), remote exploitation (e.g., exploiting a web server vulnerability or an open Telnet server), or a supply chain attack [18].

- Insider—An authorized user of the system who is the attacker or colluding with the attacker [19]. The insider may be a regular user (e.g., security officer), an IT support member, or even the system’s administrator. An insider may directly perform the entire attack, or enable a portion of an attack vector by installing malware, changing access permissions, etc.

4.2. Threat Actions

4.2.1. Performing Code Injection

4.2.2. Manipulating/Observing Traffic

4.2.3. Exfiltrating Information

4.2.4. Flooding and Disrupting

4.2.5. Scanning and Reconnaissance

4.2.6. Exploiting a Misconfiguration

4.2.7. Performing a Brute-Force Attack

4.2.8. Social Engineering

4.2.9. Physical Access

4.2.10. Supply Chain

4.2.11. Reverse Engineering

4.2.12. Adversarial Machine Learning

4.3. Threat Consequence

4.3.1. Privilege Escalation

4.3.2. Access to Video Footage

4.3.3. Arbitrary Code Execution (ACE)

4.3.4. Installation of Malware

4.3.5. Lateral Movement

4.3.6. Man in the Middle (MiTM)

4.3.7. Denial-of-Service (DoS)

4.3.8. Access to an Isolated Network

4.3.9. Covert Exfiltration Channel

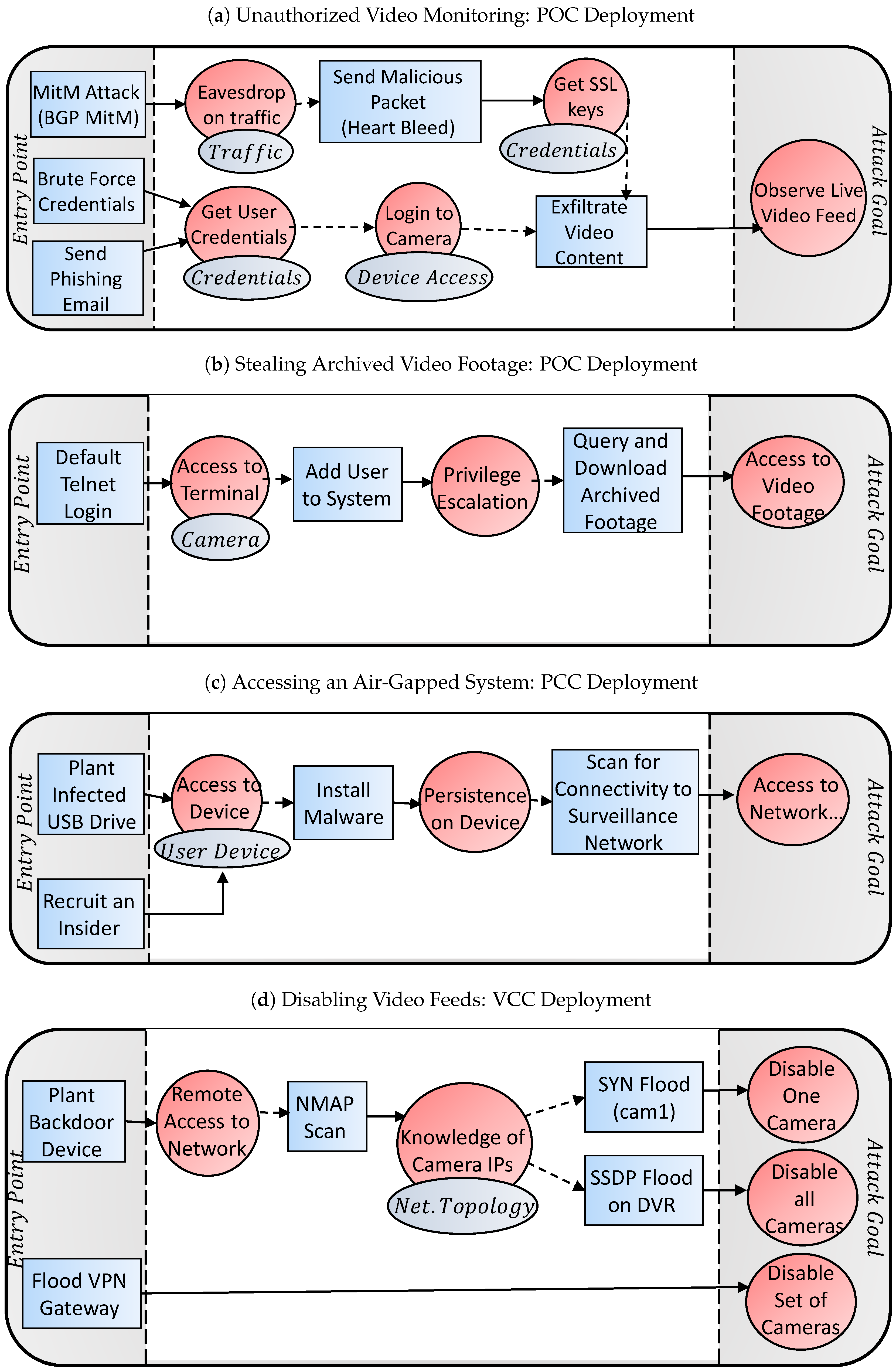

4.4. Example Attack Vectors

4.4.1. Unauthorized Video Monitoring

4.4.2. Stealing Archived Video Footage

4.4.3. Accessing an Air-Gapped System

4.4.4. Disabling Video Feeds

5. Countermeasures and Best Practices

5.1. Intrusion Detection and Prevention Systems

5.1.1. Configurations and Encryption

5.1.2. Restrict Physical Access

5.1.3. Defense against DoS Attacks

5.1.4. Defense against MitM Attacks

5.1.5. Defense against Adversarial Machine Learning Attacks

5.1.6. Education

6. Future Work & Conclusions

Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Cabasso, J. Analog vs. IP cameras. Aventura Technol. Newsl. 2009, 1, 1–8.

- Statista. Security & Surveillance Technology Statistics & Facts. Technical Report. 2015. Available online: https://www.statista.com/topics/2646/security-and-surveillance-technology/ (accessed on 21 August 2020).

- Mukkamala, S.; Sung, A.H. Detecting denial of service attacks using support vector machines. In Proceedings of the 12th IEEE International Conference on Fuzzy Systems, St. Louis, MO, USA, 25–28 May 2003; pp. 1231–1236.

- Antonakakis, M.; April, T.; Bailey, M.; Bernhard, M.; Bursztein, E.; Cochran, J.; Durumeric, Z.; Halderman, J.A.; Invernizzi, L.; Kallitsis, M.; et al. Understanding the Mirai botnet. In Proceedings of the USENIX Security Symposium, Vancouver, BC, Canada, 16–18 August 2017.

- Peeping into 73,000 Unsecured Security Cameras Thanks to Default Passwords. Available online: https://www.csoonline.com/article/2844283/peeping-into-73-000-unsecured-security-cameras-thanks-to-default-passwords.html (accessed on 21 August 2020).

- Dangle a DVR Online and It’ll Be Cracked in Two Minutes. 2017. Available online: https://www.theregister.com/2017/08/29/sans_mirai_dvr_research/ (accessed on 21 August 2020).

- The Internet of Things: An Overview. Available online: https://www.internetsociety.org/wp-content/uploads/2017/08/ISOC-IoT-Overview-20151221-en.pdf (accessed on 21 August 2020).

- Ling, Z.; Liu, K.; Xu, Y.; Jin, Y.; Fu, X. An end-to-end view of IoT security and privacy. In Proceedings of the GLOBECOM 2017—2017 IEEE Global Communications Conference, Singapore, 4–8 December 2017; pp. 1–7.

- Khan, M.A.; Salah, K. IoT security: Review, blockchain solutions, and open challenges. Future Gener. Comput. Syst. 2018, 82, 395–411.

- Akhtar, N.; Mian, A. Threat of adversarial attacks on deep learning in computer vision: A survey. IEEE Access 2018, 6, 14410–14430.

- Costin, A. Security of CCTV and video surveillance systems: Threats, vulnerabilities, attacks, and mitigations. In Proceedings of the 6th International Workshop on Trustworthy Embedded Devices, Vienna, Austria, 28 October 2016; pp. 45–54.

- Liu, Z.; Peng, D.; Zheng, Y.; Liu, J. Communication protection in IP-based video surveillance systems. In Proceedings of the Seventh IEEE International Symposium on Multimedia, Irvine, CA, USA, 14 December 2005; p. 8.

- Guri, M.; Elovici, Y. Bridgeware: The air-gap malware. Commun. ACM 2018, 61, 74–82.

- Sponge Examples: Energy-Latency Attacks on Neural Networks. Available online: https://arxiv.org/abs/2006.03463 (accessed on 21 August 2020).

- Kitayama, K.I.; Sasaki, M.; Araki, S.; Tsubokawa, M.; Tomita, A.; Inoue, K.; Harasawa, K.; Nagasako, Y.; Takada, A. Security in photonic networks: Threats and security enhancement. J. Lightwave Technol. 2011, 29, 3210–3222.

- Bertino, E.; Islam, N. Botnets and internet of things security. Computer 2017, 50, 76–79.

- Two Romanian Suspects Charged with Hacking of Metropolitan Police Department Surveillance Cameras in Connection with Ransomware Scheme. Available online: https://www.justice.gov/usao-dc/pr/two-romanian-suspects-charged-hacking-metropolitan-police-department-surveillance-cameras (accessed on 21 August 2020).

- Another Supply Chain Mystery: IP Cameras Ship with Malicious Software. Available online: https://securityledger.com/2016/04/another-supply-chain-mystery-ip-cameras-ship-with-malicious-software/ (accessed on 21 August 2020).

- Hunker, J.; Probst, C.W. Insiders and Insider Threats—An Overview of Definitions and Mitigation Techniques. J. Wirel. Mob. Netw. Ubiquitous Comput. Dependable Appl. 2011, 2, 4–27.

- Widely Used Wireless IP Cameras Open to Hijacking over the Internet, Researchers Say. Available online: https://www.networkworld.com/article/2165253/widely-used-wireless-ip-cameras-open-to-hijacking-over-the-internet–researchers-say.html (accessed on 21 August 2020).

- HACKING IoT: A Case Study on Baby Monitor Exposures and Vulnerabilities. Available online: https://www.rapid7.com/globalassets/external/docs/Hacking-IoT-A-Case-Study-on-Baby-Monitor-Exposures-and-Vulnerabilities.pdf (accessed on 21 August 2020).

- This POODLE Bites: Exploiting the SSL 3.0 Fallback. Available online: https://www.openssl.org/~bodo/ssl-poodle.pdf (accessed on 21 August 2020).

- Here, Come the XOR ninjas. Available online: https://tlseminar.github.io/docs/beast.pdf (accessed on 21 August 2020).

- Tekeoglu, A.; Tosun, A.S. Investigating security and privacy of a cloud-based wireless IP camera: NetCam. In Proceedings of the 2015 24th International Conference on Computer Communication and Networks (ICCCN), Las Vegas, NV, USA, 3–6 August 2015; pp. 1–6.

- Schuster, R.; Shmatikov, V.; Tromer, E. Beauty and the burst: Remote identification of encrypted video streams. In Proceedings of the 26th USENIX Security Symposium (USENIX Security 17), Vancouver, BC, Canada, 16–18 August 2017.

- VideoJak. Available online: http://videojak.sourceforge.net/index.html (accessed on 21 August 2020).

- Guri, M.; Zadov, B.; Elovici, Y. LED-it-GO: Leaking (a lot of) Data from Air-Gapped Computers via the (small) Hard Drive LED. In Proceedings of the International Conference on Detection of Intrusions and Malware, and Vulnerability Assessment, Bonn, Germany, 6–7 July 2017; pp. 161–184.

- DoS Attack with hPing3. Available online: https://linuxhint.com/hping3/ (accessed on 21 August 2020).

- Kührer, M.; Hupperich, T.; Rossow, C.; Holz, T. Exit from Hell? Reducing the Impact of Amplification DDoS Attacks. In Proceedings of the USENIX Security Symposium, San Diego, CA, USA, 20–22 August 2014; pp. 111–125.

- Backdoor in Sony Ipela Engine IP Cameras. Available online: https://sec-consult.com/en/blog/2016/12/backdoor-in-sony-ipela-engine-ip-cameras/ (accessed on 21 August 2020).

- Social Engineering Attacks. Available online: http://www.jmest.org/wp-content/uploads/JMESTN42352270.pdf (accessed on 21 August 2020).

- Pavković, N.; Perkov, L. Social Engineering Toolkit—A systematic approach to social engineering. In Proceedings of the MIPRO, 34th International Convention, Opatija, Croatia, 23–27 May 2011; pp. 1485–1489.

- Chikofsky, E.J.; Cross, J.H. Reverse engineering and design recovery: A taxonomy. IEEE Softw. 1990, 7, 13–17.

- Exploiting Surveillance Cameras. Available online: https://media.blackhat.com/us-13/US-13-Heffner-Exploiting-Network-Surveillance-Cameras-Like-A-Hollywood-Hacker-Slides.pdf (accessed on 21 August 2020).

- Shwartz, O.; Mathov, Y.; Bohadana, M.; Elovici, Y.; Oren, Y. Opening Pandora’s Box: Effective Techniques for Reverse Engineering IoT Devices. In Proceedings of the International Conference on Smart Card Research and Advanced Applications, Lugano, Switzerland, 13–15 November 2017; pp. 1–21.

- Swann Song-DVR Insecurity. 2013. Available online: http://console-cowboys.blogspot.com/2013/01/swann-song-dvr-insecurity.html (accessed on 21 August 2020).

- Optical Surgery: Implanting a Dropcam. Available online: https://www.defcon.org/images/defcon-22/dc-22-presentations/Moore-Wardle/DEFCON-22-Colby-Moore-Patrick-Wardle-Synack-DropCam-Updated.pdf (accessed on 21 August 2020).

- Looking Through the Eyes of China’s Surveillance State. 2018. Available online: https://www.nytimes.com/2018/07/16/technology/china-surveillance-state.html (accessed on 21 August 2020).

- Wang, Y.; Bao, T.; Ding, C.; Zhu, M. Face recognition in real-world surveillance videos with deep learning method. In Proceedings of the 2017 2nd International Conference on Image, Vision and Computing (ICIVC), Chengdu, China, 2–4 June 2017; pp. 239–243.

- Ding, C.; Huang, K.; Patel, V.M.; Lovell, B.C. Special issue on Video Surveillance-oriented Biometrics. Pattern Recognit. Lett. 2018, 107, 1–2.

- Li, T.; Chang, H.; Wang, M.; Ni, B.; Hong, R.; Yan, S. Crowded scene analysis: A survey. IEEE Trans. Circuits Syst. Video Technol. 2015, 25, 367–386.

- Piciarelli, C.; Foresti, G.L. Surveillance-Oriented Event Detection in Video Streams. IEEE Intell. Syst. 2011, 26, 32–41.

- Hampapur, A. Smart video surveillance for proactive security [in the spotlight]. IEEE Signal Process. Mag. 2008, 25, 136–134.

- Joshi, K.A.; Thakore, D.G. A survey on moving object detection and tracking in video surveillance system. Int. J. Soft Comput. Eng. 2012, 2, 44–48.

- Parekh, H.S.; Thakore, D.G.; Jaliya, U.K. A survey on object detection and tracking methods. Int. J. Innov. Res. Comput. Commun. Eng. 2014, 2, 2970–2978.

- Ojha, S.; Sakhare, S. Image processing techniques for object tracking in video surveillance-A survey. In Proceedings of the 2015 International Conference on Pervasive Computing (ICPC), Pune, India, 8–10 January 2015; pp. 1–6.

- Paul, P.V.; Yogaraj, S.; Ram, H.B.; Irshath, A.M. Automated video object recognition system. In Proceedings of the 2017 Innovations in Power and Advanced Computing Technologies (i-PACT), Vellore, India, 21–22 April 2017; pp. 1–5.

- Sharif, M.; Bhagavatula, S.; Bauer, L.; Reiter, M.K. Accessorize to a crime: Real and stealthy attacks on state-of-the-art face recognition. In Proceedings of the 2016 ACM SIGSAC Conference on Computer and Communications Security; Association for Computing Machinery: New York, NY, USA, 2016; pp. 1528–1540.

- The Batch: Clothes That Thwart Surveillance, DeepMind in the Hot Seat, BERT’s Revenge, Federal AI Standards. Available online: https://blog.deeplearning.ai/blog/the-batch-clothes-that-thwart-surveillance-deepmind-in-the-hot-seat-berts-revenge-federal-ai-standards (accessed on 21 July 2020).

- Chung, J.; Sohn, K. Image-based learning to measure traffic density using a deep convolutional neural network. IEEE Trans. Intell. Transp. Syst. 2017, 19, 1670–1675.

- Zhang, G.; Wang, Y. Machine Learning and Computer Vision-Enabled Traffic Sensing Data Analysis and Quality Enhancement. In Elsevier’s Data-Driven Solutions to Transportation Problems; Elsevier: Amsterdam, The Netherlands, 2019; pp. 51–79.

- Kadim, Z.; Johari, K.M.; Samaon, D.F.; Li, Y.S.; Hon, H.W. Real-Time Deep-Learning Based Traffic Volume Count for High-Traffic Urban Arterial Roads. In Proceedings of the IEEE 10th Symposium on Computer Applications & Industrial Electronics (ISCAIE), Penang, Malaysia, 18–19 April 2020; pp. 53–78.

- The Creation and Detection of Deepfakes: A Survey. Available online: https://arxiv.org/abs/2004.11138 (accessed on 21 August 2020).

- Valente, J.; Koneru, K.; Cardenas, A. Privacy and security in Internet-connected cameras. In Proceedings of the 2019 IEEE International Congress on Internet of Things (ICIOT), Milan, Italy, 8–13 July 2019; pp. 173–180. [Google Scholar]

- Kolias, C.; Kambourakis, G.; Stavrou, A.; Voas, J. DDoS in the IoT: Mirai and other botnets. Computer 2017, 50, 80–84. [Google Scholar] [CrossRef]

- Researchers Hack Building Control System at Google Australia Office. Available online: https://www.wired.com/2013/05/googles-control-system-hacked/ (accessed on 22 April 2019).

- Guri, M.; Bykhovsky, D. aIR-Jumper: Covert air-gap exfiltration/infiltration via security cameras & infrared (IR). Comput. Secur. 2019, 82, 15–29. [Google Scholar] [CrossRef]

- Mirsky, Y.; Doitshman, T.; Elovici, Y.; Shabtai, A. Kitsune: An Ensemble of Autoencoders for Online Network Intrusion Detection. Netw. Distrib. Syst. Secur. Symp. (Ndss) 2018, 5, 2. [Google Scholar]

- Meidan, Y.; Bohadana, M.; Mathov, Y.; Mirsky, Y.; Shabtai, A.; Breitenbacher, D.; Elovici, Y. N-baiot—Network-based detection of iot botnet attacks using deep autoencoders. IEEE Pervasive Comput. 2018, 17, 12–22. [Google Scholar] [CrossRef]

- Liu, F.; Koenig, H. A survey of video encryption algorithms. Comput. Secur. 2010, 29, 3–15. [Google Scholar] [CrossRef]

- Mitmproxy—An Interactive HTTPS Proxy. Available online: https://mitmproxy.org/ (accessed on 18 June 2020).

- Bala, R. A Brief Survey on Robust Video Watermarking Techniques. Int. J. Eng. Sci. 2015, 4, 41–45. [Google Scholar]

- Arab, F.; Abdullah, S.M.; Hashim, S.Z.M.; Manaf, A.A.; Zamani, M. A robust video watermarking technique for the tamper detection of surveillance systems. Multimed. Tools Appl. 2016, 75, 10855–10885. [Google Scholar] [CrossRef]

- Kumar, P.A.R.; Selvakumar, S. Distributed denial of service attack detection using an ensemble of neural classifier. Comput. Commun. 2011, 34, 1328–1341. [Google Scholar] [CrossRef]

- Kumar, P.A.R.; Selvakumar, S. Detection of distributed denial of service attacks using an ensemble of adaptive and hybrid neuro-fuzzy systems. Comput. Commun. 2013, 36, 303–319. [Google Scholar] [CrossRef]

- Zargar, S.T.; Joshi, J.; Tipper, D. A survey of defense mechanisms against distributed denial of service (DDoS) flooding attacks. IEEE Commun. Surv. Tutor. 2013, 15, 2046–2069. [Google Scholar] [CrossRef]

- Saied, A.; Overill, R.E.; Radzik, T. Detection of known and unknown DDoS attacks using Artificial Neural Networks. Neurocomputing 2016, 172, 385–393. [Google Scholar] [CrossRef]

- Wu, L.; Cao, X. Geo-location estimation from two shadow trajectories. In Proceedings of the 2010 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), San Francisco, CA, USA, 13–18 June 2010; pp. 585–590. [Google Scholar]

- Li, X.; Xu, W.; Wang, S.; Qu, X. Are You Lying: Validating the Time-Location of Outdoor Images. In Applied Cryptography and Network Security; Springer: Berlin/Heidelberg, Germany, 2017; pp. 103–123. [Google Scholar]

- Garg, R.; Varna, A.L.; Wu, M. Seeing ENF: Natural time stamp for digital video via optical sensing and signal processing. In Proceedings of the 19th ACM International Conference on Multimedia; Association for Computing Machinery: New York, NY, USA, 2011; pp. 23–32. [Google Scholar]

- Rosenfeld, K.; Sencar, H.T. A study of the robustness of PRNU-based camera identification. Media Forensics Secur. Int. Soc. Opt. Photonics 2009, 7254, 72540M. [Google Scholar]

- Mayer, O.; Hosler, B.; Stamm, M.C. Open set video camera model verification. In Proceedings of the ICASSP 2020—2020 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Barcelona, Spain, 4–8 May 2020; pp. 2962–2966. [Google Scholar]

- Khan, P.W.; Byun, Y.C.; Park, N. A Data Verification System for CCTV Surveillance Cameras Using Blockchain Technology in Smart Cities. Electronics 2020, 9, 484. [Google Scholar] [CrossRef]

- López-Valcarce, R.; Romero, D. Defending surveillance sensor networks against data-injection attacks via trusted nodes. In Proceedings of the 2017 25th European Signal Processing Conference (EUSIPCO), Kos, Greece, 28 August–2 September 2017; pp. 380–384. [Google Scholar]

- Vesper: Using Echo-Analysis to Detect Man-in-the-Middle Attacks in LANs. Available online: https://arxiv.org/abs/1803.02560 (accessed on 21 August 2020).

- Pitropakis, N.; Panaousis, E.; Giannetsos, T.; Anastasiadis, E.; Loukas, G. A taxonomy and survey of attacks against machine learning. Comput. Sci. Rev. 2019, 34, 100199. [Google Scholar] [CrossRef]

- Pan, J. Physical Integrity Attack Detection of Surveillance Camera with Deep Learning based Video Frame Interpolation. In Proceedings of the 2019 IEEE International Conference on Internet of Things and Intelligence System (IoTaIS), Bali, Indonesia, 5–7 November 2019; pp. 79–85. [Google Scholar]

- Using Depth for Pixel-Wise Detection of Adversarial Attacks in Crowd Counting. Available online: https://arxiv.org/abs/1911.11484 (accessed on 21 August 2020).

- Xiao, C.; Deng, R.; Li, B.; Lee, T.; Edwards, B.; Yi, J.; Song, D.; Liu, M.; Molloy, I. Advit: Adversarial frames identifier based on temporal consistency in videos. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Korea, 27 October–2 November 2019; pp. 3968–3977. [Google Scholar]

- Mundra, K.; Modpur, R.; Chattopadhyay, A.; Kar, I.N. Adversarial Image Detection in Cyber-Physical Systems. In Proceedings of the 1st ACM Workshop on Autonomous and Intelligent Mobile Systems; Association for Computing Machinery: New York, NY, USA, 2020; pp. 1–5. [Google Scholar]

- Tankard, C. Advanced persistent threats and how to monitor and deter them. Netw. Secur. 2011, 2011, 16–19. [Google Scholar] [CrossRef]

- Castillo, A.; Tabik, S.; Pérez, F.; Olmos, R.; Herrera, F. Brightness guided preprocessing for automatic cold steel weapon detection in surveillance videos with deep learning. Neurocomputing 2019, 330, 151–161. [Google Scholar] [CrossRef]

- Muhammad, K.; Ahmad, J.; Mehmood, I.; Rho, S.; Baik, S.W. Convolutional neural networks based fire detection in surveillance videos. IEEE Access 2018, 6, 18174–18183. [Google Scholar] [CrossRef]

- Amazon’s Automated Grocery Store of the Future Opens Monday. 2018. Available online: https://www.reuters.com/article/us-amazon-com-store/amazons-automated-grocery-store-of-the-future-opens-monday-idUSKBN1FA0RL (accessed on 21 August 2020).

- Ding, C.; Tao, D. Trunk-branch ensemble convolutional neural networks for video-based face recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 40, 1002–1014. [Google Scholar] [CrossRef] [PubMed]

- Ribeiro, M.; Lazzaretti, A.E.; Lopes, H.S. A study of deep convolutional auto-encoders for anomaly detection in videos. Pattern Recognit. Lett. 2018, 105, 13–22. [Google Scholar] [CrossRef]

- Evtimov, I.; Eykholt, K.; Fernandes, E.; Kohno, T.; Li, B.; Prakash, A.; Rahmati, A.; Song, D. Robust physical-world attacks on deep learning models. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018. [Google Scholar]

- Goswami, G.; Ratha, N.; Agarwal, A.; Singh, R.; Vatsa, M. Unravelling robustness of deep learning based face recognition against adversarial attacks. In Proceedings of the Thirty-Second AAAI Conference on Artificial Intelligence (AAAI-18), New Orleans, LA, USA, 2–7 February 2018. [Google Scholar]

- Tambe, A.; Aung, Y.L.; Sridharan, R.; Ochoa, M.; Tippenhauer, N.O.; Shabtai, A.; Elovici, Y. Detection of Threats to IoT Devices using Scalable VPN-forwarded Honeypots. In Proceedings of the Ninth ACM Conference on Data and Application Security and Privacy, Dallas, TX, USA, 25–27 March 2019. [Google Scholar]

- Wampler, C. Information Leakage in Encrypted IP Video Traffic. Ph.D. Thesis, Georgia Institute of Technology, Atlanta, GA, USA, 2014. [Google Scholar]

- Game of Drones-Detecting Streamed POI from Encrypted FPV Channel. Available online: https://arxiv.org/abs/1801.03074 (accessed on 21 August 2020).

| Attack Type | Attack Name | Tool | Description: The attacker... | Violation | Vector |
|---|---|---|---|---|---|
| Recon. | OS Scan | Nmap | …scans the network for hosts, and their operating systems, to reveal possible vulnerabilities. | C | 1 |
| Recon. | Fuzzing | SFuzz | …searches for vulnerabilities in the camera’s web servers by sending random commands to their CGIs. | C | 3 |
| Man in the Middle | Video Injection | Video Jack | …injects a recorded video clip into a live video stream. | C, I | 1 |
| Man in the Middle | ARP MiTM | Ettercap | …intercepts all LAN traffic via an ARP poisoning attack. | C | 1 |
| Man in the Middle | Active Wiretap | Raspberry Pi 3B | …intercepts all LAN traffic via an active wiretap (network bridge) covertly installed on an exposed cable. | C | 2 |
| Denial of Service | SSDP Flood | Saddam | …overloads the DVR by causing cameras to spam the server with UPnP advertisements. | A | 1 |
| Denial of Service | SYN DoS | Hping3 | …disables a camera’s video stream by overloading its web server. | A | 1 |
| Denial of Service | SSL Renegotiation | THC | …disables a camera’s video stream by sending many SSL renegotiation packets to the camera. | A | 1 |
| Malware Botnet | Mirai | Telnet | …infects IoT devices with the Mirai malware by exploiting default credentials, and then scans the network for new vulnerable victims. | C, I | X |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Kalbo, N.; Mirsky, Y.; Shabtai, A.; Elovici, Y. The Security of IP-Based Video Surveillance Systems. Sensors 2020, 20, 4806. https://doi.org/10.3390/s20174806
