Deep Learning-Based Real-Time Multiple-Person Action Recognition System

Abstract

1. Introduction

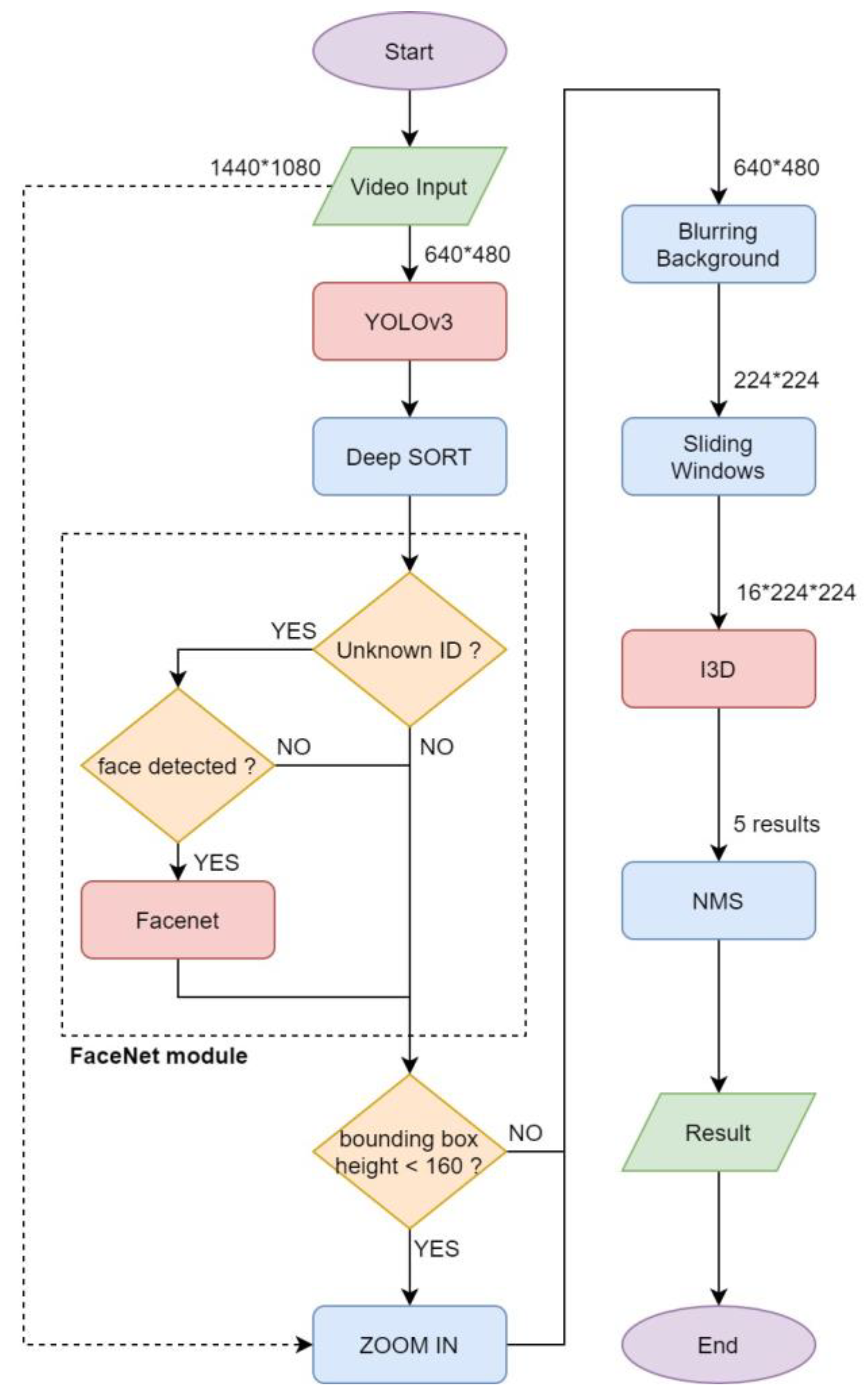

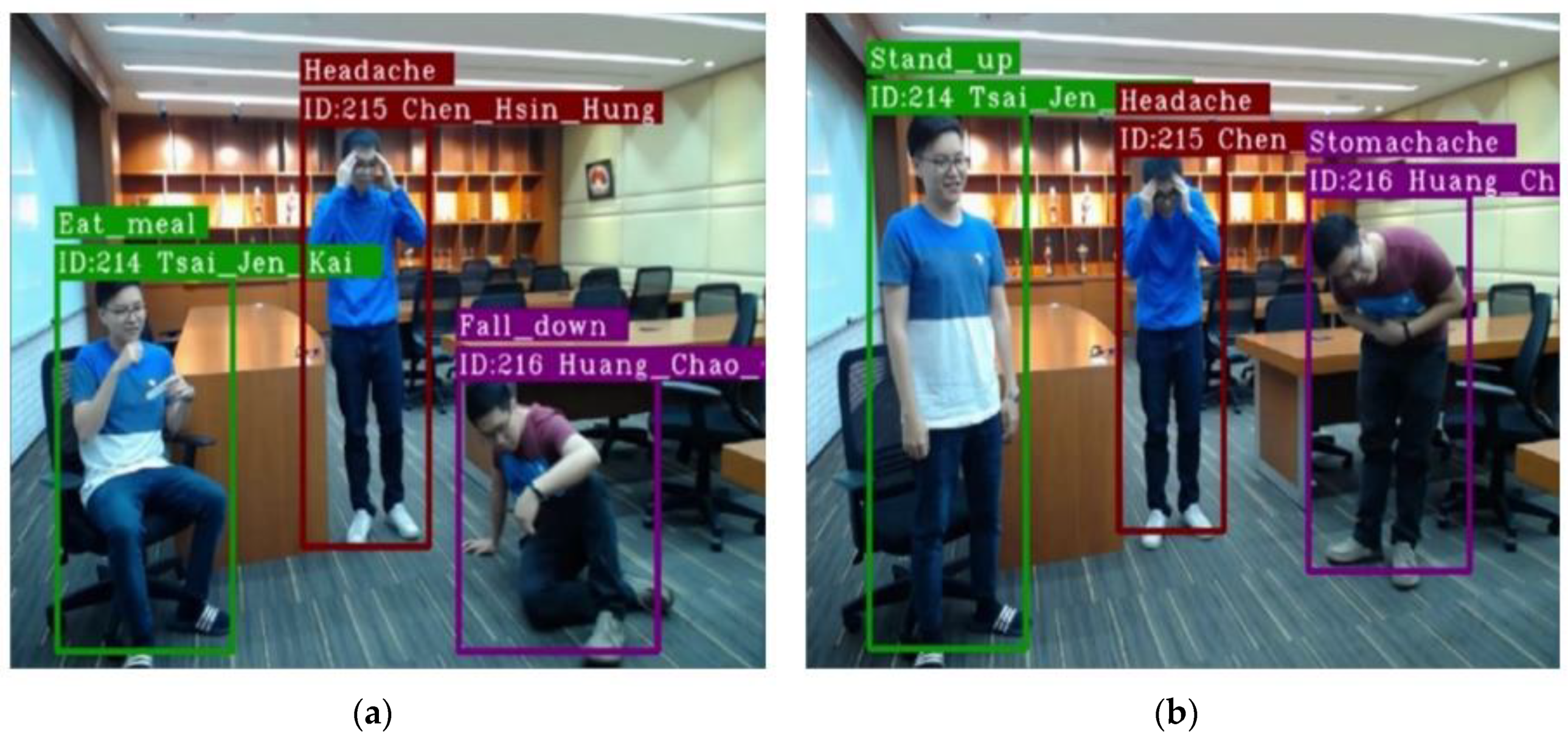

2. Real-Time Multiple-Person Action Recognition System

2.1. YOLO (You Only Look Once)

2.2. Deep SORT

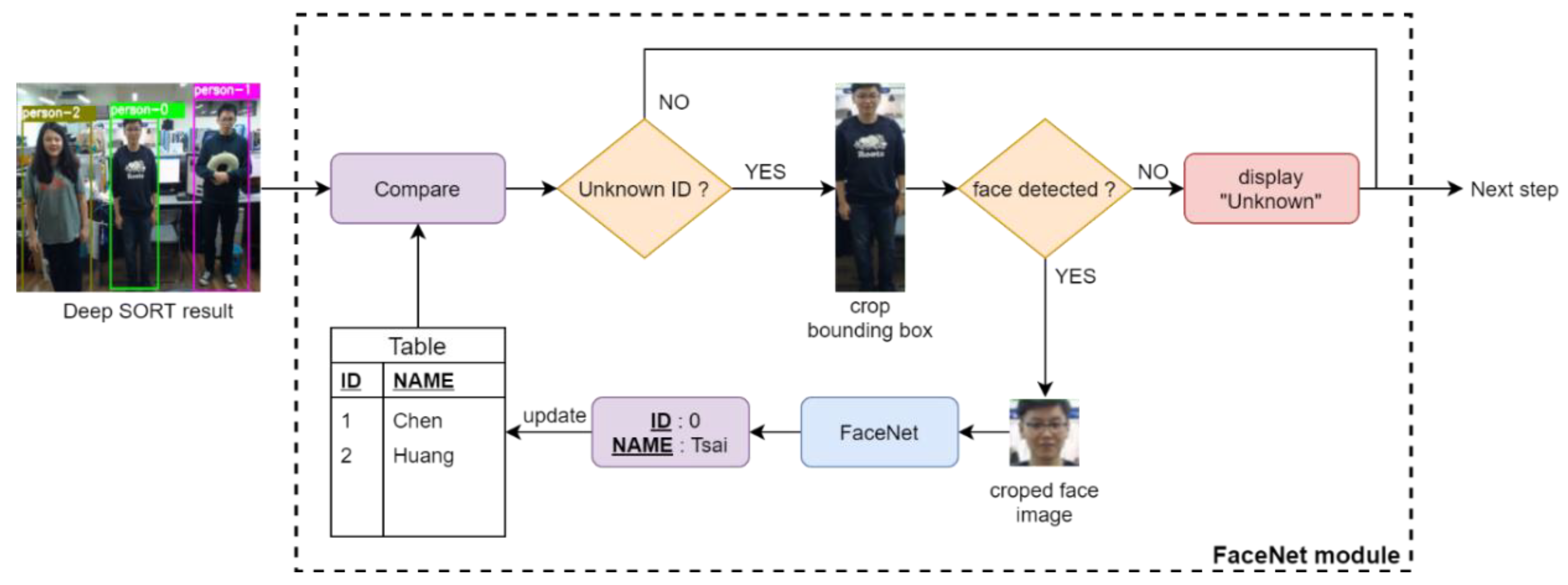

2.3. FaceNet

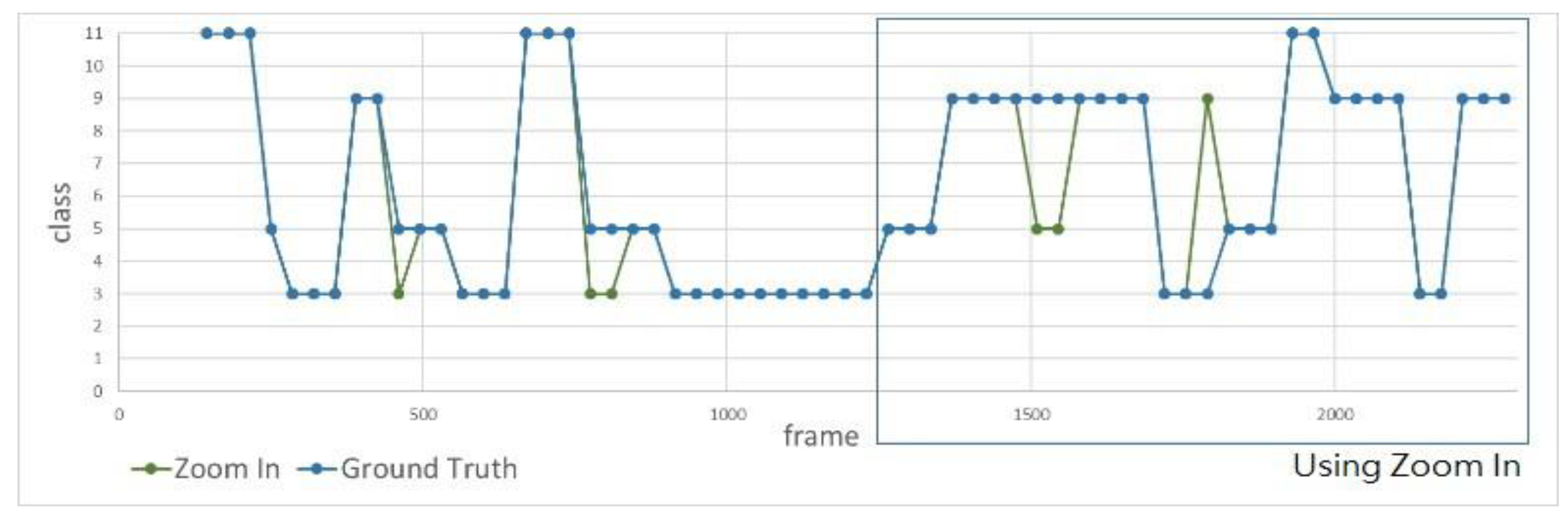

2.4. Automatic “Zoom-In”

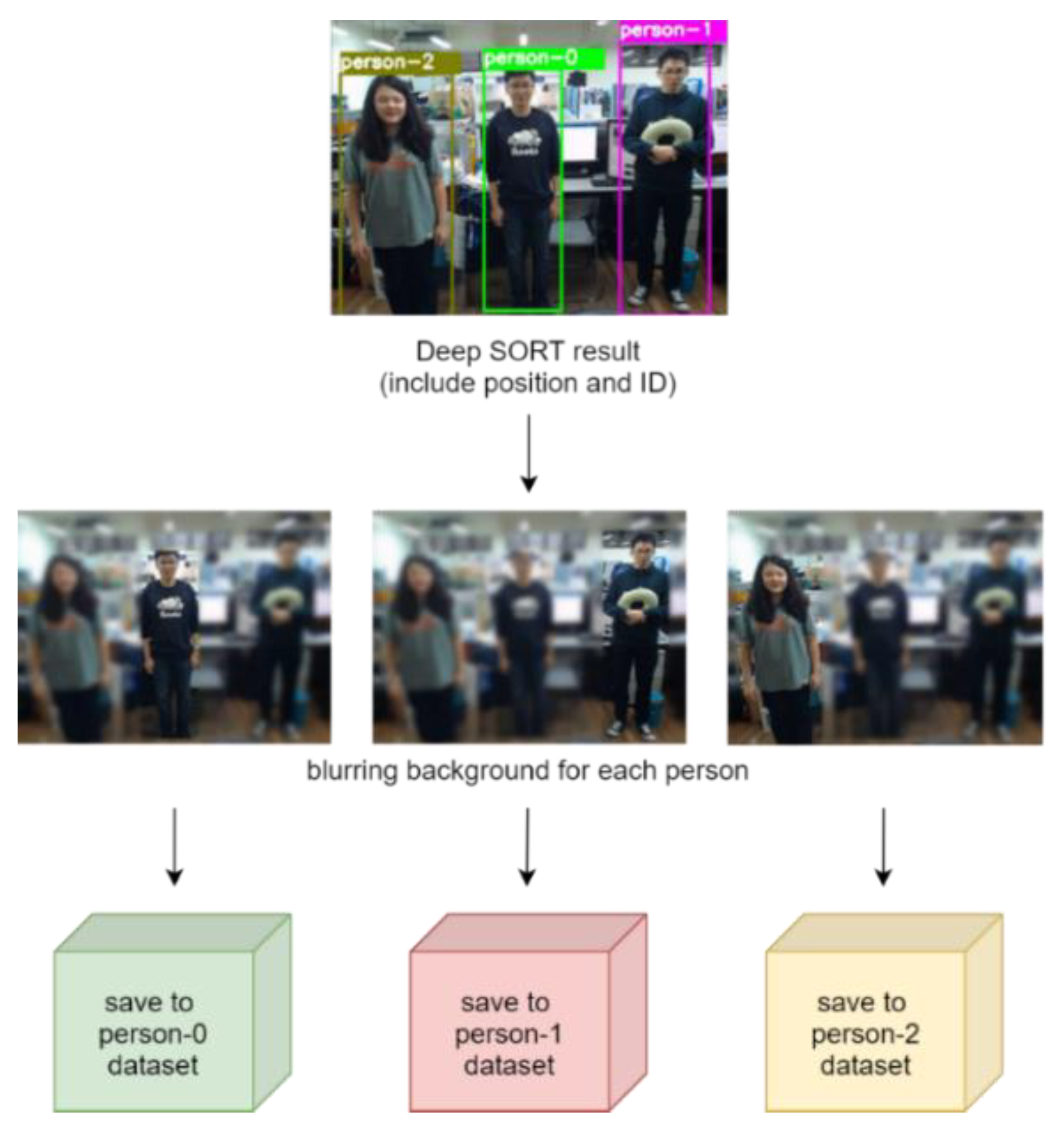

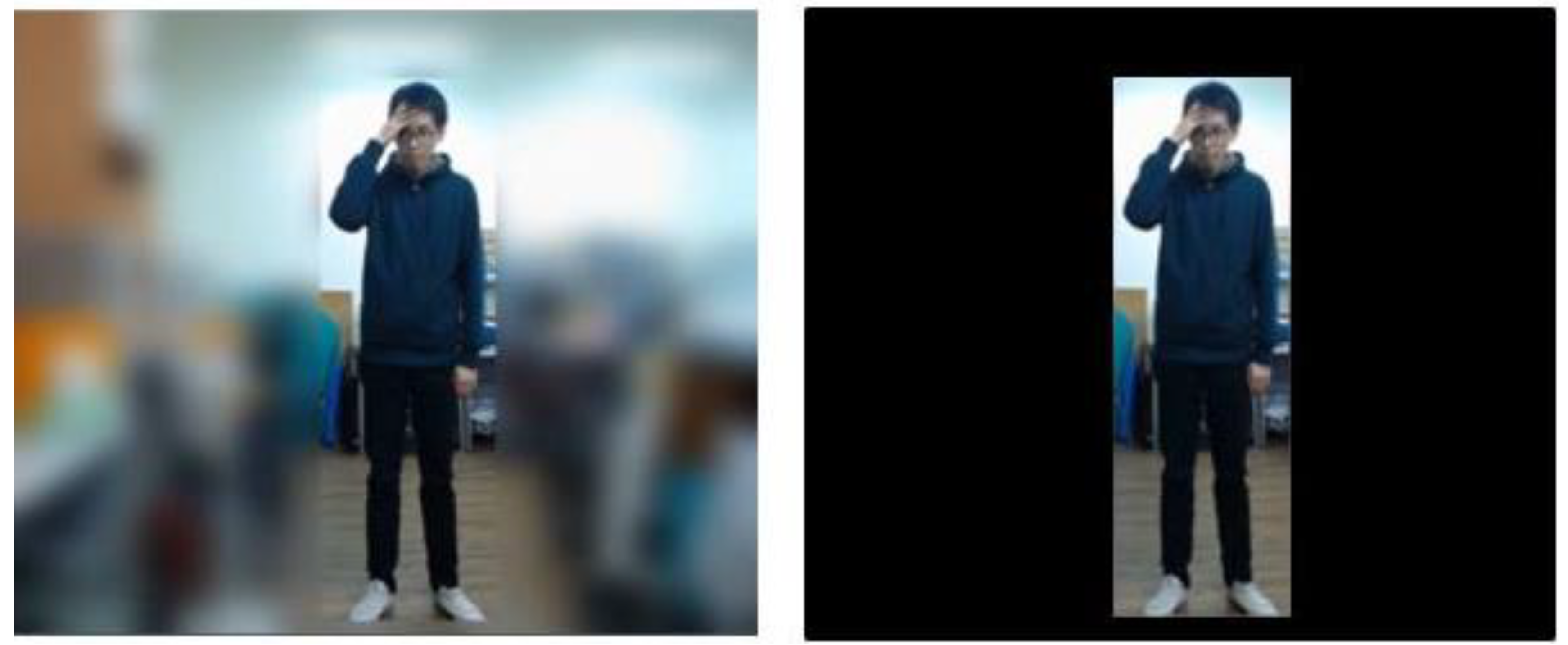

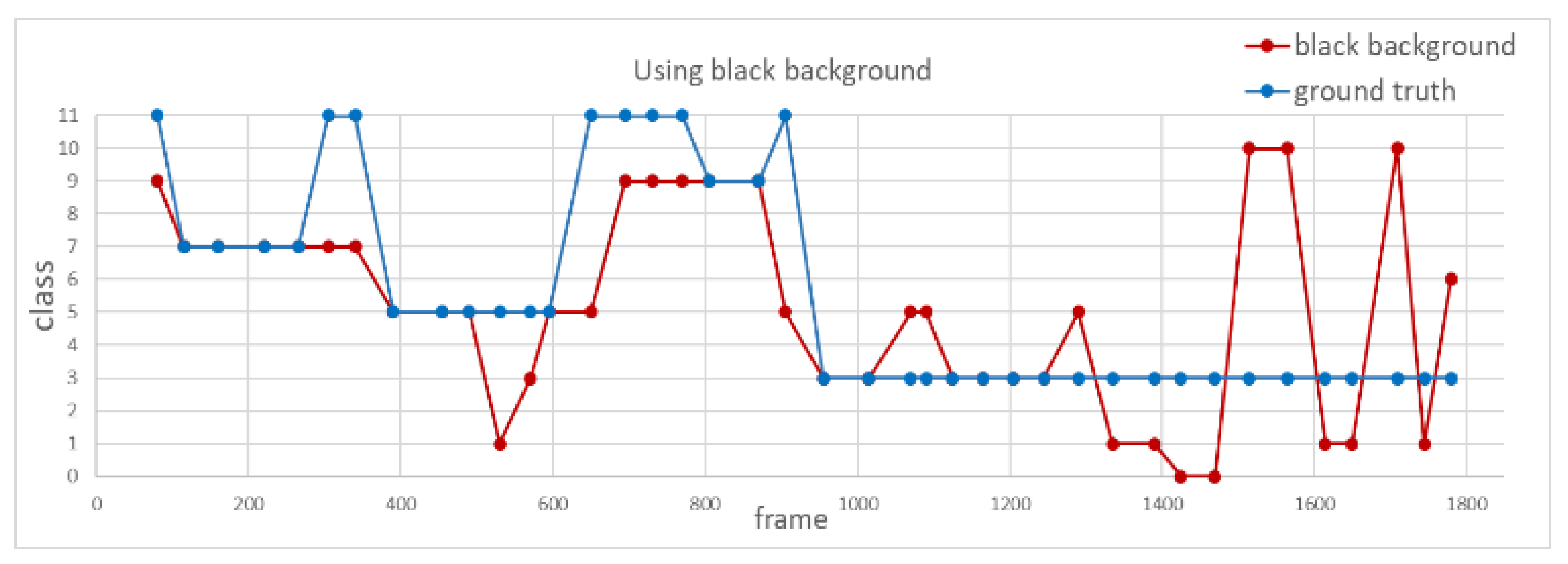

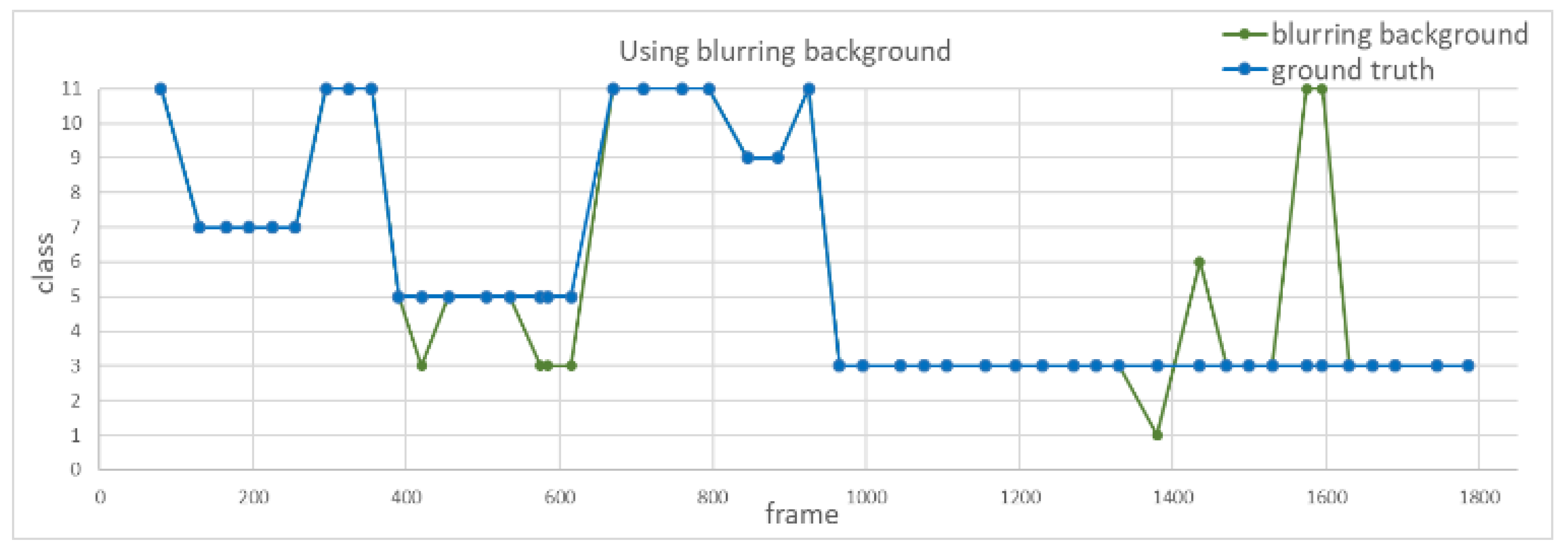

2.5. Blurring Background

2.6. Sliding Windows

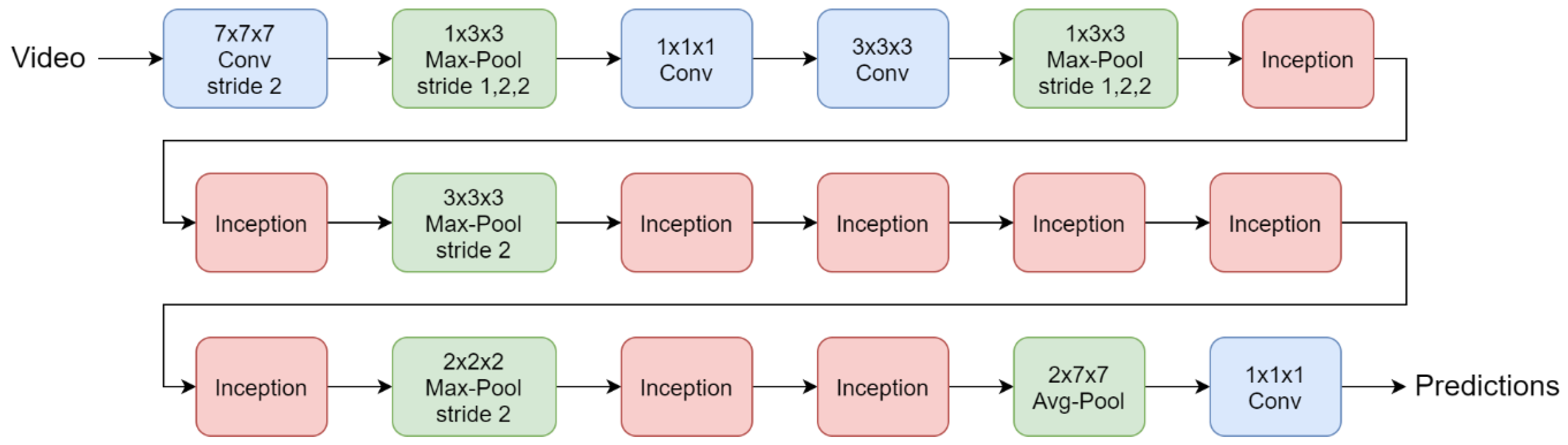

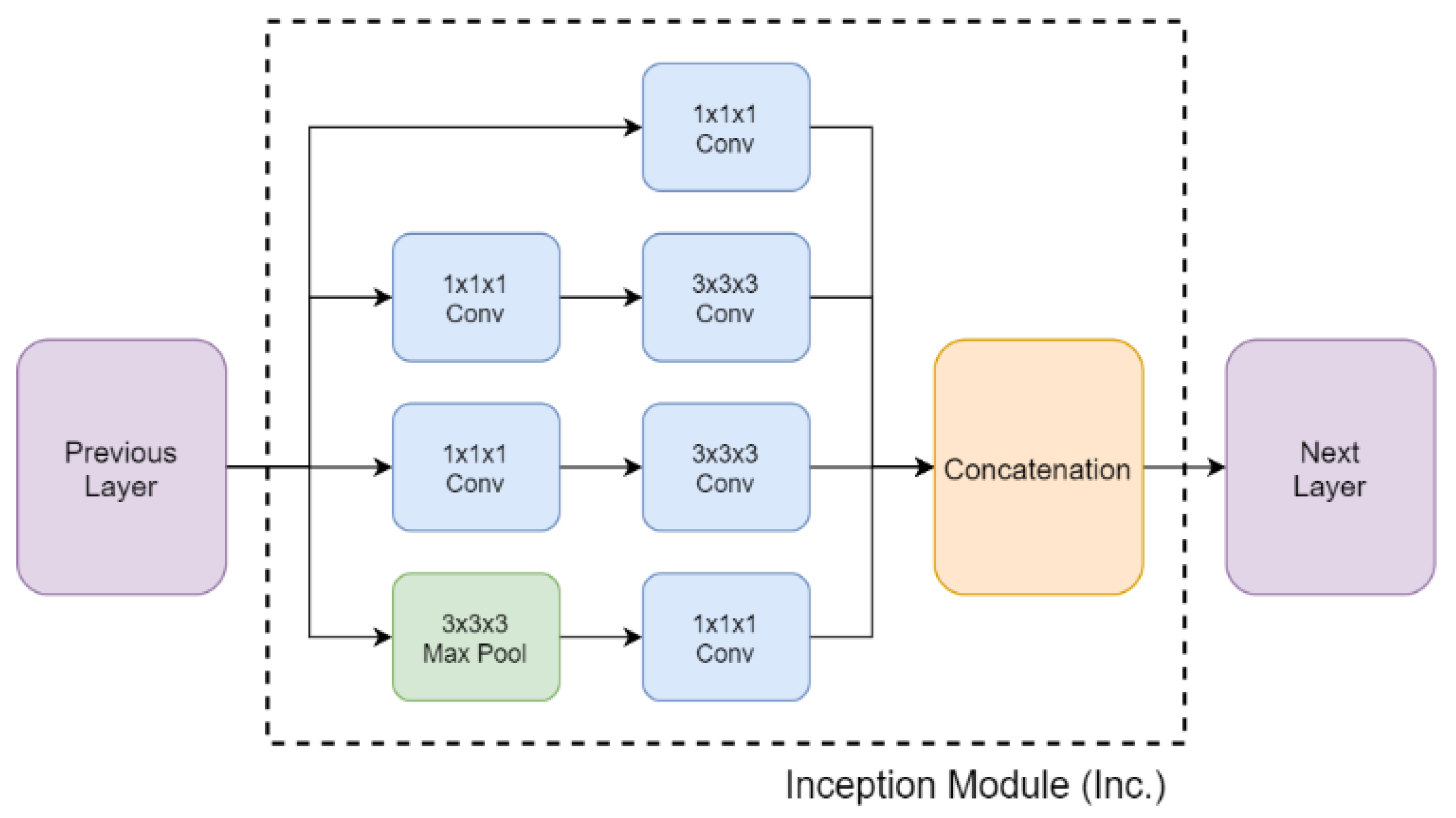
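
As a rough illustration of the general sliding-window idea, the sketch below cuts a frame stream into overlapping fixed-length clips that an action classifier could consume one at a time. The function name `sliding_windows` and the `window` and `stride` parameters are hypothetical and the values in the usage note are arbitrary; they are assumptions for illustration, not the settings used in this system.

```python
from collections import deque
from typing import Iterable, Iterator, List, TypeVar

T = TypeVar("T")


def sliding_windows(frames: Iterable[T], window: int, stride: int) -> Iterator[List[T]]:
    """Yield overlapping clips of `window` consecutive frames, advancing by `stride`.

    Hypothetical helper: `window` and `stride` are illustrative parameters.
    """
    if window <= 0 or stride <= 0:
        raise ValueError("window and stride must be positive")
    buf = deque(maxlen=window)  # keeps only the most recent `window` frames
    since_last = 0              # frames seen since the last emitted clip
    for frame in frames:
        buf.append(frame)
        if len(buf) < window:
            continue            # not enough frames for a full clip yet
        if since_last == 0:
            yield list(buf)
        since_last = (since_last + 1) % stride


# Integers stand in for video frames here.
clips = list(sliding_windows(range(10), window=4, stride=2))
```

With ten frames, a window of four, and a stride of two, the clips start at frames 0, 2, 4, and 6, so consecutive clips share two frames.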

2.7. Inflated 3D ConvNet (I3D)

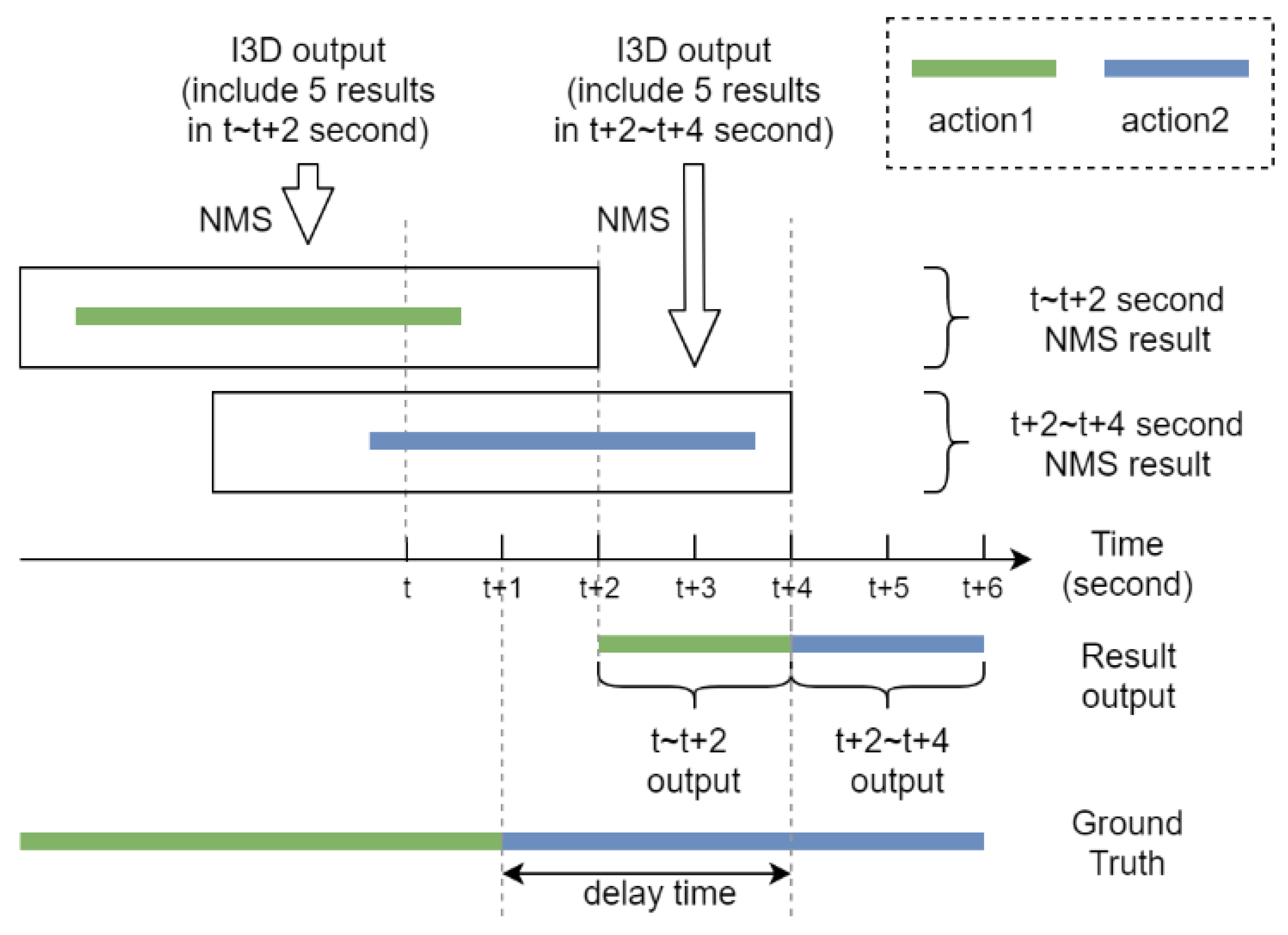

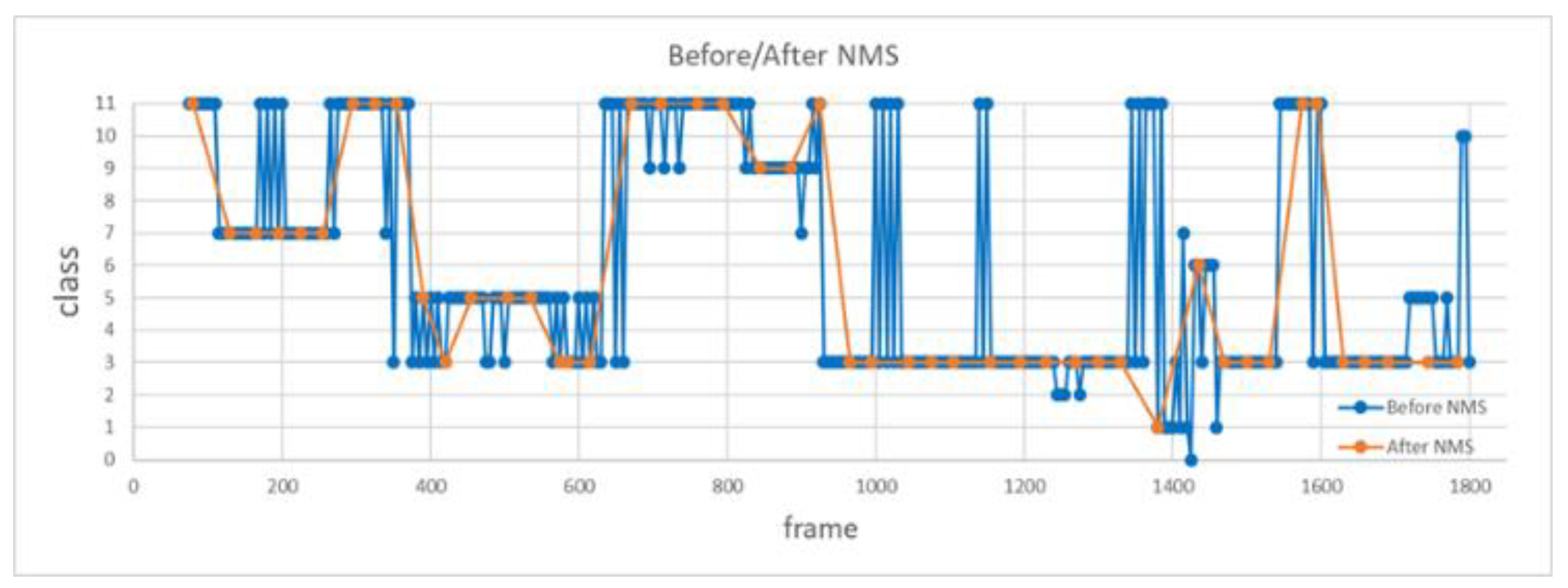

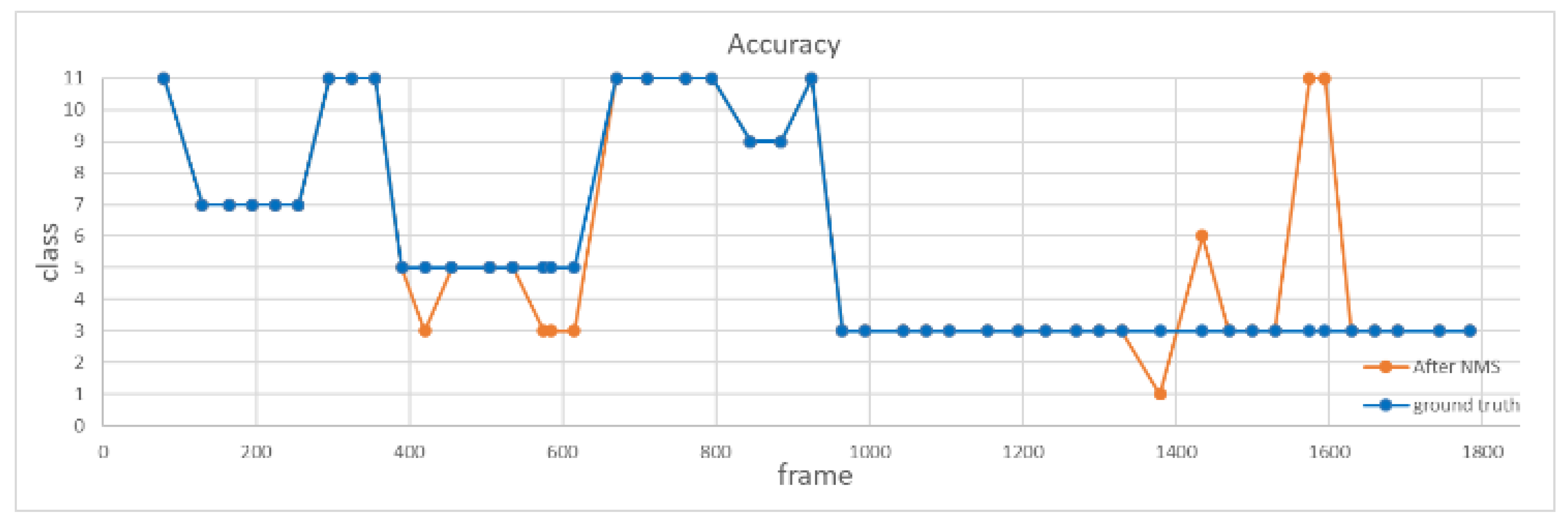

2.8. Nonmaximum Suppression (NMS)
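
A minimal sketch of greedy non-maximum suppression over scored temporal segments is given below, assuming each candidate is a `(start, end, score)` tuple and that overlap is measured by one-dimensional intersection over union. The names `temporal_iou` and `temporal_nms` and the threshold of 0.5 are hypothetical choices for illustration and may differ from the procedure used in this system.

```python
from typing import List, Tuple

# Hypothetical representation: (start_frame, end_frame, confidence score).
Segment = Tuple[float, float, float]


def temporal_iou(a: Segment, b: Segment) -> float:
    """Intersection over union of two segments on the time axis."""
    inter = max(0.0, min(a[1], b[1]) - max(a[0], b[0]))
    union = (a[1] - a[0]) + (b[1] - b[0]) - inter
    return inter / union if union > 0 else 0.0


def temporal_nms(segments: List[Segment], iou_threshold: float = 0.5) -> List[Segment]:
    """Keep the highest-scoring segments, dropping any that overlap a kept one.

    The threshold value is an assumption chosen only for this example.
    """
    kept: List[Segment] = []
    # Visit candidates from the highest score to the lowest.
    for seg in sorted(segments, key=lambda s: s[2], reverse=True):
        if all(temporal_iou(seg, k) <= iou_threshold for k in kept):
            kept.append(seg)
    return kept


candidates = [(0, 16, 0.9), (4, 20, 0.8), (30, 46, 0.7)]
result = temporal_nms(candidates)
```

In this example the first two candidates overlap with an IoU of 0.6, so the lower-scoring one is suppressed, while the third does not overlap either and is kept.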

3. Experimental Results

3.1. Computational Platforms

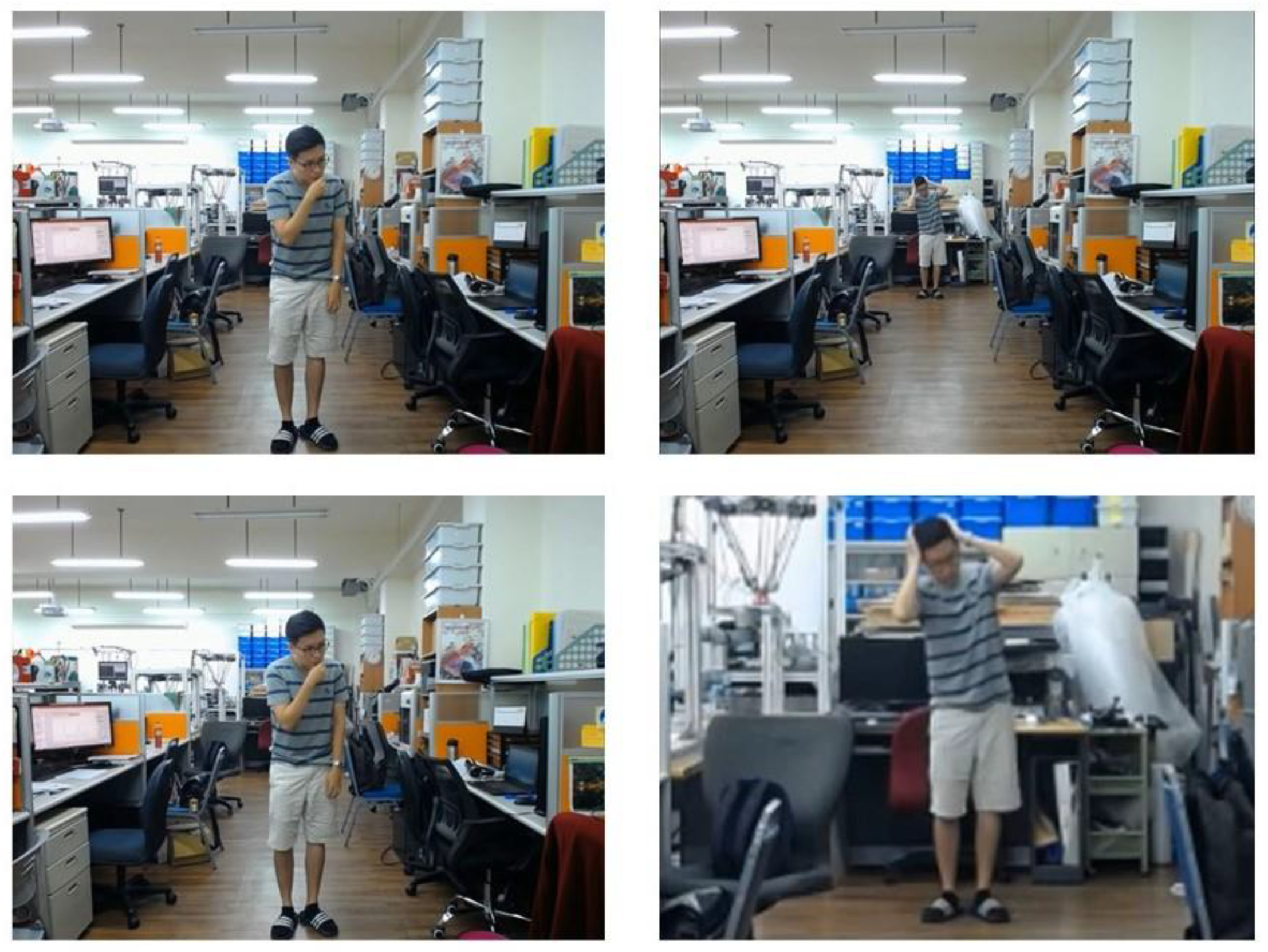

3.2. Datasets

3.3. Experimental Results

- Can the system simultaneously recognize the actions performed by multiple people in real time?

- Performance comparison of action recognition with and without the “zoom-in” function;

- Differences in action recognition using a black background versus a blurred background;

- Differences in I3D-based action recognition with and without NMS;

- Accuracy of the overall system.

4. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

| Number | Action | Number | Action |
|---|---|---|---|
| 1 | Drink | 7 | Chest ache |
| 2 | Eat meal | 8 | Backache |
| 3 | Stand up | 9 | Stomachache |
| 4 | Cough | 10 | Walking |
| 5 | Fall down | 11 | Writing |
| 6 | Headache | 12 | Background |

| Number of People | 1 | 2 | 3 | 4 | 8 | 10 |
|---|---|---|---|---|---|---|
| Average speed (fps) | 12.29 | 11.70 | 11.46 | 11.30 | 11.02 | 10.93 |
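
To make the throughput figures in the table above easier to interpret, the short calculation below converts each average speed into a per-frame latency and computes the relative drop in speed from one person to ten. The variable names are illustrative; the numbers are copied from the table.

```python
# Average speed (fps) by number of people, copied from the table above.
fps_by_people = {1: 12.29, 2: 11.70, 3: 11.46, 4: 11.30, 8: 11.02, 10: 10.93}

# Per-frame latency in milliseconds is the reciprocal of the frame rate.
latency_ms = {n: 1000.0 / fps for n, fps in fps_by_people.items()}

# Relative drop in frame rate when going from 1 person to 10 people.
slowdown = (fps_by_people[1] - fps_by_people[10]) / fps_by_people[1]

for n, ms in latency_ms.items():
    print(f"{n:>2} people: {ms:.2f} ms per frame")
print(f"Speed drop from 1 to 10 people: {slowdown:.1%}")
```

The frame rate falls by roughly 11% as the number of people grows from one to ten, which corresponds to about 10 ms of additional latency per frame.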

| | Average Accuracy | Short-Distance Accuracy | Long-Distance Accuracy |
|---|---|---|---|
| With zoom-in | 90.79% | 91.49% | 89.29% |
| Without zoom-in | 69.74% | 32.14% |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Tsai, J.-K.; Hsu, C.-C.; Wang, W.-Y.; Huang, S.-K. Deep Learning-Based Real-Time Multiple-Person Action Recognition System. Sensors 2020, 20, 4758. https://doi.org/10.3390/s20174758