Assessment of Camouflage Effectiveness Based on Perceived Color Difference and Gradient Magnitude

Abstract

1. Introduction

2. Methods

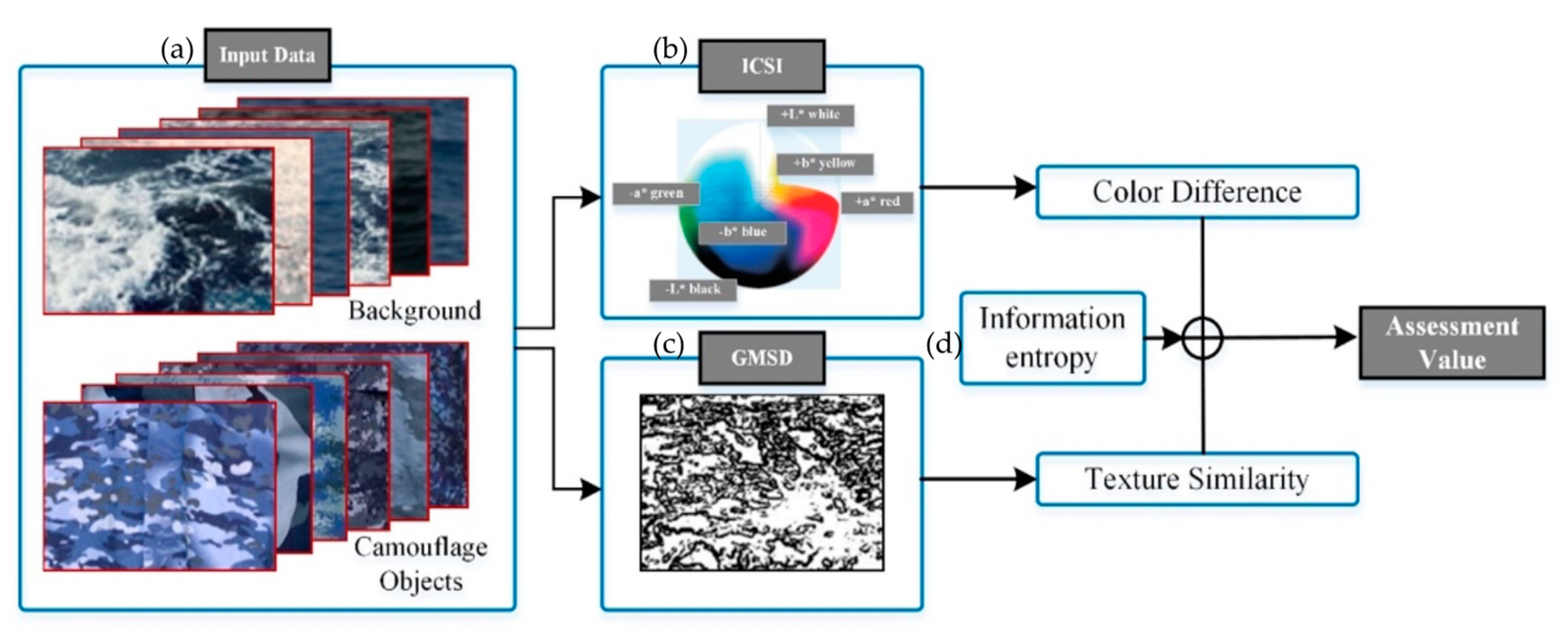

2.1. Overview

2.2. Image Color Similarity Index (ICSI)

2.3. Gradient Magnitude Similarity Deviation (GMSD)
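For reference, the standard GMSD index of Xue et al. (2014) compares the gradient-magnitude maps $m_r$ and $m_d$ of two images pixel by pixel, $\mathrm{GMS}(i) = \frac{2 m_r(i) m_d(i) + c}{m_r(i)^2 + m_d(i)^2 + c}$, and reports the standard deviation of the resulting GMS map. Lower GMSD means more similar gradient structure. The sketch below assumes two 8-bit grayscale images of equal size, Prewitt filters, the constant c = 170, and 2× downsampling as in that paper. The downsampling is simplified to non-overlapping 2×2 block averaging, and the filtering keeps only the "valid" region. The helper names `gmsd`, `_block_mean2`, and `_filter2_valid` are hypothetical. This is an illustration and not necessarily the exact variant used in this study.

```python
import numpy as np


def _block_mean2(img: np.ndarray) -> np.ndarray:
    """Average non-overlapping 2x2 blocks (simplified 2x downsampling)."""
    h, w = (img.shape[0] // 2) * 2, (img.shape[1] // 2) * 2
    x = img[:h, :w]
    return 0.25 * (x[0::2, 0::2] + x[1::2, 0::2] + x[0::2, 1::2] + x[1::2, 1::2])


def _filter2_valid(img: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """2-D correlation restricted to the 'valid' region (no padding)."""
    kh, kw = kernel.shape
    oh, ow = img.shape[0] - kh + 1, img.shape[1] - kw + 1
    out = np.zeros((oh, ow))
    for i in range(kh):
        for j in range(kw):
            out += kernel[i, j] * img[i:i + oh, j:j + ow]
    return out


def gmsd(ref, dist, c: float = 170.0, downsample: bool = True) -> float:
    """Gradient Magnitude Similarity Deviation; lower means more similar."""
    ref = np.asarray(ref, dtype=float)
    dist = np.asarray(dist, dtype=float)
    if ref.shape != dist.shape or ref.ndim != 2:
        raise ValueError("expected two grayscale images of the same shape")
    if downsample:
        ref, dist = _block_mean2(ref), _block_mean2(dist)

    # Prewitt operators (horizontal and vertical), scaled by 1/3.
    hx = np.array([[1.0, 0.0, -1.0]] * 3) / 3.0
    hy = hx.T

    def grad_mag(x: np.ndarray) -> np.ndarray:
        return np.sqrt(_filter2_valid(x, hx) ** 2 + _filter2_valid(x, hy) ** 2)

    mr, md = grad_mag(ref), grad_mag(dist)
    # Pixel-wise gradient magnitude similarity (GMS) map, values in (0, 1].
    gms = (2.0 * mr * md + c) / (mr ** 2 + md ** 2 + c)
    # GMSD: population standard deviation of the GMS map.
    return float(gms.std())
```

Identical inputs give a uniform GMS map and therefore a GMSD of 0. Because GMSD measures the spread of the similarity map rather than its mean, it penalizes local structural disagreement, which is what makes it attractive for comparing a target against its background.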

2.4. Calculation of Metrics Weights
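One widely used way to derive metric weights from data is the entropy weight method: a metric whose scores vary more across samples has lower normalized entropy, carries more discriminating information, and receives a larger weight. The sketch below assumes a score matrix with one row per image and one column per metric (for example, ICSI and GMSD), with non-negative values already normalized to a common direction. The function name `entropy_weights` and that preprocessing are assumptions, so this is an illustration rather than the exact weighting procedure of this study.

```python
import numpy as np


def entropy_weights(scores) -> np.ndarray:
    """Entropy weight method: rows are samples (images), columns are metrics.

    Assumes non-negative scores already normalized so that every column
    points in the same direction (hypothetical preprocessing).
    """
    x = np.asarray(scores, dtype=float)
    if x.ndim != 2 or x.shape[0] < 2:
        raise ValueError("need a 2-D matrix with at least two rows")
    if np.any(x < 0):
        raise ValueError("scores must be non-negative")
    m, n = x.shape
    col_sums = x.sum(axis=0)
    if np.any(col_sums == 0):
        raise ValueError("each metric column needs a positive sum")

    # Share of each sample within each metric column.
    p = x / col_sums
    # p * ln(p), using the convention 0 * ln(0) = 0.
    with np.errstate(divide="ignore", invalid="ignore"):
        plogp = np.where(p > 0, p * np.log(p), 0.0)

    # Normalized entropy per metric, in [0, 1].
    e = -plogp.sum(axis=0) / np.log(m)
    # Degree of divergence; clip tiny negative rounding errors.
    d = np.clip(1.0 - e, 0.0, None)
    if d.sum() == 0:
        # Every metric is uniform across samples: fall back to equal weights.
        return np.full(n, 1.0 / n)
    return d / d.sum()
```

For example, `entropy_weights(np.column_stack([icsi_scores, gmsd_scores]))` returns two weights that sum to 1, where `icsi_scores` and `gmsd_scores` are hypothetical per-image arrays. A metric that is constant across all images receives a weight of (nearly) zero.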

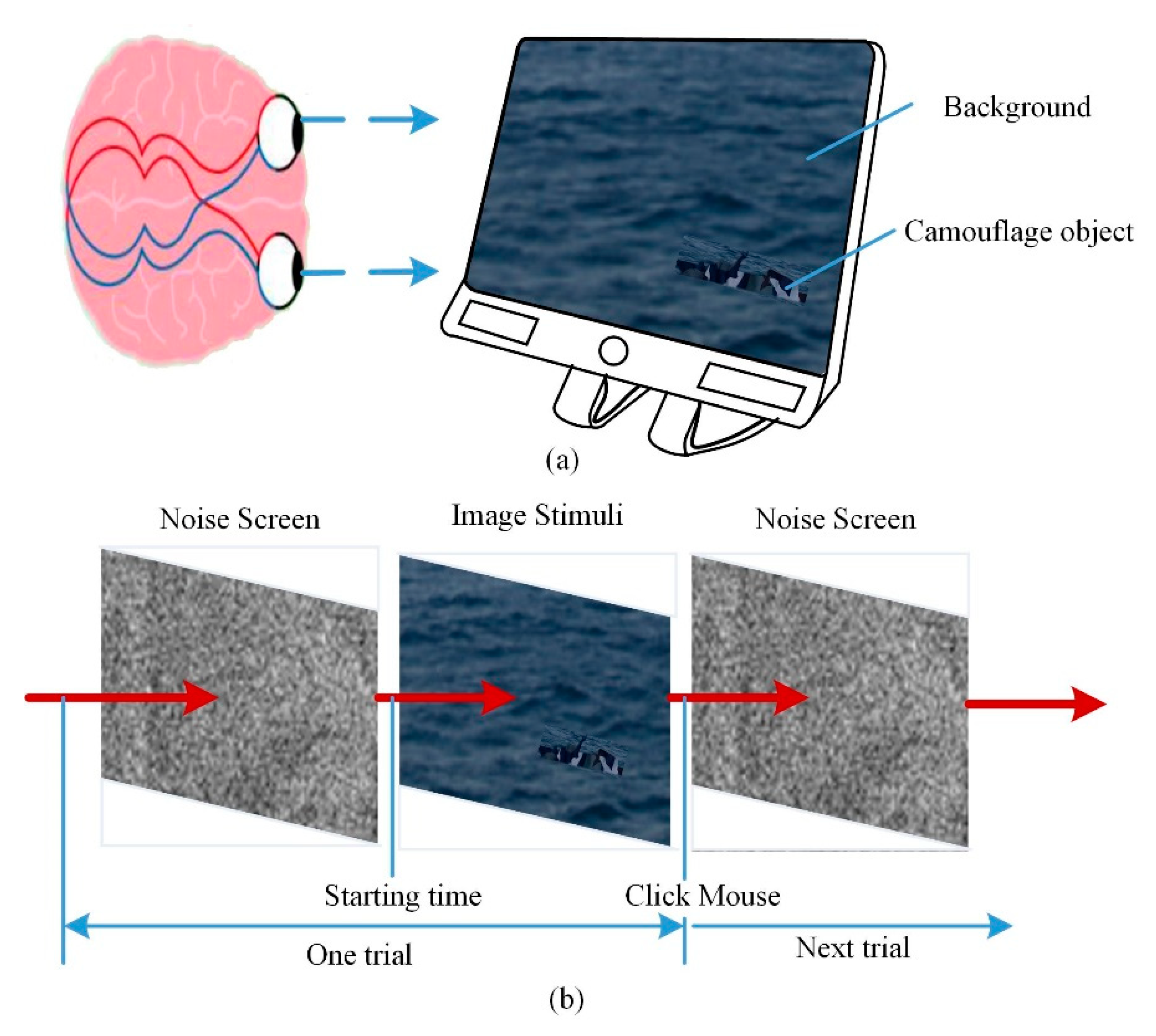

3. Experimental Setup

4. Results and Discussion

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Appendix A

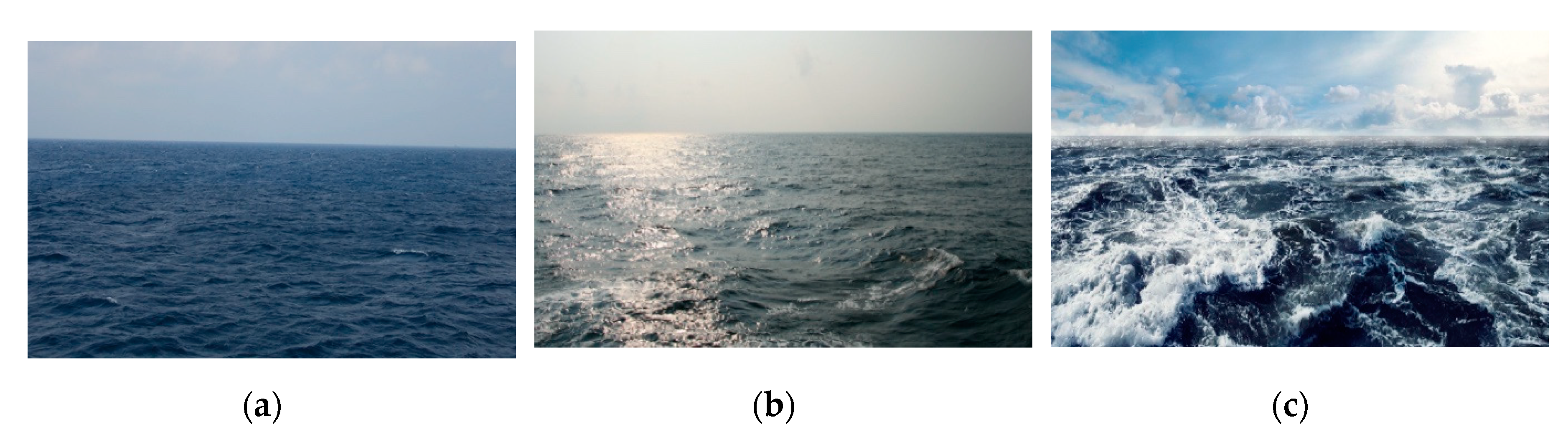

Appendix A.1. Background Collection

Appendix A.2. Camouflage Collection

| Type | Camouflage Pattern | Design Description |
|---|---|---|
| MTP |  | Multi-Terrain Pattern (MTP) is used by British forces. Its variants include a four-color woodland pattern used on webbing in all terrains. MTP was designed for use across a wide range of environments. |
| Flecktarn |  | Flecktarn was designed in the mid-1970s by the West German Army. The leopard-like pattern became as widespread in Europe as Woodland did in North America. |
| MARPAT |  | MARPAT (Marine Pattern) was the United States Marine Corps' first digital camouflage and was adopted throughout the Marine Corps in 2001. Its color scheme adapts the US Woodland pattern into a pixelated micropattern. |
| Woodland |  | US Woodland was the Battle Dress Uniform pattern for almost all American armed forces from 1981 to 2006 and is still used by almost a quarter of the world's militaries. It is one of the most popular camouflage patterns. |
| Multi-cam |  | Multi-cam was designed to blend into any type of terrain, weather, or lighting condition, making it the camouflage equivalent of an all-season tire. Crye Precision developed it in 2003 for American troops in Afghanistan. This cutting-edge design is a favorite among technical outfitters. |
| Type 07 |  | Type 07 is a group of military uniforms introduced in 2007 and used by all branches of the People's Liberation Army (PLA) of the People's Republic of China (PRC). |

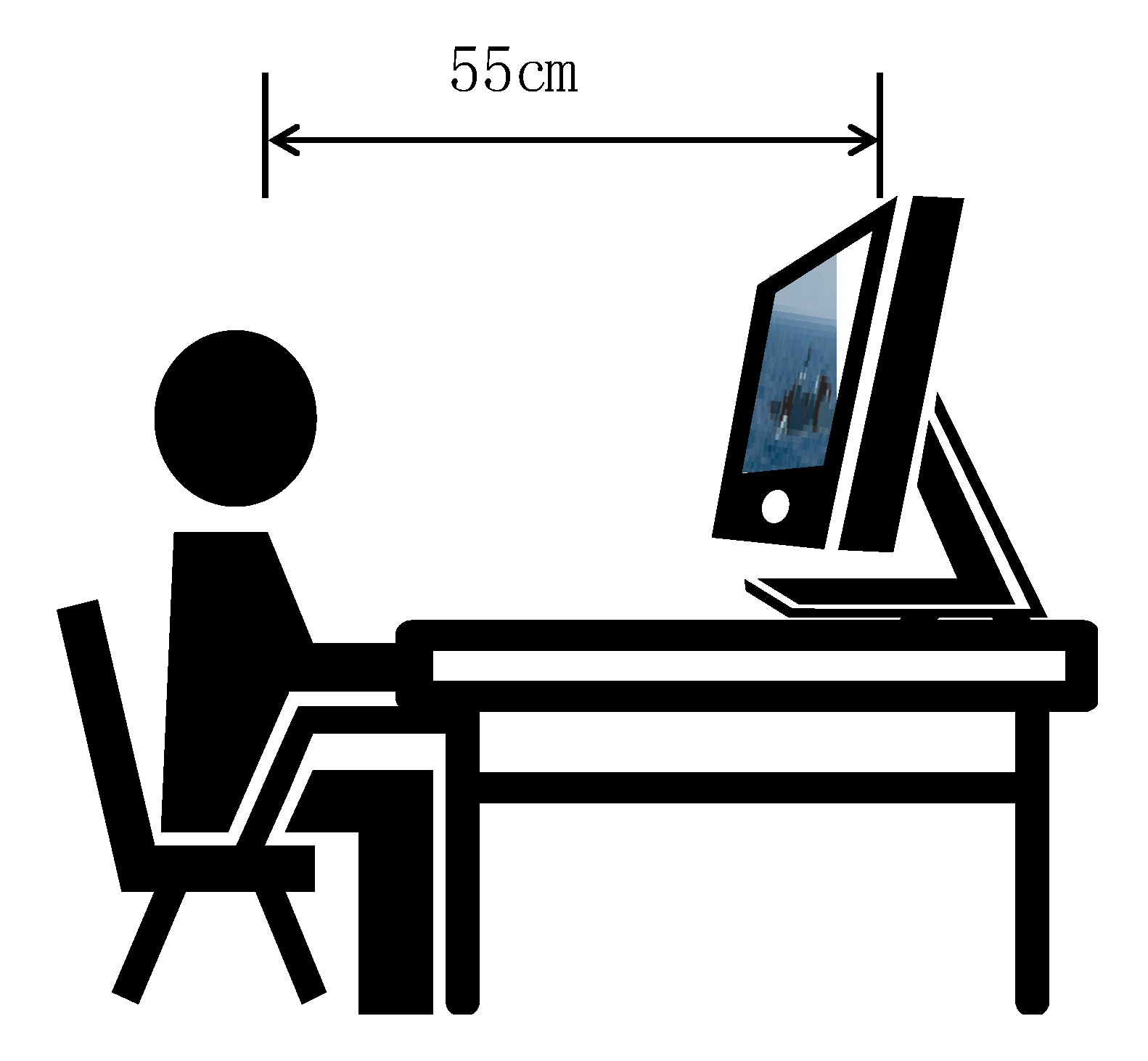

Appendix A.3. Apparatus


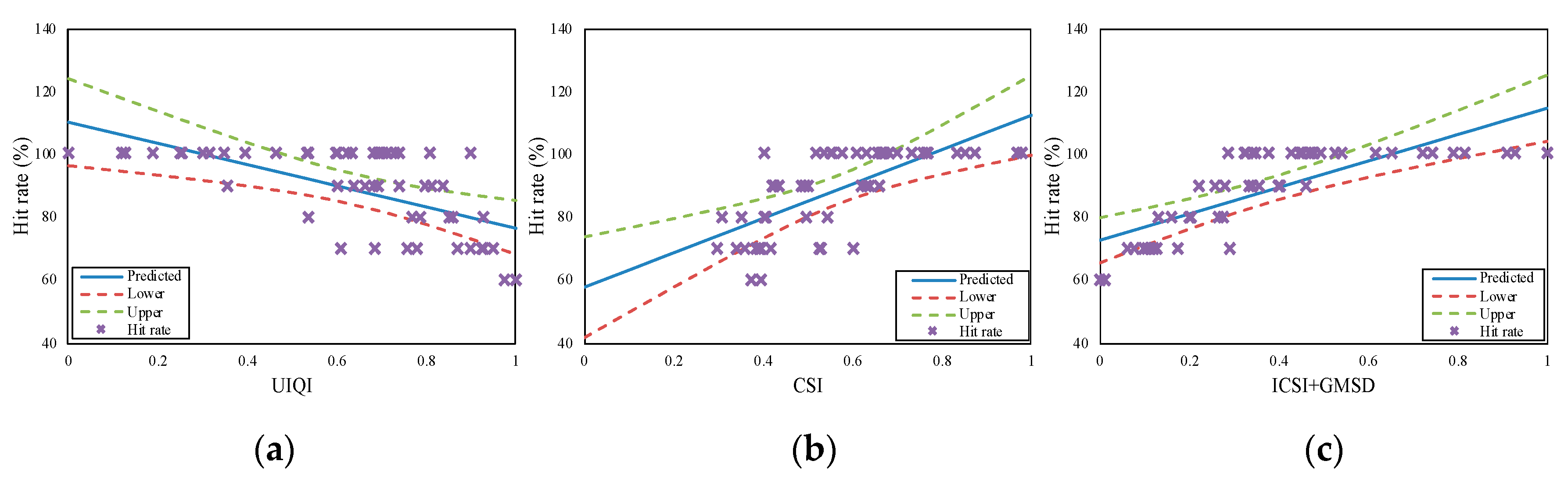

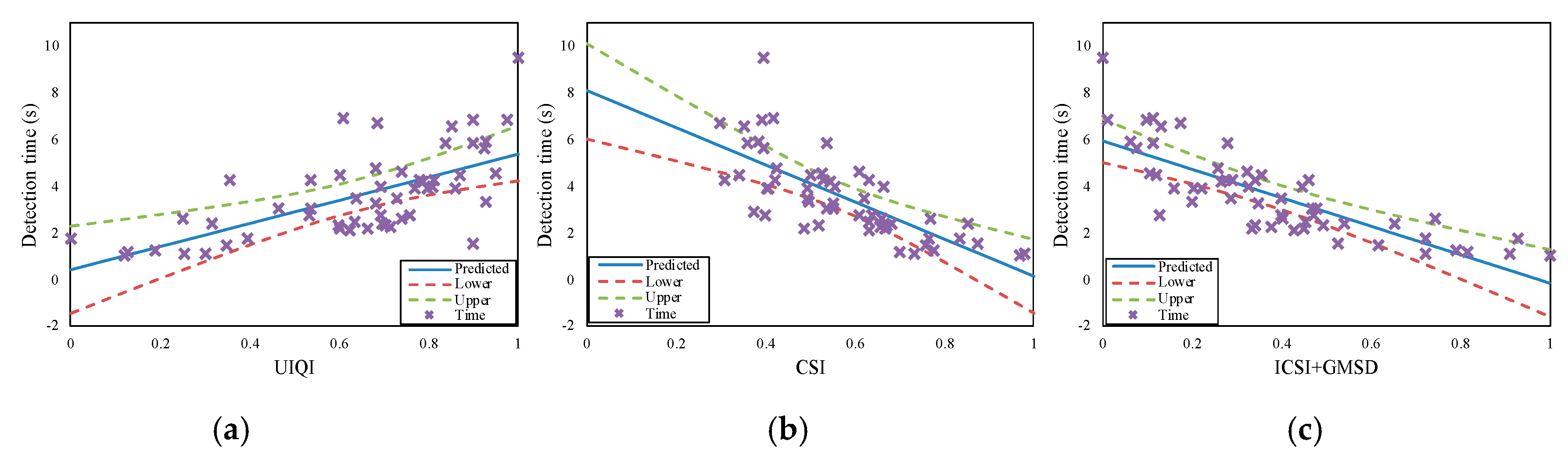

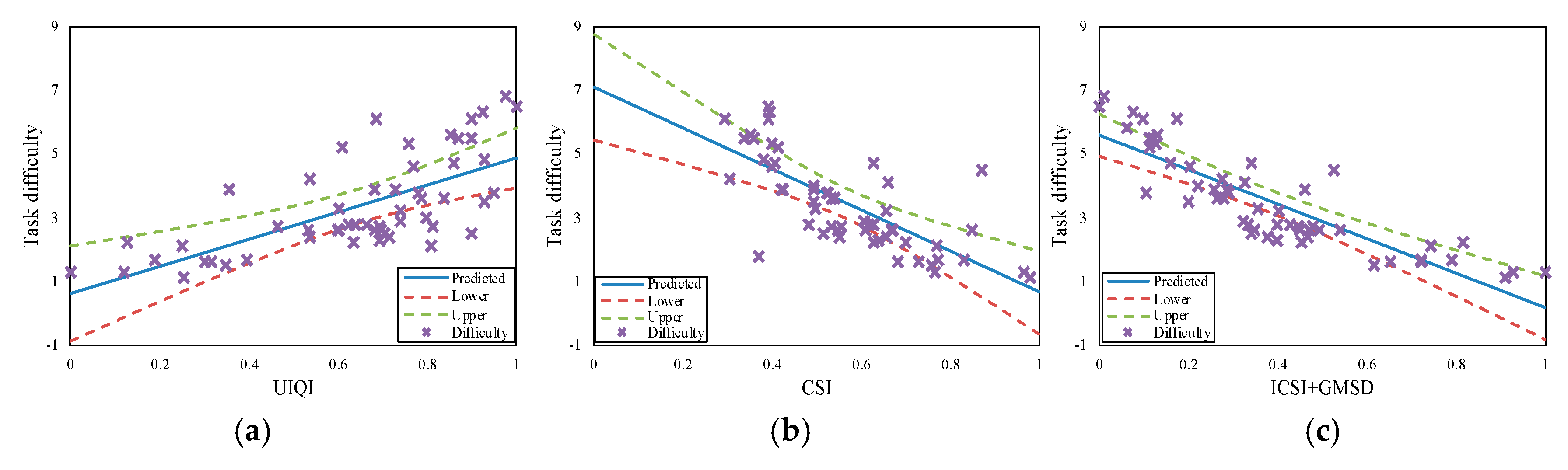

| Index | Hit Rate (%) | Detection Time (s) | Task Difficulty |
|---|---|---|---|
| UIQI | 0.6240 | 0.6578 | 0.6882 |
| CSI | 0.7586 | 0.8028 | 0.8029 |
| GMSD | 0.6991 | 0.7498 | 0.7952 |
| ICSI | 0.6807 | 0.6887 | 0.7803 |
| ICSI + GMSD | 0.7821 | 0.8087 | 0.8637 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Bai, X.; Liao, N.; Wu, W. Assessment of Camouflage Effectiveness Based on Perceived Color Difference and Gradient Magnitude. Sensors 2020, 20, 4672. https://doi.org/10.3390/s20174672
