Low-Light Image Brightening via Fusing Additional Virtual Images

Abstract

1. Introduction

- Propose a simple method for brightening a low-light or backlit image by fusing it with additional virtual images;

- Reduce the color distortion that an inaccurate intensity mapping function (IMF) can introduce into the generated virtual images;

- Present a simple method for alleviating the noise in the virtual images.

2. Generation of Two Virtual Images

2.1. Generation of Two Intermediate Brightened Images via IMFs

2.2. Brightening Pixels in Underexposed Regions

2.3. Noise Reduction of Brightened Images

3. Fusion of the Input Image and Two Virtual Images

3.1. Weights of Three Images

3.2. Fusion of Three Images via a GGIF-Based MEF

| Algorithm 1: Single image brightening and the corresponding CRFs |
|---|
| Input: a low-light image |
| Output: a brightened image |
| Step 1: Compute the IMFs from the available CRFs |
| Step 2: Generate two virtual images with larger exposure times |
| Case 1: the pixel value is above 5 |
| Brighten the pixel via Equation (17) |
| end |
| Case 2: the pixel value is below 5 |
| 1. Construct the weight matrix via Equation (2) |
| 2. Decompose the input image into a base layer and a detail layer using WGIF |
| 3. Compute the brightening constant using Equation (8) |
| 4. Brighten the pixel using Equation (9) |
| end |
| Step 3: Fuse the input image and the two virtual images to produce the final image using Equations (10)–(16) |
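The three steps of Algorithm 1 can be illustrated with a minimal Python sketch. It is not the authors' implementation, because the equations it refers to are not reproduced in this text. Everything specific here is an assumption made for illustration: the gamma-type CRF, the exposure ratios `4.0` and `16.0`, the treatment of a pixel value of exactly 5 as Case 1, the use of the IMF gain at the threshold as the "brightening constant", and a single-scale well-exposedness weighting in place of the GGIF-based multi-scale fusion. The WGIF decomposition of Case 2 is omitted entirely. Only the IMF definition, a pixel value mapped through the inverse CRF, scaled by the exposure ratio and mapped back through the CRF, follows the standard construction.

```python
import numpy as np

LEVELS = 256
THRESHOLD = 5  # pixel-value split between Case 1 and Case 2 in Algorithm 1


def crf(exposure, gamma=2.2):
    """Hypothetical gamma-type camera response: exposure in [0, 1] -> value in [0, 1]."""
    return np.clip(exposure, 0.0, 1.0) ** (1.0 / gamma)


def crf_inverse(value, gamma=2.2):
    """Inverse of the hypothetical CRF above."""
    return value ** gamma


def imf_lut(ratio):
    """Step 1: IMF as a 256-entry lookup table, z -> crf(ratio * crf_inverse(z))."""
    z = np.arange(LEVELS) / (LEVELS - 1)
    mapped = crf(ratio * crf_inverse(z))
    return np.round(mapped * (LEVELS - 1)).astype(np.uint8)


def virtual_image(img, ratio):
    """Step 2: brighten a uint8 grayscale image for a given exposure ratio."""
    lut = imf_lut(ratio)
    out = lut[img].astype(np.float64)           # Case 1: direct IMF lookup
    gain = float(lut[THRESHOLD]) / THRESHOLD    # assumed stand-in for the brightening constant
    dark = img < THRESHOLD                      # Case 2: very dark pixels
    out[dark] = img[dark].astype(np.float64) * gain
    return np.clip(np.round(out), 0, LEVELS - 1).astype(np.uint8)


def fuse(images, sigma=0.2):
    """Step 3 stand-in: per-pixel, single-scale well-exposedness weighting."""
    stack = np.stack([im.astype(np.float64) / (LEVELS - 1) for im in images])
    weights = np.exp(-((stack - 0.5) ** 2) / (2.0 * sigma ** 2)) + 1e-12
    weights /= weights.sum(axis=0, keepdims=True)   # weights sum to 1 at each pixel
    fused = (weights * stack).sum(axis=0)
    return np.round(fused * (LEVELS - 1)).astype(np.uint8)


def brighten(img, ratios=(4.0, 16.0)):
    """Fuse the input image with one virtual image per (hypothetical) exposure ratio."""
    virtual = [virtual_image(img, r) for r in ratios]
    return fuse([img] + virtual)
```

Calling `brighten(img)` on a `uint8` grayscale array returns an array of the same shape and type. Because the fusion weights favour mid-range values, dark regions are drawn mostly from the virtual images and already well-exposed regions mostly from the input, which is the intuition behind fusing differently exposed images. A per-pixel weighting like this one is prone to halo and seam artifacts, which multi-scale schemes are generally used to avoid.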

4. Experimental Results

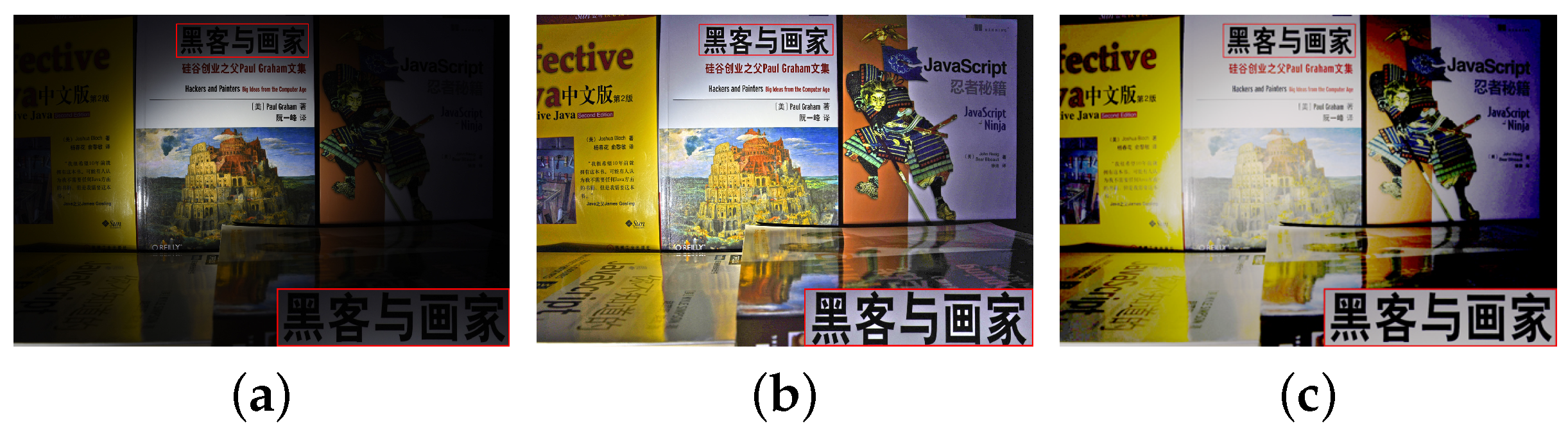

4.1. Generation of Virtual Images

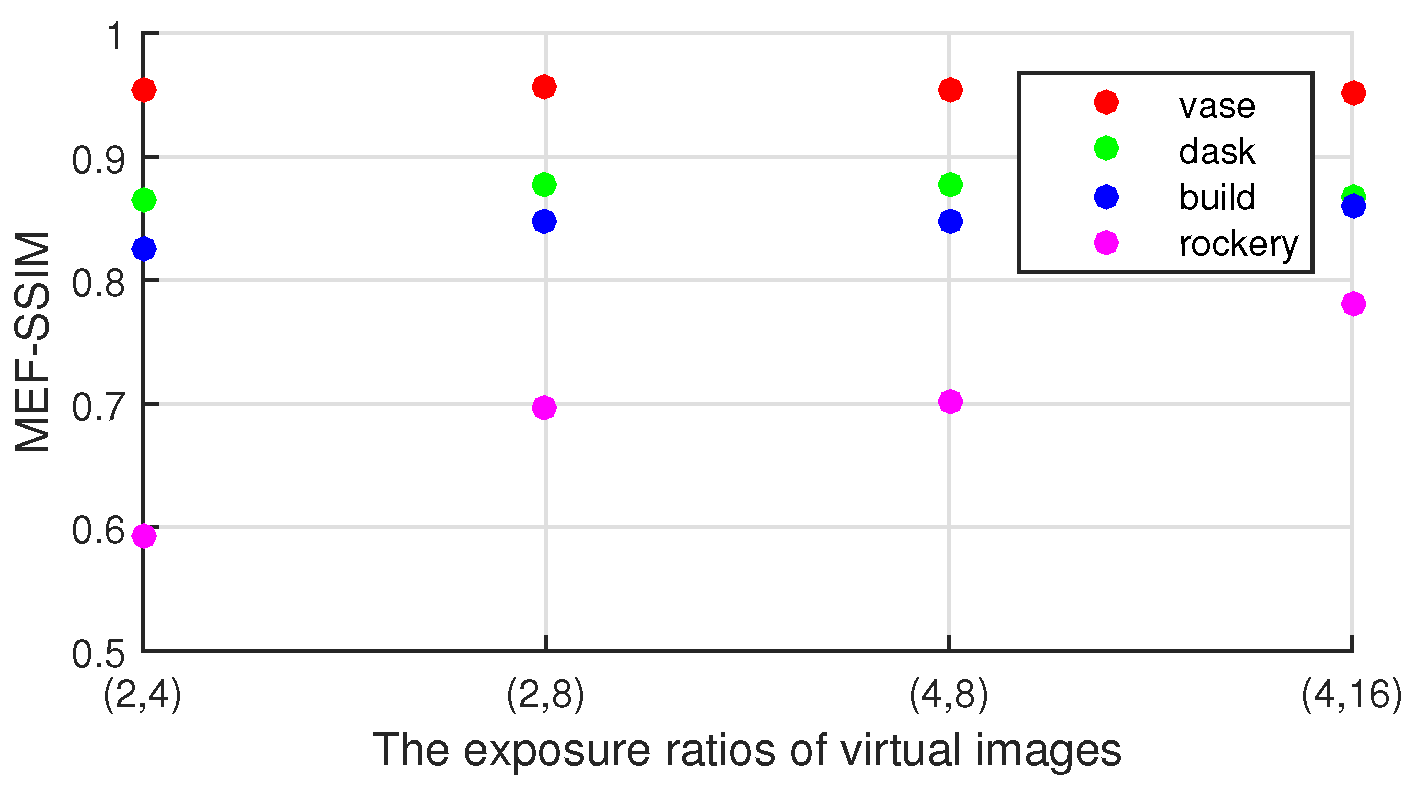

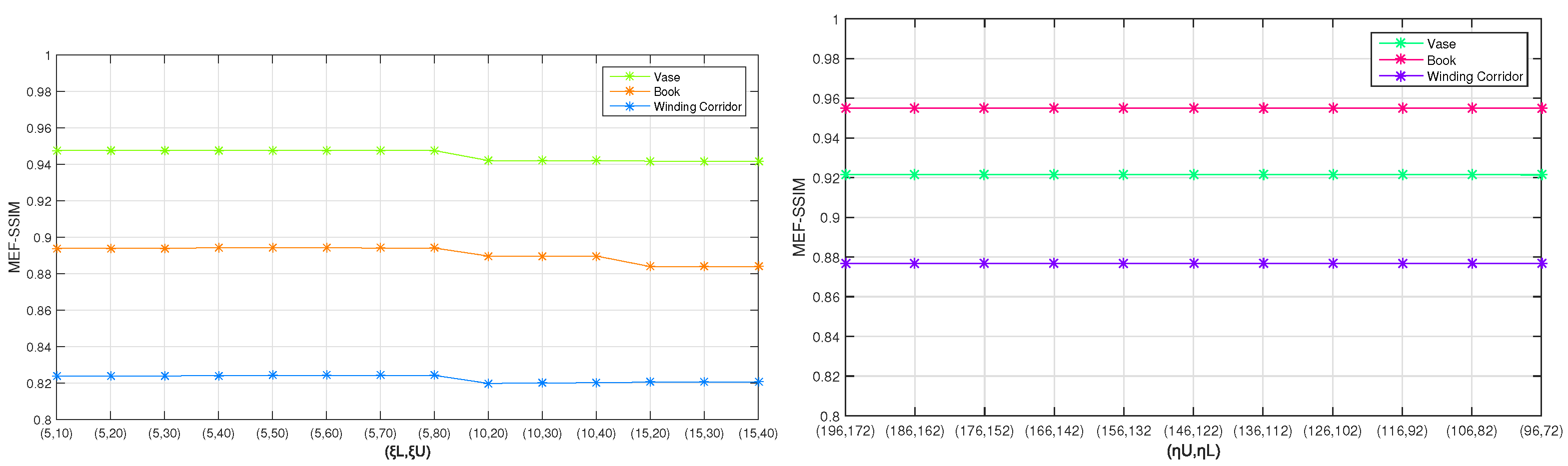

4.2. Different Choices of Parameters

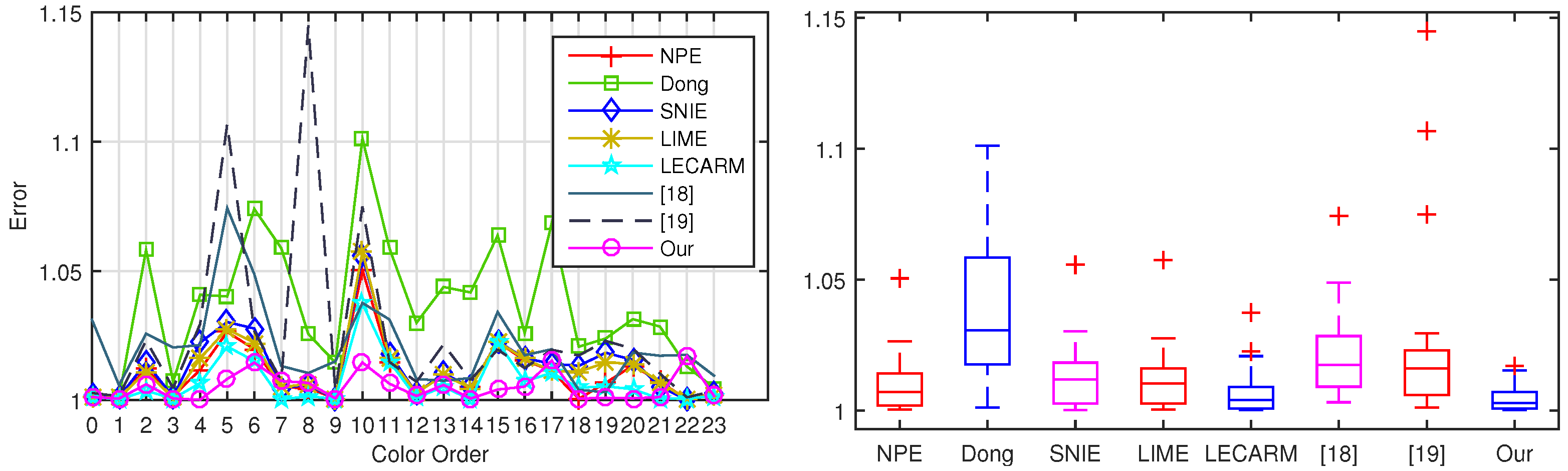

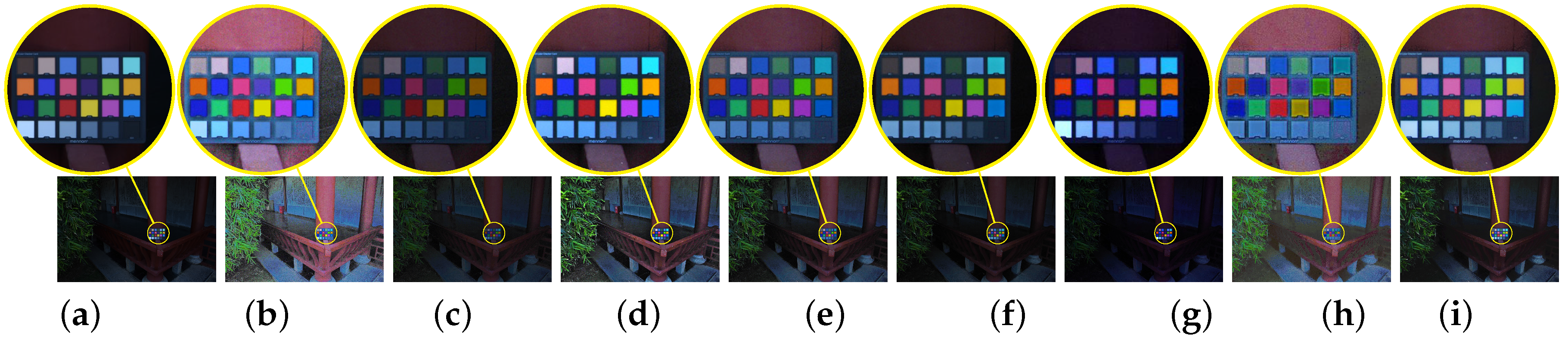

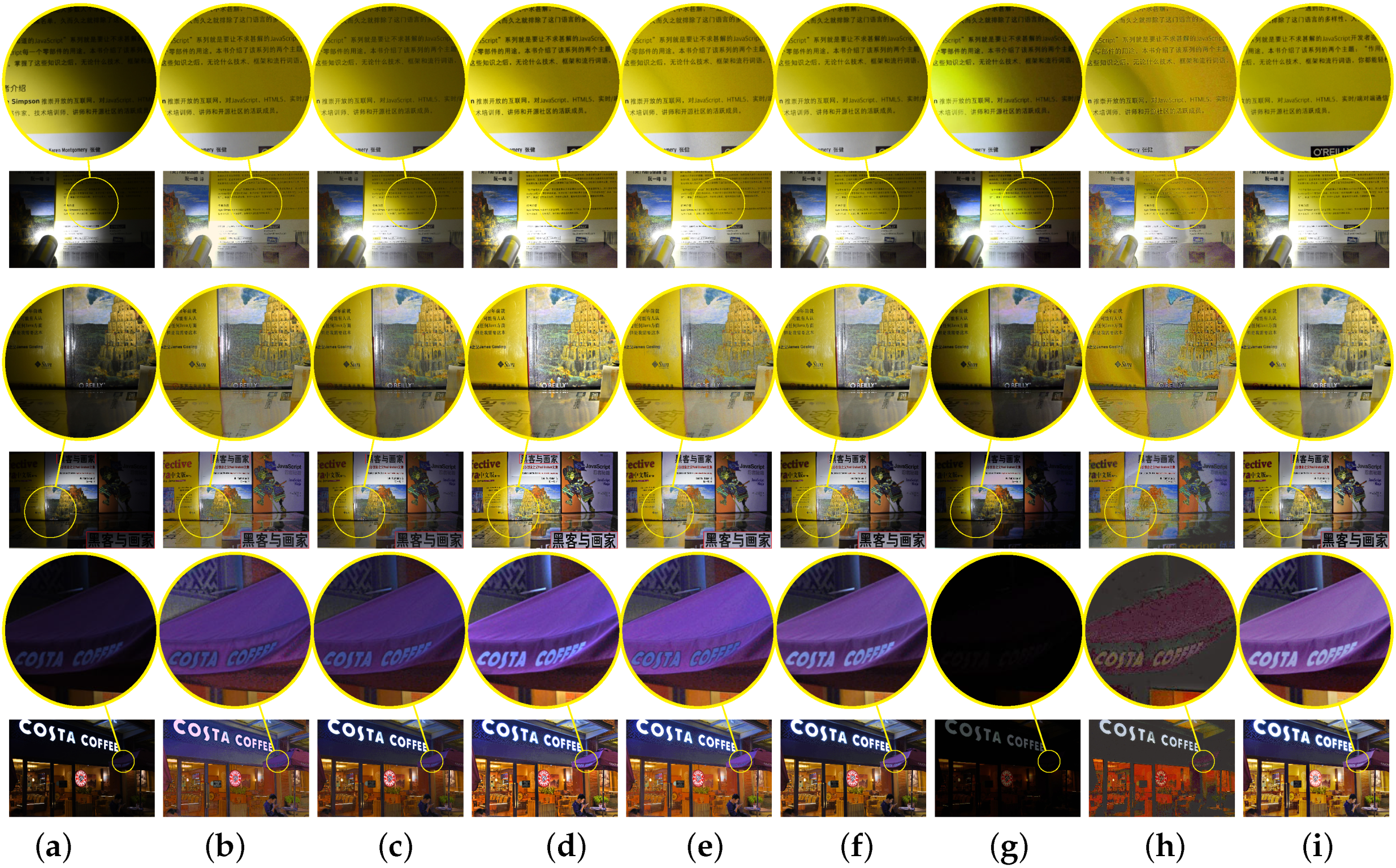

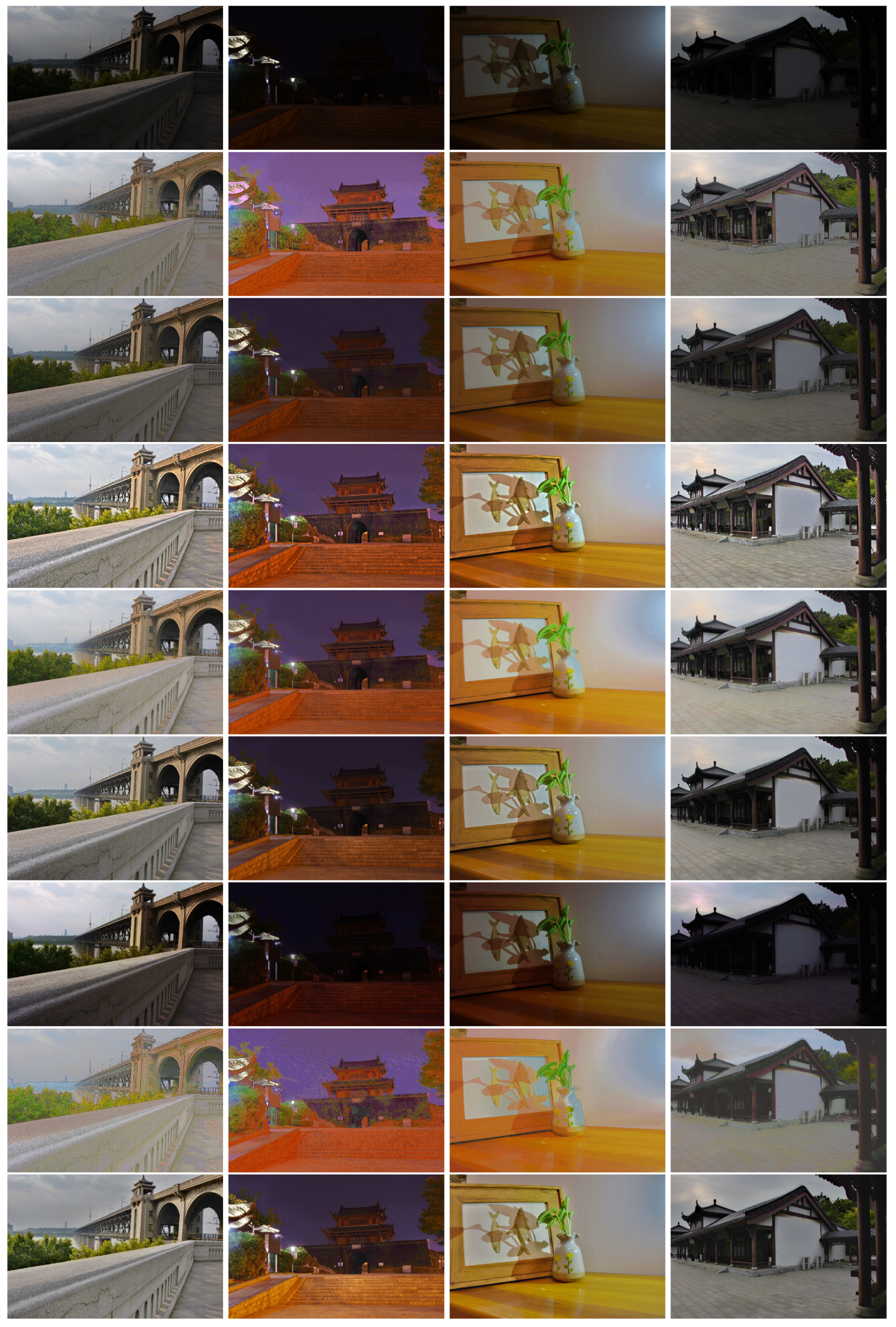

4.3. Comparison of the Proposed Algorithm with Existing Ones

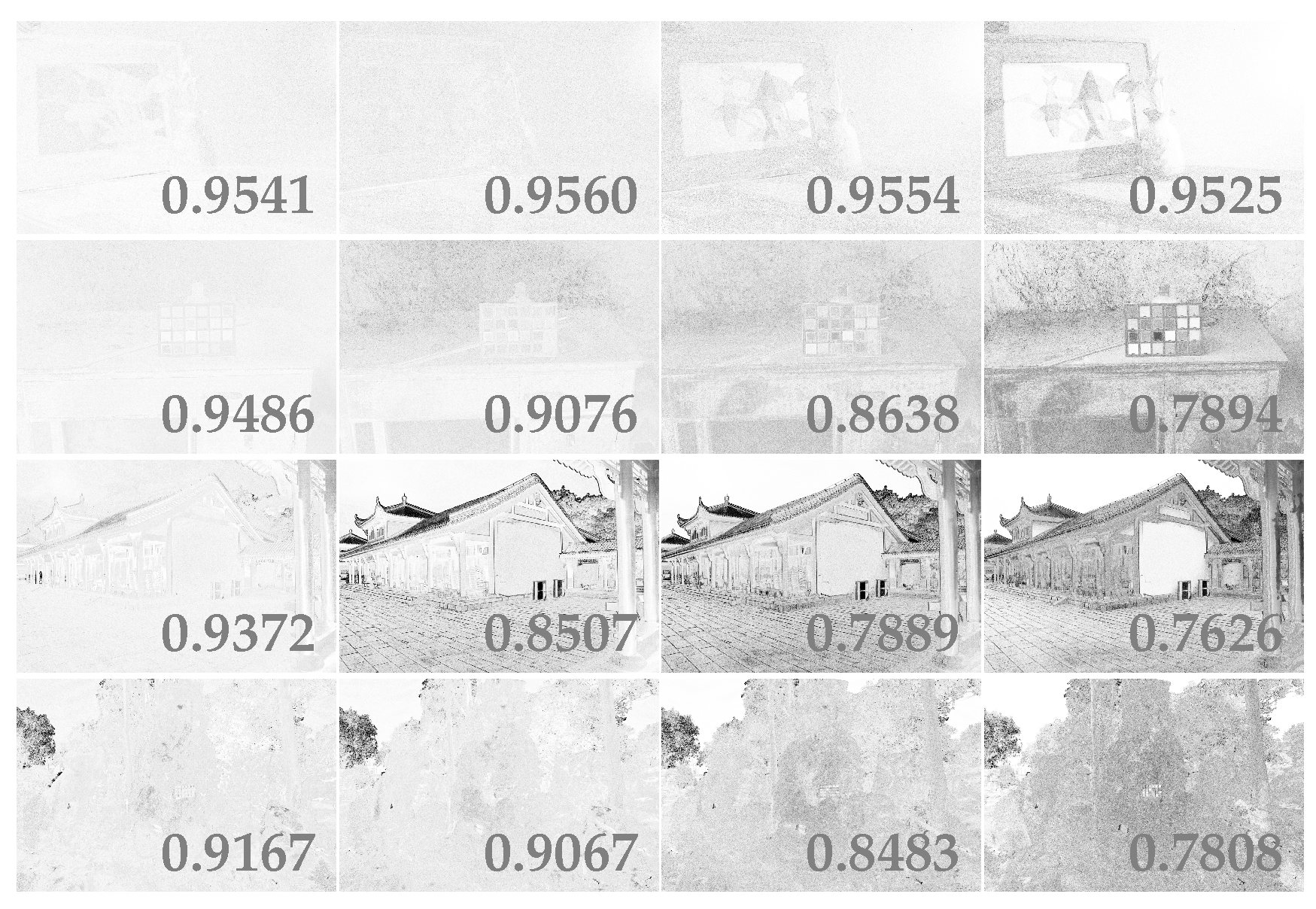

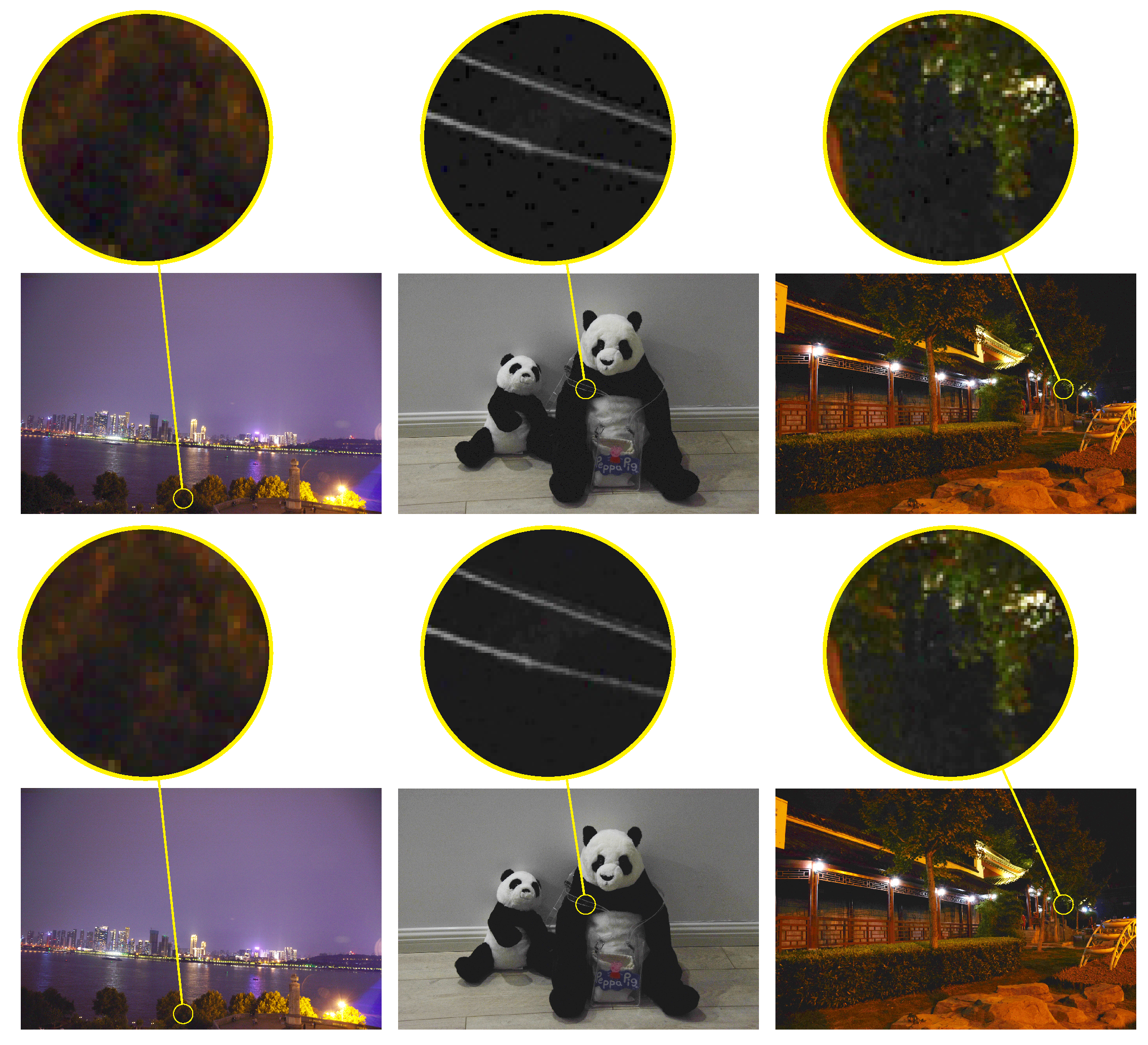

4.4. Efficiency of Noise Reduction via WGIF-Based Smoothing Technique

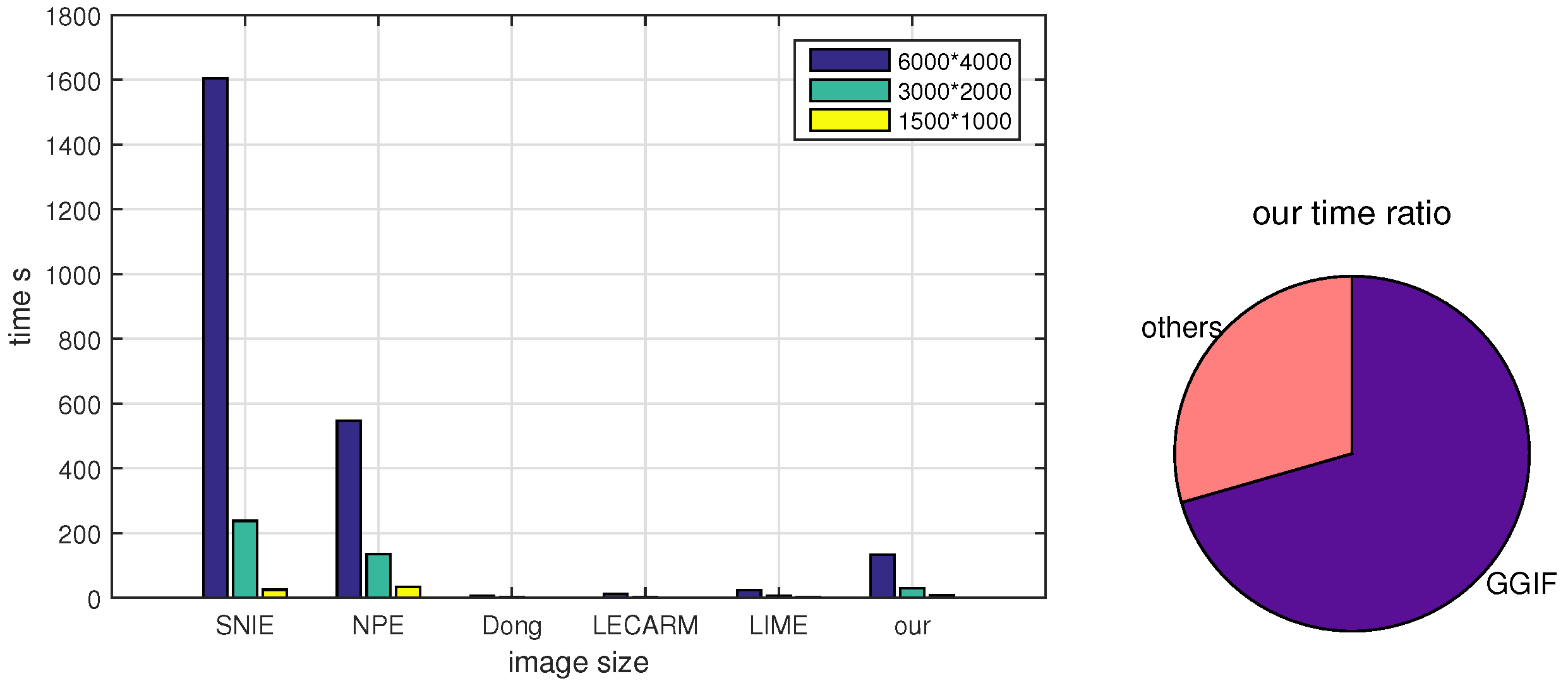

4.5. Comparison of Running Time

4.6. Limitation of the Proposed Algorithm

5. Conclusions and Future Remarks

Author Contributions

Funding

Conflicts of Interest

References

1. Zhang, L.; Deshpande, A.; Chen, X. Denoising vs. deblurring: HDR imaging techniques using moving cameras. In Proceedings of the Computer Society Conference on Computer Vision and Pattern Recognition (CVPR), San Francisco, CA, USA, 13–18 June 2010; pp. 522–529.

2. Hasinoff, S.W.; Durand, F.; Freeman, W.T. Noise-optimal capture for high dynamic range photography. In Proceedings of the Computer Society Conference on Computer Vision and Pattern Recognition (CVPR), San Francisco, CA, USA, 13–18 June 2010; pp. 553–560.

3. Li, Z.G.; Zheng, J.H. Single image brightening via exposure fusion. In Proceedings of the 2016 International Conference on Acoustics, Speech, and Signal Processing, Shanghai, China, 20–25 March 2016.

4. Chen, X.; Wang, S.; Shi, C.; Wu, H.; Zhao, J.; Fu, J. Robust Ship Tracking via Multi-view Learning and Sparse Representation. J. Navig. 2019, 72, 176–192.

5. Wei, W.; Zhou, B.; Połap, D.; Woźniak, M. A regional adaptive variational PDE model for computed tomography image reconstruction. Pattern Recognit. 2019, 92, 64–81.

6. Celik, T.; Tjahjadi, T. Contextual and variational contrast enhancement. IEEE Trans. Image Process. 2011, 20, 3431–3441.

7. Wang, S.; Zheng, J.; Hu, H.; Li, B. Naturalness preserved enhancement algorithm for non-uniform illumination images. IEEE Trans. Image Process. 2013, 22, 3538–3548.

8. Dong, X.; Wang, G.; Pang, Y.; Li, W.; Wen, J.; Meng, W.; Lu, Y. Fast efficient algorithm for enhancement of low lighting video. In Proceedings of the IEEE International Conference on Multimedia and Expo, Barcelona, Spain, 11–15 July 2011; pp. 1–6.

9. Li, L.; Wang, R.; Wang, W.; Gao, W. A low-light image enhancement method for both denoising and contrast enlarging. In Proceedings of the IEEE Conference on Image Processing, Quebec, Canada, 27–30 September 2015; pp. 3730–3734.

10. Guo, X.; Li, Y.; Ling, H. LIME: Low-light image enhancement via illumination map estimation. IEEE Trans. Image Process. 2017, 26, 982–993.

11. Land, E.H. The Retinex theory of color vision. Sci. Am. 1977, 237, 108–128.

12. Li, Z.G.; Zheng, J.H. Edge-preserving decomposition-based single image haze removal. IEEE Trans. Image Process. 2015, 24, 5432–5441.

13. Fu, X.; Zeng, D.; Huang, Y.; Zhang, X.; Ding, X. A weighted variational model for simultaneous reflectance and illumination estimation. In Proceedings of the Computer Society Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 26 June–1 July 2016; pp. 2782–2790.

14. Li, Z.G.; Wei, Z.; Wen, C.Y.; Zheng, J.H. Detail-enhanced multi-scale exposure fusion. IEEE Trans. Image Process. 2017, 26, 1243–1252.

15. Ren, Y.; Ying, Z.; Li, H.; Zheng, G.L. LECARM: Low-light image enhancement using camera response model. IEEE Trans. Circuits Syst. Video Technol. 2019, 29, 968–981.

16. Li, Z.G.; Zheng, J.H.; Zhu, Z.J.; Wu, S.Q. Selectively detail enhanced fusion of differently exposed images with moving objects. IEEE Trans. Image Process. 2014, 23, 4372–4382.

17. Dabov, K.; Foi, A.; Katkovnik, V.; Egiazarian, K. Image denoising by sparse 3D transform-domain collaborative filtering. IEEE Trans. Image Process. 2007, 16, 2080–2095.

18. Wang, R.; Qing, Z.; Fu, C.; Shen, X.; Zheng, W.; Jia, J. Underexposed photo enhancement using deep illumination estimation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 6849–6857.

19. Wei, C.; Wang, W.; Yang, W.; Liu, J. Deep retinex decomposition for low-light enhancement. In Proceedings of the British Machine Vision Conference, Newcastle, UK, 3–6 September 2018; p. 1.

20. Debevec, P.E.; Malik, J. Recovering high dynamic range radiance maps from photographs. In Proceedings of the ACM SIGGRAPH, Los Angeles, CA, USA, 3–8 August 1997; pp. 369–378.

21. Yang, Y.; Cao, W.; Wu, S.Q.; Li, Z.G. Multi-scale fusion of two large-exposure-ratio images. IEEE Signal Process. Lett. 2018, 25, 1885–1889.

22. Kou, F.; Li, Z.G.; Wen, C.Y.; Chen, W.H. Multi-scale exposure fusion via gradient domain guided image filtering. In Proceedings of the IEEE International Conference on Multimedia and Expo, Hong Kong, China, 10–14 July 2017; pp. 1105–1110.

23. Mertens, T.; Kautz, J.; Reeth, F.V. Exposure fusion. In Proceedings of the Conference on Computer Graphics and Applications, Budmerice Castle, Slovakia, 26–28 April 2007; pp. 382–390.

24. Li, Z.G.; Zheng, J.H.; Zhu, Z.J.; Yao, W.; Wu, S. Weighted guided image filtering. IEEE Trans. Image Process. 2015, 24, 120–129.

25. Ma, K.D.; Zeng, K.; Wang, Z. Perceptual quality assessment for multi-exposure image fusion. IEEE Trans. Image Process. 2015, 24, 3345–3356.

26. Karaimer, H.C.; Brown, M.S. Improving color reproduction accuracy on cameras. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 6440–6449.

27. Schiller, F.; Valsecchi, M.; Gegenfurtner, R.K. An evaluation of different measures of color saturation. Vis. Res. 2018, 151, 117–134.

28. Wang, S.; Ma, K.; Yeganeh, H.; Wang, Z.; Lin, W.S. A patch-structure representation method for quality assessment of contrast changed images. IEEE Signal Process. Lett. 2015, 22, 2387–2390.

29. Cai, J.R.; Gu, S.H.; Zhang, L. Learning a Deep Single Image Contrast Enhancer from Multi-Exposure Images. IEEE Trans. Image Process. 2018, 27, 2049–2062.

30. Chen, C.; Chen, Q.F.; Xu, J.; Koltun, V. Learning to See in the Dark. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 3291–3300.

31. Wei, Z.; Wen, C.Y.; Li, Z.G. Local inverse tone mapping for scalable high dynamic range image coding. IEEE Trans. Circuits Syst. Video Technol. 2018, 28, 550–555.

32. Li, Z.G.; Zheng, J.H. Visual-salience-based tone mapping for high dynamic range images. IEEE Trans. Ind. Electron. 2014, 61, 7076–7082.

| Method | Book | Building | Coffee | Vase | Winding | Museum | Gate | Pavilion | Market | Bridge | Avg |
|---|---|---|---|---|---|---|---|---|---|---|---|
| NPE [7] | 0.5756 | 0.4882 | 0.4496 | 0.6245 | 0.4435 | 0.4446 | 0.3568 | 0.3531 | 0.5080 | 0.3262 | 0.4572 |
| SNIE [13] | 0.5312 | 0.4762 | 0.4551 | 0.5966 | 0.4287 | 0.4379 | 0.3650 | 0.2622 | 0.4693 | 0.3150 | 0.4337 |
| LIME [10] | 0.6333 | 0.4923 | 0.5584 | 0.6426 | 0.5204 | 0.4380 | 0.4091 | 0.3686 | 0.5195 | 0.3405 | 0.4923 |
| LECARM [15] | 0.5874 | 0.4975 | 0.5173 | 0.6508 | 0.4751 | 0.4461 | 0.3925 | 0.2753 | 0.4945 | 0.3251 | 0.4662 |
| Dong [8] | 0.5619 | 0.4595 | 0.4666 | 0.5040 | 0.4329 | 0.4163 | 0.3899 | 0.2990 | 0.4879 | 0.3190 | 0.4337 |
| [18] | 0.5210 | 0.4613 | 0.4386 | 0.5802 | 0.4931 | 0.4258 | 0.3897 | 0.3011 | 0.4581 | 0.3589 | 0.4428 |
| [19] | 0.5194 | 0.4601 | 0.4067 | 0.4048 | 0.4184 | 0.4019 | 0.3561 | 0.2448 | 0.4610 | 0.3111 | 0.3984 |
| Ours | 0.6229 | 0.5171 | 0.5571 | 0.6756 | 0.4982 | 0.4629 | 0.4350 | 0.3137 | 0.5095 | 0.4260 | 0.5018 |

| Method | Book | Vase | Winding | Museum | Building | Bridge | Coffee | Gate | Pavilion | Market | Avg |
|---|---|---|---|---|---|---|---|---|---|---|---|
| NPE [7] | 0.8571 | 0.9237 | 0.7625 | 0.6566 | 0.8149 | 0.6916 | 0.7499 | 0.6285 | 0.7382 | 0.7559 | 0.7579 |
| SNIE [13] | 0.8517 | 0.9405 | 0.7895 | 0.6684 | 0.8402 | 0.7182 | 0.7939 | 0.7097 | 0.6410 | 0.7392 | 0.7692 |
| LIME [10] | 0.8730 | 0.9163 | 0.8059 | 0.6391 | 0.8111 | 0.6938 | 0.8398 | 0.6986 | 0.7666 | 0.7797 | 0.7823 |
| LECARM [15] | 0.8897 | 0.9498 | 0.8300 | 0.6729 | 0.8570 | 0.7315 | 0.8459 | 0.7496 | 0.6730 | 0.7711 | 0.7971 |
| Dong [8] | 0.8347 | 0.8599 | 0.7313 | 0.6279 | 0.8029 | 0.6431 | 0.7639 | 0.6954 | 0.7143 | 0.7609 | 0.7434 |
| [18] | 0.7599 | 0.9135 | 0.8121 | 0.6250 | 0.8109 | 0.6905 | 0.7354 | 0.6821 | 0.7232 | 0.7710 | 0.7524 |
| [19] | 0.7257 | 0.9023 | 0.7045 | 0.6134 | 0.7949 | 0.6314 | 0.7144 | 0.6180 | 0.7034 | 0.7216 | 0.7130 |
| Ours | 0.9061 | 0.9525 | 0.8447 | 0.6730 | 0.8601 | 0.7407 | 0.8544 | 0.7513 | 0.7201 | 0.7778 | 0.8081 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Yang, Y.; Li, Z.; Wu, S. Low-Light Image Brightening via Fusing Additional Virtual Images. Sensors 2020, 20, 4614. https://doi.org/10.3390/s20164614
