1. Introduction

The unprecedented success of the Internet of Things (IoT) paradigm has reshaped our daily lives. We start the day by asking smart speakers, such as Amazon Echo or Google Home, about the weather, remotely control home appliances and door locks, and switch off the lights from mobile devices before going to bed. Beyond the home, IoT now plays an important role in many other fields, such as industry [1,2], healthcare [3,4], energy [5,6], transportation [7,8] and environment monitoring [9], to name a few. The growing number of pervasive, Internet-connected IoT devices sense their environment and generate an enormous amount of data, which has become one of the major sources of information today. To understand such massive sensor data and to extract meaningful information from it autonomously, various approaches have been applied, including deep learning.

Deep learning is a class of machine learning techniques [10,11] that has surpassed conventional machine learning methods in many applications, such as computer vision and pattern recognition. As a representation learning technique, deep learning learns a representation suited to the given task, such as classification or detection, directly from the sensor data. Deep learning is now one of the most actively studied areas, and it is expected to contribute substantially to the success of many IoT applications. As a result, applying deep learning techniques to IoT applications [12,13] is also gaining much attention.

Among the areas in which deep learning excels, this paper focuses on classification. Classification is a popular supervised learning task in deep learning [10], in which a trained model predicts to which of the classes or categories an unseen input belongs. Deep learning is carried out with a neural network model that learns features from the input data. For clarity, a deep neural network built from multi-layer, fully connected perceptrons is referred to as an artificial neural network (ANN), whereas a network with convolution and pooling layers is a convolutional neural network (CNN). For the output classifier of ANNs, CNNs and other deep neural network models, the softmax function is the most widely used [10]; combined with the cross-entropy loss, it forms what is called the softmax loss.
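To make the terminology concrete, the following minimal Python sketch (standard library only; the logits are made-up numbers, not taken from any model in this paper) computes the softmax output of a three-class classifier, the arg max decision, and the cross-entropy loss that together form the softmax loss.

```python
import math


def softmax(logits):
    """Numerically stable softmax: subtract the largest logit before exponentiating."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def softmax_loss(logits, target):
    """Cross-entropy of the softmax output with respect to the true class index."""
    probs = softmax(logits)
    return -math.log(probs[target])


logits = [2.0, 1.0, 0.1]  # hypothetical raw scores for three classes
probs = softmax(logits)   # posterior probabilities, summing to 1
predicted = max(range(len(probs)), key=probs.__getitem__)  # arg max decision
print(probs, predicted, softmax_loss(logits, target=0))
```

Note that the arg max keeps only the index of the largest probability; the remaining values in `probs` are discarded, which is the information the proposed framework reuses.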

Although basic deep neural network models have shown excellent classification performance in many applications, many research communities have been striving for better performance. Such efforts can be roughly divided into two research directions: one is to design sophisticated network models [14,15,16], and the other is to replace the softmax loss with an advanced alternative [17,18,19]. We briefly review the latter, which is closer to the focus of this paper.

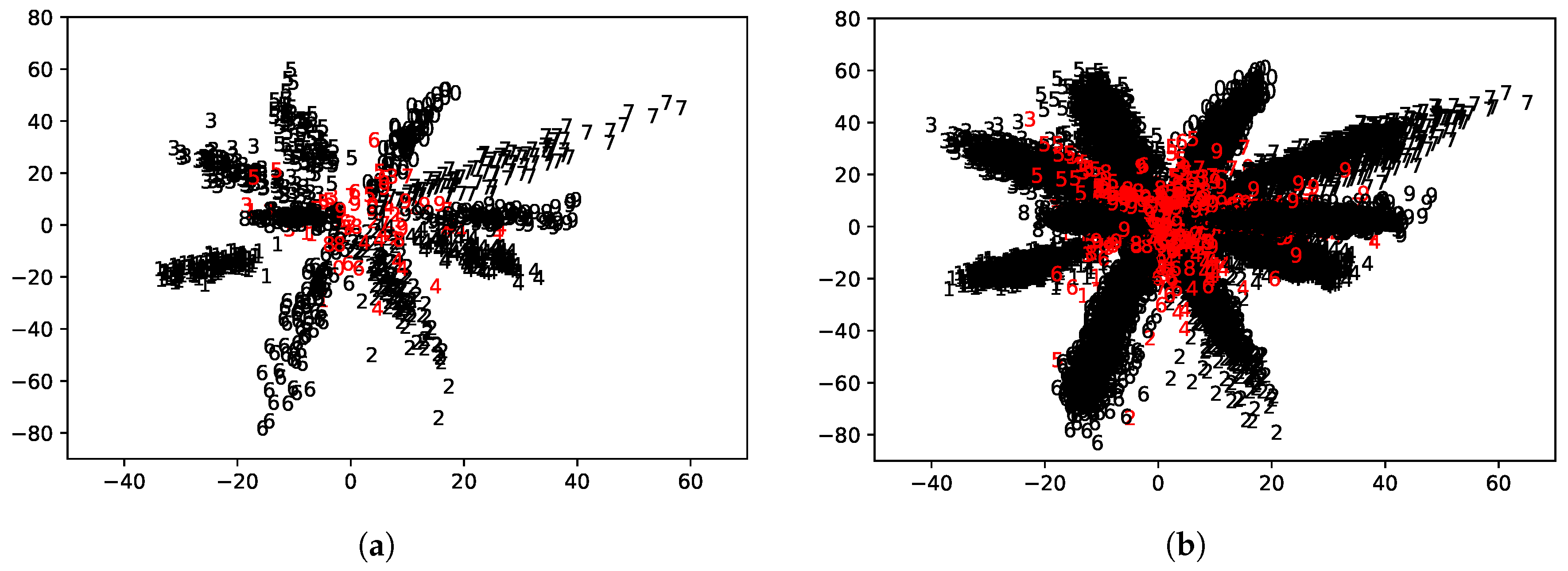

In image recognition tasks such as face identification and verification, for example, many researchers have focused on how to effectively classify a given face, i.e., map a face to a known identity, and how to determine whether two given faces belong to the same person, respectively [20,21]. In such tasks, the features learned with respect to the decision boundary strongly affect the classification or recognition performance. Therefore, extracting ideal features with small intra-class and large inter-class distances under a given metric space is the most important, yet challenging, task. Among the previous works tackling this issue with the softmax loss, the angular softmax loss for CNNs [17] adjusts the angular margin when the model determines the decision boundaries for the feature distribution, which helps the CNN learn angularly discriminative features. In [19], the authors introduced another softmax loss that incorporates the margin in a more intuitive and interpretable manner while minimizing the intra-class variance, which was considered the main drawback of the widely used softmax loss. Liu et al. [18] proposed a large-margin softmax loss function, called L-softmax, which effectively enhances both the intra-class compactness and the inter-class separability of the learned features. Furthermore, L-softmax can adjust the desired margin while preventing the network model from overfitting. On the hardware side, Wang et al. [22] proposed reducing the complexity of the softmax function through an advanced hardware implementation. The authors showed that, by reducing the total number of operations in the constant multiplication, the adjusted softmax architecture enables algorithmic reduction, fast addition and lower memory consumption at the expense of a negligible loss in accuracy. These approaches successfully enhanced the classification performance in their respective domains. However, many of these advanced techniques may not be viable or practical on low-power IoT devices.

In this paper, we assume a situation in which a trained model with a simple neural network architecture is deployed on low-power IoT devices. In general, IoT devices are limited in computing power, energy and memory capacity. Thus, running sophisticated and complex neural networks or advanced loss models is challenging [13], and even impractical for real-time IoT applications, such as self-driving cars. Additionally, replacing the existing network model with a different one for better performance may take a significant amount of time and delay deployment, since shifting to a different network model typically requires several iterations of training (on a vast amount of data), testing and hyper-parameter tuning. Thus, a low-complexity method is needed to enhance the performance of deep learning models for IoT.

On the other hand, outside the IoT setting, there exist sophisticated deep learning models that achieve close-to-perfect accuracy. Nevertheless, no model is entirely free of mistakes, and even rare errors may cause severe damage in mission-critical settings, such as battlefields and hospitals [23]. One may devise an application-specific way to further reduce errors, but it cannot be applied to general applications. Thus, a one-size-fits-all approach is needed that helps general deep learning models achieve better accuracy.

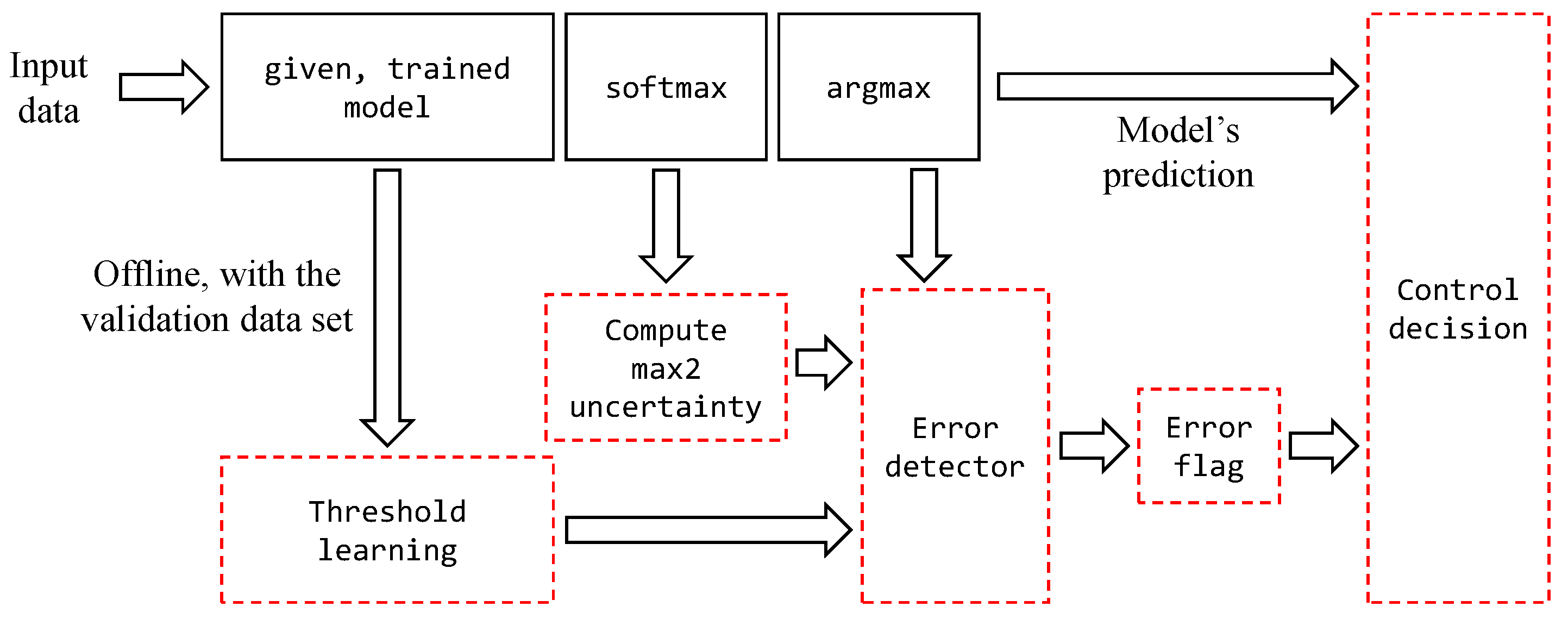

To enhance the performance of a given deep learning model without incurring any significant or time-consuming additional computation, we propose a novel low-complexity framework that operates as an add-on to general deep learning models without requiring any modification on the model's side. The basic idea of the proposed framework is straightforward. For a given input $x$, the softmax output $\mathbf{p} = [p_1, p_2, \ldots, p_N]$ is a vector of $p_i$'s, where $p_i$ is the posterior probability of $x$ belonging to class $i$ and $N$ is the number of classes/categories. Although the subsequent arg max operation selects the most likely class, it ignores how close the corresponding probability is to 1. Furthermore, if the largest probability $p_{(1)}$ is not much different from the second-largest $p_{(2)}$, the model is, in our terminology, uncertain about its prediction or decision. We propose to measure such decision uncertainty as a single quantity by using the well-known Jain's fairness index [24], which has been widely used in the computer networking domain [25]. In this paper, the fairness score computed from the softmax output is referred to as the uncertainty score and is used to measure the level of uncertainty in the model's prediction.
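Jain's fairness index of values $x_1, \ldots, x_n$ is $(\sum_i x_i)^2 / (n \sum_i x_i^2)$; it equals 1 when all values are equal and $1/n$ when a single value carries everything. The minimal Python sketch below applies it to a softmax output. The experiments later use a "max2" variant of the score whose exact definition belongs to the framework description and is not reproduced in this section; here we only assume, for illustration, that it is Jain's index restricted to the two largest softmax values (the `top_k` parameter is our hypothetical name).

```python
def jain_index(values):
    """Jain's fairness index: (sum x)^2 / (n * sum x^2), ranging from 1/n to 1."""
    n = len(values)
    sq = sum(v * v for v in values)
    if sq == 0:
        raise ValueError("Jain's index is undefined for an all-zero vector")
    return sum(values) ** 2 / (n * sq)


def uncertainty_score(probs, top_k=None):
    """Jain's index of a softmax output; higher means the model is less certain.

    top_k=None uses the whole vector; top_k=2 uses only the two largest
    probabilities (our assumed reading of a 'max2' variant).
    """
    vals = sorted(probs, reverse=True)
    if top_k is not None:
        vals = vals[:top_k]
    return jain_index(vals)


confident = [0.98, 0.01, 0.01]
uncertain = [0.30, 0.60, 0.10]
print(uncertainty_score(confident), uncertainty_score(uncertain))
print(uncertainty_score(uncertain, top_k=2))  # (0.6 + 0.3)^2 / (2 * (0.36 + 0.09)), approx. 0.9
```

The computation is a single pass over at most $N$ values, which is consistent with the low-complexity goal stated above.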

In this paper, we propose a lightweight, uncertainty-score-based framework that effectively identifies incorrect decisions made by softmax decision-making models. We also propose a novel way to make mixed control decisions that enhance the target performance when the given deep learning model makes an incorrect decision. Moreover, the proposed framework leaves the given trained model unchanged and simply adds a low-complexity function on top of the softmax classifier. The specific contributions of this work are summarized as follows:

We propose a novel framework for the widely used softmax decision-making models that enhances the performance of the given deep learning task without any modification to the given trained model. Therefore, the proposed framework can be used with any neural network model that uses the softmax loss.

We propose to use an uncertainty score to gauge the level of uncertainty in the model's prediction. In a nutshell, the similarity among the softmax output values is interpreted as an indication of how sure the model is about its current decision. To this end, we develop a practical method to effectively detect incorrect decisions made by the given deep learning model.

We propose an effective way to enhance the performance of a deep learning control system by making a mixed control decision. When the given model is believed to be yielding an incorrect decision/prediction, the proposed framework replaces the model's output with a probabilistic mixture of the available actions so as not to deviate much from the correct decision.

We propose a low-complexity yet effective method to enhance the performance of softmax decision-making models on low-power IoT devices. Through a time complexity analysis, we show that the proposed framework adds no significant load on top of the given decision-making model, and thus it can be used for online tasks.

Through an empirical study, we show how effectively the proposed framework enhances the performance of softmax decision-making tasks. Specifically, we carried out an experiment on IoT car control, for which we designed a control decision system that uses the softmax output to make a mixed, probabilistic car control decision when the model's prediction has low certainty.

Our work is innovative in that it suggests a new and systematic way of enhancing the performance of deep learning models. The proposed method treats the trained model as a black box and can therefore be applied to general deep learning models with little overhead. It also takes advantage of the entire softmax output to generate a decision when the model fails, which distinguishes it from previous studies that focus on revising either the deep neural network or the loss model. Through statistical and evaluation studies, we show that not only the largest softmax value selected by the arg max operator, but also the actual values of the entire softmax output can be used to enhance the performance of deep learning models in the domain of low-power IoT device control.

The rest of this paper is organized as follows. Section 2 gives a brief overview of image classification and the softmax loss. Section 3 describes the proposed framework for enhancing the performance of deep neural networks with the softmax loss. Section 4 presents the experimental results, and Section 5 discusses the proposed framework and provides some notes on it. Finally, Section 6 concludes the paper.

4. Experiments

In order to validate the performance of

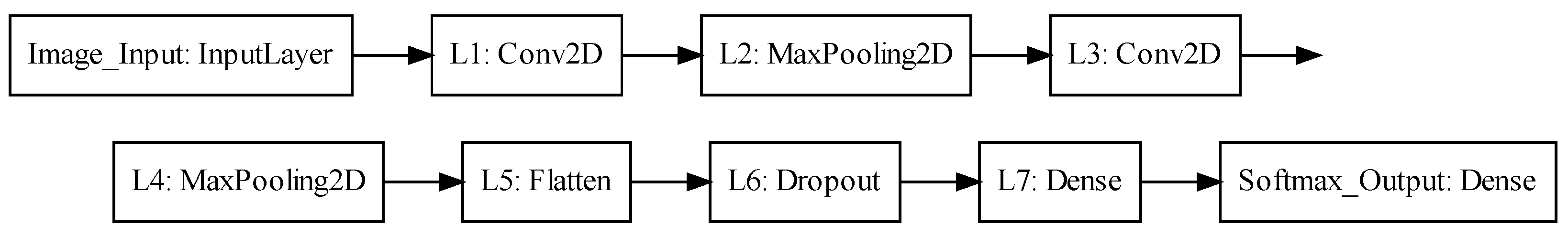

UFrame with a real-world IoT application, we have carried out an experiment. Please note that the use of the proposed framework is not limited to IoT devices. It can also be used for general-purpose and programmable low-power sensor devices. The considered use case here is making control decisions for indoor self-driving toy cars. For the low computing power of single board computers (SBC) such as Raspberry Pi (RPi), we simplified the self-driving task to image classification. At the beginning, a series of manual driving tasks were carried out by a human, during which images through the USB camera and the human controller’s input key strokes, i.e., left, forward and right, are collected. The acquired image dataset was then increased by flipping horizontally and shifting by a small amount of pixels. The entire dataset was divided into three, i.e., training (42,446 samples), validation (5305 samples) and testing (5306 samples), before training the CNN model (see

Figure 6). To speed up the real-time control decision-making process, the trained model to run on RPi was converted to a TensorFlow Lite equivalent. The trained model was an image classifier that mapped the incoming camera image into one of the three different classes indicating steering wheel directions, i.e., left, forward or right.
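The augmentation step can be sketched with NumPy as below. This is a minimal illustration under our own assumptions, not the authors' pipeline: the label encoding (0 = left, 1 = forward, 2 = right), the shift amounts, and zero-filling of vacated pixels are hypothetical choices. We also assume that a horizontally flipped image must have its steering label mirrored (left and right swapped), since the flipped scene calls for the opposite turn; small shifts keep the original label.

```python
import numpy as np

# Hypothetical label encoding: 0 = left, 1 = forward, 2 = right.
MIRROR = {0: 2, 1: 1, 2: 0}


def flip_horizontal(image, label):
    """Mirror the image left-to-right (axis 1) and mirror the steering label."""
    return image[:, ::-1].copy(), MIRROR[label]


def shift_horizontal(image, dx):
    """Shift the image by dx pixels (positive = right), zero-filling the gap."""
    out = np.zeros_like(image)
    if dx > 0:
        out[:, dx:] = image[:, :-dx]
    elif dx < 0:
        out[:, :dx] = image[:, -dx:]
    else:
        out[:] = image
    return out


def augment(image, label, shifts=(-2, 2)):
    """Return the original sample, its mirrored copy and a few shifted copies."""
    samples = [(image, label), flip_horizontal(image, label)]
    samples += [(shift_horizontal(image, dx), label) for dx in shifts]
    return samples


frame = np.arange(12, dtype=np.uint8).reshape(3, 4)  # toy 3x4 grayscale "image"
for img, lbl in augment(frame, label=0):
    print(lbl, img[0])
```

Because only axis 1 is indexed, the same functions also work on color images of shape (height, width, channels).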

In fact, the self-driving task can be implemented without deep learning in many cases. For example, an IoT car can detect the lanes on both sides with a feature extraction technique, e.g., Hough transform [

28]. Then, by comparing the centers of the lanes on both sides and the center of the car, an IoT car can drive autonomously. However, in this experiment, we considered a realistic situation where the drive or journey could be interrupted by other moving objects, such as humanoid robots, as in

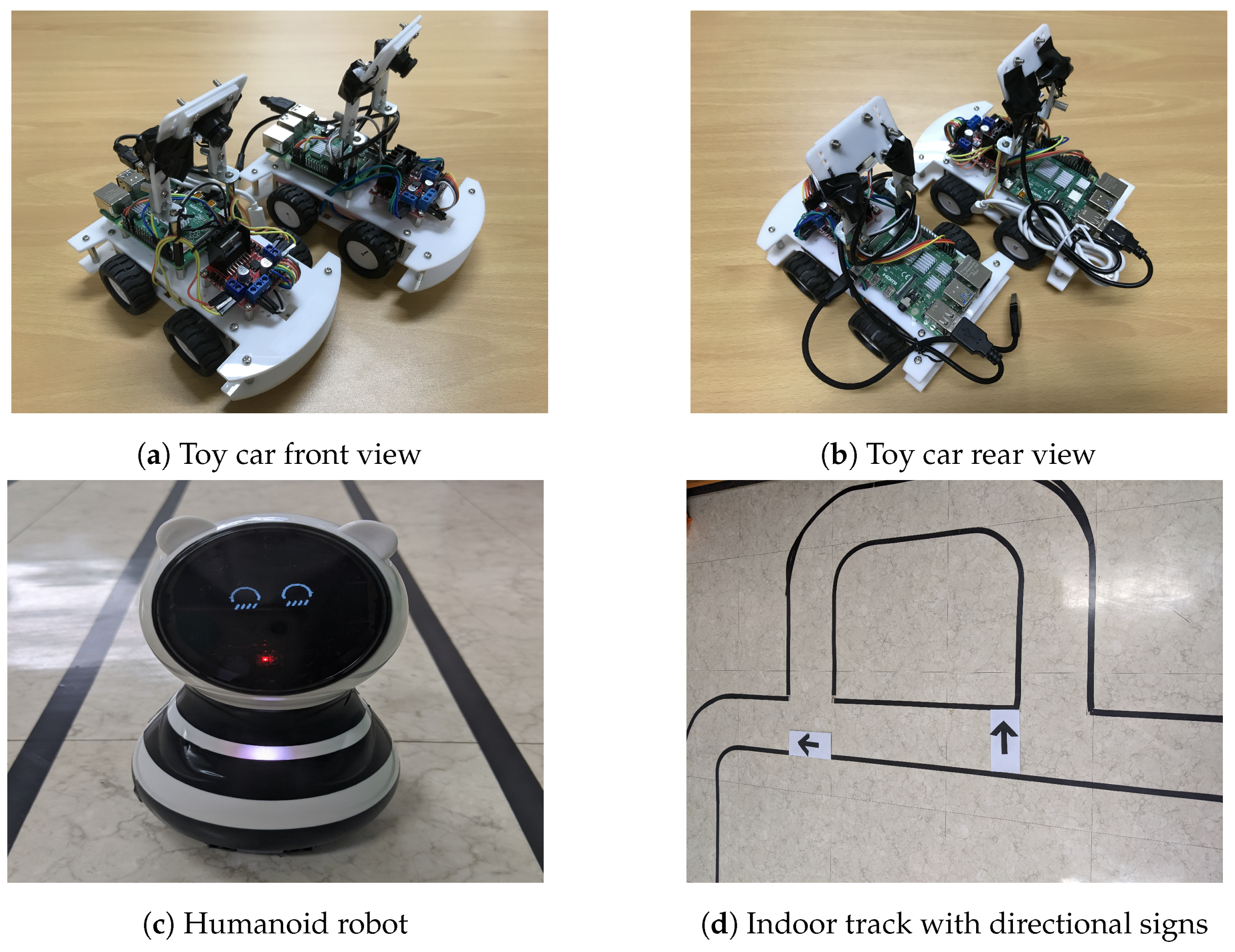

Figure 7c. Additionally, there are several intersections, and an IoT car can decide on which direction to go by the directional signs (see

Figure 7d). For a self-driving car to successfully drive while complying with the simple rules of the road, i.e., following the directional signs and stopping when blocked by other objects, we chose to solve the self-driving task by CNN-based deep learning approach.
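For comparison, the non-deep-learning alternative described above reduces to a simple rule once the lane positions are known. The Python sketch below assumes the x-coordinates of the two lane lines near the bottom of the frame have already been extracted (e.g., with a Hough transform, which is not shown), that the camera sits on the car's center line, and that a hypothetical `dead_band` tolerance separates "forward" from a turn.

```python
def steer_from_lanes(left_lane_x, right_lane_x, image_width, dead_band=0.05):
    """Choose left/forward/right by comparing the lane midpoint with the car center.

    left_lane_x, right_lane_x: x-coordinates (pixels) of the detected lane lines.
    dead_band: tolerated offset as a fraction of the image width (hypothetical).
    """
    lane_center = (left_lane_x + right_lane_x) / 2.0
    car_center = image_width / 2.0  # assumes the camera is on the car's axis
    offset = (lane_center - car_center) / image_width
    if offset < -dead_band:
        return "left"   # the lane midpoint lies to the left of the car
    if offset > dead_band:
        return "right"  # the lane midpoint lies to the right of the car
    return "forward"


print(steer_from_lanes(100, 540, 640))  # midpoint 320 -> forward
print(steer_from_lanes(40, 400, 640))   # midpoint 220 -> left
```

Such a rule has no notion of directional signs or obstacles, which is why the experiment relies on a CNN instead.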

Each toy car shown in Figure 7 carried an RPi v4 as a controller and an L298N motor driver shield on its back. The RPi was powered by a battery (not visible in the figure) with a 5.0 V, 2.0 A output. The IoT toy cars were connected via the built-in WiFi interface so that they could communicate with each other and with the road side unit (RSU). The RSU broadcast heavy-traffic and accident information, and a toy car receiving such information slowed down. A toy car could return to normal speed only after receiving another message from the RSU indicating that the situation had been cleared. If a car failed to receive a message from the RSU, a car that had successfully received it could forward it to other cars nearby. Note that such reception failures can happen for many reasons, such as being out of the RSU's transmission range, packet collisions and packet drops, to name a few.
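The message handling described above can be sketched as a small state machine. This is a simplified illustration under stated assumptions, not the cars' actual software: WiFi delivery is modeled as direct method calls, the message fields (`msg_id`, `kind`), the neighbor table and the speed values are hypothetical, and duplicate suppression by message id is our own addition to keep forwarding from looping.

```python
from dataclasses import dataclass, field

NORMAL_SPEED = 1.0  # hypothetical normalized speeds
SLOW_SPEED = 0.5


@dataclass
class Message:
    msg_id: int  # hypothetical unique id assigned by the RSU
    kind: str    # "alert" (heavy traffic/accident) or "clear"


@dataclass
class ToyCar:
    name: str
    speed: float = NORMAL_SPEED
    seen: set = field(default_factory=set)

    def receive(self, msg, neighbors):
        """Apply an RSU message once, then relay it to nearby cars."""
        if msg.msg_id in self.seen:
            return  # already handled and forwarded
        self.seen.add(msg.msg_id)
        if msg.kind == "alert":
            self.speed = SLOW_SPEED
        elif msg.kind == "clear":
            self.speed = NORMAL_SPEED  # only an explicit clearance restores speed
        for car in neighbors.get(self.name, []):
            car.receive(msg, neighbors)


a, b, c = ToyCar("a"), ToyCar("b"), ToyCar("c")
neighbors = {"a": [b], "b": [a, c], "c": [b]}
a.receive(Message(1, "alert"), neighbors)  # c never hears the RSU; relayed via a and b
print(a.speed, b.speed, c.speed)
c.receive(Message(2, "clear"), neighbors)
print(a.speed, b.speed, c.speed)
```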

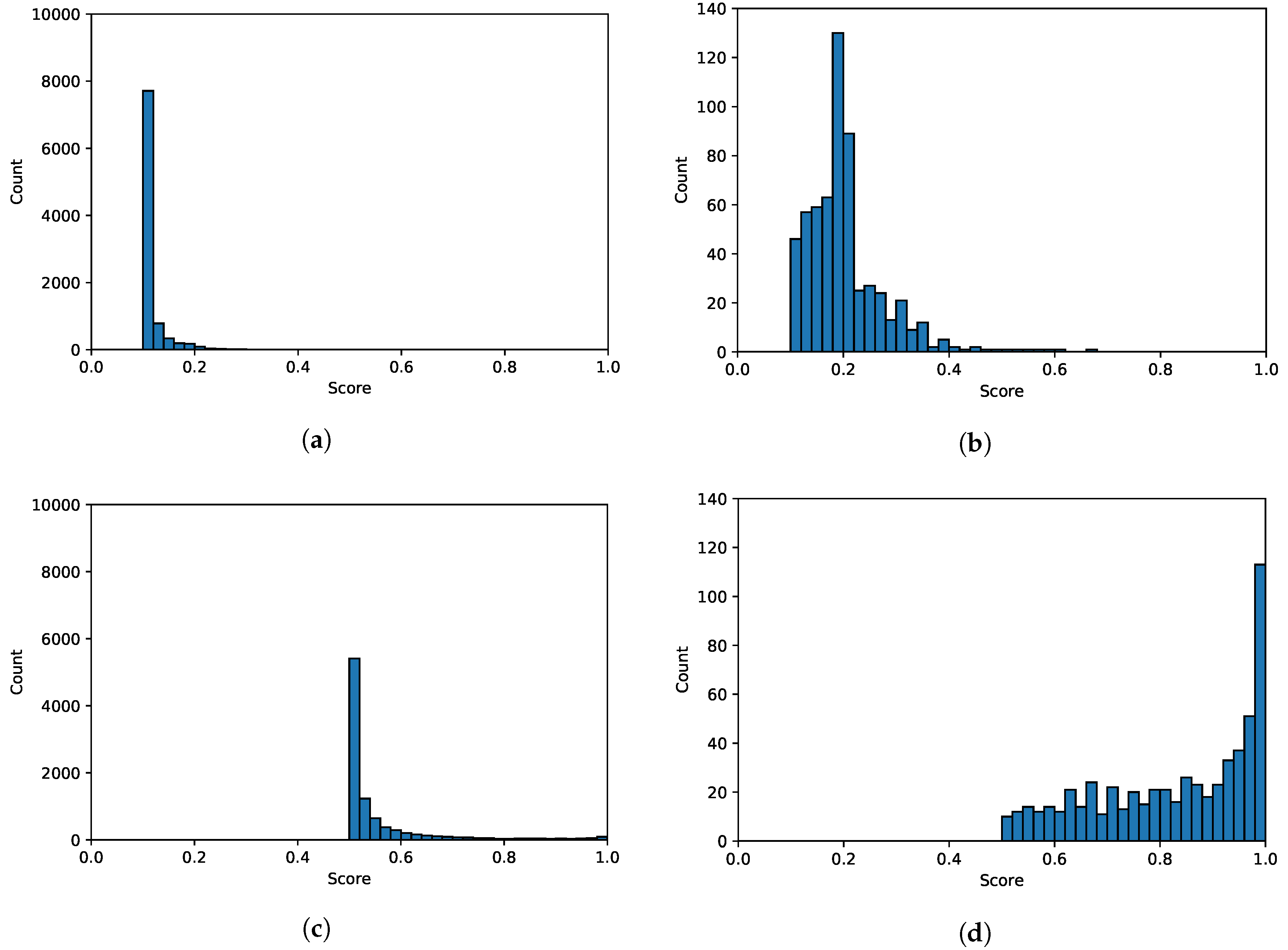

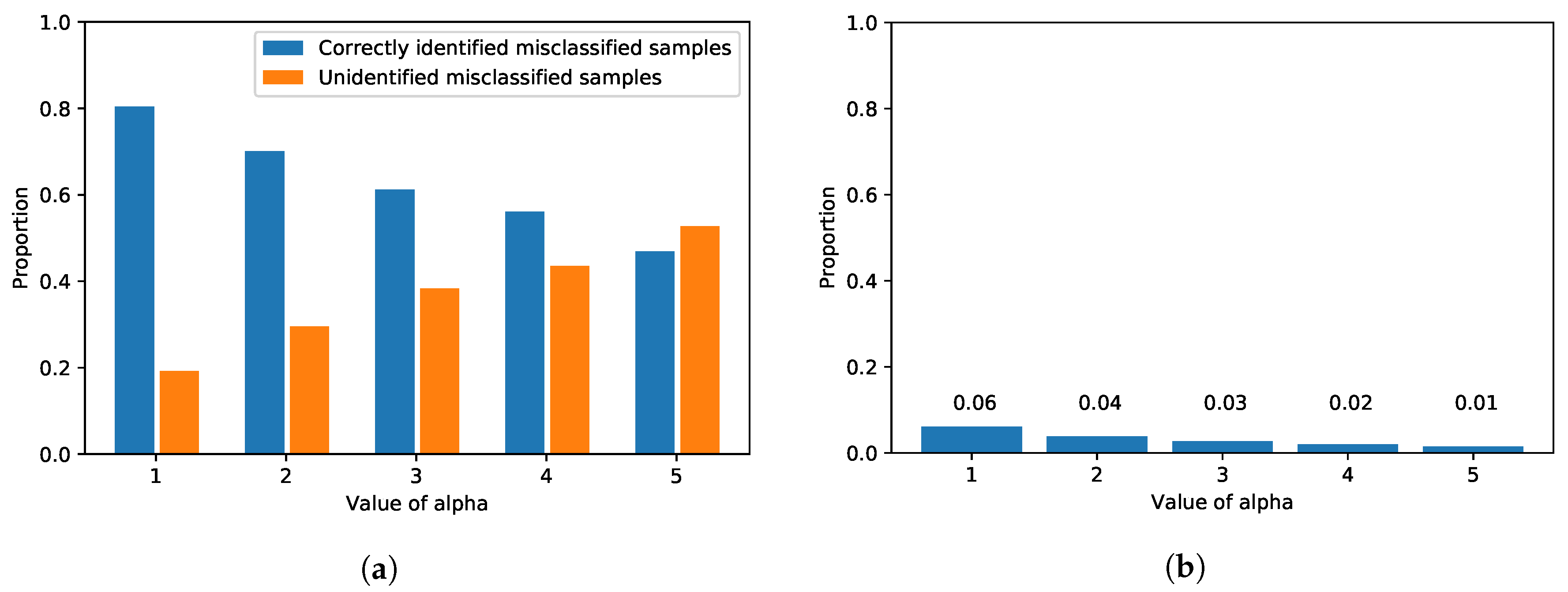

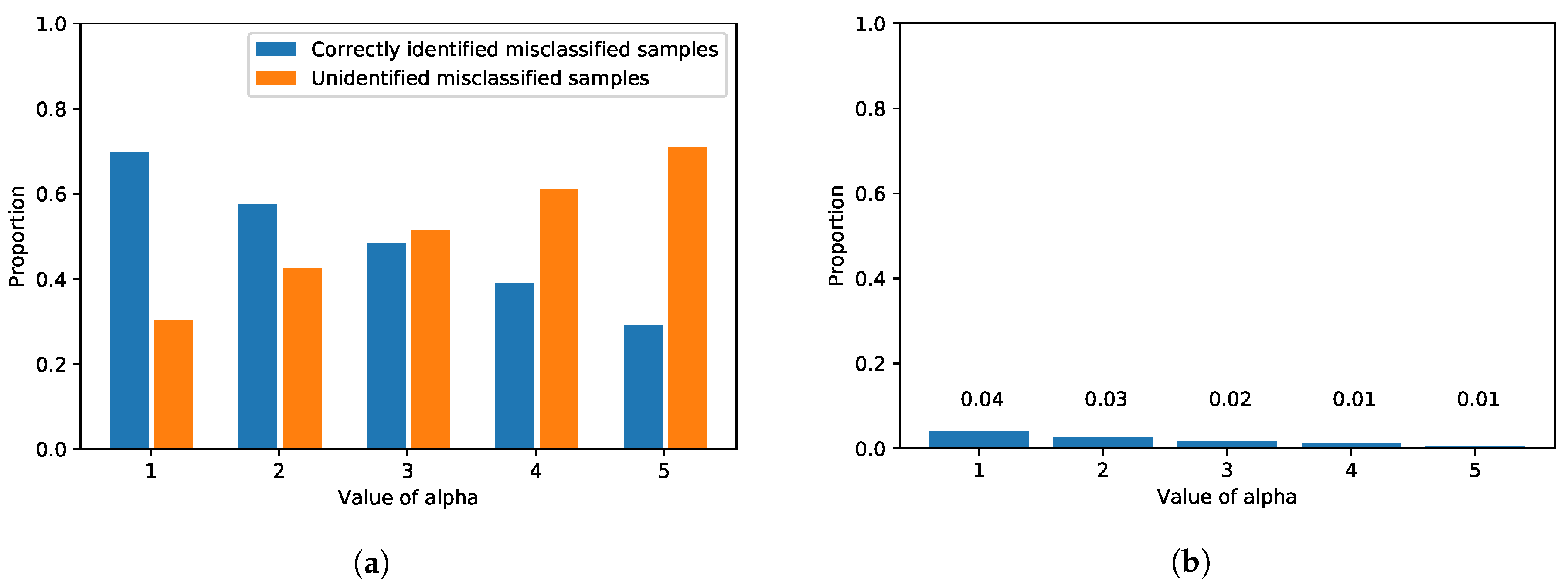

After training with several different configurations, we chose five epochs with a mini-batch size of 128 to avoid overfitting. The resulting model achieved an accuracy of about 95% on the test dataset. Again, we measured the max2 uncertainty score of the softmax output for the correctly classified samples in the validation dataset. The per-class means and standard deviations of the max2 uncertainty scores are reported in Table 3. We also evaluated the detection performance on the IoT car image dataset, and the results are shown in Figure 8. Note that the same evaluation was carried out for the MNIST dataset as well (see Figure 5). Both figures show that, although the two datasets and their applications differ considerably in the underlying neural network architecture, the number of classes and the image contents, the proposed framework effectively identifies misclassified samples in both applications (see Figures 5a and 8a), and the proportion of incorrectly identified samples is small overall (see Figures 5b and 8b). There is a difference in the patterns between Figures 5a and 8a: the orange bar exceeds the blue bar at a smaller value of the threshold parameter in the IoT car dataset than in the MNIST dataset. This difference is due only to the different numbers of classes and samples in the respective neural networks and datasets.
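The per-class statistics reported in Table 3 can be computed as in the Python sketch below, which keeps only correctly classified validation samples. The error-flag rule `mean + k * std` and the name `k` are hypothetical: the paper's own threshold definition is not reproduced in this section, so the rule is chosen only to match the stated behavior that a smaller parameter value gives a stricter detector. The scores in the usage example are made-up numbers.

```python
import statistics


def per_class_stats(scores, labels, preds):
    """Mean and population std of uncertainty scores per class, correct samples only."""
    by_class = {}
    for s, y, p in zip(scores, labels, preds):
        if y == p:  # keep only correctly classified samples
            by_class.setdefault(y, []).append(s)
    return {c: (statistics.mean(v), statistics.pstdev(v)) for c, v in by_class.items()}


def error_flag(score, predicted_class, stats, k):
    """Flag a likely misclassification when the score exceeds the class threshold.

    Hypothetical rule: threshold = mean + k * std, so a smaller k lowers the
    threshold and flags more predictions (a stricter detector).
    """
    mean, std = stats[predicted_class]
    return score > mean + k * std


scores = [0.52, 0.54, 0.50, 0.80, 0.56]  # made-up max2 scores
labels = [0, 0, 1, 1, 1]
preds = [0, 0, 1, 2, 1]                  # the fourth sample is misclassified
stats = per_class_stats(scores, labels, preds)
print(stats)
print(error_flag(0.90, 0, stats, k=1.0), error_flag(0.535, 0, stats, k=1.0))
```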

A misprediction of the model corresponds to a steering direction error of 90 degrees or more. If the model misses the forward direction, for example, the direction error is exactly 90 degrees, regardless of which direction the model mistakenly chooses. If the model misses the left direction, the direction error is either 90 or 180 degrees if it mistakenly chooses forward or right, respectively.
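These angle errors can be computed from 2-D direction vectors. The minimal Python sketch below assumes the unit vectors [−1, 0], [0, 1] and [1, 0] for left, forward and right (the same vectors UFrame uses for its mixed decision in this section) and returns the unsigned angle between any two nonzero vectors, so it also applies to mixed, non-unit decisions.

```python
import math

# Unit vectors for the three steering classes.
DIRECTIONS = {"left": (-1.0, 0.0), "forward": (0.0, 1.0), "right": (1.0, 0.0)}


def angle_error(u, v):
    """Unsigned angle in degrees between two nonzero 2-D vectors, in [0, 180]."""
    cross = u[0] * v[1] - u[1] * v[0]
    dot = u[0] * v[0] + u[1] * v[1]
    return math.degrees(abs(math.atan2(cross, dot)))


for truth in DIRECTIONS:
    for guess in DIRECTIONS:
        if guess != truth:
            print(truth, "->", guess, angle_error(DIRECTIONS[truth], DIRECTIONS[guess]))
```

Using `atan2` of the cross and dot products avoids normalizing the vectors and stays numerically well behaved near 0 and 180 degrees.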

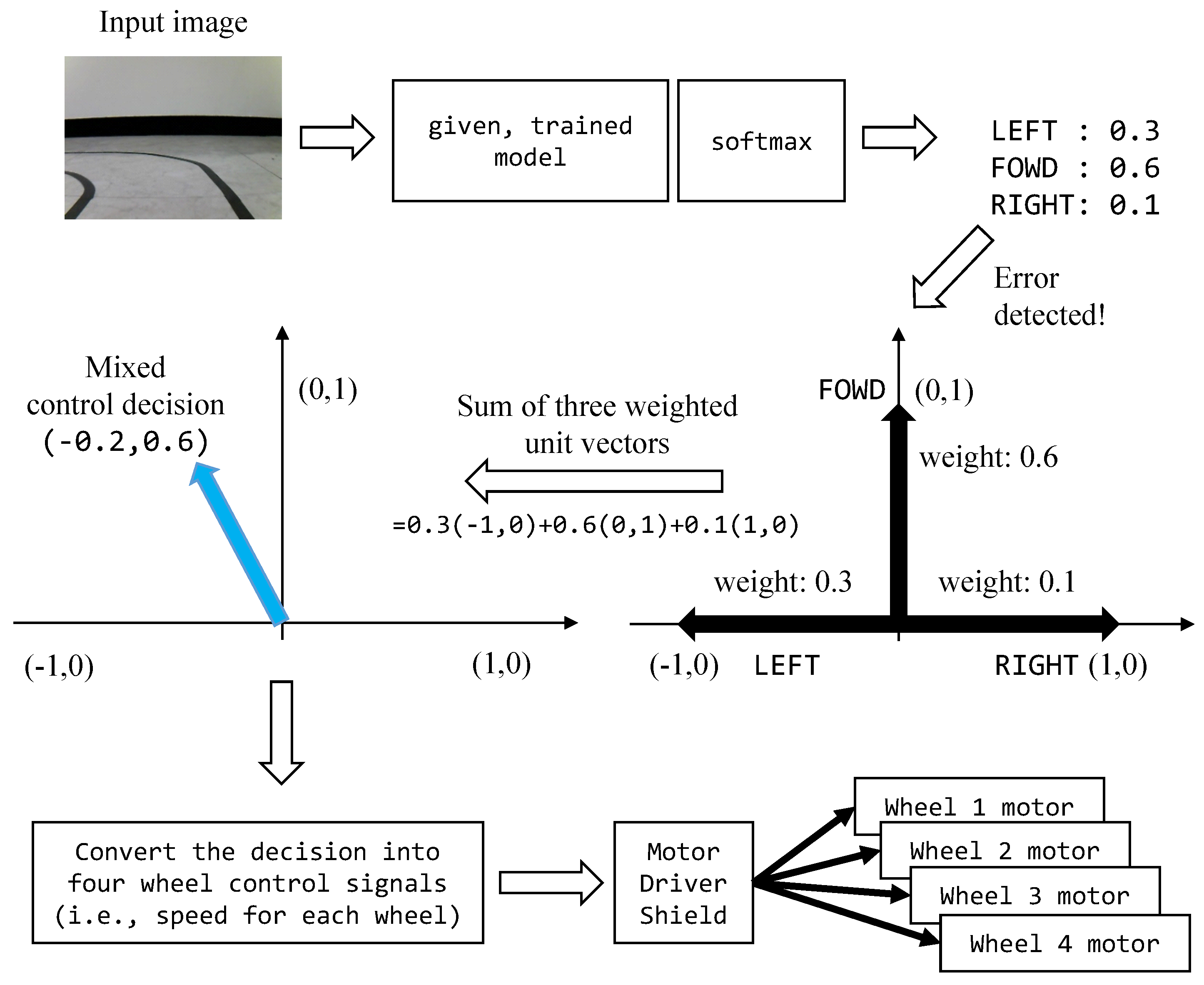

For this steering control task on an IoT car, when the model's prediction is uncertain, the proposed UFrame can make a mixed control decision by leveraging the decision uncertainties, i.e., the softmax output, as follows. If the max2 uncertainty score of the softmax output is below the threshold of the class into which the model classified the current input, the model's prediction is passed to the toy car as the control input as it is. On the other hand, if the uncertainty score exceeds the threshold, the control output of the car, i.e., the steering direction, becomes a probabilistic combination of the three directions, as shown in Figure 9. Consider the case described in the figure: for the given input image, the softmax output is [0.3, 0.6, 0.1] for the left, forward and right directions, and the max2 uncertainty score exceeds the threshold. Then, instead of using the model's prediction (i.e., moving straight, since 0.6 is the largest softmax value) to steer the toy car, UFrame makes a mixed control decision as follows. Let [−1, 0], [0, 1] and [1, 0] be the unit vectors representing the left, forward and right directions, respectively, and let each softmax value be the probability of the given input image belonging to the corresponding direction. UFrame multiplies each unit vector by the corresponding probability and adds the results to produce a vector $\mathbf{v} = [v_x, v_y]$; for the softmax output above, $\mathbf{v} = 0.3[-1, 0] + 0.6[0, 1] + 0.1[1, 0] = [-0.2, 0.6]$. The component $v_x$ indicates the normalized velocity towards the left or right, depending on its sign (− or +, respectively). Likewise, $v_y$ indicates the normalized velocity in the forward direction. The next step is to convert $\mathbf{v}$ to the motor speeds of the four wheels, which are passed to the corresponding motors via the motor driver shield.
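The decision rule above can be sketched in a few lines of Python. The gating on a precomputed error flag and the probability-weighted sum of unit vectors follow the text; the conversion to wheel speeds is not specified here, so `wheel_speeds` is a hypothetical differential-drive mapping (left-side and right-side wheel pairs) clipped to [−1, 1], included only to show how the sign of $v_x$ turns the car.

```python
UNIT = [(-1.0, 0.0), (0.0, 1.0), (1.0, 0.0)]  # left, forward, right


def mixed_vector(probs):
    """Probability-weighted sum of the direction unit vectors: v = sum_i p_i * u_i."""
    vx = sum(p * u[0] for p, u in zip(probs, UNIT))
    vy = sum(p * u[1] for p, u in zip(probs, UNIT))
    return (vx, vy)


def control(probs, flagged):
    """Use the arg max direction unless the error flag is raised; then mix."""
    if not flagged:
        best = max(range(len(probs)), key=probs.__getitem__)
        return UNIT[best]
    return mixed_vector(probs)


def _clip(s):
    return max(-1.0, min(1.0, s))


def wheel_speeds(v):
    """Hypothetical differential-drive mapping to (left-side, right-side) speeds.

    A negative vx (leftward) slows the left wheels relative to the right ones.
    """
    vx, vy = v
    return (_clip(vy + vx), _clip(vy - vx))


probs = [0.3, 0.6, 0.1]  # softmax output for left, forward, right (Figure 9)
v = control(probs, flagged=True)
print(v)                              # approximately (-0.2, 0.6)
print(wheel_speeds(v))                # approximately (0.4, 0.8): veers left
print(control(probs, flagged=False))  # (0.0, 1.0): straight ahead
```

Since the mixed vector is a convex combination of the unit vectors, its direction is pulled toward whichever classes received substantial probability, which is the property the evaluation below relies on.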

To evaluate and compare the performance of UFrame, we measured the angle error, i.e., the angle difference between the correct direction and the direction chosen by either the CNN model or UFrame (i.e., the mixed control decision), for each sample in the test dataset. Note that when the CNN model chooses an incorrect direction, the angle error is at least 90 degrees. The evaluation results for different values of the threshold parameter are shown in Table 4.

The CNN model, whose decisions do not depend on the threshold parameter, made correct decisions on 5058 test samples and missed 248, resulting in about 95% accuracy on the test dataset. Each of those misses deviates from the correct steering angle by at least 90 degrees. In contrast, for every value of the threshold parameter, UFrame made only eight such large-angle mistakes. As mentioned above, smaller values of the threshold parameter make the error detector stricter. With the smallest tested value, for example, UFrame left the error flag unset for only 4636 samples, the fewest among all settings. With the largest tested value, on the other hand, 5014 samples kept the model's decision as it was, without a mixed control decision, because of the large threshold. As the threshold parameter decreases, UFrame makes more mixed control decisions and produces fewer control mistakes with angle errors above 50 degrees, reaching the fewest at the smallest value. This indicates that making a mixed control decision even when the model's prediction is correct does not degrade the quality of the decision, since in such cases the softmax output is skewed toward the correct direction, and so is the mixed decision. Conversely, as the threshold parameter increases, UFrame makes fewer mixed control decisions but suffers from more control mistakes with large angle errors (i.e., >50 degrees). Nevertheless, for every value of the threshold parameter, UFrame outperforms the CNN model.

The CNN model produced 248 errors; in other words, it misclassified 248 input images. However, owing to the model's high accuracy (about 95%), even when it made an incorrect prediction, the softmax probability of the correct direction was typically still relatively large, which increased the uncertainty score. The high uncertainty score causes the proposed framework to make a mixed decision. Because the mixed control decision combines the three unit vectors weighted by the softmax output, it leans towards the correct direction. This is why the proposed framework makes far fewer errors than the CNN model. On the other hand, regardless of the threshold parameter, the proposed framework produced eight cases with large angle errors, i.e., ≥90 degrees. This happens when the softmax output is completely wrong, assigning almost zero probability to the correct direction. When most of the probability goes to one of the incorrect directions, the error flag is not raised, which prevents the proposed framework from making a mixed decision. When the two incorrect directions receive similar probabilities and the correct direction is either left or right, the proposed framework makes a mixed decision, but the result deviates greatly from the correct direction. Such cases occurred for input images that contained no meaningful information from which the CNN model could infer the direction, for example, images with no track/lane at all and blurry images (caused by camera shake).
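The two regimes described above can be illustrated with a short worked example. The softmax outputs below are made-up numbers chosen for illustration, not measurements from the experiment; both have near-equal top-two probabilities, so we assume the error flag is raised and a mixed decision is made with the unit vectors [−1, 0], [0, 1] and [1, 0]. In case (a) the correct direction is forward but the arg max picks left (a 90° error); in case (b) the correct direction is left and nearly all probability is split between forward and right.

$$
\begin{aligned}
\text{(a)}\quad \mathbf{p} &= [0.50,\ 0.45,\ 0.05]: &
\mathbf{v} &= 0.50[-1,0] + 0.45[0,1] + 0.05[1,0] = [-0.45,\ 0.45], \\
&& \theta_{\text{err}} &= \arccos\frac{\mathbf{v}\cdot[0,1]}{\lVert\mathbf{v}\rVert}
= \arccos\frac{0.45}{0.45\sqrt{2}} = 45^\circ, \\
\text{(b)}\quad \mathbf{p} &= [0.02,\ 0.49,\ 0.49]: &
\mathbf{v} &= [-0.02 + 0.49,\ 0.49] = [0.47,\ 0.49], \\
&& \theta_{\text{err}} &= \arccos\frac{\mathbf{v}\cdot[-1,0]}{\lVert\mathbf{v}\rVert}
= 180^\circ - \arctan\frac{0.49}{0.47} \approx 133.8^\circ.
\end{aligned}
$$

In case (a), mixing halves the angle error of the arg max decision; in case (b), the near-zero probability of the correct direction leaves nothing to pull the mixed vector back toward left, which matches the failure mode behind the eight large-angle errors.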