Abstract

The low-distortion processing of well-testing geological parameters is a key way to provide decision-making support for oil and gas field development. However, the classical processing methods face many problems, such as the stochastic nature of the data, the randomness of initial parameters, poor denoising ability, and the lack of data compression and prediction mechanisms. These problems result in poor real-time predictability of oil operation status and difficulty in offline interpreting the played back data. Given these, we propose a wavelet-based Kalman smoothing method for processing uncertain oil well-testing data. First, we use correlation and reconstruction errors as analysis indicators and determine the optimal combination of decomposition scale and vanishing moments suitable for wavelet analysis of oil data. Second, we build a ground pressure measuring platform and use the pressure gauge equipped with the optimal combination parameters to complete the downhole online wavelet decomposition, filtering, Kalman prediction, and data storage. After the storage data are played back, the optimal Kalman parameters obtained by particle swarm optimization are used to complete the data smoothing for each sample. The experiments compare the signal-to-noise ratio and the root mean square error before and after using different classical processing models. In addition, robustness analysis is added. The proposed method, on the one hand, has the features of decorrelation and compressing data, which provide technical support for real-time uploading of downhole data; on the other hand, it can perform minimal variance unbiased estimates of the data, filter out the interference and noise, reduce the reconstruction error, and make the data have a high resolution and strong robustness.

1. Introduction

In the process of petroleum exploration, the downhole pressure–time relationship in a test well can be obtained through well opening and closing operations. A reasonable real-time data processing method is the prerequisite for successfully explaining the formation structure and oil and gas distribution [1]. However, due to the interference of mechanical vibration, electrical faults, and uncertain noise, useful formation signals are often submerged in a large number of fluctuations and burrs, which are shown as non-stationary and time-varying [2]. These disturbances will not only affect the real-time prediction of downhole operation status, but also lead to inaccuracy of offline playback data, resulting in the inconsistency between the pressure measured from the surface and that from downhole [3], the misjudgment of the interpretation model mode [4], and even major accidents [5]. Logging the wrong production parameter will also increase the uncertainty of reservoir simulation and will result in the mistake of making a decision. In addition, the development of large-bandwidth transmission technology has made it easier and more convenient to routinely collect large underground data. However, at the same time, it also brings huge challenges for engineers to the management, filtering, reduction, and interpretation of these large data sets. Kikani [6] emphasized the importance of data denoising, data reduction, and trend analysis as early as 1998. He introduced the wavelet transform to accomplish the time–frequency analysis of long-term pressure transient data. For many years, although large works have been done to address individual issues, none of them have been systematically studied until now.

Therefore, to meet the needs of downhole data compression transmission and interpretation, how to efficiently extract the change trend containing well-testing operation characteristics from the strong noise background with variable frequency and compress the data have become important focuses of studying downhole parameter processing methods [7]. Given this, this paper establishes the following three purposes:

1. Filter out the noise or interference signals on the premise of ensuring undistorted original well-testing information.

2. Add the data prediction function in the data denoising process to meet the requirements of data noise reduction and underground accident early warning in actual production simultaneously.

3. Find a proper mathematical expression suitable for reversible data compression, convenient data storage, and upload, providing the possibility for transmitting the downhole data to the ground accurately and in real-time.

To filter out noises, enhance useful information, and obtain the dynamic change course of all kinds of geological parameters of oil–gas wells, the first and foremost task is denoising method selection. Classical data denoising methods mostly adopt simple moving average (SMA) [8] and frequency domain filtering [9]. However, the direct use of the SMA method will cause a loss of useful data information, resulting in a decrease in the recognizability of the data [10]. As a classic frequency domain filtering method, Fourier can filter out high frequency interference, but it cannot perform local analysis [11]. Compared with the above methods, the wavelet multiscale transform has good time–frequency domain resolution capabilities that make it extensively applied in reservoir description, noise removal, and data analysis [12], from the earliest seismic signal processing [13] to transient pressure data denoising [14] to parameter estimation [15].

As for the application of wavelet transform in oil and gas well exploration, in recent years, the method has been widely used in logging while drilling (LWD) data processing [16], downhole data boundary determination [17], and historical data reconstruction [18]. Wang [3] used the wavelet transform method to analyze the downhole transient pressure data and proved that the wavelet method can be applied to remove data noise. Athichanagorn [19] developed a wavelet-based algorithm for most of the data processing steps, such as outlier removal, denoising, transient identification, and data reduction by using the long-term data from permanent gauges. Based on Knutson Towboat Company (KTB) logging data, Amrita [20] conducted continuous wavelet transform (CWT) analysis using different decomposition scales. However, due to the difference in time–frequency characteristics of different wavelet bases, different wavelet bases often output different results when processing the same signal. To achieve the detection of a stress concentration zone for oil well casing, Liu [21] used the median filter method and wavelet transform to reduce the noises of the signal. The median filter replaces the center signal value with the signal value from the middle of the sorted list, which will lose a lot of useful information, causing a huge deviation between the filtered signal and the original signal. Zheng [22] presented a wavelet transform-based approach for recovering the production history from the measured permanent downhole gauge (PDG) pressure. By assuming that the reservoir system is linear, they realized the production evaluation of the reservoir. However, the fact is that, for a reservoir with variable properties, the above assumption is not valid. Later, Wang [23] used the Haar wavelet transform for analyzing non-stationary PDG pressure signal, to achieve the purpose of identifying the changes in reservoir properties. Although the above studies deal with issues associated with the processing of pressure data using wavelet techniques, they only touch on a single or a couple of problems related with oil well data. Research on well testing data is even rarer. These works just use different algorithms to process the data in turn, and do not analyze the influence of different wavelet configuration parameters on the processing results. In addition, the existing processing methods only filter the data and lack data prediction mechanisms, so they cannot meet the urgent requirements of special production occasions such as downhole decision-making or early warning.

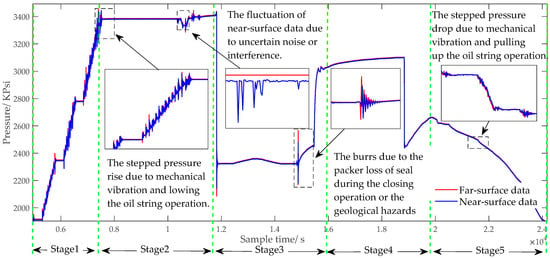

Moreover, some stage curves have similar characteristics with noise, which will make data processing more difficult. Figure 1 analyzes the characteristics of oil well-testing data. Generally, the complete well-testing operation process consists of five stage. The near-surface data are easy to transmit, but they are not as accurate as the far-surface data. In the waiting stage, because there is no downhole operation, the far-surface data remain stable and the pressure curve does not fluctuate too much. By contrast, the near-surface data in the same working stage have fluctuations due to uncertain noise or interference. These differences between near and far surface data are exactly what need to be removed in the filtering process. Meanwhile, the noises are mixed with real downhole geological pressure signals, which have similar characteristics with normal signal. Taking the stepped pressure rise as an example, even if the data characteristics are the same, the causes may be different, such as mechanical vibration, the operation of lowering the oil string, and the negligent use of oilfield workers. Similarly, there also exist burrs and stepped pressure due to the variations in downhole geological conditions and surface operation environment. If the data processing method is not appropriate, it will be very hard to identify whether the above similar characteristics is useful or not, and the useful signal will be easily filtered out as noise. Besides physical changes in the test well, the pressure gauge data acquisition system itself may pose a problem. In some cases, pressure data are stored with low precision, creating stepped change and noise [19]. All of the above situations bring much more uncertainty and ambiguity to the well-testing data processing.

Figure 1.

Diagram of oil well-testing data characteristics analysis. Five stages in the complete well-testing process are: Stage1: Lowering the oil string. Stage 2: Waiting stage. Stage 3: Well opening. Stage 4: Well closing. Stage 5: Pulling up the oil string.

The above similarities and the randomness of engineering parameters show that it is difficult to accurately describe oil and gas operations using simple mathematical formulas. It is necessary to use random system methods for non-deterministic analysis. The good news is that we found that Kalman has advantages in data smoothing and prediction, and can be used to separate dynamic noise and observation noise [24]. It is not only widely used in the fields of medical [25], aviation [26], geology [27], and disaster prediction [28], but also plays an important role in evaluating oil well reservoir parameters [29,30], model parameter correction [31,32], and reservoir dynamic monitoring [33,34]. To improve the performance of reservoir prediction, Raghu [35] used a Kalman filter to estimate the spatial permeability distribution. Xue [36] used the Kalman state-space model to correct the triaxial parameters near the bit. By using the concentration of radioactive elements, Soltani [37] proposed a Kalman-based method for solving log estimation. Mahdianfar [38] established a program-controlled hydraulic model for drilling operations and used Kalman filters for parameter estimation. However, the above researchers only considered the removal of mechanical interference and random noise, and ignored the influence of the algorithm parameters on the final processing results, resulting in poor robustness, and mistakenly removing useful information as noise. Fortunately, particle swarm optimization (PSO) is a type of full-scale random algorithm of optimization and has been widely used in petroleum exploration. The algorithm not only seeks the optimal position of individual particles but also keeps tracking the overall optimal value. To estimate reservoir properties, Adibifard [39] used PSO to implement nonlinear regression in well test analysis. Zhang [40] used PSO-based BP neural networks to predict reservoir parameters by using dynamic production information. Lang [41] studied an oil well production scheduling problem for the light load oil well during petroleum field exploitation. He proved that the PSO-based method can obtain high quality schedules within a much shorter running time. These studies show that PSO brings hope for parameter optimization.

As for the ensemble method, Kalman prediction and wavelet transform are functionally complementary. Combining the two can not only improve the resolution of the data, but also realize the dynamic prediction of engineering parameters [42]. Hong [43] proposed a Kalman Filtering method for wavelet estimation and decomposition of Random Signals. However, the method is developed based on the standard Kalman filtering and needs a set of Monte Carlo simulations. The limitation of the algorithm itself, the high consumption of the hardware, and the difficulty of algorithm transplantation bring lots of obstacles for its application. Soltani [44] designed a fuzzy Kalman filter based on a wavelet smoother to overcome the uncertainty. However, due to the complexity of the processing model and a large number of samples, the method is poorly implantable and cannot be popularized in special underground occasions that require real-time prediction and uploading.

In summary, the existing methods face great challenges in dealing with the problems coming from the occasions that urgently need multi-control parameter optimization, real-time data feedback, and prediction. Considering that the direct data prediction for signals with noises may lead to large uncertainties in parameter estimates or even a wrong prediction, we believe that aberrant sections of the data should be removed before data prediction. Therefore, to address the aforementioned challenges, a wavelet-based Kaman smoothing idea for well-testing data processing is coming up. The contributions are summarized as follows:

1. We are the first to integrate the wavelet transform method, Kalman prediction, and Kalman smoothing and use their integration for well-testing data processing.

2. An optimal wavelet parameter determination scheme for obtaining the optimal combination of decomposition scale and vanishing moment is proposed by taking the correlation and reconstruction errors as indicators.

3. A near-surface well-testing data measurement system of online Kalman prediction and offline Kalman smoothing is developed.

4. This paper takes the far-surface well-testing data as standard data, and proposes a wavelet-based Kalman smoothing method for near-surface well-testing data, which meet the requirements of data filtering, data compression, data prediction, and data smoothing.

The rest of this paper is organized as follows. Section 2 follows the process of method formulating to explain the working principles of different methods used in the proposed method. Section 3 describes the proposed wavelet-based Kalman smoothing measurement system and gives the detailed workflow and the algorithm flow. Section 4 presents the experimental simulations and results, and demonstrates the feasibility of the proposed scheme by analyzing the data reconstruction effects under different parameter configurations. Section 5 discusses the results and declares our future work. Section 6 concludes the paper.

2. Wavelet-Based Kalman Smoothing Method

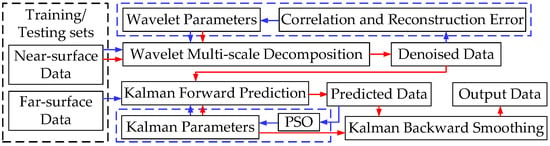

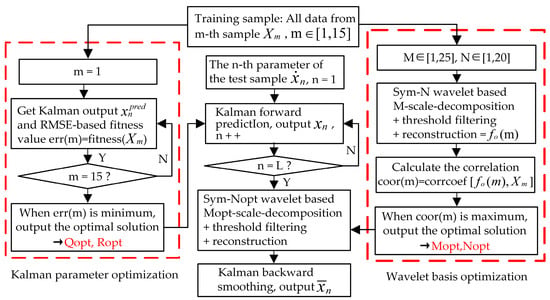

In view of weak real-time accurate data prediction and offline data analysis and interpretation capabilities due to the complex working conditions and the failure of engineering data denoising, a wavelet-based Kalman smoothing (WBKS) method to process uncertain downhole data was developed. This method combined the optimization of model parameters with data filtering and prediction, and adopted the technical route of multiscale decomposition, particle swarm optimization (PSO), Kalman prediction, and Kalman smoothing in turn. First, based on the measured downhole well-testing samples, take correlation as an index and optimize the decomposition scale and disappearance moment of the Symlets wavelet family through the iterative learning of training samples. Then, use PSO to find the optimal variance of Kalman prediction error and observation error. Finally, with the best optimization parameters, Kalman forward prediction and backward smoothing are sequentially performed on the testing samples to complete the entire processing. The flowchart of WBKS is shown in Figure 2.

Figure 2.

Wavelet-based Kalman smoothing (WBKS) algorithm flowchart. The blue dotted line and arrows represent the training process; the red arrows represent the testing process.

2.1. Wavelet Transformation and Compression

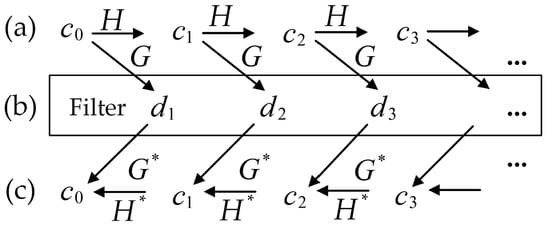

At present, there is no uniform standard for the selection of wavelet bases in the processing of well-testing data. The Symlets wavelet function is an approximately symmetric orthogonal wavelet function, which has good local characteristics in the frequency domain. It can reduce phase distortion when analyzing and reconstructing signals [45]. Therefore, we chose the Symlets family for wavelet decomposition and denoising, and the operation flow is shown in Figure 3.

Figure 3.

The flow of wavelet decomposition, threshold processing, and reconstruction. The data processing steps in this figure are as follows: (a) Decompose the well-testing data into multi-layers by Symlets wavelet. The decomposition is carried out only for low-frequency parts each time; to are the frequency details of the signal with different decomposition levels. The level is determined as needed. (b) Threshold process the high frequency decomposition coefficient d of c to filter out the noise; d can be calculated according to Formula (1). (c) Do wavelet reconstruction using denoised high-frequency decomposition coefficients. The denoised high and low frequency details can be obtained by using Formula (2), and the wavelet reconstruction coefficients can be obtained by Formula (3). Here, H and G generally refer to the low-pass and high-pass filter. and are the duality operators of H and G.

First, under a given range of wavelet disappearance moment M and decomposition scale N of Symlet wavelet, decompose the well-testing data to different scales by using the S. Mallat method and the Birge–Massart soft threshold method. The decomposition formula is as follows:

where is the low-frequency approximation of , is the high-frequency detail of , and are the low-pass and high-pass filter coefficients under the specified wavelet family, σ is the number of layers of multiscale transformation, , and n is the sampling time. Define , , then Formula (1) can be simplified as:

Well-testing information is mostly distributed in low-frequency bands, so the low-frequency features contained in the low-frequency wavelet coefficients can better reflect the variation trend of the data segment. The high-frequency features represented by high-frequency coefficients are the noise and interference parts that need to be filtered out by threshold method in the wavelet reconstruction. Polynomials fit the coefficients in the smallest interval containing all non-zero details. Calculate the coefficient matrix of the fitting polynomial and output the high-frequency details , then the corresponding multiscale reconstruction formula can be simplified as:

Therefore, after the denoising process, the number of high and low-frequency coefficients would be reduced accordingly, which provides the possibility of data restoration and data compression.

In addition, the vanishing moment M is related to the degree of energy concentration and the degree of compression of wavelet coefficients after wavelet transformation. The decomposition scale N reflects the overall approximate characteristics and the details of the signal. Here, we used correlation and mean square deviation as indicators, and chose the parameters M and N with large variance fluctuations (showing that the filtering effect is good) and small average error and mean fluctuations (showing that the reconstructed data are closer to the original data) as the optimal parameters, denoting them as and .

2.2. Kalman Prediction and Smoothing

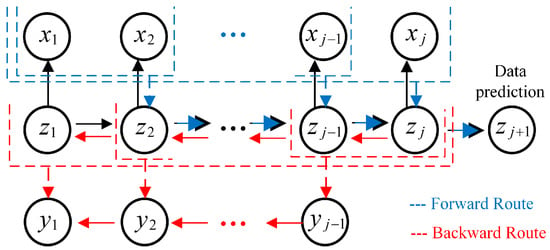

Kalman is a process of continuous prediction and correction. For each observed pressure variable , we introduced a corresponding latent variable . Define node as a variable combination at a specific observation time. Therefore, there always exists a path that connects any two observation variables through latent variables to realize the information interconnection of all oil data. Considering that the latent variables and observed variables follow Gaussian distribution, the joint probability distribution can be obtained as

Figure 4 gives the tree structure illustrating the process of Kalman; it includes two processing routes: forward prediction and backward smoothing.

Figure 4.

The tree structure interconnecting observed variables and latent variables through forward (blue line) and backward (red line) routes.

2.2.1. Online Forward Prediction Model

The forward pressure parameters prediction was done in the downhole online test. As shown by the blue path line in Figure 4, the observed variable sequence was used to derive the variable sequence . Define the transfer information between adjacent observation variables as the normalized marginal probability distribution and denote it as . Therefore, what needs to be calculated in the equation is the mean value and variance , and the recursive equation is

where the transition probability is , , the emit probability is , . is the normalized coefficient, A is the state transition matrix, C is the observation transformation matrix, Q is the variance of prediction error, R is the variance of observation error, means that the variable x follows the Gaussian distribution with mean and variance . Then, based on , the following relationship can be obtained:

The Kalman prediction gain matrix can be expressed as

Therefore, for a given , , , the marginal posterior probability of could be calculated. With the entry of new observation data, the model would continue to obtain prediction values that reflect the true downhole measuring environment.

2.2.2. Offline Backward Smoothing Model

The backward data smoothing belongs to offline processing and was performed after the pressure parameters are played back to the ground. As shown by the red path line in Figure 4, transfer the information of node to node in reverse, calculate the Gaussian marginal probability distribution of smooth node , and record it as . Then, combine and to form an inverse recursive distribution , where,

In the same way, we can obtain

Here, in order to determine the parameters Q and R, we took root mean square error (RMSE) as the fitness index and used PSO to seek for the optimize Kalman parameters. In the PSO algorithm, all particles are given random velocities and positions, and they fly continuously in multi-dimensional space [46]. For the th particle in the kth generation, the PSO algorithm calculates the velocity and position of the Dth dimension of the k + 1th generation according to Formulas (16) and (17).

where ; pop is the total number of particles, and and are the velocity and position of the th particle of the kth generation in the Dth dimension, respectively. is the best location for th particles in the Dth dimension. is the best location for particles in the Dth dimension. and are acceleration factors. is a random number within the interval [0, 1]. is the inertia weighting factor. The final optimal position of the population is the optimal search position of the particle swarm.

For the m-th well-testing training sample , define the fitness value as shown in Formula (18), where , is the Kalman predicted output of obtained according to Formula (6). When iterative times are met or the position of the optimal particle that can obtain the minimum value of is found, and the optimal local Kalman parameters are determined, denote them as and . Finally, compare all training samples and determine the parameters that can minimize as the optimal global Kalman parameters. The global optimal solution is given in the “Experimental simulation and results” section.

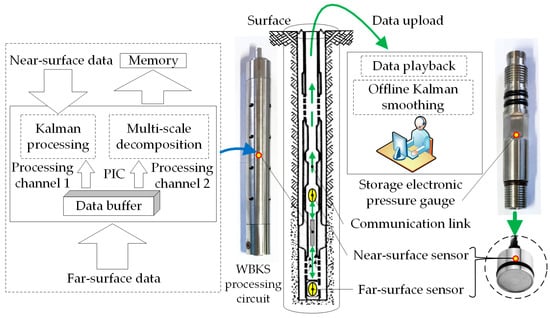

3. Wavelet-Based Kalman Smoothing Measurement System

All samples were collected from the well testing platform of Huabei Oilfield. Each sample included near-surface data and far-surface data, both of which were measured by electronic pressure gauges at the same time but at different well depths. The pressure point number in each sample is from a few score of thousands to hundreds of thousands. Considering that the data obtained from different sensors at different wells or depths have different data sizes N and pressure amplitudes y, we pre-normalized N of each sample at [0, 1000] and y at [0, 100]. The data collected by the near-surface sensor are easy to upload but there exists data distortion and redundancy, while the far-surface sensor is closer to the position to be measured and the collected data are more real and effective. Meanwhile, because the cost of pulling out the oil string is large, so in WBKS system, we took the far-surface data as the standard data, and completed the downhole online parameter estimation and the surface offline data smooth processing for near-surface data. The object we multiscale decomposed is the data of one operation stage. The time interval of each stage data is the same and is one second. As shown in Figure 5, the workflow of WBKS signal acquisition and processing can be summarized as:

Figure 5.

WBKS workflow from data acquisition to data playback based on the well-testing platform.

- Perform filtering, shaping, and amplification of analog signals collected by Keller sensors.

- Convert the pressure analog signals into digital signals through an AD converter.

- Transmit the digital signal to the PIC processor, complete the real-time Kalman forward prediction, and wavelet decompose the pressure signal of one working stage.

- Store the standard data, the predicted data, and the wavelet coefficients of the data in each working stage into the memory chip.

- Use the ground software to download all the above stored data and offline Kalman backward smooth the predicted data.

- Use wavelet coefficients to restore the data, and analyze and compare the data reconstructed by wavelet coefficients with the standard data.

Among them, the storage format of the pressure signal after wavelet decomposition was: “pressure cluster index number + wavelet decomposition coefficient”. The storage location of each pressure cluster was determined by a unique index number. The ground software read the wavelet decomposition coefficients of the corresponding pressure data segment according to the storage format, and sequentially performed data playback, reconstruction, and Kalman smoothing. If a downhole real-time transmission device is equipped to the WBKS measurement system, the new combined system will be able to complete downhole operation decisions and early warnings.

This paper used a combination of multiscale analysis and Kalman processing to provide a guarantee for accurately extracting effective information from well test data. The main task was to complete the processing of the collected data. Figure 6 shows the detailed algorithm flow of WBKS. The simulation algorithm of the WBKS model is presented in Algorithm 1.

| Algorithm 1 WBKS algorithm |

| Pretreatment: Normalize the data length of all samples to L. Model Training: Input: m-th well-testing training sample , [1,15]. Steps: 1: Use PSO to obtain and under the minimum RMSE fitness . 2: Decompose within a given range of decomposition scale and vanishing moments . 3: Get the high-frequency and low-frequency coefficients of the highest decomposition layer, perform Birge–Massart soft threshold processing, and obtain the wavelet reconstruction data . 4: Calculate the correlation between and , where . 5: Repeat steps 1–4, select and with minimum as the optimal Kalman parameter, denote them as and ; select M and N with maximum as the optimal combination of wavelet reconstruction parameters, denote them as and . Model Testing: Input: The n-th data point of testing sample . Steps: 1: Use and to calculate the predicted value of and restore it. 2: If n = L, do wavelet-based -scale-decomposition, soft threshold filtering, and reconstruction on , save the reconstructed wavelet coefficients. |

| 3: Use Formula (14) to smooth and obtain . |

Figure 6.

The algorithm flow of WBKS.

4. Experimental Simulations and Results

This article analyzed 15 field samples obtained on the spot. All data training and test results were obtained by MATLAB 2017a simulating.

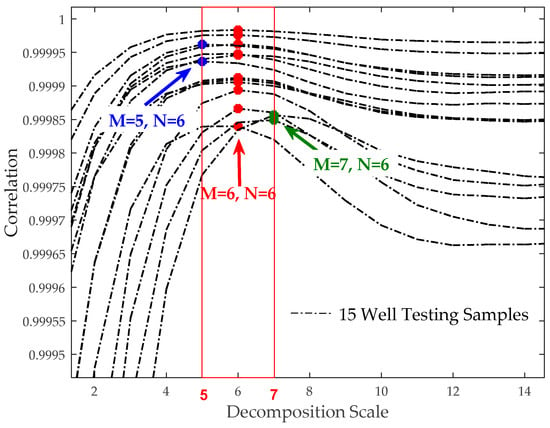

Figure 7 shows the distribution of the best decomposition scale when N = 6 and 15 oil well-testing samples obtaining the maximum correlation. When the decomposition scale was from 5 to 7, the correlation value was the highest, showing that the reconstruction coefficients obtained under these decomposition scales have a good ability to restore data.

Figure 7.

Correlation comparison of different decomposition scales using a sym6 wavelet basis.

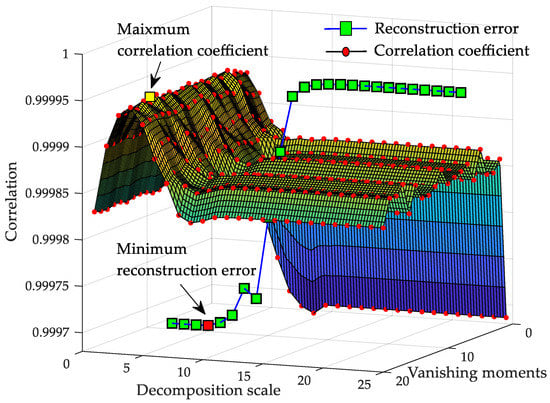

Figure 8 shows the symlets wavelet-based correlation coefficients distribution at different decomposition scales and vanishing moments, [1,25], [1,20]. We randomly selected one of the samples for correlation analysis. Before processing, the pressure range of the sample is normalized to [0,1]. It can be seen that the distribution of correlation coefficient presented a “bed shape”: with the increased of the vanishing moment order, the energy of the wavelet reconstruction signal was more concentrated and finally, tended to be stable. A too large decomposition scale would not only reduce the processing efficiency, but also filter out more small but important wavelet coefficients details, resulting in the loss of important original information and the decline of the correlation between the signals before and after reconstruction.

Figure 8.

Correlation coefficient and reconstruction error trend under Symlets wavelet processing.

Figure 8 also shows the mean square error trend of reconstructed data based on a sym6 wavelet basis. When the decomposition scale is small, due to incomplete decomposition, a large number of original characteristics are still retained in the reconstruction coefficients, so the reconstruction root mean square error was not fluctuated significantly and the noise reduction effect was not obvious. The experimental results are consistent with the analysis of the decomposition principle, which proves that it is feasible to choose correlation as a wavelet denoising standard in this paper.

To determine the optimal decomposition scale, we took the average error , the average value fluctuation rate , and the variance value fluctuation rate as indicators, reconstructed all the near-surface samples and analyzed the ability of the reconstructed samples to represent the original samples. Their formulas are defined as follows:

where i is the serial number of the well-testing sample , j is the index number of the data point in each sample, is the mean of the original sample, is the mean of the reconstructed sample, is the variance of the original sample, and is the variance of the reconstructed sample. Table 1 shows the comparison effects of 15 well-testing samples before and after the wavelet reconstruction. The samples with both M and N configured as 6 were in majority and had achieved good results in reducing the average error of reconstruction and avoiding too much fluctuation in the mean and variance. Therefore, the optimal decomposition scale and the optimal vanishing moment were both determined to be 6.

Table 1.

The comparison of the wavelet reconstruction effects under different M and N combination.

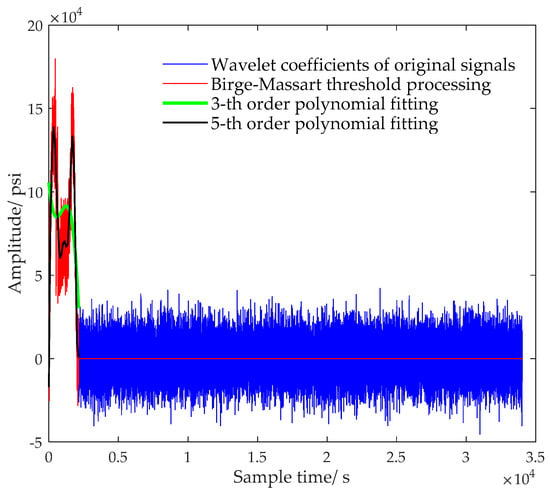

We took the 5-th order polynomial fitting and total 20 fitting points as an example. Firstly, the and were used to perform wavelet decomposition and wavelet coefficient fitting on the fourth sample in Table 1 containing 33,996 pressure points. Then, the fitted coefficients were written in PIC microcontroller as the configuration parameters. In this way, 25 parameters represented the main information of original signal with 33,996 points. Only 25 parameters need to be recorded in memory, and the compression ratio is about 136:1. Formula (22) gives the formula for calculating the value y at x, where n represents the order. The values of the 3-th and 5-th order polynomial fitting coefficients are respectively given in Formulas (23) and (24).

Figure 9 reflects the distribution of wavelet coefficients when different reconstruction methods were used. In it, the number of the obtained coefficients varied with different methods; there were 34,093 original wavelet coefficients, 2135 threshold coefficients after forced denoising, and 20 coefficients after the 3-th or the 5-th order polynomial fitting and quadratic wavelet reconstruction. The 5-th order polynomial could well characterize the trend of wavelet characteristics, while the data reconstructed by the 3-th order polynomial had a large deviation from the original data. Here, being an example, the 5-th order polynomial fitting was put forward just for illustrating the feasibility of fast data compression. The optimal order of the polynomial was not determined and should be flexibly adjusted according to the memory size and production accuracy requirements.

Figure 9.

The distribution of wavelet coefficients under different reconstruction methods.

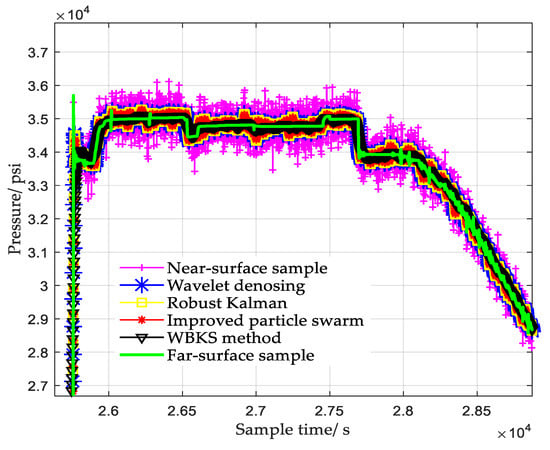

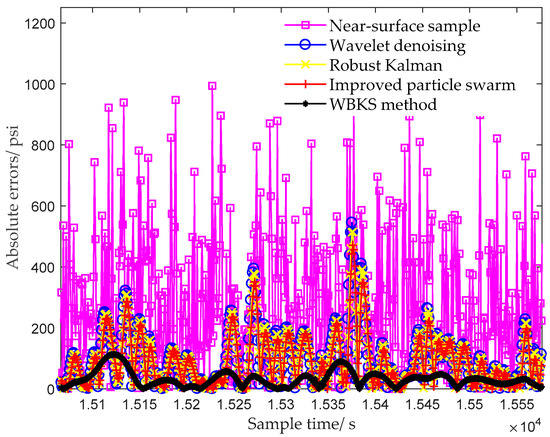

Figure 10 and Figure 11 took the far-surface data as the standard data, compared the filtering effect and absolute error on the near-surface data of the fourth sample after using different processing methods. The wavelet denoising method sequentially performed sym6-based 6-scale wavelet decomposition, coefficient soft threshold processing, and coefficient reconstruction. The classical robust Kalman method used Formula (6) to calculate the filter outputs, and its Q and R were random values. The improved particle swarm algorithm, on the basis of the classical Kalman method, added the optimization of Q and R by PSO. It can be seen from Figure 10 that due to random interference and mechanical vibration, the near-surface sample contained a lot of burrs before being processed by other methods, resulting in the data having poor resolution and high absolute error. From the processing results of near-surface data, we know that the filtering capabilities and the abilities to restore the true pressure trend of different methods were different. Among all the processed data, the data processed by the WBKS method were closest to the real far-surface data, and its absolute error was also the smallest.

Figure 10.

Output comparison under different processing methods.

Figure 11.

Absolute errors comparison under different processing methods.

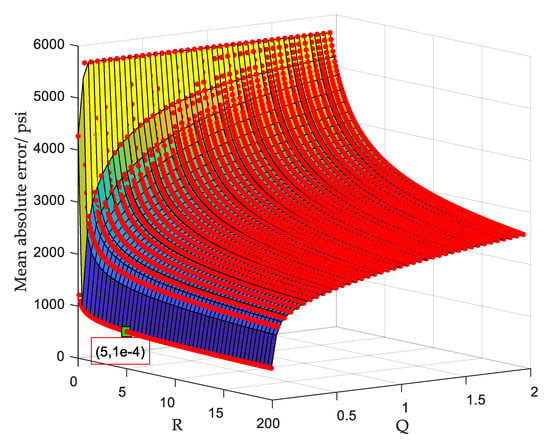

In order to intuitively show the position of the optimal parameters obtained using PSO in all parameters, we took the same fourth sample as the analysis object and used the traversal method to plot the average absolute error distribution under different Q and R values. and , the traversal steps of Q and R, were 0.05 and 0.1, respectively. The experimental results in Figure 12 show that the obtained optimal parameters were and .

Figure 12.

Distribution of mean absolute error under traversing Q and R.

To test the robustness of the WBKS method, the fourth near-surface sample (i = 4), added with different noise intensity, were used as the new signals to be analyzed. As shown in Table 2, we took error and signal-to-noise ratio as the analysis indicators, and used various methods to process new signals and compared their results. The definitions of various analysis indicators are shown in Formulas (25)–(27), which mainly include Mean Absolute Deviation (MAD), Standard Deviation (SD), and Signal-to-Noise Ratio (SNR). is the j-th pressure value from the i-th far-surface sample. is the mean value of all pressure values from the i-th far-surface sample, and is the j-th pressure value after adding noise with intensity coefficient to the i-th near-surface sample. The noise intensity coefficient represents the maximum amplitude ratio of random noise to near-surface data. Opt/Exp indicates the analysis indicators values obtained by different methods configured with the global or local optimal parameters. Optimal (Q, R) represents the local optimal Q and R values suitable for the Kalman model processing , which is obtained by using PSO.

Table 2.

Robust analysis of the WBKS algorithm based on multiple analysis indicators.

5. Discussion

To achieve the requirements of oil data compression and real-time upload, we built a well-testing measurement system and presented a wavelet-based Kalman data smoothing method. With the practical application of WBKS in the oil well-testing operation platform, its model construction, system parameter optimization, various methods comparison, and system performance analysis have been performed in this paper.

Firstly, multiscale analysis and wavelet transform theory were discussed. For data denoising, utilizing coefficients under wavelet transform made the WBKS have a good noise-reducing ability and the complete trend maintenance characteristic. Thresholding the extracted wavelet coefficients was both forthright and reliable and could be easily transplanted to the PIC unit. For data compression, the parameter fitting case in Figure 9 shows that the threshold processing makes the amount of data that needs to be uploaded or stored reduced from 33,996 to 25, which indirectly expands the storage capacity and can upload the data to the computer through the serial port for further processing. Meanwhile, to improve working curve resolution, the influence of using different wavelet scales and vanishing moments on denoising the well-testing data were analyzed. Fifteen near-surface samples were used as testing sets, based on which, the results in Figure 7 demonstrated that, the best combination of parameters M and N had a narrow value range, , . These determined ranges can provide technical reference for wavelet decomposition of uncertain downhole data. For data storage, the memory circuit saved the original data, Kalman prediction data, fitting parameters of wavelet decomposition coefficients, which was convenient for further analysis, statistics, and storage of data. This storage form provides a long-term effective data basis for algorithm verification and parameter optimization.

In Kalman parameters optimization, the improved particle swarm method used PSO to optimize Q and R of the Kalman model. After that, based on indicator RMSE and the criterion of minimizing the fitness values, the best Kalman parameters were determined. Compared to classical Kalman method and wavelet denoising method, although the improved particle swarm method reduced the deviation from the original data (see results in Figure 10 and Figure 11) and the configured Kalman filter parameters were locally optimal, there was still a big deviation from the original signal. The wavelet denoising and Kalman smoothing methods used in WBKS complement each other, which not only remove the noise but also retain the valid data information. Therefore, WBKS could purify and highlight the inherent characteristic information of the signal. After WBKS processing, the output absolute error was smaller, and the signal curve was more “stable” and closer to the real well-testing data.

From the processing results of a single algorithm, Kalman performed more effectively in eliminating noise and its performance was better than that of wavelets. After wavelet processing, although most of the noises in the original data had been eliminated, the error comparison results showed that its noise filtering was not thorough. It may be that the frequency of the noise covered almost the entire frequency axis and was not easily separated. The multi-sample training and the global optimal parameters selection were adopted after the PSO based local optimal parameters seeking, this ensured that the method could improve system efficiency and lead the system to achieve optimal performance. Therefore, the Kalman smoothing method using the global optimal parameters had better analysis and denoising capabilities than using the local optimal parameters, and obtained better processing results (see Table 2).

In the WBKS model, the wavelet first prefiltered out most of the high-frequency noise and system deviations of the near-surface data, which laid the foundation for the next Kalman operation. Table 2 shows that adding the Kalman filter to further process the wavelet reconstructed data made the WBKS model more accurately forecast the dynamic changes of oil parameters while retaining valid data information. Figure 10 intuitively shows that the processing data curve which was calculated from the WBKS method was the most consistent with the far-surface data curve and the method well-simulated the pressure change rule of the downhole measurement system. The smallest absolute error in Figure 11 corroborates the effectiveness of the WBKS method. In Table 2, when , compared with the near-surface data, the absolute error of the data processed by wavelet was reduced by 74.6%, the reduction of Kalman forward prediction was 75.4%, and that of Kalman backward smoothing was 85.2%. Similarly, when , the reductions of the above three comparisons were 75.6%, 77.1%, and 86.5%. The obtained high data characterization capability and low data restore error of WKBS are benefits from the combination of wavelet and Kalman.

In order to evaluate the results of pretreatment on a single sample and obtain the applicability and performance conclusion of each method, one far-surface sample was used as a testing set, and in the comparative analysis of different methods, three definitions including Mean Absolute Deviation, Standard Deviation, and Signal-to-Noise Ratio were given. For different methods with the same noise intensity coefficient, the low MAD and SD and high SNR in Table 2, respectively, reflect the proposed method’s strong data restoration ability and denoising ability. During Kalman processing, the forward recurrence used only part of the observation data to complete the data prediction, while the reverse recursion was to complete the data smoothing on the basis of obtaining all the observation data. Therefore, in the case of using the same configuration parameters, the method that added backward smoothing had better results than that of using the forward prediction alone. For one method with different noise intensity coefficients, the WBKS method had good performance under all evaluation indexes and had the characteristics of strong data restore ability, high robustness, and large signal-to-noise ratio, which proved the feasibility of its application in oilfield development.

The proposed WBKS method is suitable for applications in signal processing and communication where simultaneous estimation, decomposition, and compression are desired. However, there are inevitably some limitations, mainly reflected in three aspects:

1. The complex operational processes.

The played-back data in this paper were obtained by manual download after the downhole measurement was completed and the pressure gauge was pulled out to the surface. In terms of the measurement system, frequently lowering and lifting the pressure gauge will increase the operating burden of the workers and may cause uncertain events such as pipe-stuck and lost circulation.

2. The necessity of further research on data compression.

The main works of this paper are data denoising, prediction, and smoothing. The method of compressing data was not described in detail, but only proved the feasibility of using polynomial fitting to complete data compression.

3. The samples used for the study are few.

In actual production, due to obtaining a complete downhole well-testing data being difficult and requiring a long acquisition cycle, there are only 15 analyzable samples in this paper.

Therefore, in the future, three aspects of work corresponding to the above limitations need to be further done. First, to realize the data real-time upload with zero human intervention, we are planning to introduce transmission systems such as LWD or some wireless transmission modules to reduce project cost and production accidents. Second, we are going to analyze the deviation error between the original data and the restored data obtained using different compression methods with different compression ratios. It is very practical to use compression theory in accordance with actual production requirements to ensure data compression quality. Third, we should increase the number of samples to make the obtained processing model and optimization parameters more convincing.

6. Conclusions

To ensure that the processing results of well-testing data are in line with the dynamic changes of actual downhole geological parameters, we first determined the optimal combination with the mother wavelet (sym6) and the wavelet decomposition scale of 6 by analyzing the data reconstruction effects (involving correlation and reconstruction errors MAD, SD, and SNR) under different decomposition scales and disappearing moments. Secondly, we optimized the parameters of the Kalman prediction and smoothing model using PSO, and obtained the optimal parameters Q and R suitable for processing the well-testing data. Then, we configured all the obtained optimal parameters to the pressure gauge and completed the downhole online wavelet decomposition, fitting, reconstruction, Kalman prediction, and data storage. After the above downhole operations, the playback data were smoothed offline on the surface. The experimental results show that wavelet decomposition and reconstruction using optimal parameters can achieve high-efficiency data compression and low-error data restoration, meeting the requirements of real-time transmission and accurate playback. The optimized Kalman provided effective technical support for removing noise, improving data resolution, enhancing robustness, and completing accurate data interpretation. The proposed WBKS not only opens up new ideas for data compression and real-time data transmission in well-testing operations through multiscale decomposition and reconstruction methods, but also provides important guidance for decision-making and early warning of petroleum working conditions based on Kalman estimates.

Author Contributions

Funding acquisition, X.H., M.Z. and S.L. (Shugui Liu); Investigation, X.H.; Methodology, X.F., Q.F., S.L. (Shaohui Li), X.H., M.Z. and S.L. (Shugui Liu); Resources, Q.F. and S.L. (Shaohui Li); Software, S.L. (Shugui Liu); Validation, X.F.; Writing—original draft, X.F.; Writing—review & editing, X.F., Q.F., S.L. (Shaohui Li), M.Z. and S.L. (Shugui Liu). All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported in part by the National Natural Science Foundation of China under No. 51375338 and the Research on key measurement standards and Traceability Technology of water transport engineering, National key research and development plan under No. 2018YFF0212200 and the Special fund for basic scientific research business expenses of central public welfare scientific research institutes under No. TKS20200202.

Acknowledgments

The authors would like to thank the editor and the reviewers who provided many helpful comments and thereby contributed to the final manuscript.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Al-Nahdi, O.A.; Al-Nuaim, S.A.; Siu, L.W.; Al-Shammari, A.T. Three-Dimensional Reservoir Pressure Determination Using Real Time Pressure Data from Downhole Gauges. U.S. Patent 9,896,930, 20 February 2018. [Google Scholar]

- Bazargan, H.; Adibifard, M. A Stochastic Well-Test Analysis on Transient Pressure Data Using Iterative Ensemble Kalman Filter. Neural Comput. Appl. 2017, 31, 3227–3243. [Google Scholar] [CrossRef]

- Wang, F.; Zheng, S. Unknown Rate History Calculation from Down-hole Transient Pressure Data Using Wavelet Transform. Transp. Porous Media 2013, 96, 547–556. [Google Scholar] [CrossRef]

- Feng, X.; Feng, Q.; Li, S.; Hou, X.; Liu, S. A Deep-Learning-Based Oil-Well-Testing Stage Interpretation Model Integrating Multi-Feature Extraction Methods. Energies 2020, 13, 2042. [Google Scholar] [CrossRef]

- Ma, T.; Chen, P.; Han, X. Simulation and interpretation of the pressure response for formation testing while drilling. J. Nat. Gas Sci. Eng. 2015, 23, 259–271. [Google Scholar] [CrossRef]

- Kikani, J.; He, M. Multi-resolution analysis of long-term pressure transient data using wavelet methods. In Proceedings of the SPE Annual Technical Conference and Exhibition, New Orleans, LA, USA, 27–30 September 1998. [Google Scholar]

- Ouyang, L.B.; Kikani, J. New Approaches for Permanent Downhole Gauge (PDG) Data Processing. Liq. Fuels Technol. 2005, 23, 1247–1263. [Google Scholar] [CrossRef]

- Teixeira, B.O.S.; Castro, W.S.; Teixeira, A.F.; Aguirre, L.A. Data-driven soft sensor of downhole pressure for a gas-lift oil well. Control Eng. Pract. 2014, 22, 34–43. [Google Scholar] [CrossRef]

- Zhao, Y.; Ran, L.; Wu, F. Frequency domain filtering correction of log data from a corkscrew borehole. Well Logging Technol. 2012, 36, 499–503. [Google Scholar]

- Kuncar, A. Basic Techniques for Filtering Noise Out of Accelerometer Data. In Proceedings of the 26th DAAAM International Symposium, Vienna, Austria, 1 January 2016; pp. 1122–1128. [Google Scholar]

- Reis, M.S.; Saraiva, P.M.; Bakshi, B.R. Denoising and Signal-to-Noise Ratio Enhancement: Wavelet Transform and Fourier Transform. In Comprehensive Chemometrics; Elsevier: Oxford, UK, 2009; pp. 25–55. [Google Scholar]

- Yiqun, Z.; Shiyi, Z.; Qi, W. Interpretation of transient temperature data from Permanent Down-hole Gauges. J. Geophys. Eng. 2017, 4, 739–750. [Google Scholar]

- Morlet, J.; Arens, G.; Fourgeau, E.; Glard, D. Wave propagation and sampling theory—Part I: Complex signal and scattering in multilayered media. Geophysics 2013, 47, 203. [Google Scholar] [CrossRef]

- Gonzalez, T.F.; Camacho, V.R.; Escalante, R.B. Truncation Denoising in transient pressure tests. In Proceedings of the SPE Annual Technical Conference and Exhibition, Houston, TX, USA, 3 October 1999; pp. 31–46. [Google Scholar]

- Sahni, I.; Horne, R.N. Multiresolution Wavelet Analysis for Improved Reservoir Description. SPE Reserv. Eval. Eng. 2005, 8, 53–69. [Google Scholar] [CrossRef]

- Zhang, W.; Shi, Y. Application of wavelet neural network in the acoustic logging-while-drilling waveform data process. J. Commun. Comput. 2007, 4, 29–34. [Google Scholar]

- Cooper, G.R.J.; Cowan, D.R. Blocking geophysical borehole log data using the continuous wavelet transform. Explor. Geophys. 2018, 40, 233–236. [Google Scholar]

- Wang, F.; Zhang, Y.; Zheng, S.; Kong, D. Oil Flow Rate History Reconstruction using Downhole Transient Temperature Data with Wavelet Transform. In Proceedings of the ECMOR XV-15th European Conference on the Mathematics of Oil Recovery, Amsterdam, The Netherlands, 29 August–1 September 2016. [Google Scholar]

- Athichanagorn, S.; Horne, R.N.; Kikani, J. Processing and interpretation of long-term data acquired from permanent pressure gauges. SPE Reserv. Eval. Eng. 2002, 5, 384–391. [Google Scholar] [CrossRef]

- Amrita, S.; Saumen, M.; Tiwari, R. Selection of optimum wavelet in CWT analysis of geophysical downhole data Selection of optimum wavelet in CWT analysis of geophysical downhole data. J. Indian Geophys. Union 2017, 21, 153–166. [Google Scholar]

- Liu, Z.; Liu, L.; Zhang, J. Signal feature extraction and quantitative evaluation of metal magnetic memory testing for oil well casing based on data preprocessing technique. Abstr. Appl. Anal. 2014, 2014, 902304. [Google Scholar] [CrossRef]

- Zheng, S.; Li, X. Individual well flowing rate recovery from PDG transient pressure with either assigned daily rate or total cumulative production of the well or group of wells through wavelet approach. J. Pet. Sci. Eng. 2009, 68, 277–286. [Google Scholar]

- Wang, F.; Zheng, S. Diagnostic of changes in reservoir properties from long-term transient pressure data with wavelet transform. J. Pet. Sci. Eng. 2016, 146, 921–931. [Google Scholar] [CrossRef]

- Kwasniok, F. Estimation of noise parameters in dynamical system identification with Kalman filters. Phys. Rev. E 2012, 86, 036214. [Google Scholar] [CrossRef]

- Zeng, N.; Wang, Z.; Li, Y.; Du, M.; Liu, X. A Hybrid EKF and Switching PSO Algorithm for Joint State and Parameter Estimation of Lateral Flow Immunoassay Models. IEEE/ACM Trans. Comput. Biol. Bioinform. 2012, 9, 321–329. [Google Scholar]

- Stroud, J.R.; Stein, M.L.; Lesht, B.M.; Schwab, D.J.; Beletsky, D. An Ensemble Kalman Filter and Smoother for Satellite Data Assimilation. J. Am. Stat. Assoc. 2010, 105, 978–990. [Google Scholar] [CrossRef]

- ELSheikh, A.H.; Pain, C.C.; Fang, F.; Gomes, J.L.; Navon, I.M. Parameter estimation of subsurface flow models using iterative regularized ensemble Kalman filter. Stoch. Environ. Res. Risk Assess. 2013, 27, 877–897. [Google Scholar] [CrossRef]

- Chou, C.M.; Wang, R.Y. Application of wavelet-based multi-model Kalman filters to real-time flood forecasting. Hydrol. Process. 2004, 18, 987–1008. [Google Scholar] [CrossRef]

- Nygaard, G.; Naevdal, G.; Mylvaganam, S. Evaluating nonlinear Kalman filters for parameter estimation in reservoirs during petroleum well drilling. In Proceedings of the 2006 IEEE Conference on Computer Aided Control System Design, Munich, Germany, 4–6 October 2006. [Google Scholar]

- Ahmadi, R.; Shahrabi, J.; Aminshahidy, B. Automatic well-testing model diagnosis and parameter estimation using artificial neural networks and design of experiments. J. Pet. Explor. Prod. Technol. 2017, 7, 759–783. [Google Scholar] [CrossRef]

- Li, Y.J.; Kokkinaki, A.; Darve, E.T.; Kitanidis, P.K. Smoothing-based compressed state Kalman filter for joint state-parameter estimation: Applications in reservoir characterization and CO2 storage monitoring. Water Resour. Res. 2017, 53, 7190–7207. [Google Scholar] [CrossRef]

- Harlim, J.; Mahdi, A.; Majda, A.J. An ensemble Kalman filter for statistical estimation of physics constrained nonlinear regression models. J. Comput. Phys. 2014, 257, 782–812. [Google Scholar] [CrossRef]

- Kim, S.; Lee, C.; Lee, K.; Choe, J. Aquifer characterization of gas reservoirs using Ensemble Kalman filter and covariance localization. J. Pet. Sci. Eng. 2016, 146, 446–456. [Google Scholar] [CrossRef]

- Wang, Y.; Li, M. Reservoir history matching and inversion using an iterative ensemble Kalman filter with covariance localization. Pet. Sci. 2011, 8, 316–327. [Google Scholar] [CrossRef]

- Raghu, A.; Yang, X.; Khare, S.; Prakash, J.; Huang, B.; Prasad, V. Reservoir history matching using constrained ensemble Kalman filtering. Can. J. Chem. Eng. 2018, 96, 145–159. [Google Scholar] [CrossRef]

- Xue, Q.; Leung, H.; Wang, R.; Liu, B.; Wu, Y. Continuous Real-Time Measurement of Drilling Trajectory with New State-Space Models of Kalman Filter. IEEE Trans. Instrum. Measur. 2016, 65, 144–154. [Google Scholar] [CrossRef]

- Soltani, S.; Kordestani, M.; Aghaee, P.K.; Saif, M. Improved Estimation for Well-Logging Problems Based on Fusion of Four Types of Kalman Filters. IEEE Trans. Geosci. Remote 2018, 56, 647–654. [Google Scholar] [CrossRef]

- Mahdianfar, H.; Pavlov, A.; Aamo, O.M. Joint unscented Kalman filter for state and parameter estimation in Managed Pressure Drilling. In Proceedings of the 2013 European Control Conference (ECC), Zurich, Switzerland, 17–19 July 2013. [Google Scholar]

- Adibifard, M.; Bashiri, G.; Roayaei, E.; Emad, M.A. Using Particle Swarm Optimization (PSO) Algorithm in Nonlinear Regression Well Test Analysis and Its Comparison with Levenberg-Marquardt Algorithm. Int. J. Appl. Metaheuristic Comput. 2016, 7, 1–23. [Google Scholar] [CrossRef]

- Zhang, L.; Ma, J.; Wang, Y.; Pan, S. PSO-BP Neural Network in Reservoir Parameter Dynamic Prediction. In Proceedings of the 2011 Seventh International Conference on Computational Intelligence and Security, Hainan, China, 3–4 December 2011; pp. 123–126. [Google Scholar]

- Lang, J.; Zhao, J. Modeling and optimization for oil well production scheduling. Chin. J. Chem. Eng. 2016, 24, 1423–1430. [Google Scholar] [CrossRef]

- Obidin, M.V.; Serebrovski, A.P. Signal denoising with the use of the wavelet transform and the Kalman filter. J. Commun. Technol. Electron. 2014, 59, 1440–1445. [Google Scholar] [CrossRef]

- Hong, L.; Cheng, G.; Chui, C. A filter-bank-based Kalman filtering technique for wavelet estimation and decomposition of random signals. IEEE Trans. Circuits Syst. II 1998, 45, 237–241. [Google Scholar] [CrossRef]

- Soltani, S.; Kordestani, M.; Aghaee, P.K. New estimation methodologies for well logging problems via a combination of fuzzy Kalman filter and different smoothers. J. Pet. Sci. Eng. 2016, 145, 704–710. [Google Scholar] [CrossRef]

- Wavelets Information. The MathWorks, Inc.. Available online: https://ww2.mathworks.cn/help/wavelet/ref/waveinfo.html (accessed on 29 June 2020).

- Kennedy, J.E.; Eberhart, R.C. Particle Swarm Optimization. In Proceedings of the ICNN’95—International Conference on Neural Networks, Perth, Australia, 27 November–1 December 1995; pp. 1942–1948. [Google Scholar]

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).