STEPS: An Indoor Navigation Framework for Mobile Devices

Abstract

1. Introduction

- Accuracy: Often the primary parameter being tested.

- Keep it simple: Simplicity is a key factor; the system should work automatically, with no manual overhead operations or calibration.

- Real time: The system should provide natural and intuitive positioning results in real time, especially for highly dynamic movements.

- Privacy: The suggested solution should be able to work offline, e.g., in “flight mode”.

- Bring your own device: The suggested solution should work on existing commercial off-the-shelf mobile devices (e.g., common smartphones). This requirement implies energy-consumption and computing-power limitations.

1.1. Related Works

- Indoor GNSS: In general, GNSS signals are mostly unavailable in indoor scenarios but, in many cases, can be received through windows, skylights, or simply “thin” ceilings. Because GNSS is popular and widely used in smartphones, several researchers have suggested indoor GNSS positioning solutions [11].

- WiFi Fingerprinting: This method uses the signals of nearby wireless access points to determine the device’s location relative to them [12].

- Bluetooth Low Energy (BLE): This is another popular fingerprinting method [16].

- Pedometer and Inertial Navigation: This method computes a relative position with respect to a known starting location, approximating both speed and direction via inertial motion sensors. Such inertial systems suffer from a drift error that accumulates over time (see, for example, [17]).

- Optical Flow: This method calculates the user’s relative motion from optical features. Comparing the features in consecutive frames allows a tight estimation of the relative motion. As with the pedometer method, the optical flow method tends to drift over time [18].

- Magnetic Field: As with WiFi and cellular signals, the magnetic field sensor can be used to generate a unique magnetic “fingerprint” for specific locations. The magnetic field is affected by electric devices and metal materials, which distort the Earth’s magnetic field. The use of the magnetic field for indoor positioning has been suggested by several researchers (see [19,20,21]).
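The WiFi, BLE and magnetic fingerprinting methods above share a common matching step: compare a live measurement vector against a pre-recorded "radio map". As an illustration only, the following is a minimal sketch of k-nearest-neighbour (kNN) matching, a standard textbook approach that is not claimed to be the method of any cited work. The radio map, the three access points and the RSSI values are hypothetical.

```python
import math

# Hypothetical radio map: reference location (x, y) in metres -> RSSI vector (dBm),
# one entry per access point, always in the same AP order. Values are illustrative.
RadioMap = dict[tuple[float, float], list[float]]


def knn_locate(radio_map: RadioMap, observed: list[float], k: int = 3) -> tuple[float, float]:
    """Estimate a location as the mean of the k reference points whose stored
    fingerprints are closest (Euclidean distance in signal space) to `observed`."""
    if not radio_map:
        raise ValueError("radio map is empty")

    def signal_distance(stored: list[float]) -> float:
        return math.sqrt(sum((s - o) ** 2 for s, o in zip(stored, observed)))

    nearest = sorted(radio_map.items(), key=lambda item: signal_distance(item[1]))[:k]
    xs = [loc[0] for loc, _ in nearest]
    ys = [loc[1] for loc, _ in nearest]
    return sum(xs) / len(xs), sum(ys) / len(ys)


radio_map: RadioMap = {
    (0.0, 0.0): [-40.0, -70.0, -80.0],
    (5.0, 0.0): [-55.0, -60.0, -75.0],
    (0.0, 5.0): [-60.0, -75.0, -55.0],
    (5.0, 5.0): [-70.0, -62.0, -58.0],
}
estimate = knn_locate(radio_map, [-42.0, -69.0, -79.0], k=1)
print(estimate)  # (0.0, 0.0)
```

The same function works unchanged for magnetic fingerprints if each vector holds magnetic-field components instead of RSSI values; larger k trades spatial resolution for robustness to noisy readings.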

1.2. Our Contribution

1.3. Smartphone’s Sensors for Indoor Positioning

2. Particle Filter for Localization

2.1. Basis of Indoor Positioning

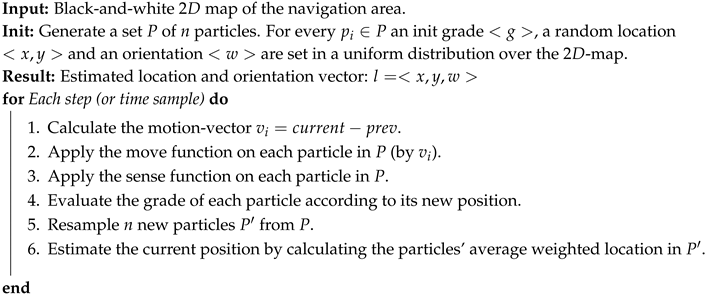

2.2. Particle Filter for Localization

- Map: The particle filter method estimates the internal state in a given area. Thus, the input of this algorithm is a map of the region of interest (ROI). This map should include as many constraints as possible (e.g., walls and tables). The map constraints are one of the parameters that determine each particle’s grade, because particles at impossible locations on the map are downgraded.

- Particle: At the beginning of the localization process, we “spread” a set of particles P on the map. Each particle has three attributes: a location, an orientation w, and a grade g. In each step, the locations, orientations, and grades of all particles are modified. Because these particles represent the internal-state distribution, the grades of all particles in P sum to 1 at every step. At the initial step, each particle’s grade is 1/|P|. A particle’s grade is set higher the more likely its location on the map is to represent the internal state.

- Move (action) function: With each step, all particles on the map should be relocated according to the internal movement. Hence, for each step, we calculate the movement vector and the change in orientation, then move all particles accordingly. As is common on smartphones, the pedometer (a step counter with orientation) provides the movement for each step.

- Sense function: The device sensors are also used to determine each particle’s grade. The sense method predicts each particle’s sensing for each step and then grades it according to the correlation between the particle’s prediction and the internal sense. In our case, the sense function is discrete: it compares each particle’s internal location to the corresponding location on the map. The grading is based on the match between the map location and a known state (going up or down, the inability to walk through walls, etc.).

- Resampling: The process of choosing a new set of particles from P can be conducted with various methods, but the main purpose of resampling is to favor particles with high grades over those with low grades.

- Random noise: To prevent the particles from converging too quickly (and thus risking missing the true location), after resampling we move each particle by a small random noise on the map. Usually, this is done by moving each particle within a small radius of its original location.

| Algorithm 1: Generic particle filter localization algorithm: A black-and-white map is used to represent the geo-constraints used by the particle filter. |
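The body of Algorithm 1 is not reproduced in this text, so the following is only a minimal Python sketch of the generic loop described in the bullets above (move, sense, resample, random noise). It assumes a hypothetical ASCII occupancy grid in place of the black-and-white map, Gaussian step and heading noise, and multinomial resampling; the names (`GRID`, `pf_step`, and so on) and all noise values are illustrative and are not the authors’ implementation.

```python
import math
import random
from dataclasses import dataclass

# Hypothetical black-and-white map: '.' = walkable, '#' = wall; one cell = 1 m.
GRID = [
    "##########",
    "#........#",
    "#..####..#",
    "#........#",
    "##########",
]


@dataclass
class Particle:
    x: float  # metres
    y: float  # metres
    w: float  # orientation, radians
    g: float  # grade (normalised weight)


def walkable(x: float, y: float) -> bool:
    """True if the point (x, y) falls inside a walkable map cell."""
    row, col = math.floor(y), math.floor(x)
    return 0 <= row < len(GRID) and 0 <= col < len(GRID[0]) and GRID[row][col] == "."


def init_particles(n: int, rng: random.Random) -> list[Particle]:
    """Spread n particles uniformly over walkable cells; each starts with grade 1/n."""
    cells = [(c, r) for r, line in enumerate(GRID) for c, ch in enumerate(line) if ch == "."]
    out = []
    for _ in range(n):
        c, r = rng.choice(cells)
        out.append(Particle(c + rng.random(), r + rng.random(), rng.uniform(-math.pi, math.pi), 1.0 / n))
    return out


def move(particles: list[Particle], step_m: float, d_theta: float, rng: random.Random) -> None:
    """Action: rotate by the pedometer's heading change and advance one step, with noise."""
    for p in particles:
        p.w += d_theta + rng.gauss(0.0, 0.05)
        d = step_m + rng.gauss(0.0, 0.1)
        p.x += d * math.cos(p.w)
        p.y += d * math.sin(p.w)


def sense(particles: list[Particle]) -> bool:
    """Discrete sense: zero the grade of particles that landed on walls, then normalise.
    Only the end point is checked; a fuller version would also test the path segment."""
    for p in particles:
        if not walkable(p.x, p.y):
            p.g = 0.0
    total = sum(p.g for p in particles)
    if total == 0.0:
        return False  # every particle is impossible: the caller re-initialises
    for p in particles:
        p.g /= total
    return True


def resample(particles: list[Particle], rng: random.Random) -> list[Particle]:
    """Draw a new set with probability proportional to grade (multinomial resampling)."""
    n = len(particles)
    chosen = rng.choices(particles, weights=[p.g for p in particles], k=n)
    return [Particle(p.x, p.y, p.w, 1.0 / n) for p in chosen]


def add_noise(particles: list[Particle], rng: random.Random, radius: float = 0.2) -> None:
    """Jitter each particle within a small square around its location."""
    for p in particles:
        p.x += rng.uniform(-radius, radius)
        p.y += rng.uniform(-radius, radius)


def best_location(particles: list[Particle]) -> tuple[float, float]:
    """Grade-weighted mean of the particle locations."""
    return sum(p.g * p.x for p in particles), sum(p.g * p.y for p in particles)


def pf_step(particles: list[Particle], step_m: float, d_theta: float, rng: random.Random) -> list[Particle]:
    move(particles, step_m, d_theta, rng)
    if not sense(particles):
        return init_particles(len(particles), rng)
    particles = resample(particles, rng)
    add_noise(particles, rng)
    return particles


rng = random.Random(0)
particles = init_particles(500, rng)
for _ in range(5):
    particles = pf_step(particles, 0.7, 0.0, rng)
print(best_location(particles))
```

The weighted mean is only one possible “best location”; with a multimodal particle cloud it can fall inside a wall, so a clustering or highest-density estimate may be preferable in practice.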

2.3. Run-Time Analysis

- Reducing the number of samples per second: this can be done with an adaptive sampling rate; e.g., for a stationary user (low movement) a sampling rate of 1 Hz should suffice, while for highly dynamic movement (e.g., a running user) a sampling rate of 30 Hz might be needed.

- Reducing the number of particles: this natural approach can be implemented using the expected probabilistic space represented by the particles. Additional details on this improvement can be found in the improved algorithm; see Section 3.7.

- Particle filter algorithms can be implemented efficiently on multi-core and GPGPU (General-Purpose Graphics Processing Unit) platforms [26]. Consider Algorithm 1: lines 2–4 can be performed in parallel (one thread or core per particle). Performing resampling (line 5) and calculating the “best location” (line 6) in parallel is somewhat trickier, yet several papers have suggested parallel and distributed solutions for these methods (e.g., [27,28]).
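The adaptive sampling rate mentioned in the first bullet can be sketched as a simple mapping from motion intensity to a rate between the two figures quoted in the text (1 Hz stationary, 30 Hz running). This is an illustration under stated assumptions: motion intensity is taken to be the standard deviation of recent accelerometer magnitudes, and the `still_std` and `run_std` thresholds are hypothetical calibration values, not parameters from the paper.

```python
import statistics

MIN_RATE_HZ = 1.0   # stationary user (figure quoted in the text)
MAX_RATE_HZ = 30.0  # highly dynamic movement, e.g. running (figure quoted in the text)


def adaptive_rate(accel_norms: list[float], still_std: float = 0.05, run_std: float = 3.0) -> float:
    """Pick a sensor sampling rate from recent motion intensity.

    Intensity is the population standard deviation of recent accelerometer
    magnitudes (m/s^2); it is mapped linearly onto [MIN_RATE_HZ, MAX_RATE_HZ].
    still_std and run_std are hypothetical calibration thresholds.
    """
    if len(accel_norms) < 2:
        return MAX_RATE_HZ  # too little data: sample at the full rate
    intensity = statistics.pstdev(accel_norms)
    t = (intensity - still_std) / (run_std - still_std)
    t = min(max(t, 0.0), 1.0)  # clamp to the supported range
    return MIN_RATE_HZ + t * (MAX_RATE_HZ - MIN_RATE_HZ)


print(adaptive_rate([9.81] * 50))       # 1.0 (stationary)
print(adaptive_rate([5.0, 15.0] * 25))  # 30.0 (vigorous motion)
```

A linear ramp is the simplest choice; a real system would likely add hysteresis so the rate does not oscillate when intensity hovers near a threshold.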

3. Improved Algorithm

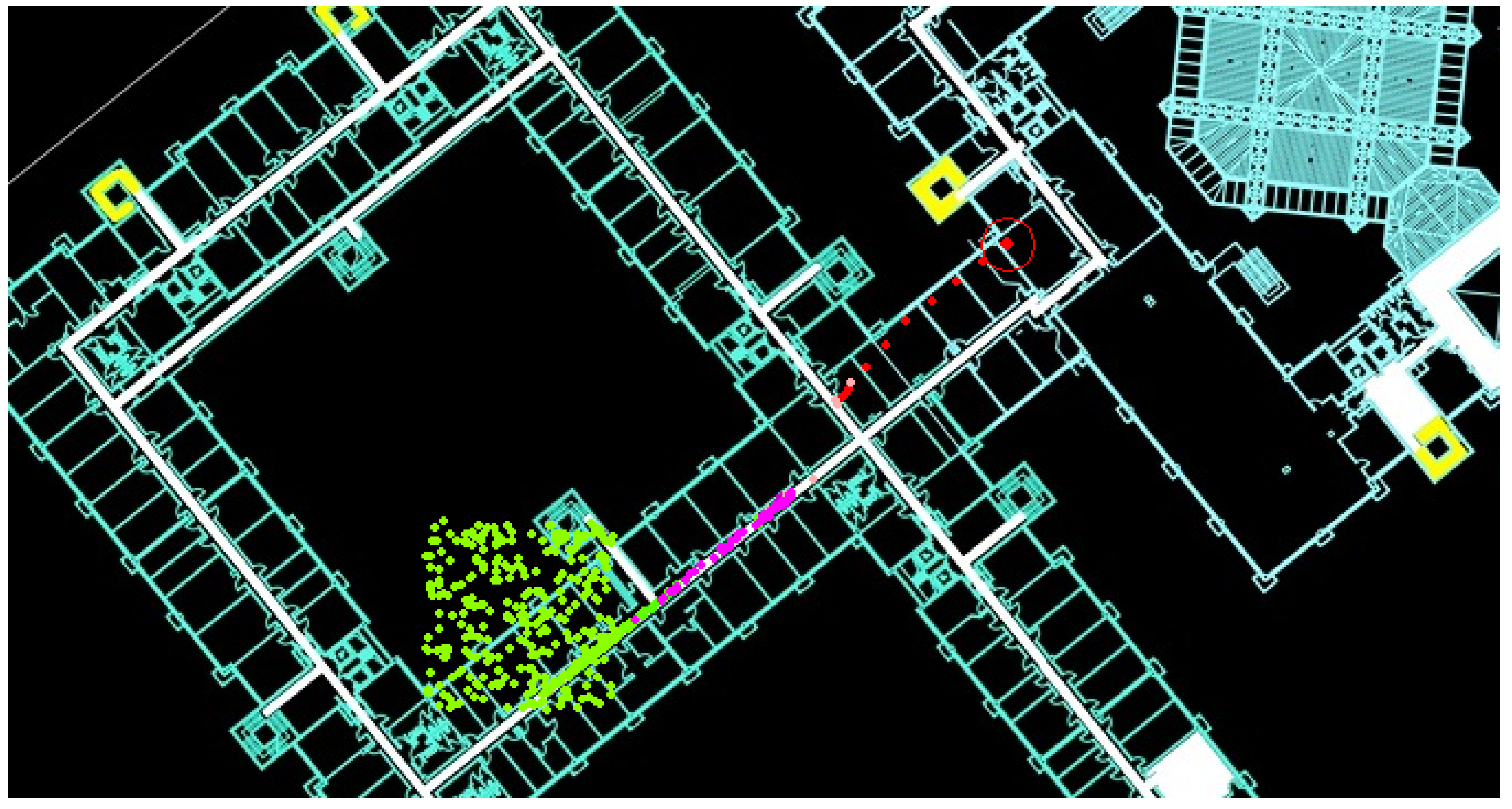

3.1. Map Constraints

- AR measurement tools for surface detection, which allowed us to determine the sampled boundaries, and

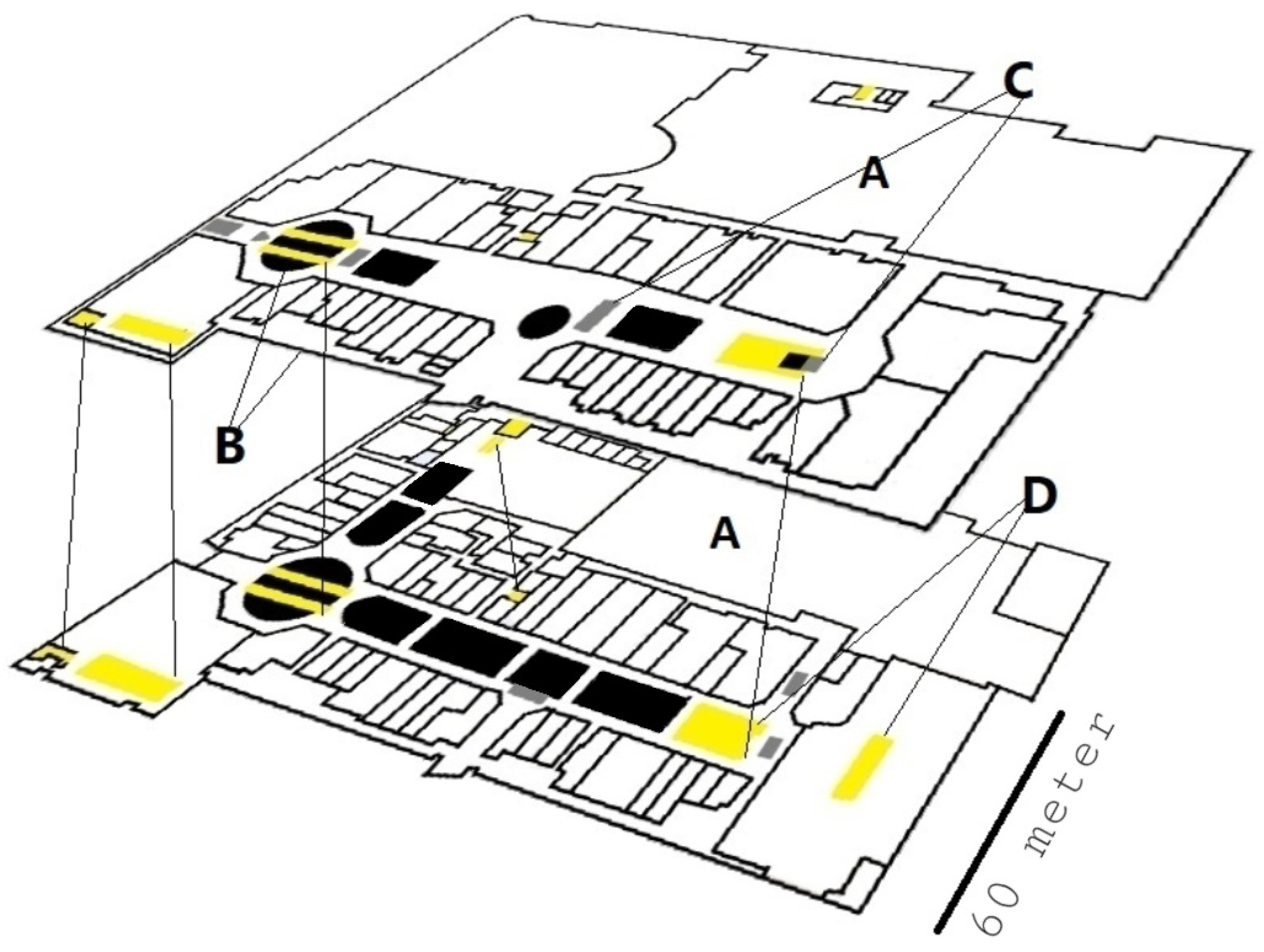

- the map in the form of a painted image, using defined colors (A, B, C, D) to represent the variety of constraints.

- A: accessible area

- B: inaccessible area, such as walls or fixed barriers, as sensed by the AR tool

- C: partially accessible regions, representing locations with relatively low probability for users to be at (e.g., tables)

- D: floor-changing regions, such as stairs, escalators, and elevators
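One way the four painted region classes could feed the sense function is as per-pixel grade multipliers. The sketch below assumes hypothetical RGB paint colours and hypothetical multiplier values (the text does not give numeric values), and it treats any unknown colour as inaccessible. When the barometer reports vertical motion, only floor-changing (D) regions are considered consistent.

```python
from enum import Enum


class Region(Enum):
    ACCESSIBLE = "A"
    INACCESSIBLE = "B"
    PARTIAL = "C"
    FLOOR_CHANGE = "D"


# Hypothetical paint colours (RGB) for each region class on the map image.
COLOR_TO_REGION = {
    (255, 255, 255): Region.ACCESSIBLE,
    (0, 0, 0): Region.INACCESSIBLE,
    (255, 255, 0): Region.PARTIAL,
    (255, 0, 0): Region.FLOOR_CHANGE,
}

# Hypothetical grade multipliers for a user walking on a flat floor.
FLAT_FACTOR = {
    Region.ACCESSIBLE: 1.0,
    Region.INACCESSIBLE: 0.0,
    Region.PARTIAL: 0.2,  # low, but non-zero, probability (e.g. tables)
    Region.FLOOR_CHANGE: 1.0,
}


def grade_factor(pixel: tuple[int, int, int], changing_floor: bool) -> float:
    """Multiplier applied to a particle's grade given the map pixel under it."""
    region = COLOR_TO_REGION.get(pixel, Region.INACCESSIBLE)  # unknown colours: treat as blocked
    if changing_floor:
        # Vertical motion detected: only stairs, escalators or elevators are consistent.
        return 1.0 if region is Region.FLOOR_CHANGE else 0.0
    return FLAT_FACTOR[region]


print(grade_factor((255, 255, 0), changing_floor=False))  # 0.2
```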

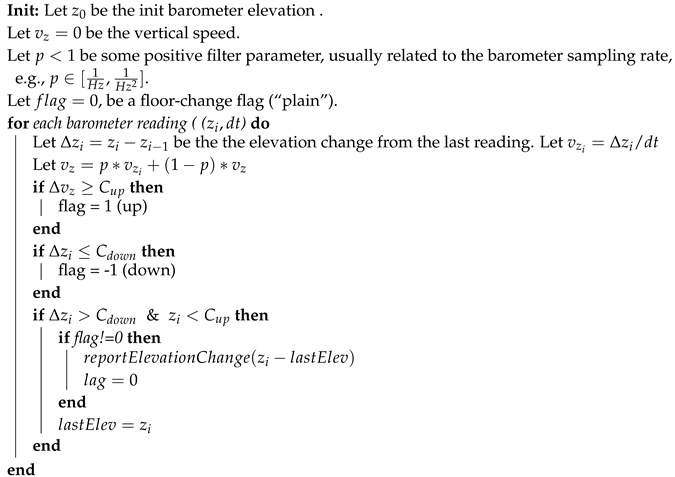

3.2. Floor-Change Detection

| Algorithm 2: Floor-change algorithm: if the user has been going up or down, the algorithm estimates the elevation change between the current z and the last flat-floor parameter. As long as the user remains on a flat floor, minor changes in the measured elevation are ignored. A vertical velocity of m per s is a common value for and assuming a walking user. |
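Algorithm 2 is described only in outline above, so the following is a hedged sketch of the same idea: convert barometer pressure to relative elevation, treat the user as moving vertically while the vertical velocity exceeds a threshold, and commit a floor change only when the net elevation change since the last flat floor is significant. The pressure-to-altitude conversion is the common international barometric formula (troposphere); the floor height, velocity threshold and minimum-change threshold are hypothetical placeholders, because the velocity value is missing from the caption.

```python
def pressure_to_altitude(p_hpa: float, p0_hpa: float = 1013.25) -> float:
    """International barometric formula (troposphere): metres above the p0 level."""
    return 44330.0 * (1.0 - (p_hpa / p0_hpa) ** (1.0 / 5.255))


def altitude_to_pressure(h_m: float, p0_hpa: float = 1013.25) -> float:
    """Inverse of pressure_to_altitude; used here only to simulate readings."""
    return p0_hpa * (1.0 - h_m / 44330.0) ** 5.255


class FloorChangeDetector:
    """Barometer-based floor-change detection sketch.
    All thresholds are hypothetical placeholders, not values from the paper."""

    def __init__(self, floor_height_m: float = 3.0, v_threshold_mps: float = 0.1,
                 min_change_m: float = 1.0) -> None:
        self.floor_height = floor_height_m
        self.v_threshold = v_threshold_mps
        self.min_change = min_change_m
        self.flat_z: float | None = None  # elevation of the last flat floor
        self.prev_z: float | None = None
        self.floor = 0

    def update(self, p_hpa: float, dt_s: float) -> tuple[int, bool]:
        """Return (floor index, currently moving vertically?)."""
        z = pressure_to_altitude(p_hpa)
        if self.flat_z is None or self.prev_z is None:
            self.flat_z = self.prev_z = z
            return self.floor, False
        v = (z - self.prev_z) / dt_s
        self.prev_z = z
        if abs(v) >= self.v_threshold:
            return self.floor, True  # going up or down
        dz = z - self.flat_z
        if abs(dz) >= self.min_change:
            # Vertical motion ended with a significant net change: commit a floor change.
            self.floor += round(dz / self.floor_height)
            self.flat_z = z
        # Otherwise the user is on a flat floor and minor elevation changes are ignored.
        return self.floor, False


det = FloorChangeDetector()
heights = [0.0, 0.0] + [0.3 * i for i in range(1, 11)] + [3.0, 3.0]  # climb 3 m at 0.3 m/s
results = [det.update(altitude_to_pressure(h), dt_s=1.0) for h in heights]
print(results[-1])  # (1, False)
```

Because only elevation differences are used, the absolute reference pressure `p0_hpa` matters little; slow weather-driven pressure drift, however, would eventually need to be compensated.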

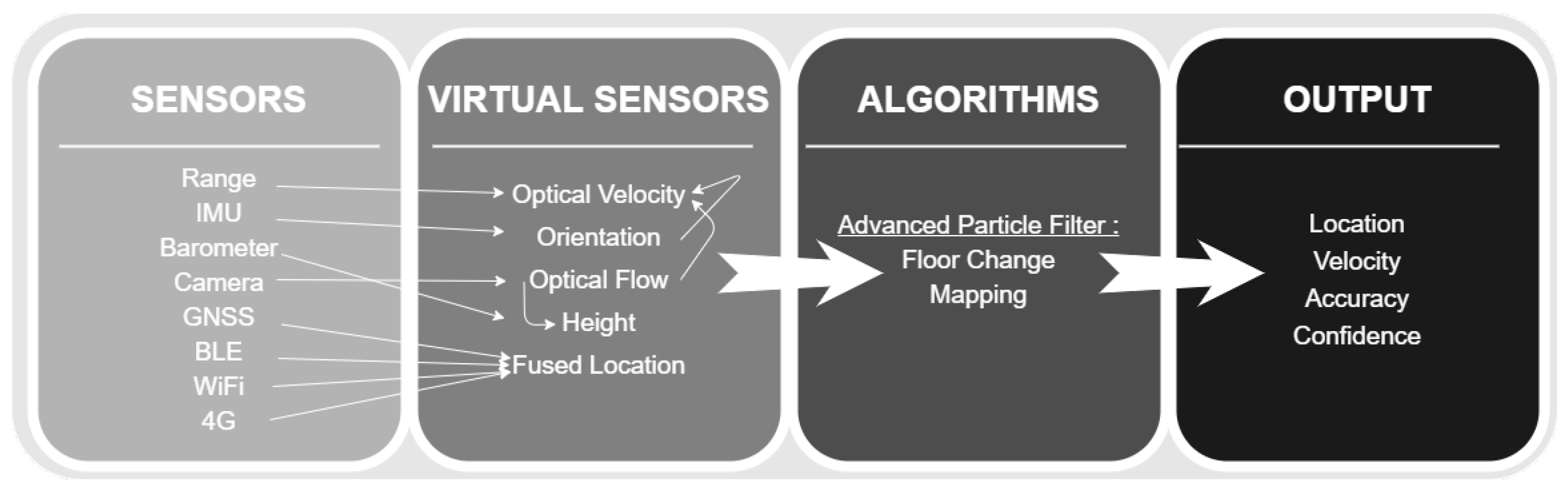

3.3. Velocity Estimation

| Algorithm 3: Optical velocity algorithm: in cases of low confidence, it reports several candidate velocities and device orientations. |
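The details of Algorithm 3 are not given here. The sketch below only illustrates the underlying geometry, under explicit assumptions: a pinhole camera translating parallel to the imaged surface (e.g., facing the floor), a distance to that surface known from a range sensor (see the sensor table), and a focal length expressed in pixels. Like the caption, it returns a single estimate when confident and several candidates when confidence is low. It covers speed only, not device orientation, and the names and `min_features` threshold are hypothetical.

```python
import math
import statistics


def optical_speeds(flows_px: list[tuple[float, float]], distance_m: float, focal_px: float,
                   frame_rate_hz: float, min_features: int = 20) -> list[float]:
    """Convert per-feature optical-flow vectors (pixels per frame) into speed candidates (m/s).

    Pinhole model: a feature at distance Z that shifts d pixels between frames
    corresponds to a metric displacement of d * Z / f.
    """
    if not flows_px:
        return []
    mags = sorted(math.hypot(dx, dy) for dx, dy in flows_px)

    def to_speed(px: float) -> float:
        return px * distance_m / focal_px * frame_rate_hz

    if len(mags) >= min_features:
        return [to_speed(statistics.median(mags))]  # confident: one robust estimate
    # Low confidence: report several candidates (minimum, median and maximum flow).
    return [to_speed(m) for m in (mags[0], statistics.median(mags), mags[-1])]


speeds = optical_speeds([(3.0, 4.0)] * 30, distance_m=1.5, focal_px=500.0, frame_rate_hz=30.0)
print(speeds)  # one candidate, approximately 0.45 m/s
```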

3.4. Improved Sense Function

3.5. Improving Compass Accuracy

3.6. Sparse Sensing

3.7. Adjustable Particle Set

3.8. Kidnapped Robot

3.9. Indoor and Outdoor Classification

3.10. Parameter Optimization

- Selection: Select the fittest individuals from the current generation.

- Crossover: Pair two selected individuals to populate the next generation. Their offspring carry genetic material that combines their parents’ genes.

- Mutation: Randomly mutate the genes of the offspring produced in the previous step.
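The three genetic-algorithm steps above (selection, crossover, mutation) can be sketched as follows. This is a generic illustration, not the authors’ optimizer: it assumes the tuned parameters are normalised to [0, 1], uses truncation selection (keeping the fitter half, which also preserves the best individual), uniform crossover and Gaussian mutation, and replaces the real localization-error objective with a hypothetical toy fitness function.

```python
import random
from typing import Callable

Genome = list[float]  # normalised parameter values in [0, 1]


def genetic_optimize(fitness: Callable[[Genome], float], n_genes: int, pop_size: int = 20,
                     generations: int = 50, mutation_rate: float = 0.1, seed: int = 0) -> Genome:
    """Maximise `fitness` over genomes of n_genes values in [0, 1]."""
    rng = random.Random(seed)
    population = [[rng.random() for _ in range(n_genes)] for _ in range(pop_size)]
    for _ in range(generations):
        # Selection: keep the fitter half (truncation selection with elitism).
        population.sort(key=fitness, reverse=True)
        parents = population[: pop_size // 2]
        children: list[Genome] = []
        while len(parents) + len(children) < pop_size:
            a, b = rng.sample(parents, 2)
            # Crossover: uniform, each gene copied from one parent at random.
            child = [ga if rng.random() < 0.5 else gb for ga, gb in zip(a, b)]
            # Mutation: Gaussian perturbation with probability mutation_rate, clamped to [0, 1].
            child = [min(1.0, max(0.0, g + rng.gauss(0.0, 0.1))) if rng.random() < mutation_rate else g
                     for g in child]
            children.append(child)
        population = parents + children
    return max(population, key=fitness)


def toy_fitness(genome: Genome) -> float:
    """Hypothetical objective: negative squared distance to a known optimum."""
    target = (0.3, 0.7)
    return -sum((g - t) ** 2 for g, t in zip(genome, target))


best = genetic_optimize(toy_fitness, n_genes=2)
print(best)
```

Because the fitter half always survives, the best fitness never decreases from one generation to the next; in the paper’s setting, each fitness evaluation would presumably require replaying recorded walks through the particle filter, which is the expensive part.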

4. Experimental Results

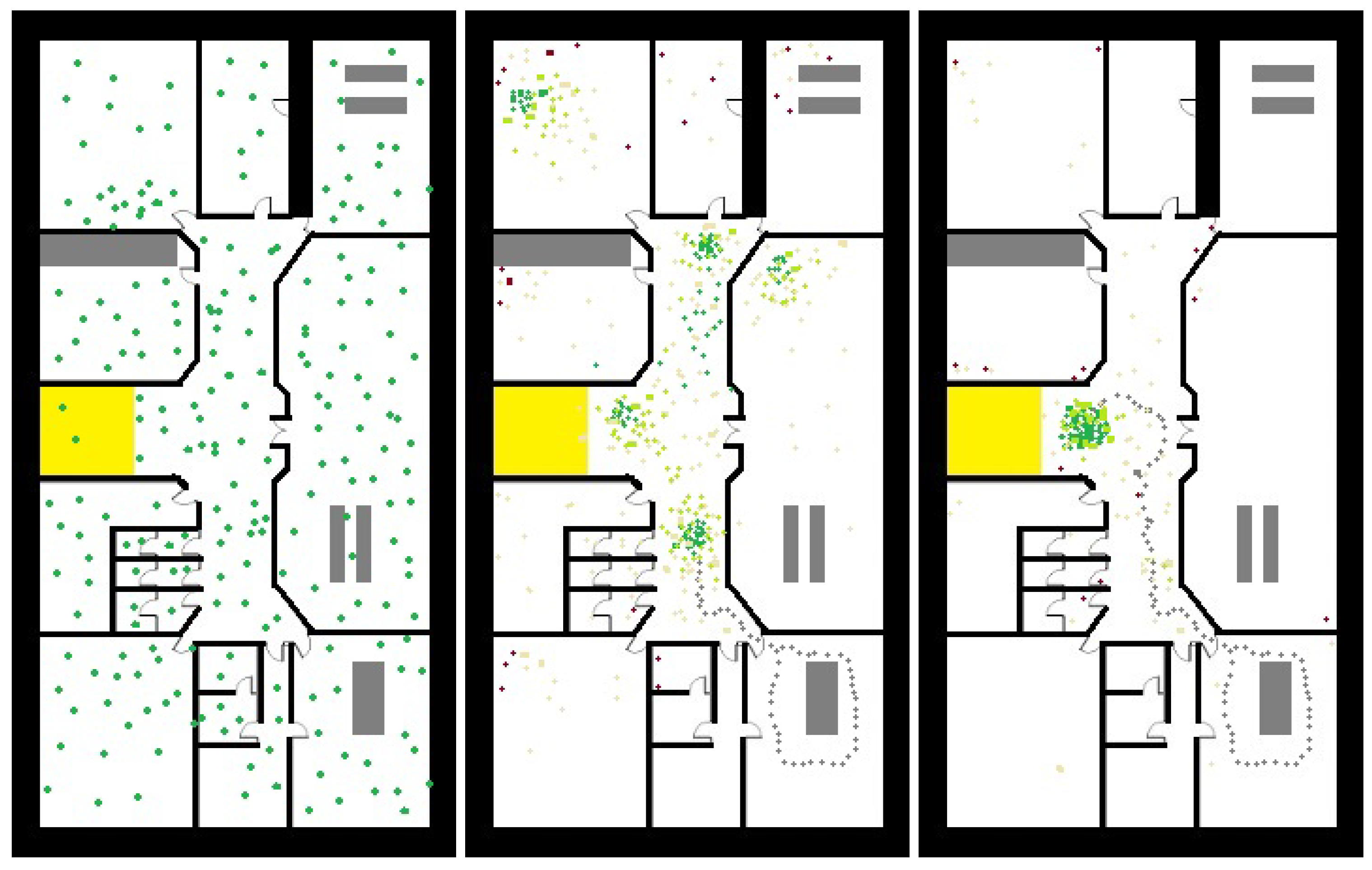

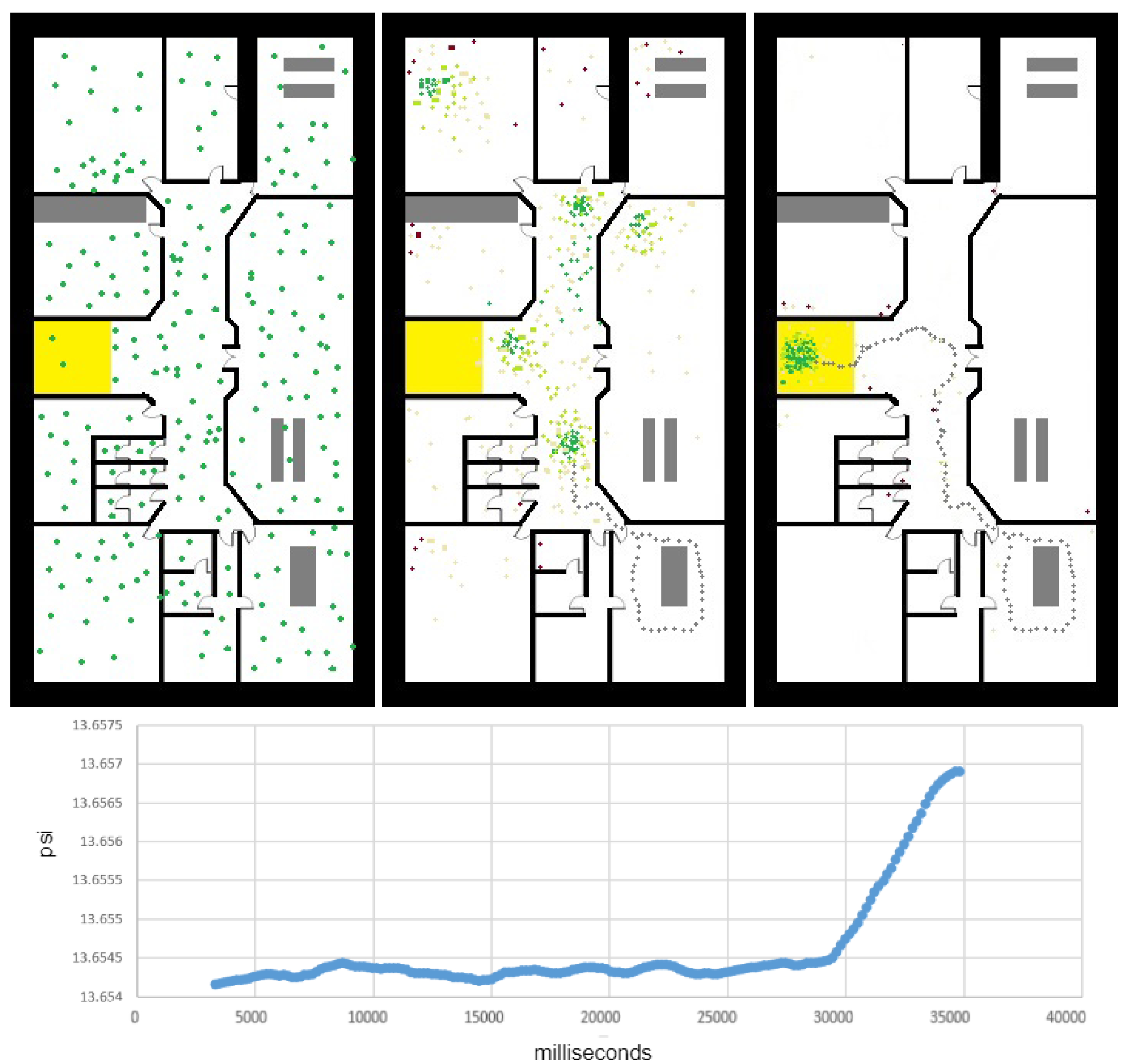

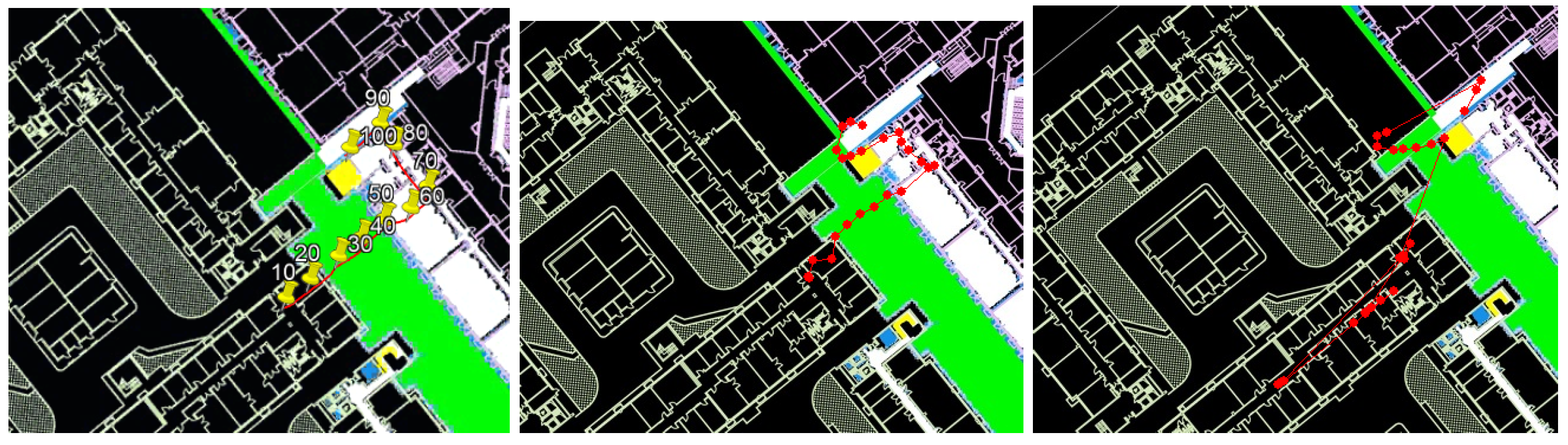

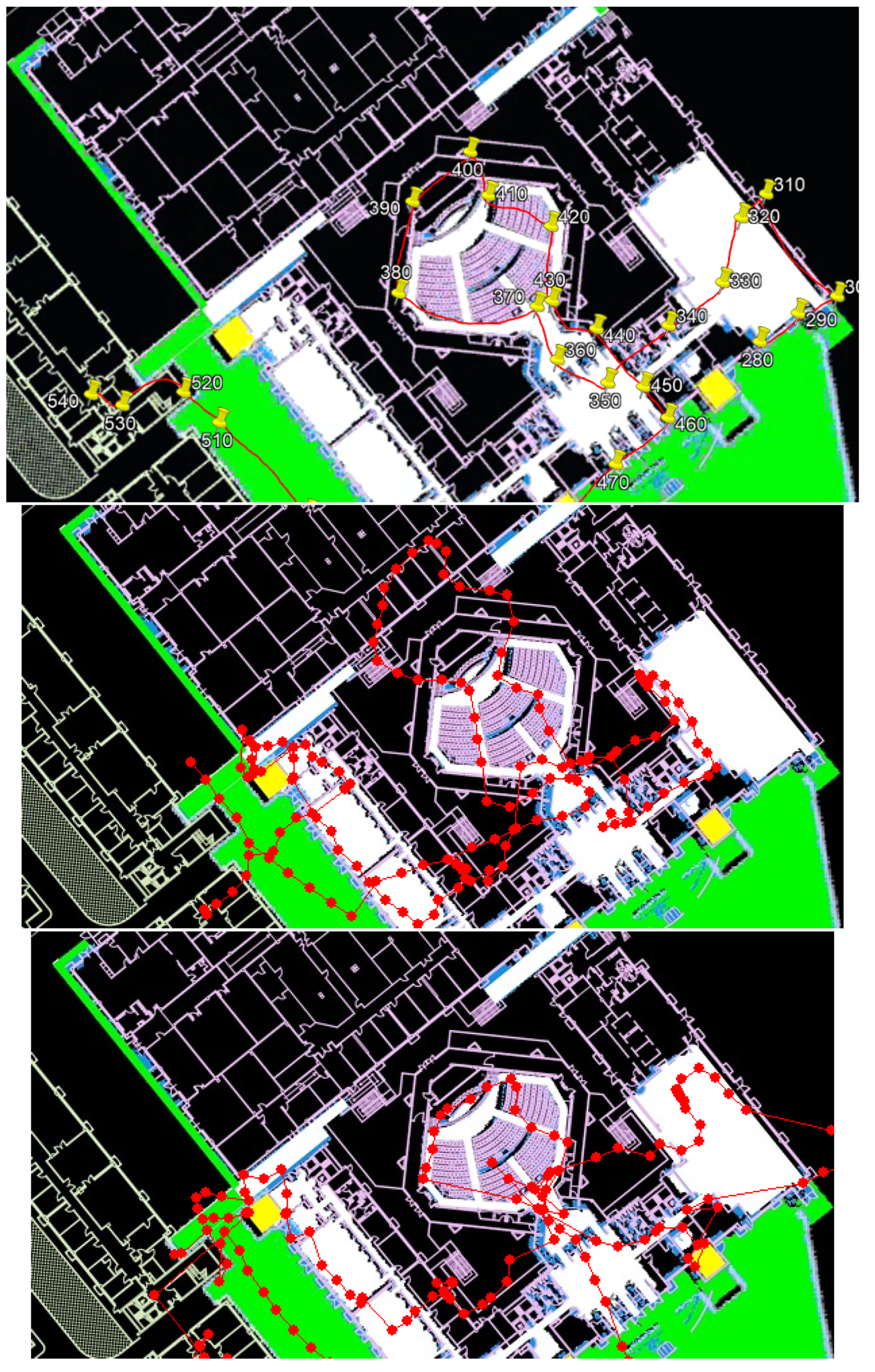

4.1. Simulation

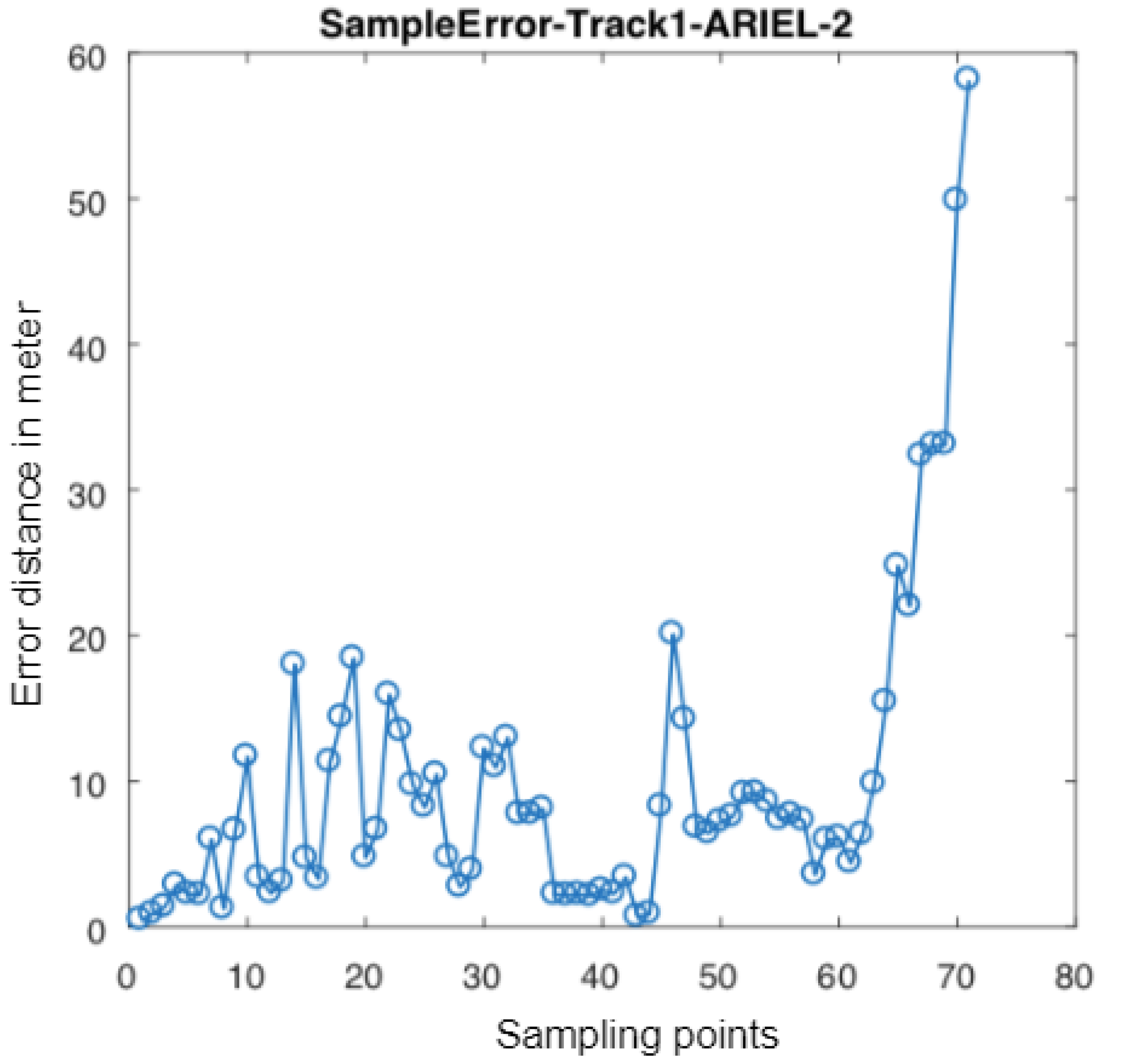

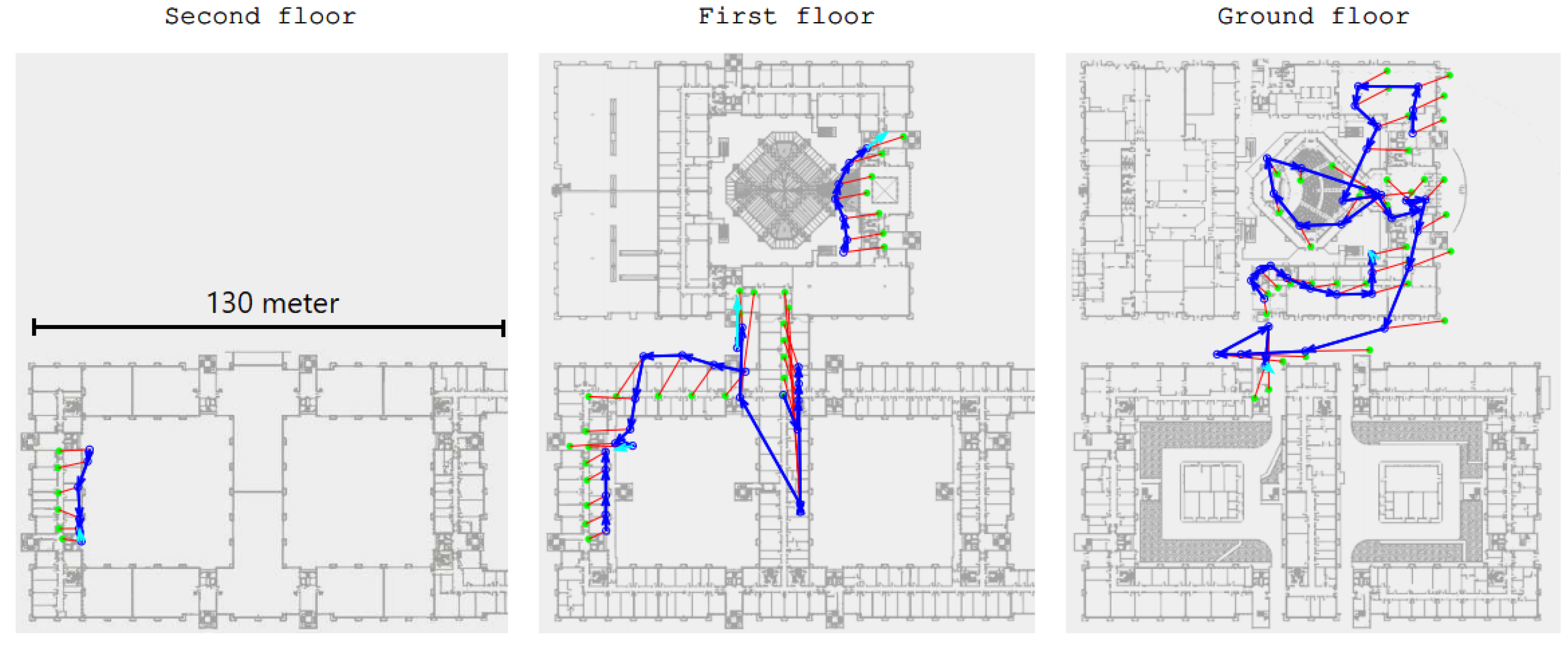

4.2. Case Study: Microsoft Indoor Localization Competition

- : The proposed system uses the mobile device’s inertial sensors to track the user’s location, with a data fusion algorithm to fuse the inertial data.

- : The proposed system exploits and geomagnetic fingerprinting alongside and computer vision techniques.

- : The proposed system uses a time-of-flight Wireless Indoor Navigation System that estimates the position of commercial off-the-shelf devices such as smartphones, tablets, and laptops using purely commercial off-the-shelf WiFi access points in near real time.

- : The proposed system is based on human stride-model analysis combined with geomagnetic positioning and WiFi fingerprinting technology.

- : The proposed system is an infrastructure-free technique that exploits the location and tracking information of and PDR with sparse geomagnetic tagging of the environment.

- : The proposed system uses a particle filter that combines RF fingerprinting, odometry, visual landmarks, and map constraints.

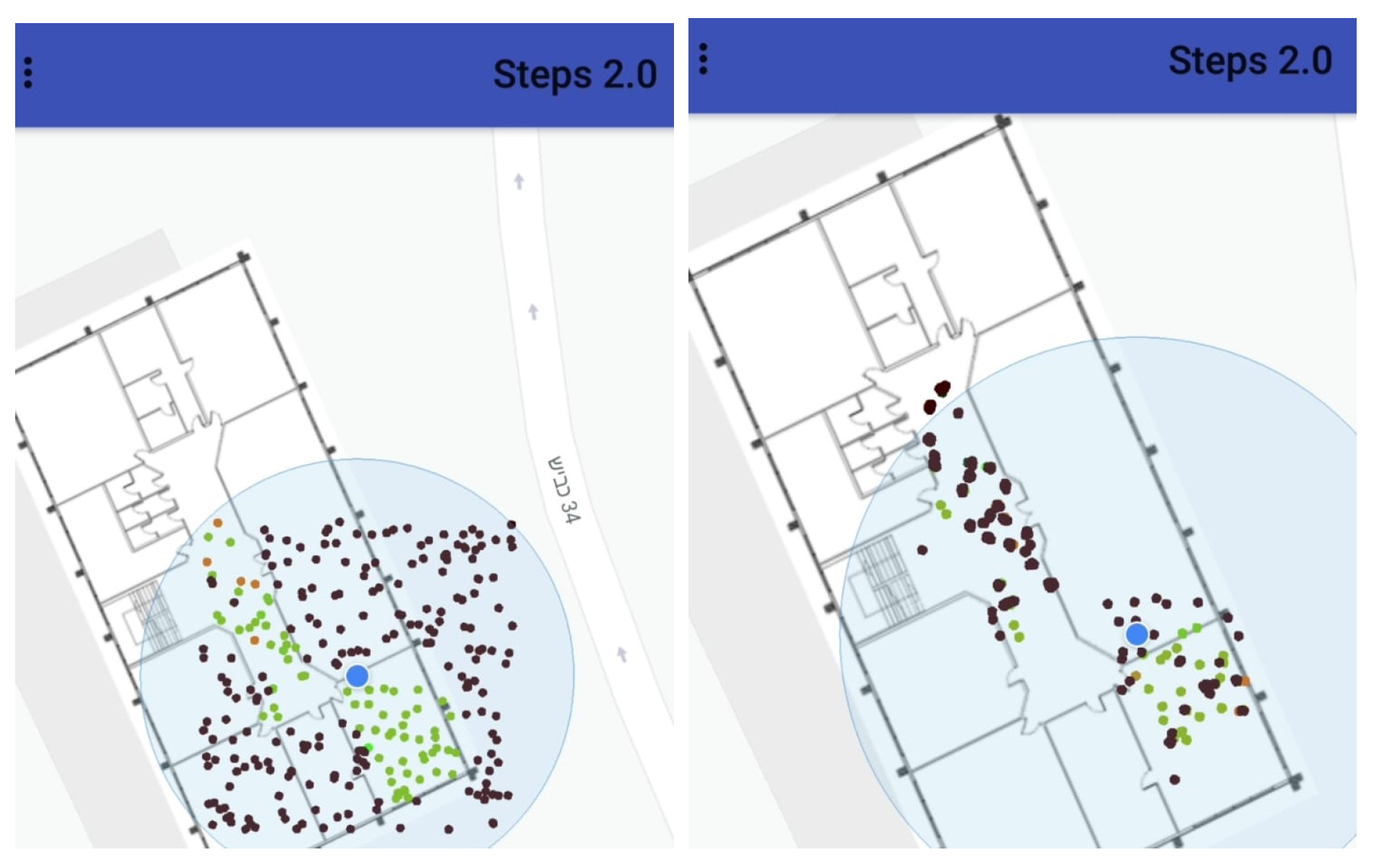

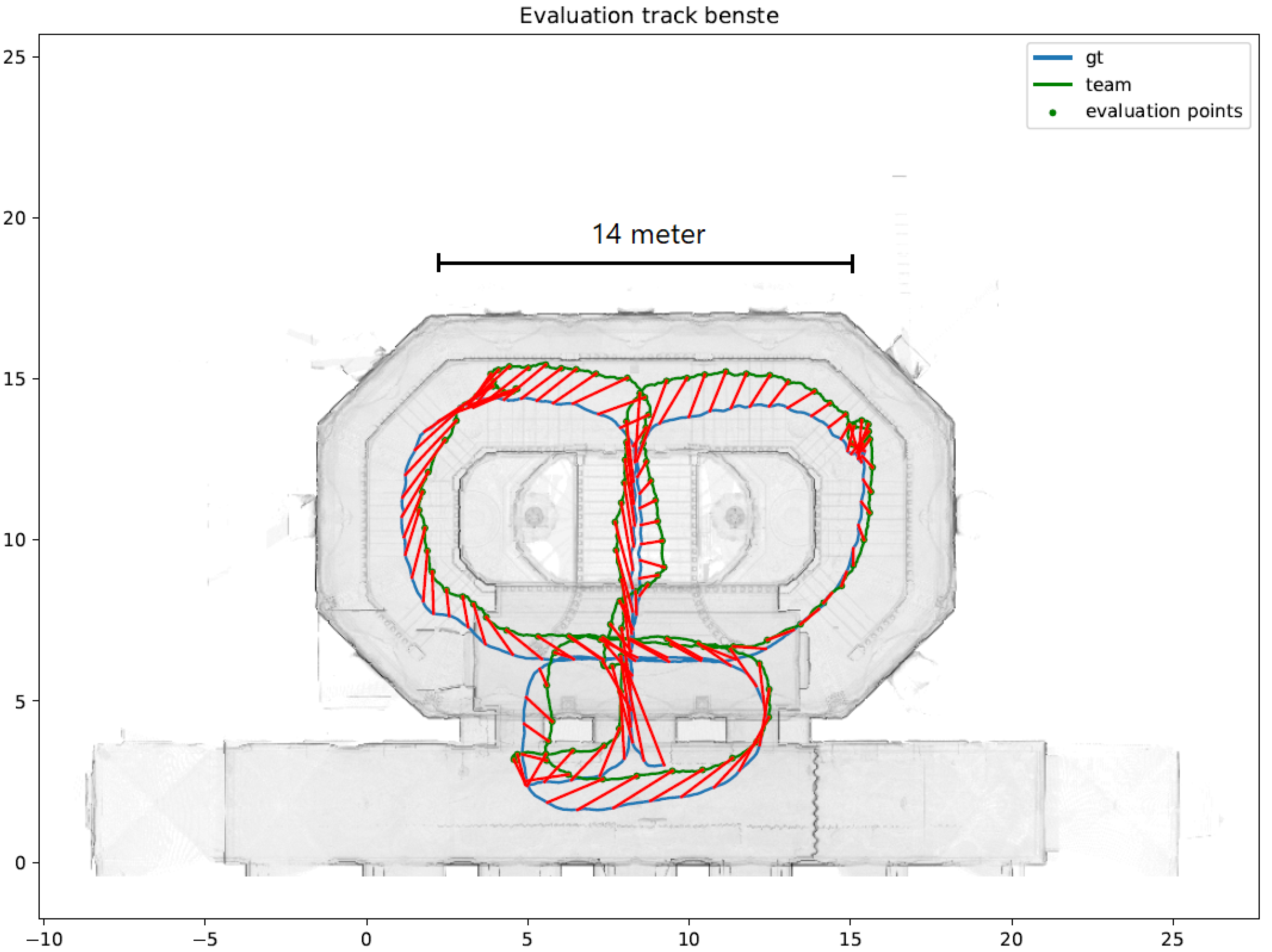

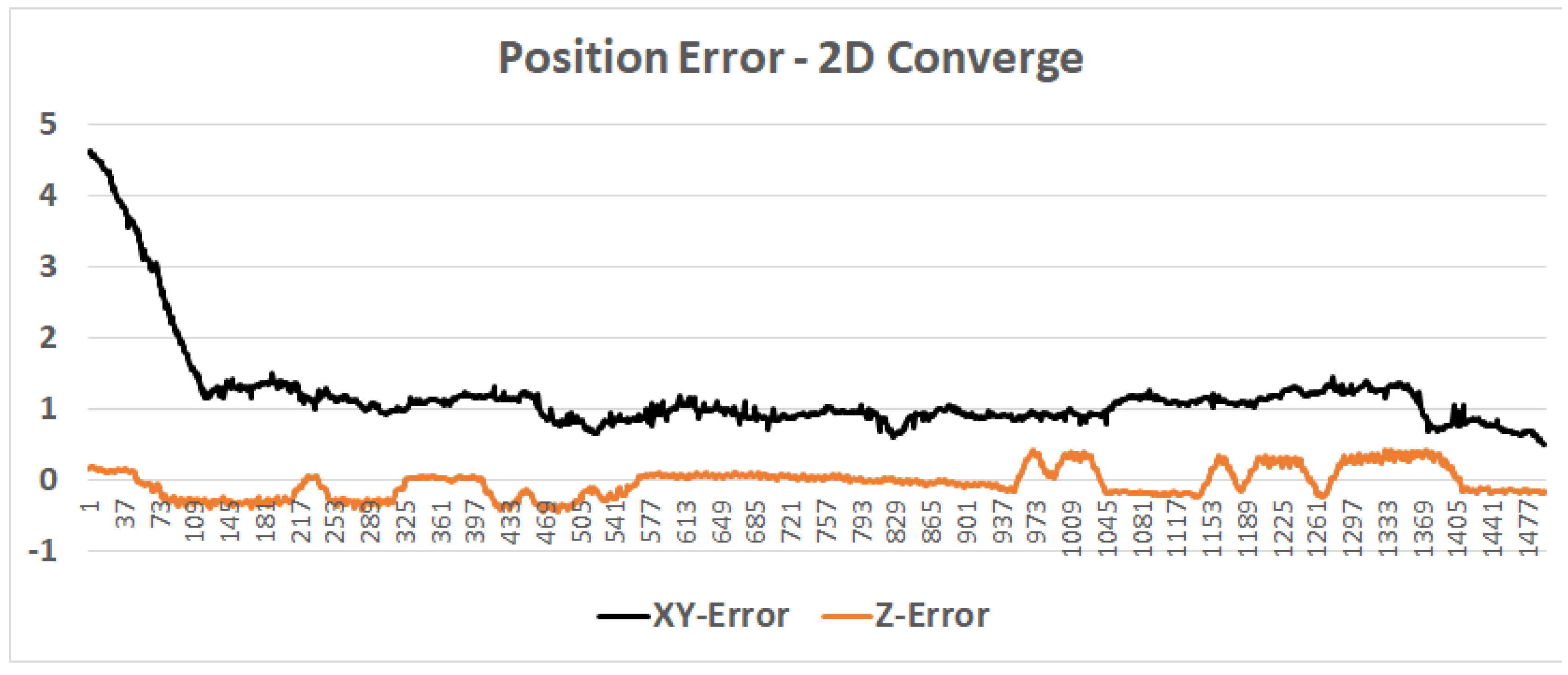

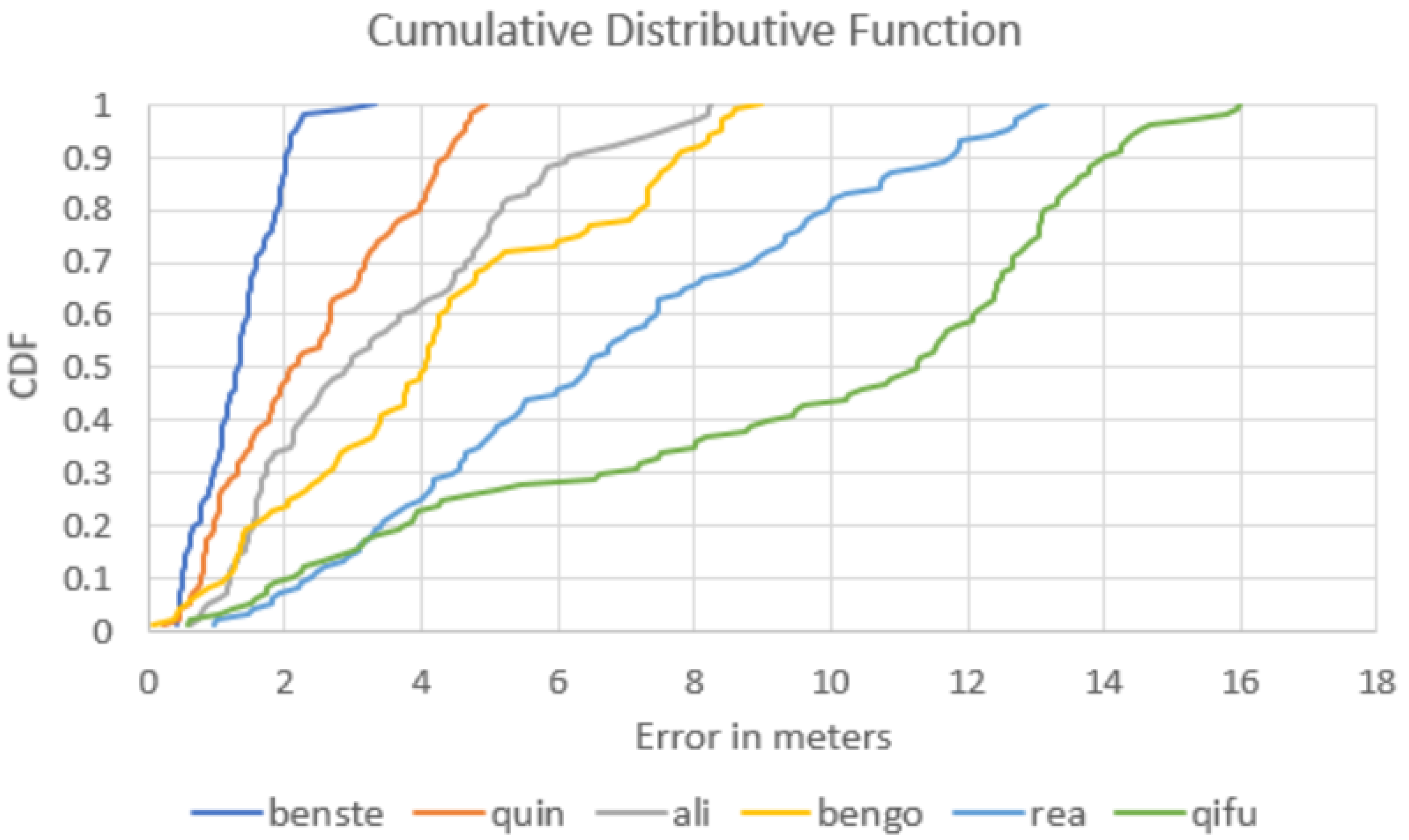

4.3. Case Study: 2018 International Conference on Indoor Positioning and Indoor Navigation (IPIN)

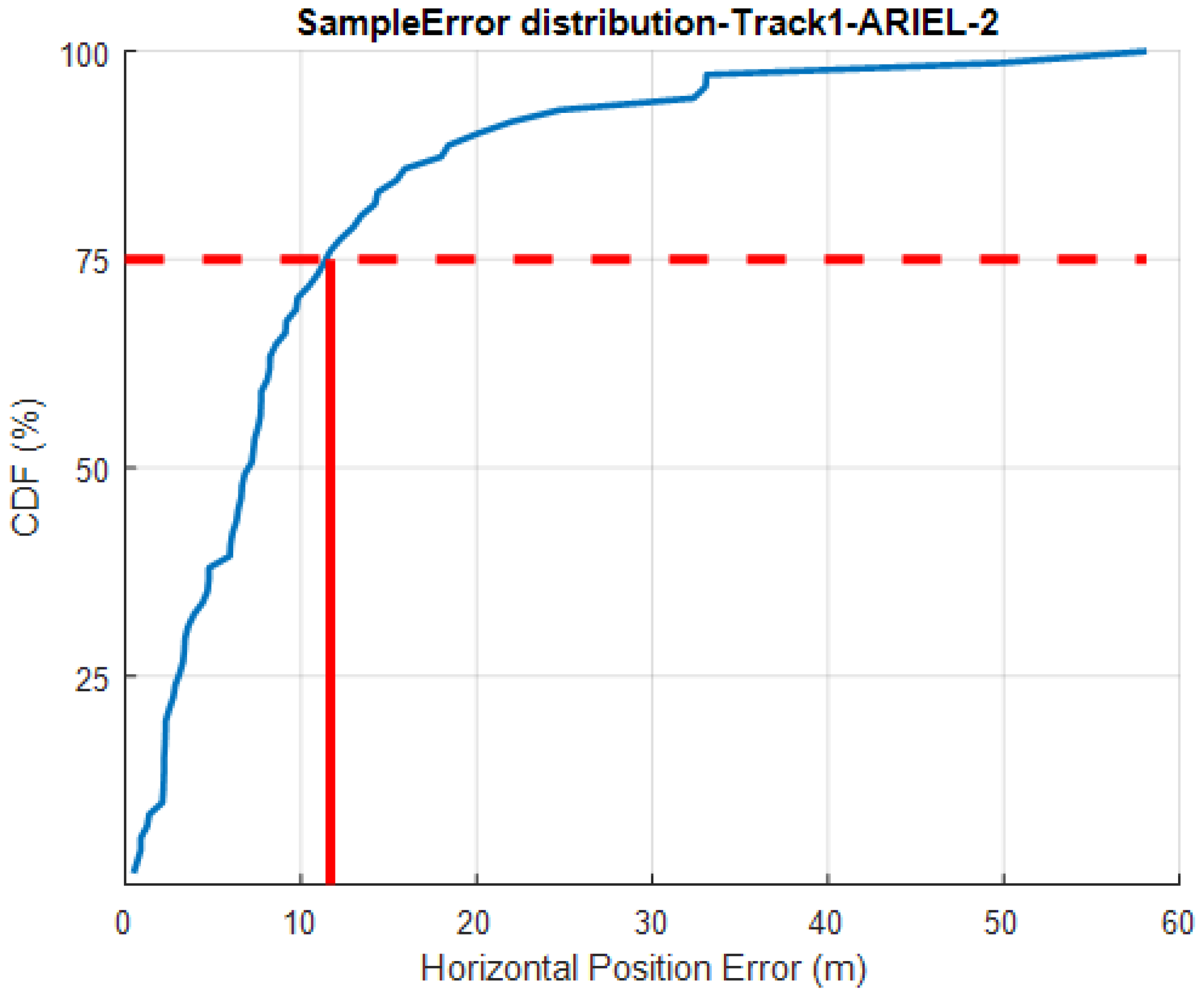

4.4. Case Study: 2019 IPIN

4.5. Energy Consumption and Privacy Evaluation

5. Discussion

Author Contributions

Funding

Conflicts of Interest

References

- Farid, Z.; Nordin, R.; Ismail, M. Recent advances in wireless indoor localization techniques and system. J. Comput. Net. Commun. 2013, 2013.

- Gu, Y.; Lo, A.; Niemegeers, I. A survey of indoor positioning systems for wireless personal networks. IEEE Commun. Surv. Tutor. 2009, 11, 13–32.

- Jiménez, A.R.; Seco, F.; Prieto, J.C.; Guevara, J. Indoor pedestrian navigation using an INS/EKF framework for yaw drift reduction and a foot-mounted IMU. In Proceedings of the 7th Workshop on Positioning, Navigation and Communication, Dresden, Germany, 11–12 March 2010; IEEE: Piscataway, NJ, USA, 2010; pp. 135–143.

- Krach, B.; Robertson, P. Cascaded estimation architecture for integration of foot-mounted inertial sensors. In Proceedings of the Position, Location and Navigation Symposium, Monterey, CA, USA, 5–8 May 2008; IEEE: Piscataway, NJ, USA, 2008; pp. 112–119.

- Krach, B.; Robertson, P. Integration of foot-mounted inertial sensors into a Bayesian location estimation framework. In Proceedings of the 5th Workshop on Positioning, Navigation and Communication, Hanover, Germany, 27 March 2008; IEEE: Piscataway, NJ, USA, 2008; pp. 55–61.

- Lymberopoulos, D.; Liu, J. The Microsoft Indoor Localization Competition: Experiences and lessons learned. IEEE Signal Process. Mag. 2017, 34, 125–140.

- Yozevitch, R.; Ben-Moshe, B. Advanced particle filter methods. In Heuristics and Hyper-Heuristics Principles and Applications; Del Ser Lorente, J., Ed.; InTech: Rijeka, Croatia, 2017.

- Davidson, P.; Piché, R. A survey of selected indoor positioning methods for smartphones. IEEE Commun. Surv. Tutor. 2016, 19, 1347–1370.

- Brena, R.F.; García-Vázquez, J.P.; Galván-Tejada, C.E.; Muñoz-Rodriguez, D.; Vargas-Rosales, C.; Fangmeyer, J. Evolution of indoor positioning technologies: A survey. J. Sens. 2017, 2017, 2630413.

- Ashraf, I.; Hur, S.; Shafiq, M.; Kumari, S.; Park, Y. GUIDE: Smartphone sensors-based pedestrian indoor localization with heterogeneous devices. Int. J. Commun. Syst. 2019, 32, e4062.

- Lachapelle, G. GNSS indoor location technologies. J. Glob. Position. Syst. 2004, 3, 2–11.

- Farshad, A.; Li, J.; Marina, M.K.; Garcia, F.J. A microscopic look at WiFi fingerprinting for indoor mobile phone localization in diverse environments. In Proceedings of the International Conference on Indoor Positioning and Indoor Navigation, Montbeliard-Belfort, France, 28–31 October 2013; p. 31.

- Del Peral-Rosado, J.A.; Castillo, R.E.I.; Míguez-Sánchez, J.; Navarro-Gallardo, M.; García-Molina, J.A.; López-Salcedo, J.A.; Seco-Granados, G.; Zanier, F.; Crisci, M. Performance analysis of hybrid GNSS and LTE Localization in urban scenarios. In Proceedings of the 2016 8th ESA Workshop on Satellite Navigation Technologies and European Workshop on GNSS Signals and Signal Processing (NAVITEC), Noordwijk, The Netherlands, 14–16 December 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 1–8.

- Vaghefi, R.M.; Buehrer, R.M. Improving positioning in LTE through collaboration. In Proceedings of the 11th Workshop on Positioning, Navigation and Communication (WPNC), Dresden, Germany, 12–13 March 2014; IEEE: Piscataway, NJ, USA, 2014; pp. 1–6.

- Mirowski, P.; Ho, T.K.; Yi, S.; MacDonald, M. SignalSLAM: Simultaneous localization and mapping with mixed WiFi, Bluetooth, LTE and magnetic signals. In Proceedings of the International Conference on Indoor Positioning and Indoor Navigation (IPIN), Montbeliard-Belfort, France, 28–31 October 2013; IEEE: Piscataway, NJ, USA, 2013; pp. 1–10.

- Zhuang, Y.; Yang, J.; Li, Y.; Qi, L.; El-Sheimy, N. Smartphone-based indoor localization with bluetooth low energy beacons. Sensors 2016, 16, 596.

- Foxlin, E. Pedestrian tracking with shoe-mounted inertial sensors. IEEE Comput. Graph. Appl. 2005, 25, 38–46.

- McCarthy, C.; Barnes, N. Performance of optical flow techniques for indoor navigation with a mobile robot. In Proceedings of the IEEE International Conference on Robotics and Automation, ICRA ’04, New Orleans, LA, USA, 26 April–1 May 2004; IEEE: Piscataway, NJ, USA, 2004; Volume 5, pp. 5093–5098.

- Wang, X.; Yu, Z.; Mao, S. Indoor localization using smartphone magnetic and light sensors: A deep LSTM approach. Mob. Netw. Appl. 2020, 25, 819–832.

- Shu, Y.; Bo, C.; Shen, G.; Zhao, C.; Li, L.; Zhao, F. Magicol: Indoor localization using pervasive magnetic field and opportunistic WiFi sensing. IEEE J. Sel. Areas Commun. 2015, 33, 1443–1457.

- Ashraf, I.; Kang, M.; Hur, S.; Park, Y. MINLOC: Magnetic Field Patterns-Based Indoor Localization Using Convolutional Neural Networks. IEEE Access 2020, 8, 66213–66227.

- Zhu, Y.; Mottaghi, R.; Kolve, E.; Lim, J.J.; Gupta, A.; Fei-Fei, L.; Farhadi, A. Target-driven visual navigation in indoor scenes using deep reinforcement learning. In Proceedings of the IEEE International Conference on Robotics and Automation, Singapore, 29 May–3 June 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 3357–3364.

- Montemerlo, M.; Thrun, S. FastSLAM: A Scalable Method for the Simultaneous Localization and Mapping Problem in Robotics; Springer: Berlin, Germany, 2007; pp. 63–90.

- Gallegos, G.; Rives, P. Indoor SLAM based on composite sensor mixing laser scans and omnidirectional images. In Proceedings of the IEEE International Conference on Robotics and Automation, Anchorage, AK, USA, 3–7 May 2010; IEEE: Piscataway, NJ, USA, 2010; pp. 3519–3524.

- Massey, B. Fast perfect weighted resampling. In Proceedings of the 2008 IEEE International Conference on Acoustics, Speech and Signal Processing, Las Vegas, NV, USA, 31 March–4 April 2008; IEEE: Piscataway, NJ, USA, 2008; pp. 3457–3460.

- Par, K.; Tosun, O. Parallelization of particle filter based localization and map matching algorithms on multicore/manycore architectures. In Proceedings of the 2011 IEEE Intelligent Vehicles Symposium (IV), Baden-Baden, Germany, 5–9 June 2011; IEEE: Piscataway, NJ, USA, 2011; pp. 820–826.

- Gong, P.; Basciftci, Y.O.; Ozguner, F. A parallel resampling algorithm for particle filtering on shared-memory architectures. In Proceedings of the 2012 IEEE 26th International Parallel and Distributed Processing Symposium Workshops & PhD Forum, Shanghai, China, 21–25 May 2012; IEEE: Piscataway, NJ, USA, 2012; pp. 1477–1483.

- Bolic, M.; Djuric, P.M.; Hong, S. Resampling algorithms and architectures for distributed particle filters. IEEE Trans. Signal Process. 2005, 53, 2442–2450.

- Valentin, J.; Dryanovski, I.; Afonso, J.; Pascoal, J.; Tsotsos, K.; Leung, M.; Schmidt, M.; Guleryuz, O.; Khamis, S.; Tankovitch, V.; et al. Depth from motion for smartphone AR. ACM Trans. Graph. (TOG) 2018, 37, 1–19.

- Feigl, T.; Porada, A.; Steiner, S.; Löffler, C.; Mutschler, C.; Philippsen, M. Localization Limitations of ARCore, ARKit, and Hololens in Dynamic Large-scale Industry Environments. In Proceedings of the 15th International Conference on Computer Graphics Theory and Applications, Valletta, Malta, 27–29 February 2020; pp. 307–318.

- Thrun, S.; Burgard, W.; Fox, D. Probabilistic Robotics; MIT Press: Cambridge, MA, USA, 2005.

- Thrun, S.; Fox, D.; Burgard, W.; Dellaert, F. Robust Monte Carlo localization for mobile robots. Artif. Intell. 2001, 128, 99–141.

- Yozevitch, R.; Moshe, B.B.; Weissman, A. A robust GNSS LOS/NLOS signal classifier. Navigation 2016, 63, 429–442.

- Whitley, D. A genetic algorithm tutorial. Stat. Comput. 1994, 4, 65–85.

- Vlad Landa; Boaz Ben-Moshe, N.S.S.H. GoIn: An accurate 3D indoor navigation framework for mobile devices. In Proceedings of the 9th International Conference on Indoor Positioning and Indoor Navigation, Nantes, France, 24–27 September 2018.

Sensor List

| Sensor Type | Main Use for Positioning | Sampling Rate | Expected Error |
|---|---|---|---|
| Magnetometer (compass) | Orientation: Yaw - E-compass | 10 Hz | [2–10] degrees |
| Magnetometer (strength) | Magnetic Field | 10 Hz | NA |
| IMU | Orientation: Pitch and Roll | 50 Hz | [1–2] degrees |
| Barometer | Relative Elevation | 10 Hz | [0.3–1.0] m (relative) |
| Camera | Optical flow | 30 Hz | [1–3]% (relative) |
| Range | Distance from wall or floor | 10 Hz | 2% up to 4 m |
| GNSS | Indoor Global Position | 1 Hz | [5–50] m (indoors) |
| BLE | RSSI Fingerprinting | 1 Hz | [3–10] m |
| WiFi | RSSI Fingerprinting | [0.3–1] Hz | [3–15] m |
| 4G | RSSI Fingerprinting | 1 Hz | [10–50] m |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Landau, Y.; Ben-Moshe, B. STEPS: An Indoor Navigation Framework for Mobile Devices. Sensors 2020, 20, 3929. https://doi.org/10.3390/s20143929


Landau, Yael, and Boaz Ben-Moshe. 2020. "STEPS: An Indoor Navigation Framework for Mobile Devices" Sensors 20, no. 14: 3929. https://doi.org/10.3390/s20143929
