A Novel Statistical Method for Scene Classification Based on Multi-Object Categorization and Logistic Regression

Abstract

1. Introduction

- To the best of our knowledge, this is the first work to combine object signatures, local descriptors, and multiple kernel learning for object categorization with multi-class logistic regression for scene classification.

- Fusion of geometric and SIFT feature descriptors for object and scene classification.

- Accurate multiple region extraction and label indexing of complex scene datasets.

- Significant improvement in object and scene classification accuracy, with less computational time than other state-of-the-art methods.

2. Related Work

2.1. Object Segmentation

2.2. Single/Multiple Object Categorization

2.3. Scene Classification

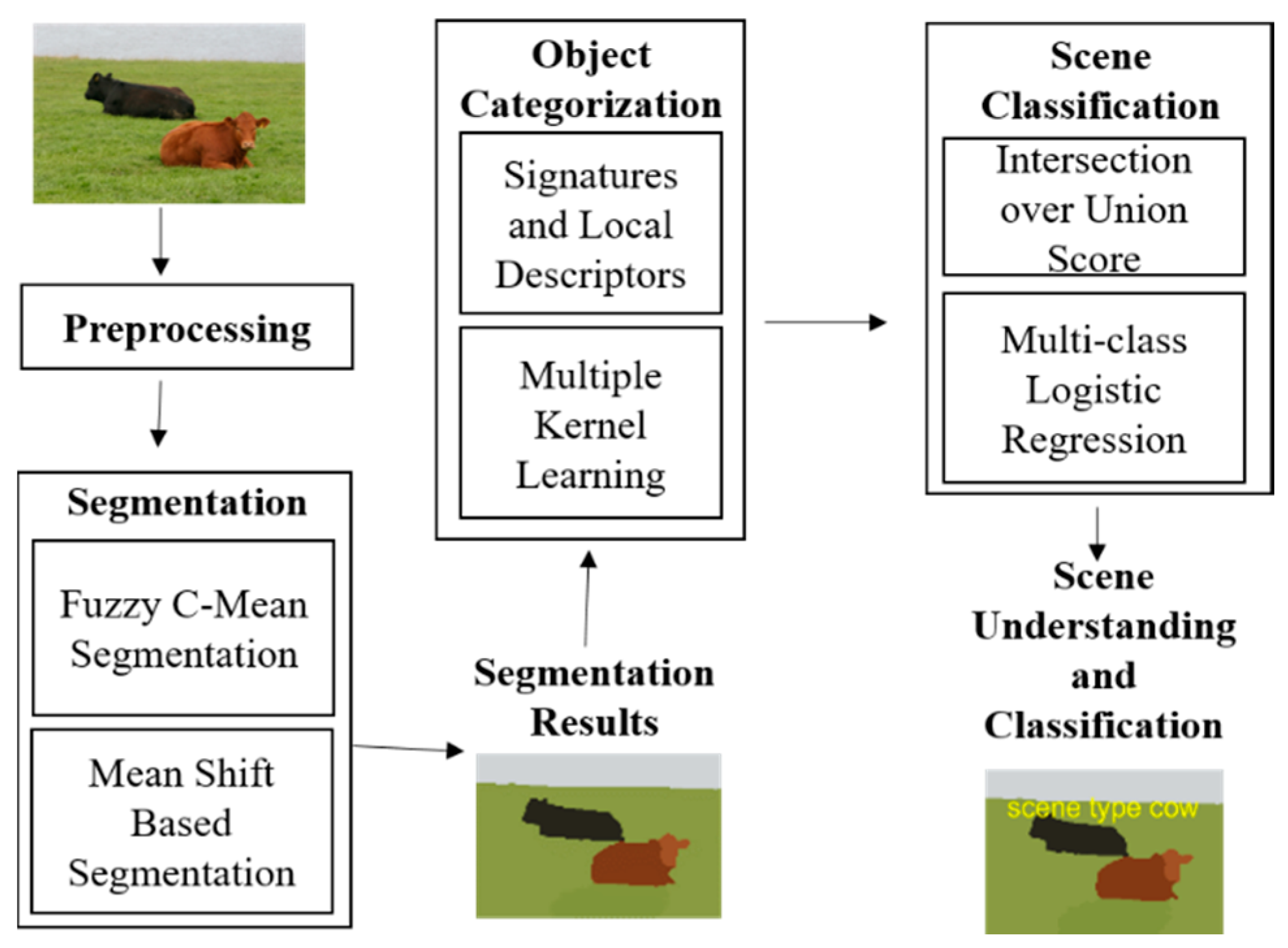

3. Overview of Solution Framework

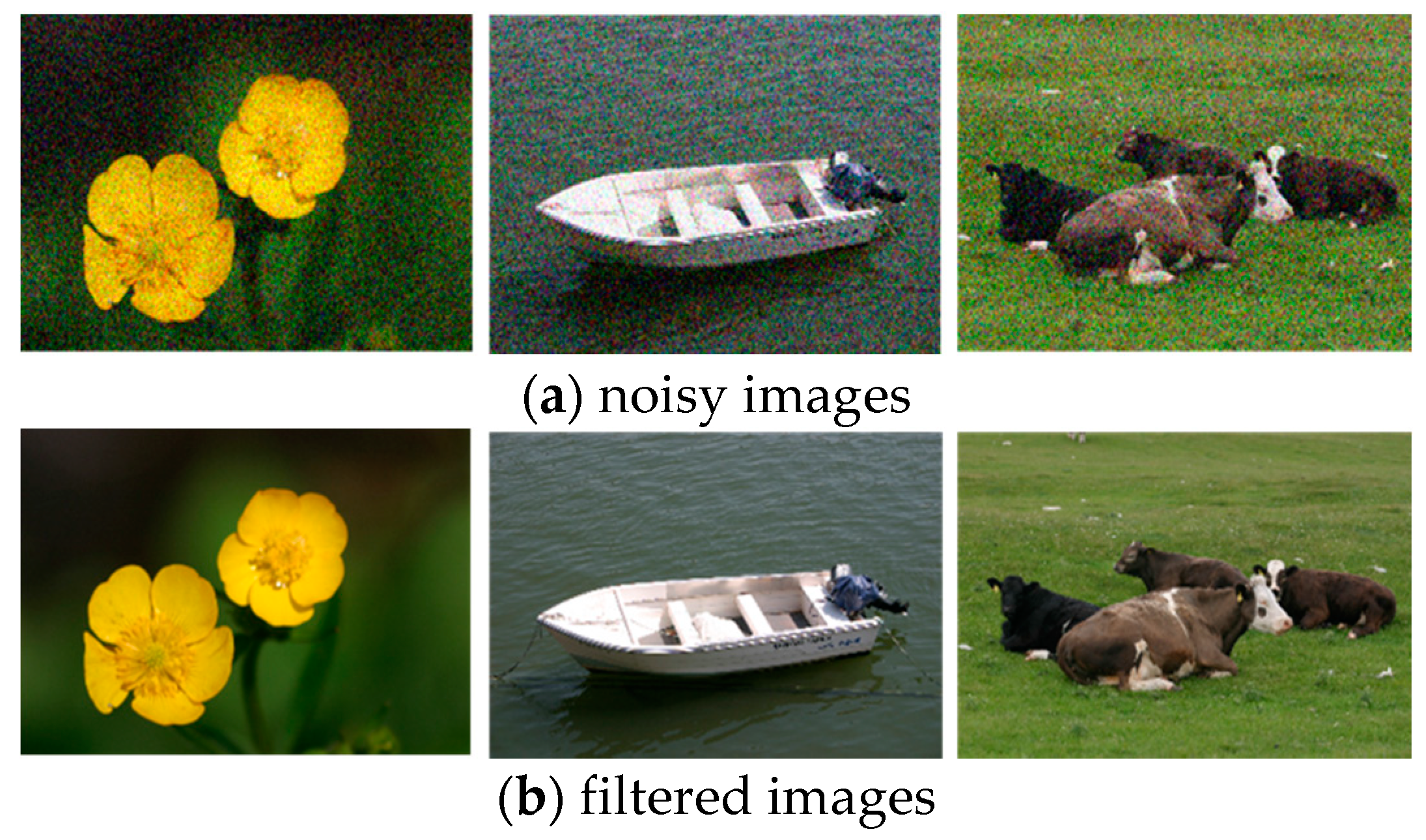

3.1. Preprocessing and Normalization

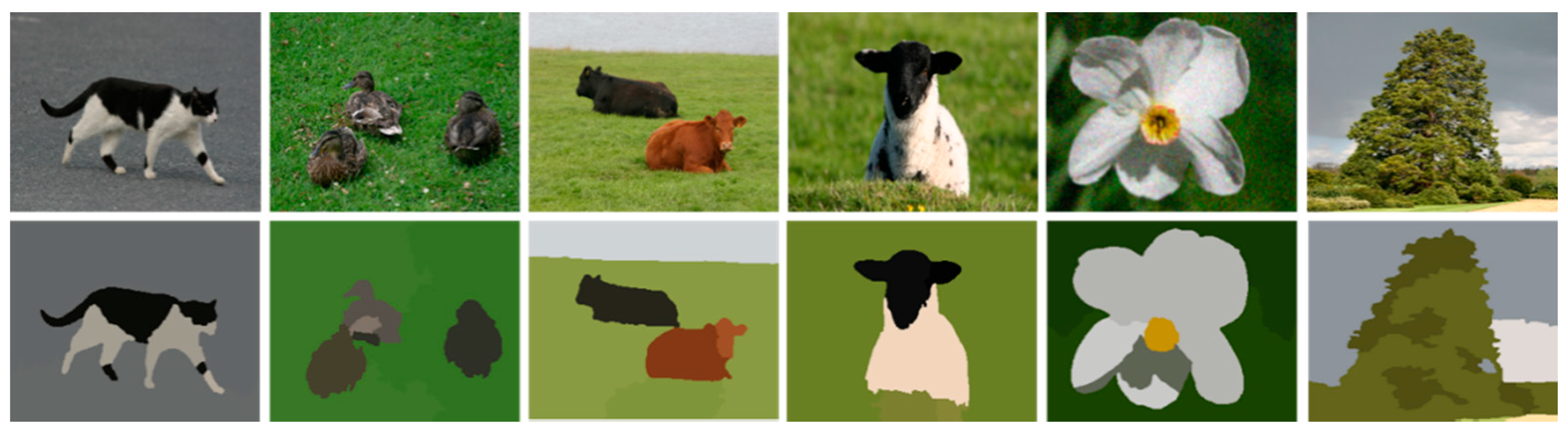

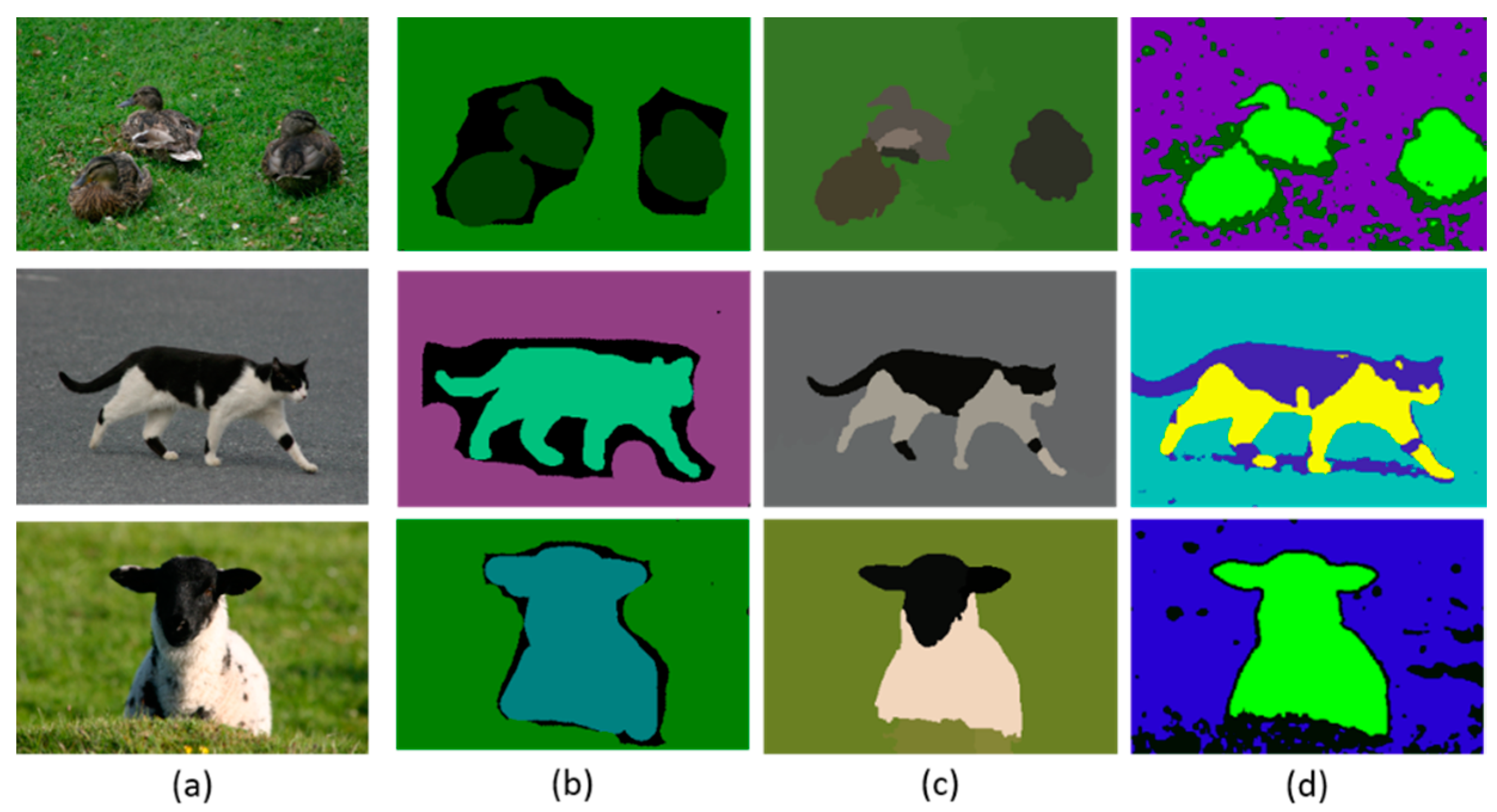

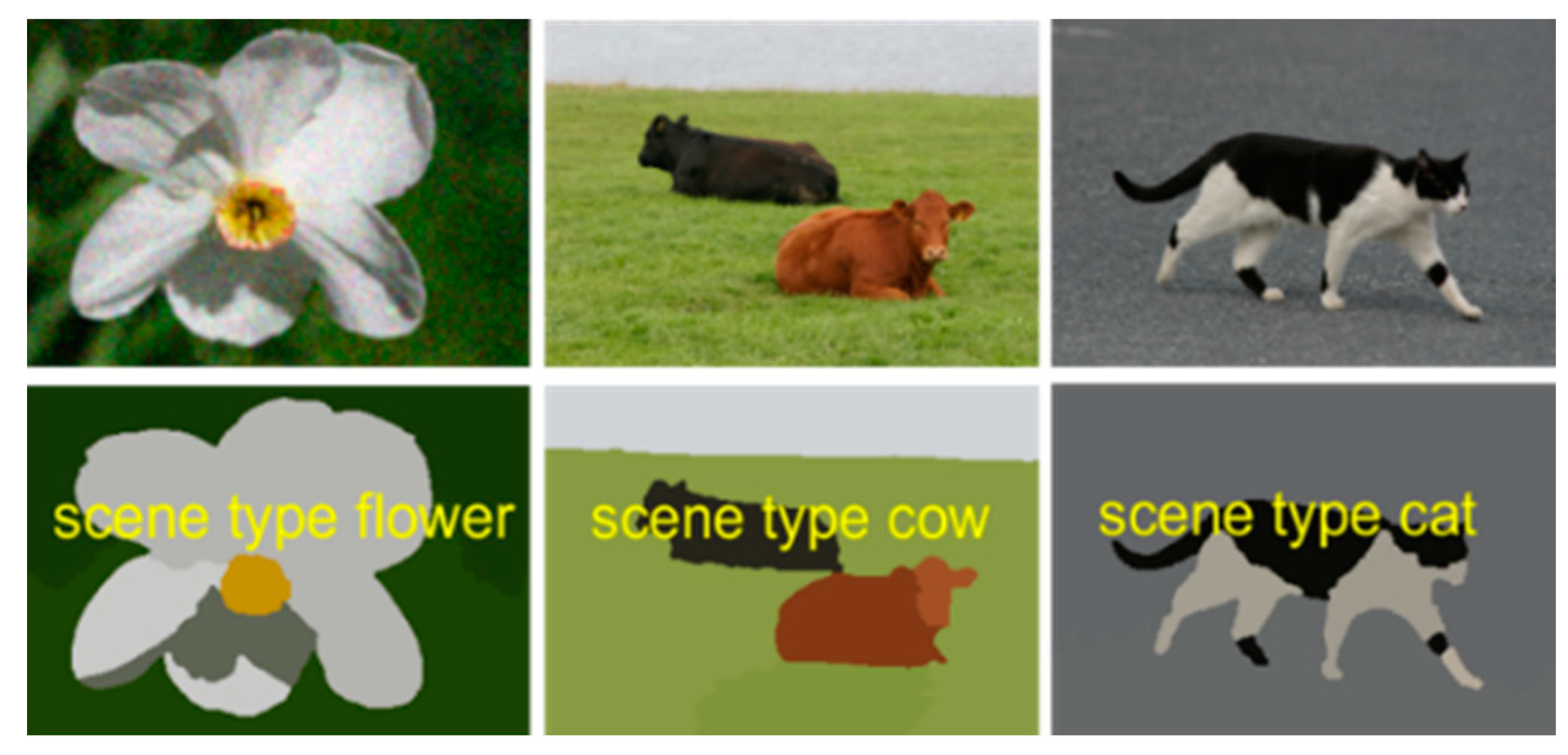

3.2. Single/Multiple Object Segmentation

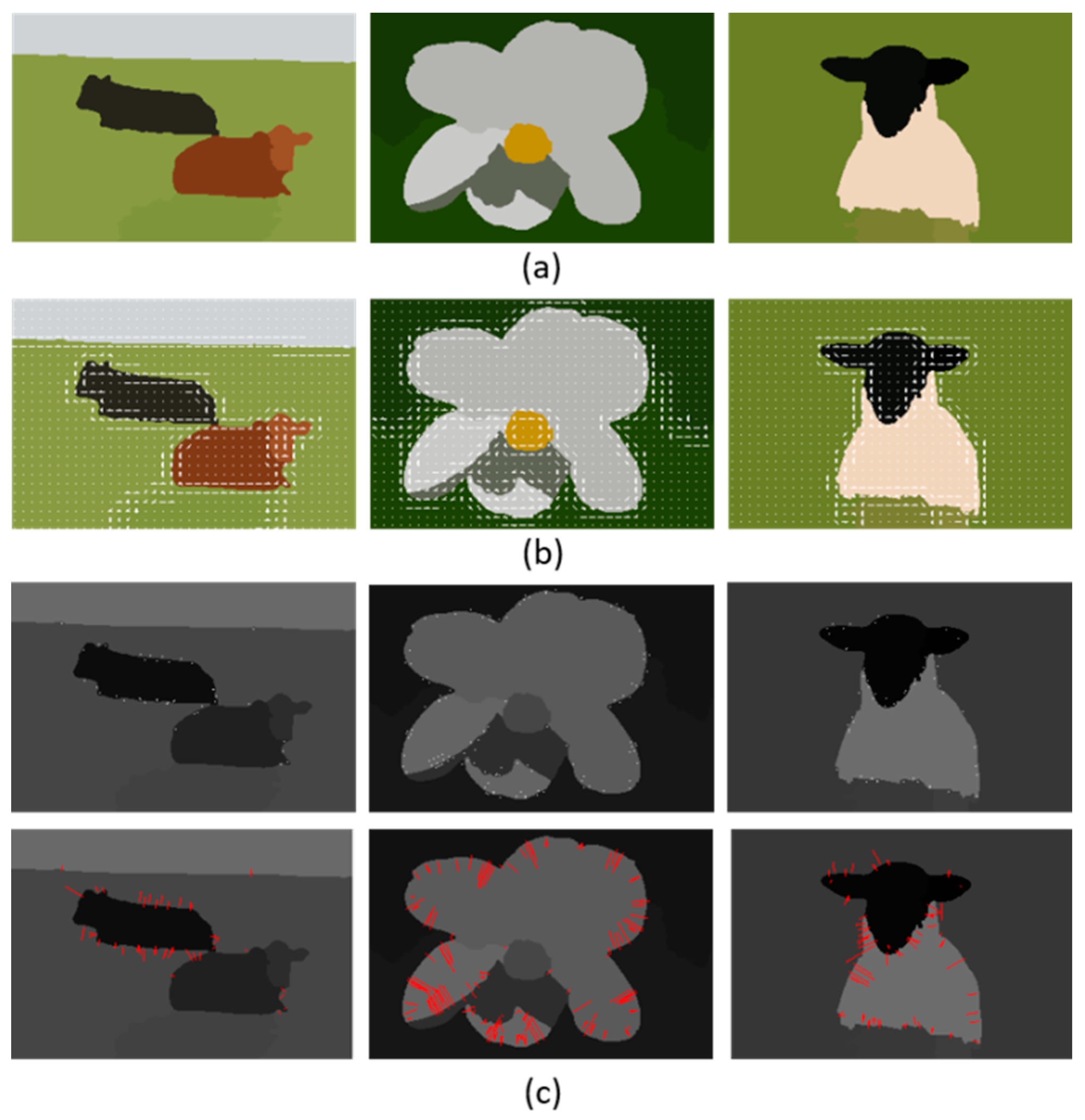

3.2.1. Modified Fast Super-pixel Based Fuzzy C-Mean Segmentation (MFCS)

| Algorithm 1. Pseudocode of the MFCS algorithm |
|---|
| Initialize the clusters randomly |
| Calculate the centers of the clusters |
| **while** the objective function has not reached its minimum **do** |
| **for** each data point in the image **do** |
| Step 1. Measure the membership of the data point in each cluster |
| Step 2. Update the cluster centers |
| **end for** |
| **end while** |
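The loop in Algorithm 1 can be illustrated with a minimal sketch of standard fuzzy c-means in NumPy. This is an assumption-laden illustration, not the authors' modified super-pixel variant: the function name `fuzzy_c_means`, the fuzzifier `m`, the tolerance `tol`, and the stopping rule (change in the objective below `tol`) are hypothetical choices made here for clarity.

```python
import numpy as np


def fuzzy_c_means(points, n_clusters, m=2.0, tol=1e-6, max_iter=200, seed=0):
    """Standard fuzzy c-means on an (n_samples, n_features) array.

    Illustrative only: plain FCM, not the modified super-pixel version.
    Returns (centers, memberships).
    """
    rng = np.random.default_rng(seed)

    def update_centers(u):
        # Each center is the mean of all points weighted by membership^m.
        w = u ** m
        return (w.T @ points) / w.sum(axis=0)[:, None]

    def distances(centers):
        # Euclidean distance from every point to every center, floored
        # to avoid division by zero when a point sits on a center.
        d = np.linalg.norm(points[:, None, :] - centers[None, :, :], axis=2)
        return np.maximum(d, 1e-12)

    # Initialize the clusters randomly: random memberships, rows sum to 1.
    u = rng.random((points.shape[0], n_clusters))
    u /= u.sum(axis=1, keepdims=True)
    # Calculate the initial cluster centers.
    centers = update_centers(u)

    prev_obj = np.inf
    for _ in range(max_iter):
        # Step 1: membership of every point in every cluster,
        # u_ik proportional to d_ik^(-2 / (m - 1)).
        inv = distances(centers) ** (-2.0 / (m - 1.0))
        u = inv / inv.sum(axis=1, keepdims=True)
        # Step 2: update the cluster centers.
        centers = update_centers(u)
        # Objective: membership-weighted sum of squared distances.
        obj = np.sum((u ** m) * distances(centers) ** 2)
        if abs(prev_obj - obj) < tol:  # assumed stopping rule
            break
        prev_obj = obj
    return centers, u
```

For image segmentation, `points` would hold one feature vector per pixel (or per super-pixel), and a hard label map can be obtained with `u.argmax(axis=1)`.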

3.2.2. Mean Shift-Based Segmentation (MSS)

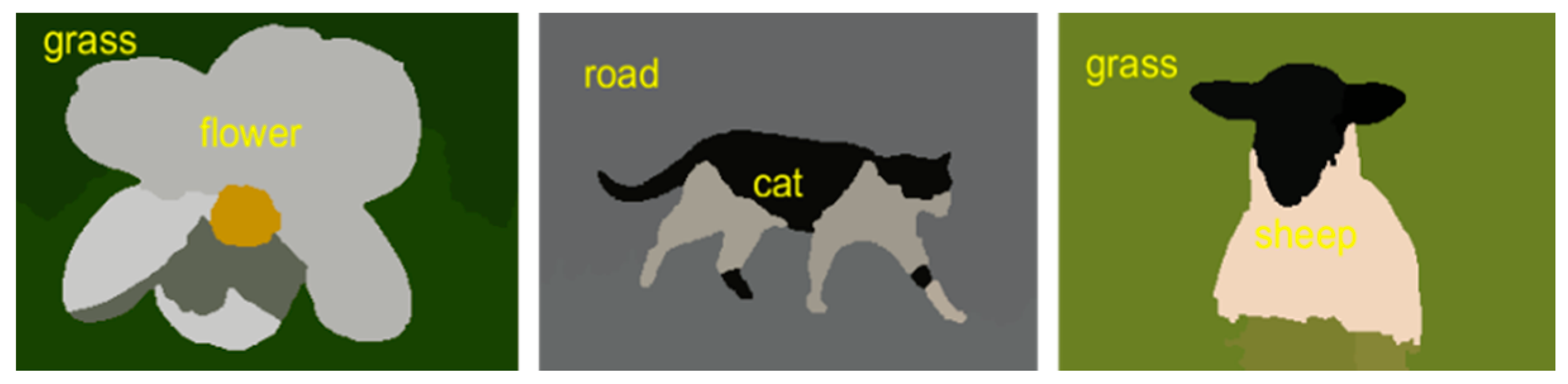

3.3. Object Categorization

3.4. Scene Classification

3.4.1. Expected Intersection over Union score (EIOU)

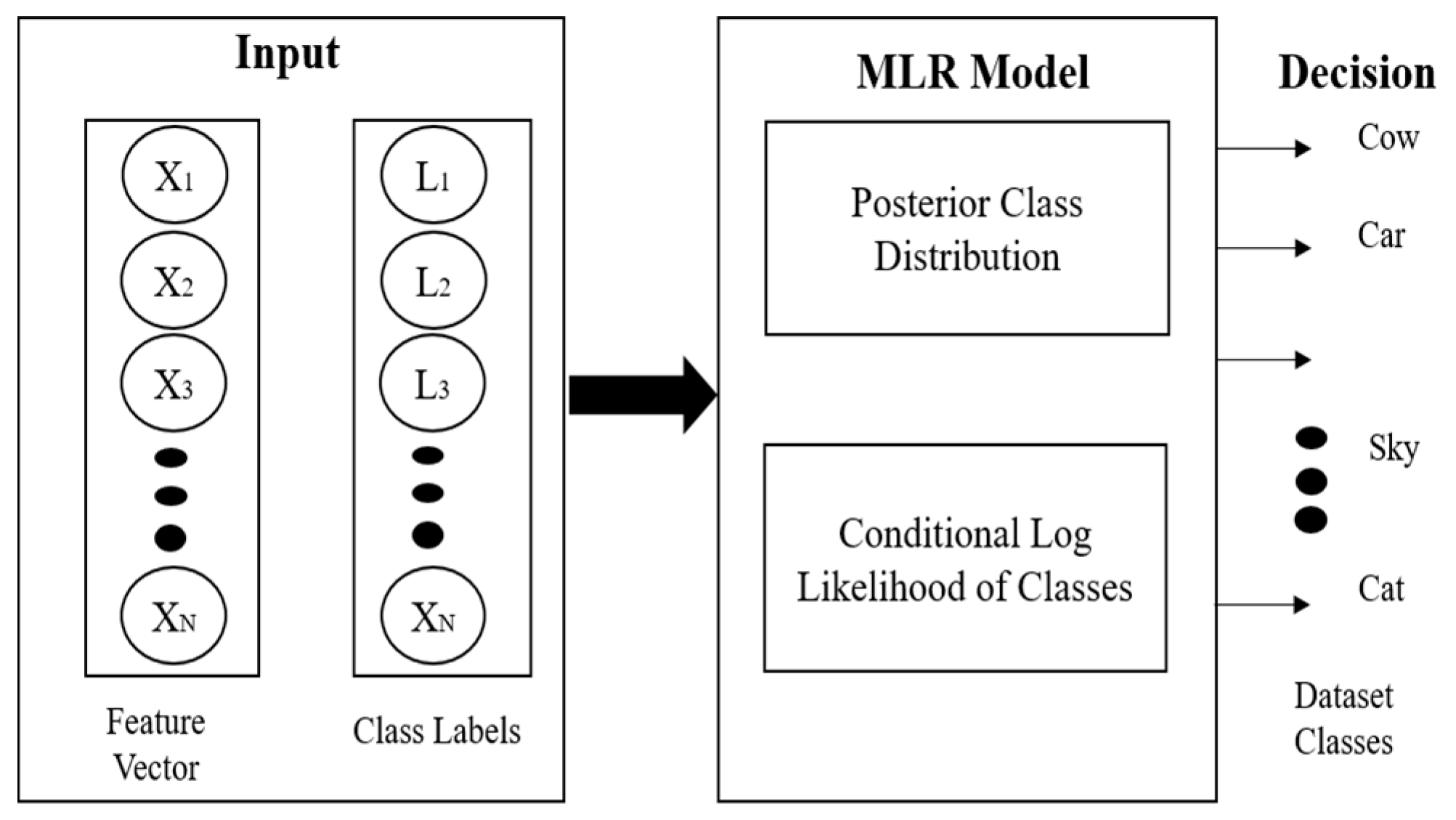

3.4.2. Multi-Class Logistic Regression (McLR)

4. Experimental Setup and Evaluation

4.1. Dataset Descriptions

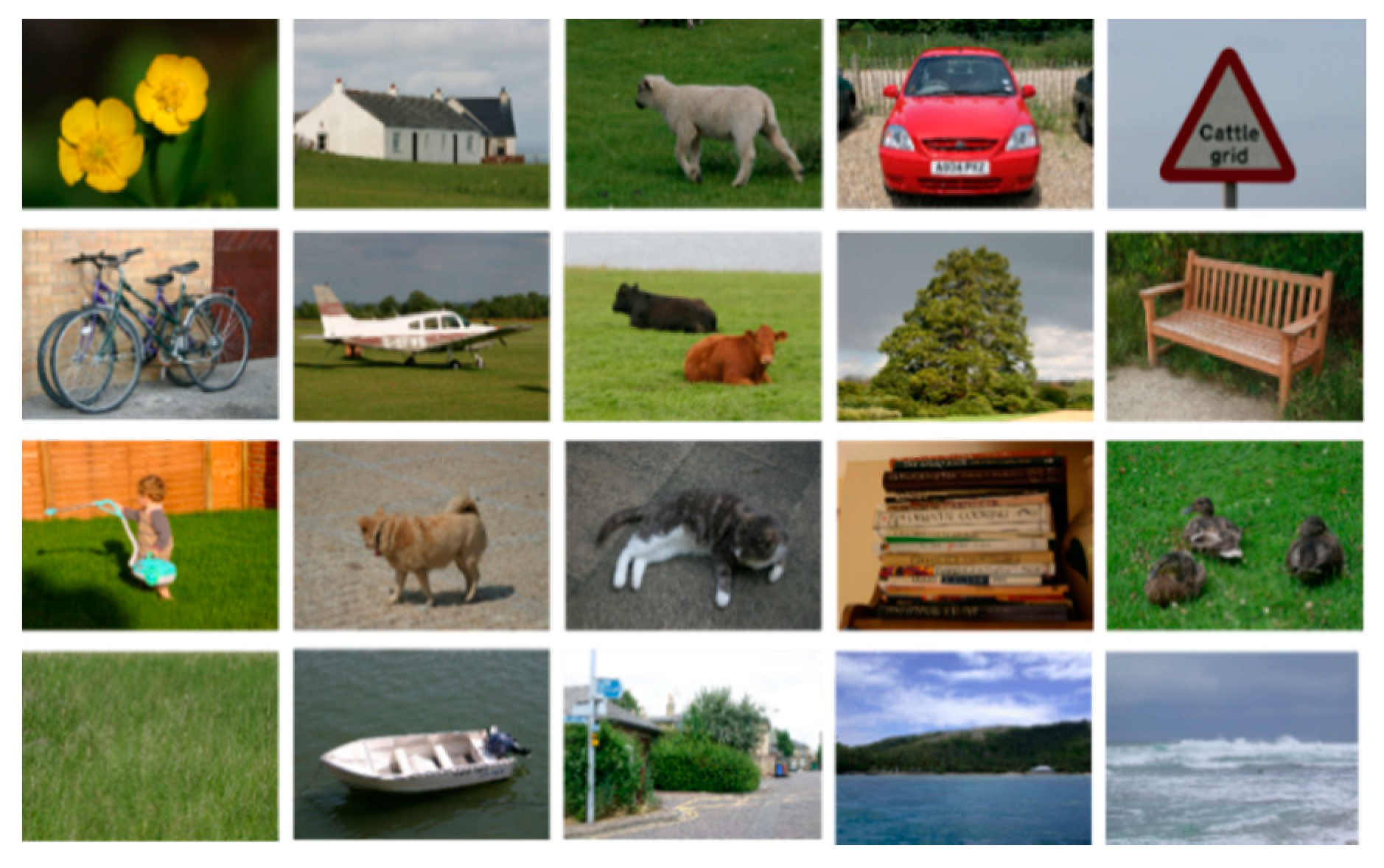

4.1.1. MSRC Dataset

4.1.2. Corel-10k Dataset

4.1.3. CVPR 67 Indoor Scene Dataset

4.2. Experimental Results

4.2.1. Experiment 1: Using the MSRC Dataset

4.2.2. Experiment 2: Using the Corel-10k Dataset

4.2.3. Experiment 3: Using the CVPR 67 Indoor Scene Dataset

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

1. Liu, Y.; Gu, Y.; Yan, F.; Zhuang, Y. Outdoor Scene Understanding Based on Multi-Scale PBA Image Features and Point Cloud Features. Sensors 2019, 19, 4546.

2. Chen, C.; Li, S.; Fu, X.; Ren, Y.; Chen, Y.; Kuo, C.C.J. Exploring confusing scene classes for the places dataset: Insights and solutions. In Proceedings of the Asia-Pacific Signal and Information Processing Association Annual Summit and Conference, Kuala Lumpur, Malaysia, 12 December 2017; pp. 550–558.

3. Chen, L.; Cui, X.; Li, Z.; Yuan, Z.; Xing, J.; Xing, X.; Jia, Z. A New Deep Learning Algorithm for SAR Scene Classification Based on Spatial Statistical Modeling and Features Re-Calibration. Sensors 2019, 19, 2479.

4. Chen, C.; Ren, Y.; Kuo, C.C.J. Outdoor scene classification using labeled segments. In Big Visual Data Analysis; Springer: Singapore, 2016; pp. 65–92.

5. Susan, S.; Agrawal, P.; Mittal, M.; Bansal, S. New shape descriptor in the context of edge continuity. CAAI Trans. Intell. Technol. 2019, 4, 101–109.

6. Chen, C.; Ren, Y.; Kuo, C.C.J. Large-scale indoor/outdoor image classification via expert decision fusion (edf). In Proceedings of the Asian Conference on Computer Vision, Los Angeles, CA, USA, 1 November 2014; pp. 426–442.

7. Zhang, C.; Cheng, J.; Li, L.; Li, C.; Tian, Q. Object categorization using class-specific representations. IEEE Trans. Neu. Net. Learn. Sys. 2017, 29, 4528–4534.

8. Rafique, A.A.; Jalal, A.; Ahmed, A. Scene Understanding and Recognition: Statistical Segmented Model using Geometrical Features and Gaussian Naïve Bayes. In Proceedings of the IEEE conference on International Conference on Applied and Engineering Mathematics, Texila, Pakistan, 27 August 2019; pp. 225–230.

9. Jalal, A.; Kamal, S.; Kim, D. A depth video sensor-based life-logging human activity recognition system for elderly care in smart indoor environments. Sensors 2014, 14, 11735–11759.

10. Shokri, M.; Tavakoli, K. A review on the artificial neural network approach to analysis and prediction of seismic damage in infrastructure. Int. J. Hydromechatron. 2019, 4, 178–196.

11. Sezgin, M.; Sankur, B. Survey over image thresholding techniques and quantitative performance evaluation. J. Elect. Imaging 2004, 13, 46–166.

12. Sujji, G.E.; Lakshmi, Y.V.S.; Jiji, G.W. MRI brain image segmentation based on thresholding. Int. J. Adv. Comput. Res. 2013, 3, 97.

13. Bi, S.; Liang, D. Human segmentation in a complex situation based on properties of the human visual system. Intell. Control Autom. 2006, 2, 9587–9590.

14. Yan, M.; Cai, J.; Gao, J.; Luo, L. K-means cluster algorithm based on color image enhancement for cell segmentation. In Proceedings of the 5th International Conference on BioMedical Engineering and Informatics, Chongqing, China, 16 October 2012; pp. 295–299.

15. Kamdi, S.; Krishna, R.K. Image segmentation and region growing algorithm. Int. J. Comput. Technol. Elect. Eng. 2012, 2, 103–107.

16. Wong, S.C.; Stamatescu, V.; Gatt, A.; Kearney, D.; Lee, I.; McDonnell, M.D. Track everything: Limiting prior knowledge in online multi-object recognition. IEEE Trans. Image Proc. 2017, 26, 4669–4683.

17. Sumbul, G.; Cinbis, R.G.; Aksoy, S. Multisource Region Attention Network for Fine-Grained Object Recognition in Remote Sensing Imagery. IEEE Trans. Geosci. Remote Sens. 2019, 57, 4929–4937.

18. Martin, S. Sequential bayesian inference models for multiple object classification. In Proceedings of the 14th International Conference on Information Fusion, Chicago, IL, USA, 5 July 2011; pp. 1–6.

19. Lecumberry, F.; Pardo, A.; Sapiro, G. Multiple shape models for simultaneous object classification and segmentation. In Proceedings of the 16th IEEE International Conference on Image Processing, Cairo, Egypt, 7–10 November 2009; pp. 3001–3004.

20. Jalal, A.; Kim, Y.H.; Kim, Y.J.; Kamal, S.; Kim, D. Robust human activity recognition from depth video using spatiotemporal multi-fused features. Pattern Recognit. 2017, 61, 295–308.

21. Shi, J.; Zhu, H.; Yu, S.; Wu, W.; Shi, H. Scene Categorization Model Using Deep Visually Sensitive Features. IEEE Access 2019, 7, 45230–45239.

22. Zhang, C.; Cheng, J.; Tian, Q. Multiview, Few-Labeled Object Categorization by Predicting Labels with View Consistency. IEEE Trans. 2019, 49, 3834–3843.

23. Zhou, L.; Zhou, Z.; Hu, D. Scene classification using a multi-resolution bag-of-features model. Pattern Recognit. 2013, 46, 424–433.

24. Hayat, M.; Khan, S.H.; Bennamoun, M.; An, S. A Spatial Layout and Scale Invariant Feature Representation for Indoor Scene Classification. IEEE Trans. Image Proc. 2016, 25, 4829–4841.

25. Zou, J.; Li, W.; Chen, C.; Du, Q. Scene classification using local and global features with collaborative representation fusion. Inf. Sci. 2016, 348, 209–226.

26. Ismail, A.S.; Seifelnasr, M.M.; Guo, H. Understanding Indoor Scene: Spatial Layout Estimation, Scene Classification, and Object Detection. In Proceedings of the 3rd International Conference on Multimedia Systems and Signal Processing, Shenzhen, China, 28 April 2018; pp. 64–70.

27. Tingting, Y.; Junqian, W.; Lintai, W.; Yong, X. Three-stage network for age estimation. CAAI Trans. Intell. Technol. 2019, 4, 122–126.

28. Mahajan, S.M.; Dubey, Y.K. Color image segmentation using kernalized fuzzy c-means clustering. In Proceedings of the 2015 Fifth International Conference on Communication Systems and Network Technologies, Gwalior, India, 4–6 April 2015; pp. 1142–1146.

29. Zhu, C.; Miao, D. Influence of kernel clustering on an RBFN. CAAI Trans. Intell. Technol. 2019, 4, 255–260.

30. Gandhi, N.J.; Shah, V.J.; Kshirsagar, R. Mean shift technique for image segmentation and Modified Canny Edge Detection Algorithm for circle detection. In Proceedings of the 2014 International Conference on Communication and Signal Processing, Melmaruvathur, India, 3–5 April 2014; pp. 246–250.

31. Wiens, T. Engine speed reduction for hydraulic machinery using predictive algorithms. Int. J. Hydromechatron. 2019, 1, 16–31.

32. Durand, T.; Picard, D.; Thome, N.; Cord, M. Semantic pooling for image categorization using multiple kernel learning. In Proceedings of the 2014 IEEE International Conference on Image Processing, Paris, France, 27–30 October 2014; pp. 170–174.

33. Felzenszwalb, P.F.; Girshick, R.B.; McAllester, D.; Ramanan, D. Object detection with discriminatively trained part-based models. IEEE Trans. Pattern Anal. Mach. Intell. 2009, 32, 1627–1645.

34. Osterland, S.; Weber, J. Analytical analysis of single-stage pressure relief valves. Int. J. Hydromechatron. 2019, 2, 32–53.

35. Nowozin, S. Optimal decisions from probabilistic models: The intersection-over-union case. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 548–555.

36. Behadada, O.; Trovati, M.; Chikh, M.A.; Bessis, N.; Korkontzelos, Y. Logistic regression multinomial for arrhythmia detection. In Proceedings of the 2016 IEEE 1st International Workshops on Foundations and Applications of Self* Systems (FAS*W), Augsburg, Germany, 12–16 September 2016; pp. 133–137.

37. Shotton, J.; Winn, J.; Rother, C.; Criminisi, A. Textonboost: Joint appearance, shape and context modeling for multi-class object recognition and segmentation. In Proceedings of the European Conference on Computer Vision, Graz, Austria, 7–13 May 2016; pp. 1–15.

38. Liu, G.H.; Yang, J.Y.; Lo, Z.Y. Content-based image retrieval using computational visual attention model. Pattern Recognit. 2015, 48, 2554–2566.

39. Quattoni, A.; Torralba, A. Recognizing indoor scenes. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20 June 2009; pp. 413–420.

40. Irie, G.; Liu, D.; Li, Z.; Chang, S.F. A bayesian approach to multimodal visual dictionary learning. In Proceedings of the 2013 IEEE Conference on Computer Vision and Pattern Recognition, Portland, OR, USA, 23–28 June 2013; pp. 329–336.

41. Mottaghi, R.; Fidler, S.; Yuille, A.; Urtasun, R.; Parikh, D. Human-machine CRFs for identifying bottlenecks in scene understanding. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 38, 74–87.

42. Liu, X.; Yang, W.; Lin, L.; Wang, Q.; Cai, Z.; Lai, J. Data-driven scene understanding with adaptively retrieved exemplars. IEEE Multidiscip. 2015, 22, 82–92.

43. Jegou, H.; Perronnin, F.; Douze, M.; Sánchez, J.; Perez, P.; Schmid, C. Aggregating local image descriptors into compact codes. IEEE Trans. Pattern Anal. Mach. Intell. 2011, 34, 1704–1716.

44. Long, X.; Lu, H.; Peng, Y.; Wang, X.; Feng, S. Image classification based on improved VLAD. Multimed. Tools Appl. 2016, 75, 5533–5555.

45. Cheng, C.; Long, X.; Li, Y. VLAD Encoding Based on LLC for Image Classification. In Proceedings of the 2019 11th International Conference on Machine Learning and Computing, Zhuhai, China, 22–24 February 2019; pp. 417–422.

| Classes | fl | bo | sh | do | ca | co | bi |
|---|---|---|---|---|---|---|---|
| Accuracy (%) | 92.3 | 88.6 | 96.4 | 94.6 | 82.7 | 94.0 | 87.0 |
| Classes | ro | bd | gr | ch | du | bu | sk |
| Accuracy (%) | 83.3 | 86.8 | 89.3 | 79.9 | 88.4 | 84.8 | 87.0 |
| Classes | tr | si | ct | wt | bc | bk | |
| Accuracy (%) | 84.4 | 78.2 | 87.9 | 92.0 | 79.8 | 78.0 | |
| Mean segmentation accuracy = 86.77% | | | | | | | |

| Class | MFCS (s) | MSS (s) | Class | MFCS (s) | MSS (s) |

|---|---|---|---|---|---|

| fl | 76.5 | 78.2 | ch | 84.6 | 98.7 |

| bo | 47.4 | 47.8 | bu | 92.3 | 93.4 |

| sh | 65.9 | 71.2 | sk | 32.7 | 35.5 |

| do | 35.2 | 43.5 | tr | 54.5 | 61.8 |

| ca | 45.8 | 46.1 | si | 46.7 | 47.0 |

| co | 97.5 | 101.5 | ct | 63.1 | 65.2 |

| bi | 41.1 | 43.7 | wt | 29.8 | 33.5 |

| ro | 52.6 | 53.1 | bc | 36.2 | 41.8 |

| bd | 39.2 | 42.9 | bk | 54.7 | 52.1 |

| gr | 51.4 | 52.2 | du | 172.9 | 201.5 |

| Mean computational time of the MFCS algorithm = 61.00 s | | | | | |

| Mean computational time of the MSS algorithm = 65.53 s | | | | | |

| Class | MFCS (s) | MSS (s) | Class | MFCS (s) | MSS (s) |

|---|---|---|---|---|---|

| rh | 112.0 | 131.2 | wo | 130.6 | 149.5 |

| dr | 130.1 | 143.5 | do | 129.1 | 148.2 |

| ca | 91.7 | 105.0 | bo | 150 | 168.9 |

| wa | 87.4 | 99.3 | fl | 114.5 | 126.1 |

| bu | 171.0 | 188.9 | be | 145.8 | 166.0 |

| el | 96.5 | 114.2 | sk | 89.0 | 104.5 |

| ai | 150.2 | 170.3 | la | 97.5 | 113.2 |

| tr | 94.1 | 105.9 | ct | 122.9 | 143.9 |

| ti | 133.2 | 156.3 | bd | 131.2 | 157.0 |

| bi | 170.9 | 199.2 | fi | 135.0 | 162.7 |

| Mean computational time of the MFCS algorithm = 124.13 s | | | | | |

| Mean computational time of the MSS algorithm = 142.69 s | | | | | |

| | fl | bo | sh | do | ca | co | bi | ro | bd | gr | ch | du | bu | sk | tr | si | ct | wt | bc | bk |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| fl | 0.95 | 0 | 0 | 0 | 0 | 0 | 0.1 | 0 | 0 | 0.4 | 0 | 0 | 0 | 0 | 0.1 | 0 | 0 | 0 | 0 | 0 |

| bo | 0 | 0.89 | 0 | 0 | 0 | 0 | 0.1 | 0 | 0 | 0 | 0 | 0.5 | 0 | 0 | 0 | 0.1 | 0 | 0.4 | 0 | 0 |

| sh | 0 | 0 | 0.92 | 0.2 | 0 | 0.5 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0.1 | 0 | 0 | 0 |

| do | 0 | 0 | 0.2 | 0.89 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0.7 | 0 | 0 | 0 |

| ca | 0 | 0.2 | 0 | 0 | 0.84 | 0 | 0.7 | 0.5 | 0 | 0 | 0 | 0 | 0.3 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| co | 0 | 0 | 0.7 | 0 | 0 | 0.93 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| bi | 0 | 0 | 0 | 0 | 0 | 0 | 0.90 | 0 | 0 | 0 | 0 | 0 | 0 | 0.9 | 0 | 0 | 0 | 0.1 | 0 | 0 |

| ro | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0.87 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| bd | 0 | 0 | 0 | 0 | 0.2 | 0 | 0 | 0 | 0.89 | 0.1 | 0 | 0 | 0.3 | 0 | 0 | 0.2 | 0 | 0 | 0.1 | 0.2 |

| gr | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0.91 | 0 | 0 | 0 | 0.2 | 0.9 | 0 | 0 | 0 | 0 | 0 |

| ch | 0 | 0 | 0 | 0 | 0.1 | 0 | 0 | 0.3 | 0 | 0 | 0.88 | 0 | 0.4 | 0 | 0 | 0.2 | 0 | 0 | 0 | 0.2 |

| du | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0.85 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| bu | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0.2 | 0 | 0 | 0.88 | 0 | 0 | 0 | 0 | 0.9 | 0 | 0.1 |

| sk | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0.3 | 0.87 | 0 | 0 | 0 | 0.9 | 0 | 0.1 |

| tr | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0.9 | 0 | 0 | 0 | 0 | 0.88 | 0 | 0 | 0 | 0 | 0 |

| si | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0.3 | 0 | 0 | 0 | 0 | 0.4 | 0 | 0 | 0.89 | 0 | 0 | 0 | 0.4 |

| ct | 0 | 0 | 0 | 0 | 0.9 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0.2 | 0.88 | 0 | 0 | 0.1 |

| wt | 0 | 0.2 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0.8 | 0 | 0 | 0 | 0.90 | 0 | 0 |

| bc | 0 | 0 | 0 | 0 | 0.6 | 0 | 0 | 0.1 | 0 | 0 | 0 | 0 | 0.2 | 0 | 0 | 0.2 | 0 | 0 | 0.89 | 0 |

| bk | 0.1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0.5 | 0 | 0.2 | 0 | 0 | 0.8 | 0 | 0 | 0 | 0.84 |

| Methods | Classification Accuracy (%) |

|---|---|

| Bayesian model [40] | 82.9 |

| Scene classification using machine performance [41] | 81.0 |

| Scene classification with weighted method [42] | 84.7 |

| Proposed Method | 88.75 |

| | rh | de | ca | wt | bu | el | ai | tr | ti | bi | wl | do | bo | fl | be | sk | la | ct | bd | fi |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| rh | 0.87 | 0 | 0 | 0 | 0 | 0.9 | 0 | 0 | 0.2 | 0 | 0.1 | 0.1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| de | 0.3 | 0.76 | 0 | 0 | 0 | 0.5 | 0 | 0 | 0.8 | 0 | 0.4 | 0.2 | 0 | 0 | 0 | 0 | 0 | 0.2 | 0 | 0 |

| ca | 0 | 0 | 0.83 | 0 | 0.7 | 0 | 0.6 | 0 | 0 | 0 | 0 | 0 | 0.4 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| wt | 0 | 0 | 0 | 0.91 | 0.1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0.1 | 0 | 0 | 0.7 | 0 | 0 | 0 | 0 |

| bu | 0 | 0 | 0 | 0.3 | 0.84 | 0 | 0.7 | 0 | 0 | 0.3 | 0 | 0 | 0.4 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| el | 0.5 | 0 | 0 | 0 | 0 | 0.93 | 0 | 0 | 0.1 | 0 | 0.1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| ai | 0 | 0 | 0.3 | 0 | 0.5 | 0 | 0.90 | 0 | 0 | 0 | 0 | 0 | 0.2 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| tr | 0 | 0 | 0 | 0.2 | 0 | 0 | 0 | 0.91 | 0 | 0 | 0 | 0 | 0 | 0.6 | 0 | 0.1 | 0 | 0 | 0 | 0 |

| ti | 0.1 | 0 | 0.2 | 0 | 0 | 0.3 | 0 | 0 | 0.89 | 0 | 0.4 | 0 | 0 | 0 | 0 | 0 | 0 | 0.1 | 0 | 0 |

| bi | 0 | 0 | 0.9 | 0 | 0.2 | 0 | 0.4 | 0 | 0 | 0.79 | 0 | 0 | 0.6 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| wl | 0 | 0.1 | 0 | 0 | 0 | 0.1 | 0 | 0 | 0.6 | 0 | 0.88 | 0.4 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| do | 0 | 0.2 | 0 | 0 | 0 | 0 | 0 | 0 | 0.6 | 0 | 0.2 | 0.87 | 0 | 0 | 0 | 0 | 0 | 0.3 | 0 | 0 |

| bo | 0 | 0 | 0 | 0.4 | 0.5 | 0 | 0.3 | 0 | 0 | 0.3 | 0 | 0 | 0.83 | 0 | 0 | 0 | 0 | 0 | 0 | 0.2 |

| fl | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0.9 | 0 | 0.2 | 0 | 0 | 0 | 0.84 | 0 | 0.2 | 0.3 | 0 | 0 | 0 |

| be | 0.2 | 0 | 0 | 0 | 0 | 0.3 | 0 | 0 | 0.3 | 0 | 0 | 0.2 | 0 | 0 | 0.90 | 0 | 0 | 0 | 0 | 0 |

| si | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0.8 | 0 | 0.89 | 0.1 | 0 | 0 | 0.2 |

| sk | 0 | 0 | 0 | 0.9 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0.1 | 0 | 0 | 0 | 0.88 | 0 | 0 | 0.2 |

| la | 0 | 0 | 0 | 0.6 | 0 | 0 | 0 | 0.4 | 0 | 0 | 0 | 0 | 0 | 0.3 | 0 | 0 | 0.4 | 0.83 | 0 | 0 |

| bd | 0 | 0 | 0 | 0 | 0 | 0 | 0.5 | 0 | 0 | 0 | 0 | 0 | 0 | 0.5 | 0 | 0.4 | 0 | 0 | 0.83 | 0.3 |

| fi | 0 | 0 | 0 | 0.9 | 0 | 0 | 0 | 0 | 0 | 0.2 | 0 | 0 | 0.2 | 0.4 | 0 | 0 | 0.5 | 0.1 | 0 | 0.77 |

| Methods | Classification Accuracy (%) |

|---|---|

| VLAD [43] | 80.0 |

| TNNVLAD [44] | 81.0 |

| VLAD + LLC [45] | 83.7 |

| Proposed Method | 85.75 |

| Class | Accuracy | Class | Accuracy | Class | Accuracy |

|---|---|---|---|---|---|

| kitchen | 0.89 | grocery store | 0.79 | nursery | 0.83 |

| bedroom | 0.85 | florist | 0.82 | train station | 0.82 |

| bathroom | 0.87 | church inside | 0.83 | laundromat | 0.79 |

| corridor | 0.76 | auditorium | 0.82 | staircase | 0.81 |

| elevator | 0.80 | buffet | 0.77 | gym | 0.78 |

| locker room | 0.78 | class room | 0.81 | tv studio | 0.76 |

| waiting room | 0.81 | green house | 0.75 | pantry | 0.80 |

| dining room | 0.83 | bowling | 0.79 | pool inside | 0.77 |

| game room | 0.79 | cloister | 0.83 | inside subway | 0.79 |

| garage | 0.82 | concert hall | 0.81 | wine cellar | 0.77 |

| lobby | 0.77 | computer room | 0.80 | fast food restaurant | 0.76 |

| office | 0.79 | dental office | 0.84 | bar | 0.82 |

| mall | 0.81 | library | 0.79 | clothing store | 0.81 |

| laboratory wet | 0.77 | inside bus | 0.77 | casino | 0.83 |

| jewelry shop | 0.79 | closet | 0.81 | deli | 0.79 |

| museum | 0.82 | studio music | 0.79 | book store | 0.80 |

| living room | 0.77 | lobby | 0.80 | children room | 0.82 |

| movie theater | 0.83 | prison cell | 0.84 | hospital room | 0.79 |

| toy store | 0.80 | hair salon | 0.80 | kindergarten | 0.77 |

| operating room | 0.82 | subway | 0.81 | shoe shop | 0.76 |

| airport inside | 0.79 | warehouse | 0.77 | restaurant kitchen | 0.78 |

| art studio | 0.80 | meeting room | 0.82 | bakery | 0.79 |

| video store | 0.76 | | | | |

| Mean scene classification accuracy = 80.02% | | | | | |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Ahmed, A.; Jalal, A.; Kim, K. A Novel Statistical Method for Scene Classification Based on Multi-Object Categorization and Logistic Regression. Sensors 2020, 20, 3871. https://doi.org/10.3390/s20143871
