Cross-Machine Fault Diagnosis with Semi-Supervised Discriminative Adversarial Domain Adaptation

Abstract

1. Introduction

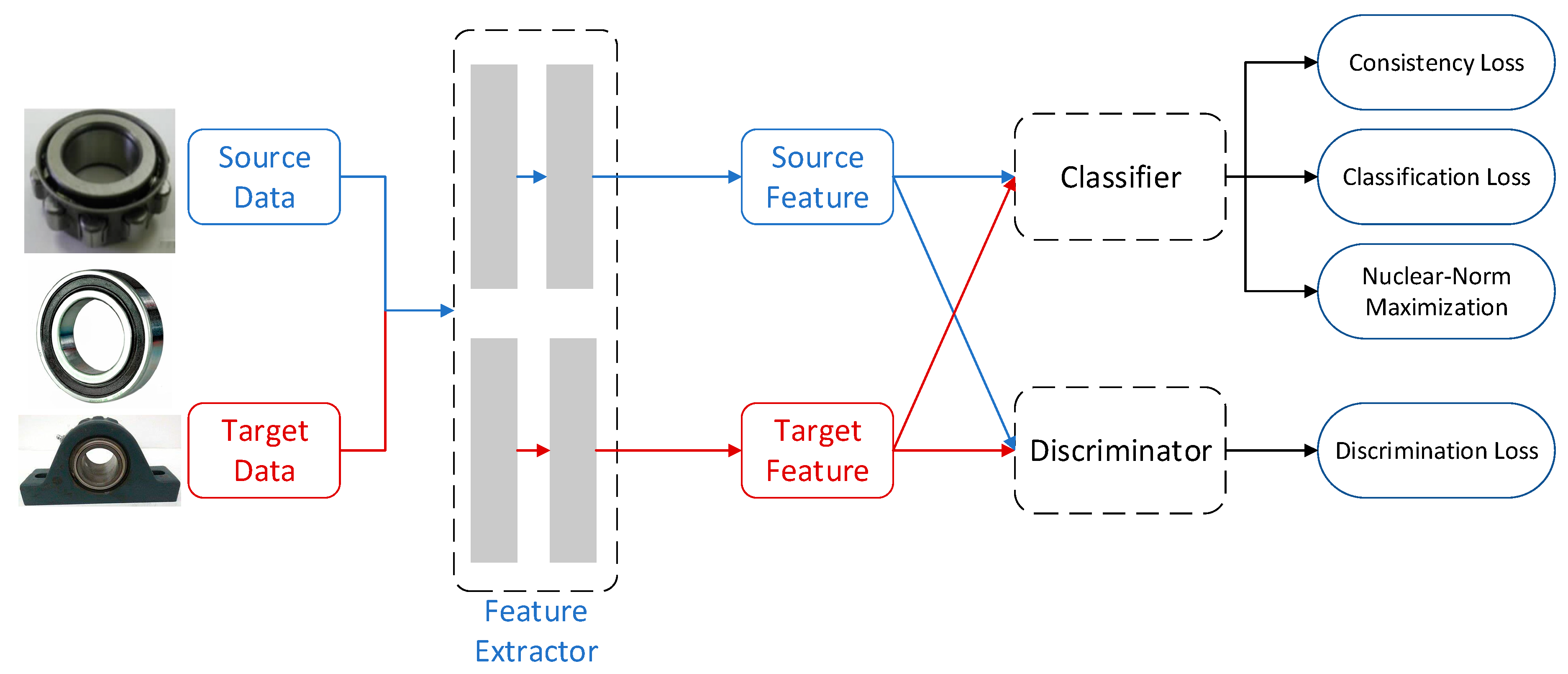

- (1) An intelligent cross-machine fault diagnosis model is proposed. By fully utilizing the labeled data in the source domain and the unlabeled data in the target domain, the model performs the fault diagnosis task effectively when only limited labeled target data are available.

- (2) We demonstrate that batch nuclear-norm maximization effectively counteracts the decline in discriminability and the decline in diversity, both of which are caused by large domain shift.

- (3) Experiments on three open bearing-fault datasets are carried out to verify the effectiveness of the proposed method, especially when only 1 or 5 labeled samples per category are available in the target domain.
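Contribution (2) can be illustrated with a toy example. The sketch below assumes NumPy and uses hand-made prediction matrices, not data from the experiments. It compares the mean prediction entropy (the quantity minimized by entropy minimization) with the nuclear norm of the B × C batch prediction matrix A (the quantity maximized by batch nuclear-norm maximization, written as the loss -||A||_* / B following Cui et al.). The helper names are illustrative only.

```python
import numpy as np

def mean_entropy(A, eps=1e-12):
    """Mean Shannon entropy (nats) over the rows of a B x C probability matrix."""
    H = -np.sum(A * np.log(A + eps), axis=1)   # eps avoids log(0) for one-hot rows
    return max(0.0, float(H.mean()))           # clamp the tiny negative bias eps introduces

def nuclear_norm(A):
    """||A||_*: the sum of the singular values of A."""
    return float(np.linalg.norm(A, ord="nuc"))

def bnm_loss(A):
    """Batch nuclear-norm maximization written as a loss to minimize."""
    return -nuclear_norm(A) / A.shape[0]

B, C = 4, 4
diverse = np.eye(B, C)                      # confident, each sample in a different class
collapsed = np.tile(np.eye(1, C), (B, 1))   # confident, but every sample predicted as class 0
uncertain = np.full((B, C), 1.0 / C)        # uniform, maximally uncertain predictions

for name, A in [("diverse", diverse), ("collapsed", collapsed), ("uncertain", uncertain)]:
    print(f"{name:9s} entropy={mean_entropy(A):.3f} nuclear_norm={nuclear_norm(A):.3f}")
```

Both one-hot batches reach zero entropy, so entropy alone cannot distinguish a diverse batch from a collapsed one. The nuclear norm, however, is 4 for the diverse batch, 2 for the collapsed batch and 1 for the uniform batch, rewarding confidence and class diversity at the same time.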

2. Related Works

2.1. Cross-Machine Fault Diagnosis Domain Adaptation

2.2. Target Discriminative Domain Adaptation Methods

2.3. Improving the Target Discriminability and Diversity Through Nuclear-Norm Maximization

3. Proposed Methods

Algorithm 1: Details of the proposed method

Require: source data; target data; minibatch size; training step.
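The algorithm listing gives only its inputs. The sketch below shows one plausible way to combine, in a single training step, the ingredients this paper names: cross-entropy on labeled source data, cross-entropy on the few labeled target samples, a domain-discriminator loss trained adversarially (as in DANN), and a batch nuclear-norm term on unlabeled target predictions. It is a hypothetical NumPy illustration, not the authors' algorithm. The function names and the weights `lam` and `mu` are assumptions.

```python
import numpy as np

def softmax(logits):
    z = logits - logits.max(axis=1, keepdims=True)   # subtract the row max for stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

def cross_entropy(logits, labels):
    p = softmax(logits)
    return float(-np.mean(np.log(p[np.arange(len(labels)), labels])))

def domain_bce(d_prob, is_source):
    """Binary cross-entropy of the domain discriminator; d_prob = P(sample comes from source)."""
    y = is_source.astype(float)
    d = np.clip(d_prob, 1e-12, 1.0 - 1e-12)
    return float(-np.mean(y * np.log(d) + (1.0 - y) * np.log(1.0 - d)))

def bnm_loss(logits):
    A = softmax(logits)                                # B x C prediction matrix
    return float(-np.linalg.norm(A, ord="nuc") / A.shape[0])

def step_losses(src_logits, src_y, tl_logits, tl_y, tu_logits, d_prob, is_source,
                lam=1.0, mu=1.0):
    """Hypothetical loss terms for one training step (lam, mu are assumed weights)."""
    l_cls = cross_entropy(src_logits, src_y) + cross_entropy(tl_logits, tl_y)
    l_dom = domain_bce(d_prob, is_source)
    l_bnm = bnm_loss(tu_logits)
    # With a gradient reversal layer in front of the discriminator, this single sum is
    # minimized: the discriminator descends on l_dom while the feature extractor ascends on it.
    return {"cls": l_cls, "domain": l_dom, "bnm": l_bnm,
            "total": l_cls + lam * l_dom + mu * l_bnm}

C = 4
losses = step_losses(
    src_logits=np.zeros((8, C)), src_y=np.arange(8) % C,      # labeled source batch
    tl_logits=np.zeros((4, C)), tl_y=np.arange(4),            # few labeled target samples
    tu_logits=np.zeros((4, C)),                               # unlabeled target batch
    d_prob=np.full(16, 0.5), is_source=np.array([True] * 8 + [False] * 8),
)
print(losses)
```

With all-zero logits every prediction is uniform. Each cross-entropy therefore equals log 4, the discriminator loss equals log 2, and the BNM term equals -0.25.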

4. Experiments and Results

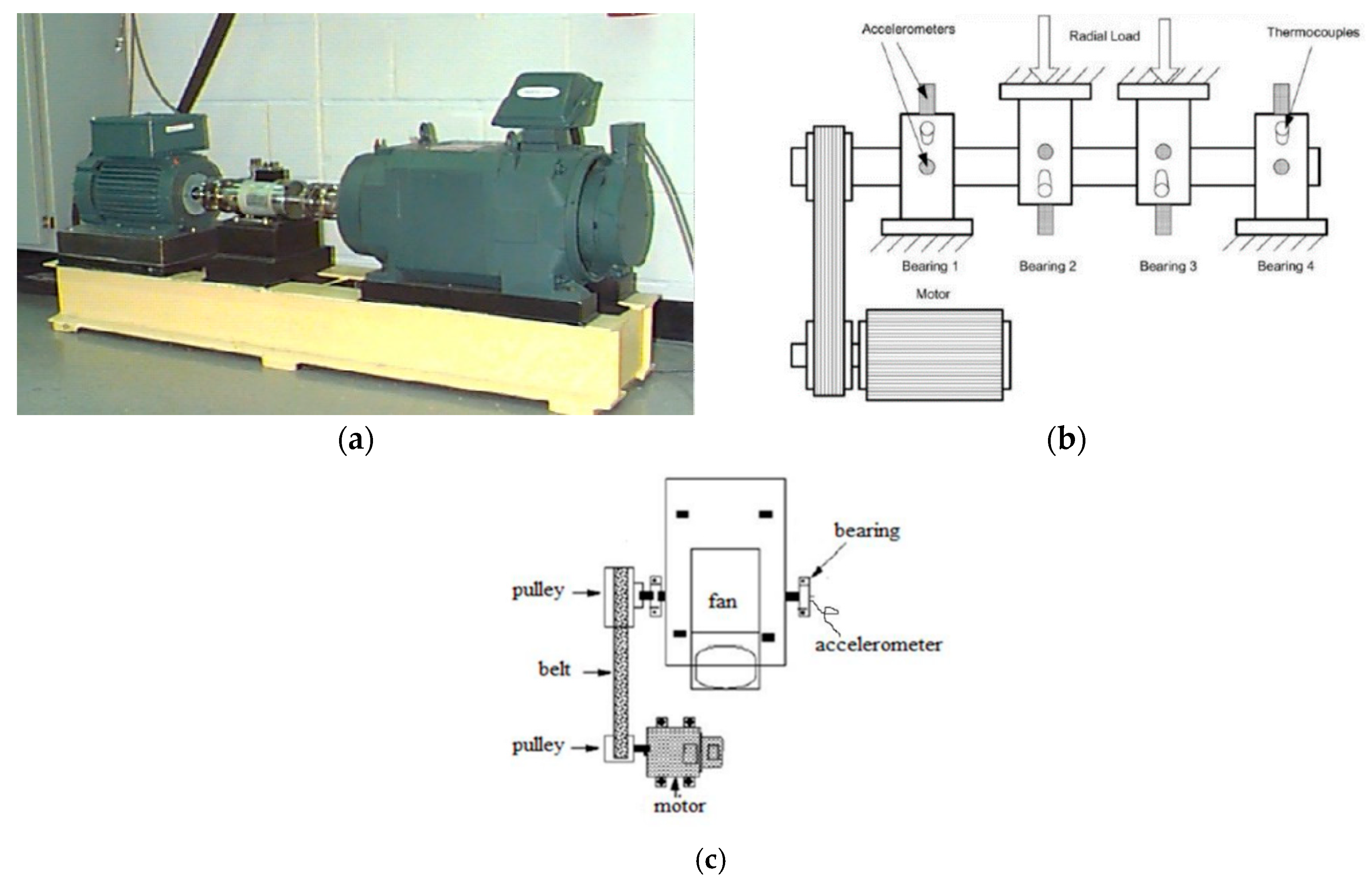

4.1. Datasets

4.2. Implementation Details

- CNN: the model trained on labeled source-domain data is used directly to classify the target samples, without domain adaptation.

- DANN: the domain adversarial neural network proposed by Ganin et al. [48]. Feature distributions are aligned through adversarial training between the feature extractor and the domain discriminator.

- DCTLN: proposed by Guo et al. [16]. Adversarial training and the maximum mean discrepancy (MMD) distance are combined to reduce the shift between domains.

- VADA: proposed by Shu et al. [21]. VADA incorporates a virtual adversarial training loss and a conditional entropy loss to push the decision boundaries away from the data samples.

- DANN + Entropy Minimization (EntMin). The discriminability of the model is improved by entropy minimization on top of adversarial training.

- DANN + BNM (BNM). The discriminability of the model is further improved by batch nuclear-norm maximization on top of adversarial training.
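DCTLN combines adversarial training with an MMD penalty. The snippet below is a generic sketch of a biased squared-MMD estimate with a Gaussian kernel, written in plain Python. The bandwidth `sigma` and the toy feature vectors are assumptions. The sketch does not reproduce DCTLN's exact kernel choice or loss weighting.

```python
import math

def gaussian_kernel(x, y, sigma):
    """k(x, y) = exp(-||x - y||^2 / (2 sigma^2))."""
    d2 = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-d2 / (2.0 * sigma ** 2))

def mmd2(xs, ys, sigma=1.0):
    """Biased estimate of the squared MMD between two sets of feature vectors."""
    def mean_k(us, vs):
        return sum(gaussian_kernel(u, v, sigma) for u in us for v in vs) / (len(us) * len(vs))
    return mean_k(xs, xs) + mean_k(ys, ys) - 2.0 * mean_k(xs, ys)

source = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0]]
target_near = [[0.1, 0.0], [1.0, 0.1], [0.0, 0.9]]   # slightly shifted features
target_far = [[3.0, 3.0], [4.0, 3.0], [3.0, 4.0]]    # strongly shifted features
print(mmd2(source, source), mmd2(source, target_near), mmd2(source, target_far))
```

The estimate is zero for identical sets and grows as the target features drift away from the source features. Minimizing it with respect to the feature extractor therefore pulls the two feature distributions together.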

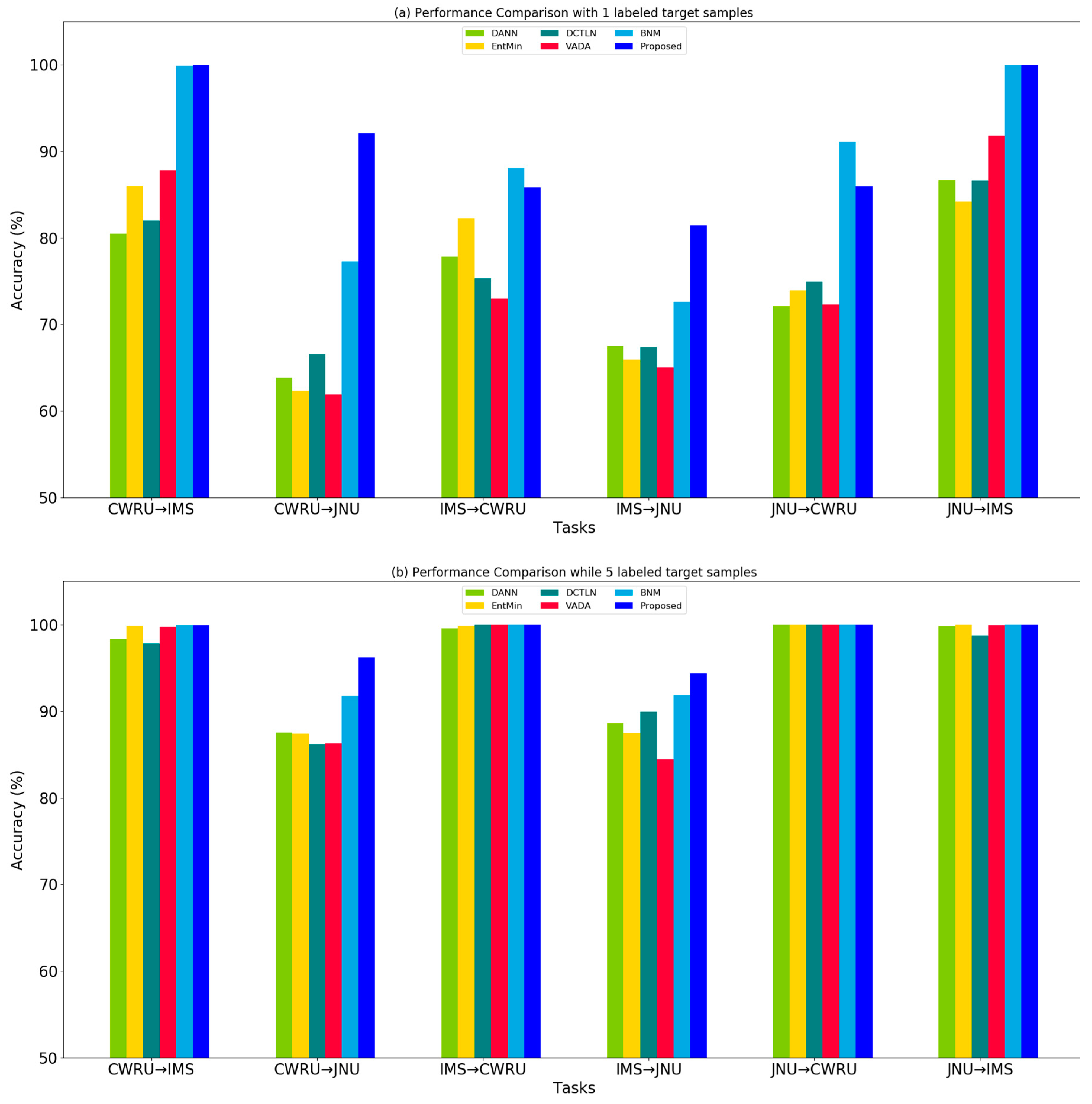

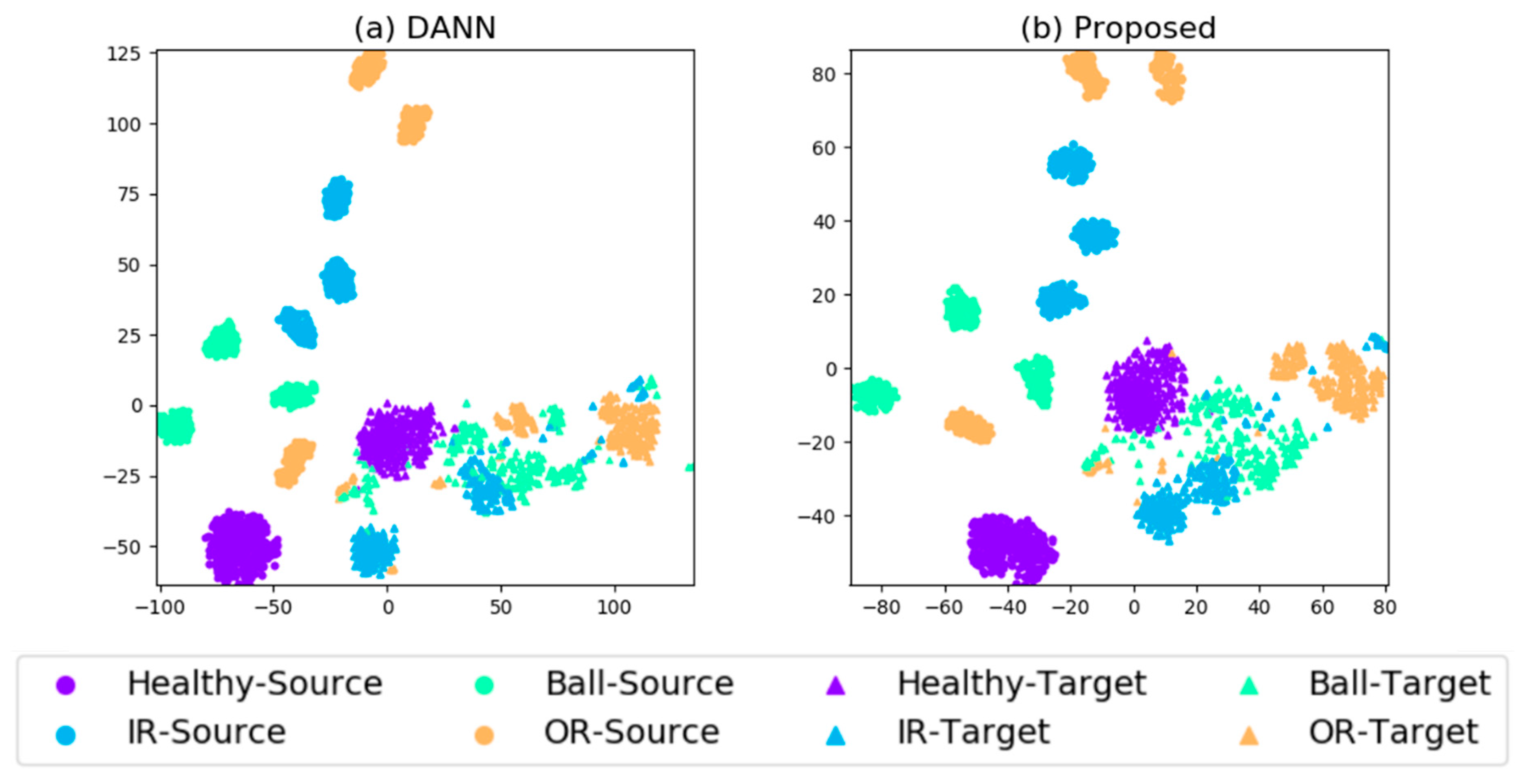

4.3. Case 1: Results and Analysis of Cross-Domain Diagnosis

4.4. Case 2: Cross-Domain Diagnosis under Class-Imbalanced Scenarios

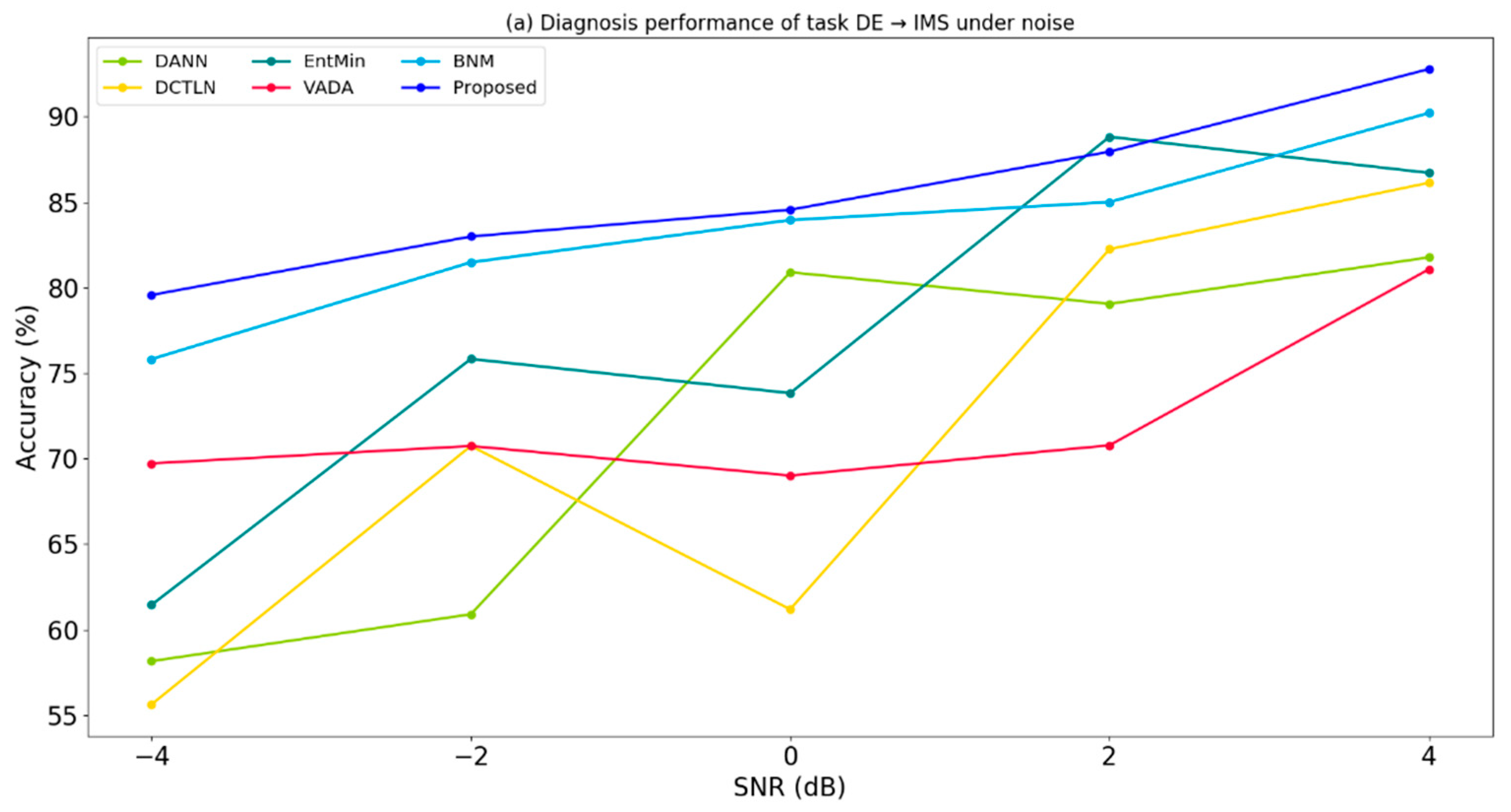

4.5. Case 3: Experiments with Environmental Noise

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

1. Gupta, P. Dynamics of rolling-element bearings—Part I: Cylindrical roller bearing analysis. J. Lubr. Tech. 1979, 101, 293–302.

2. Adams, M.L. Analysis of Rolling Element Bearing Faults in Rotating Machinery: Experiments, Modeling, Fault Detection and Diagnosis. Ph.D. Thesis, Case Western Reserve University, Cleveland, OH, USA, 2001.

3. El-Saeidy, F.M.; Sticher, F. Dynamics of a rigid rotor linear/nonlinear bearings system subject to rotating unbalance and base excitations. J. Vib. Control 2010, 16, 403–438.

4. Liu, R.; Yang, B.; Zio, E.; Chen, X. Artificial intelligence for fault diagnosis of rotating machinery: A review. Mech. Syst. Signal Process. 2018, 108, 33–47.

5. Lu, W.; Liang, B.; Cheng, Y.; Meng, D.; Yang, J.; Zhang, T. Deep model based domain adaptation for fault diagnosis. IEEE Trans. Ind. Electron. 2016, 64, 2296–2305.

6. Wen, L.; Gao, L.; Li, X. A new deep transfer learning based on sparse auto-encoder for fault diagnosis. IEEE Trans. Syst. Man Cybern. Syst. 2017, 49, 136–144.

7. Li, X.; Zhang, W.; Ding, Q.; Sun, J.-Q. Multi-Layer domain adaptation method for rolling bearing fault diagnosis. Signal Process. 2019, 157, 180–197.

8. Li, X.; Zhang, W.; Ding, Q. Cross-domain fault diagnosis of rolling element bearings using deep generative neural networks. IEEE Trans. Ind. Electron. 2018, 66, 5525–5534.

9. An, J.; Ai, P.; Liu, D. Deep domain adaptation model for bearing fault diagnosis with domain alignment and discriminative feature learning. Shock Vib. 2020, 2020, 1–14.

10. Cheng, C.; Zhou, B.; Ma, G.; Wu, D.; Yuan, Y. Wasserstein distance based deep adversarial transfer learning for intelligent fault diagnosis. arXiv 2019, arXiv:1903.06753.

11. Wang, X.; Liu, F. Triplet loss guided adversarial domain adaptation for bearing fault diagnosis. Sensors 2020, 20, 320.

12. Wang, Q.; Michau, G.; Fink, O. Domain adaptive transfer learning for fault diagnosis. arXiv 2019, arXiv:1905.06004.

13. Han, T.; Liu, C.; Yang, W.; Jiang, D. A novel adversarial learning framework in deep convolutional neural network for intelligent diagnosis of mechanical faults. Knowledge-Based Syst. 2019, 165, 474–487.

14. Zhang, B.; Li, W.; Hao, J.; Li, X.-L.; Zhang, M. Adversarial adaptive 1-D convolutional neural networks for bearing fault diagnosis under varying working condition. arXiv 2018, arXiv:1805.00778.

15. Yang, B.; Lei, Y.; Jia, F.; Xing, S. An intelligent fault diagnosis approach based on transfer learning from laboratory bearings to locomotive bearings. Mech. Syst. Signal Process. 2019, 122, 692–706.

16. Guo, L.; Lei, Y.; Xing, S.; Yan, T.; Li, N. Deep convolutional transfer learning network: A new method for intelligent fault diagnosis of machines with unlabeled data. IEEE Trans. Ind. Electron. 2018, 66, 7316–7325.

17. Li, X.; Jia, X.-D.; Zhang, W.; Ma, H.; Luo, Z.; Li, X. Intelligent cross-machine fault diagnosis approach with deep auto-encoder and domain adaptation. Neurocomputing 2020, 383, 235–247.

18. Grandvalet, Y.; Bengio, Y. Semi-supervised learning by entropy minimization. In Advances in Neural Information Processing Systems; MIT Press: Cambridge, MA, USA, 2005; pp. 529–536.

19. Tarvainen, A.; Valpola, H. Mean teachers are better role models: Weight-averaged consistency targets improve semi-supervised deep learning results. In Advances in Neural Information Processing Systems 30; 2017; pp. 1195–1204.

20. Miyato, T.; Maeda, S.-I.; Koyama, M.; Ishii, S. Virtual adversarial training: A regularization method for supervised and semi-supervised learning. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 41, 1979–1993.

21. Shu, R.; Bui, H.H.; Narui, H.; Ermon, S. A DIRT-T approach to unsupervised domain adaptation. arXiv 2018, arXiv:1802.08735.

22. Kumar, A.; Sattigeri, P.; Wadhawan, K.; Karlinsky, L.; Feris, R.; Freeman, B.; Wornell, G. Co-regularized alignment for unsupervised domain adaptation. In Advances in Neural Information Processing Systems 31; 2018; pp. 9345–9356.

23. Zhang, Y.; Li, X.; Gao, L.; Wang, L.; Wen, L. Imbalanced data fault diagnosis of rotating machinery using synthetic oversampling and feature learning. J. Manuf. Syst. 2018, 48, 34–50.

24. Lin, T.-Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal loss for dense object detection. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2980–2988.

25. Tzeng, E.; Hoffman, J.; Zhang, N.; Saenko, K.; Darrell, T. Deep domain confusion: Maximizing for domain invariance. arXiv 2014, arXiv:1412.3474.

26. Long, M.; Cao, Y.; Wang, J.; Jordan, M.I. Learning transferable features with deep adaptation networks. arXiv 2015, arXiv:1502.02791.

27. Long, M.; Zhu, H.; Wang, J.; Jordan, M.I. Deep transfer learning with joint adaptation networks. In Proceedings of the 34th International Conference on Machine Learning, Sydney, NSW, Australia, 6–11 August 2017; pp. 2208–2217.

28. Sun, B.; Feng, J.; Saenko, K. Return of frustratingly easy domain adaptation. In Proceedings of the Thirtieth AAAI Conference on Artificial Intelligence, Phoenix, AZ, USA, 12–17 February 2016.

29. Sun, B.; Saenko, K. Deep CORAL: Correlation alignment for deep domain adaptation. In European Conference on Computer Vision; Springer: Cham, Switzerland, 2016; pp. 443–450.

30. Zellinger, W.; Grubinger, T.; Lughofer, E.; Natschläger, T.; Saminger-Platz, S. Central moment discrepancy (CMD) for domain-invariant representation learning. arXiv 2017, arXiv:1702.08811.

31. Ganin, Y.; Lempitsky, V. Unsupervised domain adaptation by backpropagation. arXiv 2014, arXiv:1409.7495.

32. Tzeng, E.; Hoffman, J.; Saenko, K.; Darrell, T. Adversarial discriminative domain adaptation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 7167–7176.

33. Long, M.; Cao, Z.; Wang, J.; Jordan, M.I. Conditional adversarial domain adaptation. In Advances in Neural Information Processing Systems 31; 2018; pp. 1640–1650.

34. Liu, Z.-H.; Lu, B.-L.; Wei, H.-L.; Chen, L.; Li, X.-H.; Rätsch, M. Deep Adversarial Domain Adaptation Model for Bearing Fault Diagnosis. IEEE Trans. Syst. Man Cybern. Syst. 2019, 99, 1–10.

35. Saito, K.; Watanabe, K.; Ushiku, Y.; Harada, T. Maximum classifier discrepancy for unsupervised domain adaptation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 3723–3732.

36. Long, M.; Zhu, H.; Wang, J.; Jordan, M.I. Unsupervised domain adaptation with residual transfer networks. In Advances in Neural Information Processing Systems 29; 2016; pp. 136–144.

37. Rahman, M.M.; Fookes, C.; Baktashmotlagh, M.; Sridharan, S. On minimum discrepancy estimation for deep domain adaptation. In Domain Adaptation for Visual Understanding; Springer: Cham, Switzerland, 2020; pp. 81–94.

38. Vu, T.-H.; Jain, H.; Bucher, M.; Cord, M.; Pérez, P. ADVENT: Adversarial entropy minimization for domain adaptation in semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 2517–2526.

39. Saito, K.; Kim, D.; Sclaroff, S.; Darrell, T.; Saenko, K. Semi-supervised domain adaptation via minimax entropy. In Proceedings of the IEEE International Conference on Computer Vision, Seoul, Korea, 27 October–2 November 2019; pp. 8050–8058.

40. Cui, S.; Wang, S.; Zhuo, J.; Li, L.; Huang, Q.; Tian, Q. Towards discriminability and diversity: Batch nuclear-norm maximization under label insufficient situations. arXiv 2020, arXiv:2003.12237.

41. Wu, X.; Zhou, Q.; Yang, Z.; Zhao, C.; Latecki, L.J. Entropy minimization vs. diversity maximization for domain adaptation. arXiv 2020, arXiv:2002.01690.

42. Mao, W.; He, L.; Yan, Y.; Wang, J. Online sequential prediction of bearings imbalanced fault diagnosis by extreme learning machine. Mech. Syst. Signal Process. 2017, 83, 450–473.

43. Chawla, N.V.; Bowyer, K.W.; Hall, L.O.; Kegelmeyer, W.P. SMOTE: Synthetic minority over-sampling technique. J. Artif. Intell. Res. 2002, 16, 321–357.

44. Lee, Y.O.; Jo, J.; Hwang, J. Application of deep neural network and generative adversarial network to industrial maintenance: A case study of induction motor fault detection. In Proceedings of the IEEE International Conference on Big Data (Big Data), Boston, MA, USA, 11–14 December 2017; pp. 3248–3253.

45. Xie, Y.; Zhang, T. Imbalanced learning for fault diagnosis problem of rotating machinery based on generative adversarial networks. In Proceedings of the 37th Chinese Control Conference (CCC), Wuhan, China, 25–27 July 2018; pp. 6017–6022.

46. Wang, J.; Li, S.; Han, B.; An, Z.; Bao, H.; Ji, S. Generalization of deep neural networks for imbalanced fault classification of machinery using generative adversarial networks. IEEE Access 2019, 7, 111168–111180.

47. Fazel, S.M. Matrix Rank Minimization with Applications. Ph.D. Thesis, Stanford University, Stanford, CA, USA, 2003.

48. Ganin, Y.; Ustinova, E.; Ajakan, H.; Germain, P.; Larochelle, H.; Laviolette, F.; Marchand, M.; Lempitsky, V. Domain-adversarial training of neural networks. J. Mach. Learn. Res. 2016, 17, 2030–2096.

49. Case Western Reserve University Bearing Data Center Website. Available online: https://csegroups.case.edu/bearingdatacenter/home (accessed on 10 February 2019).

50. Lee, J.; Qiu, H.; Yu, G.; Lin, J. Bearing Data Set. IMS, University of Cincinnati, NASA Ames Prognostics Data Repository, Rexnord Technical Services 2007. Available online: https://ti.arc.nasa.gov/tech/dash/groups/pcoe/prognostic-data-repository/ (accessed on 1 May 2019).

51. Bearing Dataset of Jiangnan University. Available online: http://mad-net.org:8765/explore.html?id=9 (accessed on 10 February 2019).

| Dataset | Bearing in Use | Bearing Category | Number of Rollers | Fault Type | Rotating Speed (rpm) | Sample Rate (Hz) |
|---|---|---|---|---|---|---|
| CWRU | 6205-2RS JEM SKF | deep groove ball bearing | 9 | induced using electro-discharge machining | 1797 | 12k |
| IMS | Rexnord ZA-2115 | double-row spherical roller bearing | 16 | test-to-failure experiments | 2000 | 20k |
| JNU | N/A | single-row spherical roller bearing | 13 | induced using wire-cutting machine | 1000 | 50k |
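The table already shows one concrete source of cross-machine domain shift. The three rigs run at different speeds and are sampled at different rates, so a fixed-length input window covers a very different portion of a shaft revolution on each machine. The short calculation below is an added illustration, not part of the original experiments. It converts the tabulated speeds and sample rates (reading "12k" as 12,000 Hz) into samples per revolution.

```python
# Rotating speed and sample rate, copied from the dataset table above.
DATASETS = {
    "CWRU": {"rpm": 1797, "fs_hz": 12_000},
    "IMS":  {"rpm": 2000, "fs_hz": 20_000},
    "JNU":  {"rpm": 1000, "fs_hz": 50_000},
}

def samples_per_revolution(fs_hz, rpm):
    """Number of vibration samples recorded during one shaft revolution."""
    return fs_hz * 60.0 / rpm

for name, d in DATASETS.items():
    print(f"{name}: {samples_per_revolution(d['fs_hz'], d['rpm']):.1f} samples/rev")
```

This gives roughly 400.7 samples per revolution for CWRU, 600 for IMS and 3000 for JNU. A segment of a given length therefore spans about 7.5 times as many revolutions on CWRU as on JNU.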

| Dataset | Class Label | 1 | 2 | 3 | 4 |
|---|---|---|---|---|---|
| CWRU | Fault Location | Inner | Outer | Ball | Healthy |
| | Fault depth | 14 | 14 | 14 | 14 |
| IMS | Fault Location | Inner | Outer | Ball | Healthy |
| | Fault depth | Serv. | Serv. | Serv. | Serv. |
| JNU | Fault Location | Inner | Outer | Ball | Healthy |
| | Fault depth | N/A | N/A | N/A | N/A |

| Component | Layer Type | Kernel | Stride | Channel | Activation |
|---|---|---|---|---|---|
| Feature Extractor | Convolution1 | 32 × 1 | 2 × 1 | 8 | ReLU |
| | BN1 | | | | |
| | Convolution2 | 16 × 1 | 2 × 1 | 16 | ReLU |
| | BN2 | | | | |
| | Convolution3 | 8 × 1 | 2 × 1 | 32 | ReLU |
| | BN3 | | | | |
| Label Classifier | Fully connected 1 | 500 | 1 | | ReLU |
| | Fully connected 2 | 4 | 1 | | ReLU |
| Domain Discriminator | Fully connected 1 | 500 | 1 | | ReLU |
| | Fully connected 2 | 2 | 1 | | ReLU |
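The feature extractor's output size depends on the input segment length, which the table does not give. The sketch below applies the standard 1-D convolution length formula, L_out = floor((L_in + 2p - k) / s) + 1, to the three convolution layers listed above. Batch normalization does not change the length. It assumes, for illustration only, a hypothetical 1024-point input, no padding, no pooling layers, and flattening of the final feature map before "Fully connected 1".

```python
# (kernel, stride, channels) of Convolution1-3, copied from the table above.
CONV_LAYERS = [(32, 2, 8), (16, 2, 16), (8, 2, 32)]

def conv1d_out_len(length, kernel, stride, padding=0):
    """Output length of a 1-D convolution with dilation 1."""
    return (length + 2 * padding - kernel) // stride + 1

def feature_sizes(input_len, padding=0):
    """Per-layer output lengths and the flattened size fed to Fully connected 1 (assumed)."""
    lengths, length = [], input_len
    for kernel, stride, _channels in CONV_LAYERS:
        length = conv1d_out_len(length, kernel, stride, padding)
        lengths.append(length)
    flat = lengths[-1] * CONV_LAYERS[-1][2]   # final length x 32 channels
    return lengths, flat

print(feature_sizes(1024))   # 1024 is a hypothetical input length
```

For a 1024-point input this yields lengths 497, 241 and 117. That gives 117 × 32 = 3744 features entering the first fully connected layer under the stated assumptions.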

Accuracy (%) of each method. "Number" is the number of labeled target samples per class; "/" means no result is given, since the CNN baseline uses only labeled source data.

| Task | Number | CNN | DANN | DCTLN | EntMin | VADA | BNM | Proposed |
|---|---|---|---|---|---|---|---|---|
| CWRU → IMS | 0 | 41.94 | 40.52 | 25.62 | 25.73 | 27.45 | 35.62 | 50.62 |
| | 1 | / | 80.47 | 81.98 | 85.95 | 87.76 | 99.90 | 99.95 |
| | 5 | / | 98.33 | 97.81 | 99.84 | 99.74 | 99.89 | 99.91 |
| | 10 | / | 99.25 | 99.01 | 99.47 | 99.86 | 99.96 | 99.98 |
| CWRU → JNU | 0 | 23.79 | 24.64 | 24.79 | 25.01 | 25.57 | 25.05 | 31.20 |
| | 1 | / | 63.82 | 66.56 | 62.36 | 61.89 | 77.26 | 92.07 |
| | 5 | / | 87.55 | 86.20 | 87.40 | 86.32 | 91.79 | 96.18 |
| | 10 | / | 93.09 | 95.16 | 96.35 | 93.18 | 95.57 | 97.24 |
| IMS → CWRU | 0 | 41.03 | 49.11 | 49.32 | 50.21 | 50.16 | 30.68 | 26.04 |
| | 1 | / | 77.85 | 75.31 | 82.26 | 72.99 | 86.04 | 85.85 |
| | 5 | / | 99.56 | 100.00 | 99.86 | 100.00 | 100.00 | 100.00 |
| | 10 | / | 99.98 | 100.00 | 100.00 | 100.00 | 100.00 | 100.00 |
| IMS → JNU | 0 | 25.81 | 25.83 | 26.46 | 25.36 | 25.83 | 27.81 | 28.75 |
| | 1 | / | 67.48 | 67.40 | 65.95 | 65.07 | 72.61 | 81.41 |
| | 5 | / | 88.64 | 89.95 | 87.50 | 84.44 | 91.84 | 94.38 |
| | 10 | / | 94.95 | 95.00 | 93.91 | 94.25 | 94.65 | 96.51 |
| JNU → CWRU | 0 | 35.64 | 25.26 | 25.10 | 24.95 | 25.68 | 24.27 | 24.95 |
| | 1 | / | 72.09 | 74.95 | 73.92 | 72.31 | 89.29 | 87.94 |
| | 5 | / | 100.00 | 100.00 | 99.98 | 99.98 | 100.00 | 100.00 |
| | 10 | / | 100.00 | 100.00 | 99.98 | 99.98 | 100.00 | 100.00 |
| JNU → IMS | 0 | 40.52 | 41.72 | 41.41 | 44.22 | 27.03 | 55.99 | 49.01 |
| | 1 | / | 86.67 | 86.56 | 84.19 | 91.79 | 99.95 | 99.97 |
| | 5 | / | 99.79 | 98.70 | 99.97 | 99.93 | 100.00 | 100.00 |
| | 10 | / | 99.29 | 100.00 | 99.78 | 99.74 | 99.97 | 100.00 |
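To summarize the most label-scarce setting, the snippet below averages the accuracies obtained with 1 labeled target sample per class over the six transfer tasks. The values are transcribed from the table above. The averaging is an added summary, not a figure reported in the original results.

```python
from statistics import mean

# Accuracy (%) with 1 labeled target sample per class, copied from the table above.
# Task order: CWRU→IMS, CWRU→JNU, IMS→CWRU, IMS→JNU, JNU→CWRU, JNU→IMS.
ONE_LABEL = {
    "DANN":     [80.47, 63.82, 77.85, 67.48, 72.09, 86.67],
    "DCTLN":    [81.98, 66.56, 75.31, 67.40, 74.95, 86.56],
    "EntMin":   [85.95, 62.36, 82.26, 65.95, 73.92, 84.19],
    "VADA":     [87.76, 61.89, 72.99, 65.07, 72.31, 91.79],
    "BNM":      [99.90, 77.26, 86.04, 72.61, 89.29, 99.95],
    "Proposed": [99.95, 92.07, 85.85, 81.41, 87.94, 99.97],
}

averages = {method: round(mean(accs), 2) for method, accs in ONE_LABEL.items()}
for method, avg in sorted(averages.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{method:9s} {avg:.2f}")
```

The averages are about 91.20 (Proposed), 87.51 (BNM) and between 74.73 and 75.77 for the other four methods. The average hides per-task differences. For example, on IMS → CWRU (86.04 vs. 85.85) and JNU → CWRU (89.29 vs. 87.94) the BNM baseline scores slightly higher than the proposed method.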

Number of unlabeled target samples in each imbalanced scenario (IR: inner race fault; OR: outer race fault):

| Scenario | Healthy | IR | Ball | OR | Test |
|---|---|---|---|---|---|
| #1 | 50% | 25% | 25% | 25% | 50% |
| #2 | 50% | 10% | 10% | 10% | 50% |
| #3 | 50% | 5% | 5% | 5% | 50% |
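The table gives per-class proportions but not the sampling procedure. The sketch below shows one hypothetical way to draw such a class-imbalanced unlabeled target set. It assumes that all percentages refer to each class's total sample count, that the "Test" column is a held-out split, and that the unlabeled samples are drawn from the remaining half. The function name, the fixed seed and the toy pool of 100 samples per class are illustrative assumptions.

```python
import random

# Per-class proportions from the table above (fractions of each class's samples).
SCENARIOS = {
    "#1": {"Healthy": 0.50, "IR": 0.25, "Ball": 0.25, "OR": 0.25},
    "#2": {"Healthy": 0.50, "IR": 0.10, "Ball": 0.10, "OR": 0.10},
    "#3": {"Healthy": 0.50, "IR": 0.05, "Ball": 0.05, "OR": 0.05},
}
TEST_RATIO = 0.50

def split_imbalanced(indices_by_class, ratios, test_ratio=TEST_RATIO, seed=0):
    """Hypothetical split: per class, hold out `test_ratio` of the samples for testing and
    draw `ratios[cls]` of the class total, from the remainder, as unlabeled training data."""
    rng = random.Random(seed)
    unlabeled, test = {}, {}
    for cls, idx in indices_by_class.items():
        idx = list(idx)
        rng.shuffle(idx)
        n_test = round(test_ratio * len(idx))
        n_unl = round(ratios[cls] * len(idx))
        test[cls] = idx[:n_test]
        unlabeled[cls] = idx[n_test:n_test + n_unl]   # never overlaps the test split
    return unlabeled, test

# Toy pool: 100 sample indices per class (hypothetical).
pool = {c: range(k * 100, (k + 1) * 100) for k, c in enumerate(["Healthy", "IR", "Ball", "OR"])}
unlabeled, test = split_imbalanced(pool, SCENARIOS["#3"])
print({c: len(v) for c, v in unlabeled.items()})
```

For scenario #3 this keeps 50 unlabeled healthy samples but only 5 per fault class. The unlabeled target set therefore has a 10:1 imbalance between the healthy class and each fault class.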

Accuracy (%) under the class-imbalanced scenarios:

| Task | Scenario | DANN | DCTLN | EntMin | VADA | BNM | Proposed |
|---|---|---|---|---|---|---|---|
| CWRU → IMS | #1 | 99.37 | 99.95 | 99.56 | 99.59 | 99.74 | 99.95 |
| | #2 | 99.13 | 99.74 | 99.06 | 99.84 | 99.62 | 99.84 |
| | #3 | 99.83 | 95.73 | 99.87 | 99.85 | 99.98 | 99.95 |
| CWRU → JNU | #1 | 89.46 | 89.58 | 88.62 | 87.50 | 89.66 | 95.83 |
| | #2 | 88.18 | 83.28 | 88.15 | 88.31 | 88.70 | 95.19 |
| | #3 | 90.09 | 88.02 | 88.93 | 87.71 | 88.78 | 94.14 |
| IMS → CWRU | #1 | 99.77 | 99.22 | 99.95 | 99.92 | 100.00 | 100.00 |
| | #2 | 99.95 | 100.00 | 99.98 | 99.93 | 100.00 | 100.00 |
| | #3 | 99.87 | 100.00 | 100.00 | 100.00 | 100.00 | 100.00 |
| IMS → JNU | #1 | 89.54 | 87.97 | 89.56 | 84.92 | 92.38 | 96.57 |
| | #2 | 87.58 | 89.74 | 87.47 | 88.75 | 90.94 | 96.33 |
| | #3 | 86.59 | 86.77 | 86.38 | 89.17 | 90.34 | 93.39 |
| JNU → CWRU | #1 | 99.95 | 99.95 | 99.95 | 100.00 | 100.00 | 100.00 |
| | #2 | 99.95 | 99.95 | 100.00 | 100.00 | 100.00 | 100.00 |
| | #3 | 99.95 | 99.85 | 99.93 | 100.00 | 100.00 | 100.00 |
| JNU → IMS | #1 | 99.85 | 99.95 | 99.87 | 99.92 | 99.95 | 99.93 |
| | #2 | 99.87 | 99.69 | 99.79 | 99.92 | 99.98 | 99.87 |
| | #3 | 99.95 | 99.58 | 99.90 | 99.95 | 97.53 | 99.72 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Wang, X.; Liu, F.; Zhao, D. Cross-Machine Fault Diagnosis with Semi-Supervised Discriminative Adversarial Domain Adaptation. Sensors 2020, 20, 3753. https://doi.org/10.3390/s20133753
