Abstract

Deep reinforcement learning (DRL) has been successfully applied to mapless navigation. An important issue in DRL is the design of a reward function for evaluating the actions of agents. However, designing a robust and suitable reward function depends greatly on the designer’s experience and intuition. To address this concern, we employ reward shaping from trajectories on similar navigation tasks without human supervision, and propose a general reward function based on the matching network (MN). The MN-based reward function gains experience by pre-training on trajectories from different navigation tasks and accelerates the training of DRL in new tasks. The proposed reward function keeps the optimal strategy of DRL unchanged. The simulation results on two static maps show that DRL converges in fewer iterations with the learned reward function than the state-of-the-art mapless navigation methods. The proposed method also performs well in dynamic maps with partially moving obstacles. Even when test maps are different from training maps, the proposed strategy is able to complete the navigation tasks without additional training.

1. Introduction

An autonomous navigation system enables a mobile robot to determine its position within the reference frame of the environment and to move to the desired target position autonomously. The classical navigation solution is a combination of algorithms, including simultaneous localization and mapping (SLAM), path planning and motion control [1]. These methods rely on high-precision global maps, which limits them in unknown or dynamic environments. Recently, research on mapless navigation, which implicitly performs localization and mapping, has attracted attention from both industry and academia [2]. Deep reinforcement learning (DRL) techniques, which map states to actions through continuous interaction with the environment, have achieved great success in many fields [3,4,5,6], such as video games, robot control and autonomous driving. A variety of DRL technologies have been introduced to mapless navigation tasks [7,8,9,10]. However, DRL algorithms are known to be data-inefficient [11], especially when the rewards are sparse. To accelerate learning, dense rewards, which provide more information after each action, are desired.

Reward shaping, whose goal is to provide supplemental rewards that make a problem easier to learn, can be used to generate dense rewards [12]. However, the design of manually programmed reward functions requires substantial domain expertise, especially in complex environments. Therefore, data-driven reward definition from trajectories or experiences has been studied [13,14,15,16,17]. Different from the hand-crafted reward function, inverse reinforcement learning (IRL) automatically learns a reward function from the trajectories of expert demonstrations [18,19]. However, relying on expert demonstrations defeats the central goal of DRL: learning policies automatically by ‘trial and error’ [20]. Without expert demonstrations, data-driven methods for reward shaping directly use the sampled trajectories on a series of similar tasks. Classifier-based reward shaping directly estimates the policy underlying sampled trajectories by pre-training a suitable classifier with supervised learning [20,21]. The probability of success in the current state obtained by the classifier is used as the additional reward value. However, to ensure that the classifier can provide a suitable reward under any circumstances, a large number of samples are needed to train the classifier in a single task. To overcome this limitation, meta-learning has been introduced to design reward functions or learn potential functions [22,23,24]. However, this method assumes that the tasks share the same state space, and hence it might be ineffective when the state space changes significantly. In addition, although a reward function based on meta-learning can learn a priori knowledge from different tasks, it still needs a certain number of demonstrations under new tasks.

It has been demonstrated that few-shot learning is able to learn from fewer samples than traditional deep learning methods [25,26,27,28,29]. In this paper, the matching network (MN) [30], a non-parametric approach to one-shot or few-shot learning, is used to design a reward function for indoor navigation tasks. MN can implicitly extract prior knowledge from trajectories on similar navigation tasks. The knowledge is then transferred to new tasks in the form of an additional reward.

To accelerate learning to navigate in a new environment, we propose a novel DRL framework based on MN. The main contributions of our framework are twofold.

- We use a sequence composed of consecutive robot states as the input of MN, and the output of MN is the probability that the robot can complete the task. Because the input is a sequence, MN can be applied to navigation tasks where only partial information can be observed. The historical information contained in the path sequence enables the MN to make judgments about the current state of the robot.

- Our method does not need human demonstrations or positive samples when training in a new environment, and the robot can still quickly learn the navigation strategy under the new map. The experiments demonstrate that our method has better adaptability to a dynamic environment and stable transfer ability. This mainly benefits from the experience gained by the reward function under other maps.

The rest of this paper is organized as follows. Section 2 briefly introduces the related work. Section 3 introduces our proposed method. Section 4 introduces the structure and training process of the MN-based reward function. Section 5 introduces the structure of the DRL model. The simulation experimental results of the model are explained in Section 6. Section 7 presents a brief discussion and then concludes this paper.

2. Related Work

2.1. Navigation Algorithm

In the robot navigation problem, path planning is the foundation of robot navigation and control. Path planning generally consists of global path planning and local path planning [31]. Global path planning selects a whole path when the map is known; its main methods include ant colony optimization (ACO) [32] and the A-star algorithm [33]. Global path planning relies on known static maps and may not work in a dynamic environment. Local path planning uses the robot’s own sensors to obtain environmental information to complete obstacle avoidance and robot control. Commonly used methods include model predictive control (MPC) [34], artificial potential field [35] and the dynamic window approach (DWA) [36]. Due to the lack of global information, the result of local path planning may not be optimal.

By combining global path planning and local path planning, path planning is able to realize stable robot control based on known maps and robot models (kinematic models and dynamic models). It can find the optimal path in a known environment. However, the path planning algorithm needs its parameters reset when the environment or the robot changes, and it consumes a lot of calculation time to find a suitable path when the environment is more complicated.

2.2. Mapless Navigation

Mapless navigation refers to robots completing navigation tasks without artificially providing maps. Reactive approaches, which are able to control and execute a plan autonomously, were proposed for mapless navigation [37]. For instance, Roberge et al. [38] implemented particle swarm optimization (PSO) in real-time unmanned aerial vehicle (UAV) path planning. Zoumponos et al. [39] realized the path planning of the manipulator through fuzzy logic (FL). These reactive approaches are able to provide a path in unknown or dynamic environments. However, reactive approaches require a local map constructed using information obtained from the sensors. Therefore these methods cannot provide end-to-end control and they have several disadvantages such as longer computational time and complex design.

Recently, DRL has been widely used in mapless navigation. It learns the mapping between agent states and actions to provide end-to-end control. A variety of DRL models such as Deep Q-Network (DQN) [40], deep deterministic policy gradient (DDPG) [41], asynchronous advantage actor-critic (A3C) [42] and proximal policy optimization (PPO) [43] have been applied and provided promising results. Long et al. [44] used laser data as input and proposed a PPO-based framework to avoid collisions between multiple robots. Ma et al. [45] employed the RGB image as the visual input and presented a DRL-based mapless motion planner alleviating the need for interactions between the agent and environment. A few special techniques and model structures are also used in navigation tasks, including multiple subtasks to assist reinforcement learning [46], continuous motion control based on DDPG [47] and target-driven navigation [48]. Most of the above-mentioned methods focus on improving the reinforcement learning structure, and their reward is mostly sparse. By designing a reasonable reward function, the effect of reinforcement learning can be further improved.

2.3. Meta-Reinforcement Learning

Meta-reinforcement learning (meta-RL) is able to rapidly learn new tasks. Duan et al. [49] used a series of interrelated RL tasks to train a recurrent neural network (RNN). Finn et al. [50] used model-agnostic meta-learning (MAML) to initialize parameters for DRL, so that DRL can quickly converge to the optimal strategy under new tasks. Unlike the previous work on training average models and model parameters, we focus on reward shaping. In recent years, several works have sought to improve learning efficiency for robots, including fast imitation learning [51,52] and meta-inverse reinforcement learning [53]. Both are dedicated to quickly learning from a small number of demonstrations. Our goal is to achieve rapid and autonomous adaptation of the robot in a new environment without manually providing any new demonstrations.

2.4. Automatic Reward Shaping

Automatic reward shaping can reduce the dependence on the designers’ experience. Singh et al. [20] combined a classifier with an active query mechanism, so that only a few positive samples need to be provided under a single task to achieve fast learning of DRL. That work provides the classifier with a modest number of examples of successful outcomes, which can reduce the difficulty of sample collection. However, in the navigation problem, it is difficult for a classifier that is only given such examples to judge whether the current state can complete the task. Xie et al. [21] used concept acquisition through meta-learning (CAML) [17] as the target code for DRL. After pre-training CAML under a variety of different tasks, the robot can learn continuously when given only a small number of demonstrations in a new task. However, humans must manually collect and provide positive samples to CAML in new tasks, which is time-consuming. Zou et al. [23] designed a reward function using meta-learning. It can quickly adapt to changes in a similar environment, but cannot perform well when the environment changes significantly. Our method does not need artificial samples at the beginning of a new task. The robot collects samples through autonomous exploration and quickly learns a strategy to complete the task.

3. DRL with MNR

3.1. Problem Formulation

The aim of this paper is to design a universal reward function for DRL in navigation tasks. This problem is formulated as a Markov decision process (MDP). An MDP is defined by a tuple $\langle S, A, P, R, \gamma \rangle$, where $S$ is the state space, $A$ is the set of actions, $P$ is the transition probabilities, $R$ is the reward function and $\gamma$ is a discount factor. At each step $t$, the agent receives the state $s_t = (l_t, d_t)$, where $l_t$ is the laser scan data and $d_t$ is the Euclidean distance between the agent and the target point. We set the range of the laser scan as 0.1–10 m. The agent action $a_t$ is sampled from a policy $\pi$, where $a_t = (v_t, \omega_t)$, $v_t$ is the agent linear velocity and $\omega_t$ is the angular velocity. In navigation tasks, the reward is usually calculated based on the Euclidean distance, and the reward can be rewritten as $R_E$.

We considered an extension to the basic MDP framework, defined by $\langle S, A, P, R', \gamma \rangle$, where the reward value of the agent at each step, $R' = R_E + R_{MN}$, is the sum of the Euclidean distance reward $R_E$ and the additional matching network reward (MNR) $R_{MN}$. We denote this reward-augmented MDP as MDP(+MNR). In Section 5.2, we show that a policy that is optimal under MDP(+MNR) is also optimal under the original MDP.
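As a brief sketch of why such an augmentation can leave the optimal policy unchanged, assume the additional reward takes the potential-based form used later in Section 5.2. The symbols $M$ (original MDP), $M'$ (reward-augmented MDP), $\Phi$ (potential) and $Q^{*}$ (optimal action value) are notation introduced here for illustration only. Subtracting $\Phi(s)$ from the optimal action value of $M$ gives a function that satisfies the Bellman optimality equation of $M'$, and because the offset does not depend on the action, the greedy action is the same in both problems:

```latex
\begin{aligned}
F(s, a, s') &= \gamma \Phi(s') - \Phi(s), \\
Q^{*}_{M'}(s, a) &= Q^{*}_{M}(s, a) - \Phi(s), \\
\arg\max_{a} Q^{*}_{M'}(s, a) &= \arg\max_{a} Q^{*}_{M}(s, a).
\end{aligned}
```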

3.2. Model Architecture

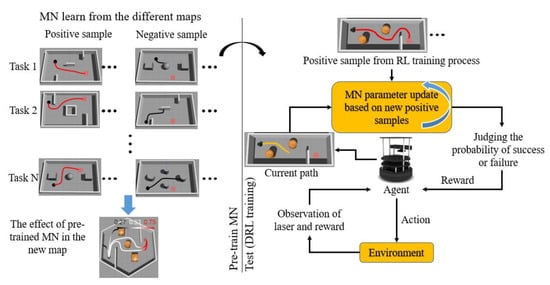

In this research, we proposed a DRL method based on the MNR. The model flowchart is shown in Figure 1. Our reward function was designed based on MN. By pre-training the MN through different navigation tasks, MN is able to learn the rules of the navigation task. The pre-trained MN can generate a suitable reward in the new tasks. In the process of DRL training, the pre-trained MN evaluates the current state of the robot and generates rewards for guiding the DRL. After the robot completes a task, the newly obtained positive samples will be used for fine-tuning the MN, so that the reward value generated by the MN under the current map is more suitable.

Figure 1. Our model flowchart.

4. Matching Network Based Reward

4.1. MN Network Structure

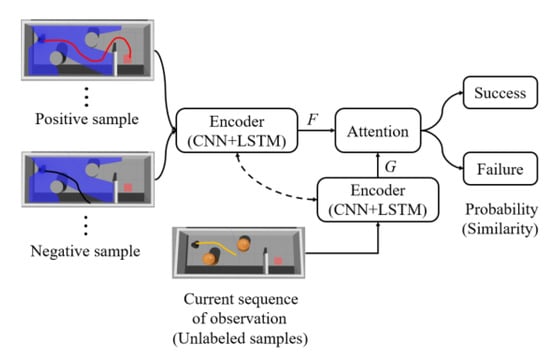

Our reward function is based on MN, which combines attention and memory to realize fast learning. In the pre-training phase, MN learns the mapping between unlabeled samples and labeled support sets of examples, and thus MN can adapt rapidly to new tasks. The flow chart of MN in our method is shown in Figure 2. MN takes a set of state sequences as input and predicts the success probability of the current state. The state sequence is transformed to the input vector $F$ through the encoder, which comprises convolutional neural networks (CNN) and long short-term memory (LSTM). Meanwhile, MN selects positive and negative samples from the data set as the input of the encoder, and gets the vectors $G_i$. A similarity is obtained by computing the cosine similarity $c(F, G_i)$ between the vectors $F$ and $G_i$. The attention mechanism uses the softmax over the cosine similarities and is represented as:

$$a(F, G_i) = \frac{\exp\left(c(F, G_i)\right)}{\sum_{j=1}^{k} \exp\left(c(F, G_j)\right)}$$

where $k$ is the number of samples in the support set.

Figure 2. Matching network reward in mapless navigation.

The sum of the softmax output values is 1. Each softmax output corresponds to a label, and we added up all the softmax outputs that have the same label. Each support set contains two categories (positive and negative) and each category contains 20 samples. The output of MN is represented as:

$$\hat{y} = \sum_{i=1}^{k} a(F, G_i)\, y_i$$

where $a(F, G_i)$ is the softmax output and $y_i$ is the label of the $i$-th sample in the support set, expressed in one-hot form. One one-hot vector represents the ‘success’ label and the other represents the ‘failure’ label. We used the component of $\hat{y}$ that corresponds to the ‘success’ label as the output of MN to represent the probability of success.
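The attention-weighted vote described above can be sketched in a few lines of NumPy. This is only an illustration under stated assumptions: the embeddings are random stand-ins for encoder outputs, the function names are hypothetical, and placing the ‘success’ label in column 0 of the one-hot labels is a choice made here, not something the text specifies.

```python
import numpy as np

def cosine_similarity(query, support):
    """Cosine similarity between one query vector and each support vector."""
    q = query / (np.linalg.norm(query) + 1e-8)
    s = support / (np.linalg.norm(support, axis=1, keepdims=True) + 1e-8)
    return s @ q

def mn_success_probability(query, support, labels):
    """Attention-weighted vote over a labelled support set.

    query:   (d,) embedding of the current state sequence
    support: (k, d) embeddings of the support samples
    labels:  (k, 2) one-hot labels; column 0 is 'success' (assumption)
    """
    sims = cosine_similarity(query, support)
    weights = np.exp(sims - sims.max())   # numerically stable softmax
    weights /= weights.sum()              # attention weights sum to 1
    class_probs = weights @ labels        # add up weights sharing a label
    return float(class_probs[0])

# 2-way 20-shot support set built from random stand-in embeddings
rng = np.random.default_rng(0)
dim = 16
support = np.vstack([rng.normal(1.0, 1.0, (20, dim)),
                     rng.normal(-1.0, 1.0, (20, dim))])
labels = np.vstack([np.tile([1.0, 0.0], (20, 1)),
                    np.tile([0.0, 1.0], (20, 1))])
query = rng.normal(1.0, 1.0, dim)
p_success = mn_success_probability(query, support, labels)
```

Because cosine similarity is bounded in [-1, 1], the softmax weights can never become very peaked, so the success probability stays away from 0 and 1 unless the support samples of one class are clearly closer to the query.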

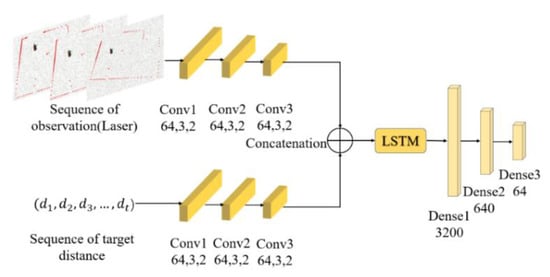

Figure 3 shows the structure of the encoder. First, one-dimensional convolution was employed to separately extract features from the laser scan data and from the relative position relationship between the agent and the target position. Then we merged the compressed data and fed it to the LSTM. The initial number of LSTM nodes was 128 and the step size was 25.

Figure 3. Encoder network structure. Every one-dimensional convolutional layer is represented by its channel size, kernel size and stride size. Other layers are represented by their types and dimensions. Each layer’s activation function is Rectified Linear Unit (ReLU).

4.2. MN Training Strategy

We pre-trained MN with the data set collected from different maps. Each sample in the data set consists of a state sequence, which contains laser scan data and the Euclidean distance between the agent and the target position. In each training iteration, a fixed number of positive and negative samples were randomly selected from the data set as the training set. Each training set included a support set and a query set. After extracting features through the encoder, the similarity between the query set and the support set was calculated to obtain the label of the query sample (positive or negative). The errors between the predicted and true labels were used to update the MN parameters. The loss function of our model was the cross entropy and was represented as:

$$L = -\sum_{c} y_c \log \hat{y}_c$$

where $\hat{y}$ is the output of MN, $y$ is the one-hot label of the query sample and $c$ indexes the two categories.
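A minimal sketch of this loss, assuming the MN output is a row of two class probabilities per query sample and the labels are one-hot (the function name and the example numbers are illustrative, not taken from the paper):

```python
import numpy as np

def cross_entropy(pred, target, eps=1e-12):
    """Mean cross entropy between predicted class probabilities and one-hot labels."""
    pred = np.clip(pred, eps, 1.0)  # avoid log(0)
    return float(-np.mean(np.sum(target * np.log(pred), axis=1)))

# Two query samples: one labelled 'success', one labelled 'failure'
pred = np.array([[0.9, 0.1],
                 [0.3, 0.7]])
target = np.array([[1.0, 0.0],
                   [0.0, 1.0]])
loss = cross_entropy(pred, target)
```

Only the probability assigned to the true class contributes to each sample's loss, so confident correct predictions drive the loss toward zero.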

The procedure for pre-training MN is described in Algorithm 1.

| Algorithm 1. Pre-Training Matching Network (MN) |
| --- |
| Input: Data set |
| 1: Initialize MN parameter x |
| 2: For each iteration do |
| 3: Sample a training task from the data set; each task includes a support set and a query set |
| 4: Encode the support set and the query set to get G and F |
| 5: Calculate the similarity between G and F |
| 6: Calculate the loss |
| 7: Update the parameters x through the Adam optimizer |
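Step 3 of Algorithm 1 can be sketched as follows. This is a hedged illustration: `sample_task` and the `n_query` parameter are hypothetical (the text does not state the query-set size), and the string placeholders stand in for real state sequences. The 300 positive and 300 negative entries mirror the 600 samples reported for the Pre-data set.

```python
import random

def sample_task(dataset, n_shot=20, n_query=5, seed=None):
    """Draw one 2-way task: a support set and a disjoint query set.

    dataset: dict with keys 'positive' and 'negative', each a list of samples.
    n_query is a hypothetical parameter; the source does not give its value.
    """
    rng = random.Random(seed)
    support, query = [], []
    for label in ("positive", "negative"):
        # sample without replacement so support and query never overlap
        picked = rng.sample(dataset[label], n_shot + n_query)
        support += [(x, label) for x in picked[:n_shot]]
        query += [(x, label) for x in picked[n_shot:]]
    return support, query

dataset = {
    "positive": [f"pos_{i}" for i in range(300)],
    "negative": [f"neg_{i}" for i in range(300)],
}
support, query = sample_task(dataset, seed=0)
```

Keeping the query samples out of the support set matters here: otherwise a query would match itself with cosine similarity 1 and the loss would understate the real error.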

4.3. Sample Collection

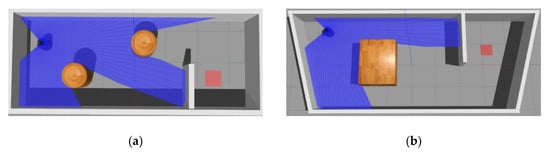

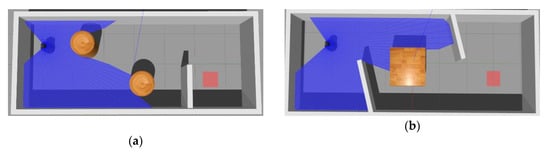

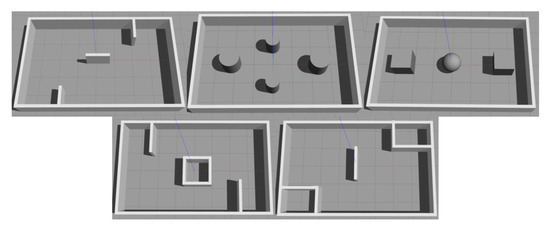

Good-quality data is a prerequisite for training the MN well. Hence, we collected state sequences on a variety of different maps to guarantee the variety of the data. The sample maps, which we call Pre-MAPs, are shown in Figure 4. The Pre-MAPs, built in Gazebo, were 8 m long and 5 m wide. When we collected samples, the robot’s initial positions and target positions in these maps were randomly generated. To prevent the robot’s initial position from being too close to the target position, we required the Euclidean distance between the two points to be greater than a certain value. When the agent hits an obstacle (the smallest value in the laser scan data is less than 0.2) or runs over 200 steps, the process is recorded as a negative sample. When the agent reaches the target position within 200 steps, the process is recorded as a positive sample. Positive and negative samples were collected separately. When collecting positive samples, we used path planning methods to control the robot to complete the navigation task. The path planning algorithm we used consists of the A-star algorithm and DWA. However, the path planning algorithm can only be applied when a map of the environment is known. When collecting negative samples, we set a random agent to interact with the environment. Under each Pre-MAP, we collected 60 positive samples and 60 negative samples; the total number of samples was 600. The data set collected under the Pre-MAPs is called the Pre-data set.
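The labelling rule and the start/target sampling described above can be sketched as below. The thresholds 0.2 and 200 and the 8 m by 5 m map size come from the text; the function names, the `min_dist=2.0` value (the text only says "a certain value") and the fact that obstacles are ignored when sampling positions are assumptions made for this illustration.

```python
import math
import random

def label_episode(min_laser_ranges, reached_target,
                  max_steps=200, collision_range=0.2):
    """Label one recorded episode as 'positive' or 'negative'.

    min_laser_ranges: per-step minimum value of the laser scan
    reached_target:   True if the agent reached the target position
    """
    steps = len(min_laser_ranges)
    collided = any(r < collision_range for r in min_laser_ranges)
    if collided or steps > max_steps:
        return "negative"
    if reached_target:
        return "positive"
    return None  # unfinished episode; not covered by the text

def sample_start_and_target(rng, length=8.0, width=5.0, min_dist=2.0):
    """Rejection-sample start and target points that are not too close.

    min_dist is a hypothetical value; obstacles are ignored in this sketch.
    """
    while True:
        start = (rng.uniform(0.0, length), rng.uniform(0.0, width))
        target = (rng.uniform(0.0, length), rng.uniform(0.0, width))
        if math.dist(start, target) > min_dist:
            return start, target

start, target = sample_start_and_target(random.Random(0))
```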

Figure 4. Data acquisition map (Pre-MAP).

4.4. Pre-Training MN and Result Analysis

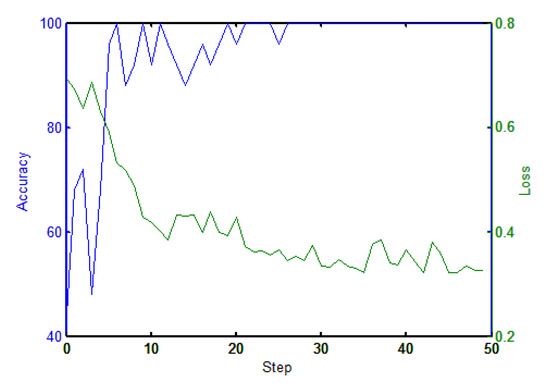

The MN used a 2-way 20-shot training method, where the samples in each training process contained two categories (positive and negative) and 20 samples were randomly selected for each category. The learning rate was set to and the batch size was 20. The green and blue line in Figure 5 show the change in terms of loss and accuracy in the training process respectively.

Figure 5. Matching network error and accuracy curve.

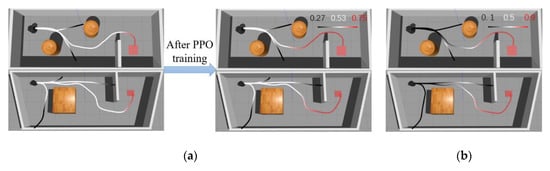

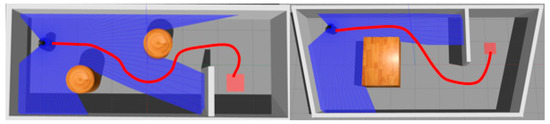

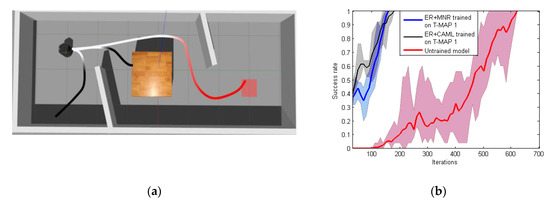

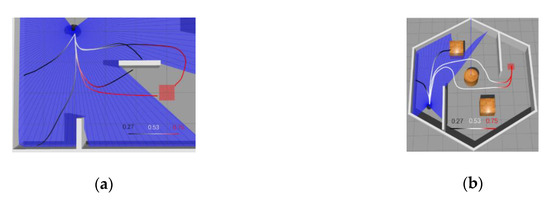

To determine whether the MN’s output can accurately evaluate the current status of the agent, we further tested the output values on two maps under different paths for the robot. The results are shown in Figure 6. Figure 6a is one of the training maps and Figure 6b is different from the training maps in terms of size and shape. Note that the shown output values are direct outputs from MN without any processing.

Figure 6. Matching network output changes on different paths: (a) matching network (MN) output under Pre-MAP and (b) MN output under new map without fine-tuning.

As can be seen from Figure 6a, the MN yielded a result close to 0.5 when the robot had just started running, because the MN could not yet determine whether the current state would lead to success or failure. When the robot moved away from the target position, the given value decreased gradually. When the robot hit the obstacle, the MN gave an output value of only 0.27, which was considered a failure. On the other hand, when the robot gradually approached the target position, the given value gradually increased, and before reaching the target position, the result given by MN was 0.75. It can be seen that MN had implicitly learned obstacle avoidance and could give a small output value when the navigation task failed. In Figure 6b, MN was put into the new map without fine-tuning. The MN could also give a higher value when the robot was about to reach the target position. However, the majority of MN’s output was close to 0.5 when the robot was on the right path. It can be seen that the pre-trained MN could still produce a suitable reward value without fine-tuning in a new environment, but the robot needed to be closer to obstacles or target points for the output to change noticeably. Therefore, during the initial training phase of DRL, MN can still provide some guidance.

5. Using MN-Based Rewards in PPO

5.1. PPO with MNR

We used PPO, which was proposed by OpenAI in 2017 [43], to make the robot generate a more robust strategy in the simulation environment. PPO, a policy gradient reinforcement learning algorithm, performs multiple gradient updates on each data sample, and hence it is more efficient and has better generalization ability than traditional policy update methods. The parameters of PPO in the training process are updated as follows:

$$\theta_{k+1} = \arg\max_{\theta} L^{CLIP}(\theta)$$

where $L^{CLIP}(\theta)$ is the objective function of the PPO update, and is represented as:

$$L^{CLIP}(\theta) = \hat{\mathbb{E}}_t\left[\min\left(r_t(\theta)\hat{A}_t,\ \mathrm{clip}\left(r_t(\theta), 1-\epsilon, 1+\epsilon\right)\hat{A}_t\right)\right]$$

where $r_t(\theta)$ denotes the probability ratio between the two strategies before and after the update, and $\hat{A}_t$ denotes the estimated advantage. The clip function is the truncation function, which limits the value of $r_t(\theta)$ between $1-\epsilon$ and $1+\epsilon$. By using the clip function, PPO can effectively avoid a sudden change in strategy and ensure a stable training process.

In the early stages of PPO training, the MN randomly selects samples from all trajectories in the Pre-data set, since no positive samples have been collected under the new maps. Once positive samples under the new map are collected, MN preferentially selects samples from the new positive samples, and the rest are selected from the Pre-data set. As the number of new positive samples increases, MN eventually selects samples only from the new positive samples. For the negative samples, MN randomly selects samples from the data set regardless of whether the samples were collected on new maps. The update process of the PPO model is shown in Algorithm 2.
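The clipped surrogate objective can be illustrated with a small NumPy sketch. It follows the standard PPO formulation rather than the authors' code; the function name is hypothetical and `clip_eps=0.2` is a commonly used default, not a value reported in the text.

```python
import numpy as np

def ppo_clip_objective(new_logp, old_logp, advantages, clip_eps=0.2):
    """Clipped surrogate objective averaged over a batch of samples.

    new_logp, old_logp: log-probabilities of the taken actions under the
                        updated policy and the policy that collected the data
    advantages:         estimated advantages for the same samples
    clip_eps:           clipping range (assumed value)
    """
    ratio = np.exp(new_logp - old_logp)
    clipped = np.clip(ratio, 1.0 - clip_eps, 1.0 + clip_eps)
    # taking the minimum removes any gain from pushing the ratio past the clip range
    return float(np.mean(np.minimum(ratio * advantages, clipped * advantages)))

# Sample 1: ratio 1.5 with positive advantage, so the clipped term is used.
# Sample 2: ratio 0.5 with negative advantage, so the clipped term is used.
new_logp = np.log(np.array([1.5, 0.5]))
old_logp = np.zeros(2)
advantages = np.array([1.0, -1.0])
objective = ppo_clip_objective(new_logp, old_logp, advantages)
```

In both example samples the ratio has already moved outside the clip range in the direction the advantage favours, so the objective no longer rewards moving it further. This is the mechanism that limits sudden changes in strategy.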

| Algorithm 2. Proximal Policy Optimization (PPO) with Pre-Trained MN Reward |
| --- |
| Input: Pre-data set; load the parameters of the pre-trained MN |
| 1: Initialize the PPO parameters and policy |
| 2: For each iteration do |
| 3: While the robot does not reach the target position do |
| 4: Initialize the robot position |
| 5: Get the state (including the laser scan state and the relative position) |
| 6: Run the policy to generate an action |
| 7: Add the state to the queue |
| 8: Input the queue into MN |
| 9: Get the reward from MN and the environment |
| 10: Collect the state, action and reward |
| 11: Add the state sequence and the task result (label) to the MN’s data set |
| 12: If the iteration number is a multiple of 3 then |
| 13: Update the parameters of MN on its data set for a few epochs |
| 14: Compute the estimated advantage |
| 15: Update the policy for K epochs |
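The support-set selection rule described in Section 5.1 (prefer positive samples from the new map, fill the remainder from the Pre-data set, and draw negatives from the pooled data) can be sketched as follows. The function name, argument names and string placeholders are hypothetical; only the 20 samples per category and the selection preference come from the text.

```python
import random

def select_support(pre_pos, pre_neg, new_pos, new_neg, n_shot=20, seed=None):
    """Pick positive and negative support samples for the MN reward.

    Positives: as many as possible from the new map, the rest from the
               Pre-data set.
    Negatives: drawn at random from the pooled data, whatever their origin.
    """
    rng = random.Random(seed)
    from_new = rng.sample(new_pos, min(n_shot, len(new_pos)))
    from_pre = rng.sample(pre_pos, n_shot - len(from_new))
    negatives = rng.sample(pre_neg + new_neg, n_shot)
    return from_new + from_pre, negatives

pre_pos = [f"pre_pos_{i}" for i in range(300)]
pre_neg = [f"pre_neg_{i}" for i in range(300)]
new_pos = [f"new_pos_{i}" for i in range(5)]
new_neg = [f"new_neg_{i}" for i in range(40)]
positives, negatives = select_support(pre_pos, pre_neg, new_pos, new_neg, seed=0)
```

With five new positive samples, the positive half of the support set holds those five plus fifteen from the Pre-data set; once at least twenty new positives exist, the Pre-data positives are no longer used.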

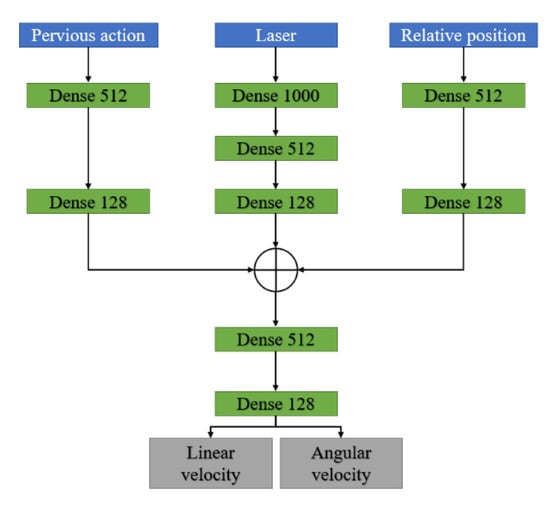

The network structure of the PPO is shown in Figure 7. The PPO input consists of the current state and the action in the previous time step. The output of PPO is the linear and angular velocity of the robot. In the experiment we set the output of the linear velocity in , and angular velocity in . The network was composed of fully connected layers and the activation function was ReLU.

Figure 7. Proximal policy optimization (PPO) network structure.

5.2. Reward Function Design and Policy Invariance

To map the MN output to a reward, we defined a potential function for the current timestep as $\Phi(s_t)$, which is the output of the MN. According to potential-based reward shaping, the MN-based reward is defined as $R_{MN} = \gamma \Phi(s_{t+1}) - \Phi(s_t)$, which satisfies optimal policy invariance [54,55]. The reward function of reinforcement learning is represented as:

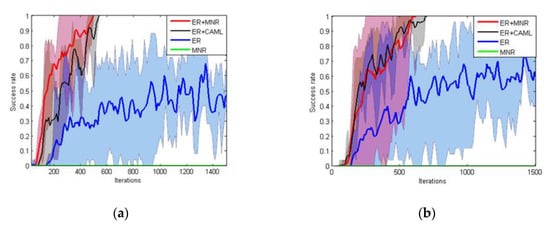

where the distance term represents the Euclidean distance between the robot and the target position. If the robot reaches the target position, it is given a positive reward, which we set as 100 in the experiment. When the robot collides with an obstacle, it is given a negative reward, which we set as −50 in the experiment. To verify the effectiveness of our method, we compared several different methods. Among them is CAML, which is similar to MN. CAML, which is an extension of MAML, initializes parameters on different tasks so that it can adapt to new tasks using a small number of samples. Different from MAML, CAML only needs positive samples to adapt to new tasks. The similarities and differences between MN and CAML are shown in Table 1.

Table 1. Similarities and differences between MN and concept acquisition through meta-learning (CAML).

We compared several different reward functions as follows.

- ER (Euclidean reward): in this process, we only used the Euclidean reward function, which is the benchmark for our tests.

- MNR: in this step, we tested whether the matching network can provide effective guidance. The value generated by the MN was amplified and used as the reward function.

- ER+CAML: in this step, we used the Euclidean reward and CAML as the reward function. Note that CAML was pre-trained with the samples described in Section 4.3. During DRL training, we artificially provided positive samples for fine-tuning CAML.

- ER+MNR (Ours): in this step, we combined the environment reward function with MN.
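The ER, MNR and ER+MNR variants can be sketched as below. Several parts are assumptions made for illustration and are labelled as such in the code: the dense Euclidean term (progress toward the target), the `gamma` default, the `mn_scale` amplification factor, and the use of the shaping term in the MNR-only variant. Only the terminal rewards (100 and −50) and the potential-based form of the MN reward come from the text; ER+CAML is omitted because it needs a separate model.

```python
def shaping_reward(phi_curr, phi_next, gamma=0.99):
    """Potential-based shaping term gamma * Phi(s') - Phi(s); Phi is the MN output."""
    return gamma * phi_next - phi_curr

def euclidean_reward(d_prev, d_curr, reached, collided,
                     r_arrive=100.0, r_collide=-50.0):
    """Environment reward with the terminal values given in the text.

    The dense term (reduction in distance to the target) is an assumption.
    """
    if reached:
        return r_arrive
    if collided:
        return r_collide
    return d_prev - d_curr

def total_reward(mode, d_prev, d_curr, reached, collided,
                 phi_curr, phi_next, gamma=0.99, mn_scale=1.0):
    """Combine the reward terms for one step; mn_scale is a hypothetical factor."""
    er = euclidean_reward(d_prev, d_curr, reached, collided)
    mnr = shaping_reward(phi_curr, phi_next, gamma)
    if mode == "ER":
        return er
    if mode == "MNR":
        return mn_scale * mnr
    if mode == "ER+MNR":
        return er + mnr
    raise ValueError(f"unknown reward mode: {mode}")

# One step in which the robot moves 0.5 m closer and the MN output rises
step_reward = total_reward("ER+MNR", d_prev=2.0, d_curr=1.5,
                           reached=False, collided=False,
                           phi_curr=0.5, phi_next=0.6, gamma=1.0)
```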

7. Conclusion and Future Work

In this paper, we proposed an MN-based reward shaping model. The model obtained the additional reward by comparing the current path of the robot with the provided samples. The obtained reward value encoded navigation skills. The experimental results show that the reward function model based on MNR+ER could effectively improve the training speed of DRL.

Although our method achieved some results in speeding up reinforcement learning training, it still needs to be improved in the following areas:

- On the whole, the output value of MN was close to a discrete value, and its guidance effect on the robot was poor, so other reward functions need to be introduced.

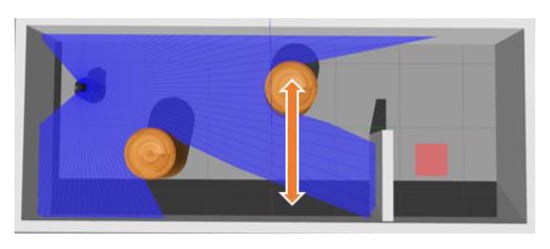

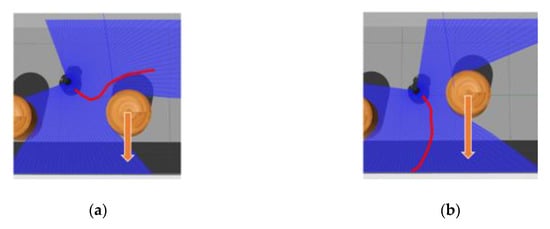

- The robot performed well in a static environment, but in a dynamic map, the robot lacked the ability of predicting the movement of obstacles, which led to a slightly poorer navigation effect of the robot in a dynamic environment.

- Our proposed model is universal and we will also apply the model to other fields in future work.

Supplementary Materials

The following are available online at https://www.mdpi.com/1424-8220/20/13/3664/s1, Video: The strategy of ER+MNR when the robot encounters dynamic obstacle.

Author Contributions

Conceptualization, M.Z.; methodology, M.Z. and Q.Z.; writing—original draft preparation, Q.Z.; writing—review and editing, L.Z., M.L. and Y.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Fundamental Research Funds for the Central Universities (No. 2018XKQYMS03).

Acknowledgments

Thanks to Jie Pan and Jiannan Zheng for their inestimable advice and help for this article, respectively.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Cadena, C.; Carlone, L.; Carrillo, H.; Latif, Y.; Scaramuzza, D.; Neira, J.L.; Reid, I.; Leonard, J.J. Past, Present, and Future of Simultaneous Localization and Mapping: Toward the Robust-Perception Age. IEEE Trans. Robot. 2016, 32, 1309–1332. [Google Scholar] [CrossRef]

- Ort, T.; Paull, L.; Rus, D. Autonomous vehicle navigation in rural environments without detailed prior maps. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Brisbane, Australia, 21–25 May 2018; pp. 2040–2047. [Google Scholar]

- Lample, G.; Chaplot, D.S. Playing fps games with deep reinforcement learning. In Proceedings of the Thirty-First AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017. [Google Scholar]

- Mnih, V.; Kavukcuoglu, K.; Silver, D.; Graves, A.; Antonoglou, I.; Wierstra, D.; Riedmiller, M. Playing Atari with Deep Reinforcement Learning. arXiv: Learning 2013, arXiv:1312.5602. [Google Scholar]

- Gu, S.; Holly, E.; Lillicrap, T.; Levine, S. Deep reinforcement learning for robotic manipulation with asynchronous off-policy updates. In Proceedings of the International Conference on Robotics and Automation, Singapore, 29 May–3 June 2017; pp. 3389–3396. [Google Scholar]

- Wang, C.; Zhang, Q.; Tian, Q.; Li, S.; Wang, X.; Lane, D.M.; Petillot, Y.; Wang, S. Learning Mobile Manipulation through Deep Reinforcement Learning. Sensors 2020, 20, 939. [Google Scholar] [CrossRef]

- Kulhanek, J.; Derner, E.; De Bruin, T.; Babuska, R. Vision-based navigation using deep reinforcement learning. In Proceedings of the 2019 European Conference on Mobile Robots (ECMR), Prague, Czech Republic, 4–6 September 2019. [Google Scholar]

- Zhelo, O.; Zhang, J.; Tai, L.; Liu, M.; Burgard, W. Curiosity-driven exploration for mapless navigation with deep reinforcement learning. arXiv: Robotics 2018, arXiv:1804.00456. [Google Scholar]

- Mirowski, P.; Grimes, M.K.; Malinowski, M.; Hermann, K.M.; Anderson, K.; Teplyashin, D. Learning to navigate in cities without a map. In Proceedings of the Advances in Neural Information Processing Systems, Montréal, QC, Canada, 3–8 December 2018. [Google Scholar]

- Hu, Z.; Wan, K.; Gao, X.; Zhai, Y.; Wang, Q. Deep Reinforcement Learning Approach with Multiple Experience Pools for UAV’s Autonomous Motion Planning in Complex Unknown Environments. Sensors 2020, 20, 1890. [Google Scholar] [CrossRef]

- Hu, B.; Shao, S.; Cao, Z.; Xiao, Q.; Li, Q.; Ma, C. Learning a Faster Locomotion Gait for a Quadruped Robot with Model-Free Deep Reinforcement Learning. In Proceedings of the 2019 IEEE International Conference on Robotics and Biomimetics (ROBIO), Dali, China, 6–8 December 2019; pp. 1097–1102. [Google Scholar]

- Hussein, A.; Elyan, E.; Gaber, M.M.; Jayne, C. Deep reward shaping from demonstrations. In Proceedings of the 2017 International Joint Conference on Neural Networks (IJCNN), Anchorage, AK, USA, 14–19 May 2017; pp. 510–517. [Google Scholar]

- Ng, A.Y.; Russell, S. Algorithms for inverse reinforcement learning. Int. Conf. Mach. Learn. 2000, 67, 663–670. [Google Scholar]

- Finn, C.; Levine, S.; Abbeel, P. Guided cost learning: Deep inverse optimal control via policy optimization. In Proceedings of the International Conference on Machine Learning, New York, NY, USA, 20–22 June 2016; pp. 49–58. [Google Scholar]

- Fu, J.; Luo, K.; Levine, S. Learning robust rewards with adversarial inverse reinforcement learning. arXiv: Learning 2017, arXiv:1710.11248. [Google Scholar]

- Ho, J.; Ermon, S. Generative adversarial imitation learning. In Proceedings of the Advances in Neural Information Processing Systems, Barcelona, Spain, 5–10 December 2016; pp. 4565–4573. [Google Scholar]

- Fu, J.; Singh, A.; Ghosh, D.; Yang, L.; Levine, S. Variational inverse control with events: A general framework for data-driven reward definition. In Proceedings of the Advances in Neural Information Processing Systems, Montréal, QC, Canada, 3–8 December 2018; pp. 8538–8547. [Google Scholar]

- Zhou, W.; Li, W. Safety-aware apprenticeship learning. In Proceedings of the International Conference on Computer Aided Verification, Oxford, UK, 14–17 July 2018; pp. 662–680. [Google Scholar]

- Abbeel, P.; Coates, A.; Ng, A.Y. Autonomous helicopter aerobatics through apprenticeship learning. Int. J. Robot. Res. 2010, 29, 1608–1639. [Google Scholar] [CrossRef]

- Singh, A.; Yang, L.; Finn, C.; Levine, S. End-to-end robotic reinforcement learning without reward engineering. In Proceedings of the Robotics Science and Systems, Freiburg im Breisgau, Germany, 22–26 June 2019.

- Xie, A.; Singh, A.; Levine, S.; Finn, C. Few-shot goal inference for visuomotor learning and planning. arXiv 2018, arXiv:1810.00482.

- Vecerik, M.; Sushkov, O.; Barker, D.; Rothorl, T.; Hester, T.; Scholz, J. A practical approach to insertion with variable socket position using deep reinforcement learning. In Proceedings of the International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; pp. 754–760.

- Zou, H.; Ren, T.; Yan, D.; Su, H.; Zhu, J. Reward shaping via meta-learning. arXiv 2019, arXiv:1901.09330.

- Yang, Y.; Caluwaerts, K.; Iscen, A.; Tan, J.; Finn, C. NoRML: No-reward meta learning. In Proceedings of the 18th International Conference on Autonomous Agents and MultiAgent Systems, Montreal, QC, Canada, 13–17 May 2019; pp. 323–331.

- Sung, F.; Yang, Y.; Zhang, L.; Xiang, T.; Torr, P.H.; Hospedales, T.M. Learning to compare: Relation network for few-shot learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 1199–1208.

- Sun, Q.; Liu, Y.; Chua, T.S.; Schiele, B. Meta-transfer learning for few-shot learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 403–412.

- Sun, X.; Xv, H.; Dong, J.; Zhou, H.; Chen, C.; Li, Q. Few-shot Learning for Domain-specific Fine-grained Image Classification. IEEE Trans. Ind. Electron. 2020, 99, 1–1.

- Liu, Y.; Lee, J.; Park, M.; Kim, S.; Yang, E.; Hwang, S.J.; Yang, Y. Learning to propagate labels: Transductive propagation network for few-shot learning. In Proceedings of the International Conference on Learning Representations, New Orleans, LA, USA, 6–9 May 2019.

- Bertinetto, L.; Henriques, J.F.; Valmadre, J.; Torr, P.H.; Vedaldi, A. Learning feed-forward one-shot learners. In Proceedings of the Advances in Neural Information Processing Systems, Barcelona, Spain, 5–10 December 2016; pp. 523–531.

- Vinyals, O.; Blundell, C.; Lillicrap, T.; Kavukcuoglu, K.; Wierstra, D. Matching networks for one shot learning. In Proceedings of the Advances in Neural Information Processing Systems, Barcelona, Spain, 5–10 December 2016.

- Hoy, M.; Matveev, A.S.; Savkin, A.V. Algorithms for collision-free navigation of mobile robots in complex cluttered environments: A survey. Robotica 2015, 33, 463–497.

- Dorigo, M.; Birattari, M.; Stutzle, T. Ant colony optimization: Artificial ants as a computational intelligence technique. IEEE Comput. Intell. Mag. 2006, 1, 28–39.

- Wodzinski, M.; Krzyzanowska, A. Sequential Classification of Palm Gestures Based on A* Algorithm and MLP Neural Network for Quadrocopter Control. Metrol. Meas. Syst. 2017, 24, 265–276.

- Mayne, D.Q.; Rawlings, J.B.; Rao, C.V.; Scokaert, P.O. Constrained model predictive control: Stability and optimality. Automatica 2000, 36, 789–814.

- Shi, E.; Cai, T.; He, C.; Guo, J. Study of the New Method for Improving Artifical Potential Field in Mobile Robot Obstacle Avoidance. In Proceedings of the International Conference on Automation and Logistics, Jinan, China, 18–21 August 2007.

- Fox, D.; Burgard, W.; Thrun, S. The dynamic window approach to collision avoidance. IEEE Robot. Autom. Mag. 1997, 4, 23–33.

- Patle, B.K.; Pandey, A.; Parhi, D.R.K.; Jagadeesh, A. A review: On path planning strategies for navigation of mobile robot. Def. Technol. 2019, 15, 582–606.

- Roberge, V.; Tarbouchi, M.; Labonté, G. Comparison of parallel genetic algorithm and particle swarm optimization for real-time UAV path planning. IEEE Trans. Ind. Inform. 2012, 9, 132–141.

- Zoumponos, G.T.; Aspragathos, N.A. Fuzzy logic path planning for the robotic placement of fabrics on a work table. Robot. Comput. Integr. Manuf. 2008, 24, 174–186.

- Wu, J.; He, H.; Peng, J.; Li, Y.; Li, Z. Continuous reinforcement learning of energy management with deep Q network for a power split hybrid electric bus. Appl. Energy 2018, 222, 799–811.

- Li, S.; Wu, Y.; Cui, X.; Dong, H.; Fang, F.; Russell, S. Robust Multi-Agent Reinforcement Learning via Minimax Deep Deterministic Policy Gradient. In Proceedings of the National Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019.

- Wang, X.; Zhuang, Z.; Zou, L.; Zhang, W. An accelerated asynchronous advantage actor-critic algorithm applied in papermaking. In Proceedings of the Chinese Control Conference, Guangzhou, China, 27–30 July 2019.

- Schulman, J.; Wolski, F.; Dhariwal, P.; Radford, A.; Klimov, O. Proximal policy optimization algorithms. arXiv 2017, arXiv:1707.06347.

- Long, P.; Fan, T.; Liao, X.; Liu, W.; Zhang, H.; Pan, J. Towards optimally decentralized multi-robot collision avoidance via deep reinforcement learning. In Proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA), Brisbane, Australia, 21–25 May 2018.

- Ma, L.; Chen, J.; Liu, Y. Using RGB Image as Visual Input for Mapless Robot Navigation. arXiv 2019, arXiv:1903.09927.

- Mirowski, P.; Pascanu, R.; Viola, F.; Soyer, H.; Ballard, A.J.; Banino, A.; Denil, M.; Goroshin, R.; Sifre, L.; Kavukcuoglu, K.; et al. Learning to Navigate in Complex Environments. arXiv 2016, arXiv:1611.03673.

- Tai, L.; Paolo, G.; Liu, M. Virtual-to-real deep reinforcement learning: Continuous control of mobile robots for mapless navigation. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Vancouver, BC, Canada, 24–28 September 2017; pp. 31–36.

- Palan, M.; Landolfi, N.C.; Shevchuk, G.; Sadigh, D. Learning Reward Functions by Integrating Human Demonstrations and Preferences. arXiv 2019, arXiv:1906.08928.

- Duan, Y.; Schulman, J.; Chen, X.; Bartlett, P.L.; Sutskever, I.; Abbeel, P. RL²: Fast reinforcement learning via slow reinforcement learning. arXiv 2016, arXiv:1611.02779.

- Finn, C.; Abbeel, P.; Levine, S. Model-agnostic meta-learning for fast adaptation of deep networks. In Proceedings of the 34th International Conference on Machine Learning, Sydney, Australia, 6–11 August 2017; pp. 1126–1135.

- Sun, L.; Peng, C.; Zhan, W.; Tomizuka, M. A fast integrated planning and control framework for autonomous driving via imitation learning. In Proceedings of the Dynamic Systems and Control Conference, American Society of Mechanical Engineers, Atlanta, GA, USA, 30 September–3 October 2018; Volume 51913, p. V003T37A012.

- Cheng, C.; Yan, X.; Wagener, N.; Boots, B. Fast policy learning through imitation and reinforcement. arXiv 2018, arXiv:1805.10413.

- Ghasemipour, S.K.S.; Gu, S.S.; Zemel, R. SMILe: Scalable Meta Inverse Reinforcement Learning through Context-Conditional Policies. In Proceedings of the Advances in Neural Information Processing Systems, Vancouver, BC, Canada, 8–14 December 2019; pp. 7879–7889.

- Ng, A.Y.; Harada, D.; Russell, S. Policy invariance under reward transformations: Theory and application to reward shaping. In Proceedings of the International Conference on Machine Learning, Bled, Slovenia, 27–30 June 1999; pp. 278–287.

- Devlin, S.; Kudenko, D. Dynamic potential-based reward shaping. In Proceedings of the 11th International Conference on Autonomous Agents and Multiagent Systems, Valencia, Spain, 4–8 June 2012; pp. 433–440.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).