A System for the Detection of Persons in Intelligent Buildings Using Camera Systems—A Comparative Study

Abstract

1. Introduction

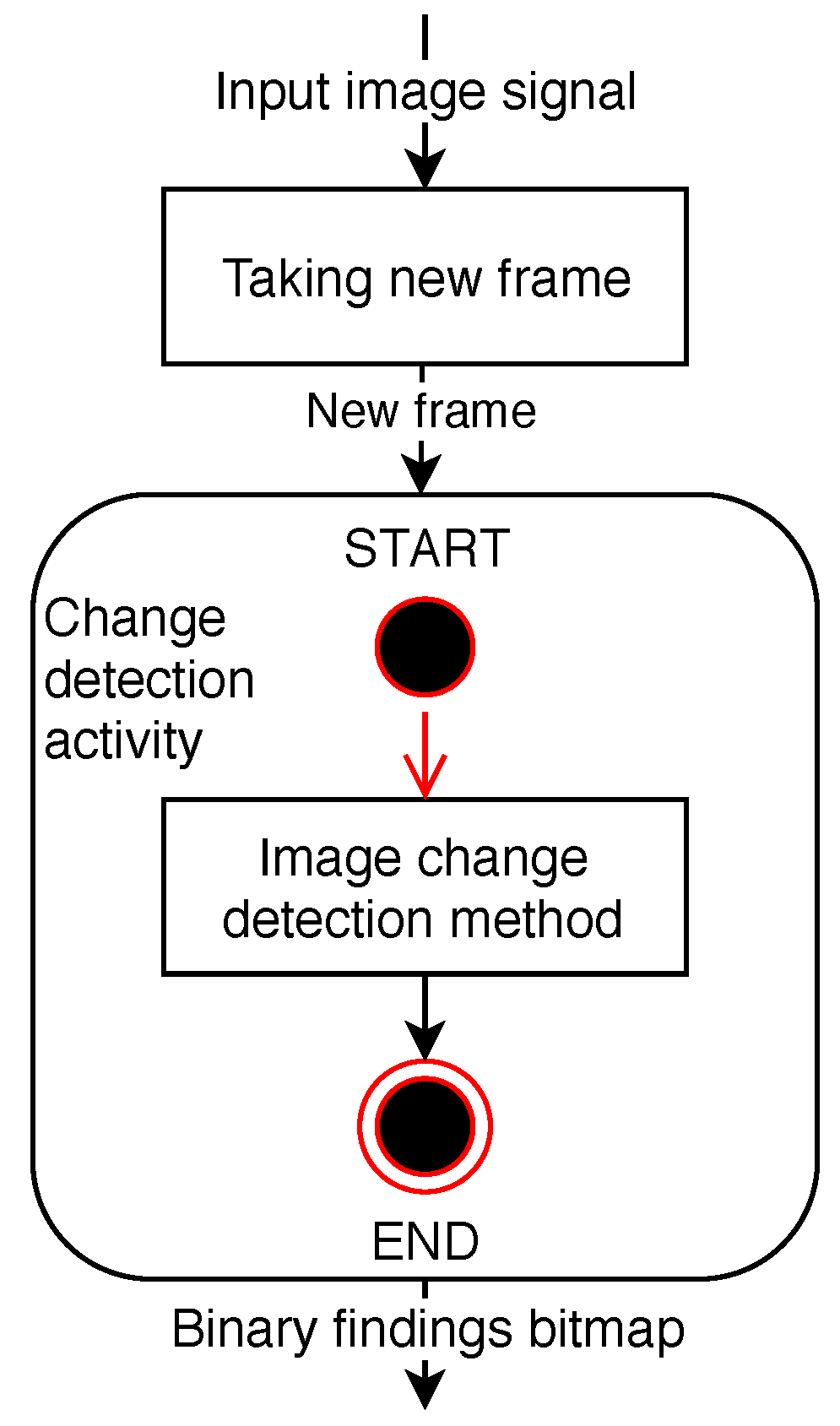

2. Methods

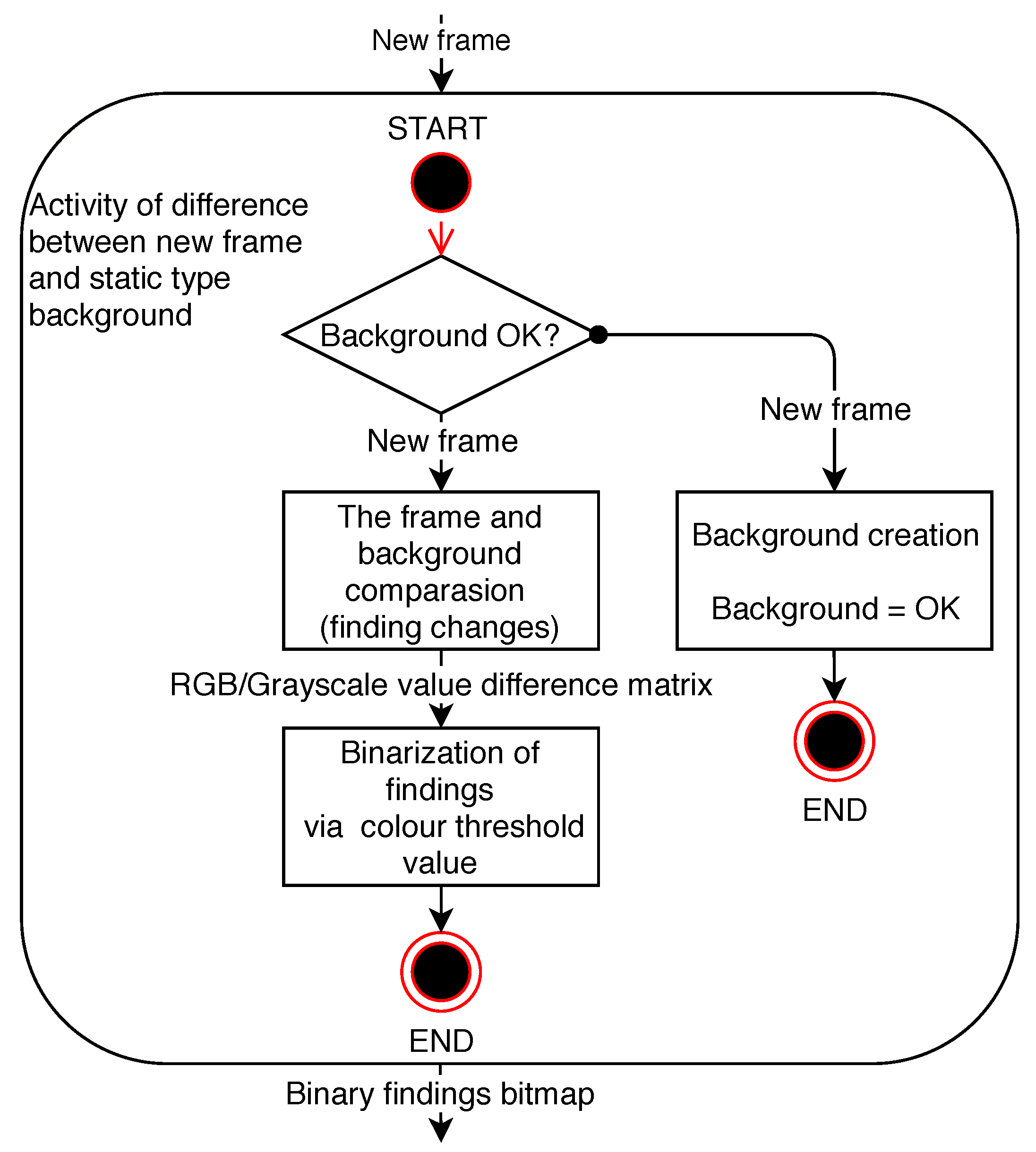

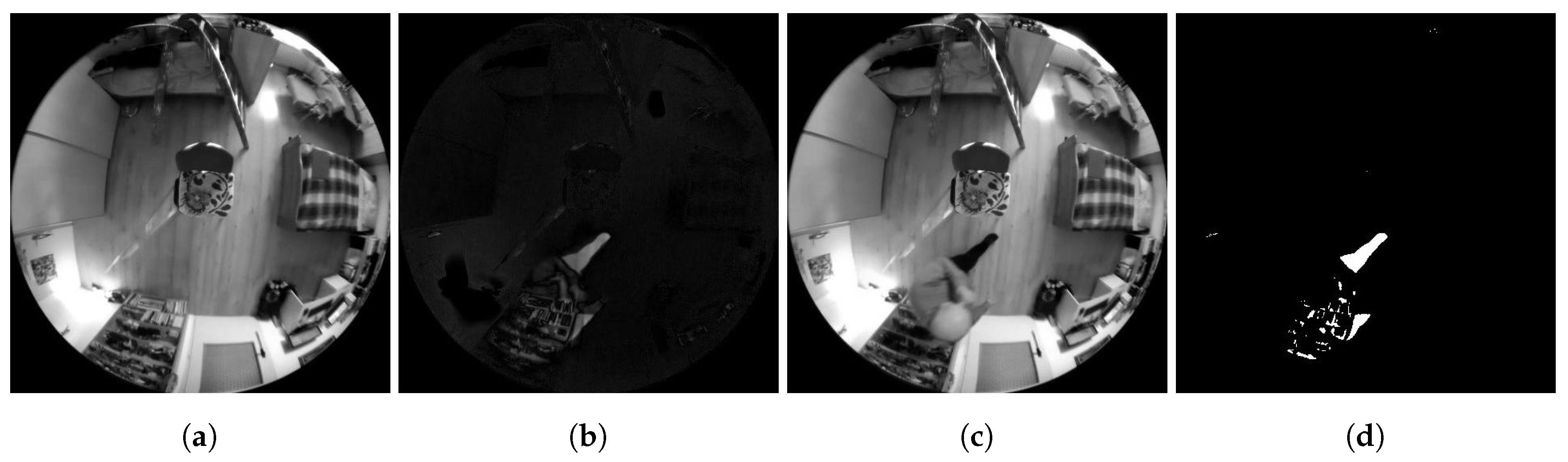

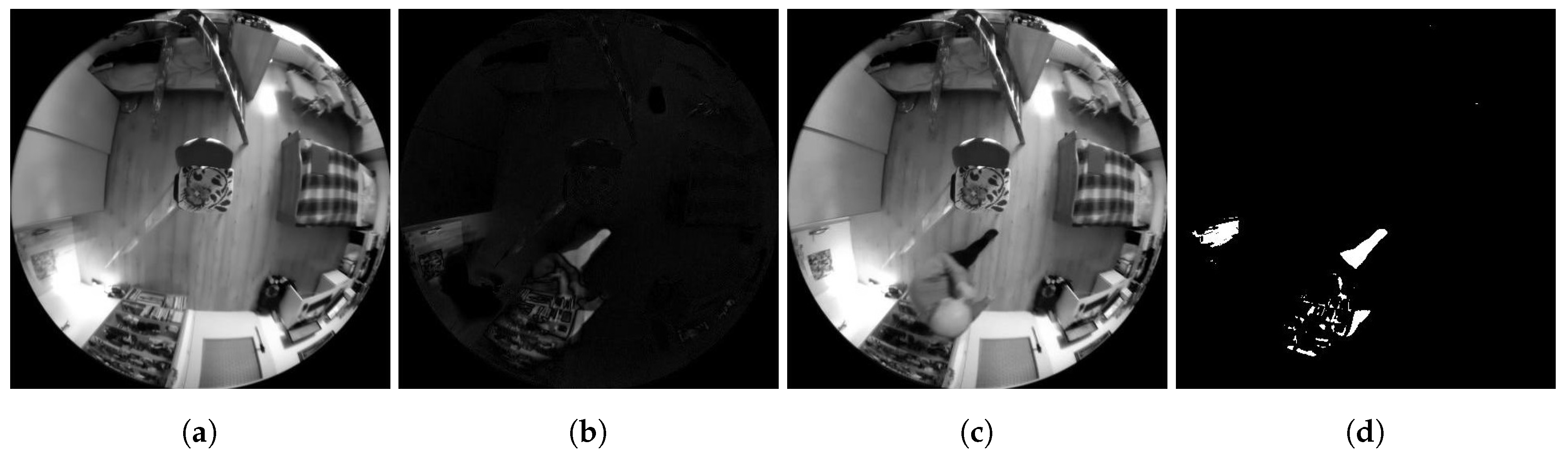

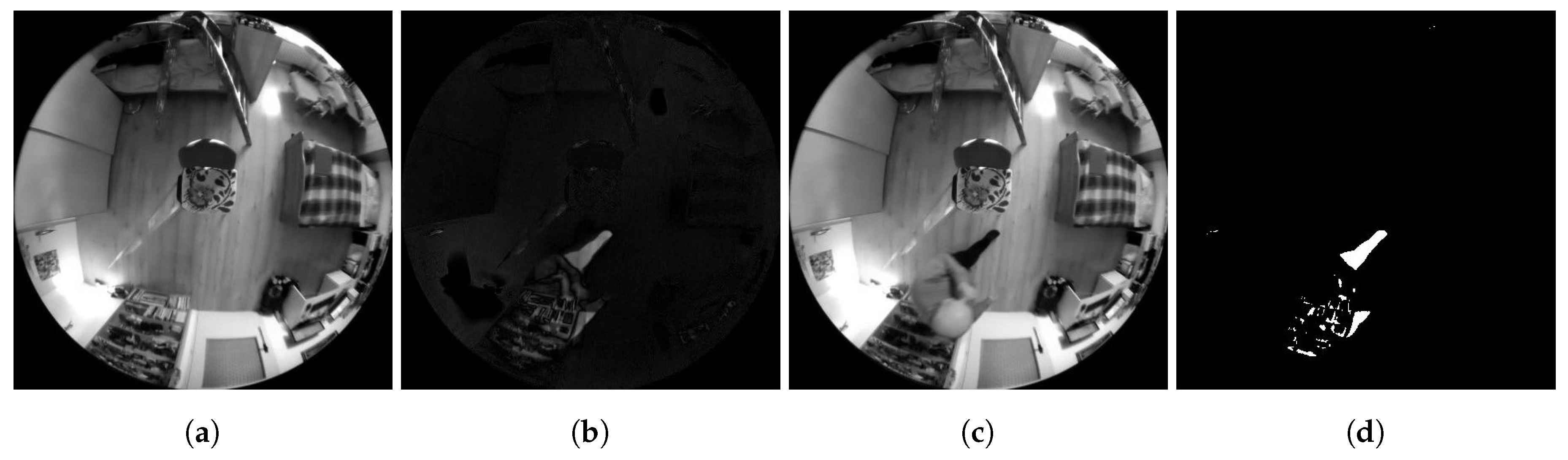

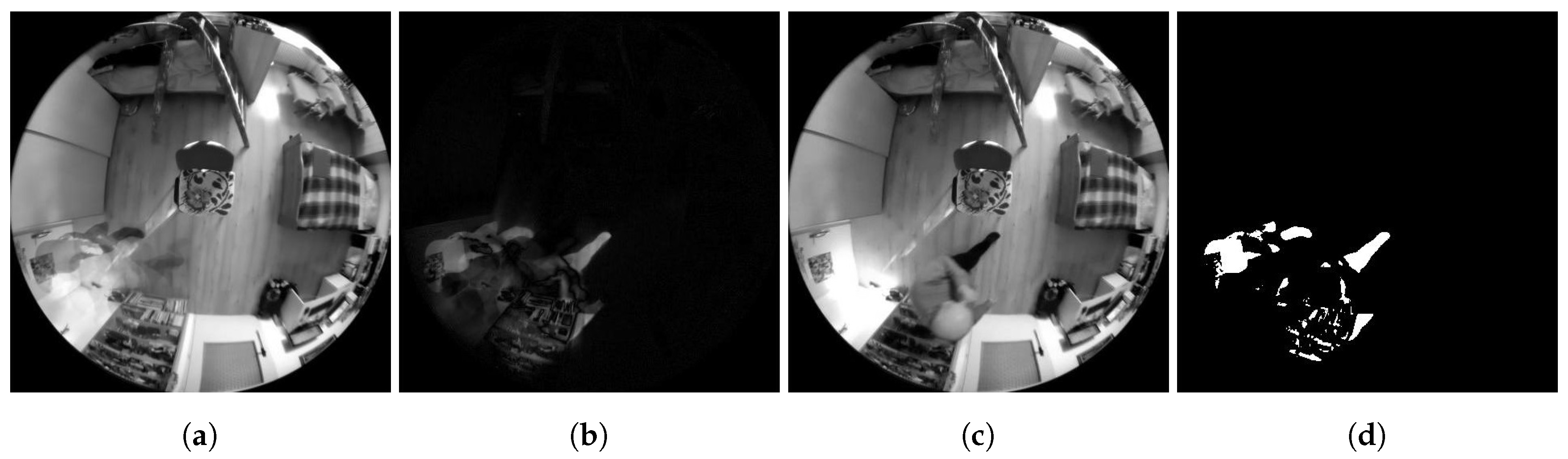

2.1. The Method of Differencing the Current Frame against the Static Background

- A single unedited reference frame (hereinafter referred to as Method 1.1).

- A weighted average of the first n-frames (hereinafter referred to as Method 1.2).

- A background map supplemented by invariable regions of two consecutive frames made up of n-frames (hereinafter referred to as Method 1.3).
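The three static-background variants differ only in how the background frame is obtained. The comparison step itself can be sketched as a per-pixel absolute difference followed by a threshold. The following is a minimal plain-Python sketch. It assumes grayscale frames stored as nested lists and a fixed, hypothetical threshold, because the section does not state the colour space or the threshold actually used. For Method 1.1, the background is simply one unedited reference frame.

```python
from typing import List

Frame = List[List[float]]  # grayscale frame: rows of pixel intensities
Mask = List[List[int]]     # binary mask: 1 = changed pixel (candidate object), 0 = background


def difference_mask(frame: Frame, background: Frame, threshold: float) -> Mask:
    """Mark every pixel whose absolute difference from the background exceeds the threshold."""
    return [
        [1 if abs(p - b) > threshold else 0 for p, b in zip(frame_row, bg_row)]
        for frame_row, bg_row in zip(frame, background)
    ]


# Method 1.1: the background is one unedited reference frame, assumed here to be
# a frame of the empty room captured before anyone enters.
reference = [[10, 10, 10], [10, 10, 10]]
current = [[10, 90, 10], [12, 95, 10]]
mask = difference_mask(current, reference, threshold=25)  # threshold value is hypothetical
print(mask)  # [[0, 1, 0], [0, 1, 0]]
```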

2.1.1. A Single Unedited Reference Frame (Method 1.1)

2.1.2. A Weighted Average of the First n-Frames (Method 1.2)
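The following sketch shows how the Method 1.2 background could be formed. The weighting scheme is not given here, so `weights` is a hypothetical parameter supplied by the caller, with uniform weights as the default. The resulting background would then be compared with each new frame in the same way as the single reference frame of Method 1.1.

```python
from typing import List, Optional, Sequence

Frame = List[List[float]]


def weighted_average_background(frames: Sequence[Frame], n: int,
                                weights: Optional[Sequence[float]] = None) -> Frame:
    """Build a static background as the weighted average of the first n frames.

    Without explicit weights, all n frames contribute equally (an assumption).
    """
    first = list(frames[:n])
    if not first:
        raise ValueError("at least one frame is required")
    w = list(weights) if weights is not None else [1.0] * len(first)
    if len(w) != len(first):
        raise ValueError("one weight per frame is required")
    total = sum(w)
    rows, cols = len(first[0]), len(first[0][0])
    return [
        [sum(wk * f[r][c] for wk, f in zip(w, first)) / total for c in range(cols)]
        for r in range(rows)
    ]


# The fourth frame (someone entering) lies outside the first n frames and is ignored.
frames = [[[10, 20]], [[14, 20]], [[12, 26]], [[200, 200]]]
print(weighted_average_background(frames, n=3))                 # [[12.0, 22.0]]
print(weighted_average_background(frames, n=2, weights=[3, 1]))  # [[11.0, 20.0]]
```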

2.1.3. A Background Map Supplemented by Invariable Regions of Two Consecutive Frames Made Up of n-Frames (Method 1.3)
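The description of Method 1.3 leaves the update rule open. The sketch below follows one hypothetical reading. Pixels that stay nearly unchanged between two consecutive frames are treated as background and written into the background map while the first n frames are processed. The stability threshold and the use of `None` for pixels that were never stable are assumptions.

```python
from typing import List, Optional, Sequence

Frame = List[List[float]]
BackgroundMap = List[List[Optional[float]]]


def stable_region_background(frames: Sequence[Frame], n: int,
                             threshold: float) -> BackgroundMap:
    """Fill a background map from pixels that are unchanged between consecutive frames.

    Hypothetical reading of Method 1.3: pixels never seen static stay None.
    """
    first = list(frames[:n])
    if len(first) < 2:
        raise ValueError("at least two frames are needed")
    rows, cols = len(first[0]), len(first[0][0])
    background: BackgroundMap = [[None] * cols for _ in range(rows)]
    for prev, curr in zip(first, first[1:]):
        for r in range(rows):
            for c in range(cols):
                # a pixel that barely changes between two consecutive frames is treated as static
                if abs(curr[r][c] - prev[r][c]) <= threshold:
                    background[r][c] = curr[r][c]
    return background


# Column 1 is crossed by a moving object in the first three frames, then stops changing.
frames = [[[10, 50]], [[11, 90]], [[10, 130]], [[10, 130]]]
print(stable_region_background(frames, n=3, threshold=5))  # [[10, None]]
print(stable_region_background(frames, n=4, threshold=5))  # [[10, 130]]
```

A pixel crossed by a moving person does not qualify while it keeps changing. The map is therefore filled only from regions that are static in at least one pair of consecutive frames.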

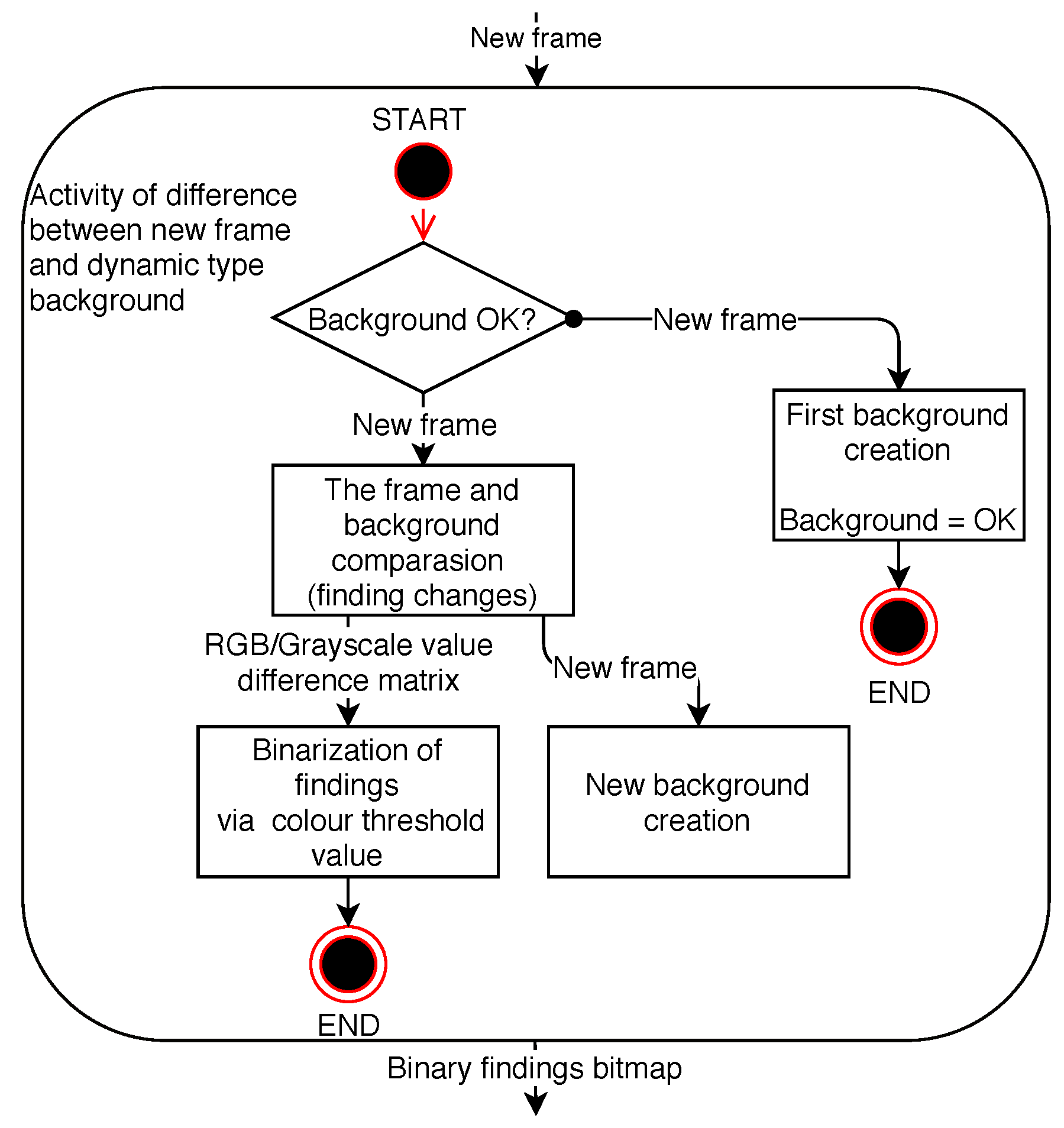

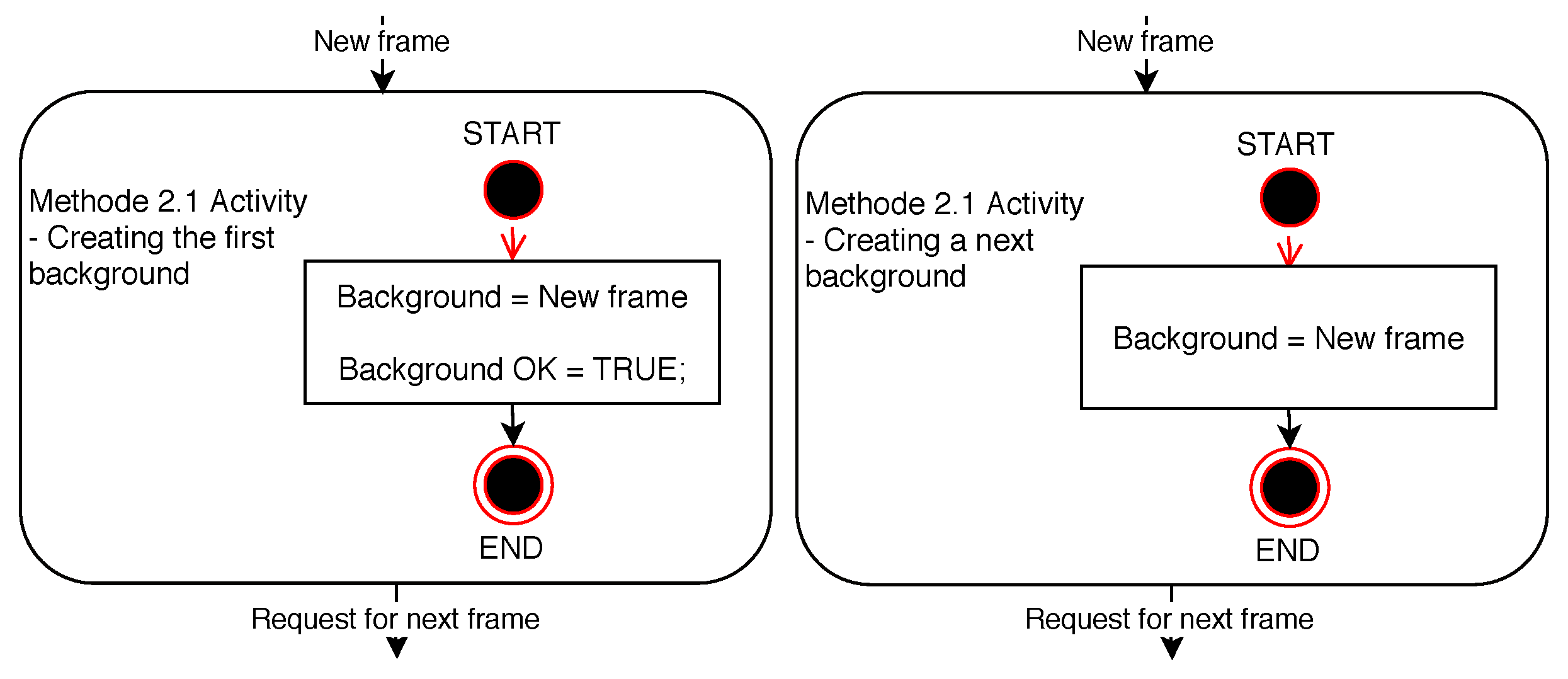

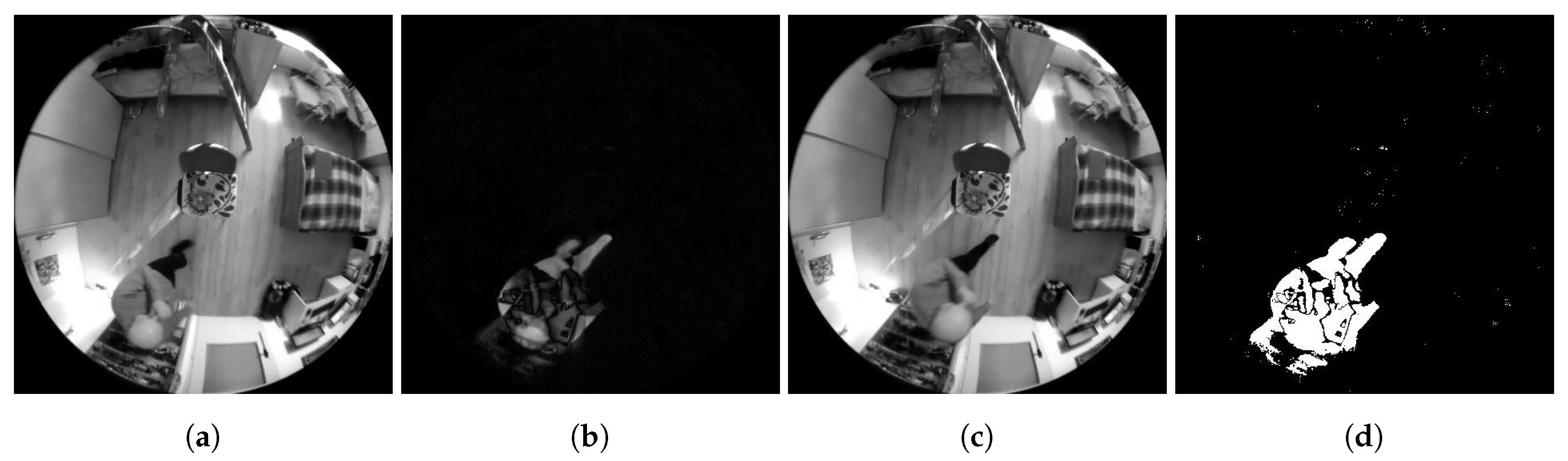

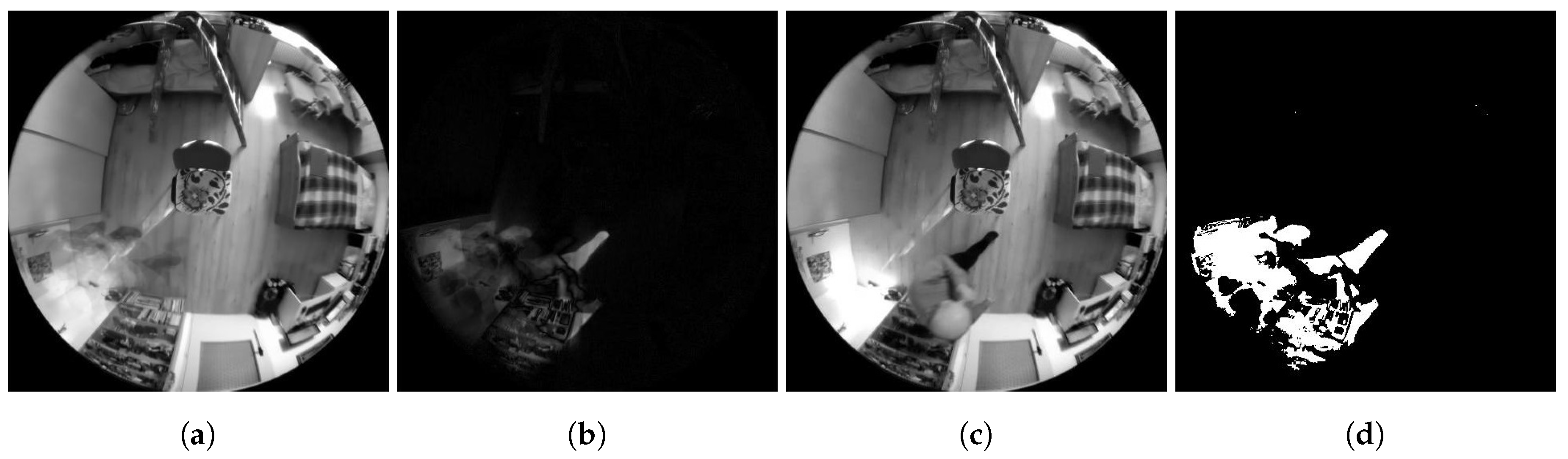

2.2. The Method of Differencing the Current Frame against the Dynamic Background

- A frame previous to the current frame (hereinafter referred to as Method 2.1).

- Average of all previous frames (hereinafter referred to as Method 2.2).

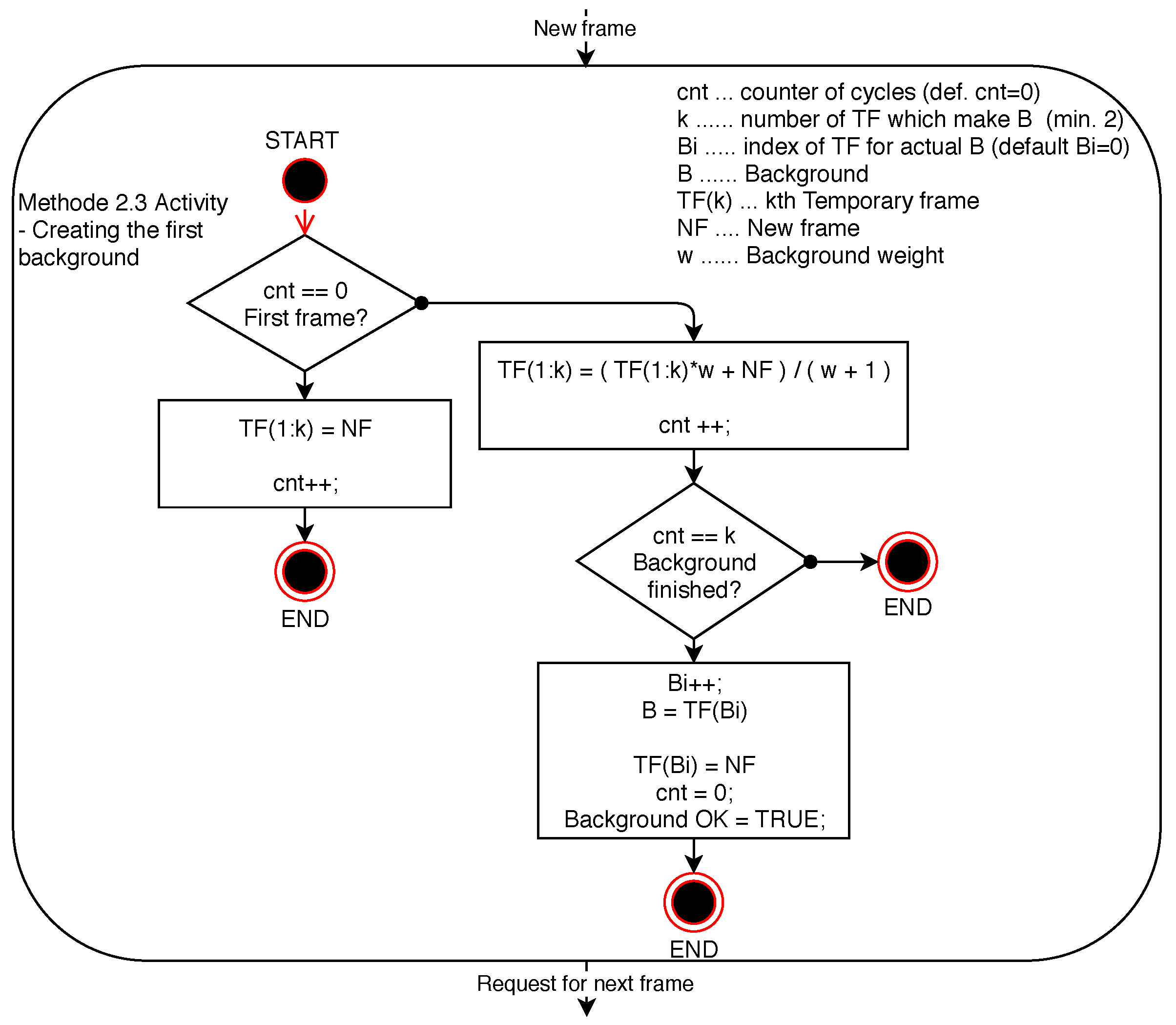

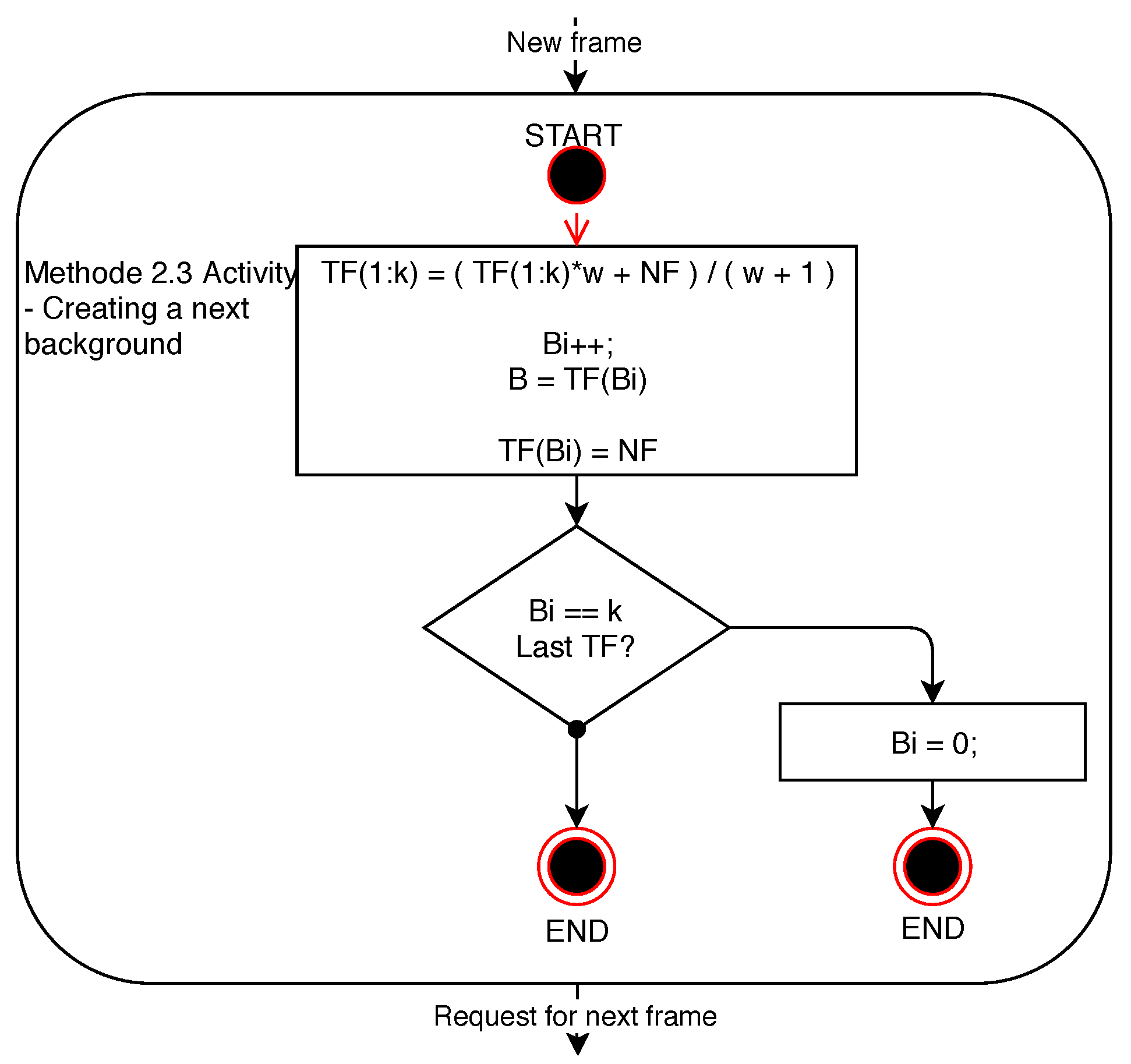

- Average of n-previous frames (hereinafter referred to as Method 2.3).

2.2.1. A Frame Previous to the Current Frame (Method 2.1)

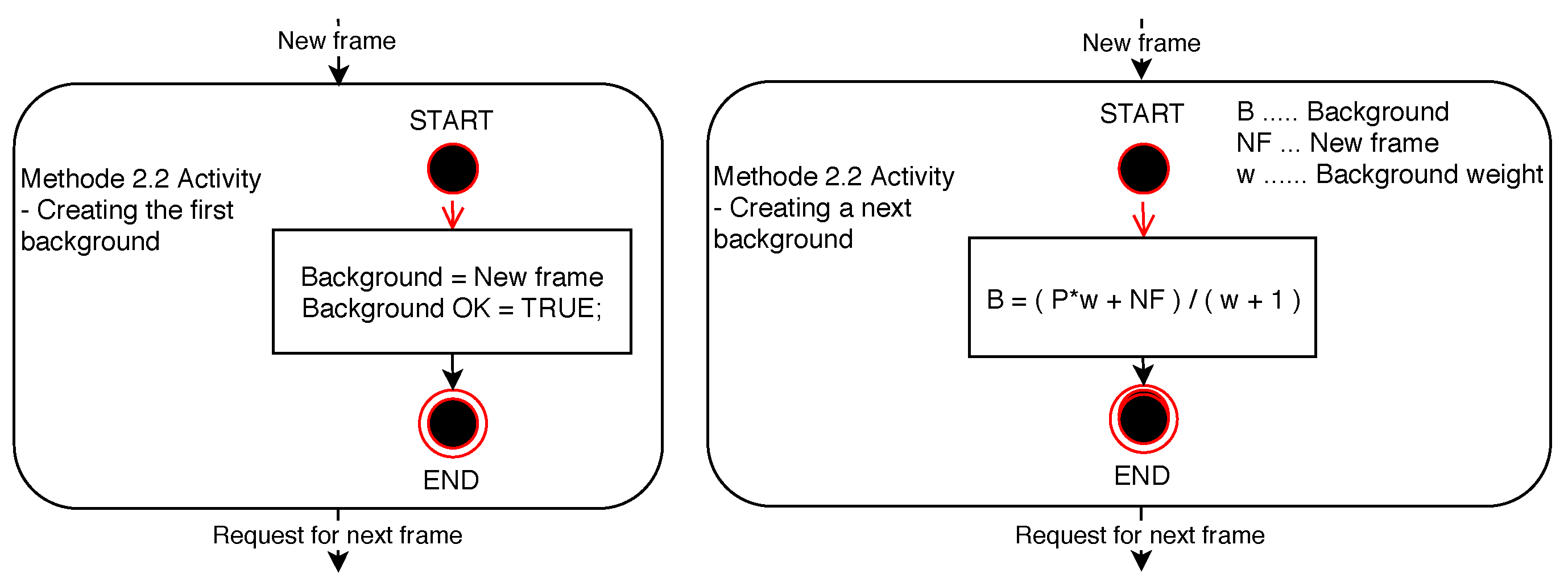
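Method 2.1 uses the immediately preceding frame as the background. The following minimal sketch assumes grayscale frames stored as nested lists and a hypothetical threshold.

```python
from typing import Iterable, Iterator, List, Optional

Frame = List[List[float]]
Mask = List[List[int]]


def previous_frame_masks(frames: Iterable[Frame], threshold: float) -> Iterator[Mask]:
    """Method 2.1 sketch: difference each frame against the frame right before it."""
    previous: Optional[Frame] = None
    for frame in frames:
        if previous is not None:
            yield [
                [1 if abs(p - q) > threshold else 0 for p, q in zip(row, prev_row)]
                for row, prev_row in zip(frame, previous)
            ]
        previous = frame


video = [[[0, 0, 0]], [[0, 80, 0]], [[0, 80, 0]], [[0, 0, 80]]]
print(list(previous_frame_masks(video, threshold=30)))
# [[[0, 1, 0]], [[0, 0, 0]], [[0, 1, 1]]]
```

The all-zero second mask shows the usual behaviour of previous-frame differencing: an object that stops moving no longer produces changed pixels.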

2.2.2. Average of All Previous Frames (Method 2.2)
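For Method 2.2, the background is the mean of all frames preceding the current one. Storing every frame is unnecessary, because the mean can be updated incrementally. The following sketch assumes grayscale frames stored as nested lists. The class and method names are illustrative.

```python
from typing import List, Optional

Frame = List[List[float]]
Mask = List[List[int]]


class RunningMeanBackground:
    """Method 2.2 sketch: the background is the mean of all frames seen so far.

    Incremental update m_k = m_{k-1} + (x_k - m_{k-1}) / k, so no history is stored.
    """

    def __init__(self) -> None:
        self.mean: Optional[Frame] = None
        self.count = 0

    def update(self, frame: Frame) -> None:
        self.count += 1
        if self.mean is None:
            self.mean = [[float(p) for p in row] for row in frame]
            return
        k = self.count
        self.mean = [
            [m + (p - m) / k for m, p in zip(mean_row, row)]
            for mean_row, row in zip(self.mean, frame)
        ]

    def difference(self, frame: Frame, threshold: float) -> Mask:
        """Compare a frame with the mean of the previous frames (call before update)."""
        if self.mean is None:
            raise ValueError("no previous frames yet")
        return [
            [1 if abs(p - m) > threshold else 0 for p, m in zip(row, mean_row)]
            for row, mean_row in zip(frame, self.mean)
        ]


bg = RunningMeanBackground()
for frame in ([[10, 10]], [[20, 10]], [[30, 10]]):
    bg.update(frame)
print(bg.mean)                                  # [[20.0, 10.0]]
print(bg.difference([[20, 60]], threshold=25))  # [[0, 1]]
```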

2.2.3. Average of n-Previous Frames (Method 2.3)

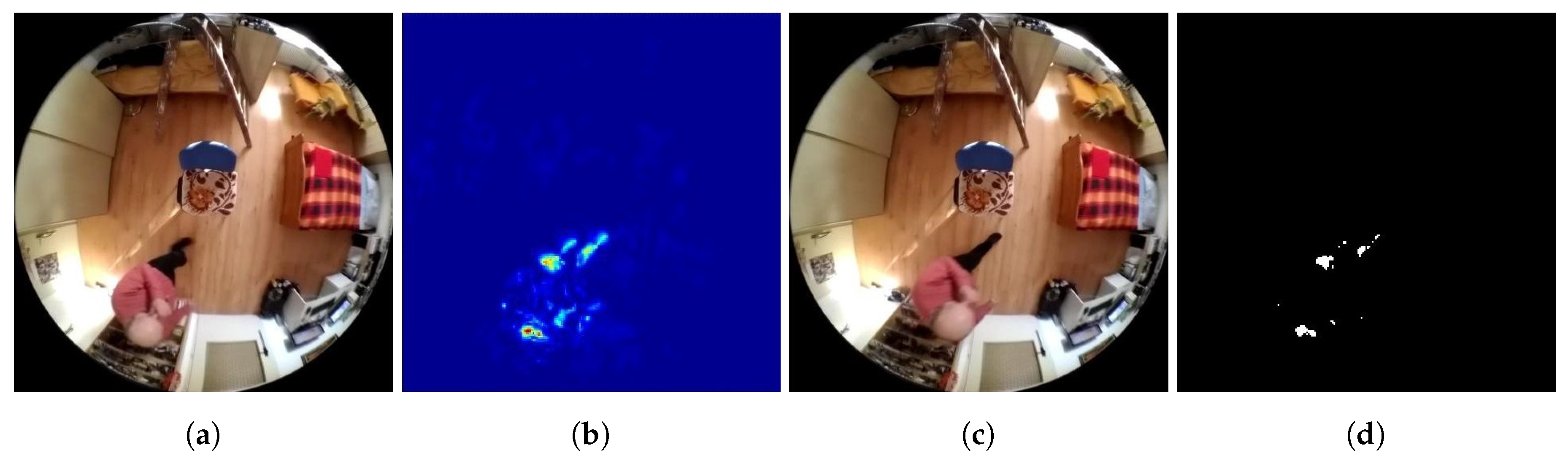
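Method 2.3 restricts the average to the n most recent previous frames. The following sliding-window sketch uses `collections.deque` with `maxlen=n`, so the oldest frame is discarded automatically. The value of n and the class name are illustrative.

```python
from collections import deque
from typing import Deque, List

Frame = List[List[float]]


class SlidingWindowBackground:
    """Method 2.3 sketch: the background is the mean of the n most recent previous frames."""

    def __init__(self, n: int) -> None:
        if n < 1:
            raise ValueError("n must be at least 1")
        # deque(maxlen=n) drops the oldest frame automatically when a new one is appended
        self.window: Deque[Frame] = deque(maxlen=n)

    def push(self, frame: Frame) -> None:
        """Add a frame after it has been compared with the current background."""
        self.window.append(frame)

    def background(self) -> Frame:
        if not self.window:
            raise ValueError("no previous frames yet")
        k = len(self.window)
        rows, cols = len(self.window[0]), len(self.window[0][0])
        return [
            [sum(f[r][c] for f in self.window) / k for c in range(cols)]
            for r in range(rows)
        ]


bg = SlidingWindowBackground(n=2)
for frame in ([[10]], [[20]], [[40]]):
    bg.push(frame)
print(bg.background())  # [[30.0]]  (frame [[10]] has already left the window)
```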

2.3. The Method of Optical Flow (Method 3)

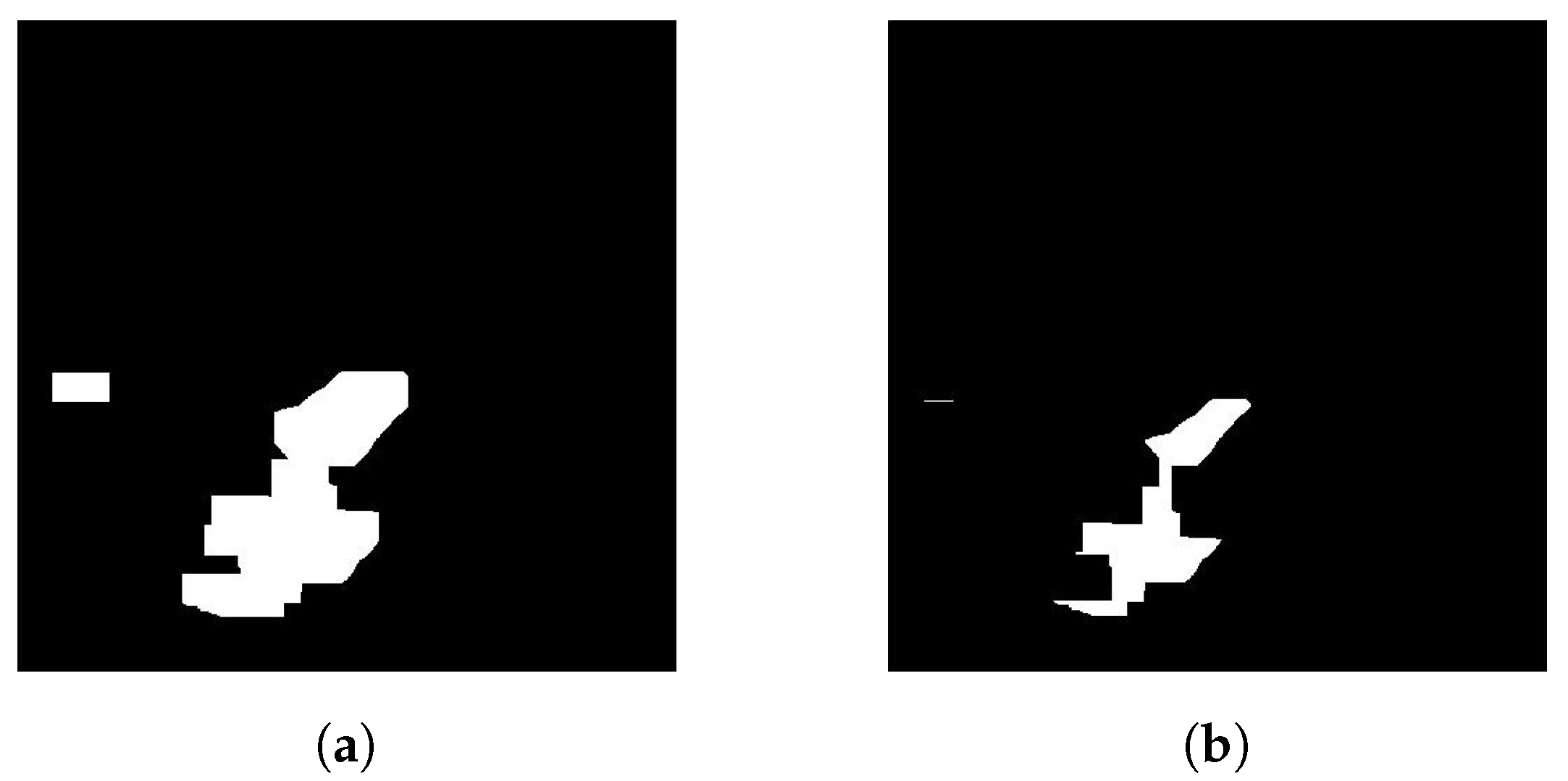
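The section does not state which optical-flow formulation Method 3 uses. As an illustration only, the classical Horn-Schunck scheme is sketched below in plain Python. Spatial and temporal gradients are estimated with finite differences, and the flow (u, v) is refined by Jacobi iterations that balance brightness constancy against smoothness, weighted by `alpha`. Several simplifying assumptions apply: the smoothness average uses the four direct neighbours instead of the usual weighted 3×3 kernel, the borders are clamped, and the parameter values are illustrative.

```python
from typing import List, Tuple

Frame = List[List[float]]


def _at(img: Frame, r: int, c: int) -> float:
    """Pixel lookup with clamped (replicated) borders."""
    r = min(max(r, 0), len(img) - 1)
    c = min(max(c, 0), len(img[0]) - 1)
    return img[r][c]


def _neighbour_mean(field: Frame, r: int, c: int) -> float:
    """Mean of the four direct neighbours (simplified smoothness average)."""
    return (_at(field, r - 1, c) + _at(field, r + 1, c)
            + _at(field, r, c - 1) + _at(field, r, c + 1)) / 4.0


def _zeros(rows: int, cols: int) -> Frame:
    return [[0.0] * cols for _ in range(rows)]


def horn_schunck(prev: Frame, curr: Frame, alpha: float = 1.0,
                 iterations: int = 100) -> Tuple[Frame, Frame]:
    """Estimate a dense flow field (u, v) between two grayscale frames; alpha must be > 0."""
    rows, cols = len(prev), len(prev[0])
    ix, iy, it = _zeros(rows, cols), _zeros(rows, cols), _zeros(rows, cols)
    for r in range(rows):
        for c in range(cols):
            # central differences averaged over both frames, plus the temporal difference
            ix[r][c] = sum(_at(f, r, c + 1) - _at(f, r, c - 1) for f in (prev, curr)) / 4.0
            iy[r][c] = sum(_at(f, r + 1, c) - _at(f, r - 1, c) for f in (prev, curr)) / 4.0
            it[r][c] = curr[r][c] - prev[r][c]
    u, v = _zeros(rows, cols), _zeros(rows, cols)
    for _ in range(iterations):
        new_u, new_v = _zeros(rows, cols), _zeros(rows, cols)
        for r in range(rows):
            for c in range(cols):
                u_avg, v_avg = _neighbour_mean(u, r, c), _neighbour_mean(v, r, c)
                gx, gy = ix[r][c], iy[r][c]
                # Jacobi update of the Horn-Schunck equations
                common = (gx * u_avg + gy * v_avg + it[r][c]) / (alpha ** 2 + gx ** 2 + gy ** 2)
                new_u[r][c] = u_avg - gx * common
                new_v[r][c] = v_avg - gy * common
        u, v = new_u, new_v
    return u, v


prev = [[float(c) for c in range(8)] for _ in range(5)]
curr = [[float(c - 1) for c in range(8)] for _ in range(5)]  # the ramp moves one pixel right
u, v = horn_schunck(prev, curr, alpha=0.1, iterations=50)
print(round(u[2][4], 2), v[2][4])  # 1.0 0.0
```

Moving pixels could then be selected by thresholding the flow magnitude sqrt(u² + v²). That threshold would be another free parameter.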

3. Adjustment of the Objects Found

- Erosion filtering of the objects found, to remove findings caused by image noise.

- Dilation of the objects found.

- Erosion of the objects found.
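The erosion, dilation, erosion sequence listed above can be sketched with binary morphology. This sketch assumes a square 3×3 structuring element, because the actual element size is not given here. It also ignores pixels that fall outside the image when evaluating the structuring element.

```python
from typing import List

Mask = List[List[int]]


def _morph(mask: Mask, keep_if_all: bool) -> Mask:
    """3x3 binary erosion (keep_if_all=True) or dilation (keep_if_all=False).

    Neighbours outside the image are ignored rather than padded.
    """
    rows, cols = len(mask), len(mask[0])
    out = [[0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            window = [
                mask[rr][cc]
                for rr in range(r - 1, r + 2)
                for cc in range(c - 1, c + 2)
                if 0 <= rr < rows and 0 <= cc < cols
            ]
            out[r][c] = int(all(window)) if keep_if_all else int(any(window))
    return out


def erode(mask: Mask) -> Mask:
    return _morph(mask, keep_if_all=True)


def dilate(mask: Mask) -> Mask:
    return _morph(mask, keep_if_all=False)


def clean_mask(mask: Mask) -> Mask:
    """Erosion (noise removal), then dilation, then erosion, in the order listed above."""
    return erode(dilate(erode(mask)))


mask = [[0] * 9 for _ in range(9)]
for r in range(2, 7):
    for c in range(2, 7):
        mask[r][c] = 1        # a 5x5 object
mask[0][8] = 1                # an isolated noise pixel
cleaned = clean_mask(mask)
print(sum(map(sum, cleaned)))  # 9 (a 3x3 core remains, the noise pixel is gone)
```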

3.1. Identification of Object Findings
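In the table listing the lifespan index, the pixel count and the delimiting coordinates of each person found, every finding is described by a pixel count and four coordinates. The sketch below extracts such findings from a cleaned binary mask with breadth-first connected-component labeling. It assumes 8-connectivity, which the section does not specify. The (left, right, top, bottom) key order is chosen for illustration.

```python
from collections import deque
from typing import Dict, List

Mask = List[List[int]]


def find_objects(mask: Mask) -> List[Dict[str, int]]:
    """Label 8-connected foreground regions; report pixel count and bounding box."""
    rows, cols = len(mask), len(mask[0])
    seen = [[False] * cols for _ in range(rows)]
    objects = []
    for r0 in range(rows):
        for c0 in range(cols):
            if not mask[r0][c0] or seen[r0][c0]:
                continue
            queue = deque([(r0, c0)])
            seen[r0][c0] = True
            count, top, bottom, left, right = 0, r0, r0, c0, c0
            while queue:
                r, c = queue.popleft()
                count += 1
                top, bottom = min(top, r), max(bottom, r)
                left, right = min(left, c), max(right, c)
                for dr in (-1, 0, 1):          # visit all 8 neighbours
                    for dc in (-1, 0, 1):
                        rr, cc = r + dr, c + dc
                        if 0 <= rr < rows and 0 <= cc < cols and mask[rr][cc] and not seen[rr][cc]:
                            seen[rr][cc] = True
                            queue.append((rr, cc))
            objects.append({"pixels": count, "left": left, "right": right,
                            "top": top, "bottom": bottom})
    return objects


print(find_objects([[1, 1, 0, 0],
                    [0, 1, 0, 1],
                    [0, 0, 0, 1]]))
# [{'pixels': 3, 'left': 0, 'right': 1, 'top': 0, 'bottom': 1},
#  {'pixels': 2, 'left': 3, 'right': 3, 'top': 1, 'bottom': 2}]
```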

3.2. Filtration of Error Findings from Findings of Persons

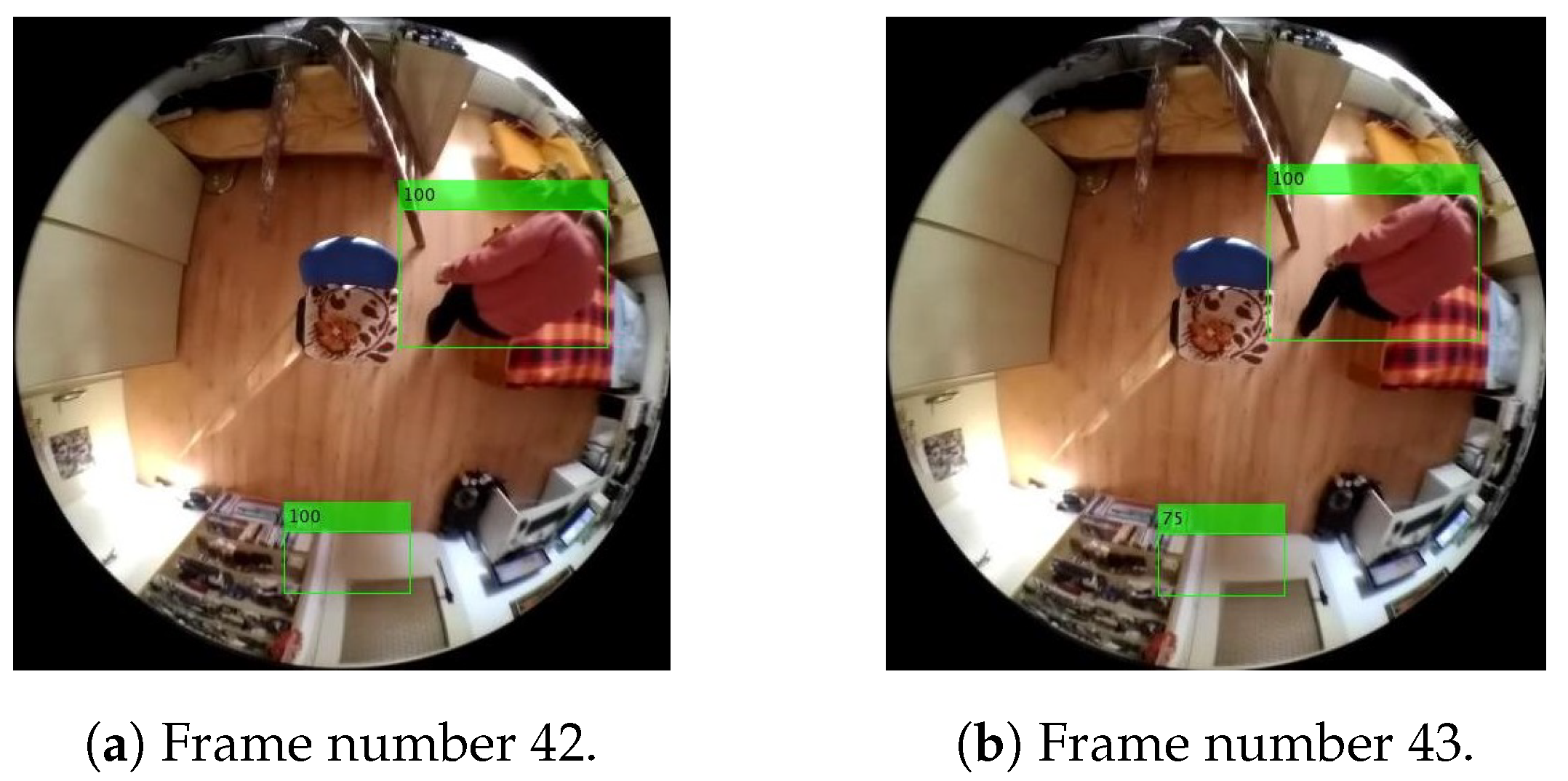
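This section does not spell out the criteria used to separate erroneous findings from persons. The sketch below shows one hypothetical filter. It rejects findings that have too few pixels, and findings whose own pixels fill their bounding box too sparsely. Both thresholds are assumptions. The finding dictionaries with `pixels`, `left`, `right`, `top` and `bottom` keys are defined here for illustration only.

```python
from typing import Dict, List

Finding = Dict[str, int]  # keys: pixels, left, right, top, bottom (inclusive coordinates)


def filter_person_findings(findings: List[Finding], min_pixels: int,
                           min_fill: float) -> List[Finding]:
    """Hypothetical filter: keep findings that are large enough and fill their box densely enough."""
    kept = []
    for f in findings:
        box_area = (f["right"] - f["left"] + 1) * (f["bottom"] - f["top"] + 1)
        fill = f["pixels"] / box_area  # share of the box covered by the finding's own pixels
        if f["pixels"] >= min_pixels and fill >= min_fill:
            kept.append(f)
    return kept


person = {"pixels": 600, "left": 0, "right": 19, "top": 0, "bottom": 49}
noise = {"pixels": 12, "left": 0, "right": 3, "top": 0, "bottom": 3}
sparse = {"pixels": 600, "left": 0, "right": 99, "top": 0, "bottom": 99}
print(filter_person_findings([person, noise, sparse], min_pixels=500, min_fill=0.3) == [person])  # True
```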

3.3. Cataloguing of Persons Found
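The results compare person findings with lifespans of 1, 12 and 40 frames. The sketch below shows a hypothetical catalogue built around that parameter. Each person entry is kept until it has gone `max_lifespan` consecutive frames without a matching finding. A new finding that overlaps the entry's bounding box refreshes it. Matching by box overlap is an assumption and not necessarily the authors' rule.

```python
from dataclasses import dataclass
from typing import List, Tuple

Box = Tuple[int, int, int, int]  # (left, right, top, bottom), inclusive pixel coordinates


def boxes_overlap(a: Box, b: Box) -> bool:
    """True if two inclusive boxes share at least one pixel."""
    return a[0] <= b[1] and b[0] <= a[1] and a[2] <= b[3] and b[2] <= a[3]


@dataclass
class CataloguedPerson:
    box: Box
    lifespan: int  # remaining frames the entry survives without a matching finding


class PersonCatalogue:
    """Hypothetical catalogue: an entry is dropped after `max_lifespan` consecutive
    frames without an overlapping finding; an overlapping finding refreshes it."""

    def __init__(self, max_lifespan: int) -> None:
        self.max_lifespan = max_lifespan
        self.people: List[CataloguedPerson] = []

    def update(self, findings: List[Box]) -> None:
        unmatched = list(findings)
        for person in self.people:
            match = next((f for f in unmatched if boxes_overlap(person.box, f)), None)
            if match is not None:
                person.box, person.lifespan = match, self.max_lifespan
                unmatched.remove(match)
            else:
                person.lifespan -= 1
        self.people = [p for p in self.people if p.lifespan > 0]
        # findings that match no catalogued person start new entries
        self.people += [CataloguedPerson(f, self.max_lifespan) for f in unmatched]


catalogue = PersonCatalogue(max_lifespan=2)
catalogue.update([(0, 10, 0, 20)])   # person appears
catalogue.update([])                 # missed once: entry kept
catalogue.update([(5, 15, 2, 22)])   # overlapping finding refreshes the entry
print(len(catalogue.people), catalogue.people[0].box)  # 1 (5, 15, 2, 22)
```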

4. Results

4.1. Verification of the Results

- Criterion: average percentage of the custom pixels of person findings within the field marking each person

- Criterion: average lifespan of person findings

- Criterion: average percentage of intersection between the marking area of a person finding and the verification pattern

- Criterion: average location error of the marking of person findings that intersect the verification pattern

- Criterion: success rate of detecting correct person findings relative to all current findings
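Several of the criteria above reduce to simple box arithmetic. The exact formulas are not given in this section, so the sketch below rests on three assumptions. First, markings and verification patterns are axis-aligned boxes given as inclusive (left, right, top, bottom) pixel coordinates. Second, the intersection percentage is normalised by the area of the verification pattern. Third, the location error is the distance between the box centres.

```python
import math
from typing import Tuple

Box = Tuple[int, int, int, int]  # (left, right, top, bottom), inclusive pixel coordinates


def box_area(b: Box) -> int:
    return (b[1] - b[0] + 1) * (b[3] - b[2] + 1)


def intersection_percentage(marking: Box, pattern: Box) -> float:
    """Percentage of the verification pattern covered by the finding's marking."""
    width = min(marking[1], pattern[1]) - max(marking[0], pattern[0]) + 1
    height = min(marking[3], pattern[3]) - max(marking[2], pattern[2]) + 1
    if width <= 0 or height <= 0:
        return 0.0
    return 100.0 * width * height / box_area(pattern)


def centre(b: Box) -> Tuple[float, float]:
    return (b[0] + b[1]) / 2, (b[2] + b[3]) / 2


def location_error(marking: Box, pattern: Box) -> float:
    """Euclidean distance between the centres of the marking and the pattern, in pixels."""
    (mx, my), (px, py) = centre(marking), centre(pattern)
    return math.hypot(mx - px, my - py)


def success_rate(correct_findings: int, all_findings: int) -> float:
    """Share of current findings that are correct person findings."""
    return correct_findings / all_findings if all_findings else 0.0


marking, pattern = (0, 9, 0, 9), (5, 14, 0, 9)
print(intersection_percentage(marking, pattern))  # 50.0
print(location_error(marking, pattern))           # 5.0
print(success_rate(3, 4))                         # 0.75
```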

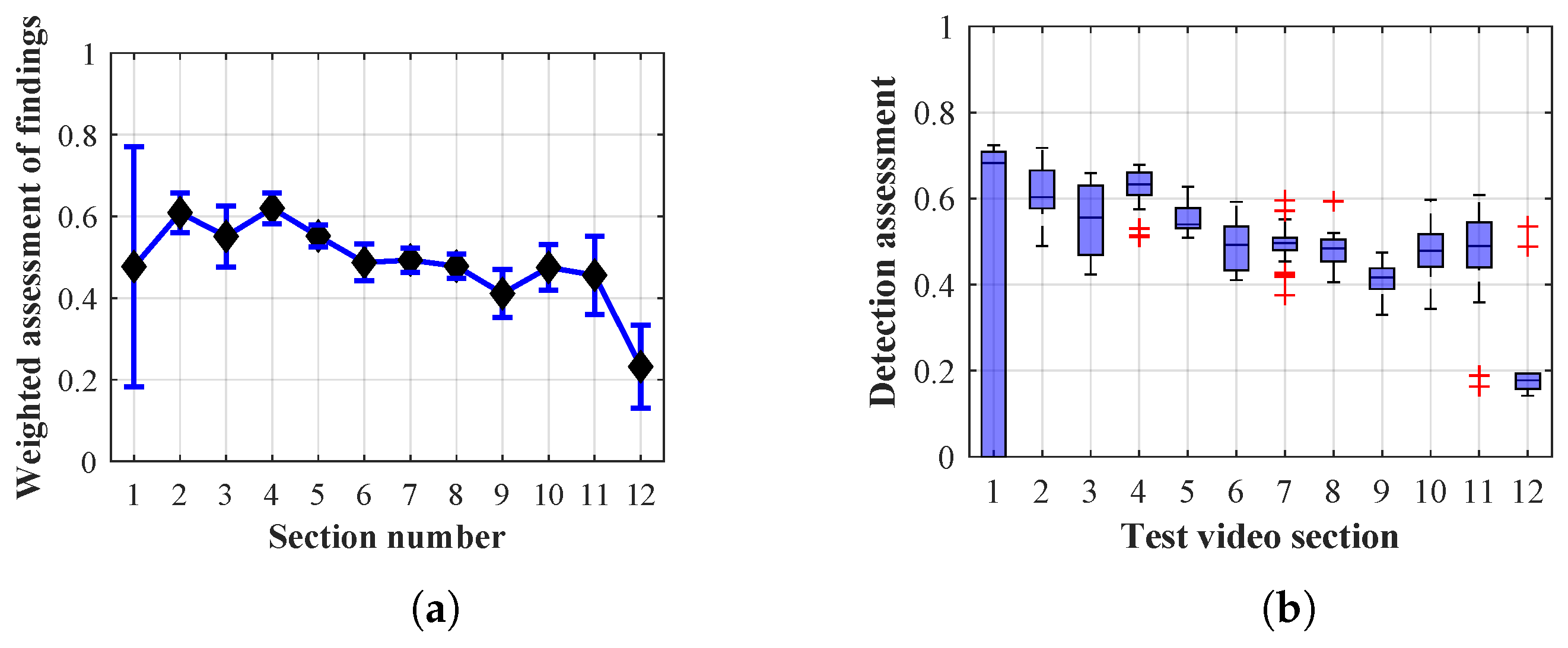

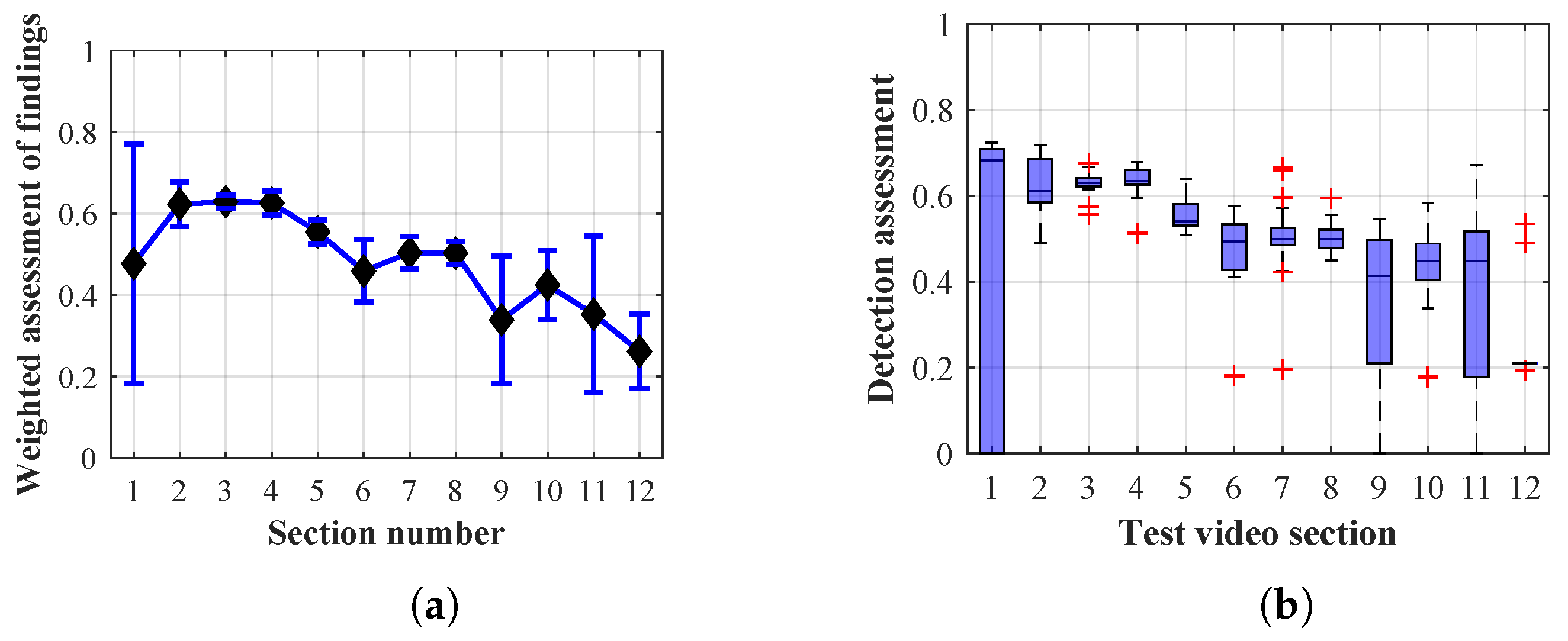

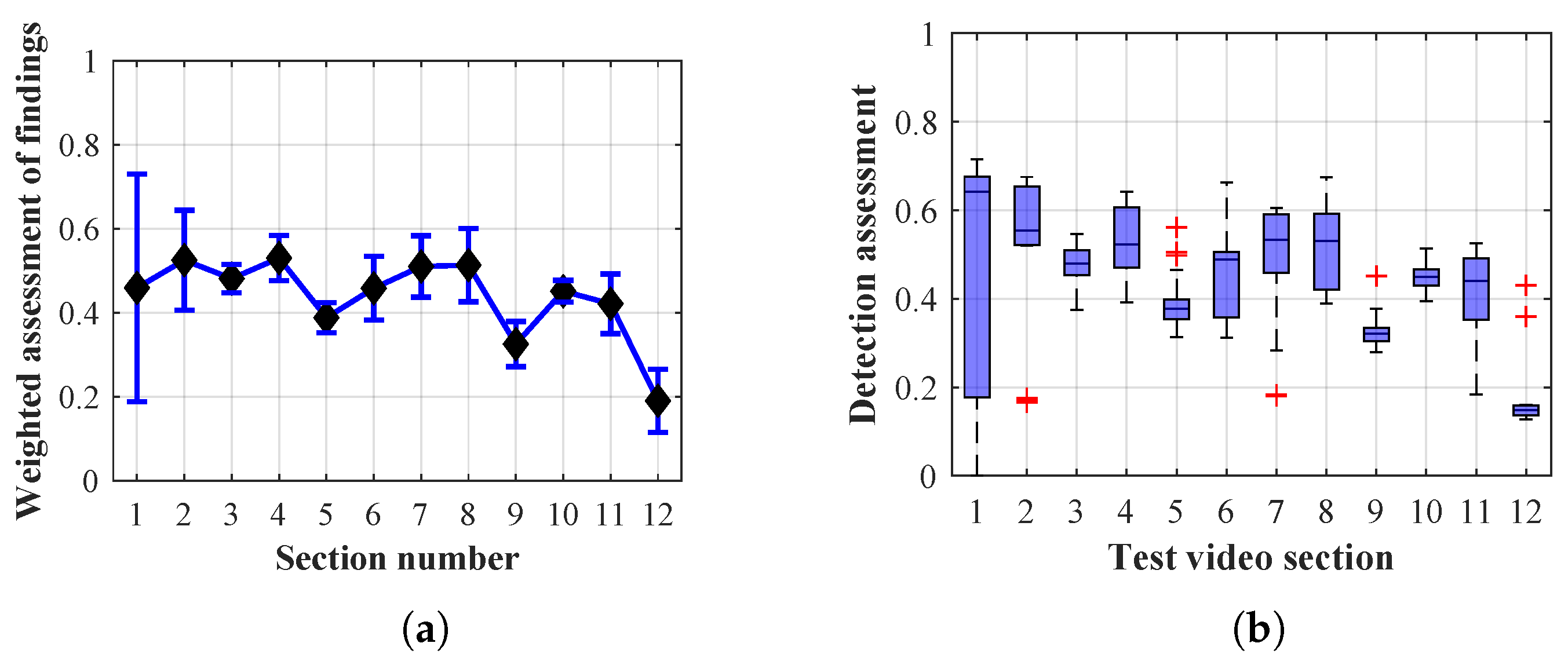

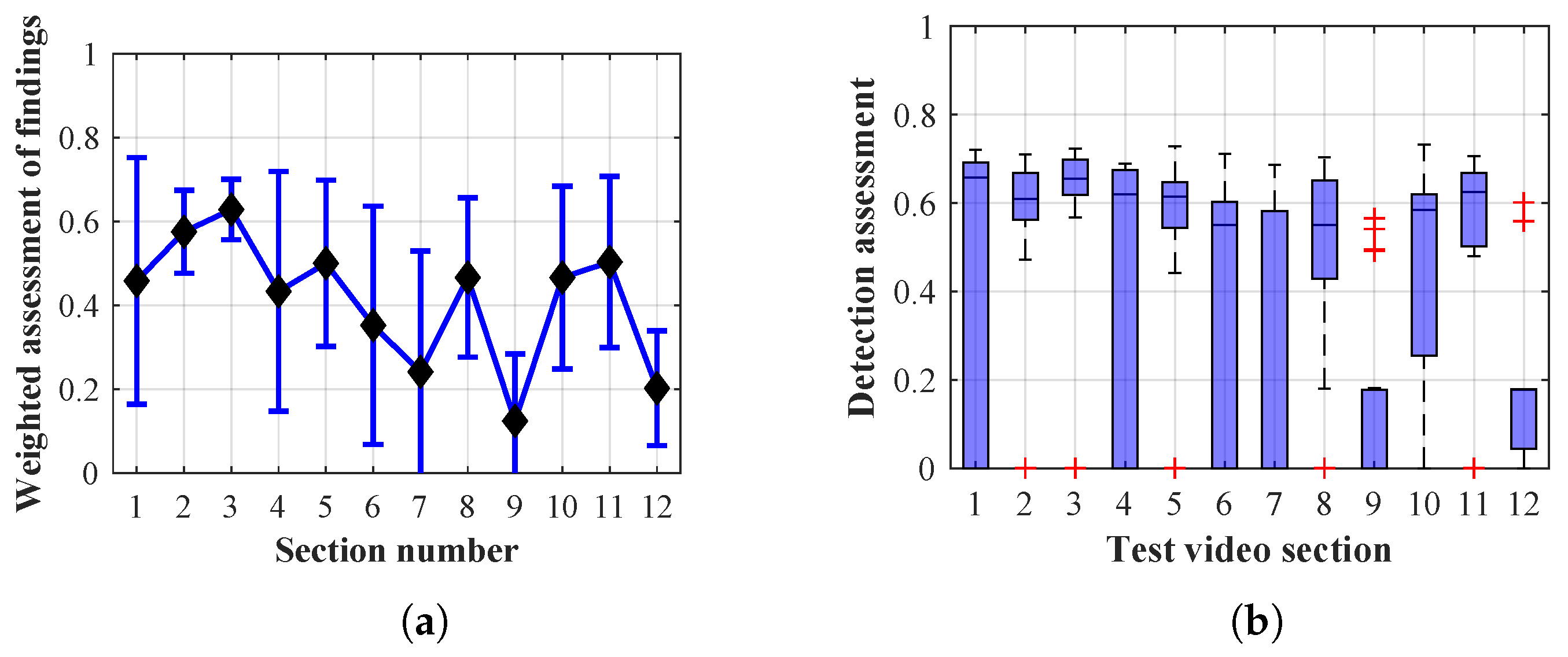

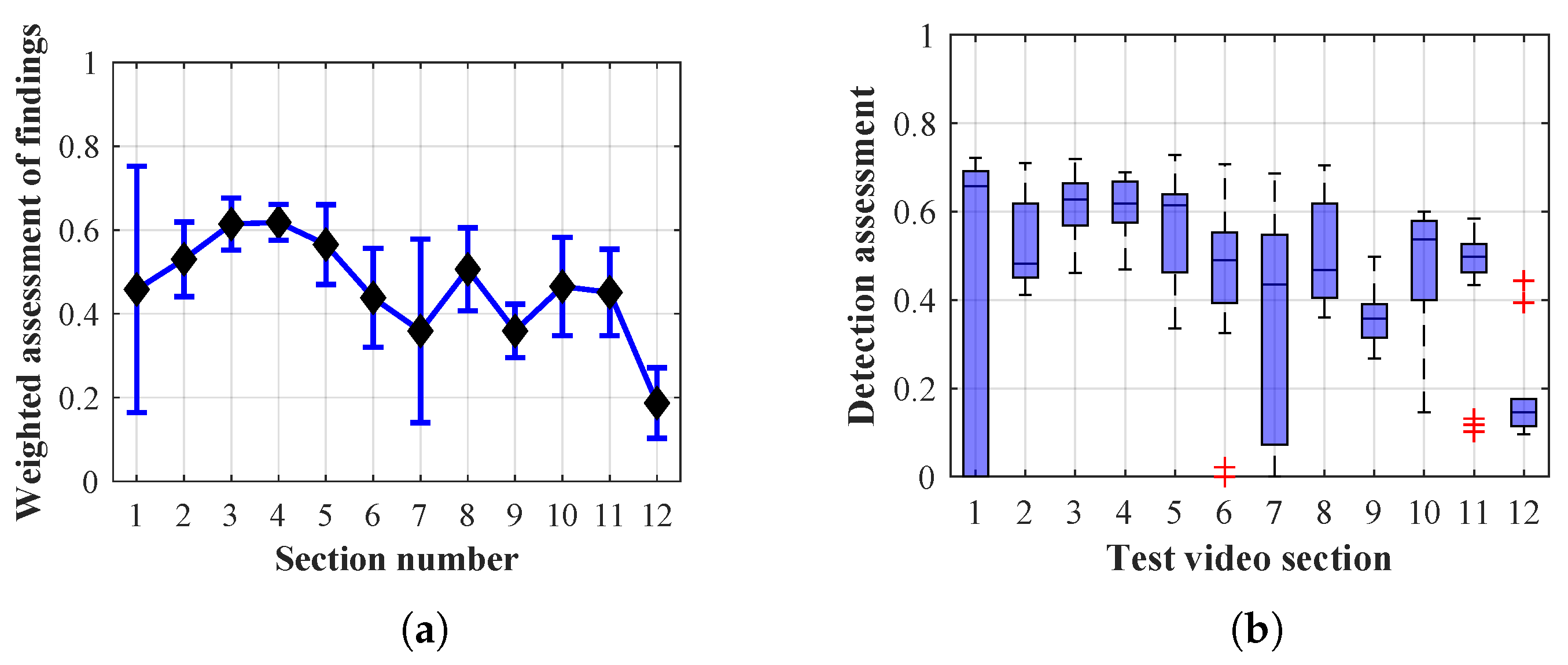

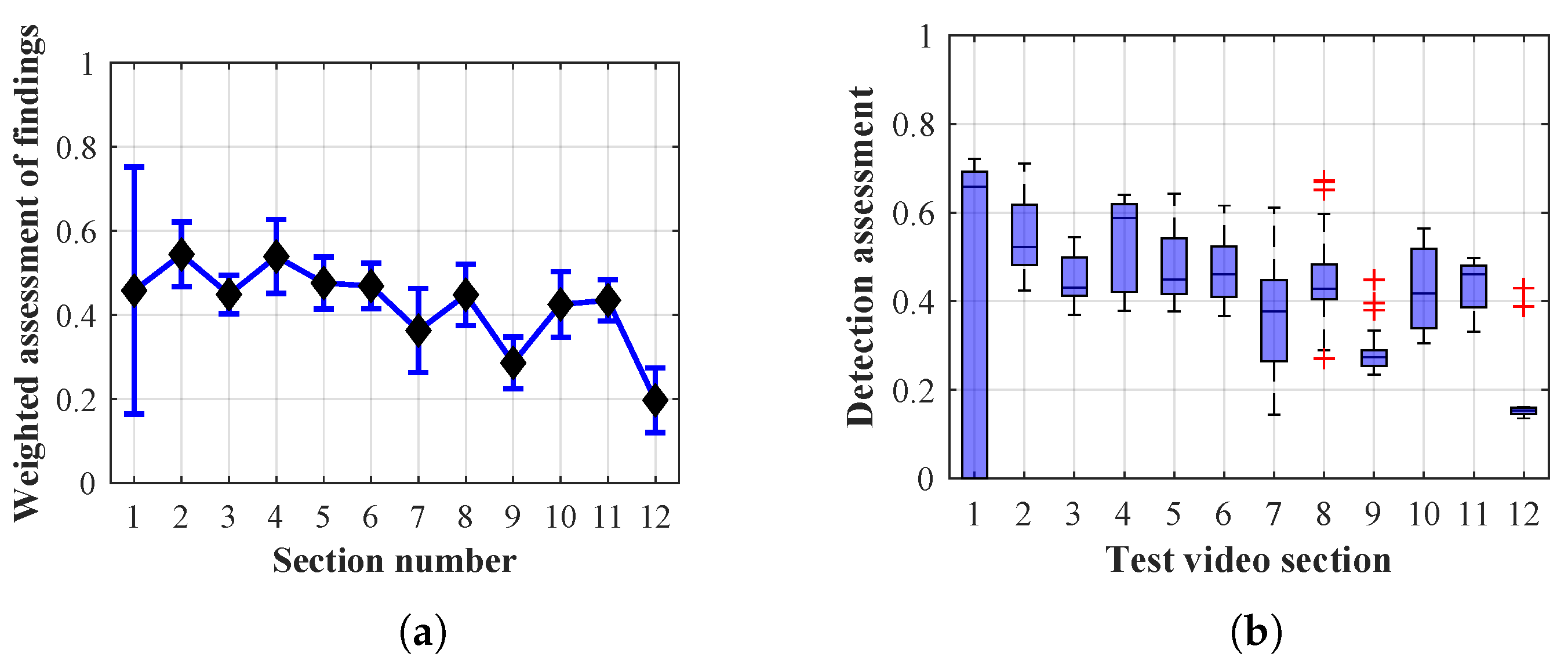

4.1.1. Evaluation of Method 1.1

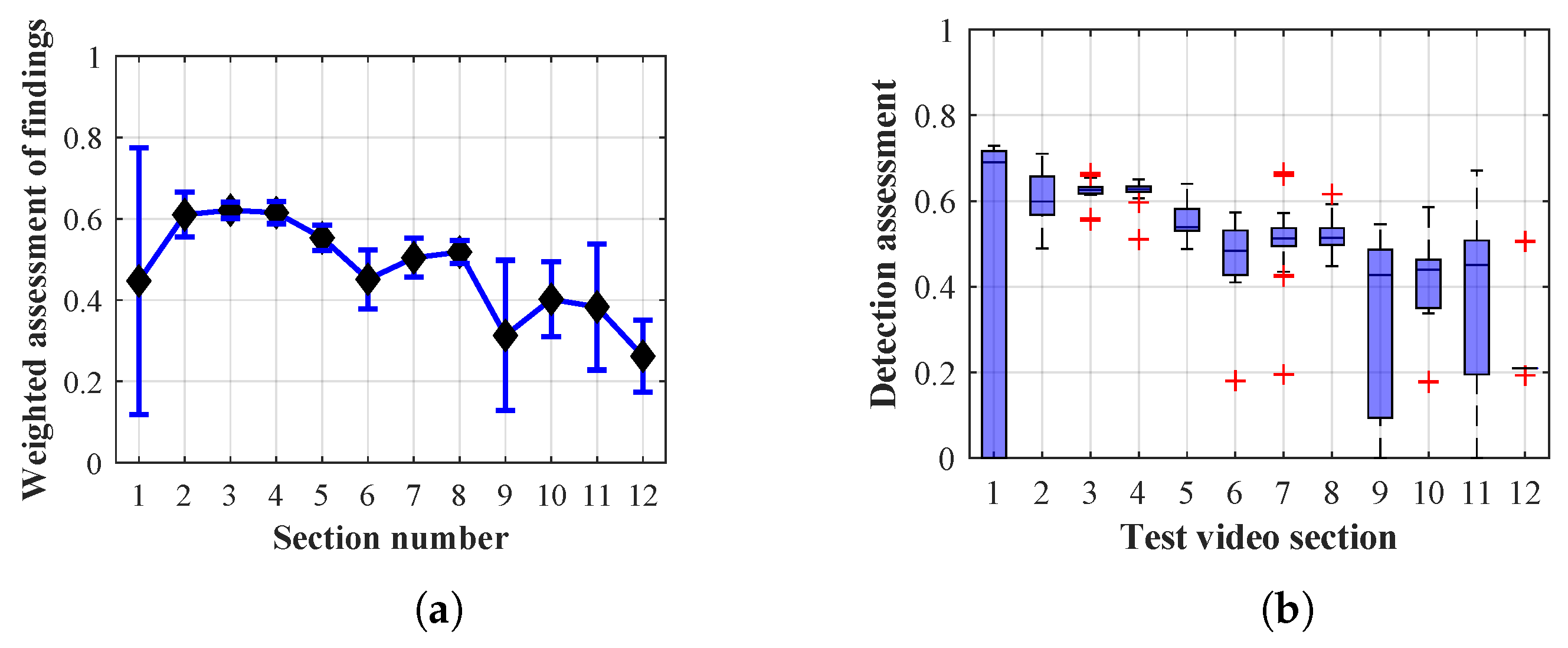

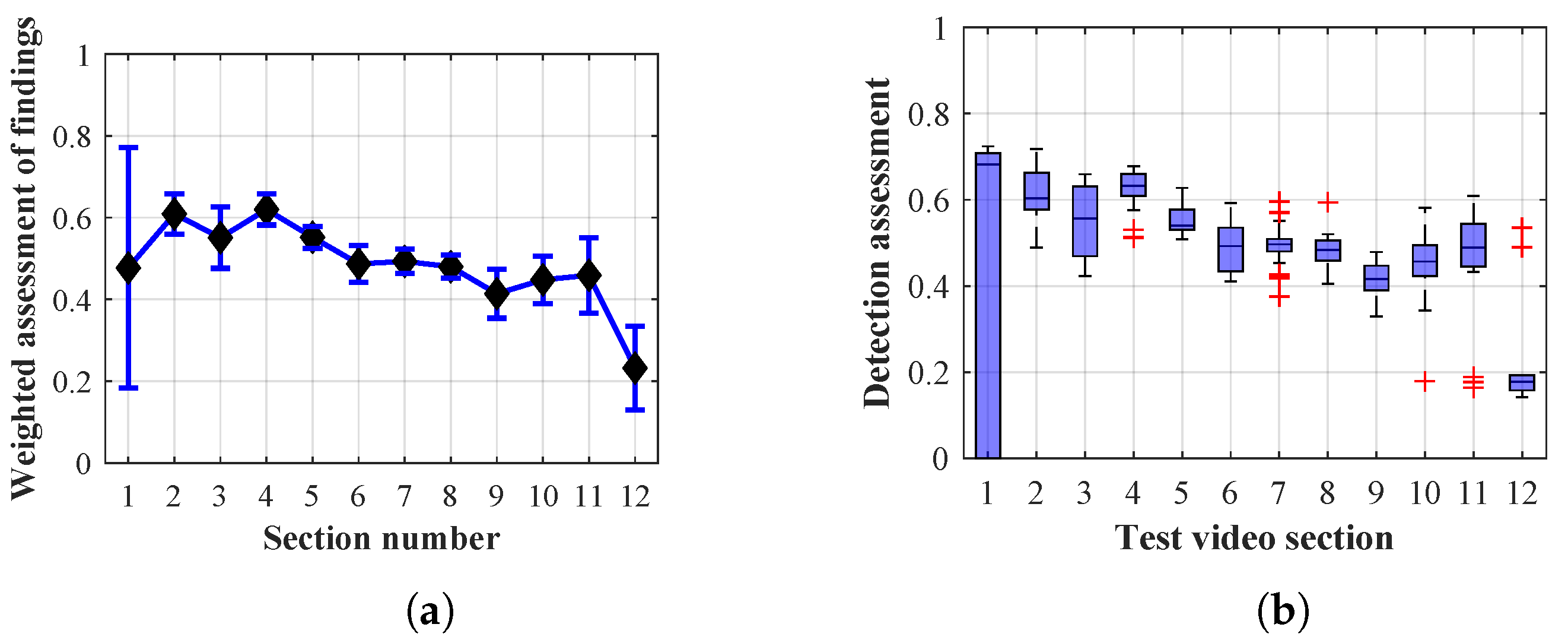

4.1.2. Evaluation of Method 1.2

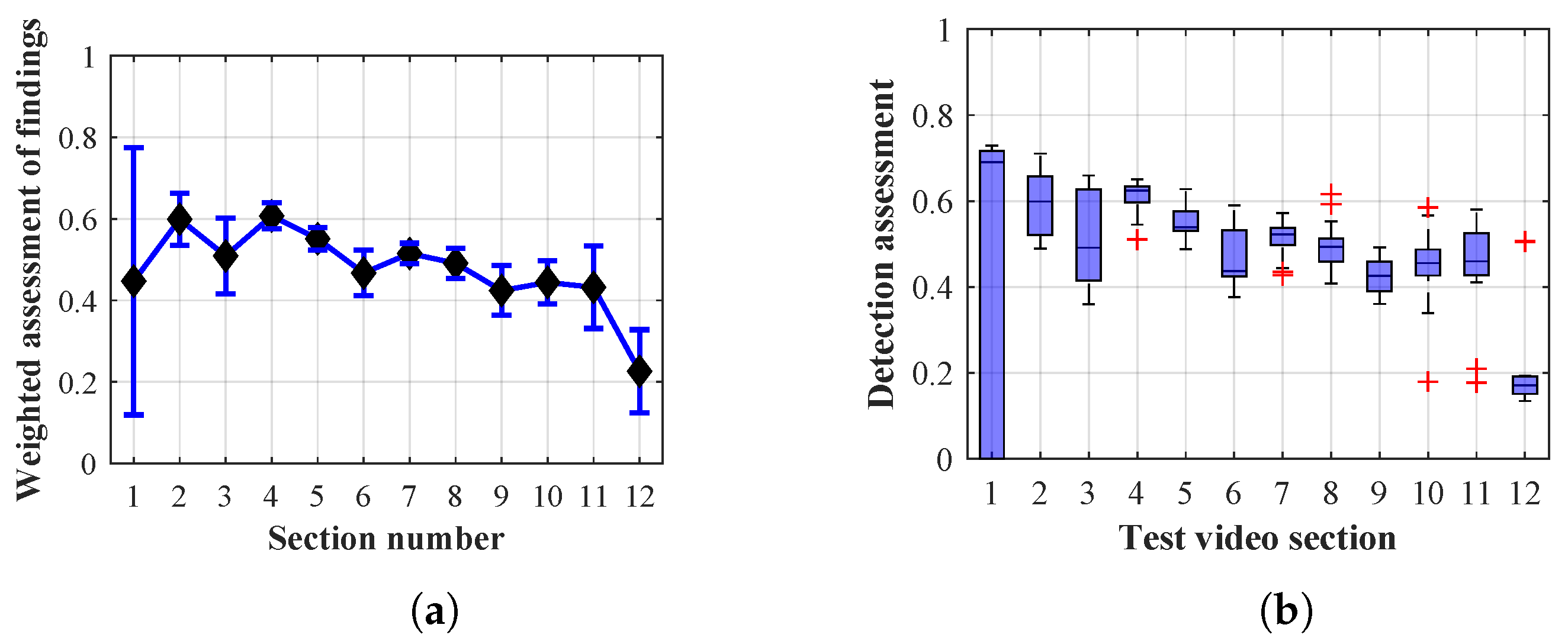

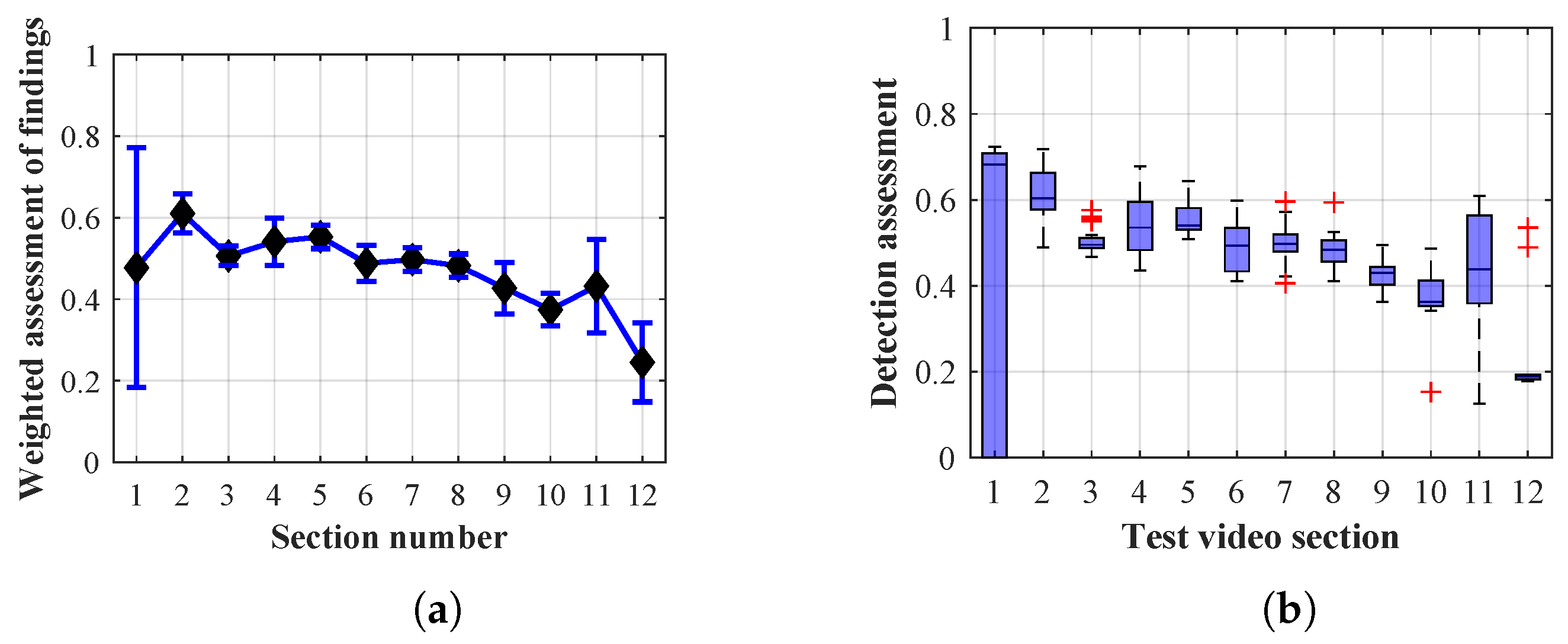

4.1.3. Evaluation of Method 1.3

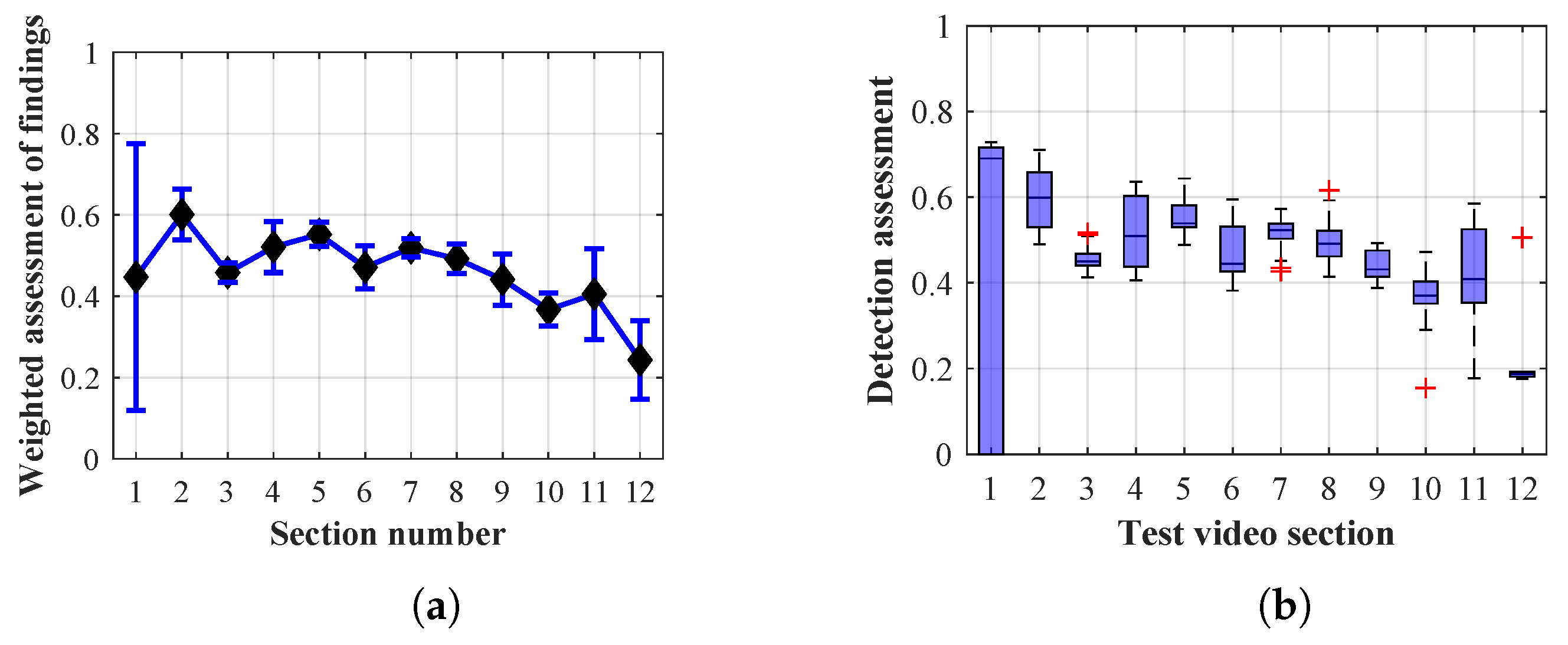

4.1.4. Evaluation of Method 2.1

4.1.5. Evaluation of Method 2.2

4.1.6. Evaluation of Method 2.3

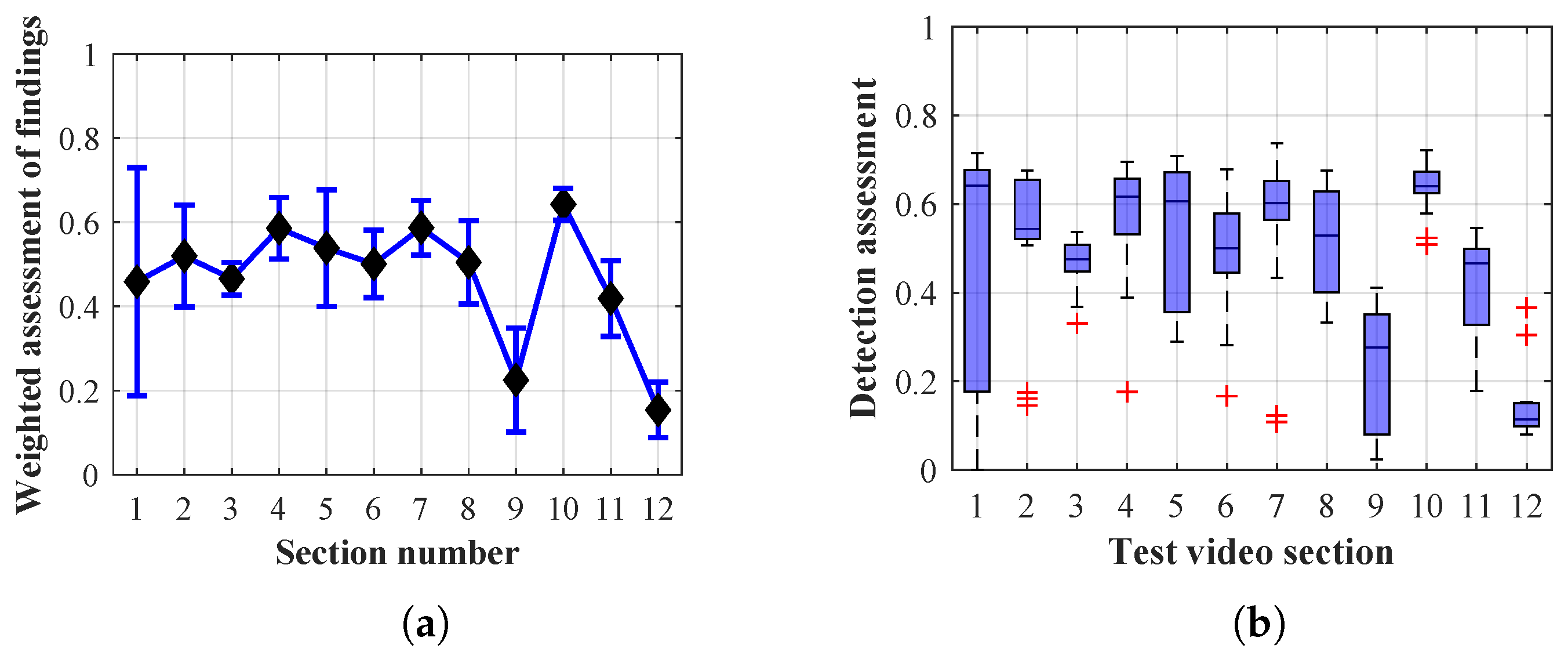

4.1.7. Evaluation of Method 3

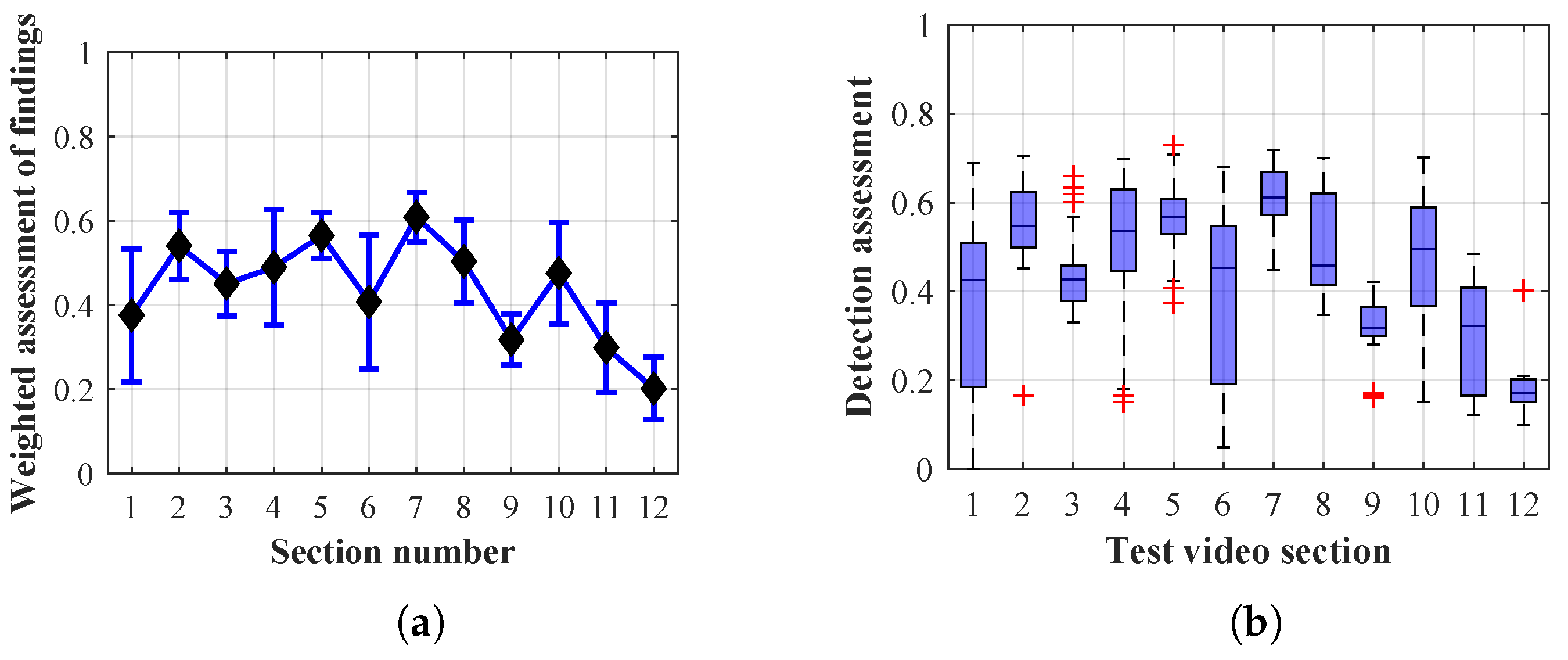

4.2. Mean Error Calculation

4.3. Assessment of the Best Working Method for the Test Video Scene

- Lower limits of the correct finding (Q1).

- Upper limits of the incorrect finding (Q2).

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Lifespan Index | Number of Custom Pixels of the Person Found | Delimiting Coordinate 1 | Delimiting Coordinate 2 | Delimiting Coordinate 3 | Delimiting Coordinate 4 |
|---|---|---|---|---|---|
| 4 | 4743 | 281 | 413 | 76 | 138 |
| 3 | 38,632 | 3 | 283 | 160 | 404 |

| Video Section | Section Interval (Video Frames) | Actions in the Section |
|---|---|---|
| 1 | 1 to 6 | empty room with no movement |
| | 7 to 30 | door opening, entrance of person no. 1, door closing |
| 2 | 31 to 40 | person no. 1 walking across the room |
| | 41 to 48 | person no. 1 grabbing an object (a folder on the bed) |
| 3 | 49 to 70 | person no. 1 sitting down, end of movement |
| 4 | 71 to 73 | person no. 1 at rest |
| | 74 to 84 | person no. 1 at rest, door opening, entrance of person no. 2 |
| | 87 to 96 | person no. 1 at rest, door opening, entrance of person no. 2 |
| 5 | 97 to 134 | person no. 1 at rest, person no. 2 shifting a chair and sitting down |
| | 135 to 142 | person no. 1 at rest, person no. 2 at rest |
| 6 | 143 to 161 | person no. 1 walking across the room, person no. 2 at rest |
| | 162 to 172 | person no. 1 walking across the room, person no. 2 at rest, persons intersecting in the image |
| | 173 to 188 | person no. 1 at rest, person no. 2 at rest, persons intersecting in the image |
| | 189 to 190 | person no. 1 walking across the room, person no. 2 at rest, persons intersecting in the image |
| 7 | 191 to 215 | person no. 1 walking across the room, person no. 2 at rest |
| | 216 to 229 | person no. 1 pushing a toy car, person no. 2 at rest |
| | 230 to 239 | person no. 1 at rest, person no. 2 at rest |
| 8 | 240 to 268 | person no. 1 at rest, person no. 2 walking across the room |
| | 269 to 272 | person no. 1 at rest, person no. 2 at rest |
| 9 | 273 to 281 | person no. 1 at rest, person no. 2 at rest, darkening of the room using blinds |
| | 282 to 296 | person no. 1 at rest, person no. 2 at rest, brightening of the room using blinds |
| 10 | 297 to 319 | person no. 1 walking across the room (exit), person no. 2 at rest |
| 11 | 320 to 339 | person no. 2 walking across the room (exit) |
| 12 | 340 to 346 | door closing |
| | 347 to 350 | empty room with no movement |

| Criterion | 1 | 2 | 3 | 4 | 5 | Geometric Mean of Row | Weight |
|---|---|---|---|---|---|---|---|
| 1 | 1 | 0.2 | 0.111 | 0.333 | 0.111 | 0.241 | 0.0351 |
| 2 | 5 | 1 | 0.166 | 3 | 1 | 1.200 | 0.1746 |
| 3 | 9 | 6 | 1 | 3 | 2 | 3.178 | 0.4626 |
| 4 | 3 | 0.333 | 0.333 | 1 | 0.5 | 0.699 | 0.1017 |
| 5 | 9 | 1 | 0.5 | 2 | 1 | 1.552 | 0.2260 |
| Sum | | | | | | 6.87 | 1 |
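The pairwise-comparison table above has the structure of an analytic hierarchy process (AHP) weighting. Each weight is the geometric mean of a row divided by the sum of all row geometric means, which reproduces the listed values. For example, (1 · 0.2 · 0.111 · 0.333 · 0.111)^(1/5) ≈ 0.241 and 0.241 / 6.87 ≈ 0.0351. The calculation can be sketched as follows.

```python
import math
from typing import List, Sequence


def ahp_weights(matrix: Sequence[Sequence[float]]) -> List[float]:
    """Criterion weights from a pairwise-comparison matrix via normalised row geometric means."""
    n = len(matrix)
    geo_means = [math.prod(row) ** (1.0 / n) for row in matrix]
    total = sum(geo_means)
    return [g / total for g in geo_means]


# Pairwise comparisons copied from the table above (row i compared with column j).
comparisons = [
    [1, 0.2, 0.111, 0.333, 0.111],
    [5, 1, 0.166, 3, 1],
    [9, 6, 1, 3, 2],
    [3, 0.333, 0.333, 1, 0.5],
    [9, 1, 0.5, 2, 1],
]
print([round(w, 4) for w in ahp_weights(comparisons)])
```

Running it on this matrix reproduces the weight column to within the rounding of the printed entries (about 1e-4).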

| Section Number | Weighted Average Rating, Lifespan of 1 Frame | Weighted Average Rating, Lifespan of 12 Frames | Weighted Average Rating, Lifespan of 40 Frames | Mean Error of the Measurement Rating, Lifespan of 1 Frame | Mean Error of the Measurement Rating, Lifespan of 12 Frames | Mean Error of the Measurement Rating, Lifespan of 40 Frames |
|---|---|---|---|---|---|---|
| 1 | 0.477 | 0.477 | 0.477 | 0.294 | 0.294 | 0.294 |
| 2 | 0.623 | 0.609 | 0.610 | 0.056 | 0.049 | 0.048 |
| 3 | 0.629 | 0.551 | 0.506 | 0.017 | 0.075 | 0.024 |
| 4 | 0.626 | 0.620 | 0.541 | 0.030 | 0.038 | 0.058 |
| 5 | 0.555 | 0.552 | 0.553 | 0.030 | 0.027 | 0.029 |
| 6 | 0.459 | 0.487 | 0.488 | 0.077 | 0.045 | 0.044 |
| 7 | 0.504 | 0.493 | 0.497 | 0.040 | 0.030 | 0.029 |
| 8 | 0.505 | 0.478 | 0.479 | 0.028 | 0.030 | 0.030 |
| 9 | 0.337 | 0.411 | 0.424 | 0.156 | 0.059 | 0.062 |
| 10 | 0.469 | 0.475 | 0.391 | 0.071 | 0.056 | 0.040 |
| 11 | 0.326 | 0.456 | 0.432 | 0.202 | 0.096 | 0.120 |
| 12 | 0.262 | 0.232 | 0.245 | 0.091 | 0.102 | 0.097 |
| Average rating over all sections | 0.481 | 0.487 | 0.470 | 0.091 | 0.075 | 0.073 |
| Final ranking, from top-rated | 2 | 1 | 3 | | | |
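The last two rows of the table above follow from its body. Each column average is the plain mean of the twelve section values. The final ranking orders the three lifespans by their average weighted rating, with higher ratings ranked better. The following sketch recomputes both from the tabulated values.

```python
from statistics import mean
from typing import List, Sequence

# Weighted average rating per video section for lifespans of 1, 12 and 40 frames
# (sections 1 to 12, copied from the table above).
ratings = [
    [0.477, 0.477, 0.477], [0.623, 0.609, 0.610], [0.629, 0.551, 0.506],
    [0.626, 0.620, 0.541], [0.555, 0.552, 0.553], [0.459, 0.487, 0.488],
    [0.504, 0.493, 0.497], [0.505, 0.478, 0.479], [0.337, 0.411, 0.424],
    [0.469, 0.475, 0.391], [0.326, 0.456, 0.432], [0.262, 0.232, 0.245],
]


def column_averages(rows: Sequence[Sequence[float]]) -> List[float]:
    """Plain arithmetic mean of every column."""
    return [mean(column) for column in zip(*rows)]


def rank_descending(values: Sequence[float]) -> List[int]:
    """Rank 1 goes to the largest value (a higher rating is better)."""
    order = sorted(range(len(values)), key=lambda i: values[i], reverse=True)
    ranks = [0] * len(values)
    for position, index in enumerate(order, start=1):
        ranks[index] = position
    return ranks


averages = column_averages(ratings)
print(rank_descending(averages))  # [2, 1, 3]
```

The recomputed averages agree with the tabulated 0.481, 0.487 and 0.470 to within rounding.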

| System Configuration Used (Change Detection Method and Lifespan of Person Finding) | Result of the Weighted Rating | Result of the Scaled Rating (Γ) | Ranking According to the Average Scaled Rating in All Sections of the Test Video |
|---|---|---|---|
| Method 1.1 with a lifespan of 1 frame | 0.481 | 0.650 | 9 |
| Method 1.1 with a lifespan of 12 frames | 0.487 | 0.780 | 2 |
| Method 1.1 with a lifespan of 40 frames | 0.470 | 0.775 | 4 |
| Method 1.2 with a lifespan of 1 frame | 0.473 | 0.622 | 13 |
| Method 1.2 with a lifespan of 12 frames | 0.476 | 0.762 | 6 |
| Method 1.2 with a lifespan of 40 frames | 0.460 | 0.747 | 7 |
| Method 1.3 with a lifespan of 1 frame | 0.479 | 0.643 | 10 |
| Method 1.3 with a lifespan of 12 frames | 0.485 | 0.772 | 5 |
| Method 1.3 with a lifespan of 40 frames | 0.469 | 0.776 | 3 |
| Method 2.1 with a lifespan of 1 frame | 0.400 | 0.215 | 20 |
| Method 2.1 with a lifespan of 12 frames | 0.467 | 0.574 | 15 |
| Method 2.1 with a lifespan of 40 frames | 0.438 | 0.639 | 11 |
| Method 2.2 with a lifespan of 1 frame | 0.432 | 0.349 | 18 |
| Method 2.2 with a lifespan of 12 frames | 0.468 | 0.715 | 8 |
| Method 2.2 with a lifespan of 40 frames | 0.453 | 0.789 | 1 |
| Method 2.3 with a lifespan of 1 frame | 0.412 | 0.278 | 19 |
| Method 2.3 with a lifespan of 12 frames | 0.463 | 0.623 | 12 |
| Method 2.3 with a lifespan of 40 frames | 0.424 | 0.552 | 16 |
| Method 3 with a lifespan of 1 frame | 0.328 | -0.015 | 21 |
| Method 3 with a lifespan of 12 frames | 0.437 | 0.539 | 17 |
| Method 3 with a lifespan of 40 frames | 0.405 | 0.608 | 14 |

| System Configuration Used | (A) | (B) | (C) |
|---|---|---|---|
| Method 1.1 with a lifespan of 12 frames | 2 | 1 | 1 |
| Method 1.3 with a lifespan of 12 frames | 3 | 2 | 2 |
| Method 2.2 with a lifespan of 40 frames | 1 | 4 | 3 |
| Method 1.2 with a lifespan of 12 frames | 4 | 3 | 4 |
| Method 2.3 with a lifespan of 12 frames | 6 | 5 | 5 |
| Method 2.1 with a lifespan of 40 frames | 5 | 6 | 6 |
| Method 3 with a lifespan of 40 frames | 7 | 7 | 7 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Schneider, M.; Machacek, Z.; Martinek, R.; Koziorek, J.; Jaros, R. A System for the Detection of Persons in Intelligent Buildings Using Camera Systems—A Comparative Study. Sensors 2020, 20, 3558. https://doi.org/10.3390/s20123558
