Spatio-Temporal Representation of an Electoencephalogram for Emotion Recognition Using a Three-Dimensional Convolutional Neural Network

Abstract

1. Introduction

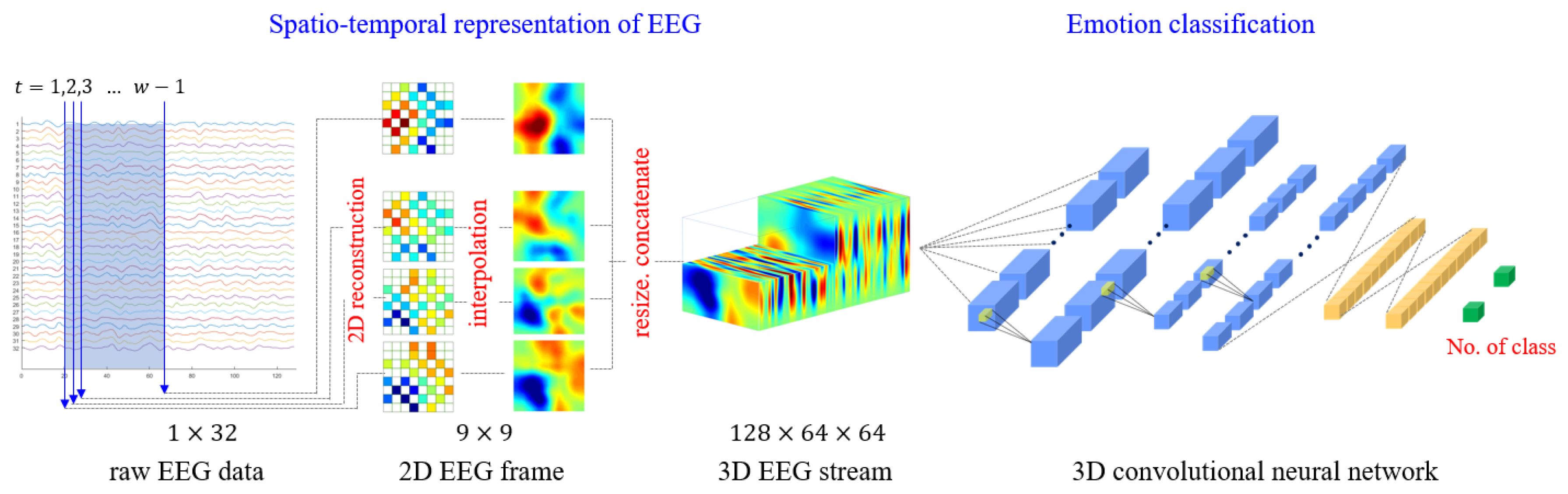

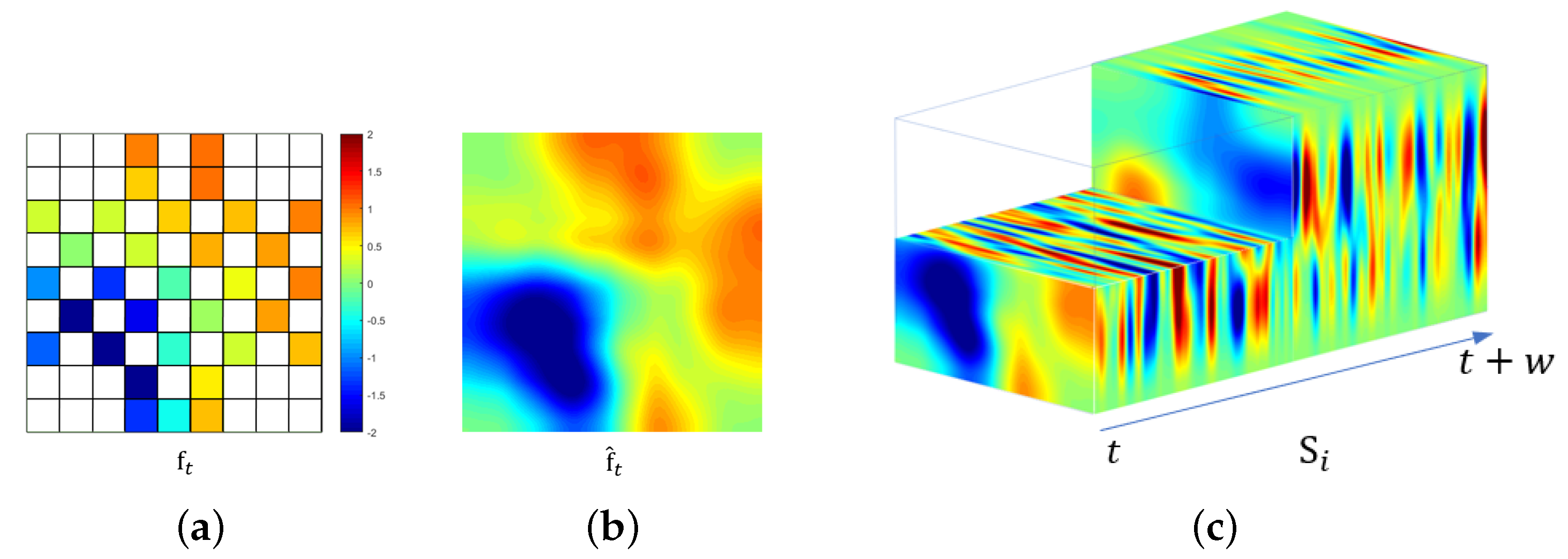

- We propose a novel method for representing EEG signals in a 3D spatio-temporal form. First, we place the values of all channels sampled at the same time on a 2D plane according to their original electrode positions and reconstruct a two-dimensional EEG frame through interpolation. We then concatenate the 2D EEG frames along the time axis, which creates the 3D EEG stream.

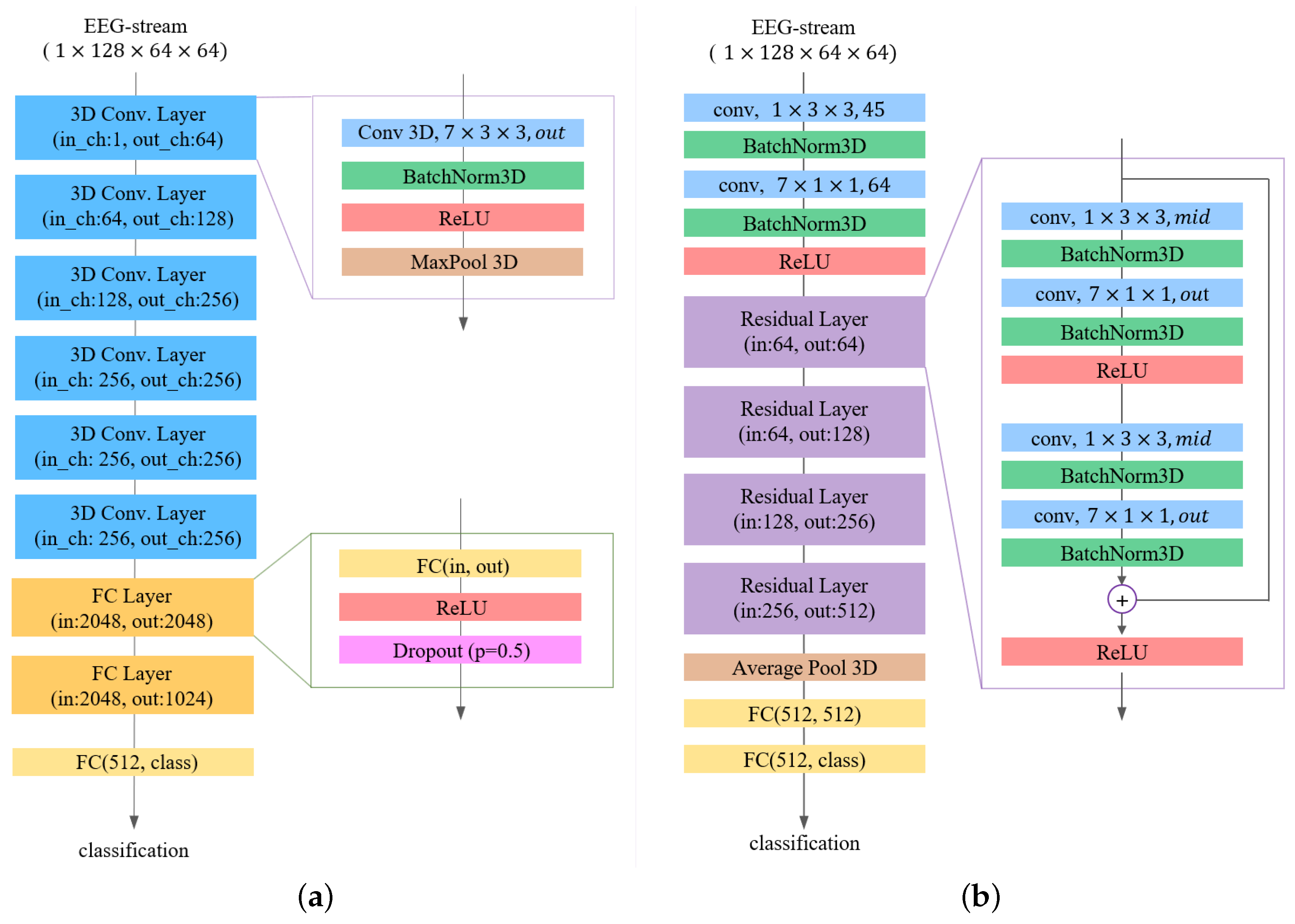

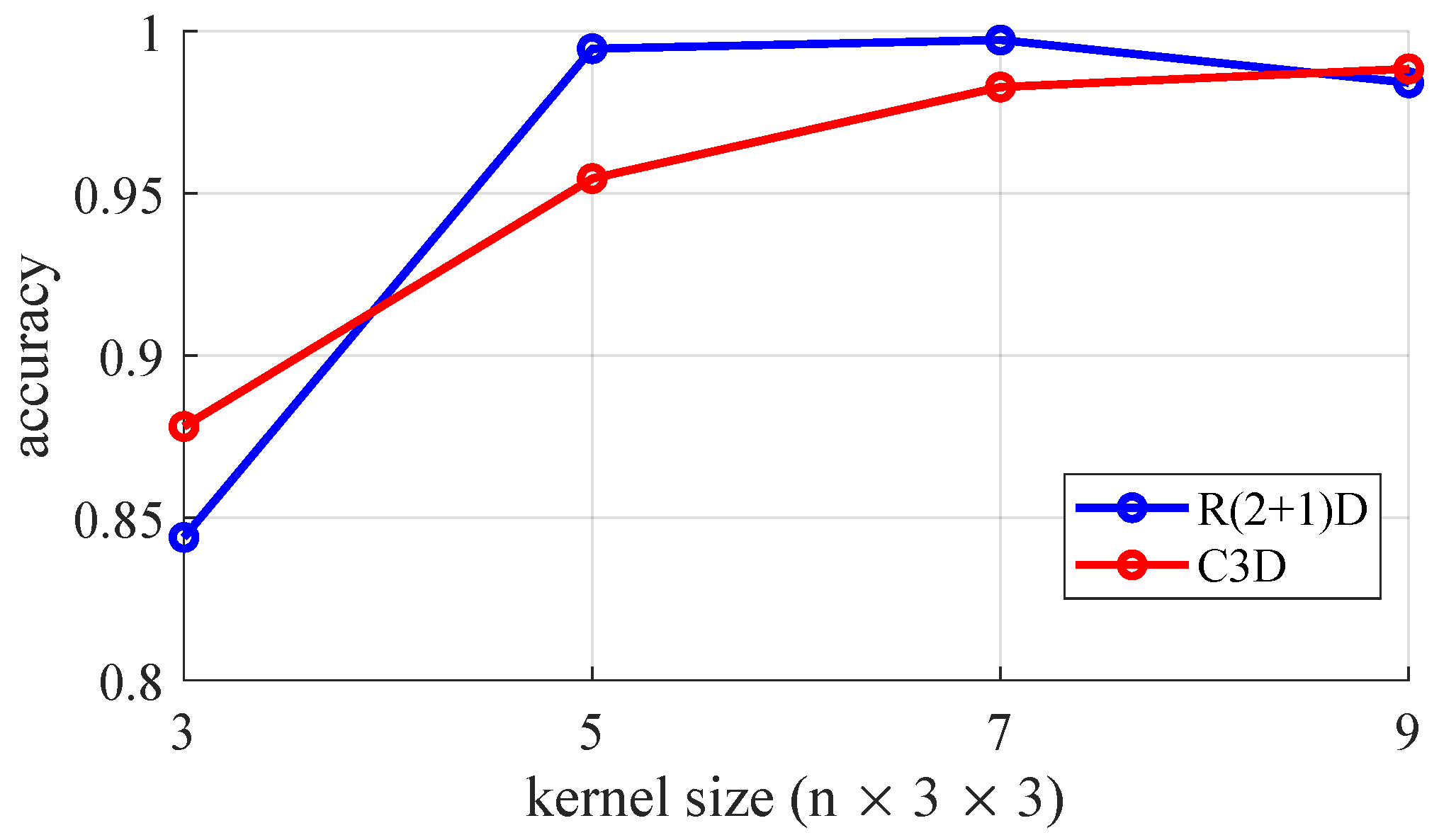

- We design and apply two types of 3D CNN architecture optimized for a 3D EEG stream. We also experimentally investigate the best-performing shapes of the 3D dataset and of the 3D convolution kernels.

- We provide extensive experimental results to demonstrate the effectiveness of the proposed method on the DEAP dataset, where it achieves accuracies of 99.11%, 99.74%, and 99.73% in the binary classification of valence, the binary classification of arousal, and a four-class classification, respectively.
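The construction described in the first contribution can be sketched in a few lines. This is a minimal illustration and not the authors' implementation: the four-channel layout `CHANNEL_POS`, the 5 × 5 grid, and the function names are hypothetical, and inverse-distance weighting stands in for the interpolation step only because it is short to write (the interpolation method is not specified here).

```python
import math

# Hypothetical (row, col) scalp positions for four channels on a 5 x 5 grid.
# A real montage would need its own layout; these values are assumptions.
CHANNEL_POS = {"Fp1": (0, 1), "Fp2": (0, 3), "O1": (4, 1), "O2": (4, 3)}
GRID = 5


def eeg_frame(sample, positions=CHANNEL_POS, size=GRID, power=2.0):
    """Build one size x size frame from the channel values of one sampling time."""
    frame = [[0.0] * size for _ in range(size)]
    for r in range(size):
        for c in range(size):
            num = den = 0.0
            for name, (pr, pc) in positions.items():
                d = math.hypot(r - pr, c - pc)
                if d == 0.0:
                    # The grid cell sits on an electrode: keep the measured value.
                    num, den = sample[name], 1.0
                    break
                w = 1.0 / d ** power  # inverse-distance weight (assumed scheme)
                num += w * sample[name]
                den += w
            frame[r][c] = num / den
    return frame


def eeg_stream(samples):
    """Stack one frame per sampling time along the time axis: (T, size, size)."""
    return [eeg_frame(s) for s in samples]


# Two time steps of made-up readings.
samples = [
    {"Fp1": 1.0, "Fp2": 2.0, "O1": 3.0, "O2": 4.0},
    {"Fp1": 1.5, "Fp2": 2.5, "O1": 3.5, "O2": 4.5},
]
stream = eeg_stream(samples)
print(len(stream), len(stream[0]), len(stream[0][0]))  # 2 5 5
```

The resulting nested list has the time axis first, so a 3D convolution can slide over time and both spatial axes at once.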

2. Related Studies

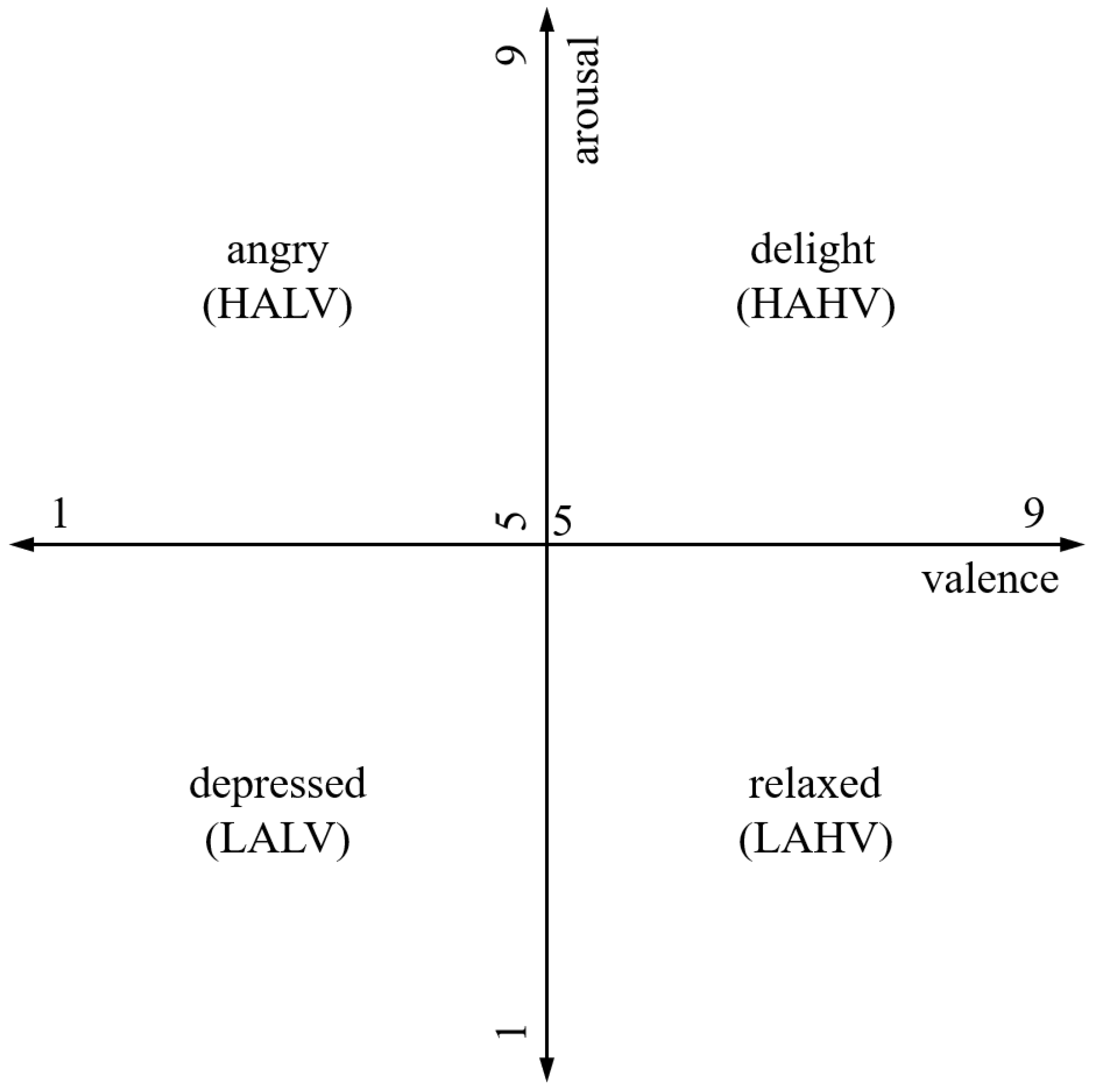

3. Dataset and Emotion Classification

4. Proposed Approach

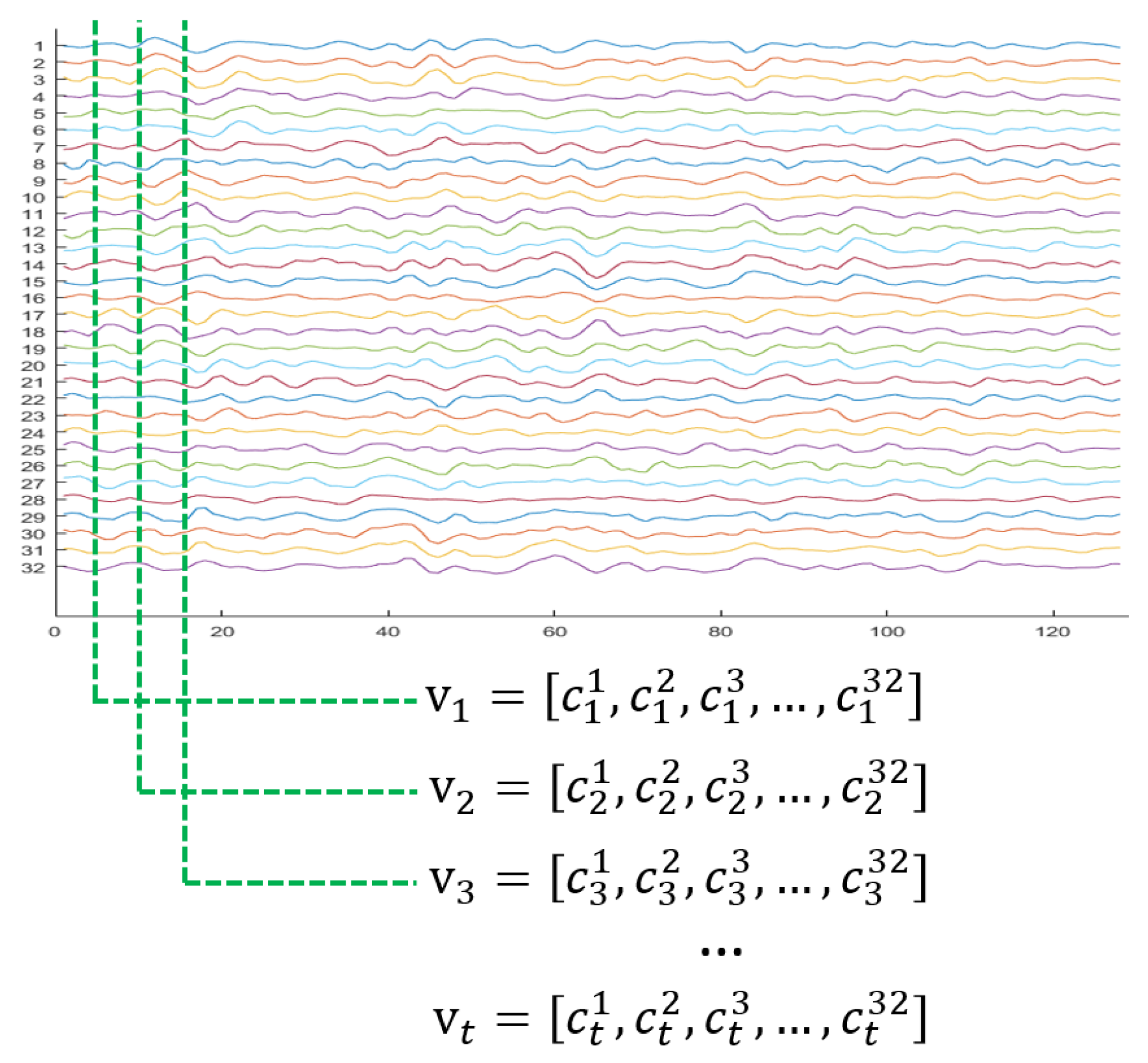

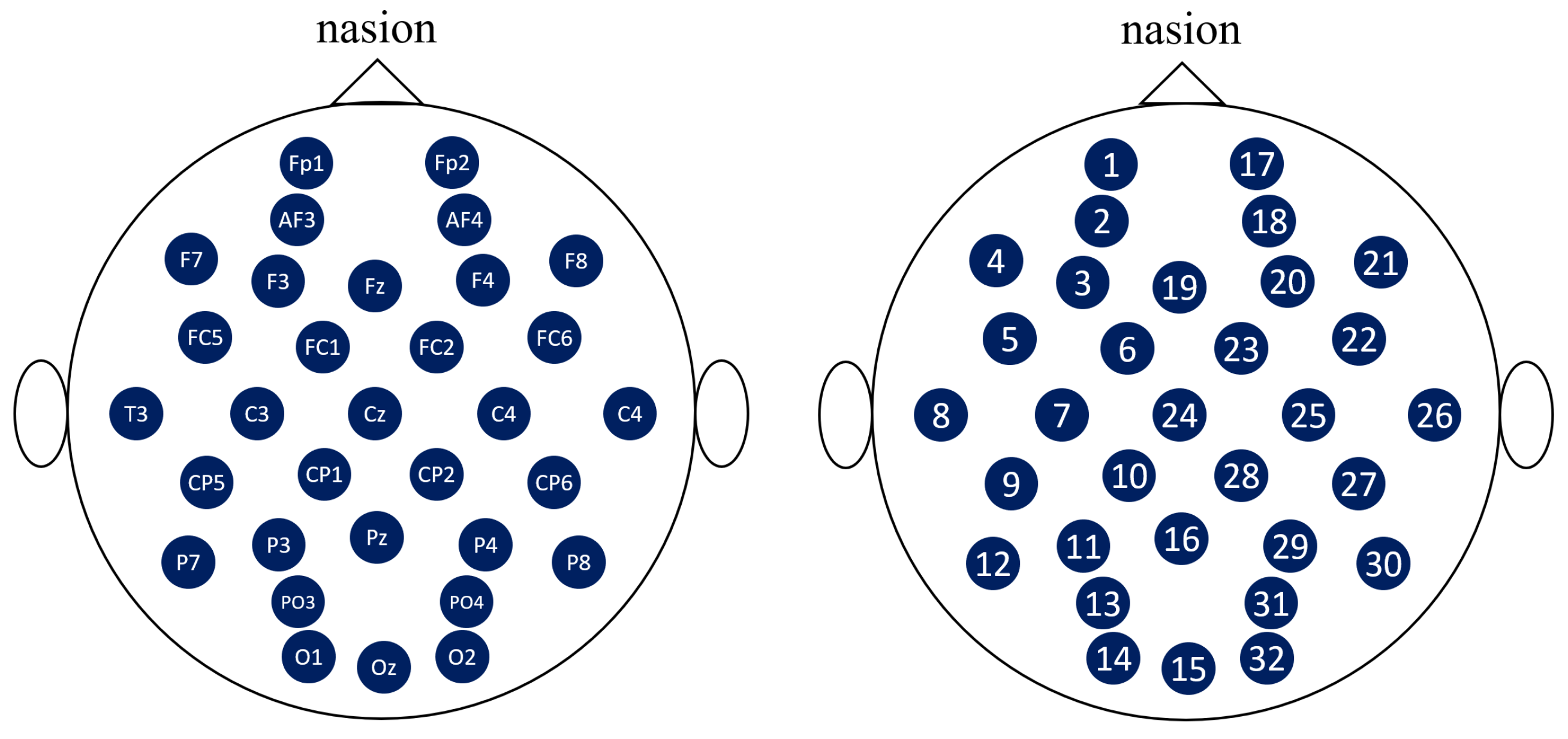

4.1. Spatio-Temporal Representation of EEG

4.2. Spatio-Temporal Learning Based on 3D CNNs

5. Experimental Results

5.1. Experimental Setup

5.2. Emotion Classification

5.3. Spatio-Temporal Effectiveness

5.4. Performance of Various Dimensions

5.5. Limitations of the Proposed Method

6. Conclusions and Future Works

Author Contributions

Funding

Conflicts of Interest

References

1. Menezes, M.L.R.; Samara, A.; Galway, L.; Sant’Anna, A.; Verikas, A.; Alonso-Fernandez, F.; Wang, H.; Bond, R. Towards emotion recognition for virtual environments: An evaluation of EEG features on benchmark dataset. Pers. Ubiquitous Comput. 2017, 21, 1003–1013.

2. De Nadai, S.; D’Incà, M.; Parodi, F.; Benza, M.; Trotta, A.; Zero, E.; Zero, L.; Sacile, R. Enhancing safety of transport by road by on-line monitoring of driver emotions. In Proceedings of the 11th System of Systems Engineering Conference (SoSE), Kongsberg, Norway, 12–16 June 2016; pp. 1–4.

3. Wang, F.; Zhong, S.h.; Peng, J.; Jiang, J.; Liu, Y. Data augmentation for EEG-based emotion recognition with deep convolutional neural networks. In International Conference on Multimedia Modeling; Springer: Berlin, Germany, 2018; pp. 82–93.

4. Guo, R.; Li, S.; He, L.; Gao, W.; Qi, H.; Owens, G. Pervasive and unobtrusive emotion sensing for human mental health. In Proceedings of the 7th International Conference on Pervasive Computing Technologies for Healthcare and Workshops, Venice, Italy, 5–8 May 2013; pp. 436–439.

5. Verschuere, B.; Crombez, G.; Koster, E.; Uzieblo, K. Psychopathy and physiological detection of concealed information: A review. Psychol. Belg. 2006, 46, 99–116.

6. Duan, R.N.; Zhu, J.Y.; Lu, B.L. Differential entropy feature for EEG-based emotion classification. In Proceedings of the 6th International IEEE/EMBS Conference on Neural Engineering (NER), San Diego, CA, USA, 6–8 November 2013; pp. 81–84.

7. Zhang, Y.D.; Yang, Z.J.; Lu, H.M.; Zhou, X.X.; Phillips, P.; Liu, Q.M.; Wang, S.H. Facial emotion recognition based on biorthogonal wavelet entropy, fuzzy support vector machine, and stratified cross validation. IEEE Access 2016, 4, 8375–8385.

8. Tao, F.; Liu, G.; Zhao, Q. An ensemble framework of voice-based emotion recognition system for films and TV programs. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Beijing, China, 20–22 May 2018; pp. 6209–6213.

9. Yang, Y.; Wu, Q.; Fu, Y.; Chen, X. Continuous Convolutional Neural Network with 3D Input for EEG-Based Emotion Recognition. In International Conference on Neural Information Processing; Springer: Berlin, Germany, 2018; pp. 433–443.

10. Roy, Y.; Banville, H.; Albuquerque, I.; Gramfort, A.; Falk, T.H.; Faubert, J. Deep learning-based electroencephalography analysis: A systematic review. J. Neural Eng. 2019, 16, 051001.

11. Brunner, C.; Billinger, M.; Vidaurre, C.; Neuper, C. A comparison of univariate, vector, bilinear autoregressive, and band power features for brain–computer interfaces. Med. Biol. Eng. Comput. 2011, 49, 1337–1346.

12. Li, X.; Song, D.; Zhang, P.; Yu, G.; Hou, Y.; Hu, B. Emotion recognition from multi-channel EEG data through convolutional recurrent neural network. In Proceedings of the IEEE International Conference on Bioinformatics and Biomedicine (BIBM), Shenzhen, China, 15–18 December 2016; pp. 352–359.

13. Kwon, Y.H.; Shin, S.B.; Kim, S.D. Electroencephalography based fusion two-dimensional (2D)-convolution neural networks (CNN) model for emotion recognition system. Sensors 2018, 18, 1383.

14. Zhang, A.; Yang, B.; Huang, L. Feature extraction of EEG signals using power spectral entropy. In Proceedings of the International Conference on BioMedical Engineering and Informatics, Sanya, China, 27–30 May 2008; pp. 435–439.

15. Jenke, R.; Peer, A.; Buss, M. Feature extraction and selection for emotion recognition from EEG. IEEE Trans. Affect. Comput. 2014, 5, 327–339.

16. Yoon, H.J.; Chung, S.Y. EEG-based emotion estimation using Bayesian weighted-log-posterior function and perceptron convergence algorithm. Comput. Biol. Med. 2013, 43, 2230–2237.

17. Bajaj, V.; Pachori, R.B. Human emotion classification from EEG signals using multiwavelet transform. In Proceedings of the International Conference on Medical Biometrics, Shenzhen, China, 30 May–1 June 2014; pp. 125–130.

18. Chen, D.W.; Miao, R.; Yang, W.Q.; Liang, Y.; Chen, H.H.; Huang, L.; Deng, C.J.; Han, N. A feature extraction method based on differential entropy and linear discriminant analysis for emotion recognition. Sensors 2019, 19, 1631.

19. Chen, J.; Zhang, P.; Mao, Z.; Huang, Y.; Jiang, D.; Zhang, Y. Accurate EEG-based emotion recognition on combined features using deep convolutional neural networks. IEEE Access 2019, 7, 44317–44328.

20. Tripathi, S.; Acharya, S.; Sharma, R.D.; Mittal, S.; Bhattacharya, S. Using Deep and Convolutional Neural Networks for Accurate Emotion Classification on DEAP Dataset. In Proceedings of the Twenty-Ninth IAAI Conference, San Francisco, CA, USA, 6–9 February 2017.

21. Yang, H.; Han, J.; Min, K. A Multi-Column CNN Model for Emotion Recognition from EEG Signals. Sensors 2019, 19, 4736.

22. Shao, H.M.; Wang, J.G.; Wang, Y.; Yao, Y.; Liu, J. EEG-Based Emotion Recognition with Deep Convolution Neural Network. In Proceedings of the IEEE 8th Data Driven Control and Learning Systems Conference (DDCLS), Dali, China, 24–27 May 2019; pp. 1225–1229.

23. Cho, J.; Lee, M. Building a Compact Convolutional Neural Network for Embedded Intelligent Sensor Systems Using Group Sparsity and Knowledge Distillation. Sensors 2019, 19, 4307.

24. Lin, W.; Li, C.; Sun, S. Deep convolutional neural network for emotion recognition using EEG and peripheral physiological signal. In International Conference on Image and Graphics; Springer: Berlin, Germany, 2017; pp. 385–394.

25. Li, Z.; Tian, X.; Shu, L.; Xu, X.; Hu, B. Emotion recognition from EEG using RASM and LSTM. In International Conference on Internet Multimedia Computing and Service; Springer: Berlin, Germany, 2017; pp. 310–318.

26. Li, Y.; Huang, J.; Zhou, H.; Zhong, N. Human emotion recognition with electroencephalographic multidimensional features by hybrid deep neural networks. Appl. Sci. 2017, 7, 1060.

27. Chao, H.; Dong, L.; Liu, Y.; Lu, B. Emotion recognition from multiband EEG signals using CapsNet. Sensors 2019, 19, 2212.

28. Alhagry, S.; Fahmy, A.A.; El-Khoribi, R.A. Emotion recognition based on EEG using LSTM recurrent neural network. Emotion 2017, 8, 355–358.

29. Yang, Y.; Wu, Q.; Qiu, M.; Wang, Y.; Chen, X. Emotion recognition from multi-channel EEG through parallel convolutional recurrent neural network. In Proceedings of the International Joint Conference on Neural Networks (IJCNN), Rio de Janeiro, Brazil, 8–13 July 2018; pp. 1–7.

30. Tran, D.; Bourdev, L.; Fergus, R.; Torresani, L.; Paluri, M. Learning spatiotemporal features with 3D convolutional networks. In Proceedings of the IEEE International Conference on Computer Vision, Las Condes, Chile, 11–18 December 2015; pp. 4489–4497.

31. Qiu, Z.; Yao, T.; Mei, T. Learning spatio-temporal representation with pseudo-3D residual networks. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 5533–5541.

32. Salama, E.S.; El-Khoribi, R.A.; Shoman, M.E.; Shalaby, M.A.W. EEG-based emotion recognition using 3D convolutional neural networks. Int. J. Adv. Comput. Sci. Appl. 2018, 9, 329–337.

33. Luo, Y.; Fu, Q.; Xie, J.; Qin, Y.; Wu, G.; Liu, J.; Jiang, F.; Cao, Y.; Ding, X. EEG-Based Emotion Classification Using Spiking Neural Networks. IEEE Access 2020, 8, 46007–46016.

34. Zheng, W.L.; Lu, B.L. Investigating critical frequency bands and channels for EEG-based emotion recognition with deep neural networks. IEEE Trans. Autonom. Ment. Dev. 2015, 7, 162–175.

35. Cimtay, Y.; Ekmekcioglu, E. Investigating the Use of Pretrained Convolutional Neural Network on Cross-Subject and Cross-Dataset EEG Emotion Recognition. Sensors 2020, 20, 2034.

36. Szegedy, C.; Ioffe, S.; Vanhoucke, V.; Alemi, A.A. Inception-v4, inception-resnet and the impact of residual connections on learning. In Proceedings of the Thirty-First AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017.

37. Koelstra, S.; Muhl, C.; Soleymani, M.; Lee, J.S.; Yazdani, A.; Ebrahimi, T.; Pun, T.; Nijholt, A.; Patras, I. DEAP: A database for emotion analysis; using physiological signals. IEEE Trans. Affect. Comput. 2011, 3, 18–31.

38. Posner, J.; Russell, J.A.; Peterson, B.S. The circumplex model of affect: An integrative approach to affective neuroscience, cognitive development, and psychopathology. Dev. Psychopathol. 2005, 17, 715–734.

39. Lang, P.J. The emotion probe: Studies of motivation and attention. Am. Psychol. 1995, 50, 372.

40. Gupta, A.; Sahu, H.; Nanecha, N.; Kumar, P.; Roy, P.P.; Chang, V. Enhancing text using emotion detected from EEG signals. J. Grid Comput. 2019, 17, 325–340.

41. Haykin, S. Neural Networks: A Comprehensive Foundation; Prentice Hall PTR: Upper Saddle River, NJ, USA, 1994.

42. Wang, X.W.; Nie, D.; Lu, B.L. Emotional state classification from EEG data using machine learning approach. Neurocomputing 2014, 129, 94–106.

43. Hara, K.; Kataoka, H.; Satoh, Y. Can spatiotemporal 3D CNNs retrace the history of 2D CNNs and ImageNet? In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 6546–6555.

44. Varol, G.; Laptev, I.; Schmid, C. Long-term temporal convolutions for action recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 40, 1510–1517.

45. Tran, D.; Wang, H.; Torresani, L.; Ray, J.; LeCun, Y.; Paluri, M. A closer look at spatiotemporal convolutions for action recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 6450–6459.

46. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 770–778.

47. Deng, J.; Dong, W.; Socher, R.; Li, L.J.; Li, K.; Fei-Fei, L. ImageNet: A large-scale hierarchical image database. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 248–255.

48. Karpathy, A.; Toderici, G.; Shetty, S.; Leung, T.; Sukthankar, R.; Fei-Fei, L. Large-scale video classification with convolutional neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 1725–1732.

49. Xing, X.; Li, Z.; Xu, T.; Shu, L.; Hu, B.; Xu, X. SAE+ LSTM: A New framework for emotion recognition from multi-channel EEG. Front. Neurorobot. 2019, 13, 37.

| Arousal | Low valence | High valence | Total |
|---|---|---|---|
| High | 296 (HALV) | 458 (HAHV) | 754 |
| Low | 260 (LALV) | 266 (LAHV) | 526 |
| Total | 556 | 724 | 1280 |
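The four labels in the table combine a high/low arousal split with a high/low valence split. The sketch below shows one way such labels can be assigned and re-derives the marginal totals from the four cell counts. The threshold of 5 on the rating scale and the function name `quadrant` are assumptions for illustration; the threshold actually used is not stated here.

```python
def quadrant(valence, arousal, threshold=5.0):
    """Map a (valence, arousal) rating pair to HAHV, HALV, LAHV, or LALV.

    The threshold is an assumed midpoint, not a value given in the text.
    """
    a = "HA" if arousal > threshold else "LA"
    v = "HV" if valence > threshold else "LV"
    return a + v


# Cell counts copied from the table.
counts = {"HALV": 296, "HAHV": 458, "LALV": 260, "LAHV": 266}

high_arousal = counts["HALV"] + counts["HAHV"]
low_arousal = counts["LALV"] + counts["LAHV"]
low_valence = counts["HALV"] + counts["LALV"]
high_valence = counts["HAHV"] + counts["LAHV"]
total = sum(counts.values())

print(quadrant(valence=7.0, arousal=8.0))  # HAHV
print(high_arousal, low_arousal, low_valence, high_valence, total)
# 754 526 556 724 1280
```

The counts show that the classes are not balanced: HAHV holds 458 of the 1280 trials, while LALV holds 260.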

| Method | Input | Model | Validation | Valence accuracy | Arousal accuracy |
|---|---|---|---|---|---|
| Li et al. [12] | WT | C-RNN | 5-fold | 0.7206 | 0.7412 |
| Kwon et al. [13] | WT | 2D CNN | 10-fold | 0.7812 | 0.8125 |
| Lin et al. [24] | PSD | 2D CNN | 10-fold * | 0.8550 | 0.8730 |
| Xing et al. [49] | PSD | LSTM | 10-fold | 0.7438 | 0.8110 |
| Yang et al. [9] | DE | 2D CNN | 10-fold * | 0.8945 | 0.9024 |
| Alhagry et al. [28] | raw | LSTM | 4-fold | 0.8565 | 0.8565 |
| Yang et al. [29] | raw | CNN + LSTM | 10-fold * | 0.9080 | 0.9103 |
| Salama et al. [32] | raw | 3D CNN | 5-fold | 0.8744 | 0.8849 |
| Ours (C3D) | raw | 3D CNN | 5-fold | 0.9842 | 0.9904 |
| Ours (R(2 + 1)D) | raw | 3D CNN | 5-fold | 0.9911 | 0.9974 |
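The Validation column lists k-fold schemes. As a reminder of what a 5-fold split does in general, the sketch below partitions sample indices into five disjoint test folds. It is illustrative only: the helper `k_fold_indices` is hypothetical, the count of 1280 is the trial total from the class-distribution table, and how the authors actually formed and shuffled their folds is not stated here.

```python
def k_fold_indices(n_samples, k=5):
    """Yield (train, test) index lists for k contiguous, near-equal folds."""
    base, extra = divmod(n_samples, k)
    start = 0
    for fold in range(k):
        # The first `extra` folds take one additional sample.
        stop = start + base + (1 if fold < extra else 0)
        test = list(range(start, stop))
        train = list(range(0, start)) + list(range(stop, n_samples))
        yield train, test
        start = stop


folds = list(k_fold_indices(1280, k=5))
print([len(test) for _, test in folds])  # [256, 256, 256, 256, 256]
```

Each sample appears in exactly one test fold, and the reported accuracy is typically the mean over the k runs. In practice the indices are usually shuffled before splitting.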

| Method | Input | Model | Validation | Accuracy |
|---|---|---|---|---|
| Kwon et al. [13] | WT | 2D CNN | 10-fold | 0.7343 |
| Li et al. [26] | PSD | CLRNN | 5-fold * | 0.7521 |
| Salama et al. [32] | raw | 3D CNN | 5-fold | 0.9343 |
| Ours (C3D) | raw | 3D CNN | 5-fold | 0.9828 |
| Ours (R(2 + 1)D) | raw | 3D CNN | 5-fold | 0.9973 |

| Input Type | Method | CNN | Valence accuracy | Arousal accuracy | 4-Class accuracy |
|---|---|---|---|---|---|
| handcrafted features | PSD [19] | 2D | 0.9446 | 0.9552 | 0.9326 |
| handcrafted features | PSD [19] | 3D | 0.9543 | 0.9578 | 0.9454 |
| raw data | concatenation [32] | 2D | 0.9048 | 0.9166 | 0.9142 |
| raw data | concatenation [32] | 3D | 0.8883 | 0.8942 | 0.9056 |
| raw data | 3D EEG stream | 3D | 0.9874 | 0.9928 | 0.9932 |
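The result tables name two 3D backbones, C3D and R(2 + 1)D. In the formulation of Tran et al. (2018, listed in the references), a (2 + 1)D block replaces one t × d × d convolution with a 1 × d × d spatial convolution followed by a t × 1 × 1 temporal convolution, with an intermediate width chosen so that the parameter count stays close to that of the full 3D kernel. The sketch below only counts weights for one layer. The layer sizes (64 input and output channels, a 3 × 3 × 3 kernel) and the function names are hypothetical and are not taken from the networks evaluated here.

```python
def conv3d_params(c_in, c_out, t, d):
    """Weights in a full t x d x d 3D convolution (bias ignored)."""
    return c_in * c_out * t * d * d


def mid_channels(c_in, c_out, t, d):
    """Intermediate width M that keeps a (2+1)D block near the 3D parameter count."""
    return (t * d * d * c_in * c_out) // (d * d * c_in + t * c_out)


def conv2plus1d_params(c_in, c_out, t, d):
    """Weights in the factorized block: spatial conv, then temporal conv."""
    m = mid_channels(c_in, c_out, t, d)
    spatial = c_in * m * d * d   # 1 x d x d convolution, c_in -> m channels
    temporal = m * c_out * t     # t x 1 x 1 convolution, m -> c_out channels
    return spatial + temporal


# Hypothetical layer: 64 -> 64 channels, 3 x 3 x 3 kernel.
full = conv3d_params(64, 64, 3, 3)
m = mid_channels(64, 64, 3, 3)
factored = conv2plus1d_params(64, 64, 3, 3)
print(full, m, factored)  # 110592 144 110592
```

With these sizes the two variants have the same number of weights, so any accuracy difference between them would come from the factorization and the extra nonlinearity between the two convolutions rather than from model size.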

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Cho, J.; Hwang, H. Spatio-Temporal Representation of an Electoencephalogram for Emotion Recognition Using a Three-Dimensional Convolutional Neural Network. Sensors 2020, 20, 3491. https://doi.org/10.3390/s20123491
