Implementation and Optimization of a Dual-confocal Autofocusing System

Abstract

1. Introduction

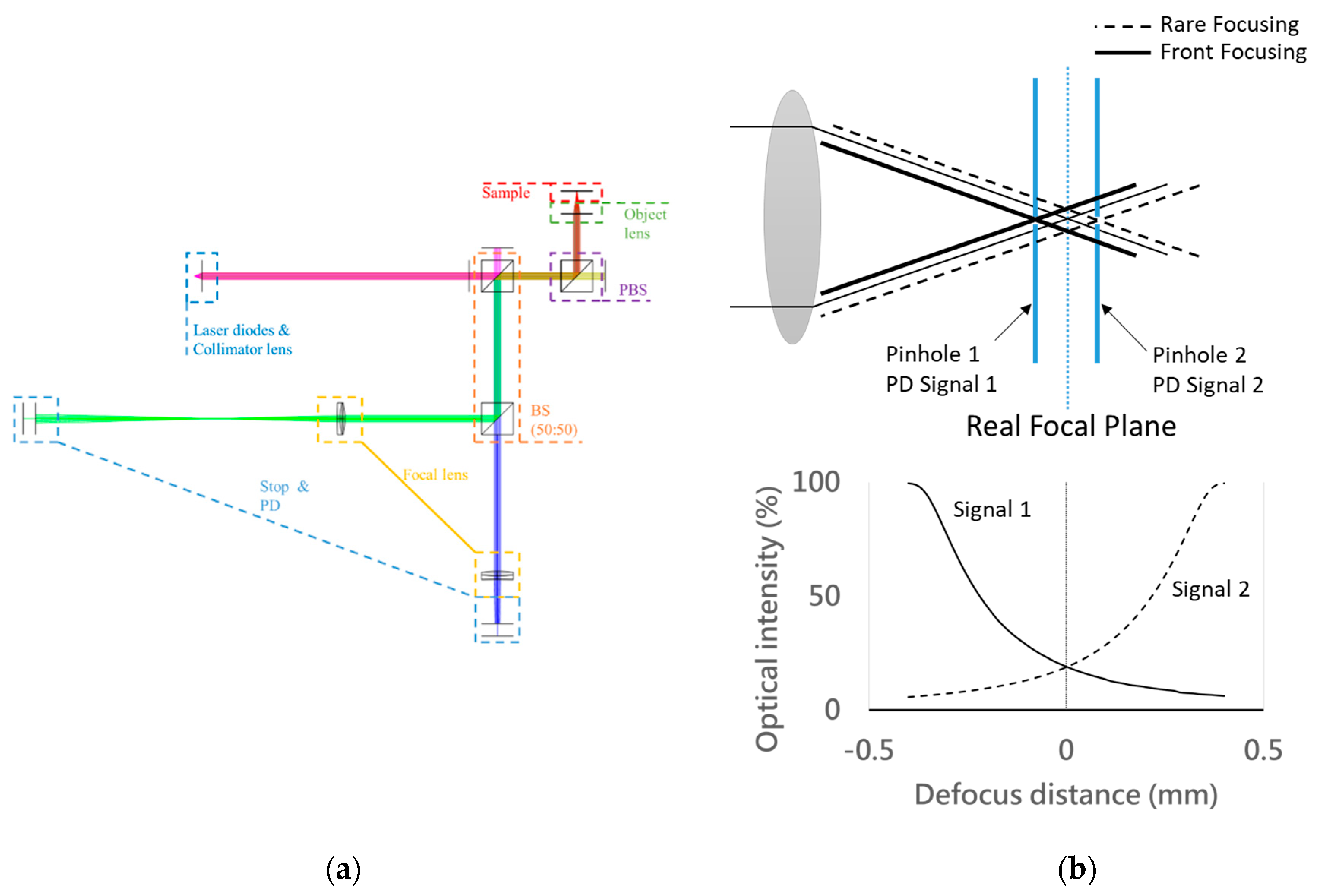

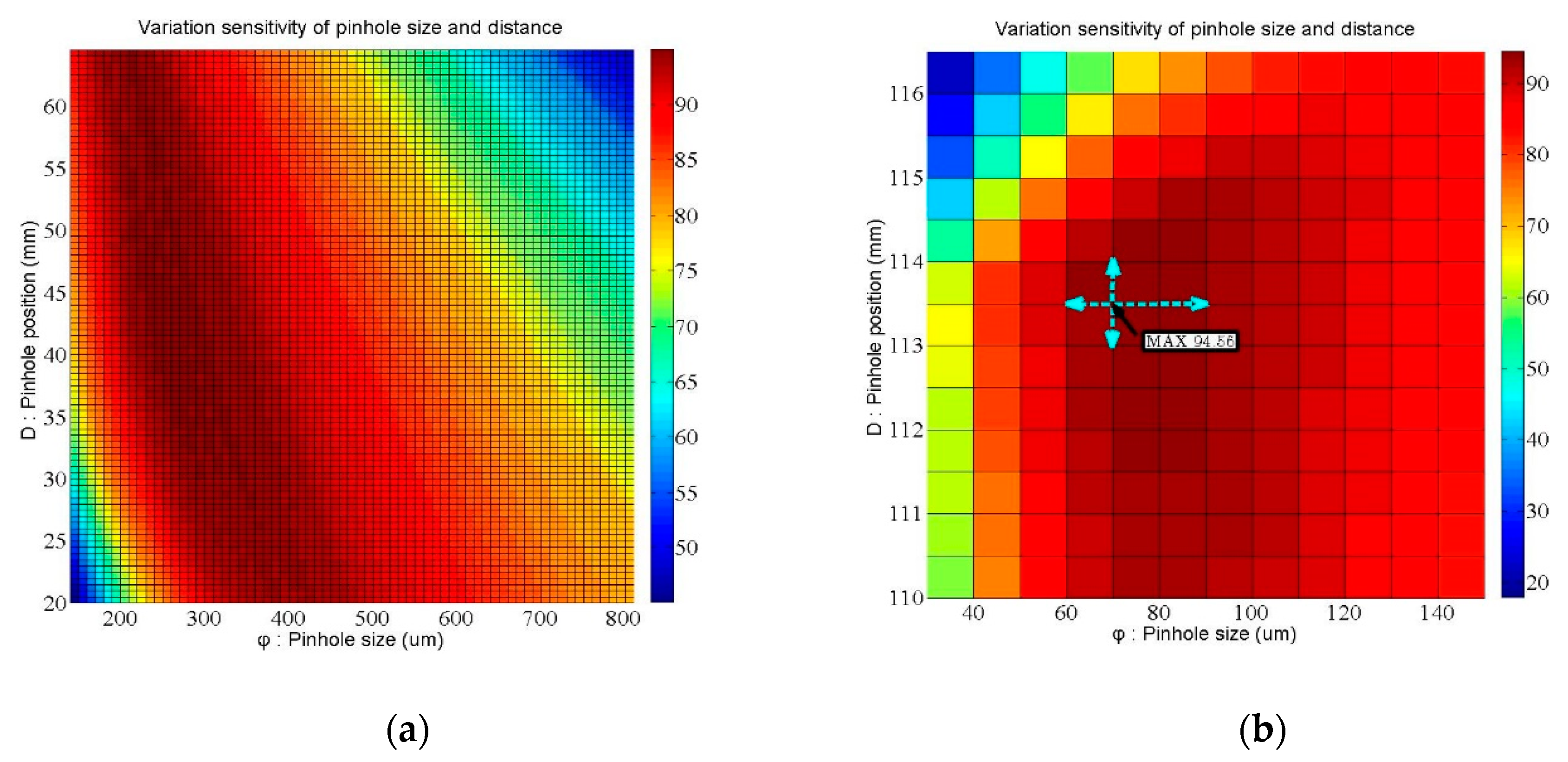

2. Methods

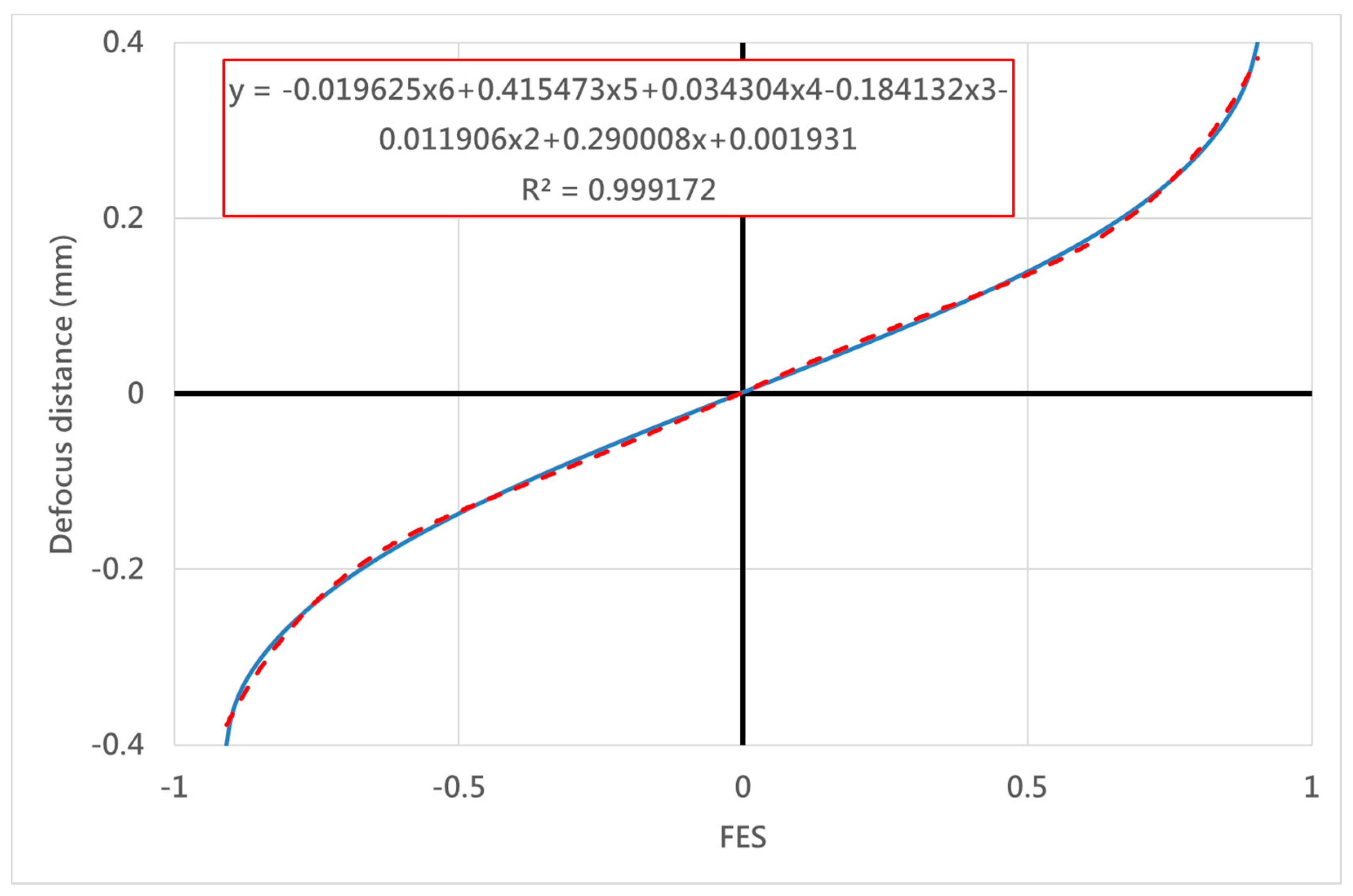

3. System Implementation

4. Results

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

1. Liu, C.S.; Lin, Y.C.; Hu, P.H. Design and characterization of precise laser-based autofocusing microscope with reduced geometrical fluctuations. Microsyst. Technol. 2013, 19, 1717–1724.

2. Wu, L.; Wen, G.; Wang, Y.; Huang, L.; Zhou, J. Enhanced Automated Guidance System for Horizontal Auger Boring Based on Image Processing. Sensors 2018, 18, 595.

3. Kang, J.H.; Lee, C.B.; Joo, J.Y.; Lee, S.K. Phase-locked loop based on machine surface topography measurement using lensed fibers. Appl. Opt. 2011, 50, 460–467.

4. Liu, C.S.; Jiang, S.H. Design and experimental validation of novel enhanced-performance autofocusing microscope. Appl. Phys. B 2014, 117, 1161–1171.

5. Lee, S.; Lee, J.Y.; Yang, W.; Kim, D.Y. Autofocusing and edge detection schemes in cell volume measurements with quantitative phase microscopy. Opt. Express 2009, 17, 6476–6486.

6. Chang, H.C.; Shih, T.M.; Chen, N.Z.; Pu, N.W. A microscope system based on bevel-axial method auto-focus. Opt. Lasers Eng. 2009, 47, 547–551.

7. Chen, C.Y.; Hwang, R.C.; Chen, Y.J. A passive auto-focus camera control system. Appl. Soft Comput. 2010, 10, 296–303.

8. Bueno-Ibarra, M.A.; Alvarez-Borrego, J.; Acho, L.; Chavez-Sanchez, M.C. Fast autofocus algorithm for automated microscopes. Opt. Eng. 2005, 44, 063601-1–063601-8.

9. Lee, J.H.; Kim, Y.S.; Kim, S.R.; Lee, I.H.; Pahk, H.J. Real-time application of critical dimension measurement of TFT-LCD pattern using a newly proposed 2D image-processing algorithm. Opt. Lasers Eng. 2008, 46, 558–569.

10. Brazdilova, S.L.; Kozubek, M. Information content analysis in automated microscopy imaging using an adaptive autofocus algorithm for multimodal functions. J. Microsc. 2009, 236, 194–202.

11. Yazdanfar, S.; Kenny, K.B.; Tasimi, K.; Corwin, A.D.; Dixon, E.L.; Filkins, R.J. Simple and robust image-based autofocusing for digital microscopy. Opt. Express 2008, 16, 8670–8677.

12. Wright, E.F.; Wells, D.M.; French, A.P.; Howells, C.; Everitt, N.M. A low-cost automated focusing system for time-lapse microscopy. Meas. Sci. Technol. 2009, 20, 027003-1–027003-4.

13. Kim, T.; Poon, T.C. Autofocusing in optical scanning holography. Appl. Opt. 2009, 48, H153–H159.

14. Moscaritolo, M.; Jampel, H.; Knezevich, F.; Zeimer, R. An image based auto-focusing algorithm for digital fundus photography. IEEE Trans. Med. Imaging 2009, 28, 1703–1707.

15. Shao, Y.; Qu, J.; Li, H.; Wang, Y.; Qi, J.; Xu, G.; Niu, H. High-speed spectrally resolved multifocal multiphoton microscopy. Appl. Phys. B 2010, 99, 633–637.

16. Abdullah, S.J.; Ratnam, M.M.; Samad, Z. Error-based autofocus system using image feedback in a liquid-filled diaphragm lens. Opt. Eng. 2009, 48, 123602-1–123602-9.

17. Jung, B.J.; Kong, H.J.; Jeon, B.G.; Yang, D.Y.; Son, Y.; Lee, K.S. Autofocusing method using fluorescence detection for precise two-photon nanofabrication. Opt. Express 2011, 19, 22659–22668.

18. Zhang, P.; Prakash, J.; Zhang, Z.; Mills, M.S.; Efremidis, N.K.; Christodoulides, D.N.; Chen, Z. Trapping and guiding microparticles with morphing autofocusing Airy beams. Opt. Lett. 2011, 36, 2883–2885.

19. Wang, S.H.; Tay, C.J.; Quan, C.; Shang, H.M.; Zhou, Z.F. Laser integrated measurement of surface roughness and micro-displacement. Meas. Sci. Technol. 2000, 11, 454–458.

20. Fan, K.C.; Chu, C.L.; Mou, J.I. Development of a low-cost autofocusing probe for profile measurement. Meas. Sci. Technol. 2001, 12, 2137–2146.

21. Tanaka, Y.; Watanabe, T.; Hamamoto, K.; Kinoshita, H. Development of nanometer resolution focus detector in vacuum for extreme ultraviolet microscope. Jpn. J. Appl. Phys. 2006, 45, 7163–7166.

22. Li, Z.; Wu, K. Autofocus system for space cameras. Opt. Eng. 2005, 44, 053001-1–053001-5.

23. Rhee, H.G.; Kim, D.I.; Lee, Y.W. Realization and performance evaluation of high speed autofocusing for direct laser lithography. Rev. Sci. Instrum. 2009, 80, 073103-1–073103-5.

24. He, M.; Zhang, W.; Zhang, X. A displacement sensor of dual-light based on FPGA. Optoelectron. Lett. 2007, 3, 294–298.

25. Kim, K.H.; Lee, S.Y.; Kim, S.; Jeong, S.G. DNA microarray scanner with a DVD pick-up head. Curr. Appl. Phys. 2008, 8, 687–691.

26. Liu, C.S.; Jiang, S.H. A novel laser displacement sensor with improved robustness toward geometrical fluctuations of the laser beam. Meas. Sci. Technol. 2013, 24, 105101-1–105101-8.

27. Liu, C.S.; Hu, P.H.; Lin, Y.C. Design and experimental validation of novel optics-based autofocusing microscope. Appl. Phys. B 2012, 109, 259–268.

28. Liu, C.S.; Wang, Z.Y.; Chang, Y.C. Design and characterization of high-performance autofocusing microscope with zoom in/out functions. Appl. Phys. B 2015, 121, 69–80.

29. Liu, C.S.; Jiang, S.H. Precise autofocusing microscope with rapid response. Opt. Lasers Eng. 2015, 66, 294–300.

30. Liu, C.S.; Song, R.C.; Fu, S.J. Design of a laser-based autofocusing microscope for a sample with a transparent boundary layer. Appl. Phys. B 2019, 125, 199.

31. Jeon, J.; Yoon, I.; Kim, D.; Lee, J.; Paik, J. Fully digital auto-focusing system with automatic focusing region selection and point spread function estimation. IEEE Trans. Consum. Electron. 2010, 56, 1204–1210.

32. Koh, K.; Kuk, J.G.; Jin, B.; Choi, W.; Cho, N.I. Autofocus method using dual aperture and color filters. J. Electron. Imaging 2011, 20, 033002-1–033002-6.

33. Yamana, M. Automatic Focal-Point Sensing Apparatus Sensing High and Low Magnification. U.S. Patent 5245173, 30 November 1993.

34. Xu, X.; Wang, Y.; Tang, J.; Zhang, X.; Liu, X. Robust Automatic Focus Algorithm for Low Contrast Images Using a New Contrast Measure. Sensors 2011, 11, 8281–8294.

35. Aoyama, T.; Takeno, S.; Takeuchi, M.; Hasegawa, Y. Head-Mounted Display-Based Microscopic Imaging System with Customizable Field Size and Viewpoint. Sensors 2020, 20, 1967.

36. Yang, C.; Chen, M.; Zhou, F.; Li, W.; Peng, Z. Accurate and Rapid Auto-Focus Methods Based on Image Quality Assessment for Telescope Observation. Appl. Sci. 2020, 10, 658.

37. Werner, T.; Carrasco, J. Validating Autofocus Algorithms with Automated Tests. Robotics 2018, 7, 33.

38. Zheng, F.; Zhang, B.; Gao, R.; Feng, Q. A High-Precision Method for Dynamically Measuring Train Wheel Diameter Using Three Laser Displacement Transducers. Sensors 2019, 19, 4148.

39. Zheng, F.; Feng, Q.; Zhang, B.; Li, J. A Method for Simultaneously Measuring 6DOF Geometric Motion Errors of Linear and Rotary Axes Using Lasers. Sensors 2019, 19, 1764.

40. Chen, Y.-T.; Huang, Y.-S.; Liu, C.-S. An Optical Sensor for Measuring the Position and Slanting Direction of Flat Surfaces. Sensors 2016, 16, 1061.

41. Wang, Y.H.; Hu, P.H.; Lin, Y.C.; Ke, S.S.; Chang, Y.H.; Liu, C.S.; Hong, J.B. Dual-confocal auto-focus sensing system in ultrafast laser application. In Proceedings of the 2010 IEEE Sensors, Kona, HI, USA, 1–4 November 2010; pp. 486–489.

| Development Team | Key Points | Compared with Our Proposed System | Reference |
|---|---|---|---|
| Chen et al. | | Uses a complex algorithm | [7] |
| Jeon et al. | | Has a high-cost image capturing system | [31] |
| Koh et al. | | Requires a complex algorithm to deal with blurred images | [32] |
| Yamana et al. | | Requires high-cost, precise positioning for image capturing | [33] |
| Wang et al. | | Does not retrieve the moving direction near the focal plane | [41] |

| Key Parts | Device |
|---|---|
| Laser Light Source | Laser diode (Thorlabs HL6501MG) |
| Collimator | Thorlabs LT110P-A (f = 6.24 mm); Thorlabs LT240P-A (f = 8 mm) |
| Beam Splitter (BS) | Thorlabs CM1-BS013 (50:50) |
| Polarized Beam Splitter (PBS) | Thorlabs CM1-PBS251 |
| Objective | Olympus Co. (f = 18 mm) |
| Focusing Lens | Thorlabs AC254-100A; Thorlabs AC254-200A |
| Pinholes | Thorlabs P75S; Thorlabs P300S; Thorlabs P400S |
| Photodetector (PD) | Thorlabs PDA100A |
| Co-axial Vision | Navitar 1-6030, 1-60255 |
| Motor | Newport ILS-250HA |

| SET | EFL (mm) | Pinhole Position D (mm) | Pinhole Size ψ (μm) | ΔX1 (mm) | Slope 1 | ΔX2 (mm) | Slope 2 |
|---|---|---|---|---|---|---|---|
| 1 | 100 | 113.5 | 60 | 1.436 | 4.178 | 9.618 | 0.624 |
| 2 | 100 | 113.5 | 70 | 1.465 | 4.095 | 9.361 | 0.641 |
| 3 | 100 | 113.5 | 75 | 1.493 | 4.019 | 10.342 | 0.58 |
| 4 | 100 | 113.5 | 80 | 1.518 | 3.952 | 11.314 | 0.53 |
| 5 | 100 | 113.5 | 90 | 1.581 | 3.795 | 13.583 | 0.442 |
| 7 | 100 | 113.5 | 75 | 1.465 | 4.095 | 9.361 | 0.641 |
| 8 | 100 | 114 | 75 | 1.527 | 3.929 | 8.903 | 0.674 |

| SET | EFL (mm) | Pinhole Position D (mm) | Pinhole Size ψ (μm) | ΔX1 (mm) | Slope 1 | ΔX2 (mm) | Slope 2 |
|---|---|---|---|---|---|---|---|
| 10 | 200 | 273.5 | 140 | 1.431 | 4.192 | 7.323 | 0.819 |
| 11 | 200 | 273.5 | 150 | 1.453 | 4.129 | 8.181 | 0.733 |
| 12 | 200 | 273.5 | 160 | 1.48 | 4.055 | 9.49 | 0.632 |
| 13 | 200 | 273.5 | 170 | 1.497 | 4.007 | 9.356 | 0.641 |
| 14 | 200 | 273.5 | 180 | 1.517 | 3.956 | 10.061 | 0.596 |
| 15 | 200 | 273.5 | 190 | 1.539 | 3.9 | 10.977 | 0.547 |
| 16 | 200 | 273.5 | 200 | 1.557 | 3.855 | 10.74 | 0.559 |
| 17 | 200 | 273.5 | 210 | 1.578 | 3.803 | 11.116 | 0.54 |
| 18 | 200 | 273.5 | 220 | 1.609 | 3.728 | 13.002 | 0.461 |
| 20 | 200 | 270.5 | 160 | 1.429 | 4.197 | 11.135 | 0.539 |
| 21 | 200 | 271 | 160 | 1.43 | 4.195 | 9.959 | 0.602 |
| 22 | 200 | 271.5 | 160 | 1.44 | 4.168 | 9.77 | 0.614 |
| 23 | 200 | 272 | 160 | 1.442 | 4.161 | 8.8 | 0.682 |
| 24 | 200 | 272.5 | 160 | 1.451 | 4.134 | 8.665 | 0.692 |
| 25 | 200 | 273 | 160 | 1.465 | 4.096 | 8.896 | 0.674 |
| 26 | 200 | 273.5 | 160 | 1.48 | 4.055 | 9.49 | 0.632 |
| 27 | 200 | 274 | 160 | 1.487 | 4.035 | 9.142 | 0.656 |
| 28 | 200 | 274.5 | 160 | 1.493 | 4.019 | 8.307 | 0.722 |
| 29 | 200 | 275 | 160 | 1.504 | 3.988 | 8.401 | 0.714 |
| 30 | 200 | 275.5 | 160 | 1.512 | 3.969 | 8.13 | 0.738 |
| 31 | 200 | 276 | 160 | 1.517 | 3.955 | 7.904 | 0.759 |
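
One pattern can be read directly from the tabulated numbers in the two parameter-sweep tables above: in every row, each slope multiplied by its corresponding ΔX comes out close to 6. This is an observation on the data, not a statement taken from the paper's text, and the interpretation (that each slope may be a fixed signal span divided by the linear range ΔX) is an assumption. The following Python sketch checks the product for a handful of rows copied from the tables; the names `ROWS` and `slope_range_products` are hypothetical.

```python
# Rows copied from the parameter-sweep tables above:
# (SET, dX1 in mm, Slope 1, dX2 in mm, Slope 2)
ROWS = [
    (1, 1.436, 4.178, 9.618, 0.624),
    (5, 1.581, 3.795, 13.583, 0.442),
    (10, 1.431, 4.192, 7.323, 0.819),
    (18, 1.609, 3.728, 13.002, 0.461),
    (31, 1.517, 3.955, 7.904, 0.759),
]


def slope_range_products(rows):
    """Return {SET: (dX1 * Slope 1, dX2 * Slope 2)} for each row."""
    return {s: (dx1 * k1, dx2 * k2) for s, dx1, k1, dx2, k2 in rows}


# Assumption: if slope = span / dX with a common span, both products
# should be nearly identical across all rows.
products = slope_range_products(ROWS)
for set_id, (p1, p2) in products.items():
    print(f"SET {set_id:>2}: dX1*slope1 = {p1:.3f}, dX2*slope2 = {p2:.3f}")
```

If the products hold across all rows, only one of each ΔX and slope pair is independent, so the two columns can be cross-checked against each other when reading the tables.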

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Jan, C.-M.; Liu, C.-S.; Yang, J.-Y. Implementation and Optimization of a Dual-confocal Autofocusing System. Sensors 2020, 20, 3479. https://doi.org/10.3390/s20123479
