Anomaly Detection Neural Network with Dual Auto-Encoders GAN and Its Industrial Inspection Applications

Abstract

1. Introduction

- Training and verification of DAGAN on the public industrial inspection dataset MVTec AD, and comparison with previous GAN-based anomaly detection networks.

- Verification of DAGAN’s detection ability in an actual production line with two datasets (a mobile phone screen glass dataset and a wood surface defect dataset).

- Verification of DAGAN’s inspection capability with less training data.

2. Related Works

2.1. Generative Adversarial Network (GAN)

2.2. Boundary Equilibrium Generative Adversarial Network (BEGAN)

2.3. AnoGAN

2.4. GANomaly

2.5. Skip-GANomaly

3. Proposed Method

3.1. Pipeline

3.2. Training Objective

- Adversarial loss: To give the generator the best possible image reconstruction ability, an adversarial loss function is used. As shown in Equation (1), when the generator is trained, this loss reduces the difference between the input image x and the generated fake image as much as possible, whereas the discriminator is trained to distinguish the original input image x from the fake image produced by the generator as well as possible. The goal is to minimize the adversarial loss of the generator and maximize the adversarial loss of the discriminator. The adversarial loss can be expressed as:

- Contextual loss of generator: To give the generator better image reconstruction ability, the proposed method uses a contextual loss function that measures the pixel-wise difference between x and the generated fake image. It is defined as the L2 distance between the input image x and the generated fake image, which keeps the fake image as consistent with the input image as possible. The contextual loss of the generator is defined as:

- Contextual loss of discriminator: To reach the best equilibrium point quickly during training, a contextual loss is also set for the discriminator. This loss is the L2 distance between image x and the image reconstructed by the discriminator, which keeps the discriminator's output as consistent with the input image as possible. The contextual loss of the discriminator is defined as:
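As a rough illustration of the two contextual losses described above, the following sketch computes an L2 distance between each input image and its reconstruction. It is not the authors' implementation: the function names `l2_distance` and `contextual_loss`, the flattened pixel lists, the stand-in outputs `g_x` and `d_x`, and the averaging over the batch are all assumptions made for this example. The adversarial loss is left out because its exact form is not given in the text.

```python
import math
from typing import Sequence


def l2_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Euclidean (L2) distance between two flattened images of equal size."""
    if len(a) != len(b):
        raise ValueError("images must have the same number of pixels")
    return math.sqrt(sum((p - q) ** 2 for p, q in zip(a, b)))


def contextual_loss(
    inputs: Sequence[Sequence[float]],
    reconstructions: Sequence[Sequence[float]],
) -> float:
    """Mean L2 distance over a batch (the batch averaging is an assumption)."""
    if not inputs or len(inputs) != len(reconstructions):
        raise ValueError("batches must be non-empty and of equal length")
    distances = [l2_distance(x, r) for x, r in zip(inputs, reconstructions)]
    return sum(distances) / len(distances)


# Hypothetical batch of two 4-pixel images (flattened).
x = [[0.0, 0.0, 0.0, 0.0], [1.0, 1.0, 1.0, 1.0]]
# Stand-ins for the generator and discriminator reconstructions of x.
g_x = [[1.0, 1.0, 1.0, 1.0], [1.0, 1.0, 1.0, 1.0]]
d_x = [[0.0, 0.0, 0.0, 0.0], [1.0, 1.0, 1.0, 0.0]]

loss_con_g = contextual_loss(x, g_x)  # contextual loss of the generator
loss_con_d = contextual_loss(x, d_x)  # contextual loss of the discriminator
print(loss_con_g, loss_con_d)
```

In a real training loop the same quantity would be computed on image tensors by a deep learning framework so that gradients can flow back into the generator and discriminator; the plain-Python version here only shows the arithmetic.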

3.3. Detection Process

4. Experimental Setup

4.1. Datasets

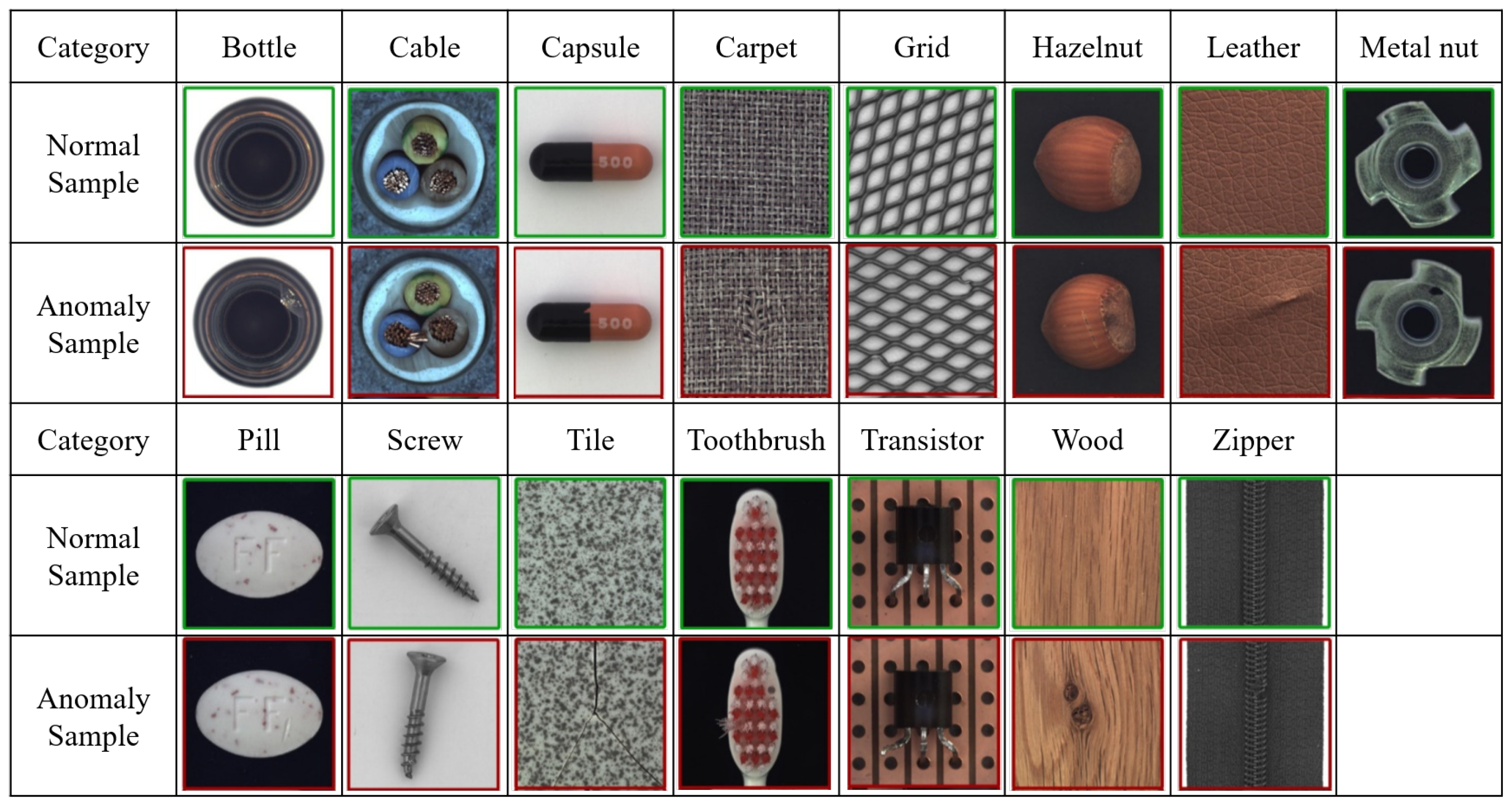

4.1.1. MVTec AD

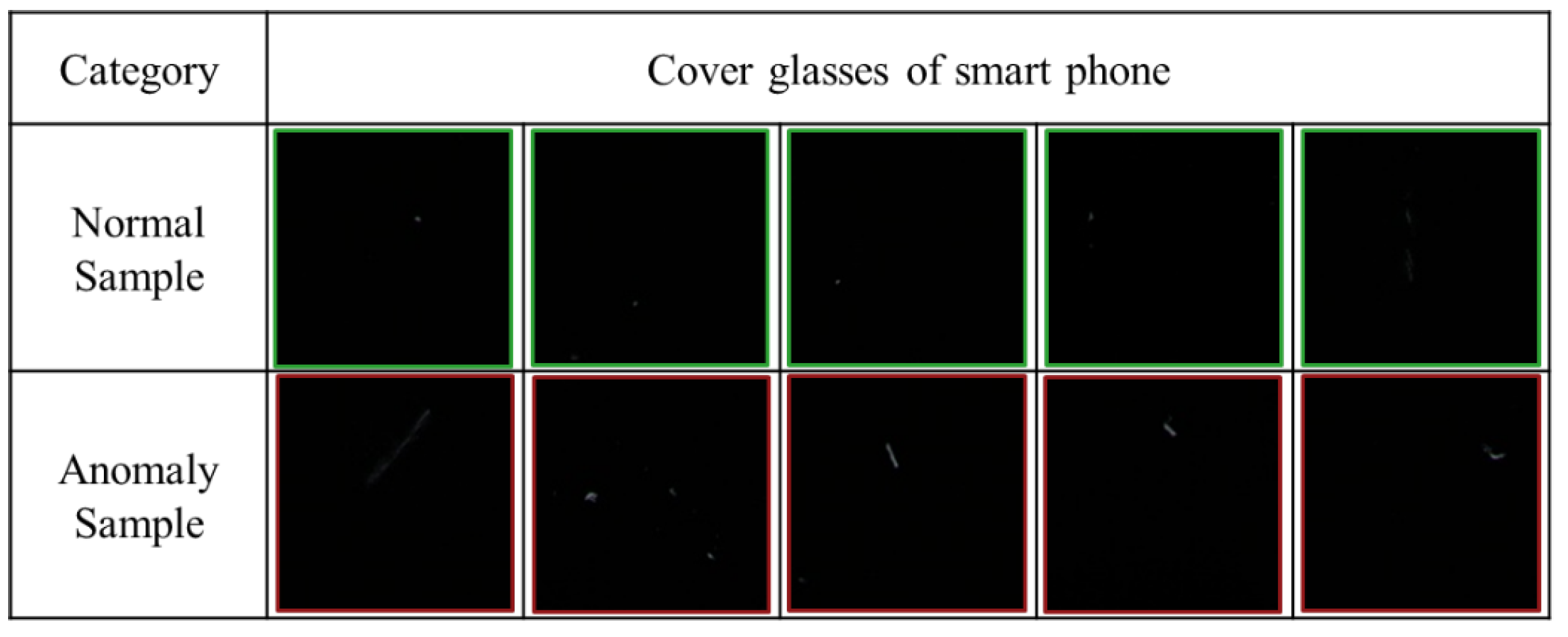

4.1.2. Production Line Mobile Phone Screen Glass Dataset

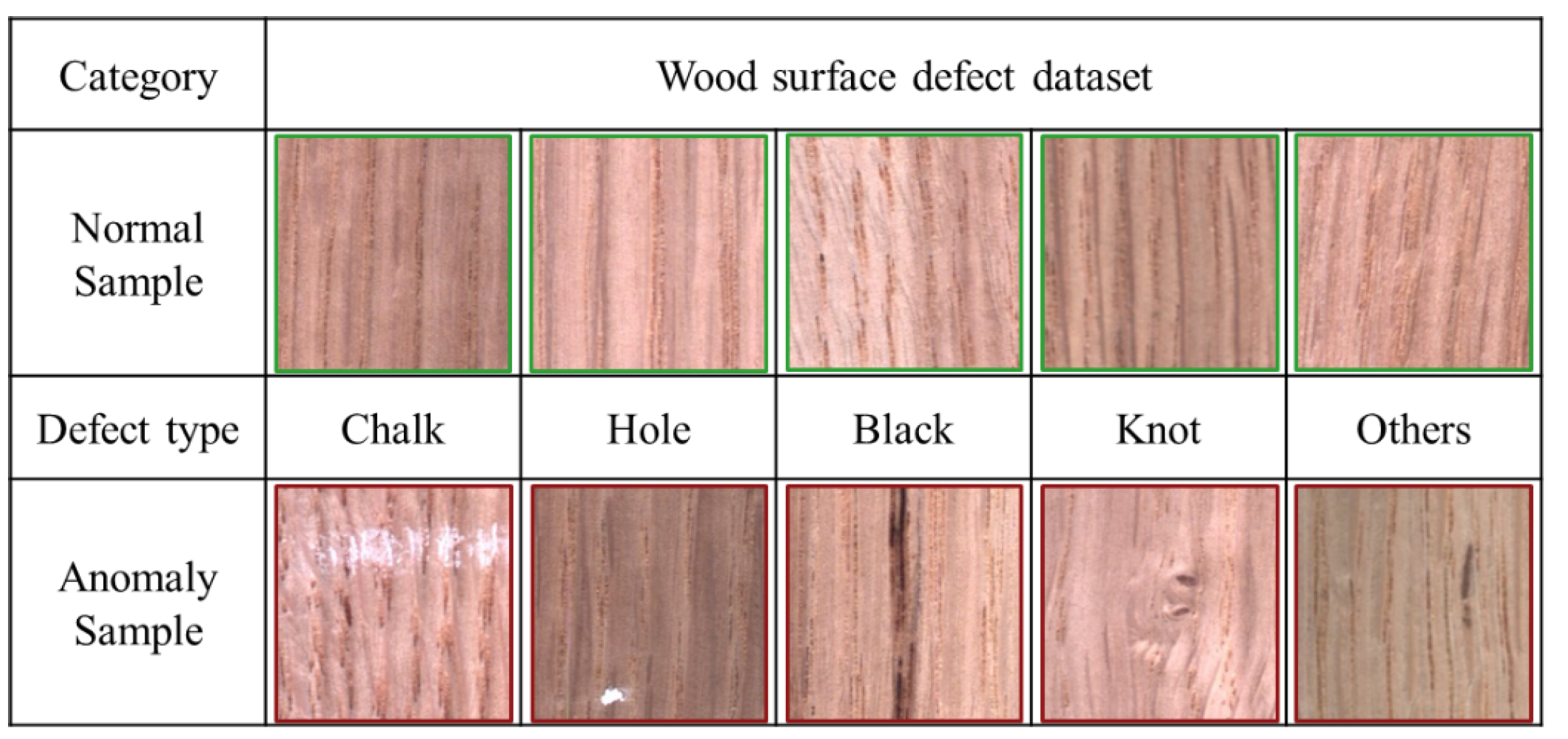

4.1.3. Production Line Wood Surface Dataset

4.2. Training Detail

4.3. Evaluation

5. Experiment Results

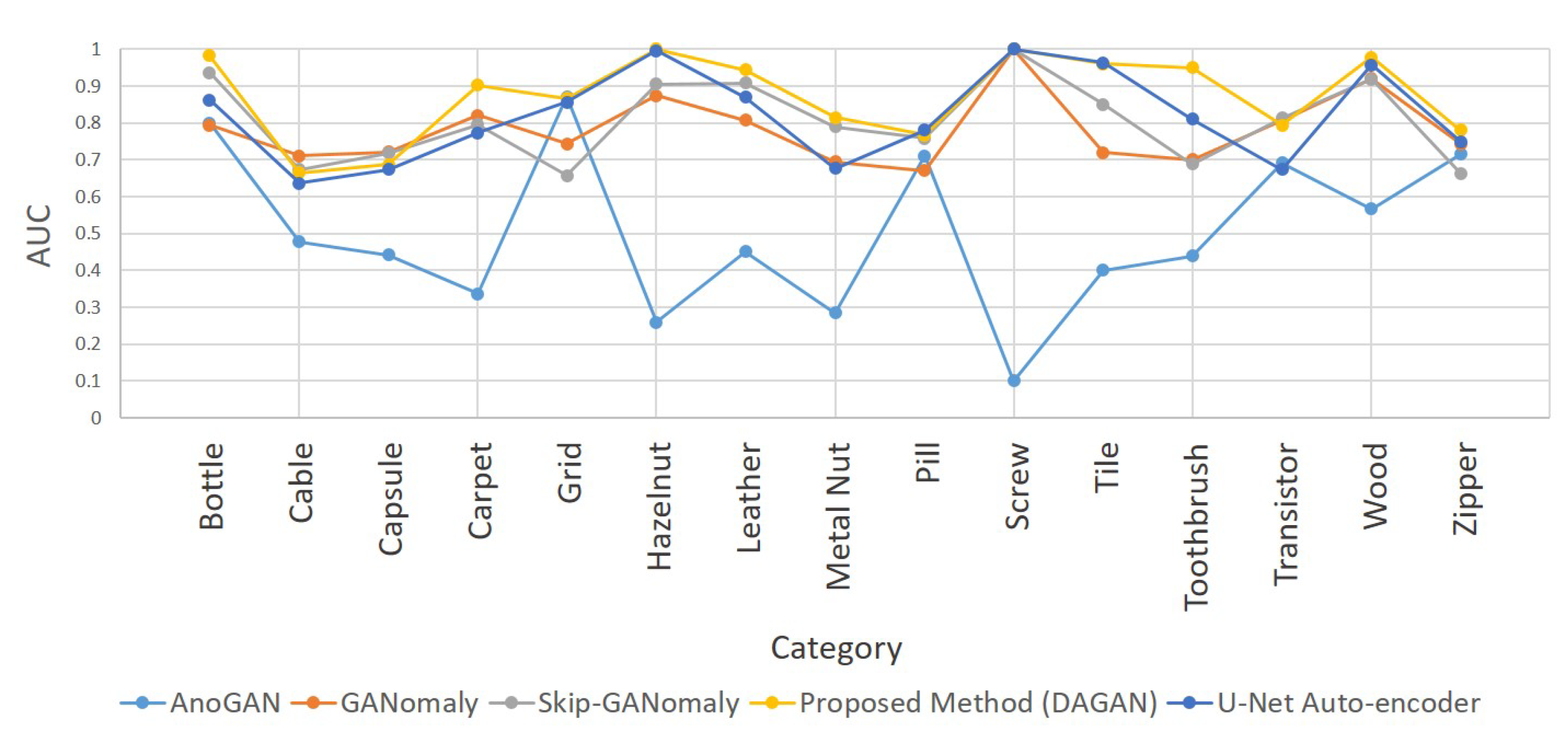

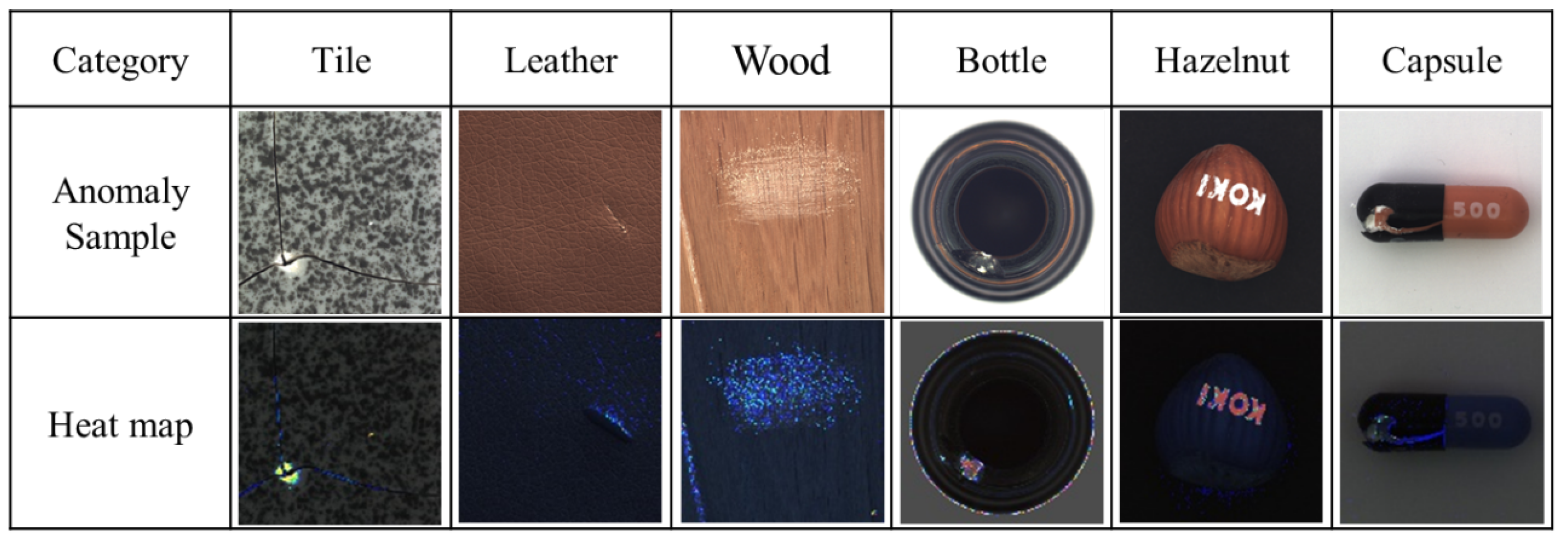

5.1. MVTec AD Dataset

5.2. Production Line Mobile Phone Screen Glass and Wood Surface Dataset

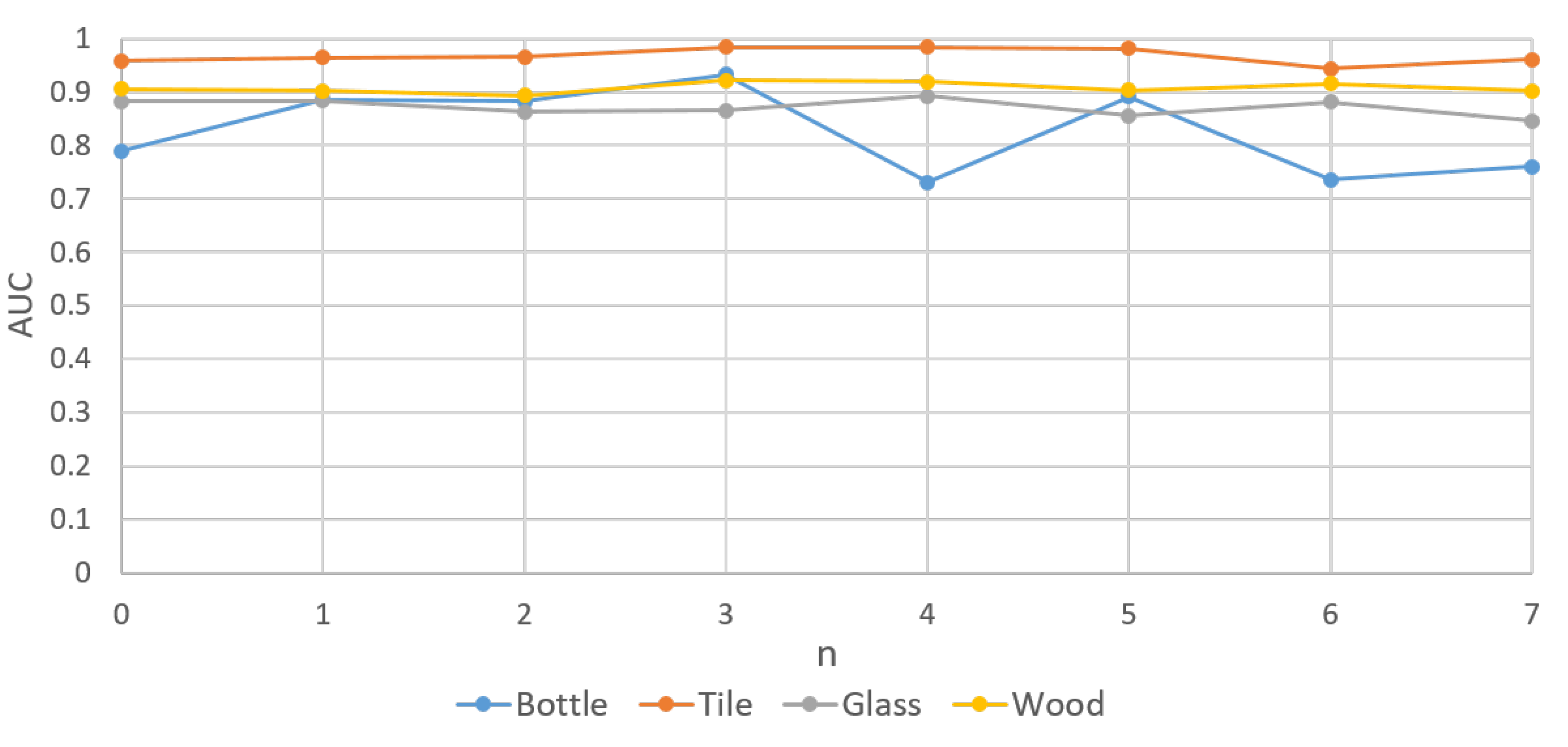

5.3. Training with Few Data

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| | AnoGAN | GANomaly | Skip-GANomaly |
|---|---|---|---|
| Advantages | Training without anomaly data. | Significant improvement in detection time. | Better image reconstruction ability. |
| Limitations | Excessive detection time. | Cannot reconstruct complex images. | Model collapse during training. |

| Category | AnoGAN | GANomaly | Skip-GANomaly | DAGAN | U-Net |
|---|---|---|---|---|---|
| Bottle | 0.800 | 0.794 | 0.937 | 0.983 | 0.863 |
| Cable | 0.477 | 0.711 | 0.674 | 0.665 | 0.636 |
| Capsule | 0.442 | 0.721 | 0.718 | 0.687 | 0.673 |
| Carpet | 0.337 | 0.821 | 0.795 | 0.903 | 0.774 |
| Grid | 0.871 | 0.743 | 0.657 | 0.867 | 0.857 |
| Hazelnut | 0.259 | 0.874 | 0.906 | 1.00 | 0.996 |
| Leather | 0.451 | 0.808 | 0.908 | 0.944 | 0.870 |
| Metal Nut | 0.284 | 0.694 | 0.79 | 0.815 | 0.676 |
| Pill | 0.711 | 0.671 | 0.758 | 0.768 | 0.781 |
| Screw | 0.10 | 1.00 | 1.00 | 1.00 | 1.00 |
| Tile | 0.401 | 0.72 | 0.85 | 0.961 | 0.964 |
| Toothbrush | 0.439 | 0.700 | 0.689 | 0.950 | 0.811 |
| Transistor | 0.692 | 0.808 | 0.814 | 0.794 | 0.674 |
| Wood | 0.567 | 0.920 | 0.919 | 0.979 | 0.958 |
| Zipper | 0.715 | 0.744 | 0.663 | 0.781 | 0.750 |

| Category | AnoGAN | GANomaly | Skip-GANomaly | DAGAN | U-Net |
|---|---|---|---|---|---|
| Glass | 0.543 | 0.600 | 0.618 | 0.853 | 0.828 |
| Wood | 0.716 | 0.915 | 0.797 | 0.925 | 0.886 |

| n | Bottle | Tile | Glass | Wood |
|---|---|---|---|---|
| 0 | 0.790 | 0.958 | 0.882 | 0.906 |
| 1 | 0.886 | 0.964 | 0.883 | 0.902 |
| 2 | 0.882 | 0.966 | 0.863 | 0.893 |
| 3 | 0.933 | 0.984 | 0.865 | 0.921 |
| 4 | 0.731 | 0.984 | 0.892 | 0.919 |
| 5 | 0.891 | 0.981 | 0.856 | 0.903 |
| 6 | 0.736 | 0.943 | 0.881 | 0.915 |
| 7 | 0.760 | 0.961 | 0.846 | 0.902 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Tang, T.-W.; Kuo, W.-H.; Lan, J.-H.; Ding, C.-F.; Hsu, H.; Young, H.-T. Anomaly Detection Neural Network with Dual Auto-Encoders GAN and Its Industrial Inspection Applications. Sensors 2020, 20, 3336. https://doi.org/10.3390/s20123336
