Automatic 360° Mono-Stereo Panorama Generation Using a Cost-Effective Multi-Camera System

Abstract

1. Introduction

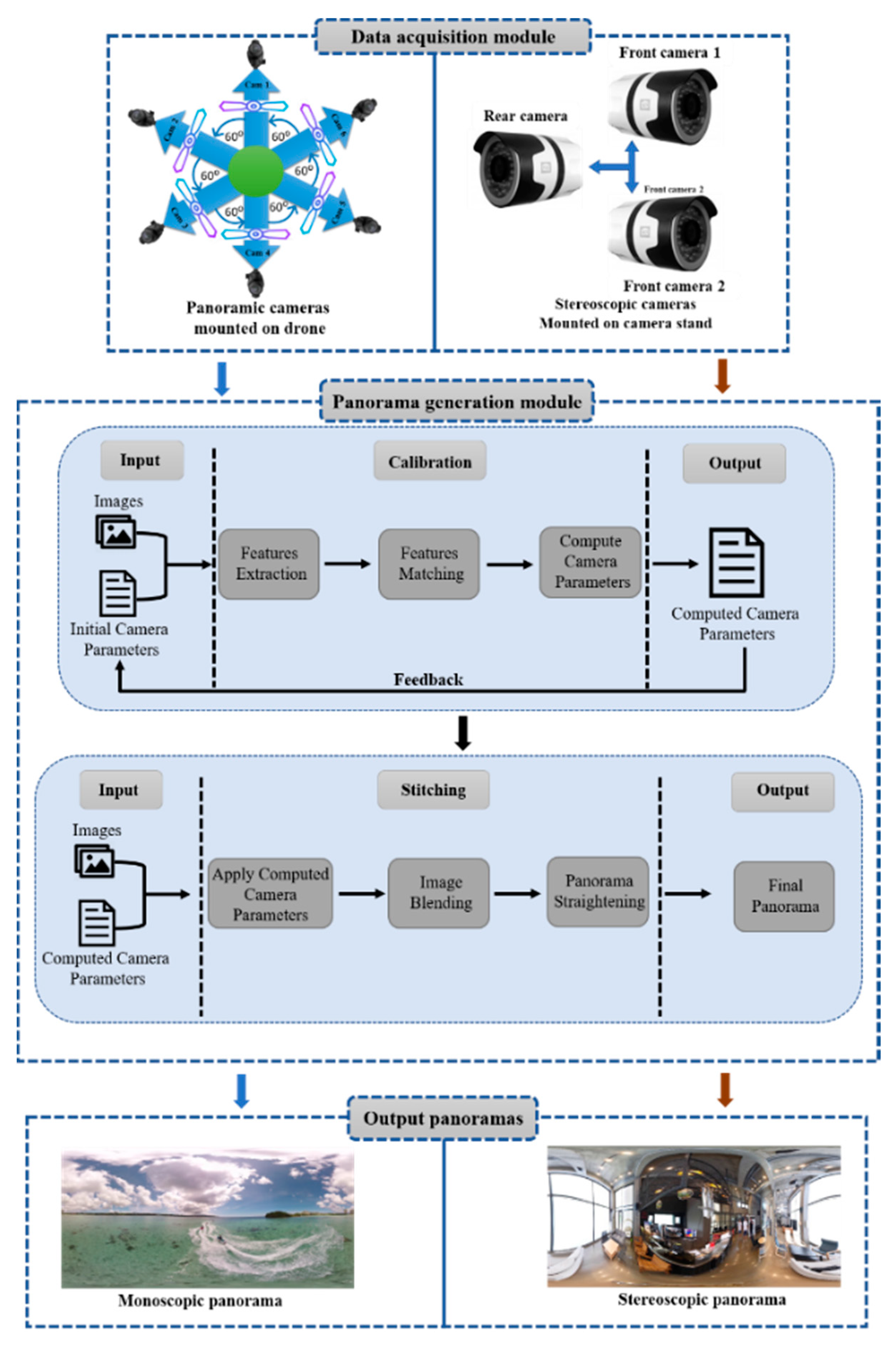

- An efficient and cost-effective multi-camera system is proposed for generating 360° panoramas. Because the cameras are precisely placed so that adjacent views overlap sufficiently, the panorama generation module is fully automatic: it directly stitches the images captured by the proposed acquisition system without any user interaction. Furthermore, the panoramas generated by our system have no visible seams and are automatically straightened.

- Compared with existing panorama generation systems, the proposed system reduces computational cost and time complexity by using a portable image acquisition system with only six cameras for mono content generation and three for stereo content generation.

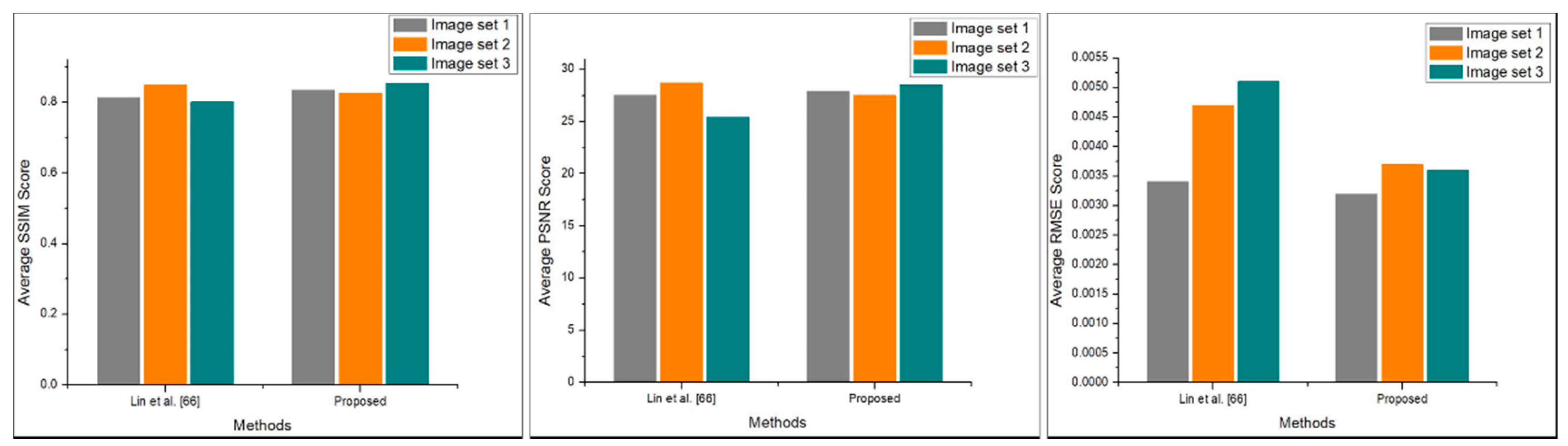

- The proposed system outperforms existing mono and stereo content generation systems in both qualitative and quantitative evaluations.

2. Related Work

3. Proposed Methodology

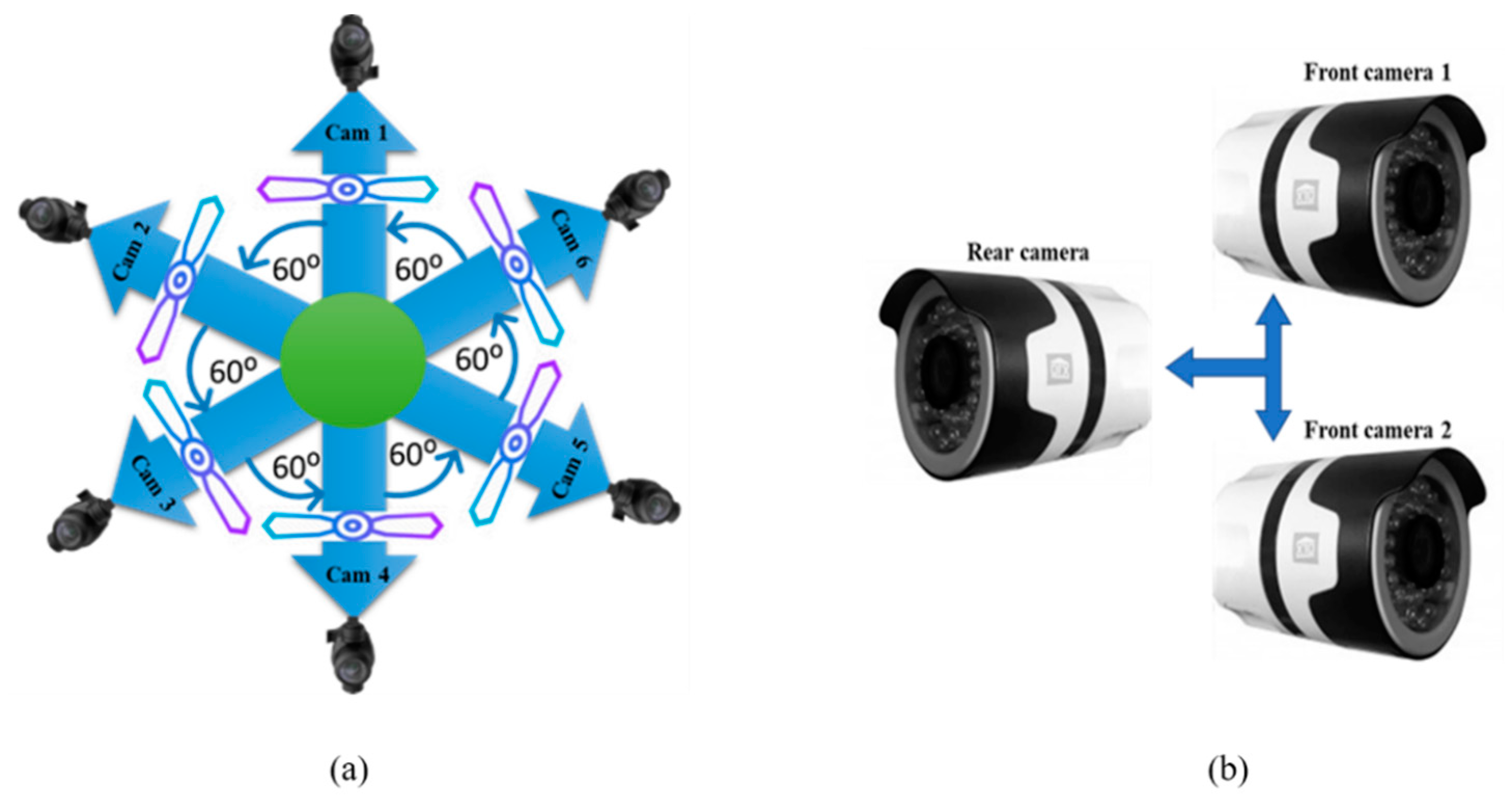

3.1. Data Acquisition

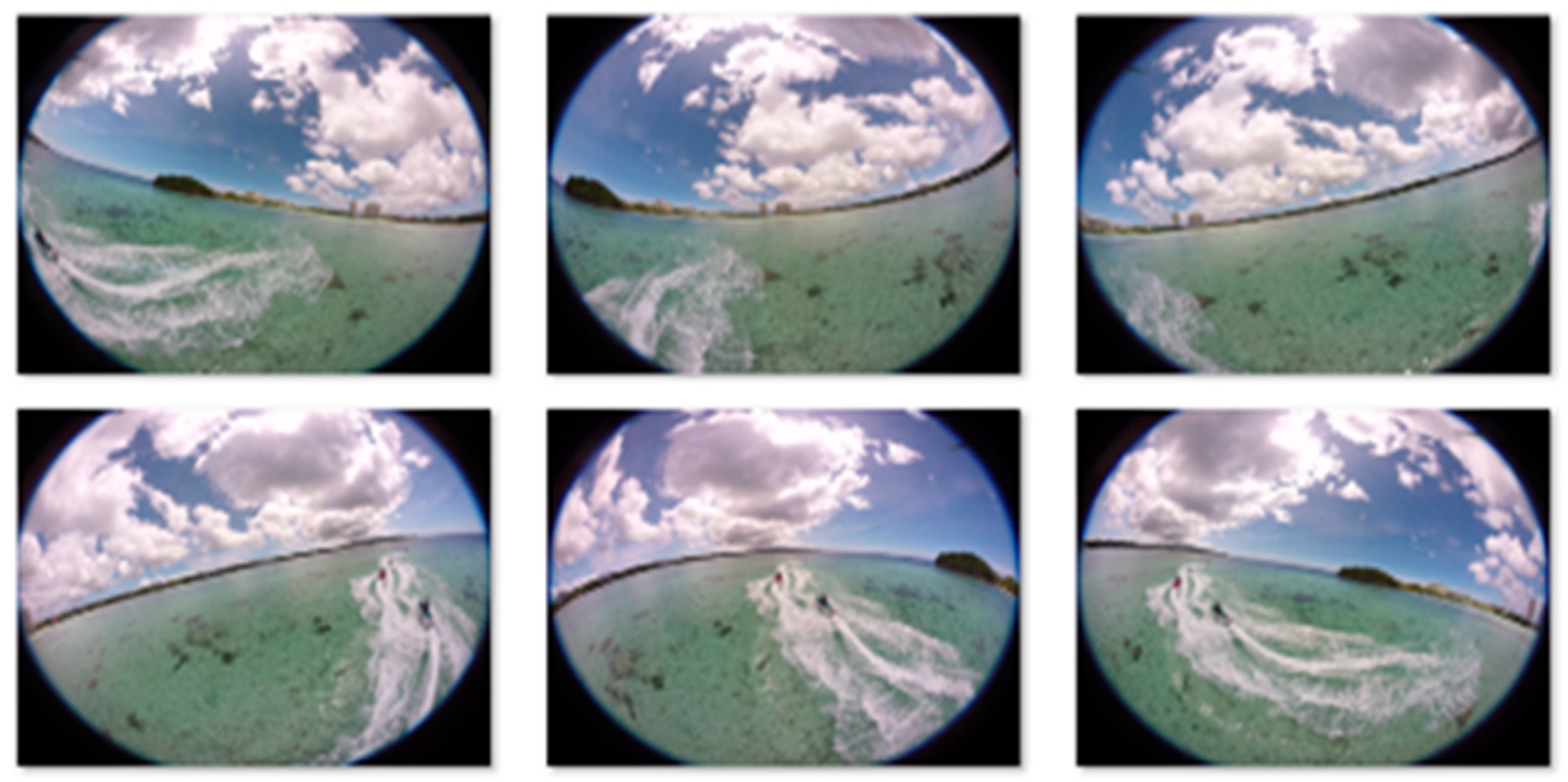

3.1.1. Mono Data Generation

3.1.2. Stereo Data Generation

3.2. Panorama Generation Module

3.2.1. Camera Calibration

Feature Extraction

Feature Matching

Optimization of Camera Parameters
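Algorithm 1 ends with a camera computation function Φ applied to the matched features. As an illustration only, the sketch below shows one standard way such a function can recover relative orientation: if two cameras share a single optical centre, the homography between their images has the form H = K R K^(-1), so the rotation R follows once the intrinsic matrix K is known. The shared optical centre, the intrinsics K = diag(f, f, 1) with pixel coordinates measured from the principal point, and the yaw sign convention are assumptions made for this example, not details of the proposed system; the function name `yaw_from_homography` is hypothetical.

```python
import math


def yaw_from_homography(h, focal):
    """Relative yaw (degrees) of two purely rotating cameras from H = K R K^-1.

    Hypothetical intrinsics: K = diag(focal, focal, 1), principal point at the origin.
    Yaw is read as atan2(R[0][2], R[2][2]), i.e. a rotation about the vertical axis.
    """
    k = (focal, focal, 1.0)
    # For diagonal K, (K^-1 H K)[i][j] = H[i][j] * k[j] / k[i].
    r = [[h[i][j] * k[j] / k[i] for j in range(3)] for i in range(3)]
    # H is only defined up to scale (possibly negative): rescale so det(R) = 1.
    det = (r[0][0] * (r[1][1] * r[2][2] - r[1][2] * r[2][1])
           - r[0][1] * (r[1][0] * r[2][2] - r[1][2] * r[2][0])
           + r[0][2] * (r[1][0] * r[2][1] - r[1][1] * r[2][0]))
    scale = math.copysign(abs(det) ** (1.0 / 3.0), det)
    r = [[v / scale for v in row] for row in r]
    return math.degrees(math.atan2(r[0][2], r[2][2]))
```

For an ideal rig with the 60° yaw spacing listed for the mono cameras, the homography between adjacent views would yield a yaw of magnitude 60° under these assumptions. A real calibration would typically also estimate the focal length and refine all camera parameters jointly rather than pair by pair.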

3.2.2. Image Stitching

Image Alignment

Image Blending
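Step 2 of Algorithm 2 combines the warped images with a multi-band blending function βmulti-band. The sketch below illustrates the multi-band (Laplacian pyramid) technique of Burt and Adelson [56] on one-dimensional signals so that it needs no libraries: each input is split into frequency bands, every band is mixed using a correspondingly blurred copy of the seam mask, and the bands are summed back together, so low frequencies are blended over a wide region and fine detail over a narrow one. The 1D simplification, the replicated borders, the number of levels, and all function names are assumptions for illustration; a real stitcher applies the same idea in 2D to each colour channel.

```python
def blur(sig):
    """Binomial low-pass filter with weights [1, 4, 6, 4, 1] / 16 and replicated borders."""
    n = len(sig)
    weights = (1.0, 4.0, 6.0, 4.0, 1.0)
    out = []
    for i in range(n):
        acc = 0.0
        for j, w in enumerate(weights):
            idx = min(max(i + j - 2, 0), n - 1)  # clamp to the signal's ends
            acc += w * sig[idx]
        out.append(acc / 16.0)
    return out


def downsample(sig):
    """Blur, then keep every second sample."""
    return blur(sig)[::2]


def upsample(sig, n):
    """Repeat each sample twice, blur, and trim to length n."""
    return blur([v for v in sig for _ in range(2)])[:n]


def gaussian_pyramid(sig, levels):
    pyr = [list(sig)]
    for _ in range(levels):
        pyr.append(downsample(pyr[-1]))
    return pyr


def laplacian_pyramid(sig, levels):
    """Band-pass levels (Gaussian level minus upsampled next level) plus a low-pass residual."""
    gauss = gaussian_pyramid(sig, levels)
    pyr = [[a - b for a, b in zip(g, upsample(g_next, len(g)))]
           for g, g_next in zip(gauss[:-1], gauss[1:])]
    pyr.append(gauss[-1])
    return pyr


def collapse(pyr):
    """Invert laplacian_pyramid: upsample the residual and add back each band."""
    cur = pyr[-1]
    for band in reversed(pyr[:-1]):
        cur = [a + b for a, b in zip(band, upsample(cur, len(band)))]
    return cur


def multiband_blend(a, b, mask, levels=3):
    """Blend signals a and b; mask is 1 where a should be used and 0 where b should."""
    la, lb = laplacian_pyramid(a, levels), laplacian_pyramid(b, levels)
    gm = gaussian_pyramid(mask, levels)
    bands = [[m * x + (1.0 - m) * y for x, y, m in zip(bx, by, bm)]
             for bx, by, bm in zip(la, lb, gm)]
    return collapse(bands)
```

Blending a constant 0 signal with a constant 1 signal under a hard step mask produces an output that rises gradually across the seam instead of jumping by 1, which is how multi-band blending suppresses visible seams.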

| Algorithm 1 Camera Calibration Steps |
|---|
| Input: (1) images Im \|\| Is; (2) initial camera parameters ICP. Note: Im and Is are the images taken with the proposed mono and stereo cameras, respectively; \|\| indicates that the input is either Im or Is. |
| Output: computed camera parameters CCP |
| Steps: |
| while (Im \|\| Is) |
| 1: Extract consistent features: £c ← ORB(Im(i), Im(i+1), Im(i+2), Im(i+3), Im(i+4), Im(i+5)) |
| 2: Feature matching: Imf ← RANSAC(£c) |
| 3: Homography calculation: Fmf ← H(Imf) |
| 4: Computing camera parameters: CCP ← Φ(Fmf) |
| end while |
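Steps 2 and 3 of Algorithm 1 filter the ORB matches with RANSAC and compute a homography. The following is a minimal, dependency-free sketch of that combination, assuming the putative ORB correspondences between two overlapping images are already available as point pairs (feature detection itself is not shown). It fits a homography to random 4-point samples with the direct linear transform and keeps the model with the most inliers within a pixel threshold. The function names, the 2-pixel threshold, and the iteration count are illustrative assumptions. In practice, OpenCV's `cv2.findHomography` with the `cv2.RANSAC` flag performs this step and also refines the model on all inliers, which this sketch omits.

```python
import random


def solve_linear(a, b):
    """Solve the square system a·x = b by Gaussian elimination with partial pivoting."""
    n = len(a)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("degenerate sample")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def homography_from_4(src, dst):
    """Direct linear transform for exactly four point pairs, with h33 fixed to 1."""
    a, b = [], []
    for (x, y), (u, v) in zip(src, dst):
        a.append([x, y, 1.0, 0.0, 0.0, 0.0, -u * x, -u * y])
        b.append(u)
        a.append([0.0, 0.0, 0.0, x, y, 1.0, -v * x, -v * y])
        b.append(v)
    h = solve_linear(a, b)
    return [h[0:3], h[3:6], [h[6], h[7], 1.0]]


def project(h, p):
    """Map point p through homography h; None if it lands at infinity."""
    x, y = p
    w = h[2][0] * x + h[2][1] * y + h[2][2]
    if abs(w) < 1e-12:
        return None
    return ((h[0][0] * x + h[0][1] * y + h[0][2]) / w,
            (h[1][0] * x + h[1][1] * y + h[1][2]) / w)


def ransac_homography(matches, iters=500, thresh_px=2.0, seed=0):
    """matches: list of ((x, y), (u, v)) putative ORB correspondences.
    Returns (homography with the most inliers, indices of those inliers)."""
    rng = random.Random(seed)
    best_h, best_inliers = None, []
    for _ in range(iters):
        sample = rng.sample(matches, 4)
        try:
            h = homography_from_4([s for s, _ in sample], [d for _, d in sample])
        except ValueError:
            continue  # degenerate sample (e.g. collinear points): no unique homography
        inliers = []
        for i, (s, d) in enumerate(matches):
            q = project(h, s)
            if q is not None and (q[0] - d[0]) ** 2 + (q[1] - d[1]) ** 2 < thresh_px ** 2:
                inliers.append(i)
        if len(inliers) > len(best_inliers):
            best_h, best_inliers = h, inliers
    return best_h, best_inliers
```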

| Algorithm 2 Image Stitching Steps |
|---|
| Input: (1) images Im \|\| Is; (2) computed camera parameters CCP |
| Output: panoramic image թ |
| Steps: |
| while (Im \|\| Is) |
| 1: Image warping: w(i) ← Щ(Im(i), Im(i+1), Im(i+2), Im(i+3), Im(i+4), Im(i+5), CCP) |
| 2: Image blending: Iblend ← βmulti-band(w(i), w(i+1), w(i+2), w(i+3), w(i+4), w(i+5)) |
| 3: Panorama straightening: թ ← ζp(Iblend(i), Iblend(i+1), Iblend(i+2), Iblend(i+3), Iblend(i+4), Iblend(i+5)) |
| end while |
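Step 1 of Algorithm 2 warps every image into a common panorama frame using the computed camera parameters. The sketch below shows the underlying geometry for one pixel, assuming an ideal pinhole camera with its principal point at the image centre, zero pitch and roll (as listed for every mono camera), and an equirectangular output. The image size, focal length, panorama size, yaw sign convention, and the name `pixel_to_equirect` are hypothetical. Cameras with a field of view above 180°, such as those listed for the stereo rig, cannot be described by a pinhole model and would need a fisheye projection instead.

```python
import math


def pixel_to_equirect(x, y, img_w, img_h, focal_px, yaw_deg, pano_w=4096, pano_h=2048):
    """Forward-map pixel (x, y) of a yawed pinhole camera onto an equirectangular panorama.

    Hypothetical model: principal point at the image centre, zero pitch and roll,
    positive yaw turning the camera to the right.
    """
    # Viewing ray in the camera frame: x to the right, y downwards, z forwards.
    dx, dy, dz = x - img_w / 2.0, y - img_h / 2.0, focal_px
    # Rotate the ray about the vertical axis by the camera's yaw.
    t = math.radians(yaw_deg)
    rx = dx * math.cos(t) + dz * math.sin(t)
    rz = -dx * math.sin(t) + dz * math.cos(t)
    # Spherical angles: longitude 0 is Cam 1's optical axis, latitude is positive upwards.
    lon = math.atan2(rx, rz)
    lat = math.atan2(-dy, math.hypot(rx, rz))
    # Equirectangular mapping: longitude spans the width, latitude the height.
    u = (lon / (2.0 * math.pi) + 0.5) * pano_w
    v = (0.5 - lat / math.pi) * pano_h
    return u, v


# Centre pixel of Cam 2 (yaw 60°), hypothetical 1920×1080 image and 960-pixel focal length
print(pixel_to_equirect(960, 540, 1920, 1080, 960.0, 60.0))  # approximately (2730.67, 1024.0)
```

This forward mapping only illustrates the geometry; a practical warper usually runs the inverse mapping, looping over panorama pixels and sampling the source image, so that the output has no holes.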

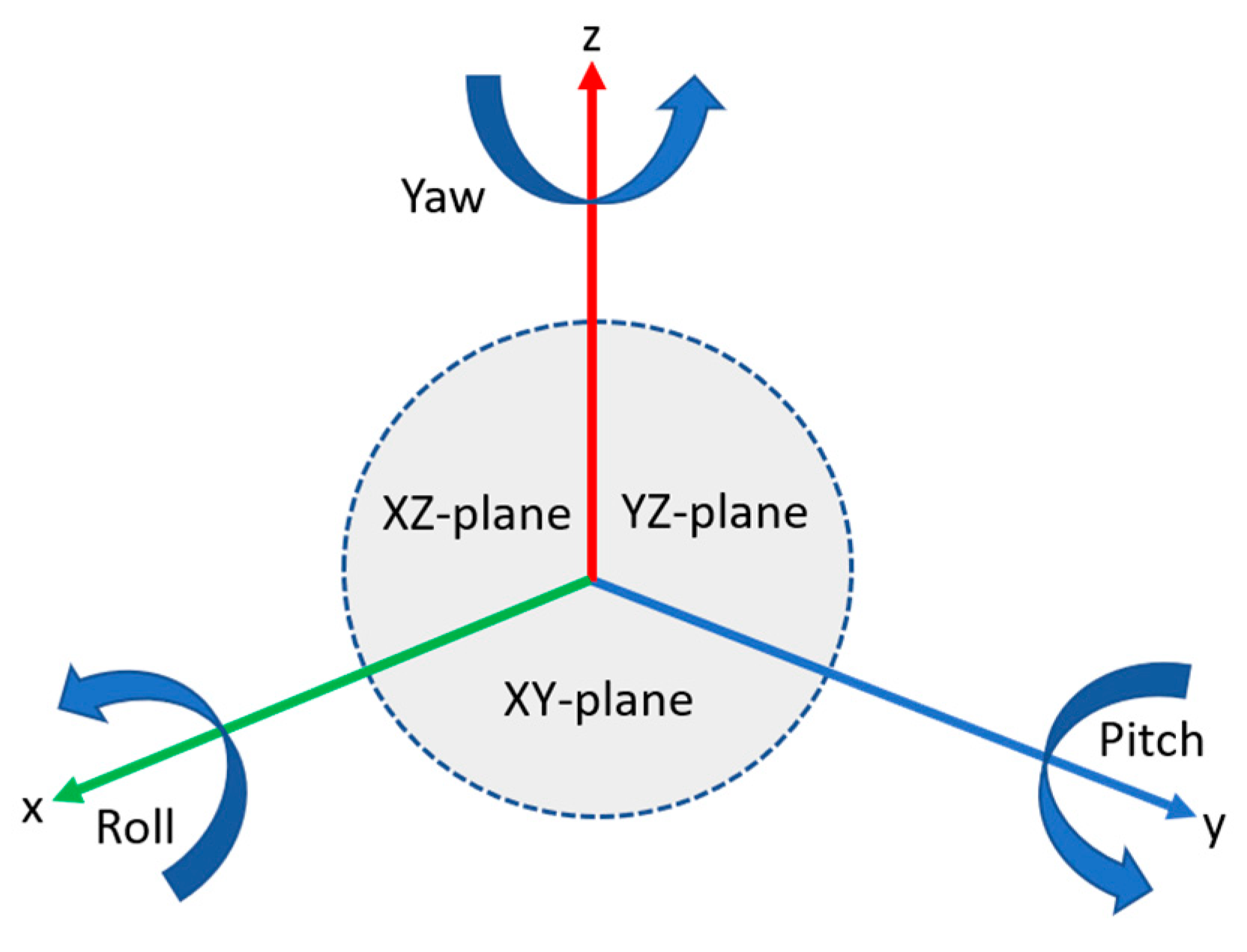

Panorama Straightening
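Step 3 of Algorithm 2 straightens the panorama with a function ζp. One well-known approach, described by Brown and Lowe [50], relies on the observation that people rarely twist the camera relative to the horizon, so the camera x (horizontal) axes should all lie in the plane perpendicular to the true up direction; the up vector is then the direction most nearly orthogonal to all of them. Whether ζp works this way is an assumption. The sketch estimates that vector as the smallest-eigenvalue eigenvector of the 3×3 matrix Σ x xᵀ, found by power iteration on a shifted matrix, and reports the tilt relative to the first camera's y axis; the function names and the starting vector are hypothetical.

```python
import math


def up_vector(x_axes, iters=200):
    """Unit vector most nearly orthogonal to all camera x axes.

    It is the eigenvector of C = sum(x x^T) with the smallest eigenvalue (assumed
    unique). Power iteration on M = trace(C) * I - C converges to it, because M has
    the same eigenvectors with the eigenvalue order reversed.
    """
    c = [[sum(x[i] * x[j] for x in x_axes) for j in range(3)] for i in range(3)]
    tr = c[0][0] + c[1][1] + c[2][2]
    m = [[(tr if i == j else 0.0) - c[i][j] for j in range(3)] for i in range(3)]
    v = [0.3, 1.0, 0.2]  # hypothetical start, roughly along the reference y axis
    for _ in range(iters):
        w = [sum(m[i][j] * v[j] for j in range(3)) for i in range(3)]
        norm = math.sqrt(sum(t * t for t in w))
        v = [t / norm for t in w]
    return v


def tilt_degrees(up):
    """Angle between the estimated up vector and the reference camera's y axis."""
    return math.degrees(math.acos(min(1.0, abs(up[1]))))
```

For a ring of six cameras with 60° yaw steps whose mount is tilted by 10°, this estimate recovers the 10° tilt, which a straightening step would then remove by rotating the panorama so that the estimated up vector becomes vertical.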

4. Experimental Results

4.1. Mono Panorama Results

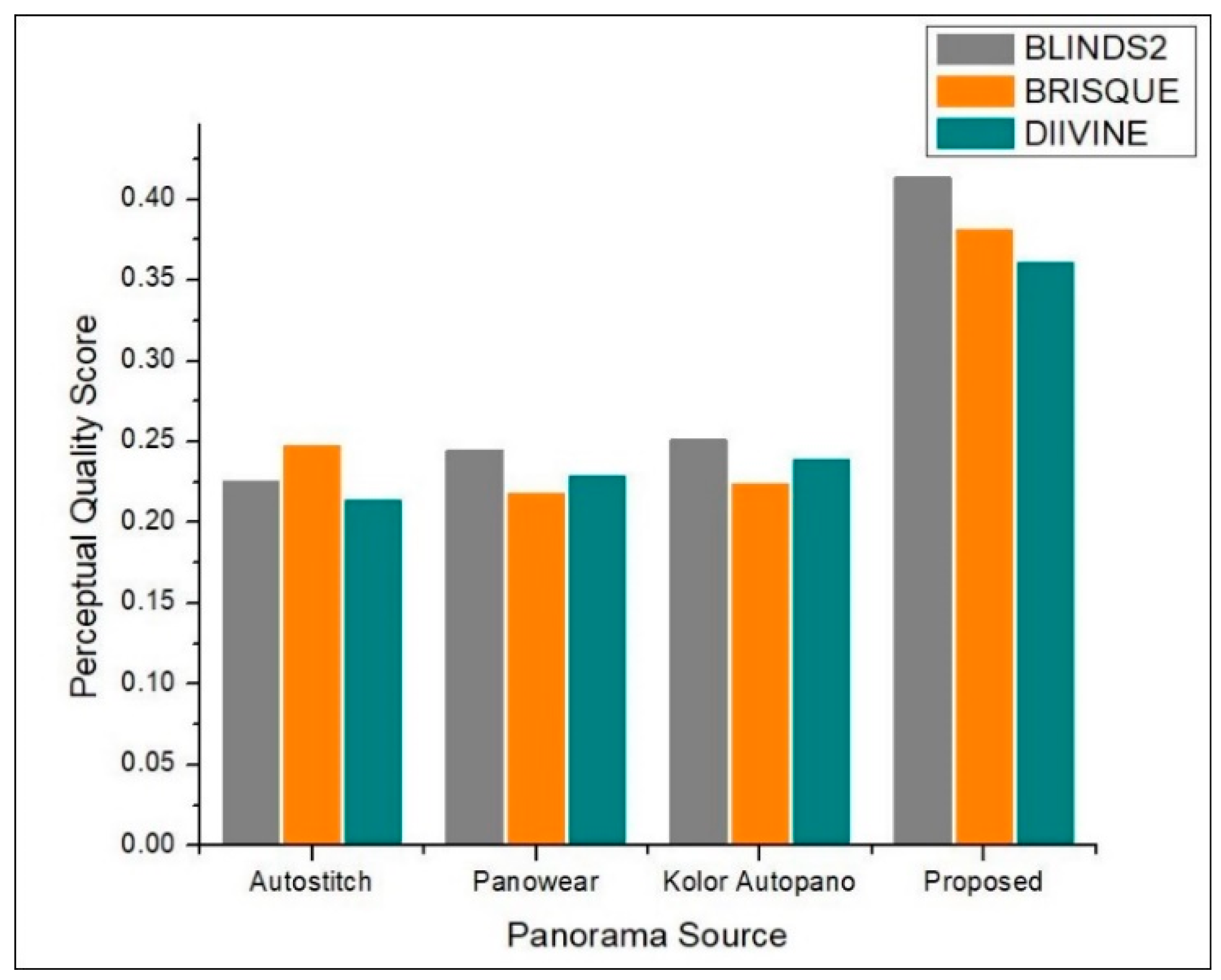

Comparison with State-of-the-Art Mono Panorama Generation Systems

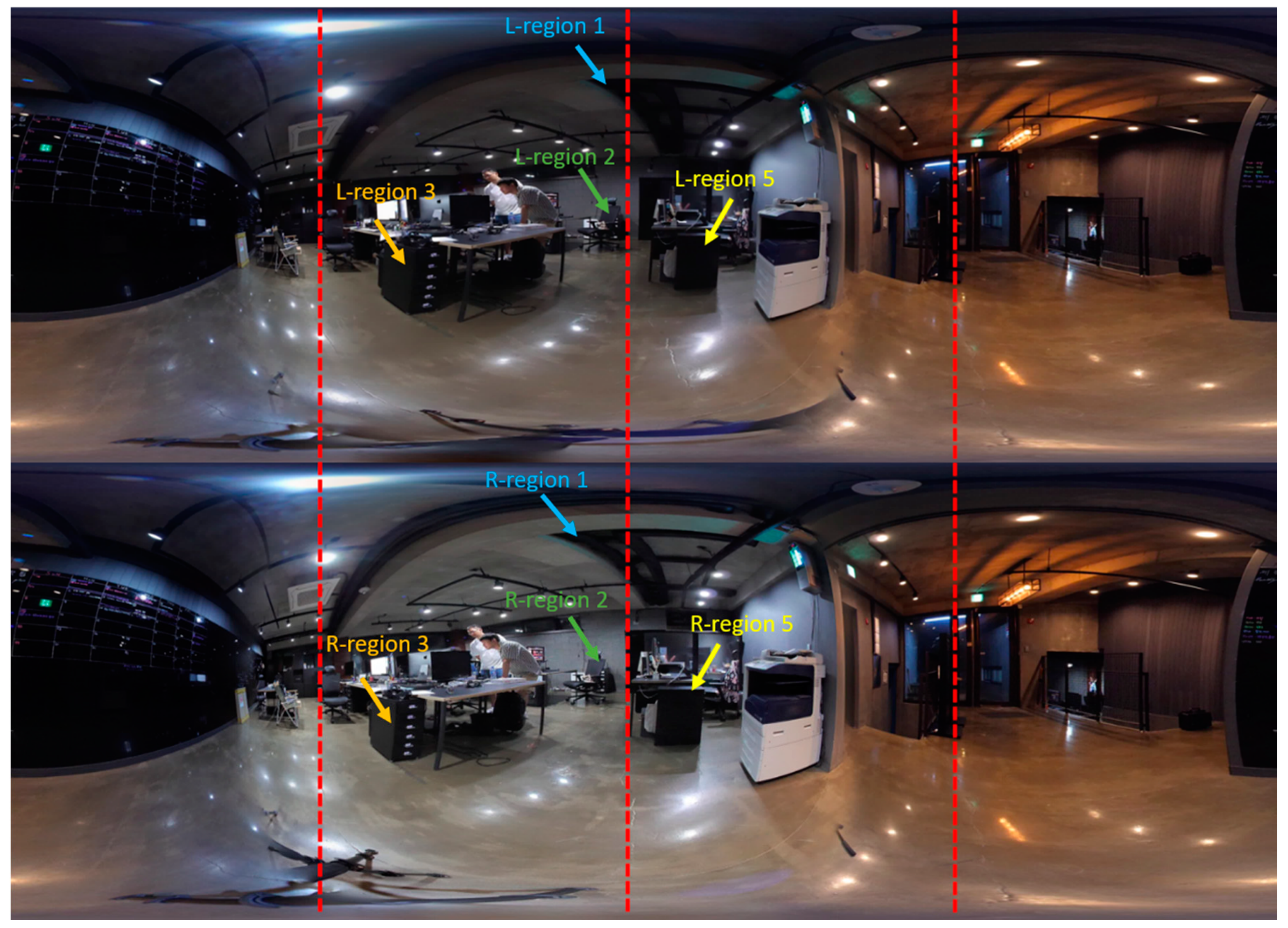

4.2. Stereo Panorama Results

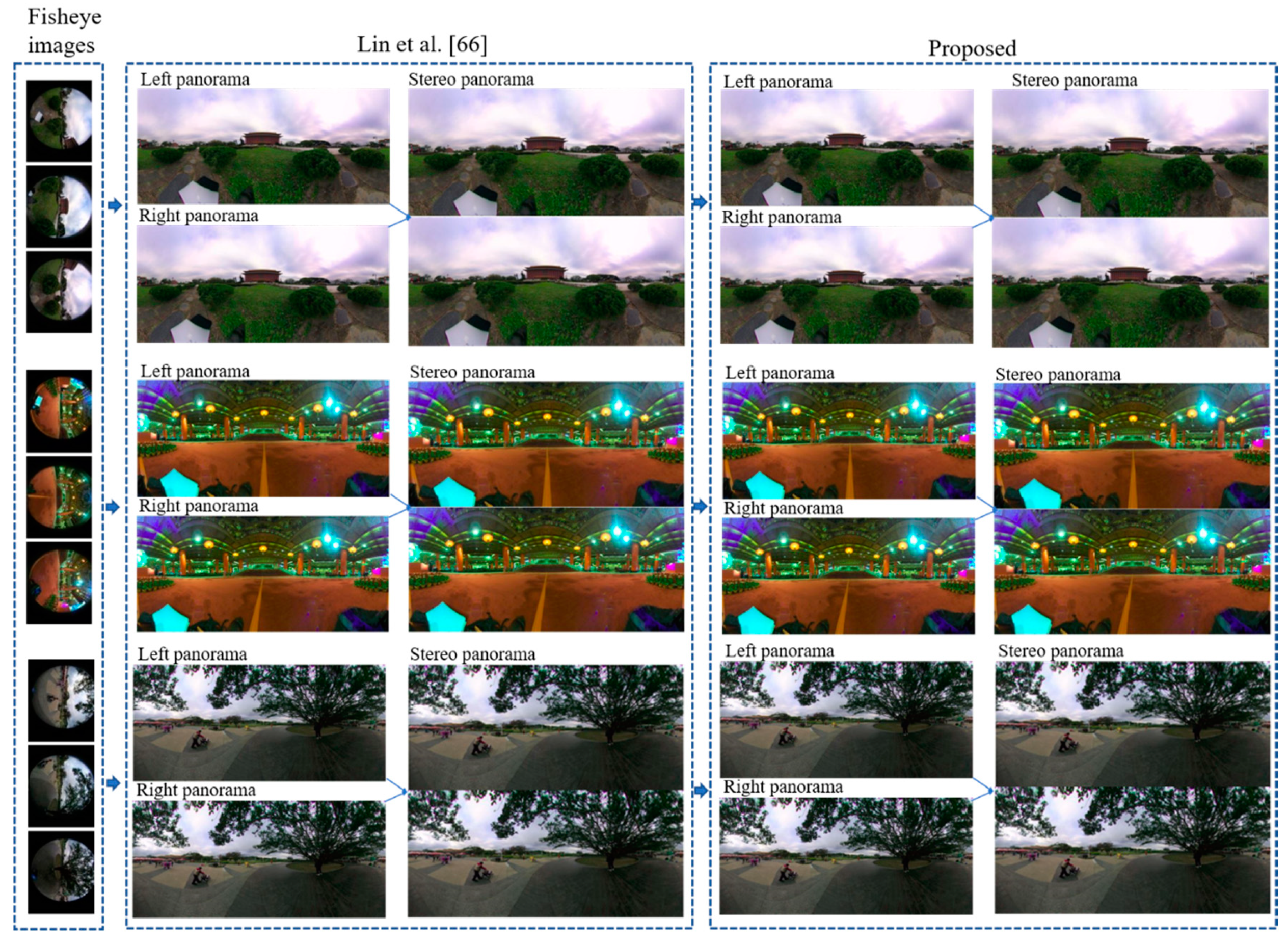

Comparison with State-of-the-Art Stereo Panorama Generation Systems

5. Conclusions and Future Work

Author Contributions

Funding

Conflicts of Interest

References

1. Thanh Le, T.; Jeong, J.; Ryu, E.-S. Efficient Transcoding and Encryption for Live 360 CCTV System. Appl. Sci. 2019, 9, 760.

2. Feriozzi, R.; Meschini, A.; Rossi, D.; Sicuranza, F. Virtual Tours for Smart Cities: A Comparative Photogrammetric Approach for Locating Hot-Spots in Spherical Panoramas. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2019, 347–353.

3. Shah, A.A.; Mustafa, G.; Ali, Z.; Anees, T. Video Stitching with Localized 360° Model for Intelligent Car Parking Monitoring and Assistance System. IJCSNS 2019, 19, 43.

4. Demiralp, K.O.; Kurşun-Çakmak, E.S.; Bayrak, S.; Akbulut, N.; Atakan, C.; Orhan, K. Trabecular structure designation using fractal analysis technique on panoramic radiographs of patients with bisphosphonate intake: A preliminary study. Oral Radiol. 2019, 35, 23–28.

5. Wróżyński, R.; Pyszny, K.; Sojka, M. Quantitative Landscape Assessment Using LiDAR and Rendered 360 Panoramic Images. Remote Sens. 2020, 12, 386.

6. Yong, H.; Huang, J.; Xiang, W.; Hua, X.; Zhang, L. Panoramic background image generation for PTZ cameras. IEEE Trans. Image Process. 2019, 28, 3162–3176.

7. Zia, O.; Kim, J.H.; Han, K.; Lee, J.W. 360° Panorama Generation using Drone Mounted Fisheye Cameras. In Proceedings of the 2019 IEEE International Conference on Consumer Electronics (ICCE), Las Vegas, NV, USA, 11–13 January 2019; pp. 1–3.

8. Krishnakumar, K.; Gandhi, S.I. Video stitching using interacting multiple model based feature tracking. Multimedia Tools Appl. 2019, 78, 1375–1397.

9. Qi, J.; Li, G.; Ju, Z.; Chen, D.; Jiang, D.; Tao, B.; Jiang, G.; Sun, Y. Image stitching based on improved SURF algorithm. In Proceedings of the International Conference on Intelligent Robotics and Applications, Shenyang, China, 9–11 August 2019; pp. 515–527.

10. Sovetov, K.; Kim, J.-S.; Kim, D. Online Panorama Image Generation for a Disaster Rescue Vehicle. In Proceedings of the 2019 16th International Conference on Ubiquitous Robots (UR), Jeju, Korea, 24–27 June 2019; pp. 92–97.

11. Zhang, J.; Yin, X.; Luan, J.; Liu, T. An improved vehicle panoramic image generation algorithm. Multimedia Tools Appl. 2019, 78, 27663–27682.

12. Chen, Z.; Aksit, D.C.; Huang, J.; Jin, H. Six-Degree of Freedom Video Playback of a Single Monoscopic 360-Degree Video. U.S. Patents 10368047B2, 30 July 2019.

13. Bigioi, P.; Susanu, G.; Barcovschi, I.; Stec, P.; Murray, L.; Drimbarean, A.; Corcoran, P. Stereoscopic (3d) Panorama Creation on Handheld Device. U.S. Patents 20190089941A1, 21 March 2019.

14. Zhang, F.; Nestares, O. Generating Stereoscopic Light Field Panoramas Using Concentric Viewing Circles. U.S. Patents 20190089940A1, 21 March 2019.

15. Violante, M.G.; Vezzetti, E.; Piazzolla, P. Interactive virtual technologies in engineering education: Why not 360° videos? Int. J. Interact. Des. Manuf. 2019, 13, 729–742.

16. Rupp, M.A.; Odette, K.L.; Kozachuk, J.; Michaelis, J.R.; Smither, J.A.; McConnell, D.S. Investigating learning outcomes and subjective experiences in 360-degree videos. Comput. Educ. 2019, 128, 256–268.

17. Kwon, S. A CNN-Assisted Enhanced Audio Signal Processing for Speech Emotion Recognition. Sensors 2020, 20, 183.

18. Mustaqeem, M.; Sajjad, M.; Kwon, S. Clustering Based Speech Emotion Recognition by Incorporating Learned Features and Deep BiLSTM. IEEE Access 2020, 8, 79861–79875.

19. Klippel, A.; Zhao, J.; Jackson, K.L.; La Femina, P.; Stubbs, C.; Wetzel, R.; Blair, J.; Wallgrün, J.O.; Oprean, D. Transforming earth science education through immersive experiences: Delivering on a long held promise. J. Educ. Comput. Res. 2019, 57, 1745–1771.

20. Mathew, P.S.; Pillai, A.S. Role of Immersive (XR) Technologies in Improving Healthcare Competencies: A Review. In Virtual and Augmented Reality in Education, Art, and Museums; IGI Global: Hershey, PA, USA, 2020; pp. 23–46.

21. Reyes, M.E.; Dillague, S.G.O.; Fuentes, M.I.A.; Malicsi, C.A.R.; Manalo, D.C.F.; Melgarejo, J.M.T.; Cayubit, R.F.O. Self-Esteem and Optimism as Predictors of Resilience among Selected Filipino Active Duty Military Personnel in Military Camps. J. Posit. Psychol. Wellbeing 2019, 4, 1–11.

22. Wang, K.-H.; Lai, S.-H. Object Detection in Curved Space for 360-Degree Camera. In Proceedings of the ICASSP 2019–2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Brighton, UK, 12–17 May 2019; pp. 3642–3646.

23. Yang, T.; Li, Z.; Zhang, F.; Xie, B.; Li, J.; Liu, L. Panoramic UAV surveillance and recycling system based on structure-free camera array. IEEE Access 2019, 7, 25763–25778.

24. Heindl, C.; Pönitz, T.; Pichler, A.; Scharinger, J. Large area 3D human pose detection via stereo reconstruction in panoramic cameras. arXiv 2019, arXiv:1907.00534.

25. Qiu, S.; Zhou, D.; Du, Y. The image stitching algorithm based on aggregated star groups. Signal Image Video Process. 2019, 13, 227–235.

26. Hu, F.; Li, Y.; Feng, M. Continuous Point Cloud Stitch based on Image Feature Matching Constraint and Score. IEEE Trans. Intell. Vehicles 2019, 4, 363–374.

27. Bahraini, M.S.; Rad, A.B.; Bozorg, M. SLAM in Dynamic Environments: A Deep Learning Approach for Moving Object Tracking Using ML-RANSAC Algorithm. Sensors 2019, 19, 3699.

28. Shi, H.; Guo, L.; Tan, S.; Li, G.; Sun, J. Improved parallax image stitching algorithm based on feature block. Symmetry 2019, 11, 348.

29. Chi, L.; Guan, X.; Shen, X.; Zhang, H. Line-point feature based structure-preserving image stitching. In Proceedings of the 2019 Chinese Automation Congress (CAC), Hangzhou, China, 22–24 November 2019; pp. 2111–2116.

30. Kekre, H.; Thepade, S.D. Image blending in vista creation using Kekre’s LUV color space. In Proceedings of the SPIT-IEEE Colloquium and International Conference, Andheri, Mumbai, 15–16 December 2007; pp. 4–5.

31. Gu, F.; Rzhanov, Y. Optimal image blending for underwater mosaics. In Proceedings of the OCEANS, Boston, MA, USA, 18–21 September 2006; pp. 1–5.

32. Zhao, W. Flexible image blending for image mosaicing with reduced artifacts. Int. J. Pattern Recognit. Artif. Intell. 2016, 20, 609–628.

33. Shimizu, T.; Yoneyama, A.; Takishima, Y. A fast video stitching method for motion-compensated frames in compressed video streams. In Proceedings of the 2006 Digest of Technical Papers International Conference on Consumer Electronics, Las Vegas, NV, USA, 7–11 January 2006; pp. 173–174.

34. Kim, H.-K.; Lee, K.-W.; Jung, J.-Y.; Jung, S.-W.; Ko, S.-J. A content-aware image stitching algorithm for mobile multimedia devices. IEEE Trans. Consum. Electron. 2011, 57, 1875–1882.

35. Kim, B.-S.; Choi, K.-A.; Park, W.-J.; Kim, S.-W.; Ko, S.-J. Content-preserving video stitching method for multi-camera systems. IEEE Trans. Consum. Electron. 2017, 63, 109–116.

36. Guan, L.; Liu, S.; Chu, J.; Zhang, R.; Chen, Y.; Li, S.; Zhai, L.; Li, Y.; Xie, H. A novel algorithm for estimating the relative rotation angle of solar azimuth through single-pixel rings from polar coordinate transformation for imaging polarization navigation sensors. Optik 2019, 178, 868–878.

37. Chen, M.; Tang, Y.; Zou, X.; Huang, K.; Li, L.; He, Y. High-accuracy multi-camera reconstruction enhanced by adaptive point cloud correction algorithm. Opt. Lasers Eng. 2019, 122, 170–183.

38. Tang, Y.; Li, L.; Wang, C.; Chen, M.; Feng, W.; Zou, X.; Huang, K. Real-time detection of surface deformation and strain in recycled aggregate concrete-filled steel tubular columns via four-ocular vision. Robot. Comput.-Integr. Manuf. 2019, 59, 36–46.

39. Lin, G.; Tang, Y.; Zou, X.; Li, J.; Xiong, J. In-field citrus detection and localisation based on RGB-D image analysis. Biosyst. Eng. 2019, 186, 34–44.

40. Tang, Y.; Lin, Y.; Huang, X.; Yao, M.; Huang, Z.; Zou, X. Grand Challenges of Machine-Vision Technology in Civil Structural Health Monitoring. Artif. Intell. Evol. 2020, 1, 8–16.

41. Joshi, N.; Kienzle, W.; Toelle, M.; Uyttendaele, M.; Cohen, M.F. Real-time hyperlapse creation via optimal frame selection. ACM Trans. Graph. (TOG) 2015, 34, 1–9.

42. Autostitch. Available online: http://matthewalunbrown.com/autostitch/autostitch.html (accessed on 30 April 2020).

43. Panoweaver. Available online: https://www.easypano.com/panorama-software.html (accessed on 30 April 2020).

44. Kolor Autopano. Available online: https://veer.tv/blog/kolor-autopano-create-a-panorama-with-autopano-progiga/ (accessed on 30 April 2020).

45. Tan, L.; Wang, Y.; Yu, H.; Zhu, J. Automatic camera calibration using active displays of a virtual pattern. Sensors 2017, 17, 685.

46. Qu, Z.; Lin, S.-P.; Ju, F.-R.; Liu, L. The improved algorithm of fast panorama stitching for image sequence and reducing the distortion errors. Math. Probl. Eng. 2015, 2015, 428076.

47. Rublee, E.; Rabaud, V.; Konolige, K.; Bradski, G. ORB: An efficient alternative to SIFT or SURF. In Proceedings of the 2011 International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011; pp. 2564–2571.

48. Jeon, H.-k.; Jeong, J.-m.; Lee, K.-y. An implementation of the real-time panoramic image stitching using ORB and PROSAC. In Proceedings of the 2015 International SoC Design Conference (ISOCC), Gyungju, South Korea, 2–5 November 2015; pp. 91–92.

49. Wang, M.; Niu, S.; Yang, X. A novel panoramic image stitching algorithm based on ORB. In Proceedings of the 2017 International Conference on Applied System Innovation (ICASI), Sapporo, Japan, 13–17 May 2017; pp. 818–821.

50. Brown, M.; Lowe, D.G. Automatic panoramic image stitching using invariant features. Int. J. Comput. Vis. 2007, 74, 59–73.

51. Din, I.; Anwar, H.; Syed, I.; Zafar, H.; Hasan, L. Projector calibration for pattern projection systems. J. Appl. Res. Technol. 2014, 12, 80–86.

52. Chaudhari, K.; Garg, D.; Kotecha, K. An enhanced approach in Image Mosaicing using ORB Method with Alpha blending technique. Int. J. Adv. Res. Comput. Sci. 2017, 8, 917–921.

53. Pandey, A.; Pati, U.C. A novel technique for non-overlapping image mosaicing based on pyramid method. In Proceedings of the 2013 Annual IEEE India Conference (INDICON), Mumbai, India, 13–15 December 2013; pp. 1–6.

54. Dessein, A.; Smith, W.A.; Wilson, R.C.; Hancock, E.R. Seamless texture stitching on a 3D mesh by Poisson blending in patches. In Proceedings of the 2014 IEEE International Conference on Image Processing (ICIP), Paris, France, 27–30 October 2014; pp. 2031–2035.

55. Allène, C.; Pons, J.-P.; Keriven, R. Seamless image-based texture atlases using multi-band blending. In Proceedings of the 2008 19th International Conference on Pattern Recognition, Tampa, FL, USA, 8–11 December 2008; pp. 1–4.

56. Burt, P.J.; Adelson, E.H. A multiresolution spline with application to image mosaics. ACM Trans. Graph. (TOG) 1983, 2, 217–236.

57. Li, X.; Zhu, W.; Zhu, Q. Panoramic video stitching based on multi-band image blending. In Proceedings of the Tenth International Conference on Graphics and Image Processing (ICGIP 2018), Chengdu, China, 12–14 December 2018; p. 110690F.

58. Kim, H.; Chae, E.; Jo, G.; Paik, J. Fisheye lens-based surveillance camera for wide field-of-view monitoring. In Proceedings of the 2015 IEEE International Conference on Consumer Electronics (ICCE), Las Vegas, NV, USA, 9–12 January 2015; pp. 505–506.

59. Saad, M.A.; Bovik, A.C.; Charrier, C. DCT statistics model-based blind image quality assessment. In Proceedings of the 2011 18th IEEE International Conference on Image Processing, Brussels, Belgium, 11–14 September 2011; pp. 3093–3096.

60. Mittal, A.; Moorthy, A.K.; Bovik, A.C. No-reference image quality assessment in the spatial domain. IEEE Trans. Image Process. 2012, 21, 4695–4708.

61. Moorthy, A.K.; Bovik, A.C. Blind image quality assessment: From natural scene statistics to perceptual quality. IEEE Trans. Image Process. 2011, 20, 3350–3364.

62. Perazzi, F.; Sorkine-Hornung, A.; Zimmer, H.; Kaufmann, P.; Wang, O.; Watson, S.; Gross, M. Panoramic video from unstructured camera arrays. Comput. Graph. Forum 2015, 34, 57–68.

63. Silva, R.M.; Feijó, B.; Gomes, P.B.; Frensh, T.; Monteiro, D. Real time 360 video stitching and streaming. In Proceedings of the ACM SIGGRAPH 2016 Posters, Anaheim, CA, USA, 24–28 July 2016; pp. 1–2.

64. Lu, Y.; Wang, K.; Fan, G. Photometric calibration and image stitching for a large field of view multi-camera system. Sensors 2016, 16, 516.

65. Lin, M.; Xu, G.; Ren, X.; Xu, K. Cylindrical panoramic image stitching method based on multi-cameras. In Proceedings of the 2015 IEEE International Conference on Cyber Technology in Automation, Control, and Intelligent Systems (CYBER), Shenyang, China, 8–12 June 2015; pp. 1091–1096.

66. Lin, H.-S.; Chang, C.-C.; Chang, H.-Y.; Chuang, Y.-Y.; Lin, T.-L.; Ouhyoung, M. A low-cost portable polycamera for stereoscopic 360 imaging. IEEE Trans. Circuits Syst. Video Technol. 2018, 29, 915–929.

67. Facebook Surround 360. Available online: https://facebook360.fb.com/ (accessed on 1 April 2020).

68. Google Jump. Available online: https://arvr.google.com/ (accessed on 1 April 2020).

69. Amini, A.S.; Varshosaz, M.; Saadatseresht, M. Evaluating a new stereo panorama system based on stereo cameras. Int. J. Sci. Res. Invent. New Ideas 2014, 2, 1.

70. Nokia Ozo. Available online: https://ozo.nokia.com/ (accessed on 1 April 2020).

71. Matzen, K.; Cohen, M.F.; Evans, B.; Kopf, J.; Szeliski, R. Low-cost 360 stereo photography and video capture. ACM Trans. Graph. (TOG) 2017, 36, 1–12.

| Parameter | Description |
|---|---|
| Im | Mono image |
| Is | Stereo image |
| ICP | Initial camera parameters |
| CCP | Computed camera parameters |
| £c | Consistent features |
| H | Homography calculation function |
| Imf | Initial matched features |
| Fmf | Final matched features |
| RANSAC | Random sample consensus algorithm used for feature matching |
| ORB | Oriented FAST and Rotated BRIEF feature detector and descriptor |
| Φ | Camera parameter computation function |
| թ | Final panorama |
| wi | Warped image |
| Щ | Warping function |
| Iblend | Blended image |
| βmulti-band | Multi-band image blending function |
| ζp | Panorama straightening function |

| Camera | Yaw | Pitch | Roll |
|---|---|---|---|
| Cam 1 | 0.0° | 0.0° | 0.0° |
| Cam 2 | 60.0° | 0.0° | 0.0° |
| Cam 3 | 120.0° | 0.0° | 0.0° |
| Cam 4 | 180.0° | 0.0° | 0.0° |
| Cam 5 | −120.0° | 0.0° | 0.0° |
| Cam 6 | −60.0° | 0.0° | 0.0° |
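The yaw angles above space the six mono cameras 60° apart around the full circle, so each camera must see more than 60° horizontally for adjacent views to overlap at all. A small check of the angular overlap between neighbouring cameras for a given horizontal field of view follows; the 90° field of view in the example is a hypothetical value, not the specification of the cameras used, and the function name is illustrative.

```python
def adjacent_overlaps(yaws_deg, hfov_deg):
    """Angular overlap, in degrees, between each camera and its next neighbour in yaw order.

    A negative value means a gap that no camera covers.
    """
    ys = sorted(y % 360.0 for y in yaws_deg)  # normalise yaws to [0, 360)
    gaps = [(ys[(i + 1) % len(ys)] - ys[i]) % 360.0 for i in range(len(ys))]
    return [hfov_deg - g for g in gaps]


yaws = [0.0, 60.0, 120.0, 180.0, -120.0, -60.0]  # Cam 1 to Cam 6, from the table above
print(adjacent_overlaps(yaws, 90.0))  # → [30.0, 30.0, 30.0, 30.0, 30.0, 30.0]
```

With this hypothetical lens, each pair of neighbours would share 30° of view, which is the region in which features can be matched and seams blended.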

| Camera | Field of View (FOV) |
|---|---|
| Front left camera | 200° |
| Front right camera | 200° |
| Rear camera | 250° |

| System [Reference] | No. of Cameras | Resolution | Stitching Artifacts | Stitching Time (s) |
|---|---|---|---|---|
| Lu et al. [64] | 7 | 4 k | Extra black region | 3.08 |
| Mingxiu et al. [65] | 6 | 4 k | Extra black region | 2.98 |
| Rodrigo et al. [63] | 6 | 4 k | Parallax | 3.01 |
| Proposed system | 6 | 4 k | Parallax-free | 0.031 |

| System [Reference] | No. of Cameras | Panorama Resolution | Stitching Time (s) |
|---|---|---|---|
| Surround 360 [67] | 17 | 8 k by 4 k | 7.411 |
| Google Jump [68] | 16 | 8 k by 4 k | 6.532 |
| Stereo cameras [69] | 10 | 8 k by 4 k | 4.072 |
| NOKIA OZO [70] | 8 | 8 k by 4 k | 3.085 |
| 360 stereo cameras [71] | 4 | 6 k by 3 k | 2.984 |
| Portable stereo cameras [66] | 4 | 6 k by 3 k | 2.413 |
| Proposed system | 3 | 6 k by 3 k | 0.025 |
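The two comparison tables report stitching times for the proposed system and for earlier systems. The speedup factors implied by those reported numbers can be computed directly, as sketched below; this only restates the published timings and assumes nothing about hardware or measurement conditions, which the tables do not specify. The dictionary and function names are illustrative.

```python
# Stitching times (s) copied from the mono and stereo comparison tables.
mono = {"Lu et al. [64]": 3.08, "Mingxiu et al. [65]": 2.98, "Rodrigo et al. [63]": 3.01}
stereo = {
    "Surround 360 [67]": 7.411,
    "Google Jump [68]": 6.532,
    "Stereo cameras [69]": 4.072,
    "NOKIA OZO [70]": 3.085,
    "360 stereo cameras [71]": 2.984,
    "Portable stereo cameras [66]": 2.413,
}
PROPOSED_MONO_S, PROPOSED_STEREO_S = 0.031, 0.025


def speedups(baselines, proposed_s):
    """Ratio of each baseline's reported stitching time to the proposed system's time."""
    return {name: t / proposed_s for name, t in baselines.items()}


for label, table, t in (("mono", mono, PROPOSED_MONO_S), ("stereo", stereo, PROPOSED_STEREO_S)):
    for name, s in speedups(table, t).items():
        print(f"{label:6s} {name:30s} {s:6.1f}x")
```

Taken at face value, the reported times correspond to speedups of roughly 96× to 99× for mono panoramas and 97× to 296× for stereo panoramas.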

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Ullah, H.; Zia, O.; Kim, J.H.; Han, K.; Lee, J.W. Automatic 360° Mono-Stereo Panorama Generation Using a Cost-Effective Multi-Camera System. Sensors 2020, 20, 3097. https://doi.org/10.3390/s20113097
