Robust Stereo Visual Inertial Navigation System Based on Multi-Stage Outlier Removal in Dynamic Environments

Abstract

1. Introduction

- We design a robust stereo vision-aided inertial navigation system based on multi-stage outlier removal that achieves high accuracy at low computational cost, targeting resource-constrained devices operating in stochastic environments;

- We introduce multi-stage outlier removal strategies; in particular, we propose a stage that removes outlier features caused by dynamic objects, based on the state feedback information in the sliding window;

- We evaluate the proposed VINS algorithm on both public datasets and our own indoor datasets. The results show that the proposed solution robustly rejects outliers and improves the system's accuracy in both static and dynamic environments.

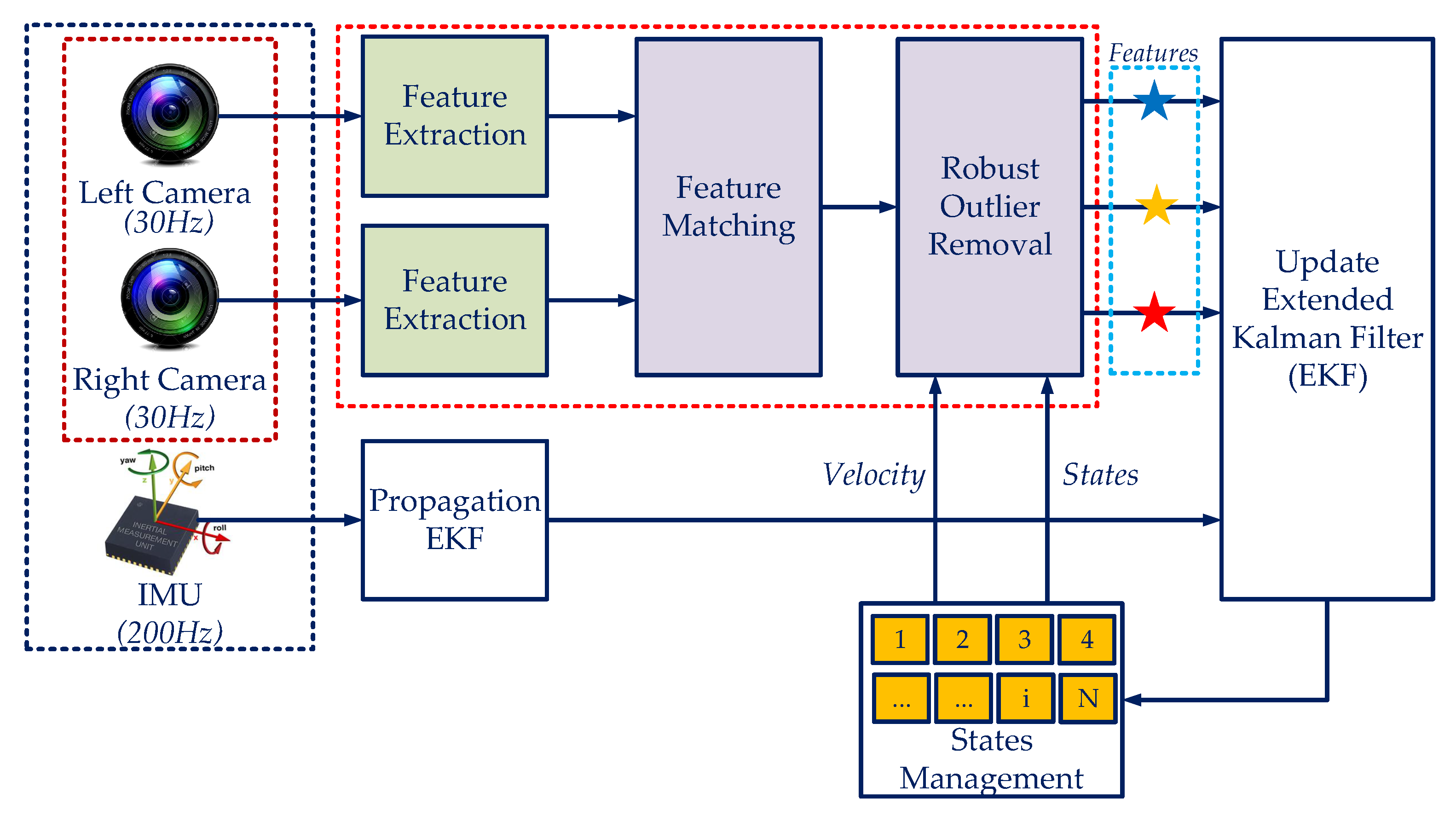

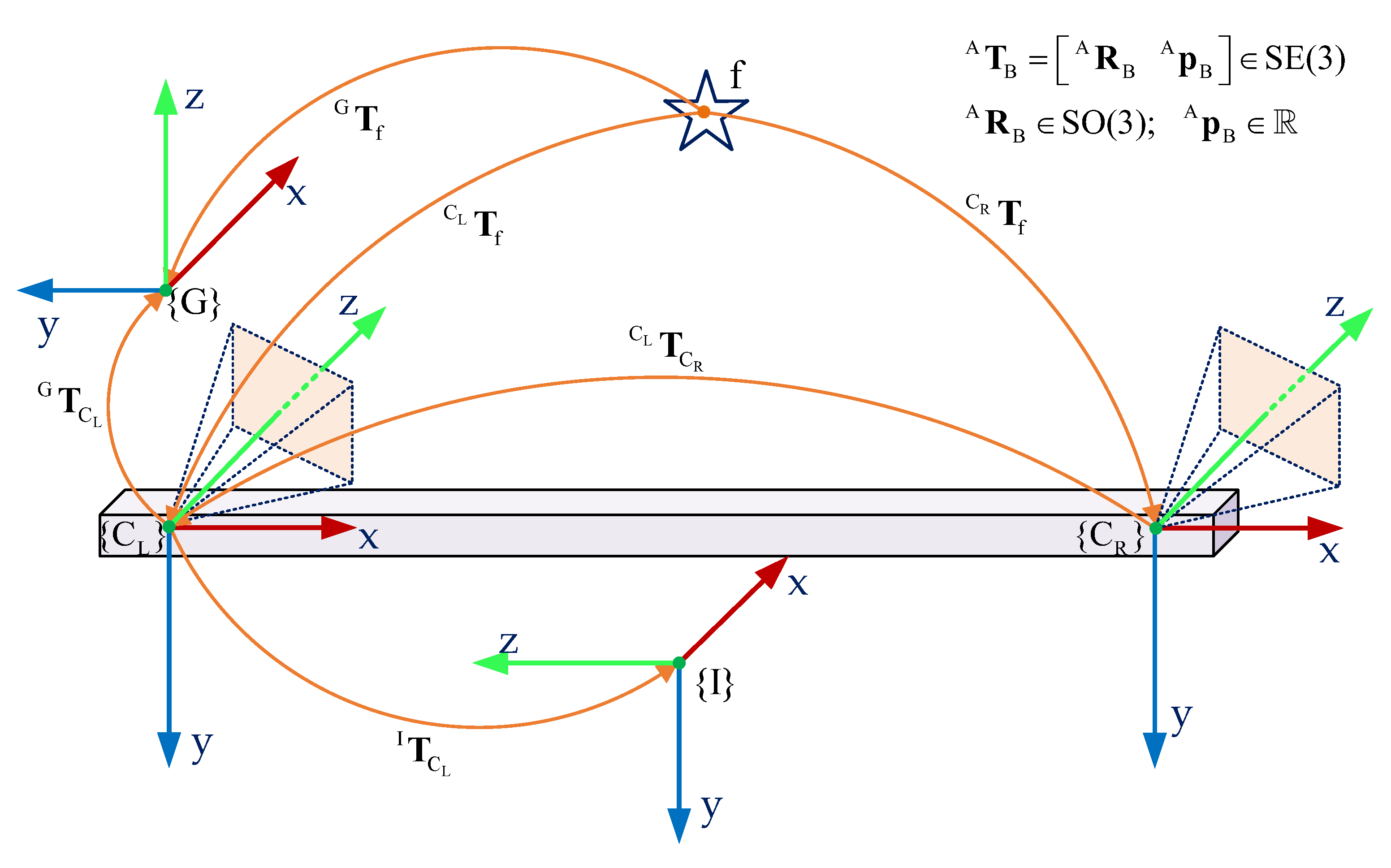

2. Robust Stereo Vision-Aided Inertial Navigation System Estimator Description

2.1. The Definition of System State on Manifolds

2.2. Propagation Model

- The angular velocities between two consecutive IMU clock ticks are constant;

- The accelerations between two consecutive IMU clock ticks are constant.
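As an illustration of what these two assumptions allow, the sketch below propagates position, velocity, and orientation over one IMU interval in closed form. It is a minimal zeroth-order sketch, not the paper's Algorithm 1: the function names, the Hamilton (w, x, y, z) quaternion convention, the gravity vector, and the omission of bias and noise terms are all assumptions made for illustration.

```python
import math

GRAVITY = (0.0, 0.0, -9.81)  # assumed world-frame gravity, z axis pointing up


def quat_mul(a, b):
    """Hamilton product of two quaternions stored as (w, x, y, z)."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (
        aw * bw - ax * bx - ay * by - az * bz,
        aw * bx + ax * bw + ay * bz - az * by,
        aw * by - ax * bz + ay * bw + az * bx,
        aw * bz + ax * by - ay * bx + az * bw,
    )


def quat_from_rotvec(rv):
    """Unit quaternion for a rotation vector (axis times angle, in radians)."""
    angle = math.sqrt(sum(c * c for c in rv))
    if angle < 1e-12:
        # First-order approximation for very small rotations.
        return (1.0, 0.5 * rv[0], 0.5 * rv[1], 0.5 * rv[2])
    s = math.sin(0.5 * angle) / angle
    return (math.cos(0.5 * angle), s * rv[0], s * rv[1], s * rv[2])


def rotate(q, v):
    """Rotate vector v from the body frame to the world frame: q * v * q^-1."""
    w, x, y, z = q
    r = quat_mul(quat_mul(q, (0.0, v[0], v[1], v[2])), (w, -x, -y, -z))
    return r[1:]


def propagate(p, v, q, gyro, accel, dt):
    """One propagation step with gyro and accel held constant over dt.

    Biases and noise are ignored; the specific force is rotated with the
    orientation at the start of the interval (a simplification).
    """
    a_world = tuple(r + g for r, g in zip(rotate(q, accel), GRAVITY))
    p_new = tuple(pi + vi * dt + 0.5 * ai * dt * dt
                  for pi, vi, ai in zip(p, v, a_world))
    v_new = tuple(vi + ai * dt for vi, ai in zip(v, a_world))
    # Constant angular velocity: the rotation increment is exp(gyro * dt).
    q_new = quat_mul(q, quat_from_rotvec(tuple(w * dt for w in gyro)))
    norm = math.sqrt(sum(c * c for c in q_new))
    q_new = tuple(c / norm for c in q_new)
    return p_new, v_new, q_new
```

With a level IMU whose accelerometer reading exactly cancels gravity, the velocity stays unchanged, and a constant yaw rate of π/2 rad/s applied for one second rotates the body x-axis onto the world y-axis.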

Algorithm 1: Inertial Propagation Process Algorithm

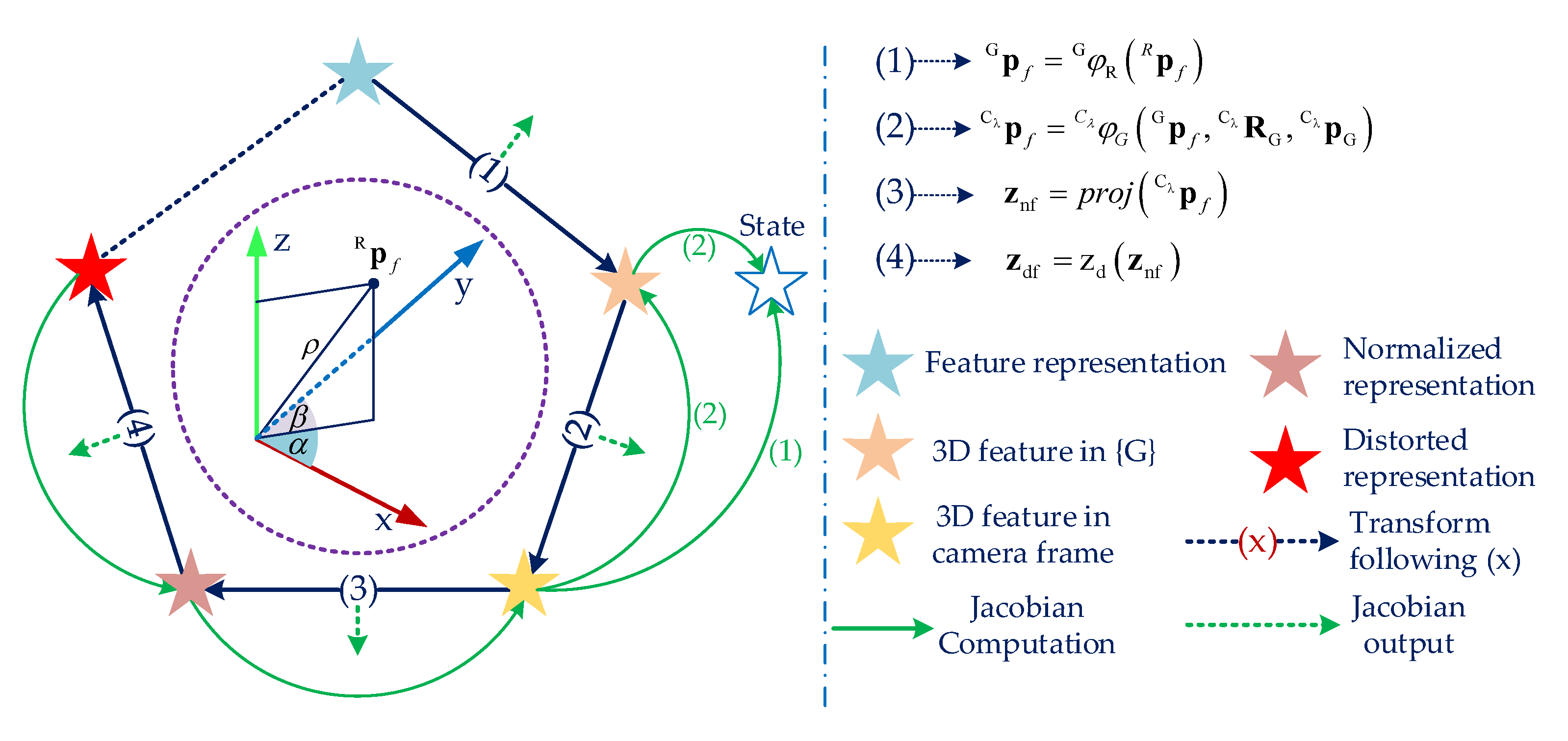

2.3. Visual Measurement Update Model

On-Manifold Correction Phase

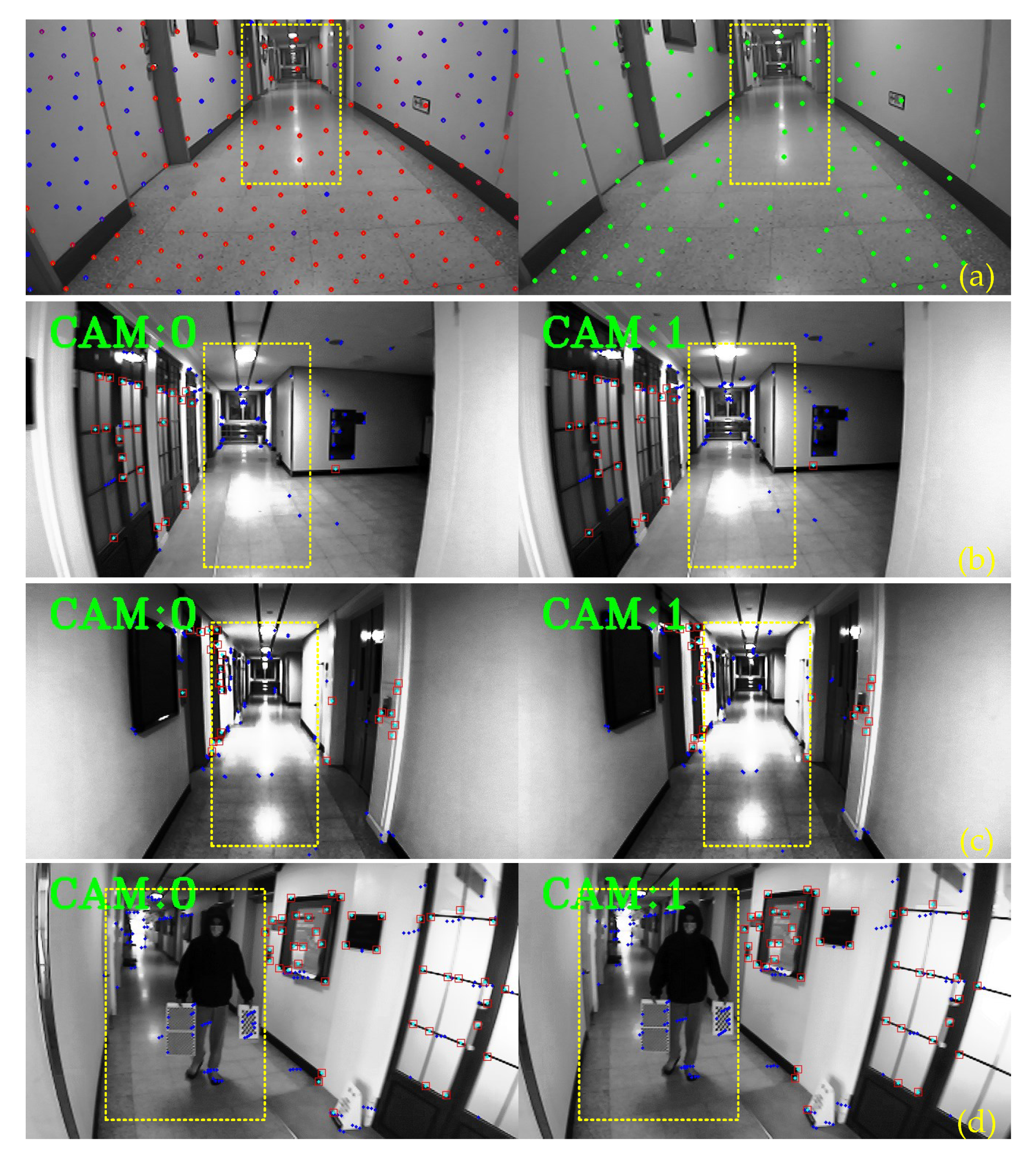

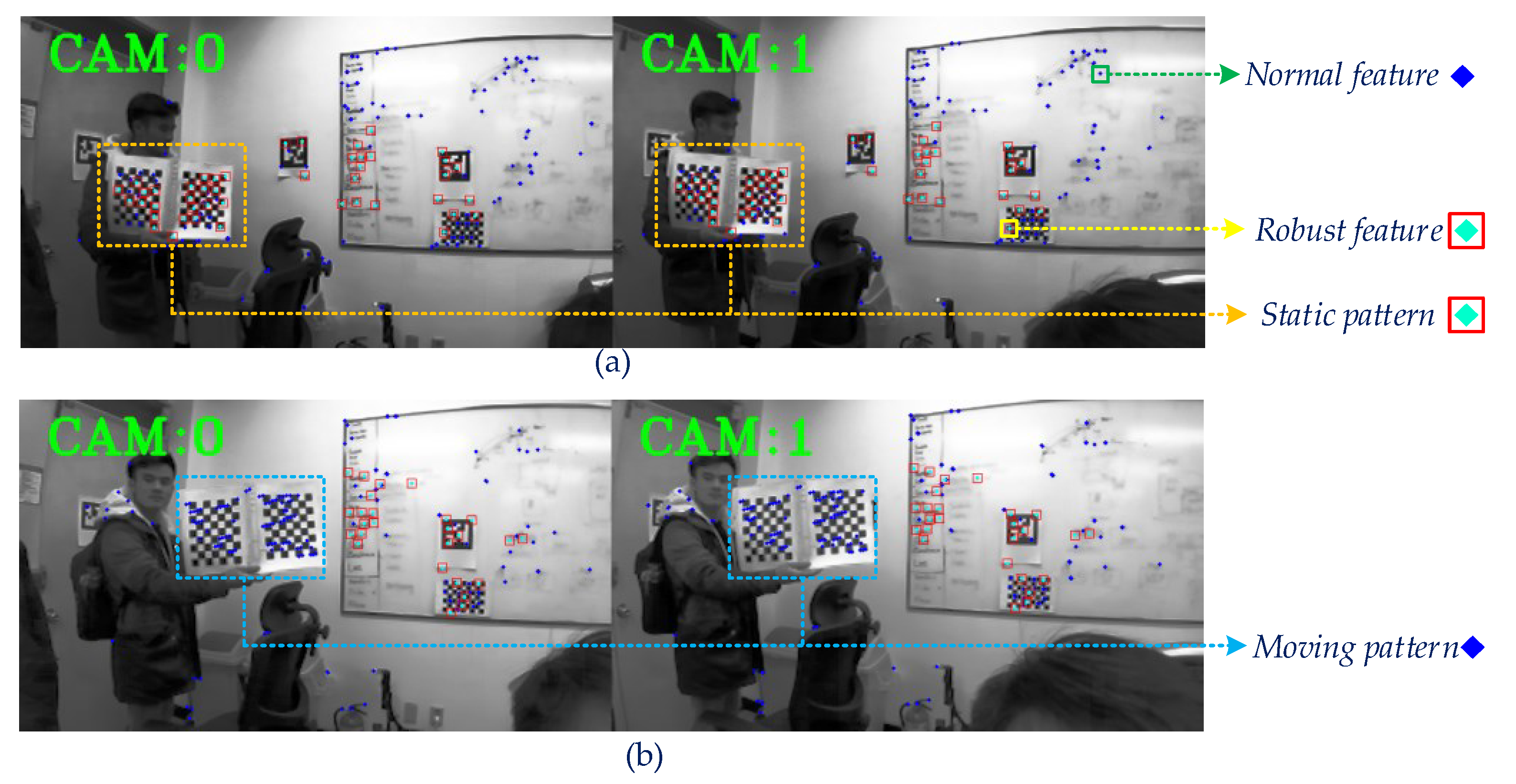

3. Multi-Stage Outlier Removal in Stochastic Environments

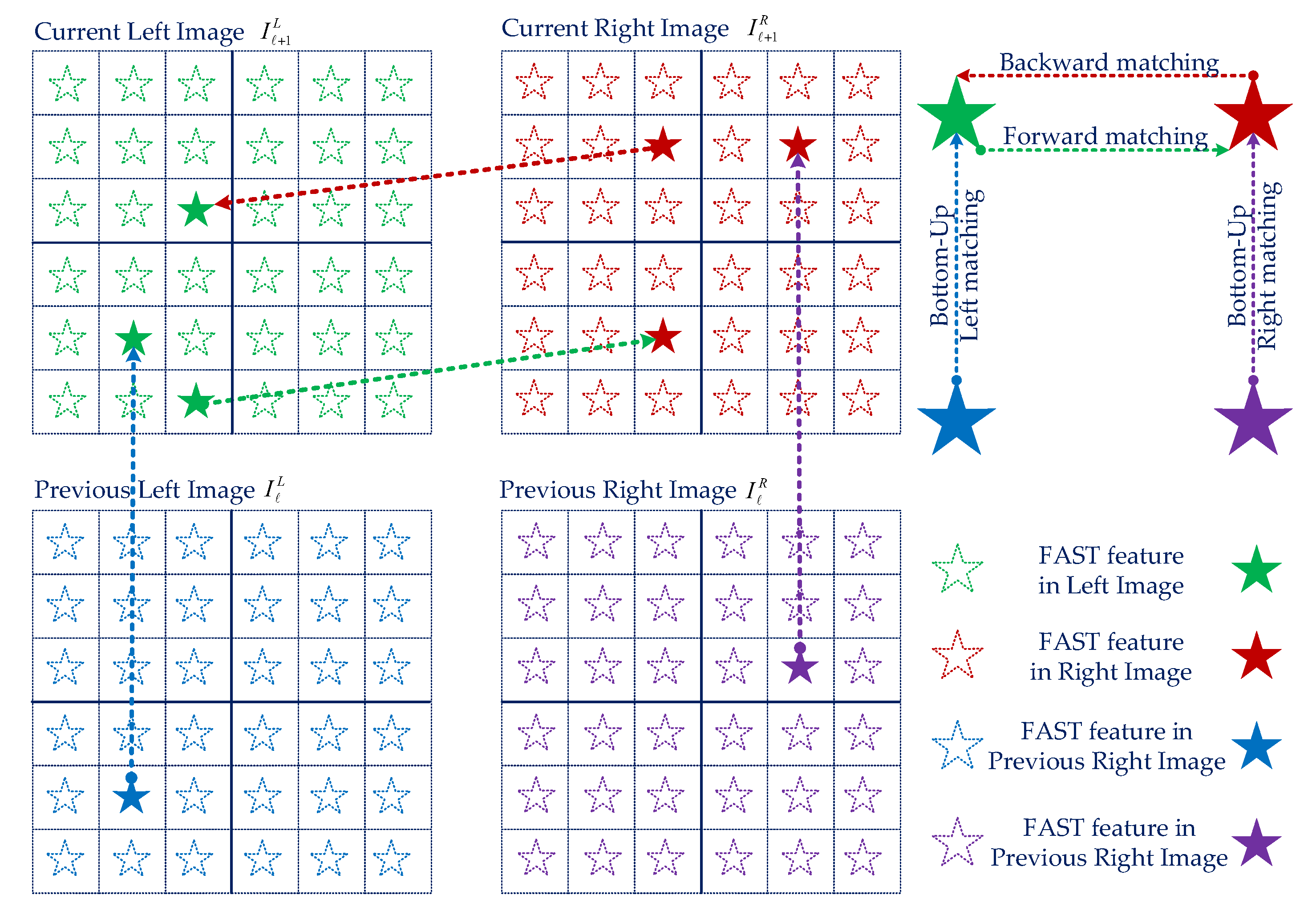

- Stage 1: FAST features are extracted, and the KLT feature tracker is then used together with RANSAC to find the correspondences. We perform forward-backward-up-down matching to correct the tracking;

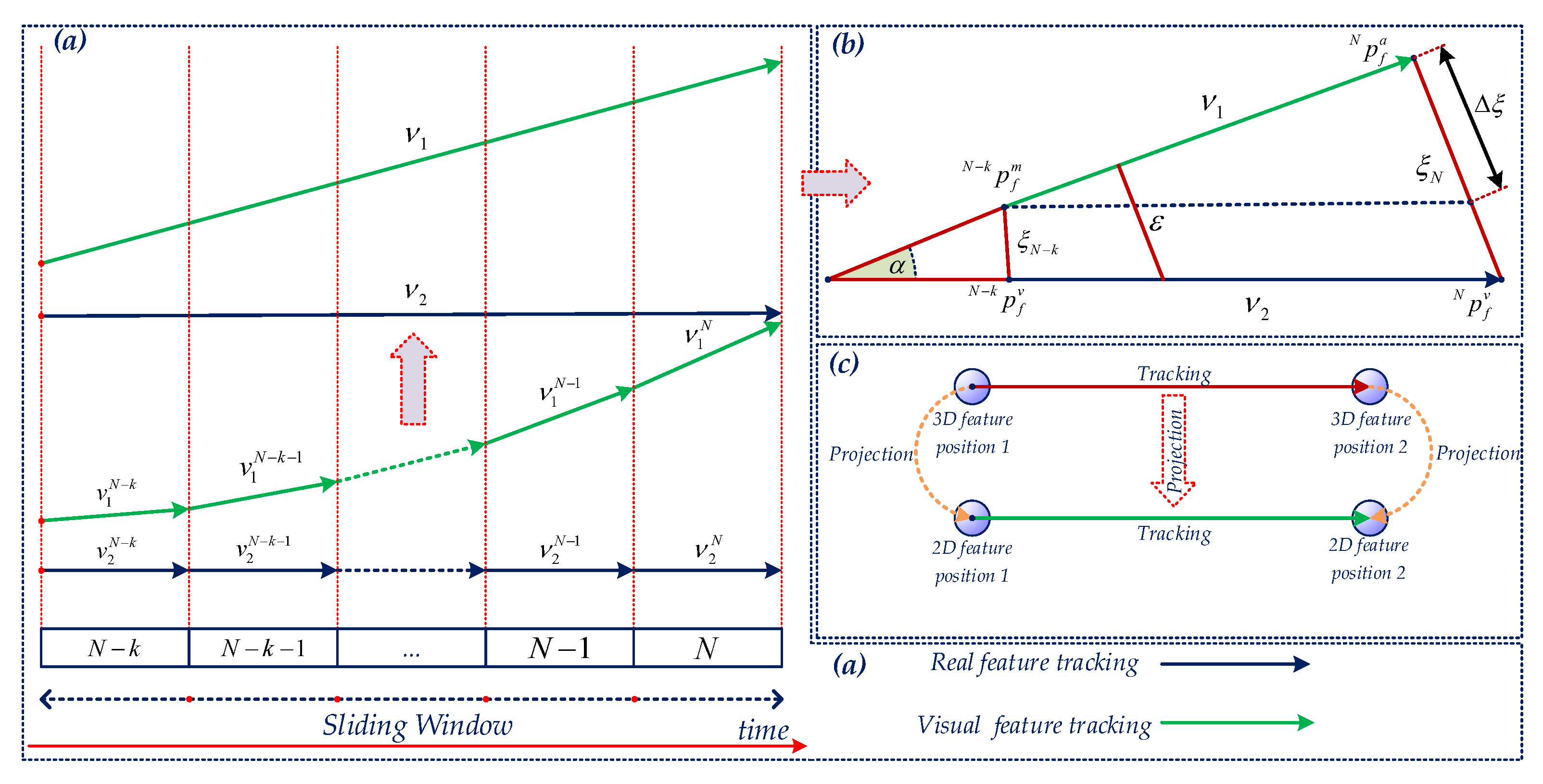

- Stage 2: 3D feature triangulation with an optimization-based solution is used to compute the 3D position of each feature, and outlier rejection is also performed in this phase;

- Stage 3: A robust outlier rejection scheme is proposed that considers the estimated motion state, using feedback information on the robot poses and velocity;

- Stage 4: During the EKF update, as shown in Equation (27), a chi-squared statistical test is used to separate outliers from inliers.
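The gating idea in Stage 4 can be sketched as follows. Equation (27) is not reproduced here, so this is only a generic chi-squared gate under stated assumptions: each feature contributes a 2-D residual with a known 2×2 innovation covariance, and the helper names and the 95% confidence level are hypothetical. For two degrees of freedom the chi-squared quantile has the closed form −2 ln(1 − p), which avoids a lookup table.

```python
import math


def mahalanobis_sq_2d(residual, S):
    """Squared Mahalanobis distance r^T S^-1 r for a 2-D residual.

    S is the 2x2 innovation covariance given as ((a, b), (c, d)).
    """
    (a, b), (c, d) = S
    det = a * d - b * c
    if det <= 0.0:
        raise ValueError("innovation covariance must be positive definite")
    r0, r1 = residual
    # Analytic inverse of a 2x2 matrix: S^-1 = [[d, -b], [-c, a]] / det.
    return (d * r0 * r0 - (b + c) * r0 * r1 + a * r1 * r1) / det


def chi2_threshold_2dof(confidence=0.95):
    """Chi-squared quantile for 2 degrees of freedom (closed form)."""
    return -2.0 * math.log(1.0 - confidence)


def gate_features(residuals, covariances, confidence=0.95):
    """Split feature indices into inliers and outliers with a chi-squared gate."""
    threshold = chi2_threshold_2dof(confidence)
    inliers, outliers = [], []
    for idx, (r, S) in enumerate(zip(residuals, covariances)):
        if mahalanobis_sq_2d(r, S) <= threshold:
            inliers.append(idx)
        else:
            outliers.append(idx)
    return inliers, outliers
```

In a filter with higher-dimensional residuals, the threshold would instead come from a chi-squared table or a statistics library for the matching number of degrees of freedom, and only the inlier residuals would be passed on to the EKF update.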

4. Experimental Results

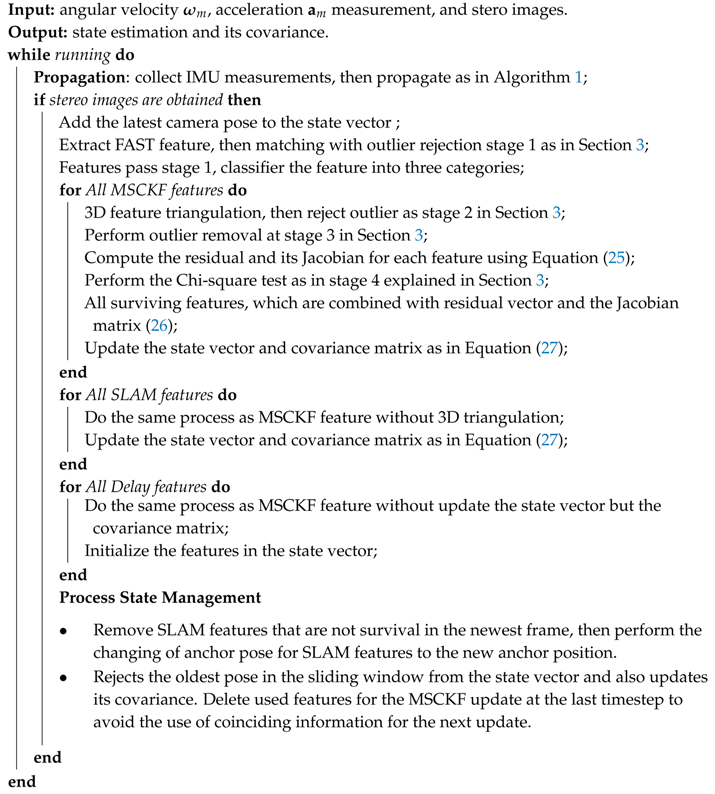

Algorithm 2: A Robust Stereo VINS Algorithm Based on Multi-Stage Outlier Removal

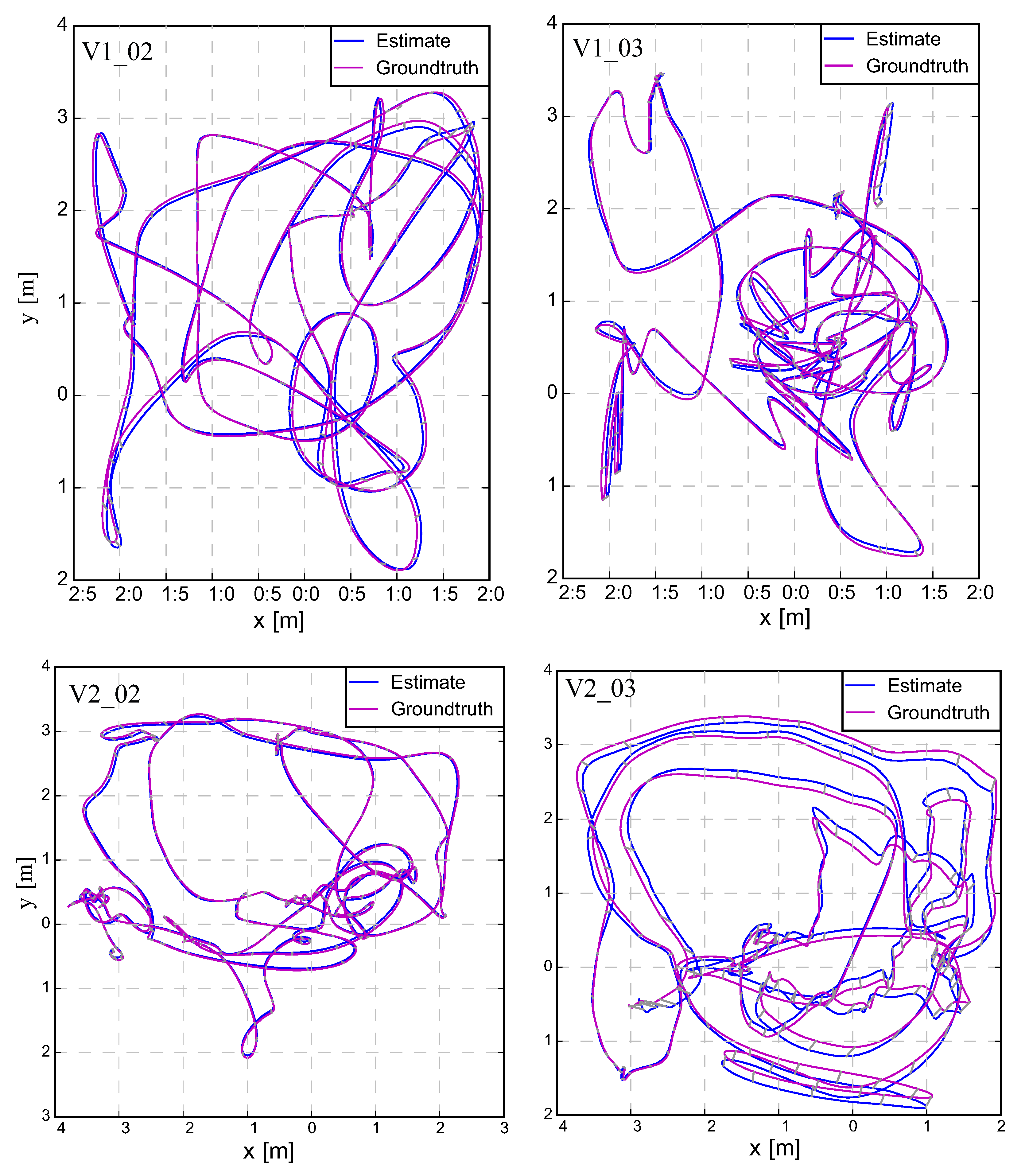

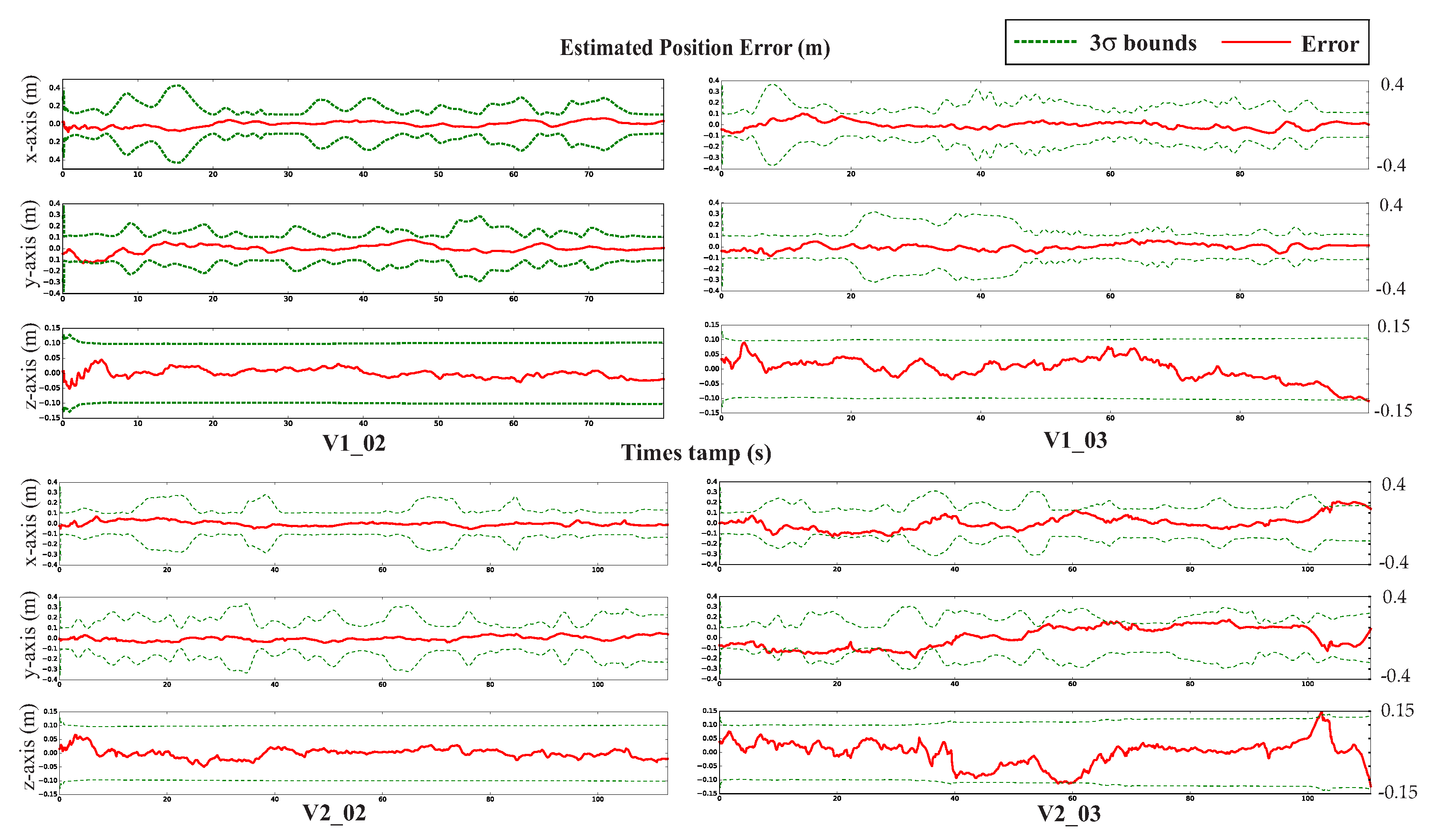

4.1. Experimental Results on the Public Datasets

- Basalt VIO [15]: The most recent state-of-the-art open-source VIO, which employs an optimization-based fixed-lag smoother. This method uses stereo keyframes to extract the relevant information for VIO based on nonlinear factor recovery.

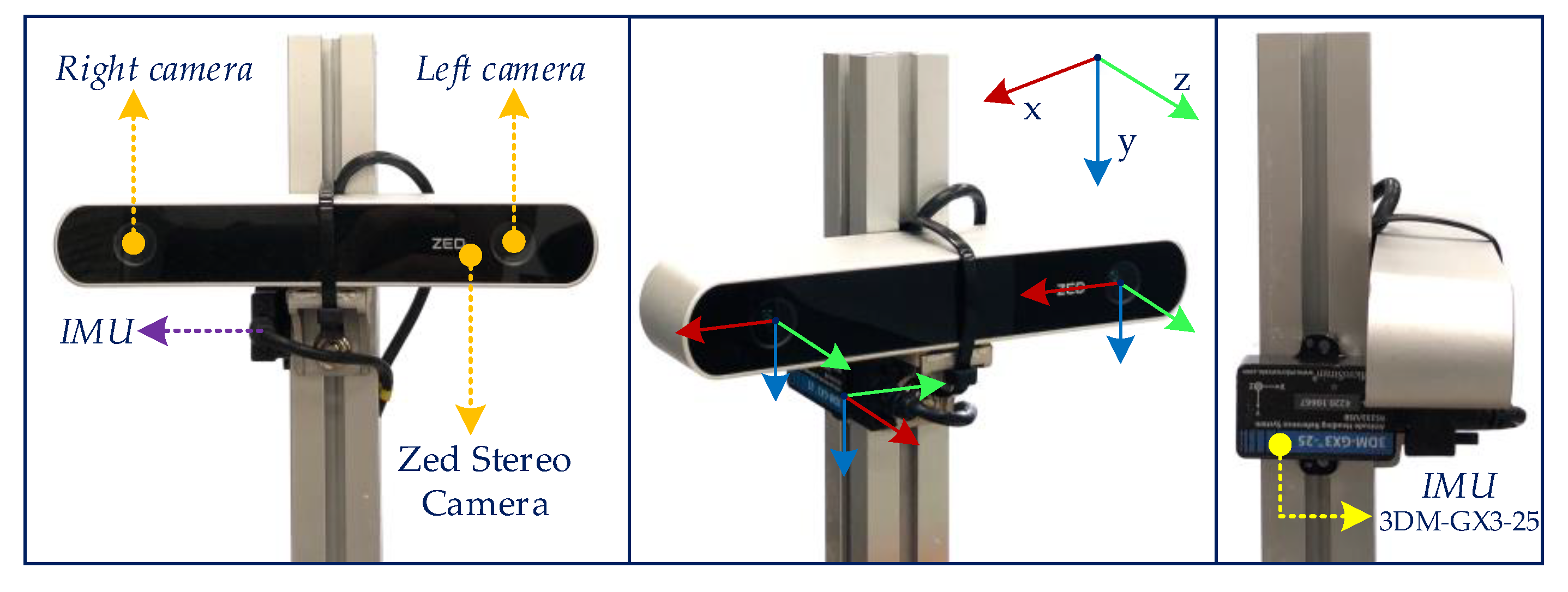

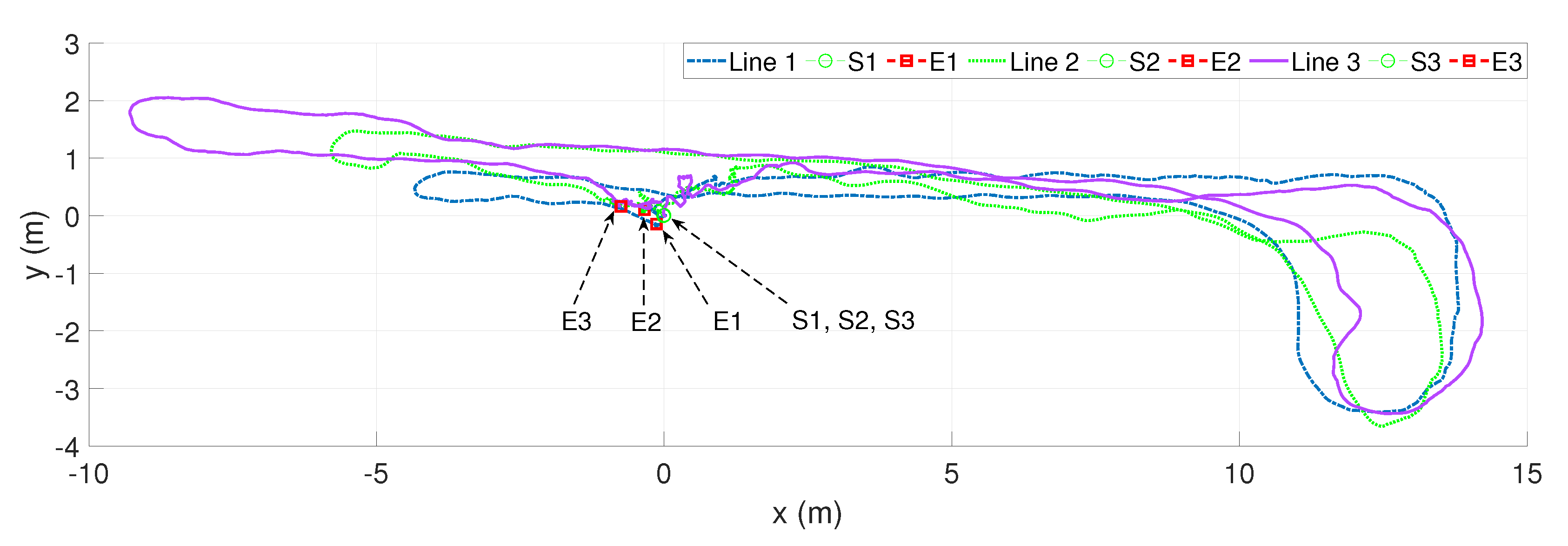

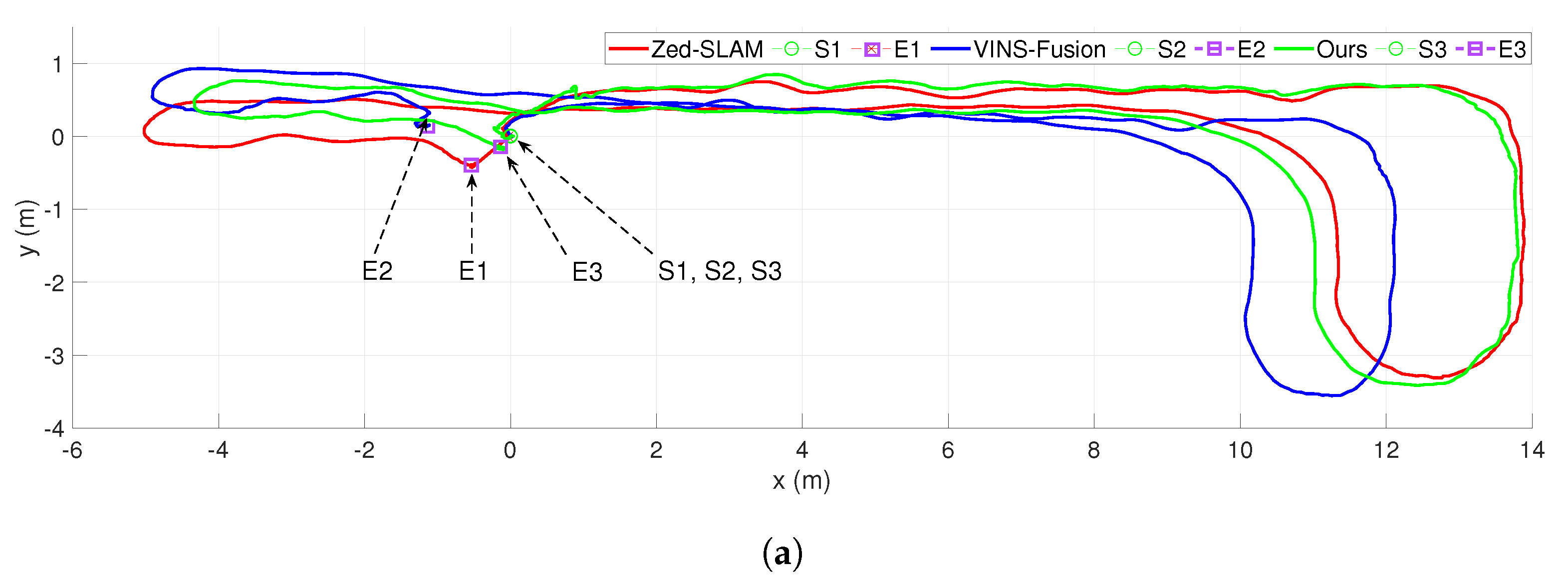

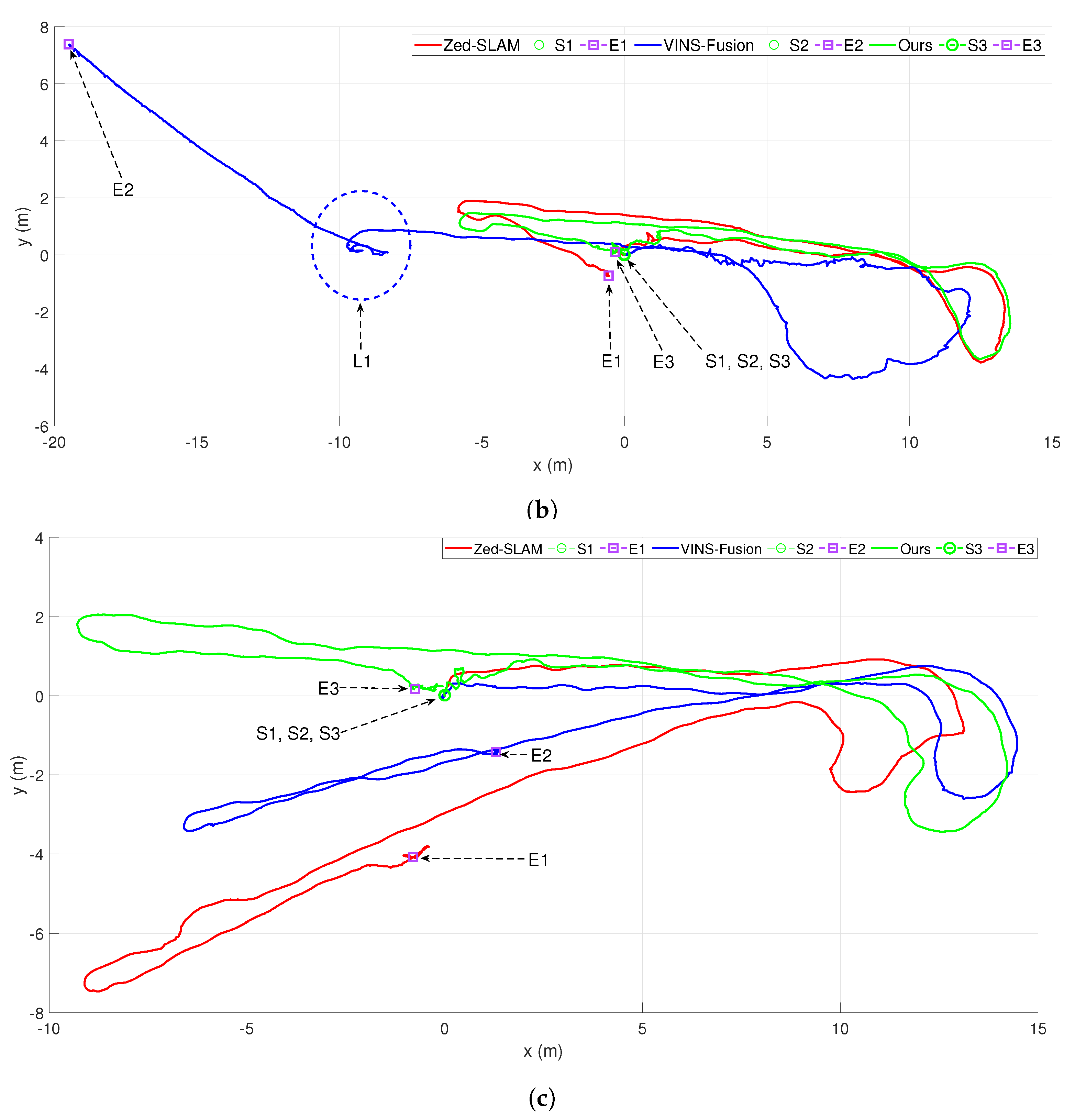

4.2. Experimental Results in an Indoor Dynamic Environment

5. Conclusions

Author Contributions

Acknowledgments

Conflicts of Interest

Appendix A. The State Transition and the Discrete Time Noise Covariance Matrix

References

1. Van, N.D.; Kim, G.W. Development of An Efficient and Practical Control System in Autonomous Vehicle Competition. In Proceedings of the 2019 19th International Conference on Control, Automation and Systems (ICCAS), Jeju, Korea, 15–18 October 2019; pp. 1084–1086.

2. Van, N.D.; Sualeh, M.; Kim, D.; Kim, G.-W. A Hierarchical Control System for Autonomous Driving towards Urban Challenges. Appl. Sci. 2020, 10, 3543.

3. Cadena, C.; Carlone, L.; Carrillo, H.; Latif, Y.; Scaramuzza, D.; Neira, J.; Reid, I.; Leonard, J.J. Past, Present, and Future of Simultaneous Localization and Mapping: Toward the Robust-Perception Age. IEEE Trans. Robot. 2016, 32, 1309–1332.

4. Kok, M.; Hol, J.D.; Schön, T.B. Using Inertial Sensors for Position and Orientation Estimation. arXiv 2017, arXiv:1704.06053.

5. Corke, P.; Lobo, J.; Dias, J. An Introduction to Inertial and Visual Sensing. Int. J. Robot. Res. 2007, 26, 519–535.

6. Barfoot, T.D. State Estimation for Robotics; Cambridge University Press: Cambridge, UK, 2017.

7. Mourikis, A.I.; Roumeliotis, S.I. A multi-state constraint Kalman filter for vision-aided inertial navigation. In Proceedings of the 2007 IEEE International Conference on Robotics and Automation, Roma, Italy, 10–14 April 2007; pp. 3565–3572.

8. Sun, K.; Mohta, K.; Pfrommer, B.; Watterson, M.; Liu, S.; Mulgaonkar, Y.; Taylor, C.J.; Kumar, V. Robust Stereo Visual Inertial Odometry for Fast Autonomous Flight. IEEE Robot. Autom. Lett. 2018, 3, 965–972.

9. Bloesch, M.; Omari, S.; Hutter, M.; Siegwart, R. Robust visual inertial odometry using a direct EKF-based approach. In Proceedings of the 2015 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Hamburg, Germany, 28 September–2 October 2015.

10. Schneider, T.; Dymczyk, M.; Fehr, M.; Egger, K.; Lynen, S.; Gilitschenski, I.; Siegwart, R. Maplab: An open framework for research in visual-inertial mapping and localization. IEEE Robot. Autom. Lett. 2018, 3, 1418–1425.

11. Huai, Z.; Huang, G. Robocentric visual-inertial odometry. Int. J. Robot. Res. 2019.

12. Dellaert, F.; Kaess, M. Factor Graphs for Robot Perception. Found. Trends Robot. 2017, 6, 1–139.

13. Qin, T.; Pan, J.; Cao, S.; Shen, S. A general optimization-based framework for local odometry estimation with multiple sensors. arXiv 2019, arXiv:1901.03638.

14. Qin, T.; Li, P.; Shen, S. VINS-Mono: A robust and versatile monocular visual-inertial state estimator. arXiv 2017, arXiv:1708.03852.

15. Usenko, V.; Demmel, N.; Schubert, D.; Stückler, J.; Cremers, D. Visual-Inertial Mapping with Non-Linear Factor Recovery. IEEE Robot. Autom. Lett. (RA-L) 2020, 5, 2916–2923.

16. Leutenegger, S.; Lynen, S.; Bosse, M.; Siegwart, R.; Furgale, P. Keyframe-based visual-inertial odometry using nonlinear optimization. Int. J. Robot. Res. 2015, 34, 314–334.

17. Forster, C.; Carlone, L.; Dellaert, F.; Scaramuzza, D. On-manifold preintegration for real-time visual-inertial odometry. IEEE Trans. Robot. 2017, 34, 1–21.

18. Forster, C.; Zhang, Z.; Gassner, M.; Werlberger, M.; Scaramuzza, D. SVO: Semidirect visual odometry for monocular and multicamera systems. IEEE Trans. Robot. 2017, 33, 249–265.

19. Rosinol, A.; Abate, M.; Chang, Y.; Carlone, L. Kimera: An Open-Source Library for Real-Time Metric-Semantic Localization and Mapping. arXiv 2019, arXiv:1910.02490.

20. Han, L.; Lin, Y.; Du, G.; Lian, S. DeepVIO: Self-supervised Deep Learning of Monocular Visual Inertial Odometry using 3D Geometric Constraints. arXiv 2019, arXiv:1906.11435.

21. Chen, C.; Rosa, S.; Miao, Y.; Lu, C.X.; Wu, W.; Markham, A.; Trigoni, N. Selective Sensor Fusion for Neural Visual-Inertial Odometry. In Proceedings of the Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 16–20 June 2019; pp. 10534–10543.

22. Silva do Monte Lima, J.P.; Uchiyama, H.; Taniguchi, R.I. End-to-End Learning Framework for IMU-Based 6-DOF Odometry. Sensors 2019, 19, 3777.

23. Goodfellow, I.; Bengio, Y.; Courville, A. Deep Learning; MIT Press: Cambridge, MA, USA, 2016.

24. Van, N.D.; Kim, G.W. Fuzzy Logic and Deep Steering Control based Recommendation System for Self-Driving Car. In Proceedings of the 2018 18th International Conference on Control, Automation and Systems (ICCAS), Daegwallyeong, Korea, 17–20 October 2018; pp. 1107–1110.

25. Huang, G. Visual-inertial navigation: A concise review. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019.

26. Van Dinh, N.; Kim, G.W. Multi-sensor Fusion Towards VINS: A Concise Tutorial, Survey, Framework and Challenges. In Proceedings of the 2020 IEEE International Conference on Big Data and Smart Computing (BigComp), Busan, Korea, 19–22 February 2020; pp. 459–462.

27. Li, M. Visual-Inertial Odometry on Resource-Constrained Systems. Ph.D. Thesis, UC Riverside, Riverside, CA, USA, 2014.

28. Furgale, P.; Rehder, J.; Siegwart, R. Unified temporal and spatial calibration for multi-sensor systems. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, Tokyo, Japan, 3–7 November 2013; pp. 1280–1286.

29. Adam, A.; Rivlin, E.; Shimshoni, I. Ror: Rejection of outliers by rotations. IEEE Trans. Pattern Anal. Mach. Intell. 2001, 23, 78–84.

30. Santana, P.; Correia, L. Improving Visual Odometry by Removing Outliers in Optic Flow. In Proceedings of the 8th Conference on Autonomous Robot Systems and Competitions, Aveiro, Portugal, 2 April 2008.

31. Buczko, M.; Willert, V. How to distinguish inliers from outliers in visual odometry high-speed automotive applications. In Proceedings of the IEEE Intelligent Vehicles Symposium, Gothenburg, Sweden, 19–22 June 2016.

32. Buczko, M.; Willert, V. Monocular Outlier Detection for Visual Odometry. In Proceedings of the IEEE Intelligent Vehicles Symposium (IV), Los Angeles, CA, USA, 11–14 June 2017; pp. 739–745.

33. OpenCV Developers Team. Open Source Computer Vision (OpenCV) Library. Available online: http://opencv.org (accessed on 20 February 2020).

34. Bolme, D.S.; Beveridge, J.R.; Draper, B.A.; Lui, Y.M. Visual object tracking using adaptive correlation filters. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Francisco, CA, USA, 13–18 June 2010; pp. 2544–2550.

35. Trawny, N.; Roumeliotis, S.I. Indirect Kalman Filter For 3d Attitude Estimation; Technical Report; University of Minnesota: Minneapolis, MN, USA, 2005.

36. Maybeck, P.S. Stochastic Models, Estimation, and Control; Academic Press: New York, NY, USA, 1982; Volume 3.

37. Geneva, P.; Eckenhoff, K.; Lee, W.; Yang, Y.; Huang, G. OpenVINS: A Research Platform for Visual-Inertial Estimation. In Proceedings of the IROS 2019 Workshop on Visual-Inertial Navigation: Challenges and Applications, Macau, China, 3–8 November 2019.

38. Golub, G.H.; Van Loan, C.F. Matrix Computations, 3rd ed.; Johns Hopkins: Baltimore, MD, USA, 1996; ISBN 978-0-8018-5414-9.

39. Eigen. Available online: http://eigen.tuxfamily.org (accessed on 20 February 2020).

40. ROS Kinetic Kame. Available online: http://wiki.ros.org/kinetic (accessed on 20 February 2020).

41. Burri, M.; Nikolic, J.; Gohl, P.; Schneider, T.; Rehder, J.; Omari, S.; Achtelik, M.W.; Siegwart, R. The EuRoC micro aerial vehicle datasets. Int. J. Robot. Res. 2016, 35, 1157–1163.

42. Zhang, Z.; Scaramuzza, D. A Tutorial on Quantitative Trajectory Evaluation for Visual-Inertial Odometry. In Proceedings of the International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018; pp. 7244–7251.

43. Geiger, A.; Lenz, P.; Stiller, C.; Urtasun, R. Vision meets robotics: The KITTI dataset. Int. J. Robot. Res. 2013, 32, 1231–1237.

44. Stereolabs ZED Camera. Available online: https://github.com/stereolabs/zed-ros-wrapper (accessed on 20 February 2020).

| Sequence | Length (m) | S-MSCKF (m) | VINS-Fusion (m) | Basalt (m) | Ours (m) |
|---|---|---|---|---|---|
| V1_01_easy | 58.6 | 0.06 | 0.10 | 0.04 | 0.06 |
| V1_02_medium | 75.9 | 0.16 | 0.10 | 0.05 | 0.05 |
| V1_03_difficult | 79.0 | 0.28 | 0.11 | 0.10 | 0.05 |
| V2_01_easy | 36.5 | 0.07 | 0.12 | 0.04 | 0.05 |
| V2_02_medium | 83.2 | 0.15 | 0.10 | 0.07 | 0.03 |
| V2_03_difficult | 86.1 | 0.37 | 0.27 | X | 0.13 |
| MH_01_easy | 80.6 | 0.23 | 0.24 | 0.09 | 0.08 |
| MH_02_easy | 73.5 | 0.23 | 0.18 | 0.06 | 0.06 |
| MH_03_medium | 130.9 | 0.20 | 0.23 | 0.07 | 0.09 |
| MH_04_difficult | 91.7 | 0.35 | 0.39 | 0.13 | 0.18 |
| MH_05_difficult | 97.6 | 0.21 | 0.19 | 0.11 | 0.19 |

| Tasks | Tracking (ms) | Propagation (ms) | Feat Update (ms) | Init and Update (ms) | Marg (ms) | Total (ms) |
|---|---|---|---|---|---|---|
| 37/2/1 | 16.5 | 0.3 | 2.2 | 1.0 | 0.8 | 20.8 |
| 32/21/5 | 13.9 | 0.3 | 1.0 | 6.2 | 1.0 | 22.4 |
| 48/39/5 | 11.4 | 0.4 | 2.8 | 13 | 1.3 | 29.0 |

| Methods | S-MSCKF | VINS-Fusion | Basalt | Ours |
|---|---|---|---|---|
| Average (ms) | 25 | 60 | 53 | 26 |

| Sequences | Case 1–Line 1 | Case 2–Line 2 | Case 3–Line 3 |
|---|---|---|---|
| Length (m) | 43.70 | 46.69 | 55.02 |
| Position error (cm/m) | 0.568 | 0.780 | 1.416 |
| Bearing error (deg/m) | 0.376 | 0.064 | 0.915 |
| ZED error (cm/m) | 1.542 | 1.975 | 8.888 |
| VINS-Fusion error (cm/m) | 2.611 | X (47.672) | 4.384 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Nam, D.V.; Gon-Woo, K. Robust Stereo Visual Inertial Navigation System Based on Multi-Stage Outlier Removal in Dynamic Environments. Sensors 2020, 20, 2922. https://doi.org/10.3390/s20102922
