Global Optimal Structured Embedding Learning for Remote Sensing Image Retrieval

Abstract

1. Introduction

- (1)

- We propose to use a softmax function in our novel loss to solve the key challenge of local optimum in most methods. This is efficient to realize global optimization which could be significant to enhance the performance of RSIR.

- (2)

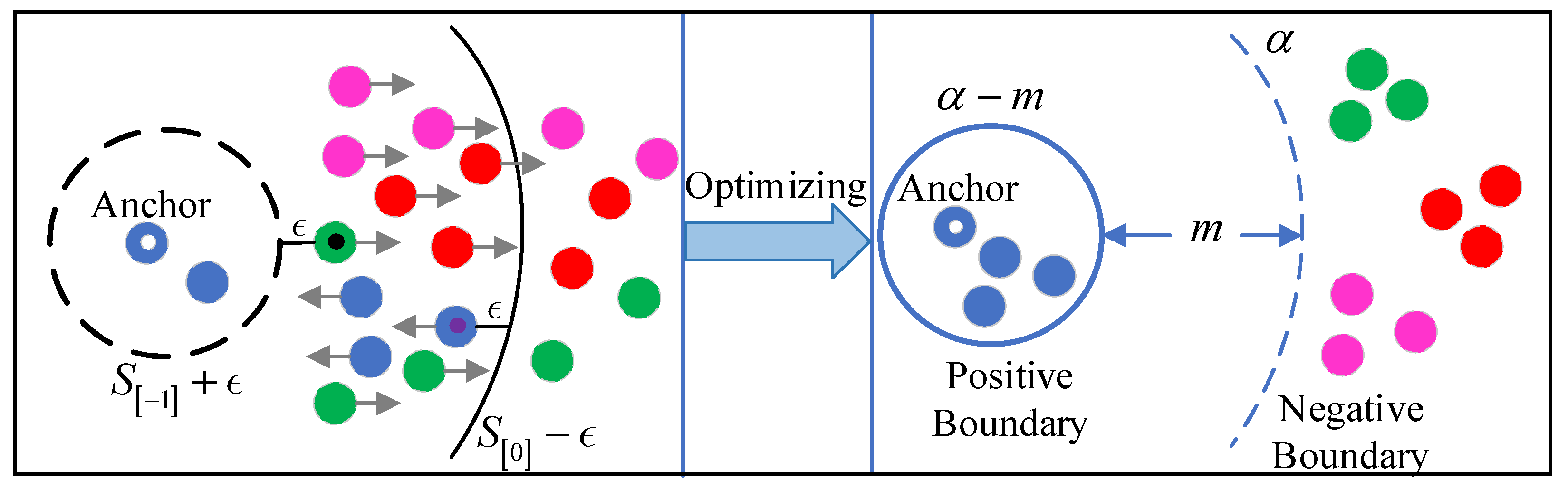

- We present a novel optimal structured loss to globally learn an efficient deep embedding space with mined informative sample pairs to force the positive pairs within a limitation and push the negative ones far away from a given boundary. During training stage, we take the information of all these selected sample pairs and the difference between positive and negative pairs into consideration; make the intraclass samples more compact and the interclass ones more separated while preserving the similarity structure of samples.

- (3)

- To further reveal the effectiveness of the RSIR task under DML paradigm, we perform the task of RSIR with various commonly used metric loss functions on the public remote sensing datasets. These loss functions aim at fine-tuning the pre-trained network to be more adaptive for a certain task. The results show that the proposed method achieves outstanding performance which would be reported in experiments section.

- (4)

- To verify the superiority of our proposed optimal structured loss, we conduct the experiment on multiple remote sensing datasets. The retrieval performance is boosted with approximately 5% on these public remote sensing datasets compared with the existing methods [28,49,50,51] and this demonstrates that our proposed method achieves the state-of-the-art results in the task of RSIR.
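Contribution (1) attributes global optimization to a softmax. The exact loss equations are not reproduced in this section, so the following is an assumption about how a softmax typically enters such a loss. A temperature-scaled log-sum-exp is a smooth upper bound on the maximum, and its gradient with respect to each term is that term's softmax weight. As a result, every pair contributes to the update, rather than only the single hardest pair. The helper names `smooth_max` and `softmax_weights` and the temperature values are illustrative.

```python
import math
from typing import List


def smooth_max(values: List[float], temperature: float) -> float:
    """Temperature-scaled log-sum-exp: T * log(sum(exp(v / T))).

    It upper-bounds max(values) and approaches it as the temperature shrinks.
    """
    top = max(values)  # shift by the max for numerical stability
    return top + temperature * math.log(
        sum(math.exp((v - top) / temperature) for v in values)
    )


def softmax_weights(values: List[float], temperature: float) -> List[float]:
    """Gradient of smooth_max with respect to each value: softmax(values / T)."""
    top = max(values)
    exps = [math.exp((v - top) / temperature) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical similarities between one anchor and three negatives.
neg_sims = [0.2, 0.5, 0.45]
for t in (1.0, 0.1, 0.01):
    weights = [round(w, 3) for w in softmax_weights(neg_sims, t)]
    print(f"T={t}: smooth max={smooth_max(neg_sims, t):.4f}, weights={weights}")
```

At T = 1 the weights are spread over all three negatives. At T = 0.01 almost all of the weight falls on the hardest negative (0.5). The temperature therefore interpolates between using every pair and mining a single hard pair.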

2. Related Work

2.1. Deep Metric Learning

2.1.1. Clustering-Based Structured Loss

2.1.2. Pair-Based Structured Loss

2.1.3. Informative Pairs Mining

2.2. The Development of RSIR Task

3. The Proposed Approach

3.1. Problem Definition

3.2. Global Lifted Structured Loss

3.3. Global Optimal Structured Loss

| Algorithm 1: Global Optimal Structured Loss on a mini-batch. |
|---|
| 1: mini-batch default: The size of every mini-batch is , the number of categories is , and there are instances in every category. |
| 2: hyper-parameters default: The scope constraint for pairs mining is , the negative boundary is , the margin between positive and negative boundary is , the positive and negative temperature and . |
| 3: Input: , the features are extracted by . |
| 4: Output: Updated network parameters . |
| 5: The forward propagation: for do feed forward into network and output the deep feature . |
| 6: Similarity matrix calculation: calculate all similarities in the mini-batch according to the formula to obtain an matrix . |
| 7: Global Optimal Structured Loss computation: |
| 8: For do |
| 9: Construct informative positive pairs set for anchor as Equation (11) |
| 10: Construct informative negative pairs set for anchor as Equation (12) |
| 11: Calculate as Equation (13) for the sampled positive pairs |
| 12: Calculate as Equation (14) for the sampled negative pairs |
| 13: Calculate as Equation (15) for an anchor |
| 14: end for |
| 15: Calculate as Equation (16) for a mini-batch. |
| 16: Backpropagation gradient and network parameters update: |
| 17: . |
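Algorithm 1 lists the per-batch steps, but its symbols and Equations (11)–(16) did not survive extraction. The sketch below is a hedged, pure-Python illustration of a loss built from the same ingredients:

- a cosine similarity matrix (step 6);
- per-anchor mining of informative positive and negative pairs within a scope `eps` (steps 9–10);
- smooth log-sum-exp terms with separate positive and negative temperatures (steps 11–13);
- a batch average (step 15).

The mining rule borrows the criterion of the multi-similarity loss (Wang et al., in the reference list). All parameter names and defaults (`eps`, `neg_boundary`, `margin`, `t_pos`, `t_neg`) are hypothetical, and the formulas are an assumption rather than the paper's exact loss. Backpropagation (steps 16–17) would be handled by an autodiff framework and is omitted.

```python
import math
from typing import List, Sequence


def l2_normalize(v: Sequence[float]) -> List[float]:
    norm = math.sqrt(sum(x * x for x in v)) or 1.0  # guard against zero vectors
    return [x / norm for x in v]


def similarity_matrix(feats: Sequence[Sequence[float]]) -> List[List[float]]:
    """Cosine similarities between all pairs of features in the mini-batch."""
    z = [l2_normalize(f) for f in feats]
    return [[sum(a * b for a, b in zip(zi, zj)) for zj in z] for zi in z]


def smooth_hinge(xs: List[float], temperature: float) -> float:
    """T * log(1 + sum(exp(x / T))), a smooth stand-in for max(0, max(xs))."""
    if not xs:
        return 0.0
    top = max(0.0, max(xs))  # shift for numerical stability
    return top + temperature * math.log(
        math.exp(-top / temperature) + sum(math.exp((x - top) / temperature) for x in xs)
    )


def pair_loss(
    feats: Sequence[Sequence[float]],
    labels: Sequence[int],
    eps: float = 0.1,           # hypothetical mining scope
    neg_boundary: float = 0.5,  # hypothetical: negatives above this similarity are penalized
    margin: float = 0.2,        # hypothetical: positives are penalized below neg_boundary + margin
    t_pos: float = 0.1,         # hypothetical positive temperature
    t_neg: float = 0.1,         # hypothetical negative temperature
) -> float:
    s = similarity_matrix(feats)
    n = len(labels)
    pos_boundary = neg_boundary + margin
    total = 0.0
    for i in range(n):
        pos = [s[i][j] for j in range(n) if j != i and labels[j] == labels[i]]
        neg = [s[i][k] for k in range(n) if labels[k] != labels[i]]
        if not pos or not neg:
            continue  # this anchor has no valid pairs in the batch
        # Informative pairs (multi-similarity-style rule, an assumption):
        # positives less similar than the hardest negative plus eps,
        # negatives more similar than the hardest positive minus eps.
        hard_pos = [p for p in pos if p - eps < max(neg)]
        hard_neg = [q for q in neg if q + eps > min(pos)]
        loss_pos = smooth_hinge([pos_boundary - p for p in hard_pos], t_pos)
        loss_neg = smooth_hinge([q - neg_boundary for q in hard_neg], t_neg)
        total += loss_pos + loss_neg
    return total / n  # average over all anchors in the mini-batch


toy_feats = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]]
print(pair_loss(toy_feats, [0, 0, 1, 1]))  # labels that agree with the geometry
print(pair_loss(toy_feats, [0, 1, 0, 1]))  # labels that contradict the geometry
```

With the first labelling, the mining step discards every pair, so the loss is exactly zero. With the second, mismatched positives and negatives survive mining and the loss is positive.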

3.4. RSIR Framework Based on Global Optimal Structured Loss

4. Experiments and Discussion

4.1. Experimental Setup

4.1.1. Experimental Implementation

4.1.2. Datasets and Training

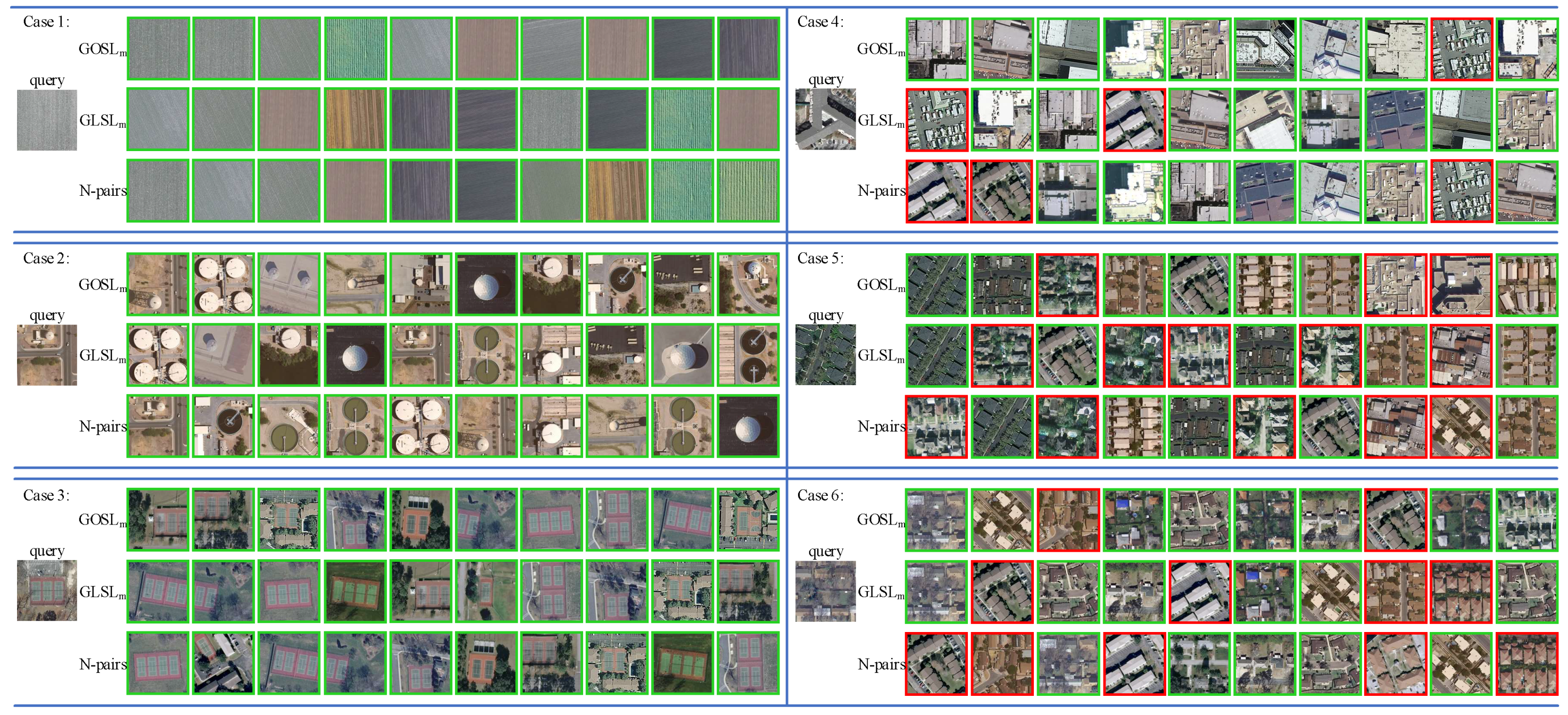

4.2. Comparison with the Baselines

4.3. Comparison with Multiple DML Methods in the Field of RSIR

4.4. Ablation Study

4.4.1. Hyper-Parameter Analysis

4.4.2. Impact of Embedding Size

4.4.3. Impact of Batch Size

4.5. The Retrieval Execution Complexity

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Cheng, G.; Han, J.; Lu, X. Remote sensing image scene classification: Benchmark and state of the art. Proc. IEEE 2017, 105, 1865–1883.

- Xia, G.; Tong, X.; Hu, F.; Zhong, Y.; Datcu, M.; Zhang, L. Exploiting Deep Features for Remote Sensing Image Retrieval: A Systematic Investigation. IEEE Trans. Big Data 2019, 1.

- Cheng, G.; Han, J. A survey on object detection in optical remote sensing images. ISPRS J. Photogramm. Remote Sens. 2016, 117, 11–28.

- Gislason, P.O.; Benediktsson, J.A.; Sveinsson, J.R. Random forests for land cover classification. Pattern Recognit. Lett. 2006, 27, 294–300.

- Gu, Y.; Wang, Y.; Li, Y. A Survey on Deep Learning-Driven Remote Sensing Image Scene Understanding: Scene Classification, Scene Retrieval and Scene-Guided Object Detection. Appl. Sci. 2019, 9, 2110.

- Du, P.; Chen, Y.; Hong, T.; Tao, F. Study on content-based remote sensing image retrieval. In Proceedings of the 2005 IEEE International Geoscience and Remote Sensing Symposium (IGARSS’05), Seoul, Korea, 29 July 2005; p. 4.

- Zhao, L.; Tang, J.; Yu, X.; Li, Y.; Mi, S.; Zhang, C. Content-based remote sensing image retrieval using image multi-feature combination and svm-based relevance feedback. In Recent Advances in Computer Science and Information Engineering; Springer: Berlin, Germany, 2012; pp. 761–767.

- Datcu, M.; Daschiel, H.; Pelizzari, A.; Quartulli, M.; Galoppo, A.; Colapicchioni, A.; Pastori, M.; Seidel, K.; Marchetti, P.G.; Delia, S. Information mining in remote sensing image archives: System concepts. IEEE Trans. Geosci. Remote Sens. 2003, 41, 2923–2936.

- Ozkan, S.; Ates, T.; Tola, E.; Soysal, M.; Esen, E. Performance Analysis of State-of-the-Art Representation Methods for Geographical Image Retrieval and Categorization. IEEE Geosci. Remote Sens. Lett. 2014, 11, 1996–2000.

- Ge, Y.; Jiang, S.; Xu, Q.; Jiang, C.; Ye, F. Exploiting representations from pre-trained convolutional neural networks for high-resolution remote sensing image retrieval. Multimed. Tools Appl. 2018, 77, 17489–17515.

- Manjunath, B.S.; Ma, W.-Y. Texture features for browsing and retrieval of image data. IEEE Trans. Pattern Anal. Mach. Intell. 1996, 18, 837–842.

- Bretschneider, T.; Cavet, R.; Kao, O. Retrieval of remotely sensed imagery using spectral information content. In Proceedings of the IEEE International Geoscience and Remote Sensing Symposium, Toronto, ON, Canada, 24–28 June 2002; pp. 2253–2255.

- Xia, G.-S.; Delon, J.; Gousseau, Y. Shape-based invariant texture indexing. Int. J. Comput. Vis. 2010, 88, 382–403.

- Agouris, P.; Carswell, J.; Stefanidis, A. An environment for content-based image retrieval from large spatial databases. ISPRS J. Photogramm. Remote Sens. 1999, 54, 263–272.

- Lowe, D.G. Object recognition from local scale-invariant features. In Proceedings of the International Conference on Computer Vision, Kerkyra, Greece, 20–27 September 1999; pp. 1150–1157.

- Yang, Y.; Newsam, S. Geographic Image Retrieval Using Local Invariant Features. IEEE Trans. Geosci. Remote Sens. 2013, 51, 818–832.

- Zhu, Q.; Zhong, Y.; Zhao, B.; Xia, G.-S.; Zhang, L. Bag-of-visual-words scene classifier with local and global features for high spatial resolution remote sensing imagery. IEEE Geosci. Remote Sens. Lett. 2016, 13, 747–751.

- Napoletano, P. Visual descriptors for content-based retrieval of remote-sensing images. Int. J. Remote Sens. 2018, 39, 1343–1376.

- Zhao, B.; Zhong, Y.; Zhang, L.; Huang, B. The Fisher kernel coding framework for high spatial resolution scene classification. Remote Sens. 2016, 8, 157.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet classification with deep convolutional neural networks. In Proceedings of the Advances in Neural Information Processing Systems 25 (NIPS 2012), Stateline, NV, USA, 3–8 December 2012; pp. 1097–1105.

- Razavian, A.S.; Sullivan, J.; Carlsson, S.; Maki, A. Visual Instance Retrieval with Deep Convolutional Networks. ITE Trans. Media Technol. Appl. 2014, 4, 251–258.

- Babenko, A.; Slesarev, A.; Chigorin, A.; Lempitsky, V. Neural Codes for Image Retrieval. In Proceedings of the European Conference on Computer Vision (ECCV 2014), Zurich, Switzerland, 6–12 September 2014; pp. 584–599.

- Gordo, A.; Almazán, J.; Revaud, J.; Larlus, D. Deep Image Retrieval: Learning Global Representations for Image Search. In Proceedings of the European Conference on Computer Vision (ECCV 2016), Amsterdam, The Netherlands, 11–14 October 2016; pp. 241–257.

- Radenović, F.; Tolias, G.; Chum, O. Fine-tuning CNN image retrieval with no human annotation. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 41, 1655–1668.

- Zheng, L.; Yang, Y.; Tian, Q. SIFT meets CNN: A decade survey of instance retrieval. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 40, 1224–1244.

- Sünderhauf, N.; Shirazi, S.; Jacobson, A.; Dayoub, F.; Pepperell, E.; Upcroft, B.; Milford, M. Place recognition with convnet landmarks: Viewpoint-robust, condition-robust, training-free. In Proceedings of the Robotics: Science Systems XII, Roma, Italy, 13–17 July 2015; pp. 13–17.

- He, N.; Fang, L.; Li, S.; Plaza, A.; Plaza, J. Remote sensing scene classification using multilayer stacked covariance pooling. IEEE Trans. Geosci. Remote Sens. 2018, 56, 6899–6910.

- Marmanis, D.; Datcu, M.; Esch, T.; Stilla, U. Deep learning earth observation classification using ImageNet pretrained networks. IEEE Geosci. Remote Sens. Lett. 2015, 13, 105–109.

- Li, S.; Song, W.; Fang, L.; Chen, Y.; Ghamisi, P.; Benediktsson, J.A. Deep Learning for Hyperspectral Image Classification: An Overview. IEEE Trans. Geosci. Remote Sens. 2019, 57, 6690–6709.

- Cheng, G.; Yang, C.; Yao, X.; Guo, L.; Han, J. When deep learning meets metric learning: Remote sensing image scene classification via learning discriminative CNNs. IEEE Trans. Geosci. Remote Sens. 2018, 56, 2811–2821.

- Zhou, W.; Newsam, S.; Li, C.; Shao, Z. PatternNet: A benchmark dataset for performance evaluation of remote sensing image retrieval. ISPRS J. Photogramm. Remote Sens. 2018, 145, 197–209.

- Xiong, W.; Lv, Y.; Cui, Y.; Zhang, X.; Gu, X. A Discriminative Feature Learning Approach for Remote Sensing Image Retrieval. Remote Sens. 2019, 11, 281.

- Roy, S.; Sangineto, E.; Demir, B.; Sebe, N. Deep metric and hash-code learning for content-based retrieval of remote sensing images. In Proceedings of the 2018 IEEE International Geoscience and Remote Sensing Symposium (IGARSS 2018), Valencia, Spain, 22–27 July 2018; pp. 4539–4542.

- Cao, R.; Zhang, Q.; Zhu, J.; Li, Q.; Li, Q.; Liu, B.; Qiu, G. Enhancing Remote Sensing Image Retrieval with Triplet Deep Metric Learning Network. Int. J. Remote Sens. 2020, 41, 740–751.

- Roy, S.; Sangineto, E.; Demir, B.; Sebe, N. Metric-Learning based Deep Hashing Network for Content Based Retrieval of Remote Sensing Images. arXiv 2019, arXiv:1904.01258.

- Gong, Z.; Zhong, P.; Yu, Y.; Hu, W. Diversity-promoting deep structural metric learning for remote sensing scene classification. IEEE Trans. Geosci. Remote Sens. 2017, 56, 371–390.

- Song, W.; Li, S.; Benediktsson, J.A. Deep Hashing Learning for Visual and Semantic Retrieval of Remote Sensing Images. arXiv 2019, arXiv:1909.04614.

- Lowe, D.G. Similarity metric learning for a variable-kernel classifier. Neural Comput. 1995, 7, 72–85.

- Mika, S.; Rätsch, G.; Weston, J.; Schölkopf, B.; Müller, K.-R. Fisher discriminant analysis with kernels. In Proceedings of the Neural Networks for Signal Processing IX: Proceedings of the 1999 IEEE Signal Processing Society Workshop (Cat. No. 98TH8468), Madison, WI, USA, 25 August 1999; pp. 41–48.

- Xing, E.P.; Jordan, M.I.; Russell, S.J.; Ng, A.Y. Distance metric learning with application to clustering with side-information. In Proceedings of the Advances in Neural Information Processing Systems, British Columbia, Canada, 8–13 December 2003; pp. 521–528.

- Hadsell, R.; Chopra, S.; LeCun, Y. Dimensionality reduction by learning an invariant mapping. In Proceedings of the 2006 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’06), New York, NY, USA, 17–22 June 2006; pp. 1735–1742.

- Hoffer, E.; Ailon, N. Deep metric learning using triplet network. In Proceedings of the International Workshop on Similarity-Based Pattern Recognition, Copenhagen, Denmark, 12–14 October 2015; pp. 84–92.

- Sohn, K. Improved deep metric learning with multi-class n-pair loss objective. In Proceedings of the Advances in Neural Information Processing Systems, Barcelona, Spain, 5–10 December 2016; pp. 1857–1865.

- Oh Song, H.; Xiang, Y.; Jegelka, S.; Savarese, S. Deep metric learning via lifted structured feature embedding. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 4004–4012.

- Wang, X.; Han, X.; Huang, W.; Dong, D.; Scott, M.R. Multi-Similarity Loss with General Pair Weighting for Deep Metric Learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 5022–5030.

- Wang, X.; Hua, Y.; Kodirov, E.; Hu, G.; Garnier, R.; Robertson, N.M. Ranked List Loss for Deep Metric Learning. arXiv 2019, arXiv:1903.03238.

- Law, M.T.; Thome, N.; Cord, M. Quadruplet-wise image similarity learning. In Proceedings of the IEEE International Conference on Computer Vision (ICCV 2013), Sydney, Australia, 1–8 December 2013; pp. 249–256.

- Chen, W.; Chen, X.; Zhang, J.; Huang, K. Beyond Triplet Loss: A Deep Quadruplet Network for Person Re-identification. In Proceedings of the Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1320–1329.

- Imbriaco, R.; Sebastian, C.; Bondarev, E. Aggregated Deep Local Features for Remote Sensing Image Retrieval. Remote Sens. 2019, 11, 493.

- Tang, X.; Zhang, X.; Liu, F.; Jiao, L. Unsupervised deep feature learning for remote sensing image retrieval. Remote Sens. 2018, 10, 1243.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 770–778.

- Wohlhart, P.; Lepetit, V. Learning descriptors for object recognition and 3d pose estimation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3109–3118.

- Schroff, F.; Kalenichenko, D.; Philbin, J. Facenet: A unified embedding for face recognition and clustering. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 815–823.

- Wen, Y.; Zhang, K.; Li, Z.; Qiao, Y. A discriminative feature learning approach for deep face recognition. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; pp. 499–515.

- Zheng, X.; Ji, R.; Sun, X.; Wu, Y.; Huang, F.; Yang, Y. Centralized Ranking Loss with Weakly Supervised Localization for Fine-Grained Object Retrieval. In Proceedings of the IJCAI, Stockholm, Sweden, 13–19 July 2018; pp. 1226–1233.

- Zheng, X.; Ji, R.; Sun, X.; Zhang, B.; Wu, Y.; Wu, Y. Towards Optimal Fine Grained Retrieval via Decorrelated Centralized Loss with Normalize-Scale layer. In Proceedings of the National Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; pp. 9291–9298.

- Oh Song, H.; Jegelka, S.; Rathod, V.; Murphy, K. Deep metric learning via facility location. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 5382–5390.

- Manning, C.D.; Raghavan, P.; Schütze, H. Introduction to Information Retrieval; Cambridge University Press: Cambridge, UK, 2008.

- He, X.; Zhou, Y.; Zhou, Z.; Bai, S.; Bai, X. Triplet-center loss for multi-view 3d object retrieval. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 1945–1954.

- Harwood, B.; Kumar, B.G.; Carneiro, G.; Reid, I.; Drummond, T. Smart Mining for Deep Metric Learning. In Proceedings of the International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2840–2848.

- Haralick, R.M.; Shanmugam, K.; Dinstein, I.H. Textural features for image classification. IEEE Trans. Syst. Man Cybern. 1973, SMC-3, 610–621.

- Datcu, M.; Seidel, K.; Walessa, M. Spatial information retrieval from remote-sensing images. I. Information theoretical perspective. Int. Geosci. Remote Sens. Symp. 1998, 36, 1431–1445.

- Schroder, M.; Rehrauer, H.; Seidel, K.; Datcu, M. Spatial information retrieval from remote-sensing images. II. Gibbs-Markov random fields. Int. Geosci. Remote Sens. Symp. 1998, 36, 1446–1455.

- Daschiel, H.; Datcu, M.P. Cluster structure evaluation of dyadic k-means algorithm for mining large image archives. In Proceedings of the Image and Signal Processing for Remote Sensing VIII, Crete, Greece, 23–27 September 2002; pp. 120–130.

- Shyu, C.-R.; Klaric, M.; Scott, G.J.; Barb, A.S.; Davis, C.H.; Palaniappan, K. GeoIRIS: Geospatial information retrieval and indexing system—Content mining, semantics modeling, and complex queries. IEEE Trans. Geosci. Remote Sens. 2007, 45, 839–852.

- Yang, Y.; Newsam, S. Bag-of-visual-words and spatial extensions for land-use classification. In Proceedings of the 18th SIGSPATIAL International Conference on Advances in Geographic Information Systems, San Jose, CA, USA, 2–5 November 2010; pp. 270–279.

- Pham, M.-T.; Mercier, G.; Regniers, O.; Michel, J. Texture retrieval from VHR optical remote sensed images using the local extrema descriptor with application to vineyard parcel detection. Remote Sens. 2016, 8, 368.

- Yang, J.; Wong, M.S.; Ho, H.C. Retrieval of Urban Surface Temperature Using Remote Sensing Satellite Imagery. In Big Data for Remote Sensing: Visualization, Analysis and Interpretation; Springer: Cham, Switzerland, 2019; pp. 129–154.

- Mushore, T.D.; Dube, T.; Manjowe, M.; Gumindoga, W.; Chemura, A.; Rousta, I.; Odindi, J.; Mutanga, O. Remotely sensed retrieval of Local Climate Zones and their linkages to land surface temperature in Harare metropolitan city, Zimbabwe. Urban Clim. 2019, 27, 259–271.

- Bai, Y.; Yu, W.; Xiao, T.; Xu, C.; Yang, K.; Ma, W.-Y.; Zhao, T. Bag-of-words based deep neural network for image retrieval. In Proceedings of the MM’14 22nd ACM International Conference on Multimedia; Association for Computing Machinery: New York, NY, USA, 2014; pp. 229–232.

- Li, Y.; Zhang, Y.; Tao, C.; Zhu, H. Content-based high-resolution remote sensing image retrieval via unsupervised feature learning and collaborative affinity metric fusion. Remote Sens. 2016, 8, 709.

- Li, Y.; Zhang, Y.; Huang, X.; Zhu, H.; Ma, J. Large-scale remote sensing image retrieval by deep hashing neural networks. IEEE Trans. Geosci. Remote Sens. 2017, 56, 950–965.

- Li, Y.; Zhang, Y.; Huang, X.; Ma, J. Learning source-invariant deep hashing convolutional neural networks for cross-source remote sensing image retrieval. IEEE Trans. Geosci. Remote Sens. 2018, 56, 6521–6536.

- Hermans, A.; Beyer, L.; Leibe, B. In Defense of the Triplet Loss for Person Re-Identification. arXiv 2017, arXiv:1703.07737.

- Ioffe, S.; Szegedy, C. Batch Normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift. In Proceedings of the International Conference on Machine Learning, Lille, France, 6–11 July 2015; pp. 448–456.

- Russakovsky, O.; Deng, J.; Su, H.; Krause, J.; Satheesh, S.; Ma, S.; Huang, Z.; Karpathy, A.; Khosla, A.; Bernstein, M.S. ImageNet Large Scale Visual Recognition Challenge. Int. J. Comput. Vis. 2015, 115, 211–252.

- Tang, X.; Jiao, L.; Emery, W.J.; Liu, F.; Zhang, D. Two-stage reranking for remote sensing image retrieval. IEEE Trans. Geosci. Remote Sens. 2017, 55, 5798–5817.

- Zhao, B.; Zhong, Y.; Xia, G.-S.; Zhang, L. Dirichlet-derived multiple topic scene classification model for high spatial resolution remote sensing imagery. IEEE Trans. Geosci. Remote Sens. 2015, 54, 2108–2123.

| Baseline | Feature Representations | Representation Size |
|---|---|---|
| DN7 [28] | Convolutional | 4096 |
| DN8 [28] | Convolutional | 4096 |
| ResNet50 [51] | Convolutional + VLAD | 1500 |
| DBOW [50] | Convolutional + BoW | 16,384 |
| ADLF [49] | Convolutional + VLAD | 16,384 |

| Method | UCMD | SATREM | SIRI | NWPU |
|---|---|---|---|---|
| DN7 [28] | 70.4 | 74.0 | 70.0 | 60.5 |
| DN8 [28] | 70.5 | 74.0 | 69.6 | 59.5 |
| ResNet50 [51] | 81.6 | 76.4 | 86.2 | 79.8 |
| DBOW [50] | 83.0 | 93.3 | 92.6 | 82.1 |
| ADLF [49] | 91.6 | 89.5 | 83.8 | 85.7 |
| GOSLm | 85.8 | 91.1 | 96.6 | 90.3 |

| Categories | DN7 [28] | DN8 [28] | ResNet50 [51] | DBOW [50] | ADLF [49] | GOSLm |
|---|---|---|---|---|---|---|
| Agriculture | 94 | 93 | 85 | 92 | 80 | 95 |
| Airplane | 74 | 75 | 93 | 95 | 97 | 82 |
| Baseball | 78 | 77 | 73 | 87 | 77 | 90 |
| Beach | 94 | 97 | 99 | 88 | 94 | 92 |
| Buildings | 51 | 47 | 74 | 93 | 85 | 78 |
| Chaparral | 98 | 98 | 95 | 94 | 100 | 95 |
| Dense | 36 | 33 | 62 | 96 | 90 | 55 |
| Forest | 98 | 98 | 87 | 99 | 98 | 95 |
| Freeway | 72 | 71 | 69 | 78 | 99 | 83 |
| Golf | 63 | 65 | 73 | 85 | 83 | 92 |
| Harbor | 85 | 84 | 97 | 95 | 100 | 95 |
| Intersection | 65 | 61 | 81 | 77 | 86 | 80 |
| Medium-density | 66 | 60 | 80 | 74 | 92 | 59 |
| Mobile | 66 | 65 | 74 | 76 | 94 | 80 |
| Overpass | 57 | 60 | 97 | 86 | 99 | 78 |
| Parking | 92 | 90 | 92 | 67 | 99 | 95 |
| River | 48 | 51 | 66 | 74 | 87 | 86 |
| Runway | 87 | 83 | 93 | 66 | 99 | 91 |
| Sparse | 67 | 78 | 69 | 79 | 79 | 91 |
| Storage | 40 | 45 | 86 | 50 | 93 | 95 |
| Tennis | 48 | 53 | 70 | 94 | 94 | 95 |
| Average | 70.4 | 70.5 | 81.6 | 83.0 | 91.6 | 85.8 |

| Categories | DN7 [28] | DN8 [28] | ResNet50 [51] | DBOW [50] | ADLF [49] | GOSLm |
|---|---|---|---|---|---|---|
| Agriculture | 85 | 85 | 86 | 97 | 90 | 92 |
| Airplane | 64 | 64 | 86 | 96 | 88 | 100 |
| Artificial | 74 | 78 | 93 | 97 | 81 | 98 |
| Beach | 68 | 66 | 86 | 95 | 87 | 98 |
| Buildings | 74 | 71 | 92 | 97 | 94 | 94 |
| Chaparral | 71 | 69 | 79 | 96 | 90 | 100 |
| Cloud | 100 | 100 | 97 | 99 | 97 | 100 |
| Container | 72 | 74 | 97 | 96 | 100 | 92 |
| Dense | 87 | 85 | 89 | 100 | 94 | 92 |
| Factory | 59 | 58 | 69 | 91 | 74 | 72 |
| Forest | 94 | 93 | 89 | 96 | 95 | 98 |
| Harbor | 60 | 65 | 80 | 98 | 96 | 98 |
| Medium-density | 68 | 66 | 67 | 100 | 67 | 53 |
| Ocean | 95 | 94 | 91 | 92 | 92 | 100 |
| Parking | 69 | 63 | 87 | 95 | 96 | 88 |
| River | 60 | 63 | 83 | 71 | 74 | 83 |
| Road | 64 | 60 | 85 | 82 | 93 | 90 |
| Runway | 84 | 82 | 96 | 86 | 97 | 97 |
| Sparse | 69 | 75 | 75 | 92 | 85 | 78 |
| Storage | 63 | 70 | 98 | 91 | 100 | 99 |
| Average | 74.0 | 74.0 | 86.2 | 93.3 | 89.5 | 91.1 |

| Categories | DN7 [28] | DN8 [28] | ResNet50 [51] | DBOW [50] | ADLF [49] | GOSLm |
|---|---|---|---|---|---|---|
| Agriculture | 82 | 79 | 95 | 99 | 94 | 100 |
| Commercial | 80 | 80 | 90 | 99 | 97 | 100 |
| Harbor | 55 | 56 | 63 | 89 | 74 | 98 |
| Idle | 58 | 60 | 63 | 97 | 80 | 99 |
| Industrial | 72 | 70 | 88 | 90 | 96 | 98 |
| Meadow | 71 | 63 | 77 | 93 | 82 | 95 |
| Overpass | 71 | 76 | 80 | 89 | 94 | 100 |
| Park | 67 | 67 | 82 | 87 | 90 | 100 |
| Pond | 47 | 50 | 57 | 97 | 74 | 96 |
| Residential | 81 | 78 | 84 | 97 | 94 | 98 |
| River | 59 | 57 | 44 | 89 | 69 | 77 |
| Water | 99 | 99 | 94 | 86 | 99 | 100 |
| Average | 69.9 | 69.5 | 76.4 | 92.6 | 86.9 | 96.6 |

| Categories | DN7 [28] | DN8 [28] | ResNet50 [51] | DBOW [50] | ADLF [49] | GOSLm |
|---|---|---|---|---|---|---|
| Airplane | 56 | 57 | 88 | 98 | 93 | 96 |
| Airport | 50 | 47 | 72 | 95 | 81 | 90 |
| Baseball Diamond | 43 | 45 | 69 | 86 | 64 | 93 |
| Basketball Court | 33 | 32 | 61 | 83 | 71 | 90 |
| Beach | 56 | 58 | 77 | 85 | 83 | 96 |
| Bridge | 67 | 66 | 73 | 95 | 81 | 93 |
| Chaparral | 93 | 93 | 98 | 96 | 99 | 98 |
| Church | 25 | 26 | 56 | 80 | 64 | 64 |
| Circular Farmland | 83 | 84 | 97 | 94 | 99 | 97 |
| Cloud | 91 | 91 | 92 | 98 | 98 | 98 |
| Commercial Area | 53 | 45 | 82 | 79 | 88 | 78 |
| Dense Residential | 62 | 58 | 89 | 90 | 95 | 92 |
| Desert | 85 | 83 | 87 | 97 | 92 | 90 |
| Forest | 91 | 89 | 95 | 95 | 97 | 94 |
| Freeway | 55 | 52 | 65 | 64 | 86 | 88 |
| Golf Course | 63 | 60 | 96 | 82 | 97 | 96 |
| Ground Track Field | 59 | 61 | 63 | 80 | 77 | 96 |
| Harbor | 64 | 65 | 93 | 88 | 97 | 99 |
| Industrial Area | 57 | 52 | 75 | 85 | 88 | 90 |
| Intersection | 57 | 51 | 64 | 80 | 72 | 97 |
| Island | 78 | 73 | 88 | 88 | 94 | 93 |
| Lake | 69 | 69 | 80 | 85 | 85 | 89 |
| Meadow | 82 | 82 | 84 | 90 | 93 | 93 |
| Medium Residential | 57 | 51 | 78 | 94 | 77 | 82 |
| Mobile Home Park | 52 | 52 | 93 | 83 | 97 | 94 |
| Mountain | 74 | 71 | 88 | 95 | 96 | 86 |
| Overpass | 51 | 53 | 87 | 74 | 90 | 95 |
| Palace | 25 | 23 | 41 | 80 | 56 | 51 |
| Parking Lot | 71 | 68 | 95 | 70 | 97 | 98 |
| Railway | 60 | 58 | 88 | 84 | 89 | 77 |
| Railway Station | 48 | 46 | 62 | 86 | 73 | 81 |
| Rectangular Farmland | 71 | 66 | 82 | 66 | 88 | 86 |
| River | 50 | 50 | 70 | 76 | 75 | 90 |
| Roundabout | 61 | 61 | 72 | 83 | 90 | 95 |
| Runway | 63 | 58 | 80 | 78 | 89 | 90 |
| Sea Ice | 91 | 89 | 98 | 90 | 99 | 99 |
| Ship | 43 | 46 | 61 | 65 | 69 | 95 |
| Snowberg | 78 | 79 | 97 | 83 | 98 | 99 |
| Sparse Residential | 58 | 62 | 69 | 84 | 70 | 93 |
| Stadium | 59 | 57 | 81 | 57 | 86 | 92 |
| Storage Tank | 61 | 62 | 88 | 48 | 94 | 98 |
| Tennis Court | 34 | 37 | 80 | 72 | 78 | 95 |
| Terrace | 54 | 54 | 88 | 76 | 90 | 89 |
| Thermal Power Station | 43 | 45 | 68 | 72 | 78 | 89 |
| Wetland | 50 | 49 | 82 | 70 | 80 | 85 |
| Average | 60.5 | 59.4 | 79.8 | 82.1 | 85.7 | 90.3 |

| Method | UCMD | SATREM | SIRI | NWPU |
|---|---|---|---|---|
| N-pairs | 82.2 | 85.3 | 92.8 | 84.3 |
| GLSL | 82.6 | 85.1 | 94.9 | 85.5 |
| GLSLm | 84.3 | 87.2 | 95.2 | 88.6 |
| GOSL | 85.1 | 86.8 | 95.3 | 85.8 |
| GOSLm | 85.8 | 91.1 | 96.6 | 90.3 |

| Recall@K (%) | 1 | 2 | 4 | 8 | 16 | 32 |
|---|---|---|---|---|---|---|
| N-pairs | 95.3 | 98.3 | 98.5 | 99.0 | 99.2 | 99.7 |
| GLSL | 94.2 | 96.1 | 96.9 | 98.3 | 98.3 | 99.5 |
| GLSLm | 94.7 | 96.4 | 97.1 | 97.6 | 98.1 | 99.7 |
| GOSL | 95.4 | 98.1 | 98.3 | 98.5 | 99.0 | 99.7 |
| GOSLm | 98.5 | 98.8 | 99.0 | 99.0 | 99.2 | 99.7 |

| Recall@K (%) | 1 | 2 | 4 | 8 | 16 | 32 |
|---|---|---|---|---|---|---|
| N-pairs | 93.6 | 95.6 | 97.5 | 98.6 | 99.3 | 99.8 |
| GLSL | 92.8 | 96.5 | 97.3 | 98.3 | 99.3 | 99.6 |
| GLSLm | 94.5 | 97.1 | 98.6 | 99.5 | 99.6 | 99.6 |
| GOSL | 93.3 | 96.0 | 98.0 | 98.5 | 99.3 | 99.6 |
| GOSLm | 94.8 | 97.0 | 98.5 | 99.3 | 100 | 100 |

| Recall@K (%) | 1 | 2 | 4 | 8 | 16 | 32 |
|---|---|---|---|---|---|---|
| N-pairs | 95.0 | 96.0 | 96.8 | 97.7 | 98.5 | 99.5 |
| GLSL | 95.4 | 96.2 | 97.5 | 98.1 | 98.9 | 98.9 |
| GLSLm | 95.8 | 96.4 | 96.8 | 98.1 | 98.5 | 99.5 |
| GOSL | 96.0 | 96.6 | 97.2 | 97.5 | 97.9 | 98.7 |
| GOSLm | 97.2 | 97.5 | 98.1 | 98.7 | 99.1 | 99.5 |

| Recall@K (%) | 1 | 2 | 4 | 8 | 16 | 32 |
|---|---|---|---|---|---|---|
| N-pairs | 87.3 | 92.5 | 95.1 | 96.9 | 98.0 | 98.7 |
| GLSL | 87.2 | 91.0 | 93.0 | 94.5 | 95.3 | 96.0 |
| GLSLm | 90.3 | 93.6 | 95.8 | 97.1 | 98.0 | 98.5 |
| GOSL | 87.4 | 91.2 | 93.3 | 94.8 | 95.7 | 96.1 |
| GOSLm | 91.1 | 94.3 | 96.3 | 97.6 | 98.3 | 98.7 |
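The Recall@K tables above compare the losses for K = 1 to 32, but this section does not define the metric. In deep metric learning, Recall@K is usually computed as follows, and the sketch assumes that definition. A query scores a hit at K if at least one of its K nearest neighbours in the embedding space (excluding the query itself) shares its label, and Recall@K is the percentage of queries that hit. The function names and toy data are illustrative.

```python
import math
from typing import Dict, Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def recall_at_k(
    feats: Sequence[Sequence[float]], labels: Sequence[int], ks: Sequence[int]
) -> Dict[int, float]:
    """Percentage of queries with a same-label item among their K nearest neighbours."""
    n = len(feats)
    hits = {k: 0 for k in ks}
    for i in range(n):
        # Rank every other item by descending cosine similarity to query i.
        ranked = sorted(
            (j for j in range(n) if j != i),
            key=lambda j: cosine(feats[i], feats[j]),
            reverse=True,
        )
        for k in ks:
            if any(labels[j] == labels[i] for j in ranked[:k]):
                hits[k] += 1
    return {k: 100.0 * hits[k] / n for k in ks}


toy_feats = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]]
print(recall_at_k(toy_feats, [0, 0, 1, 1], [1, 2, 3]))
print(recall_at_k(toy_feats, [0, 1, 0, 1], [1, 2, 3]))
```

Under this definition, Recall@K is non-decreasing in K by construction, because a hit within the top K remains a hit within the top K + 1.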

| α | 0.5 | 0.6 | 0.7 | 0.8 | 0.9 | 1 |
|---|---|---|---|---|---|---|
| AveP (%) | 96.3 | 96.6 | 96.1 | 96.0 | 95.8 | 95.7 |

| m | 0.1 | 0.2 | 0.3 | 0.4 | 0.5 | 0.6 |
|---|---|---|---|---|---|---|
| AveP (%) | 95.4 | 95.6 | 95.6 | 95.8 | 96.6 | 96.0 |
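The hyper-parameter tables report average precision (AveP). The sketch assumes the standard information-retrieval definition (see Manning et al., Introduction to Information Retrieval, in the reference list). AveP for one query averages the precision at the rank of each relevant item in the returned list, and the dataset score is the mean over all queries. Whether the paper truncates the ranked list is not stated in this section. The function names are illustrative.

```python
from typing import List, Sequence, Tuple


def average_precision(ranked_labels: Sequence[int], query_label: int) -> float:
    """Mean of precision@r over the ranks r at which a relevant item appears."""
    hits = 0
    precisions: List[float] = []
    for rank, label in enumerate(ranked_labels, start=1):
        if label == query_label:
            hits += 1
            precisions.append(hits / rank)
    return sum(precisions) / len(precisions) if precisions else 0.0


def mean_average_precision(queries: Sequence[Tuple[Sequence[int], int]]) -> float:
    """Average AveP over (ranked labels, query label) pairs."""
    return sum(average_precision(r, q) for r, q in queries) / len(queries)


# Relevant items at ranks 1 and 3: AveP = (1/1 + 2/3) / 2.
print(average_precision([1, 0, 1, 0], query_label=1))
```

This version divides by the number of relevant items found in the list. When the ranking covers the whole database, that equals the total number of relevant items.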

| AveP (%) | 64 | 128 | 256 | 512 | 1024 |
|---|---|---|---|---|---|
| UCMD | 84.4 | 85.0 | 85.1 | 85.8 | 85.6 |
| SATREM | 85.2 | 85.6 | 86.8 | 91.1 | 86.9 |
| SIRI | 95.2 | 95.9 | 96.0 | 96.6 | 95.9 |
| NWPU | 87.9 | 88.2 | 88.6 | 90.3 | 88.8 |

| AveP (%) | 10 | 20 | 40 | 60 | 100 | 160 |
|---|---|---|---|---|---|---|
| UCMD | 84.7 | 85.7 | 85.8 | 85.6 | 85.5 | - |
| SATREM | 86.5 | 88.3 | 91.1 | 86.5 | 86.1 | - |
| SIRI | 95.5 | 95.6 | 96.6 | 95.5 | - | - |
| NWPU | 83.9 | 87.3 | 90.3 | 88.1 | 88.4 | 85.9 |

| DB Size | DN7 [50] | DN8 [50] | DBOW [50] | ADLF (1024) [49] | ADLF (512) [49] | ADLF (256) [49] | GOSLm (1024) | GOSLm (512) | GOSLm (256) |
|---|---|---|---|---|---|---|---|---|---|
| 50 | 5.80 | 5.70 | 2.30 | 1.70 | 0.97 | 0.61 | 0.34 | 0.29 | 0.28 |
| 100 | 17.10 | 17.30 | 6.10 | 3.31 | 3.43 | 1.85 | 0.89 | 0.46 | 0.40 |
| 200 | 58.70 | 58.40 | 21.40 | 11.54 | 11.13 | 6.43 | 1.90 | 0.72 | 0.66 |
| 300 | 127.40 | 127.80 | 45.90 | 28.18 | 16.56 | 10.72 | 2.59 | 1.32 | 1.03 |
| 400 | 223.10 | 224.30 | 79.60 | 49.01 | 29.72 | 14.87 | 3.37 | 1.60 | 1.49 |
| 500 | 246.00 | 344.90 | 123.90 | 77.83 | 44.90 | 22.98 | 4.20 | 2.35 | 2.31 |
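The last table reports retrieval execution cost as the database (DB) size grows, for descriptors of different dimensionality. The units and the search procedure are not given in this section. As an assumption, the sketch below uses exhaustive linear-scan retrieval over L2-normalized embeddings. Scoring one query then costs one dot product per database item, or N × d multiply-adds in total. This illustrates why a 256- to 1024-dimensional embedding is cheaper to search than a 4096- or 16,384-dimensional descriptor. `linear_scan_retrieve` is a hypothetical helper.

```python
import heapq
from typing import List, Sequence, Tuple


def linear_scan_retrieve(
    query: Sequence[float], database: Sequence[Sequence[float]], k: int
) -> Tuple[List[int], int]:
    """Exhaustive search over L2-normalized embeddings.

    Returns the indices of the k most similar items (by dot product, which
    equals cosine similarity for normalized vectors) and the number of
    multiply-adds spent on scoring, i.e. N * d.
    """
    scores: List[float] = []
    mult_adds = 0
    for item in database:
        scores.append(sum(q * x for q, x in zip(query, item)))
        mult_adds += len(item)
    top = heapq.nlargest(k, range(len(database)), key=scores.__getitem__)
    return top, mult_adds


db = [[1.0, 0.0], [0.0, 1.0], [0.6, 0.8]]
print(linear_scan_retrieve([0.6, 0.8], db, k=2))
```

Under this model, halving d halves the scoring work. The measured times in the table may include other overheads, so they need not scale exactly with d.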

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Liu, P.; Gou, G.; Shan, X.; Tao, D.; Zhou, Q. Global Optimal Structured Embedding Learning for Remote Sensing Image Retrieval. Sensors 2020, 20, 291. https://doi.org/10.3390/s20010291
