Coarse-to-Fine Classification of Road Infrastructure Elements from Mobile Point Clouds Using Symmetric Ensemble Point Network and Euclidean Cluster Extraction

Abstract

1. Introduction

1.1. Rule-Feature-Based Classification Methods

1.2. Deep-Learning-Based Classification

1.3. Motivations and Main Contributions

- To develop a novel road infrastructure classification method by combining a symmetric ensemble point (SEP) network, which directly classifies massive point clouds, with a Euclidean cluster extraction (ECE) method, which can correct falsely predicted points;

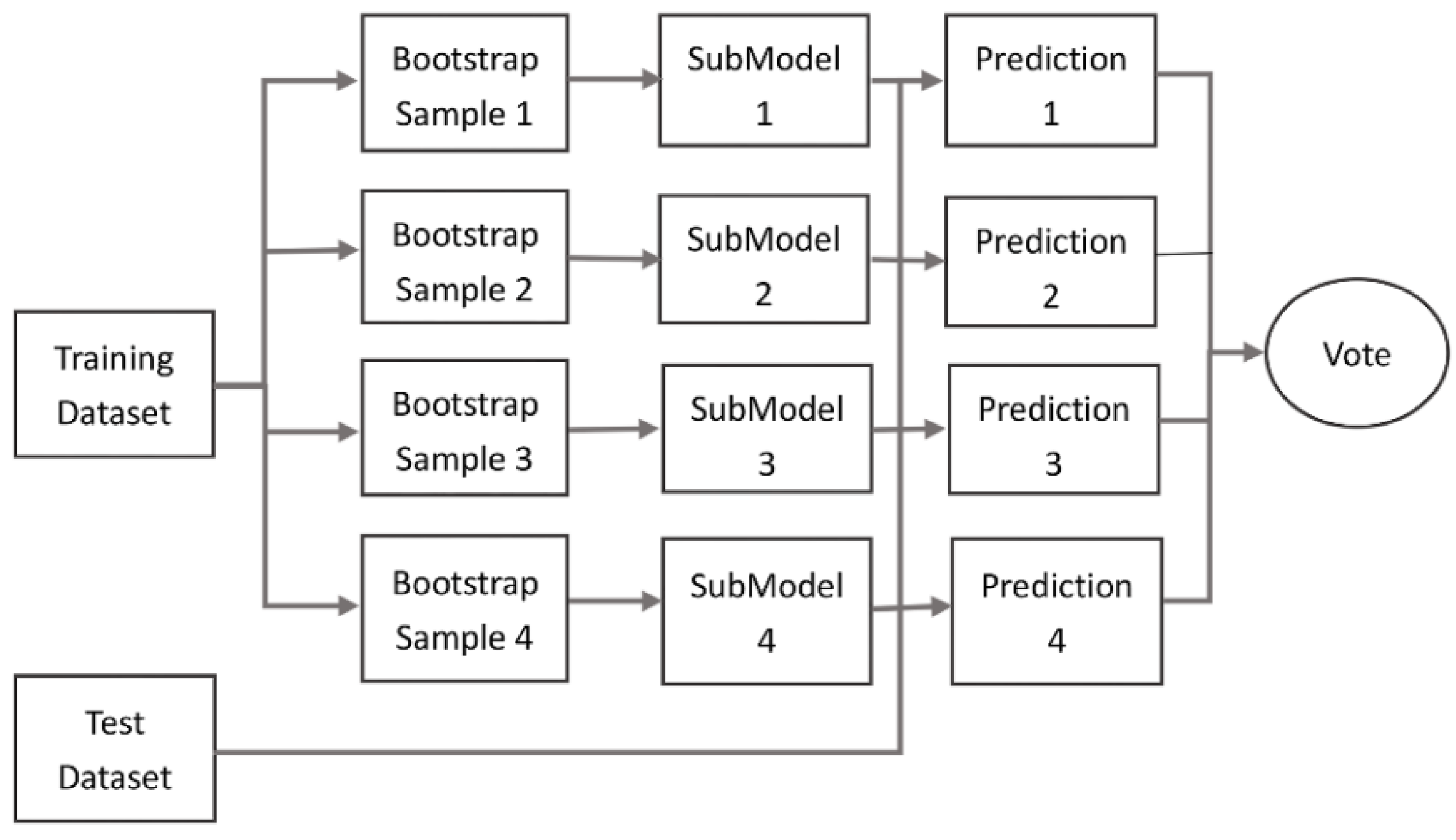

- To enhance the robustness of the network and avoid over-fitting by introducing an ensemble method that trains sub-models on four bootstrap sample sets; and

- To validate the proposed method on a public data set and an experimental data set.
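The contribution list describes the ensemble only as sub-models trained on four bootstrap sample sets. The following is a minimal sketch of generic bagging under stated assumptions, not the authors' implementation: each bootstrap set is drawn with replacement and has the same size as the training set, a trivial nearest-centroid classifier (a hypothetical stand-in, not the SEP sub-network) plays the role of a sub-model, and per-point predictions are combined by majority vote. Section 2.2 of the paper may combine or select the sub-models differently.

```python
import numpy as np

def bootstrap_indices(n_samples, n_sets, rng):
    """Draw n_sets bootstrap index sets of size n_samples, sampled with replacement."""
    return [rng.integers(0, n_samples, size=n_samples) for _ in range(n_sets)]

def fit_nearest_centroid(X, y, n_classes):
    """Hypothetical stand-in sub-model: one centroid per class in feature space."""
    centroids = np.full((n_classes, X.shape[1]), np.inf)  # inf = class absent from this set
    for c in range(n_classes):
        mask = y == c
        if mask.any():
            centroids[c] = X[mask].mean(axis=0)
    return centroids

def predict_nearest_centroid(centroids, X):
    """Label each sample with the class of its closest centroid."""
    d = ((X[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
    return d.argmin(axis=1)

def majority_vote(predictions, n_classes):
    """predictions: (n_models, n_points) labels -> (n_points,) most voted label (ties -> lowest)."""
    n_points = predictions.shape[1]
    votes = np.zeros((n_classes, n_points), dtype=int)
    for p in predictions:
        votes[p, np.arange(n_points)] += 1
    return votes.argmax(axis=0)

def bagging_predict(X_train, y_train, X_test, n_classes, n_sets=4, seed=0):
    """Train one sub-model per bootstrap set and fuse their predictions by voting."""
    rng = np.random.default_rng(seed)
    preds = []
    for idx in bootstrap_indices(len(X_train), n_sets, rng):
        model = fit_nearest_centroid(X_train[idx], y_train[idx], n_classes)
        preds.append(predict_nearest_centroid(model, X_test))
    return majority_vote(np.stack(preds), n_classes)

rng = np.random.default_rng(1)
X = np.vstack([rng.normal(0.0, 0.1, (50, 3)), rng.normal(5.0, 0.1, (50, 3))])
y = np.array([0] * 50 + [1] * 50)
print(bagging_predict(X, y, np.array([[0.0, 0.0, 0.0], [5.0, 5.0, 5.0]]), n_classes=2))  # [0 1]
```

Because each bootstrap set omits roughly a third of the training samples, the sub-models see different data and their errors are partly decorrelated, which is what makes voting more robust than any single sub-model.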

2. Materials and Methods

- Coarse classification with an SEP network, which normalises the raw point clouds and extracts object features with an encoder-decoder network (Section 2.1);

- Application of an ensemble method to optimise the classification results (Section 2.2); and

- Fine classification with the ECE method (Section 2.3) to correct false predictions, which often occur among objects with similar local features, such as traffic signs, streetlights, trees, buildings and walls.
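The list mentions normalisation of the raw point clouds before they enter the network, but the exact scheme is not given here. The sketch below shows one common choice, stated as an assumption: each block is resampled to a fixed input patch size and its xyz coordinates are centred on the centroid and scaled into the unit sphere. The function names and the patch size of 4096 are hypothetical.

```python
import numpy as np

def sample_patch(points, patch_size, rng):
    """Draw a fixed number of points; resample with replacement if the block is too small."""
    replace = len(points) < patch_size
    idx = rng.choice(len(points), size=patch_size, replace=replace)
    return points[idx]

def normalise_patch(points):
    """Centre xyz on the patch centroid and scale so the farthest point lies on the unit sphere."""
    centred = points - points.mean(axis=0)
    scale = np.linalg.norm(centred, axis=1).max()
    return centred / scale if scale > 0 else centred

rng = np.random.default_rng(0)
# Toy block in (hypothetical) projected coordinates: 10 m x 10 m x 5 m.
block = rng.uniform(low=[100.0, 200.0, 0.0], high=[110.0, 210.0, 5.0], size=(500, 3))
patch = normalise_patch(sample_patch(block, 4096, rng))
print(patch.shape)  # (4096, 3)
```

Removing the absolute position and scale means the network learns shape cues rather than where a block happens to lie in the survey area; absolute height, if needed, would have to be supplied as an extra feature.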

2.1. Encoder-Decoder with Normalised Point Clouds

2.2. Optimal Ensemble Method

2.3. Refining Classification with ECE Method

- (1) a k-d tree structure is created for the input point cloud dataset $P$;

- (2) an empty list of clusters $C$ and a queue $Q$ of the points that need to be analysed are set up;

- (3) the following steps are run for every point $p_i \in P$ that has not yet been processed:

  - $p_i$ is added to the current queue $Q$;

  - the following operations are executed for every point $p_i \in Q$:

    - search for the set $P_i^k$ of point neighbours of $p_i$ in a sphere with radius $r$;

    - for every neighbour $p_i^k \in P_i^k$, if the point has not been processed yet, it is added to $Q$;

  - when all points in $Q$ have been processed, $Q$ is added to the list of clusters $C$ and $Q$ is reset to an empty list;

- (4) the algorithm terminates when all points $p_i \in P$ have been processed and assigned to a cluster in $C$;

- (5) for each cluster in $C$:

  - all the class labels in the cluster are counted and the majority class is selected as the representative class of the cluster;

  - the properties of the cluster are checked against prior knowledge, such as bounding boxes, density, gravity centres and heights.
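Steps (2) to (5) can be sketched in Python as follows. This is an illustrative version, not the authors' code: the neighbour search is brute force with NumPy so that the block is self-contained (step (1) would replace it with a k-d tree, for example `scipy.spatial.cKDTree.query_ball_point`), the function names are hypothetical, and the prior-knowledge checks on bounding boxes, density, gravity centres and heights are not modelled.

```python
import numpy as np
from collections import Counter, deque

def euclidean_clusters(points, radius):
    """Steps (2)-(4): grow clusters from chains of neighbours closer than `radius`.
    Brute-force neighbour search; a k-d tree would be used for large clouds."""
    n = len(points)
    processed = np.zeros(n, dtype=bool)
    clusters = []                      # the list C
    for i in range(n):
        if processed[i]:
            continue
        queue = deque([i])             # the queue Q, seeded with p_i
        processed[i] = True
        members = []
        while queue:
            j = queue.popleft()
            members.append(j)
            dist = np.linalg.norm(points - points[j], axis=1)
            # Unprocessed neighbours inside the sphere are appended to Q.
            for k in np.flatnonzero((dist <= radius) & ~processed):
                processed[k] = True
                queue.append(k)
        clusters.append(np.array(members))
    return clusters

def relabel_by_majority(labels, clusters):
    """Step (5), first bullet: give every point of a cluster the cluster's majority class."""
    refined = labels.copy()
    for members in clusters:
        majority = Counter(labels[members].tolist()).most_common(1)[0][0]
        refined[members] = majority
    return refined

pts = np.array([[0.0, 0, 0], [0.1, 0, 0], [0.2, 0, 0], [5.0, 5, 5], [5.1, 5, 5]])
pred = np.array([2, 2, 4, 1, 1])       # coarse labels; point 2 is a false prediction
cl = euclidean_clusters(pts, radius=0.15)
print(relabel_by_majority(pred, cl))   # [2 2 2 1 1]
```

The radius controls the trade-off: too small and a single object splits into several clusters, too large and adjacent objects (for example a streetlight next to a tree) merge, so the majority vote would overwrite a correct minority label.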

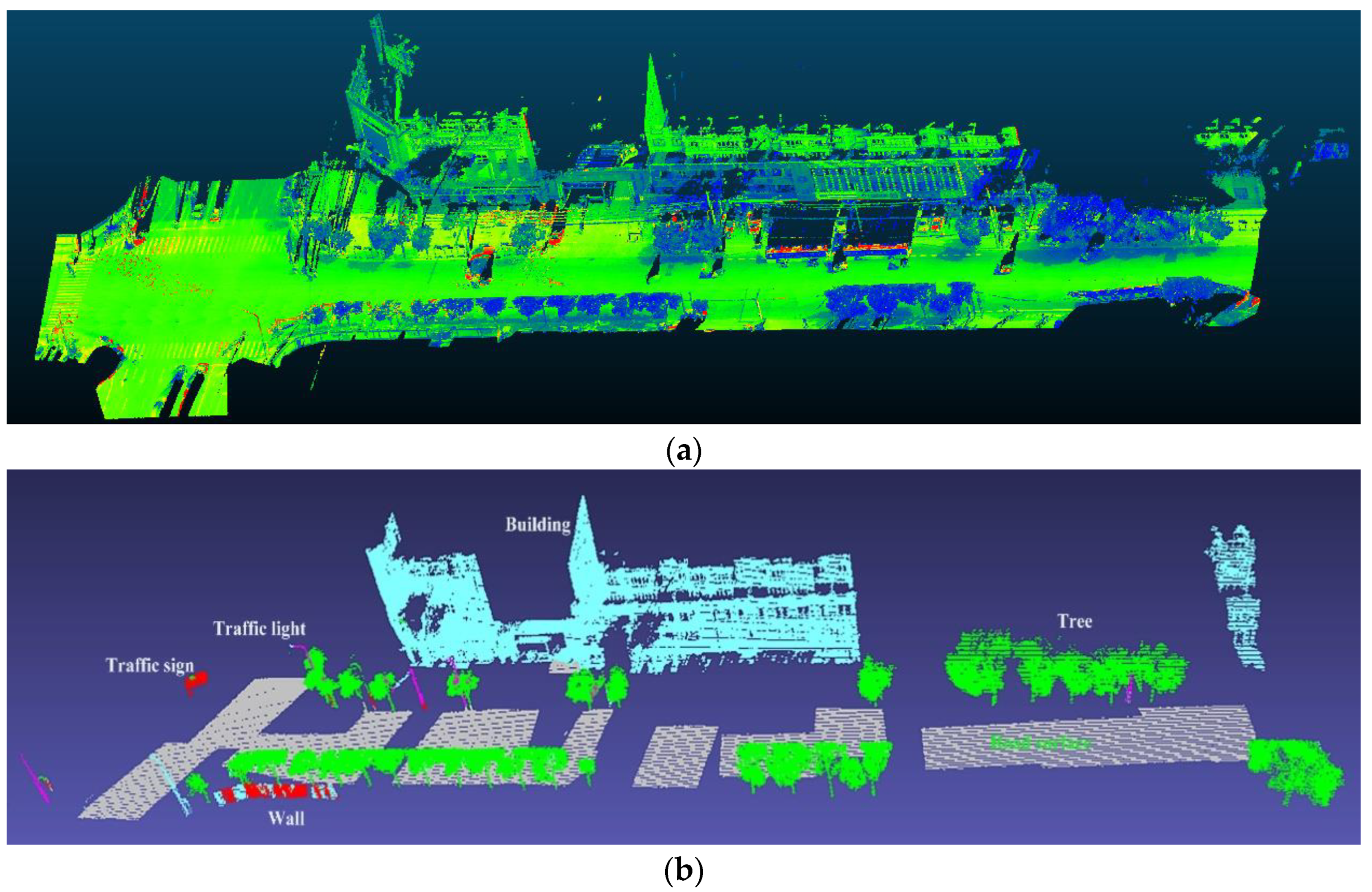

3. Experimental Data

3.1. Stanford 3D Semantic Parsing Data Set 1

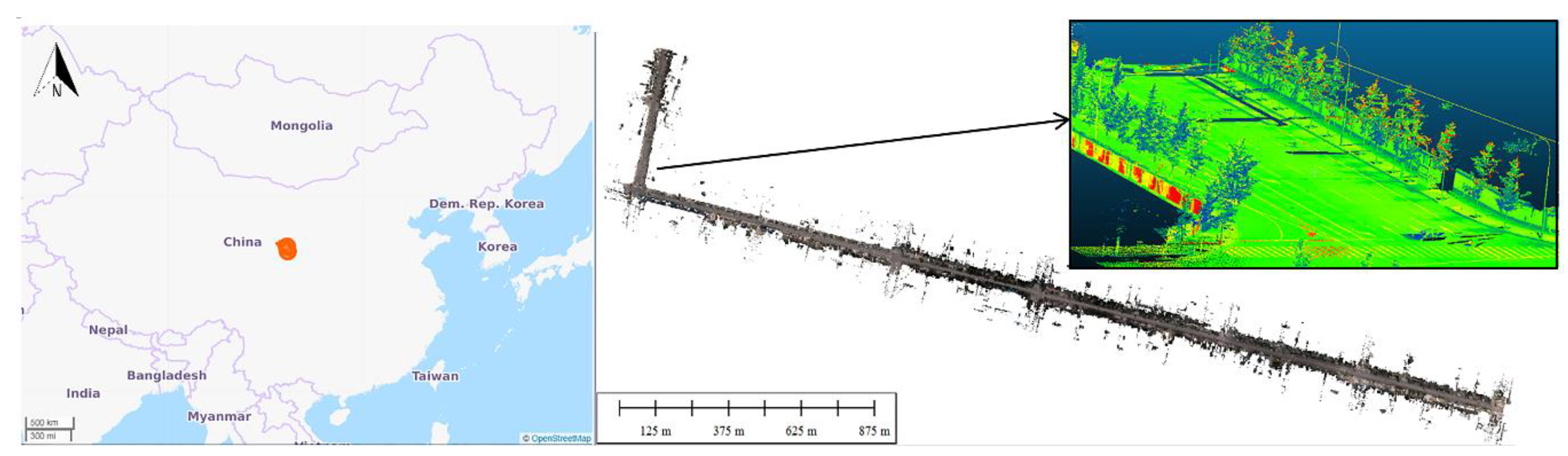

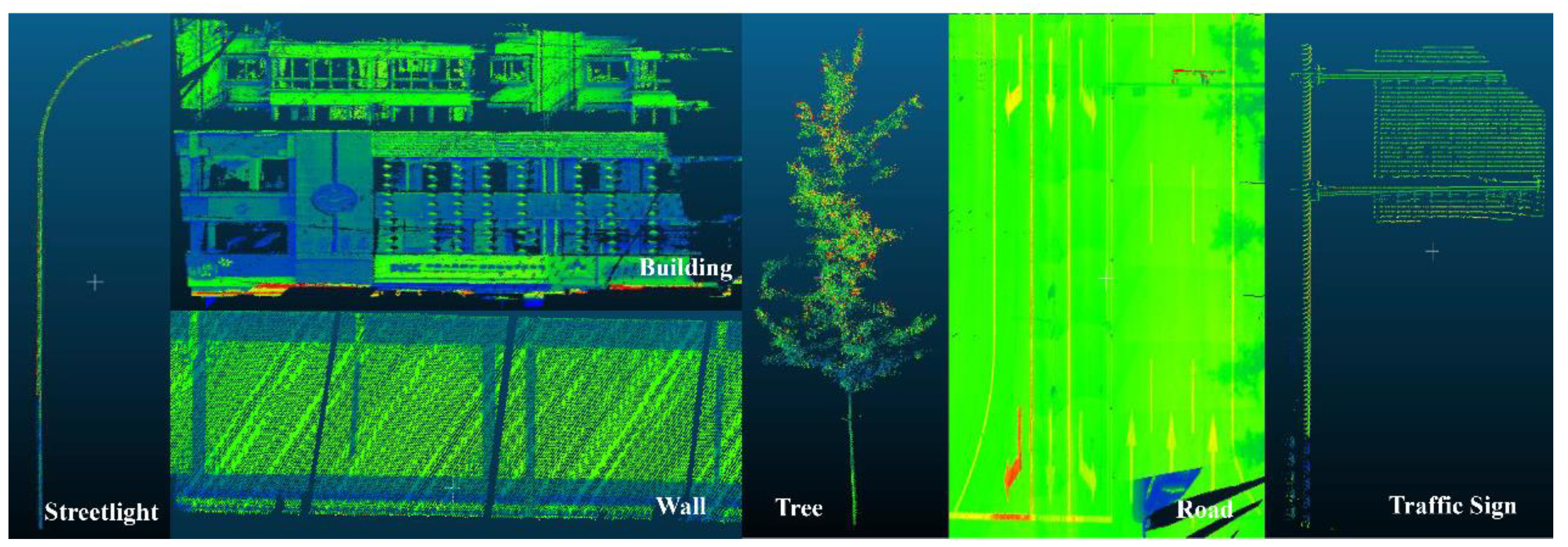

3.2. Experimental Road Data Set 2

4. Implementation Details, Results and Discussion

4.1. Comparative Analysis of Data Set 1

4.2. Implementation Details and Classification Results of Data Set 2

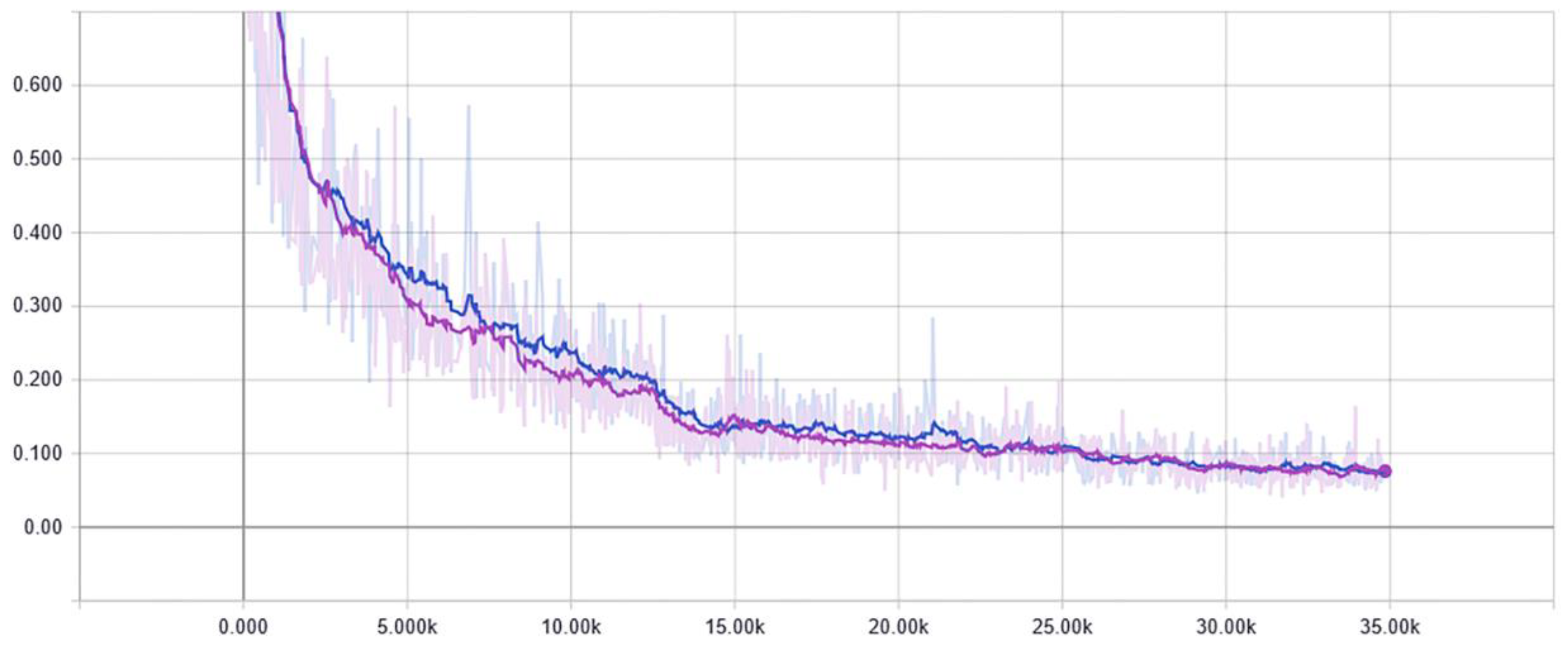

4.2.1. Implementation Details

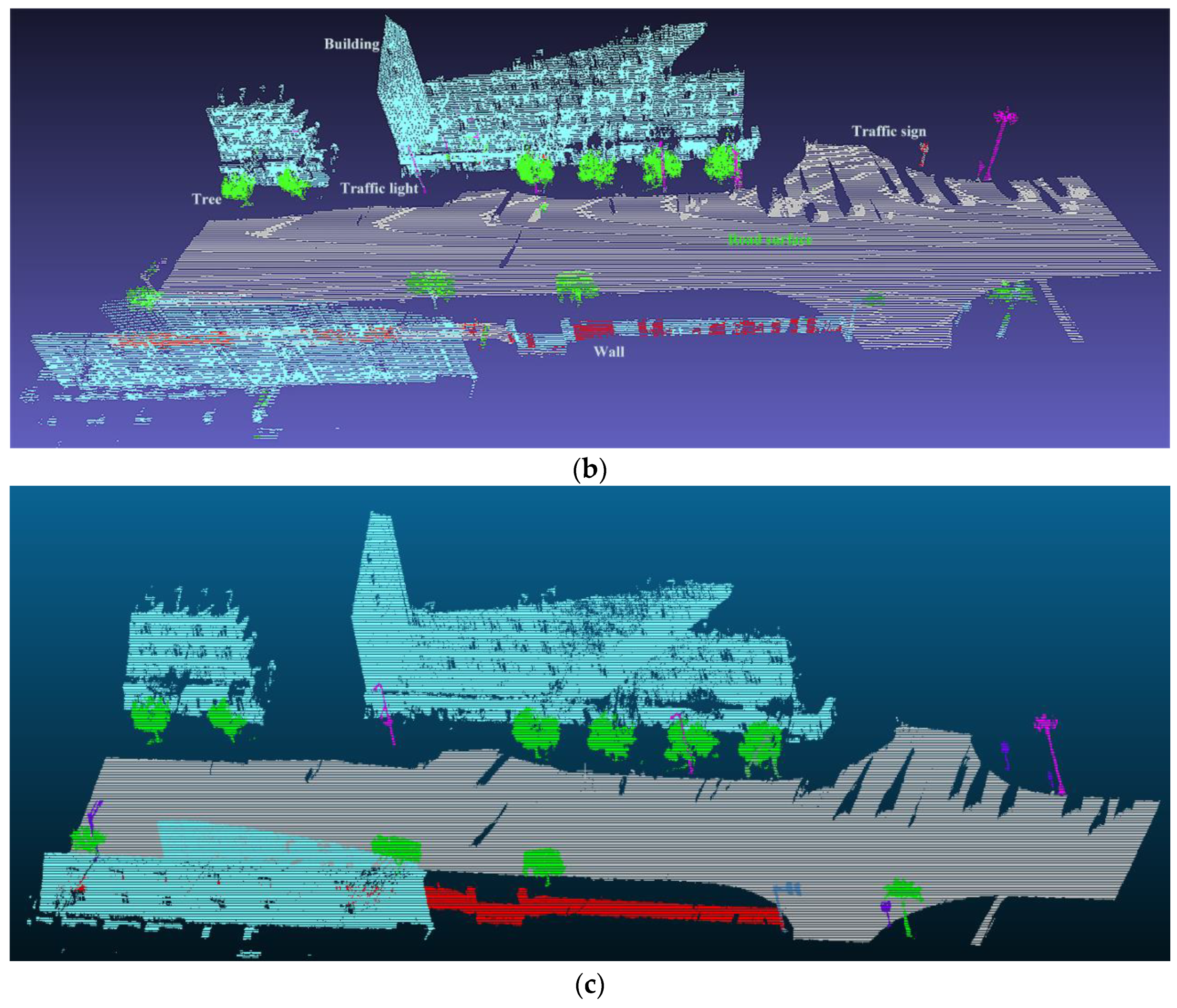

4.2.2. Coarse Classification Results with SEP Network

4.2.3. Refining Classification Results with SEP-ECE Method

4.2.4. Accuracy, Precision and Recall of the SEP-ECE Method

4.3. Discussion

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Lehtomäki, M.; Jaakkola, A.; Hyyppä, J.; Lampinen, J.; Kaartinen, H.; Kukko, A. Object classification and recognition from mobile laser scanning point clouds in a road environment. IEEE Trans. Geosci. Remote Sens. 2016, 54, 1226–1239.

- Yang, B.; Liu, Y.; Dong, Z.; Liang, F.; Li, B.; Peng, X. 3D local feature BKD to extract road information from mobile laser scanning point clouds. ISPRS J. Photogramm. Remote Sens. 2017, 130, 329–343.

- Krüger, T.; Nowak, S.; Hecker, P. Towards autonomous navigation with unmanned ground vehicles using LiDAR. In Proceedings of the 2015 International Technical Meeting of the Institute of Navigation, Dana Point, CA, USA, 26–28 January 2015; pp. 778–788.

- Rutzinger, M.; Hoefle, B.; Hollaus, M.; Pfeifer, N. Object-based point cloud analysis of full-waveform airborne laser scanning data for urban vegetation classification. Sensors 2008, 8, 4505–4528.

- Mongus, D.; Žalik, B. Parameter-free ground filtering of LiDAR data for automatic DTM generation. ISPRS J. Photogramm. Remote Sens. 2012, 67, 1–12.

- Guo, B.; Huang, X.; Zhang, F.; Sohn, G. Classification of airborne laser scanning data using Joint Boost. ISPRS J. Photogramm. Remote Sens. 2014, 92, 124–136.

- Zhao, R.; Pang, M.; Wang, J. Classifying airborne LiDAR point clouds via deep features learned by a multi-scale convolutional neural network. Int. J. Geogr. Inf. Sci. 2018, 32, 960–979.

- Pu, S.; Rutzinger, M.; Vosselman, G.; Oude Elberink, S. Recognizing basic structures from mobile laser scanning data for road inventory studies. ISPRS J. Photogramm. Remote Sens. 2011, 66, S28–S39.

- Breiman, L. Random forests. Mach. Learn. 2001, 45, 5–32.

- Cortes, C.; Vapnik, V. Support-vector networks. Mach. Learn. 1995, 20, 273–297.

- Douillard, B.; Underwood, J.; Kuntz, N.; Vlaskine, V.; Quadros, A.; Morton, P.; Frenkel, A. On the segmentation of 3D LIDAR point clouds. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA’11), Shanghai, China, 9–13 May 2011; pp. 2798–2805.

- Rusu, R.B. Semantic 3D Object maps for everyday manipulation in human living environments. KI Künstliche Intelligenz 2010, 24, 345–348.

- Becker, C.; Häni, N.; Rosinskaya, E.; D’Angelo, E.; Strecha, C. Classification of aerial photogrammetric 3D point clouds. In Proceedings of the ISPRS Annals of the Photogrammetry, Remote Sensing and Spatial Information Sciences, Hannover, Germany, 6–9 June 2017; Volume 4, pp. 3–10.

- Niemeyer, J.; Rottensteiner, F.; Soergel, U. Contextual classification of LiDAR data and building object detection in urban areas. ISPRS J. Photogramm. Remote Sens. 2014, 87, 152–165.

- Xu, S.; Vosselman, G.; Elberink, S.O. Multiple-entity based classification of airborne laser scanning data in urban areas. ISPRS J. Photogramm. Remote Sens. 2014, 88, 1–15.

- Yu, X.; Hyyppä, J.; Vastaranta, M.; Holopainen, M.; Viitala, R. Predicting individual tree attributes from airborne laser point clouds based on the random forests technique. ISPRS J. Photogramm. Remote Sens. 2011, 66, 28–37.

- Tran, T.H.G.; Ressl, C.; Pfeifer, N. Integrated change detection and classification in urban areas based on airborne laser scanning point clouds. Sensors 2018, 18, 448.

- Serna, A.; Marcotegui, B. Detection, segmentation and classification of 3D urban objects using mathematical morphology and supervised learning. ISPRS J. Photogramm. Remote Sens. 2014, 93, 243–255.

- Han, X.; Huang, X.; Li, J.; Li, Y.; Yang, M.Y.; Gong, J. The edge-preservation multi-classifier relearning framework for the classification of high-resolution remotely sensed imagery. ISPRS J. Photogramm. Remote Sens. 2018, 138, 57–73.

- Xiang, B.; Yao, J.; Lu, X.; Li, L.; Xie, R.; Li, J. Segmentation-based classification for 3D point clouds in the road environment. Int. J. Remote Sens. 2018, 39, 6182–6212.

- Alexander, C.; Tansey, K.; Kaduk, J.; Holland, D.; Tate, N.J. Backscatter coefficient as an attribute for the classification of full-waveform airborne laser scanning data in urban areas. ISPRS J. Photogramm. Remote Sens. 2010, 65, 423–432.

- Mountrakis, G.; Im, J.; Ogole, C. Support vector machines in remote sensing: A review. ISPRS J. Photogramm. Remote Sens. 2011, 66, 247–259.

- Weinmann, M.; Jutzi, B.; Hinz, S.; Mallet, C. Semantic point cloud interpretation based on optimal neighborhoods, relevant features and efficient classifiers. ISPRS J. Photogramm. Remote Sens. 2015, 105, 286–304.

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. Adv. Neural Inf. Process. Syst. 2012, 1097–1105.

- Che, E.; Jung, J.; Olsen, M.J. Object recognition, segmentation, and classification of mobile laser scanning point clouds: A state of the art review. Sensors 2019, 19, 810.

- Mizoguchi, T.; Ishii, A.; Nakamura, H.; Inoue, T.; Takamatsu, H. Lidar-based individual tree species classification using convolutional neural network. In Proceedings of the SPIE 10332, Videometrics, Range Imaging, and Applications XIV, Munich, Germany, 26 June 2017.

- Li, Y.; Zhang, Y.; Huang, X.; Yuille, A.L. Deep networks under scene-level supervision for multi-class geospatial object detection from remote sensing images. ISPRS J. Photogramm. Remote Sens. 2018, 146, 182–196.

- Vetrivel, A.; Gerke, M.; Kerle, N.; Nex, F.; Vosselman, G. Disaster damage detection through synergistic use of deep learning and 3D point cloud features derived from very high resolution oblique aerial images, and multiple-kernel-learning. ISPRS J. Photogramm. Remote Sens. 2018, 140, 45–59.

- Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 3431–3440.

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional networks for biomedical image segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention (MICCAI), Munich, Germany, 5–9 October 2015; pp. 1–8.

- Badrinarayanan, V.; Kendall, A.; Cipolla, R. SegNet: A deep convolutional encoder-decoder architecture for image segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 2481–2495.

- Noh, H.; Hong, S.; Han, B. Learning deconvolution network for semantic segmentation. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 1520–1528.

- Liu, F.; Li, S.; Zhang, L.; Zhou, C.; Ye, R.; Lu, J. 3DCNN-DQN-RNN: A deep reinforcement learning framework for semantic parsing of large-scale 3D point clouds. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 5678–5687.

- Su, H.; Maji, S.; Kalogerakis, E.; Learned-Miller, E. Multi-view convolutional neural networks for 3D shape recognition. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 945–953.

- Wu, Z.; Song, S.; Khosla, A.; Yu, F.; Zhang, L.; Tang, X.; Xiao, J. 3D ShapeNets: A deep representation for volumetric shapes. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 1912–1920.

- Qi, C.R.; Su, H.; Mo, K.; Guibas, L.J. PointNet: Deep learning on point sets for 3D classification and segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 652–660.

- Wen, C.; Sun, X.; Li, J.; Wang, C.; Guo, Y.; Habib, A. A deep learning framework for road marking extraction, classification and completion from mobile laser scanning point clouds. ISPRS J. Photogramm. Remote Sens. 2019, 147, 178–192.

- Li, Y.; Bu, R.; Sun, M.; Chen, B. PointCNN: Convolution on X-transformed points. Adv. Neural Inf. Process. Syst. 2018, 31, 820–830.

- Zhang, L.; Li, Z.; Li, A.; Liu, F. Large-scale urban point cloud labeling and reconstruction. ISPRS J. Photogramm. Remote Sens. 2018, 138, 86–100.

- Klokov, R.; Lempitsky, V. Escape from cells: Deep Kd-Networks for the recognition of 3D point cloud models. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 863–872.

- Riegler, G.; Ulusoy, A.; Geiger, A. OctNet: Learning deep 3D representations at high resolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 3577–3586.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Identity mappings in deep residual networks. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 8–16 October 2016; pp. 630–645.

- Guerrero, P.; Kleiman, Y.; Ovsjanikov, M.; Mitra, N.J. PCPNet: Learning local shape properties from raw point clouds. Comput. Graph. Forum 2017, 37, 75–85.

- Balado, J.; Martínez-Sánchez, J.; Arias, P.; Novo, A. Road environment semantic segmentation with deep learning from MLS point cloud data. Sensors 2019, 19, 3466.

- Qi, C.R.; Yi, L.; Su, H.; Guibas, L.J. PointNet++: Deep hierarchical feature learning on point sets in a metric space. Adv. Neural Inf. Process. Syst. 2017, 30, 5099–5108.

- Briechle, S.; Krzystek, P.; Vosselman, G. Semantic labelling of ALS point clouds for tree species mapping using the deep neural network PointNet++. In Proceedings of the International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences, Enschede, The Netherlands, 10–14 June 2019; Volume 42.

- Qi, C.R.; Litany, O.; He, K.; Guibas, L.J. Deep Hough voting for 3D object detection in point clouds. In Proceedings of the Computer Vision and Pattern Recognition 2019, Long Beach, CA, USA, 16–20 June 2019.

- Zhang, K.; Hao, M.; Wang, J.; de Silva, C.W.; Fu, C. Linked dynamic graph CNN: Learning on point cloud via linking hierarchical features. In Proceedings of the Computer Vision and Pattern Recognition 2019, Long Beach, CA, USA, 16–20 June 2019; pp. 1–8.

- Scaioni, M.; Höfle, B.; Baungarten-Kersting, A.P.; Barazzetti, L.; Previtali, M.; Wujanz, D. Methods for information extraction from lidar intensity data and multispectral lidar technology. In Proceedings of the ISPRS TC III Mid-term Symposium Developments, Technologies and Applications in Remote Sensing, Beijing, China, 7–10 May 2018; pp. 1503–1510.

- Bishop, C.M. Pattern Recognition and Machine Learning; Springer: New York, NY, USA, 2006; pp. 206–209.

- Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. In Proceedings of the 3rd International Conference for Learning Representations, San Diego, CA, USA, 7–9 May 2015.

- Sutskever, I.; Martens, J.; Dahl, G.; Hinton, G. On the importance of initialization and momentum in deep learning. In Proceedings of the 30th International Conference on Machine Learning, Atlanta, GA, USA, 26–31 May 2013.

- Zhou, Z.H. Ensemble Methods: Foundations and Algorithms; Chapman and Hall/CRC: Boca Raton, FL, USA, 2012; pp. 47–66.

- Zhou, Z.H. Ensemble Learning. Encyclopaedia of Biometrics; Springer: Boston, MA, USA, 2015; pp. 411–416.

- Samet, H. Foundations of Multidimensional and Metric Data Structures; Morgan Kaufmann: Burlington, MA, USA, 2006; p. 1024.

- Armeni, I.; Sener, O.; Zamir, A.R.; Jiang, H.; Brilakis, I.; Fischer, M.; Savarese, S. 3D semantic parsing of large-scale indoor spaces. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 6–7.

| Layer Number | Network Components | Layer Name | Properties |
|---|---|---|---|
| 1 | Point cloud normalisation | Input layer | Input patch size |
| 2 | | T-net1 | Matrix multiply |
| 3 | | Convolution (Conv.) 1 | Number of filters 64; filter size; ExpandDims |
| 4 | | Conv.2 | Number of filters 64; filter size; ExpandDims |
| 5 | | T-net2 | Matrix multiply |
| 6 | MLP encoder from Conv.3 to Conv.7; MLP decoder from Conv.8 to Conv.12 | Conv.3 | Number of filters 64; filter size; ExpandDims |
| 7 | | Conv.4 | Number of filters 128; filter size; ExpandDims |
| 8 | | Conv.5 | Number of filters 256; filter size; ExpandDims |
| 9 | | Conv.6 | Number of filters 512; filter size; ExpandDims |
| 10 | | Conv.7 | Number of filters 1024; filter size; ExpandDims |
| 11 | | MaxPool | MaxPoolGrad; ExpandDims |
| 12 | | Concatenate | Layer 6 and Layer 11; ExpandDims |
| 13 | | Conv.8 | Number of filters 512; filter size; ExpandDims |
| 14 | | Conv.9 | Number of filters 256; filter size; ExpandDims |
| 15 | | Conv.10 | Number of filters 128; filter size; ExpandDims |
| 16 | | Conv.11 | Number of filters 128; filter size; ExpandDims |
| 17 | | Conv.12 | Number of filters 64; filter size; ExpandDims |
| 18 | - | Conv.13 | Number of filters 7; filter size; ExpandDims |
| 19 | - | Softmax | - |
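To make the data flow through the layer table concrete, the following NumPy sketch traces tensor shapes through the encoder-decoder with random, untrained weights. It is not the authors' network. Assumptions: the input carries only xyz coordinates, every convolution acts as a per-point (1×1) shared MLP with ReLU, and the T-nets, batch normalisation and the unspecified filter sizes are left out; the filter counts and the seven output channels follow the table. The key step is layer 12, where the per-point Conv.3 features (layer 6, 64 channels) are concatenated with the max-pooled global feature (layer 11, 1024 channels).

```python
import numpy as np

def shared_mlp(x, widths, rng):
    """Per-point dense layers with ReLU, i.e. 1x1 convolutions over the point axis.
    Returns the output of every layer so intermediate features can be reused."""
    outputs = []
    for w_out in widths:
        w = rng.normal(0.0, np.sqrt(2.0 / x.shape[1]), (x.shape[1], w_out))  # He init
        x = np.maximum(x @ w, 0.0)
        outputs.append(x)
    return outputs

def forward(points, n_classes=7, seed=0):
    """points: (N, 3) normalised xyz -> (N, n_classes) per-point class probabilities."""
    rng = np.random.default_rng(seed)
    n = points.shape[0]
    x = shared_mlp(points, [64, 64], rng)[-1]                  # Conv.1, Conv.2 (T-nets omitted)
    enc = shared_mlp(x, [64, 128, 256, 512, 1024], rng)        # Conv.3 .. Conv.7
    local = enc[0]                                             # layer 6: (N, 64)
    global_feat = enc[-1].max(axis=0)                          # layer 11: (1024,)
    fused = np.concatenate([local, np.tile(global_feat, (n, 1))], axis=1)  # layer 12: (N, 1088)
    dec = shared_mlp(fused, [512, 256, 128, 128, 64], rng)[-1]  # Conv.8 .. Conv.12
    w = rng.normal(0.0, np.sqrt(1.0 / 64), (64, n_classes))
    logits = dec @ w                                           # Conv.13, no activation
    logits -= logits.max(axis=1, keepdims=True)                # numerically stable softmax
    probs = np.exp(logits)
    return probs / probs.sum(axis=1, keepdims=True)

probs = forward(np.random.default_rng(0).normal(size=(100, 3)))
print(probs.shape)  # (100, 7)
```

The max pooling makes the global feature independent of point order, while the concatenation gives every point both its own local descriptor and the context of the whole patch before the decoder assigns a class.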

| | PointNet [1] | SP Network |
|---|---|---|
| Overall accuracy | | |

| Metric (%) | Buildings | Road Surfaces | Trees | Walls | Traffic Signs | Streetlights |
|---|---|---|---|---|---|---|
| Precision | 82.66 | 98.55 | 77.23 | 80.17 | 100 | 25.00 |
| Recall | 96.16 | 99.95 | 97.05 | 47.96 | 1.47 | 5.35 |
| Accuracy | 96.46 | 96.46 | 96.81 | 96.08 | 97.93 | 97.05 |

| Accuracy (%) | Buildings | Roads | Trees | Walls | Traffic Signs | Streetlights | Mean |
|---|---|---|---|---|---|---|---|
| SEP-ECE | 99.43 | 99.95 | 99.59 | 99.74 | 99.80 | 99.91 | 99.74 |
| SEP | 96.51 | 97.03 | 96.86 | 96.15 | 97.93 | 97.09 | 96.93 |
| SP | 96.46 | 96.46 | 96.81 | 96.08 | 97.93 | 97.05 | 96.80 |
| PointNet | 95.66 | 96.05 | 95.60 | 95.61 | 96.48 | 95.22 | 95.77 |

| Precision (%) | Buildings | Roads | Trees | Walls | Traffic Signs | Streetlights |
|---|---|---|---|---|---|---|
| SEP-ECE | 97.64 | 99.96 | 97.93 | 96.02 | 65.98 | 100 |
| SEP | 82.92 | 98.54 | 77.26 | 80.19 | 100 | 28.57 |
| SP | 82.66 | 98.55 | 77.23 | 80.17 | 100 | 25.00 |
| PointNet | 78.25 | 99.30 | 70.51 | 72.61 | 20.00 | 16.67 |

| Recall (%) | Buildings | Roads | Trees | Walls | Traffic Signs | Streetlights |
|---|---|---|---|---|---|---|
| SEP-ECE | 99.13 | 99.97 | 99.13 | 90.15 | 77.70 | 55.77 |
| SEP | 96.16 | 99.95 | 97.16 | 50.16 | 1.50 | 5.27 |
| SP | 96.16 | 99.95 | 97.05 | 47.96 | 1.47 | 5.35 |
| PointNet | 94.41 | 98.71 | 92.11 | 68.28 | 23.12 | 11.06 |
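The section does not spell out how the per-class metrics are computed, so the sketch below assumes the usual one-vs-rest definitions: precision TP/(TP+FP), recall TP/(TP+FN) and accuracy (TP+TN)/N, all in percent. Under that assumption a rare class can score a high accuracy even when almost all of its points are missed, because true negatives dominate; this is consistent with the traffic-sign column of the SP network (precision 100%, recall 1.47%, accuracy 97.93%). The function name and the toy labels are hypothetical, not the paper's data.

```python
import numpy as np

def per_class_metrics(y_true, y_pred, n_classes):
    """One-vs-rest (precision, recall, accuracy) per class, in percent."""
    n = len(y_true)
    out = {}
    for c in range(n_classes):
        tp = np.sum((y_pred == c) & (y_true == c))
        fp = np.sum((y_pred == c) & (y_true != c))
        fn = np.sum((y_pred != c) & (y_true == c))
        tn = n - tp - fp - fn
        precision = 100.0 * tp / (tp + fp) if tp + fp else float("nan")
        recall = 100.0 * tp / (tp + fn) if tp + fn else float("nan")
        accuracy = 100.0 * (tp + tn) / n
        out[c] = (precision, recall, accuracy)
    return out

# Toy scene: 95 points of a common class 0 and 5 points of a rare class 1;
# the classifier finds only one of the rare points.
y_true = np.array([0] * 95 + [1] * 5)
y_pred = np.array([0] * 99 + [1])
for c, (p, r, a) in per_class_metrics(y_true, y_pred, 2).items():
    print(f"class {c}: P={p:.2f} R={r:.2f} A={a:.2f}")
# class 0: P=95.96 R=100.00 A=96.00
# class 1: P=100.00 R=20.00 A=96.00
```

This is why recall is the more informative column for small objects such as traffic signs and streetlights, where the SEP-ECE refinement produces its largest gains.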

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Wang, D.; Wang, J.; Scaioni, M.; Si, Q. Coarse-to-Fine Classification of Road Infrastructure Elements from Mobile Point Clouds Using Symmetric Ensemble Point Network and Euclidean Cluster Extraction. Sensors 2020, 20, 225. https://doi.org/10.3390/s20010225
