Neural Network Ensembles for Sensor-Based Human Activity Recognition Within Smart Environments

Abstract

1. Introduction

2. Related Works

2.1. Human Activity Recognition (HAR)

2.2. Neural Networks

2.3. Ensemble Learners

2.3.1. Ensemble Generation

2.3.2. Ensemble Integration

3. Dataset for Data-Driven HAR

3.1. UCAmI Cup Dataset

3.1.1. Data Challenges

- Number of classes. The number of classes in the original dataset was very high given the low number of instances per activity and the small amount of data overall. As discussed previously, data-driven approaches rely on large amounts of good-quality data. Furthermore, certain classes were too closely related to one another to be distinguished using binary data alone. For example, the following activities relied on a single door sensor: entering the smart lab, leaving the smart lab, and having a visitor to the smart lab. Binary sensors are limited in inferring activities because they provide information only at an abstract level [77]; Act08 eat a snack was therefore difficult to distinguish from Act02 prepare breakfast, Act03 prepare lunch, and Act04 prepare dinner, as these activities all used similar sensors. Thus, to capture activities at a finer level, the presentation and interpretation of binary data often require further knowledge of the environment [78]. This issue was discussed by a UCAmI Cup participant in [75], who concluded that their achieved activity recognition performance was reasonable given the large number of activity classes present in the dataset.

- Imbalanced dataset. The distribution of instances per class in the original dataset was highly uneven, which may have caused minority classes to be overlooked by the classification model. For example, Act19 wash dishes was represented by 13 instances of data, whereas other activities such as Act17 brush teeth had more than 100 instances. Furthermore, the distribution of instances per class differed considerably between the provided training and test sets. For example, Act09 was heavily under-represented in the training set, yet the test set included a large number of Act09 instances. Notably, Act09 also produced very similar sensor characteristics to Act12, which was problematic in the initial experiments, as the training set included large amounts of Act12 data. This issue was discussed in [74], where researchers stated that their approach also found difficulty in classifying Act12, due to the poor representation of this activity in the training set, and suggested that the data should be better distributed to improve HAR performance.

- Quantity of data. As previously stated, data-driven approaches require large amounts of data during the training phase to learn activity models and to ensure that these models generalize well to new data. Neural networks (NNs) in particular require large amounts of data to learn complex activity models [79], yet the original dataset was relatively small. More labelled training data could therefore have improved the initial experiments. In [76], UCAmI Cup participants attributed their low HAR performance to the high imbalance of classes in the training set and stated that the training algorithm required more labelled training data to perform better.

- Missing sensors. No binary sensor was located near the table to distinguish Act21 work at the table, as presented in Figure 1. This caused confusion because the sensor firing for Act21 in the labelled training set was a motion sensor located in the bedroom, which is irrelevant to Act21 and was therefore regarded as erroneous. In addition to missing sensors, there were also missing values from sensors that were expected to fire during certain activities. As previously stated, some researchers participating in the UCAmI Cup challenge reported missing values or mislabeling of some activities within the training set. This issue was discussed in [73], where participants stated that during one instance of Act10 enter the smart lab, the only binary sensor expected to fire (M01) did not change state.

- Interclass similarity. This is a common HAR challenge that occurs when certain activities generate similar sensor characteristics even though they are physically different [80]. Table 3 lists the activities that produced similar sensor characteristics, which made them difficult to discriminate during classification.
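The imbalance described in the list above can be quantified directly from the per-class instance counts reported for the original 24 activities. The following is a minimal Python sketch, assuming the counts are entered by hand from the activity table; the names `INSTANCES` and `imbalance_summary` are illustrative and not part of the original work.

```python
# Instance counts per activity, copied from the table of original activities.
INSTANCES = {
    "Act01": 52, "Act02": 63, "Act03": 118, "Act04": 76, "Act05": 78, "Act06": 101,
    "Act07": 86, "Act08": 12, "Act09": 70, "Act10": 21, "Act11": 28, "Act12": 85,
    "Act13": 33, "Act14": 7, "Act15": 75, "Act16": 22, "Act17": 132, "Act18": 44,
    "Act19": 13, "Act20": 20, "Act21": 20, "Act22": 86, "Act23": 30, "Act24": 32,
}


def imbalance_summary(counts):
    """Summarize how unevenly instances are spread over the classes."""
    largest = max(counts, key=counts.get)
    smallest = min(counts, key=counts.get)
    return {
        "total": sum(counts.values()),
        "largest": (largest, counts[largest]),
        "smallest": (smallest, counts[smallest]),
        # Ratio between the most and least represented class.
        "ratio": counts[largest] / counts[smallest],
    }


summary = imbalance_summary(INSTANCES)
print(summary)
```

A ratio well above 1 between the largest and smallest class is one simple indicator that minority classes may be overlooked during training.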

3.2. Restructured Dataset

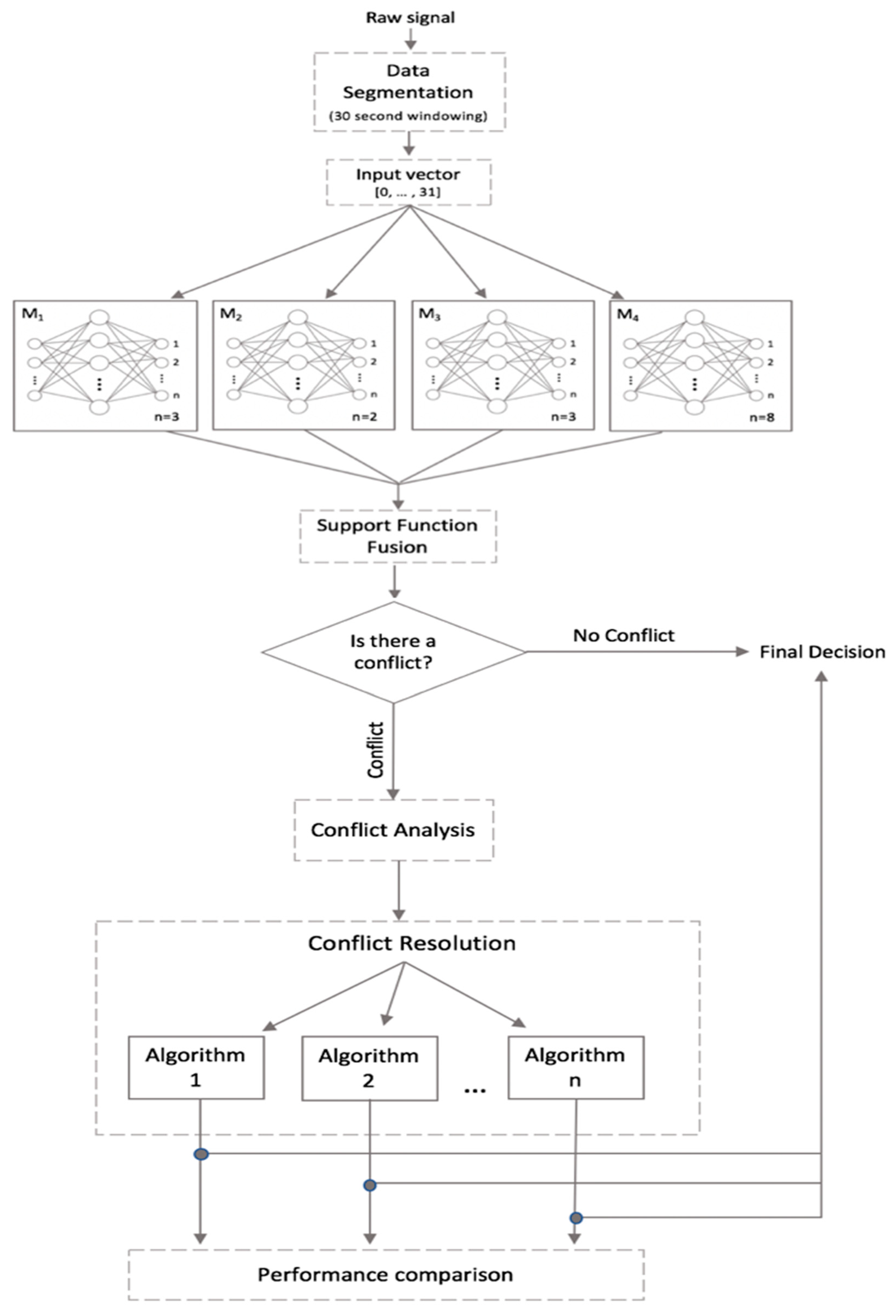

4. Proposed HAR Classification Model

4.1. Data Pre-Processing

4.2. Ensemble Approach

4.2.1. Complement Class Generation at a Model Level

4.2.2. Complement Class Generation at a Class Level

4.3. Model Conflict Resolution

| Algorithm 1. Process of finding conflicts between models | |

| 1: | For Each instance |

| 2: | |

| 3: | Then use conflict resolution approaches in Algorithms 2/3/4/5 as there are at least 2 conflicting cases |
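Algorithm 1 flags an instance for conflict resolution when at least two base models claim it. The sketch below is one interpretation of that check in Python, assuming each base model outputs either one of its own activity labels or a shared complement label. The `COMPLEMENT` constant and the function names are hypothetical, and the missing step of the algorithm is not reconstructed here.

```python
COMPLEMENT = "complement"  # hypothetical label meaning "not one of this model's classes"


def claimed_classes(predictions):
    """Return (model_id, label) pairs for models that predicted one of their own classes."""
    return [(model_id, label) for model_id, label in predictions.items()
            if label != COMPLEMENT]


def has_conflict(predictions):
    """An instance is in conflict when at least two base models claim it."""
    return len(claimed_classes(predictions)) >= 2


def find_conflicts(all_predictions):
    """Return the indices of instances that need conflict resolution."""
    return [index for index, predictions in enumerate(all_predictions)
            if has_conflict(predictions)]


# Illustrative predictions for two instances from four base models.
example_predictions = [
    {"M1": "Act24", "M2": COMPLEMENT, "M3": COMPLEMENT, "M4": COMPLEMENT},
    {"M1": "ActN3", "M2": COMPLEMENT, "M3": "ActN5", "M4": COMPLEMENT},
]
print(find_conflicts(example_predictions))
```

Only the second instance is claimed by two models, so only it would be passed on to one of the resolution approaches.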

| Algorithm 2. Conflict resolution approach 1 | |

| Input: , base models Mr, Ms | |

| Output: class | |

| 1: | |

| 2: | Then |

| 3: | Else |

| Algorithm 3. Conflict resolution approach 2 | |

| Input: , base models Mr, Ms | |

| Output: class | |

| 1: | |

| 2: | Then |

| 3: | Else |

| Algorithm 4. Conflict resolution approach 3 | |

| Input: , base models Mr, Ms | |

| Output: class | |

| 1: | |

| 2: | Then |

| 3: | Else |

| Algorithm 5. Conflict resolution approach 4 | |

| Input: , base models Mr, Ms | |

| Output: class | |

| 1: | represents training performance for base model |

| 2: | represents training performance for base model |

| 3: | |

| 4: | Then |

| 5: | Else |
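Algorithm 5 introduces the training performance of each of the two conflicting base models, but the comparison in its third step is not preserved here. One plausible reading, shown purely as an assumption, is that the class predicted by the better-performing model is returned. The function name, argument names, and tie-breaking rule below are hypothetical.

```python
def resolve_by_training_performance(pred_r, perf_r, pred_s, perf_s):
    """Pick the prediction of the base model with the higher training performance.

    Assumption: the comparison is a simple "greater than or equal" test,
    so a tie is resolved in favour of the first model (M_r).
    """
    return pred_r if perf_r >= perf_s else pred_s


# Illustrative values only; these are not results from the paper.
print(resolve_by_training_performance("ActN3", 0.91, "ActN5", 0.87))
```

Under this reading, the conflict is settled without inspecting the instance itself, which keeps the rule cheap but makes it sensitive to how training performance is measured.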

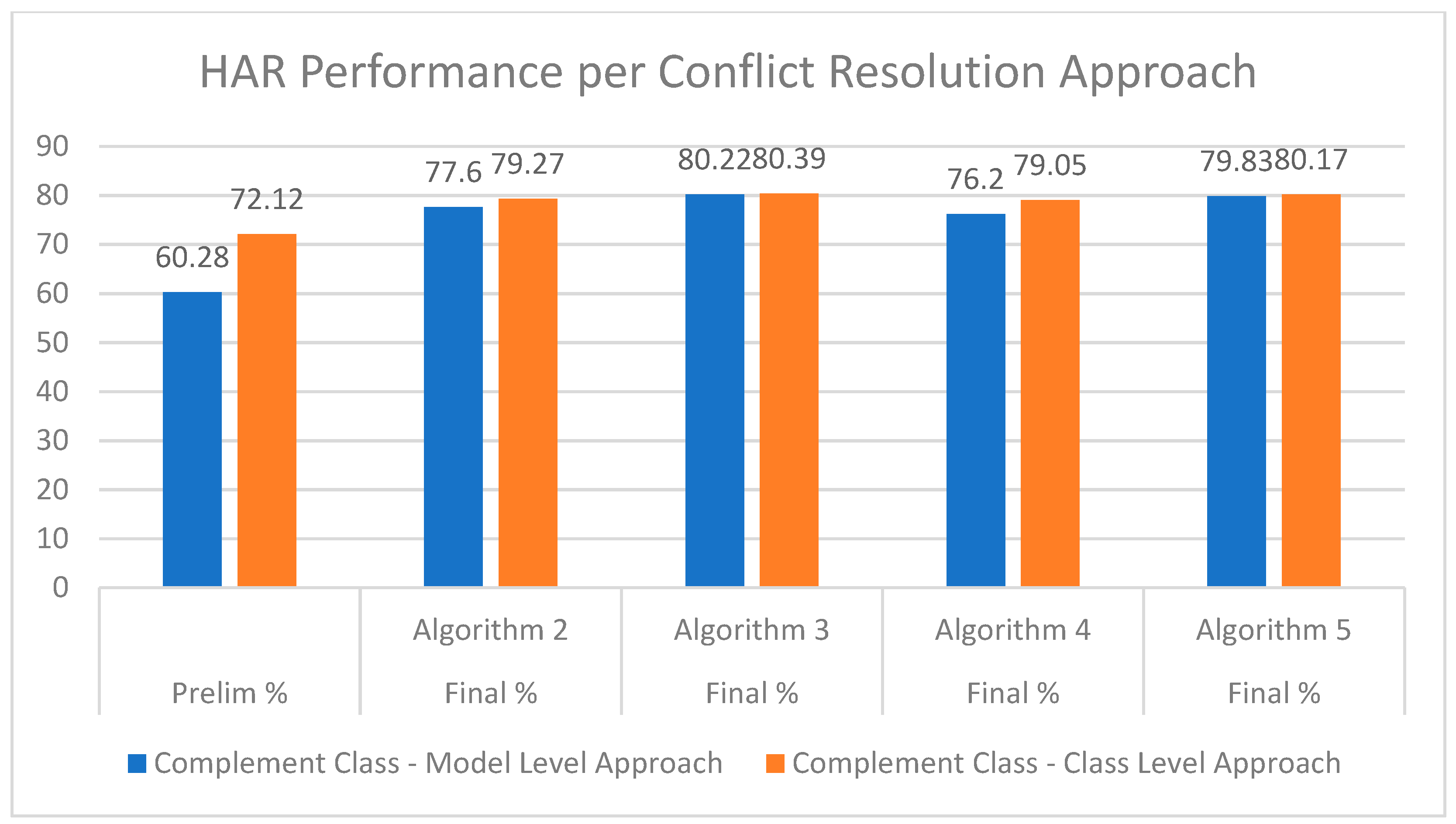

5. Results and Discussion

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

1. Ranasinghe, S.; al Machot, F.; Mayr, H.C. A review on applications of activity recognition systems with regard to performance and evaluation. Int. J. Distrib. Sens. Netw. 2016, 12, 1550147716665520.
2. Zhao, W.; Yan, L.; Zhang, Y. Geometric-constrained multi-view image matching method based on semi-global optimization. Geo-Spat. Inf. Sci. 2018, 21, 115–126.
3. Abdallah, Z.S.; Gaber, M.M.; Srinivasan, B.; Krishnaswamy, S. Adaptive mobile activity recognition system with evolving data streams. Neurocomputing 2015, 150, 304–317.
4. Bakli, M.S.; Sakr, M.A.; Soliman, T.H.A. A spatiotemporal algebra in Hadoop for moving objects. Geo-Spat. Inf. Sci. 2018, 21, 102–114.
5. Awad, M.M. Forest mapping: A comparison between hyperspectral and multispectral images and technologies. J. For. Res. 2018, 29, 1395–1405.
6. Jalal, A.; Quaid, M.A.K.; Hasan, A.S. Wearable sensor-based human behavior understanding and recognition in daily life for smart environments. In Proceedings of the 2018 International Conference on Frontiers of Information Technology (FIT), Islamabad, Pakistan, 17–19 December 2018; pp. 105–110.
7. Lee, Y.; Choi, T.J.; Ahn, C.W. Multi-objective evolutionary approach to select security solutions. CAAI Trans. Intell. Technol. 2017, 2, 64–67.
8. Cook, D.J.; Crandall, A.S.; Thomas, B.L.; Krishnan, N.C. CASAS: A Smart Home in a Box. Computing Practices 2013, 46, 62–69.
9. Helal, S.; Chen, C. The Gator Tech Smart House: Enabling Technologies and Lessons Learned. In Proceedings of the 3rd International Convention on Rehabilitation Engineering & Assistive Technology, Singapore, 22–26 April 2009.
10. Cook, D.J.; Youngblood, M.; Heierman, E.O.; Gopalratnam, K.; Rao, S.; Litvin, A.; Khawaja, F. MavHome: An Agent-Based Smart Home. In Proceedings of the First IEEE International Conference on Pervasive Computing and Communications, 2003 (PerCom 2003), Fort Worth, TX, USA, 26 March 2003; pp. 521–524.
11. The DOMUS Laboratory. Available online: http://domuslab.fr (accessed on 8 November 2019).
12. The Aware Home. Available online: http://awarehome.imtc.gatech.edu (accessed on 8 November 2019).
13. Krishnan, N.C.; Cook, D.J. Activity Recognition on Streaming Sensor Data. Pervasive Mob. Comput. 2014, 10, 138–154.
14. Kamal, S.; Jalal, A.; Kim, D. Depth images-based human detection, tracking and activity recognition using spatiotemporal features and modified HMM. J. Electr. Eng. Technol. 2016, 11, 1857–1862.
15. Buys, K.; Cagniart, C.; Baksheev, A.; de Laet, T.; de Schutter, J.; Pantofaru, C. An adaptable system for RGB-D based human body detection and pose estimation. J. Vis. Commun. Image Represent. 2014, 25, 39–52.
16. Chen, L.; Hoey, J.; Nugent, C.D.; Cook, D.J.; Yu, Z.; Member, S. Sensor-Based Activity Recognition. IEEE Trans. Syst. Man Cybern. Part C 2012, 42, 790–808.
17. Azkune, G.; Almeida, A.; López-De-Ipiña, D.; Chen, L. Extending Knowledge-Driven Activity Models through Data-Driven Learning Techniques. Expert Syst. Appl. 2015, 42, 3115–3128.
18. Cleland, I.; Donnelly, M.P.; Nugent, C.D.; Hallberg, J.; Espinilla, M. Collection of a Diverse, Naturalistic and Annotated Dataset for Wearable Activity Recognition. In Proceedings of the 2018 IEEE International Conference on Pervasive Computing and Communications Workshops (PerCom Workshops), Athens, Greece, 19–23 March 2018.
19. Akhand, M.A.H.; Murase, K. Neural Networks Ensembles: Existing Methods and New Techniques; LAP LAMBERT Academic Publishing: Khulna, Bangladesh, 2010.
20. Sharkey, A.J.C. Combining Artificial Neural Nets; Springer: London, UK, 1999.
21. Aggarwal, J.K.; Xia, L.; Ann, O.C.; Theng, L.B. Human activity recognition: A review. In Proceedings of the 2014 IEEE International Conference on Control System, Computing and Engineering (ICCSCE 2014), Batu Ferringhi, Malaysia, 28–30 November 2014; pp. 389–393.
22. Hegde, N.; Bries, M.; Swibas, T.; Melanson, E.; Sazonov, E. Automatic Recognition of Activities of Daily Living utilizing Insole-Based and Wrist-Worn Wearable Sensors. IEEE J. Biomed. Health Inform. 2017, 22, 979–988.
23. Liu, J.; Sohn, J.; Kim, S. Classification of Daily Activities for the Elderly Using Wearable Sensors. J. Healthc. Eng. 2017, 2017, 8934816.
24. Pirsiavash, H.; Ramanan, D. Detecting activities of Daily Living in First-Person Camera Views. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; pp. 2847–2854.
25. Roy, N.; Misra, A.; Cook, D. Ambient and Smartphone Sensor Assisted ADL Recognition in Multi-Inhabitant Smart Environments. J. Ambient Intell. Humaniz. Comput. 2016, 7, 1–19.
26. Moriya, K.; Nakagawa, E.; Fujimoto, M.; Suwa, H.; Arakawa, Y.; Kimura, A.; Miki, S.; Yasumoto, K. Daily Living Activity Recognition with Echonet Lite Appliances and Motion Sensors. In Proceedings of the 2017 IEEE International Conference on Pervasive Computing and Communications Workshops (PerCom Workshops), Kona, HI, USA, 13–17 March 2017.
27. Gochoo, M.; Tan, T.; Huang, S. DCNN-Based Elderly Activity Recognition Using Binary Sensors. In Proceedings of the 2017 International Conference on Electrical and Computing Technologies and Applications (ICECTA), Ras Al Khaimah, United Arab Emirates, 21–23 November 2017.
28. Singh, D.; Merdivan, E.; Hanke, S. Convolutional and Recurrent Neural Networks for Activity Recognition in Smart Environment. In Towards Integrative Machine Learning and Knowledge Extraction; Springer: Cham, Switzerland, 2017; pp. 194–209.
29. Cook, D.J.; Krishnan, N.C. Activity Learning: Discovering, Recognizing and Predicting Human Behavior from Sensor Data, 1st ed.; Wiley: Hoboken, NJ, USA, 2015.
30. Mannini, A.; Rosenberger, M.; Haskell, W.L.; Sabatini, A.M.; Intille, S.S. Activity Recognition in Youth Using Single Accelerometer Placed at Wrist or Ankle. Med. Sci. Sports Exerc. 2017, 49, 801–812.
31. Huang, Q.; Yang, J.; Qiao, Y. Person re-identification across multi-camera system based on local descriptors. In Proceedings of the 2012 Sixth International Conference on Distributed Smart Cameras (ICDSC), Hong Kong, China, 30 October–2 November 2012; pp. 1–6.
32. Farooq, A.; Jalal, A.; Kamal, S. Dense RGB-D map-based human tracking and activity recognition using skin joints features and self-organizing map. KSII Trans. Internet Inf. Syst. 2015, 9, 1856–1869.
33. Kamal, S.; Jalal, A. A Hybrid Feature Extraction Approach for Human Detection, Tracking and Activity Recognition Using Depth Sensors. Arab. J. Sci. Eng. 2016, 41, 1043–1051.
34. Böttcher, S.; Scholl, P.M.; van Laerhoven, K. Detecting Transitions in Manual Tasks from Wearables: An Unsupervised Labeling Approach. Informatics 2018, 5, 16.
35. Trost, S.G.; Wong, W.-K.; Pfeiffer, K.A.; Zheng, Y. Artificial Neural Networks to Predict Activity Type and Energy Expenditure in Youth. Med. Sci. Sport Exerc. 2012, 44, 1801–1809.
36. Lara, O.D.; Labrador, M.A. A Survey on Human Activity Recognition using Wearable Sensors. IEEE Commun. Surv. Tutor. 2013, 15, 1192–1209.
37. Synnott, J.; Nugent, C.; Zhang, S.; Calzada, A.; Cleland, I.; Espinilla, M.; Quero, J.M.; Lundstrom, J. Environment Simulation for the Promotion of the Open Data Initiative. In Proceedings of the 2016 IEEE International Conference on Smart Computing (SMARTCOMP), St. Louis, MO, USA, 18–20 May 2016.
38. Oniga, S.; József, S. Optimal Recognition Method of Human Activities Using Artificial Neural Networks. Meas. Sci. Rev. 2015, 15, 323–327.
39. Greengard, S. GPUs Reshape Computing. Commun. ACM 2016, 59, 14–16.
40. Suto, J.; Oniga, S. Efficiency investigation from shallow to deep neural network techniques in human activity recognition. Cogn. Syst. Res. 2019, 54, 37–49.
41. Anguita, D.; Ghio, A.; Oneto, L.; Parra, X.; Reyes-Ortiz, J.L. A Public Domain Dataset for Human Activity Recognition Using Smartphones. Eur. Symp. Artif. Neural Netw. 2013, 437–442, 9782874190827.
42. Rooney, N.; Patterson, D.; Nugent, C. Ensemble Learning for Regression. Encyclopedia Data Warehous. Mining Inf. Sci. Ref. N. Y. US 2010, 2, 777–782.
43. Mendes-Moreira, J.; Soares, C.; Jorge, A.M.; de Sousa, J.F. Ensemble Approaches for Regression: A Survey. ACM Comput. Surv. 2012, 45, 10.
44. Fatima, I.; Fahim, M.; Lee, Y.-K.; Lee, S. Classifier ensemble optimization for human activity recognition in smart homes. In Proceedings of the 7th International Conference on Ubiquitous Information Management and Communication, Kota Kinabalu, Malaysia, 17–19 January 2013; pp. 1–7.
45. Ni, Q.; Zhang, L.; Li, L. A Heterogeneous Ensemble Approach for Activity Recognition with Integration of Change Point-Based Data Segmentation. Appl. Sci. 2018, 8, 1695.
46. Feng, Z.; Mo, L.; Li, M. A Random Forest-based ensemble method for activity recognition. In Proceedings of the 2015 37th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Milan, Italy, 25–29 August 2015; pp. 5074–5077.
47. Kim, Y.J.; Kang, B.N.; Kim, D. Hidden Markov Model Ensemble for Activity Recognition Using Tri-Axis Accelerometer. In Proceedings of the 2015 IEEE International Conference on Systems, Man, and Cybernetics, Kowloon, China, 9–12 October 2016; pp. 3036–3041.
48. Sagha, H.; Bayati, H.; Millán, J.D.R.; Chavarriaga, R. On-line anomaly detection and resilience in classifier ensembles. Pattern Recognit. Lett. 2013, 34, 1916–1927.
49. A Genetic Algorithm-based Classifier Ensemble Optimization for Activity Recognition in Smart Homes. KSII Trans. Internet Inf. Syst. 2013, 7, 2853–2873.
50. JMin, K.; Cho, S.B. Activity recognition based on wearable sensors using selection/fusion hybrid ensemble. In Proceedings of the 2011 IEEE International Conference on Systems, Man, and Cybernetics, Anchorage, AK, USA, 9–12 October 2011; pp. 1319–1324.
51. Diep, N.N.; Pham, C.; Phuong, T.M. Motion Primitive Forests for Human Activity Recognition Using Wearable Sensors. In Pacific Rim International Conference on Artificial Intelligence; Springer: Cham, Switzerland, 2016; pp. 340–353.
52. Ijjina, E.P.; Mohan, C.K. Hybrid deep neural network model for human action recognition. Appl. Soft Comput. J. 2016, 46, 936–952.
53. Hwang, I.; Park, H.M.; Chang, J.H. Ensemble of deep neural networks using acoustic environment classification for statistical model-based voice activity detection. Comput. Speech Lang. 2016, 38, 1–12.
54. Guan, Y.; Ploetz, T. Ensembles of Deep LSTM Learners for Activity Recognition using Wearables. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 2017, 1, 11.
55. Nweke, H.F.; Teh, Y.W.; Mujtaba, G.; Al-garadi, M.A. Data fusion and multiple classifier systems for human activity detection and health monitoring. Inf. Fusion 2019, 46, 147–170.
56. Woźniak, M.; Woźniak, W.; Graña, M.; Corchado, E. A survey of multiple classifier systems as hybrid systems. Inf. Fusion 2014, 16, 3–17.
57. Díez-Pastor, J.F.; Rodríguez, J.J.; García-Osorio, C.; Kuncheva, L.I. Random Balance: Ensembles of variable priors classifiers for imbalanced data. Knowl.-Based Syst. 2015, 85, 96–111.
58. Feng, W.; Huang, W.; Ren, J. Class imbalance ensemble learning based on the margin theory. Appl. Sci. 2018, 8, 815.
59. Ahmed, S.; Mahbub, A.; Rayhan, F.; Jani, R.; Shatabda, S.; Farid, D.M. Hybrid Methods for Class Imbalance Learning Employing Bagging with Sampling Techniques. In Proceedings of the 2017 2nd International Conference on Computational Systems and Information Technology for Sustainable Solution (CSITSS), Bangalore, India, 21–23 December 2017; pp. 1–5.
60. Farooq, M.; Sazonov, E. Detection of chewing from piezoelectric film sensor signals using ensemble classifiers. In Proceedings of the 2016 38th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Orlando, FL, USA, 16–20 August 2016; pp. 4929–4932.
61. Mohammadi, E.; Wu, Q.M.J.; Saif, M. Human activity recognition using an ensemble of support vector machines. In Proceedings of the 2016 International Conference on High Performance Computing & Simulation (HPCS), Innsbruck, Austria, 18–22 July 2016; pp. 549–554.
62. Bayat, A.; Pomplun, M.; Tran, D.A. A study on human activity recognition using accelerometer data from smartphones. Procedia Comput. Sci. 2014, 34, 450–457.
63. Zappi, P.; Stiefmeier, T.; Farella, E.; Roggen, D.; Benini, L.; Tröster, G. Activity recognition from on-body sensors by classifier fusion: Sensor scalability and robustness. In Proceedings of the 2007 3rd international conference on intelligent sensors, sensor networks and information, Melbourne, QLD, Australia, 3–6 December 2007; pp. 281–286.
64. Krawczyk, B.; Woźniak, M. Untrained weighted classifier combination with embedded ensemble pruning. Neurocomputing 2016, 196, 14–22.
65. Roh, Y.; Heo, G.; Whang, S.E. A Survey on Data Collection for Machine Learning: A Big Data—AI Integration Perspective. IEEE Trans. Knowl. Data Eng. 2019, 1, 1–19.
66. Gorunescu, F. Data Mining: Concepts, Models and Techniques, 1st ed.; Springer: Berlin, Germany, 2011.
67. Kantardzic, M. Data Mining: Concepts, Models, Methods and Algorithms, 2nd ed.; Wiley-IEEE Press: Hoboken, NJ, USA, 2002.
68. Maimon, O.; Rokach, L. Data Mining and Knowledge Discovery Handbook, 1st ed.; Springer: Boston, MA, USA, 2005.
69. Han, J.; Kamber, M.; Pei, J. Data Mining: Concepts and Techniques, 3rd ed.; Elsevier Inc.: Waltham, MA, USA, 2012.
70. Zhu, X.; Wu, X. Class Noise vs. Attribute Noise: A Quantitative Study of Their Impacts. Artif. Intell. Rev. 2004, 22, 177–210.
71. Folleco, A.A.; Khoshgoftaar, T.M.; van Hulse, J.; Napolitano, A. Identifying Learners Robust to Low Quality Data. Informatica 2009, 33, 245–259.
72. Espinilla, M.; Medina, J.; Nugent, C. UCAmI Cup. Analyzing the UJA Human Activity Recognition Dataset of Activities of Daily Living. MDPI Proc. UCAmI 2018, 2, 1267.
73. Karvonen, N.; Kleyko, D. A Domain Knowledge-Based Solution for Human Activity Recognition: The UJA Dataset Analysis. MDPI Proc. UCAmI 2018, 2, 1261.
74. Lago, P.; Inoue, S. A Hybrid Model Using Hidden Markov Chain and Logic Model for Daily Living Activity Recognition. MDPI Proc. UCAmI 2018, 2, 1266.
75. Seco, F.; Jiménez, A.R. Event-Driven Real-Time Location-Aware Activity Recognition in AAL Scenarios. MDPI Proc. UCAmI 2018, 2, 1240.
76. Cerón, J.D.; López, D.M.; Eskofier, B.M. Human Activity Recognition Using Binary Sensors, BLE Beacons, an Intelligent Floor and Acceleration Data: A Machine Learning Approach. MDPI Proc. UCAmI 2018, 2, 1265.
77. Ding, D.; Cooper, R.A.; Pasquina, P.F.; Fici-Pasquina, L. Sensor technology for smart homes. Maturitas 2011, 69, 131–136.
78. Amiribesheli, M.; Benmansour, A.; Bouchachia, A. A review of smart homes in healthcare. J. Ambient Intell. Humaniz. Comput. 2015, 6, 495–517.
79. Jain, N.; Srivastava, V. Data Mining Techniques: A Survey Paper. Int. J. Res. Eng. Technol. 2013, 2, 116–119.
80. Bulling, A.; Blanke, U.; Schiele, B. A Tutorial on Human Activity Recognition using Body-Worn Inertial Sensors. ACM Comput. Surv. 2014, 46, 33.
81. Banos, O.; Galvez, J.-M.; Damas, M.; Pomares, H.; Rojas, I. Window Size Impact in Human Activity Recognition. Sensors 2014, 14, 6474–6499.
82. Mohandes, M.; Deriche, M.; Aliyu, S.O. Classifiers Combination Techniques: A Comprehensive Review. IEEE Access 2018, 6, 19626–19639.
83. Yijing, L.; Haixiang, G.; Xiao, L.; Yanan, L.; Jinling, L. Adapted ensemble classification algorithm based on multiple classifier system and feature selection for classifying multi-class imbalanced data. Knowl.-Based Syst. 2016, 94, 88–104.
84. Suto, J.; Oniga, S.; Sitar, P.P. Comparison of wrapper and filter feature selection algorithms on human activity recognition. In Proceedings of the 2016 6th International Conference on Computers Communications and Control (ICCCC), Oradea, Romania, 10–14 May 2016; pp. 124–129.

| ID | Name | Instances | Routine | ID | Name | Instances | Routine |
|---|---|---|---|---|---|---|---|
| Act01 | Take Medication | 52 | A, E | Act13 | Leave Smart Lab | 33 | M, A |
| Act02 | Prepare Breakfast | 63 | M | Act14 | Visitor to Smart Lab | 7 | M, A |
| Act03 | Prepare lunch | 118 | A | Act15 | Put waste in the bin | 75 | A, E |
| Act04 | Prepare Dinner | 76 | E | Act16 | Wash hands | 22 | M |
| Act05 | Breakfast | 78 | M | Act17 | Brush teeth | 132 | M, A, E |
| Act06 | Lunch | 101 | A | Act18 | Use the toilet | 44 | M, A, E |
| Act07 | Dinner | 86 | E | Act19 | Wash dishes | 13 | A, E |
| Act08 | Eat a snack | 12 | A | Act20 | Put washing in machine | 20 | M, A |
| Act09 | Watch TV | 70 | A, E | Act21 | Work at the table | 20 | M |
| Act10 | Enter Smart Lab | 21 | A, E | Act22 | Dressing | 86 | M, A, E |
| Act11 | Play a videogame | 28 | M, E | Act23 | Go to bed | 30 | E |
| Act12 | Relax on the sofa | 85 | M, A, E | Act24 | Wake up | 32 | M |

| ID | Object | Type | States |
|---|---|---|---|
| SM1 | Kitchen area | Motion | Movement/No movement |
| SM3 | Bathroom area | Motion | Movement/No movement |
| SM4 | Bedroom area | Motion | Movement/No movement |
| SM5 | Sofa area | Motion | Movement/No movement |
| M01 | Door | Contact | Open/Close |
| TV0 | TV | Contact | Open/Close |
| D01 | Refrigerator | Contact | Open/Close |
| D02 | Microwave | Contact | Open/Close |
| D03 | Wardrobe | Contact | Open/Close |
| D04 | Cups cupboard | Contact | Open/Close |
| D05 | Dishwasher | Contact | Open/Close |
| D07 | WC | Contact | Open/Close |
| D08 | Closet | Contact | Open/Close |
| D09 | Washing machine | Contact | Open/Close |
| D10 | Pantry | Contact | Open/Close |
| C01 | Medication box | Contact | Open/Close |
| C02 | Fruit platter | Contact | Open/Close |
| C03 | Cutlery | Contact | Open/Close |
| C04 | Pots | Contact | Open/Close |
| C05 | Water bottle | Contact | Open/Close |
| C07 | XBOX Remote | Contact | Present/Not present |
| C08 | Trash | Contact | Open/Close |
| C09 | Tap | Contact | Open/Close |
| C10 | Tank | Contact | Open/Close |
| C12 | Laundry basket | Contact | Present/Not present |
| C13 | Pyjamas drawer | Contact | Open/Close |
| C14 | Bed | Pressure | Pressure/No pressure |
| C15 | Kitchen faucet | Contact | Open/Close |
| H01 | Kettle | Contact | Open/Close |
| S09 | Sofa | Pressure | Pressure/No pressure |

| Activity Group | Activity Name | Common Sensors |
|---|---|---|
| Act10, Act13, Act14 | Enter Smart Lab, Leave Smart Lab, and Visitor to Smart Lab | M01 Door |
| Act23, Act24 | Go to Bed and Wake Up | C14 Bed |
| Act09, Act12 | Watch TV and Relax on Sofa | S09 Pressure Sofa, SM5 Sofa Motion |
| Act02, Act03, Act04, Act08 | Prepare Breakfast, Prepare Lunch, Prepare Dinner, Prepare Snack | SM1 Kitchen Motion, D10 Pantry, C03 Cutlery |

| ID | Name | Instances | Routine | ID | Name | Instances | Routine |
|---|---|---|---|---|---|---|---|
| Act01 | Take Medication | 52 | A, E | Act24 | Wake up | 32 | M |
| Act15 | Put waste in the bin | 75 | A, E | ActN1 | Door | 61 | M, A, E |
| Act17 | Brush teeth | 132 | M, A, E | ActN2 | Watch TV on sofa | 155 | M, A, E |
| Act18 | Use the toilet | 44 | M, A, E | ActN3 | Breakfast | 141 | M |
| Act22 | Dressing | 86 | M, A, E | ActN4 | Lunch | 219 | A |
| Act23 | Go to bed | 30 | E | ActN5 | Dinner | 162 | E |
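The instance counts of the merged classes (ActN1 to ActN5) are consistent with summing the original classes that shared sensors. The Python sketch below illustrates that regrouping. The mapping in `MERGE_MAP` is an assumption inferred from the activity names and counts in the two activity tables; it is not stated explicitly in the text.

```python
# Original instance counts for the activities involved in the regrouping.
ORIGINAL = {
    "Act02": 63, "Act03": 118, "Act04": 76, "Act05": 78, "Act06": 101,
    "Act07": 86, "Act09": 70, "Act10": 21, "Act12": 85, "Act13": 33, "Act14": 7,
}

# Assumed mapping from each merged class to the original classes it absorbs.
MERGE_MAP = {
    "ActN1": ["Act10", "Act13", "Act14"],  # Door
    "ActN2": ["Act09", "Act12"],           # Watch TV on sofa
    "ActN3": ["Act02", "Act05"],           # Breakfast
    "ActN4": ["Act03", "Act06"],           # Lunch
    "ActN5": ["Act04", "Act07"],           # Dinner
}


def merge_counts(original, merge_map):
    """Sum the instance counts of the original classes behind each merged class."""
    return {new: sum(original[old] for old in olds) for new, olds in merge_map.items()}


merged = merge_counts(ORIGINAL, MERGE_MAP)
print(merged)
```

Under this assumed mapping, the sums reproduce the counts listed for ActN1 to ActN5 in the restructured table.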

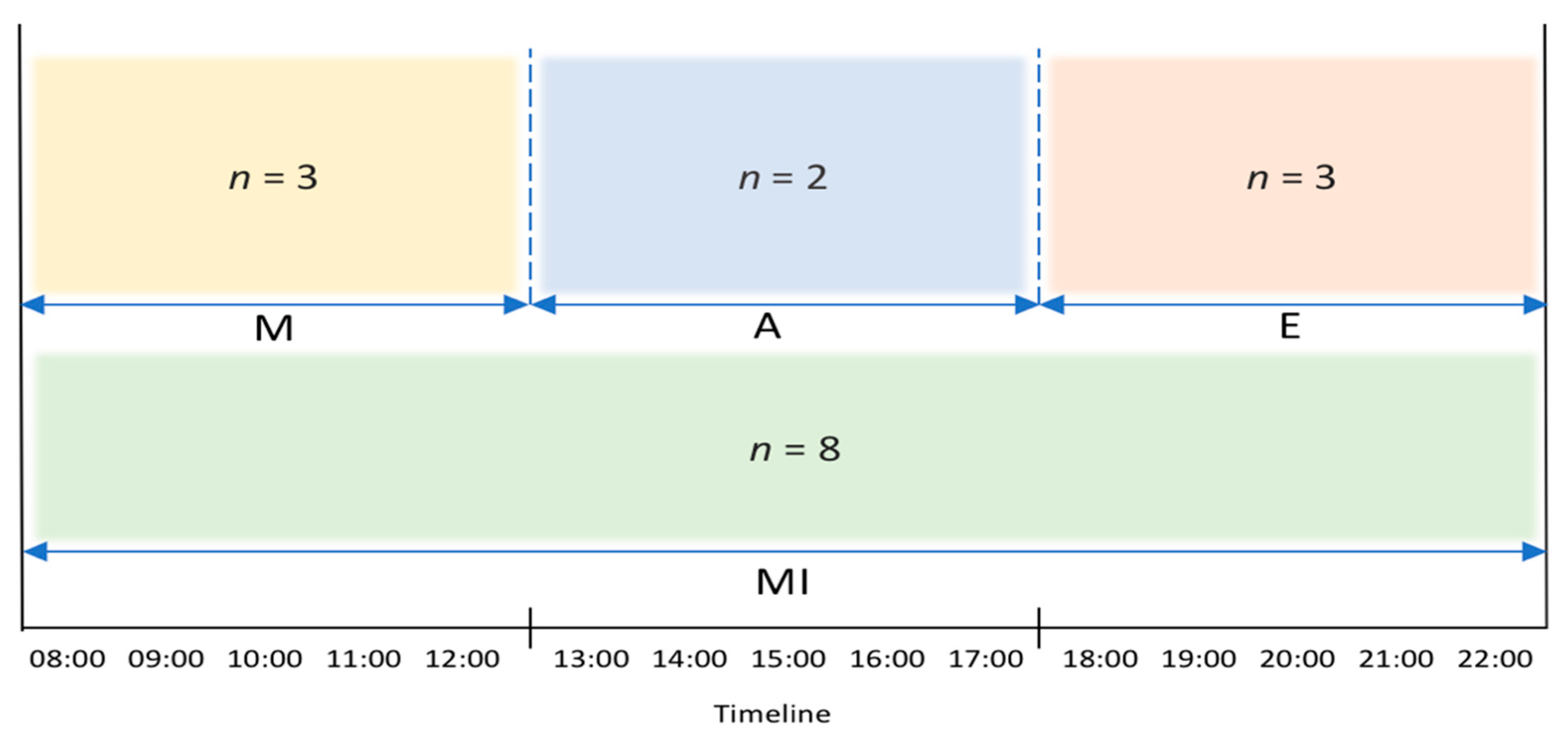

| #output | Model ID | Name | Activity Classes | Complement Class |
|---|---|---|---|---|
| m1 = 3 | M1 | Morning | [Act24, ActN3] | [ActN4, Act23, ActN5, Act01, Act15, Act17, Act18, Act22, ActN1, ActN2] |
| m2 = 2 | M2 | Afternoon | [ActN4] | [Act24, ActN3, Act23, ActN5, Act01, Act15, Act17, Act18, Act22, ActN1, ActN2] |
| m3 = 3 | M3 | Evening | [Act23, ActN5] | [Act24, ActN3, ActN4, Act01, Act15, Act17, Act18, Act22, ActN1, ActN2] |
| m4 = 8 | M4 | Mixed | [Act01, Act15, Act17, Act18, Act22, ActN1, ActN2] | [Act24, ActN3, ActN4, Act23, ActN5] |

| Complement | Model Distribution (No. of Instances) | Class Distribution (No. of Instances) |
|---|---|---|
| complement class of M1 | Afternoon (24), Evening (24), Mixed (25) | ActN4 (24), Act23 (12), ActN5 (12), Act01 (03), Act15 (03), Act17 (03), Act18 (04), Act22 (04), ActN1 (04), ActN2 (04) |
| complement class of M2 | Morning (62), Evening (62), Mixed (62) | Act24 (31), ActN3 (31), Act23 (31), ActN5 (31), Act18 (08), Act15 (09), Act17 (09), Act01 (09), Act22 (09), ActN1 (09), ActN2 (09) |
| complement class of M3 | Morning (27), Afternoon (27), Mixed (27) | Act24 (13), ActN3 (14), ActN4 (27), Act18 (03), Act01 (04), Act15 (04), Act17 (04), Act22 (04), ActN1 (04), ActN2 (04) |
| complement class of M4 | Morning (24), Afternoon (24), Evening (25) | Act24 (12), ActN3 (12), ActN4 (24), Act23 (12), ActN5 (12) |

| Complement | Model Distribution (No. of Instances) | Class Distribution (No. of Instances) |
|---|---|---|
| complement class of M1 | Afternoon (15), Evening (30), Mixed (105) | ActN4 (15), Act23 (15), ActN5 (15), Act01 (15), Act15 (15), Act17 (15), Act18 (15), Act22 (15), ActN1 (15), ActN2 (15) |
| complement class of M2 | Morning (68), Evening (68), Mixed (238) | Act24 (34), ActN3 (34), Act23 (34), ActN5 (34), Act18 (34), Act15 (34), Act17 (34), Act01 (34), Act22 (34), ActN1 (34), ActN2 (34) |
| complement class of M3 | Morning (32), Afternoon (16), Mixed (112) | Act24 (16), ActN3 (16), ActN4 (16), Act18 (16), Act01 (16), Act15 (16), Act17 (16), Act22 (16), ActN1 (16), ActN2 (16) |
| complement class of M4 | Morning (58), Afternoon (29), Evening (58) | Act24 (29), ActN3 (29), ActN4 (29), Act23 (29), ActN5 (29) |
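The two complement tables suggest two allocation rules: at the model level, the complement budget is split evenly across the other models and then across each model's classes; at the class level, every class contributes the same number of instances. The Python sketch below illustrates both rules for the complement class of M1. The function names are hypothetical, the budgets (73 and 15) are simply read off the tables, and the choice of which items receive the leftover instances is an assumption.

```python
def even_split(total, parts):
    """Split `total` into `parts` near-equal integers.

    Assumption: the last `remainder` parts receive the one extra instance.
    """
    base, remainder = divmod(total, parts)
    return [base] * (parts - remainder) + [base + 1] * remainder


def model_level_complement(total, other_models):
    """Split the budget evenly per model, then evenly per class within each model."""
    quotas = even_split(total, len(other_models))
    return {
        name: dict(zip(classes, even_split(quota, len(classes))))
        for (name, classes), quota in zip(other_models.items(), quotas)
    }


def class_level_complement(per_class, other_models):
    """Give every class of every other model the same number of instances."""
    return {name: {c: per_class for c in classes} for name, classes in other_models.items()}


# Classes handled by the models other than M1 (Morning).
M1_OTHERS = {
    "Afternoon": ["ActN4"],
    "Evening": ["Act23", "ActN5"],
    "Mixed": ["Act01", "Act15", "Act17", "Act18", "Act22", "ActN1", "ActN2"],
}

model_level = model_level_complement(73, M1_OTHERS)
class_level = class_level_complement(15, M1_OTHERS)
print(model_level)
print({name: sum(counts.values()) for name, counts in class_level.items()})
```

The model-level rule balances the models but leaves classes in larger models with few instances each, whereas the class-level rule balances the classes at the cost of over-representing models that cover many classes.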

| No. of Conflicts Per Fold | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | Avg. |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Complement Class – Model Level Approach | 76 | 57 | 69 | 52 | 49 | 35 | 60 | 45 | 62 | 56 | 56.1 |
| Complement Class – Class Level Approach | 21 | 37 | 11 | 13 | 13 | 42 | 29 | 39 | 11 | 17 | 23.3 |

| Algorithm | Outcome | Fold 1 | Fold 2 | Fold 3 | Fold 4 | Fold 5 | Fold 6 | Fold 7 | Fold 8 | Fold 9 | Fold 10 | Avg. |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Algorithm 2 | Incorrect | 22 | 22 | 21 | 29 | 29 | 20 | 30 | 22 | 20 | 22 | 23.7 |
| Algorithm 2 | Right but Incorrect | 17 | 18 | 21 | 12 | 17 | 16 | 9 | 14 | 20 | 20 | 16.4 |
| Algorithm 3 | Incorrect | 23 | 22 | 21 | 29 | 29 | 22 | 29 | 22 | 20 | 24 | 24.1 |
| Algorithm 3 | Right but Incorrect | 10 | 14 | 10 | 9 | 12 | 12 | 9 | 12 | 14 | 11 | 11.3 |
| Algorithm 4 | Incorrect | 22 | 23 | 21 | 29 | 29 | 22 | 29 | 22 | 20 | 22 | 23.9 |
| Algorithm 4 | Right but Incorrect | 31 | 22 | 13 | 23 | 11 | 15 | 23 | 18 | 10 | 21 | 18.7 |
| Algorithm 5 | Incorrect | 22 | 22 | 21 | 29 | 29 | 22 | 29 | 22 | 20 | 22 | 23.8 |
| Algorithm 5 | Right but Incorrect | 14 | 10 | 13 | 7 | 13 | 15 | 9 | 17 | 14 | 11 | 12.3 |

| Algorithm | Outcome | Fold 1 | Fold 2 | Fold 3 | Fold 4 | Fold 5 | Fold 6 | Fold 7 | Fold 8 | Fold 9 | Fold 10 | Avg. |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Algorithm 2 | Incorrect | 33 | 26 | 35 | 33 | 25 | 32 | 27 | 28 | 40 | 26 | 30.5 |
| Algorithm 2 | Right but Incorrect | 6 | 9 | 2 | 6 | 4 | 11 | 8 | 10 | 2 | 8 | 6.6 |
| Algorithm 3 | Incorrect | 33 | 26 | 35 | 33 | 25 | 31 | 27 | 28 | 40 | 26 | 30.4 |
| Algorithm 3 | Right but Incorrect | 5 | 7 | 3 | 2 | 6 | 7 | 6 | 5 | 0 | 6 | 4.7 |
| Algorithm 4 | Incorrect | 33 | 26 | 35 | 33 | 25 | 31 | 27 | 28 | 40 | 25 | 30.3 |
| Algorithm 4 | Right but Incorrect | 8 | 21 | 4 | 3 | 6 | 6 | 11 | 7 | 1 | 5 | 7.2 |
| Algorithm 5 | Incorrect | 33 | 26 | 34 | 33 | 25 | 31 | 27 | 28 | 40 | 25 | 30.2 |
| Algorithm 5 | Right but Incorrect | 8 | 8 | 5 | 2 | 6 | 5 | 6 | 7 | 1 | 5 | 5.3 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Irvine, N.; Nugent, C.; Zhang, S.; Wang, H.; NG, W.W.Y. Neural Network Ensembles for Sensor-Based Human Activity Recognition Within Smart Environments. Sensors 2020, 20, 216. https://doi.org/10.3390/s20010216
