1. Introduction

Speech is the most natural and fastest means of communication between humans and computers, and speech emotion recognition (SER) plays an important role in real-time applications of human-machine interaction. SER from sensor-acquired speech signals is an active area of research in digital signal processing; it aims to recognize the qualitative emotional state of a speaker from the speech signal, which carries more information than the spoken words alone [1]. Many researchers are working in this domain to make machines intelligent enough to identify a speaker's emotional condition from his or her speech. In SER, selecting and extracting salient and discriminative features is a challenging task [2]. Recently, researchers have tried to find robust and salient features for SER, using artificial intelligence and deep learning approaches [3] to extract hidden information and using CNN features to train different CNN models [4,5], with the aim of increasing the performance and decreasing the computational complexity of SER for human behavior assessment. At present, SER faces many challenges and limitations arising from the vast number of social media users and the low cost and high bandwidth of the Internet. The widespread use of low-cost Internet access and social media has produced a semantic gap. To bridge this gap, researchers have introduced new methods that extract the most salient features from speech signals and train models to accurately recognize a speaker's emotion during speech. Technology continues to develop, providing new and flexible platforms on which researchers can introduce new methods using artificial intelligence.

Advances in development skills, technology, and the use of artificial intelligence and deep learning play a vital role in enhancing human-computer interaction (HCI), including emotion recognition. SER is an active area of HCI with many real-time applications: call centers, to identify user satisfaction; human-robot interaction, to detect human emotion; emergency call centers, to identify the emotional state of a caller for an appropriate response; and virtual reality. Fiore et al. [6] implemented SER in a car on-board system to detect the mental condition or emotional state of drivers so that necessary steps can be taken to ensure the safety of passengers. SER also plays a role in automatic translation systems and in understanding human physical interaction in crowds to detect violent and destructive actions, which is difficult to do manually [7]. Badshah et al. [8] applied SER to smart affective services, using CNN architectures with rectangular filters to show the effectiveness of SER for smart health care centers. Mao et al. [9] improved the effectiveness of SER for real-time applications by using salient discriminative feature analysis for feature extraction, increasing its value for HCI. Min et al. [10] used SER to describe emotion in movies, applying content analysis of arousal and violence features to estimate emotion intensity and emotion type hierarchically. Miguel et al. [11] addressed privacy in SER, using paralinguistic features and a privacy-preserving hashing method to recognize the speaker.

SER is an emerging area of research in which many techniques have been presented. Most researchers are working to find effective, salient, and discriminative features of speech signals for classification, in order to accurately detect the emotion of a speaker. Recently, researchers have used deep learning approaches to detect salient and discriminative features for SER. In convolutional neural networks, high-level features are built on top of low-level features, which capture lines, dots, curves, and shapes. Deep learning models (CNN, CNN-LSTM, DNN, DBN, and others) detect high-level salient features and achieve better accuracy than low-level handcrafted features, but the use of deep neural networks increases the computational complexity of the whole model. Several challenges remain in the SER domain: (i) current CNN architectures have not shown any significant improvement in accuracy or cost complexity in speech signal processing; (ii) RNNs and long short-term memory (LSTM) networks are useful for sequential data but are difficult to train effectively and are computationally more complex; (iii) most researchers have used frame-level representations and concatenation methods for feature fusion, which are not suitable for utterance-level SER; and (iv) large concatenated feature vectors suffer from data sparseness, and the exact boundaries of words cannot be detected.

To address these issues and challenges, we propose a novel CNN architecture that uses special strides rather than a pooling scheme to extract salient high-level features from spectrograms of speech signals. We detect the hidden patterns of speech signals in convolutional layers that use the special strides to down-sample the feature maps. Extensive experiments were conducted on two standard benchmark datasets, Interactive Emotional Dyadic Motion Capture (IEMOCAP) [12] and the Ryerson Audio-Visual Database of Emotional Speech and Song (RAVDESS) [13], to demonstrate the significance and efficiency of the proposed model in comparison with other state-of-the-art approaches. The detailed experiments and a discussion comparing the proposed method with baseline methods are given in the experimental section of this paper.

Our major contributions in this article are documented below:

Pre-Processing: SER requires refined, clean speech signals as input to ensure accurate prediction of emotions. Existing techniques in the SER literature pay little attention to preprocessing steps, which refine the data and help boost the accuracy of the final classifier. In this paper, we present a preprocessing strategy in which noise is removed through a novel adaptive thresholding technique, followed by the removal of silent portions from the audio data. Our preprocessing strategy thus plays a prominent role in the overall SER system.

CNN Model: Following the strategy of plain convolutional neural networks [14], we propose a new CNN architecture for SER, DSCNN, which learns salient and discriminative features in convolutional layers that use special strides, rather than pooling layers, to down-sample the feature maps. The DSCNN model is designed specifically for the SER problem using spectrograms.

Computational Complexity: We use a minimal number of convolutional layers with small receptive fields in the proposed CNN architecture to learn deep, salient, and discriminative features from speech spectrograms. The simple structure of the proposed CNN model increases accuracy while reducing computational complexity, as shown by the experiments.

The rest of the paper is organized as follows: Section 2 reviews the SER literature, Section 3 describes the proposed SER framework, Section 4 presents the extensive experimental results and a discussion of the proposed technique, and Section 5 concludes the paper and examines future work on speech emotion recognition.

3. Proposed Methodology

In this section, we present a CNN-based framework for SER. The proposed framework uses a discriminative CNN feature-learning scheme on spectrograms to identify the emotional state of the speaker. The proposed stride CNN architecture has an input layer, convolutional layers, and fully connected layers followed by a SoftMax classifier. A spectrogram of a speech signal is a 2D representation of frequencies with respect to time that carries more information than the text transcription for recognizing the emotions of a speaker. Spectrograms hold rich information that cannot be extracted or used once the audio speech signal is transformed into text or phonemes. Because of this capability, spectrograms improve speech emotion recognition. The main idea is to learn high-level discriminative features from speech signals; for this purpose we use a CNN architecture, for which the spectrogram is a well-suited input. In [8], spectrogram and MFCC features are used together with a CNN for SER and classification. In [32], spectrogram features are used to achieve good performance in SER. The key components of the proposed framework are described in the following sections.

3.1. Pre-Processing

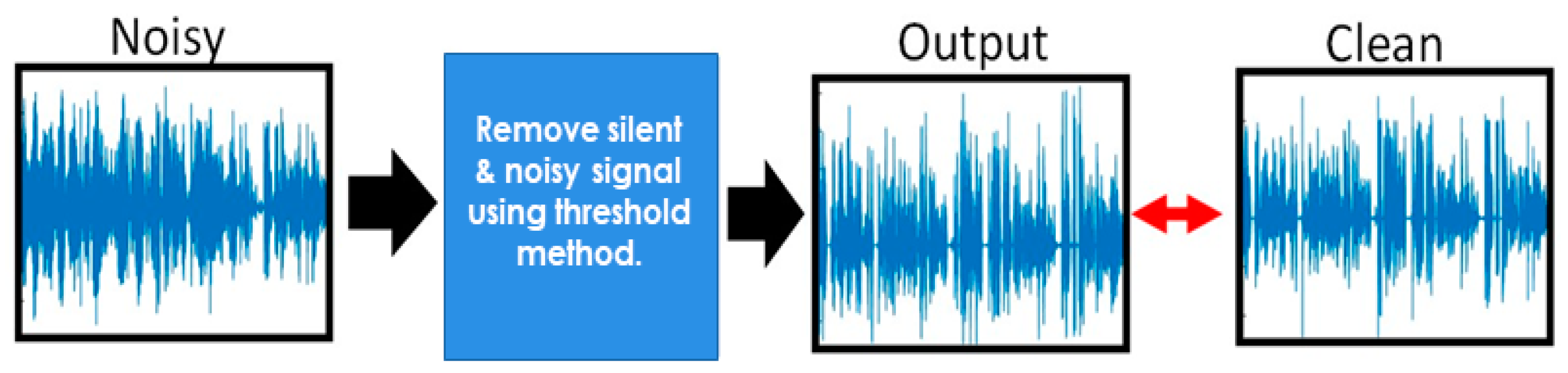

Pre-processing is an important part of preparing data to achieve model accuracy and efficiency. In this phase, we clean the audio signals, removing background noise, silent portions, and other irrelevant information from the speech signal with an adaptive threshold-based preprocessing method [33]. The method relies on the direct relationship between energy and amplitude in the speech signal: the amount of energy carried by a wave is correlated with the amplitude of the wave, so a high-energy wave has a high amplitude and a low-energy wave has a low amplitude. The amplitude of a wave is the maximum displacement of a particle of the medium from its rest position. This energy-amplitude relationship is used to remove silent and unnecessary portions from the speech signal in three steps. First, we read the audio file step by step at a sampling rate of 16,000 Hz. Second, we find the energy-amplitude relationship in the wave, compute the maximum amplitude in each frame using Equation (1), compare it against a suitable threshold to remove noise and silent portions, and save the retained frames in an array. Finally, we reconstruct a new audio file at the same sampling rate without noise or silent segments. In Equation (1), Ɗ represents the displacement of the particle, f denotes the frequency with respect to time t, and A is the peak of the signal, or amplitude. The block diagram of the pre-processing is shown in Figure 1.
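The three steps above can be sketched in a few lines of NumPy. This is only an illustration under stated assumptions, not the method of [33]: the function name `remove_silence`, the frame length of 400 samples, and the threshold ratio of 0.05 are hypothetical, and the threshold is simply taken as a fraction of the loudest frame's peak. The synthetic tone is generated as A sin(2πft), a form assumed here because it matches the symbol definitions given for Equation (1).

```python
import numpy as np

def remove_silence(signal, frame_len=400, ratio=0.05):
    """Drop frames whose peak amplitude is below an adaptive threshold.

    frame_len and ratio are hypothetical illustration values.
    Samples after the last full frame are discarded.
    """
    signal = np.asarray(signal, dtype=np.float64)
    n_frames = len(signal) // frame_len
    if n_frames == 0:
        return signal.copy()
    frames = signal[: n_frames * frame_len].reshape(n_frames, frame_len)
    peaks = np.abs(frames).max(axis=1)   # maximum amplitude of each frame
    threshold = ratio * peaks.max()      # adapts to the loudest frame
    kept = frames[peaks >= threshold]    # keep only frames above the threshold
    return kept.reshape(-1)              # reconstructed signal, same sampling rate

SAMPLE_RATE = 16_000
t = np.arange(SAMPLE_RATE) / SAMPLE_RATE
tone = 0.8 * np.sin(2 * np.pi * 220.0 * t)   # assumed form: A * sin(2*pi*f*t)
silence = np.zeros(SAMPLE_RATE)
audio = np.concatenate([silence, tone, silence])

cleaned = remove_silence(audio)
print(len(audio), len(cleaned))
```

In this toy input, the one-second tone is surrounded by one second of silence on each side, so only the tone frames survive the threshold. Real recordings contain low-level noise rather than exact zeros, so the ratio would need to be tuned.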

3.2. Spectrogram Generation

The dimensionality of the speech signal is one of the challenges in SER with a 2D CNN. Since the main aim of this research is to learn high-level features from speech signals using a CNN model, we must convert the one-dimensional speech signal into an appropriate 2D representation for the 2D CNN. The spectrogram is a suitable two-dimensional representation of audio speech signals, representing the strength of the speech signal over different frequencies [8].

The short-time Fourier transform (STFT) is applied to the speech signal to obtain a visual representation of frequencies over time. The STFT divides a longer speech signal into shorter segments, or frames, of equal length and then applies the fast Fourier transform (FFT) to each frame to compute its Fourier spectrum. In a spectrogram, the x-axis represents time t and the y-axis represents the frequencies f of each short-time frame. The spectrogram S contains multiple frequencies f over different times t of the corresponding speech signal, S(t, f). Dark colors in the spectrogram indicate frequencies of low magnitude, whereas light colors indicate frequencies of higher magnitude. Spectrograms are well suited to a variety of speech analysis tasks, including SER [34]. Samples of the spectrograms extracted from the audio files by applying the STFT are shown in Figure 2.
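As a rough illustration of the framing and per-frame FFT described above, the following NumPy sketch computes a log-magnitude spectrogram. The window type (Hann), FFT size of 256, and hop of 128 samples are assumptions chosen for the example; the text does not state the STFT parameters used, and the resizing to the final image size is not reproduced here.

```python
import numpy as np

def stft_spectrogram(signal, n_fft=256, hop=128):
    """Log-magnitude spectrogram of shape (n_fft // 2 + 1, n_frames).

    n_fft, hop, and the Hann window are hypothetical illustration choices.
    """
    signal = np.asarray(signal, dtype=np.float64)
    window = np.hanning(n_fft)
    n_frames = 1 + (len(signal) - n_fft) // hop
    # Split the signal into equal-length, overlapping frames.
    frames = np.stack(
        [signal[i * hop : i * hop + n_fft] * window for i in range(n_frames)]
    )
    spectrum = np.fft.rfft(frames, axis=1)   # FFT of every frame
    magnitude = np.abs(spectrum).T           # rows: frequency f, columns: time t
    return 20.0 * np.log10(magnitude + 1e-10)

SAMPLE_RATE = 16_000
t = np.arange(SAMPLE_RATE) / SAMPLE_RATE
signal = np.sin(2 * np.pi * 1000.0 * t)      # 1 kHz test tone

S = stft_spectrogram(signal)
peak_bin = int(S.mean(axis=1).argmax())
peak_hz = peak_bin * SAMPLE_RATE / 256
print(S.shape, peak_bin, peak_hz)
```

For the 1 kHz test tone, the strongest row of S falls in the frequency bin that corresponds to 1000 Hz, which is the bright horizontal band one would see in the plotted spectrogram.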

3.3. CNN

CNNs are current state-of-the-art models used to extract high-level features from low-level raw pixel information. A CNN uses a number of kernels to extract high-level features from images, and these features are used to train the CNN model to perform classification tasks [34]. A CNN architecture combines three components. The first is the convolutional layer, which contains a number of filters that are applied to the input; every filter scans the input using a dot product and summation to produce the feature maps of a single convolutional layer. The second is the pooling layer, which reduces, or down-samples, the dimensionality of the feature maps; common schemes include max pooling, min pooling, and mean (average) pooling. The last is the fully connected (FC) layer, which mainly extracts global features that are fed to a SoftMax classifier to compute the probability of each class. A CNN arranges these layers in a hierarchical structure: convolutional layers (CL), pooling layers (PL), and then FC layers followed by the SoftMax classifier. The proposed architecture is explained in the following section.
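The three components can be made concrete with a toy NumPy example. It is a didactic sketch only: the function names, the 6 × 6 input, and the averaging kernel are hypothetical, and the convolution is written as the unflipped sliding dot product that CNN libraries commonly use. The `stride` argument is included to show that a strided convolution can shrink a feature map to the same size that pooling does.

```python
import numpy as np

def conv2d_valid(image, kernel, stride=1):
    """Slide the kernel over the image; each output is a dot product and sum."""
    kh, kw = kernel.shape
    out_h = (image.shape[0] - kh) // stride + 1
    out_w = (image.shape[1] - kw) // stride + 1
    out = np.empty((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            r, c = i * stride, j * stride
            out[i, j] = np.sum(image[r : r + kh, c : c + kw] * kernel)
    return out

def max_pool(fmap, size=2):
    """Non-overlapping max pooling over size x size blocks."""
    h, w = fmap.shape[0] // size, fmap.shape[1] // size
    blocks = fmap[: h * size, : w * size].reshape(h, size, w, size)
    return blocks.max(axis=(1, 3))

def softmax(logits):
    """Turn a vector of scores into class probabilities."""
    z = np.exp(logits - np.max(logits))
    return z / z.sum()

image = np.arange(36, dtype=float).reshape(6, 6)   # toy 6 x 6 input
kernel = np.ones((3, 3)) / 9.0                     # toy averaging filter

fmap = conv2d_valid(image, kernel)                 # convolutional layer: 4 x 4
pooled = max_pool(fmap)                            # pooling layer: 2 x 2
strided = conv2d_valid(image, kernel, stride=2)    # strided convolution: 2 x 2
probs = softmax(pooled.reshape(-1))                # scores to probabilities

print(fmap.shape, pooled.shape, strided.shape, probs.sum())
```

Both the pooled and the strided outputs are 2 × 2, but pooling needs an extra layer while the strided convolution down-samples in the same pass that extracts the features.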

3.4. Proposed Deep Stride CNN Architecture (DSCNN)

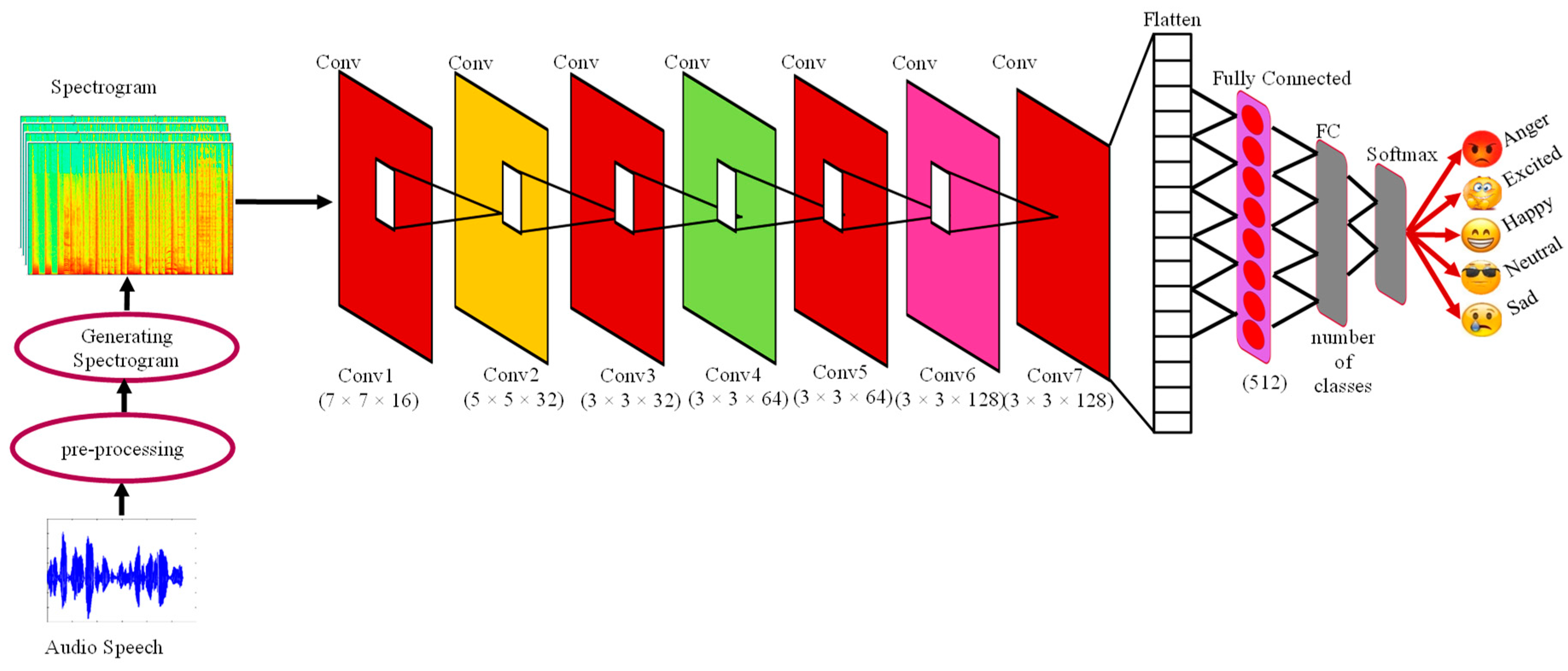

The proposed deep stride CNN model for SER is shown in Figure 3. Our deep stride CNN model is mainly inspired by the idea of plain nets [14], which were designed for computer vision problems such as image classification, localization, tracking, and recognition to achieve high accuracy [35]. We extend the plain network from image classification to speech emotion recognition and classification. We define a deep stride CNN architecture that mostly uses the same small filter size, (3 × 3), to learn deep features with a small receptive field in the convolutional layers. It follows two simple rules: layers that produce feature maps of the same size use the same number of kernels, and if the size of the feature maps is halved, the number of filters is doubled to maintain the time complexity per layer. Following this strategy, we designed the DSCNN model for SER, which uses a (2 × 2) stride to down-sample the feature maps directly in the convolutional layers rather than in pooling layers. DSCNN has nine (9) layers in total: seven (7) convolutional layers and two (2) fully connected layers, the last of which feeds a SoftMax to produce the probabilities of the speech emotions. The generated spectrograms are taken as input, and convolutional filters are applied to extract feature maps from a given speech spectrogram.

In the proposed architecture, the CLs are arranged consecutively. The first convolutional layer (C1) has 16 square kernels of size (7 × 7), applied to the input spectrogram with same padding and a stride of (2 × 2) pixels. Similarly, the second convolutional layer (C2) has 32 filters of size (5 × 5) with a (2 × 2) stride. The C3 layer uses the same number of filters, stride, and padding as C2, but its filters are of size (3 × 3). The C4 and C5 layers have 64 kernels of size (3 × 3) with a stride of (2 × 2) pixels. In the same way, the C6 and C7 layers have 128 kernels of size (3 × 3) with the same stride and padding. The last convolutional layer is followed by a flattening layer that converts the data into vector form, and the features are then fed to the FC layers. The first FC layer has 512 neurons, and the last FC layer has as many neurons as there are classes. The proposed DSCNN uses the rectified linear unit (ReLU) activation function followed by batch normalization after every convolutional layer to regularize the model. The first FC layer is followed by dropout with a 25% ratio to deal with model overfitting [35]. The last FC layer feeds the SoftMax classifier, which calculates the probability of each class. The DSCNN is designed for SER using spectrograms. It contains convolutional layers and FC layers, eliminates the pooling layer from the whole network, and uses mostly the same small filter size together with special strides. The first CL has a large kernel size to learn local features; the number of kernels then increases step by step while the size and shape of the filters remain the same, in order to extract discriminative features from spectrograms. The key components of this architecture are the use of strides for down-sampling in place of pooling layers, the use of the same filter size and shape throughout most of the network to learn deep features, and a limited number of FC layers to avoid redundancy. Owing to these characteristics, the proposed DSCNN model captures robust salient features from spectrograms.
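The layer listing above can be checked with simple shape arithmetic. The sketch below is a reading of the text, not the authors' implementation, and it rests on several assumptions: every convolutional layer uses a stride of 2 with same padding (so the output size is the input size divided by 2, rounded up), the input is a single-channel 128 × 128 spectrogram, and the class count of 4 is a placeholder. Batch normalization parameters are ignored, and the result is not meant to reproduce the reported model size.

```python
import math

# (name, number of kernels, kernel size, stride) as listed in the text.
# A stride of 2 in every layer is an assumption drawn from "same stride".
CONV_LAYERS = [
    ("C1", 16, 7, 2),
    ("C2", 32, 5, 2),
    ("C3", 32, 3, 2),
    ("C4", 64, 3, 2),
    ("C5", 64, 3, 2),
    ("C6", 128, 3, 2),
    ("C7", 128, 3, 2),
]

def trace_shapes(size=128, in_channels=1, fc_units=512, num_classes=4):
    """Return (name, spatial size, channels or units, parameter count) rows.

    in_channels=1 and num_classes=4 are hypothetical illustration values.
    """
    rows = []
    channels = in_channels
    for name, out_ch, k, s in CONV_LAYERS:
        size = math.ceil(size / s)                     # 'same' padding, stride s
        params = k * k * channels * out_ch + out_ch    # weights plus biases
        rows.append((name, size, out_ch, params))
        channels = out_ch
    flat = size * size * channels                      # flattening layer
    rows.append(("FC1", 1, fc_units, flat * fc_units + fc_units))
    rows.append(("FC2", 1, num_classes, fc_units * num_classes + num_classes))
    return rows

rows = trace_shapes()
for name, size, width, params in rows:
    print(f"{name}: {size}x{size} spatial, {width} channels/units, {params} params")
```

Under these assumptions the feature map is halved at every convolutional layer, from 128 × 128 at the input down to 1 × 1 after C7, so the flattened vector fed to the first FC layer has 128 values. If some layers in the actual model use a stride of 1, the intermediate sizes and the parameter count would be larger.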

3.5. Model Organization and Computational Setup

The proposed DSCNN model is implemented in Python using the scikit-learn package for machine learning and other resources. A spectrogram of size 128 × 128 is generated from each file. The generated spectrograms are split 80%/20% for training and testing, respectively. The proposed DSCNN model for SER was trained and evaluated on a single NVIDIA GeForce GTX 1070 GPU with 12 GB of on-board memory. The model was trained for 50 epochs with a learning rate of 0.001 and a decay applied every 10 epochs. The batch size was 128 throughout training, and the best accuracy was achieved after 49 epochs, with a training loss of 0.3215 and a validation loss of 0.5462. The model trains in very little time and has a small model size (34.5 MB), indicating its computational simplicity.
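The data split and the learning rate schedule can be illustrated with the standard library alone. This is a sketch under assumptions: the helper names, the random seed, and the dataset size of 1000 spectrograms are hypothetical, epochs are counted from zero, and the decay factor of 0.5 is a placeholder because the text states only that the rate is decayed every 10 epochs.

```python
import random

def split_indices(n_items, train_fraction=0.8, seed=0):
    """Shuffle item indices and split them into training and testing sets."""
    indices = list(range(n_items))
    random.Random(seed).shuffle(indices)
    cut = int(round(n_items * train_fraction))
    return indices[:cut], indices[cut:]

def step_decay(epoch, base_lr=0.001, drop=0.5, every=10):
    """Learning rate for a zero-based epoch; drop=0.5 is a hypothetical factor."""
    return base_lr * drop ** (epoch // every)

# 1000 is a hypothetical number of spectrograms.
train_idx, test_idx = split_indices(1000)
schedule = [step_decay(epoch) for epoch in range(50)]

print(len(train_idx), len(test_idx))
print(schedule[0], schedule[10], schedule[49])
```

With these settings the rate stays at 0.001 for the first 10 epochs and is multiplied by the assumed factor at epochs 10, 20, 30, and 40.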