1. Introduction

Building segmentation from remote-sensing (RS) images plays an important role in monitoring and modeling urban landscape change. Quantifying these changes delivers useful products to individual users and public administrations and, most importantly, supports sustainable urban development by balancing economic and environmental benefits [1,2,3,4,5]. By analyzing Landsat TM/ETM+ data from the 1990s, 2000s and 2010s, one study estimated China’s urban expansion in terms of the urban built-up area, the area of cropland converted to urban use, and the speed of urbanization in different cities [6]. It gave clues on the relationship among urbanization, the land use efficiency of urban expansion and population growth. Moreover, these factors are closely related to carbon emissions, climate change and urban environmental development, and understanding them facilitates urban planning and management [7].

A large number of computational methods have been developed for RS image segmentation [8], since manual annotation of high-resolution RS images is time-consuming, labor-intensive and error-prone; developing high-performance methods for RS image analysis is therefore urgent. Techniques for image segmentation can be broadly grouped into semi-automatic and fully automatic methods. Semi-automatic methods require user assistance, and graph cuts is one of the most notable examples [9,10,11,12]. The method takes the intensity, textures and edges of an image into consideration; after some pixels are manually assigned to background, foreground or unknown regions, it addresses binary segmentation by using Gaussian mixture models [13]. Finally, one-shot object segmentation is achieved through iterative energy minimization. Wang et al. [14] integrated a graph cuts model into spectral-spatial classification of hyperspectral images; in each smoothed probability map, the model extracted the objects belonging to a certain information class. Peng et al. [15] combined a visual attention model with a graph cuts model to extract rare-earth ore mining areas from high-resolution RS images. Notably, semi-automatic methods enable a user to incorporate prior knowledge, validate results and correct errors during iterative image segmentation.

Fully automated methods for RS image analysis are imperative, particularly as the spatial and temporal resolution of RS imaging continues to increase considerably. Approaches to fully automated segmentation of RS images can generally be divided into conventional methods and deep learning (DL) methods. The former are based on the analysis of pixels, edges, textures and regions [8,16,17]. Hu et al. [18] designed an approach consisting of algorithms for determining region-growing criteria, edge-guided image object detection and edge assessment. The approach detected image edges with embedded confidence and stored the edges in an R-tree structure. Initial objects were then coarsely segmented and organized in a region adjacency graph. Finally, multi-scale segmentation was incorporated and the curve of edge completeness was analyzed. Interestingly, some methods incorporate machine learning principles and recast RS image segmentation as a pixel- or region-level classification problem [19,20]. However, parameters in most approaches are set empirically or tuned toward high performance, and thus their generalization capacity might be restricted.

Recently, DL has revolutionized image representation [21], visual understanding [22], numerical regression [23] and cancer diagnosis [24]. Many novel methods have been developed for RS image segmentation [25,26,27,28,29]. Volpi and Tuia [30] presented a fully convolutional neural network (FCN) that achieved high geometric accuracy in land-cover prediction. Kampffmeyer et al. [31] incorporated median frequency balancing and uncertainty estimation to address class imbalance in the semantic segmentation of small objects in urban images. Langkvist et al. [32] compared various design choices of a deep network for land use classification, in which land areas were labeled as vegetation, ground, roads, buildings and water. Wu et al. [33] explored an ensemble of convolutional networks for better generalization and less over-fitting; furthermore, an alignment framework was designed to balance similarity and variety in multi-label land-cover segmentation. Alshehhi et al. [34] proposed a convolutional network model for the extraction of roads and buildings; to improve segmentation performance, low-level features of roads and buildings were integrated with deep features for post-processing. Vakalopoulou et al. [35] used deep features to represent image patches and employed a support vector machine to differentiate buildings from background regions. Gao et al. [36] designed a deep residual network consisting of a residual connected unit and a dilated perception unit; in the post-processing stage, a morphologic operation and a tensor voting algorithm were employed. Yuan [37] proposed a deep network with a final stage that integrated activations from multiple preceding stages for pixel-wise prediction. The network introduced the signed distance function of building boundaries as the output representation, which improved segmentation performance.

DL methods have achieved promising results in the automated segmentation of objects in RS images. However, DL requires considerable data for hyper-parameter optimization [38]. In RS imaging, collecting sufficient images with accurately annotated labels is challenging, since many objects of interest are buried in a complex background and spread over a large mapped area [39]. To address this issue, the concept of “one view per city” (OVPC) is proposed. It aims to make the most of one RS image for parameter setting during model training, in the hope that the trained model can handle the remaining images of the same city. As such, the challenges could be relieved to some extent. Because only one image per city is required, the time and labor spent on labeling ground truth for specific purposes, as well as on model training, can be reduced. Moreover, an algorithm is trained and tested on images from the same city, so the intrinsic similarity between the foreground and background regions can be well explored. In fact, the concept comes from the observation that buildings of the same city in single-source RS images show similar intensity distributions. To verify its feasibility, a proof-of-concept study is conducted in which five FCN models are evaluated on the segmentation of buildings in RS images, using five cities in the Inria Aerial Image Labeling (IAIL) database [40].

The rest of this paper is organized as follows. Section 2 presents the observation that buildings of the same city acquired by the same sensor show similar intensity distributions.

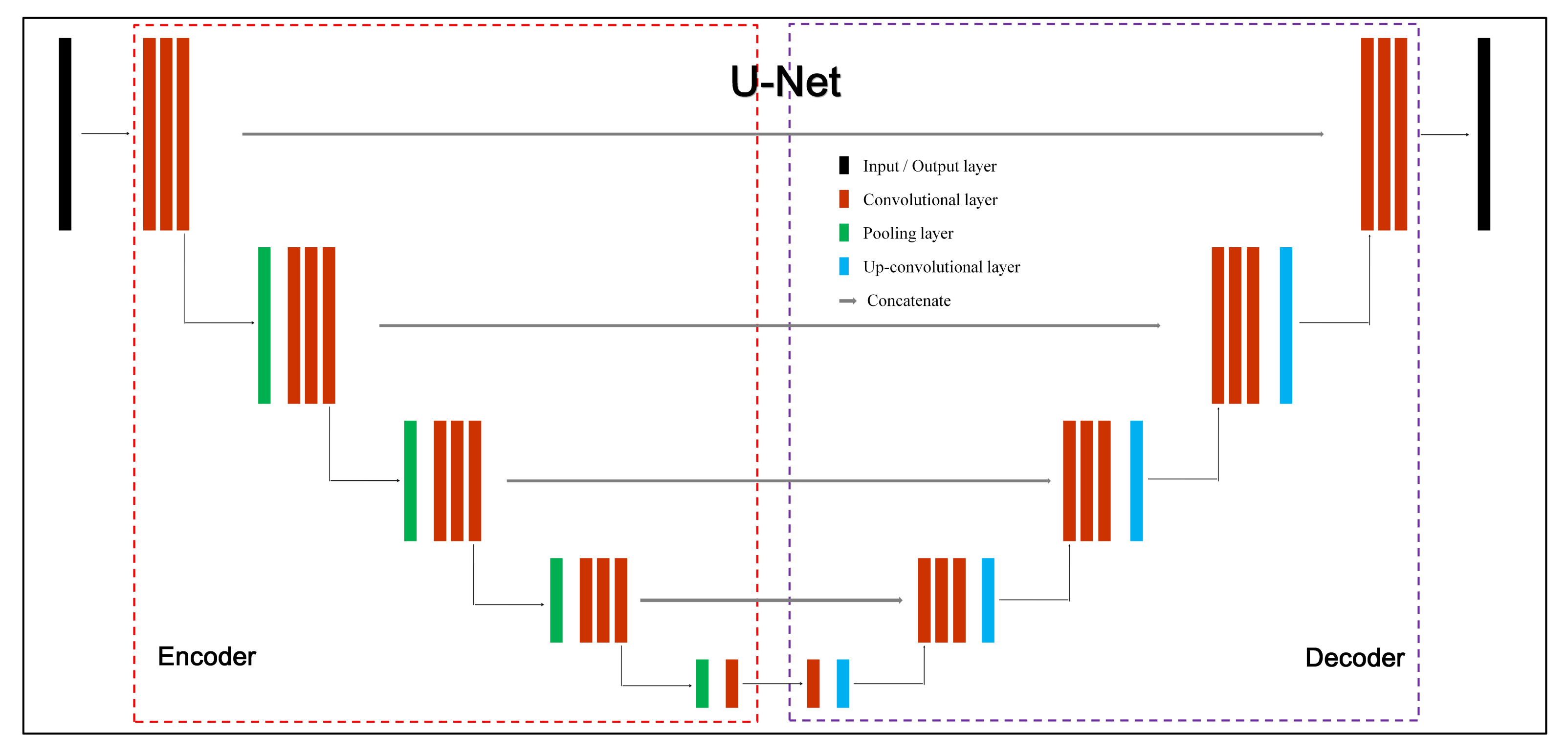

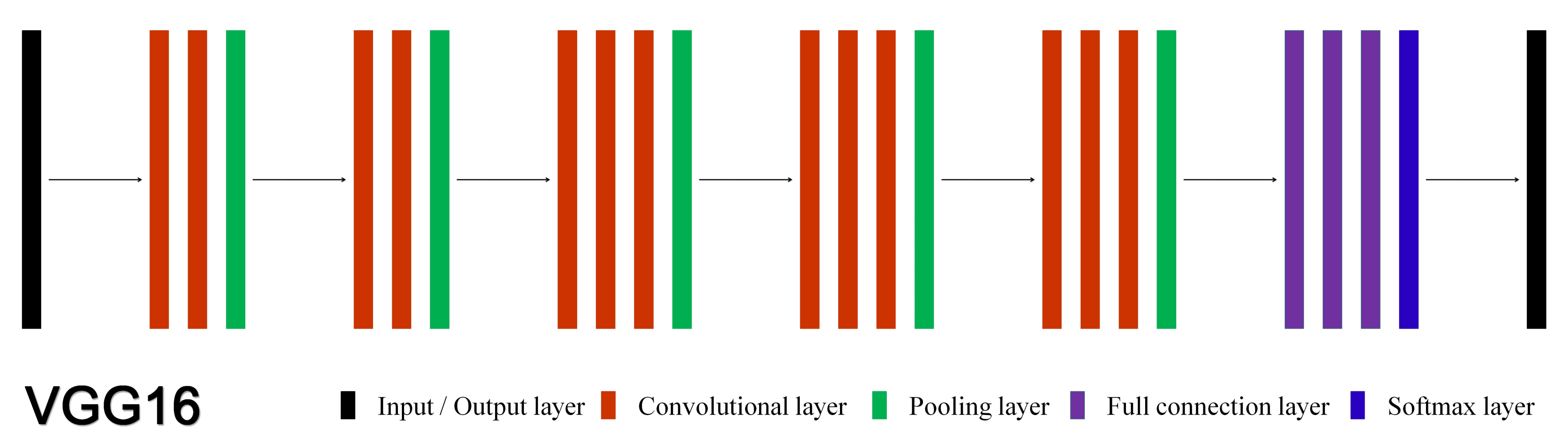

Section 3 describes the FCN models involved and then introduces the data collection, experiment design, performance metrics and algorithm implementation. Section 4 presents the experimental results and Section 5 discusses the findings. This proof-of-concept study is concluded in Section 6.

2. One View Per City

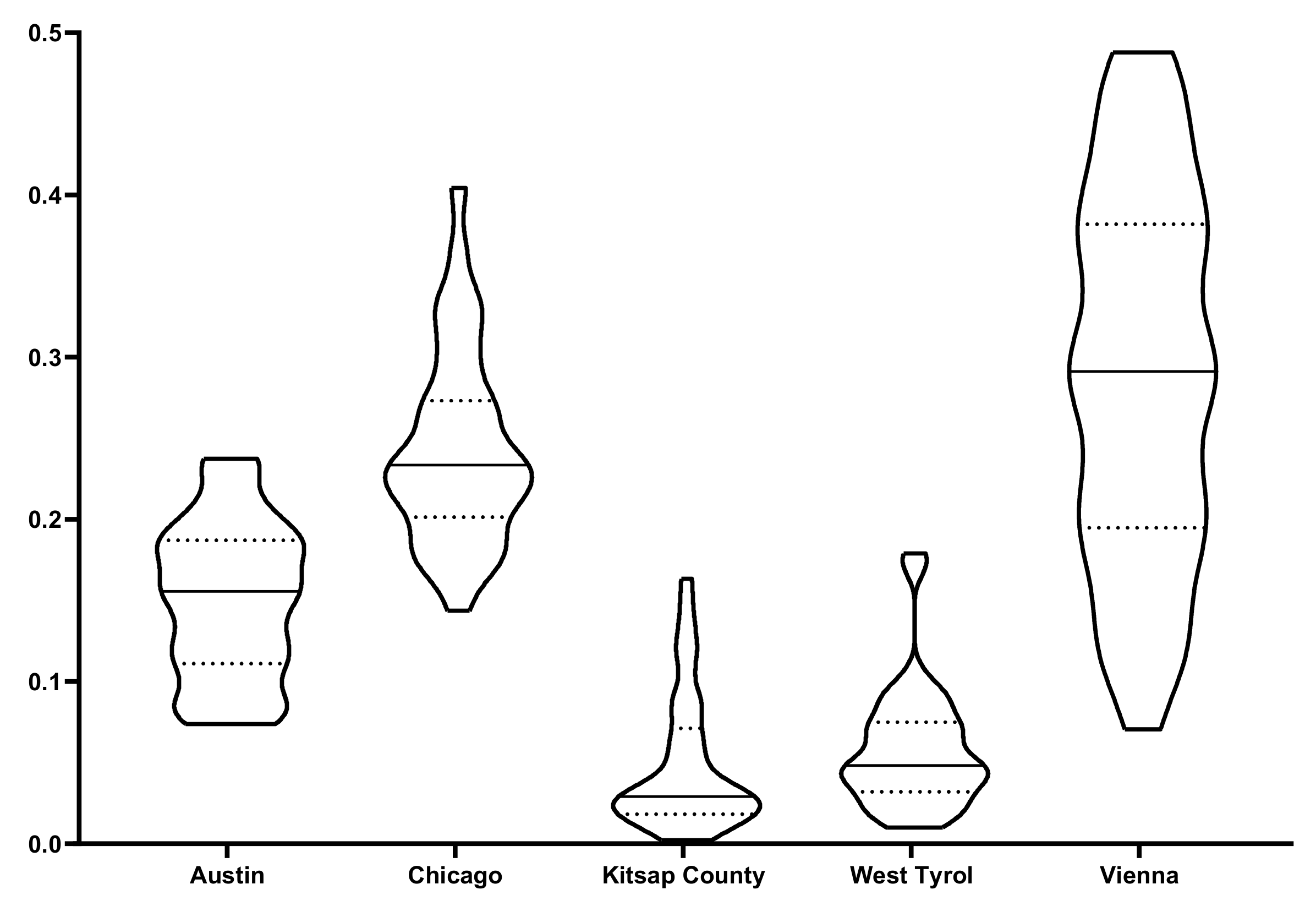

The proposal of the concept “one view per city” (OVPC) comes from the observation that most of buildings from a same city acquired by a same sensor demonstrate a similar appearance in RS images. To show the observation, the IAIL database [

40] is analyzed. Specially, the appearance of buildings is quantified with the distribution of pixel intensities in the annotated regions in RS images. As shown in

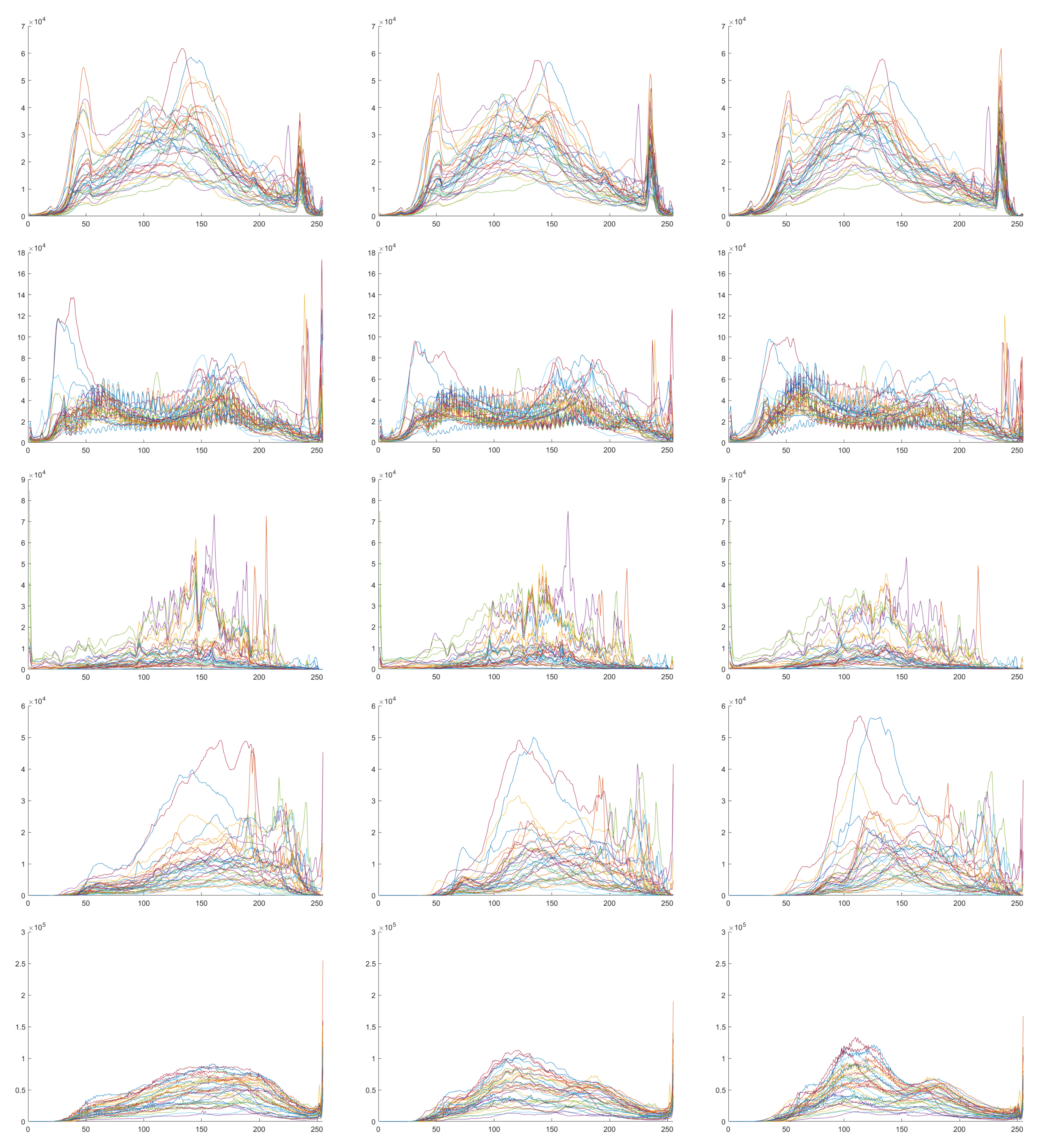

Figure 1, each row stands for a city (Austin, Chicago, Kitsap County, Western Tyrol and Vienna), each column indicates the red, green or blue channel of images, and each plot shows the intensity distributions of all 36 images. Moreover, in each plot, the horizontal axis shows the intensity range ([1, 255]), and the vertical axis shows the number of pixels to each intensity value.

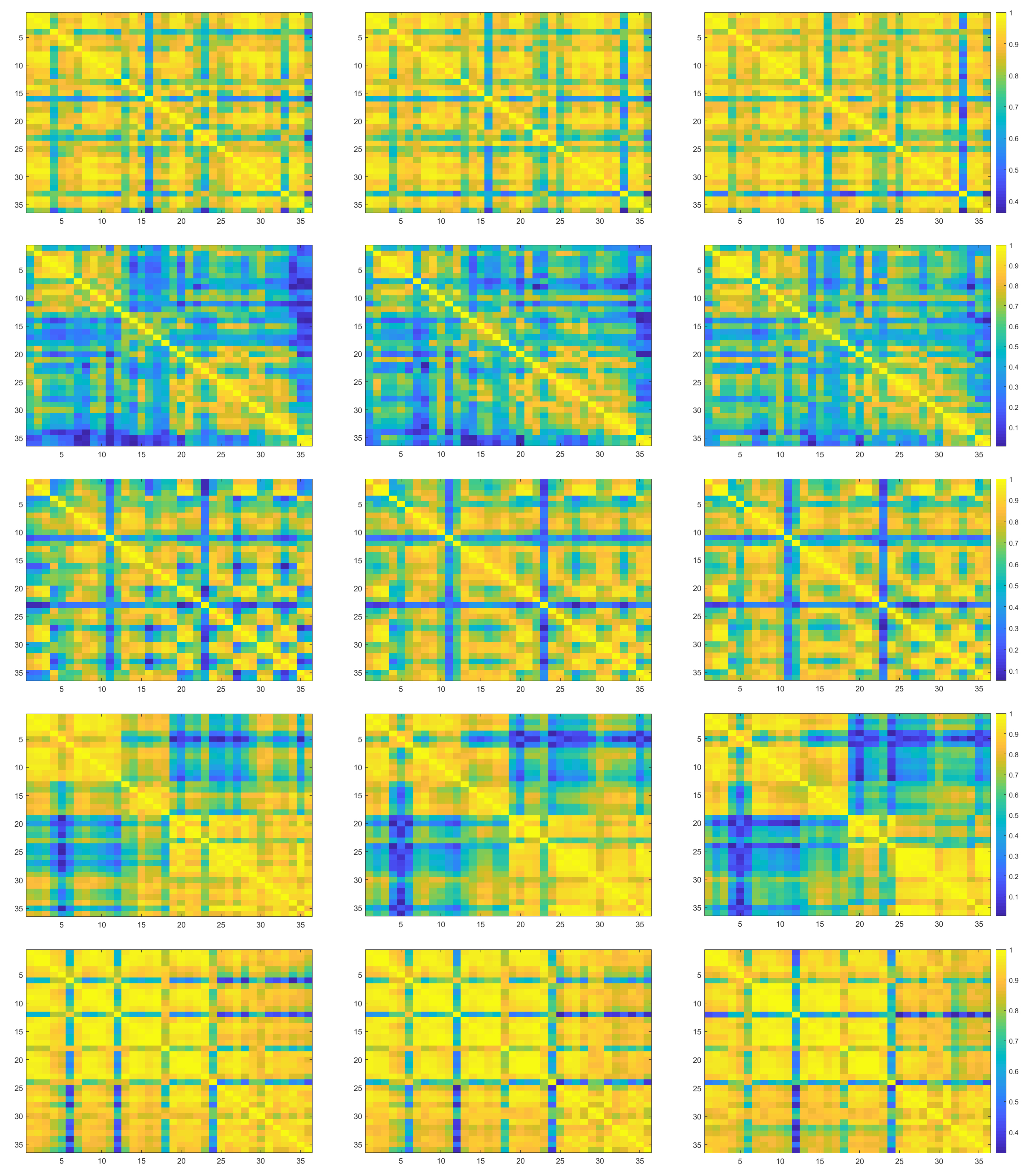

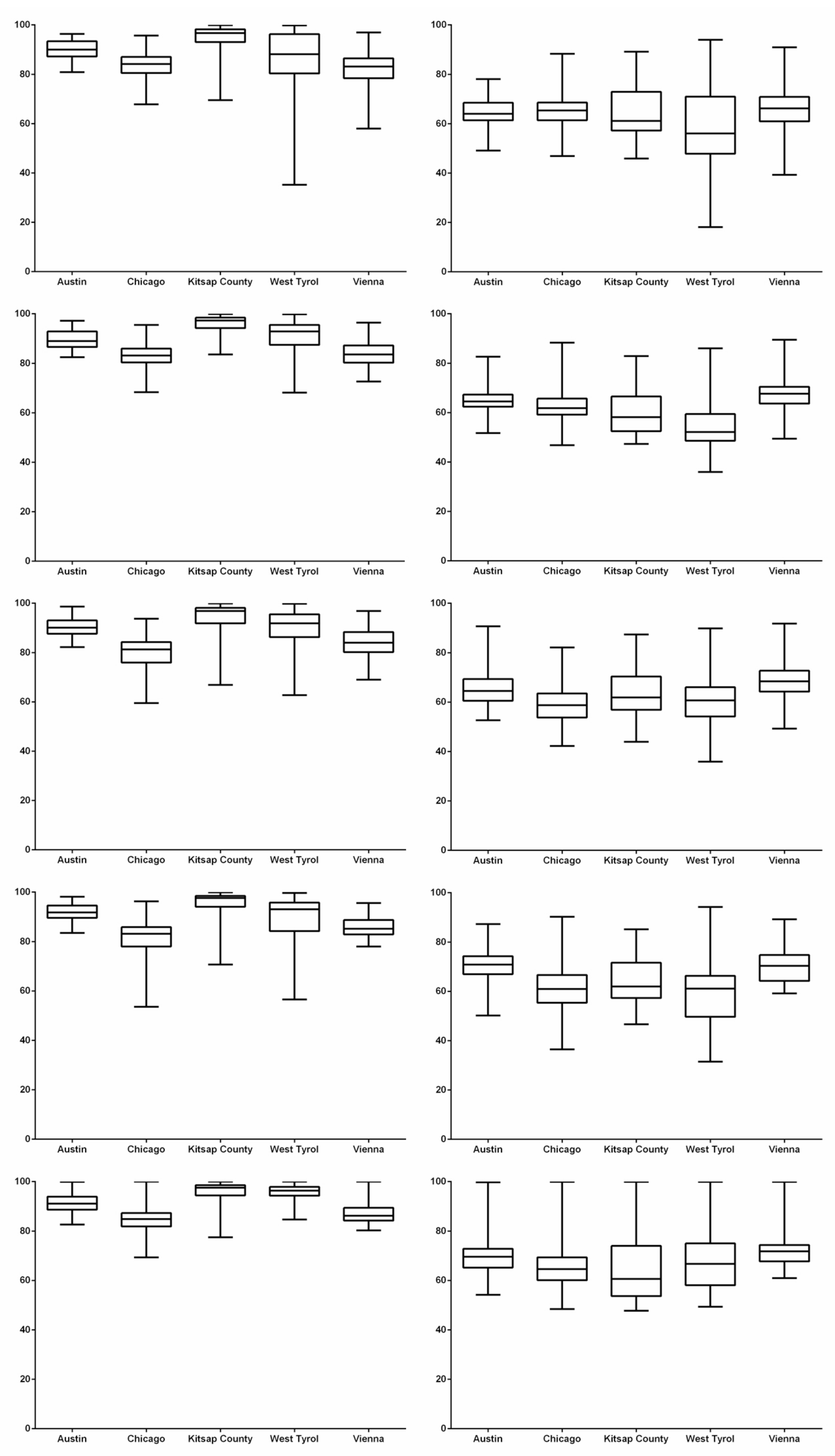

Pair-wise linear correlation coefficients (LCCs) of the pixel-intensity distributions are calculated. In Figure 2, each row stands for a city, each column corresponds to the red, green or blue channel, and each plot shows an LCC matrix. Note that in each plot, both the horizontal and the vertical axes show the image index. When 0.5 is used as the threshold on LCC values, 98.61% of RS image pairs from Austin exceed it, followed by Vienna (97.69%), Western Tyrol (82.72%), Kitsap County (75.15%) and Chicago (57.87%).
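
The histogram and LCC analysis described above can be sketched in a few lines of NumPy. This is a minimal illustration, not the implementation used in this study: the function names are hypothetical, 256 histogram bins are assumed, the LCC is taken to be the Pearson correlation coefficient, and the fraction is counted over distinct image pairs, which may differ from how the reported percentages were counted.

```python
import numpy as np


def building_histogram(image, mask, bins=256):
    """Per-channel intensity histogram of pixels annotated as building.

    image: (H, W, 3) uint8 array; mask: (H, W) boolean array.
    Returns a float array of shape (3, bins).
    """
    pixels = image[mask]  # shape (n_building_pixels, 3)
    return np.stack([
        np.bincount(pixels[:, c], minlength=bins)[:bins] for c in range(3)
    ]).astype(np.float64)


def lcc_matrix(histograms):
    """Pair-wise Pearson correlation between the rows of (n_images, bins)."""
    return np.corrcoef(histograms)


def fraction_above(lcc, threshold=0.5):
    """Fraction of distinct image pairs whose LCC exceeds the threshold."""
    upper = lcc[np.triu_indices_from(lcc, k=1)]  # pairs (i, j) with i < j
    return float(np.mean(upper > threshold))
```

For one city and one color channel, stacking the corresponding histogram row of each of the 36 images into a (36, 256) array and passing it to `lcc_matrix` would give a matrix comparable to one plot of Figure 2, under the assumptions stated above.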

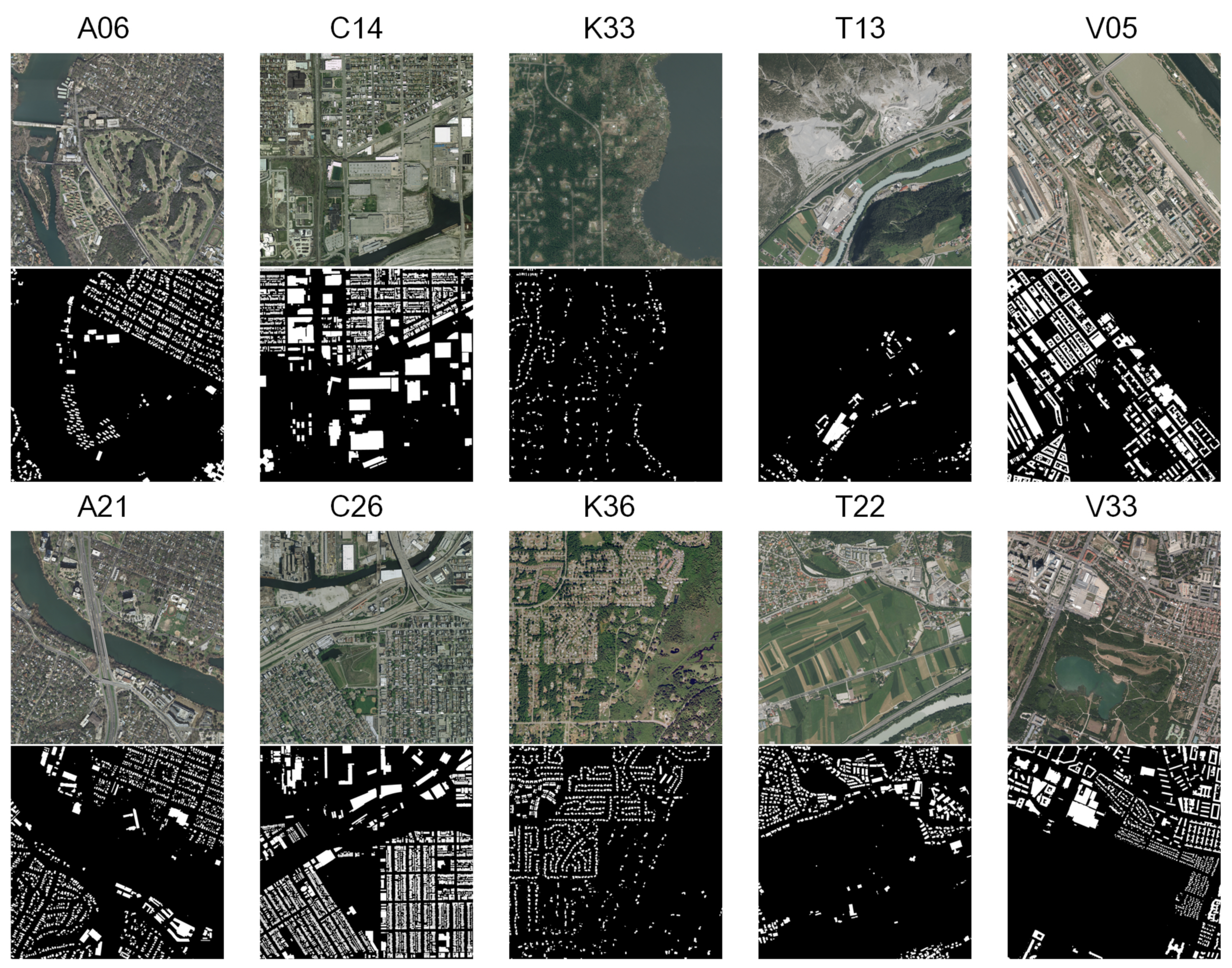

The observation can also be perceived visually. Figure 3 shows the 6th and 21st RS images of Austin (denoted A06 and A21), the 14th and 26th of Chicago (C14 and C26), the 33rd and 36th of Kitsap County (K33 and K36), the 13th and 22nd of Western Tyrol (T13 and T22), and the 5th and 33rd of Vienna (V05 and V33). These cities can be visually distinguished from each other by comparing the dominant appearance of their buildings.

Both the quantitative comparison (Figure 2) and the visual observation (Figure 3) suggest that buildings of the same city have a similar appearance in single-source RS images. Intuitively, this kind of information redundancy can be exploited to address the issue of limited data in DL-based RS image analysis. Therefore, OVPC might benefit pixel-wise segmentation of buildings in RS images.

5. Discussion

This study proposes the concept of OVPC, which aims to relieve, to some degree, the requirement for a large-scale data set in DL-based RS image analysis. The concept is inspired by the observation that buildings of the same city share a similar appearance in single-source RS images. This study illustrates the observation quantitatively (Figure 2) and qualitatively (Figure 3). Furthermore, the concept is verified on building segmentation in RS images via DL methods, with five FCN models evaluated on RS images of five cities in the IAIL database. Finally, its pros and cons are analyzed through an intra-city test, an inter-city test and time consumption.

The building regions of the same city share similar intensity distributions in RS images. The quantitative analysis (pair-wise LCCs) of intensity histograms indicates that building regions in the RS images from Austin correlate most strongly, followed by the images from Vienna (Figure 2). The similar appearance can also be observed by visual comparison (Figure 3). Therefore, the proposed concept is reasonable, and the similarity in intensity distributions can be used in RS image analysis. Further, it might reduce the time and labor spent on data annotation, algorithm design and parameter optimization.

In this proof-of-concept study, the intra-city test shows that the OVPC-based Unet outperforms the other networks (Table 2 and Table 3), but its generalization capacity is inadequate, as shown in the inter-city test (Table 5 and Table 6). This result is similar to the findings in [60], which suggest that the Unet architecture is well suited for dense image labeling, while the outcome of the cross-city test is not yet satisfactory (ACC ≈ 95% and IoU ≈ 73%). In particular, the intra-city test shows that the FCN models achieve moderate to excellent performance. The ACC metric indicates that RS images are correctly partitioned into building and background regions (>80%), particularly for the images from Kitsap County and Austin (Table 2), whereas the IoU metric shows that background regions are misclassified as building regions, with several values below 60%, such as SegNet on Chicago (Table 3). Unsurprisingly, the inter-city test finds that it is challenging to isolate the building regions of one city accurately and precisely with an OVPC-based Unet model trained on another city (Table 5 and Table 6). In detail, ACC values decrease slightly (Table 5), while IoU values drop by around 10% in building segmentation (Table 6).
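
The gap between high ACC and moderate IoU can be reproduced on a toy example. The sketch below assumes the usual definitions (ACC as the fraction of correctly labeled pixels, IoU as intersection over union of the building class); the function names are hypothetical and the masks are synthetic, not data from this study.

```python
import numpy as np


def accuracy(pred, truth):
    """Fraction of pixels whose predicted label matches the ground truth."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    return float(np.mean(pred == truth))


def building_iou(pred, truth):
    """Intersection over union of the building (True) class."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    union = np.logical_or(pred, truth).sum()
    if union == 0:
        return 1.0  # convention chosen here: two empty masks agree
    return float(np.logical_and(pred, truth).sum() / union)


# Synthetic 100 x 100 scene in which buildings cover 5% of the pixels.
truth = np.zeros((100, 100), dtype=bool)
truth[0:20, 0:25] = True
# The prediction has the right size but is shifted by 10 columns.
pred = np.zeros((100, 100), dtype=bool)
pred[0:20, 10:35] = True

print(accuracy(pred, truth))                 # 0.96
print(round(building_iou(pred, truth), 3))   # 0.429
```

Because background pixels dominate, a prediction that misses a large part of the building area still scores 96% accuracy, while its IoU falls to about 43%.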

For OVPC-based deep models, the promising results in the intra-city test mainly come from the strong image representation of deep networks, which can represent complex patterns with hierarchical features. Moreover, these models have demonstrated their capacity for pixel-wise semantic segmentation in various fields, such as computer vision [46] and biomedical imaging [42,44,45]. In particular, OVPC uses a large number of patches (i.e., 15,625) from one image as the input to the deep networks, and the information redundancy of building appearance is then exploited to segment buildings in other images from the same city. On the other hand, the reasons for the moderate IoU values are manifold. First, OVPC decreases the image representation capacity of DL models because of the limited training samples. Furthermore, the fraction of the RS image occupied by building regions is tiny, such as 0.0485 ± 0.0420 for Kitsap County (Figure 6), which makes it much harder for deep networks to learn an effective representation. Second, some key components, such as the loss function, should be fine-tuned or carefully designed [60]. From a technical point of view, data augmentation, batch normalization and transfer learning can be further integrated to improve segmentation performance. In addition, RS image segmentation is a long-standing problem. Owing to the distinct cultures of western countries, buildings in RS images vary in size, shape and distribution. For instance, the buildings in Kitsap County are scattered, while buildings in Chicago are densely distributed and mostly small [54]. Furthermore, boundaries between buildings are ambiguous, which makes accurate segmentation challenging.
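
As an illustration of how a single image can yield thousands of training patches, the sketch below tiles an image on a regular grid. The patch size and stride are not stated in this section, so the values used here are assumptions: a 5000 × 5000 pixel image cut into non-overlapping 40 × 40 patches is simply one combination that gives 15,625 patches. The function names are hypothetical.

```python
import numpy as np


def patch_count(size, patch, stride):
    """Number of patches obtained from a square image of side `size`."""
    per_side = (size - patch) // stride + 1
    return per_side * per_side


def tile_image(image, patch, stride):
    """Cut an (H, W, C) image into square patches on a regular grid."""
    h, w = image.shape[:2]
    rows = range(0, h - patch + 1, stride)
    cols = range(0, w - patch + 1, stride)
    return np.stack(
        [image[r:r + patch, c:c + patch] for r in rows for c in cols]
    )


# Assumed setting: 5000 x 5000 image, 40 x 40 patches, no overlap.
print(patch_count(5000, 40, 40))  # 15625
```

Overlapping patches (a stride smaller than the patch size) would produce the same count with larger patches only for specific size and stride combinations, so the actual setting should be taken from the experiment design rather than from this sketch.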

This study suggests that OVPC is beneficial to RS image analysis. It requires only one RS image for model training, and thereby the time and labor of manual annotation can be reduced. Annotating a large number of images, in particular high-resolution RS images, is always an expensive task, and cross checking should be carried out to minimize the risk of false annotation [61]. To address this challenge, few-shot learning has become a hot topic in RS image classification [62,63,64]. Impressively, Song and Xu explored zero-shot learning for automatic target recognition in synthetic aperture radar (SAR) images [65]. Moreover, using a single RS image for model training might save computing resources and decrease the time cost of training. With a GPU card with 12 GB of memory, the experimental design, model implementation and time cost should be fully considered when a large number of samples are used as training input. On the IAIL database, when the entire data set is used as the input, one epoch would last more than 2.5 h, which is impractical [50]. In this study, one epoch takes about 2 to 5 min depending on the FCN model, and the whole model training takes 3.5 to 8.5 h in total. Based on the Unet with the same architecture and parameter settings, the time cost is further compared when different numbers of images are used as the input for model training. One epoch takes about 3.2 min for the OVPC-based Unet and ≈21.0 min for the Unet with 31 images as its input. In other words, the proposed OVPC-based Unet achieves competitive performance with dramatically decreased time consumption. More importantly, the performance of OVPC-based RS image analysis could be further improved when advanced networks are used, which can be observed by comparing the results in Table 2 and Table 3 with the recent outcomes in Table 4. This study uses original networks, such as U-Net [41], and advanced networks [50,51,52,53,54] can improve the segmentation results (Table 4). In detail, multi-task SegNet [52] embeds a multi-task loss that can leverage multiple output representations of the segmentation mask and meanwhile bias the network to focus more on pixels near boundaries; MSMT [53] is a multi-task multi-stage network that can handle both semantic segmentation and geolocalization using different loss functions in a unified framework; and GAN-SCA [54] integrates spatial and channel attention mechanisms into a generative adversarial network [59]. Finally, the proposed concept can be further extended to different types of RS images and applications. RS image segmentation is indispensable for measuring urban metrics [66,67], monitoring landscape changes [68] and modeling the pattern and extent of urban sprawl [69]. It is also important for defining urban typologies [5], classifying land use [4], managing the urban environment [2] and supporting sustainable urban development [6,7]. For accurate decision making, however, diverse techniques such as LiDAR and aerial imagery should be involved [70,71,72,73,74].

To further improve the performance of OVPC-based building segmentation in RS images, additional techniques could be considered. Above all, the concept requires that images be acquired by the same imaging sensor. To enhance its generalization capacity, a universal image representation is indispensable, one that transforms the source and target images into the same space. For instance, Zhang et al. [75] improved the Kalman filter and harmonized multi-source RS images for summer corn growth monitoring. Notably, generative adversarial networks [59] have been used to align panchromatic and multi-spectral images for data fusion [76]. These methods provide insights into how to generalize the proposed concept to multi-source RS image analysis. Moreover, data augmentation helps to enhance the representation capacity of deep networks (https://github.com/aleju/imgaug). Data transformation, shape deformation and various other distortions can be used to represent building characteristics. Attention can also be paid to architecture design, batch normalization and parameter optimization. Besides, transfer learning is suggested to enhance network performance [77], and it requires domain adaptation to balance the data distributions of the source and target domains [78]. In addition, beyond appearance, buildings could potentially be modeled by shape and texture, which would enrich our understanding of urban buildings in RS images. Last but not least, post-processing strategies can be employed, where prior knowledge and empirical experience are helpful.
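
For segmentation, any geometric augmentation has to be applied identically to the image and its label mask. The sketch below shows this with plain NumPy flips and 90-degree rotations; it is a simplified stand-in for illustration and does not use the imgaug library cited above. The function name `augment_pair` is hypothetical.

```python
import numpy as np


def augment_pair(image, mask, rng):
    """Apply one random rotation and flip jointly to an image and its mask.

    image: (H, W, C) array; mask: (H, W) array; rng: numpy Generator.
    """
    k = int(rng.integers(0, 4))  # number of 90-degree rotations
    image = np.rot90(image, k, axes=(0, 1))
    mask = np.rot90(mask, k, axes=(0, 1))
    if rng.random() < 0.5:  # horizontal flip with probability 0.5
        image = image[:, ::-1]
        mask = mask[:, ::-1]
    # Return contiguous copies so downstream code does not see views.
    return np.ascontiguousarray(image), np.ascontiguousarray(mask)
```

These transformations only rearrange pixels, so the building-to-background ratio of each patch is unchanged; intensity or shape distortions, as mentioned above, would need a richer augmentation pipeline.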

This study has some limitations. First, the pros and cons of the OVPC concept are not fully revealed. It would be better to use each of the 180 RS images (36 images per city × 5 cities) for OVPC-based building segmentation, but that would cost more than 1000 h of model training (≈6 h per experiment × 180 experiments) for one FCN model. Second, it would also be interesting to compare the OVPC-based approaches with multi-view-based approaches, although time consumption would increase dramatically. Fortunately, results of several multi-view-based approaches [50,51,52,53,54] are available for comparison, as shown in Table 4. In addition, this study focuses on one database, and the five cities reflect urban environments specific to western cities (America and Austria), whereas large databases covering cities worldwide [61,79] would be more general.
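
The time figures quoted in this discussion can be checked with simple arithmetic. The sketch below uses only numbers stated in the text; the function names are hypothetical, and the implied epoch count is an inference that assumes the lower and upper bounds of the per-epoch time and the total training time correspond to each other.

```python
def exhaustive_hours(images_per_city, cities, hours_per_experiment):
    """Total training time if every image is used once as the single view."""
    return images_per_city * cities * hours_per_experiment


def speedup(minutes_multi_view, minutes_ovpc):
    """Ratio of per-epoch times between multi-view and OVPC training."""
    return minutes_multi_view / minutes_ovpc


def implied_epochs(total_hours, minutes_per_epoch):
    """Number of epochs implied by a total time and a per-epoch time."""
    return total_hours * 60 / minutes_per_epoch


print(exhaustive_hours(36, 5, 6))     # 1080
print(round(speedup(21.0, 3.2), 1))   # 6.6
print(implied_epochs(3.5, 2))         # 105.0
print(implied_epochs(8.5, 5))         # 102.0
```

Under these assumptions, the exhaustive experiment would take about 1080 h per FCN model, one epoch of the 31-image Unet is roughly 6.6 times slower than one epoch of the OVPC-based Unet, and the reported totals are consistent with roughly 100 training epochs.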