Intensity-Assisted ICP for Fast Registration of 2D-LIDAR

Abstract

1. Introduction

2. Preliminaries

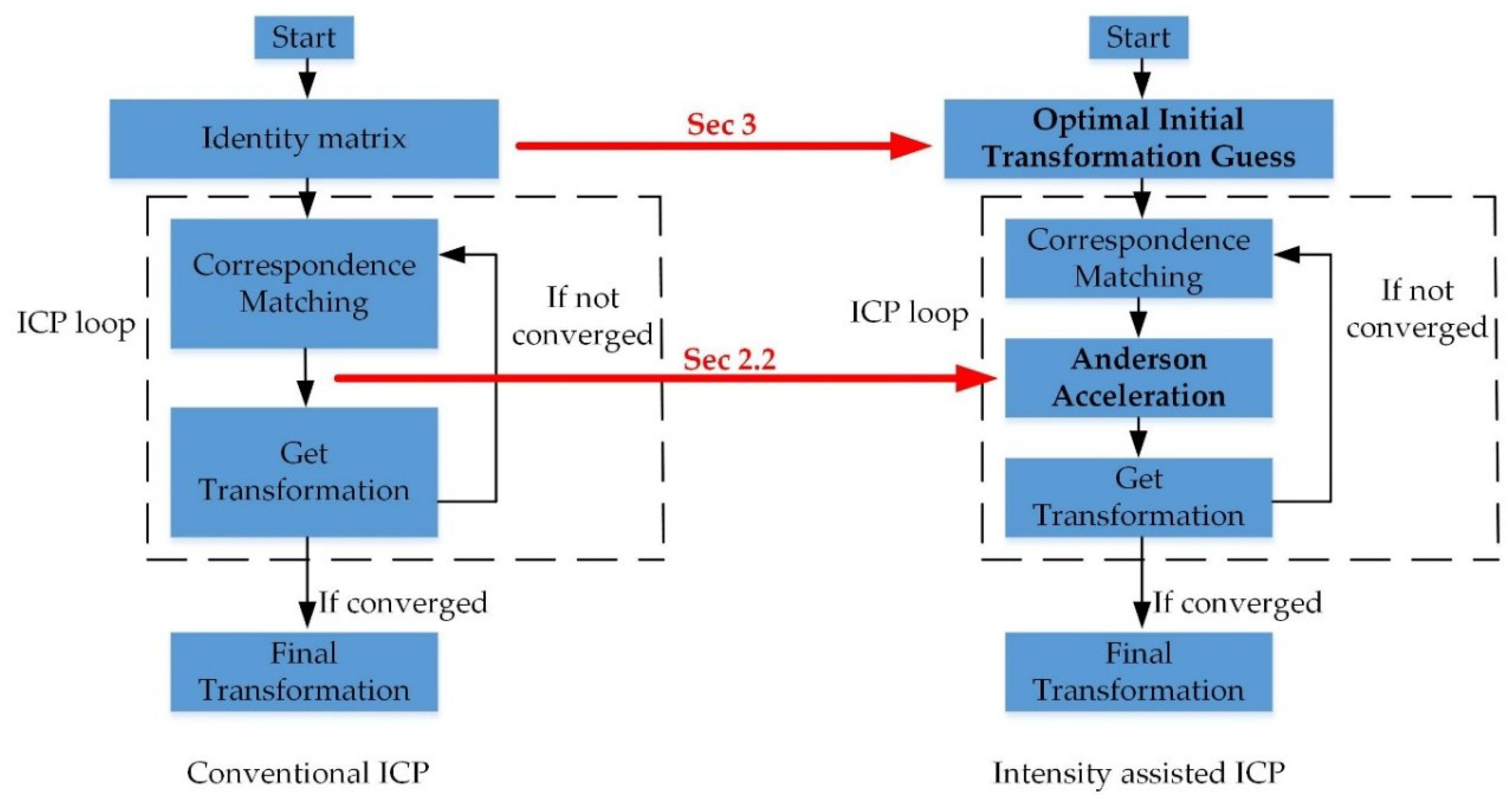

2.1. Conventional Iterative Closest Point (ICP)

2.2. Anderson Acceleration

3. Intensity-Assisted Iterative Closest Point

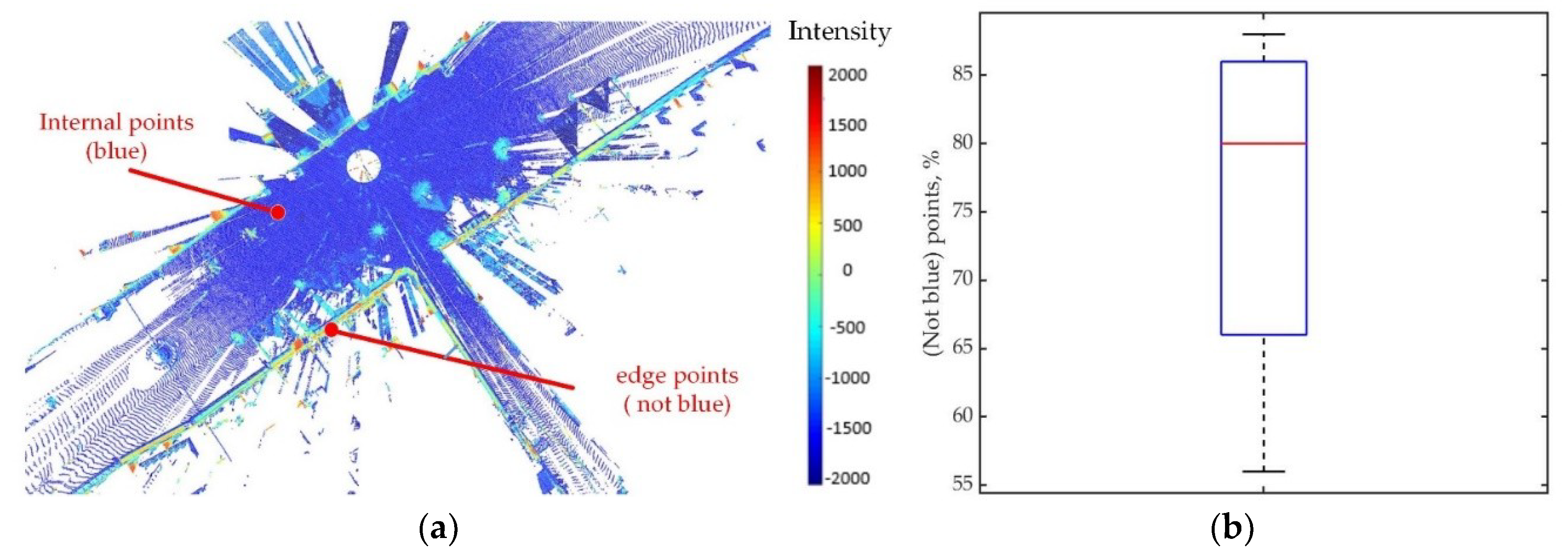

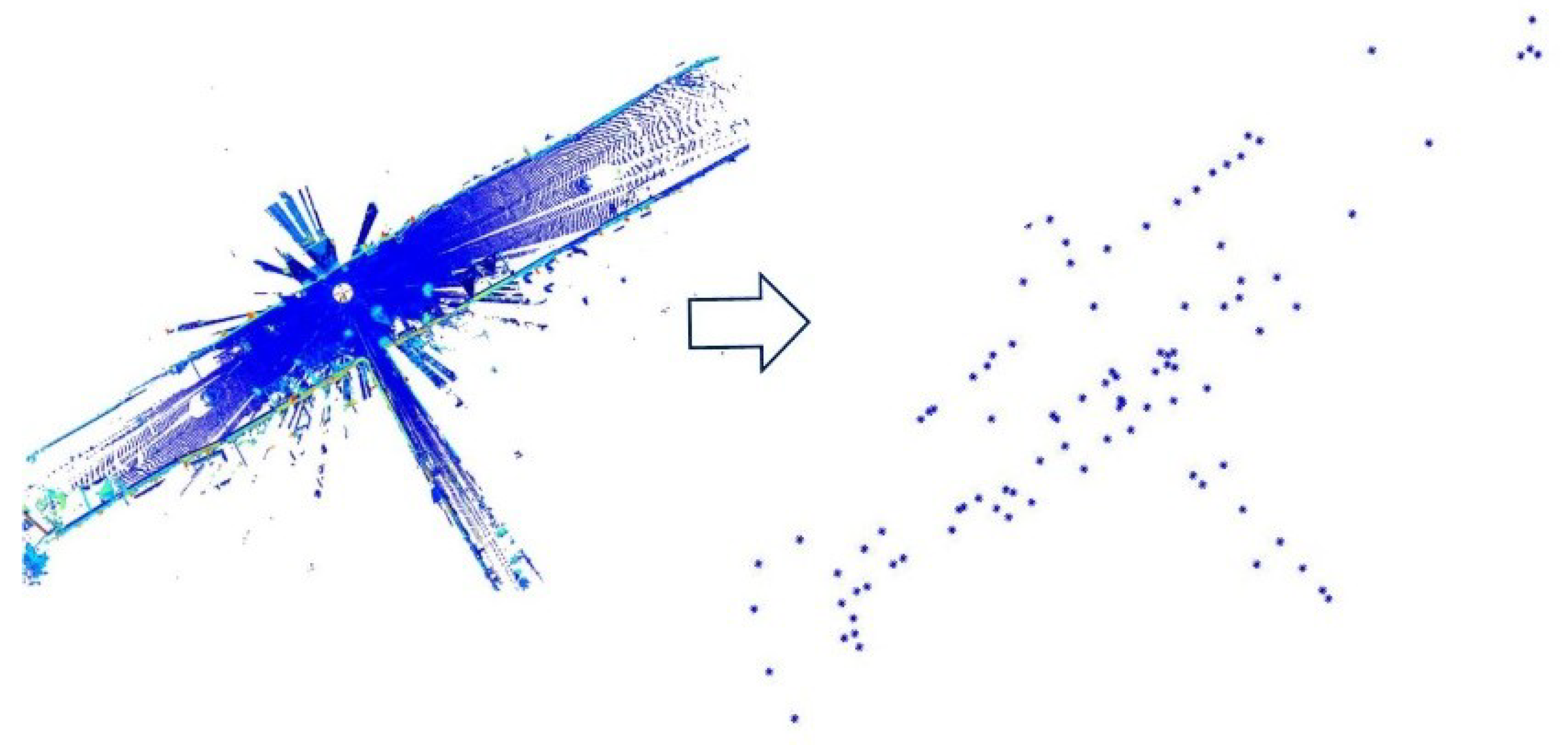

3.1. Salient Intensity Point Selection

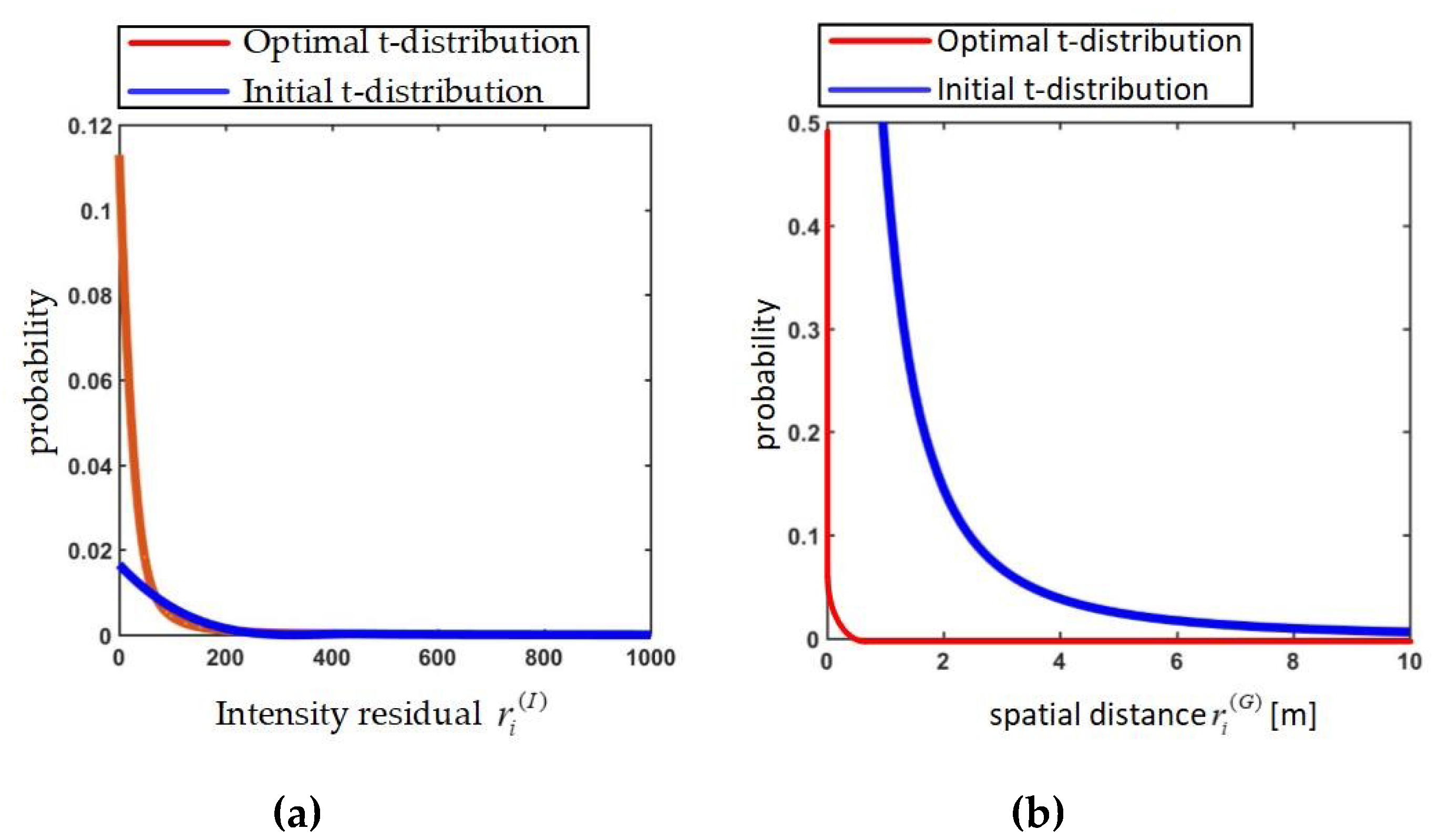

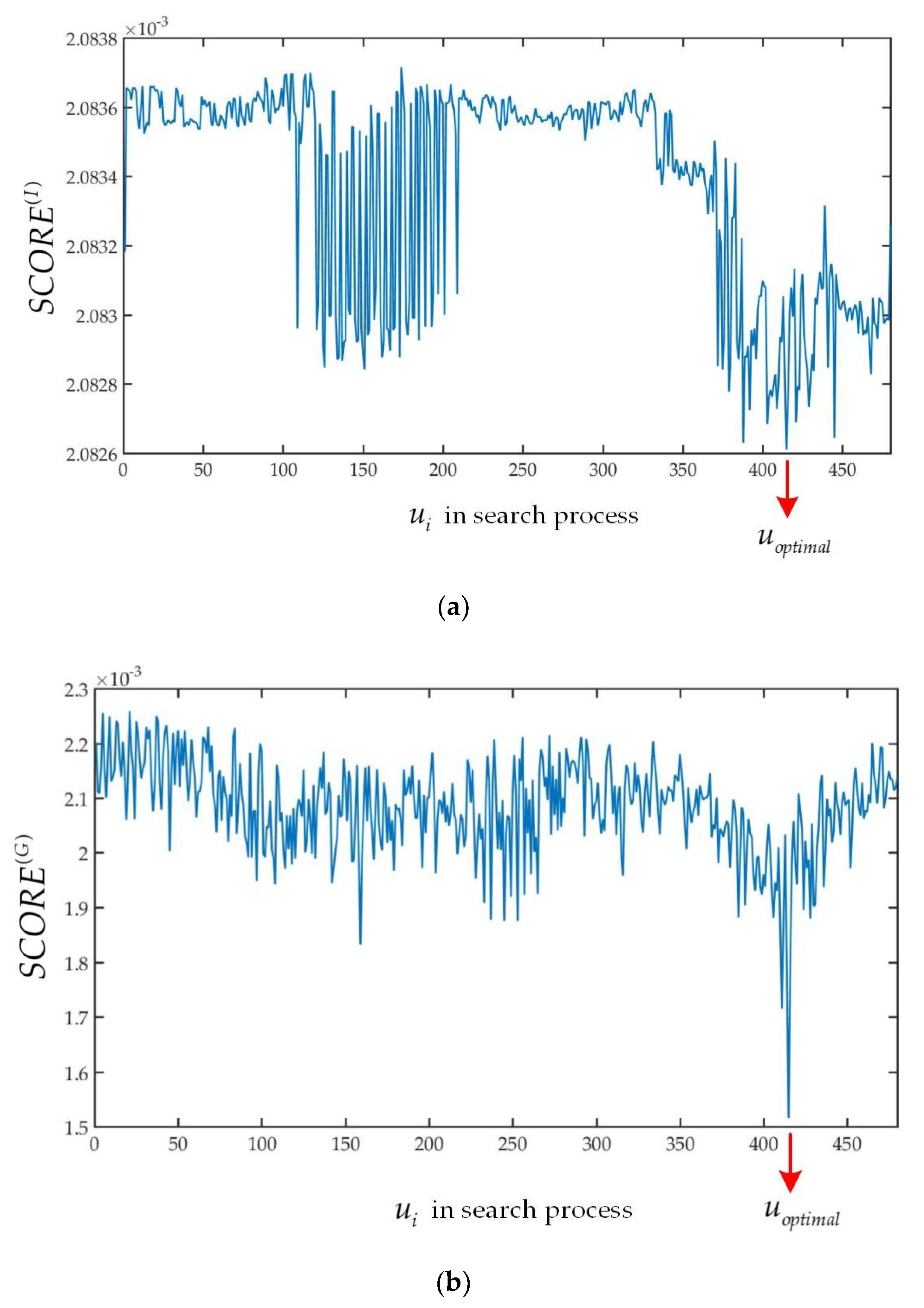

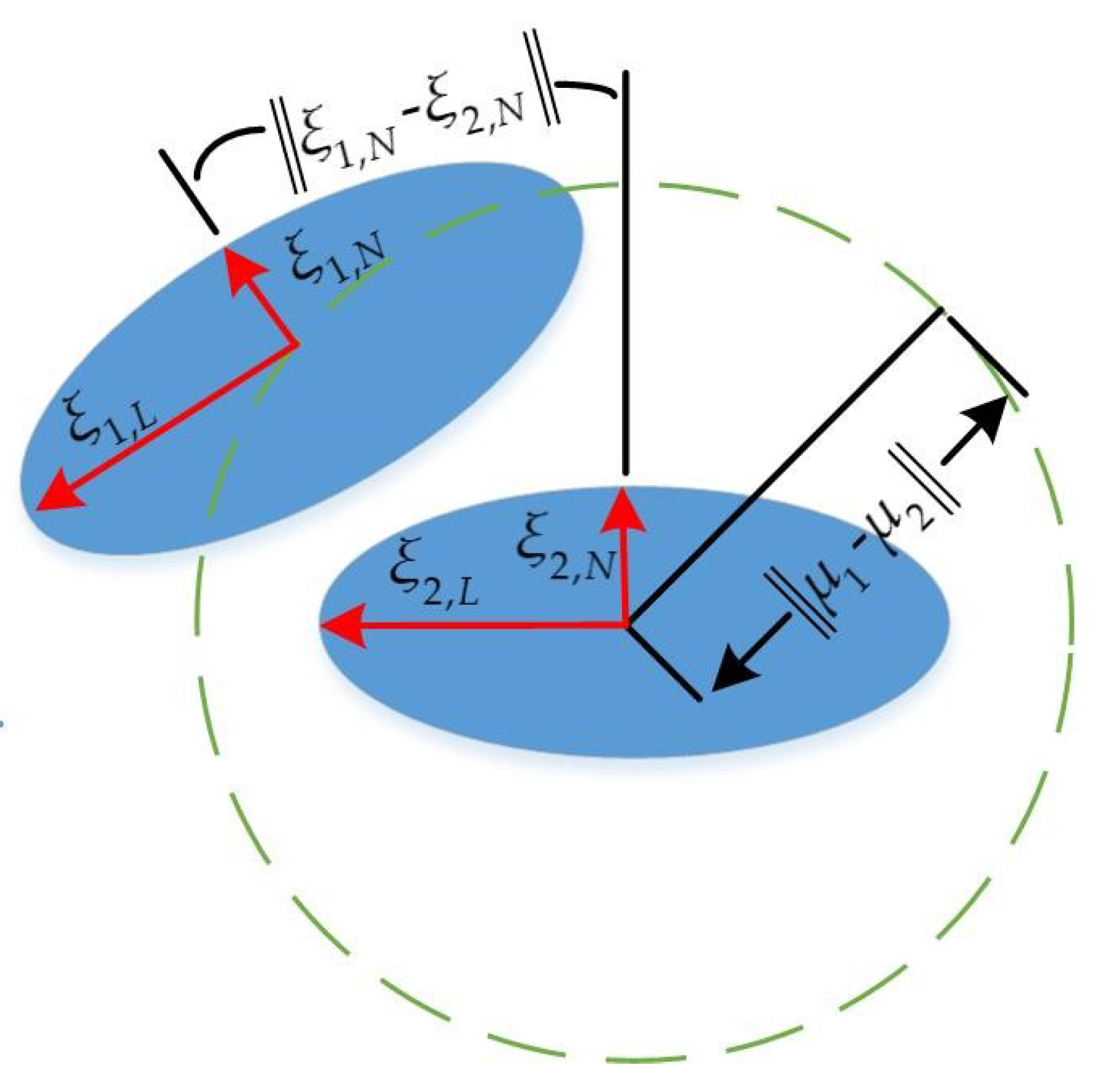

3.2. Discovering the Optimal Initial Transformation Guess

| Algorithm 1: Intensity-Assisted ICP |
|---|
| Input: Source frame ⟨P_1, I_1⟩ and target frame ⟨P_2, I_2⟩; the search criteria C_θ, C_x, C_y; the search steps S_θ, S_x, S_y |
| Output: Convergence transformation T_final |
| 1: get the sampled point clouds from P_1, P_2 with Section 3.1 |
| 2: get C_θ, C_x, C_y, S_θ, S_x, S_y with Algorithm 2 |
| 3: n = 1 |
| 4: for θ = −C_θ : S_θ : C_θ do |
| 5: for x = −C_x : S_x : C_x do |
| 6: for y = −C_y : S_y : C_y do |
| 7: get SCORE(I) and SCORE(G) for the candidate u_n produced by θ, x, y |
| 8: n++ |
| 9: end for |
| 10: end for |
| 11: end for |
| 12: get u_optimal with Section 3.2 |
| 13: T_0 = u_optimal |
| 14: begin Anderson acceleration with T_0 between P_1, P_2 |
| 15: when the convergence criterion is met, return T_final |
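
The exhaustive search in Algorithm 1 could be sketched in Python roughly as follows. This is a minimal sketch under several assumptions, not the authors' implementation: the function names (`frange`, `transform`, `geometric_score`, `best_initial_guess`) are hypothetical, the score is a simple nearest-neighbour stand-in for SCORE(G), the intensity score SCORE(I) and the rule that combines the two scores (Section 3.2) are not reproduced, and the Anderson-accelerated refinement that follows the search is omitted.

```python
import math
from typing import Callable, List, Sequence, Tuple

Point = Tuple[float, float]
Pose = Tuple[float, float, float]  # (theta in radians, x, y)


def frange(limit: float, step: float) -> List[float]:
    """Symmetric grid -limit, -limit + step, ..., +limit.

    Assumes the step divides the range 2 * limit evenly.
    """
    n = int(round(2 * limit / step))
    return [-limit + i * step for i in range(n + 1)]


def transform(points: Sequence[Point], pose: Pose) -> List[Point]:
    """Apply a 2D rigid transformation (rotate by theta, then translate)."""
    theta, tx, ty = pose
    c, s = math.cos(theta), math.sin(theta)
    return [(c * px - s * py + tx, s * px + c * py + ty) for px, py in points]


def geometric_score(source: Sequence[Point], target: Sequence[Point]) -> float:
    """Hypothetical stand-in for SCORE(G): negative mean nearest-neighbour
    distance, so that a higher score means a better alignment."""
    total = sum(min(math.dist(p, q) for q in target) for p in source)
    return -total / len(source)


def best_initial_guess(
    source: Sequence[Point],
    target: Sequence[Point],
    c_theta_deg: float, c_x: float, c_y: float,
    s_theta_deg: float, s_x: float, s_y: float,
    score: Callable[[Sequence[Point], Sequence[Point]], float] = geometric_score,
) -> Pose:
    """Evaluate every (theta, x, y) candidate on the grid and keep the best."""
    best_pose: Pose = (0.0, 0.0, 0.0)
    best_value = -math.inf
    for theta_deg in frange(c_theta_deg, s_theta_deg):
        for x in frange(c_x, s_x):
            for y in frange(c_y, s_y):
                pose = (math.radians(theta_deg), x, y)
                value = score(transform(source, pose), target)
                if value > best_value:
                    best_pose, best_value = pose, value
    return best_pose
```

The returned pose plays the role of T_0 in Algorithm 1. The brute-force nearest-neighbour score is quadratic in the number of points, so a sketch like this is only practical on the sampled point clouds, not on full scans.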

| Algorithm 2: Adaptive Threshold Selection |
|---|
| Input: The filtered source frame P_1 and the filtered target frame P_2 |
| Output: The search criteria C_θ, C_x, C_y and the search steps S_θ, S_x, S_y |
| 1: get μ_1, μ_2, ξ_{1,N}, ξ_{2,N} with Equations (18) and (19) |
| 2: if Equation (20) or Equation (21) is satisfied then |
| 3: C_θ = 10°, C_x = 0.2, C_y = 0.2 and S_θ = 1, S_x = 0.04, S_y = 0.04 |
| 4: else |
| 5: C_θ = 45°, C_x = 1, C_y = 1 and S_θ = 3, S_x = 0.2, S_y = 0.2 |
| 6: end if |
| 7: return C_θ, C_x, C_y and S_θ, S_x, S_y |
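
The branch in Algorithm 2 can be illustrated with a short Python sketch. The names `SearchGrid`, `FINE_GRID`, `COARSE_GRID`, `select_search_grid`, and `candidate_count` are hypothetical. Because Equations (18) to (21) are not reproduced here, the sketch takes the outcome of the two tests as booleans instead of computing them, and it assumes that the rotation steps S_θ are given in degrees like C_θ.

```python
from typing import NamedTuple


class SearchGrid(NamedTuple):
    c_theta: float  # half-range of the rotation search, in degrees
    c_x: float      # half-range of the x search (units as in Algorithm 2)
    c_y: float      # half-range of the y search
    s_theta: float  # rotation step (assumed to be in degrees)
    s_x: float      # x step
    s_y: float      # y step


# Values copied from the two branches of Algorithm 2.
FINE_GRID = SearchGrid(10.0, 0.2, 0.2, 1.0, 0.04, 0.04)
COARSE_GRID = SearchGrid(45.0, 1.0, 1.0, 3.0, 0.2, 0.2)


def select_search_grid(satisfies_eq20: bool, satisfies_eq21: bool) -> SearchGrid:
    """Pick the narrow, fine grid if either test holds, else the wide, coarse one."""
    if satisfies_eq20 or satisfies_eq21:
        return FINE_GRID
    return COARSE_GRID


def candidate_count(grid: SearchGrid) -> int:
    """Number of (theta, x, y) candidates visited by the loops of Algorithm 1."""
    def axis(limit: float, step: float) -> int:
        return int(round(2 * limit / step)) + 1

    return (axis(grid.c_theta, grid.s_theta)
            * axis(grid.c_x, grid.s_x)
            * axis(grid.c_y, grid.s_y))
```

With these values, the fine grid contains 21 × 11 × 11 = 2541 candidates and the coarse grid 31 × 11 × 11 = 3751, so both branches keep the exhaustive search in Algorithm 1 to a few thousand score evaluations.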

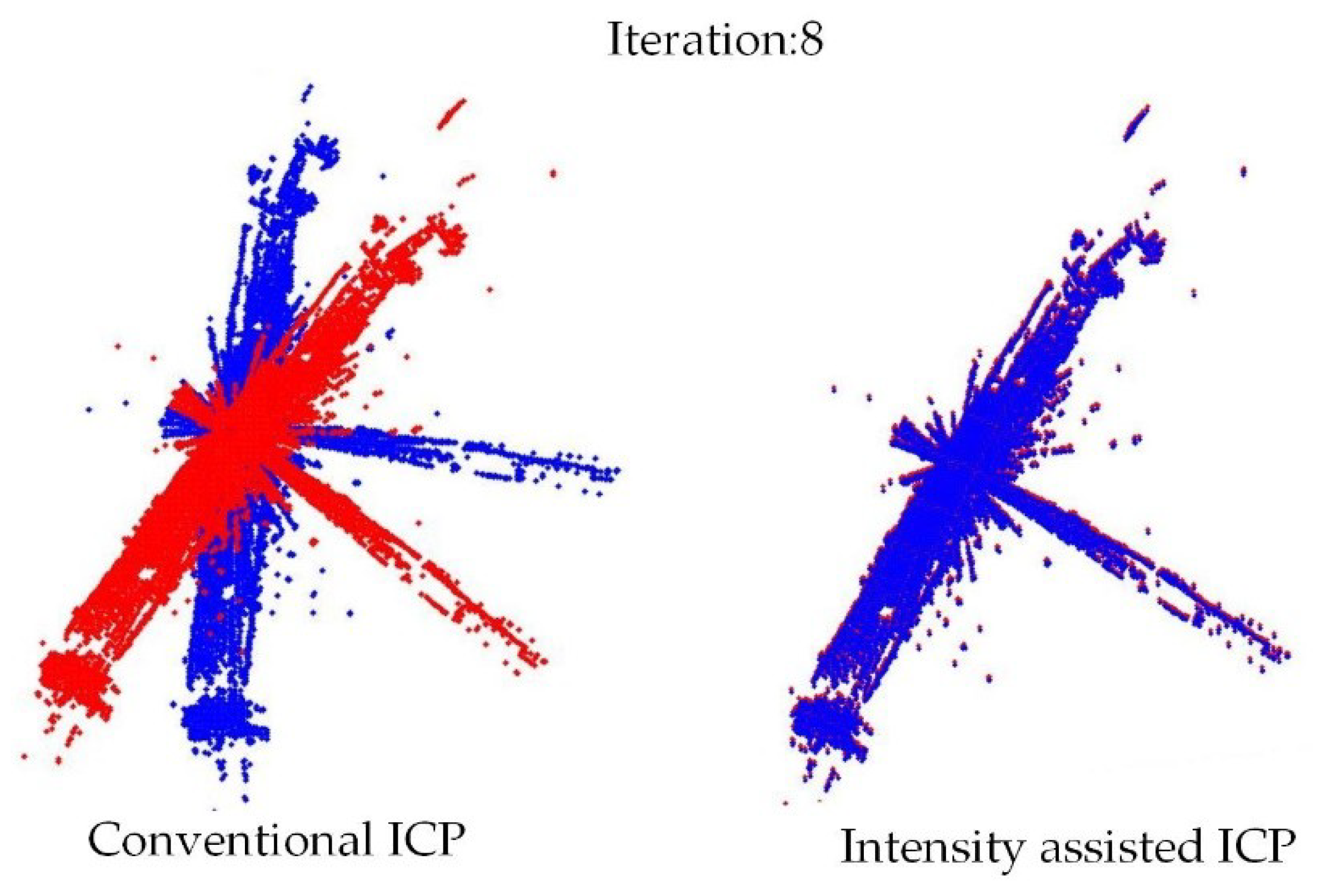

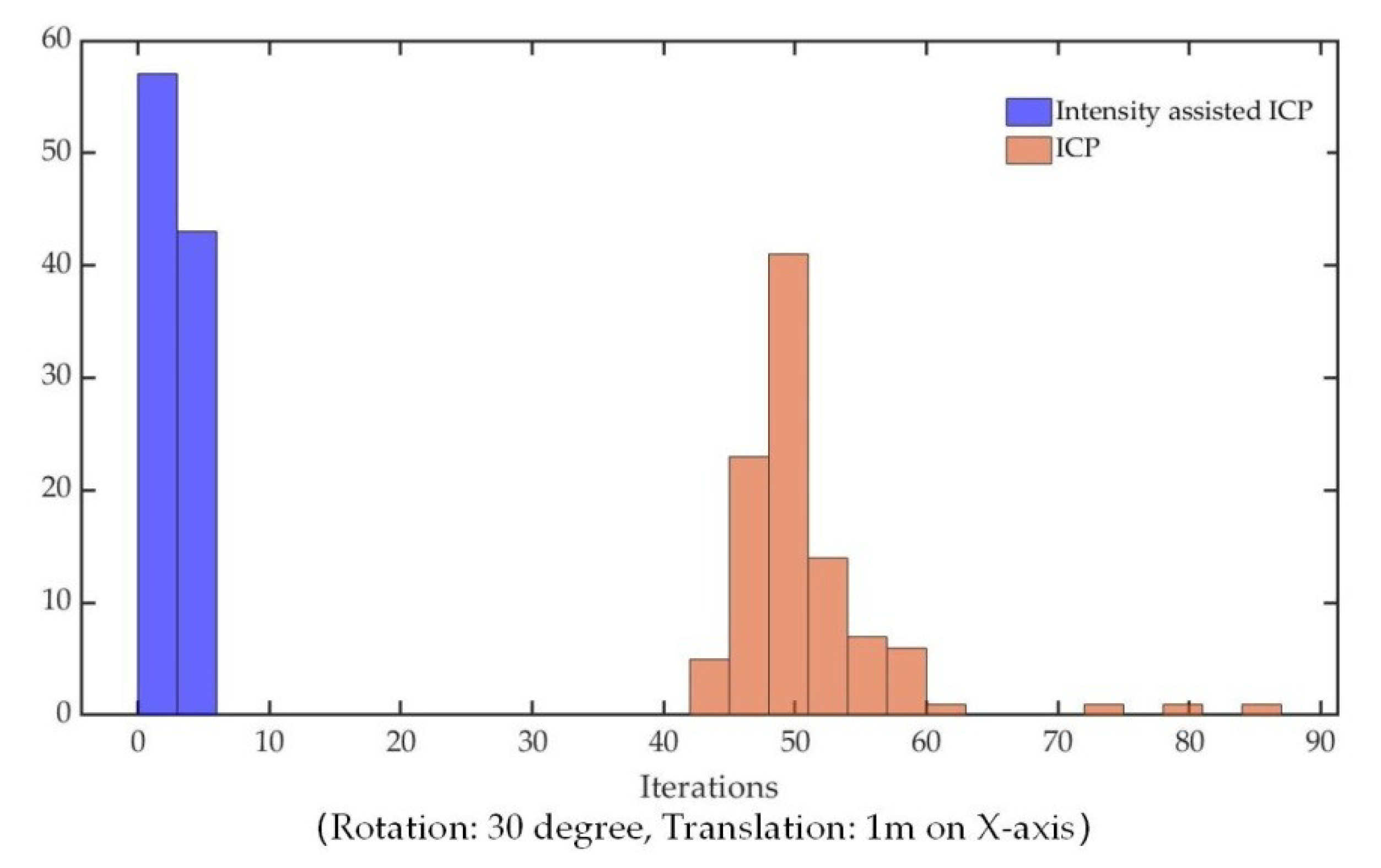

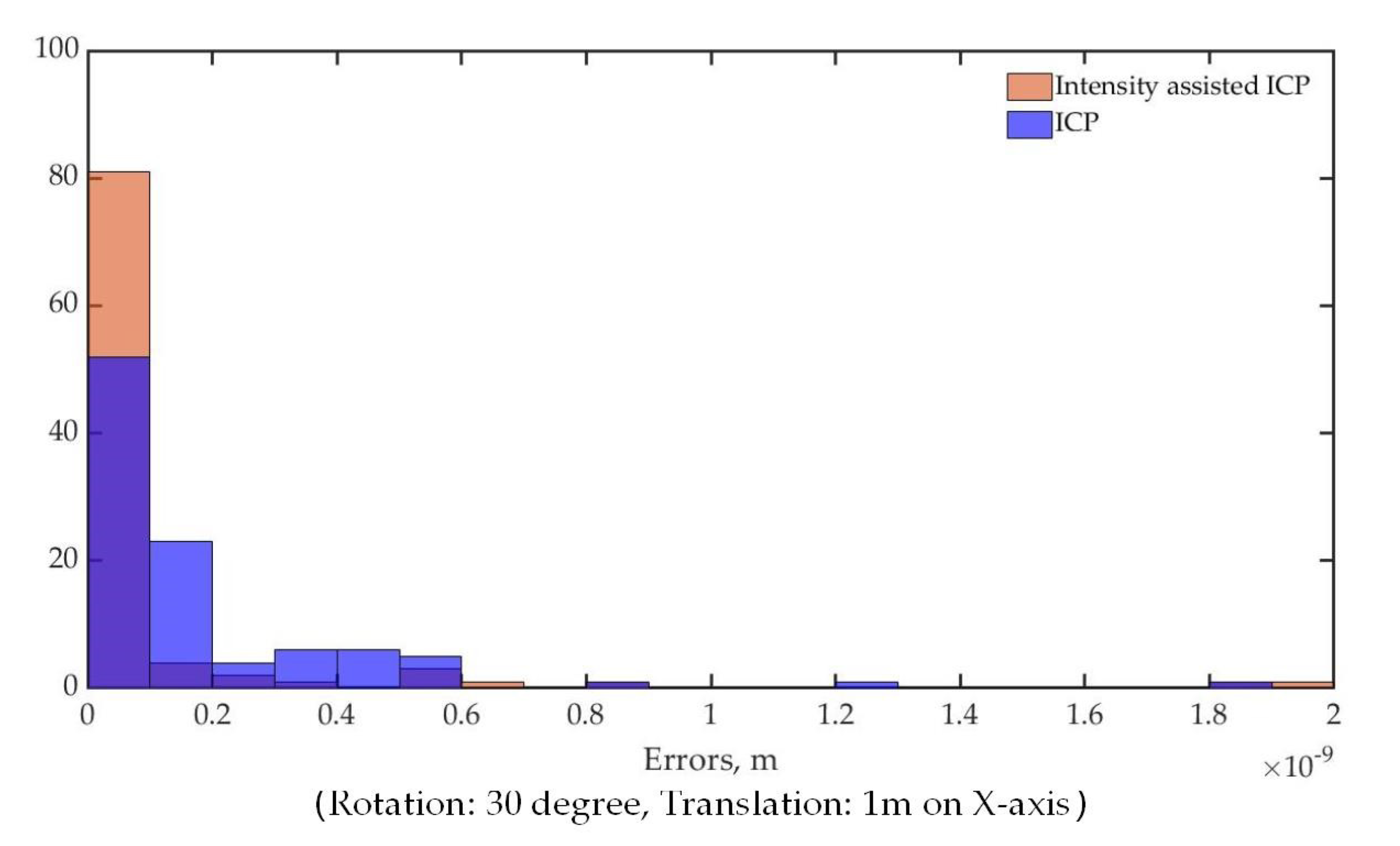

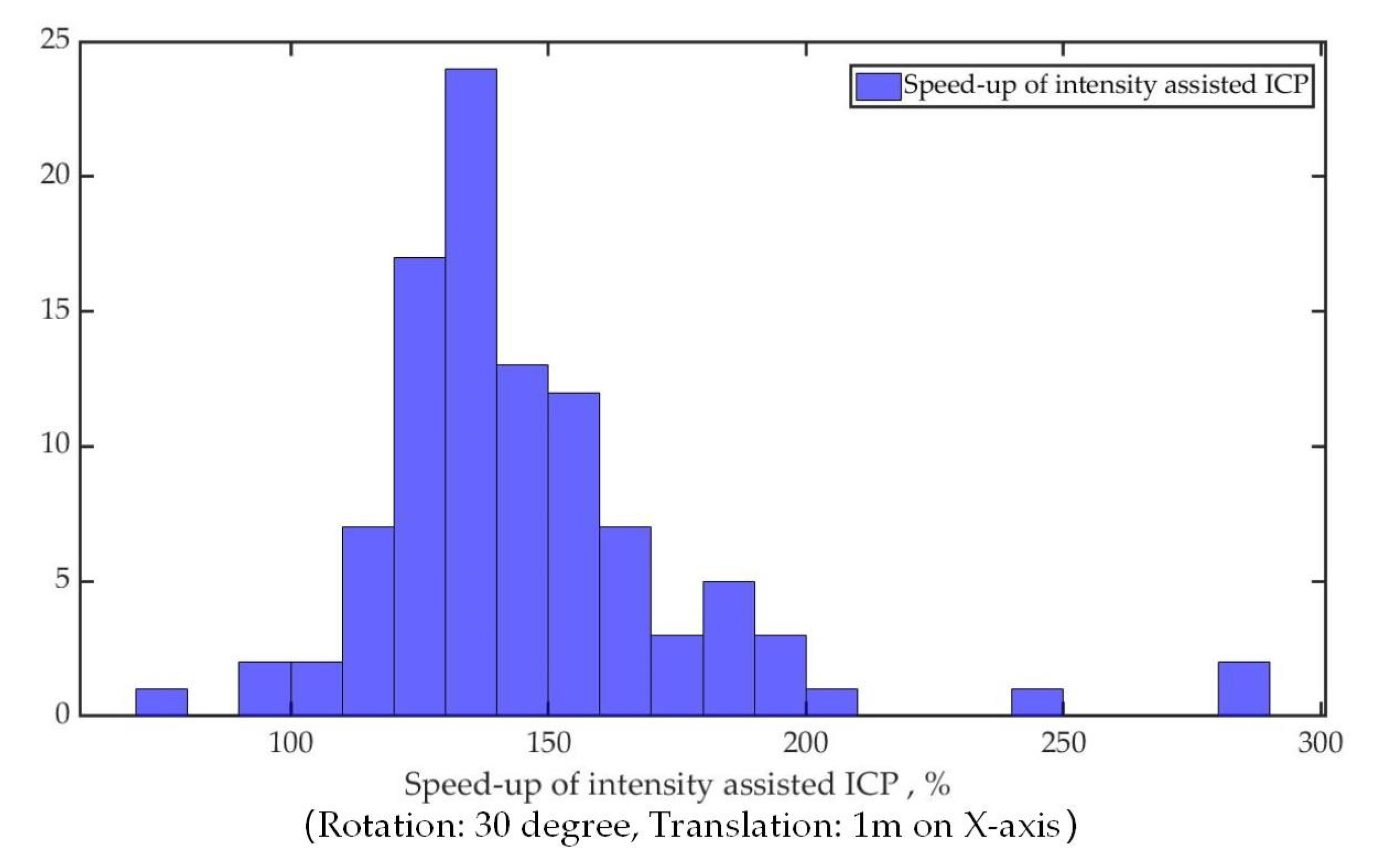

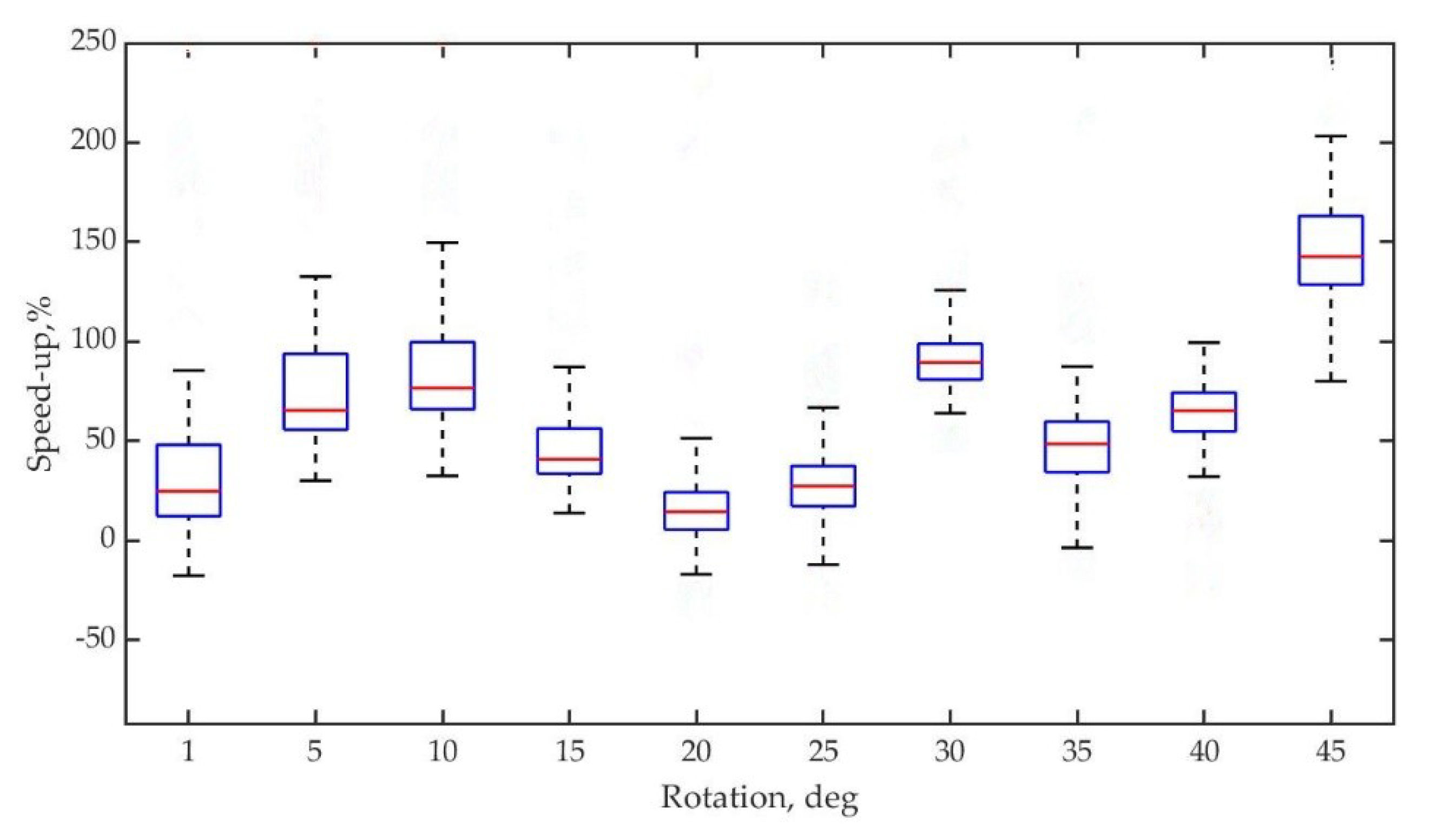

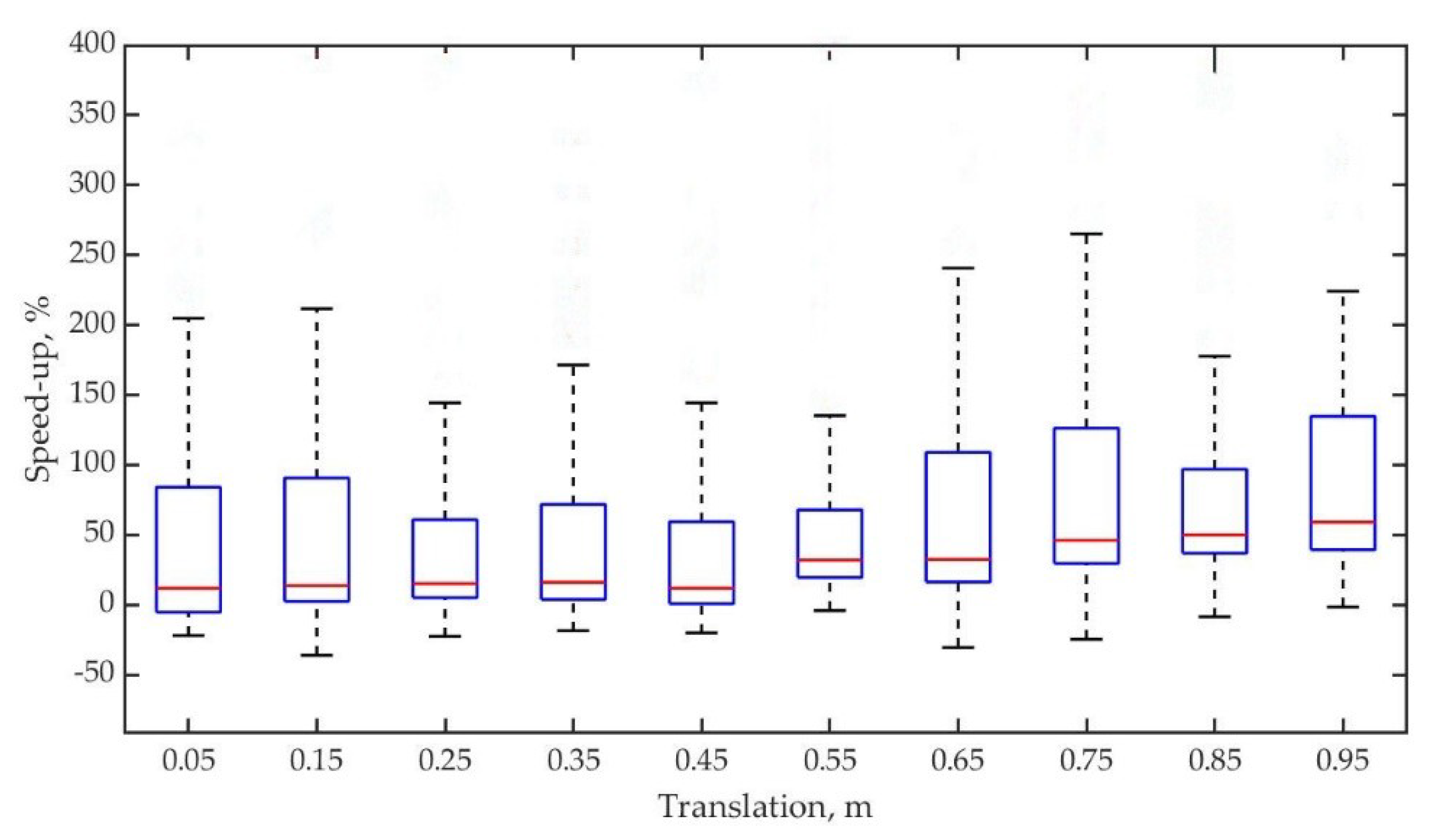

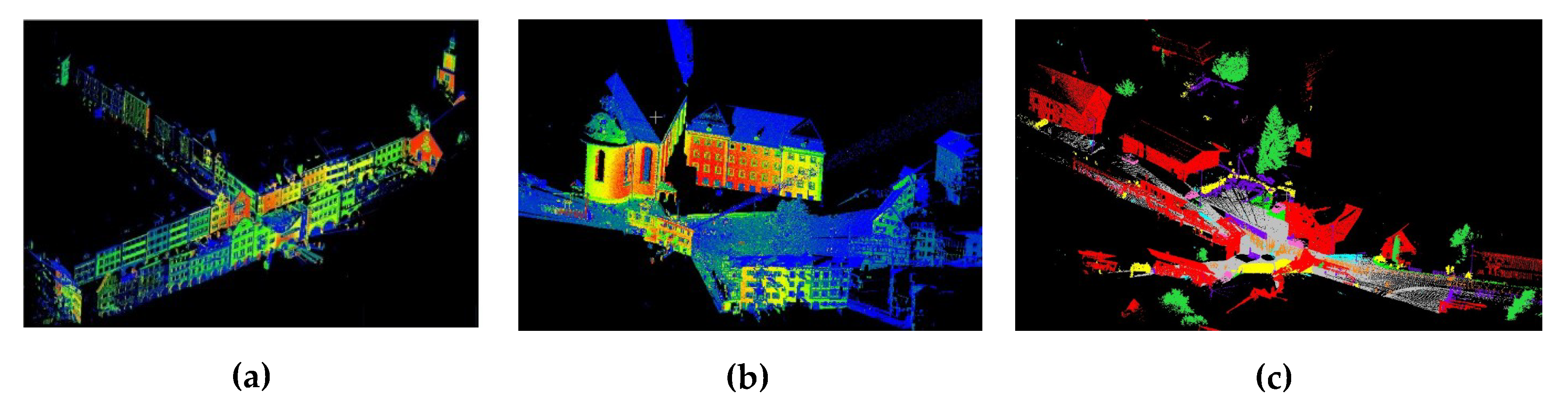

4. Experimental Results

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

| Dataset | Parameters | Metric | ICP | AA-ICP | IMLS-ICP | Our ICP |
|---|---|---|---|---|---|---|
| Market | Experiment 1 (1 m, 0 m, 30°) | Errors (m) | 5.808 × 10⁻³ | 3.644 × 10⁻³ | 1.317 × 10⁻⁴ | 1.556 × 10⁻³ |
| Market | Experiment 1 (1 m, 0 m, 30°) | Time (s) | 125.936 | 76.455 | 45.469 | 12.674 |
| Market | Experiment 2 (0.5 m, 0 m, 15°) | Errors (m) | 5.806 × 10⁻³ | 3.261 × 10⁻³ | 3.746 × 10⁻⁵ | 2.185 × 10⁻³ |
| Market | Experiment 2 (0.5 m, 0 m, 15°) | Time (s) | 56.454 | 23.113 | 42.924 | 13.901 |
| Market | Experiment 3 (0.1 m, 0 m, 10°) | Errors (m) | 1.755 × 10⁻³ | 1.757 × 10⁻³ | 3.293 × 10⁻⁴ | 1.659 × 10⁻⁹ |
| Market | Experiment 3 (0.1 m, 0 m, 10°) | Time (s) | 54.458 | 37.008 | 48.154 | 22.093 |
| Stgallen | Experiment 1 (1 m, 0 m, 30°) | Errors (m) | 8.662 × 10⁻³ | 5.891 × 10⁻³ | 1.523 × 10⁻⁴ | 3.265 × 10⁻³ |
| Stgallen | Experiment 1 (1 m, 0 m, 30°) | Time (s) | 239.642 | 168.634 | 138.276 | 21.272 |
| Stgallen | Experiment 2 (0.5 m, 0 m, 15°) | Errors (m) | 6.039 × 10⁻³ | 1.971 × 10⁻³ | 6.603 × 10⁻⁵ | 1.853 × 10⁻³ |
| Stgallen | Experiment 2 (0.5 m, 0 m, 15°) | Time (s) | 158.853 | 70.818 | 121.159 | 23.416 |
| Stgallen | Experiment 3 (0.1 m, 0 m, 10°) | Errors (m) | 4.159 × 10⁻³ | 9.012 × 10⁻³ | 4.203 × 10⁻⁵ | 1.942 × 10⁻³ |
| Stgallen | Experiment 3 (0.1 m, 0 m, 10°) | Time (s) | 156.046 | 59.321 | 114.177 | 20.718 |
| Station | Experiment 1 (1 m, 0 m, 30°) | Errors (m) | 3.096 × 10⁻² | 1.527 × 10⁻² | 2.417 × 10⁻⁵ | 2.357 × 10⁻³ |
| Station | Experiment 1 (1 m, 0 m, 30°) | Time (s) | 57.688 | 47.728 | 56.993 | 12.524 |
| Station | Experiment 2 (0.5 m, 0 m, 15°) | Errors (m) | 1.368 × 10⁻² | 1.079 × 10⁻² | 2.387 × 10⁻⁴ | 9.748 × 10⁻⁴ |
| Station | Experiment 2 (0.5 m, 0 m, 15°) | Time (s) | 51.574 | 37.425 | 64.251 | 12.576 |
| Station | Experiment 3 (0.1 m, 0 m, 10°) | Errors (m) | 4.911 × 10⁻³ | 8.655 × 10⁻³ | 9.817 × 10⁻⁵ | 1.049 × 10⁻⁴ |
| Station | Experiment 3 (0.1 m, 0 m, 10°) | Time (s) | 54.861 | 41.817 | 42.546 | 12.876 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Tian, Y.; Liu, X.; Li, L.; Wang, W. Intensity-Assisted ICP for Fast Registration of 2D-LIDAR. Sensors 2019, 19, 2124. https://doi.org/10.3390/s19092124
