Wearable Sensor-Based Human Activity Recognition via Two-Layer Diversity-Enhanced Multiclassifier Recognition Method

Abstract

1. Introduction

2. Related Work

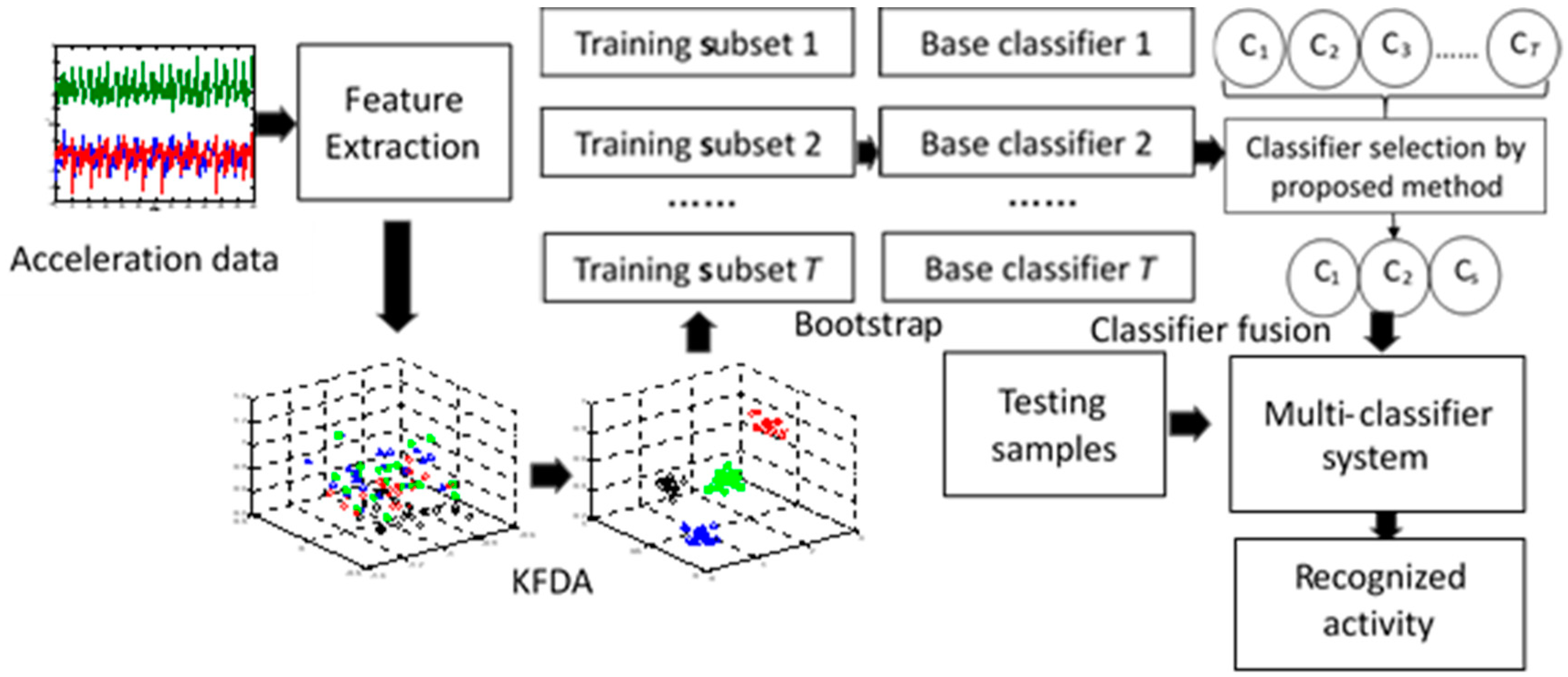

3. The Proposed Framework

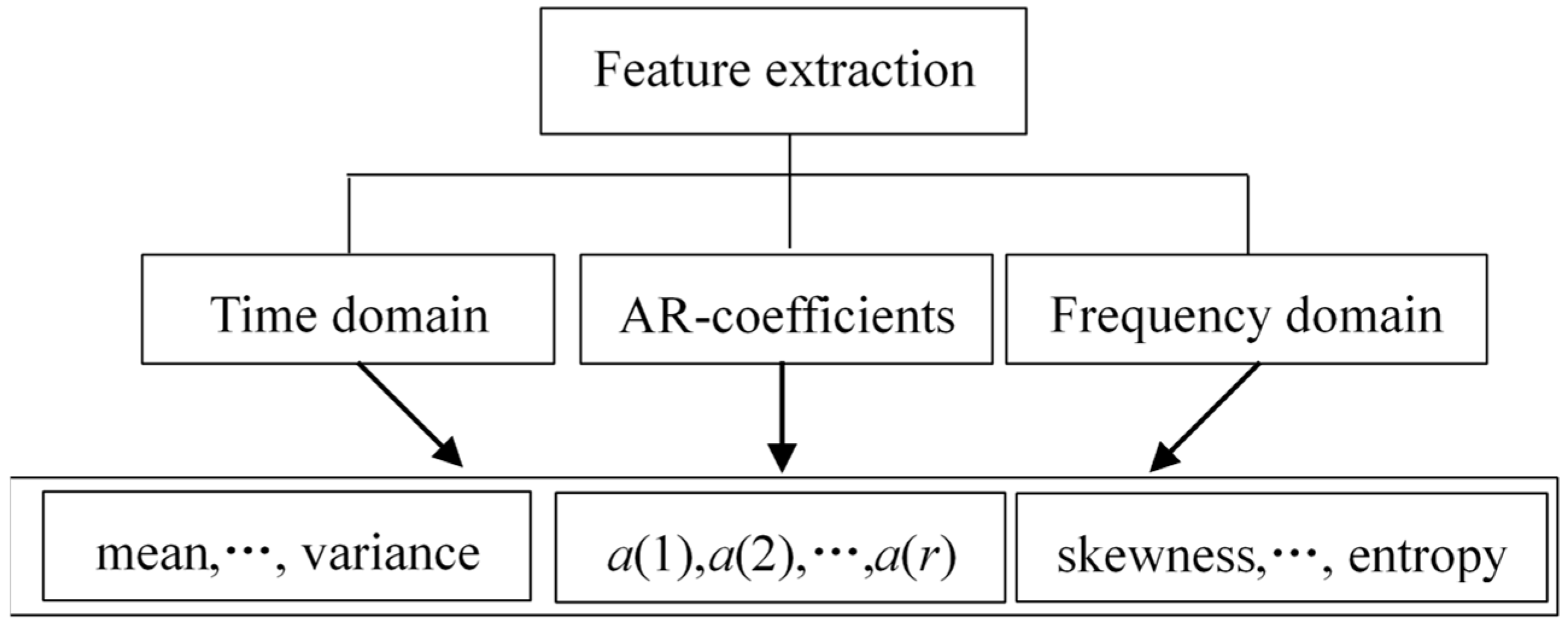

3.1. Feature Extraction

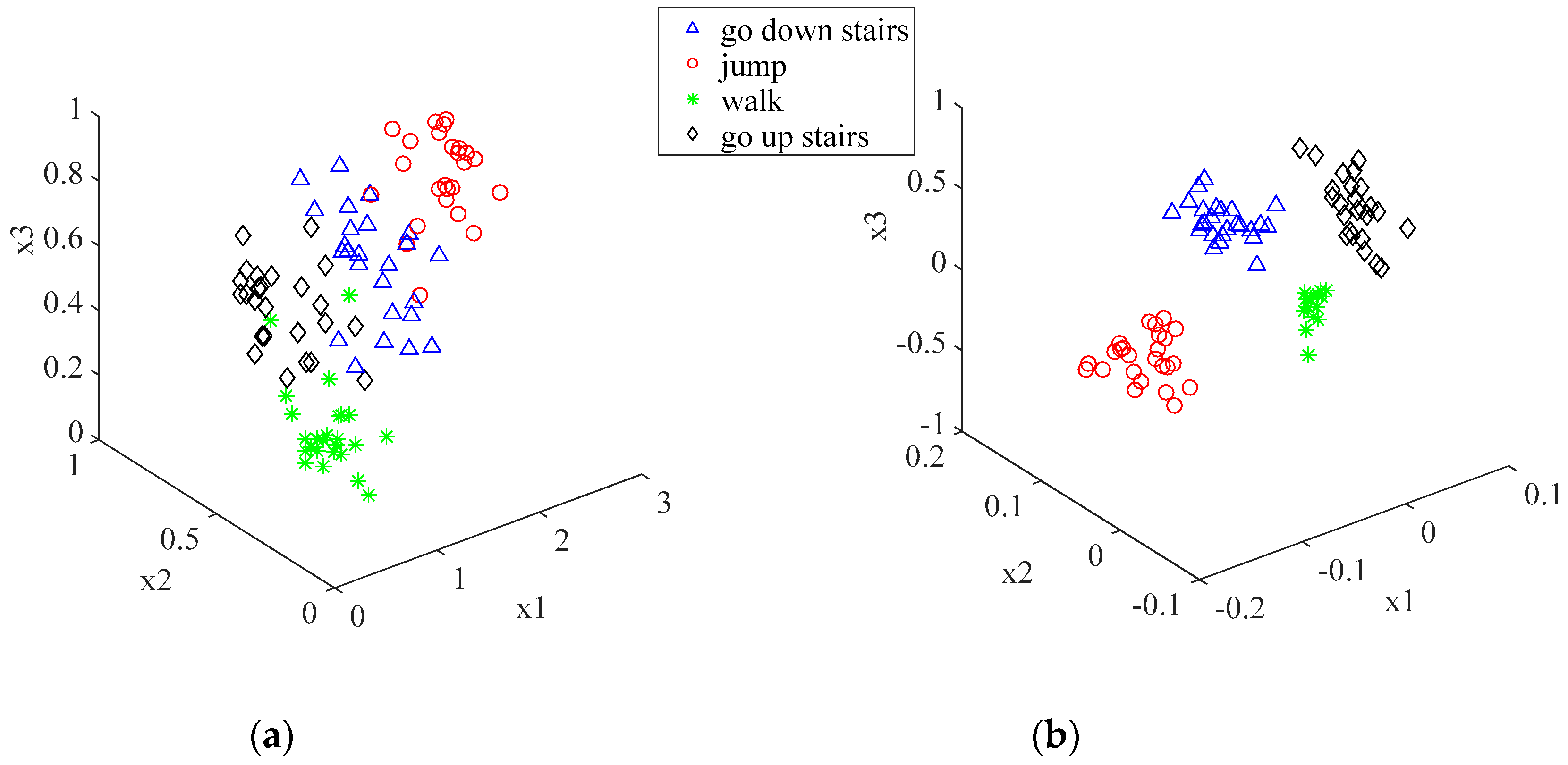

3.2. Kernel Fisher Discriminant Analysis (KFDA)

3.3. Bootstrap Resampling

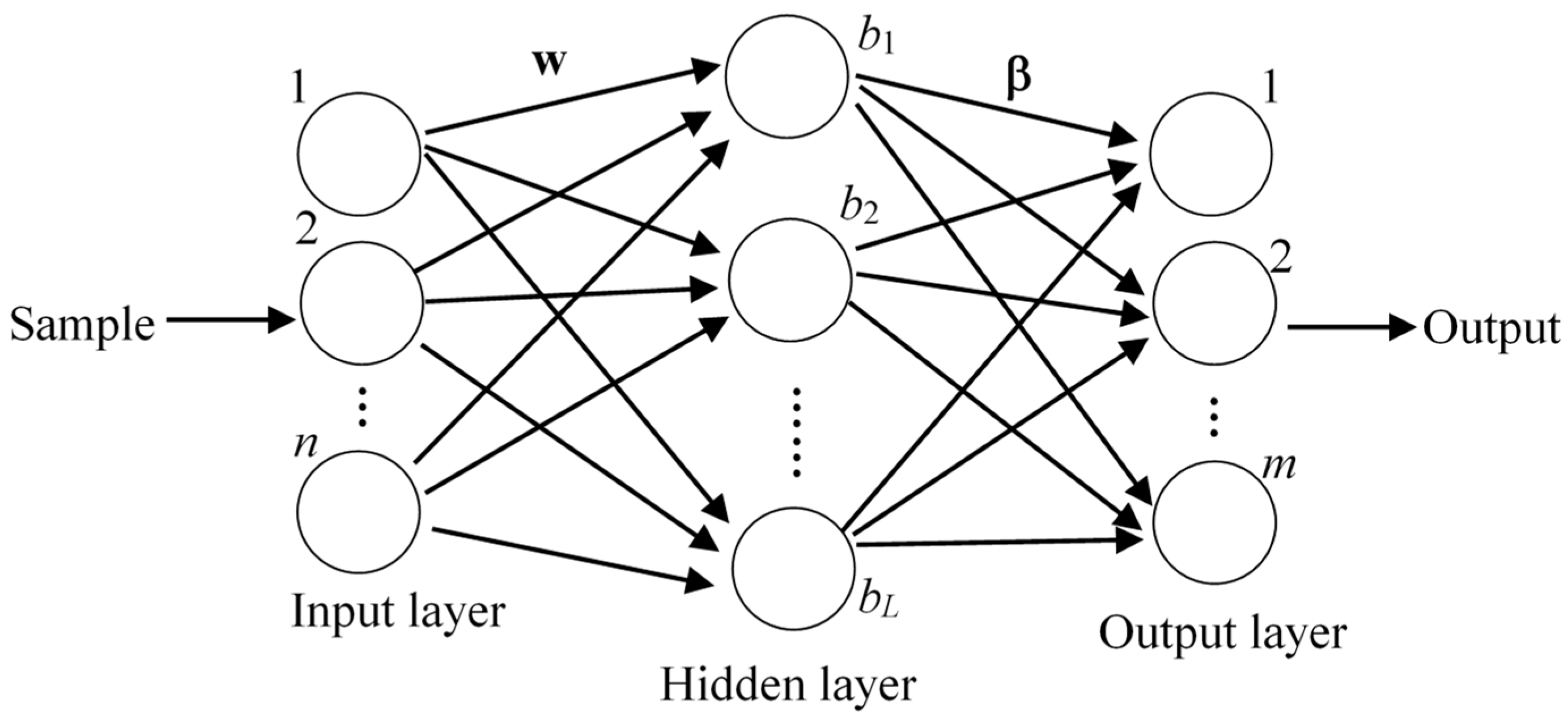

3.4. Classification Algorithm

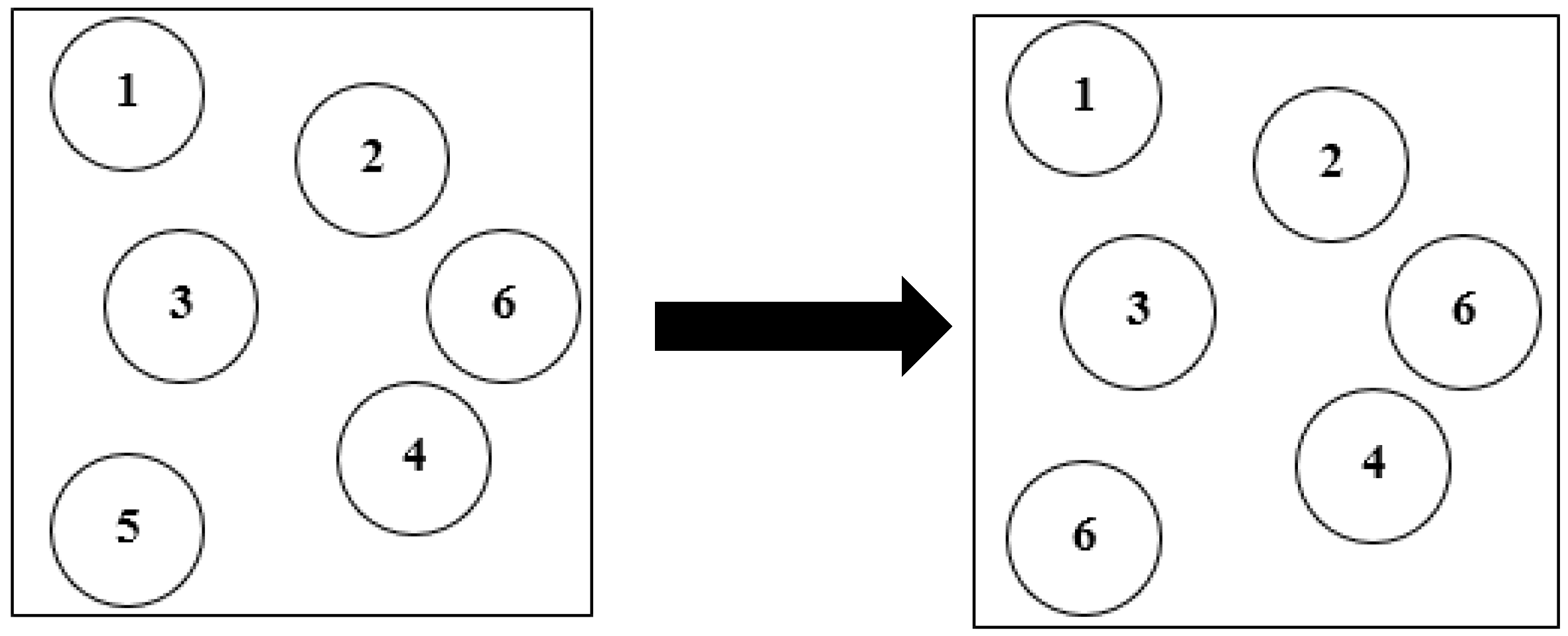

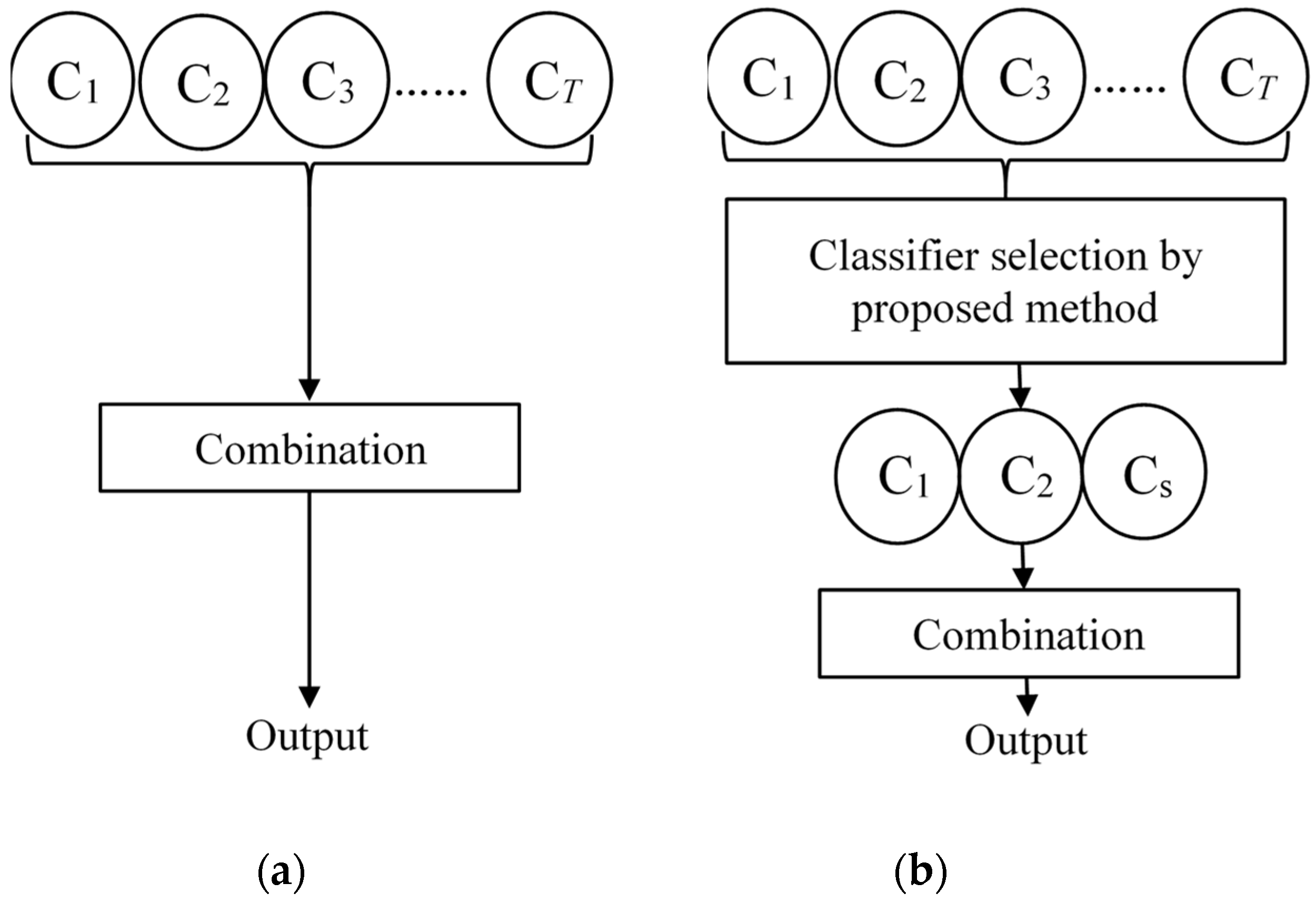

3.5. Diversity Measures and the Proposed Classifier Selection Method

3.5.1. Diversity Measures

Disagreement Measure
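
As a rough illustration, the pairwise disagreement measure is commonly defined as the fraction of samples on which exactly one of two classifiers is correct. The sketch below follows that common definition. The function name `disagreement` and the 0/1 correctness encoding are assumptions made for illustration, and the formulation used in this paper may differ in detail.

```python
def disagreement(correct_a, correct_b):
    """Fraction of samples on which exactly one of two classifiers is correct.

    correct_a, correct_b: equal-length sequences of truthy/falsy flags,
    one per sample (truthy = that classifier labeled the sample correctly).
    This encoding is an assumption made for illustration.
    """
    if len(correct_a) != len(correct_b):
        raise ValueError("both classifiers must be scored on the same samples")
    if len(correct_a) == 0:
        raise ValueError("at least one sample is required")
    # Count samples where the two correctness flags differ.
    differing = sum(1 for a, b in zip(correct_a, correct_b) if bool(a) != bool(b))
    return differing / len(correct_a)


# Hypothetical correctness flags for two classifiers on six samples.
c1 = [1, 1, 0, 1, 0, 1]
c2 = [1, 0, 0, 1, 1, 1]
pairwise = disagreement(c1, c2)
```

A value of 0 means the two classifiers succeed and fail on exactly the same samples; a value of 1 means they never agree on correctness.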

Cunningham’s Entropy

Coincident Failure Diversity

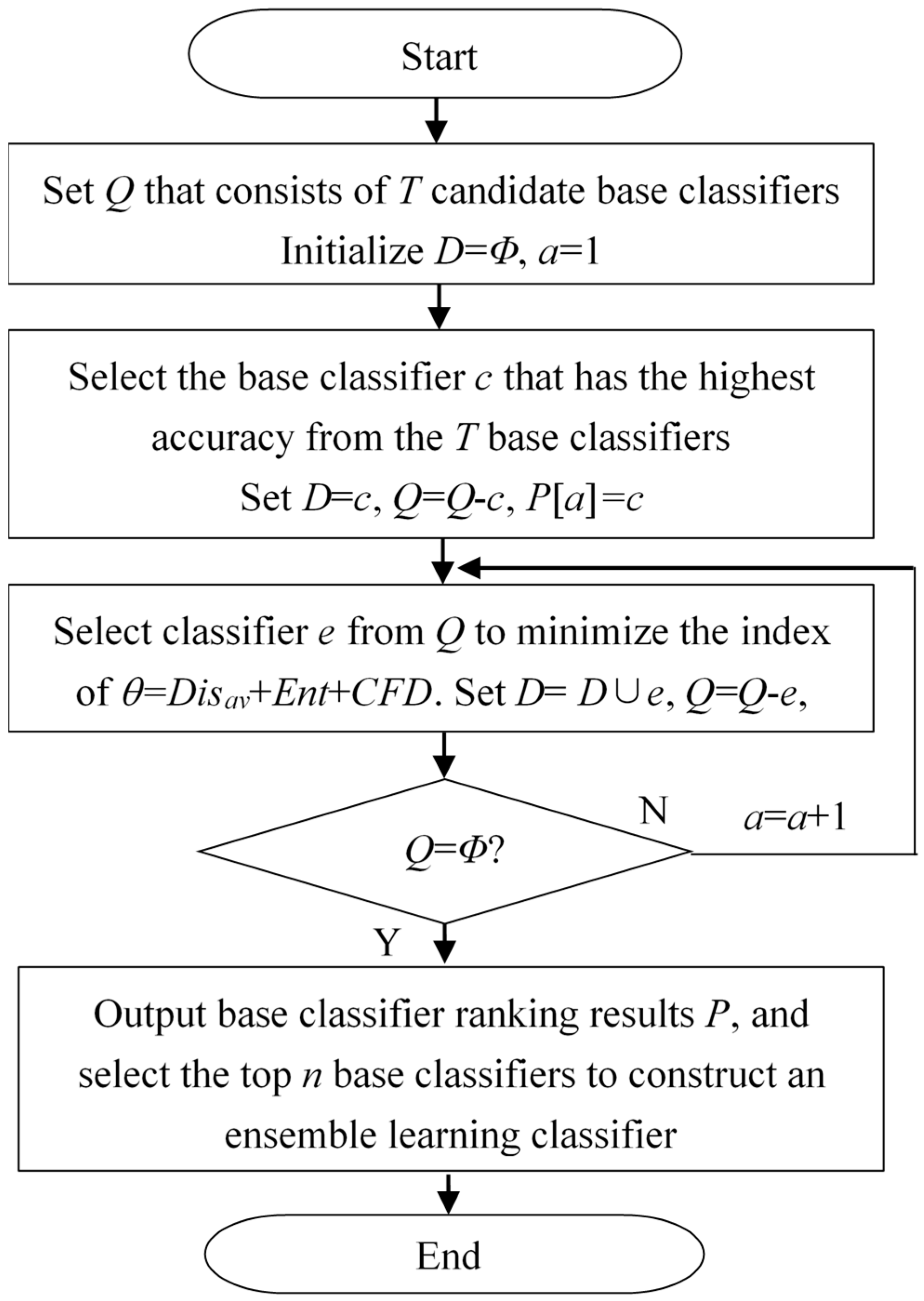

3.5.2. The Proposed Classifier Selection Method

4. Experimental Results and Analysis

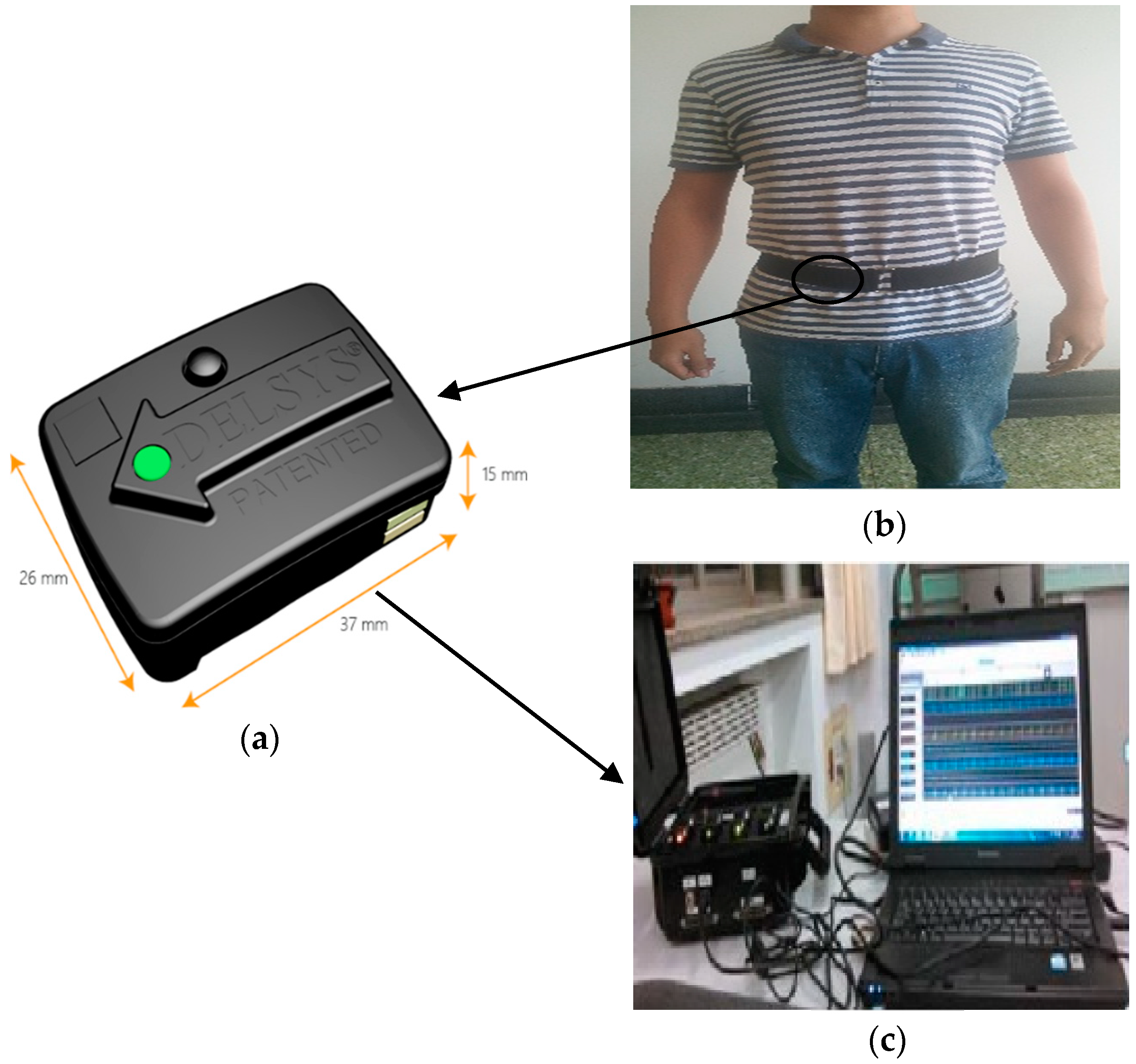

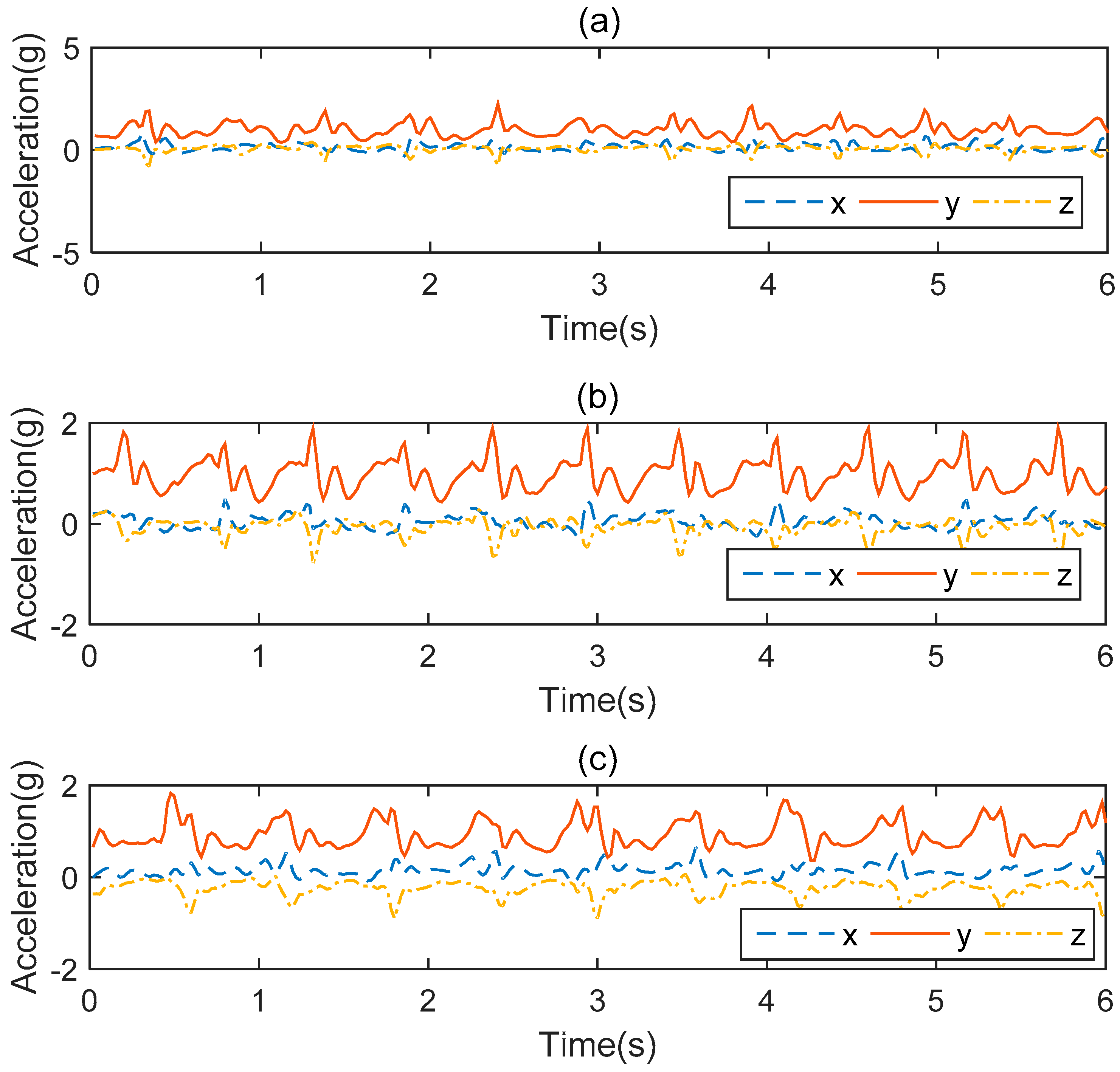

4.1. Experimental Setup and Experimental Dataset

4.2. Performance Measures

4.3. Experimental Results

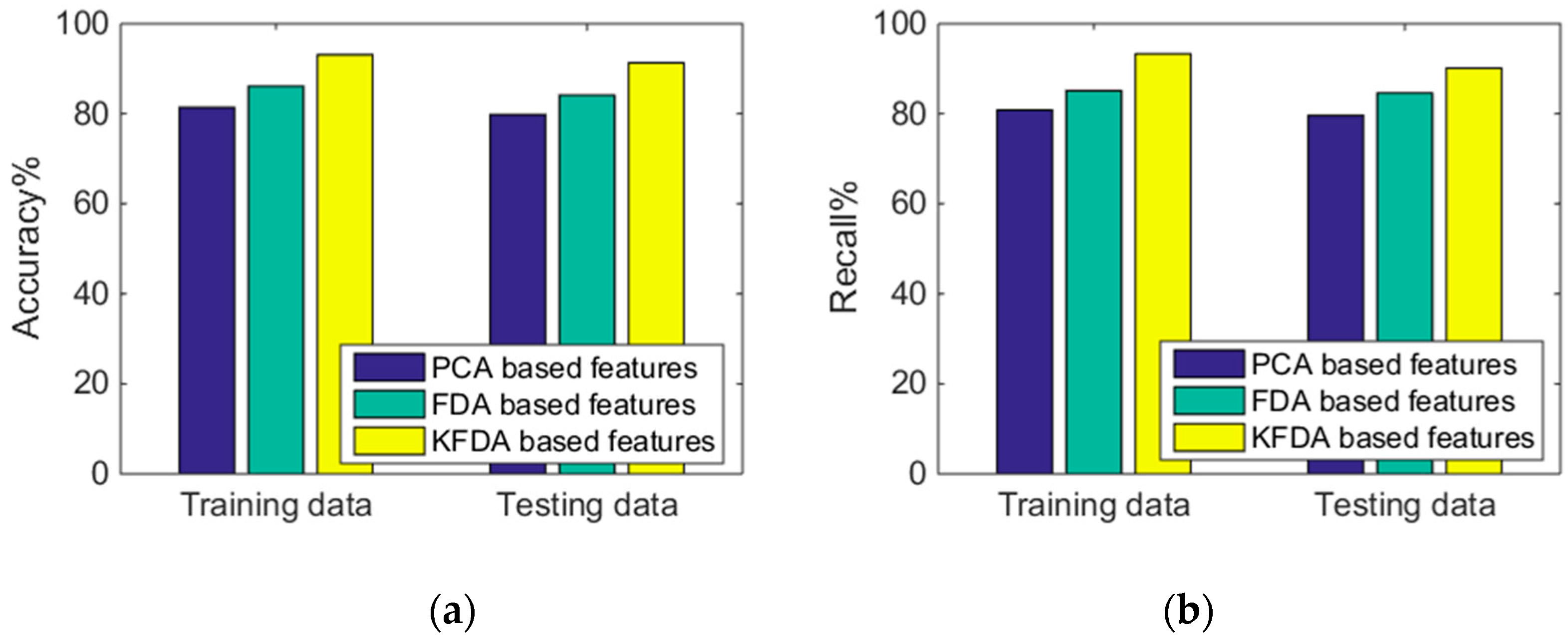

4.3.1. PCA-Based Features versus FDA-Based Features versus KFDA-Based Features

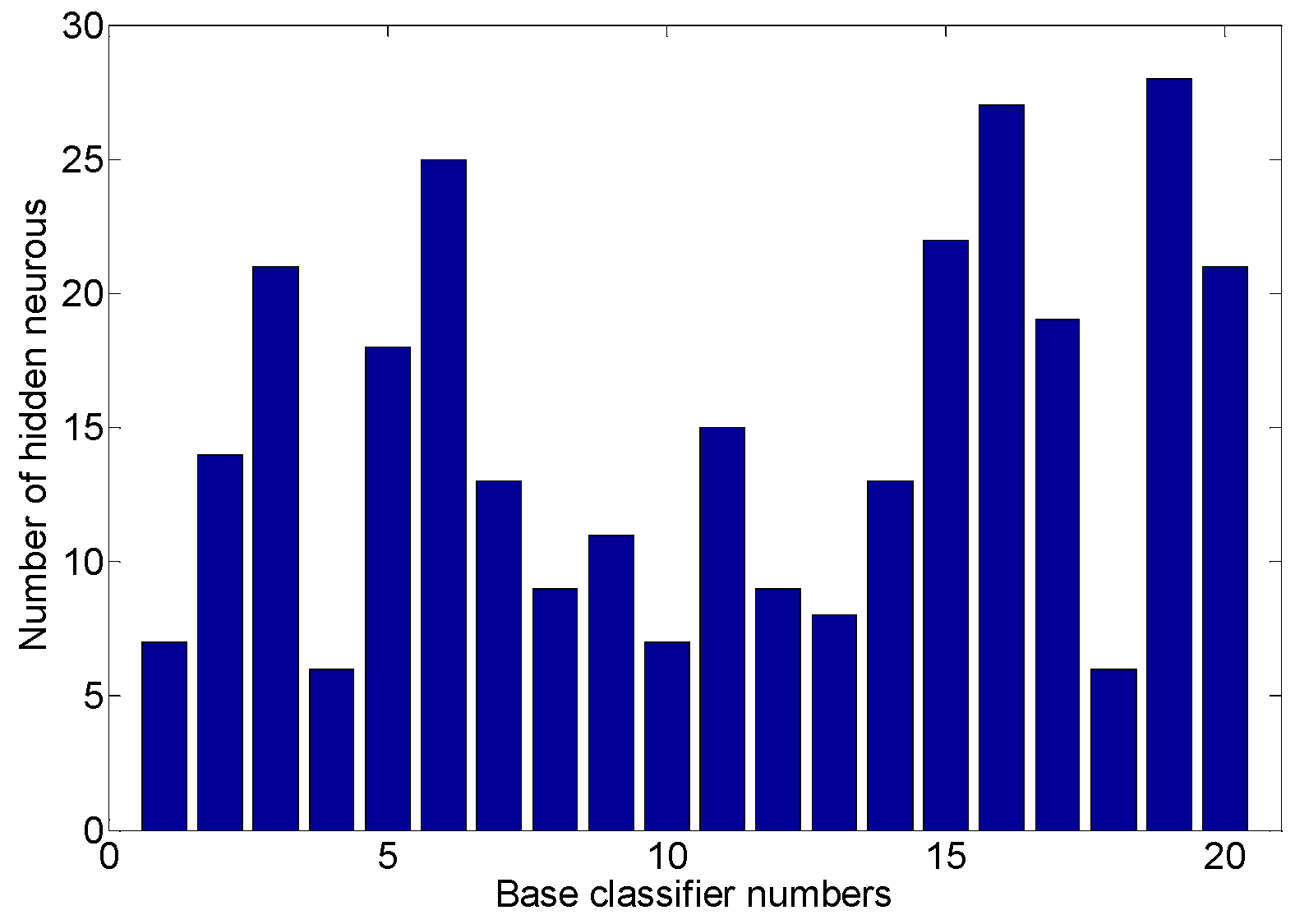

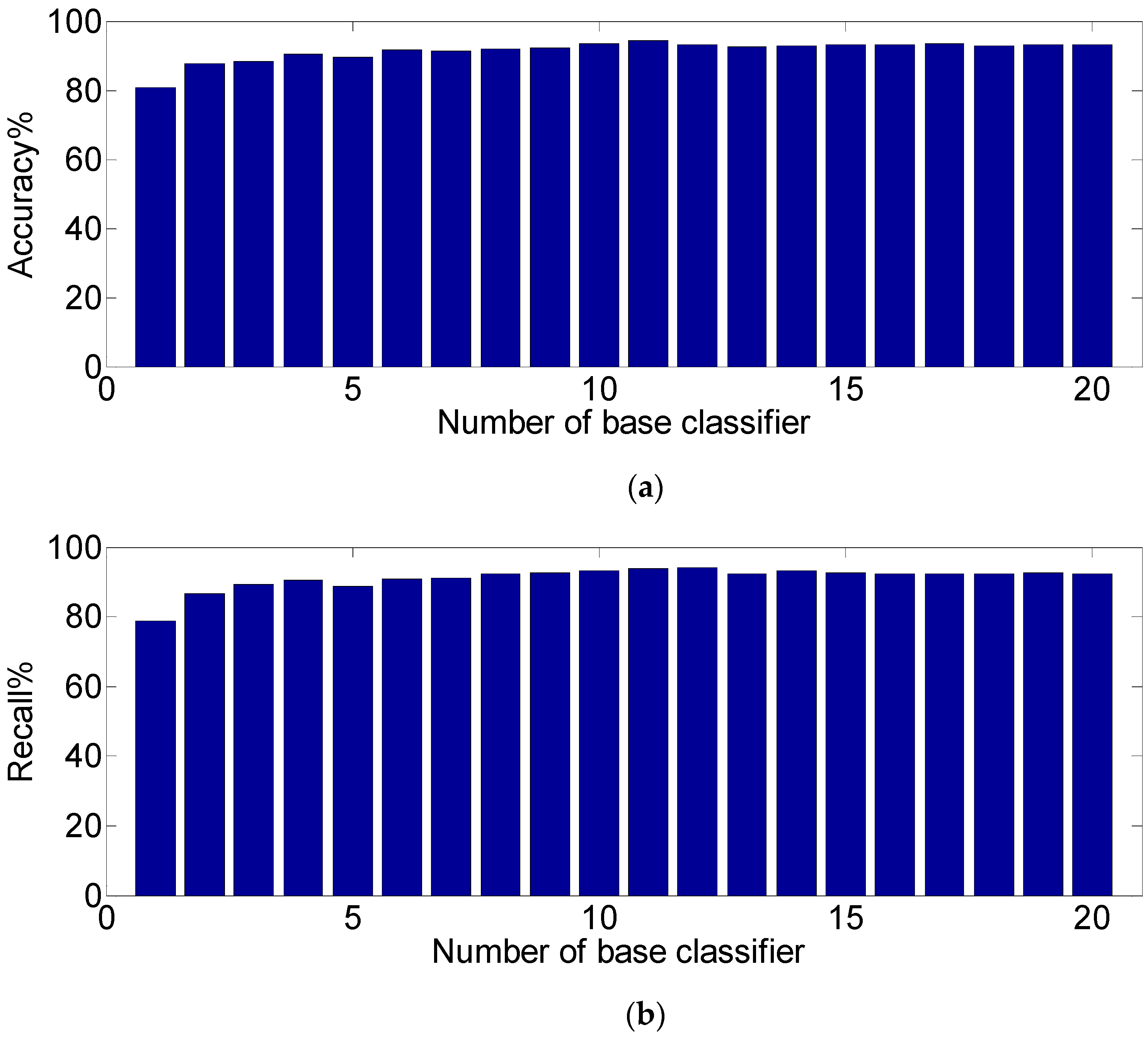

4.3.2. The Performance of Base Classifier

4.3.3. The Influence of Base Classifiers with or without Selection

5. Conclusions

Author Contributions

Acknowledgments

Conflicts of Interest

References

1. Choudhury, T.; Borriello, G.; Consolvo, S.; Haehnel, D.; Harrison, B.; Hemingway, B.; Hightower, J.; Koscher, K.; LaMarca, A.; Landay, J.A.; et al. The mobile sensing platform: An embedded activity recognition system. IEEE Pervasive Comput. 2008, 7, 32–41.

2. Alshurafa, N.; Xu, W.; Liu, J.J.; Huang, M.C.; Mortazavi, B.; Roberts, C.K.; Sarrafzadeh, M. Designing a robust activity recognition framework for health and exergaming using wearable sensors. IEEE J. Biomed. Health Inform. 2014, 18, 1636–1646.

3. Mooney, R.; Corley, G.; Godfrey, A.; Quinlan, L.R.; ÓLaighin, G. Inertial sensor technology for elite swimming performance analysis: A systematic review. Sensors 2016, 16, 18.

4. Cornacchia, M.; Ozcan, K.; Zheng, Y.; Velipasalar, S. A Survey on Activity Detection and Classification Using Wearable Sensors. IEEE Sens. J. 2016, 17, 386–403.

5. Xia, J.; Siochi, A. A real-time respiratory motion monitoring system using KINECT: Proof of concept. Med. Phys. 2012, 39, 2682–2685.

6. Qin, S.; Zhu, X.; Yang, Y. Real-time hand gesture recognition from depth images using convex shape decomposition method. J. Signal Process. Syst. 2014, 74, 47–58.

7. Procházka, A.; Charvátová, H.; Vyšata, O.; Kopal, J.; Chambers, J. Breathing analysis using thermal and depth imaging camera video records. Sensors 2017, 17, 1408.

8. Sargano, A.B.; Angelov, P.; Habib, Z. Comprehensive Review on Handcrafted and Learning-Based Action Representation Approaches for Human Activity Recognition. Appl. Sci. 2017, 7, 110.

9. Ma, X.; Wang, H.; Xue, B.; Zhou, M.; Ji, B.; Li, Y. Depth-based human fall detection via shape features and improved extreme learning machine. IEEE J. Biomed. Health Inform. 2014, 18, 1915–1922.

10. Chaquet, J.M.; Carmona, E.J.; Fernández-Caballero, A. A survey of video datasets for human action and activity recognition. Comput. Vis. Image Underst. 2013, 117, 633–659.

11. Suryadevara, N.K.; Mukhopadhyay, S.C. Wireless Sensor Network Based Home Monitoring System for Wellness Determination of Elderly. IEEE Sens. J. 2012, 12, 1965–1972.

12. Tapia, E.M.; Intille, S.S.; Larson, K. Activity recognition in the home using simple and ubiquitous sensors. Proc. Pervasive 2004, 3001, 158–175.

13. Chen, Z.; Zhu, Q.; Chai, S.Y.; Zhang, L. Robust human activity recognition using smartphone sensors via CT-PCA and online SVM. IEEE Trans. Ind. Inform. 2017, 13, 3070–3080.

14. Garcia-Ceja, E.; Brena, R.F.; Carrasco-Jimenez, J.C.; Garrido, L. Long-term activity recognition from wristwatch accelerometer data. Sensors 2014, 14, 22500–22524.

15. Gupta, P.; Dallas, T. Feature selection and activity recognition system using a single triaxial accelerometer. IEEE Trans. Bio-Med. Eng. 2014, 61, 1780–1786.

16. Attal, F.; Mohammed, S.; Dedabrishvili, M.; Chamroukhi, F.; Oukhellou, L. Physical human activity recognition using wearable sensors. Sensors 2015, 15, 31314–31338.

17. Gravina, R.; Alinia, P.; Ghasemzadeh, H.; Fortino, G. Multi-sensor fusion in body sensor networks: State-of-the-art and research challenges. Inf. Fusion 2017, 35, 68–80.

18. Zhang, Z.; Poslad, S. Improved use of foot force sensors and mobile phone GPS for mobility activity recognition. IEEE Sens. J. 2014, 14, 4340–4347.

19. Bao, L.; Intille, S.S. Activity recognition from user-annotated acceleration data. In Pervasive Computing; Springer: Berlin, Germany, 2004; pp. 1–17.

20. Wu, D.; Wang, Z.; Chen, Y.; Zhao, H. Mixed-kernel based weighted extreme learning machine for inertial sensor based human activity recognition with imbalanced dataset. Neurocomputing 2016, 190, 35–49.

21. Shoaib, M.; Bosch, S.; Incel, O.D.; Scholten, H.; Havinga, P.J.M. A survey of online activity recognition using mobile phones. Sensors 2015, 15, 2059–2085.

22. Khan, A.M.; Lee, Y.K.; Lee, S.Y.; Kim, T.S. A triaxial accelerometer-based physical-activity recognition via augmented-signal features and a hierarchical recognizer. IEEE Trans. Inf. Technol. Biomed. 2010, 14, 1166–1172.

23. Cheng, W.C.; Jhan, D.M. Triaxial accelerometer-based fall detection method using a self-constructing cascade-AdaBoost-SVM classifier. IEEE J. Biomed. Health Inform. 2013, 17, 411–419.

24. Maurer, U.; Smailagic, A.; Siewiorek, D.P.; Deisher, M. Activity recognition and monitoring using multiple sensors on different body positions. In Proceedings of the Workshop BSN, Cambridge, MA, USA, 3–5 April 2006; pp. 113–116.

25. Zhang, M.; Sawchuk, A.A. Human daily activity recognition with sparse representation using wearable sensors. IEEE J. Biomed. Health Inform. 2013, 17, 553–560.

26. Banos, O.; Pomares, H.; Rojas, F.; Delgado-Marquez, B.; Valenzuela, O. Human activity recognition based on a sensor weighting hierarchical classifier. Soft Comput. 2013, 17, 333–343.

27. Kwon, Y.; Kang, K.; Bae, C. Unsupervised learning for human activity recognition using smartphone sensor. Expert Syst. Appl. 2014, 41, 6067–6074.

28. Chernbumroong, S.; Shuang, C.; Yu, H. Genetic algorithm-based classifiers fusion for multisensor activity recognition of elderly people. IEEE J. Biomed. Health Inform. 2014, 19, 282–289.

29. Catal, C.; Tufekci, S.; Pirmit, E.; Kocabag, G. On the use of ensemble of classifiers for accelerometer-based activity recognition. Appl. Soft Comput. 2015, 37, 1018–1022.

30. Gibson, R.M.; Amira, A.; Ramzan, N.; Casaseca-De-La-Higuera, P.; Pervez, Z. Multiple comparator classifier framework for accelerometer-based fall detection and diagnostic. Appl. Soft Comput. 2016, 39, 94–103.

31. Visentini, I.; Snidaro, L.; Foresti, G.L. Diversity-aware classifier ensemble selection via f-score. Inf. Fusion 2016, 28, 24–43.

32. Mousavi, R.; Eftekhari, M. A new ensemble learning methodology based on hybridization of classifier ensemble selection approaches. Appl. Soft Comput. 2015, 37, 652–666.

33. Ykhlef, H.; Bouchaffra, D. An efficient ensemble pruning approach based on simple coalitional games. Inf. Fusion 2017, 34, 28–42.

34. Pavey, T.G.; Gilson, N.D.; Gomersall, S.R.; Clark, B.; Trost, S.G. Field evaluation of a random forest activity classifier for wrist-worn accelerometer data. J. Sci. Med. Sport 2017, 20, 75–80.

35. Lee, Y.S.; Cho, S.B. Activity recognition with android phone using mixture-of-experts co-trained with labeled and unlabel. Neurocomputing 2014, 126, 106–115.

36. Yuan, Y.; Wang, C.; Zhang, J.; Xu, J.; Li, M. An ensemble approach for activity recognition with accelerometer in mobile-phone. In Proceedings of the 2014 IEEE 17th International Conference on Computational Science and Engineering, Chengdu, China, 19–21 December 2014; pp. 1469–1474.

37. Cao, J.; Li, W.; Ma, C.; Tao, Z. Optimizing multi-sensor deployment via ensemble pruning for wearable activity recognition. Inf. Fusion 2018, 41, 68–79.

38. Bayat, A.; Pomplun, M.; Tran, D.A. A study on human activity recognition using accelerometer data from smartphones. Procedia Comput. Sci. 2014, 34, 450–457.

39. Daghistani, T.; Alshammari, R. Improving accelerometer-based activity recognition by using ensemble of classifiers. Int. J. Adv. Comput. Sci. Appl. 2016, 7, 128–133.

40. Ronao, C.A.; Cho, S.B. Human activity recognition with smartphone sensors using deep learning neural networks. Expert Syst. Appl. 2016, 59, 235–244.

41. Hassan, M.M.; Huda, S.; Uddin, M.Z.; Almogren, A.; Alrubaian, M. Human Activity Recognition from Body Sensor Data using Deep Learning. J. Med. Syst. 2018, 42, 99.

42. Chen, Y.; Zhao, Z.; Wang, S.; Chen, Z. Extreme learning machine-based device displacement free activity recognition model. Soft Comput. 2012, 16, 1617–1625.

43. Wang, A.; Chen, G.; Yang, J.; Zhao, S.; Chang, C.Y. A comparative study on human activity recognition using inertial sensors in a smart-phone. IEEE Sens. J. 2016, 16, 4566–4578.

44. Tao, D.; Wen, Y.; Hong, R. Multi-column bi-directional long short-term memory for mobile devices-based human activity recognition. IEEE Internet Things J. 2016, 3, 1124–1134.

45. Förster, K.; Brem, P.; Roggen, D.; Tröster, G. Evolving discriminative features robust to sensor displacement for activity recognition in body area sensor networks. In Proceedings of the 5th International Conference on Intelligent Sensors, Sensor Networks and Information, Melbourne, VIC, Australia, 7–10 December 2009; pp. 43–48.

46. Wang, Z.; Wu, D.; Chen, J.; Ghoneim, A.; Hossain, M.A. A triaxial accelerometer-based human activity recognition via EEMD-based features and game-theory-based feature selection. IEEE Sens. J. 2016, 16, 3198–3207.

47. Damaševičius, R.; Vasiljevas, M.; Šalkevičius, J.; Woźniak, M. Human activity recognition in AAL environments using random projections. Comput. Math. Methods Med. 2016, 2016, 4073584.

48. Bersch, S.; Azzi, D.; Khusainov, R.; Achumba, I.; Ries, J. Sensor data acquisition and processing parameters for human activity classification. Sensors 2014, 14, 4239–4270.

49. Mika, S.; Rätsch, G.; Müller, K.R. A mathematical programming approach to the kernel Fisher algorithm. Adv. Neural Inf. Process. Syst. 2001, 13, 591–597.

50. Peng, C.S. Data Classification Algorithm Based on Kernel Fisher Discriminant; Zhejiang Sci-Tech University: Hangzhou, China, 2011.

51. Hung, W.L.; Lee, E.S.; Chuang, S.C. Balanced bootstrap resampling method for neural model selection. Comput. Math. Appl. 2011, 62, 4576–4581.

52. Li, F.; Zhang, J.; Oko, E.; Wang, M. Modelling of a post-combustion CO2 capture process using neural networks. Fuel 2015, 151, 156–163.

53. Wang, Z.; Wu, D.; Gravina, R.; Fortino, G.; Jiang, Y.; Tang, K. Kernel fusion based extreme learning machine for cross-location activity recognition. Inf. Fusion 2017, 37, 1–9.

54. Hu, L.; Chen, Y.; Wang, J.; Hu, C.; Jiang, X. OKRELM: Online kernelized and regularized extreme learning machine for wearable-based activity recognition. Int. J. Mach. Learn. Cybern. 2017, 6256, 1577–1590.

55. Skalak, D.B. The sources of increased accuracy for two proposed boosting algorithms. In Proceedings of the 13th American Association for Artificial Intelligence, Integrating Multiple Learned Models Workshop, Portland, OR, USA, 4–8 August 1996; pp. 120–125.

56. Cunningham, P.; Carney, J. Diversity versus quality in classification ensembles based on feature selection. In Machine Learning: ECML 2000; López de Mántaras, R., Plaza, E., Eds.; Lecture Notes in Computer Science (Lecture Notes in Artificial Intelligence); Springer: Berlin/Heidelberg, Germany, 2000; Volume 1810.

57. Partridge, D.; Krzanowski, W.J. Software diversity: Practical statistics for its measurement and exploitation. Inf. Softw. Technol. 1997, 39, 707–717.

| Author | Year | Activities (Number Studied) | Classifier and Accuracy | Contribution |
|---|---|---|---|---|
| Catal [29] | 2015 | Walking, upstairs, downstairs, sitting, jogging, and standing (6) | Ensemble of J48 decision tree, multilayer perceptron (MLP), and logistic regression (72.73%–98.7%) | Examining the power of an ensemble of classifiers for activity recognition |
| Lee [35] | 2014 | Still, walk, and run (3) | Mixture-of-experts (ME) model (92.56% ± 1.05%) | The global–local cotraining algorithm was used to train the ME model |
| Yuan [36] | 2014 | Walking, running, standing, ascending and descending stairs (5) | Average combining extreme learning machine (ELM) (95.02%) | A novel ensemble learning algorithm was proposed |
| Cao [37] | 2018 | Daily and sports activities dataset (18); Opportunity dataset (4) | ELM-based ensemble pruning: daily and sports activities dataset (0.7848 ± 0.0077), Opportunity dataset (0.9142 ± 0.0098) | Optimizing multisensor deployment by ensemble pruning |
| Bayat [38] | 2014 | Slow-walk, fast-walk, aerobic dancing, stairs-up, stairs-down (5) | MLP, LogitBoost, and SVM classifiers (91.15%) | Investigating different fusion methods to obtain an optimal set of classifiers |
| Ronao [40] | 2016 | Stand, walk, stair up, stair down, run, and lying (6) | Deep convolutional neural network; 94.79% accuracy with raw sensor data | Exploiting the inherent characteristics of activities by smartphone sensors |
| Khan [22] | 2010 | Three activity states including activities such as walking, standing, etc. (15) | Artificial neural nets (97.9%) | Linear discriminant analysis and a hierarchical approach |
| Hassan [41] | 2018 | Activities including standing, sitting, walking, lying down, stand-to-sit, etc. (12) | Deep belief network (DBN) (97.5%) | Kernel principal component analysis and linear discriminant analysis were performed to obtain more robust features |
| Chen [42] | 2012 | Daily activities including staying still, walking, running, going upstairs, and going downstairs (5) | ELM (79.68%) | Principal component analysis and ELM were utilized to realize location-adaptive activity recognition |
| Wang [43] | 2016 | Walking, upstairs, downstairs, sitting, standing, and lying (6) | k-Nearest Neighbor, KNN (87.8%); Naïve Bayes (90.1%) | Hybrid feature selection method for smartphone-based activity recognition |
| Tao [44] | 2016 | Jumping, running, walking, step walking, walking quickly, down stairs, up stairs (7) | A new ensemble classifier termed multicolumn bidirectional long short-term memory (BLSTM); average error rate: 10.6% | Two-directional feature for BLSTM-based activity recognition |
| Wang [46] | 2016 | Standing, walking, jumping, bicycling, etc. (9) | KNN with 21 features (76.42%) | Game-theory-based feature selection was used for selecting distinguished features |

| | Age (years) | Height (cm) | Weight (kg) |
|---|---|---|---|
| Range | 20–38 | 160–178 | 45–85 |
| Mean | 29.6 | 166 | 65.6 |
| Std | 6.7 | 5.6 | 13.5 |

| Activity Number | Total Duration (s) | Activity Number | Total Duration (s) |
|---|---|---|---|
| 1 walk (W) | 1342 | 5 go up stairs (GU) | 1123 |
| 2 stand (S) | 1253 | 6 sit on a chair (SC) | 879 |
| 3 jump (J) | 976 | 7 run forward (R) | 1143 |
| 4 go down stairs (GD) | 1034 | 8 lie (L) | 769 |

| | W | S | J | GD | GU | SC | R | L |
|---|---|---|---|---|---|---|---|---|
| W | 458 | 6 | 6 | 28 | 24 | 17 | 19 | 6 |
| S | 5 | 449 | 4 | 6 | 6 | 1 | 10 | 2 |
| J | 9 | 6 | 371 | 22 | 34 | 12 | 12 | 7 |
| GD | 31 | 6 | 17 | 399 | 5 | 17 | 11 | 3 |
| GU | 21 | 6 | 26 | 4 | 395 | 4 | 11 | 5 |
| SC | 13 | 3 | 9 | 10 | 2 | 432 | 15 | 3 |
| R | 15 | 9 | 13 | 9 | 14 | 16 | 441 | 5 |
| L | 2 | 1 | 0 | 0 | 1 | 3 | 2 | 350 |

| | W | S | J | GD | GU | SC | R | L |
|---|---|---|---|---|---|---|---|---|
| W | 528 | 2 | 3 | 13 | 8 | 5 | 2 | 3 |
| S | 3 | 458 | 2 | 3 | 4 | 3 | 8 | 2 |
| J | 2 | 1 | 447 | 6 | 8 | 5 | 2 | 2 |
| GD | 11 | 2 | 5 | 452 | 3 | 10 | 5 | 1 |
| GU | 14 | 7 | 9 | 2 | 432 | 1 | 6 | 1 |
| SC | 9 | 4 | 4 | 12 | 2 | 442 | 9 | 5 |
| R | 6 | 8 | 2 | 4 | 7 | 14 | 476 | 5 |
| L | 1 | 1 | 1 | 0 | 1 | 1 | 2 | 352 |

| | W | S | J | GD | GU | SC | R | L |
|---|---|---|---|---|---|---|---|---|
| W | 531 | 2 | 3 | 10 | 8 | 5 | 2 | 3 |
| S | 3 | 461 | 2 | 3 | 4 | 2 | 6 | 2 |
| J | 2 | 1 | 450 | 5 | 6 | 5 | 2 | 2 |
| GD | 10 | 2 | 4 | 456 | 3 | 9 | 4 | 1 |
| GU | 12 | 5 | 8 | 2 | 439 | 1 | 5 | 0 |
| SC | 8 | 3 | 3 | 12 | 1 | 446 | 9 | 5 |
| R | 5 | 9 | 3 | 2 | 6 | 12 | 480 | 5 |
| L | 1 | 1 | 1 | 0 | 1 | 1 | 1 | 353 |
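
To make the confusion matrices easier to read, the following minimal sketch computes overall accuracy and per-class recall from the matrix immediately above. It assumes that rows are the true activities and columns the predicted ones; the section does not state the orientation, so treat that as an assumption. The helper names are hypothetical.

```python
# Activity abbreviations in the order used by the matrix.
LABELS = ["W", "S", "J", "GD", "GU", "SC", "R", "L"]

# Counts copied from the matrix above.
# Assumption: each row is a true class, each column a predicted class.
MATRIX = [
    [531, 2, 3, 10, 8, 5, 2, 3],
    [3, 461, 2, 3, 4, 2, 6, 2],
    [2, 1, 450, 5, 6, 5, 2, 2],
    [10, 2, 4, 456, 3, 9, 4, 1],
    [12, 5, 8, 2, 439, 1, 5, 0],
    [8, 3, 3, 12, 1, 446, 9, 5],
    [5, 9, 3, 2, 6, 12, 480, 5],
    [1, 1, 1, 0, 1, 1, 1, 353],
]


def overall_accuracy(matrix):
    """Diagonal (correct) count divided by the total sample count."""
    total = sum(sum(row) for row in matrix)
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    return correct / total


def per_class_recall(matrix, labels):
    """For each class, the diagonal entry divided by its row sum."""
    return {
        label: matrix[i][i] / sum(matrix[i])
        for i, label in enumerate(labels)
    }


accuracy = overall_accuracy(MATRIX)
recall = per_class_recall(MATRIX, LABELS)
for label in LABELS:
    print(f"{label}: recall = {recall[label]:.4f}")
print(f"overall accuracy = {accuracy:.4f}")
```

If the matrix is instead oriented with predicted classes in rows, the per-row ratio computed here would be precision rather than recall; the overall accuracy is unaffected.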

| Classifier | C1 | C2 | C3 | C4 | C5 | C6 | C7 | C8 | C9 | C10 |
|---|---|---|---|---|---|---|---|---|---|---|
| Diversity | 0.634 | 0.275 | 0.876 | 0.403 | 0.852 | 0.605 | 0.247 | 0 | 0.284 | 0.786 |
| Ranking | 8 | 19 | 4 | 13 | 5 | 9 | 20 | 1 | 18 | 6 |

| Classifier | C11 | C12 | C13 | C14 | C15 | C16 | C17 | C18 | C19 | C20 |
|---|---|---|---|---|---|---|---|---|---|---|
| Diversity | 0.389 | 0.685 | 0.372 | 0.968 | 0.417 | 0.322 | 0.587 | 0.914 | 0.462 | 0.303 |
| Ranking | 14 | 7 | 15 | 2 | 12 | 16 | 10 | 3 | 11 | 17 |
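
The table above lists a diversity value and a ranking for each of the 20 base classifiers. How the ranking is derived is not shown in this section, so the sketch below only illustrates the last step, keeping the k best-ranked classifiers. The function name `select_top_k` is hypothetical, and the rankings are copied directly from the table.

```python
# Ranking of each base classifier, copied from the table above
# (1 = selected first, 20 = selected last).
RANKING = {
    "C1": 8, "C2": 19, "C3": 4, "C4": 13, "C5": 5,
    "C6": 9, "C7": 20, "C8": 1, "C9": 18, "C10": 6,
    "C11": 14, "C12": 7, "C13": 15, "C14": 2, "C15": 12,
    "C16": 16, "C17": 10, "C18": 3, "C19": 11, "C20": 17,
}


def select_top_k(ranking, k):
    """Return the names of the k best-ranked classifiers, best first."""
    if not 0 < k <= len(ranking):
        raise ValueError("k must be between 1 and the number of classifiers")
    ordered = sorted(ranking, key=ranking.get)  # ascending rank
    return ordered[:k]


top_five = select_top_k(RANKING, 5)
top_ten = select_top_k(RANKING, 10)
print(top_five)
print(top_ten)
```

Subsets of this kind, of size 5, 10, or 15, could then be compared against fusing all 20 classifiers or against random subsets of the same size.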

| Combination Rule | Number of Classifiers | Accuracy (%) | Recall (%) |
|---|---|---|---|
| Fusion | 20 | 93.15 | 92.35 |
| Selection | 15 | 93.08 | 92.78 |
| Selection | 10 | 93.37 | 93.17 |
| Selection | 5 | 89.68 | 88.68 |
| Random | 15 | 84.68 | 84.32 |
| Random | 10 | 85.56 | 84.47 |
| Random | 5 | 82.43 | 81.67 |

| Method | Best Base ELM | SVM | Bagging | AdaBoost | Proposed Method |
|---|---|---|---|---|---|
| Number of classifiers | 1 | 1 | 11 | 11 | 11 |
| Accuracy (%) | 81.85 | 83.42 | 85.38 | 88.63 | 94.28 |
| Recall (%) | 80.18 | 83.29 | 84.72 | 87.69 | 93.89 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Tian, Y.; Wang, X.; Chen, L.; Liu, Z. Wearable Sensor-Based Human Activity Recognition via Two-Layer Diversity-Enhanced Multiclassifier Recognition Method. Sensors 2019, 19, 2039. https://doi.org/10.3390/s19092039
