3.2.1. Empathy Module

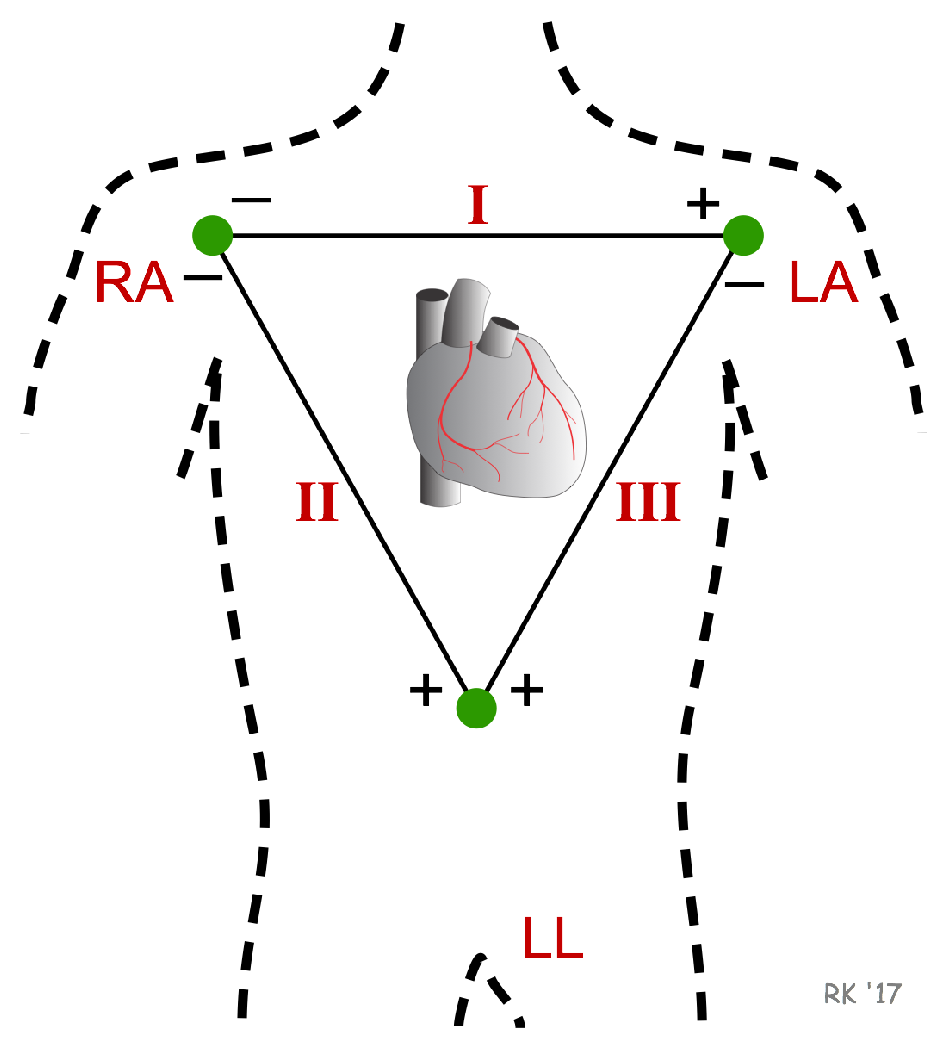

One of the problems encountered is directly related to the generalization of the model, that is, its ability to detect emotion for any person. To pursue this generalization, an experiment was developed in which a group of individuals was subjected to different visual and auditory stimuli. During this process, four data sets were extracted: the personality, the emotion detected through a webcam, different bio-signals and the emotion that the user felt in response to each stimulus. The personality was extracted using the OCEAN test [39]. This test allowed us to group the subjects by their personalities. The second data set was formed by the emotions that the users expressed through the variations of their faces when they were subjected to the stimuli. The third data set relates to the bio-signals (ECG, PPG, EDA) that were acquired during the stimulus. Finally, the fourth data set is a subjective input, obtained through the SAM (Self-Assessment Manikin) test [40]. In this test, the user expressed the emotion he or she felt when the stimulus was presented. This process is shown in Figure 6. In order to obtain generalized models of emotion detection, a total of 150 people from a residence in the north of Portugal took part.

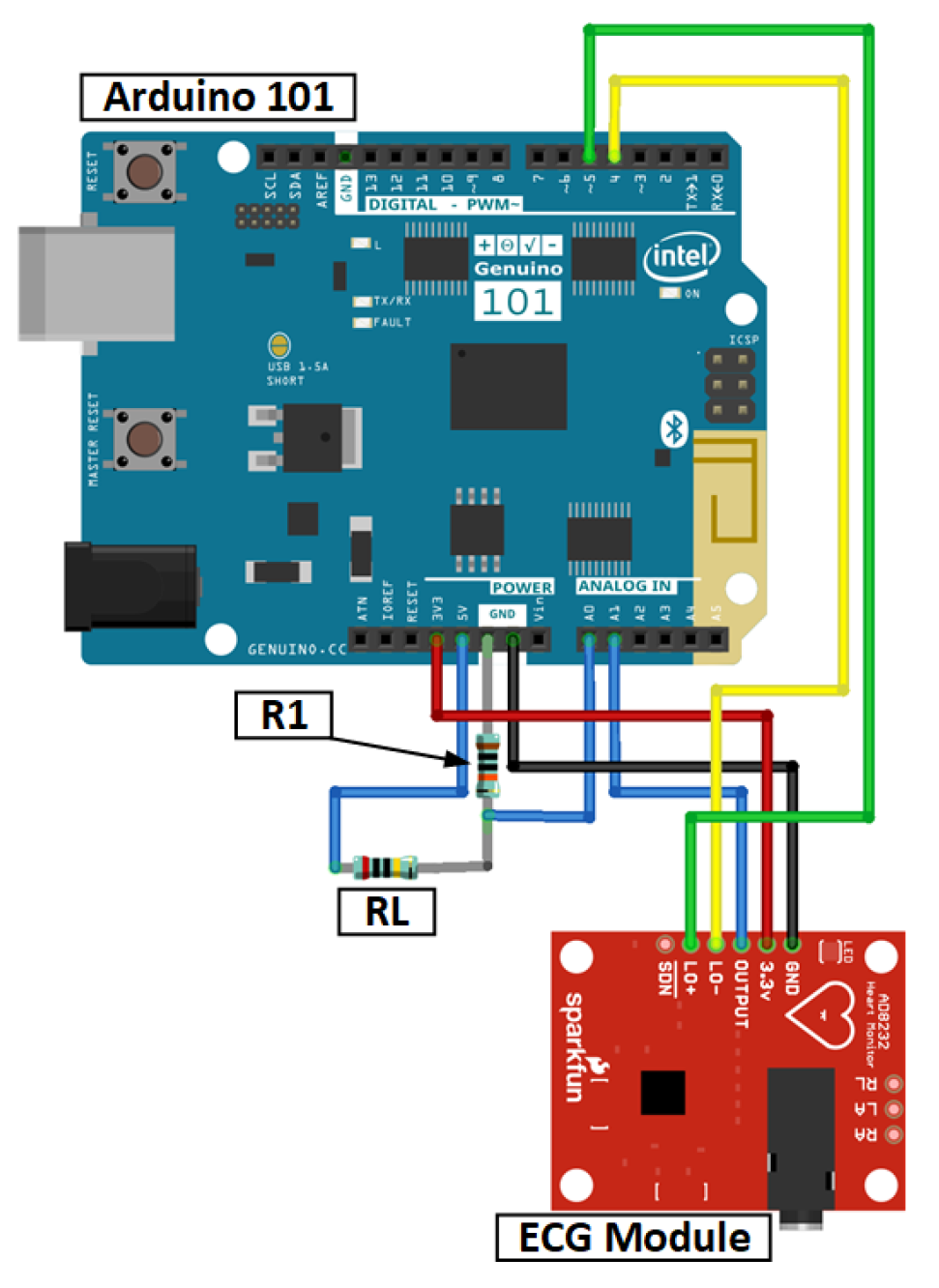

The cognitive service was divided into two parts: one part specialized in the recognition of emotions through image processing (capturing data through the camera) and the other part in which bio-signals are used to recognize emotions (capturing data through sensors). These elements are explained below.

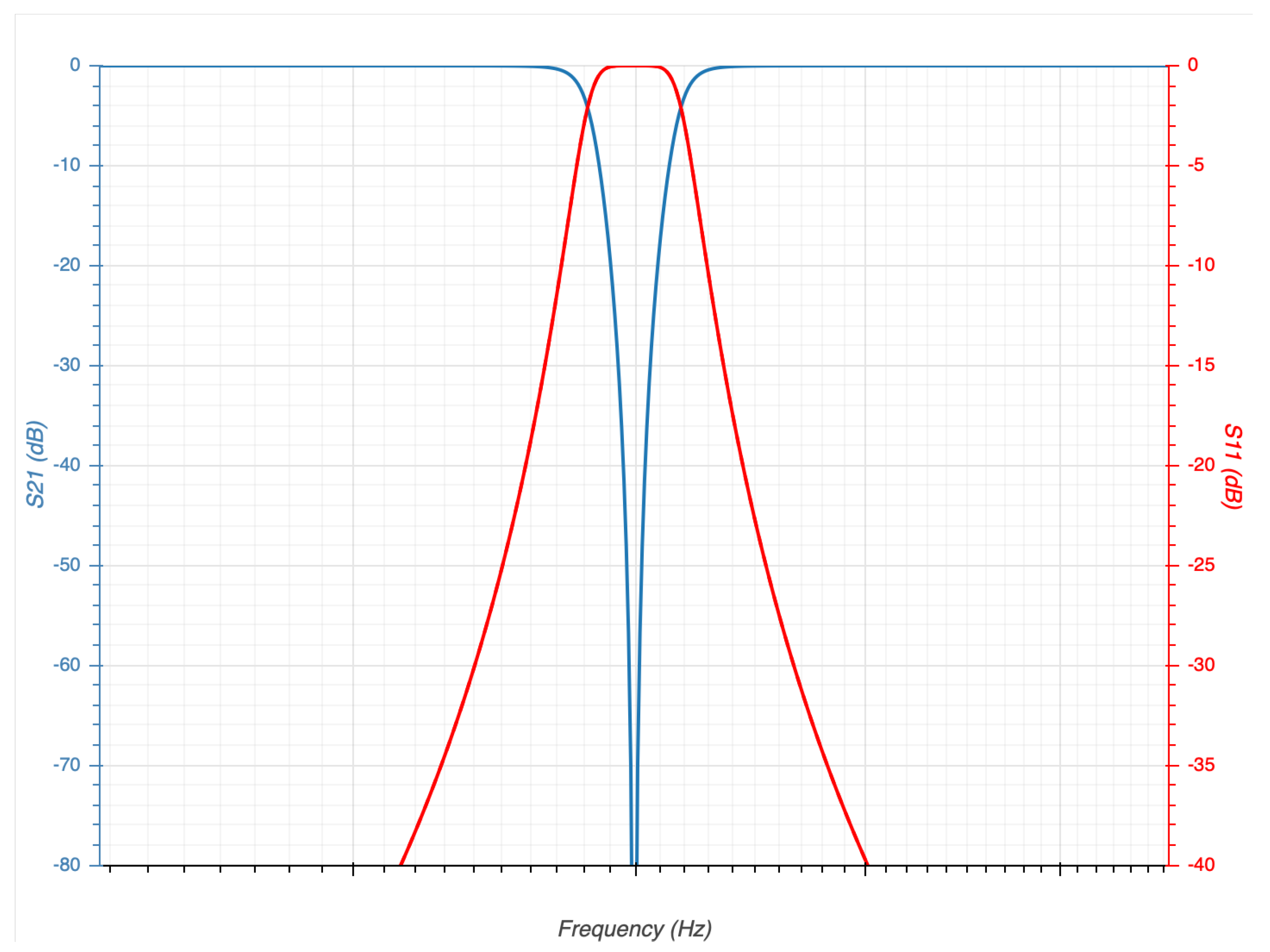

This database incorporates signals such as electroencephalography, respiratory frequency, electrocardiography and electrical activity of the skin, among others. For our tests, we decided to use only three of these signals (ECG, PPG and EDA), since, unlike the EEG, they do not generate any kind of stress in the users [42,43]. In this dataset, all the signals were filtered to eliminate the 50 Hz electrical noise; this is important for a good classification process. Our system also pre-processes the signals, for which a software Butterworth band-stop filter was applied. The structure of our filter design is detailed in Table 4 and the response of our filter can be seen in Figure 7.

This filter cuts the noise introduced by the electrical network; in addition, this filter architecture is the most used in applications of signal filtering [44].
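The filtering step can be illustrated with a minimal sketch using SciPy. The filter order, the width of the stop band and the sampling rate below are illustrative assumptions, not the values of Table 4, and the function name `remove_mains_noise` is hypothetical.

```python
import numpy as np
from scipy.signal import butter, sosfiltfilt


def remove_mains_noise(signal, fs, mains_hz=50.0, half_width_hz=2.0, order=4):
    """Suppress mains interference with a Butterworth band-stop filter.

    `order` and `half_width_hz` are assumed values chosen for illustration.
    """
    low = mains_hz - half_width_hz
    high = mains_hz + half_width_hz
    # Second-order sections are numerically more robust than (b, a) coefficients.
    sos = butter(order, [low, high], btype="bandstop", fs=fs, output="sos")
    # Forward-backward filtering gives zero phase shift, so peaks stay in place.
    return sosfiltfilt(sos, signal)


# Synthetic check: a slow 1.2 Hz wave contaminated with 50 Hz interference.
fs = 500.0  # assumed sampling rate in Hz
t = np.arange(0.0, 10.0, 1.0 / fs)
clean = np.sin(2 * np.pi * 1.2 * t)
noisy = clean + 0.5 * np.sin(2 * np.pi * 50.0 * t)
filtered = remove_mains_noise(noisy, fs)
```

Zero-phase filtering is used here because it keeps the timing of the signal's peaks intact; whether the original system filters in this way is not stated in the text.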

Once a clean signal is obtained, the next step is to extract the statistical characteristics of each signal; six characteristics were extracted from each signal, as suggested by Picard et al. [45]:

Mean of the absolute values of the first difference (AFD)

Mean of the normalized absolute values of the first difference (AFDN)

Mean of the absolute values of the second difference (ASD)

Mean of the normalized absolute values of the second difference (ASDN)
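The characteristics listed above can be computed as in the following sketch. Only four of the six characteristics are named in the list; the sketch assumes that the remaining two are the mean and the standard deviation of the raw signal, as in the feature set of Picard et al. It also assumes that the second difference is taken two samples apart and that the normalized signal has zero mean and unit variance. The function names are hypothetical.

```python
import numpy as np


def statistical_features(x):
    """Return six statistical features of a one-dimensional signal."""
    x = np.asarray(x, dtype=float)
    mean = x.mean()
    std = x.std(ddof=1)      # sample standard deviation
    z = (x - mean) / std     # normalized signal (zero mean, unit variance)
    return {
        "mean": mean,                            # assumed fifth feature
        "std": std,                              # assumed sixth feature
        "AFD": np.abs(x[1:] - x[:-1]).mean(),    # first difference, raw signal
        "AFDN": np.abs(z[1:] - z[:-1]).mean(),   # first difference, normalized signal
        "ASD": np.abs(x[2:] - x[:-2]).mean(),    # second difference, raw signal
        "ASDN": np.abs(z[2:] - z[:-2]).mean(),   # second difference, normalized signal
    }


def feature_vector(ecg, eda, ppg):
    """Concatenate the six features of each signal into one 18-element vector."""
    return np.concatenate(
        [list(statistical_features(s).values()) for s in (ecg, eda, ppg)]
    )


rng = np.random.default_rng(0)
example = feature_vector(rng.normal(size=200), rng.normal(size=200), rng.normal(size=200))
```

Because the normalized signal is the raw signal divided by its standard deviation (after removing the mean), AFDN and ASDN equal AFD and ASD divided by the standard deviation.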

The six characteristics extracted from each signal (ECG, EDA, PPG) are the inputs of the neural network. In this way, we have a neural network model with 18 inputs and seven outputs. The seven outputs correspond to the seven basic emotions that the system is able to recognize, which are the same emotions recognized using the camera: Afraid, Angry, Disgusted, Happy, Neutral, Sad and Surprised. The parameters of the best model obtained are listed in Table 5.

In the same way that the parameters of the network used to analyse the images were modified, the network that analyses the signal was modified to try to obtain the best results.
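To make the input and output dimensions concrete, the sketch below runs one forward pass through a small fully connected network with 18 inputs and seven outputs. The single hidden layer, its size, the ReLU activation and the random weights are assumptions made only for illustration; they do not reproduce the parameters of Table 5.

```python
import numpy as np

EMOTIONS = ["Afraid", "Angry", "Disgusted", "Happy", "Neutral", "Sad", "Surprised"]


def softmax(v):
    """Convert raw scores into probabilities that sum to one."""
    e = np.exp(v - v.max())  # subtract the maximum for numerical stability
    return e / e.sum()


def predict_emotion(features, w_hidden, b_hidden, w_out, b_out):
    """Map an 18-element feature vector to one of the seven emotion labels."""
    hidden = np.maximum(0.0, features @ w_hidden + b_hidden)  # ReLU hidden layer
    probs = softmax(hidden @ w_out + b_out)
    return EMOTIONS[int(np.argmax(probs))], probs


rng = np.random.default_rng(0)
n_hidden = 32  # assumed hidden layer size
w_hidden = rng.normal(scale=0.1, size=(18, n_hidden))
b_hidden = np.zeros(n_hidden)
w_out = rng.normal(scale=0.1, size=(n_hidden, len(EMOTIONS)))
b_out = np.zeros(len(EMOTIONS))

features = rng.normal(size=18)  # stands in for 6 features x 3 signals
label, probs = predict_emotion(features, w_hidden, b_hidden, w_out, b_out)
```

With untrained random weights the predicted label is meaningless; the example only shows how the 18 inputs and seven outputs fit together.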

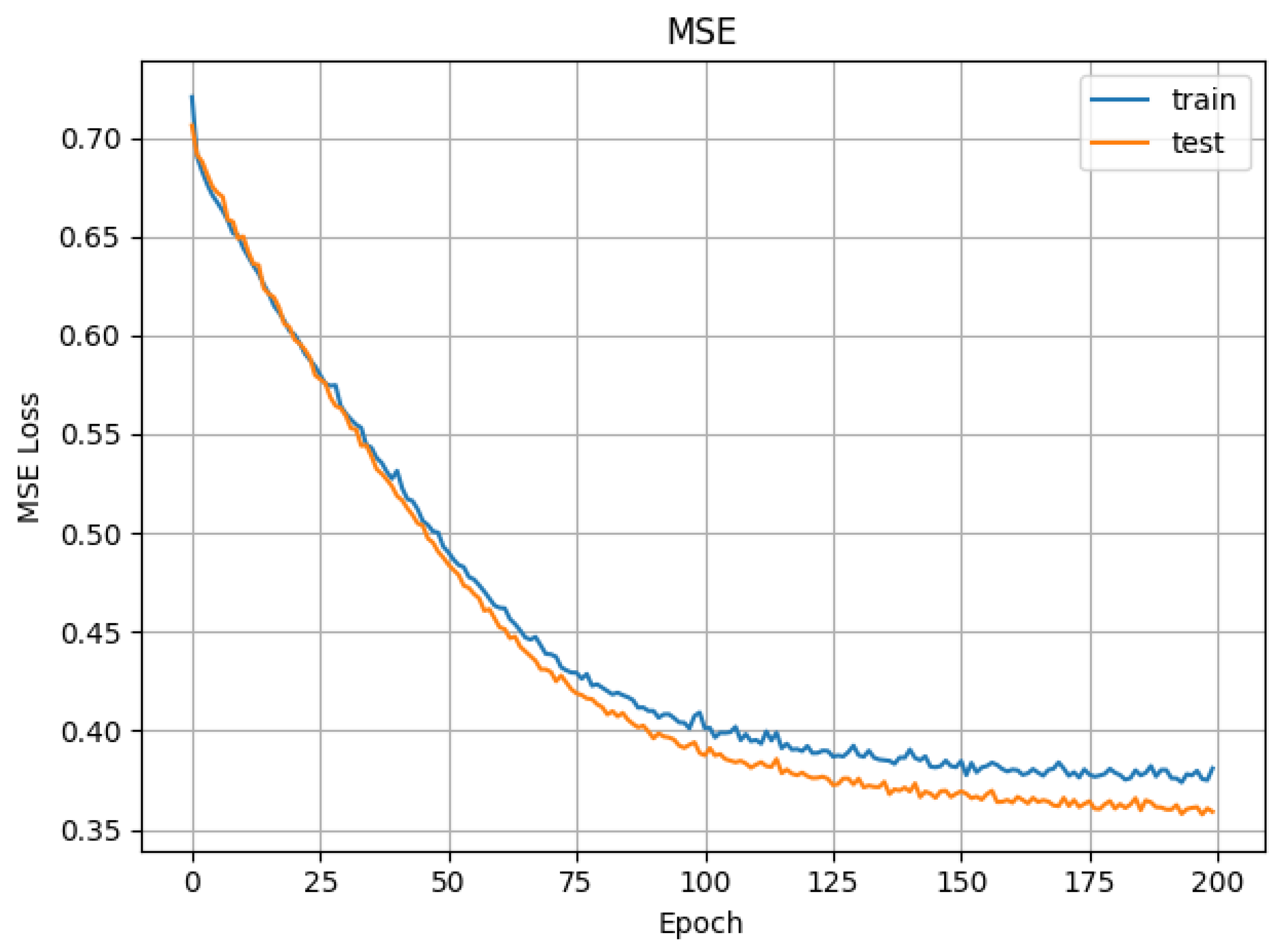

The mean square error obtained in the training and test phases is displayed in Figure 8. After the very first epochs, the values converge rapidly and remain almost constant throughout the remaining epochs. The low values of the mean square error indicate that the training process is proceeding correctly (the attained values have low variation).

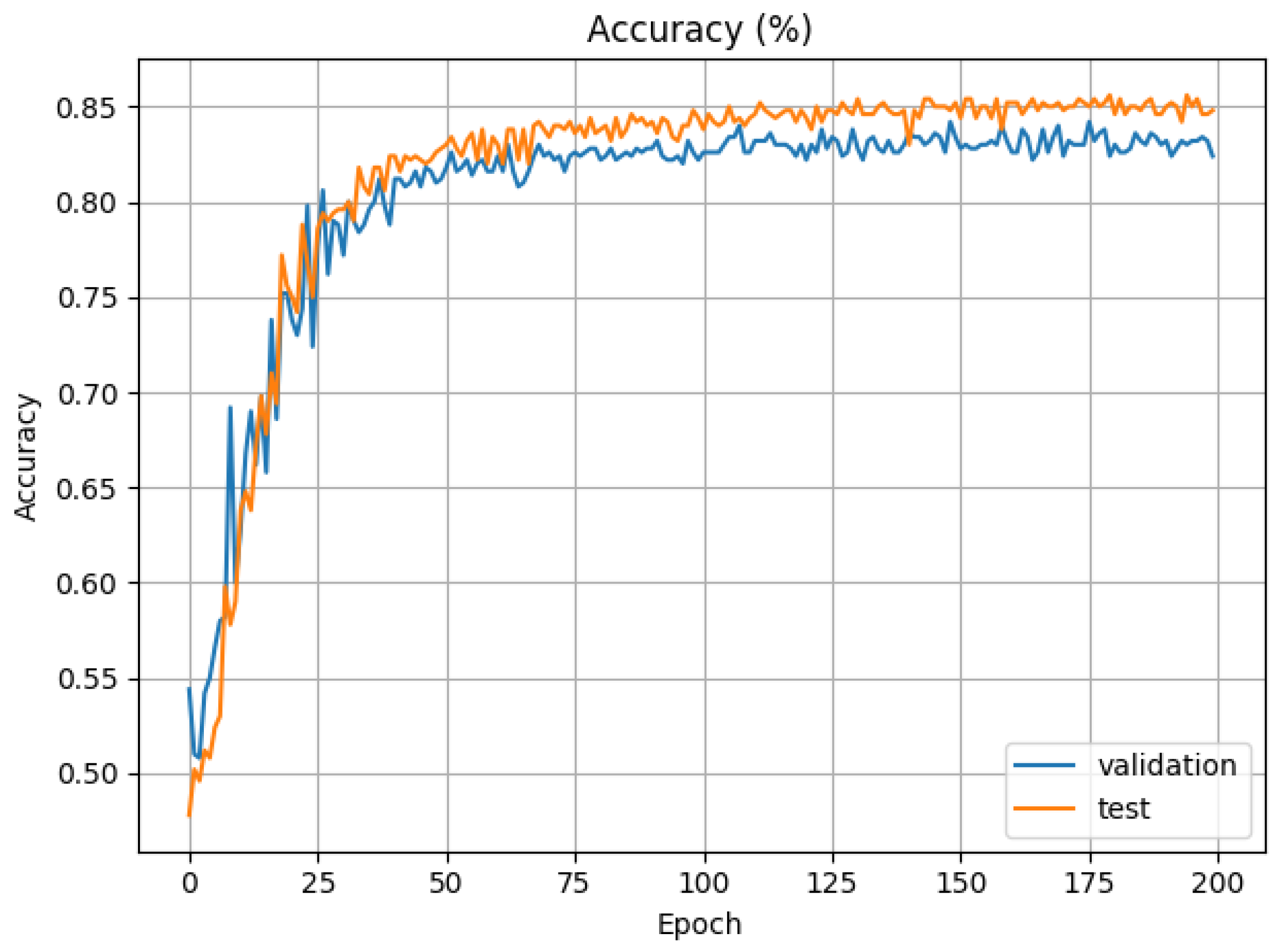

Figure 9 shows the accuracy (or precision) in the validation and test phases. We have achieved a stable accuracy of 75% in the validation process. This shows that, although it can possibly be improved, our current approach already produces relevant results.

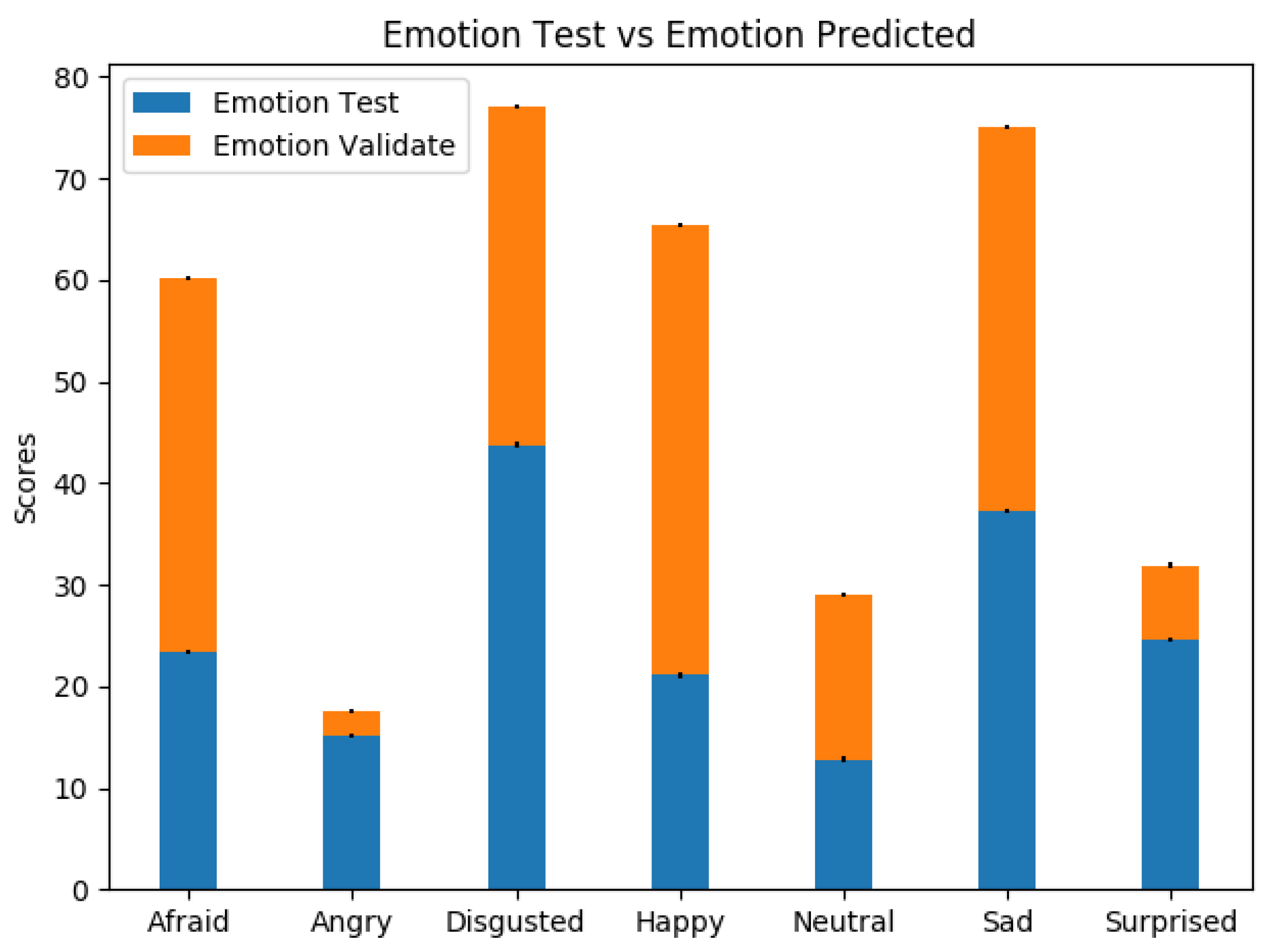

Figure 10 shows variability between the results obtained in the test phase and those of the validation phase. This variation is due to the fact that the users in the validation phase were not static. If some type of movement was performed, it can introduce erroneous data into the system. When people move, electromyography signals (EMG, muscle activity) are introduced into the ECG and, at the same time, these movements can affect the photoplethysmography signals by varying the measurement of PPM. This introduced noise is very difficult to eliminate; to try to solve it, we have incorporated the software filtering employed in [46,47].

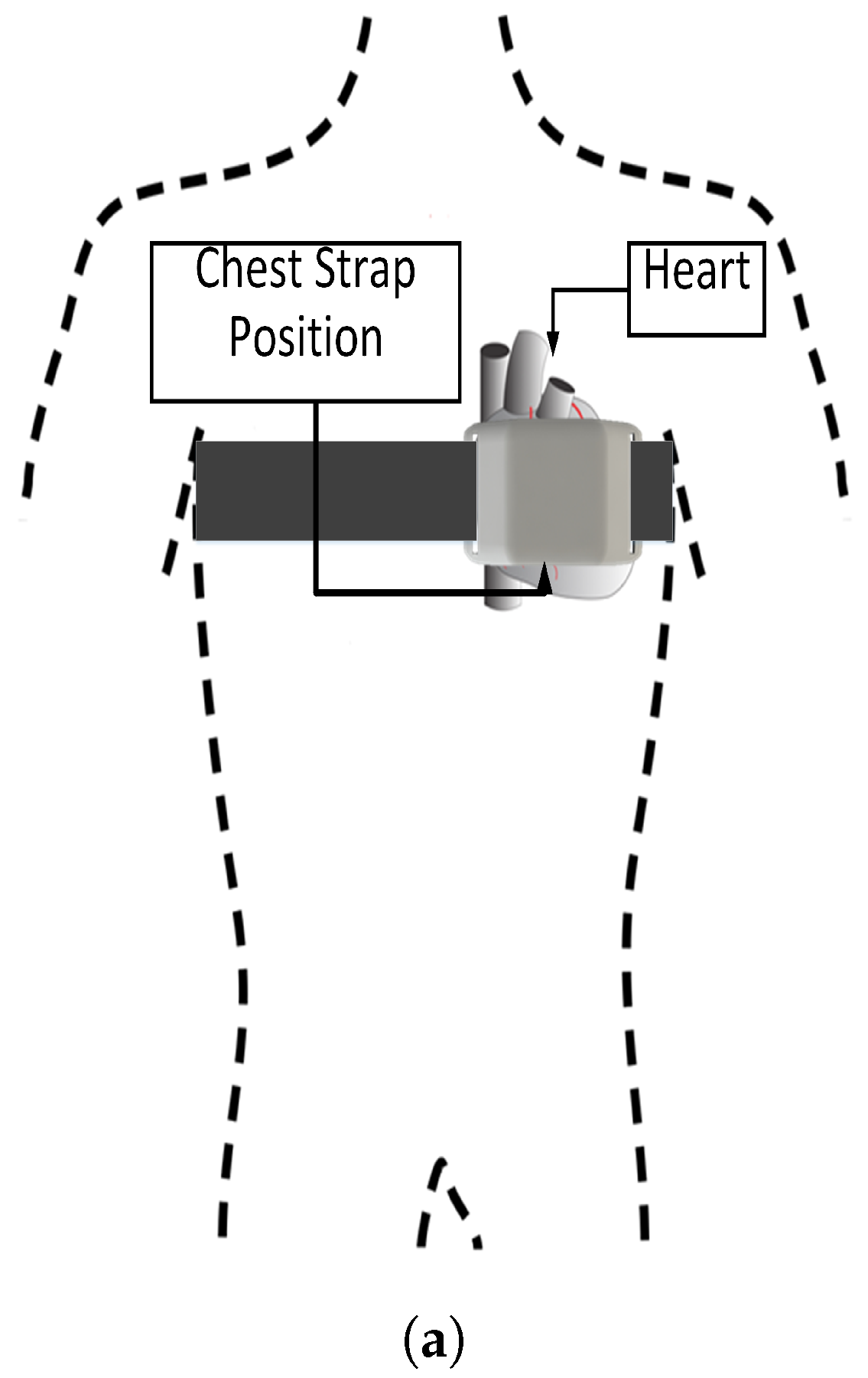

We believe that, with optimized sensor systems (reducing the noise and data drop) and with a more robust CNN, we will be able to achieve a higher accuracy and reduce the mean square error. We are currently working on improving the chest strap sensors to reduce the mean square error.

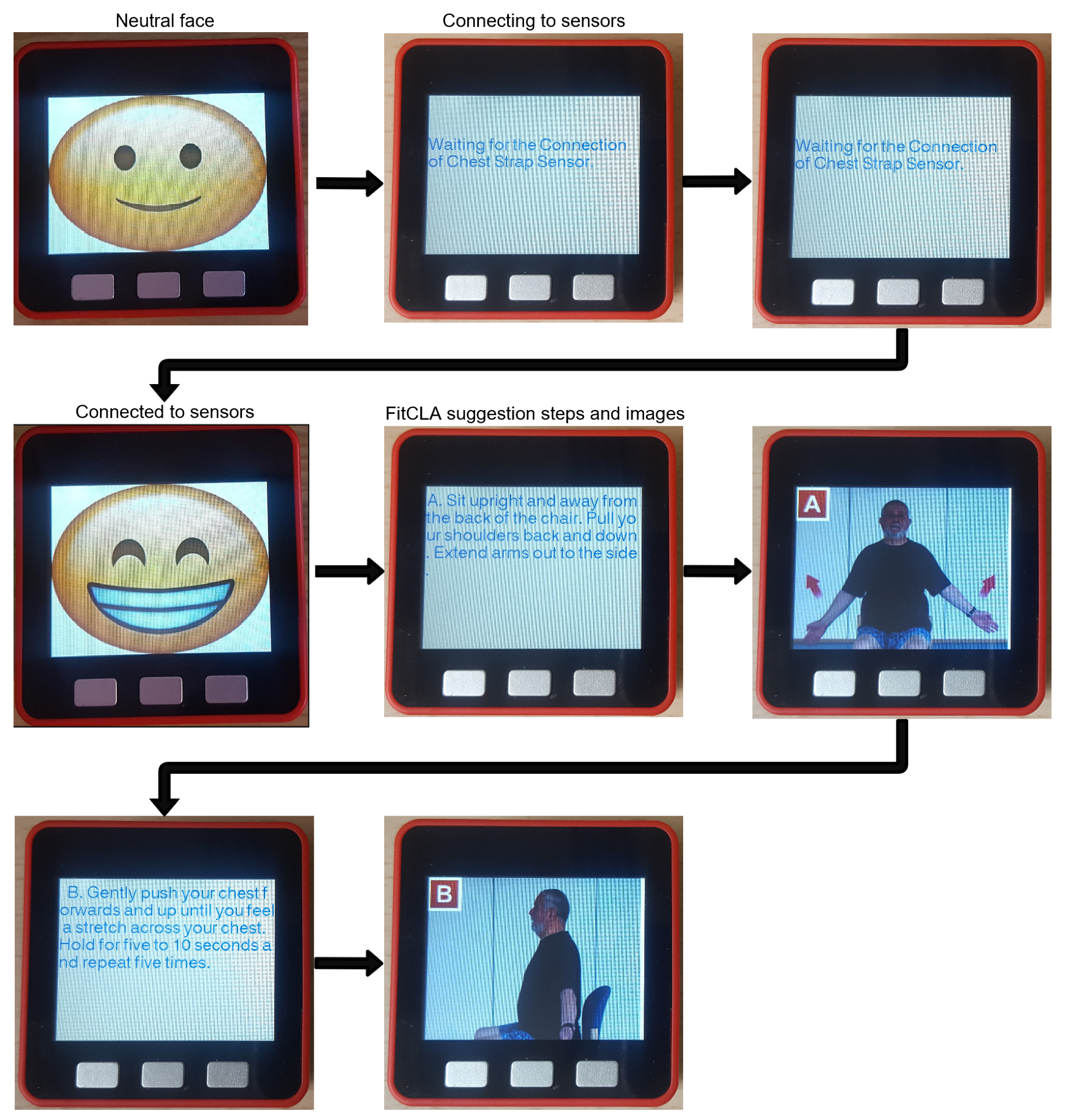

3.2.2. FitCLA

The FitCLA is, in a broader sense, a cognitive assistant platform that aims to help people with cognitive and physical impairments (e.g., memory loss, assisted mobility) by reminding them about future and current events, connecting them with the caregivers for constant monitoring, and suggesting playful exercises. It does this by establishing a monitoring environment (with the wristband) and using diverse visual interfaces (the visual assistant, webpages, etc.) to convey information to the users, while providing medical and general information to the caregivers.

The platform uses an interactive process of scheduling events and managing tasks that requires little interaction from the users (caregivers and care-receivers alike), thus making the scheduling process simple. Furthermore, the FitCLA has an activities recommender that suggests to the care-receivers activities that have a positive physical and mental impact; this feature follows the active ageing effort. By engaging elderly people in activities (either alone or accompanied), their cognitive and physical functions are improved and, arguably most importantly, they are happier. For instance, there are several findings that simple group memory games helped contain the advance of Alzheimer’s [48,49].

The FitCLA is a development spun from the iGenda project [50,51,52], giving way to a robust platform that is interoperable with other systems. To this end, the FitCLA is currently adjusted to only suggest exercises, which are the objective of this assistant, keeping the interaction with the users simple in order to observe their long-term adoption of the EMERALD.

The FitCLA has four main components: the agenda manager, the activities recommender, the module manager and the message transport system. Briefly explained, they have the following functionalities:

The agenda manager keeps the information of each user (caregiver and care-receiver) updated and, upon receiving new events, it schedules them in the correct placement;

The activities recommender regularly fetches an activity that the user enjoys performing and that is appropriate for the user's health condition (people often enjoy activities that are not physically or mentally advised) and schedules it in the user’s free time. It is evolutionary, as it adapts to the user’s likes (through the user's acceptance or refusal of the suggestion) and to the current medical condition, e.g., if the user has a broken leg the platform refrains from suggesting walking activities;

The module manager is the gateway for coupling new features and communicating with the different agents;

The message transport system establishes the API of the system. It is the door to the platform's internal workings.

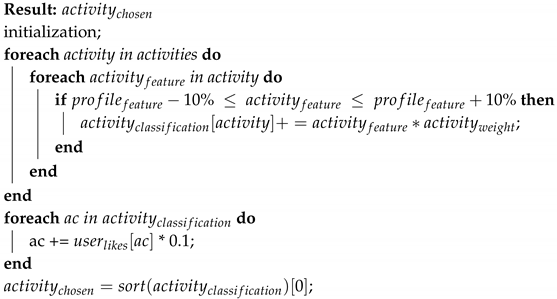

More specifically, the activities recommender uses an internal algorithm that optimizes the activity selection procedure. Each user has a profile in the system, consisting of a table of personal and medical information, with fields such as “is able to perform hard physical activities” and “shoulder/arms conditioning”. These fields are used to assess the user's ability to perform certain activities. Each activity is categorized in groups that detail its physical/cognitive impact. For instance, the activity Light gardening is ranked as having cognitive impact, mild impact on the shoulders, arms and spinal column, light impact on the legs and feet, and as using dangerous tools. This means that it would not be advised for a person with shoulder, arm or spinal column problems (minding the level of impairment each user has), nor for people with hand problems or high cognitive disabilities (due to the use of dangerous tools). Each problem is factored into a straightforward algorithm (shown in Algorithm 1) that filters the activities.

Algorithm 1: FitCLA selection algorithm
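The filtering idea described above can be sketched as follows. This is not a reproduction of Algorithm 1: the ordinal scales, the field names and the rule "impact plus impairment must not exceed a limit" are hypothetical choices made to illustrate how a user profile can exclude unsuitable activities.

```python
from dataclasses import dataclass, field

# Hypothetical ordinal scale for the impact of an activity on a body area.
LIGHT, MILD, HARD = 1, 2, 3


@dataclass
class Activity:
    name: str
    impact: dict = field(default_factory=dict)  # body area -> impact level
    uses_dangerous_tools: bool = False


@dataclass
class UserProfile:
    impairment: dict = field(default_factory=dict)  # body area -> level 0..3
    hand_problems: bool = False
    high_cognitive_disability: bool = False


def is_advisable(activity, profile, limit=3):
    """Return True when the activity is compatible with the user's profile."""
    # Dangerous tools rule out users with hand problems or high cognitive disability.
    if activity.uses_dangerous_tools and (
        profile.hand_problems or profile.high_cognitive_disability
    ):
        return False
    # Assumed rule: impact and impairment on the same area must not exceed `limit`.
    return all(
        level + profile.impairment.get(area, 0) <= limit
        for area, level in activity.impact.items()
    )


def filter_activities(activities, profile):
    """Keep only the activities that are advisable for this profile."""
    return [a for a in activities if is_advisable(a, profile)]


gardening = Activity(
    "Light gardening",
    {"shoulders": MILD, "arms": MILD, "spinal column": MILD, "legs": LIGHT, "feet": LIGHT},
    uses_dangerous_tools=True,
)
reading = Activity("Reading")
catalogue = [gardening, reading]
```

For example, `filter_activities(catalogue, UserProfile(impairment={"shoulders": 2}))` keeps only the reading activity, while a user without impairments keeps both.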

Each activity feature is multiplied by a weight of importance (at the moment, all weights are the same) and the products are summed to give the activity classification. The maximum value that can be obtained is , leaving the remainder to the user preference. These values are configurable for each user (meaning that one user may have a higher percentage on their preference); the values given here are the initial ones. All of the weights can be changed by the caregivers.
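The weighted classification can be written as a short sketch. The share reserved for the activity features (`health_share`) is a placeholder: the maximum value is not given in the text, so 0.7 is used here purely as an assumption. The function name and the scaling of all quantities to the interval from 0 to 1 are also hypothetical.

```python
def activity_score(feature_fit, weights, preference, health_share=0.7):
    """Combine the weighted activity features with the user preference.

    feature_fit:  feature name -> how well the activity fits the user (0..1)
    weights:      feature name -> weight of importance
    preference:   how much the user likes the activity (0..1)
    health_share: assumed maximum contribution of the activity features
    """
    total_weight = sum(weights[name] for name in feature_fit)
    # Weighted average of the feature fits, so the result stays within 0..1.
    health = sum(weights[name] * fit for name, fit in feature_fit.items()) / total_weight
    # The remainder of the score is left to the user preference.
    return health_share * health + (1.0 - health_share) * preference


equal_weights = {"shoulders": 1.0, "legs": 1.0}  # all weights currently the same
score = activity_score({"shoulders": 1.0, "legs": 0.0}, equal_weights, preference=0.5)
```

Raising a user's preference share corresponds to lowering `health_share` for that user, which is one way a caregiver-configurable weighting could be expressed.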

At the end of each suggestion, the users are asked whether they agree with it. The response is factored into the weight of the user's liking for each activity. This means that the system steadily learns the preferences of each user and is slowly optimized to respond to each user's preferences and needs. Finally, the FitCLA has thresholds that, when reached, result in either notifying the caregiver or slightly nudging the weights. For instance, in a normal setting, if a user refuses activities that closely fit the health profile seven times, the maximum weight of the activity features is decreased and the weight of the user's likes is increased; the system is able to re-weight the values again if this "nudge" is not enough. The caregiver is notified if abnormal behaviours (such as the refusal of several activities) occur.
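The feedback loop described above can be sketched as a small state holder. Only the threshold of seven refusals comes from the text; the learning rate, the size of the nudge, the lower bound and the choice to count refusals since the last nudge are assumptions, and the class name is hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class PreferenceLearner:
    health_share: float = 0.7      # assumed initial share of the activity features
    min_health_share: float = 0.5  # assumed lower bound before notifying the caregiver
    nudge_step: float = 0.05       # assumed size of one nudge
    learning_rate: float = 0.1     # assumed update speed of the user likes
    nudge_after: int = 7           # seven refusals, as in the text
    likes: dict = field(default_factory=dict)
    refusals: int = 0
    notifications: list = field(default_factory=list)

    def record(self, activity, accepted, fits_health_profile=True):
        """Update the user likes and apply a nudge or a notification if needed."""
        current = self.likes.get(activity, 0.5)
        target = 1.0 if accepted else 0.0
        # Move the like score a small step towards the latest response.
        self.likes[activity] = current + self.learning_rate * (target - current)
        if accepted or not fits_health_profile:
            return
        self.refusals += 1
        if self.refusals < self.nudge_after:
            return
        self.refusals = 0
        if self.health_share - self.nudge_step >= self.min_health_share:
            # Nudge: less weight on activity features, more on user likes.
            self.health_share -= self.nudge_step
        else:
            self.notifications.append("repeated refusal of suitable activities")


learner = PreferenceLearner()
for _ in range(7):
    learner.record("Walking", accepted=False)
```

After the seven refusals in the example, the share of the activity features drops by one step and the like score of the refused activity falls below its starting value.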

Currently, the FitCLA assumes that, when users accept to perform exercises, they truly perform them, so the data captured during that period are cross-linked with those exercises.

The FitCLA is, at its core, a multi-agent system, so new features are implemented in the form of new agents. The module manager is an archive of the features of each agent/module and, when any agent/module requires a feature, the module manager responds with the identification of the agent/module that provides it, as well as its address and API structure. This component was built to streamline the connection of foreign systems to the platform. It was designed to communicate not only with other digital agents, but also with visual clients (smartphones, robots, televisions).
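The role of the module manager as an archive of features can be illustrated with a minimal in-memory registry. The class and field names, the example address and the API description are hypothetical; the actual FitCLA message format is not described in the text.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentRecord:
    agent_id: str
    address: str
    api: dict  # hypothetical description of the agent's API structure


class ModuleManager:
    """Archive that maps each feature to the agents/modules providing it."""

    def __init__(self):
        self._by_feature = {}

    def register(self, record, features):
        """Store the record under every feature that the agent offers."""
        for feature in features:
            self._by_feature.setdefault(feature, []).append(record)

    def lookup(self, feature):
        """Return the records of all agents that provide the requested feature."""
        return list(self._by_feature.get(feature, []))


manager = ModuleManager()
recommender = AgentRecord(
    agent_id="activities-recommender",
    address="tcp://localhost:5555",        # placeholder address
    api={"suggest": ["user_id"]},          # placeholder API structure
)
manager.register(recommender, ["activity-suggestion"])
providers = manager.lookup("activity-suggestion")
```

A requesting agent would then use the returned identification, address and API structure to contact the provider directly.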

The FitCLA tightly integrates with the available hardware (wristband, chest strap, and virtual assistant): it provides information about each user's schedule and forwards recommended activities to the virtual assistant. Moreover, it receives information from the hardware platform and uses it to change the recommendation parameters (as a post-process response to the recommendation), influencing them positively or negatively in accordance with the emotional response. It does this using the internal classification of the exercises: high-intensity exercises are classified as emotional boosters, and low-intensity exercises are classified as emotional de-stressers.
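One possible reading of this post-process adjustment is sketched below. The mapping from detected emotions to a positive or negative influence, the step size and the function names are assumptions; the text only states that the emotional response influences the recommendation parameters and that exercises are classified by intensity.

```python
# Assumed grouping of the seven recognized emotions by their influence.
POSITIVE = {"Happy"}
NEGATIVE = {"Afraid", "Angry", "Disgusted", "Sad"}

EXERCISE_CLASS = {
    "high": "emotional booster",
    "low": "emotional de-stresser",
}


def adjust_class_weight(weights, intensity, emotion_after, step=0.05):
    """Return a copy of `weights` adjusted by the emotion detected after an exercise."""
    exercise_class = EXERCISE_CLASS[intensity]
    if emotion_after in POSITIVE:
        delta = step
    elif emotion_after in NEGATIVE:
        delta = -step
    else:
        delta = 0.0  # Neutral and Surprised leave the weight unchanged (assumption)
    updated = dict(weights)
    # Keep the weight inside the 0..1 range.
    updated[exercise_class] = min(1.0, max(0.0, updated.get(exercise_class, 0.5) + delta))
    return updated


weights = {"emotional booster": 0.5, "emotional de-stresser": 0.5}
after_happy = adjust_class_weight(weights, "high", "Happy")
```

The function returns a new dictionary rather than changing the input, so the previous parameters remain available if an adjustment has to be reverted.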

Apart from this, the FitCLA is able to provide information to the caregiver about the interactions of the users and their emotional status, allowing them to make informed decisions about the ongoing treatments.

We present below a functional example of the EMERALD operation, covering both software and hardware components.