Monocular Visual-Inertial Odometry with an Unbiased Linear System Model and Robust Feature Tracking Front-End

Abstract

1. Introduction

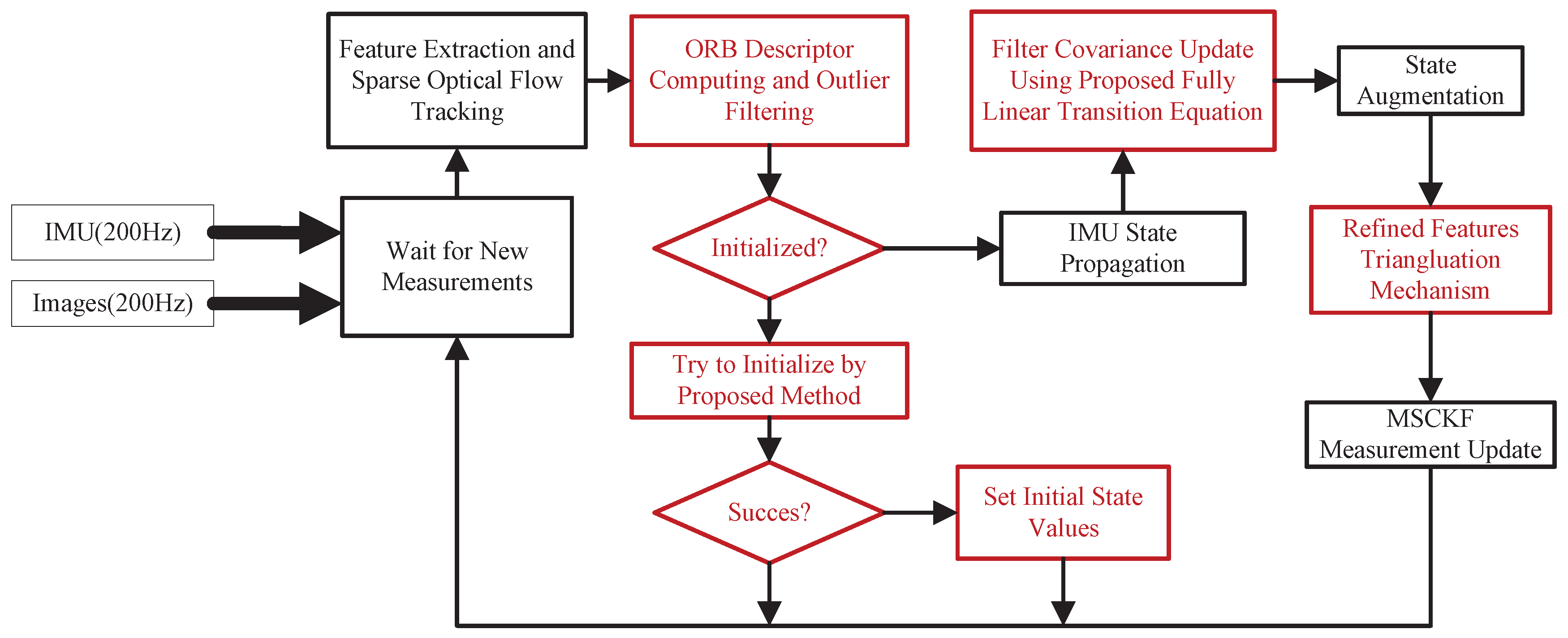

- We derived a closed-form IMU error state transition equation based on the more intuitive Hamilton quaternion notation. By solving the integration terms analytically, we further obtained a novel, fully linear formulation that is also closed-form and readily implemented.

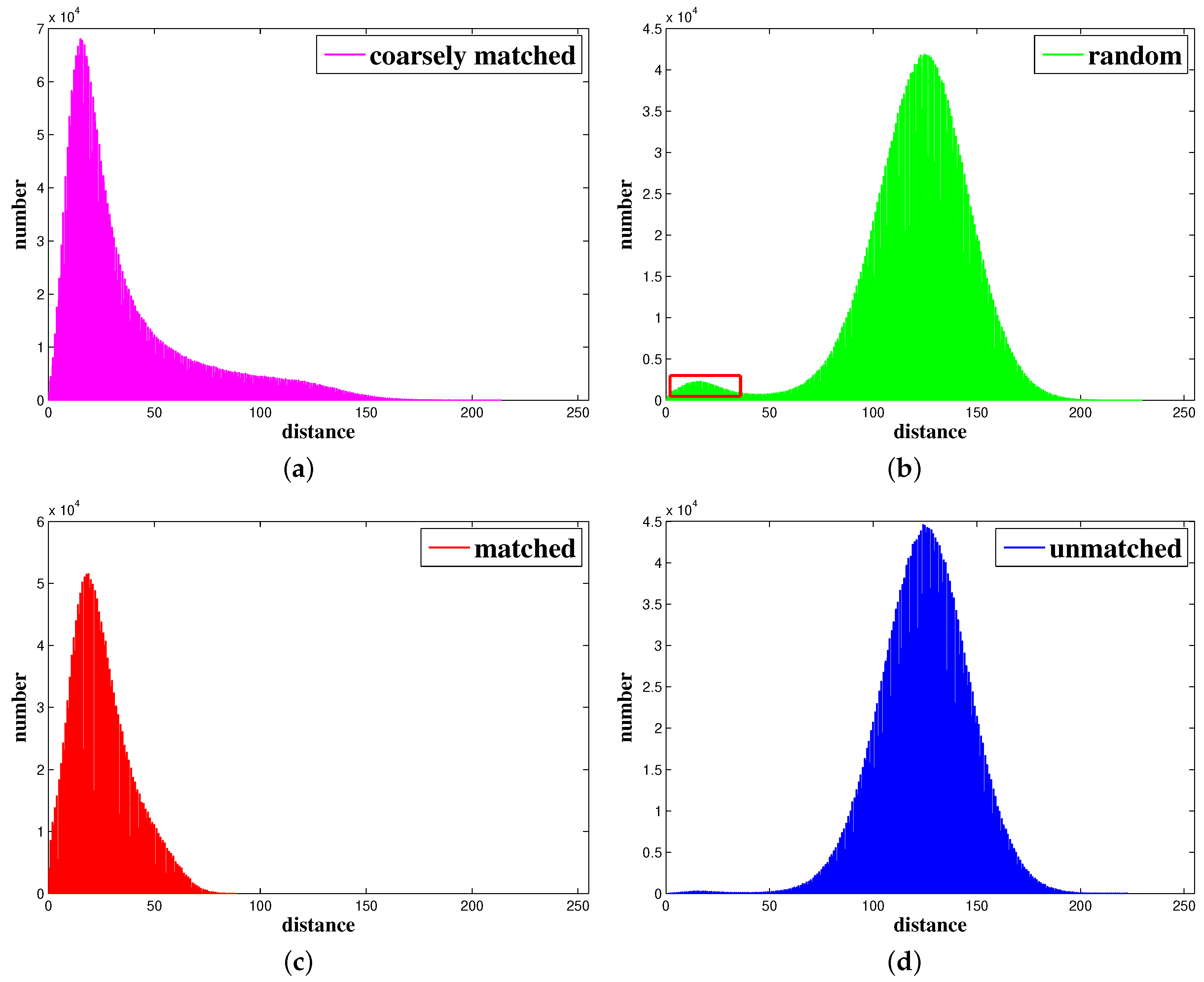

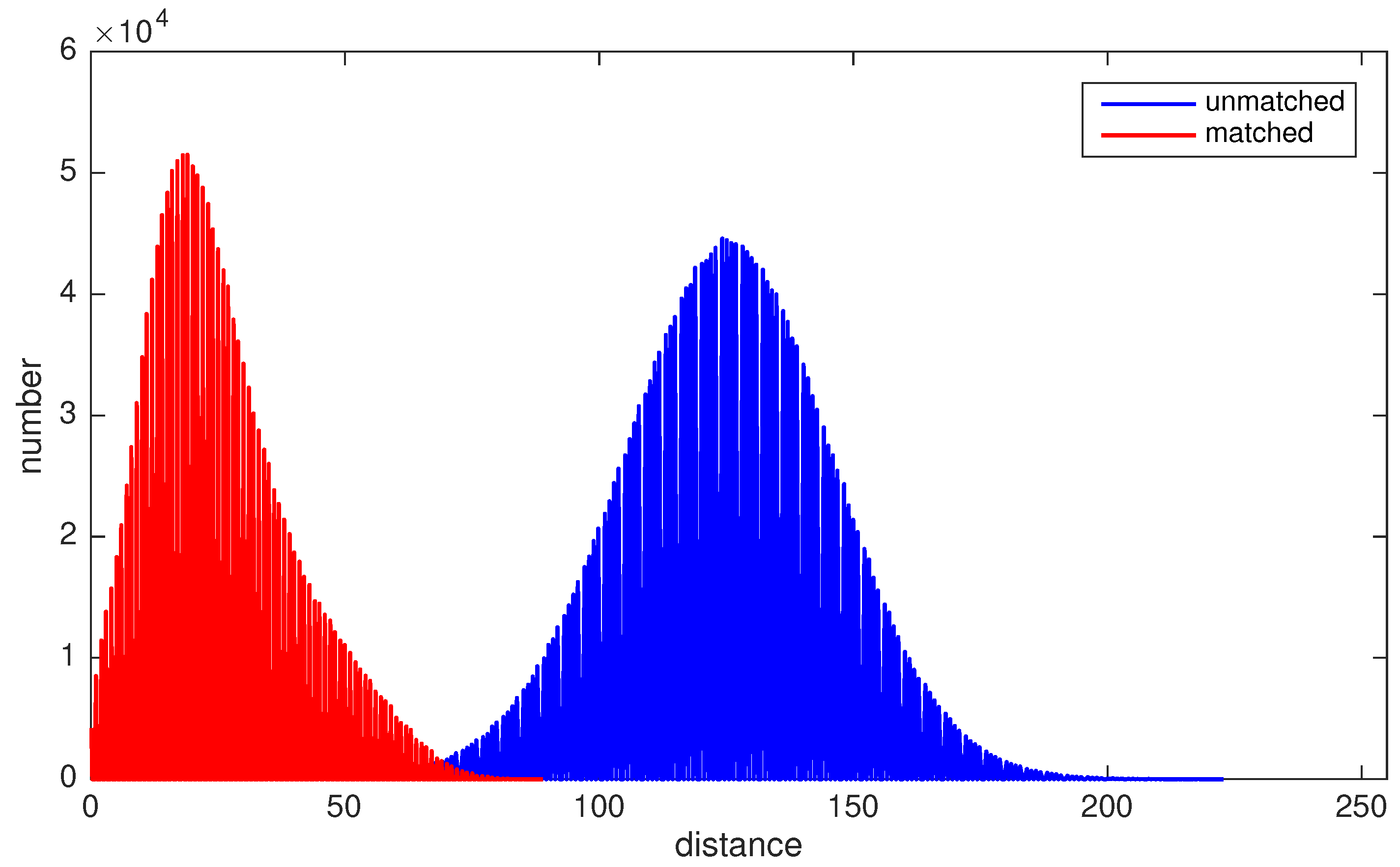

- By analyzing the statistical properties of ORB descriptor [32] distances between matched and unmatched feature points, we introduced a novel descriptor-assisted sparse optical flow tracking technique that improves feature tracking robustness while adding very little computational cost.

- Further improvements are made to the usability and performance of the filter. An initialization procedure is developed that automatically detects stationary scenes by analyzing tracked features and initializes the filter state from static IMU data. The feature triangulation mechanism is carefully refined to provide efficient measurement updates.

- A filter-based monocular VIO that uses the proposed state transition equation, visual front-end, and initialization procedure is implemented within the framework of Sun et al. [24]. The performance of our VIO is compared with that of MSCKF-MONO [23], an open-source monocular implementation of MSCKF, with the parameters set as similarly as possible. Our VIO is also compared with other state-of-the-art open-source VIOs, including ROVIO [5], OKVIS [6], and VINS-MONO [2]. In addition, we analyze the processing time of our algorithm. All of these evaluations are carried out on the EuRoC datasets [33], and detailed results are reported.

2. Quaternion Notation Confusion

3. IMU Error State Differential Equation

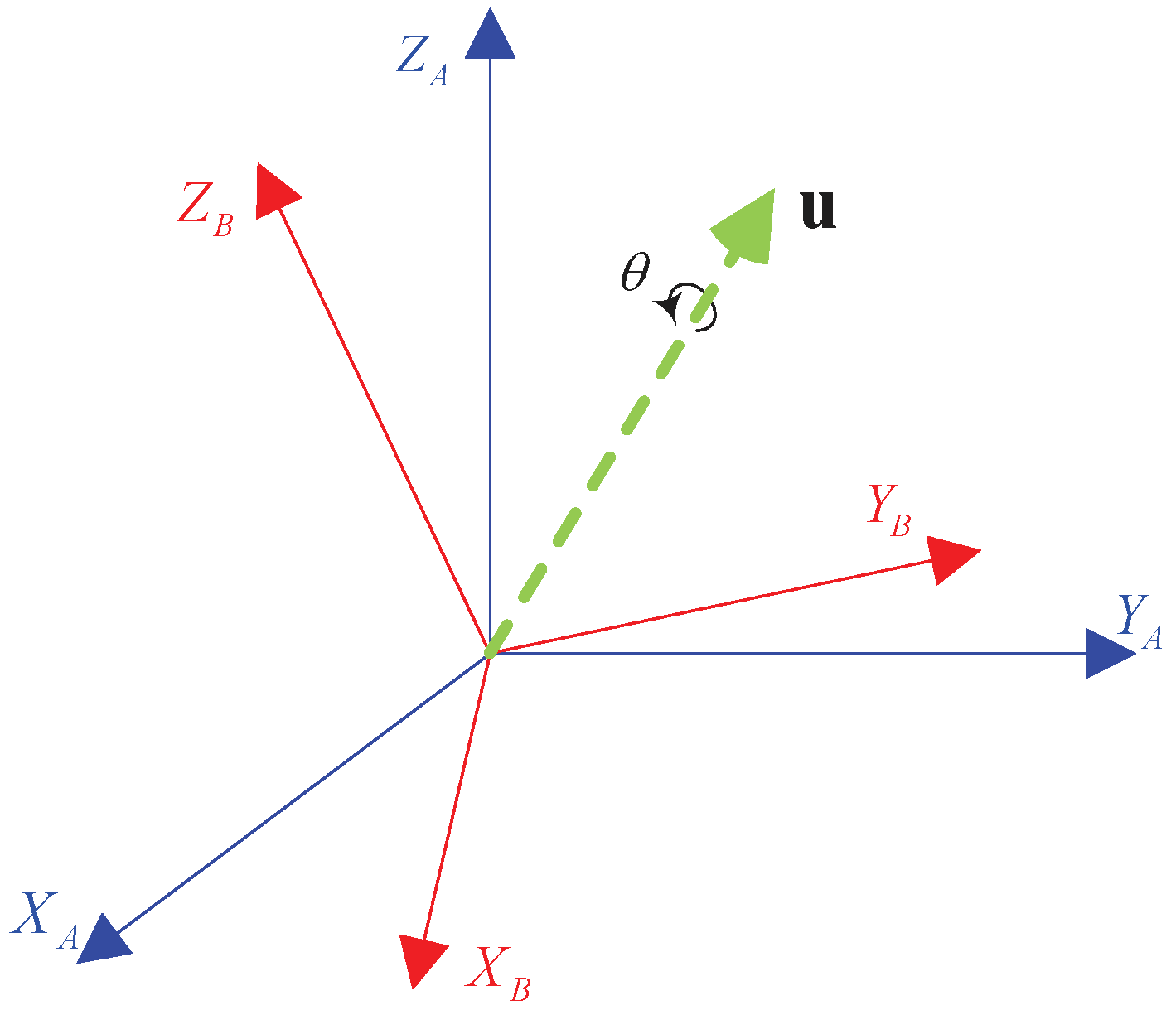

3.1. Notation

3.2. IMU Measurement Model

3.3. IMU Error State Definition

3.4. Differential Equation

4. Fully Linear State Transition Equation Formulation

4.1. Original Closed-Form Equation

4.2. Fully Linear Closed-Form Formulation

4.2.1. Two-Sample Fitting of Axis-Angle

4.2.2. Solve Integration Terms in

4.2.3. Process Noise Terms

4.3. Summarization

5. ORB Descriptor-Assisted Optical Flow Front-End

- The ORB descriptor is a binary string, so the distance between two descriptors can be expressed as a Hamming distance, which can be computed efficiently.

- The rotation between consecutive images in a real-time application is usually small, so rotation invariance is not very important for the descriptor.
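The first point can be illustrated with a minimal sketch. It assumes each descriptor is stored as 32 packed bytes (256 bits, the usual ORB length); the function name `hamming_distance` and the random test descriptors are illustrative only and are not taken from the paper's implementation.

```python
import numpy as np

def hamming_distance(desc_a: np.ndarray, desc_b: np.ndarray) -> int:
    """Count the differing bits between two packed binary descriptors."""
    # XOR leaves a 1 wherever the two bit strings disagree;
    # unpackbits expands every byte into its 8 bits so they can be summed.
    return int(np.unpackbits(np.bitwise_xor(desc_a, desc_b)).sum())

# Two hypothetical 32-byte descriptors (random bytes, for illustration only).
rng = np.random.default_rng(0)
a = rng.integers(0, 256, size=32, dtype=np.uint8)
b = a.copy()
b[0] ^= 0b00000111  # flip three bits in the first byte

print(hamming_distance(a, b))  # 3
```

Because the distance reduces to an XOR followed by a bit count, it is far cheaper than a floating-point norm over a real-valued descriptor.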

Descriptor Distance Analysis for General Corner Features

- Coarsely matched feature pairs based on Shi-Tomasi corner detection and optical flow tracking.

- Relatively strictly matched feature pairs based on ORB descriptor matching and RANSAC.

- Randomly constructed feature pairs.

- Unmatched feature pairs generated by reversing the order of one of the strictly matched feature sequences.
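The last two kinds of pairs in this list can be built from index arrays alone. The sketch below is one possible construction under stated assumptions: `build_negative_pairs` is a hypothetical helper, the random pairs are drawn with a permutation, and a pair that maps an index to itself after reversal (the middle element of an odd-length sequence) is dropped because it would still be a true match.

```python
import numpy as np

def build_negative_pairs(n: int, seed: int = 0):
    """Return (random_pairs, reversed_pairs) as (index_in_A, index_in_B) rows.

    n is the number of strictly matched pairs, where feature i in sequence A
    is assumed to match feature i in sequence B.
    """
    idx = np.arange(n)
    rng = np.random.default_rng(seed)
    # Randomly constructed pairs: may still contain true matches by chance.
    random_pairs = np.stack([idx, rng.permutation(n)], axis=1)
    # Unmatched pairs: reverse the order of sequence B.
    reversed_pairs = np.stack([idx, idx[::-1]], axis=1)
    # Drop the self-paired middle element that appears when n is odd.
    reversed_pairs = reversed_pairs[reversed_pairs[:, 0] != reversed_pairs[:, 1]]
    return random_pairs, reversed_pairs

random_pairs, reversed_pairs = build_negative_pairs(5)
print(reversed_pairs.tolist())  # [[0, 4], [1, 3], [3, 1], [4, 0]]
```

Descriptor distances computed over each set of pairs can then be histogrammed and compared.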

- For feature pairs with distances lower than the smaller peak value, classify them as inliers.

- For feature pairs with distances higher than the bigger peak value, classify them as outliers.

- For feature pairs whose distances lie between the two peaks, calculate and compare the Mahalanobis distances to both peaks to decide their classification.
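The three rules above can be sketched as a small decision function. This is an illustration under assumptions, not the authors' code: each peak is modeled as a one-dimensional Gaussian described by a mean (the peak location) and a standard deviation, so the Mahalanobis distance reduces to |d − μ| / σ. The `Peak` class, the function name, and the numeric values are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class Peak:
    mean: float  # location of the peak in the descriptor-distance histogram
    std: float   # spread of the distribution around that peak (assumed Gaussian)

def classify_pair(distance: float, inlier_peak: Peak, outlier_peak: Peak) -> bool:
    """Return True if the feature pair is classified as an inlier."""
    if distance < inlier_peak.mean:    # below the smaller peak: inlier
        return True
    if distance > outlier_peak.mean:   # above the larger peak: outlier
        return False
    # Between the peaks: pick the peak with the smaller Mahalanobis distance.
    m_in = abs(distance - inlier_peak.mean) / inlier_peak.std
    m_out = abs(distance - outlier_peak.mean) / outlier_peak.std
    return m_in <= m_out

# Hypothetical peak parameters, chosen only to exercise the three branches.
inlier_peak = Peak(mean=30.0, std=10.0)
outlier_peak = Peak(mean=120.0, std=20.0)

print(classify_pair(20.0, inlier_peak, outlier_peak))   # True
print(classify_pair(130.0, inlier_peak, outlier_peak))  # False
print(classify_pair(50.0, inlier_peak, outlier_peak))   # True  (2.0 vs 3.5)
print(classify_pair(100.0, inlier_peak, outlier_peak))  # False (7.0 vs 1.0)
```

Normalizing by each peak's spread matters in the middle region: a distance can be numerically closer to the inlier peak and still be classified as an outlier if the outlier distribution is much wider.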

6. EKF-Based VIO Implementation Details and Improvements

6.1. Filter State and Measurement Model

6.2. Automatic Initialization Procedure

Algorithm 1: Automatic initialization procedure
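As a rough illustration of the idea stated in the introduction (detect a stationary scene from tracked features, then initialize from static IMU data), the following sketch may help. It is not the paper's Algorithm 1: the median-displacement test, the `max_pixel_shift` threshold, and both function names are assumptions, and the accelerometer bias and Earth rotation are ignored.

```python
import numpy as np

def is_stationary(prev_pts, curr_pts, max_pixel_shift: float = 0.5) -> bool:
    """Guess whether the camera is static from tracked feature positions.

    prev_pts and curr_pts are (N, 2) pixel coordinates of the same features
    in two consecutive images. The threshold is a hypothetical tuning value.
    """
    shifts = np.linalg.norm(
        np.asarray(curr_pts, dtype=float) - np.asarray(prev_pts, dtype=float), axis=1
    )
    return bool(shifts.size > 0 and np.median(shifts) < max_pixel_shift)

def init_from_static_imu(gyro, accel):
    """Estimate a gyroscope bias and the up direction from static IMU samples.

    gyro and accel are (M, 3) arrays in the IMU frame. While static, the mean
    angular rate approximates the gyro bias and the mean specific force points
    opposite to gravity (accelerometer bias neglected in this sketch).
    """
    gyro = np.asarray(gyro, dtype=float)
    accel = np.asarray(accel, dtype=float)
    gyro_bias = gyro.mean(axis=0)
    mean_force = accel.mean(axis=0)
    up_direction = mean_force / np.linalg.norm(mean_force)
    return gyro_bias, up_direction

# Synthetic data for illustration only.
prev_pts = [[0.0, 0.0], [10.0, 10.0]]
curr_pts = [[0.1, 0.0], [10.0, 10.1]]
if is_stationary(prev_pts, curr_pts):
    bias, up = init_from_static_imu([[0.01, 0.0, 0.0]] * 5, [[0.0, 0.0, 9.81]] * 5)
    print(bias, up)
```

The up direction fixes roll and pitch of the initial orientation; yaw is unobservable from gravity alone and would typically be set to an arbitrary value.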

6.3. Refined Feature Triangulation Mechanism

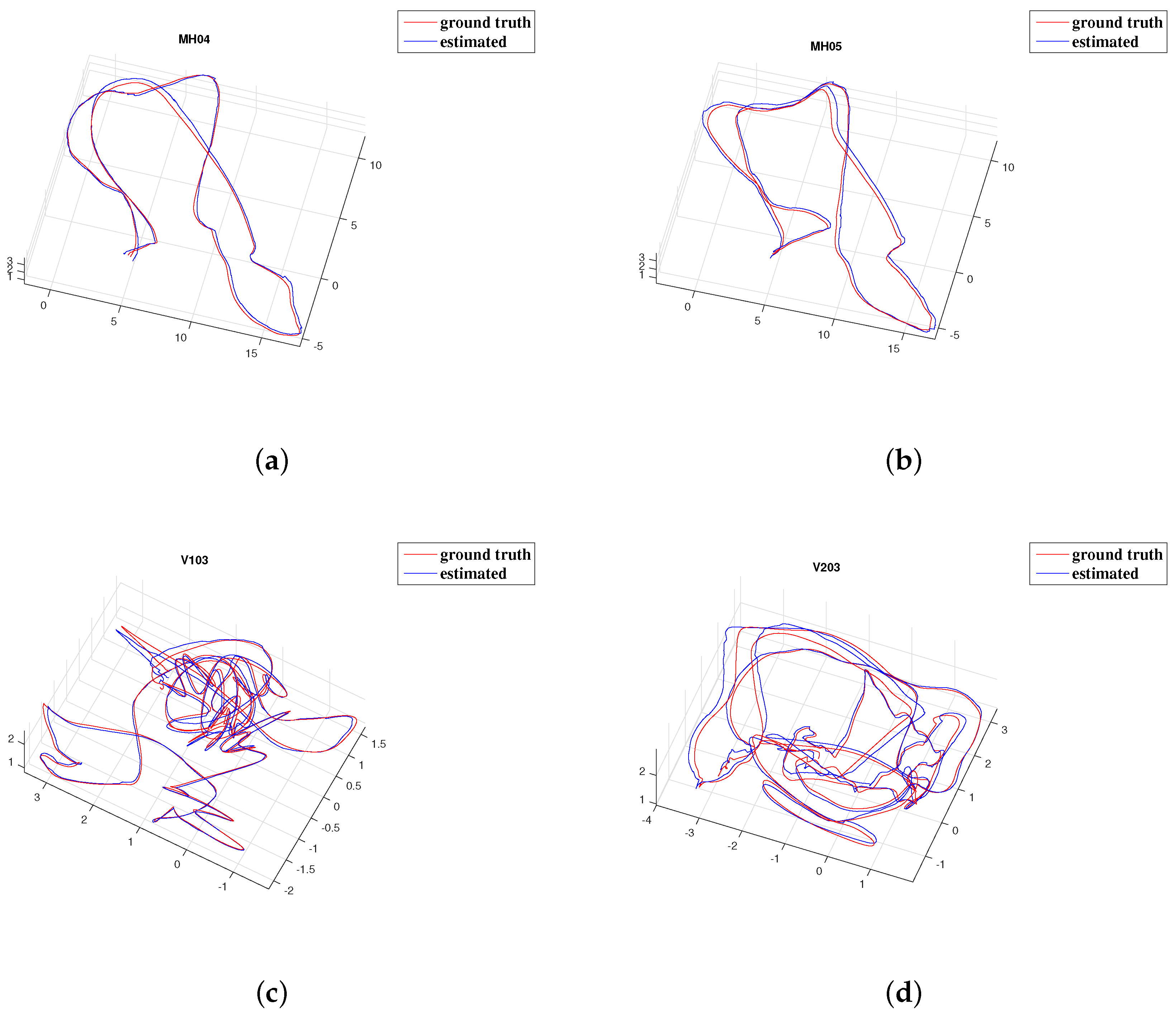

7. Experimental Results

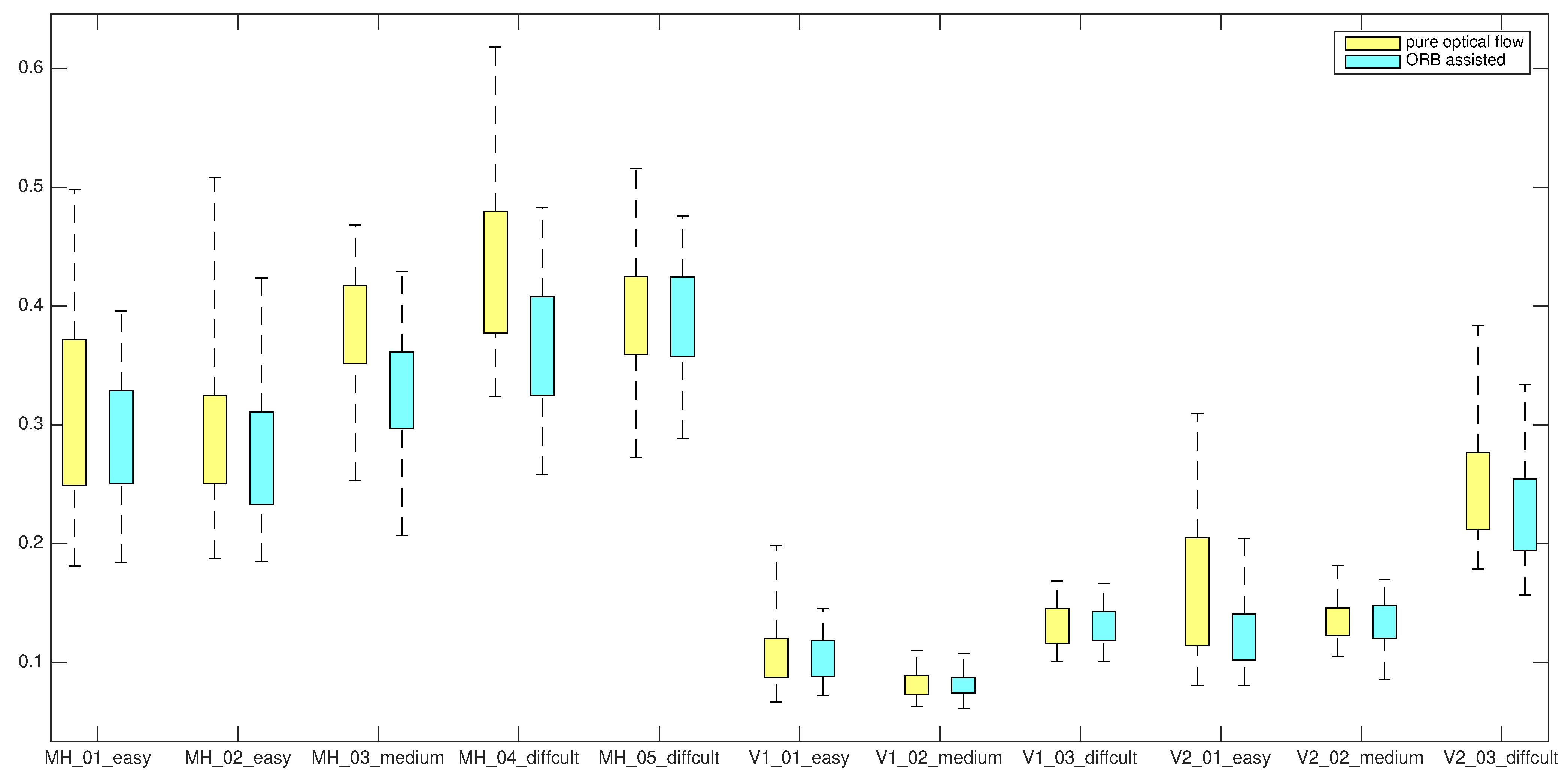

7.1. Front-End Improvement

7.2. Comparison with MSCKF-MONO

7.3. Comparison with the State-of-the-Art

7.4. Processing Time

8. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Mourikis, A.I.; Roumeliotis, S.I. A multi-state constraint Kalman filter for vision-aided inertial navigation. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Roma, Italy, 10–14 April 2007; pp. 3565–3572.

- Qin, T.; Li, P.; Shen, S. Vins-mono: A robust and versatile monocular visual-inertial state estimator. IEEE Trans. Robot. 2018, 34, 1004–1020.

- Von Stumberg, L.; Usenko, V.; Cremers, D. Direct Sparse Visual-Inertial Odometry using Dynamic Marginalization. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Brisbane, Australia, 21–25 May 2018; pp. 2510–2517.

- He, Y.; Zhao, J.; Guo, Y.; He, W.; Yuan, K. PL-VIO: Tightly-Coupled Monocular Visual-Inertial Odometry Using Point and Line Features. Sensors 2018, 18, 1159.

- Bloesch, M.; Omari, S.; Hutter, M.; Siegwart, R. Robust visual inertial odometry using a direct EKF-based approach. In Proceedings of the 2015 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Hamburg, Germany, 28 September–2 October 2015; pp. 298–304.

- Leutenegger, S.; Lynen, S.; Bosse, M.; Siegwart, R.; Furgale, P. Keyframe-based visual-inertial odometry using nonlinear optimization. Int. J. Robot. Res. 2015, 34, 314–334.

- Kümmerle, R.; Grisetti, G.; Strasdat, H.; Konolige, K.; Burgard, W. g2o: A general framework for graph optimization. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Shanghai, China, 9–13 May 2011; pp. 3607–3613.

- Kaess, M.; Johannsson, H.; Roberts, R.; Ila, V.; Leonard, J.J.; Dellaert, F. iSAM2: Incremental smoothing and mapping using the Bayes tree. Int. J. Robot. Res. 2012, 31, 216–235.

- Liu, H.; Chen, M.; Zhang, G.; Bao, H.; Bao, Y. ICE-BA: Incremental, Consistent and Efficient Bundle Adjustment for Visual-Inertial SLAM. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 1974–1982.

- Gui, J.; Gu, D.; Wang, S.; Hu, H. A review of visual inertial odometry from filtering and optimisation perspectives. Adv. Robot. 2015, 29, 1289–1301.

- Aqel, M.O.; Marhaban, M.H.; Saripan, M.I.; Ismail, N.B. Review of visual odometry: Types, approaches, challenges, and applications. SpringerPlus 2016, 5, 1897.

- Strasdat, H.; Montiel, J.; Davison, A.J. Real-time monocular SLAM: Why filter? In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Anchorage, AK, USA, 3–7 May 2010; pp. 2657–2664.

- Triggs, B.; McLauchlan, P.F.; Hartley, R.I.; Fitzgibbon, A.W. Bundle adjustment—A modern synthesis. In Proceedings of the 1999 International Workshop on Vision Algorithms, Corfu, Greece, 20–25 September 1999; Springer: Berlin, Germany, 1999; pp. 298–372.

- Lourakis, M.I.; Argyros, A.A. SBA: A software package for generic sparse bundle adjustment. ACM Trans. Math. Softw. (TOMS) 2009, 36, 2.

- Hsiung, J.; Hsiao, M.; Westman, E.; Valencia, R.; Kaess, M. Information Sparsification in Visual-Inertial Odometry. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018.

- Agarwal, S.; Mierle, K. Ceres Solver. Available online: http://ceres-solver.org (accessed on 16 August 2018).

- Delmerico, J.; Scaramuzza, D. A Benchmark Comparison of Monocular Visual-Inertial Odometry Algorithms for Flying Robots. Memory 2018, 10, 20.

- Eade, E.; Drummond, T. Scalable monocular SLAM. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR), New York, NY, USA, 17–22 June 2006; Volume 1, pp. 469–476.

- Li, M.; Mourikis, A.I. Improving the accuracy of EKF-based visual-inertial odometry. In Proceedings of the 2012 IEEE International Conference on Robotics and Automation (ICRA), St Paul, MN, USA, 14–18 May 2012; pp. 828–835.

- Hesch, J.A.; Kottas, D.G.; Bowman, S.L.; Roumeliotis, S.I. Observability-Constrained Vision-Aided Inertial Navigation; Technical Report; University of Minnesota, Department of Computer Science & Engineering: Minneapolis, MN, USA, 2012; Volume 1, p. 6.

- Huang, G.P.; Mourikis, A.I.; Roumeliotis, S.I. Observability-based rules for designing consistent EKF SLAM estimators. Int. J. Robot. Res. 2010, 29, 502–528.

- Li, M.; Mourikis, A.I. High-precision, consistent EKF-based visual-inertial odometry. Int. J. Robot. Res. 2013, 32, 690–711.

- Research Group of Prof. Kostas Daniilidis. Msckf-Mono. Available online: https://github.com/daniilidis-group/msckf_mono (accessed on 16 August 2018).

- Sun, K.; Mohta, K.; Pfrommer, B.; Watterson, M.; Liu, S.; Mulgaonkar, Y.; Taylor, C.J.; Kumar, V. Robust stereo visual inertial odometry for fast autonomous flight. IEEE Robot. Autom. Lett. 2018, 3, 965–972.

- Zheng, X.; Moratto, Z.; Li, M.; Mourikis, A.I. Photometric patch-based visual-inertial odometry. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Singapore, 29 May–3 June 2017; pp. 3264–3271.

- Zheng, F.; Tsai, G.; Zhang, Z.; Liu, S.; Chu, C.C.; Hu, H. Trifo-VIO: Robust and Efficient Stereo Visual Inertial Odometry using Points and Lines. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018.

- Trawny, N.; Roumeliotis, S.I. Indirect Kalman Filter for 3D Attitude Estimation; Technical Report; University of Minnesota, Department of Computer Science & Engineering: Minneapolis, MN, USA, 2005; Volume 2.

- Sommer, H.; Gilitschenski, I.; Bloesch, M.; Weiss, S.M.; Siegwart, R.; Nieto, J. Why and How to Avoid the Flipped Quaternion Multiplication. Aerospace 2018, 5, 72.

- Yang, N.; Wang, R.; Gao, X.; Cremers, D. Challenges in monocular visual odometry: Photometric calibration, motion bias, and rolling shutter effect. IEEE Robot. Autom. Lett. 2018, 3, 2878–2885.

- Mur-Artal, R.; Tardós, J.D. Visual-inertial monocular SLAM with map reuse. IEEE Robot. Autom. Lett. 2017, 2, 796–803.

- Bloesch, M.; Burri, M.; Omari, S.; Hutter, M.; Siegwart, R. Iterated extended Kalman filter based visual-inertial odometry using direct photometric feedback. Int. J. Robot. Res. 2017, 36, 1053–1072.

- Rublee, E.; Rabaud, V.; Konolige, K.; Bradski, G. ORB: An efficient alternative to SIFT or SURF. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Barcelona, Spain, 6–13 November 2011; pp. 2564–2571.

- Burri, M.; Nikolic, J.; Gohl, P.; Schneider, T.; Rehder, J.; Omari, S.; Achtelik, M.W.; Siegwart, R. The EuRoC micro aerial vehicle datasets. Int. J. Robot. Res. 2016, 35, 1157–1163.

- Titterton, D.; Weston, J.L.; Weston, J. Strapdown Inertial Navigation Technology; IET: Stevenage, UK, 2004; Volume 17.

- Solà, J. Quaternion Kinematics for the Error-State Kalman Filter; Technical Report; Laboratoire d'Analyse et d'Architecture des Systèmes-Centre National de la Recherche Scientifique (LAAS-CNRS): Toulouse, France, 2017.

- Qin, Y. Inertial Navigation; Science Press: Berlin, Germany, 2006. (In Chinese)

- Qin, Y.; Zhang, H.; Wang, S. Kalman Filtering and Integrated Navigation Principles, 3rd ed.; Northwestern Polytechnical University Press: Xi’an, China, 2015. (In Chinese)

- Mur-Artal, R.; Tardós, J.D. ORB-SLAM2: An Open-Source SLAM System for Monocular, Stereo, and RGB-D Cameras. IEEE Trans. Robot. 2017, 33, 1255–1262.

- Shi, J.; Tomasi, C. Good Features to Track; Technical Report; Cornell University: Ithaca, NY, USA, 1993.

- Bouguet, J.Y. Pyramidal implementation of the affine lucas kanade feature tracker description of the algorithm. Intel Corp. 2001, 5, 4.

- Quigley, M.; Conley, K.; Gerkey, B.; Faust, J.; Foote, T.; Leibs, J.; Wheeler, R.; Ng, A.Y. ROS: An open-source Robot Operating System. In Proceedings of the ICRA Workshop on Open Source Software, Kobe, Japan, 17 May 2009; Volume 3, p. 5.

- Umeyama, S. Least-squares estimation of transformation parameters between two point patterns. IEEE Trans. Pattern Anal. Mach. Intell. 1991, 4, 376–380.

| Sequence | Pure optical flow (mean) | Pure optical flow (std) | ORB assisted (mean) | ORB assisted (std) |
|---|---|---|---|---|
| MH_01 | 0.309 | 0.076 | 0.294 | 0.055 |
| MH_02 | 0.297 | 0.065 | 0.273 | 0.056 |
| MH_03 | 0.381 | 0.050 | 0.330 | 0.048 |
| MH_04 | 0.435 | 0.071 | 0.366 | 0.058 |
| MH_05 | 0.393 | 0.051 | 0.391 | 0.046 |
| V1_01 | 0.108 | 0.026 | 0.104 | 0.018 |
| V1_02 | 0.082 | 0.012 | 0.082 | 0.010 |
| V1_03 | 0.130 | 0.018 | 0.131 | 0.017 |
| V2_01 | 0.162 | 0.057 | 0.127 | 0.030 |
| V2_02 | 0.137 | 0.019 | 0.134 | 0.019 |
| V2_03 | 0.248 | 0.047 | 0.231 | 0.039 |

| Sequence | MH_01 | MH_02 | MH_03 | MH_04 | MH_05 | V1_01 | V1_02 | V1_03 | V2_01 | V2_02 | V2_03 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Process time | 1.3942 | 1.6480 | 1.3373 | 1.3983 | 1.0870 | 1.3297 | 1.0410 | 0.9574 | 1.2506 | 1.0525 | 0.7465 |

| Method | MH_01 | MH_02 | MH_03 | MH_04 | MH_05 | V1_01 | V1_02 | V1_03 | V2_01 | V2_02 | V2_03 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| MSCKF-MONO | 1.015 | 0.534 | 0.427 | 2.102 | 0.968 | 0.169 | 0.275 | 1.551 | 0.281 | 0.341 | × |
| Proposed | 0.299 | 0.280 | 0.342 | 0.350 | 0.384 | 0.096 | 0.078 | 0.132 | 0.121 | 0.137 | 0.224 |

| Method | MH_01 | MH_02 | MH_03 | MH_04 | MH_05 | V1_01 | V1_02 | V1_03 | V2_01 | V2_02 | V2_03 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| VINS-MONO | 0.159 | 0.182 | 0.199 | 0.350 | 0.313 | 0.090 | 0.110 | 0.188 | 0.089 | 0.163 | 0.305 |
| ROVIO | 0.250 | 0.653 | 0.449 | 1.007 | 1.448 | 0.159 | 0.198 | 0.172 | 0.299 | 0.642 | 0.190 |
| OKVIS | 0.376 | 0.378 | 0.277 | 0.323 | 0.451 | 0.087 | 0.157 | 0.224 | 0.132 | 0.185 | 0.305 |
| Proposed | 0.289 | 0.258 | 0.331 | 0.394 | 0.423 | 0.117 | 0.089 | 0.134 | 0.097 | 0.140 | 0.211 |

| Method | Stage | MH_01 Time | MH_01 Rate | MH_02 Time | MH_02 Rate | MH_03 Time | MH_03 Rate | MH_04 Time | MH_04 Rate | MH_05 Time | MH_05 Rate | V1_01 Time | V1_01 Rate | V1_02 Time | V1_02 Rate | V1_03 Time | V1_03 Rate | V2_01 Time | V2_01 Rate | V2_02 Time | V2_02 Rate | V2_03 Time | V2_03 Rate |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| VINS-MONO | front-end | 18.0 | 55 | 18.3 | 55 | 18.6 | 54 | 19.3 | 52 | 21.3 | 47 | 20.2 | 49 | 21.4 | 47 | 23.2 | 43 | 22.3 | 45 | 23.8 | 42 | 30.6 | 33 |
| VINS-MONO | back-end | 50.2 | 20 | 50.9 | 20 | 50.1 | 20 | 50.1 | 20 | 53.0 | 19 | 53.1 | 19 | 45.9 | 22 | 37.9 | 26 | 54.4 | 18 | 48.3 | 21 | 33.4 | 30 |
| ROVIO | front-end | 2.0 | 505 | 1.9 | 526 | 2.0 | 497 | 2.1 | 476 | 2.0 | 490 | 1.9 | 538 | 2.0 | 508 | 2.1 | 481 | 2.0 | 503 | 2.0 | 510 | 2.0 | 478 |
| ROVIO | back-end | 15.9 | 63 | 15.9 | 63 | 15.9 | 63 | 15.9 | 63 | 15.7 | 63 | 15.9 | 63 | 15.9 | 63 | 15.9 | 63 | 15.9 | 63 | 15.9 | 63 | 15.9 | 63 |
| OKVIS | front-end | 46.7 | 21 | 45.3 | 22 | 47.4 | 21 | 40.9 | 24 | 41.4 | 24 | 38.5 | 26 | 38.8 | 26 | 31.3 | 32 | 38.8 | 26 | 37.3 | 27 | 31.4 | 32 |
| OKVIS | back-end | 39.8 | 25 | 39.4 | 25 | 39.9 | 25 | 32.1 | 31 | 33.1 | 30 | 30.6 | 33 | 25.5 | 39 | 19.2 | 52 | 29.6 | 34 | 27.9 | 36 | 18.0 | 56 |
| Proposed | front-end | 16.2 | 62 | 16.5 | 61 | 15.9 | 63 | 16.1 | 62 | 15.7 | 64 | 15.7 | 64 | 15.3 | 65 | 16.4 | 61 | 15.8 | 63 | 15.9 | 63 | 17.3 | 58 |
| Proposed | back-end | 5.5 | 182 | 5.9 | 169 | 6.1 | 164 | 5.5 | 181 | 6.0 | 166 | 5.7 | 174 | 5.4 | 185 | 4.9 | 203 | 5.7 | 176 | 5.6 | 178 | 4.6 | 218 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Qiu, X.; Zhang, H.; Fu, W.; Zhao, C.; Jin, Y. Monocular Visual-Inertial Odometry with an Unbiased Linear System Model and Robust Feature Tracking Front-End. Sensors 2019, 19, 1941. https://doi.org/10.3390/s19081941
