2D Rotation-Angle Measurement Utilizing Least Iterative Region Segmentation

Abstract

1. Introduction

2. Basic Theory

2.1. Image Moment

2.2. Deficiency of Rotation-Angle Measurement Based on Geometric Moments

3. Method for Rotation-Angle Measurement

- (1) We assume that the plane workpiece is uniform and the center of mass is located on the workpiece.

- (2) We assume that the optical axis of the camera is perpendicular to the work plane.

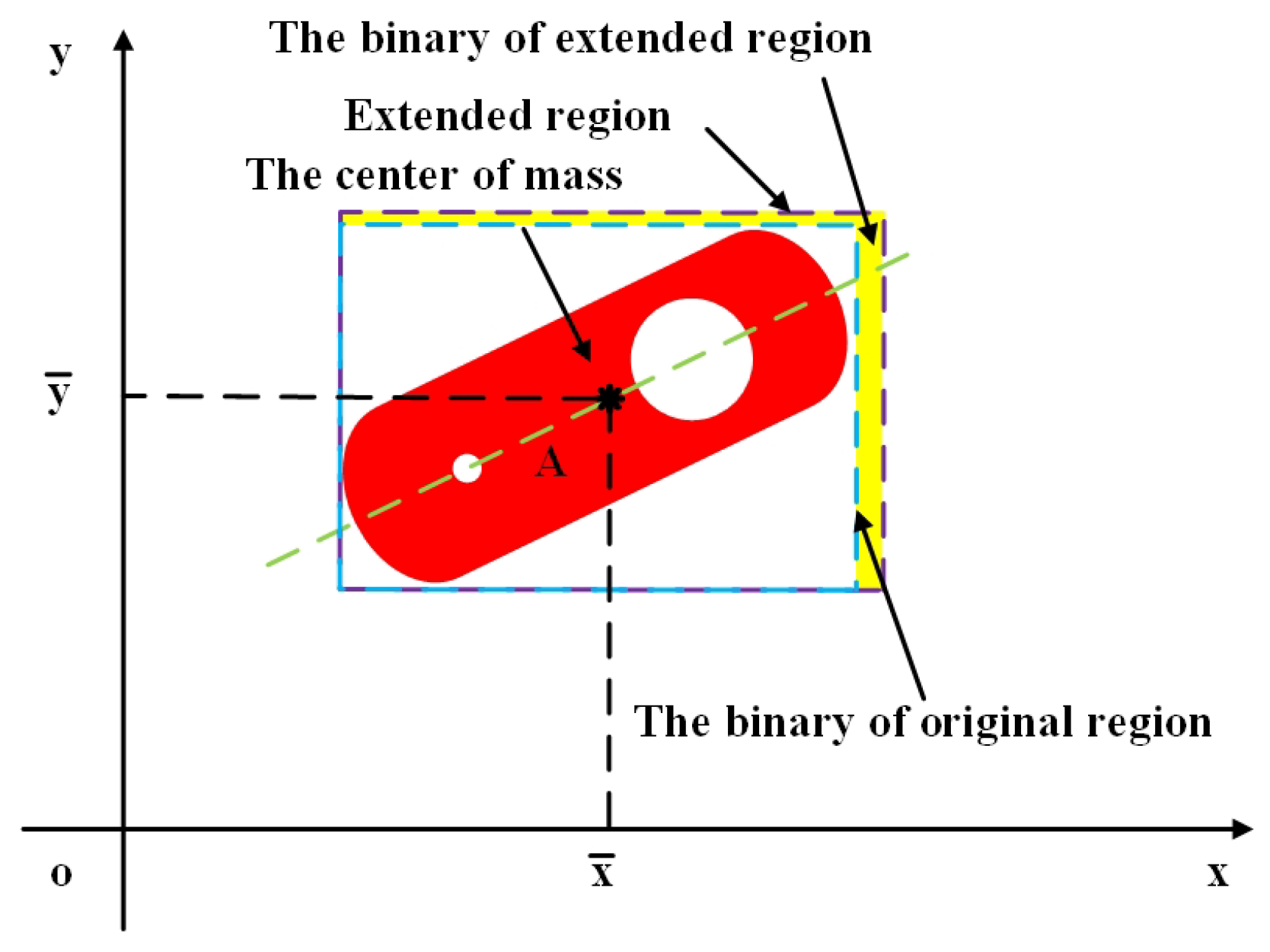

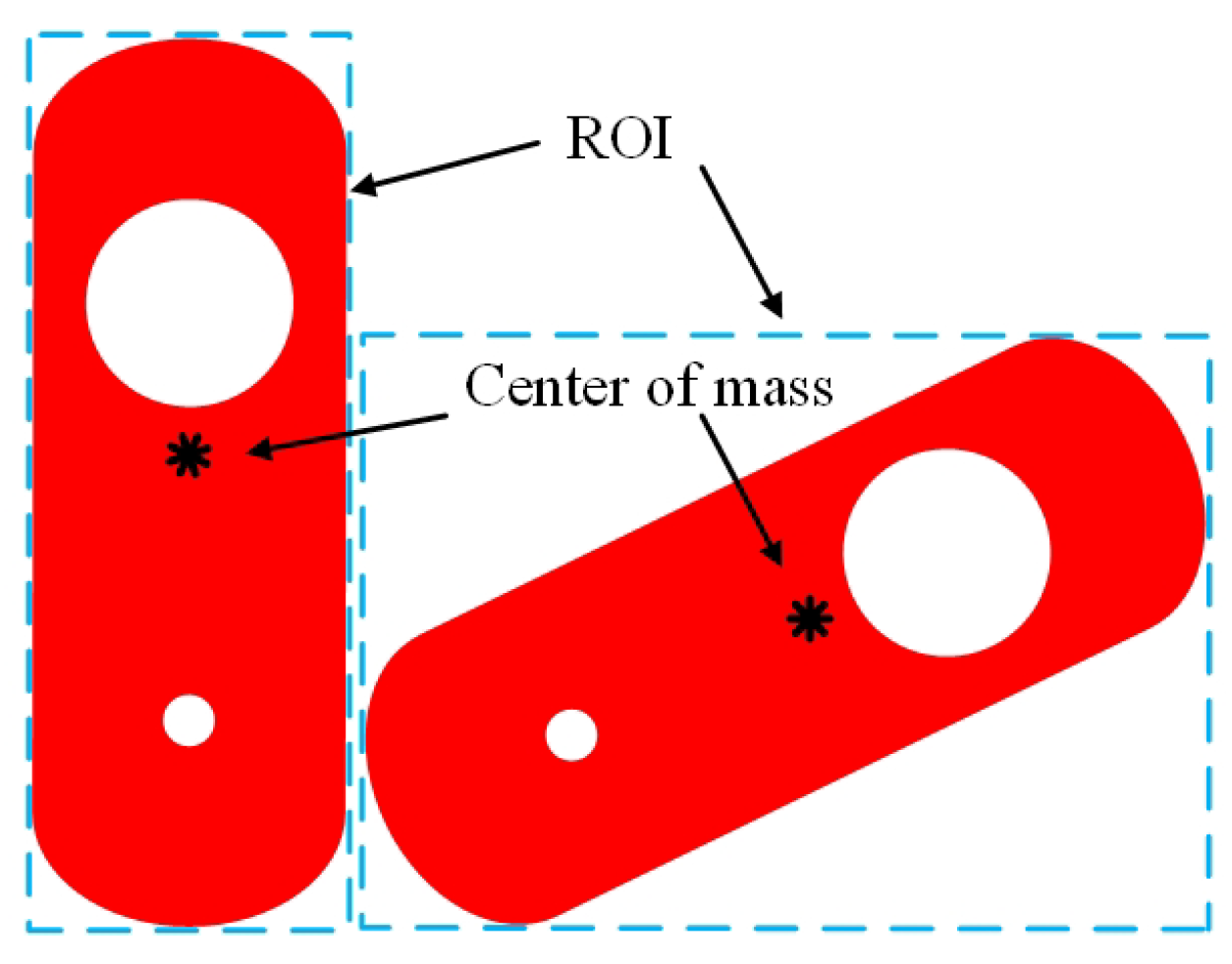

3.1. Image Preprocessing

3.2. Least Iterative Region Segmentation Method

3.2.1. Judgment of Centrosymmetry

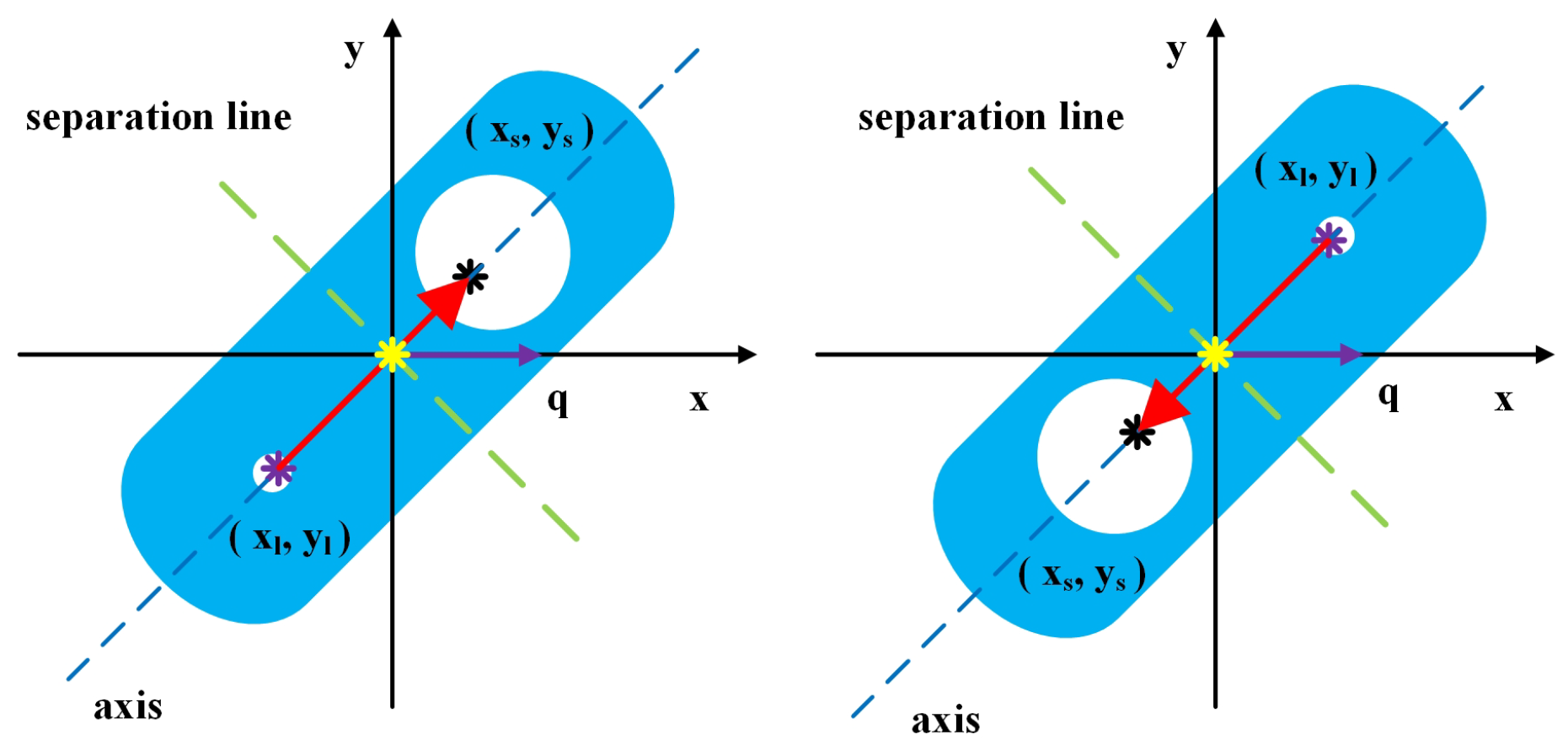

3.2.2. Region Segmentation and Identification

- (1) The deviation angle must be chosen with care. Neither the symmetry axis nor its perpendicular should be used as the separation line, because these axes divide a symmetric workpiece into two parts of equal area.

- (2) The measured area of the workpiece is not exactly the same at different rotation angles, because the images captured by the industrial camera have already been discretized by a charge-coupled device and a discretization error is always present. To eliminate the effect of discretization on the measurement, a threshold is employed: the areas of the two parts are considered equal when their absolute difference is less than the threshold.
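The two conditions above can be illustrated with a minimal sketch. It is not the authors' implementation: it assumes the workpiece is given as a binary mask (nested lists of truthy and falsy values), that the separation line passes through the centroid of the mask, and that area is measured as a pixel count. The names `split_areas` and `areas_equal` and the `threshold` parameter (in pixels) are hypothetical and chosen only for illustration.

```python
import math


def split_areas(mask, angle_deg):
    """Count foreground pixels on each side of a separation line.

    Assumption: the line passes through the centroid of the mask and
    makes an angle of `angle_deg` with the image x-axis.
    Pixels lying exactly on the line are counted on neither side.
    """
    pts = [(x, y) for y, row in enumerate(mask) for x, v in enumerate(row) if v]
    n = len(pts)
    cx = sum(x for x, _ in pts) / n
    cy = sum(y for _, y in pts) / n

    theta = math.radians(angle_deg)
    dx, dy = math.cos(theta), math.sin(theta)

    area_a = area_b = 0
    for x, y in pts:
        # The sign of this cross product tells which side of the line the pixel is on.
        side = (x - cx) * dy - (y - cy) * dx
        if side > 0:
            area_a += 1
        elif side < 0:
            area_b += 1
    return area_a, area_b


def areas_equal(area_a, area_b, threshold):
    """Treat two areas as equal when they differ by less than `threshold` pixels."""
    return abs(area_a - area_b) < threshold
```

For a rectangle split along one of its symmetry axes, the two counts coincide, which is the case item (1) says to avoid. For an asymmetric shape such as an L, the counts differ by far more than any reasonable discretization threshold, so the two parts can be told apart.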

3.2.3. Rotation-Angle Calculation

3.3. Evaluation of LIRS Method

3.3.1. Accuracy

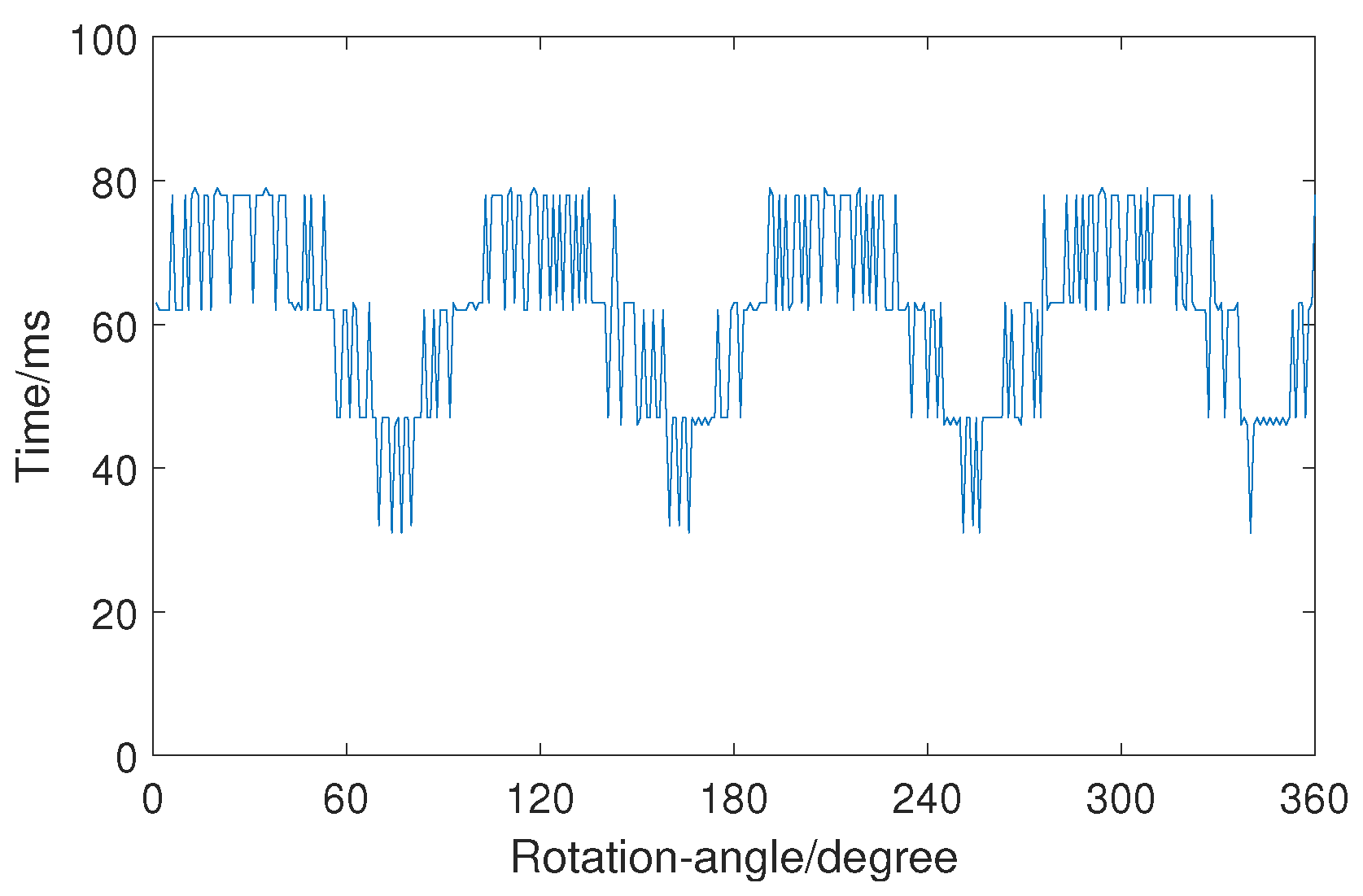

3.3.2. Time Consumption

3.3.3. Generality

3.3.4. Image Size

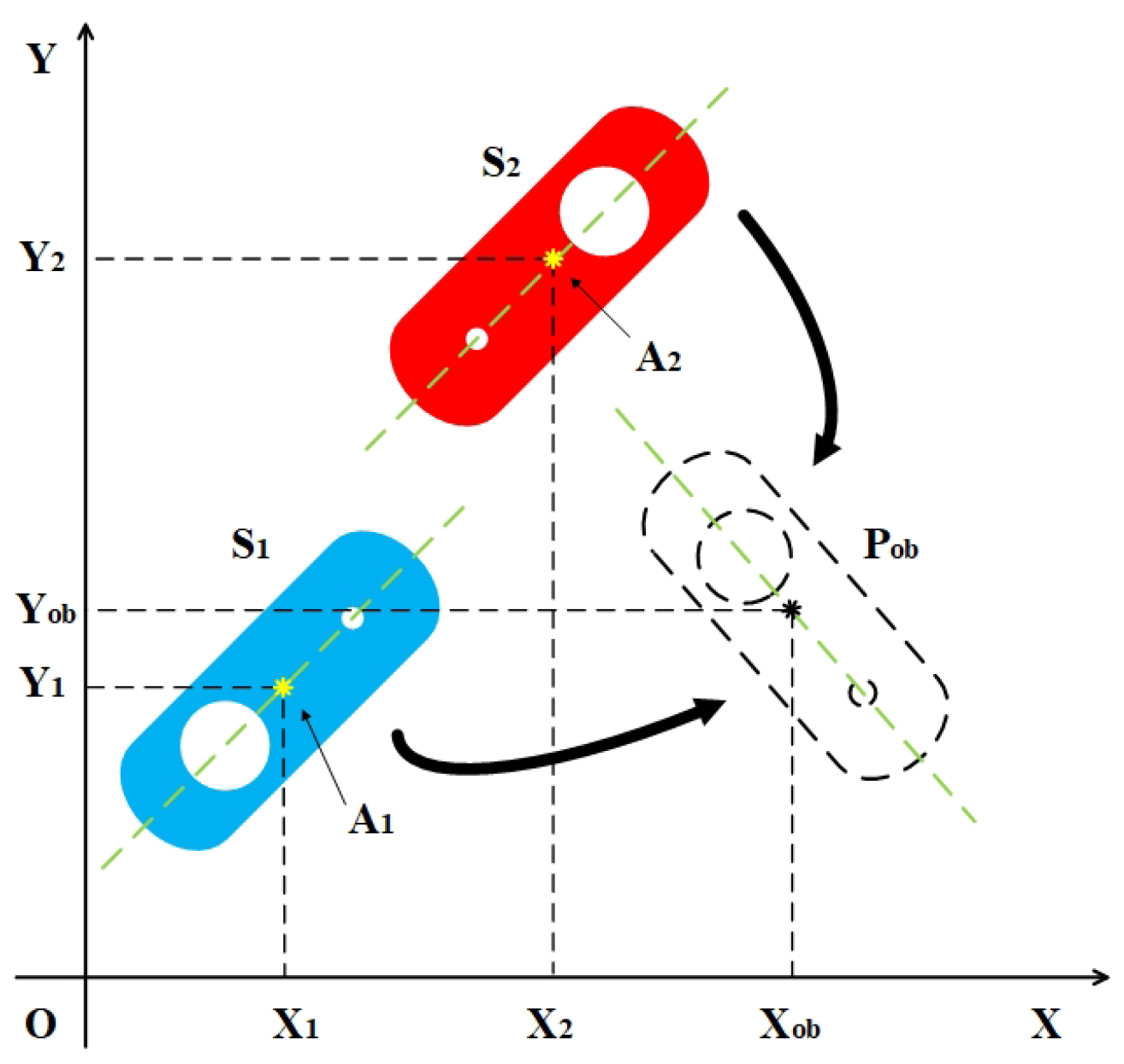

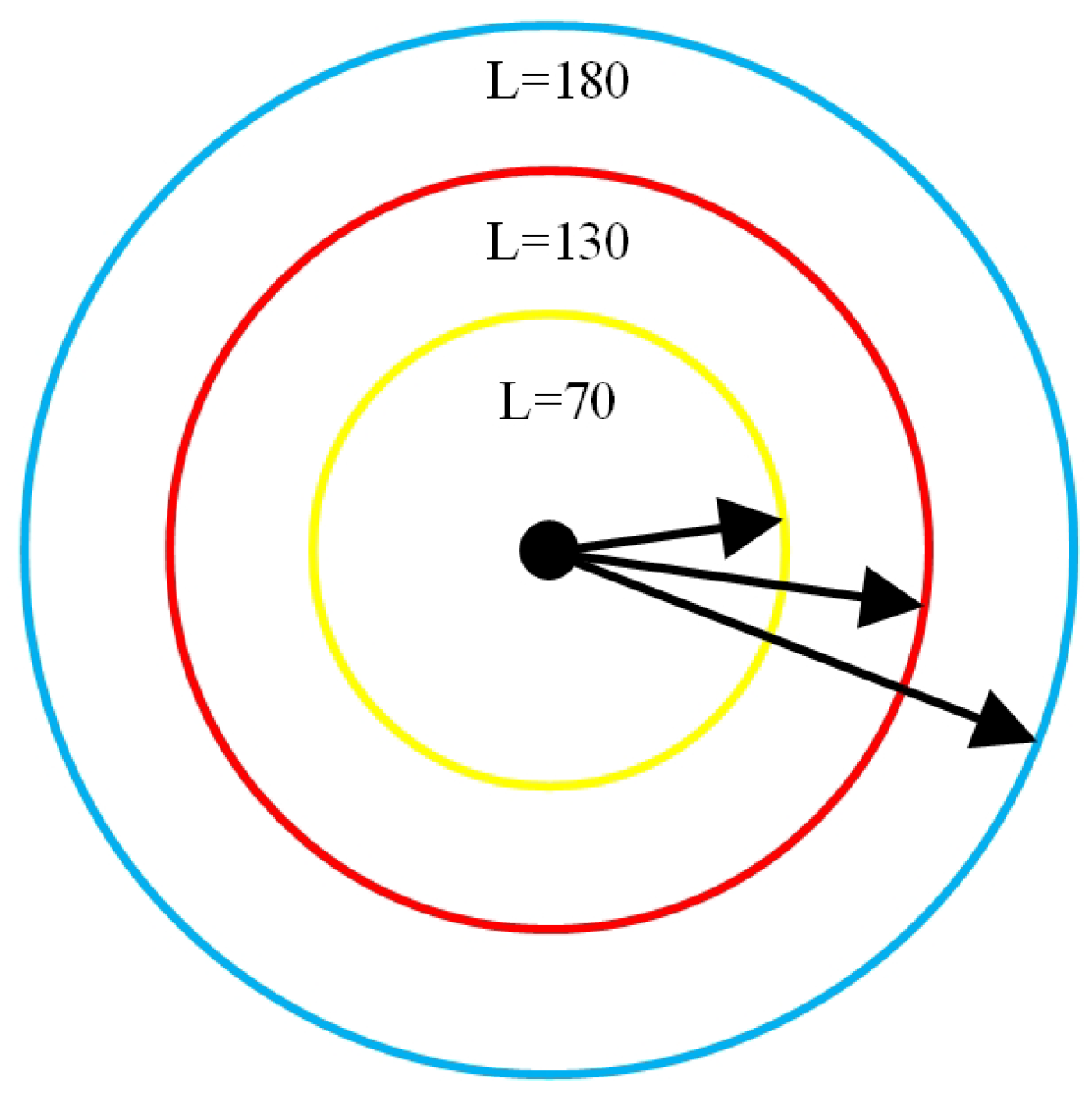

4. Rotation-Angle Measurement Model

4.1. Modeling

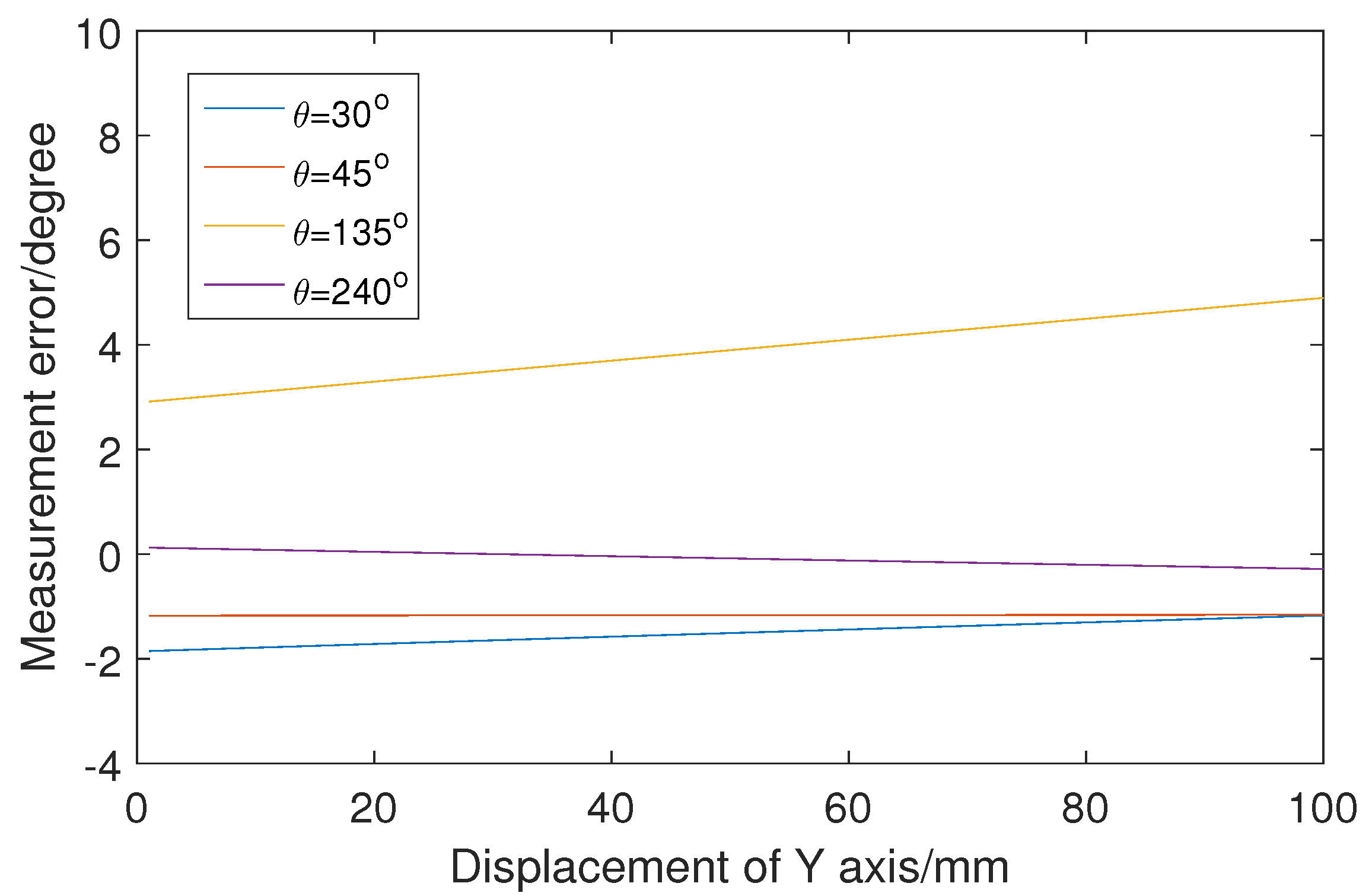

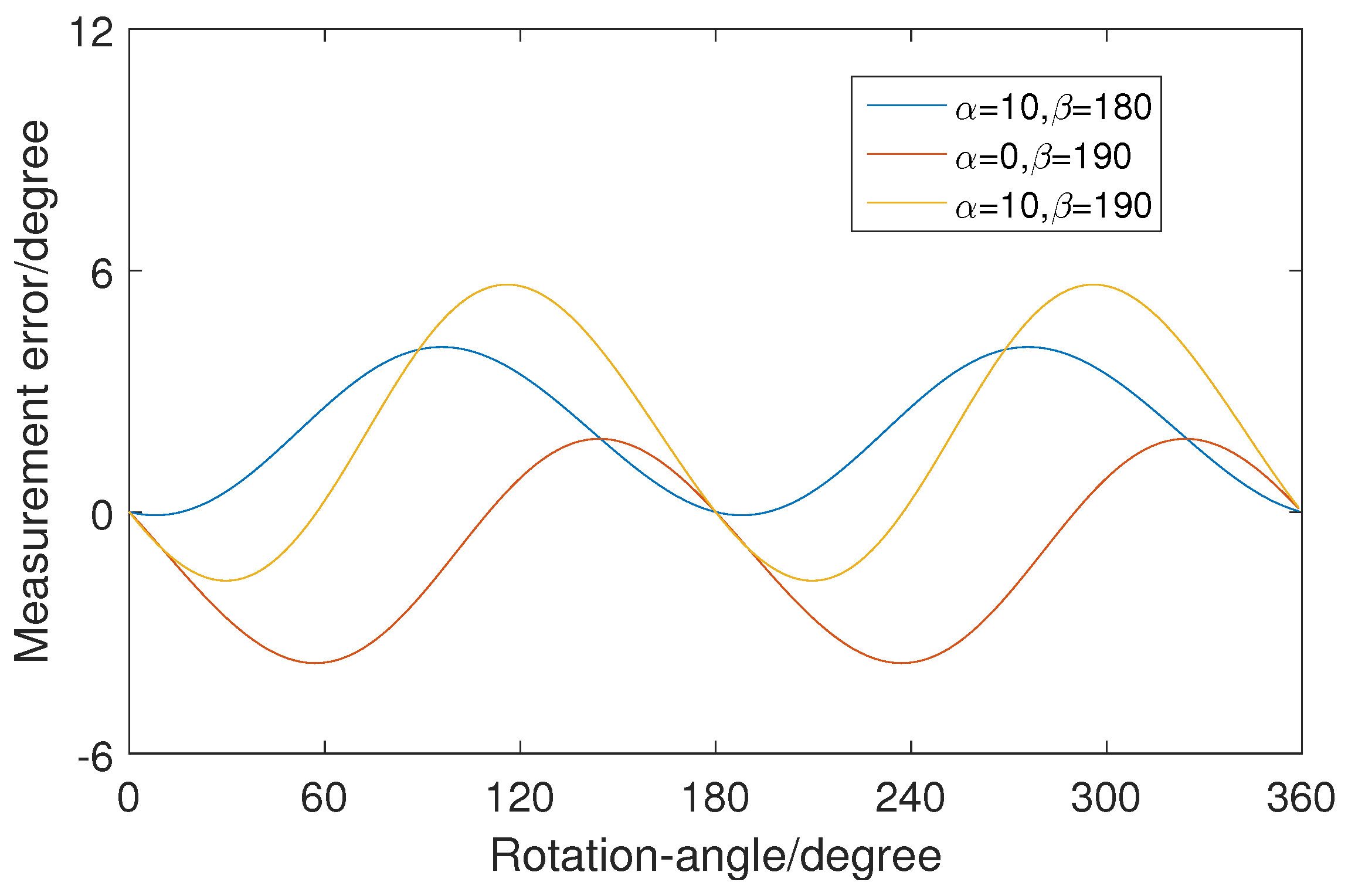

4.2. Simulation and Discussion

4.3. Method for Correction of Rotation-Angle Measurement Error

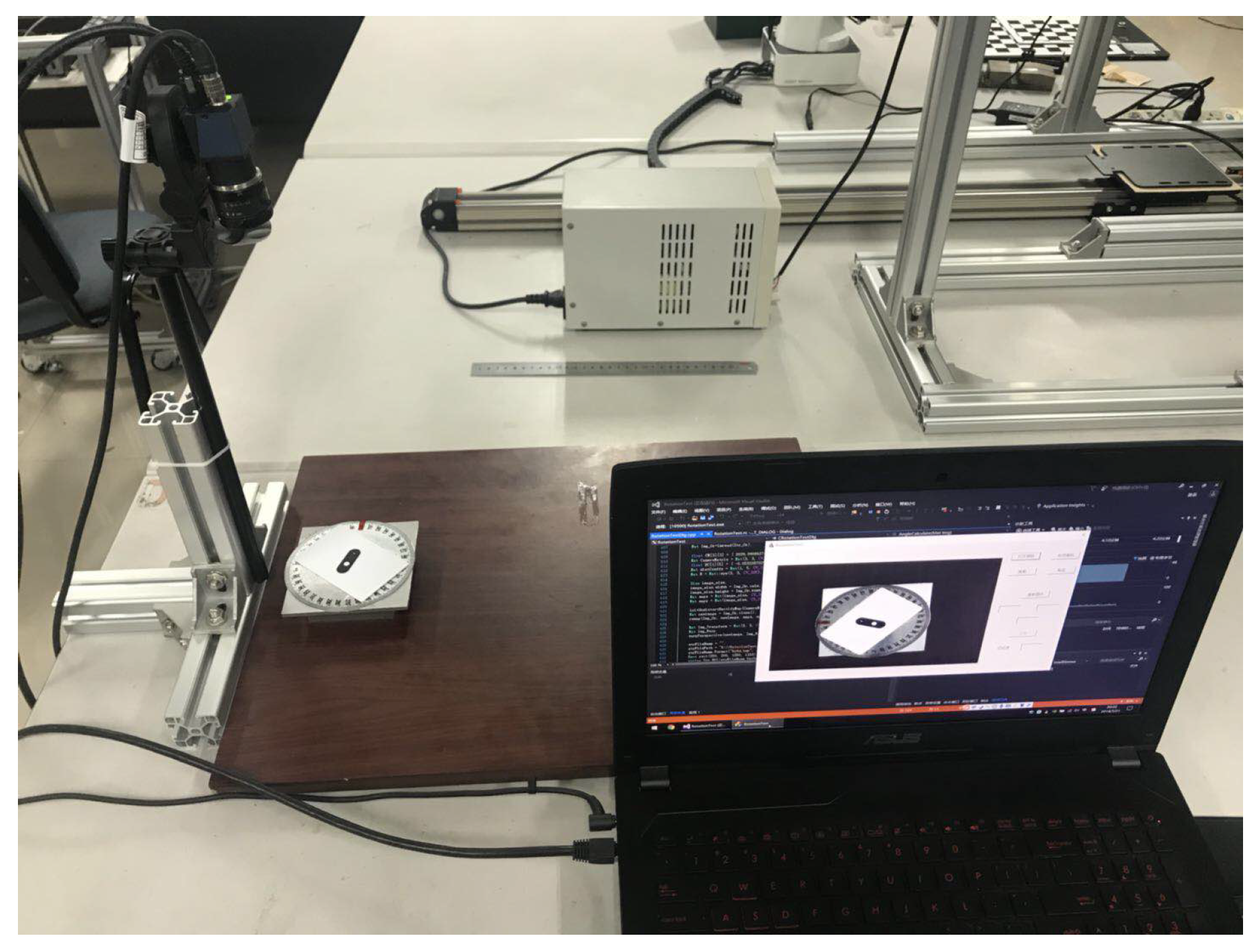

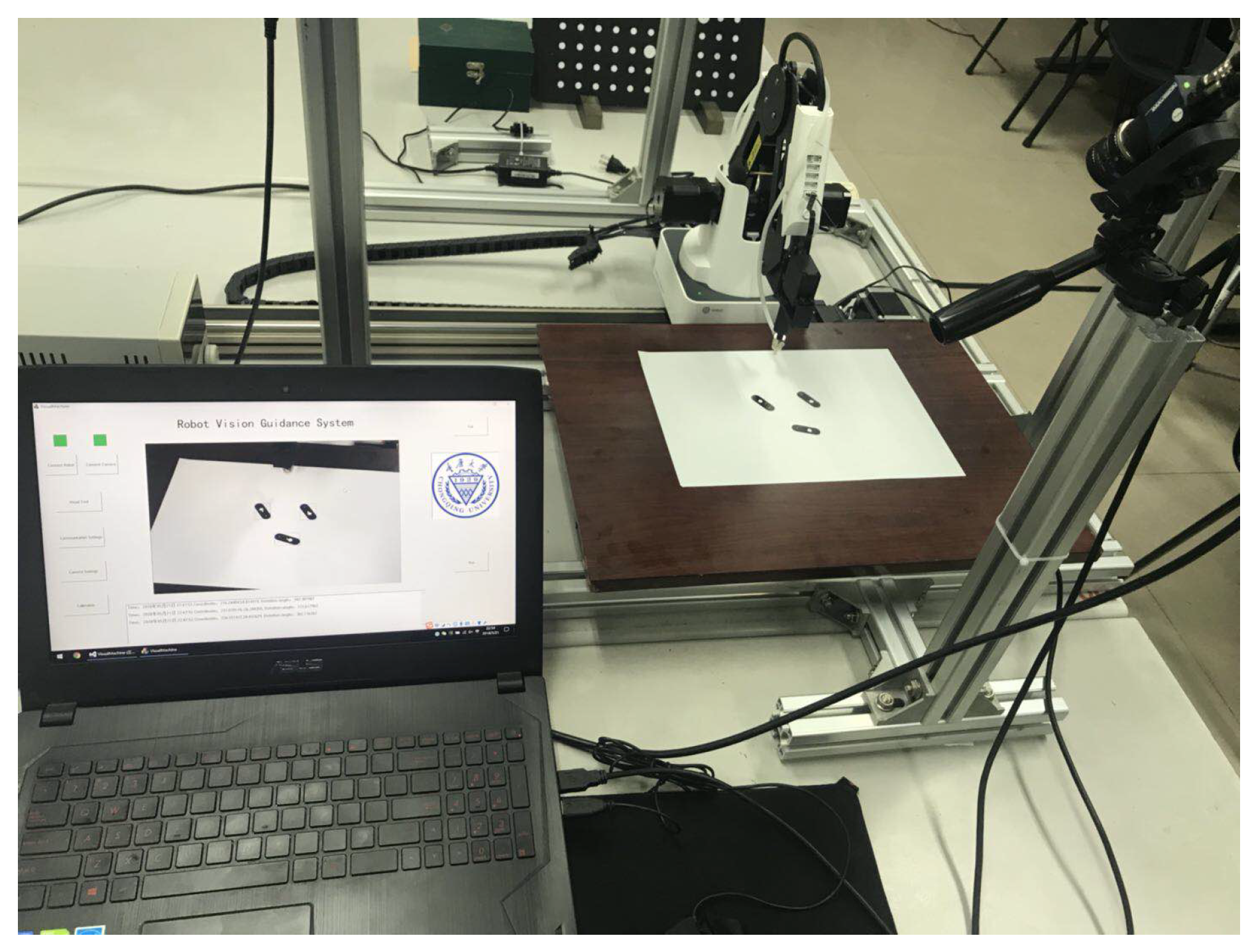

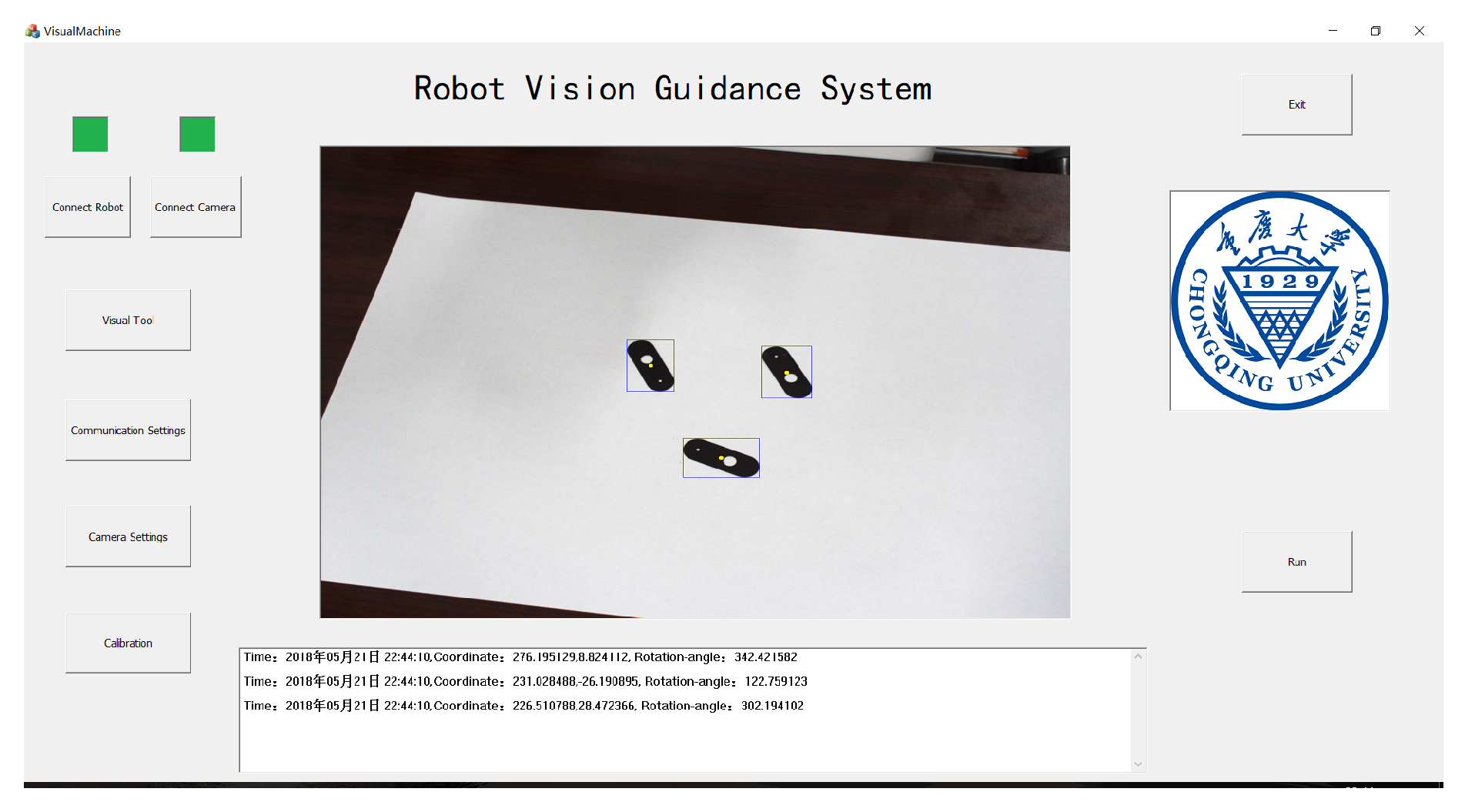

5. Experiment

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

| Ideal Value | Measured Value | Correction Value | Error |
|---|---|---|---|

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Cao, C.; Ouyang, Q. 2D Rotation-Angle Measurement Utilizing Least Iterative Region Segmentation. Sensors 2019, 19, 1634. https://doi.org/10.3390/s19071634