Motion Estimation by Hybrid Optical Flow Technology for UAV Landing in an Unvisited Area

Abstract

1. Introduction

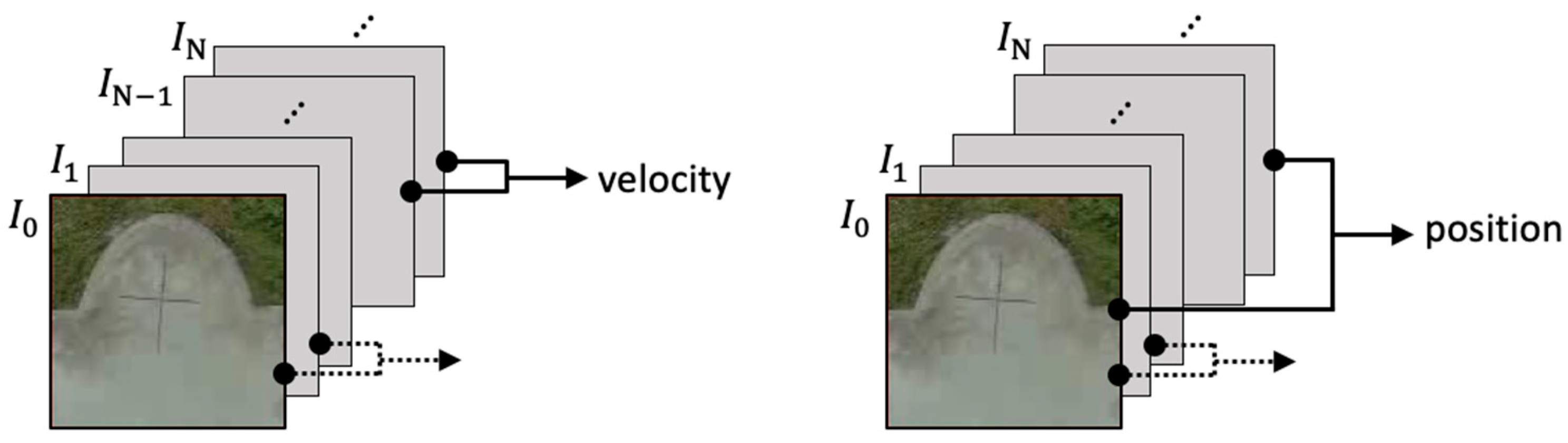

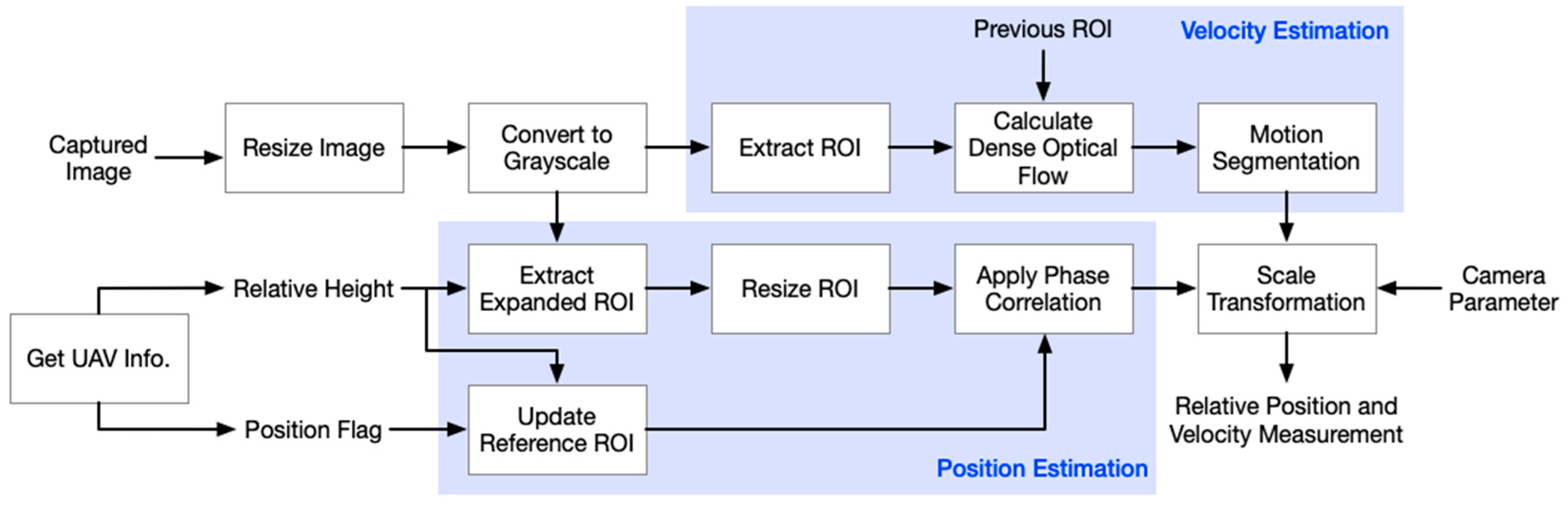

2. Vision-Based Motion Estimation by Hybrid Optical Flow

2.1. Gunnar Farnebäck Optical Flow

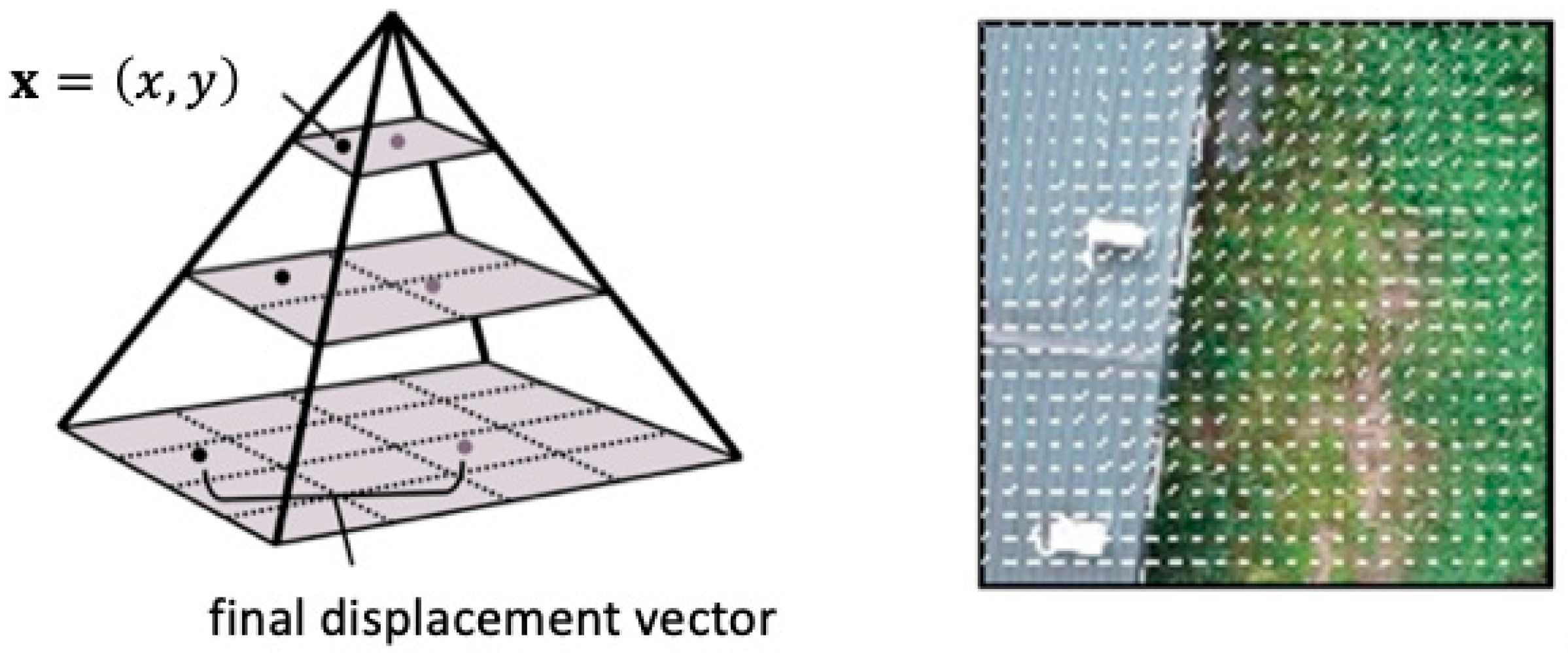
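Farnebäck's method (Farnebäck, 2003, in the reference list) approximates each neighborhood by a quadratic polynomial, f(x) ≈ xᵀAx + bᵀx + c, and reads the displacement from how the coefficients change between frames. If f₂(x) = f₁(x − d), then A₂ = A₁ and b₂ = b₁ − 2A₁d, so d solves A d = −½(b₂ − b₁) with A = (A₁ + A₂)/2. The Python sketch below assumes NumPy is available. It fits one quadratic per frame over a single window to illustrate that displacement equation only. It is not the dense, pyramidal, iteratively refined algorithm, and it is not the authors' implementation. The helper names `poly_expand` and `farneback_displacement` are hypothetical. For real image pairs, OpenCV's `cv2.calcOpticalFlowFarneback` provides a dense implementation.

```python
import numpy as np


def poly_expand(img, xs, ys):
    """Fit f(x, y) ~ p^T A p + b^T p + c, with p = (x, y), over one window by least squares."""
    x, y, f = xs.ravel(), ys.ravel(), img.ravel()
    # Quadratic basis: 1, x, y, x^2, y^2, xy
    basis = np.stack([np.ones_like(x), x, y, x * x, y * y, x * y], axis=1)
    r, *_ = np.linalg.lstsq(basis, f, rcond=None)
    # The xy coefficient equals 2 * A[0, 1] because A is symmetric
    A = np.array([[r[3], r[5] / 2.0], [r[5] / 2.0, r[4]]])
    b = np.array([r[1], r[2]])
    return A, b


def farneback_displacement(f1, f2, xs, ys):
    """Single-window displacement d = (dx, dy) from the polynomial-expansion equations."""
    A1, b1 = poly_expand(f1, xs, ys)
    A2, b2 = poly_expand(f2, xs, ys)
    A = (A1 + A2) / 2.0          # average the quadratic terms of both frames
    delta_b = -(b2 - b1) / 2.0   # follows from b2 = b1 - 2 A d
    return np.linalg.solve(A, delta_b)


# Usage: a synthetic quadratic "image" shifted by a known displacement
ys, xs = np.mgrid[-5:6, -5:6].astype(float)
A_true = np.array([[1.0, 0.2], [0.2, 0.5]])
b_true = np.array([0.3, -0.4])


def quad(x, y):
    p = np.stack([x, y], axis=-1)
    return np.einsum("...i,ij,...j->...", p, A_true, p) + p @ b_true + 1.0


d_true = np.array([1.5, -0.75])
f1 = quad(xs, ys)
f2 = quad(xs - d_true[0], ys - d_true[1])   # f2(x) = f1(x - d)
d_est = farneback_displacement(f1, f2, xs, ys)
print(d_est)
```

Because the synthetic frames are exactly quadratic, the estimate matches `d_true` up to floating-point error. On real images the quadratic model holds only locally, which is why the full method works per pixel over weighted windows and refines the result coarse-to-fine.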

2.2. Multi-Scale Phase Correlation
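Phase correlation (Kuglin and Hines, 1975, in the reference list) estimates a translation from the normalized cross-power spectrum of two frames. A pure shift appears as a linear phase ramp, and the inverse FFT of that ramp is a single peak at the displacement. The sketch below assumes NumPy and a cyclic (`np.roll`) shift, and it recovers an integer-pixel translation only. It does not reproduce the multi-scale scheme of this section, sub-pixel refinement (Foroosh et al.), or scale and rotation recovery through the log-polar (Fourier–Mellin) transform used in several of the cited works. `phase_correlation` is a hypothetical helper name.

```python
import numpy as np


def phase_correlation(ref, moved, eps=1e-12):
    """Return the integer shift (dy, dx) such that moved ~ np.roll(ref, (dy, dx), axis=(0, 1))."""
    F_ref = np.fft.fft2(ref)
    F_mov = np.fft.fft2(moved)
    # Normalized cross-power spectrum: keep only the phase difference
    cross = F_mov * np.conj(F_ref)
    cross /= np.abs(cross) + eps
    # A pure translation turns into a single peak in the inverse transform
    corr = np.fft.ifft2(cross).real
    peak = np.unravel_index(np.argmax(corr), corr.shape)
    # Indices past the midpoint correspond to negative (wrapped-around) shifts
    return tuple(int(p - n) if p > n // 2 else int(p) for p, n in zip(peak, corr.shape))


rng = np.random.default_rng(0)
frame0 = rng.random((64, 64))
frame1 = np.roll(frame0, shift=(5, -3), axis=(0, 1))
print(phase_correlation(frame0, frame1))   # (5, -3)
```

Normalizing by the spectrum magnitude discards image content and keeps only the phase, which makes the correlation peak sharp and relatively insensitive to global brightness changes. That property is one reason phase correlation is attractive for downward-looking cameras over textured ground.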

3. Experimental Results

3.1. Experimental Setup

3.2. Results and Data Analysis

3.3. Landing Controls Using Vision-Based Motion Estimation

4. Conclusions

Supplementary Materials

Author Contributions

Funding

Conflicts of Interest

References

- Dickmanns, E.D. A general dynamic vision architecture for UGV and UAV. Appl. Intell. 1992, 2, 251–270.

- Saripalli, S.; Montgomery, J.F.; Sukhatme, G.S. Vision-based autonomous landing of an unmanned aerial vehicle. In Proceedings of the IEEE International Conference on Robotics and Automation, Washington, DC, USA, 11–15 May 2002; pp. 2799–2804.

- Lange, S.; Sünderhauf, N.; Protzel, P. Autonomous landing for a multirotor UAV using vision. In Proceedings of the International Conference on Simulation, Modeling, and Programming for Autonomous Robots, Venice, Italy, 3–7 November 2008; pp. 482–491.

- Lange, S.; Sünderhauf, N.; Protzel, P. A vision based onboard approach for landing and position control of an autonomous multirotor UAV in GPS-denied environments. In Proceedings of the International Conference on Advanced Robotics, Munich, Germany, 22–26 June 2009; pp. 1–6.

- Nguyen, P.H.; Kim, K.W.; Lee, Y.W.; Park, K.R. Remote Marker-Based Tracking for UAV Landing Using Visible-Light Camera Sensor. Sensors 2017, 17, 1987.

- Nguyen, P.; Arsalan, M.; Koo, J.; Naqvi, R.; Truong, N.; Park, K. LightDenseYOLO: A Fast and Accurate Marker Tracker for Autonomous UAV Landing by Visible Light Camera Sensor on Drone. Sensors 2018, 18, 1703.

- Kyristsis, S.; Antonopoulos, A.; Chanialakis, T.; Stefanakis, E.; Linardos, C.; Tripolitsiotis, A.; Partsinevelos, P. Towards Autonomous Modular UAV Missions: The Detection, Geo-Location and Landing Paradigm. Sensors 2016, 16, 1844.

- Falanga, D.; Zanchettin, A.; Simovic, A.; Delmerico, J.; Scaramuzza, D. Vision-based autonomous quadrotor landing on a moving platform. In Proceedings of the IEEE International Symposium on Safety, Security and Rescue Robotics (SSRR), Shanghai, China, 11–13 October 2017; pp. 200–207.

- Lin, S.; Garratt, M.A.; Lambert, A.J. Monocular vision-based real-time target recognition and tracking for autonomously landing an UAV in a cluttered shipboard environment. Auton. Rob. 2017, 41, 881–901.

- Desaraju, V.R.; Michael, N.; Humenberger, M.; Brockers, R.; Weiss, S.; Nash, J.; Matthies, L. Vision-based landing site evaluation and informed optimal trajectory generation toward autonomous rooftop landing. Auton. Rob. 2015, 39, 445–463.

- Forster, C.; Faessler, M.; Fontana, F.; Werlberger, M.; Scaramuzza, D. Continuous on-board monocular-vision-based elevation mapping applied to autonomous landing of micro aerial vehicles. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Seattle, WA, USA, 26–30 May 2015; pp. 111–118.

- Huang, A.S.; Bachrach, A.; Henry, P.; Krainin, M.; Maturana, D.; Fox, D.; Roy, N. Visual Odometry and Mapping for Autonomous Flight Using an RGB-D Camera. In Robotics Research: The 15th International Symposium ISRR; Christensen, H.I., Khatib, O., Eds.; Springer International Publishing: Cham, Switzerland, 2017; pp. 235–252.

- Cesetti, A.; Frontoni, E.; Mancini, A.; Zingaretti, P.; Longhi, S. A Vision-Based Guidance System for UAV Navigation and Safe Landing using Natural Landmarks. J. Intell. Robot. Syst. 2009, 57, 233.

- Loquercio, A.; Maqueda, A.I.; del-Blanco, C.R.; Scaramuzza, D. DroNet: Learning to Fly by Driving. IEEE Robot. Autom. Lett. 2018, 3, 1088–1095.

- Campbell, J.; Sukthankar, R.; Nourbakhsh, I. Techniques for evaluating optical flow for visual odometry in extreme terrain. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Sendai, Japan, 28 September–2 October 2004; Volume 3704, pp. 3704–3711.

- Dille, M.; Grocholsky, B.; Singh, S. Outdoor Downward-Facing Optical Flow Odometry with Commodity Sensors. In Proceedings of the 7th International Conference on Field and Service Robotics, Cambridge, MA, USA, 14–16 July 2009; pp. 183–193.

- Lucas, B.D.; Kanade, T. An iterative image registration technique with an application to stereo vision. In Proceedings of the 7th International Joint Conference on Artificial Intelligence, Vancouver, BC, Canada, 24–28 August 1981; pp. 674–679.

- Shi, J.; Tomasi, C. Good features to track. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 21–23 June 1994; pp. 593–600.

- Barron, J.L.; Fleet, D.J.; Beauchemin, S.S. Performance of optical flow techniques. Int. J. Comput. Vis. 1994, 12, 43–77.

- Kondermann, D.; Abraham, S.; Brostow, G.J.; Förstner, W.; Gehrig, S.K.; Imiya, A.; Jähne, B.; Klose, F.; Magnor, M.A.; Mayer, H.; et al. On Performance Analysis of Optical Flow Algorithms. In Proceedings of the 15th International Workshop on Theoretical Foundations of Computer Vision, Dagstuhl Castle, Germany, 26 June–1 July 2011.

- Horn, B.K.P.; Schunck, B.G. Determining optical flow. Artif. Intell. 1981, 17, 185–203.

- Farnebäck, G. Two-Frame Motion Estimation Based on Polynomial Expansion. In Proceedings of the 13th Scandinavian Conference on Image Analysis, Halmstad, Sweden, 29 June–2 July 2003; pp. 363–370.

- Kuglin, C.D.; Hines, D.C. The phase correlation image alignment method. In Proceedings of the IEEE International Conference on Systems, Man, and Cybernetics, New York, NY, USA, September 1975; pp. 163–165.

- Stiller, C.; Konrad, J. Estimating motion in image sequences. IEEE Signal Process. Mag. 1999, 16, 70–91.

- Foroosh, H.; Zerubia, J.B.; Berthod, M. Extension of phase correlation to subpixel registration. IEEE Trans. Image Process. 2002, 11, 188–200.

- Ma, N.; Sun, P.-F.; Men, Y.-B.; Men, C.-G.; Li, X. A Subpixel Matching Method for Stereovision of Narrow Baseline Remotely Sensed Imagery. Math. Probl. Eng. 2017, 2017, 14.

- Thoduka, S.; Hegger, F.; Kraetzschmar, G.; Plöger, P. Motion Detection in the Presence of Egomotion Using the Fourier-Mellin Transform. In RoboCup 2017: Robot World Cup XXI, 1st ed.; Springer: Cham, Switzerland, 2018; pp. 252–264.

- Van de Loosdrecht, J.; Dijkstra, K.; Postma, J.H.; Keuning, W.; Bruin, D. Twirre: Architecture for autonomous mini-UAVs using interchangeable commodity. In Proceedings of the International Micro Air Vehicle Competition and Conference, Delft, The Netherlands, 12–15 August 2014; pp. 26–33.

- Maluleke, H. Motion Detection from a Moving Camera. Bachelor’s Thesis, University of the Western Cape, Cape Town, South Africa, 2016.

- Sarvaiya, J.; Patnaik, S.; Kothari, K. Image Registration Using Log Polar Transform and Phase Correlation to Recover Higher Scale. J. Pattern Recognit. Res. 2012, 7, 90–105.

- Lijun Lai, Z.X. Global Motion Estimation Based on Fourier Mellin and Phase Correlation. In Proceedings of the International Conference on Civil, Materials and Environmental Sciences (CMES), London, UK, 14–15 March 2015.

- Baker, S.; Scharstein, D.; Lewis, J.P.; Roth, S.; Black, M.J.; Szeliski, R. A Database and Evaluation Methodology for Optical Flow. Int. J. Comput. Vis. 2011, 92, 1–31.

- Castro, E.D.; Morandi, C. Registration of Translated and Rotated Images Using Finite Fourier Transforms. IEEE Trans. Pattern Anal. Mach. Intell. 1987, 700–703.

- Goodman, J.W. Introduction to Fourier Optics, 4th ed.; W.H. Freeman: New York, NY, USA, 2017.

- Brunelli, R. Template Matching Techniques in Computer Vision: Theory and Practice; Wiley Publishing: Hoboken, NJ, USA, 2009; p. 348.

| Landing Mode | Landing Target | Final Position | GPS | Vision System |
|---|---|---|---|---|
| (+) | (+) | (● - +) | (● - +) | |
| Mode 1 | (0, 0) | (0.11, 0.07) | (0, −0.13) | (−0.08, −0.04) |
| Mode 1 | (0, 0) | (−0.07, 0.40) | (0.91, 0.93) | (−0.02, −0.30) |
| Mode 1 | (0, 0) | (−0.45, −0.05) | (0.05, 0.58) | (0.47, 0.05) |
| Mode 2 | (0, 0) | (−1.14, −0.48) | (0.99, 0.18) | (0.06, 0.01) |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Cheng, H.-W.; Chen, T.-L.; Tien, C.-H. Motion Estimation by Hybrid Optical Flow Technology for UAV Landing in an Unvisited Area. Sensors 2019, 19, 1380. https://doi.org/10.3390/s19061380