An Intelligent Vision Based Sensing Approach for Spraying Droplets Deposition Detection

Abstract

1. Introduction

- We propose a novel intelligent droplet collection node: an automatically controlled, enclosed device that is less susceptible to environmental interference. Most importantly, the node adapts to different lighting conditions, supports large-scale detection of aerial spray droplets, and avoids the human errors inherent in common detection approaches.

- Using T-310 achromatic ink, which turns transparent when wetted and recovers its color when dried, we designed a T-310 achromatic oil paper, based on silicone oil paper, as the droplet deposition carrier. It can collect droplet images repeatedly and overcomes several drawbacks of common droplet carriers: low utilization rate, high cost, and susceptibility to contamination.

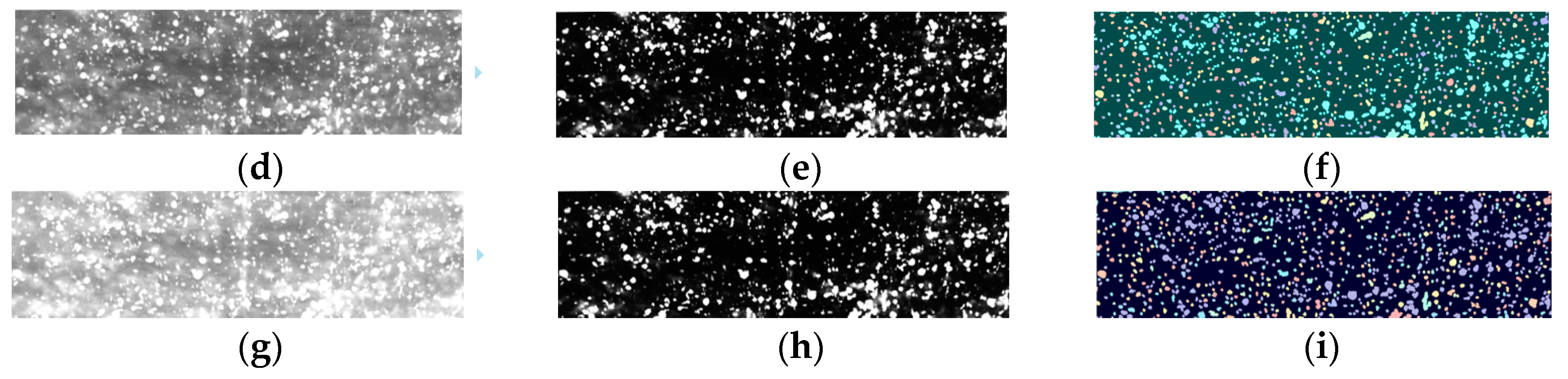

- Based on morphology, we introduce the shape degree and an area threshold as criteria for determining whether droplets are adhered (touching), and then modify the marker-controlled watershed segmentation algorithm to separate the adhesive droplets.
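The adhesion criterion in the last contribution can be illustrated with a small sketch. This section does not define *shape degree*, so the code assumes it is the usual circularity 4πA/P² (1 for a circle, lower for irregular blobs) computed on a closed droplet contour. The thresholds `shape_threshold=0.8` and `area_threshold=500` are hypothetical placeholders that would need calibration. In OpenCV, `cv2.contourArea` and `cv2.arcLength` give the same area and perimeter measures for real contours.

```python
import math


def polygon_area(pts):
    """Shoelace formula for a closed contour given as [(x, y), ...]."""
    s = 0.0
    for (x0, y0), (x1, y1) in zip(pts, pts[1:] + pts[:1]):
        s += x0 * y1 - x1 * y0
    return abs(s) / 2.0


def polygon_perimeter(pts):
    """Sum of edge lengths of the closed contour."""
    return sum(math.dist(p, q) for p, q in zip(pts, pts[1:] + pts[:1]))


def shape_degree(pts):
    """Assumed definition: circularity 4*pi*A/P**2 (1.0 for a perfect circle)."""
    p = polygon_perimeter(pts)
    return 4.0 * math.pi * polygon_area(pts) / (p * p) if p > 0 else 0.0


def is_adhesive(pts, shape_threshold=0.8, area_threshold=500.0):
    """Hypothetical rule: treat a blob as adhered droplets if it is clearly
    non-circular or larger than a single droplet is expected to be."""
    return shape_degree(pts) < shape_threshold or polygon_area(pts) > area_threshold
```

An elongated 40 × 10 blob has circularity 0.16π ≈ 0.50 and is flagged by the shape test, while a large round blob is caught only by the area test; using both criteria covers both cases.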

2. Related Works

3. Materials and Methods

3.1. Intelligent Collection Node Setup

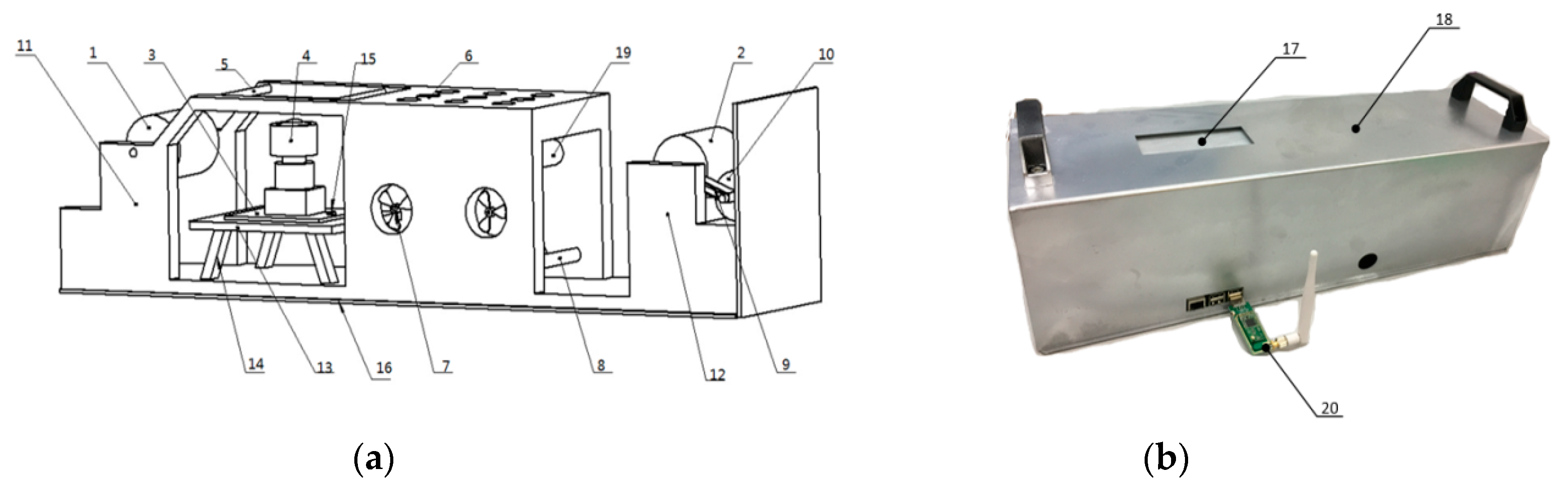

3.1.1. Design of an Intelligent Collection Node Based on a Vision Sensor for Droplet Deposition Images

3.1.2. Software Control Process

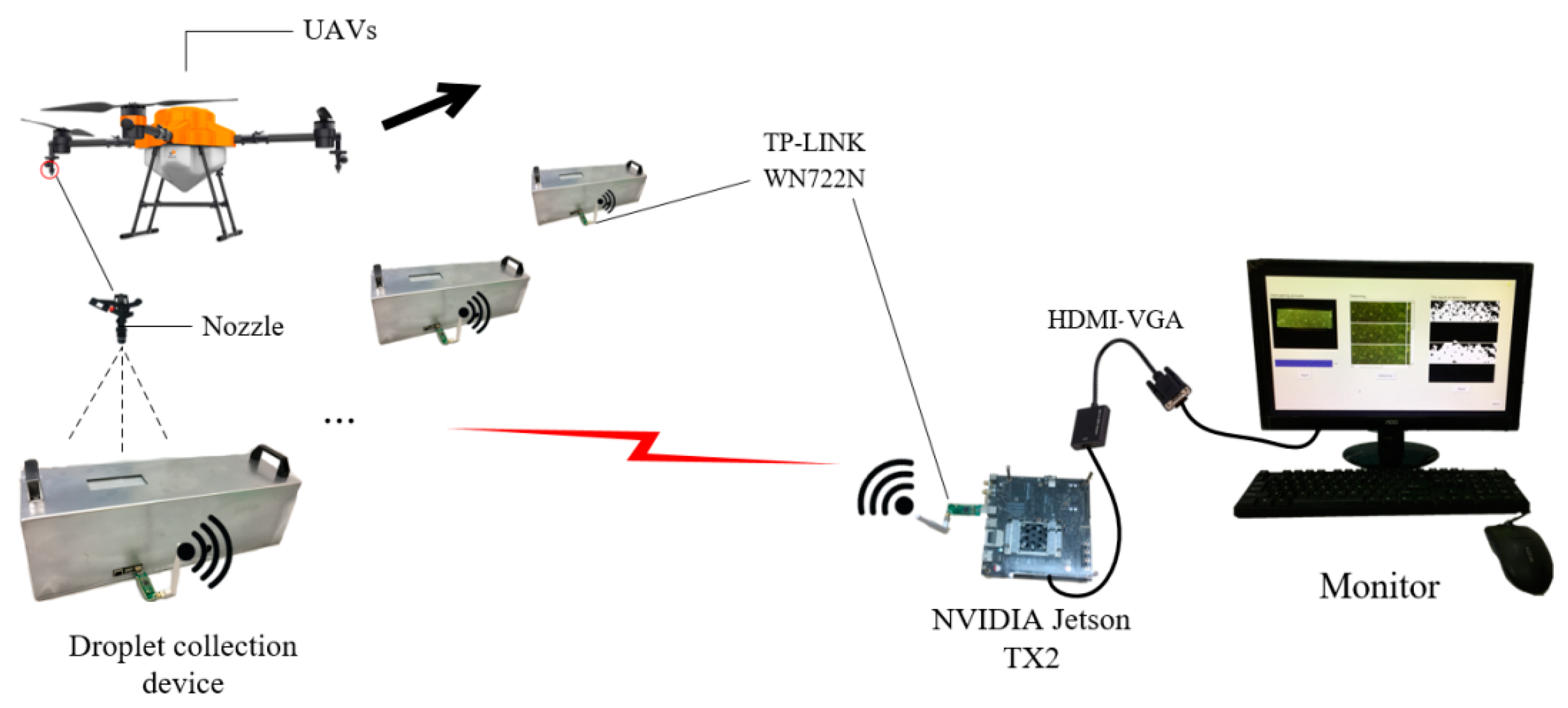

A suitable software control algorithm is necessary to automate the system. The control algorithm of the node consists of four parts:

- When a droplet falls on the deposition hole, the T-310 achromatic oil paper reacts with it and becomes transparent. This increases the light transmitted into the semi-enclosed device, and the light sensor detects the rise in the light value. The system then waits 10 s so that the reaction can complete. Next, the CSI camera is turned on to acquire the droplet deposition image, which is uploaded together with the illuminance data to the human-computer interaction system on the NVIDIA Jetson TX2 (NVIDIA Corporation, Santa Clara, California, USA) through the WIFI-WN722N module, as shown in Figure 2.

- After the droplet image is collected, the motor is turned on to advance the achromatic oil paper by a specified distance, moving the section carrying the droplets into the drying box.

- The motor is turned off and, at the same time, the drying box relay is switched on so that the fan and the heating tube run. Drying lasts 30 s and restores the achromatic oil paper to its original color.

- The motor is turned on again to advance the achromatic paper by a specified distance, and the paper roll collection device gathers the restored paper for reuse.
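The four-part control sequence above can be sketched as one cycle function. The hardware hooks in `NodeIO` (light sensor, CSI camera, uploader, motor, drying relay) are hypothetical placeholders, not the authors' code. The 10 s reaction wait and the 30 s drying time come from the text; the light-rise trigger (`baseline + rise`), the polling interval `poll_s`, and switching the relay off after drying are assumptions.

```python
from dataclasses import dataclass
from typing import Callable

WAIT_FOR_REACTION_S = 10  # from the text: wait 10 s for the ink reaction
DRYING_S = 30             # from the text: dry for 30 s


@dataclass
class NodeIO:
    """Hypothetical hardware hooks; placeholder names, not the authors' API."""
    read_light: Callable[[], float]          # light sensor reading
    capture_image: Callable[[], bytes]       # CSI camera frame
    upload: Callable[[bytes, float], None]   # send image + illuminance over Wi-Fi
    advance_paper: Callable[[], None]        # run the motor a fixed distance, then stop
    set_dryer: Callable[[bool], None]        # relay for the fan and heating tube
    sleep: Callable[[float], None]


def run_cycle(io: NodeIO, baseline: float, rise: float, poll_s: float = 0.5) -> float:
    """Run one collect-dry-reuse cycle and return the light value that triggered it."""
    # Part 1: wetted paper turns transparent, so more light reaches the sensor.
    trigger = io.read_light()
    while trigger < baseline + rise:     # hypothetical trigger rule
        io.sleep(poll_s)
        trigger = io.read_light()
    io.sleep(WAIT_FOR_REACTION_S)
    io.upload(io.capture_image(), io.read_light())
    # Part 2: move the wetted section into the drying box.
    io.advance_paper()
    # Part 3: heat and ventilate so the ink recovers its original color.
    io.set_dryer(True)
    io.sleep(DRYING_S)
    io.set_dryer(False)                  # assumption: relay off once drying ends
    # Part 4: move the restored paper onto the take-up roll for reuse.
    io.advance_paper()
    return trigger
```

Injecting `sleep` and the I/O functions keeps the sequence testable without hardware; on a real node they would wrap the sensor, camera, and GPIO drivers.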

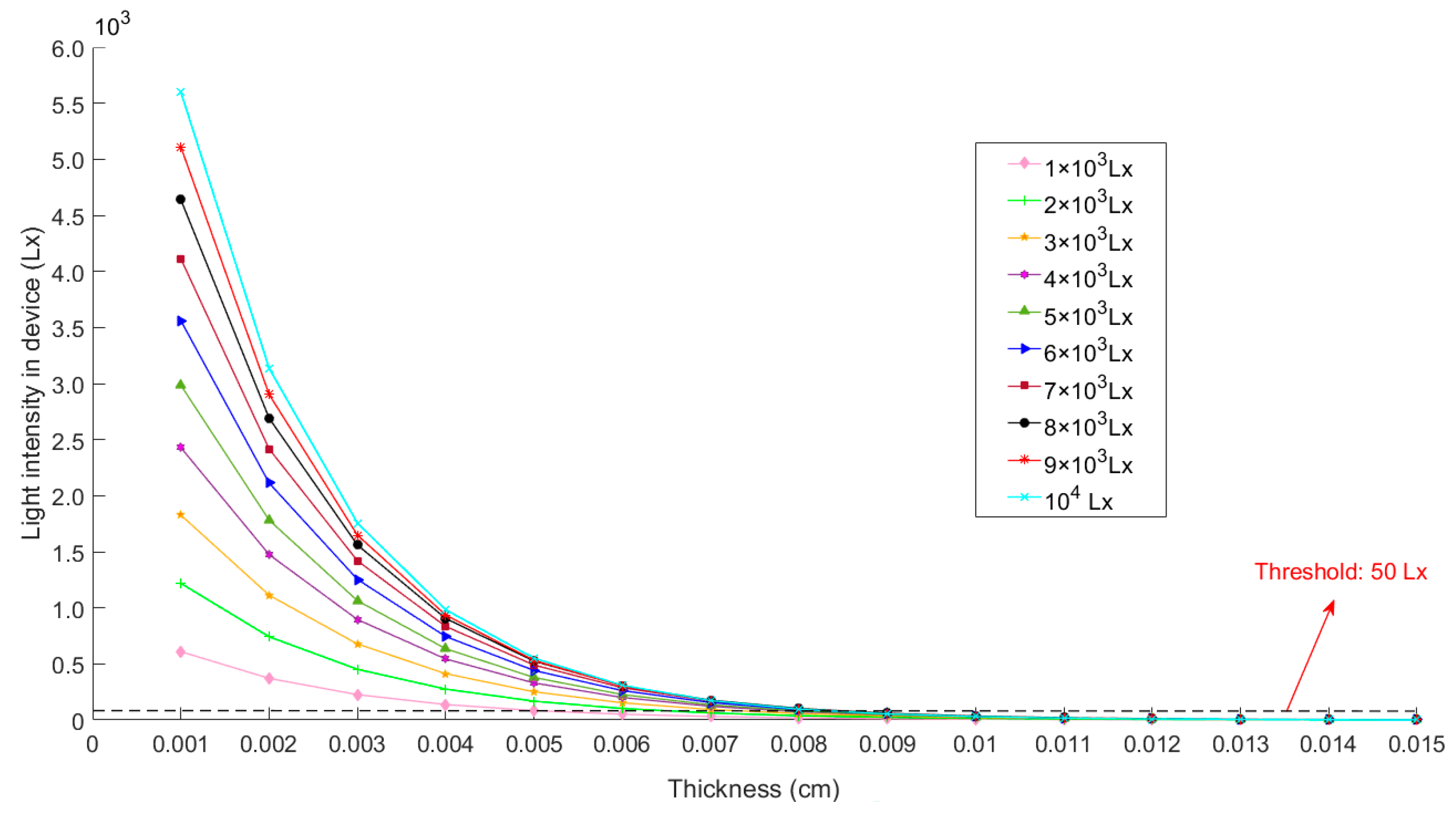

3.2. The Mathematical Model of T-310 Achromatic Ink Thickness and Light Intensity

3.2.1. T-310 Achromatic Oil Paper Droplets Deposition Carrier

3.2.2. The Generation of Droplet Spot

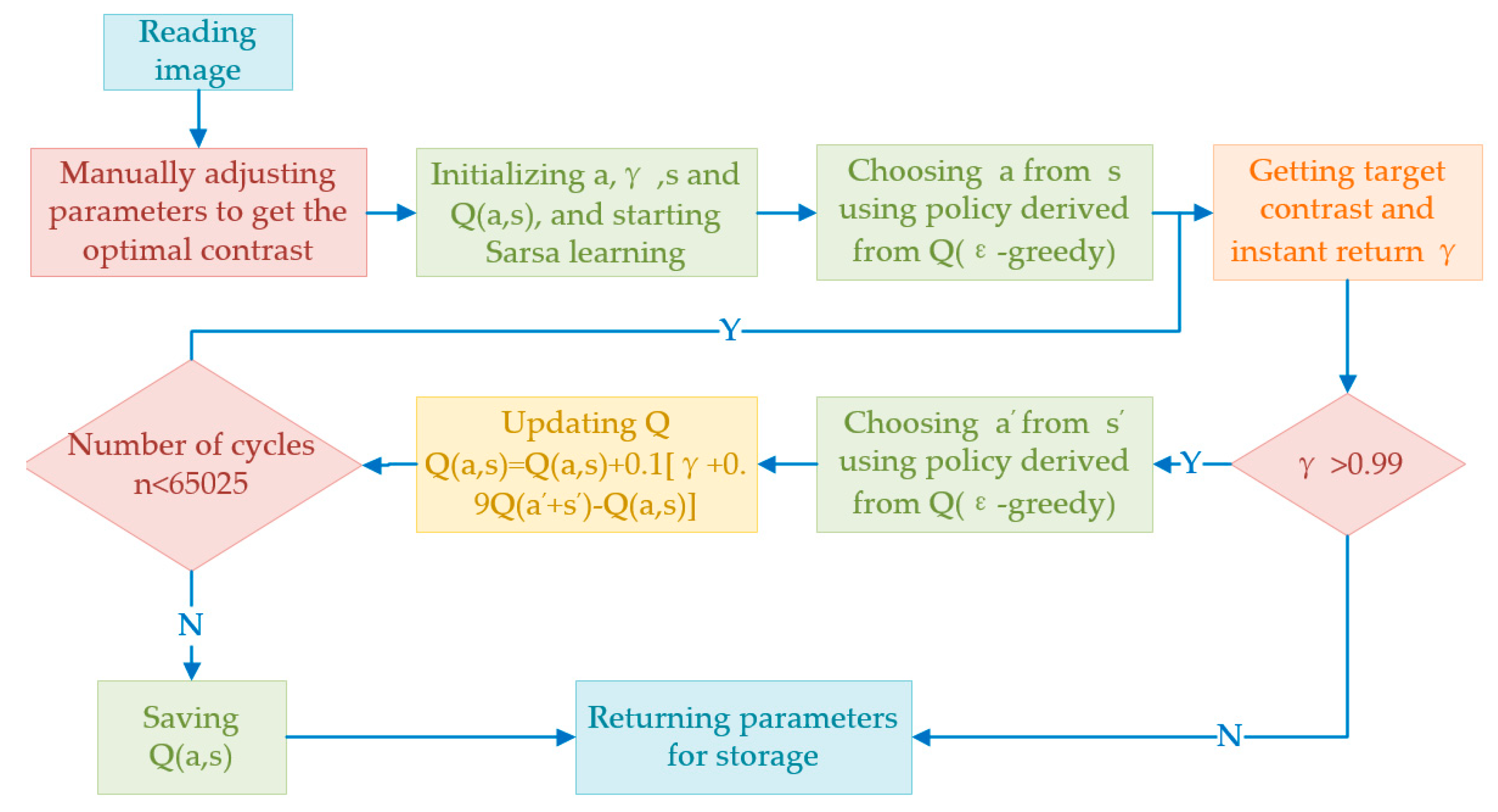

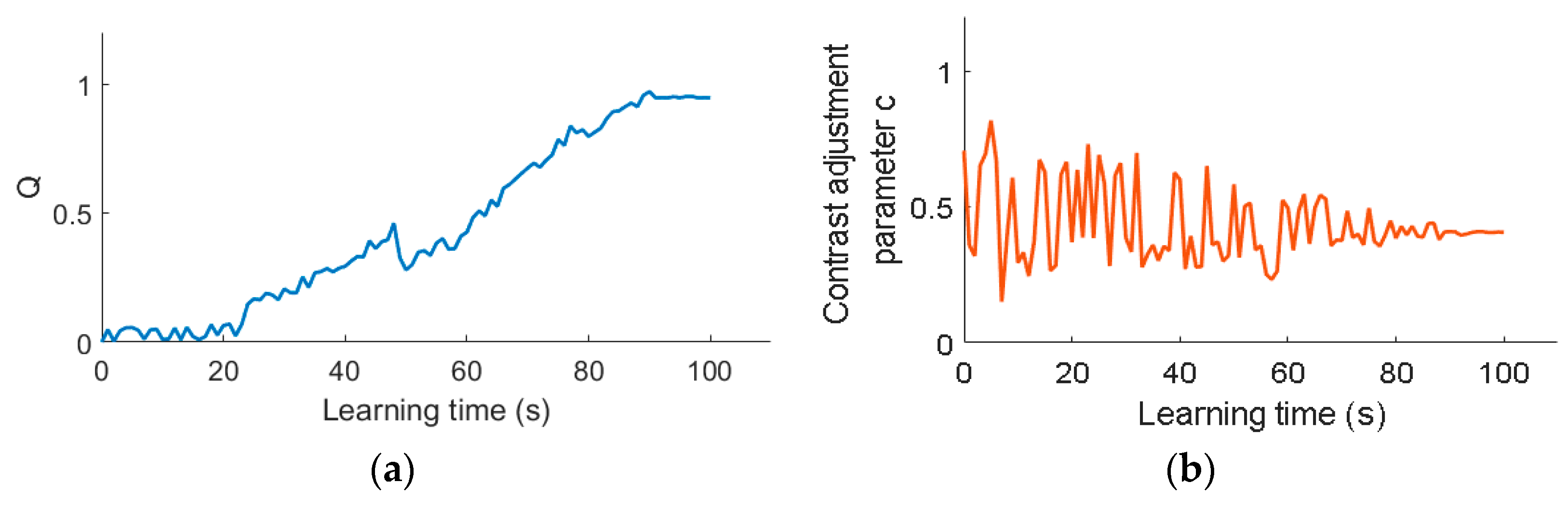

3.2.3. Light Adaptation Based on Reinforcement Learning

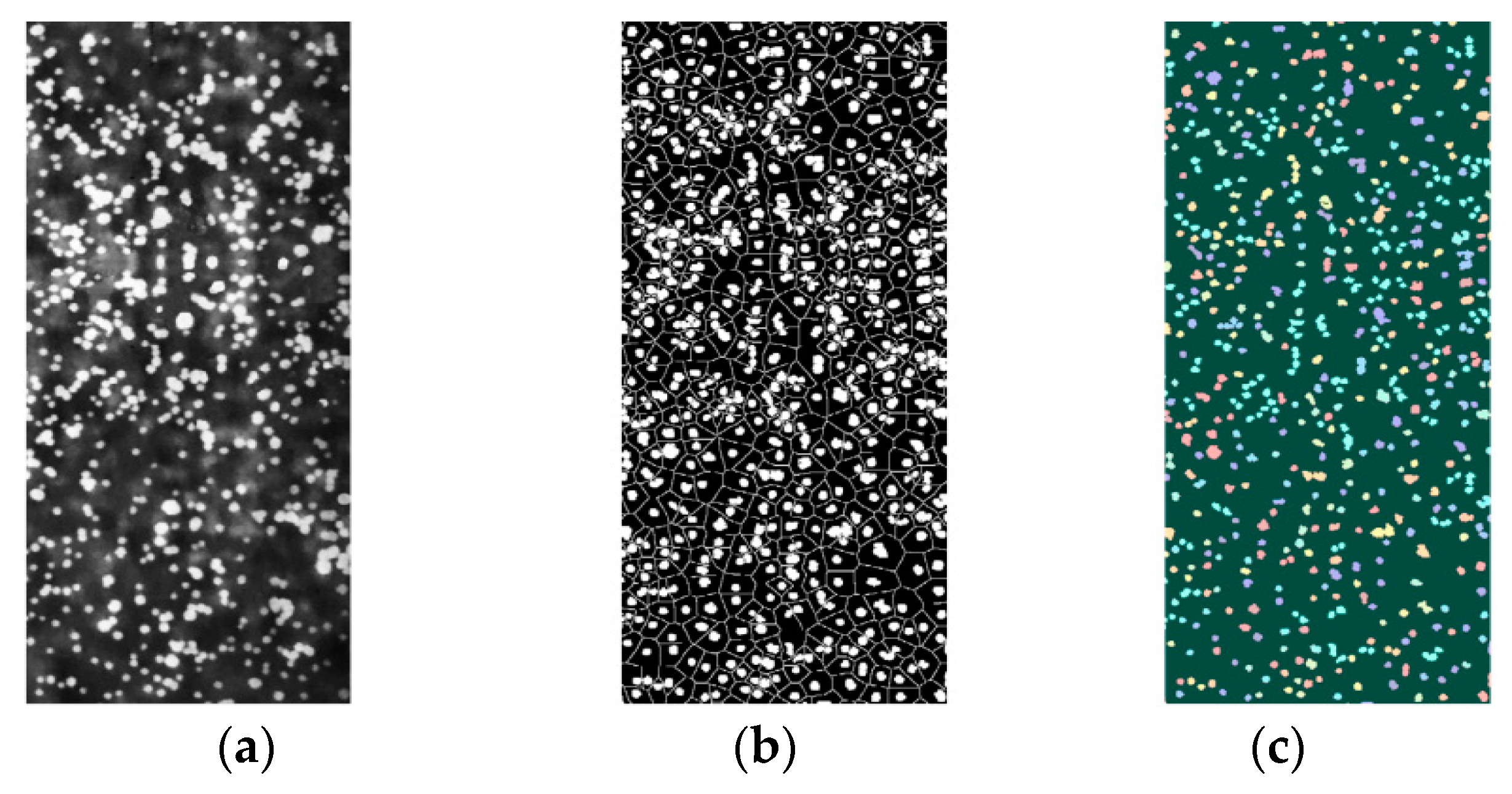

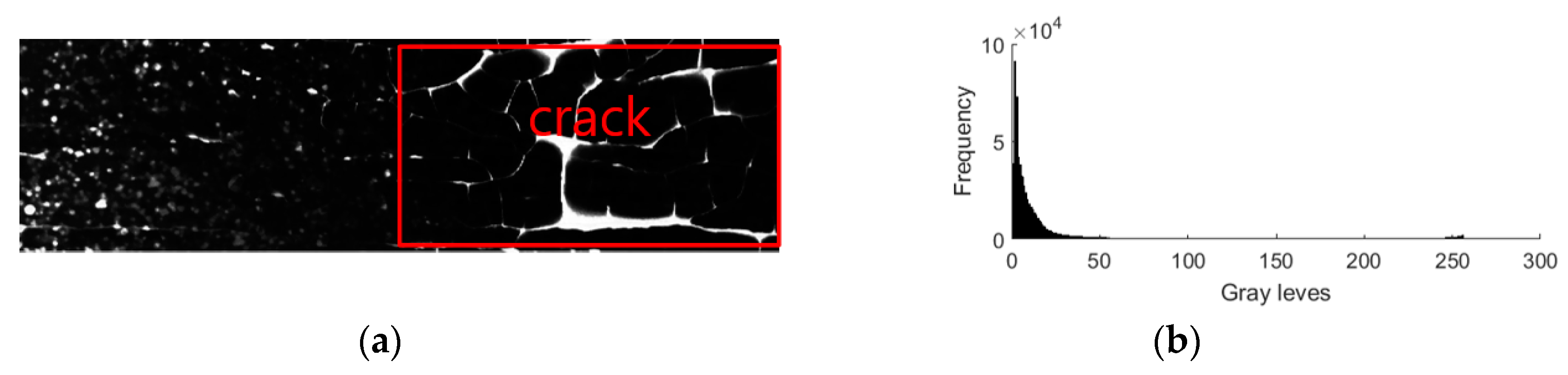

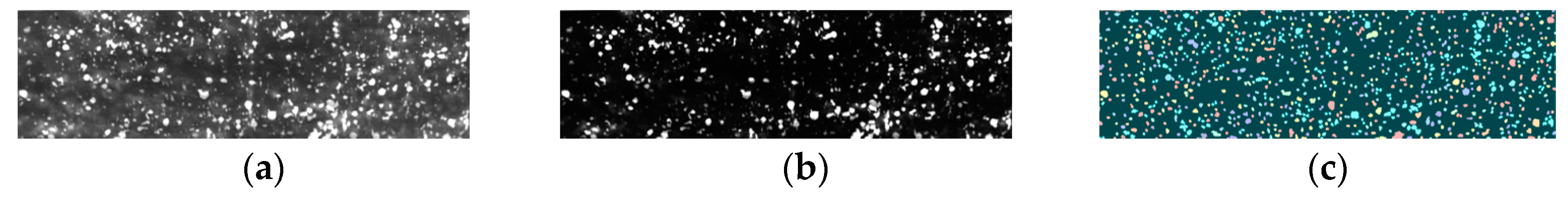

3.3. The Modified Morphology Algorithm of Droplet Measurement

3.3.1. Separation of Adhesive Droplets

3.3.2. Droplet Deposition Parameters

3.3.3. Modified Marker-Controlled Watershed Segmentation Algorithm

4. Experiments and Results

4.1. Verification of the Relationship between the Thickness of Achromatic Oil Paper and the Light Intensity

4.2. Illumination Adaptive Simulation Analysis Based on Reinforcement Learning

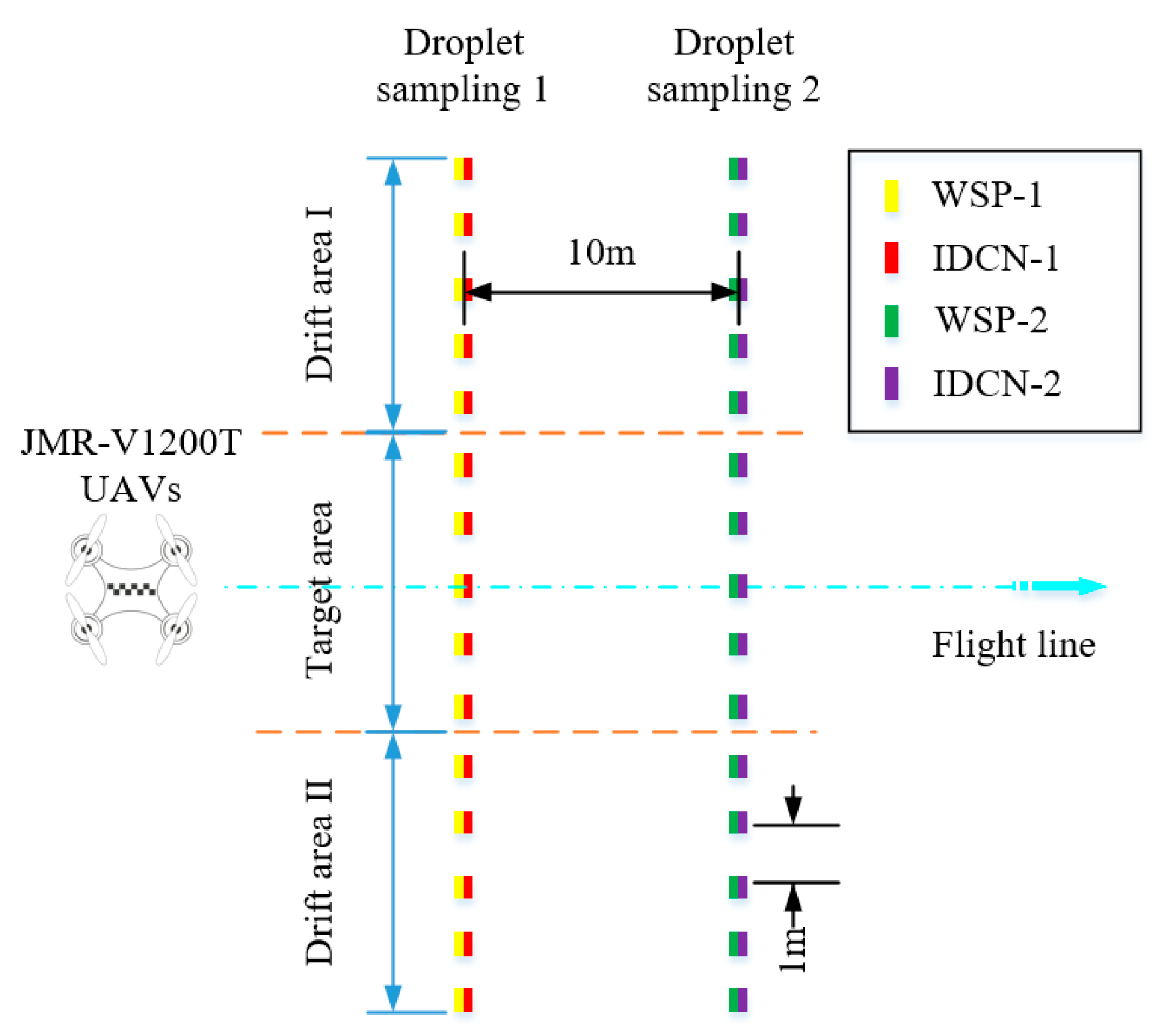

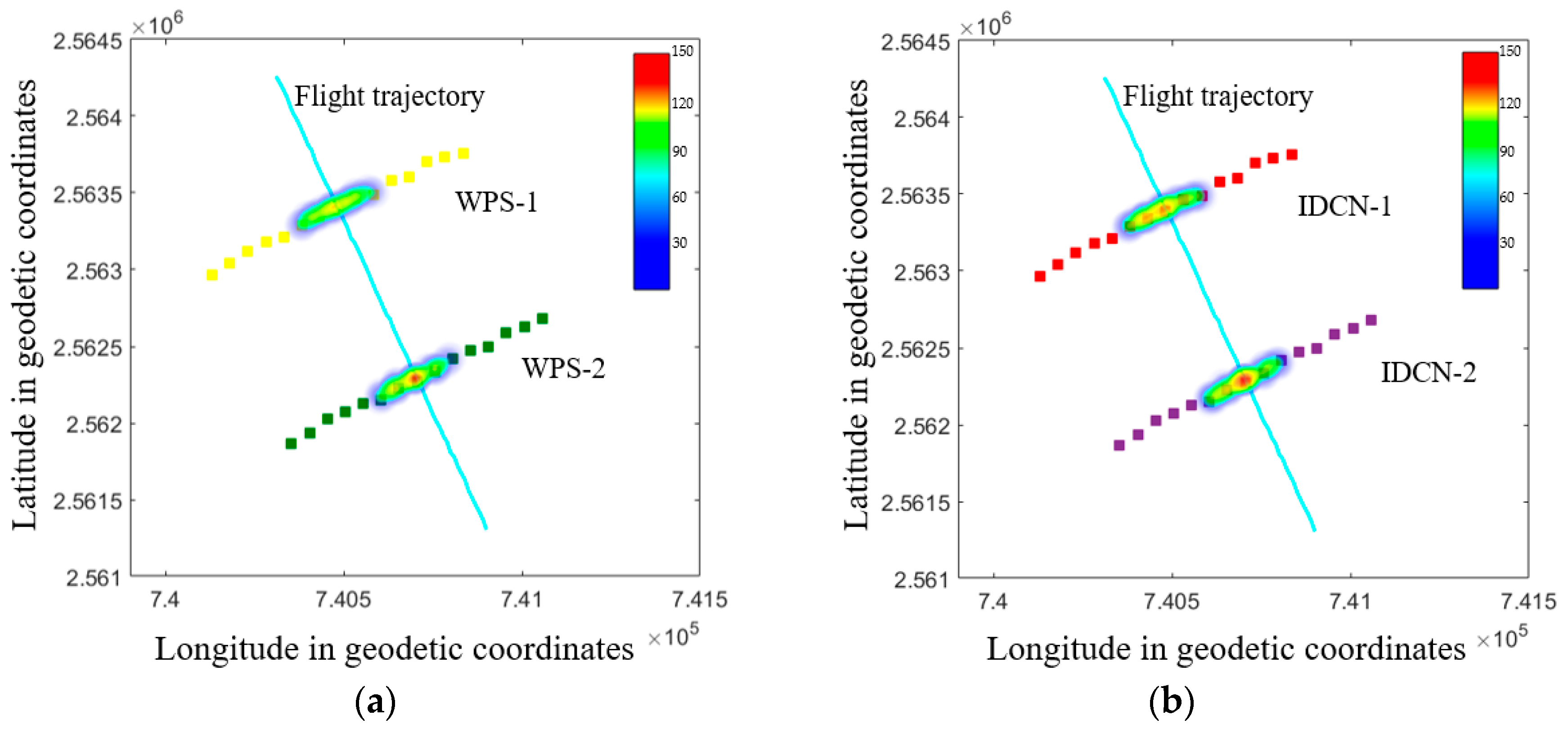

4.3. Comparative Test and Analysis of Distribution Characteristics for Droplet Deposition

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Step | Operation |
|---|---|
| Input | Droplets’ YCbCr image |
| Output | Marked droplet image, number of droplets, coverage C, coverage density |
| 1 | Read the image and convert it to a binary image with the Otsu method |
| 2 | Use the gradient magnitude as the segmentation function |
| 3 | Judge adhesive (sticky) droplets: if a region is judged adhesive, go to step 4; otherwise, go to step 5 |
| 4 | Mark foreground objects and count the number of droplets |
| 5 | Compute background markers and calculate the coverage |
| 6 | Compute the watershed transform of the segmentation function (modify the gradient magnitude image) |
| 7 | |
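A minimal, dependency-free sketch of the marker-controlled watershed pipeline in the algorithm table follows. Several choices are assumptions, not the paper's method. It takes the Y (luma) channel of the YCbCr image as a list of 8-bit rows. It assumes droplets are brighter than the background after Otsu binarization (`bright_droplets=False` flips this). It swaps in the Euclidean distance transform of the binary mask as the segmentation function instead of the gradient magnitude of step 2, a common choice for separating touching round blobs. Finally, it seeds markers where that distance reaches a hypothetical `marker_threshold`. The brute-force distance computation is only for clarity; `scipy.ndimage.distance_transform_edt` and `skimage.segmentation.watershed` do the same jobs efficiently.

```python
import heapq
import math

# 8-connected neighbourhood used for labelling and flooding.
N8 = [(-1, -1), (-1, 0), (-1, 1), (0, -1), (0, 1), (1, -1), (1, 0), (1, 1)]


def otsu_threshold(gray):
    """Otsu's method on an 8-bit image given as a list of rows of ints 0..255."""
    hist = [0] * 256
    for row in gray:
        for v in row:
            hist[v] += 1
    total, sum_all = sum(hist), sum(i * h for i, h in enumerate(hist))
    w0 = sum0 = 0
    best_t, best_var = 0, -1.0
    for t in range(256):
        w0 += hist[t]
        sum0 += t * hist[t]
        w1 = total - w0
        if w0 == 0 or w1 == 0:
            continue
        m0, m1 = sum0 / w0, (sum_all - sum0) / w1
        var_between = w0 * w1 * (m0 - m1) ** 2   # between-class variance (unnormalised)
        if var_between > best_var:
            best_t, best_var = t, var_between
    return best_t


def distance_to_background(mask):
    """Brute-force Euclidean distance from each foreground pixel to the nearest
    background pixel; pixels outside the image count as background."""
    h, w = len(mask), len(mask[0])
    bg = [(r, c) for r in range(h) for c in range(w) if not mask[r][c]]
    dist = [[0.0] * w for _ in range(h)]
    for r in range(h):
        for c in range(w):
            if mask[r][c]:
                d2 = min(r + 1, c + 1, h - r, w - c) ** 2
                for br, bc in bg:
                    d2 = min(d2, (r - br) ** 2 + (c - bc) ** 2)
                dist[r][c] = math.sqrt(d2)
    return dist


def label_components(mask, start=1):
    """8-connected component labelling; returns (labels, number of components)."""
    h, w = len(mask), len(mask[0])
    labels, n = [[0] * w for _ in range(h)], 0
    for r in range(h):
        for c in range(w):
            if mask[r][c] and not labels[r][c]:
                labels[r][c] = start + n
                stack = [(r, c)]
                while stack:
                    y, x = stack.pop()
                    for dy, dx in N8:
                        ny, nx = y + dy, x + dx
                        if 0 <= ny < h and 0 <= nx < w and mask[ny][nx] and not labels[ny][nx]:
                            labels[ny][nx] = start + n
                            stack.append((ny, nx))
                n += 1
    return labels, n


def segment_droplets(gray, marker_threshold=5.0, bright_droplets=True):
    """Return (label image, droplet count, coverage) for one grayscale image."""
    h, w = len(gray), len(gray[0])
    t = otsu_threshold(gray)                                    # step 1
    mask = [[(v > t) if bright_droplets else (v <= t) for v in row] for row in gray]
    dist = distance_to_background(mask)                         # segmentation function
    seeds = [[d >= marker_threshold for d in row] for row in dist]
    labels, count = label_components(seeds)                     # foreground markers
    # Flood from the markers, highest distance first, staying inside the mask.
    heap = [(-dist[r][c], r, c) for r in range(h) for c in range(w) if labels[r][c]]
    heapq.heapify(heap)
    while heap:
        _, r, c = heapq.heappop(heap)
        for dy, dx in N8:
            ny, nx = r + dy, c + dx
            if 0 <= ny < h and 0 <= nx < w and mask[ny][nx] and not labels[ny][nx]:
                labels[ny][nx] = labels[r][c]
                heapq.heappush(heap, (-dist[ny][nx], ny, nx))
    # Droplets too small to produce a seed still count once each.
    leftover = [[mask[r][c] and not labels[r][c] for c in range(w)] for r in range(h)]
    extra, n_extra = label_components(leftover, start=count + 1)
    for r in range(h):
        for c in range(w):
            if extra[r][c]:
                labels[r][c] = extra[r][c]
    coverage = sum(map(sum, mask)) / (h * w)                    # step 5
    return labels, count + n_extra, coverage
```

Droplets thinner than `marker_threshold` produce no seed; the sketch counts each such leftover region once, which is one simple way to avoid losing small isolated droplets. Coverage density would additionally need the physical sampling area, since it is a droplet count per cm².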

| Product Parameter | Value |
|---|---|
| Size (cm × cm × cm) | 110 × 125 × 68 |
| Load (kg) | 10 |
| Flight speed (m·s⁻¹) | 3–6 |
| Spraying width (m) | 3–8 |
| Flight height (m) | 1.2–6 |
| Flight time (min) | 10–14 |

| Test | Approach | Flight Speed (m·s⁻¹) | Flight Height (m) | Average Coverage Density, Sampling Belt 1 (n·cm⁻²) | Average Coverage Density, Sampling Belt 2 (n·cm⁻²) |
|---|---|---|---|---|---|
| 1 | WPS | 3.11 | 1.41 | 73 | 99 |
| 1 | IDCN | 3.11 | 1.41 | 79 | 93 |
| 2 | WPS | 3.09 | 5.05 | 137 | 65 |
| 2 | IDCN | 3.09 | 5.05 | 128 | 68 |
| 3 | WPS | 5.89 | 1.46 | 59 | 89 |
| 3 | IDCN | 5.89 | 1.46 | 63 | 85 |
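To compare the two approaches in the coverage-density table at a glance, the signed relative difference of each IDCN reading against the matching WPS reading can be computed. This metric is an illustration chosen here, not one reported in this excerpt; the numbers are copied directly from the table.

```python
# Average coverage density (n·cm⁻²) from the comparison table:
# (test, sampling belt) -> (WPS, IDCN)
RESULTS = {
    (1, 1): (73, 79), (1, 2): (99, 93),
    (2, 1): (137, 128), (2, 2): (65, 68),
    (3, 1): (59, 63), (3, 2): (89, 85),
}


def relative_difference(wps: float, idcn: float) -> float:
    """Signed difference of the IDCN reading relative to the WPS reading."""
    return (idcn - wps) / wps


if __name__ == "__main__":
    for (test, belt), (wps, idcn) in sorted(RESULTS.items()):
        print(f"test {test}, belt {belt}: {relative_difference(wps, idcn):+.1%}")
```

With these six pairs the largest absolute deviation is in test 1, sampling belt 1 (about 8.2%), and the sign alternates between belts, so neither method is systematically higher in this sample.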

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Wang, L.; Yue, X.; Liu, Y.; Wang, J.; Wang, H. An Intelligent Vision Based Sensing Approach for Spraying Droplets Deposition Detection. Sensors 2019, 19, 933. https://doi.org/10.3390/s19040933
