Virtual Touch Sensor Using a Depth Camera

Abstract

1. Introduction

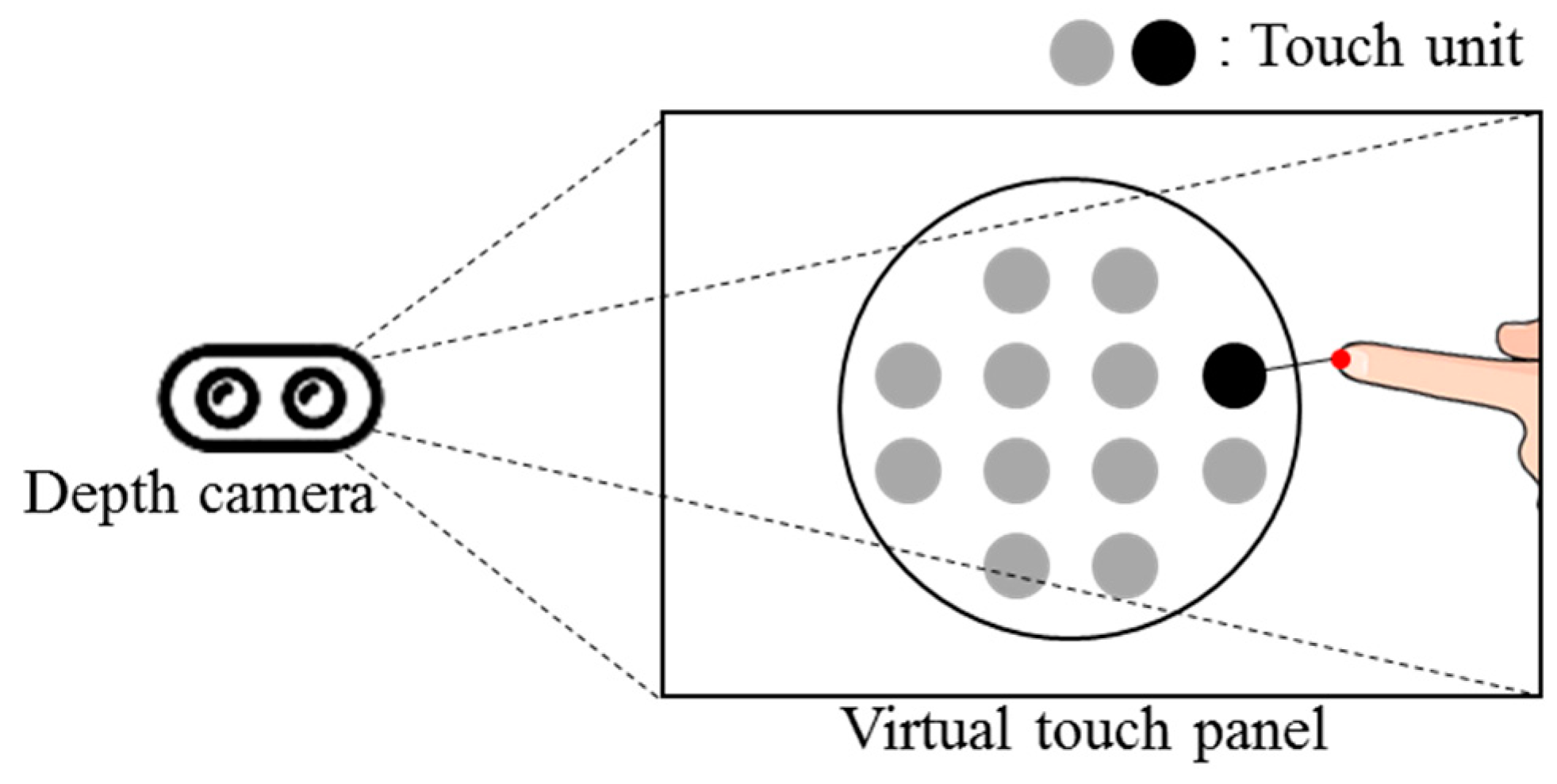

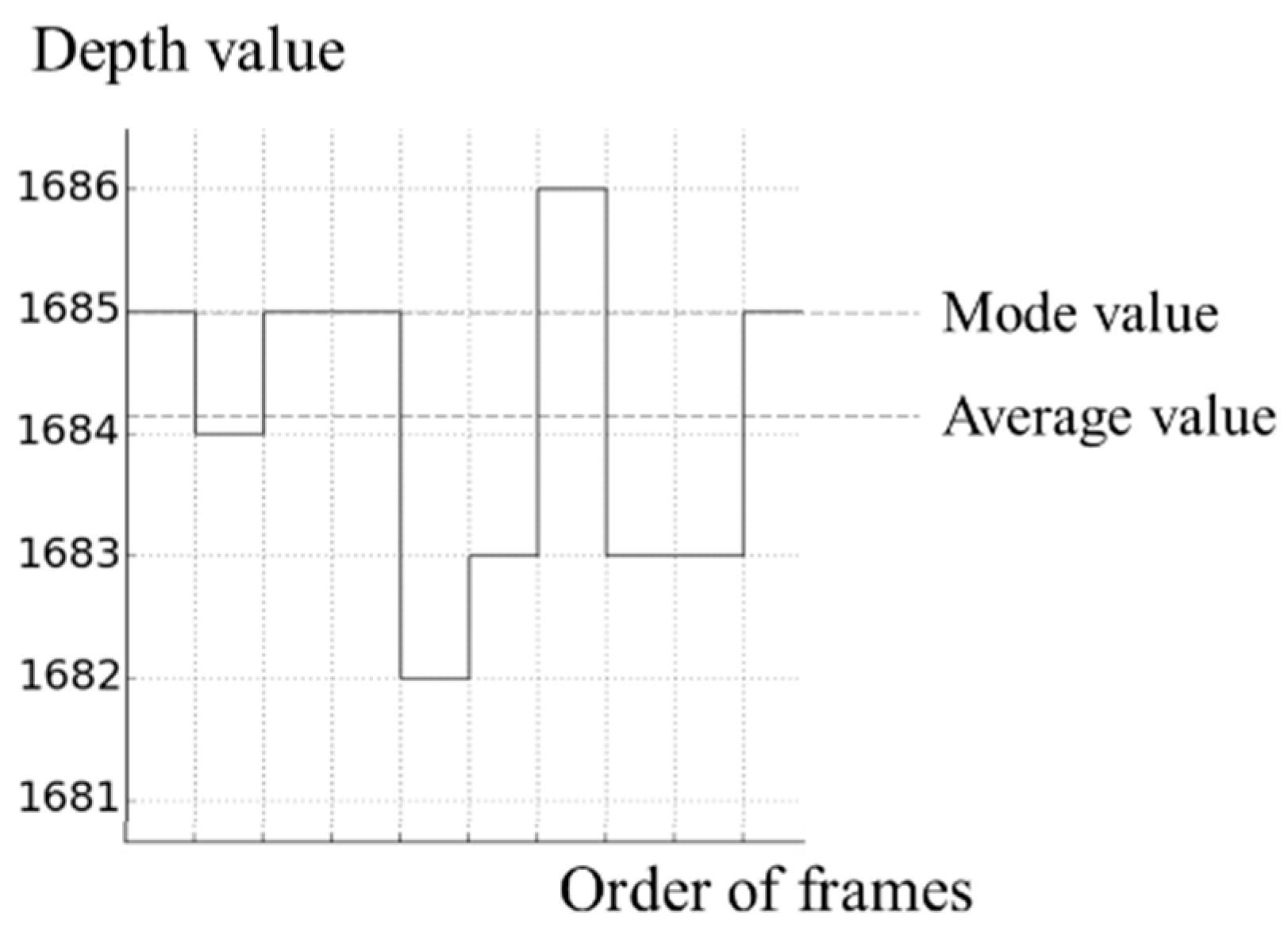

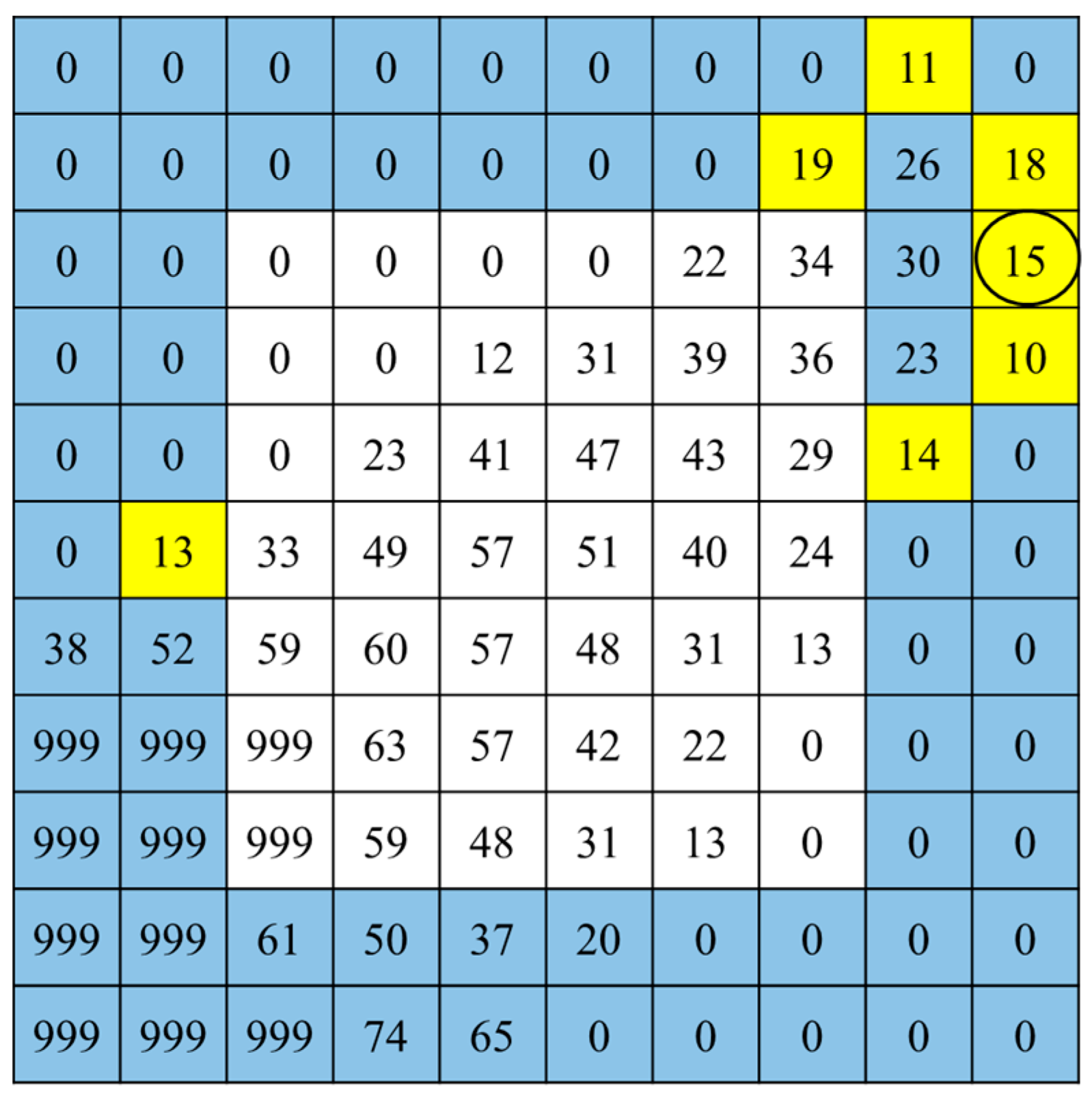

2. Characteristics of Noise in a Depth Picture

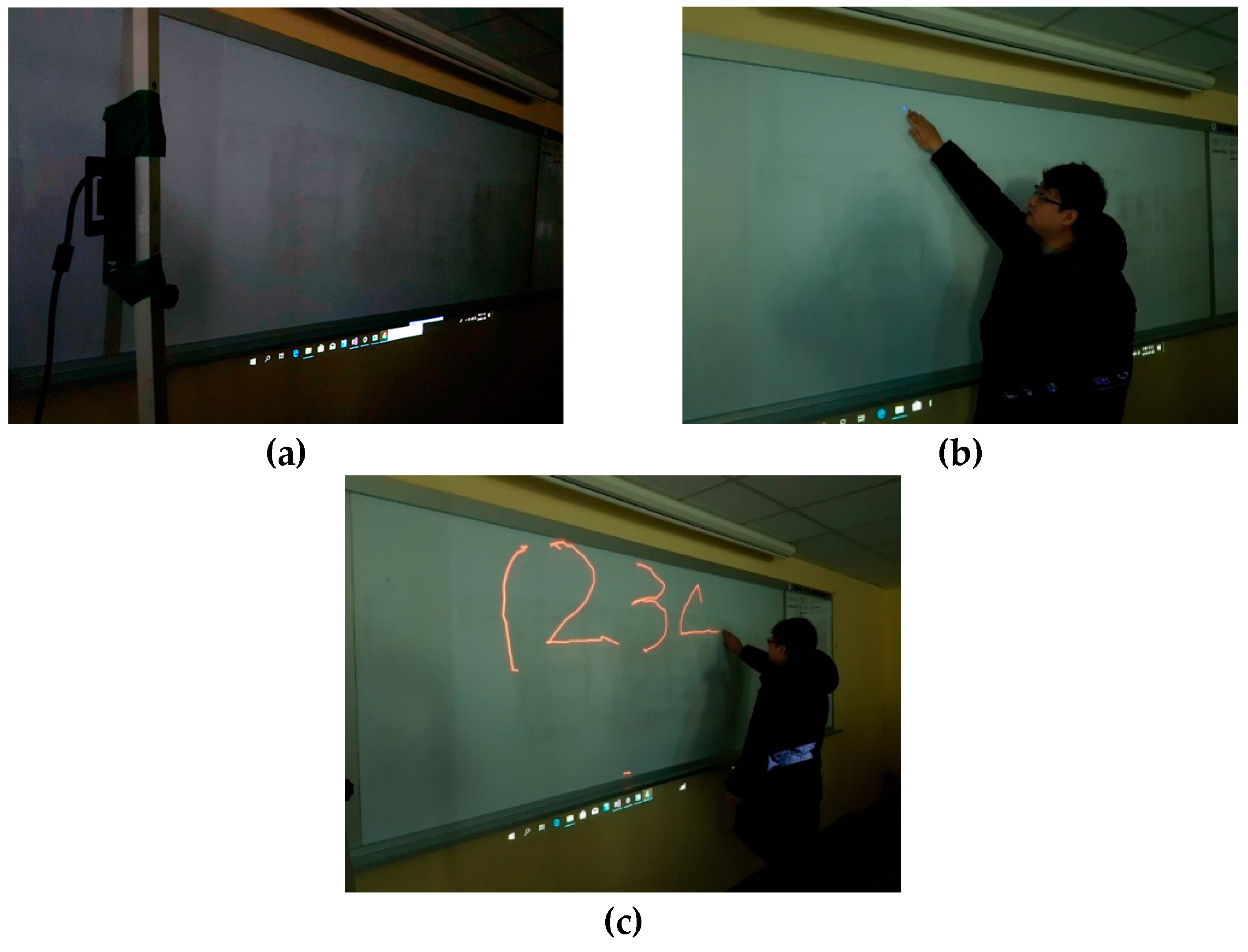

3. Virtual Touch Sensor Using Depth Camera

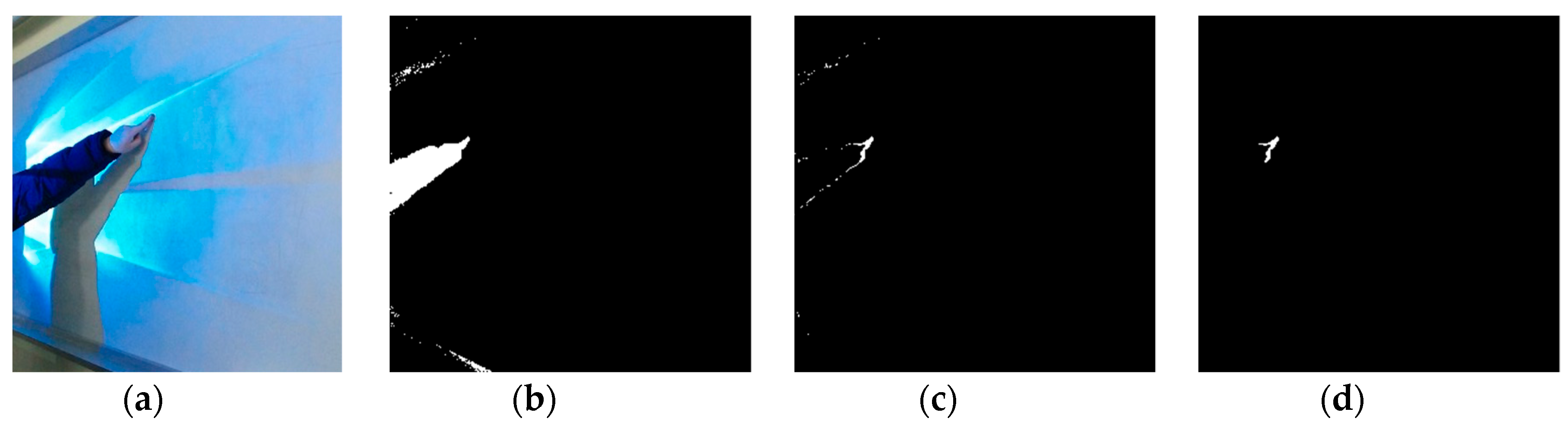

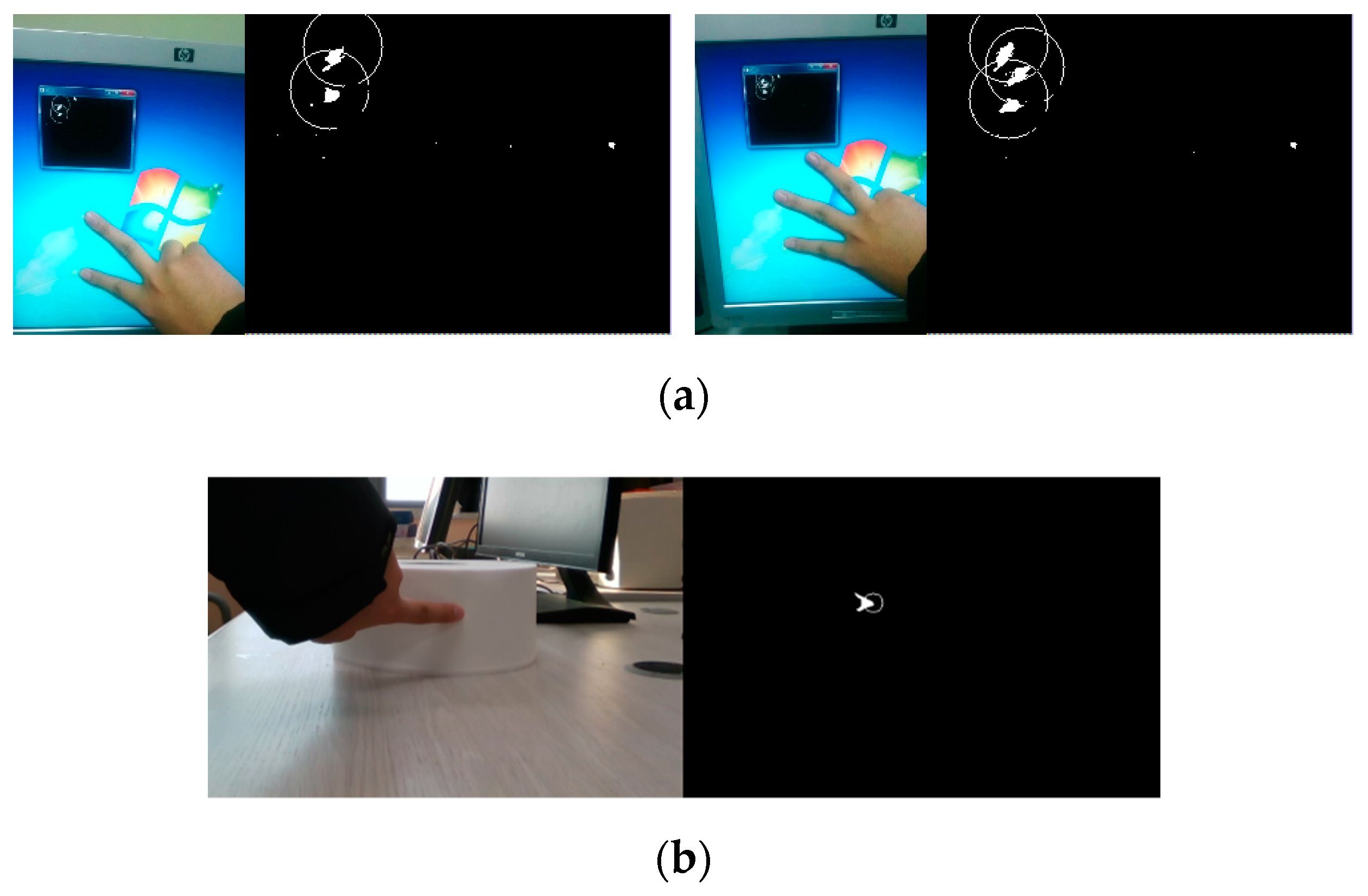

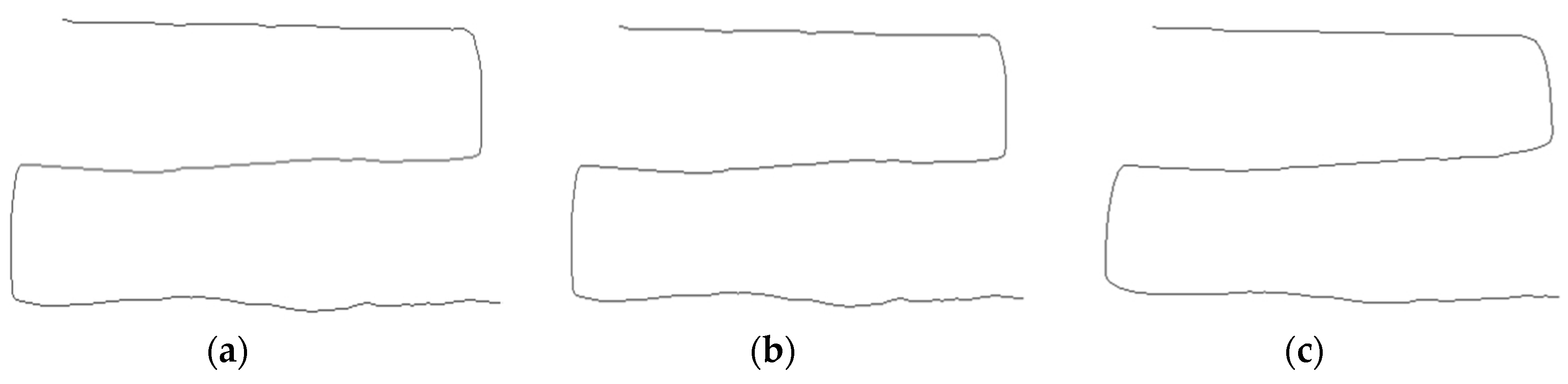

3.1. Touch Region Detection

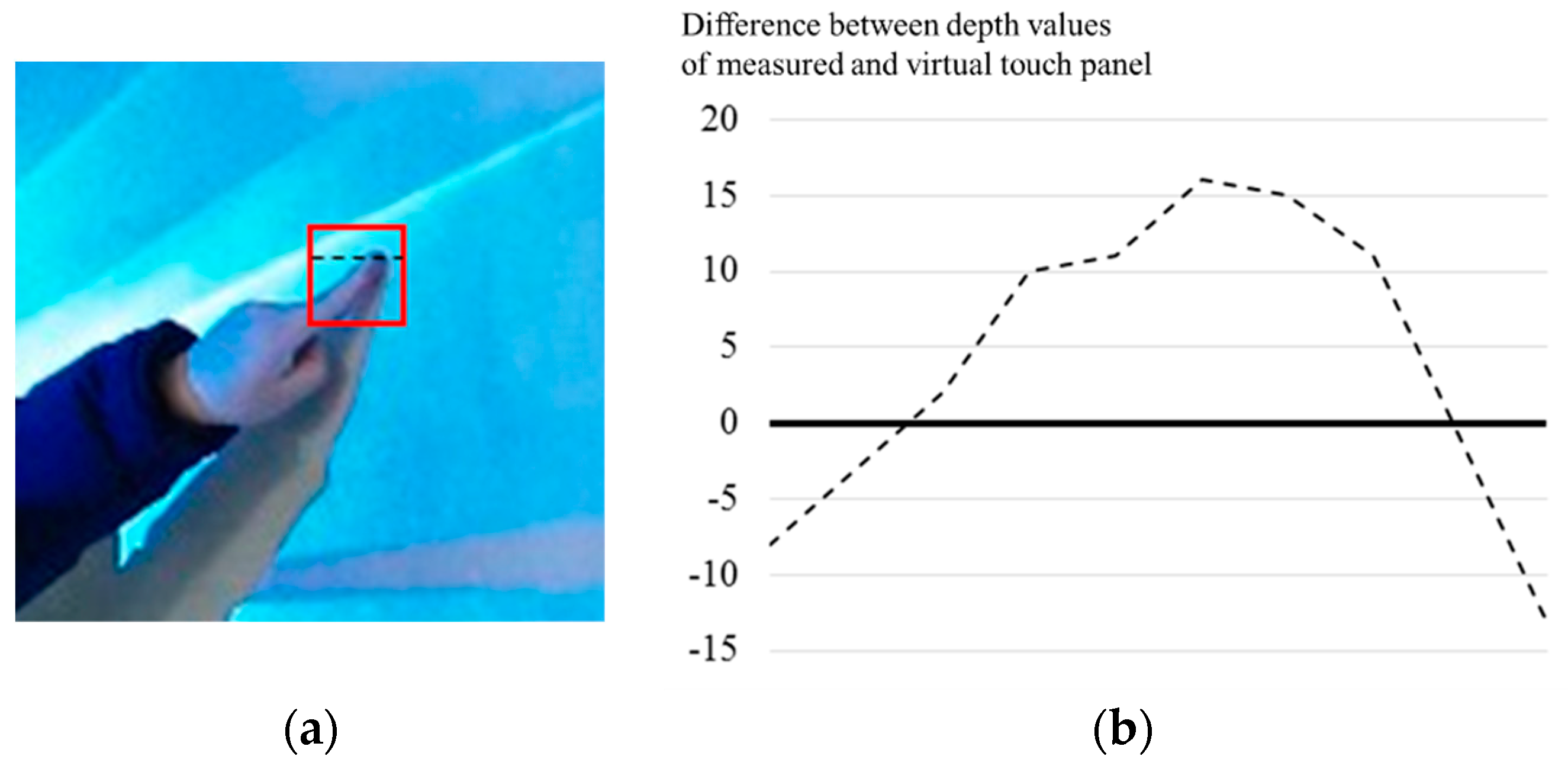

3.2. Touch Point Detection

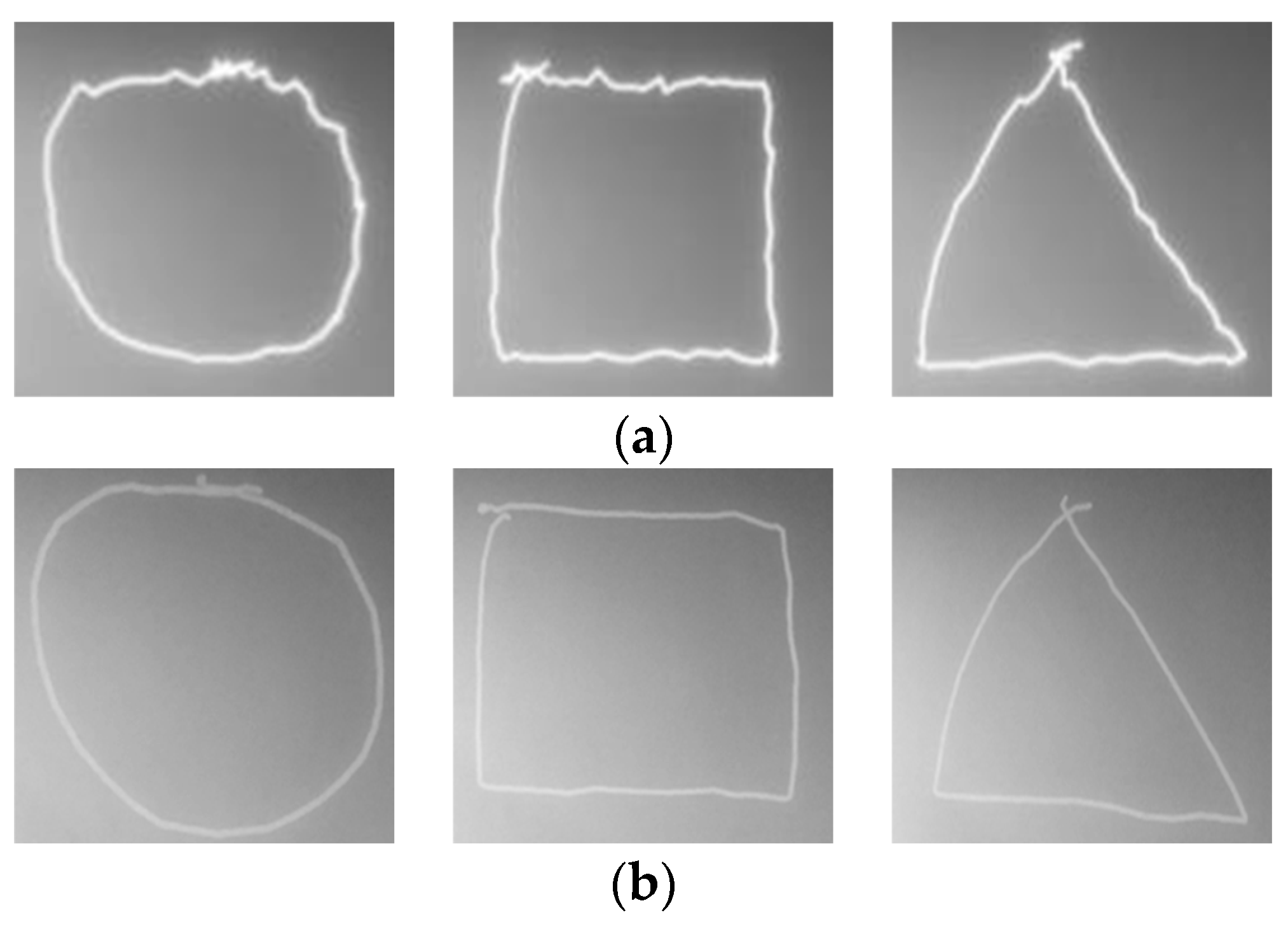

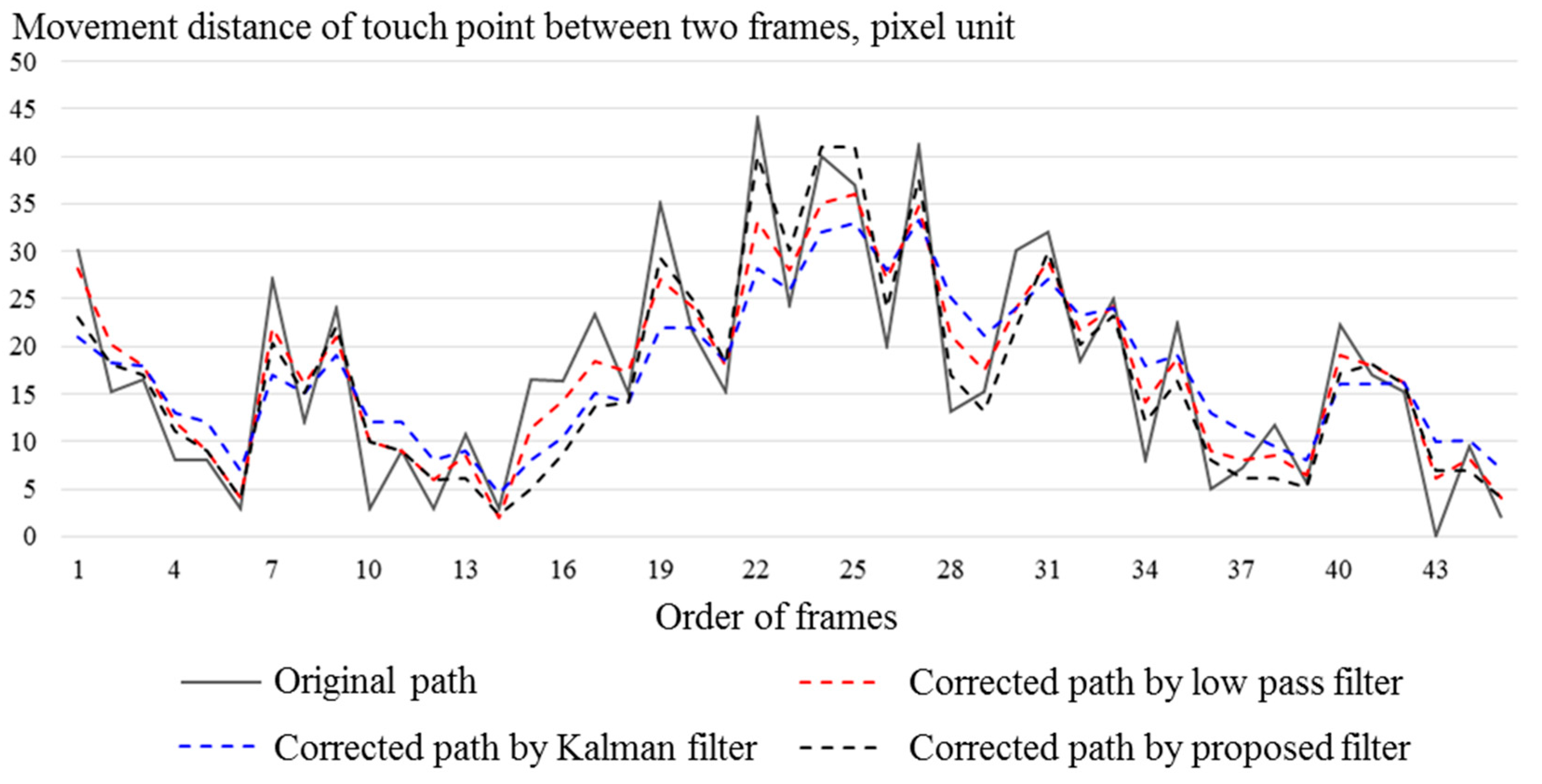

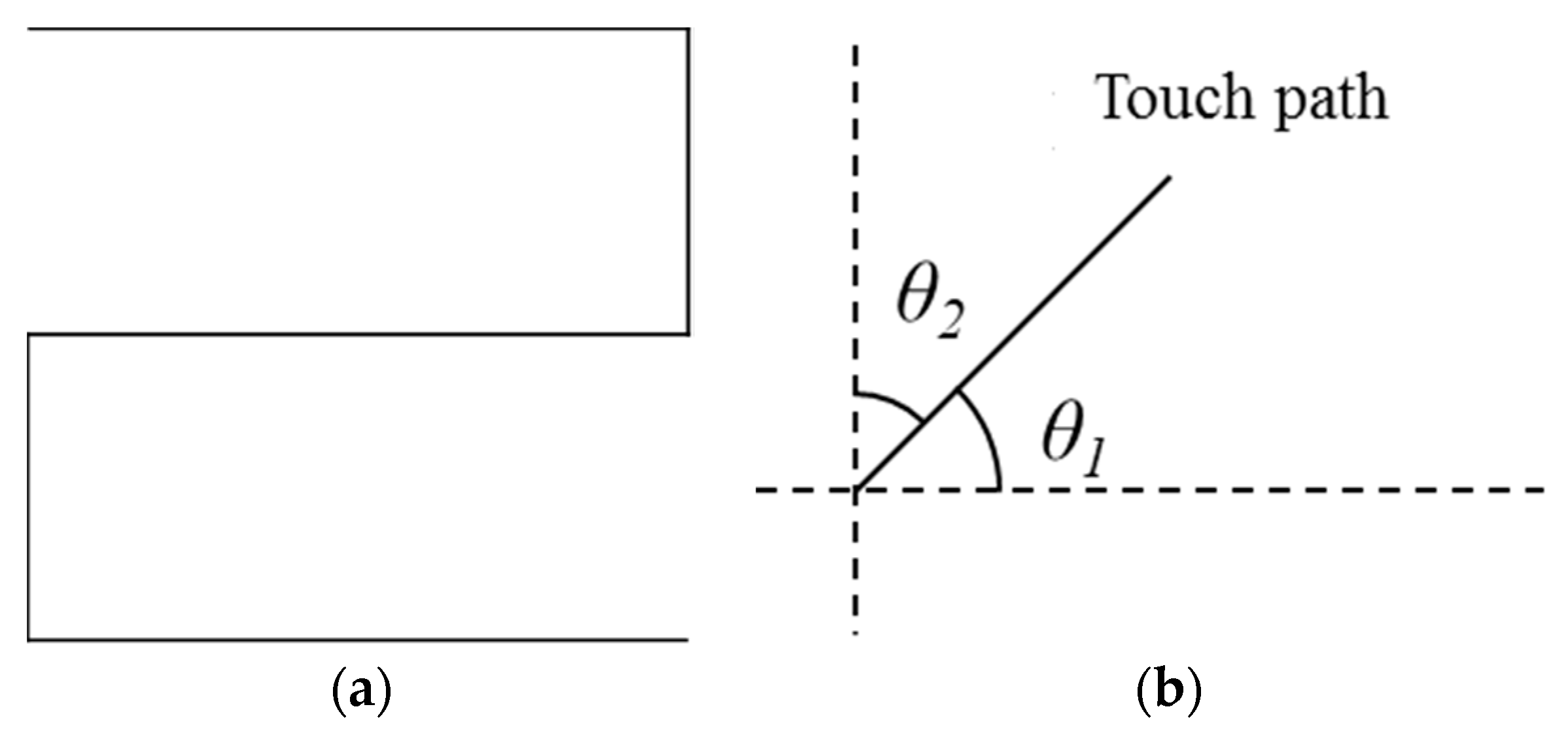

3.3. Touch Path Correction

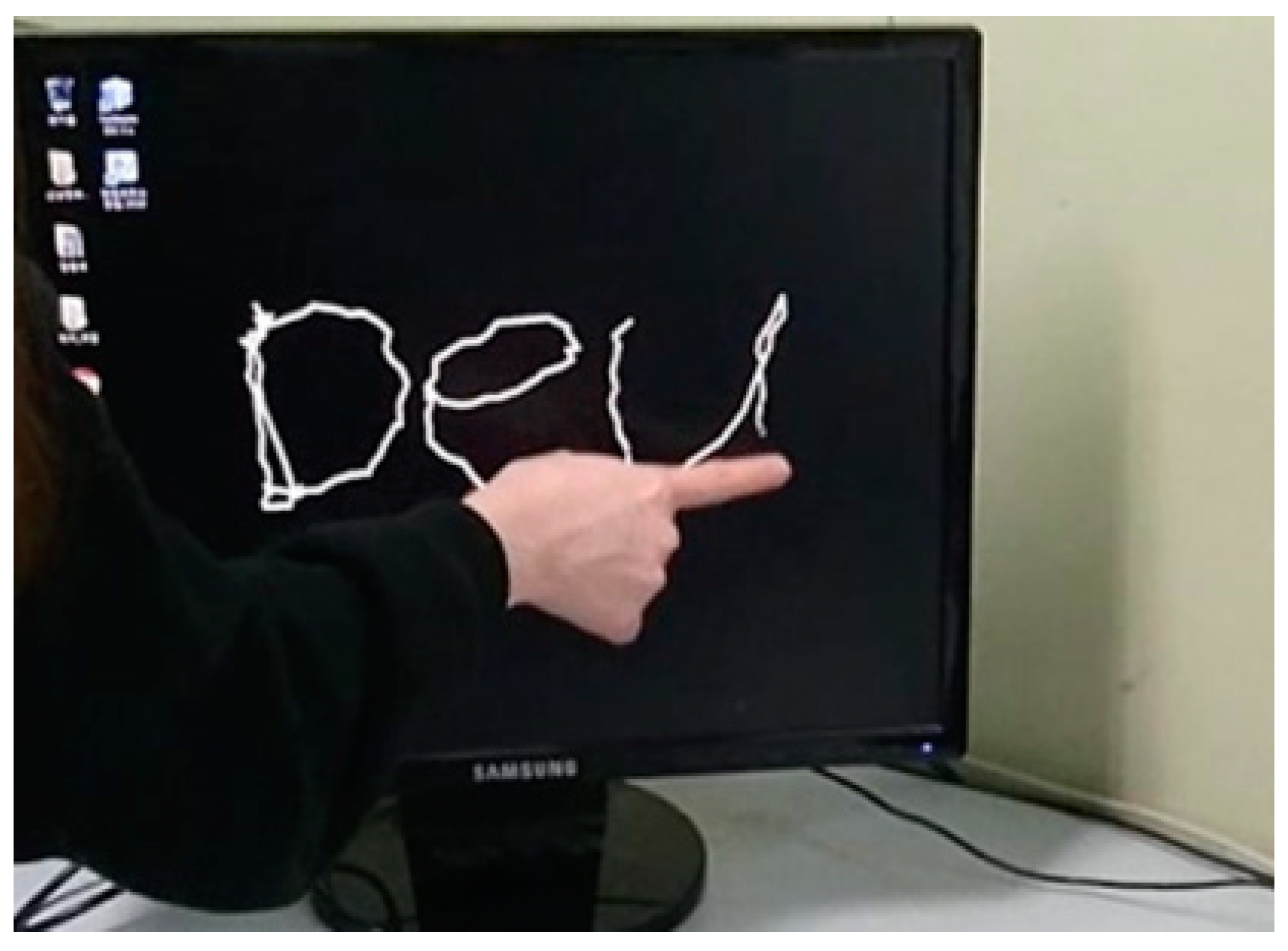

3.4. Implementation of Touch-Pen Interface

3.5. Limitations

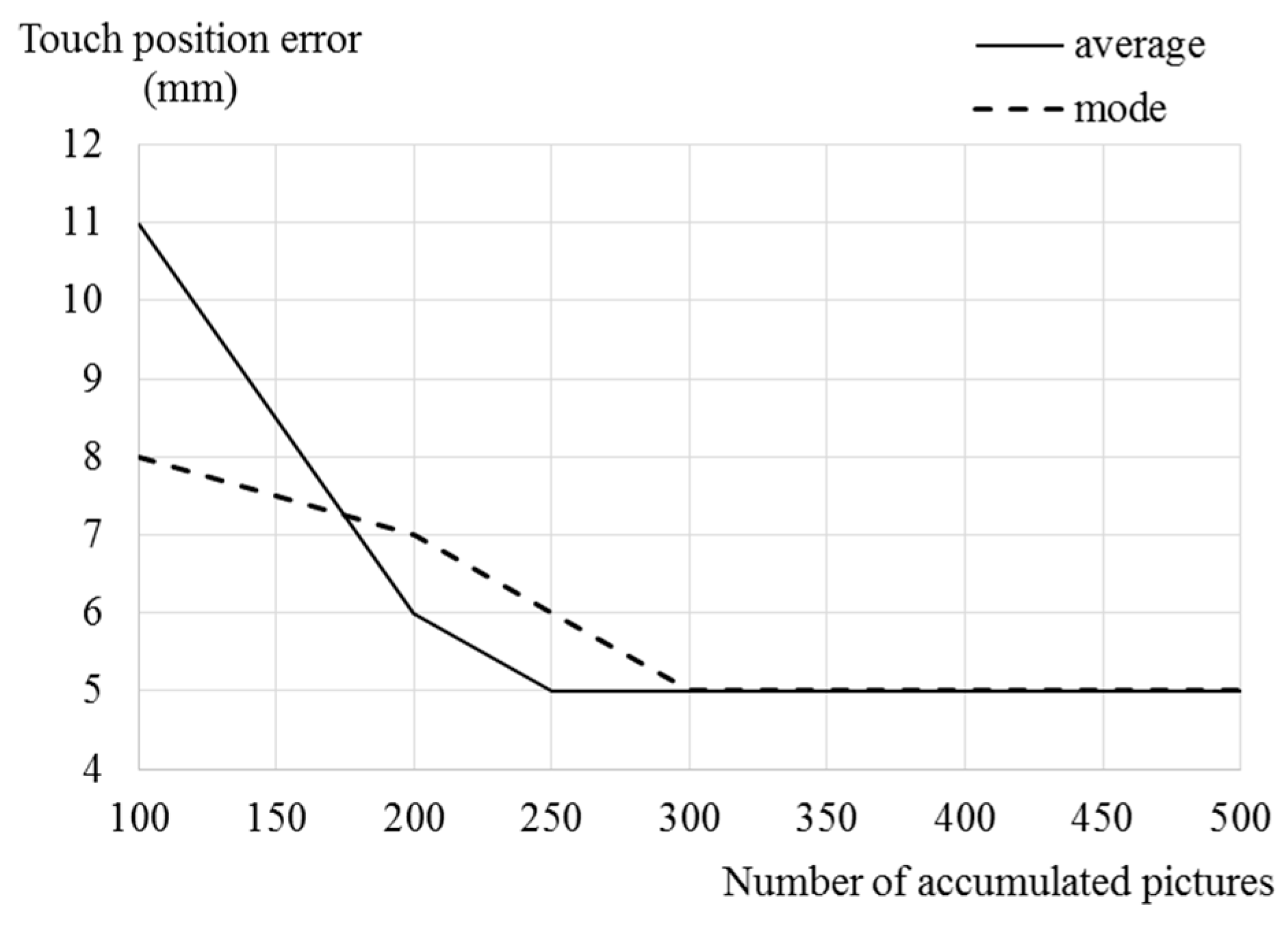

4. Simulation Results

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

| Tl | Success Rate of Touch Region Detection (%) | Position Error of Touch Point (mm) |
|---|---|---|
| 1 | 0 | - |
| 2 | 75 | 8 |
| 3 | 100 | 5 |
| 4 | 100 | 6 |
| 5 | 100 | 7 |

| Tt | Position Error of Touch Point (mm) |
|---|---|
| 1 | 3 |
| 2 | 5 |
| 3 | 7 |
| 4 | 9 |
| 5 | 11 |

| Angle (°) | Distance between Camera and Screen: 1.5 m | 2 m | 2.5 m | 3 m | 3.5 m |
|---|---|---|---|---|---|
| 10 | 16 | 17 | 18 | 18 | 19 |
| 15 | 12 | 13 | 13 | 14 | 14 |
| 20 | 8 | 8 | 10 | 12 | 12 |
| 25 | 5 | 6 | 9 | 10 | 10 |
| 30 | 5 | 6 | 6 | 7 | 7 |
| 35 | 6 | 6 | 6 | 7 | 7 |
| 40 | 7 | 7 | 8 | 8 | 9 |
| 45 | 8 | 9 | 9 | 10 | 13 |
| 50 | 9 | 11 | 13 | 15 | 17 |
| 55 | 10 | 12 | 13 | 16 | 18 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Lee, D.-s.; Kwon, S.-k. Virtual Touch Sensor Using a Depth Camera. Sensors 2019, 19, 885. https://doi.org/10.3390/s19040885