Single-Equipment with Multiple-Application for an Automated Robot-Car Control System

Abstract

1. Introduction

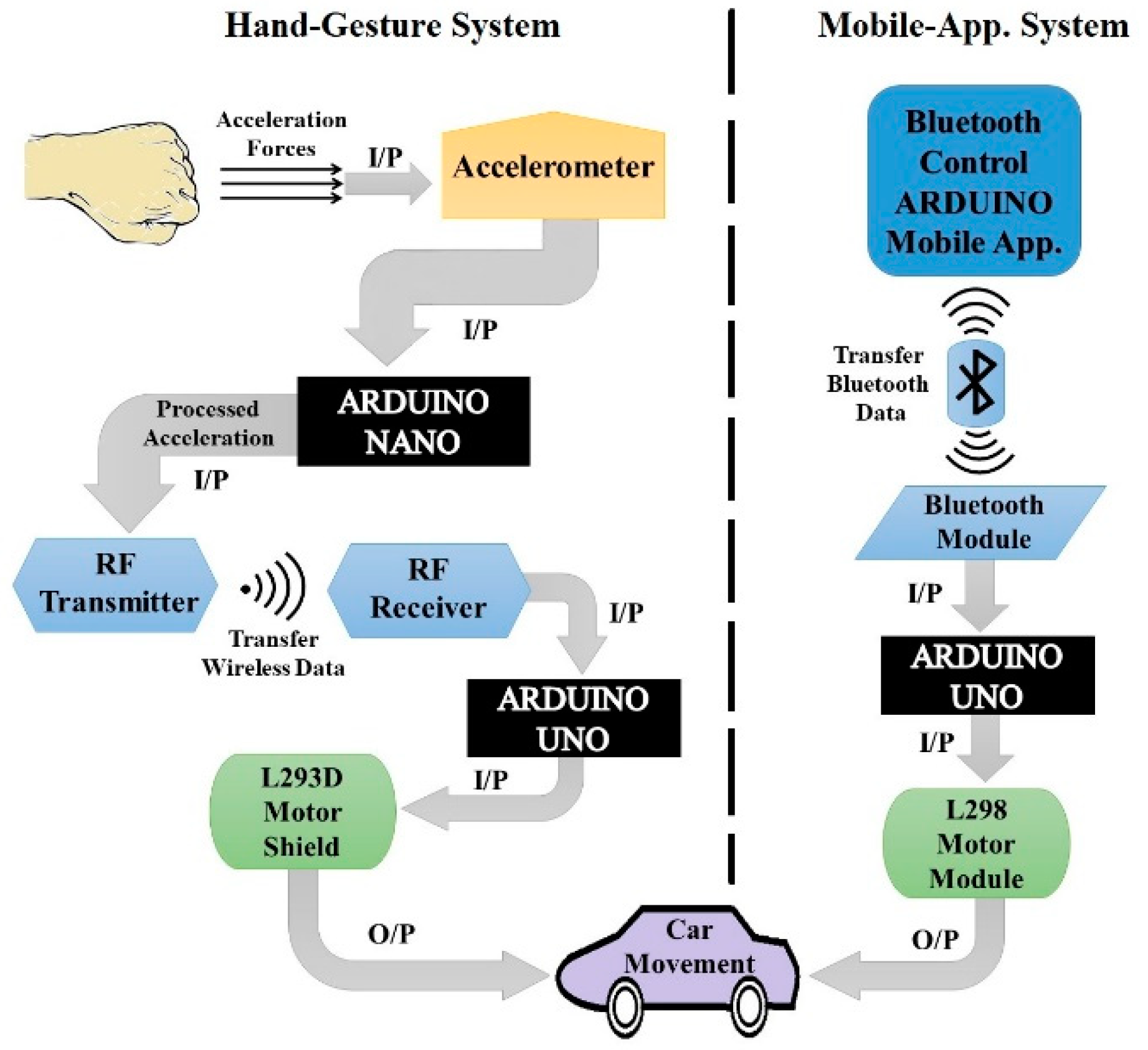

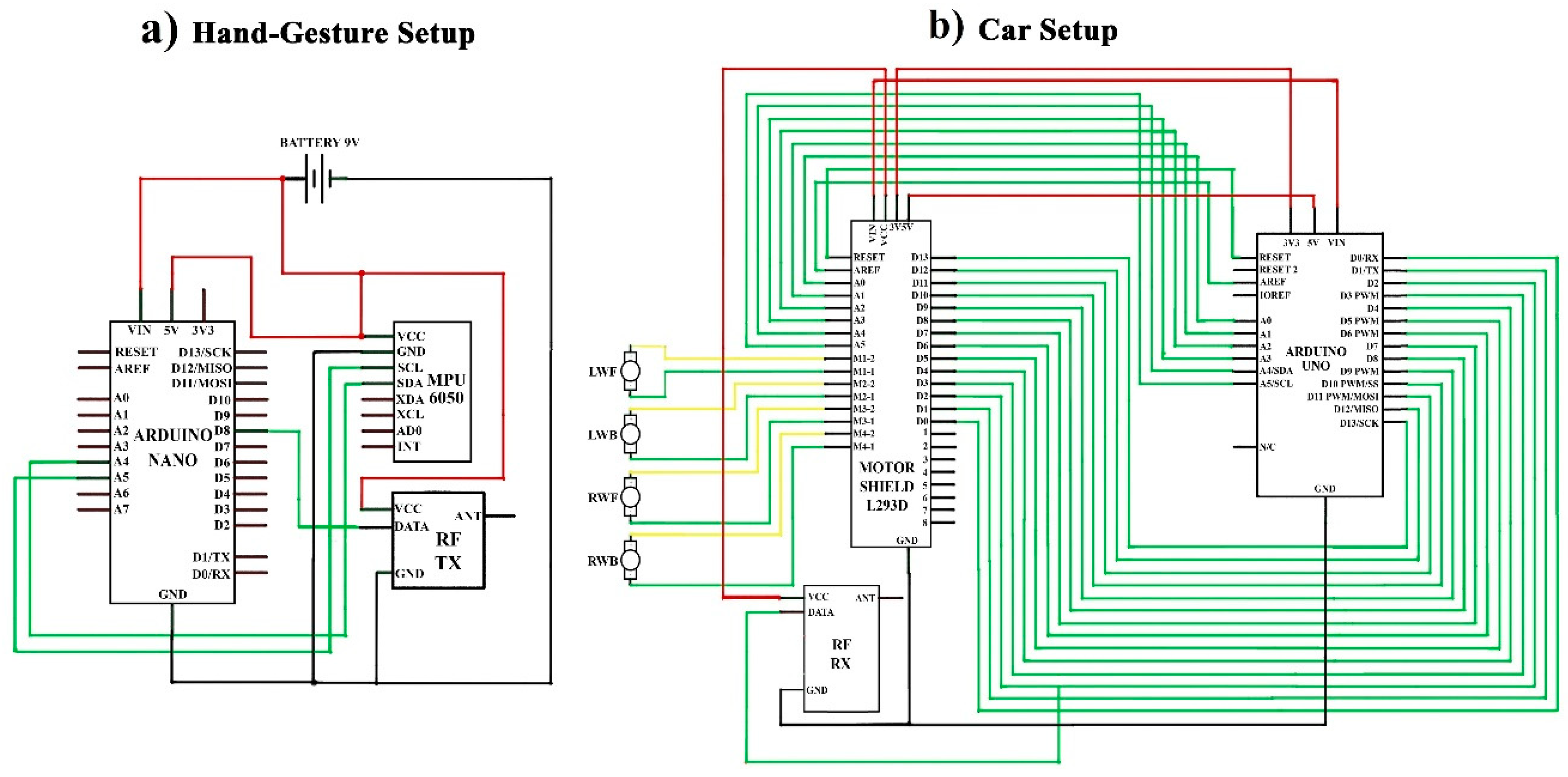

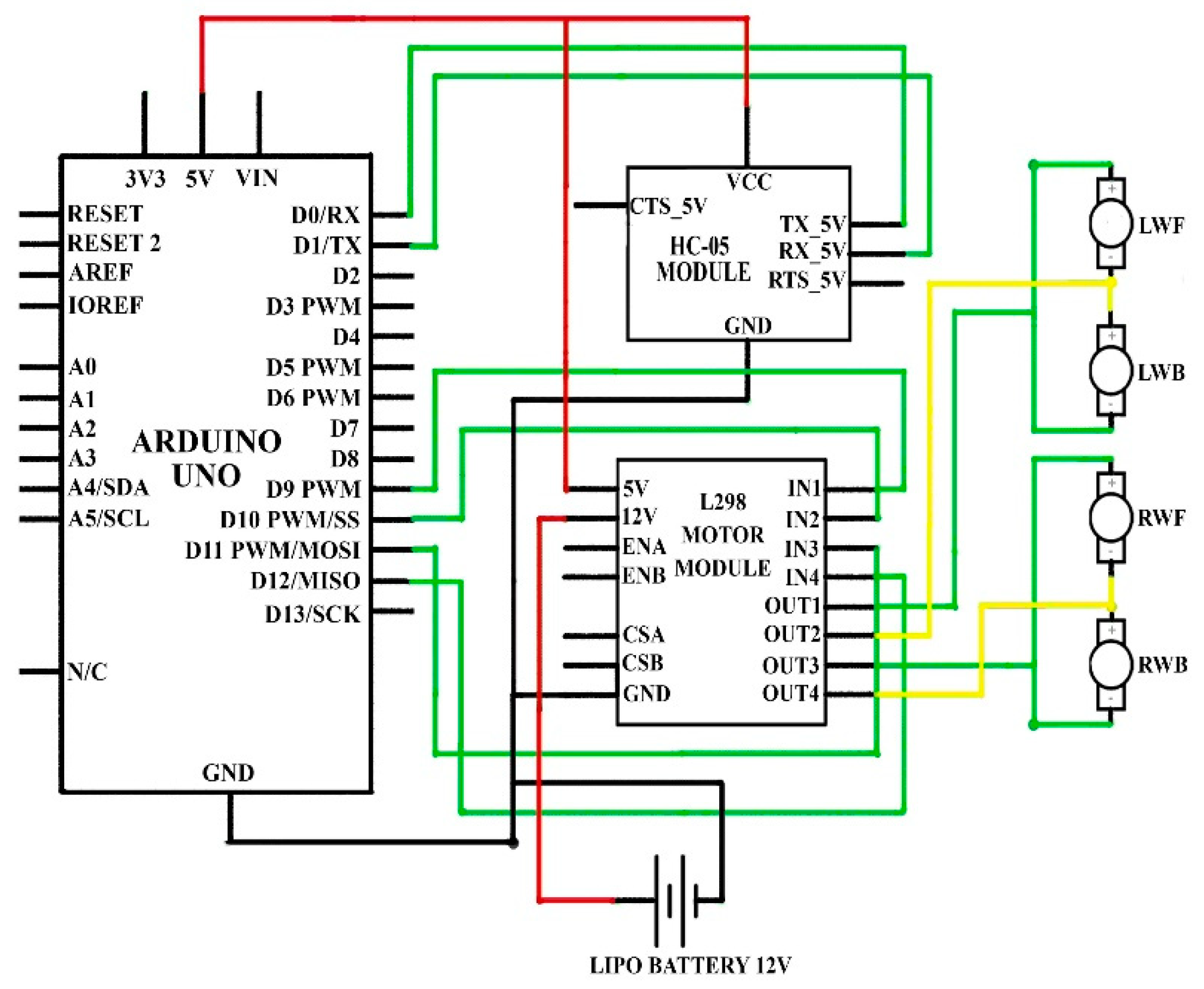

2. Materials and Methods

2.1. Electronic Components

2.1.1. Arduino UNO

2.1.2. Arduino Nano

2.1.3. MPU-6050 Accelerometer

2.1.4. RF Transmitter and Receiver

2.1.5. L293D

2.1.6. Bluetooth Module HC-05

2.1.7. Android Mobile Application

2.1.8. L298N Motor Module

3. Designing Methodology

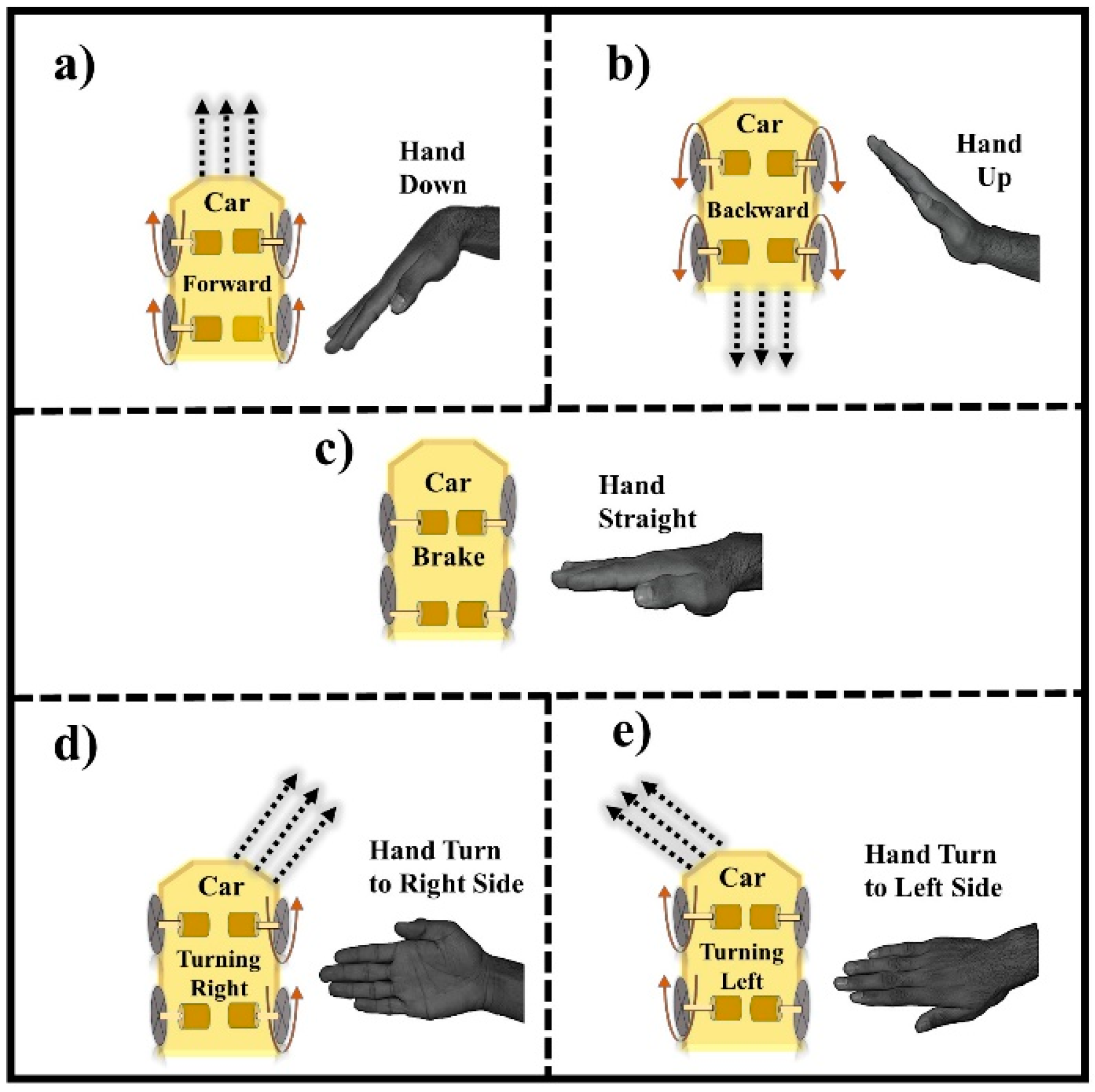

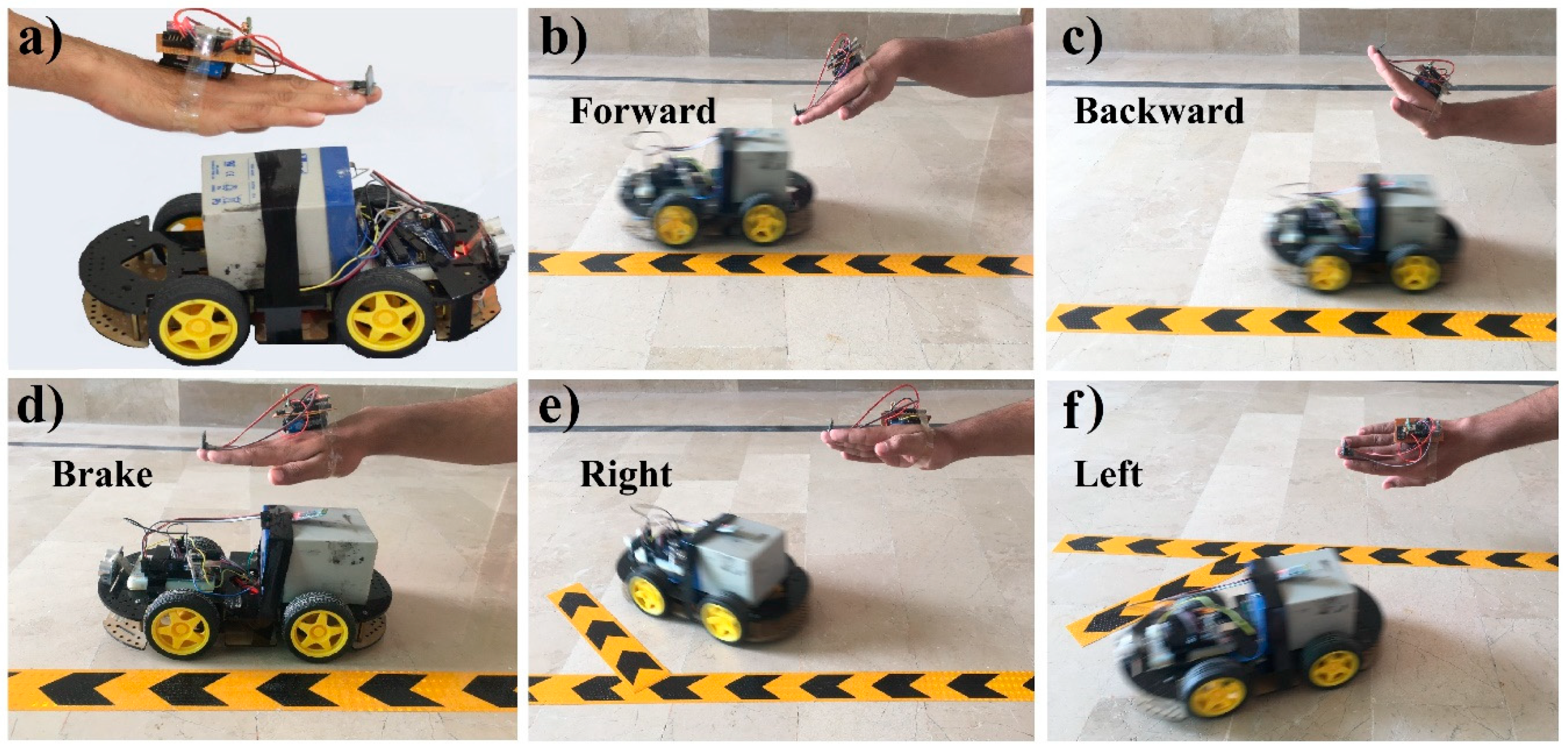

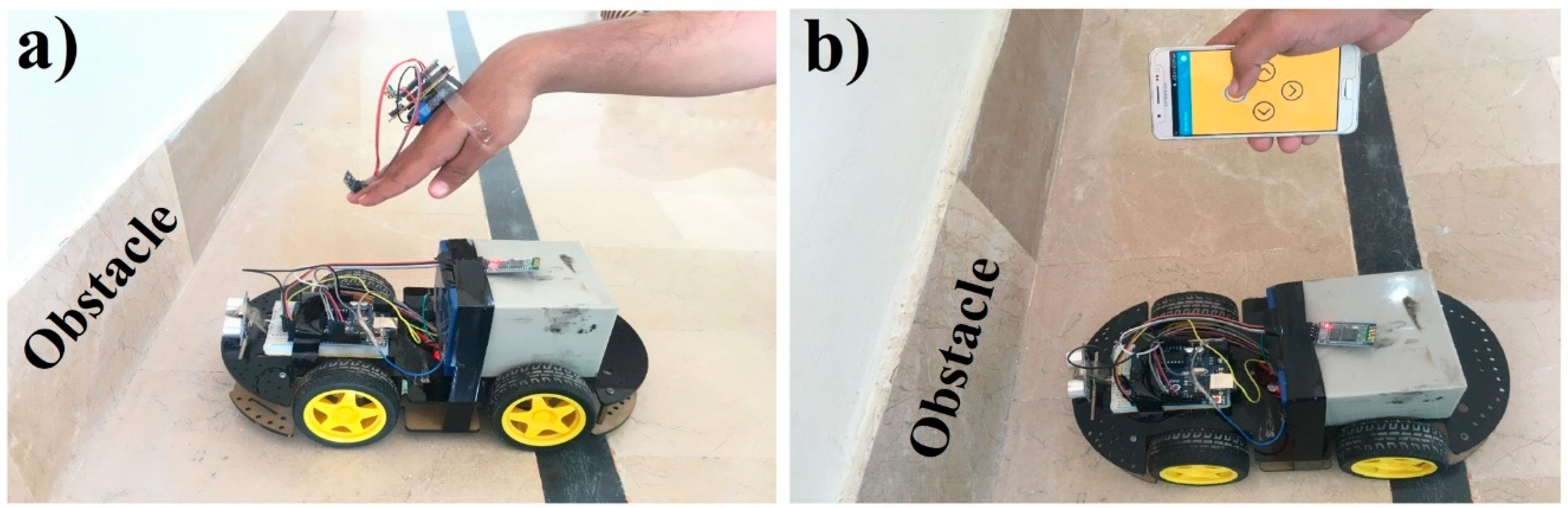

3.1. Hand-Gestures Recognition

3.1.1. Movement of Motors with Hand-Gesture

3.1.2. Results and Discussions

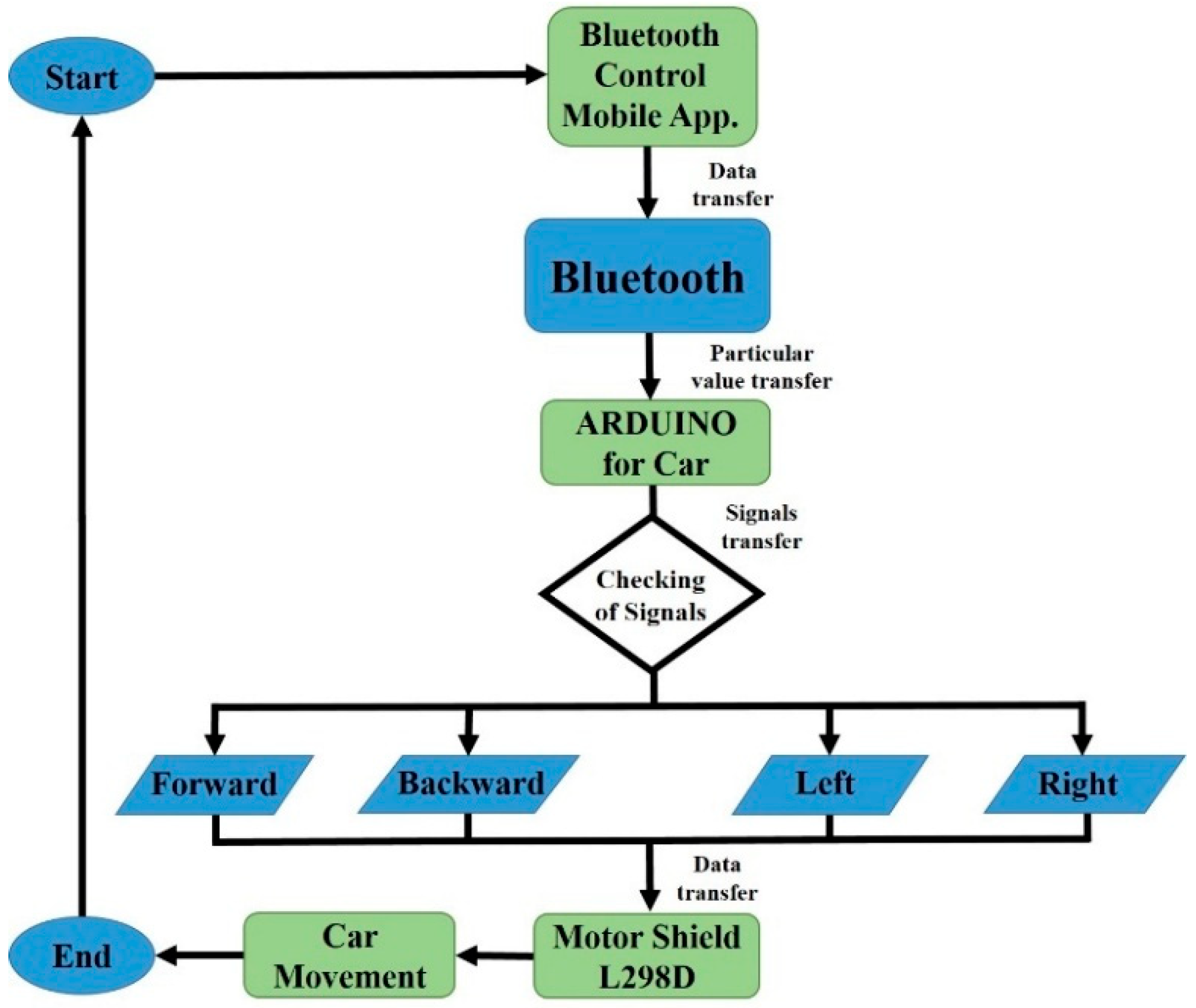

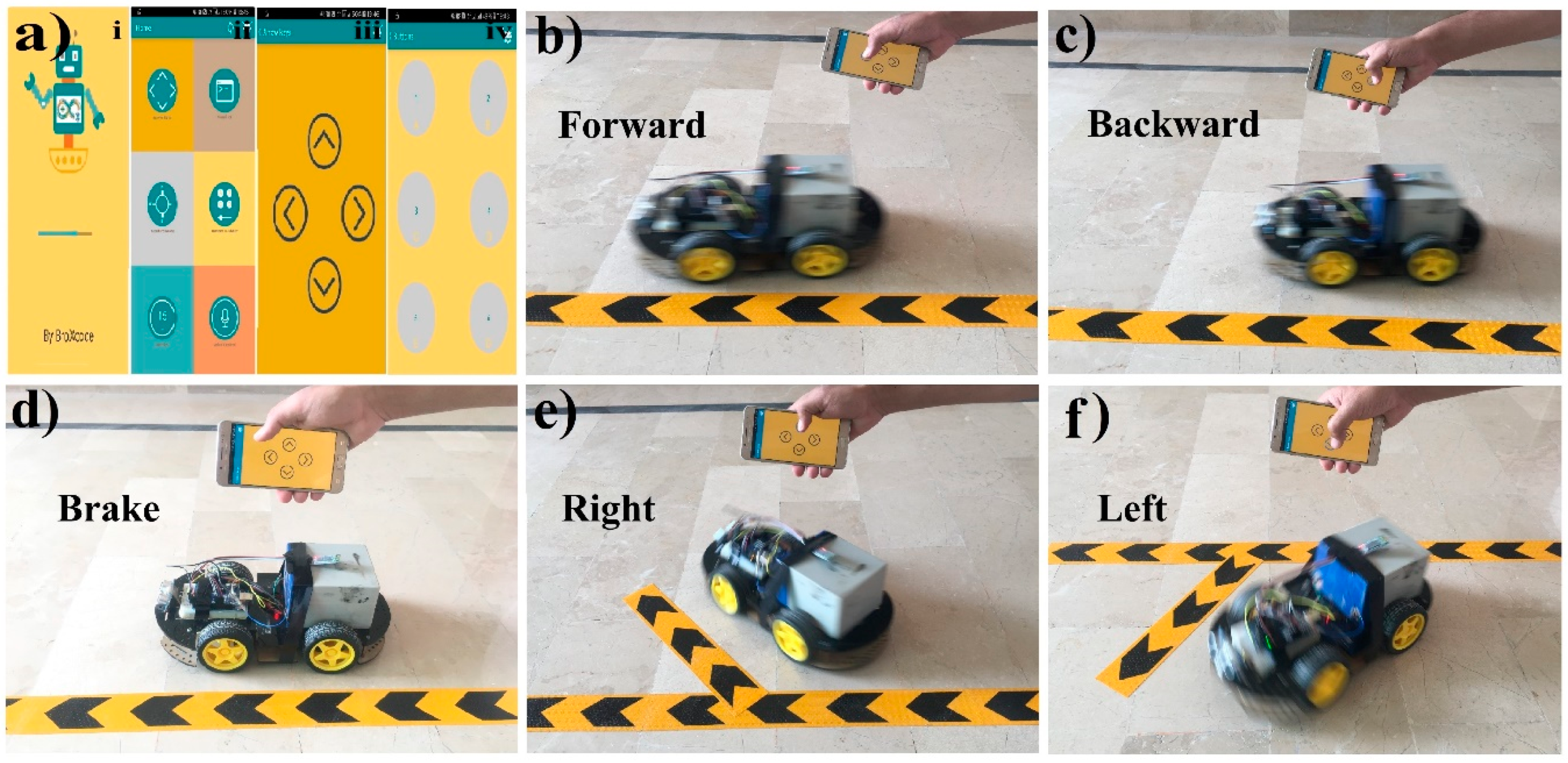

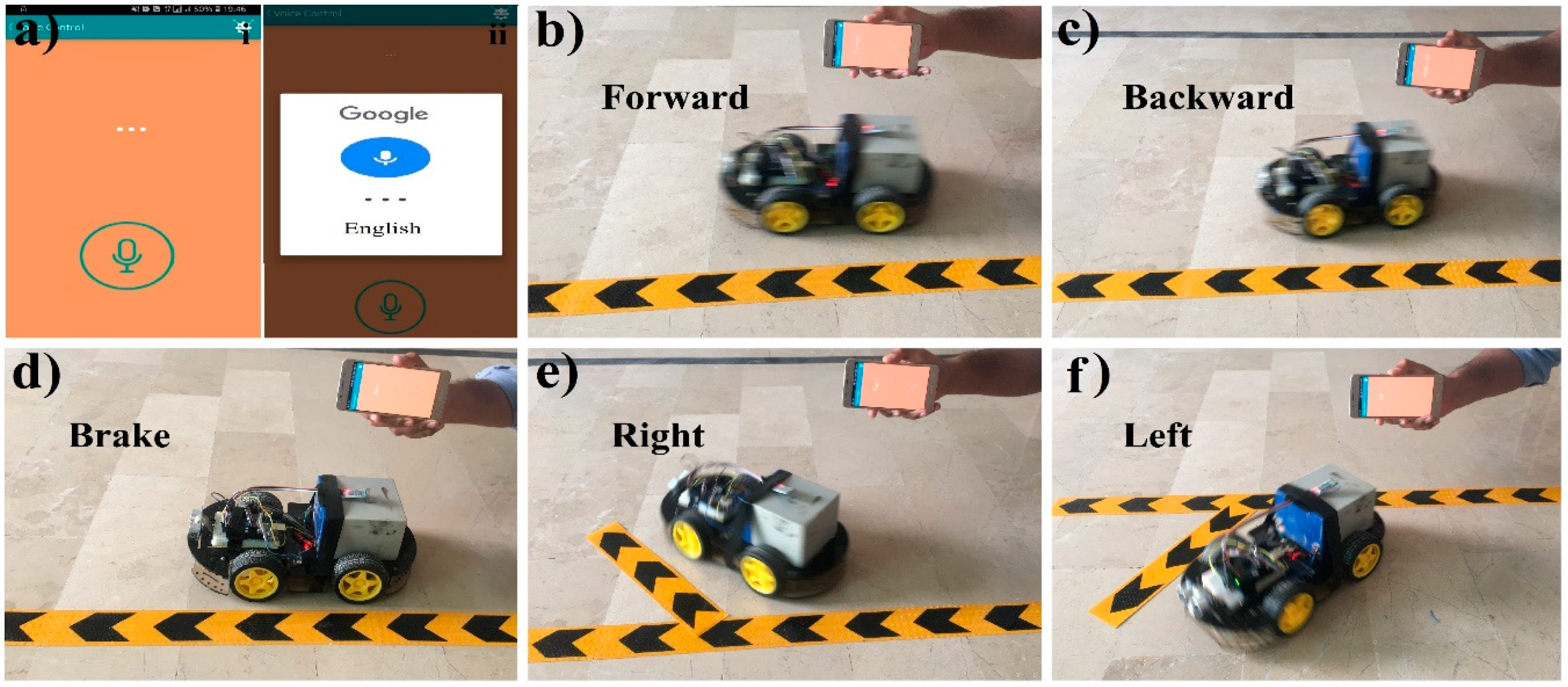

3.2. Mobile Application System

Results and Discussions

4. Conclusions

5. Future Work

Supplementary Materials

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

1. Qureshi, M.O.; Syed, R.S. The impact of robotics on employment and motivation of employees in the service sector, with special reference to health care. Saf. Health Work 2014, 5, 198–202.

2. Fernando, Y.A.; Mathath, A.; Murshid, M.A. Improving productivity: A review of robotic applications in food industry. Int. J. Rob. Appl. Technol. 2016, 4, 43–62.

3. Siramshetti, B.K.; Priyanka, K.; Rajitha, C. I won’t fall down; Edge detector robot. Int. J. Sci. Eng. Technol. Res. 2017, 6, 113–116.

4. Pakdaman, M.; Sanaatiyan, M.M.; Ghahroudi, M.R. A line follower robot from design to implementation: Technical issues and problems. In Proceedings of the 2nd International Conference on Computer and Automation Engineering (ICCAE), Singapore, 26–28 February 2010; pp. 5–9.

5. Singh, R.R.; Das, R.; Pal, K. A novel modus of hand gesture controlled wireless robot. Int. J. Res. Appl. Sci. Eng. Technol. 2017, 5, 641–647.

6. Fang, B.; Sun, F.; Liu, H.; Liu, C. 3D human gesture capturing and recognition by the IMMU-based data glove. Neurocomputing 2018, 277, 198–207.

7. Shi, Y.; Taib, R.; Lichman, S. Gesturecam: A smart camera for gesture recognition and gesture-controlled web navigation. In Proceedings of the 9th International Conference on Control, Automation, Robotics and Vision, Singapore, 5–8 December 2006; pp. 1–6.

8. Liu, T.; Luo, X.; Liu, J.; Cui, H. Compressive infrared sensing for arm gesture acquisition and recognition. In Proceedings of the IEEE International Conference on Information and Automation, Lijiang, China, 8–10 August 2015; pp. 1882–1886.

9. Dahl, T.S.; Gibbons, P.; Jones, O. Identification and production of simple tactile gestures. In Proceedings of the IEEE International Conference on Human-Robot Interaction Workshop on Advances in Tactile Sensing and Touch based Human-Robot Interaction, Boston, MA, USA, 5–8 March 2012.

10. Cao, S.; Yang, P.; Li, X.; Chen, M.; Zhu, P. iPand: Accurate gesture input with smart acoustic sensing on hand. In Proceedings of the 15th Annual IEEE International Conference on Sensing, Communication, and Networking (SECON), Hong Kong, China, 11–13 June 2018; pp. 1–3.

11. Jost, C.; De Loor, P.; Nédélec, L.; Bevacqua, E.; Stanković, I. Real-time gesture recognition based on motion quality analysis. In Proceedings of the 7th International Conferences on Intelligent Technologies for Interactive Entertainment (INTETAIN), Turin, Italy, 10–12 June 2015; pp. 47–56.

12. Ju, M.H.; Kang, H.B. Emotional interaction with a robot using facial expressions, face pose and hand gestures. Int. J. Adv. Rob. Syst. 2012, 9, 1–13.

13. Chen, C.-H.; Lee, I.-J.; Lin, L.-Y. Augmented reality-based self-facial modeling to promote the emotional expression and social skills of adolescents with autism spectrum disorders. Res. Dev. Disabil. 2015, 36, 396–403.

14. Vyas, K.K.; Pareek, A.; Vyas, S. Gesture recognition and control. Int. J. Recent Innov. Trends Comput. Commun. 2013, 1, 575–581.

15. Cervantes-Villanueva, J.; Carrillo-Zapata, D.; Terroso-Saenz, F.; Valdes-Vela, M.; Skarmeta, A.F. Vehicle maneuver detection with accelerometer-based classification. Sensors 2016, 16, 1618.

16. Aggarwal, L.; Gaur, V.; Verma, P. Design and implementation of a wireless gesture controlled robotic arm with vision. Int. J. Comput. Appl. 2013, 79, 39–43.

17. Patel, N.K.; Patel, S.B.; Ammar, M.M. Accelerometer based gesture controlled wheel chair with GPS, GSM navigation. Int. J. Innov. Emerg. Res. Eng. 2015, 2, 110–113.

18. Goyal, D.; Saini, S.P.S. Accelerometer based hand gesture controlled wheelchair. Int. J. Emerg. Technol. 2013, 4, 15–20.

19. Jena, S.P.; Nayak, S.K.; Sahoo, S.K.; Sahoo, S.R.; Dash, S.; Sahoo, S.K. Accelerometer based gesture controlled robot using arduino. Int. J. Eng. Sci. Res. Technol. 2015, 4, 469–475.

20. Budheliya, C.S.; Solanki, R.K.; Acharya, H.D.; Thanki, P.P.; Ravia, J.K. Accelerometer based gesture controlled robot with robotic arm. Int. J. Innov. Res. Sci. Technol. 2017, 3, 92–97.

21. Mojeebi, T.; Tulo, S.K. Accelerometer gesture controlled robot using Arduino. Int. J. Eng. Technol. 2016, 3, 38–41.

22. Jayabala, P. Design and implementation of gesture controlled robotic arm for industrial applications. Int. J. Sci. Res. 2018, 3, 202–209.

23. Pławiak, P.; Sośnicki, T.; Niedźwiecki, M.; Tabor, Z.; Rzecki, K. Hand body language gesture recognition based on signals from specialized glove and machine learning algorithms. IEEE Trans. Ind. Inf. 2016, 12, 1104–1113.

24. Rzecki, K.; Paweł, P.; Niedźwiecki, M.; Sośnicki, T.; Leśkow, J.; Ciesielski, M. Person recognition based on touch screen gestures using computational intelligence methods. Inf. Sci. 2017, 415, 70–84.

25. Jawalekar, P.A. Robot control by using human hand gestures. Int. Res. J. Eng. Technol. 2018, 5, 389–391.

26. Patil, P.V.; Shete, M.B.; Padalkar, T.M. Wireless hand gesture robot using accelerometer. Int. Res. J. Eng. Technol. 2016, 3, 353–356.

27. Verma, S. Hand gestures remote controlled robotic arm. Adv. Electron. Electr. Eng. 2013, 3, 601–606.

28. Suriya, T.S.U.; Khan, S.; Selvaganapathy, S.; Solaimani; Prasannakumar, V. Gesture controlled prosthetic arm. Int. J. Adv. Res. Dev. 2017, 2, 56–59.

29. Jhaveri, R.B.; Mehta, H.M.; Gala, P.T. Gesture controlled robot. Int. J. Electron. Electr. Comput. Syst. 2015, 4, 15–19.

30. Rao, S.; Rajasekhar, C. Password based hand gesture controlled robot. Int. J. Eng. Res. Appl. 2016, 6, 63–69.

31. Setia, A.; Mittal, S.; Nigam, P.; Singh, S.; Ganwar, S. Hand gesture recognition based robot using accelerometer sensor. Int. J. Adv. Res. Electr. Electron. Instrum. Eng. 2015, 4, 4470–4476.

32. Swetha, N. Design and implementation of accelerometer based robot motion and speed control with obstacle detection. Int. J. Sci. Eng. Technol. 2013, 2, 749–755.

33. Mishra, A.; Malhotra, S.; Pradesh, U. Design of hand glove for wireless gesture control of robot. Int. J. Pure Appl. Math. 2017, 114, 69–79.

34. Verma, S. Android app controlled bluetooth robot. Int. J. Comput. Appl. 2016, 152, 35–40.

35. Maity, A.; Paul, A.; Goswami, P.; Bhattacharya, A. Android application based bluetooth controlled robotic car. Int. J. Intell. Inf. Syst. 2017, 6, 62–66.

36. Pahuja, R.; Kumar, N. Android mobile phone controlled bluetooth robot using 8051 microcontroller. Int. J. Sci. Eng. Res. 2014, 2, 14–17.

37. Kumar, R.; Ushapreethi, P.; Kubade, P.R.; Kulkarni, H.B. Android phone controlled bluetooth robot. Int. Res. J. Eng. Technol. 2016, 3, 104–114.

38. Roy, S.; Wangchuk, T.P.; Bhatt, R. Arduino based bluetooth controlled robot. Int. J. Eng. Trends Technol. 2016, 32, 216–219.

39. Kanere, S.; Shinde, R.; Tornekar, V.; Zagade, D.; Sonar, V.S. Bluetooth controlled car system. Int. J. Adv. Res. Comput. Commun. Eng. 2017, 6, 44–46.

40. Gandotra, S.; Sharma, B.; Mahajan, S.; Motup, T.; Choudhary, T.; Thakur, P. Bluetooth controlled RC car using arduino. Int. J. Interdiscip. Res. 2016, 2, 144–147.

41. Rai, N.; Rasaily, D.; Wangchuk, T.R.; Gurung, M.; Khawas, R.K. Bluetooth remote controlled car using Arduino. Int. J. Eng. Trends Technol. 2016, 33, 381–384.

42. Kannan, K.; Selvakumar, J. Arduino based voice controlled robot. Int. Res. J. Eng. Technol. 2015, 2, 49–55.

43. Rashid, H.; Ahmed, I.U.; Osman, S.B.; Newaz, B.; Rasheduzzaman, M.; Reza, S.M.T. Design and implementation of a voice controlled robot with human interaction ability. In Proceedings of the International Conference on Computer, Communication, Chemical, Materials and Electronic Engineering, Rajshahi, Bangladesh, 26–27 January 2017; Volume 65, pp. 148–151.

44. Memon, Y.A.; Motan, I.; Akbar, M.A.; Hameed, S.; Hasan, M.U. Speech recognition system for a voice controlled robot with real time obstacle detection and avoidance. Int. J. Electr. Electron. Data Commun. 2016, 4, 33–37.

45. Zope, S.; Muluk, P.; Mohite, R.; Lanke, A.; Bamankar, M. Voice control robot using android application. Int. J. Interdiscip. Res. 2017, 3, 1723–1726.

46. Harshad, P.; Amol, J.; Dnyaneshwar, K.; Bhosale, A.C. Voice control robot using Arduino pick and place object. Int. J. Innov. Eng. Res. Technol. 2017, 4, 27–30.

47. Kumar, A.; Chauhan, R. Voice controlled robot. Int. J. Innov. Res. Technol. 2014, 1, 338–344.

48. Saravanan, D.; Parthiban, R.; Archanaa, G.I. Voice controlled robotic car using Arduino for smart agriculture. Int. J. Pure Math. 2018, 118, 2097–2105.

49. Chikhale, V.; Gharat, R.; Gogate, S.; Amireddy, R. Voice controlled robotic system using Arduino microcontroller. Int. J. New Technol. Res. 2017, 3, 92–94.

50. Louis, L. Working principle of Arduino and using it as a tool for study and research. Int. J. Control Autom. Commun. Syst. 2016, 1, 21–29.

51. Punetha, D.; Kumar, N.; Mehta, V. Development and applications of line following robot based health care management system. Int. J. Adv. Res. Comput. Technol. 2013, 2, 2446–2450.

52. Ebiesuwa, O.O.; Adekunle, Y.A.; Akinyemi, L.A.; Oyerinde, O.D. Line follower robot using a sophisticated sensor approach. Int. J. Eng. Res. Technol. 2013, 2, 1980–1982.

53. Gumus, O.; Topaloglu, M.; Ozcelik, D. The use of computer controlled line follower robots in public transport. Procedia Comput. Sci. 2016, 102, 202–208.

54. Mi, J.; Takahashi, Y. An design of hf-band rfid system with multiple readers and passive tags for indoor mobile robot self-localization. Sensors 2016, 16, 1200.

55. Chavan, A.S.; Pansare, D.D.; Kadam, S.P.; Mayekar, N.K.; Jha, K.V.; Bhagwat, P.R. Design of accident prevention system using QRD 1114 and CNY 70 Sensors. Int. J. Emerg. Technol. Adv. Eng. 2013, 3, 525–530.

56. Mohamed, A.; Yang, C.; Cangelosi, A. Stereo vision based object tracking control for a movable robot head. Int. Fed. Autom. Control 2016, 49, 155–162.

57. Pati, C.S.; Kala, R. Vision-based robot following using pid control. Technologies 2017, 5, 34.

58. Nadiger, N.; Madhusudan, K.; Naik, S. Humanoids implementation using sixth sense. Int. J. Inf. Syst. Comput. Sci. 2012, 1, 31–34.

59. Purohit, R.; Vyas, S.; Mathur, P. AI and its application: Sixth sense technology. Int. J. Emerg. Trends Technol. Comput. Sci. 2013, 2, 184–186.

60. Daroga, R.; Pandey, N. Sixth Sense Technology & Its Applications. Int. J. Sci. Res. Publ. 2015, 5, 1–4.

61. Dhanalakshmi, V.; Jayasri, D.; Vishnupriya, R. Zigbee based wireless intelligent security robot for defence camp. Int. J. Adv. Eng. Glob. Technol. 2014, 2, 576–583.

62. Patel, A.; Chaudhari, K.; Patel, D. Touch screen controlled multipurpose spy robot using zigbee. Int. J. Adv. Res. Comput. Eng. Technol. 2014, 3, 1058–1062.

63. Premkumar, M. Unmanned multi-functional robot using zigbee adopter network for defense application. Int. J. Adv. Res. Comput. Eng. Technol. 2013, 2, 47–55.

64. Song, B.; Lu, X.; Bai, X. Zigbee based wireless sensor and actuator network for service robot intelligent space. Wirel. Sens. Netw. 2012, 4, 235–242.

65. Iswarya, P.; Ramarao, D.; Kumar, B.D.; Kumar, K.D.; Manikyalarao, T. Obstacle avoidance robot using Arduino. Int. J. Adv. Res. Sci. Eng. 2017, 6, 634–638.

66. Bhagat, K.; Deshmukh, S.; Dhonde, S.; Ghag, S. Obstacle Avoidance Robot. Int. J. Sci. Eng. Technol. Res. 2016, 5, 439–442.

67. Ankit, V.; Jigar, P.; Savan, V. Obstacle avoidance robotic vehicle using ultrasonic sensor, android and bluetooth for obstacle detection. Int. Res. J. Eng. Technol. 2016, 3, 339–348.

68. Dumbre, K.; Ganeshkar, S.; Dhekne, A. Robotic vehicle control using internet via webpage and keyboard. Int. J. Comput. Appl. 2015, 114, 15–19.

69. Kadir, W.M.H.W.; Samin, R.E.; Ibrahim, B.S.K. Internet controlled robotic arm. Int. Symp. Rob. Intell. Sens. 2012, 41, 1065–1071.

70. Patil, A.D.; Kadiri, H.I.; Joshi, A.S.; Wani, A.B. IoT based remote access human control robot using mems sensor. Int. J. Comput. Sci. Mob. Comput. 2016, 5, 816–826.

71. Kalaiarasi, D.; Pavithra, S.; Pratheeba, S.; Priyaadharshini, R.L. IoT based motion control system of a robotic car. Int. Res. J. Eng. Technol. 2018, 5, 3073–3076.

72. Nanda Kishor, M.; Prasad, A.P.; Vinod Kumar, M.; Manikanta, R.; Geetha, M.N.; Kavyashree, B. IoT based smart car parking using line following robot. Int. J. Electr. Electron. Data. Commun. 2017, 5, 46–48.

73. Nayyar, A.; Puri, V.; Nguyen, N.G.; Le, D.N. Smart surveillance robot for real-time monitoring and control system in environment and industrial applications. Inf. Syst. Des. Intell. Appl. Adv. Intell. Syst. Comput. 2018, 229–243.

74. Ananthapadmanabhan, J.; Abraham, A.M.; George, L.M.; Saji, V.A.; Anil, A.R. Smart robotic assistant using IoT. Int. Res. J. Eng. Technol. 2017, 4, 857–859.

75. Waldherr, S.; Romero, R.; Thrun, S. A gesture based interface for human-robot interaction. Auton. Rob. Springer 2000, 9, 151–173.

76. Perrin, S.; Cassinelli, A.; Ishikawa, M. Gesture recognition using laser-based tracing system. In Proceedings of the Sixth IEEE International Conference on Automatic Face and Gesture Recognition, Seoul, Korea, 19 May 2004; pp. 541–546.

77. Google Play. Arduino Bluetooth Control. Available online: https://play.google.com/store/apps/details?id=com.broxcode.arduinobluetoothfree&hl=en (accessed on 31 December 2018).

78. Arduino Uno Rev3. Available online: https://store.arduino.cc/usa/arduino-uno-rev3 (accessed on 31 December 2018).

79. Components101. Arduino Nano. Available online: https://components101.com/microcontrollers/arduino-nano (accessed on 31 December 2018).

80. SunRom. Gyro + Accelerometer Sensor, 3 Axis based on MPU-6050. Available online: https://www.sunrom.com/p/gyro-accelerometer-sensor-3-axis-based-on-mpu-6050 (accessed on 31 December 2018).

81. ElProCus. RF Module—Transmitter & Receiver. Available online: https://www.elprocus.com/rf-module-transmitter-receiver/ (accessed on 31 December 2018).

82. SunFounder. L293D Motor Driver Shield. Available online: http://wiki.sunfounder.cc/index.php?title=L293D_Motor_Driver_Shield (accessed on 31 December 2018).

83. Itead. Serial Port Bluetooth Module HC-05. Available online: https://www.itead.cc/wiki/Serial_Port_Bluetooth_Module_(Master/Slave)_:_HC-05 (accessed on 31 December 2018).

84. How to Mechatronics. L298 Motor Module. Available online: https://howtomechatronics.com/tutorials/arduino/arduino-dc-motor-control-tutorial-l298n-pwm-h-bridge/ (accessed on 31 December 2018).

85. Youtube. An Automated Robot Car Control System with Hand Gestures and Mobile Application Using Arduino. Available online: https://www.youtube.com/watch?v=3Nqz9jewack&feature=youtu.be (accessed on 31 December 2018).

86. Reddy, B.R.; Mahender, E. Speech to text conversion using android platform. Int. J. Eng. Res. Appl. 2013, 3, 253–258.

87. Insteon. Insteon WiFi Cameras. Available online: https://www.insteon.com/wifi-cameras/ (accessed on 31 December 2018).

88. Krotak, T.; Simlova, M. The analysis of the acceleration of the vehicle for assessing the condition of the driver. In Proceedings of the IEEE Intelligent Vehicles Symposium, Alcala de Henares, Spain, 3–7 June 2012; pp. 571–576.

89. González, A.; Olazagoitia, J.L.; Vinolas, J. A low-cost data acquisition system for automobile dynamics applications. Sensors 2018, 18, 366.

| Components | Specifications |
|---|---|
| Arduino UNO [50,78] | 28 pins; Operating voltage: 7–12 V |
| Arduino Nano [79] | 30 pins; Operating voltage: 7–12 V |
| MPU6050 Accelerometer [80] | 8 pins; Operating voltage: 3.3 V |
| RF Sender/Receiver [81] | Sender (3 pins; Operating voltage: 3–12 V; Transmission range: 90 m), Receiver (4 pins; Operating voltage: 5 V DC) |
| L293D Motor Shield [82] | Supply-voltage range: 4.5–36 V; Output current: 600 mA/channel |
| Bluetooth Module HC-05 [83] | 6 pins; Operating voltage: 3.3–5 V; Transmission range: 100 m |
| Android Mobile Application [77] | Android compatible |
| L298 Motor Module [84] | Operating voltage: 5 V; Max power: 25 W |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Ullah, S.; Mumtaz, Z.; Liu, S.; Abubaqr, M.; Mahboob, A.; Madni, H.A. Single-Equipment with Multiple-Application for an Automated Robot-Car Control System. Sensors 2019, 19, 662. https://doi.org/10.3390/s19030662
