Pedestrian Dead Reckoning-Assisted Visual Inertial Odometry Integrity Monitoring

Abstract

1. Introduction

- We analyzed the sources of error that arise when a vision-based positioning system produces large positioning errors in challenging indoor environments with little texture, dynamic obstacles, or low lighting, and divided them into four error situations.

- We proposed an autonomous integrity monitoring method for a visual-observation-based pedestrian dead reckoning (PDR) system. Exploiting the short-term reliability of PDR, the proposed PDR-assisted visual integrity monitoring system automatically switches between visual inertial odometry (VIO, or visual odometry, VO) and PDR to provide more accurate positions in indoor environments.
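The automatic switching between VIO and PDR can be pictured as a per-step state machine. The following is a minimal sketch under our own assumptions, not the authors' implementation. The `Step` record, the `IntegrityMonitor` class, the vector-consistency test and the `tolerance_m` threshold are all hypothetical. The sketch treats PDR as reliable over a single step and accepts the VIO displacement when it agrees with the PDR displacement for that step. Otherwise it advances the position with the standard PDR update, which moves one step length along the heading.

```python
import math
from dataclasses import dataclass


@dataclass
class Step:
    """One detected step (hypothetical input format, not from the paper)."""
    pdr_length: float   # PDR step-length estimate in metres
    pdr_heading: float  # PDR heading in radians, measured from the x-axis
    vio_dx: float       # VIO displacement over the same step, x component (m)
    vio_dy: float       # VIO displacement over the same step, y component (m)


class IntegrityMonitor:
    """Illustrative VIO/PDR switcher; the consistency test is an assumption."""

    def __init__(self, tolerance_m: float = 0.3):
        self.tolerance_m = tolerance_m  # hypothetical consistency threshold (m)
        self.x = 0.0
        self.y = 0.0
        self.mode = "VIO"

    @staticmethod
    def pdr_displacement(s: Step):
        # Standard PDR update: one step length along the current heading.
        return (s.pdr_length * math.cos(s.pdr_heading),
                s.pdr_length * math.sin(s.pdr_heading))

    def vio_consistent(self, s: Step) -> bool:
        # PDR is assumed trustworthy over one step, so a large vector
        # difference between the two displacements flags a VIO fault.
        px, py = self.pdr_displacement(s)
        return math.hypot(s.vio_dx - px, s.vio_dy - py) <= self.tolerance_m

    def update(self, s: Step):
        if self.vio_consistent(s):
            self.mode = "VIO"
            dx, dy = s.vio_dx, s.vio_dy
        else:
            self.mode = "PDR"
            dx, dy = self.pdr_displacement(s)
        # Accumulate increments so the trajectory stays continuous across switches.
        self.x += dx
        self.y += dy
        return self.x, self.y


monitor = IntegrityMonitor(tolerance_m=0.3)
# VIO agrees with a 0.7 m PDR step along +x, so VIO is used.
monitor.update(Step(0.7, 0.0, 0.68, 0.02))
# VIO jumps 2 m (e.g., moving features dominate), so PDR takes over.
monitor.update(Step(0.7, 0.0, 2.0, 0.0))
```

Accumulating per-step increments rather than absolute VIO positions is a design choice of this sketch: while VIO is distrusted its own frame may drift, and using only its increments lets the monitor switch back to VIO without a jump in the trajectory.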

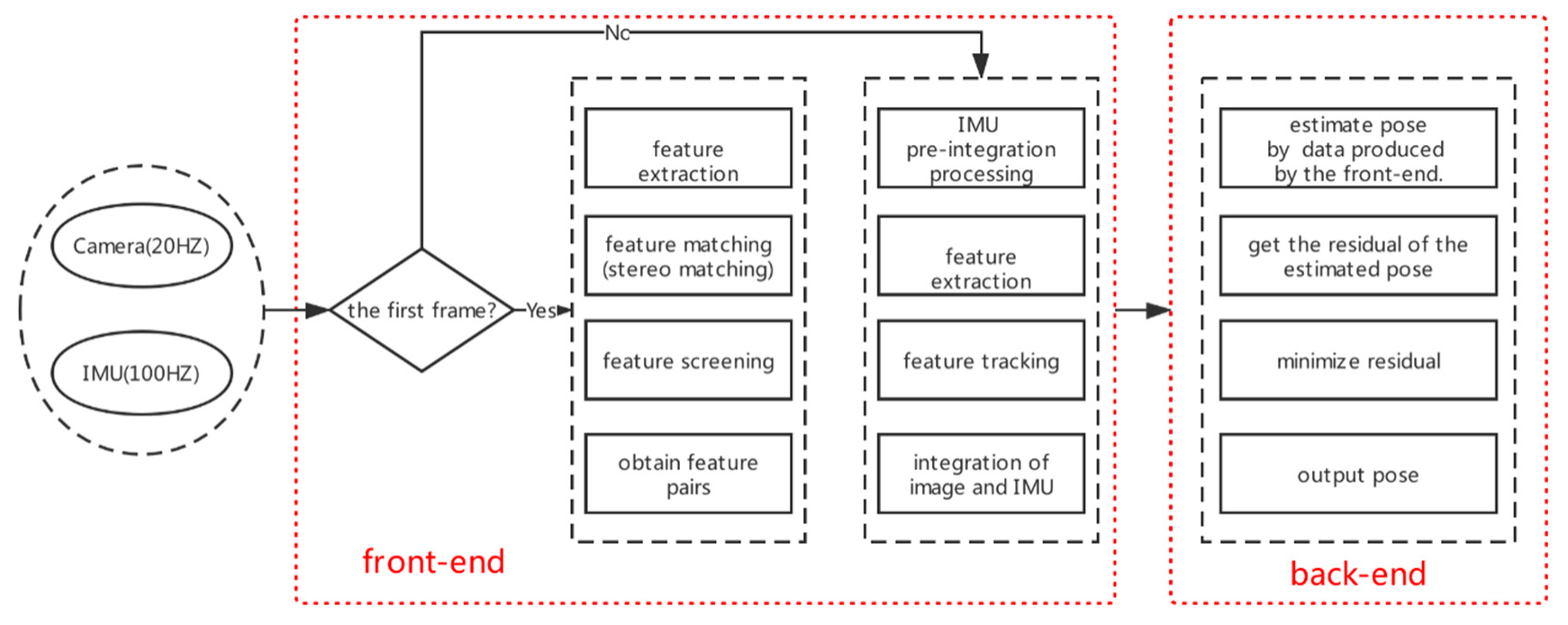

2. Background

3. Visual Error Analysis and Autonomous Integrity Monitoring

3.1. Visual Error Analysis

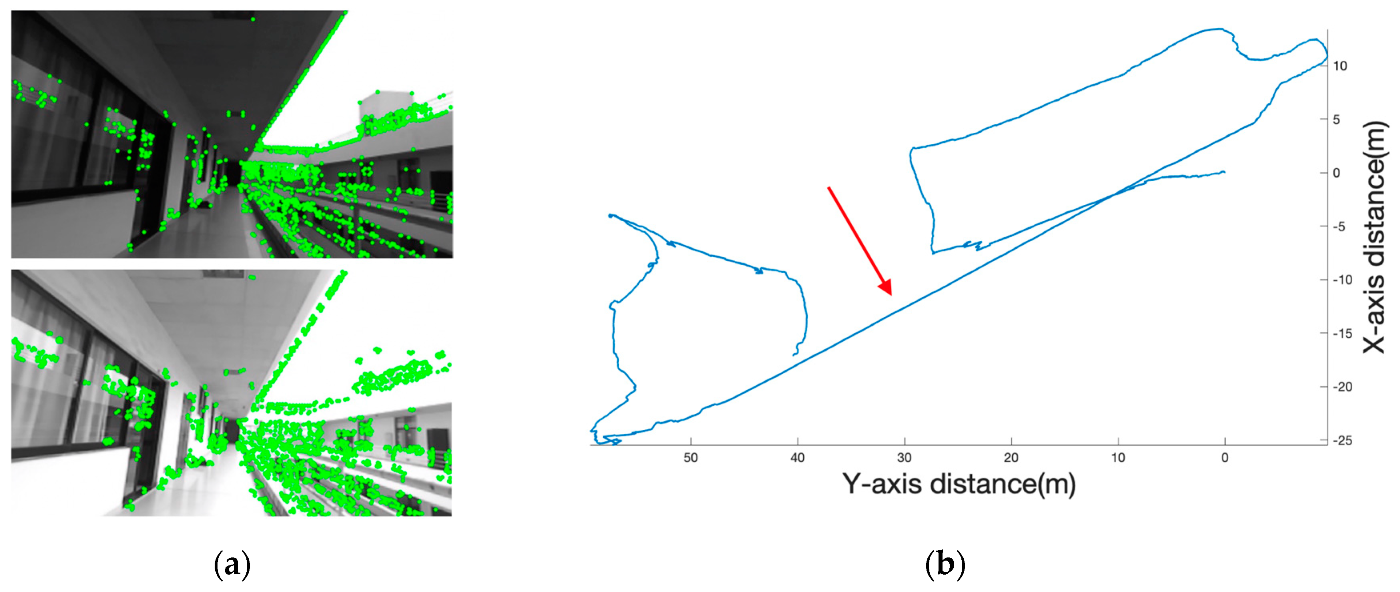

3.1.1. Insufficient Features

3.1.2. Lighting Causes the Failure of Feature Tracking

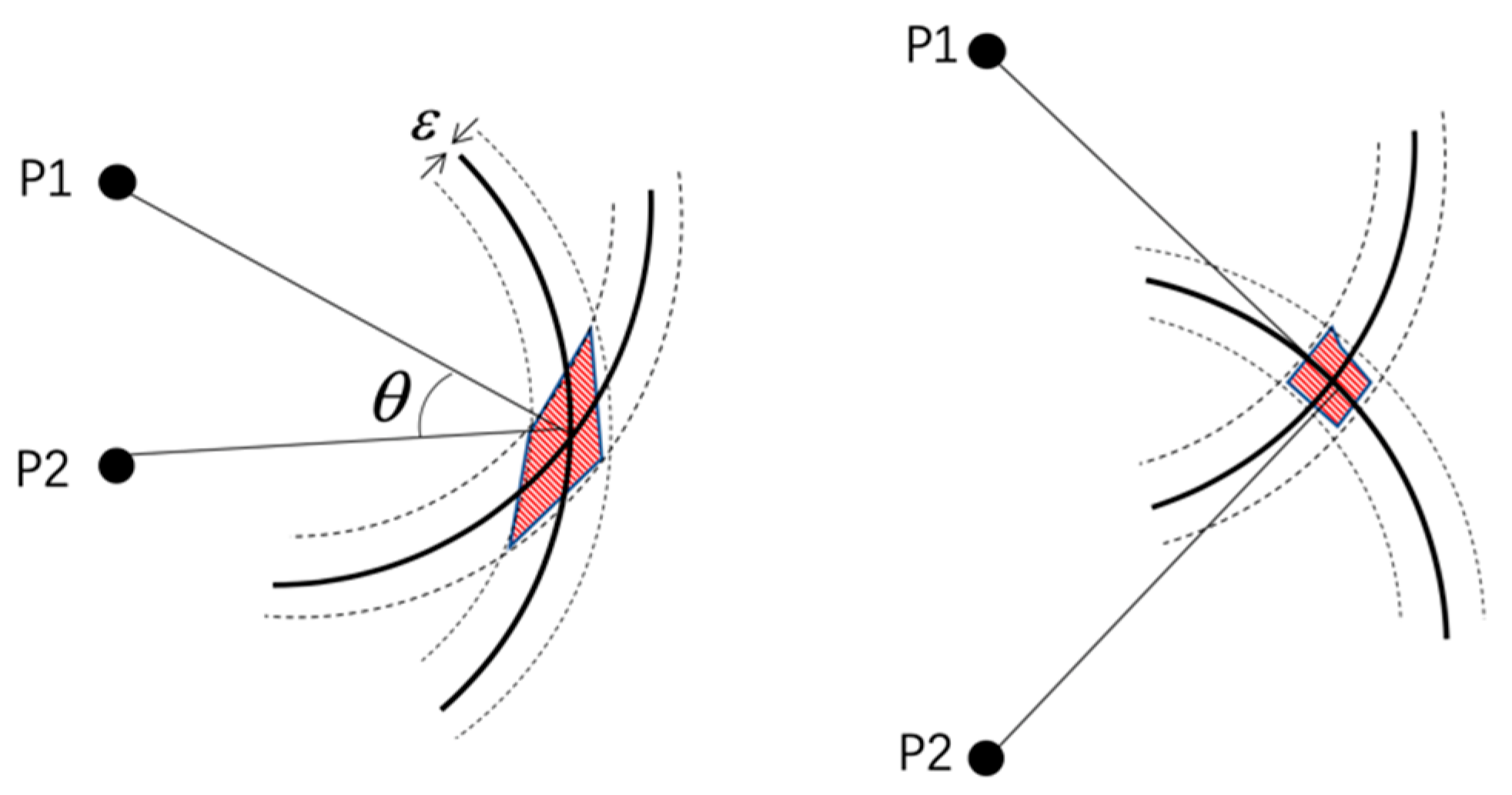

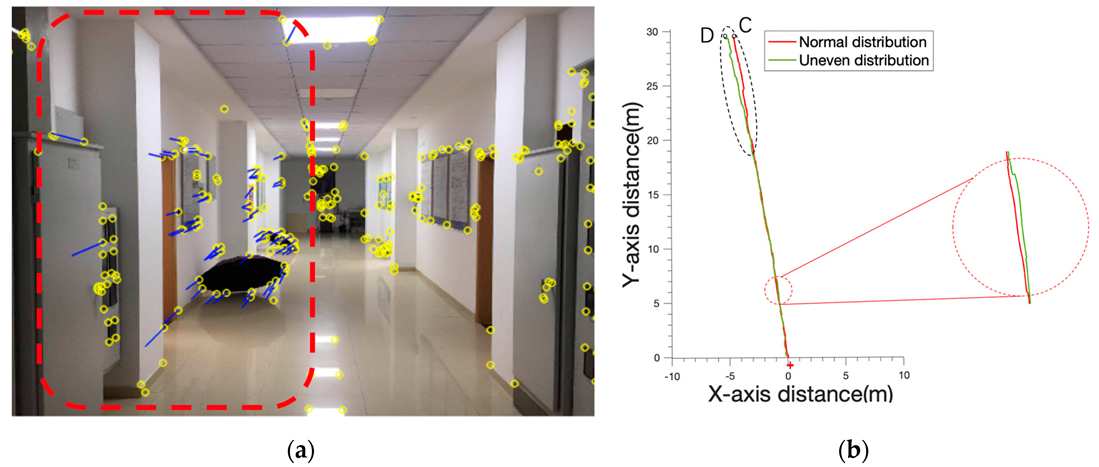

3.1.3. Uneven Distribution of Features

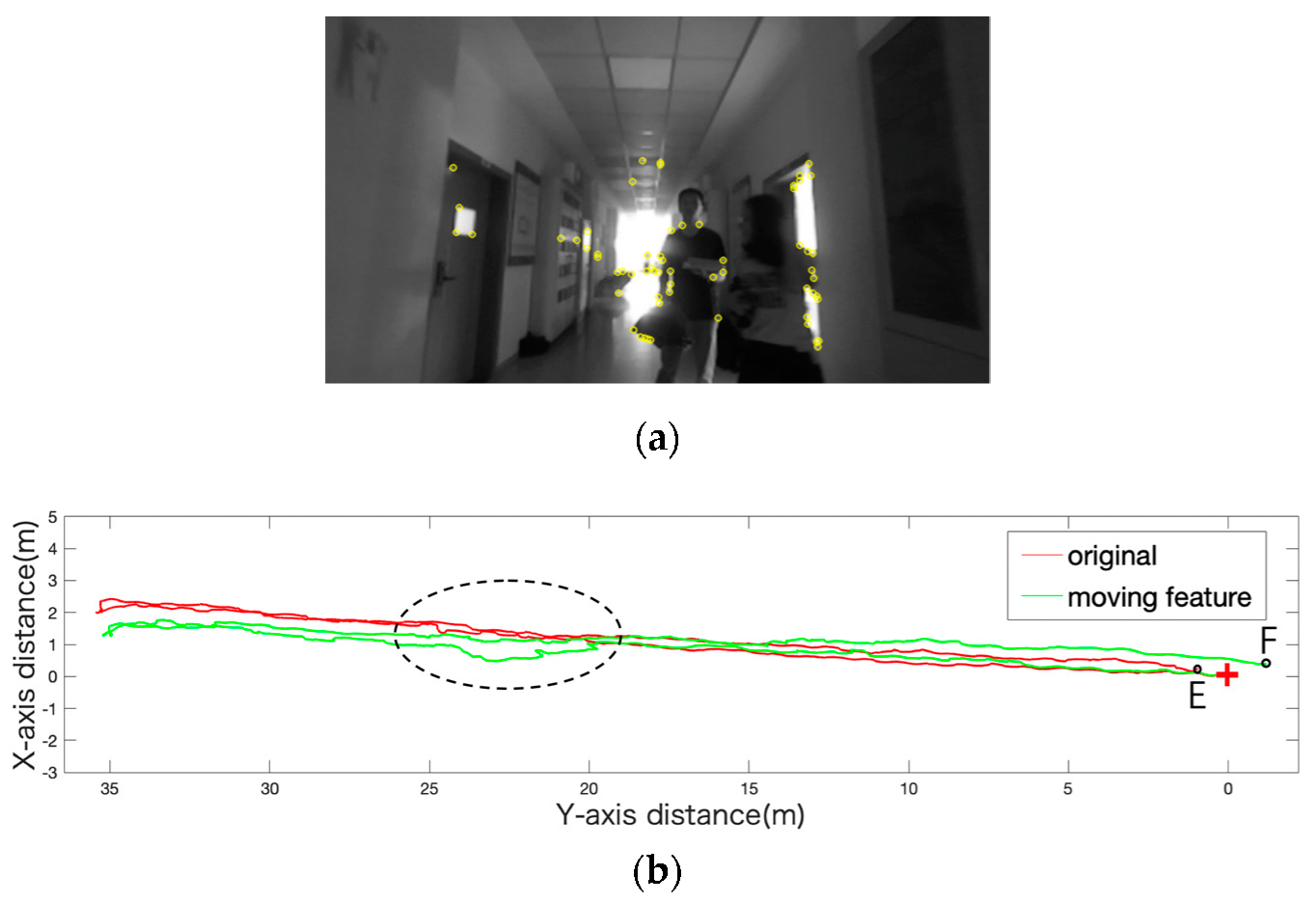

3.1.4. Moving Features

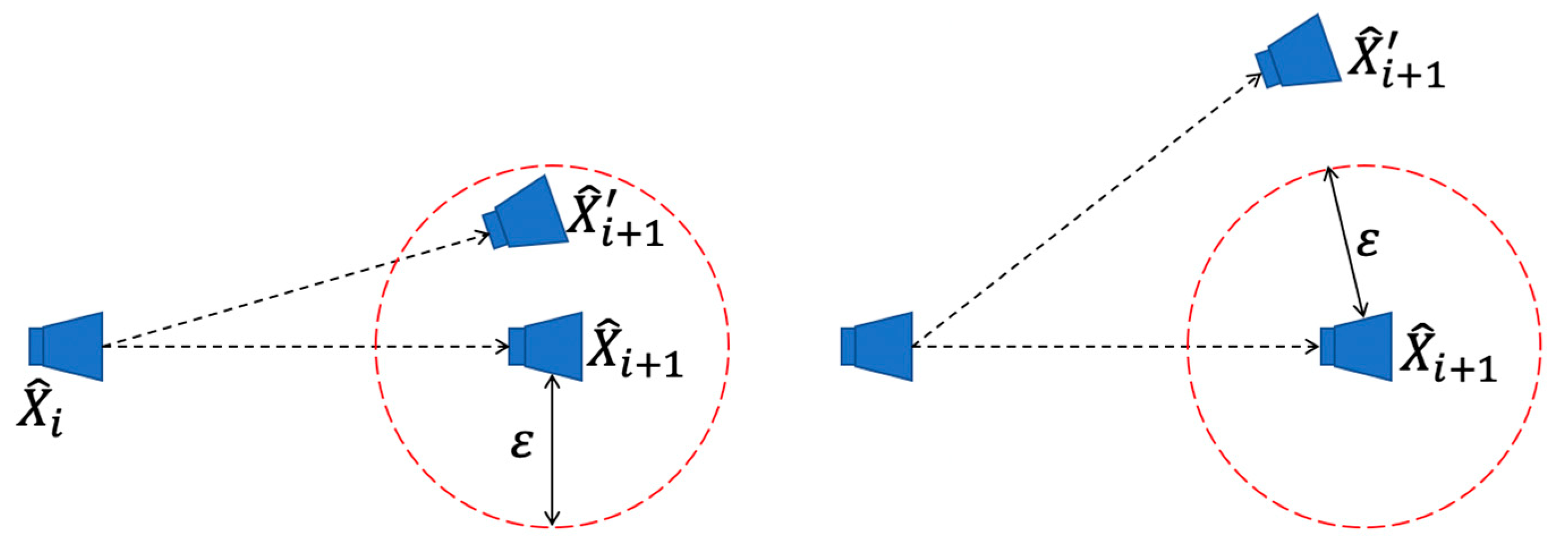

3.2. PDR-Assisted Visual Integrity Monitoring

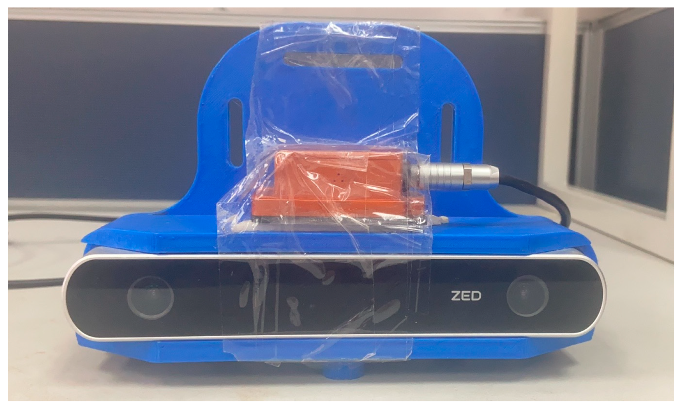

4. Experiments and Evaluation

4.1. Assessing Environment Impacts

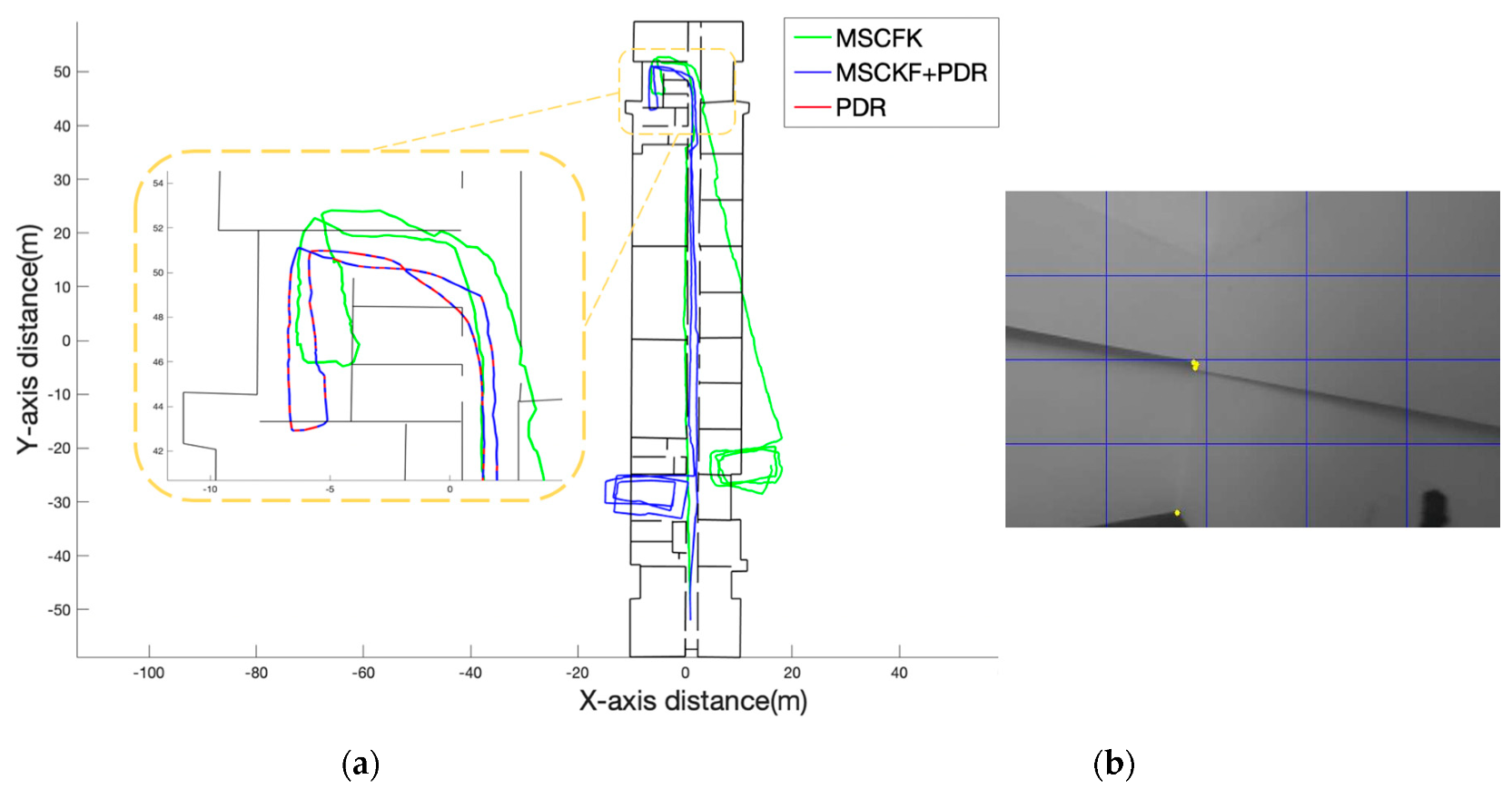

4.1.1. Insufficient Features

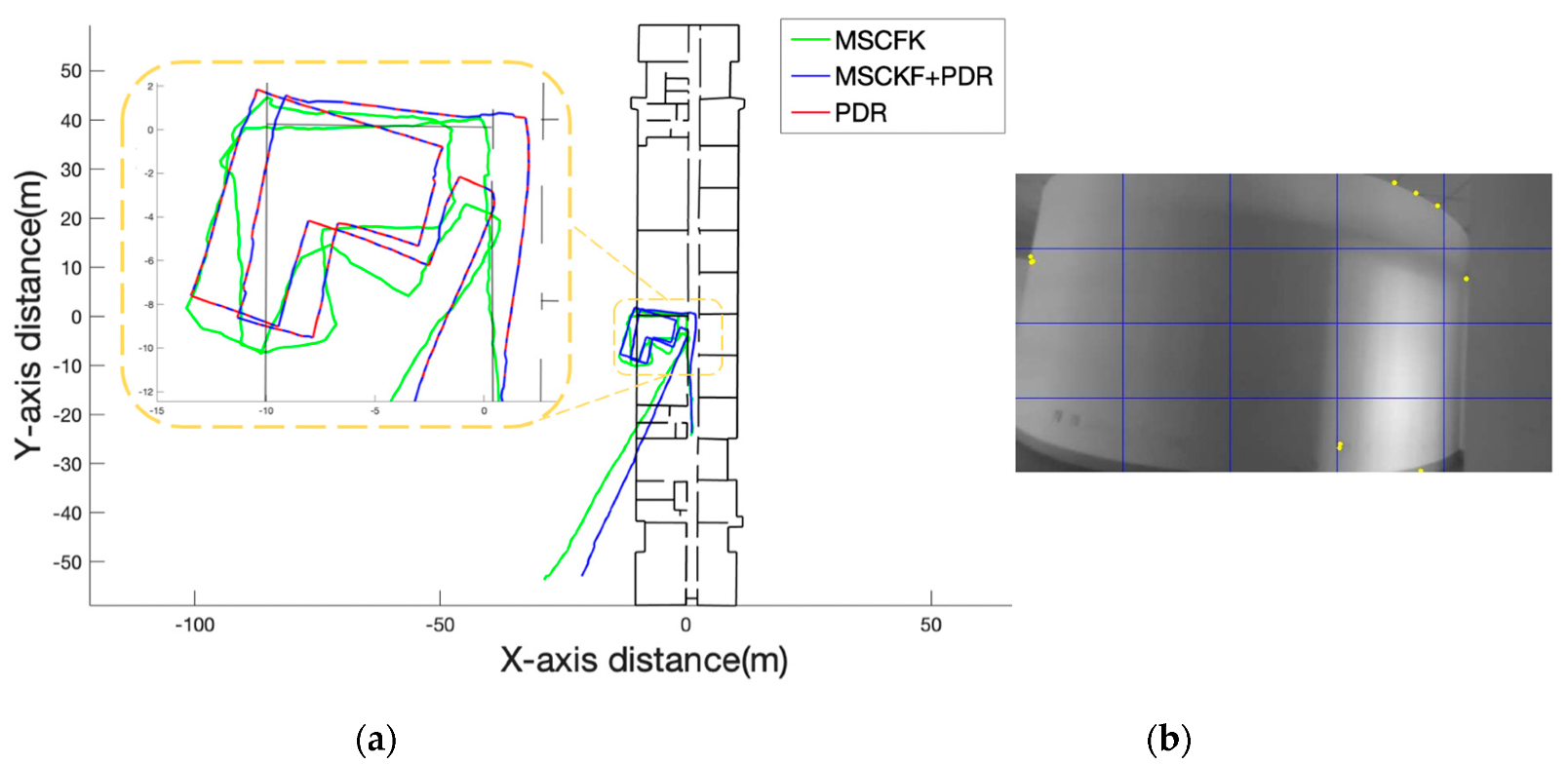

4.1.2. Lighting Causes the Failure of Feature Tracking

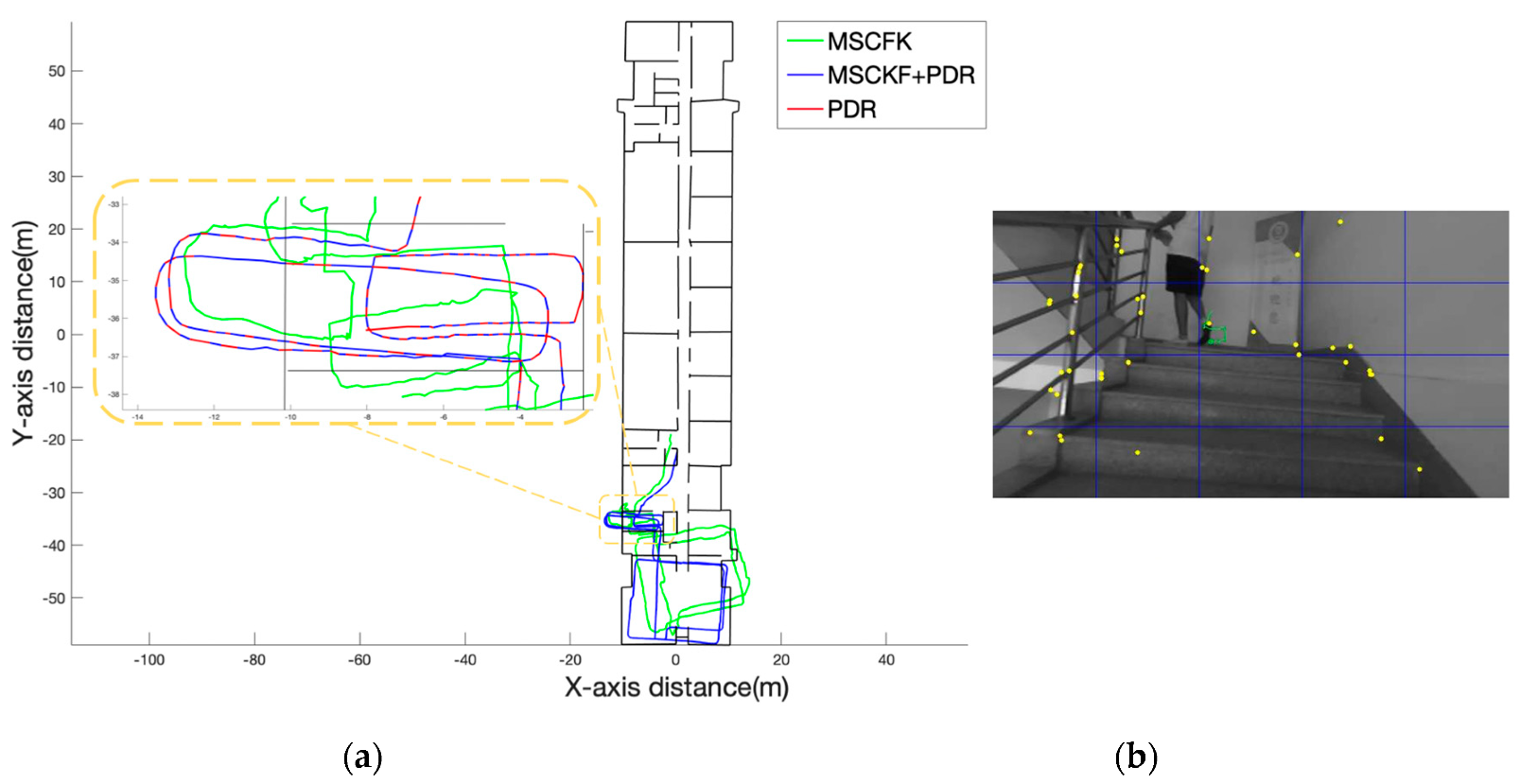

4.1.3. Uneven Distribution of Features

4.1.4. Moving Feature Point

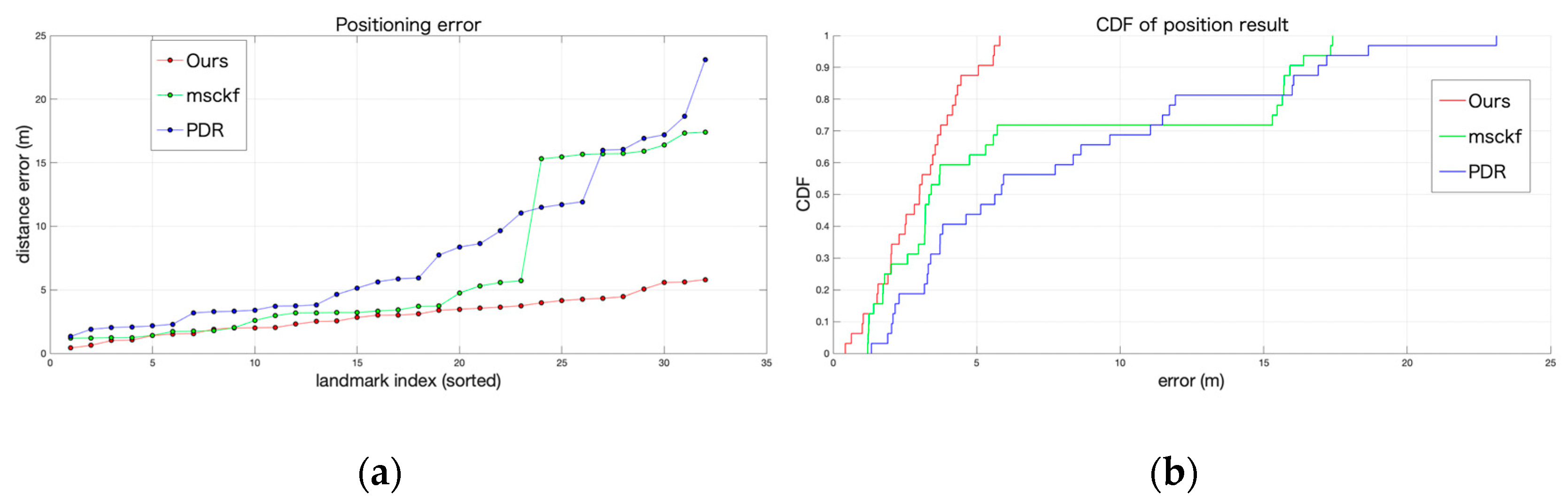

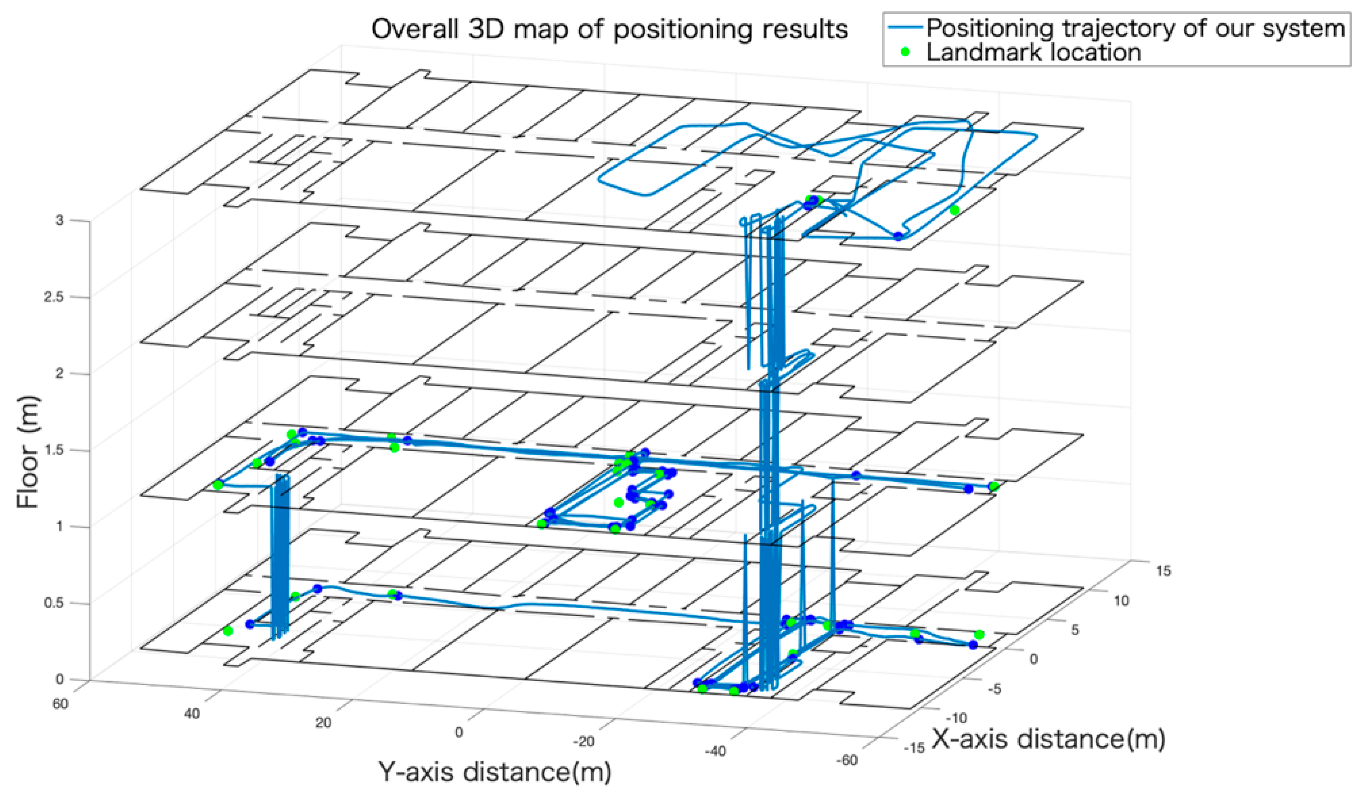

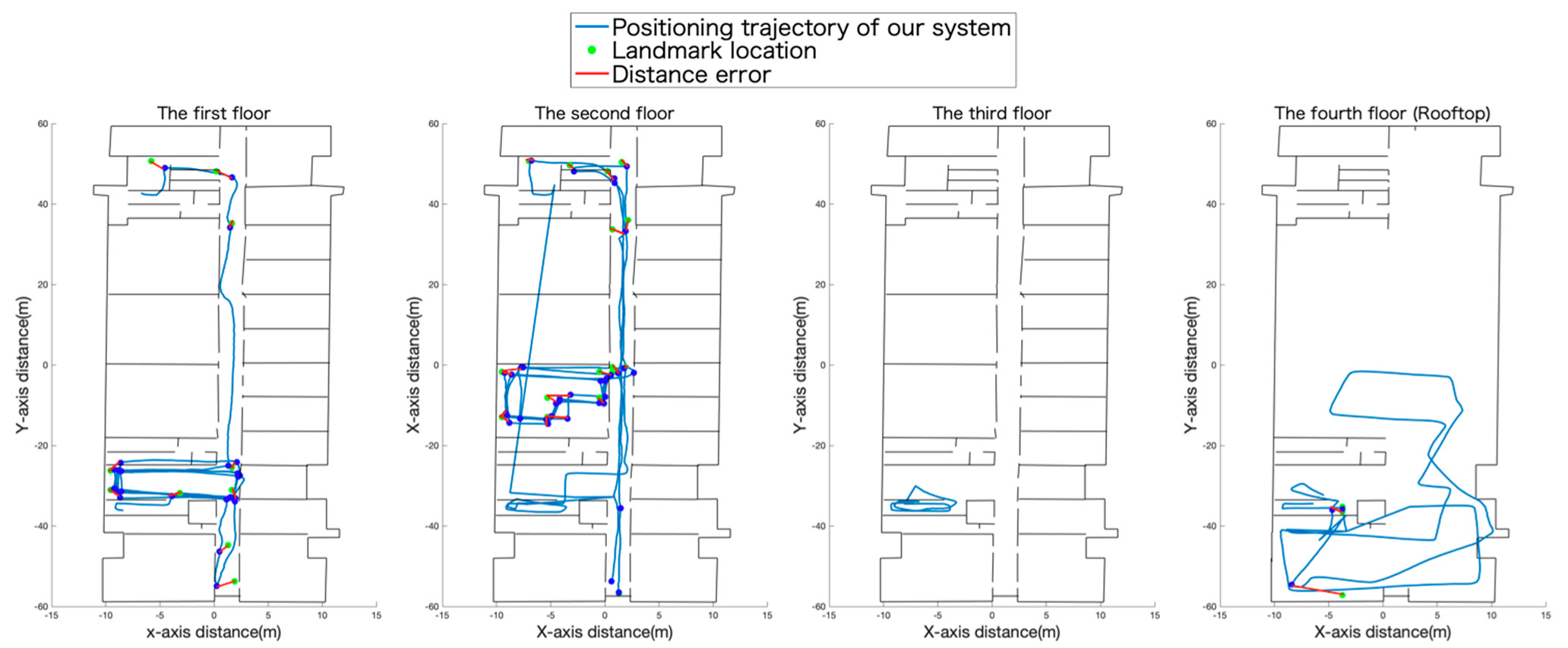

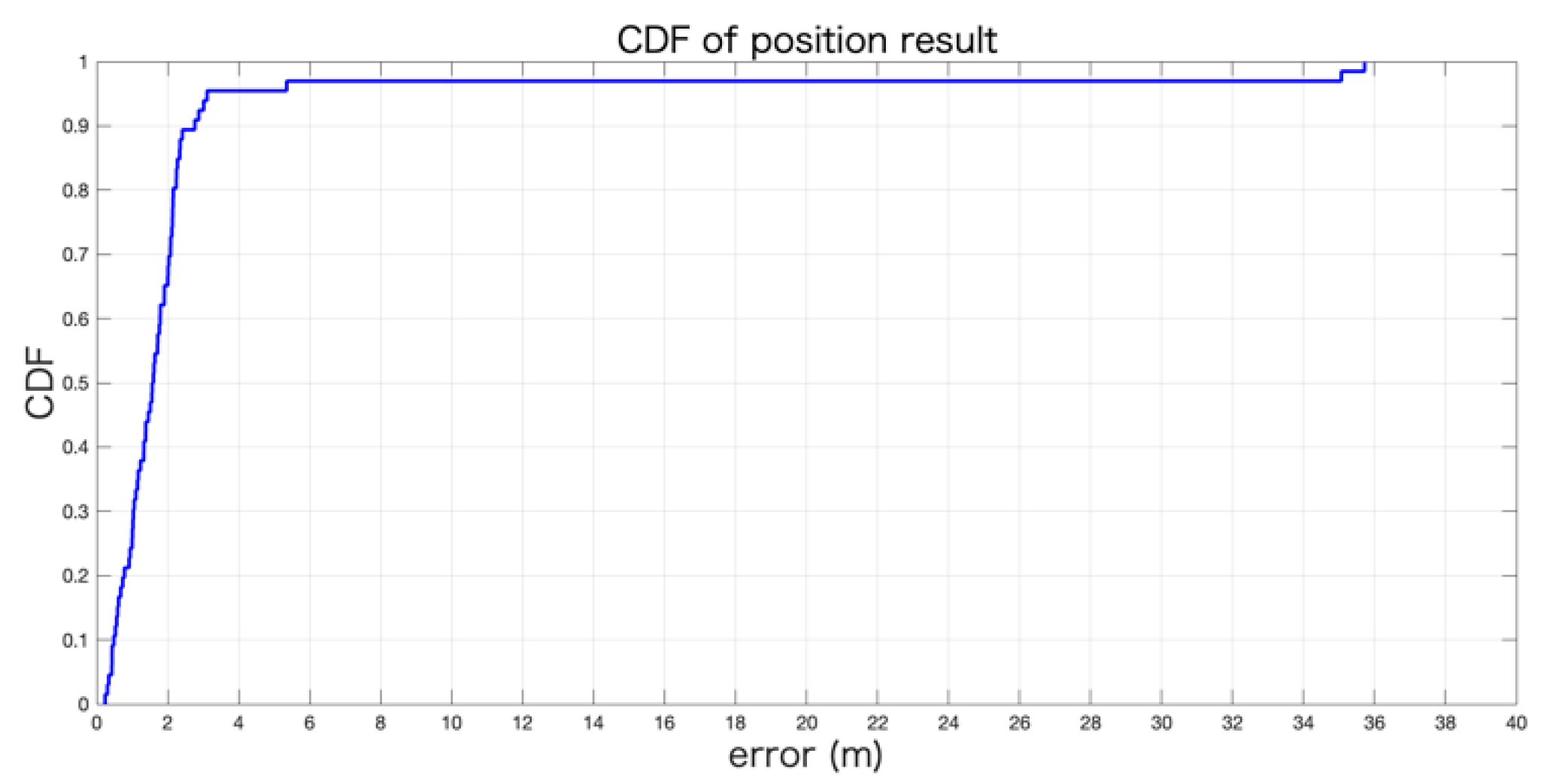

4.2. Evaluation of Proposed PDR-Assisted Visual Integrity Monitoring

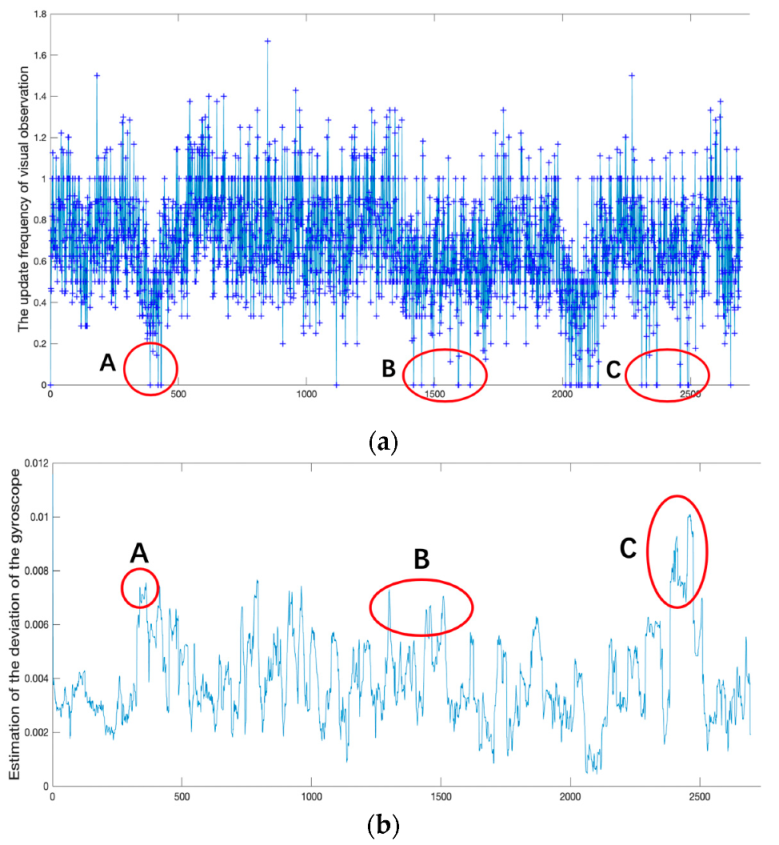

4.2.1. Section A

4.2.2. Section B

4.2.3. Section C

5. Summary and Discussion

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Wang, Y.; Peng, A.; Lin, Z.; Zheng, L.; Zheng, H. Pedestrian Dead Reckoning-Assisted Visual Inertial Odometry Integrity Monitoring. Sensors 2019, 19, 5577. https://doi.org/10.3390/s19245577

Chicago/Turabian Style: Wang, Yuqin, Ao Peng, Zhichao Lin, Lingxiang Zheng, and Huiru Zheng. 2019. "Pedestrian Dead Reckoning-Assisted Visual Inertial Odometry Integrity Monitoring" Sensors 19, no. 24: 5577. https://doi.org/10.3390/s19245577