AttentivU: An EEG-Based Closed-Loop Biofeedback System for Real-Time Monitoring and Improvement of Engagement for Personalized Learning

Abstract

1. Introduction

2. Related Work

2.1. Defining and Measuring Engagement in Learning Environments

2.2. Measuring Individual’s Engagement Level Using EEG

2.3. Delivering Feedback about Engagement Level

3. Materials and Methods: Study 1

3.1. AttentivU: Design Choices and Implementation

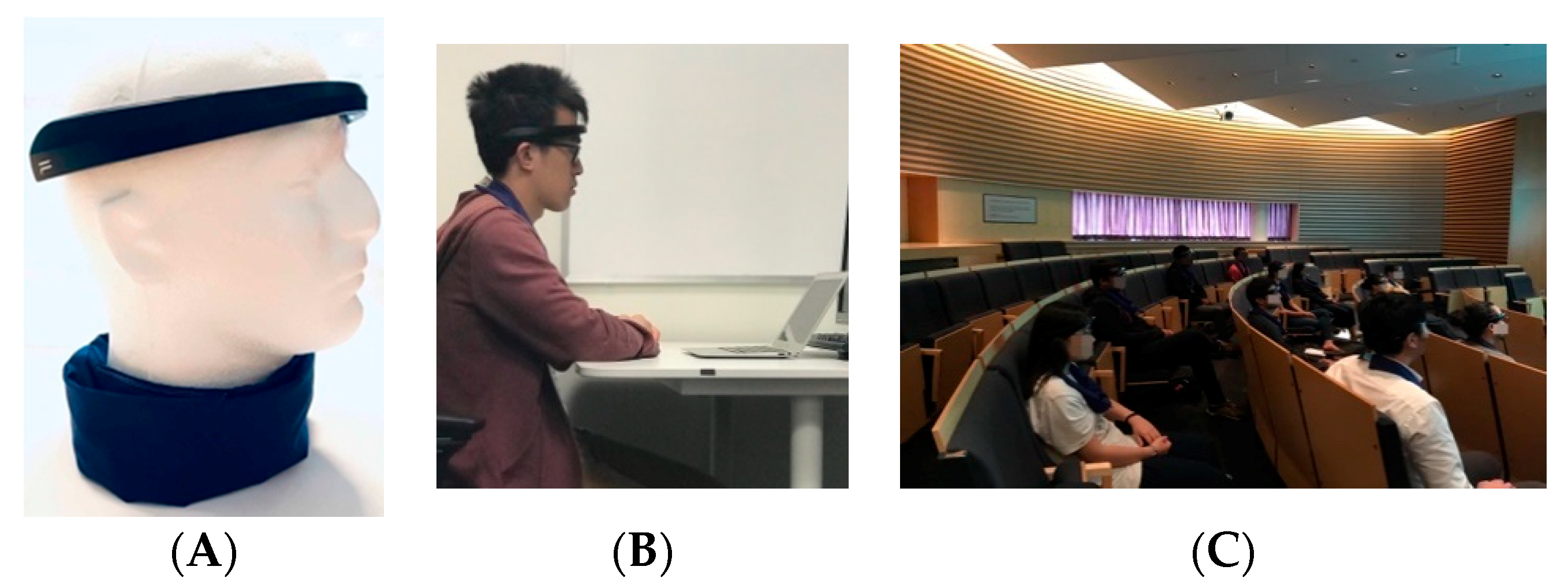

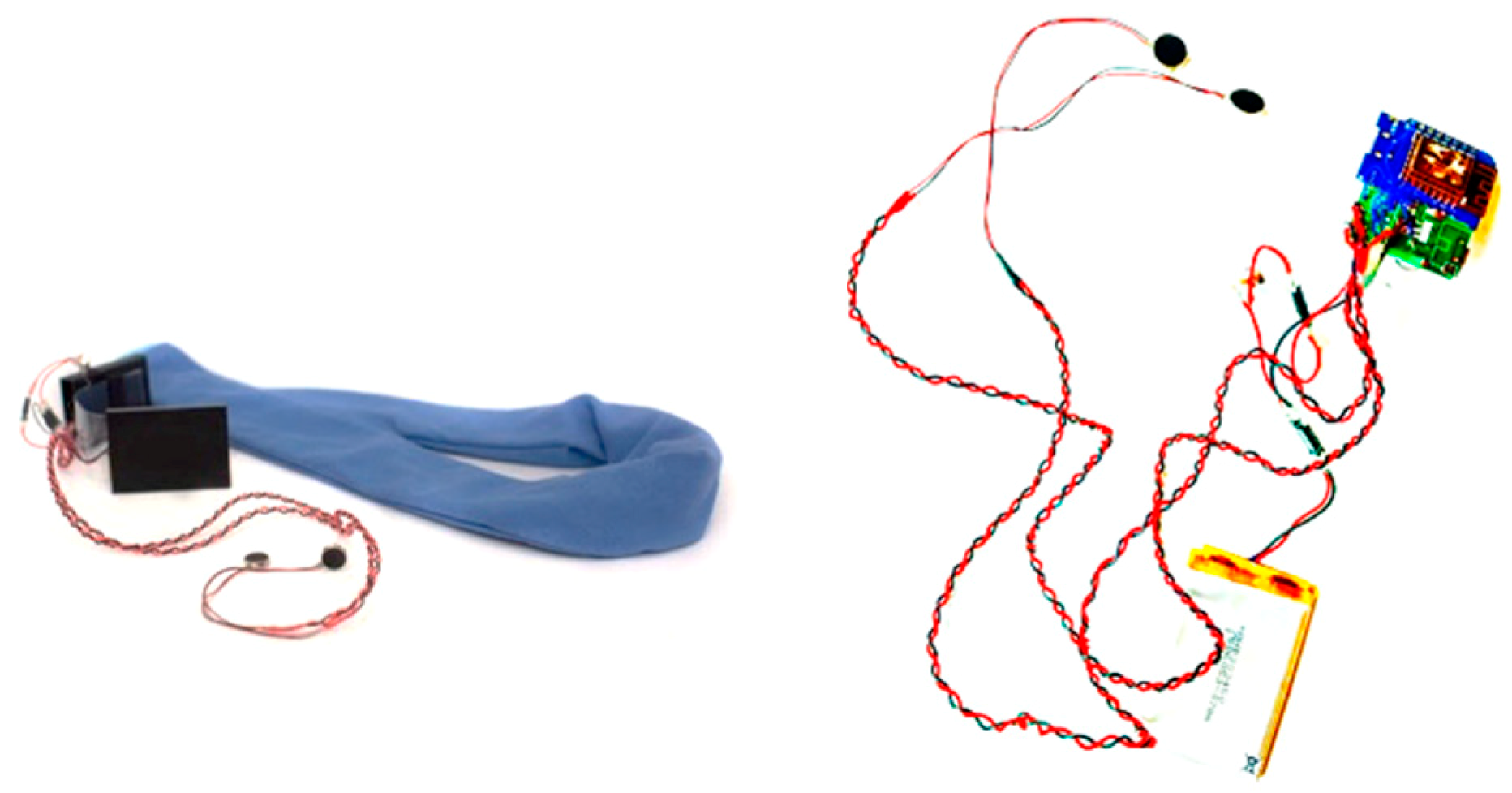

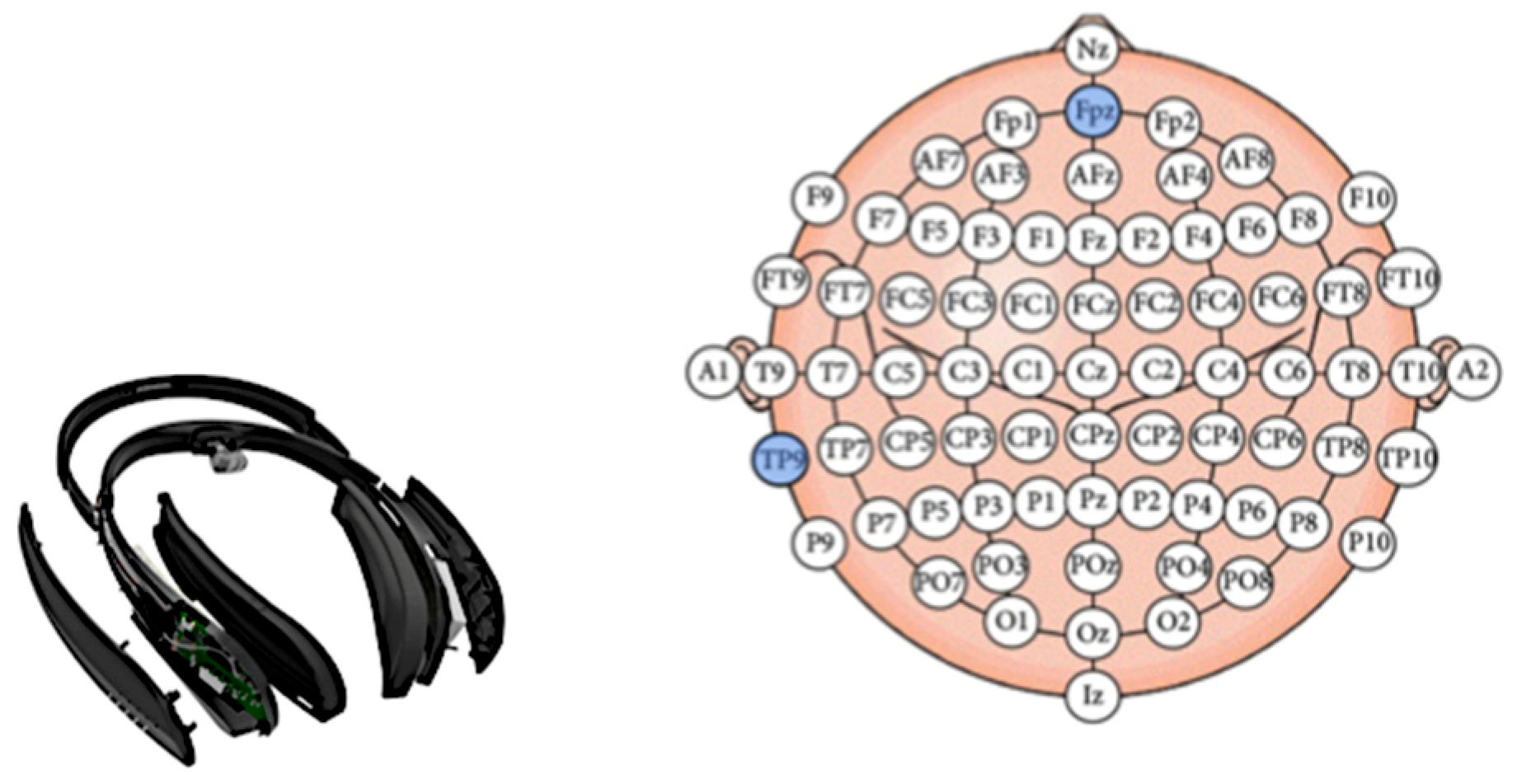

3.1.1. Form Factor for Engagement Measurement Component

3.1.2. Form Factor for the Feedback Component

3.1.3. Engagement Index and Signal Processing

3.2. Pilot Studies

3.2.1. Pilot Study 1

3.2.2. Pilot Study 2

3.2.3. Pilot Study 3

3.3. User Study 1: Methods and Measures

3.3.1. Participants

3.3.2. Experimental Task

3.3.3. Experimental Procedure

- (1) Biofeedback. Each time a drop in engagement from medium to low was detected, the scarf vibrated. If the engagement level remained low after this first vibration, a further vibration was administered within 60 s.

- (2) Random feedback. The scarf vibrated, but the vibrations did not correlate with or depend on the user's engagement level. The vibrations were set up as follows: no vibration was allowed within 2 min of a random vibration, and a vibration could occur within a period of 4 to 6 min (4 min for shorter lectures, 6 min for longer ones), so that the vibrations were not too frequent or annoying.

- (3) No feedback (NF). The scarf did not vibrate.
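The two vibrating conditions above can be sketched as simple scheduling rules. The following Python is a minimal illustration, not the authors' implementation: the function names, the `(time_s, level)` sample format, and the choice to repeat a vibration exactly 60 s after the previous one are assumptions, as is the reading of the random condition as gaps drawn uniformly between the 2 min minimum and the 4 or 6 min window.

```python
import random
from typing import Iterable, List, Optional, Tuple

LOW, MEDIUM, HIGH = "low", "medium", "high"


def biofeedback_times(samples: Iterable[Tuple[float, str]],
                      repeat_after_s: float = 60.0) -> List[float]:
    """Return the times (s) at which the scarf would vibrate.

    A vibration fires on a medium-to-low drop, and again once
    `repeat_after_s` has elapsed if engagement is still low
    (assumed interpretation of the 60 s rule).
    """
    times: List[float] = []
    prev_level: Optional[str] = None
    last_vibration: Optional[float] = None
    for t, level in samples:
        if level == LOW:
            if prev_level == MEDIUM:
                times.append(t)          # drop from medium to low
                last_vibration = t
            elif last_vibration is not None and t - last_vibration >= repeat_after_s:
                times.append(t)          # still low after the earlier vibration
                last_vibration = t
        else:
            last_vibration = None        # engagement recovered; reset
        prev_level = level
    return times


def random_feedback_times(lecture_s: float, max_gap_s: float,
                          min_gap_s: float = 120.0,
                          rng: Optional[random.Random] = None) -> List[float]:
    """Return vibration times that ignore engagement entirely.

    Gaps are drawn uniformly from [min_gap_s, max_gap_s] (hypothetical
    reading: 120 s minimum, 240 s or 360 s maximum by lecture length).
    """
    rng = rng or random.Random()
    times: List[float] = []
    t = rng.uniform(min_gap_s, max_gap_s)
    while t < lecture_s:
        times.append(t)
        t += rng.uniform(min_gap_s, max_gap_s)
    return times


if __name__ == "__main__":
    trace = [(0, MEDIUM), (10, LOW), (40, LOW), (70, LOW),
             (80, HIGH), (90, MEDIUM), (100, LOW)]
    print(biofeedback_times(trace))
    print(random_feedback_times(1200, 240, rng=random.Random(0)))
```

The no-feedback (NF) condition needs no scheduler, since the scarf never vibrates. Keeping the two rules as separate pure functions makes it easy to check that the random condition has a vibration frequency comparable to the biofeedback condition without sharing its dependence on engagement.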

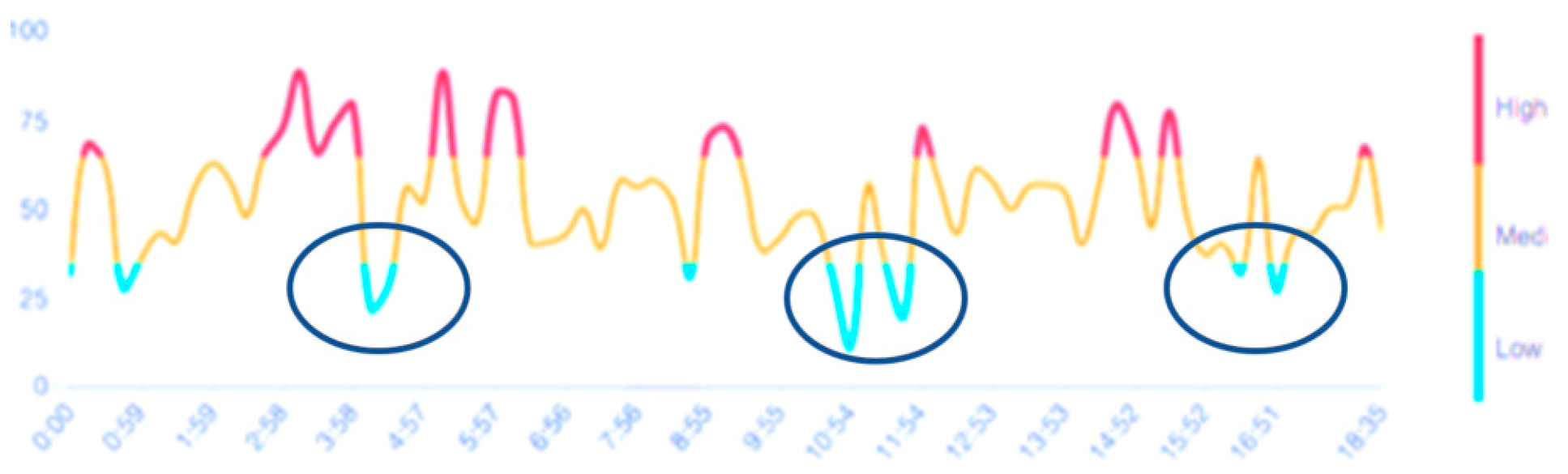

4. Results of Study 1

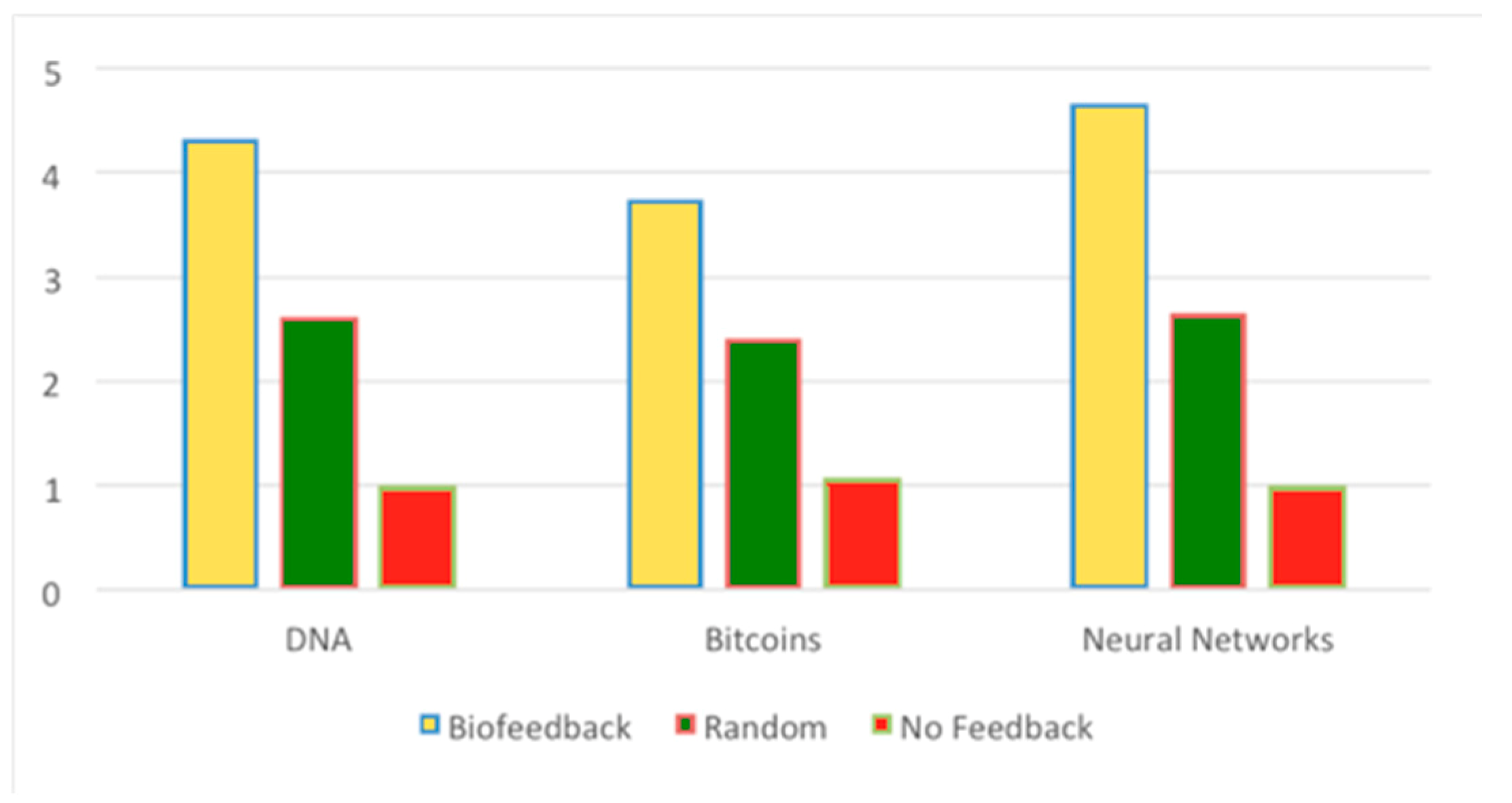

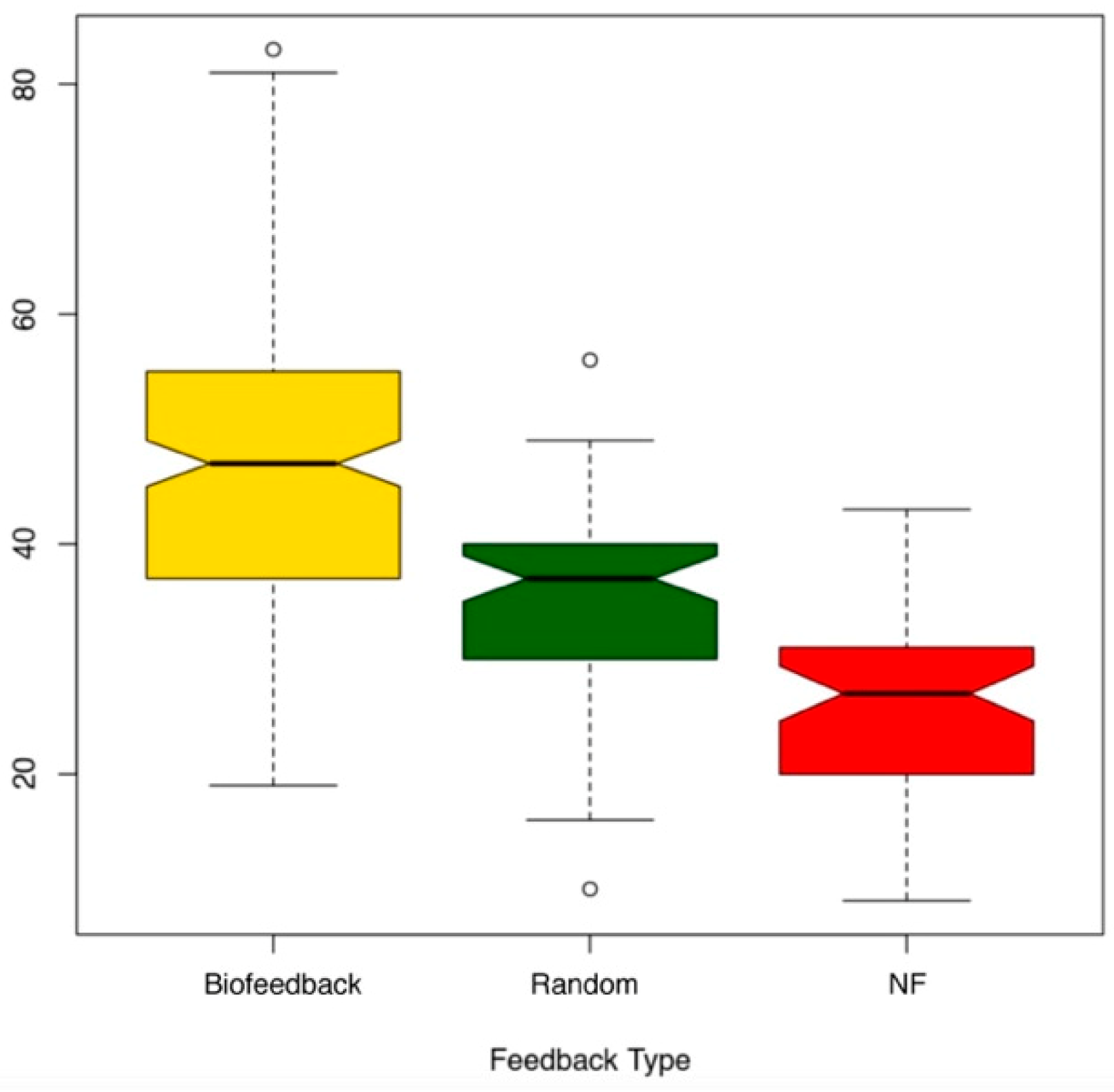

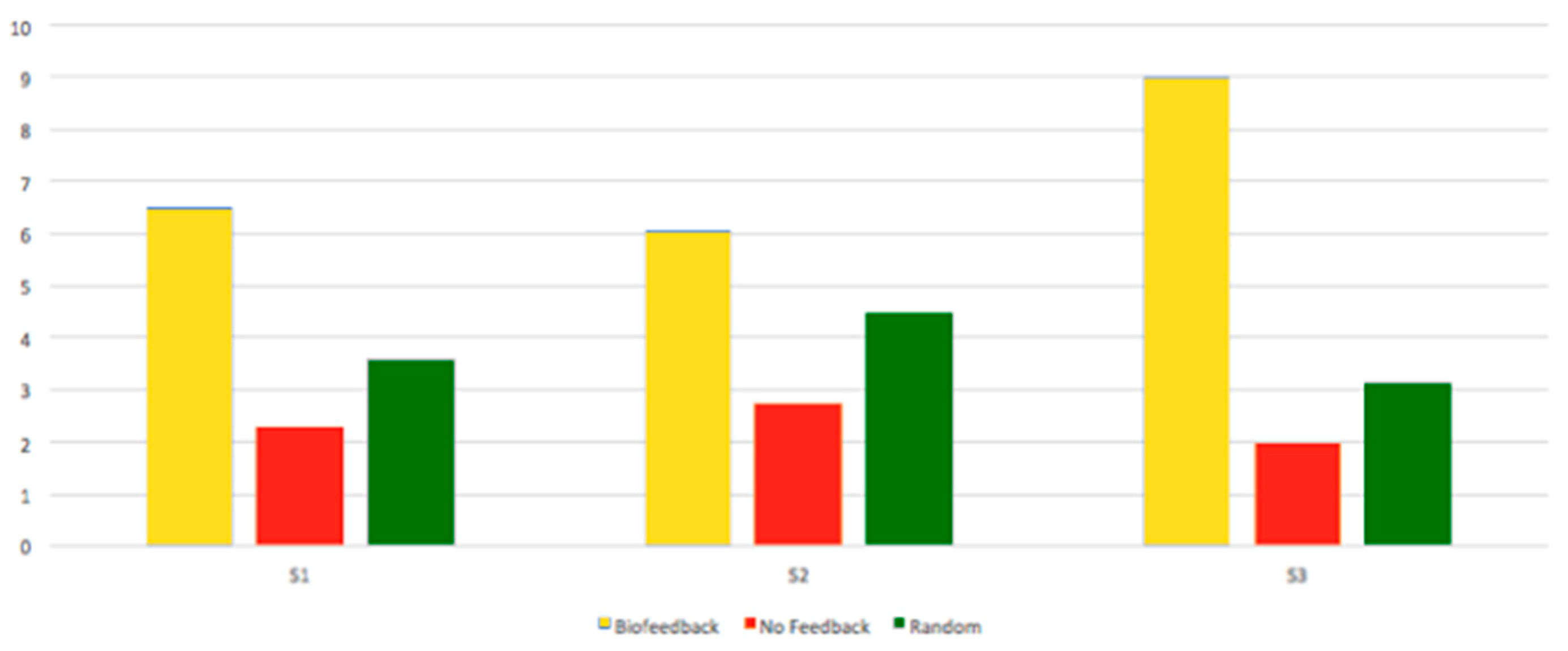

4.1. Performance on Content Tests

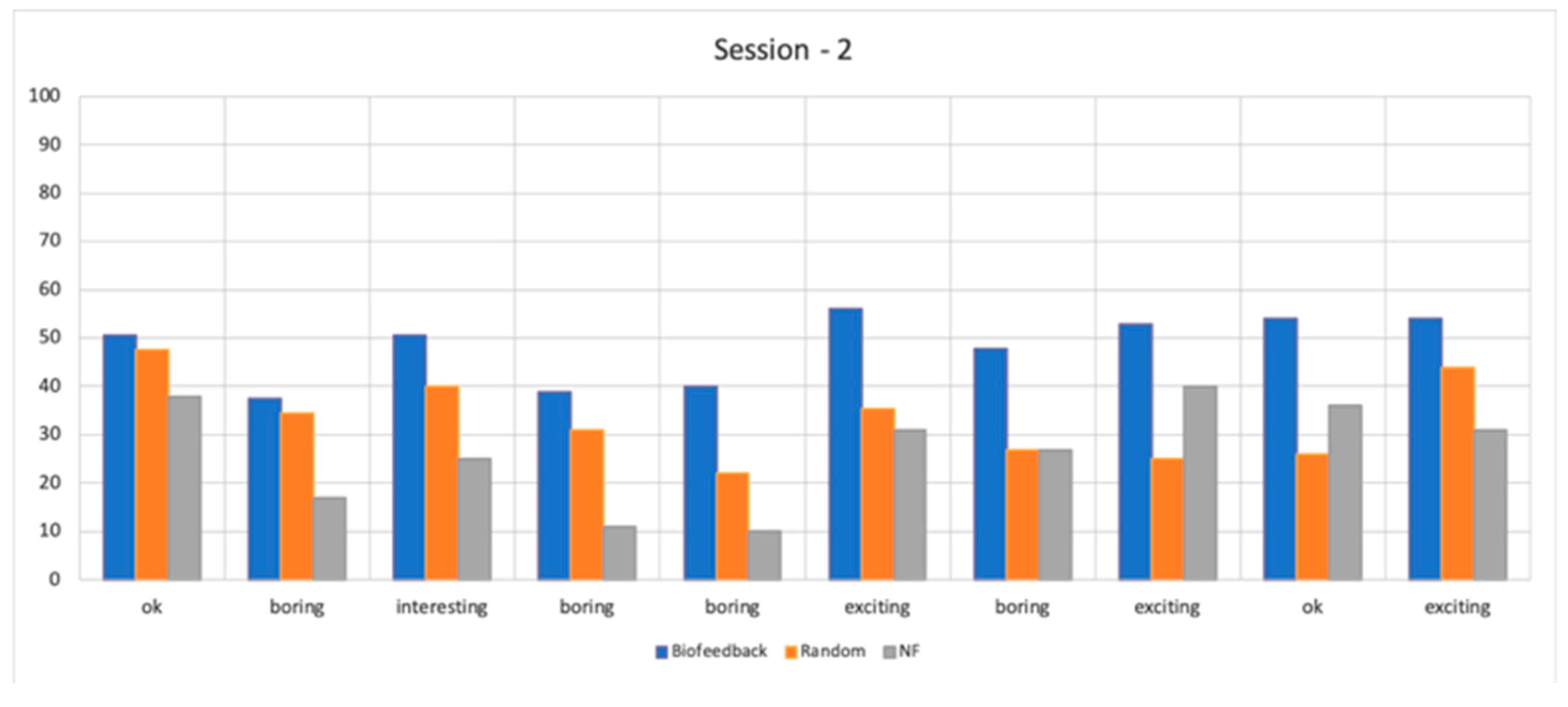

4.2. Design Insights from Questionnaires

4.3. Informal Interviews with Users

4.3.1. Remarks on Feedback Effectiveness

4.3.2. Measuring Engagement

4.3.3. Conclusions for Study 1

5. User Study 2: Methods and Measures

5.1. Participants

5.2. Experimental Task

5.3. Experimental Procedure

6. Results of Study 2

6.1. Performance on Content Tests

6.2. Informal Interviews with Users from Study 2

6.3. Conclusions for Study 2

7. Discussion, Challenges, Future Work, and Limitations

8. Ethical Considerations

9. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

| Random Effect | Variance Explained | Standard Deviation |
|---|---|---|
| Slide Number | 0 | 0 |
| Slide Type | 0.05 | 2.19 |
| Vibration Count | 0.09 | 5.36 |

ANOVA: p < 0.001; AIC = 2401.4; BIC = 2724.7; Chi sq. = 248.4; D.f. = 2; Marginal R² = 0.40; Conditional R² = 0.55.

Post-hoc (Adjusted Holm), p < 0.01 for all pairs:

| Pair | Standard Error | z |
|---|---|---|
| Random vs. NF | 1.48 | −7.93 |
| Biofeedback vs. NF | 1.7 | 14.68 |
| Random vs. Biofeedback | 2.08 | −6.38 |
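As a rough check on the post-hoc rows, the reported z statistics can be converted to two-sided p-values and Holm-adjusted. This is a sketch only: it assumes the z values are referred to a standard normal distribution and uses a hand-written Holm step-down procedure; the source does not state which software or exact procedure produced the table.

```python
import math
from typing import Dict, List


def two_sided_p(z: float) -> float:
    """Two-sided p-value for a z statistic under a standard normal."""
    return math.erfc(abs(z) / math.sqrt(2))


def holm_adjust(pvals: List[float]) -> List[float]:
    """Holm step-down adjustment, returned in the input order."""
    m = len(pvals)
    order = sorted(range(m), key=lambda i: pvals[i])
    adjusted = [0.0] * m
    running_max = 0.0
    for rank, i in enumerate(order):
        # Smallest p is multiplied by m, the next by m - 1, and so on.
        adj = min(1.0, (m - rank) * pvals[i])
        running_max = max(running_max, adj)   # enforce monotonicity
        adjusted[i] = running_max
    return adjusted


# z statistics copied from the post-hoc table.
z_scores: Dict[str, float] = {
    "Random vs. NF": -7.93,
    "Biofeedback vs. NF": 14.68,
    "Random vs. Biofeedback": -6.38,
}

raw = [two_sided_p(z) for z in z_scores.values()]
adjusted = dict(zip(z_scores, holm_adjust(raw)))

if __name__ == "__main__":
    for pair, p in adjusted.items():
        print(f"{pair}: Holm-adjusted p = {p:.3g}")
```

Under these assumptions all three adjusted p-values fall well below 0.01, which agrees with the threshold reported in the table.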

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Kosmyna, N.; Maes, P. AttentivU: An EEG-Based Closed-Loop Biofeedback System for Real-Time Monitoring and Improvement of Engagement for Personalized Learning. Sensors 2019, 19, 5200. https://doi.org/10.3390/s19235200