Efficient Kernel-Based Subsequence Search for Enabling Health Monitoring Services in IoT-Based Home Setting

Abstract

:1. Introduction

- Designing a kernel learning task aimed at approximating DTW to reduce computational burden of the subsequence search.

- Comparing the proposed kernel-based DTW approximation with traditional DTW-based implementations and other state-of-the-art algorithms.

- Validating the proposed approach on a simple benchmark toy example (https://www.cs.unm.edu/mueen/FastestSimilaritySearch.html) and on a more complex one, namely, the “User Identification From Walking Activity” dataset, freely downloadable from the UCI Repository.

- Validating the results through pattern query experiments on a dataset self-rehabilitation dataset, specifically collected in a real-life project. Self-rehabilitation dataset is available from the corresponding author on reasonable request.

2. Backgound

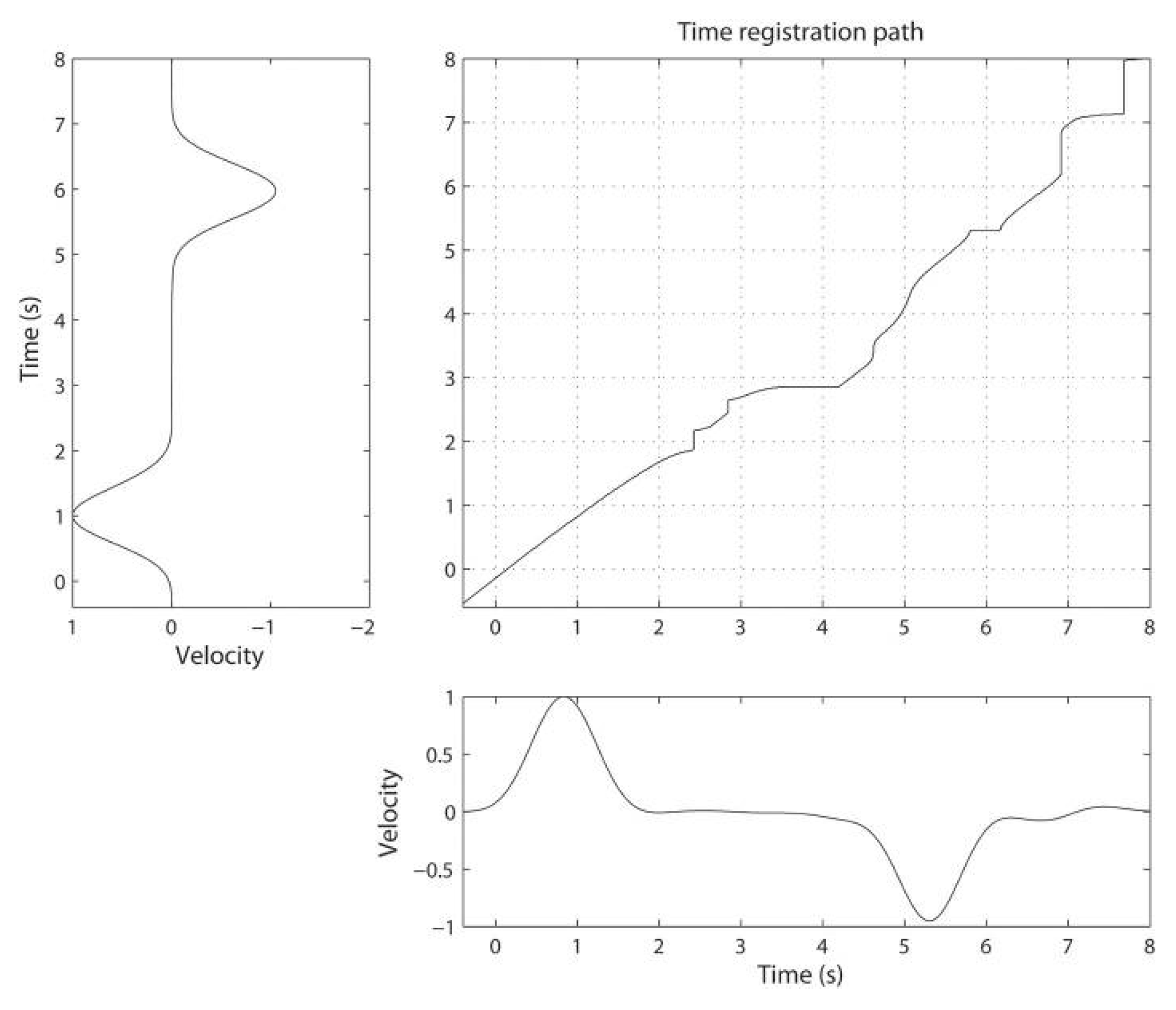

2.1. Dynamic Time Warping

- Boundary conditions and . These conditions enforce the alignment to start and finish at the extremes of the two series, meaning that the first elements of X and Y, as well as the last ones, must be aligned to each other.

- Monotonicity condition: and . This condition simply ensures that if an element in X precedes a second one this should also hold for the corresponding elements in Y, and vice versa.

- Step size condition: for . This condition ensures that no element in X and Y can be omitted and that there are no replications in the alignment, meaning that all the index pairs contained in a warping path are pairwise distinct. Note that the step size condition implies the monotonicity condition.

| Algorithm 1 Optimal warping path algorithm |

| Input Accumulated cost matrix D Output optimal warping path

|

2.2. Dynamic Time Warping for Subsequence Search

| Algorithm 2 DTW-based subsequence search algorithm |

| Input

reference pattern , a longer data stream , with , and a threshold Output a list of repetitions of X within Y having, individually, a DTW lower than . The list is ranked depending on the individual DTW

|

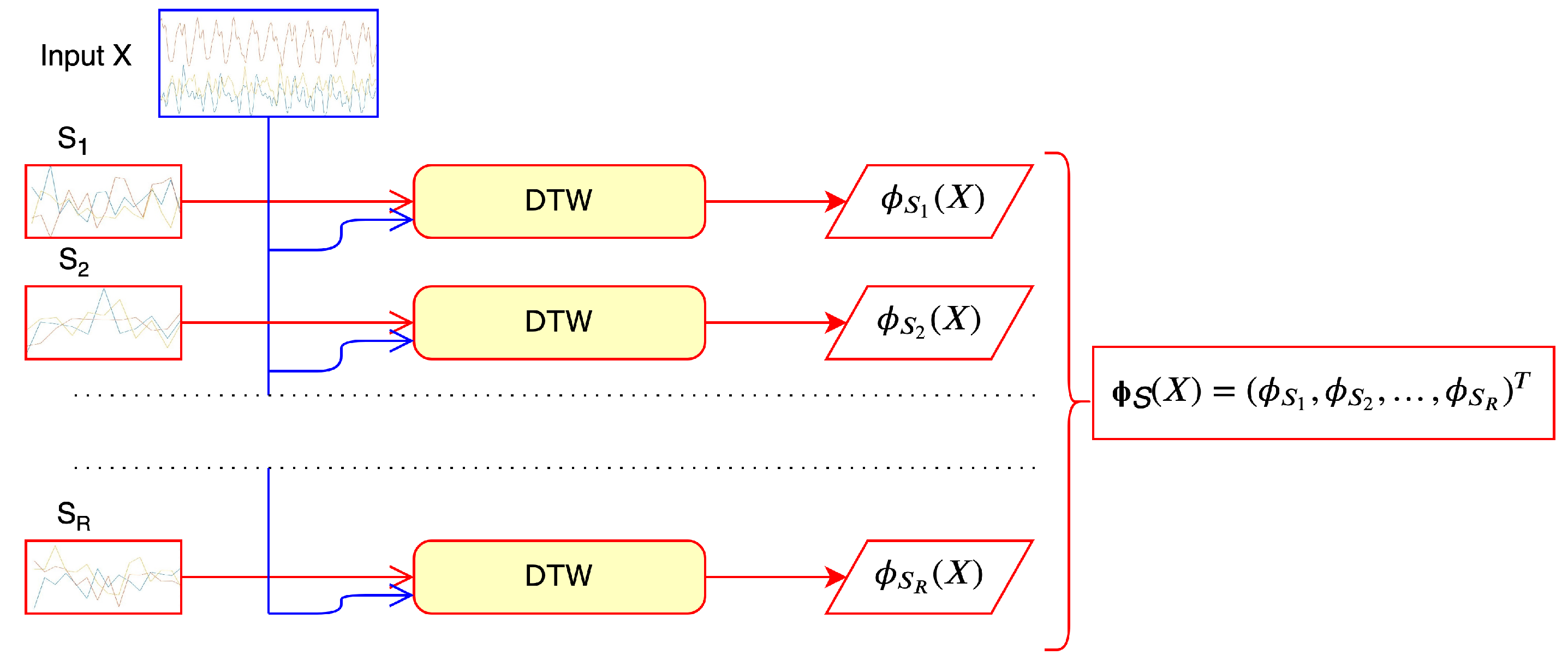

3. Learning a Kernel to Approximate DTW

3.1. Time-Series Kernels via Alignments

3.2. Learning a Kernel for Subsequence Search

3.3. Extension to Multiple Reference Patterns

| Algorithm 3 Learning a kernel for approximating DTW in the case of multiple references and multiple data streams |

| Inputn reference patterns , m data streams and parameters . Output a matrix containing the kernel values.

|

4. Experimental Setting

4.1. Organization of the Experiments

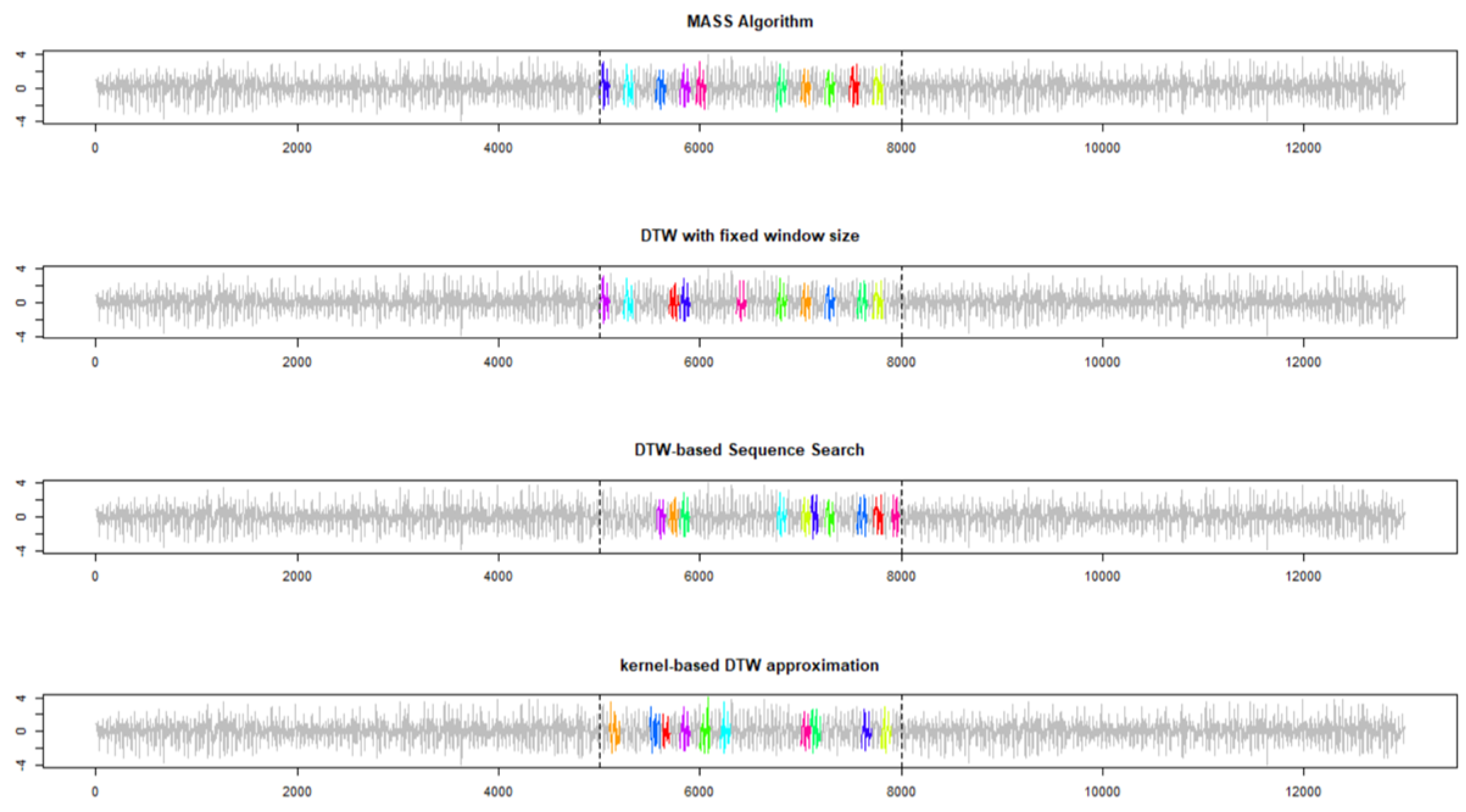

4.2. Experiment 1: A Univariate Case

- MASS [30]: a fast similarity search algorithm for subsequences under Euclidean distance and correlation coefficient (experiments refer to MASS under Euclidean distance, only). A strong assumption of MASS is that the identified subsequences have the same length of the reference.

- DTW with fixed window: based on the same assumption of MASS but using DTW instead of Euclidean distance.

- DTW-based subsequence search algorithm described in Algorithm 2.

- DTW-based Kernel constructed to approximate the exact DTW.

4.3. Experiment 2: A Multivariate Case

- Sampling frequency of the accelerometer: DELAY_FASTEST with network connections disabled.

- A separate file for each participant.

- Every row in each file consists of time-step, x acceleration, y acceleration, and z acceleration.

4.4. Experiment 3: A Real-Life Application

- Flexo-extension of the knee (sit-down position)

- Raise and lower the arms (sit-down position)

- Rotate the torso (sit-down position)

- Back extension of the legs (stand-up position)

- Light squat (stand-up position)

4.5. Computational Setting

5. Results

5.1. Results of Experiment 1

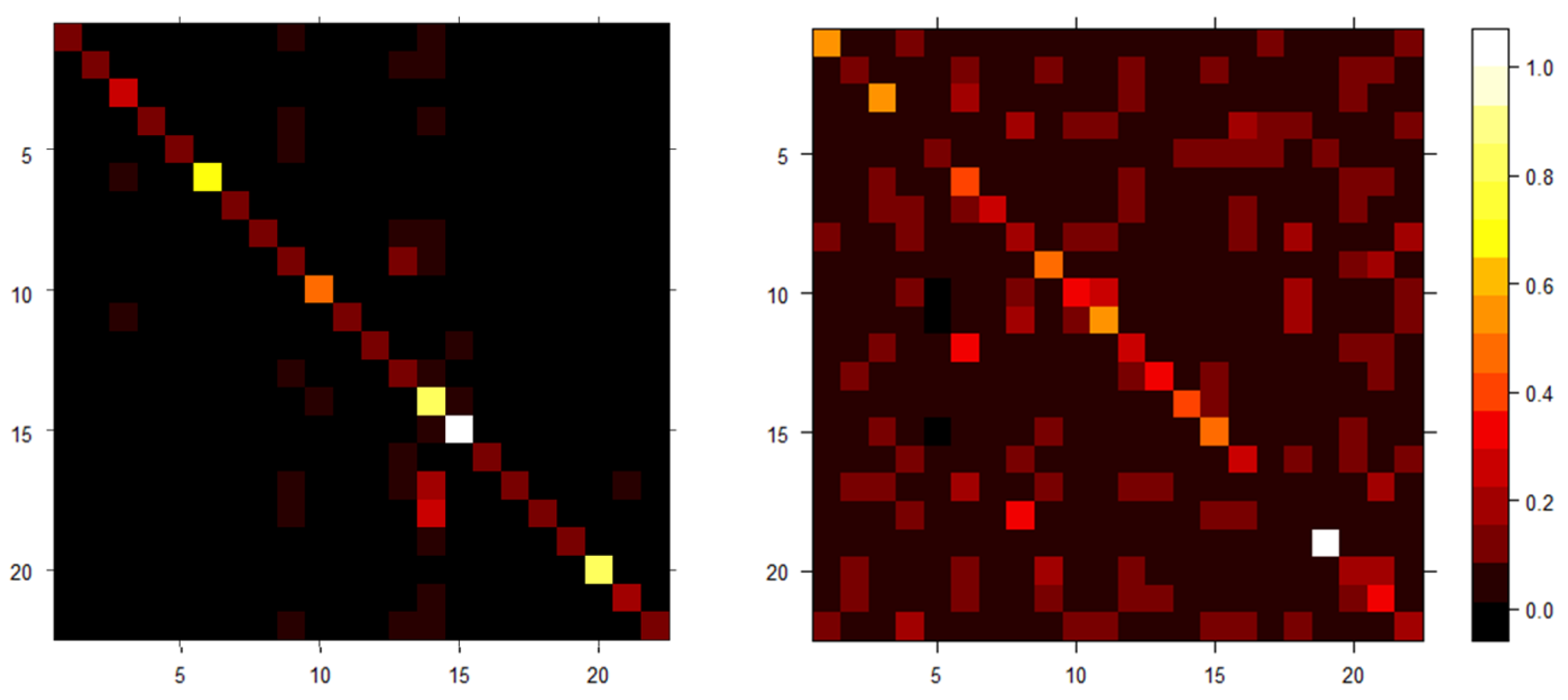

5.2. Results on Experiment 2

5.3. Results of Experiment 3

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Müller, M. Dynamic time warping. In Information Retrieval for Music and Motion; Springer: Berlin, Germany, 2007; pp. 69–84. [Google Scholar]

- Han, L.; Gao, C.; Zhang, S.; Li, D.; Sun, Z.; Yang, G.; Li, J.; Zhang, C.; Shao, G. Speech Recognition Algorithm of Substation Inspection Robot Based on Improved DTW. In International Conference on Intelligent and Interactive Systems and Applications; Springer: Berlin, Germany, 2018; pp. 47–54. [Google Scholar]

- Tang, J.; Cheng, H.; Zhao, Y.; Guo, H. Structured dynamic time warping for continuous hand trajectory gesture recognition. Pattern Recognit. 2018, 80, 21–31. [Google Scholar] [CrossRef]

- Calli, B.; Kimmel, A.; Hang, K.; Bekris, K.; Dollar, A. Path Planning for Within-Hand Manipulation over Learned Representations of Safe States. In Proceedings of the International Symposium on Experimental Robotics (ISER), Buenos Aires, Argentina, 5–8 November 2018. [Google Scholar]

- Bauters, K.; Cottyn, J.; Van Landeghem, H. Real time trajectory matching and outlier detection for assembly operator trajectories. In Proceedings of the Internation Simulation Conference, Eurosis, Ponta Delgada, Portugal, 6–8 June 2018. [Google Scholar]

- Paiyarom, S.; Tangamchit, P.; Keinprasit, R.; Kayasith, P. Fall detection and activity monitoring system using dynamic time warping for elderly and disabled people. In Proceedings of the 3rd International Convention on Rehabilitation Engineering & Assistive Technology, Singapore, 22–26 April 2009; p. 9. [Google Scholar]

- Varatharajan, R.; Manogaran, G.; Priyan, M.K.; Sundarasekar, R. Wearable sensor devices for early detection of Alzheimer disease using dynamic time warping algorithm. Clust. Comput. 2018, 21, 681–690. [Google Scholar] [CrossRef]

- Candelieri, A.; Archetti, F. Global optimization in machine learning: The design of a predictive analytics application. Soft Comput. 2019, 23, 2969–2977. [Google Scholar] [CrossRef]

- Candelieri, A.; Archetti, F. Identifying typical urban water demand patterns for a reliable short-term forecasting–the icewater project approach. Procedia Eng. 2014, 89, 1004–1012. [Google Scholar] [CrossRef]

- Izakian, H.; Pedrycz, W.; Jamal, I. Fuzzy clustering of time series data using dynamic time warping distance. Eng. Appl. Artif. Intell. 2015, 39, 235–244. [Google Scholar] [CrossRef]

- Petitjean, F.; Forestier, G.; Webb, G.I.; Nicholson, A.E.; Chen, Y.; Keogh, E. Dynamic time warping averaging of time series allows faster and more accurate classification. In Proceedings of the 2014 IEEE International Conference on Data Mining, Shenzhen, China, 14–17 December 2014; pp. 470–479. [Google Scholar]

- Radoi, A.; Burileanu, C. Retrieval of Similar Evolution Patterns from Satellite Image Time Series. Appl. Sci. 2018, 8, 2435. [Google Scholar] [CrossRef]

- Sakoe, H.; Chiba, S.; Waibel, A.; Lee, K.F. Dynamic programming algorithm optimization for spoken word recognition. Readings Speech Recognit. 1990, 159, 224. [Google Scholar]

- Itakura, F. Minimum prediction residual principle applied to speech recognition. IEEE Trans. Acoust. Speech Signal Process. 1975, 23, 67–72. [Google Scholar] [CrossRef]

- Mueen, A.; Keogh, E. Extracting optimal performance from dynamic time warping. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 13–17 August 2016; pp. 2129–2130. [Google Scholar]

- Silva, D.F.; Batista, G.E. Speeding up all-pairwise dynamic time warping matrix calculation. In Proceedings of the 2016 SIAM International Conference on Data Mining, Miami, FL, USA, 5–7 May 2016; pp. 837–845. [Google Scholar]

- Silva, D.F.; Giusti, R.; Keogh, E.; Batista, G.E. Speeding up similarity search under dynamic time warping by pruning unpromising alignments. Data Min. Knowl. Discov. 2018, 32, 988–1016. [Google Scholar] [CrossRef]

- Keogh, E.J.; Pazzani, M.J. Derivative dynamic time warping. In Proceedings of the 2001 SIAM International Conference on Data Mining, Chicago, IL, USA, 5–7 April 2001; pp. 1–11. [Google Scholar]

- Nagendar, G.; Jawahar, C.V. Fast approximate dynamic warping kernels. In Proceedings of the Second ACM IKDD Conference on Data Sciences, Bangalore, India, 18–21 March 2015; pp. 30–38. [Google Scholar]

- Cuturi, M.; Vert, J.P.; Birkenes, O.; Matsui, T. A kernel for time series based on global alignments. In Proceedings of the 2007 IEEE International Conference on Acoustics, Speech and Signal Processing-ICASSP’07, Honolulu, HI, USA, 15–20 April 2007; Volume 2, p. II-413. [Google Scholar]

- Cuturi, M. Fast global alignment kernels. In Proceedings of the 28th International Conference on Machine Learning (ICML-11), Bellevue, WA, USA, 28 June–2 July 2011; pp. 929–936. [Google Scholar]

- Bahlmann, C.; Haasdonk, B.; Burkhardt, H. Online handwriting recognition with support vector machines-a kernel approach. In Proceedings of the Eighth International Workshop on Frontiers in Handwriting Recognition, Niagara on the Lake, ON, Canada, 6–8 August 2002; pp. 49–54. [Google Scholar]

- Sakurai, Y.; Yoshikawa, M.; Faloutsos, C. FTW: Fast similarity search under the time warping distance. In Proceedings of the twenty-fourth ACM SIGMOD-SIGACT-SIGART Symposium on Principles of Database Systems, Baltimore, MD, USA, 13–15 June 2005; pp. 326–337. [Google Scholar]

- Yen, I.E.H.; Lin, T.W.; Lin, S.D.; Ravikumar, P.K.; Dhillon, I.S. Sparse random feature algorithm as coordinate descent in hilbert space. In Advances in Neural Information Processing Systems; The MIT Press: Cambridge, MA, USA, 2014; pp. 2456–2464. [Google Scholar]

- Marteau, P.F.; Gibet, S. On recursive edit distance kernels with application to time series classification. IEEE Trans. Neural Netw. Learn. Syst. 2014, 26, 1121–1133. [Google Scholar] [CrossRef] [PubMed]

- Wu, L.; Yen, I.E.H.; Yi, J.; Xu, F.; Lei, Q.; Witbrock, M. Random warping series: A random features method for time-series embedding. arXiv 2018, arXiv:1809.05259. [Google Scholar]

- Frazier, P.I. Bayesian Optimization. In Recent Advances in Optimization and Modeling of Contemporary Problems; Informs PubsOnLine: Catonsville, MD, USA, 2018; Chapter 11; pp. 255–278. [Google Scholar] [CrossRef]

- Hutter, F.; Kotthoff, L.; Vanschoren, J. Automatic machine learning: Methods, systems, challenges. In Challenges in Machine Learning; Springer: Berlin, Germany, 2019. [Google Scholar]

- Feurer, M.; Klein, A.; Eggensperger, K.; Springenberg, J.T.; Blum, M.; Hutter, F. Auto-sklearn: Efficient and Robust Automated Machine Learning. In Automated Machine Learning; Springer: Berlin, Germany, 2019; pp. 113–134. [Google Scholar]

- Mueen, A.; Zhu, Y.; Yeh, M.; Kamgar, K.; Viswanathan, K.; Gupta, C.; Keogh, E. The Fastest Similarity Search Algorithm for Time Series Subsequences under Euclidean Distance. 2017. Available online: http://www.cs.unm.edu/~mueen/FastestSimilaritySearch.html (accessed on 26 November 2019).

- Casale, P.; Pujol, O.; Radeva, P. Personalization and user verification in wearable systems using biometric walking patterns. Pers. Ubiquitous Comput. 2012, 16, 563–580. [Google Scholar] [CrossRef]

- Ye, L.; Keogh, E. Time series shapelets: A new primitive for data mining. In Proceedings of the 15th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, Paris, France, 28 June–1 July 2009; pp. 947–956. [Google Scholar]

| Coherent Visually | ||

|---|---|---|

| Exercise | DTW-Based Subsequence Search | Kernel-Based DTW Approximation |

| Flexo-extension of the knee, sit-down position. Five repetitions planned. | Yes | Yes |

| Yes | Yes | |

| Yes | Yes | |

| No | Yes | |

| No | Yes | |

| Light squat, stand-up position. Five repetitions planned. | Yes | No |

| Yes | No | |

| Yes | Yes | |

| Yes | - | |

| Yes | - | |

| Back extension of the legs, stand-up position. Five repetitions planned. | Yes | Yes |

| Yes | Yes | |

| Yes | Yes | |

| No | Yes | |

| No | Yes | |

| Rotate the torso, sit-down position. Five repetitions planned. | Yes | Yes |

| Yes | Yes | |

| Yes | - | |

| Yes | - | |

| No | - | |

| Raise and lower the arms, sit-down position. Ten repetitions planned. | Yes | Yes |

| Yes | Yes | |

| Yes | Yes | |

| Yes | Yes | |

| Yes | Yes | |

| No | Yes | |

| Yes | Yes | |

| No | Yes | |

| Yes | - | |

| Yes | - | |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Candelieri, A.; Fedorov, S.; Messina, E. Efficient Kernel-Based Subsequence Search for Enabling Health Monitoring Services in IoT-Based Home Setting. Sensors 2019, 19, 5192. https://doi.org/10.3390/s19235192

Candelieri A, Fedorov S, Messina E. Efficient Kernel-Based Subsequence Search for Enabling Health Monitoring Services in IoT-Based Home Setting. Sensors. 2019; 19(23):5192. https://doi.org/10.3390/s19235192

Chicago/Turabian StyleCandelieri, Antonio, Stanislav Fedorov, and Enza Messina. 2019. "Efficient Kernel-Based Subsequence Search for Enabling Health Monitoring Services in IoT-Based Home Setting" Sensors 19, no. 23: 5192. https://doi.org/10.3390/s19235192

APA StyleCandelieri, A., Fedorov, S., & Messina, E. (2019). Efficient Kernel-Based Subsequence Search for Enabling Health Monitoring Services in IoT-Based Home Setting. Sensors, 19(23), 5192. https://doi.org/10.3390/s19235192