4.2. Data Description

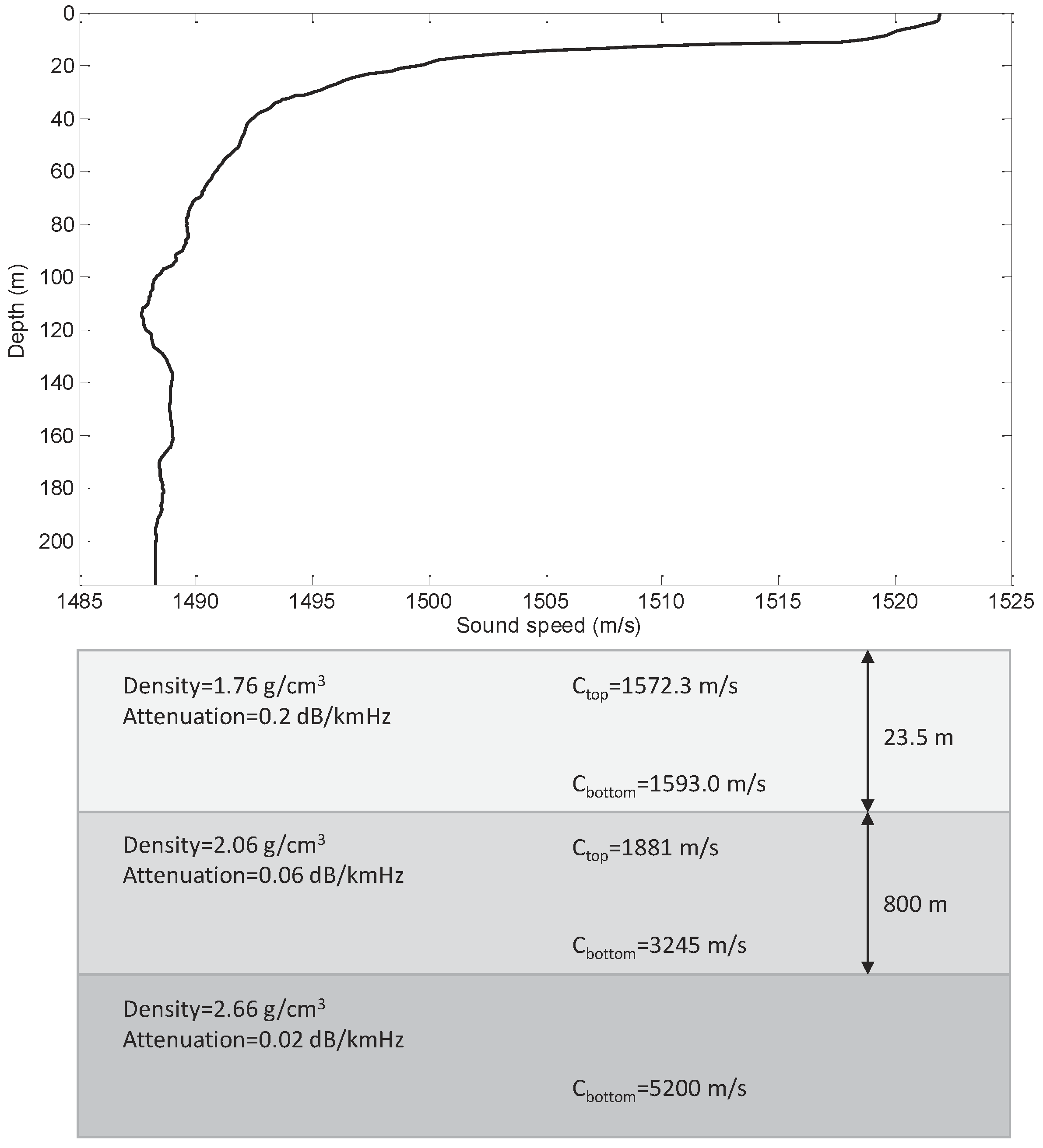

In the simulation, the bandwidth of signal was [50, 210] Hz and the sampling rate was

Hz. The hydrophone array was deployed at a water depth of 213 m. We investigated two UHA topologies, a horizontal circular array (HCA) and a horizontal line array (HLA); note that our method is suitable for UHAs with arbitrary topologies. The HCA had 50 elements uniformly distributed on a circle of 250 m radius. The HLA had 27 elements, with the same layout as the HLA North of SWellEx-96 Event S5 (for details, see http://swellex96.ucsd.edu/hla_north.htm). In fact, the line array was not strictly linear but had a certain degree of curvature. The map of source movement and the location of the hydrophone array are depicted in

Figure 8. The training data included sources with azimuth angles from

to

with

intervals (the course equals the azimuth angle). At each azimuth angle, the source moved from

to

km at a speed of 5 knots (about 2.5 m/s). The source depth was fixed at 54 m. For testing, each test segment lasted ten minutes (960 samples), and the two-source scenario included source one from [

,

km] to [

,

km], and source two from [

,

km] to [

,

km]. The three-source scenario included source one from [

,

km] to [

,

km], source two from [

,

km] to [

,

km], and source three from [

,

km] to [

,

km]. The training data and the testing data did not overlap.
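As a quick consistency check on the figures quoted above, the sketch below computes the time step between test samples and the distance a source covers in one ten-minute segment. It assumes that the 960 samples are evenly spaced in time, which the text does not state explicitly; the function name `segment_geometry` is hypothetical.

```python
KNOT_IN_M_PER_S = 1852.0 / 3600.0  # one knot is one nautical mile (1852 m) per hour


def segment_geometry(duration_s=600.0, n_samples=960, speed_knots=5.0):
    """Return (time step between samples in s, distance covered in km).

    Assumes evenly spaced samples and a constant source speed.
    """
    step_s = duration_s / n_samples
    speed_m_per_s = speed_knots * KNOT_IN_M_PER_S
    distance_km = speed_m_per_s * duration_s / 1000.0
    return step_s, distance_km


step_s, distance_km = segment_geometry()
print(f"time step per sample: {step_s:.3f} s")
print(f"distance per segment: {distance_km:.2f} km")
```

Under these assumptions each sample corresponds to 0.625 s, and a source travels roughly 1.5 km during one test segment.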

The signal was transformed to the frequency domain using the fast Fourier transform (FFT) with a Hanning window. The frame length was s with overlap. The bandwidth for processing was set to Hz (in 5 Hz increments, 21 frequency bins in total). For the HCA, the 50 hydrophones were divided uniformly into five subarrays, that is, , , , , and . For the HLA, the 27 hydrophones were divided into four subarrays with hydrophone indexes , , , and . Twenty snapshots were used to calculate the SCM. Data augmentation was performed using and , generating about training samples.
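The preprocessing chain described above (Hanning-windowed FFT per frame, followed by an SCM estimated from twenty snapshots) can be sketched as follows. This is a minimal illustration, not the authors' implementation: the sampling rate, frame length, hop size, and the 100 Hz bin used in the demo are hypothetical placeholders, and the SCM is a plain average of outer products without any snapshot normalization, which the original processing may or may not apply.

```python
import numpy as np


def framed_fft(x, frame_len, hop):
    """Hann-windowed FFT of each frame.

    x: real array of shape (n_channels, n_samples).
    Returns a complex array of shape (n_channels, n_frames, n_freqs).
    """
    n_frames = 1 + (x.shape[1] - frame_len) // hop
    window = np.hanning(frame_len)
    frames = np.stack(
        [x[:, i * hop:i * hop + frame_len] for i in range(n_frames)], axis=1
    )
    return np.fft.rfft(frames * window, axis=-1)


def sample_covariance(snapshots):
    """SCM for one frequency bin.

    snapshots: complex array of shape (n_channels, n_snapshots).
    """
    n_snapshots = snapshots.shape[1]
    return snapshots @ snapshots.conj().T / n_snapshots


# Demo with hypothetical settings: 10 channels (one ten-element subarray),
# and a signal just long enough to yield exactly 20 frames.
rng = np.random.default_rng(0)
fs, frame_len, hop = 1000, 1000, 500  # assumed values, not from the text
x = rng.standard_normal((10, frame_len + 19 * hop))
spectra = framed_fft(x, frame_len, hop)
freqs = np.fft.rfftfreq(frame_len, d=1.0 / fs)
bin_idx = int(np.argmin(np.abs(freqs - 100.0)))  # pick the bin nearest 100 Hz
scm = sample_covariance(spectra[:, :20, bin_idx])
```

Averaging over twenty snapshots yields a Hermitian, positive semidefinite matrix per frequency bin; repeating the last two lines for each processed bin gives the full set of SCMs for a subarray.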

4.3. The Configuration of DNNs

In direction finding, the FNN had five layers (one input layer, three hidden layers, and one output layer) with 128 hidden nodes. The rectified linear unit (ReLU) [46], $f(x) = \max(0, x)$, was used as the activation function. The initial learning rate was 0.001 and the batch size was 6. The input of the FNN was the FFT coefficients of each frame, so the input dimension of the FNN was 1134 ($27 \times 21 \times 2$, with real and imaginary parts concatenated) for the HLA and 2100 ($50 \times 21 \times 2$) for the HCA.
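To make the input dimensions concrete, the sketch below builds the FNN input vector by concatenating the real and imaginary parts of the per-frame FFT coefficients, then runs a forward pass through a network with three ReLU hidden layers of 128 nodes. Several details are assumptions for illustration only: the number of output nodes (`N_AZIMUTH_CLASSES`), the sigmoid output, the weight initialization, and all function names are hypothetical, and the random weights stand in for a trained model.

```python
import numpy as np

N_AZIMUTH_CLASSES = 72  # hypothetical output size; not given in the text


def fft_features(spectrum):
    """Concatenate real and imaginary parts of FFT coefficients.

    spectrum: complex array of shape (n_hydrophones, n_bins).
    Returns a real vector of length 2 * n_hydrophones * n_bins.
    """
    flat = spectrum.reshape(-1)
    return np.concatenate([flat.real, flat.imag])


def init_fnn(n_in, n_out, n_hidden=128, n_hidden_layers=3, seed=0):
    """Random (untrained) weights for input -> hidden layers -> output."""
    rng = np.random.default_rng(seed)
    sizes = [n_in] + [n_hidden] * n_hidden_layers + [n_out]
    return [
        (rng.standard_normal((a, b)) * np.sqrt(2.0 / a), np.zeros(b))
        for a, b in zip(sizes[:-1], sizes[1:])
    ]


def fnn_forward(params, x):
    """ReLU hidden layers followed by a sigmoid output (assumed)."""
    h = x
    for w, b in params[:-1]:
        h = np.maximum(0.0, h @ w + b)  # ReLU: f(x) = max(0, x)
    w, b = params[-1]
    return 1.0 / (1.0 + np.exp(-(h @ w + b)))


rng = np.random.default_rng(1)
hla = rng.standard_normal((27, 21)) + 1j * rng.standard_normal((27, 21))
hca = rng.standard_normal((50, 21)) + 1j * rng.standard_normal((50, 21))
x_hla = fft_features(hla)  # 27 * 21 * 2 values
x_hca = fft_features(hca)  # 50 * 21 * 2 values
params = init_fnn(n_in=x_hca.size, n_out=N_AZIMUTH_CLASSES)
posterior = fnn_forward(params, x_hca)
```

A sigmoid output is used here because several sources may be present at once, so the per-azimuth outputs need not sum to one; a softmax output would be an equally plausible reading of the text.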

In source ranging, the LSTM-RNN had three layers with 896 nodes. The activation function was ReLU. The initial learning rate was and the batch size was 512. The input dimension of the LSTM-RNN was 420 () for the HLA and 630 () for the HCA.

It should be mentioned that all parameters of the FNN and the LSTM-RNN (e.g., the numbers of hidden nodes and hidden layers, the learning rate, and the batch size) were chosen empirically. The TensorFlow [47] toolkit was used for FNN and LSTM-RNN training, and Adam [48] was used for optimization.

4.5. Simulation Results

The first simulation was conducted to investigate the performance of the proposed method under different signal-to-noise ratios (SNRs). White noise was added to the simulated signals, resulting in SNRs of 15, 5, and

dB. The SNR [49] reported here is defined as the SNR at 210 Hz at a single hydrophone when the source range is 1 km (the SNR decreases as the source range increases). Both the source level (SL) and the noise level (NL) were attenuated by

dB/Oct. CBF [34] was chosen as the competing algorithm in direction finding. Twenty snapshots were used to calculate the CBF beamformer power. For fairness, the posterior probability of the FNN was averaged over every twenty frames. The results of the two-source and three-source scenarios on the HCA are summarized in

Table 2. The ROC curves of the two-source and three-source scenarios are plotted in Figure 9 and Figure 10 (the SNR shown there is the SNR of the received signal for each source, and the SLs of all sources are assumed to be equal). Note that fewer points may be visible in the figures than were actually sampled, because (1) some

correspond to the same PDR and FDR and therefore overlap in the figures, and (2) some CBF points fall outside the plotted range because of the large FDR when

is small. The ROC curves show that, although performance degrades at lower SNR, both FNN and CBF detect the sources effectively in general. At first glance, the methods can achieve a high PDR with a low FDR given an appropriate threshold; however, the values of

of CBF are significantly larger than those of FNN. A smaller

implies a stronger ability to suppress interference. Thus, FNN produces fewer phantom peaks than CBF. When the SNR decreases to

dB, the FDR of CBF rises and its PDR decreases, which reveals that the proposed method is more robust than CBF at lower SNR. Furthermore, the estimation errors of FNN are smaller than those of CBF in all conditions, as shown in

Table 2.
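For readers unfamiliar with the baseline, the CBF power used in this comparison can be sketched as B(θ) = wᴴ(θ) R w(θ), where R is the SCM and w(θ) is the steering vector for azimuth θ. The sketch below assumes plane-wave steering vectors and a sound speed of 1500 m/s; neither is specified in this section, so both are illustrative assumptions, as are the function names and the 100 Hz demo frequency.

```python
import numpy as np


def steering_vectors(xy, freq_hz, azimuths_deg, c=1500.0):
    """Plane-wave steering vectors for a horizontal array.

    xy: sensor positions in metres, shape (n_sensors, 2).
    Returns unit-norm vectors of shape (n_azimuths, n_sensors).
    """
    az = np.deg2rad(np.asarray(azimuths_deg, dtype=float))
    directions = np.stack([np.cos(az), np.sin(az)], axis=1)
    delays = directions @ xy.T / c  # relative propagation delays in seconds
    w = np.exp(2j * np.pi * freq_hz * delays)
    return w / np.sqrt(xy.shape[0])


def cbf_power(scm, weights):
    """B(theta) = w^H R w for each row w of weights."""
    return np.einsum("ai,ij,aj->a", weights.conj(), scm, weights).real


# Demo on the HCA geometry: 50 elements on a circle of 250 m radius.
n = 50
phi = 2 * np.pi * np.arange(n) / n
xy = 250.0 * np.stack([np.cos(phi), np.sin(phi)], axis=1)
grid = np.arange(0, 360)  # 1-degree scan grid (assumed)
w = steering_vectors(xy, 100.0, grid)

# Simulated single source at 40 degrees plus weak white noise.
s = np.sqrt(n) * steering_vectors(xy, 100.0, [40.0])[0]
scm = np.outer(s, s.conj()) + 0.1 * np.eye(n)
power = cbf_power(scm, w)
peak_azimuth = int(grid[np.argmax(power)])
```

With a single simulated source, the scan peaks at the simulated azimuth. With several sources, sidelobes of the stronger arrivals add to the scan, which is one way to picture the phantom peaks discussed above.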

For source ranging, we compared the performance of the LSTM-RNN with that of an FNN with five layers (three of them hidden) and 896 hidden nodes. As shown in Table 2, the LSTM-RNN outperforms the FNN, which demonstrates the superiority of the LSTM-RNN in modeling long-term temporal information. In addition, the locations of the test sources do not necessarily appear in the training set. Nevertheless, the proposed method still gives reliable range estimates, which reveals that it can localize sources as long as the test source locations lie within the region covered by the training set.

We also evaluated the performance on the HLA under different SNRs. The results are summarized in Table 3. The proposed method also performs well in direction finding and source ranging on the HLA. Comparing Table 2 and Table 3, the performance of the proposed method is broadly similar across the two array topologies. However, the

of the HLA is larger than that of the HCA, because the angular resolution of the HCA is constant across azimuth angles whereas that of the HLA varies. The experimental results suggest that the proposed method can be applied to UHAs with arbitrary topologies. For simplicity, all following simulations were conducted on the HCA.

The second simulation compared the performance with and without data augmentation in the two-source scenario. The SNR was set to 5 dB and the neural network was the LSTM-RNN. The

and

without data augmentation are

km and

. From

Table 3, with data augmentation, the

and

drop to

km and

respectively. The results demonstrate that data augmentation improves the generalization ability of the DNN model.

The third simulation investigated the performance of the proposed method when the SLs of the two testing sources differed; the source with the higher SL is referred to as the dominant source. The SNR of the dominant source was 5 dB. Define

(dB) (

corresponds to the dominant source and

corresponds to the weak source). Figure 11 compares the ROC curves of CBF and FNN when

dB. Both methods achieve a high PDR with a low FDR when the two SLs are comparable. Nevertheless, the false detections of CBF rise faster than those of FNN as the difference between the two SLs increases. In addition, the

and

of source ranging are summarized in

Table 4. With

increasing, the estimation error grows because the weak source is masked by the dominant source, which leads to a larger error for the weak source.

The last experiment investigated the spatial resolution of the proposed method. The separations of two sources were set to

,

,

,

, and

. Here, the azimuth of each source was fixed, while its range varied from 1 km to

km. The SNR was set to 5 dB. The detection accuracies of FNN and CBF in direction finding are shown in

Figure 12. A detection is deemed correct only when both the number of sources and the azimuth angles of the two sources are estimated correctly. The accuracy is defined as the ratio of the number of correct detections to the number of test samples. Figure 12 shows that both FNN and CBF can discriminate two widely separated sources, with FNN being more accurate than CBF. As the separation becomes smaller, the advantage of FNN in discriminating closely spaced sources becomes more apparent. We also evaluated source ranging using the LSTM-RNN. The results are summarized in

Table 5, where the

and

are calculated using only the test samples whose azimuth angles were estimated accurately. The results show that the separation has little influence on source ranging if the azimuth angles are estimated accurately. Note that the

and

are slightly smaller than those shown in Table 2, because the testing sources here are at shorter ranges than those in the first simulation.
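The detection accuracy defined in this experiment can be written as a short function. The sketch assumes that azimuth estimates are compared as exact labels on the azimuth grid (no tolerance), since no tolerance is stated; the function name and the example values are hypothetical.

```python
def detection_accuracy(estimated, truth):
    """Fraction of test samples whose detection is fully correct.

    estimated, truth: sequences of azimuth tuples, one tuple per test sample.
    A sample counts as correct only if the number of sources and every
    azimuth label match (order within a tuple is ignored).
    """
    if len(estimated) != len(truth):
        raise ValueError("estimated and truth must have the same length")
    correct = sum(
        1 for est, ref in zip(estimated, truth) if sorted(est) == sorted(ref)
    )
    return correct / len(truth)


# Hypothetical example: two true sources at 30 and 40 degrees in every sample.
truth = [(30, 40), (30, 40), (30, 40), (30, 40)]
estimated = [(40, 30), (30,), (30, 45), (30, 40)]
accuracy = detection_accuracy(estimated, truth)
```

In this example the second sample misses a source and the third misplaces one, so only two of the four detections count as correct.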