A Model-Based System for Real-Time Articulated Hand Tracking Using a Simple Data Glove and a Depth Camera

Abstract

1. Introduction

- Design and implementation of a multi-model articulated hand tracking system that runs in real time without a GPU and improves robustness by about 40%, with comparable or better accuracy than the state of the art.

- A redefined and simplified role for the data glove: it provides an approximate initialization (Section 3.2.2) and a strong pose-space regularization (Section 3.3.3). This increases the robustness of our system and frees the data glove from tedious calibration and from heavily hampering hand movement.

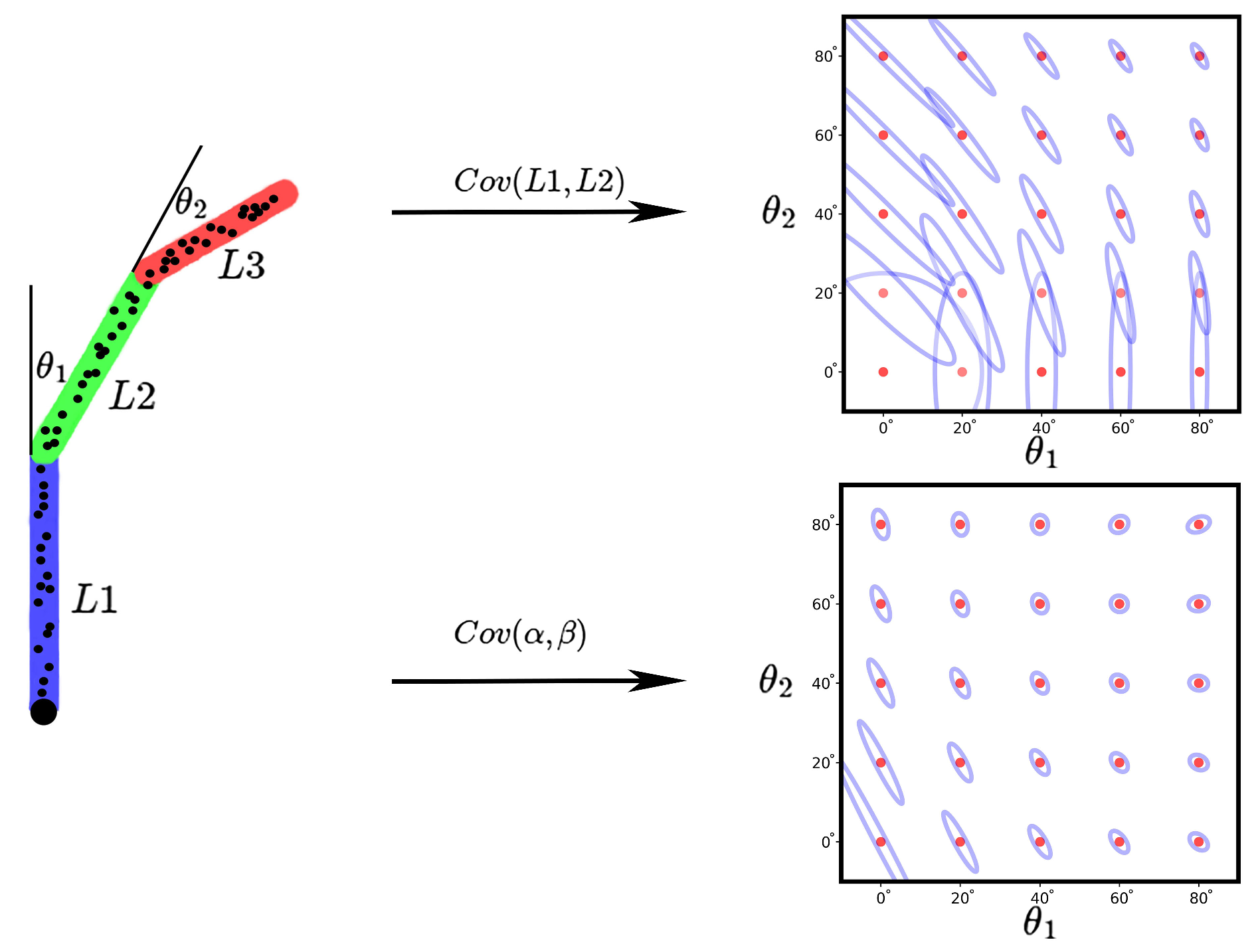

- A new DoF setting that avoids potential artificial error in the kinematic chain; see Section 3.1.

- New fitting terms with a simplified approach to computing tracking correspondences, which reduces computational cost without loss of accuracy; see Section 3.3.1.

- A new strategy for handling shape adjustment of the triangular mesh hand model: a tailored shape integration term (Section 3.3.2) that fits the input images better, and an adaptive collision prior (Section 3.3.3), consistent with the shape adjustment, that prevents self-intersection and produces plausible hand poses.

2. Related Works

2.1. Appearance-Based Methods

2.2. Model-Based Methods

2.2.1. Initialization

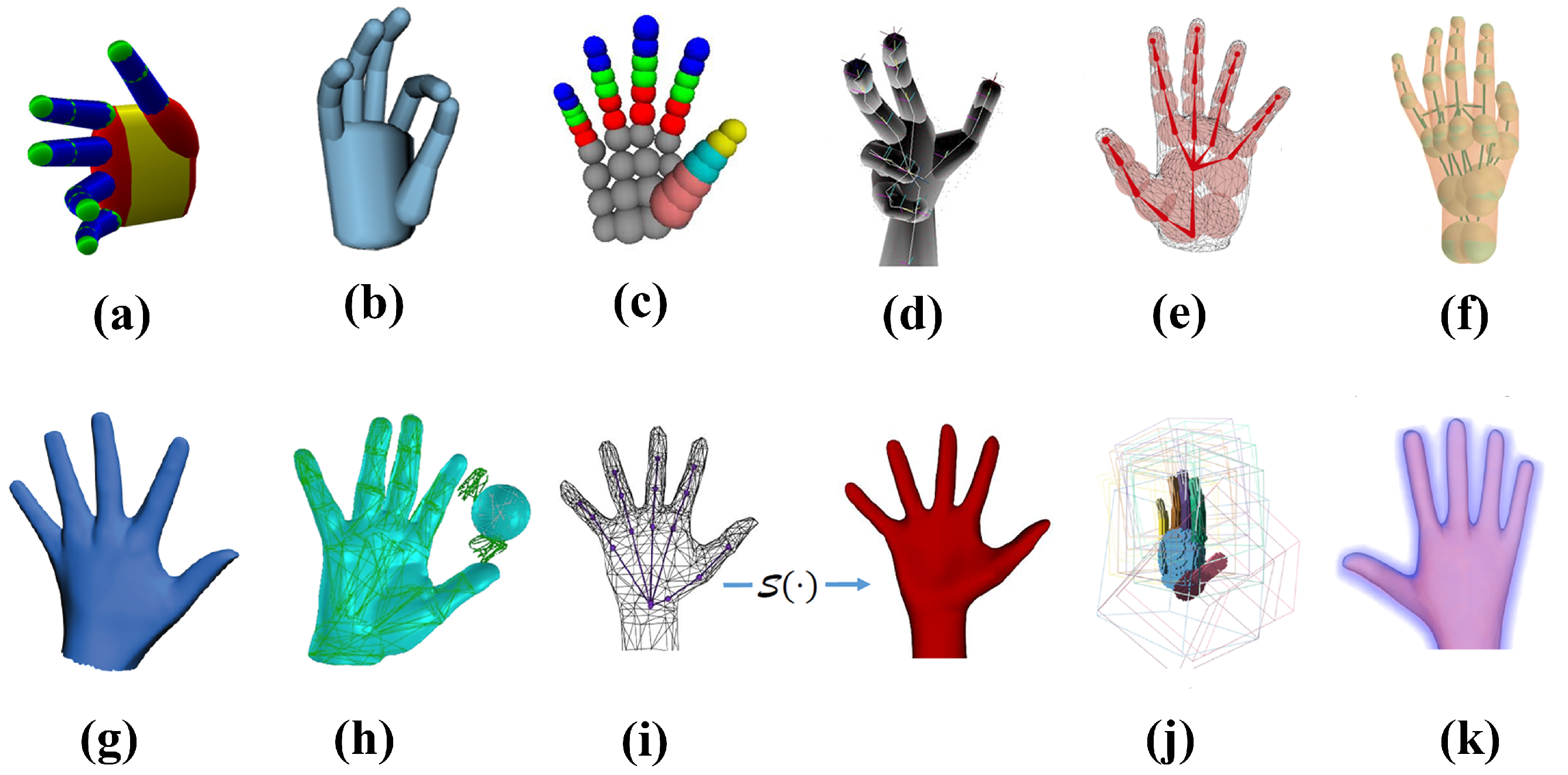

2.2.2. Hand Model

2.2.3. Objective Function

2.3. Multi Model Systems

3. Method

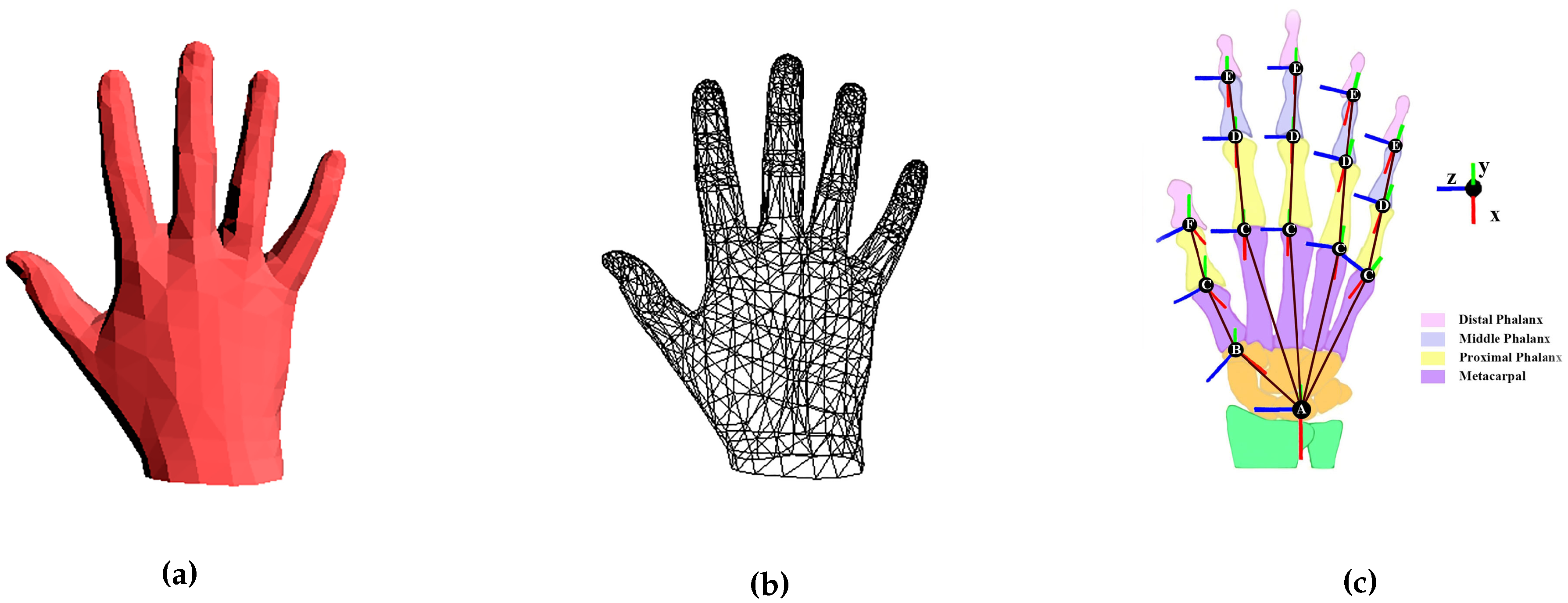

3.1. Hand Model

3.2. Data Acquisition and Processing

3.2.1. Camera Data

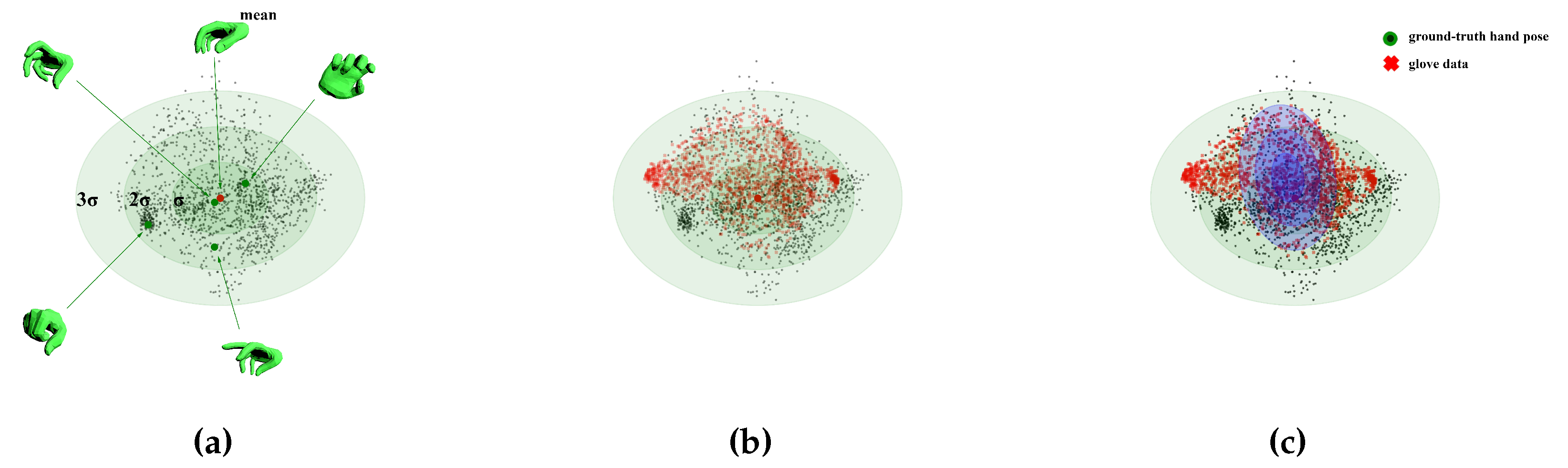

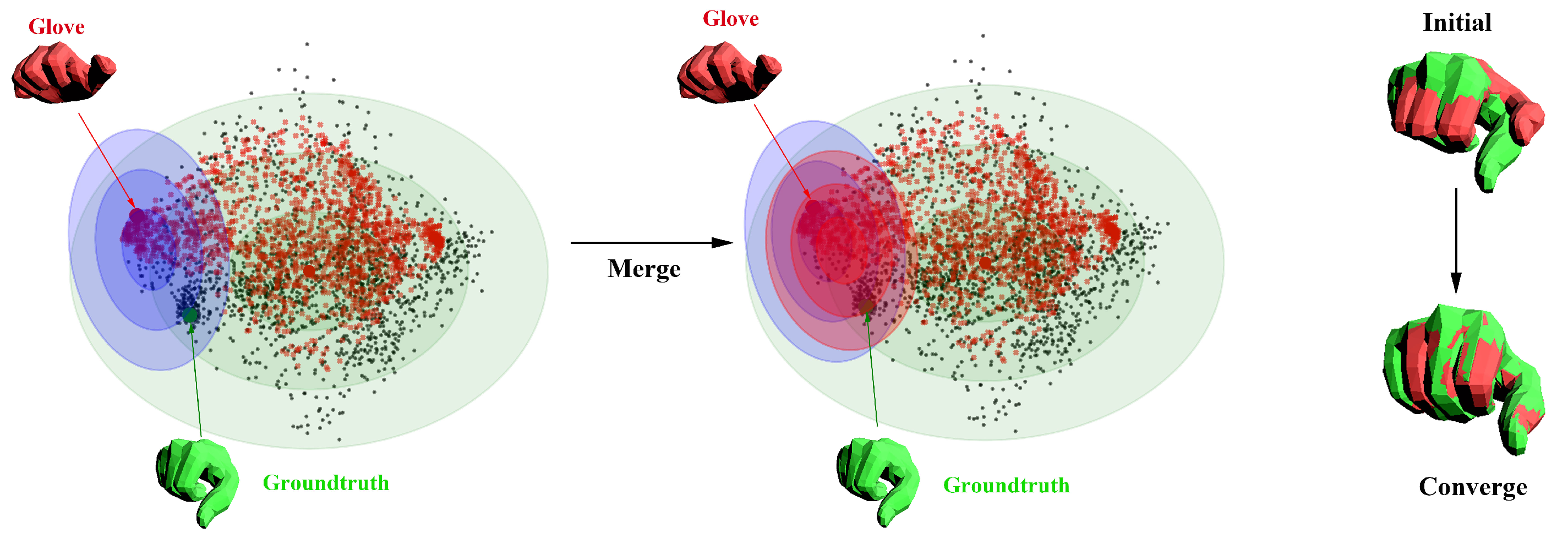

3.2.2. Glove Data

3.3. Objective Function

3.3.1. Fitting Terms

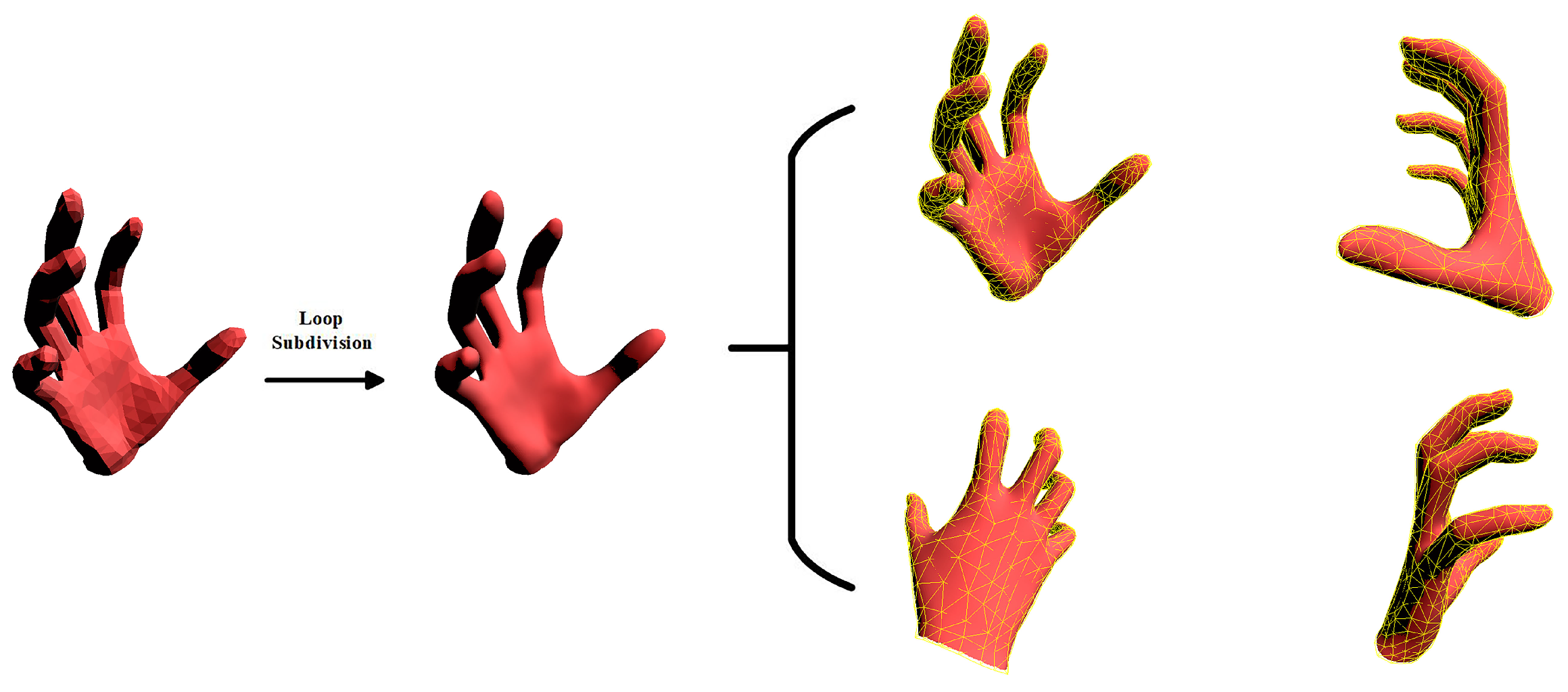

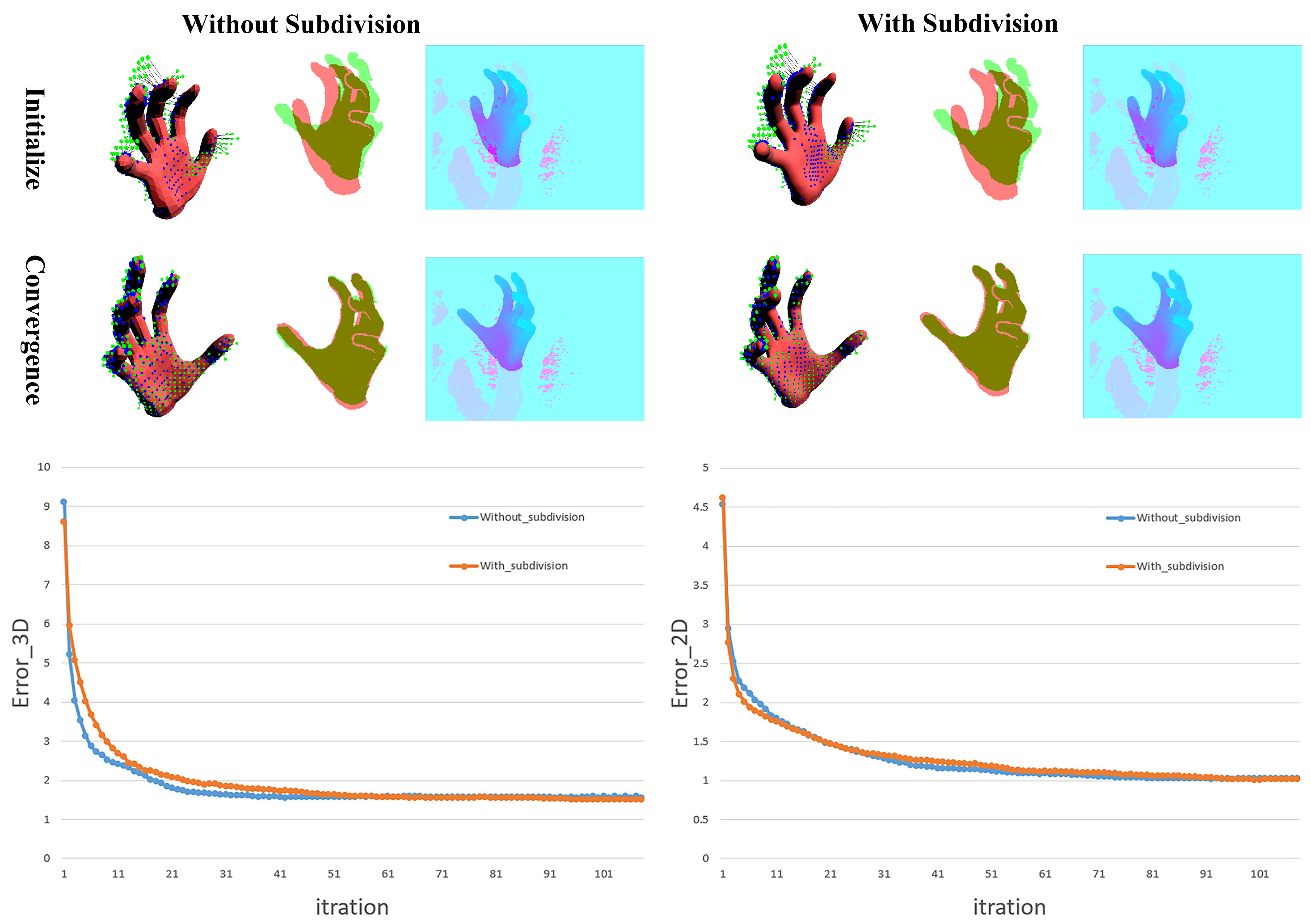

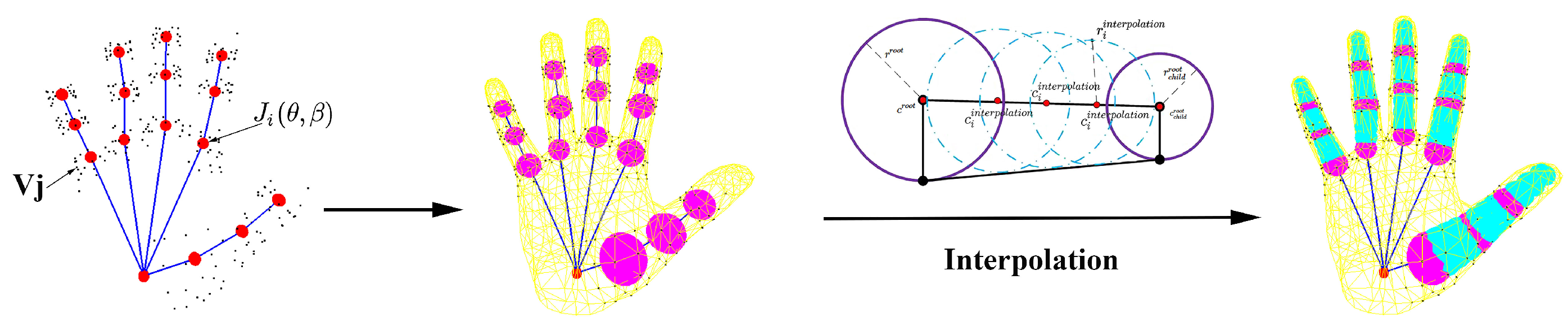

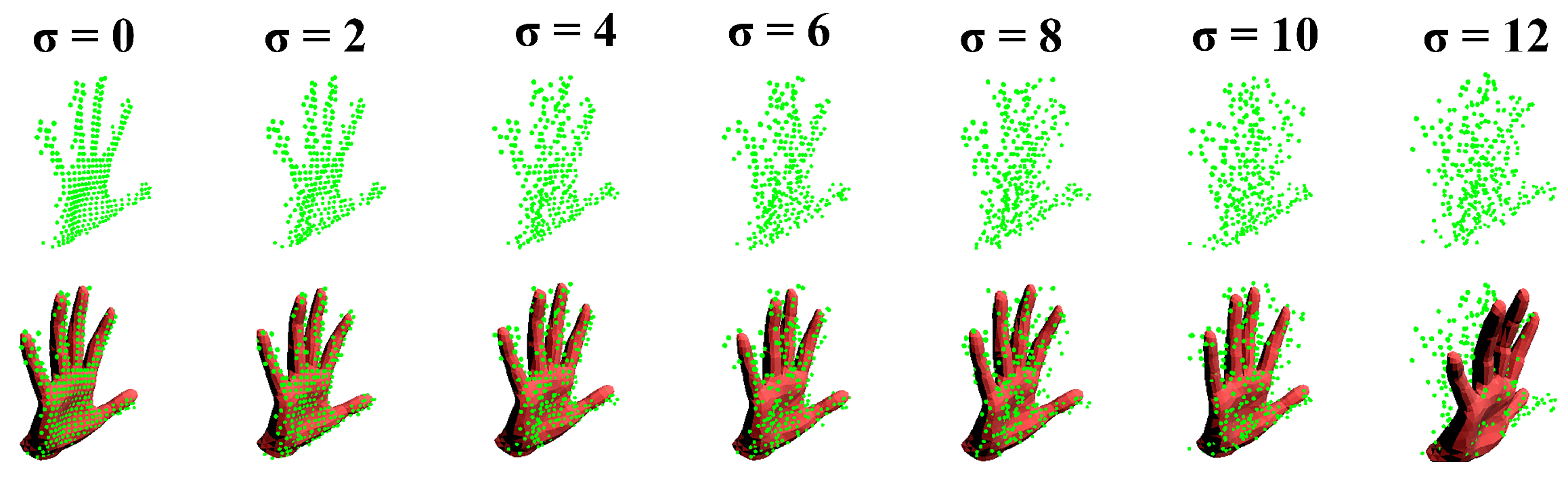

- The MANO hand model is more detailed than the hand models in [4,6,7,8,28,30,36,38], even though it consists of only 778 vertices and 1554 triangular faces. We also tried to produce a more detailed model using Loop subdivision [36]; see Figure 8. The result shows no significant improvement, which allows each vertex to account for the corresponding points around it.

- We also tested an alternative way of finding more detailed corresponding points on the hand model using Loop subdivision and compared its performance with our simplified approach; see Figure 9. The result shows that our approach does not decrease accuracy.
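
To make the idea of vertex-level correspondences concrete, here is a minimal sketch. It assumes that each depth point is simply matched to its nearest mesh vertex by brute-force search, which may differ from the fitting terms actually used in the system. The helper names `nearest_vertex_correspondences` and `mean_fitting_error` and the toy coordinates are hypothetical and serve only as illustration.

```python
import math


def nearest_vertex_correspondences(points, vertices):
    """For each 3D data point, return the index of the closest model vertex.

    Hypothetical helper: brute-force search, O(len(points) * len(vertices)).
    """
    matches = []
    for p in points:
        best_index = min(range(len(vertices)), key=lambda i: math.dist(p, vertices[i]))
        matches.append(best_index)
    return matches


def mean_fitting_error(points, vertices, matches):
    """Mean Euclidean distance between each point and its matched vertex."""
    total = sum(math.dist(p, vertices[m]) for p, m in zip(points, matches))
    return total / len(points)


# Toy data (assumed values, in mm): three model vertices and three depth points.
vertices = [(0.0, 0.0, 0.0), (10.0, 0.0, 0.0), (0.0, 10.0, 0.0)]
points = [(1.0, 0.0, 0.0), (9.0, 1.0, 0.0), (0.0, 8.0, 0.0)]

matches = nearest_vertex_correspondences(points, vertices)
error = mean_fitting_error(points, vertices, matches)
print(matches, round(error, 3))
```

With a mesh of only a few hundred vertices, a per-point search like this stays cheap; a practical tracker would more likely use a spatial index than a brute-force loop.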

3.3.2. Shape Integration

3.3.3. Prior Terms

4. Experiments and Discussion

4.1. Data-Sets

4.2. Quantitative Comparison Metrics

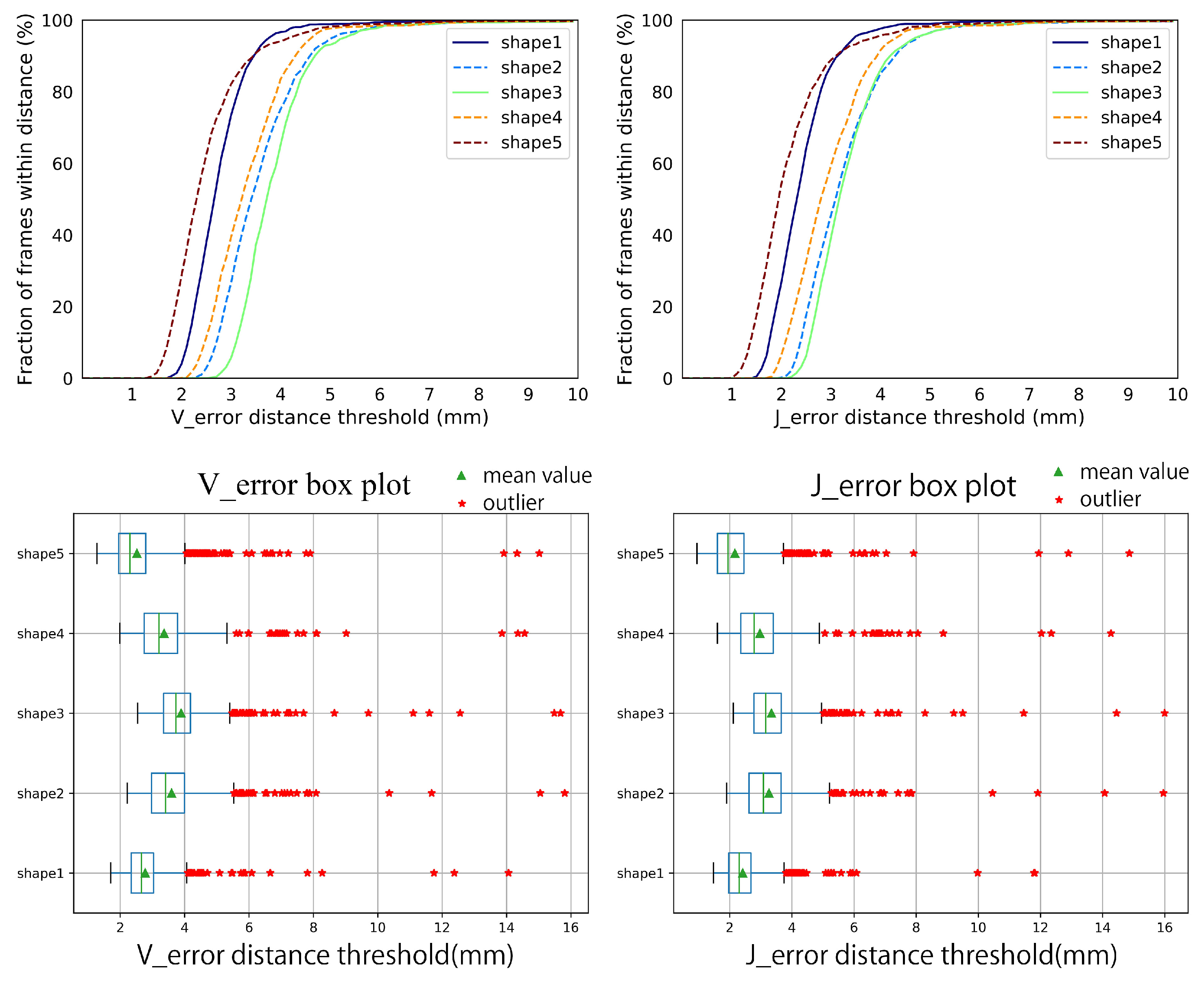

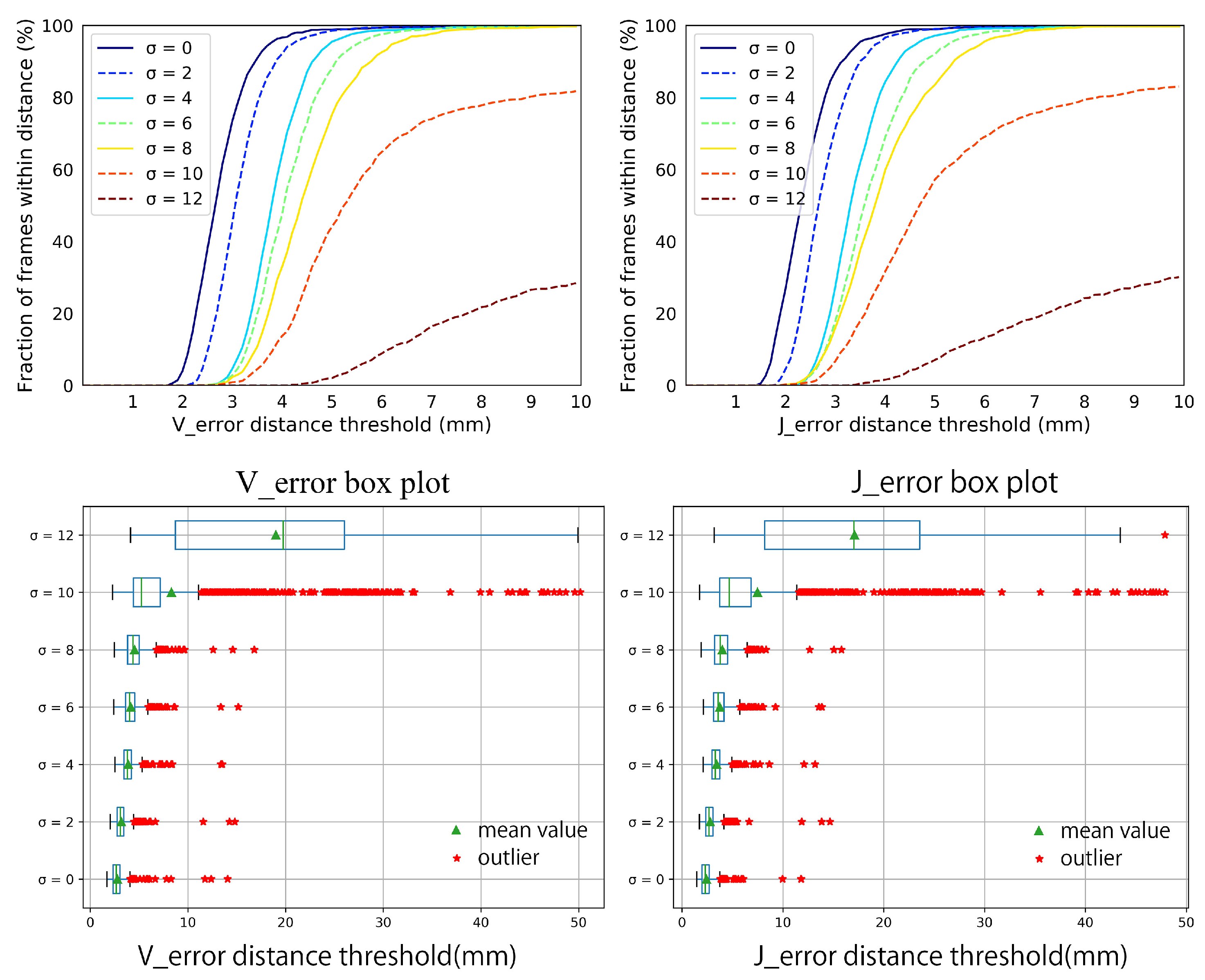

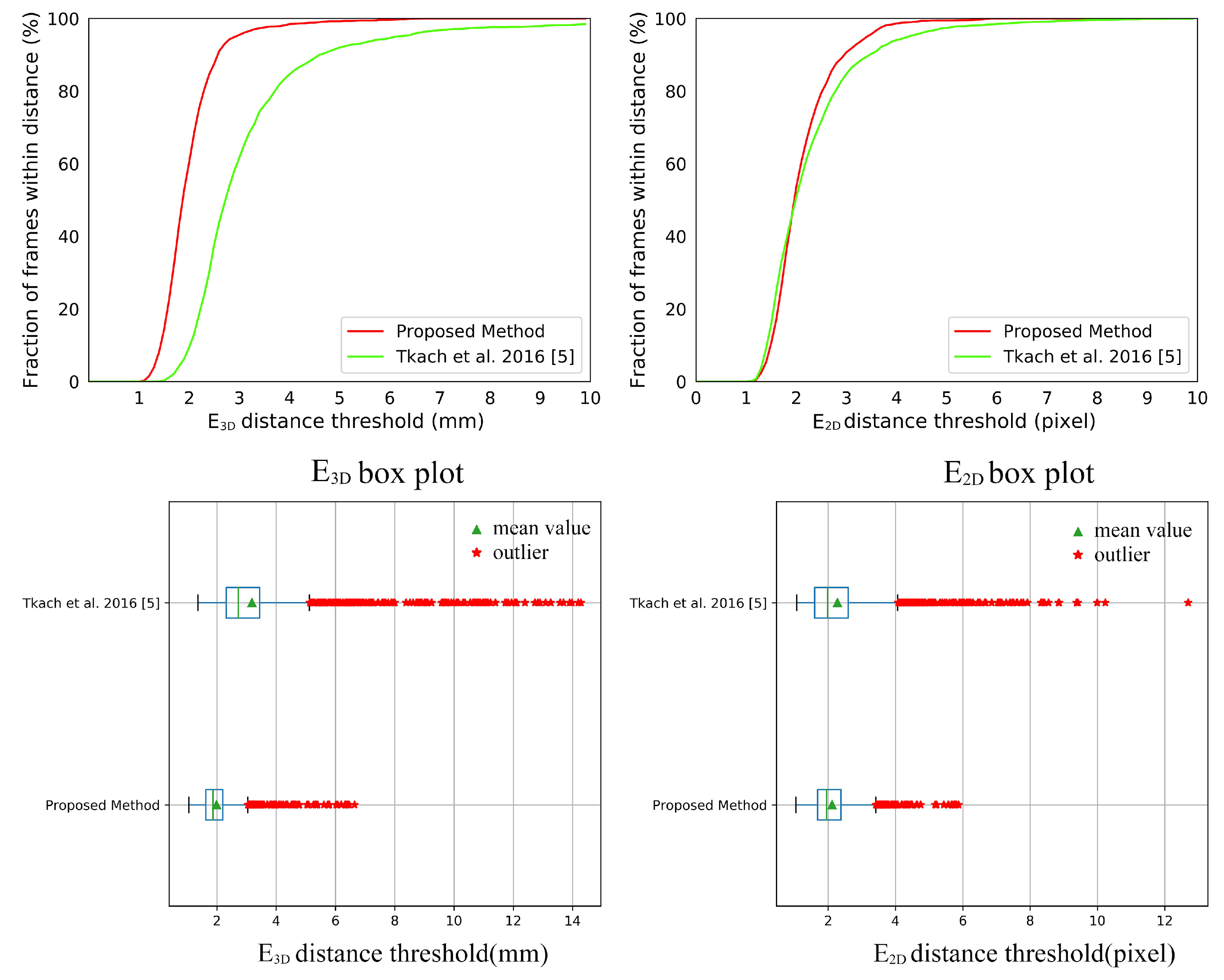

4.3. Quantitative Experiments

4.4. Qualitative Experiments

4.5. System Efficiency

5. Conclusions and Future Works

Author Contributions

Funding

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| HCI | Human–computer interaction |
| DoF | Degree of freedom |
| VR | Virtual reality |
| AR | Augmented reality |
| IMU | Inertial measurement unit |
| CNN | Convolutional neural network |
| LBS | Linear blend skinning |
| ICP | Iterative closest point |
| PSO | Particle swarm optimization |
| PCA | Principal components analysis |
| FPS | Frames per second |
| GPU | Graphics processing unit |
| CPU | Central processing unit |
| SDF | Signed distance function |

References

- Taylor, J.; Tankovich, V.; Tang, D.; Keskin, C.; Kim, D.; Davidson, P.; Kowdle, A.; Izadi, S. Articulated distance fields for ultra-fast tracking of hands interacting. ACM Trans. Graphics (TOG) 2017, 36, 244.

- Malik, J.; Elhayek, A.; Nunnari, F.; Varanasi, K.; Tamaddon, K.; Heloir, A.; Stricker, D. DeepHPS: End-to-end estimation of 3D hand pose and shape by learning from synthetic depth. In Proceedings of the 2018 International Conference on 3D Vision (3DV), Verona, Italy, 5–8 September 2018; pp. 110–119.

- Oberweger, M.; Lepetit, V. DeepPrior++: Improving fast and accurate 3D hand pose estimation. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 585–594.

- Ballan, L.; Taneja, A.; Gall, J.; Van Gool, L.; Pollefeys, M. Motion capture of hands in action using discriminative salient points. In Proceedings of the European Conference on Computer Vision, Firenze, Italy, 7–13 October 2012; pp. 640–653.

- Tkach, A.; Pauly, M.; Tagliasacchi, A. Sphere-meshes for real-time hand modeling and tracking. ACM Trans. Graphics (TOG) 2016, 35, 222.

- Sharp, T.; Keskin, C.; Robertson, D.; Taylor, J.; Shotton, J.; Kim, D.; Rhemann, C.; Leichter, I.; Vinnikov, A.; Wei, Y.; et al. Accurate, robust, and flexible real-time hand tracking. In Proceedings of the 33rd Annual ACM Conference on Human Factors in Computing Systems, Seoul, Korea, 18–23 April 2015; pp. 3633–3642.

- Sanchez-Riera, J.; Srinivasan, K.; Hua, K.L.; Cheng, W.H.; Hossain, M.A.; Alhamid, M.F. Robust RGB-D hand tracking using deep learning priors. IEEE Trans. Circuits Syst. Video Technol. 2017, 28, 2289–2301.

- Tzionas, D.; Ballan, L.; Srikantha, A.; Aponte, P.; Pollefeys, M.; Gall, J. Capturing Hands in Action using Discriminative Salient Points and Physics Simulation. Int. J. Comput. Vision 2016, 118, 172–193.

- Arkenbout, E.; de Winter, J.; Breedveld, P. Robust hand motion tracking through data fusion of 5DT data glove and nimble VR Kinect camera measurements. Sensors 2015, 15, 31644–31671.

- Ponraj, G.; Ren, H. Sensor Fusion of Leap Motion Controller and Flex Sensors Using Kalman Filter for Human Finger Tracking. IEEE Sens. J. 2018, 18, 2042–2049.

- Dipietro, L.; Sabatini, A.M.; Dario, P. A survey of glove-based systems and their applications. IEEE Trans. Syst. Man Cybern. Part C Appl. Rev. 2008, 38, 461–482.

- 5DT. Available online: http://5dt.com/5dt-data-glove-ultra/ (accessed on 26 October 2019).

- CyberGlove. Available online: http://www.cyberglovesystems.com/ (accessed on 26 October 2019).

- Yuan, S.; Ye, Q.; Stenger, B.; Jain, S.; Kim, T.K. BigHand2.2M benchmark: Hand pose dataset and state of the art analysis. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 22–25 July 2017; pp. 4866–4874.

- Yuan, S.; Garcia-Hernando, G.; Stenger, B.; Moon, G.; Yong Chang, J.; Mu Lee, K.; Molchanov, P.; Kautz, J.; Honari, S.; Ge, L.; et al. Depth-based 3D hand pose estimation: From current achievements to future goals. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 2636–2645.

- Tang, D.; Yu, T.H.; Kim, T.K. Real-time articulated hand pose estimation using semi-supervised transductive regression forests. In Proceedings of the IEEE International Conference on Computer Vision, Sydney, Australia, 1–8 December 2013; pp. 3224–3231.

- Tang, D.; Chang, H.J.; Tejani, A.; Kim, T. Latent Regression Forest: Structured Estimation of 3D Hand Poses. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1374–1387.

- Deng, X.; Yang, S.; Zhang, Y.; Tan, P.; Chang, L.; Wang, H. Hand3D: Hand pose estimation using 3D neural network. arXiv 2017, arXiv:1704.02224.

- Guo, H.; Wang, G.; Chen, X. Two-stream convolutional neural network for accurate RGB-D fingertip detection using depth and edge information. arXiv 2016, arXiv:1612.07978.

- Rad, M.; Oberweger, M.; Lepetit, V. Feature mapping for learning fast and accurate 3D pose inference from synthetic images. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 4663–4672.

- Du, K.; Lin, X.; Sun, Y.; Ma, X. CrossInfoNet: Multi-Task Information Sharing Based Hand Pose Estimation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 9896–9905.

- Oberweger, M.; Wohlhart, P.; Lepetit, V. Generalized Feedback Loop for Joint Hand-Object Pose Estimation. IEEE Trans. Pattern Anal. Mach. Intell. 2019.

- Qian, C.; Sun, X.; Wei, Y.; Tang, X.; Sun, J. Realtime and robust hand tracking from depth. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 24–27 June 2014; pp. 1106–1113.

- Sun, X.; Wei, Y.; Liang, S.; Tang, X.; Sun, J. Cascaded hand pose regression. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 824–832.

- Tompson, J.; Stein, M.; Lecun, Y.; Perlin, K. Real-time continuous pose recovery of human hands using convolutional networks. ACM Trans. Graphics (TOG) 2014, 33, 169.

- Wetzler, A.; Slossberg, R.; Kimmel, R. Rule of thumb: Deep derotation for improved fingertip detection. arXiv 2015, arXiv:1507.05726.

- Tagliasacchi, A.; Schroeder, M.; Tkach, A.; Bouaziz, S.; Botsch, M.; Pauly, M. Robust Articulated-ICP for Real-Time Hand Tracking. Comput. Graphics Forum (Symp. Geom. Process.) 2015, 34, 101–114.

- Taylor, J.; Bordeaux, L.; Cashman, T.; Corish, B.; Keskin, C.; Sharp, T.; Soto, E.; Sweeney, D.; Valentin, J.; Luff, B.; et al. Efficient and precise interactive hand tracking through joint, continuous optimization of pose and correspondences. ACM Trans. Graphics (TOG) 2016, 35, 143.

- Valentin, J.; Dai, A.; Nießner, M.; Kohli, P.; Torr, P.; Izadi, S.; Keskin, C. Learning to navigate the energy landscape. In Proceedings of the 2016 Fourth International Conference on 3D Vision (3DV), Stanford, CA, USA, 25–28 October 2016; pp. 323–332.

- Taylor, J.; Stebbing, R.; Ramakrishna, V.; Keskin, C.; Shotton, J.; Izadi, S.; Hertzmann, A.; Fitzgibbon, A. User-specific hand modeling from monocular depth sequences. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 24–27 June 2014; pp. 644–651.

- Oikonomidis, I.; Kyriazis, N.; Argyros, A.A. Efficient model-based 3D tracking of hand articulations using Kinect. BMVC 2011, 1, 3.

- Fleishman, S.; Kliger, M.; Lerner, A.; Kutliroff, G. ICPIK: Inverse kinematics based articulated-ICP. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Boston, MA, USA, 7–12 June 2015; pp. 28–35.

- Makris, A.; Argyros, A. Model-based 3D hand tracking with on-line hand shape adaptation. In Proceedings of the BMVC, Swansea, UK, 7–10 September 2015; pp. 77.1–77.12.

- Melax, S.; Keselman, L.; Orsten, S. Dynamics based 3D skeletal hand tracking. In Proceedings of Graphics Interface 2013, Canadian Information Processing Society, Regina, SK, Canada, 29–31 May 2013; pp. 63–70.

- Sridhar, S.; Rhodin, H.; Seidel, H.P.; Oulasvirta, A.; Theobalt, C. Real-time hand tracking using a sum of anisotropic Gaussians model. In Proceedings of the 2014 2nd International Conference on 3D Vision, Tokyo, Japan, 8–11 December 2014; Volume 1, pp. 319–326.

- Khamis, S.; Taylor, J.; Shotton, J.; Keskin, C.; Izadi, S.; Fitzgibbon, A. Learning an efficient model of hand shape variation from depth images. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 2540–2548.

- Schmidt, T.; Newcombe, R.; Fox, D. DART: Dense articulated real-time tracking with consumer depth cameras. Auton. Robots 2015, 39, 239–258.

- Joseph Tan, D.; Cashman, T.; Taylor, J.; Fitzgibbon, A.; Tarlow, D.; Khamis, S.; Izadi, S.; Shotton, J. Fits like a glove: Rapid and reliable hand shape personalization. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 5610–5619.

- Remelli, E.; Tkach, A.; Tagliasacchi, A.; Pauly, M. Low-dimensionality calibration through local anisotropic scaling for robust hand model personalization. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2535–2543.

- Tkach, A.; Tagliasacchi, A.; Remelli, E.; Pauly, M.; Fitzgibbon, A. Online generative model personalization for hand tracking. ACM Trans. Graphics (TOG) 2017, 36, 243.

- Tannous, H.; Istrate, D.; Benlarbi-Delai, A.; Sarrazin, J.; Gamet, D.; Ho Ba Tho, M.; Dao, T. A new multi-sensor fusion scheme to improve the accuracy of knee flexion kinematics for functional rehabilitation movements. Sensors 2016, 16, 1914.

- Sun, Y.; Li, C.; Li, G.; Jiang, G.; Jiang, D.; Liu, H.; Zheng, Z.; Shu, W. Gesture Recognition Based on Kinect and sEMG Signal Fusion. Mob. Netw. Appl. 2018, 23, 797–805.

- Pacchierotti, C.; Salvietti, G.; Hussain, I.; Meli, L.; Prattichizzo, D. The hRing: A wearable haptic device to avoid occlusions in hand tracking. In Proceedings of the 2016 IEEE Haptics Symposium (HAPTICS), Philadelphia, PA, USA, 8–11 April 2016; pp. 134–139.

- Romero, J.; Tzionas, D.; Black, M.J. Embodied hands: Modeling and capturing hands and bodies together. ACM Trans. Graphics (TOG) 2017, 36, 245.

- Sensfusion. Available online: http://www.sensfusion.com/ (accessed on 26 October 2019).

- Ganapathi, V.; Plagemann, C.; Koller, D.; Thrun, S. Real-time human pose tracking from range data. In Proceedings of the European Conference on Computer Vision, Firenze, Italy, 7–13 October 2012; pp. 738–751.

- Poier, G.; Opitz, M.; Schinagl, D.; Bischof, H. MURAUER: Mapping unlabeled real data for label austerity. In Proceedings of the 2019 IEEE Winter Conference on Applications of Computer Vision (WACV), Hilton Waikoloa Village, HI, USA, 7–11 January 2019; pp. 1393–1402.

- Ge, L.; Ren, Z.; Yuan, J. Point-to-point regression PointNet for 3D hand pose estimation. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 475–491.

- Chen, X.; Wang, G.; Zhang, C.; Kim, T.K.; Ji, X. SHPR-Net: Deep semantic hand pose regression from point clouds. IEEE Access 2018, 6, 43425–43439.

| Thumb | CMC_x | CMC_y | CMC_z | DCP_x | DCP_y | DCP_z | IP_x | IP_y | IP_z |
|---|---|---|---|---|---|---|---|---|---|
| Max | 18.37° | 29.34° | 78.16° | 16.36° | 33.72° | 21.28° | 17.59° | 78.29° | 19.26° |
| Min | 3.08° | −33.06° | −24.01° | −18.85° | −75.38° | −19.70° | −13.11° | −93.88° | −15.56° |

| Index | DCP_x | DCP_y | DCP_z | PIP_x | PIP_y | PIP_z | DIP_x | DIP_y | DIP_z |
|---|---|---|---|---|---|---|---|---|---|
| Max | 18.53° | 22.72° | 89.40° | 16.74° | 7.66° | 101.89° | 11.71° | 5.96° | 75.80° |
| Min | −15.41° | −31.75° | 89.40° | −11.08° | −4.19° | −34.83° | −13.58° | −4.43° | −42.08° |

| Middle | DCP_x | DCP_y | DCP_z | PIP_x | PIP_y | PIP_z | DIP_x | DIP_y | DIP_z |
|---|---|---|---|---|---|---|---|---|---|
| Max | 16.65° | 18.74° | 105.90° | 14.43° | 4.17° | 101.65° | 6.98° | 6.27° | 77.22° |
| Min | −13.19° | −23.33° | −39.66° | −13.86° | −6.03° | −30.38° | −7.28° | −1.24° | −28.96° |

| Ring | DCP_x | DCP_y | DCP_z | PIP_x | PIP_y | PIP_z | DIP_x | DIP_y | DIP_z |
|---|---|---|---|---|---|---|---|---|---|
| Max | 17.53° | 46.82° | 106.52° | 16.60° | 6.69° | 101.61° | 7.27° | 6.28° | 82.49° |
| Min | −20.31° | −30.92° | −60.50° | −19.58° | −5.70° | −24.83° | −15.63° | −5.45° | −30.86° |

| Pinky | DCP_x | DCP_y | DCP_z | PIP_x | PIP_y | PIP_z | DIP_x | DIP_y | DIP_z |
|---|---|---|---|---|---|---|---|---|---|
| Max | 18.47° | 31.52° | 102.32° | 2.48° | 7.11° | 103.65° | 10.22° | 6.63° | 82.53° |
| Min | −16.75° | −19.83° | −39.75° | −16.44° | −2.81° | −29.03° | −11.20° | −0.12° | −32.14° |
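
Per-joint angle ranges such as those tabulated above are commonly used in model-based trackers as joint-limit bounds. This text does not spell out how the ranges enter the objective, so the following is only a sketch under that assumption. It uses the middle-finger PIP values from the table; the names `clamp_angle` and `limit_penalty` and the example pose are hypothetical.

```python
# Ranges for the middle-finger PIP joint, copied from the table above (degrees).
MIDDLE_PIP_LIMITS = {
    "x": (-13.86, 14.43),
    "y": (-6.03, 4.17),
    "z": (-30.38, 101.65),
}


def clamp_angle(angle, limits):
    """Project an angle onto the closed interval [lower, upper]."""
    lower, upper = limits
    return max(lower, min(upper, angle))


def limit_penalty(angle, limits):
    """Squared amount (deg^2) by which the angle violates its range; 0 inside the range."""
    return (angle - clamp_angle(angle, limits)) ** 2


# Assumed example pose (degrees): y and z exceed their tabulated maxima.
pose = {"x": 0.0, "y": 10.0, "z": 120.0}

clamped = {axis: clamp_angle(a, MIDDLE_PIP_LIMITS[axis]) for axis, a in pose.items()}
penalty = sum(limit_penalty(a, MIDDLE_PIP_LIMITS[axis]) for axis, a in pose.items())
print(clamped, round(penalty, 4))
```

A soft quadratic penalty like `limit_penalty` keeps the objective differentiable almost everywhere, whereas hard clamping is simpler but discards gradient information outside the range; which of the two, if either, matches the original system is not stated here.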

| | [5] | Proposed Method | Improvement on [5] |
|---|---|---|---|
| Mean (mm) | 3.18 | 1.99 | 37% |
| SD (mm) | 1.61 | 0.61 | 62% |
| Mean (pixel) | 2.27 | 2.12 | 6% |
| SD (pixel) | 1.06 | 0.64 | 40% |

| | Handy/Teaser on [5] | Handy/GuessWho on [39] | Handy/GuessWho on [40] |
|---|---|---|---|
| p-value | 0 | 0 | 0.1907 |

| [39] | [40] | Proposed Method | Improvement on [39] | Inprovement on [40] | ||

|---|---|---|---|---|---|---|

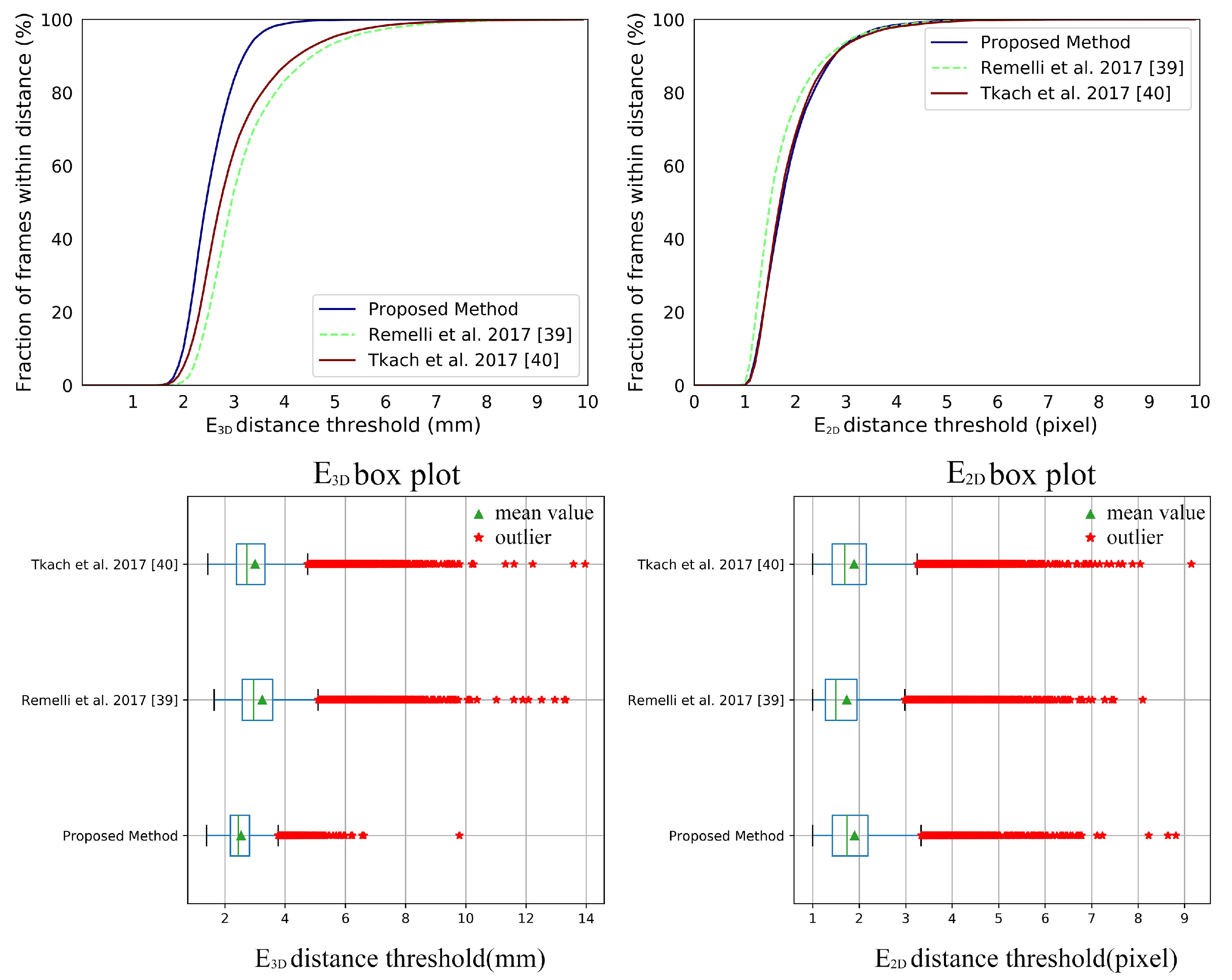

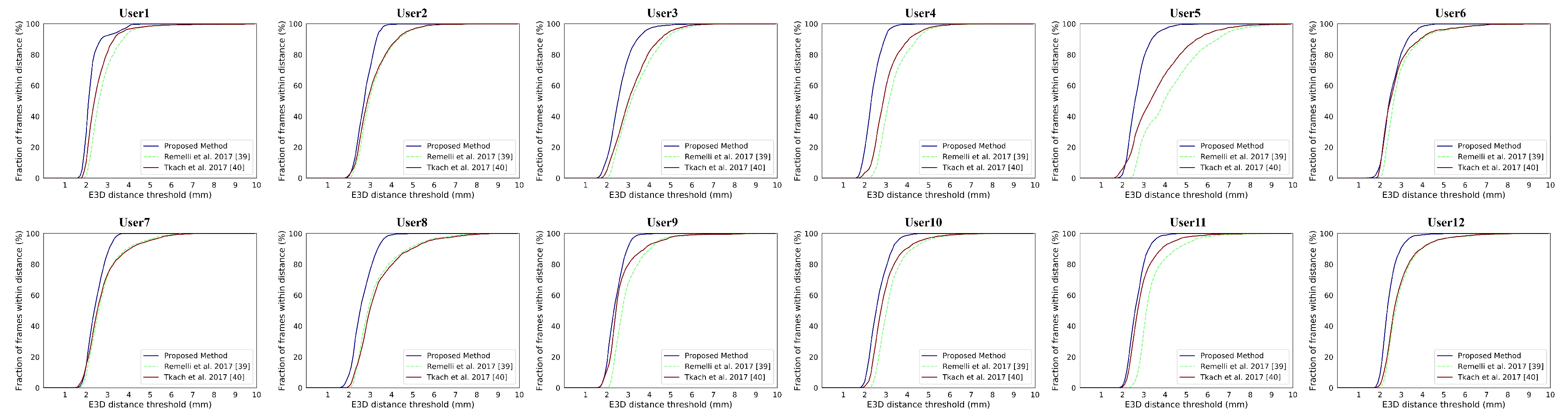

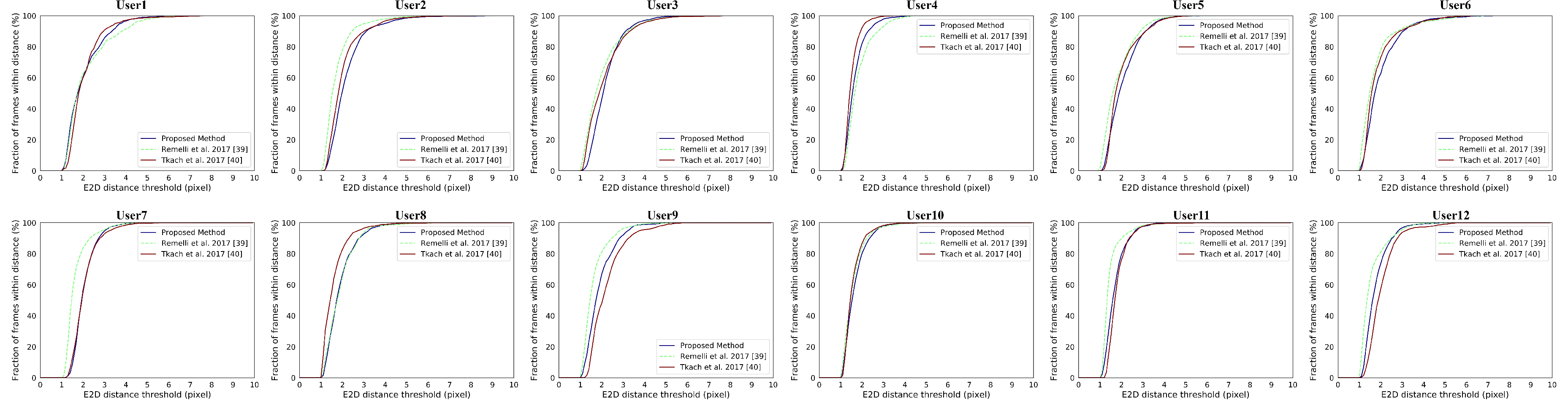

| Mean (mm) | 3.24 | 3.00 | 2.54 | 22% | 15% | |

| SD (mm) | 1.02 | 0.97 | 0.50 | 50% | 48% | |

| Mean (pixel) | 1.73 | 1.89 | 1.90 | −9% | −0.5% | |

| SD (pixel) | 0.71 | 0.71 | 0.68 | 4% | 4% |

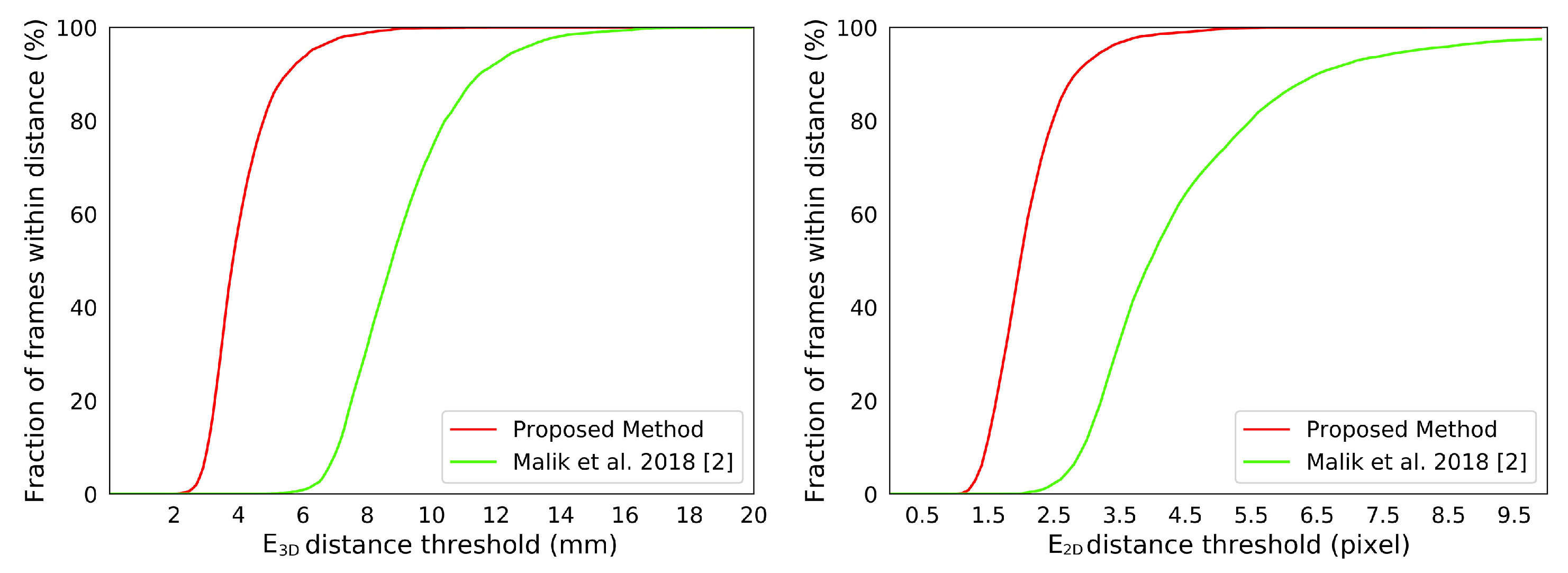

| | Ours | [2] | [20] | [21] | [22] | [47] | [48] | [49] |
|---|---|---|---|---|---|---|---|---|
| Mean (mm) | 10.45 | 14.42 | 7.44 | 10.08 | 10.89 | 9.47 | 9.05 | 10.78 |
| SD (mm) | 3.64 | 8.30 | 4.44 | 6.67 | 6.22 | 5.27 | 7.02 | 8.00 |
| Mean Improvement | – | 27% | −40% | −3% | 4% | −10% | −15% | 3% |
| SD Improvement | – | 56% | 18% | 45% | 41% | 30% | 48% | 54% |
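
The improvement rows in the table above can be checked with a short calculation. The sketch below assumes that improvement is defined as the relative reduction `(baseline - ours) / baseline`; that definition is not stated explicitly here, but it reproduces the tabulated percentages to within about one percentage point. The function name `relative_improvement` is hypothetical.

```python
def relative_improvement(baseline, ours):
    """Percentage reduction of `ours` relative to `baseline` (positive means ours is lower)."""
    return 100.0 * (baseline - ours) / baseline


# Error statistics of the proposed method, from the "Ours" column (mm).
OURS_MEAN_MM, OURS_SD_MM = 10.45, 3.64

# label: (mean mm, SD mm, tabulated mean improvement %, tabulated SD improvement %)
TABLE = {
    "[2]": (14.42, 8.30, 27, 56),
    "[20]": (7.44, 4.44, -40, 18),
    "[21]": (10.08, 6.67, -3, 45),
    "[22]": (10.89, 6.22, 4, 41),
    "[47]": (9.47, 5.27, -10, 30),
    "[48]": (9.05, 7.02, -15, 48),
    "[49]": (10.78, 8.00, 3, 54),
}

recomputed = {
    label: (
        relative_improvement(mean, OURS_MEAN_MM),
        relative_improvement(sd, OURS_SD_MM),
    )
    for label, (mean, sd, _, _) in TABLE.items()
}

for label, (mean_gain, sd_gain) in recomputed.items():
    print(f"{label}: mean {mean_gain:+.1f}%, SD {sd_gain:+.1f}%")
```

Negative mean values indicate methods whose mean error is lower than that of the proposed method, while the SD improvement is positive against every method listed.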

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Jiang, L.; Xia, H.; Guo, C. A Model-Based System for Real-Time Articulated Hand Tracking Using a Simple Data Glove and a Depth Camera. Sensors 2019, 19, 4680. https://doi.org/10.3390/s19214680
