An Augmented Reality Based Human-Robot Interaction Interface Using Kalman Filter Sensor Fusion

Abstract

1. Introduction

1.1. Background

1.2. Related Works

- (1) the position measured by the Leap Motion was fused with the corresponding motion velocity, instead of with the position data measured by the Kinect V2 sensor, to improve the reliability of our proposed HRI interface;

- (2) an AR telepresence interface was designed for robot teleoperation to enhance the user experience.
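Contribution (1) can be illustrated with a scalar Kalman filter per Cartesian axis. This minimal sketch assumes a constant-velocity model and is not the authors' exact formulation: the measured motion velocity drives the prediction step and the Leap Motion position corrects it. The class `PositionVelocityKF`, the helper `simulate`, the noise variances `q` and `r`, the 20 ms sample period and the simulated 0.1 m/s hand motion are all hypothetical.

```python
import random
from dataclasses import dataclass


@dataclass
class PositionVelocityKF:
    """Scalar Kalman filter for one Cartesian axis of the hand position.

    Assumed model (hypothetical): the measured hand velocity drives the
    prediction step and the measured (Leap Motion) position drives the
    correction step. q and r are placeholders, not the paper's values.
    """
    p: float          # fused position estimate (m)
    var: float = 1.0  # variance of the estimate
    q: float = 1e-6   # process noise variance (velocity integration error)
    r: float = 1e-4   # measurement noise variance (position sensor)

    def step(self, z_pos: float, v_meas: float, dt: float) -> float:
        # Predict: integrate the measured velocity over one sample period.
        p_pred = self.p + v_meas * dt
        var_pred = self.var + self.q
        # Correct: weight the position innovation by the Kalman gain.
        gain = var_pred / (var_pred + self.r)
        self.p = p_pred + gain * (z_pos - p_pred)
        self.var = (1.0 - gain) * var_pred
        return self.p


def simulate(seed: int = 0, steps: int = 500, dt: float = 0.02):
    """Hypothetical test: a hand moving at a constant 0.1 m/s on one axis.

    Returns the mean absolute error (m) of the raw position measurements
    and of the fused estimate.
    """
    rng = random.Random(seed)
    v_true = 0.1
    kf = PositionVelocityKF(p=0.0)
    raw_err = fused_err = 0.0
    for k in range(1, steps + 1):
        true_p = v_true * k * dt
        z = true_p + rng.gauss(0.0, 0.01)   # noisy position, 1 cm std
        v = v_true + rng.gauss(0.0, 0.01)   # noisy velocity, 1 cm/s std
        est = kf.step(z, v, dt)
        raw_err += abs(z - true_p)
        fused_err += abs(est - true_p)
    return raw_err / steps, fused_err / steps


if __name__ == "__main__":
    raw_mae, fused_mae = simulate()
    print(f"raw MAE {raw_mae:.4f} m, fused MAE {fused_mae:.4f} m")
```

Increasing `r` relative to `q` makes the filter lean more on the integrated velocity; decreasing it makes the output follow the raw position measurements more closely.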

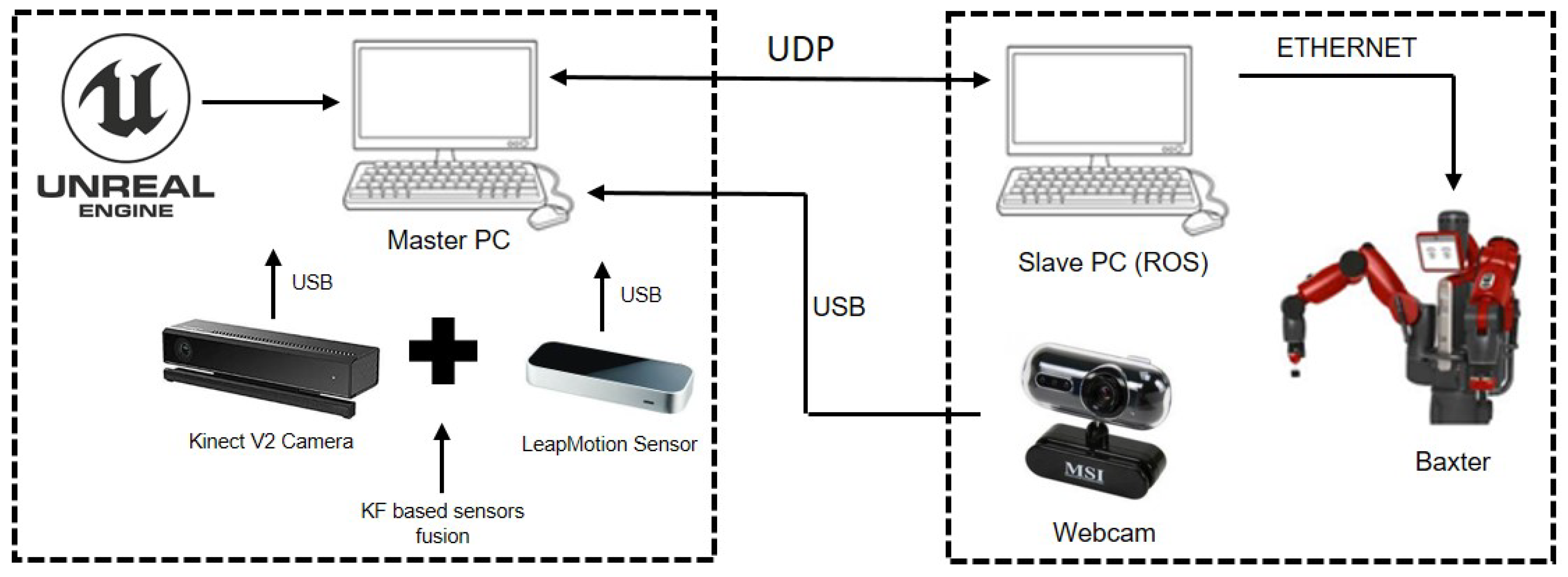

2. System Description and Theory

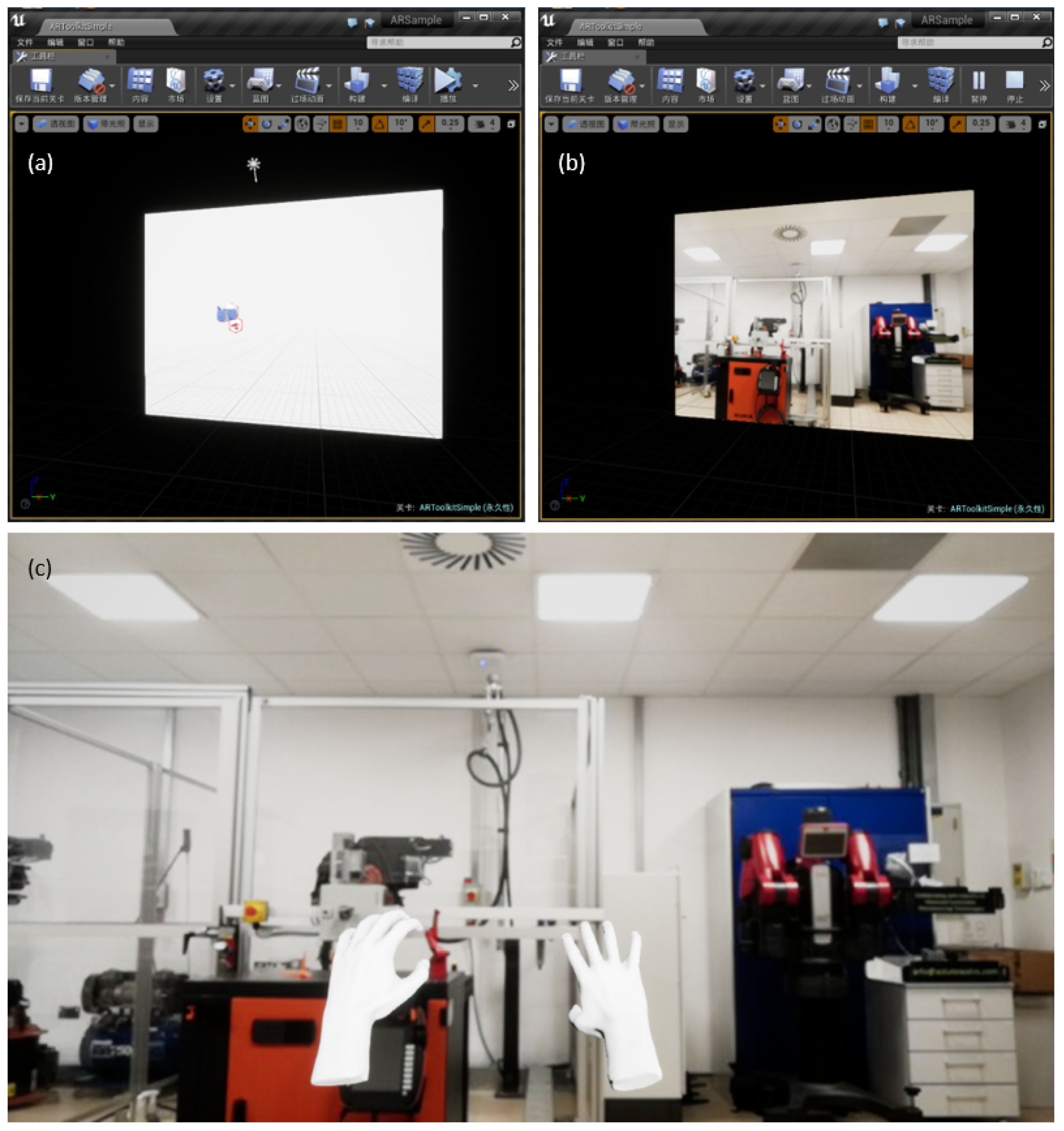

2.1. Unreal Engine-Based Augmented Reality Technology

2.2. Augmented Reality Environment Generation

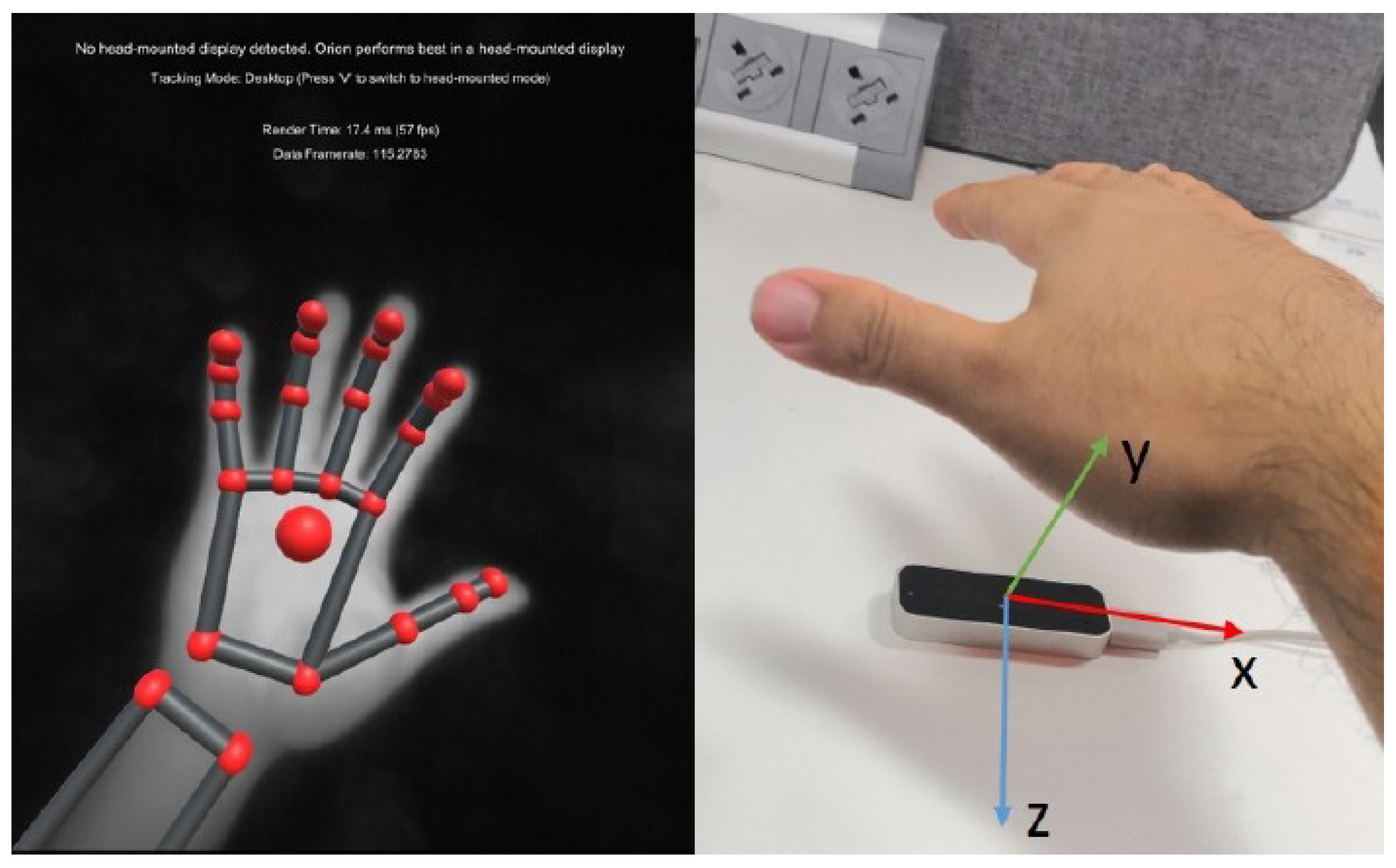

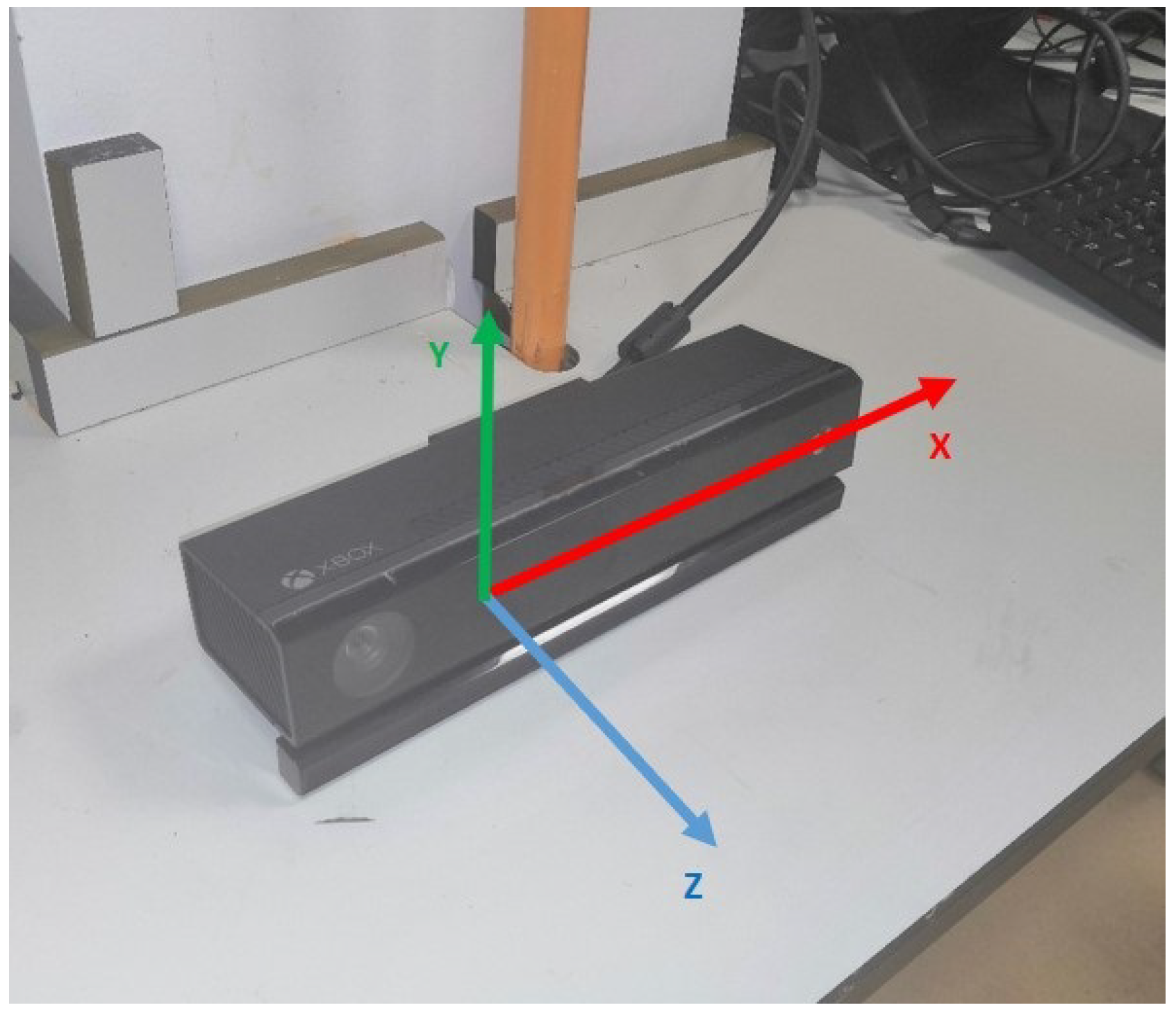

2.3. Hand Tracking Using Leap Motion & Kinect

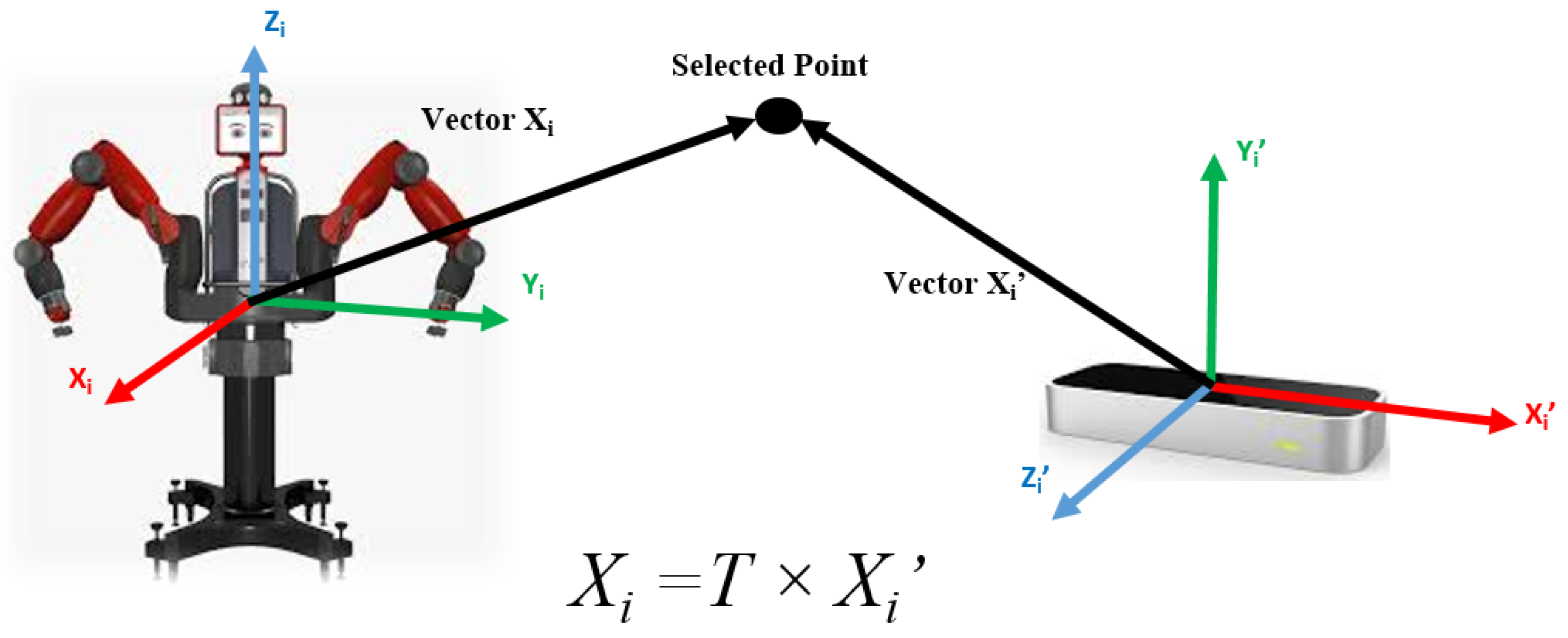

3. Calibration

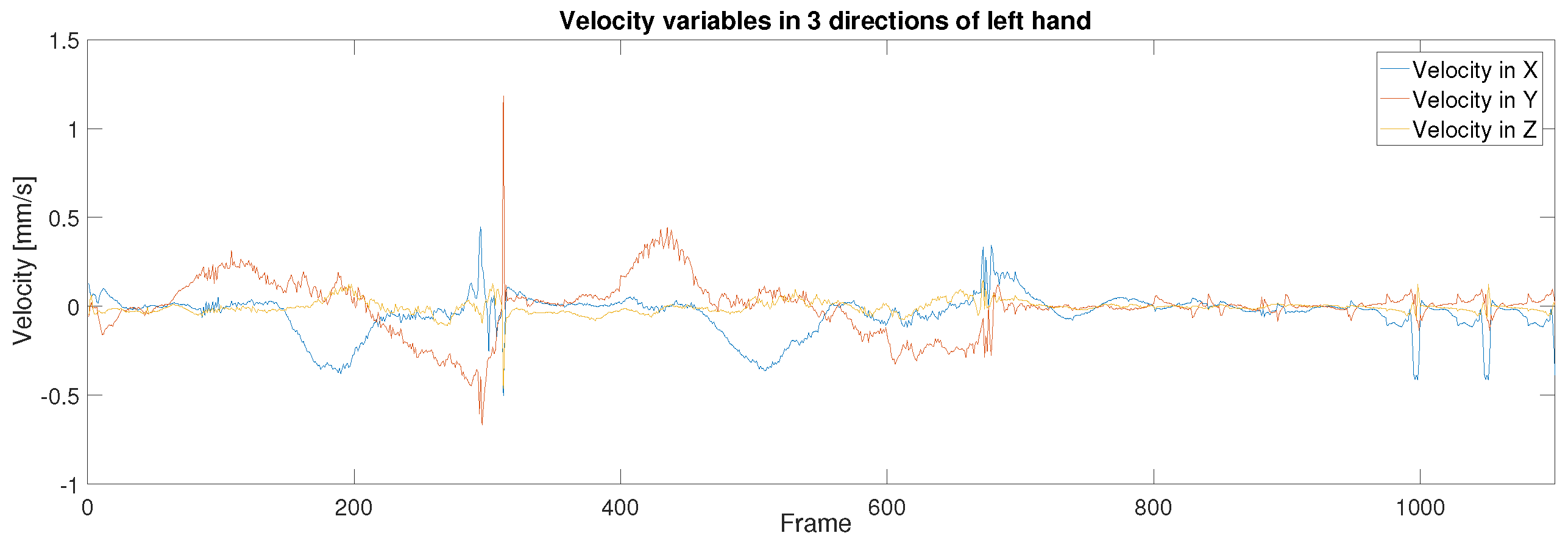

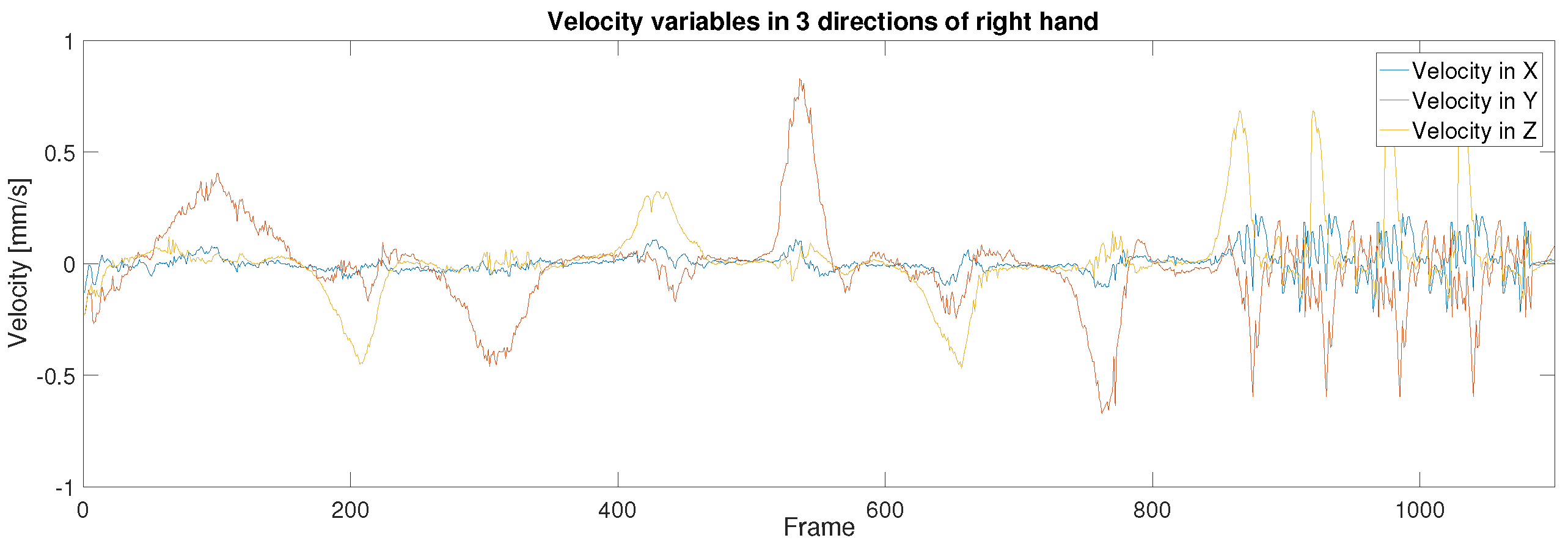

4. Measurement of Motion Velocity

4.1. Depth Information Acquisition

4.2. Pixel Matrix Generation

4.3. Hand Palm Tracking

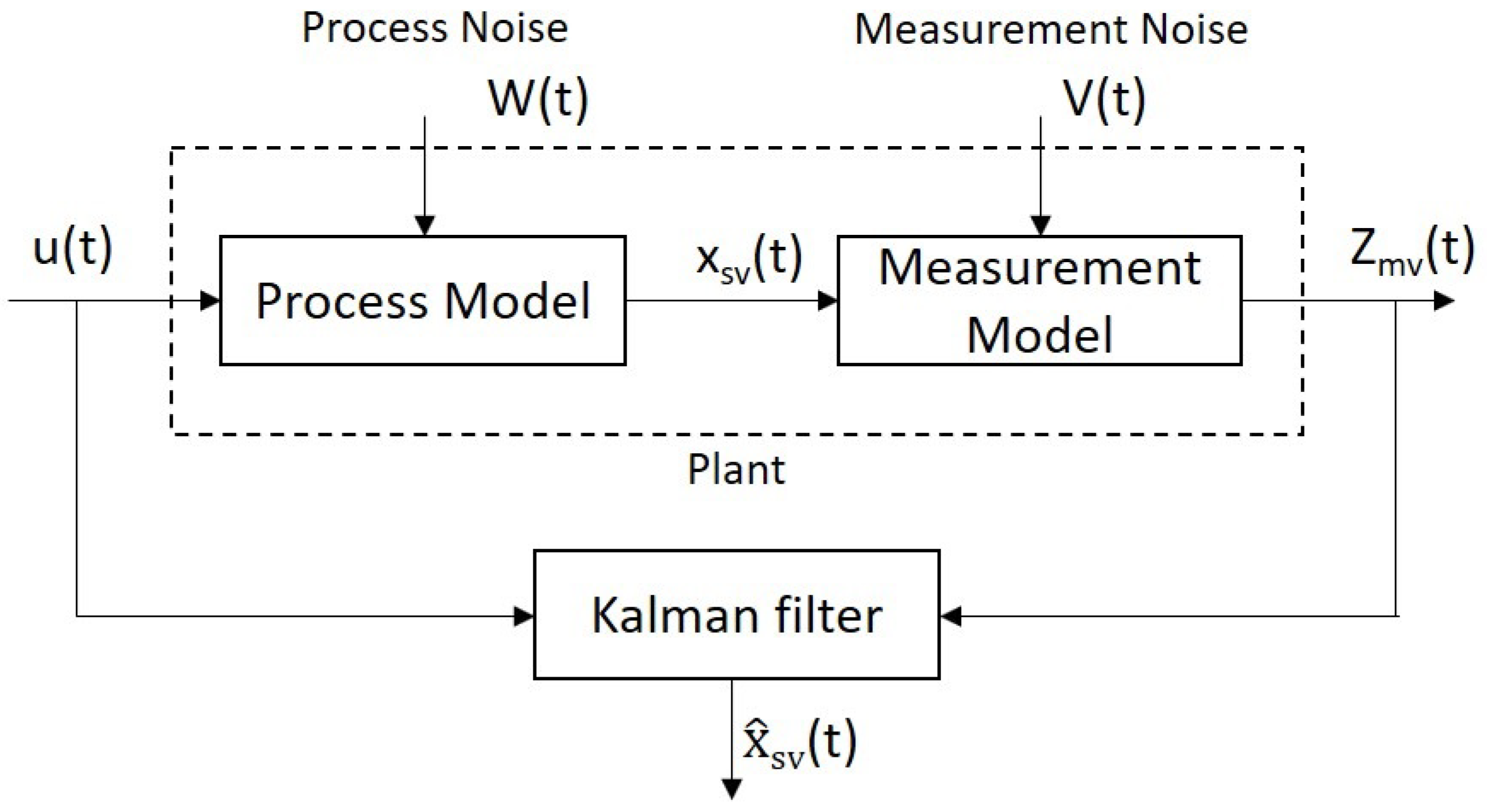

5. Kalman Filtering Based Sensor Fusion

6. Experimental Study & Analysis

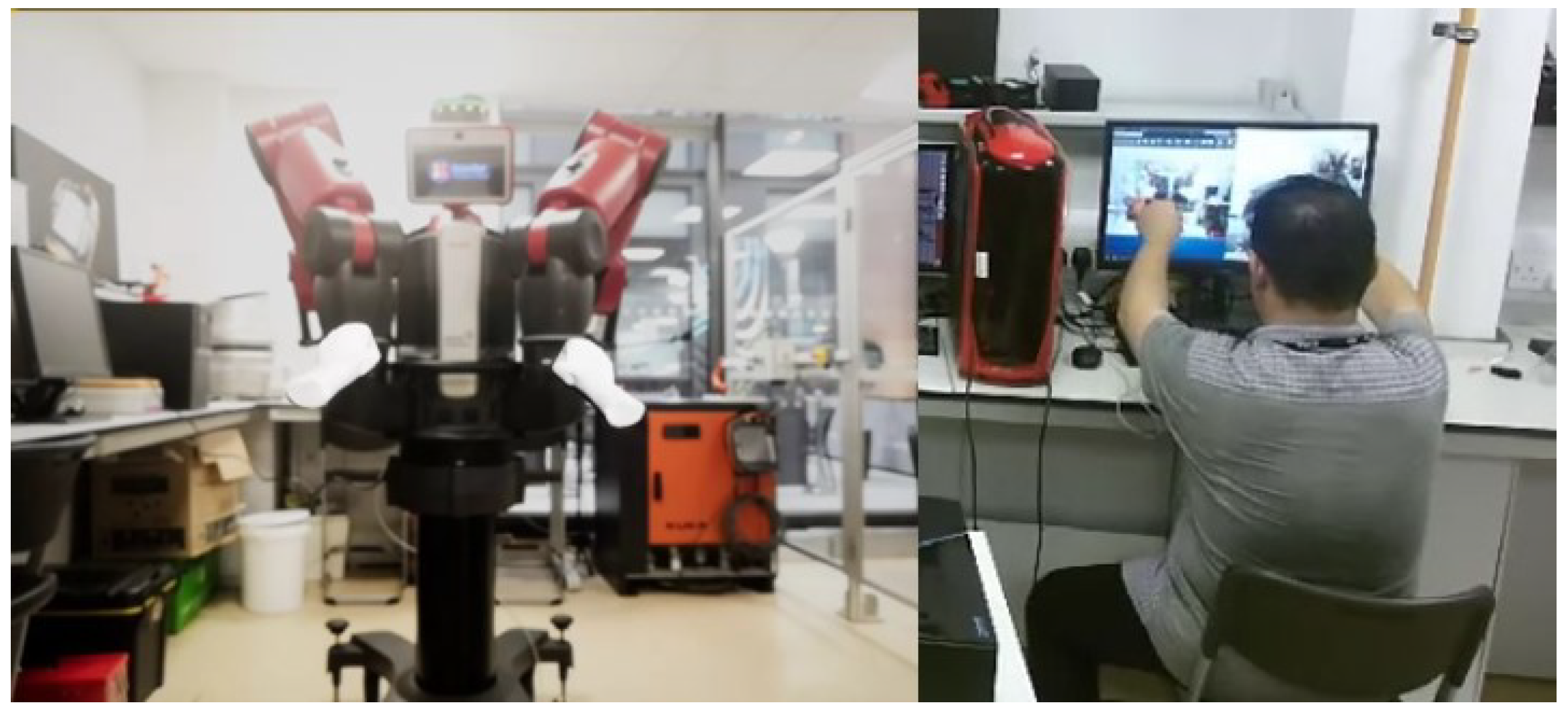

6.1. Experimental Setup

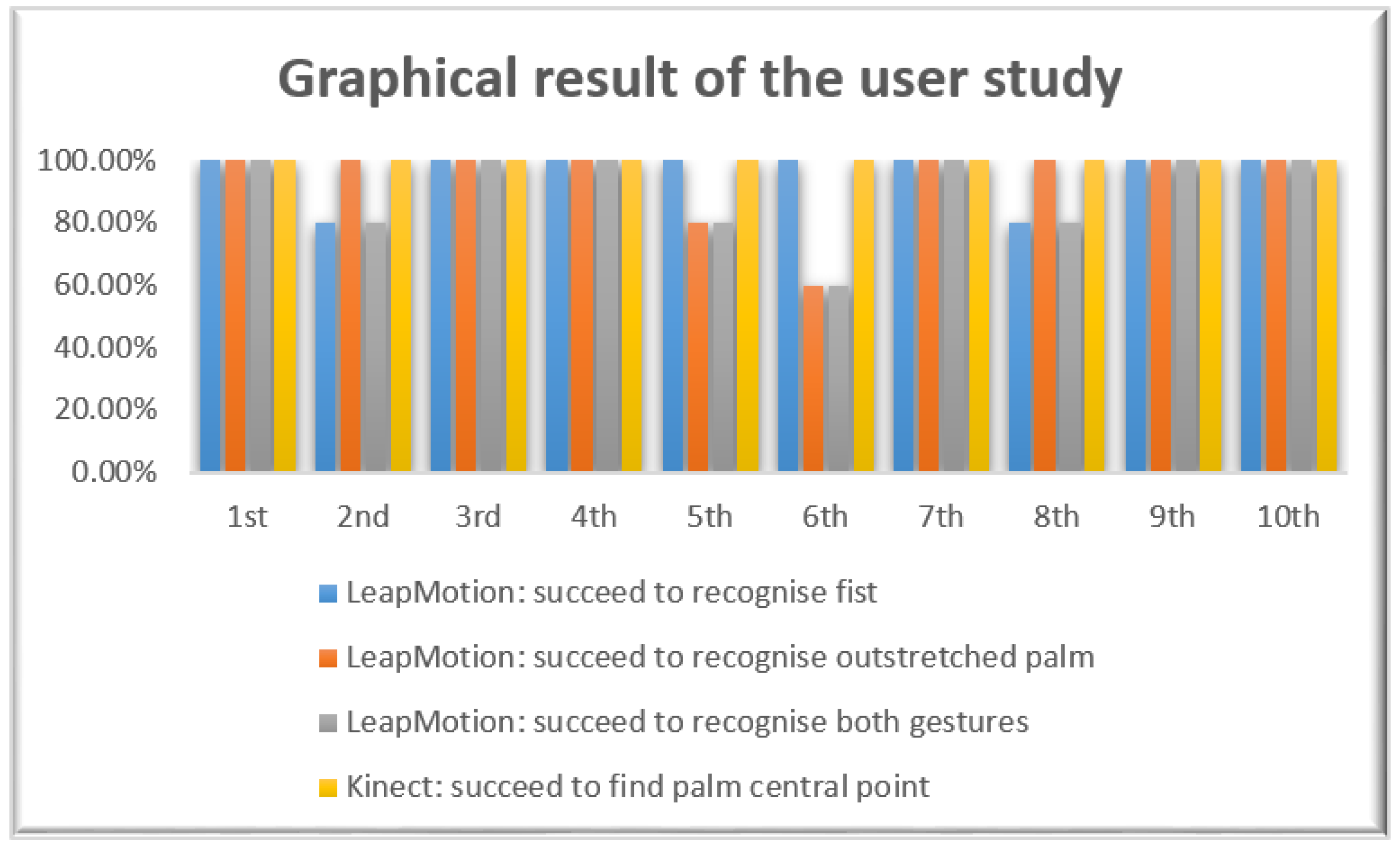

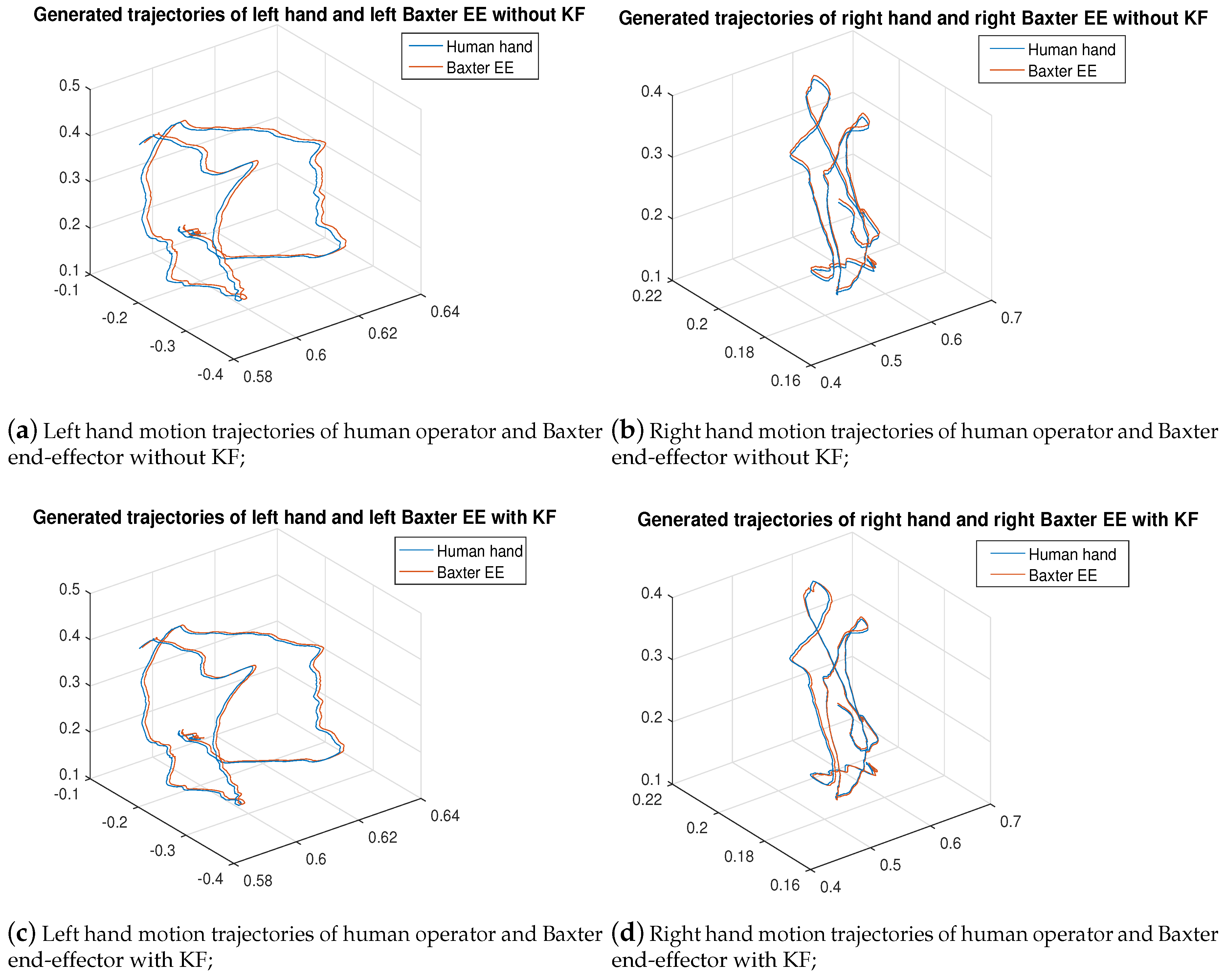

6.2. Experimental Result & Analysis

6.3. Remark

7. Conclusions

Author Contributions

Funding

Conflicts of Interest

| Performance Index Values | Without KF (Left) | Without KF (Right) | With KF (Left) | With KF (Right) | Promotion Ratio (Left) | Promotion Ratio (Right) |
|---|---|---|---|---|---|---|
| x direction | 5.297% | 2.652% | 3.587% | 1.763% | 32.272% | 33.522% |
| y direction | 5.438% | 2.786% | 3.609% | 1.815% | 33.628% | 34.852% |
| z direction | 4.973% | 2.541% | 3.304% | 1.655% | 33.573% | 34.868% |
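The Promotion Ratio columns appear to be the relative reduction of the performance index achieved by the Kalman filter, (without KF - with KF) / without KF x 100%; for example, the right-hand z value is (2.541 - 1.655) / 2.541, or about 34.868%. The sketch below recomputes all six ratios from the rounded table values under that assumed definition; the names `TABLE` and `promotion_ratio` are illustrative.

```python
# Values (%) copied from the table: (without KF, with KF, reported ratio).
TABLE = {
    ("x", "left"): (5.297, 3.587, 32.272),
    ("x", "right"): (2.652, 1.763, 33.522),
    ("y", "left"): (5.438, 3.609, 33.628),
    ("y", "right"): (2.786, 1.815, 34.852),
    ("z", "left"): (4.973, 3.304, 33.573),
    ("z", "right"): (2.541, 1.655, 34.868),
}


def promotion_ratio(without_kf: float, with_kf: float) -> float:
    """Relative reduction in percent (assumed definition of Promotion Ratio)."""
    return (without_kf - with_kf) / without_kf * 100.0


if __name__ == "__main__":
    for (axis, hand), (before, after, reported) in TABLE.items():
        recomputed = promotion_ratio(before, after)
        print(f"{axis} {hand}: {recomputed:.3f}% (reported {reported:.3f}%)")
```

Under this definition the recomputed ratios agree with the reported ones to within about 0.02 percentage points, a gap consistent with the inputs being rounded to three decimal places.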

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Li, C.; Fahmy, A.; Sienz, J. An Augmented Reality Based Human-Robot Interaction Interface Using Kalman Filter Sensor Fusion. Sensors 2019, 19, 4586. https://doi.org/10.3390/s19204586
