1. Introduction

Compressed sensing (CS) theory has been thoroughly analyzed and extensively applied in the signal processing [1, 2] and image processing [3, 4, 5] communities during the past decades. CS theory indicates that a sparse signal can be reconstructed from a small number of measurements taken below the Nyquist rate [6, 7]. Specifically, an unknown vector x can be recovered by solving an inverse problem with a sparsity-promoting regularization term, such as the l1-norm or the total variation (TV) norm, as follows:

where the measurement vector y is generated by y = Ax + ε, the m × n matrix A with m < n denotes the sensing matrix, and ε represents a noise vector. There are various convex optimization methods [8, 9, 10, 11] and sparse Bayesian learning methods [12, 13, 14] for such inverse problems. However, the vast majority of these algorithms consider only the real-valued case. In many applications, as diverse as wireless communication [15, 16, 17], biomedicine [18, 19], and radar [20, 21, 22], the complex domain provides signals and images with a more convenient and appropriate representation that preserves their sparsity and phase information better than the real domain. Motivated by this, we investigate CS reconstruction methods in the complex-valued case.

In recent years, many papers have focused on this problem, for example Complex Approximate Message Passing (CAMP) [23] and M-lasso [24]. CAMP is the extension of Approximate Message Passing (AMP) [25] to the complex domain. However, the reconstruction performance of CAMP for sparse signals with unequal amplitudes is poor [26]. M-lasso combines the zero-subgradient equations with M-estimation to solve Equation (1) in the complex domain. However, M-lasso updates by cyclic coordinate descent (CCD) [27], which computes one element at a time while keeping the others fixed at their current values, and is therefore computationally expensive. Furthermore, these schemes are designed specifically for l1-norm regularization problems. Thus, a more general algorithm for CS recovery in complex variables is needed.

The Split Bregman method (SBM) proposed in [28] is a universal convex optimization algorithm for both l1-norm and TV-norm regularization problems. SBM decomposes the original problem into several subproblems that are solved by Bregman Iteration (BI) [29, 30], and it has been widely used in the complex domain through the complex-to-real conversion technique [31, 32], e.g., in MRI imaging [33], SAR imaging [34], forward-looking scanning radar imaging [35], SAR image super-resolution [36], and massive MIMO channel estimation [37]. However, SBM leaves room for improvement in both reconstruction performance and time cost, for two reasons. First, the original BI is defined in the real domain and may not make good use of the phase information of complex variables, which degrades the recovery accuracy. Second, the conversion technique quadruples the number of elements of the sensing matrix A by expanding it to 2m × 2n, which consumes more memory and time during the iterations.

To tackle the aforementioned problems, this paper theoretically generalizes the original SBM to the complex domain; the resulting method is named the complex-valued Split Bregman method (CV-SBM). We first define the complex-valued Bregman distance (CV-BD) and replace the associated regularization term in the inverse problem with the CV-BD. Then, the complex-valued Bregman Iteration (CV-BI) is proposed to solve this new problem. In addition, using the calculation rules, Wirtinger’s calculus, and optimization theory for complex variables, we analyze in detail whether CV-BI is well defined and whether it converges. The proofs of these two properties reveal that CV-BI can solve inverse problems whenever the regularization term is convex. Since CV-BI, like BI, needs an additional algorithm to handle each specific regularization term, its solution process is still complicated and computationally expensive. Inspired by SBM, we adopt the variable separation technique to remove the need for other optimization algorithms and then present CV-SBM, which solves convex inverse problems with a simplified procedure. Simulation results on complex-valued l1-norm problems show the effectiveness of CV-SBM compared with existing methods. In particular, for the large signal scale n = 512, the proposed CV-SBM achieves 18.2%, 17.6%, and 26.7% lower mean square error (MSE) and takes 28.8%, 25.6%, and 23.6% less time than SBM with the complex-to-real conversion technique at SNRs of 10 dB, 15 dB, and 20 dB, respectively.

The rest of this paper is organized as follows. In Section 2, we briefly review the original BI and SBM techniques. Section 3 proposes and analyzes CV-BI and CV-SBM in detail. Section 4 conducts numerical experiments and compares the results with some existing CS reconstruction algorithms in the complex domain. Conclusions and future work are discussed in Section 5.

3. Complex-Valued Split Bregman Method

3.1. Wirtinger Calculus and Wirtinger’s Subgradients

As is well known, convex optimization theory requires the differentiability of the objective function. For T(c) = TR(c) + jTI(c) in the complex variable c = cR + jcI, complex differentiability is equivalent to satisfying the Cauchy–Riemann conditions:

For a complex-valued

l1-norm regularization problem:

where

,

,

,

. Because F(x) is real-valued and not constant, it does not satisfy (17), so its complex gradient cannot be computed directly.

To overcome this problem, Wirtinger’s calculus [39] was recently brought to light as an alternative tool for computing the complex gradient. It relaxes the strict requirement of complex differentiability and allows the complex gradient to be computed with simple rules and principles. A key point in Wirtinger’s calculus is the definition of Wirtinger’s gradient (W-gradient) and the conjugate Wirtinger’s gradient (CW-gradient):

where the gradients of T with respect to cR and cI can be obtained in the traditional way. According to Equations (19) and (20), one can calculate the W-gradient of c* and the CW-gradient of c:

Since both the W-gradient of c* and the CW-gradient of c are equal to zero, Wirtinger’s calculus lets us treat c and c* as two independent variables, which is the main property that makes the calculus convenient to use. For example, if T(c) = c(c*)^2, then the W-gradient of T is (c*)^2 and the CW-gradient of T is 2cc*. More details and examples can be found in [42].
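The rule of treating c and c* as independent variables can be checked numerically. The following Python sketch approximates the W-gradient and the CW-gradient of T(c) = c(c*)^2 by finite differences on the real and imaginary parts and compares them with (c*)^2 and 2cc*. The helper name `wirtinger_gradients`, the step size `h`, and the 1/2 scaling of the two gradients are assumptions of this sketch; the scaling follows a common convention and may differ from the one used in Equations (19) and (20).

```python
import numpy as np

def wirtinger_gradients(T, c, h=1e-6):
    """Finite-difference W-gradient and CW-gradient of T at the complex scalar c.

    Assumed convention (not taken from the text):
        W-gradient  = 0.5 * (dT/dcR - 1j * dT/dcI)
        CW-gradient = 0.5 * (dT/dcR + 1j * dT/dcI)
    """
    # Central difference along the real axis.
    dT_dcR = (T(c + h) - T(c - h)) / (2 * h)
    # Central difference along the imaginary axis.
    dT_dcI = (T(c + 1j * h) - T(c - 1j * h)) / (2 * h)
    w_grad = 0.5 * (dT_dcR - 1j * dT_dcI)
    cw_grad = 0.5 * (dT_dcR + 1j * dT_dcI)
    return w_grad, cw_grad

def T(c):
    """Example function from the text: T(c) = c * (c*)^2."""
    return c * np.conj(c) ** 2

c0 = 0.7 - 1.3j  # arbitrary test point
w_grad, cw_grad = wirtinger_gradients(T, c0)

# Closed forms obtained by treating c and c* as independent variables.
w_expected = np.conj(c0) ** 2
cw_expected = 2 * c0 * np.conj(c0)

print("W-gradient :", w_grad, "expected", w_expected)
print("CW-gradient:", cw_grad, "expected", cw_expected)
```

The two finite-difference values agree with the closed forms up to discretization error, although T itself does not satisfy the Cauchy–Riemann conditions.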

In general, for a convex function in complex variables, the optimality condition is that the CW-gradient equals the zero vector. In practice, however, some functions are not differentiable everywhere, e.g., the l1-norm in F(x) at zero. In this case, the conjugate Wirtinger’s subgradients (CW-subgradients) [39] can be adopted to construct the gradient path towards the optimal point. For a real convex function in complex variables

, we define a CW-subgradient

of

T at

c if

and it satisfies

where

and

denote the subgradient of

T at

cR and

cI. The set of all CW-subgradients of

T at

c is called Wirtinger’s differential of

T at

c and is represented by

. It should be noted that for the differentiable point of

T, the Wirtinger’s differential only contains one element, i.e., its CW-gradient. Wirtinger’s differential of modulus |

xi| and

are presented as follows [

43].

where

i is the index for the element of vector

x and

s is some complex number verifying

. Then, a necessary and sufficient condition for the optimization solution to Equation (18) is that

[43]. Using the definition of the CW-subgradient, we generalize the BD to the complex domain in the following subsection.

3.2. CV Bregman Distance

To prevent the BD from becoming complex-valued, we first generalize the BD to the complex domain and formally introduce the CV Bregman distance (CV-BD).

Definition 2. For

, we define the quantity

as a CV-BD associated with real convex function J in complex variables. Clearly, no matter whether the variables u and v are in the real or the complex domain,

is always a real-valued scalar. According to (24), one can point out that a CV-BD is non-negative.
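As a numerical illustration of the two properties stated above (real-valued and non-negative), the sketch below evaluates a Bregman-type distance for J(x) = ||x||_1 on complex vectors. It is written in terms of the subgradients of J with respect to the real and imaginary parts, combined into one complex vector. The function names and this scaling are assumptions of the sketch, and the normalization used in Definition 2 may differ by a constant factor in the inner-product term.

```python
import numpy as np

def l1_norm(x):
    """J(x): sum of the moduli of the complex entries."""
    return np.sum(np.abs(x))

def l1_subgradient(v):
    """One subgradient of J at v, stored as a complex vector.

    Entry i encodes dJ/dvR_i + 1j * dJ/dvI_i = v_i / |v_i|.
    At v_i = 0 we pick 0, which is admissible because any value
    with modulus <= 1 is a valid subgradient there.
    """
    mod = np.abs(v)
    safe_mod = np.where(mod > 0, mod, 1.0)
    return np.where(mod > 0, v / safe_mod, 0.0)

def bregman_distance(u, v):
    """J(u) - J(v) - Re<g, u - v> with g a subgradient of J at v."""
    g = l1_subgradient(v)
    # np.vdot conjugates its first argument, so the real part is the
    # ordinary inner product of the stacked (real, imaginary) vectors.
    return l1_norm(u) - l1_norm(v) - np.real(np.vdot(g, u - v))

rng = np.random.default_rng(0)
distances = []
for _ in range(100):
    u = rng.standard_normal(8) + 1j * rng.standard_normal(8)
    v = rng.standard_normal(8) + 1j * rng.standard_normal(8)
    v[:3] = 0.0  # include non-differentiable points of J
    distances.append(bregman_distance(u, v))

distances = np.array(distances)
self_distance = bregman_distance(v, v)
print("smallest distance:", distances.min(), "distance to itself:", self_distance)
```

The distance is a real scalar even though u and v are complex, it never drops below zero in these trials, and it vanishes when both arguments coincide.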

To ensure that the CV-BD can serve as an objective function in the same way as the BD, Lemma 1 and Lemma 2 below prove that the CV-BD has the same convexity property as J(x) and can measure the closeness of two points in J.

Lemma 1. Let the CV-BD be associated with a real convex or strictly convex function J. Then, for each v, the CV-BD has the same convexity property as J with respect to the variable u.

Proof. Assume

J is a real convex function and let

,

, and

. Then we get

where

.

Considering that

J is a real convex function,

J satisfies

Then we have

This completes the proof of

is a convex function for variable

u as

J.

For

J is a real strictly convex function, we assume

,

and

. And

J satisfies

Then according to Equations (28), (29), and (32) we obtain

which proves that

is a strictly convex function for variable

u as

J. Then, it can be concluded that

is as the same convex property as

J for variable

u in each

v. □

Lemma 2. Letbe a CV-BD associated with real strictly convex function J and assume a pointis on the line segment connecting u and v, where,. Thenand equality holds if and only if u = v.

Proof. Assume

, then we derive according to

Lemma 1

when

, we yield

This completes the proof of Lemma 2. □

Thus, the CV-BD between two points of the convex function J decreases as the points get closer, and becomes zero if and only if the two points coincide. This property makes the CV-BD a measure of closeness between two points.

Inspired by the original BI, we therefore use the CV-BD between the variable to be solved and the current solution to replace the real convex function J(x) in the objective function:

Within the iterations, the CV-BD is nonincreasing. This will be proved in the next subsection.

Obviously, the objective function in Equation (36) is convex because both H(x) and the CV-BD are convex. However, the CV-BD may be multivalued when J is not differentiable at xk−1, which inevitably interferes with the solution of xk. As we shall prove below, this issue is not critical, since the CV-BI introduced in the following subsection automatically chooses a suitable CW-subgradient when dealing with Equation (36).

3.3. CV Bregman Iterations

In this subsection, we propose CV-BI for Equation (36) and then prove that it is well defined and that it converges.

3.3.1. CV-BI Algorithm

Algorithm 1. Let x0 = 0 and p0 = 0. For k = 1, 2, …:

1. Compute xk as a minimizer of the convex function Qk(x)

2. Compute

In general, any x0 and p0 can be used, provided that p0 is a CW-subgradient of J at x0. However, for x0 ≠ 0, finding such a CW-subgradient requires additional computation, which is undesirable in practice.

3.3.2. Definition of the Iteration

In this subsection, we show that the iterative procedure in Algorithm 1 is well defined. Specifically, Qk(x) has a minimizer xk, and the iteration automatically finds an appropriate CW-subgradient pk.

Proposition 1. Assume that,

J(x) is convex and bounded, and let x0=0,. Then, for each, there exists a minimizer xk in Qk(x), and there exists an appropriate CW-subgradient and such that

Moreover, if A has no null space, a minimizer xk is unique.

Proof. We prove the result by induction. In the case of k = 1, Q1(x) becomes the original function F(x), for which the existence of minimizers and the optimality condition p1 + q1 = p0 = 0 are well known [44]. In addition, assume

and we have p1 = A^H r1.

Then, we proceed from k−1 to k and assume that pk−1 exists. To prove that minimizers exist, we first discuss the boundedness of Qk(x). Recalling that the l2-norm is greater than or equal to zero, Qk(x) can be estimated as

Since only J(x) is not constant, the boundedness of Qk(x) implies the boundedness of J(x). This shows that the level sets of Qk are weak-* compact [29]. Hence, a minimizer of Qk exists by standard optimization theory. Besides, if A has no null space and H(x) as well as J(x) is strictly convex, Qk(x) is also strictly convex, and therefore the minimizer is unique. This completes the proof of the existence of minimizers for all k > 1.

Next, we prove that pk and qk exist for all k > 1. According to the optimality conditions for Qk(x)

we derive that

Since pk−1 is assumed to exist, it follows that

and

are not null sets, and consequently yields the existence of

and

, which also satisfies Equation (38).

Recalling Equation (38) and

p0 = 0, we obtain that

This proves that CV-BI is well defined. □

The whole CV-BI can be summarized as follows:

| Algorithm 1: CV-BI |

| Initialization: x0 = 0, p0 = 0, k = 1, λ |

| While “stopping criterion is not met” do |

| ; |

| ; |

| k = k + 1; |

| End while |

Reviewing the proof, one finds that CV-BI can handle any regularization term J(x) in Equation (18), provided that J(x) is a real convex function in complex variables. Furthermore, since each step of Algorithm 1 follows the optimization rules of the complex domain instead of converting the objective function Qk(x) and the variable x into the real domain, CV-BI preserves the phase information of complex variables.

3.3.3. Convergence Analysis

In this subsection, the convergence property of CV-BI is analyzed. To be specific, two monotonicity properties are proved with the help of the CV-BD.

Proposition 2. Under the above assumptions, the sequence H(xk) obtained from CV-BI is monotonically nonincreasing, i.e.,

Moreover, if x is such that J(x) < ∞, then we even have

Proof. Recalling the nonnegativity of the CV-BD and that xk is the minimizer of the convex function Qk(x), we obtain

which implies Equation (45).

Motivated by an identity for the original BD [45], we can derive the following formula:

Considering the definition of the CW subgradient and

, we yield

which is equivalent to Equation (46). □

Proposition 3. Under the same assumption as Proposition 2, letbe a minimizer of H(x) with, then we have Proof. Recall the nonnegative property of the CV-BD and that

is a minimizer of

H(

x), we get an inequality

According to Equation (49), Equation (51) can be rewritten as

which proves Equation (50). Together, Equations (45) and (50) give a general convergence result for CV-BI. More details about convergence can be found in [29]. □

3.4. CV-SBM

For the various kinds of regularization terms corresponding to Equation (43), CV-BI still has to employ other algorithms, as BI does, which makes the solution process complicated and computationally expensive. Inspired by SBM, we split the original variable and present CV-SBM, which solves convex inverse problems with a simplified procedure.

A constrained optimization problem in complex variables

can be transformed into an unconstrained one

Evidently, F(x, d) is convex in x and d. Thus, by applying CV-BI to Equation (54) in each variable, we can derive that

To simplify the above iteration step Equation (55), we assume

and get

where

C1 is a constant. Substituting Equation (58) in Equations (55)–(57) yields

One can solve Equation (59) by an alternating minimization scheme with respect to x and d:

The above two subproblems can be worked out easily. Given the properties of CV-BI, it follows that CV-SBM is likewise applicable to any convex J(x).

The overall CV-SBM is summarized in Algorithm 2.

| Algorithm 2: CV-SBM |

| Initialization: x0 = 0, d0 = 0, p0 = 0, λ, μ, k = 1 |

| While “stopping criterion is not met” do |

| ; |

| ; |

| ; |

| k =k+1; |

| End while |

Assuming Φ = I, we can solve Equation (18) through CV-SBM in three steps [35]:

Step 1: Solve the x subproblem

Since the l2-norm is differentiable, Equation (63) can be solved by setting the CW-gradient with respect to x to zero, which yields

Step 2: Solve the d subproblem

This subproblem can be solved by a shrinkage operator

CV-SBM for the l1-norm problem is summarized in Algorithm 3.

| Algorithm 3: CV-SBM for l1-norm problem |

| Initialization: x0=0, d0=0, p0=0, λ, μ, k=1 |

| While “stopping criterion is not met” do |

| ; |

| ; |

| ; |

| k = k+1; |

| End while |
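The structure of Algorithm 3 (a linear solve for x, a shrinkage step for d, and a Bregman update) can be sketched in Python as follows. This is a minimal sketch under stated assumptions, not the authors' implementation: the objective 0.5·||Ax − y||² + lam·||d||_1 with the constraint d = x, the roles of `lam` and `mu`, the fixed iteration count in place of a stopping criterion, the function names, and the small noiseless test problem are all choices made for illustration, and the parameterization may differ from the one in the text.

```python
import numpy as np

def complex_shrink(z, t):
    """Complex soft-thresholding: reduce each modulus by t, keep the phase."""
    mod = np.abs(z)
    return z * np.maximum(mod - t, 0.0) / np.maximum(mod, 1e-30)

def split_bregman_l1(A, y, lam, mu, n_iter=1000):
    """Sketch for: min 0.5*||A x - y||^2 + lam*||d||_1  subject to d = x.

    Everything stays complex-valued; A is never expanded to a real
    2m x 2n matrix. Parameter roles are assumptions of this sketch.
    """
    n = A.shape[1]
    d = np.zeros(n, dtype=complex)
    b = np.zeros(n, dtype=complex)   # accumulated Bregman variable
    AH = A.conj().T                  # Hermitian transpose
    inv = np.linalg.inv(AH @ A + mu * np.eye(n))  # n x n complex inverse
    AHy = AH @ y
    for _ in range(n_iter):
        # x subproblem: quadratic, solved by setting the gradient to zero.
        x = inv @ (AHy + mu * (d - b))
        # d subproblem: element-wise complex shrinkage.
        d = complex_shrink(x + b, lam / mu)
        # Bregman update of the auxiliary variable.
        b = b + x - d
    return d

# Small noiseless test problem (hypothetical sizes, chosen for speed).
rng = np.random.default_rng(0)
n, m, L = 128, 64, 8
A = (rng.standard_normal((m, n)) + 1j * rng.standard_normal((m, n))) / np.sqrt(2 * m)
x_true = np.zeros(n, dtype=complex)
support = rng.choice(n, size=L, replace=False)
# Nonzero entries with modulus in [1, 2) and uniformly random phase.
x_true[support] = (1.0 + rng.random(L)) * np.exp(2j * np.pi * rng.random(L))
y = A @ x_true

x_hat = split_bregman_l1(A, y, lam=0.05, mu=1.0)
rel_err = np.linalg.norm(x_hat - x_true) / np.linalg.norm(x_true)
print("relative error:", rel_err)
```

Because the matrix in the x subproblem does not change between iterations, the sketch inverts it once outside the loop; for larger n, a factorization reused in every iteration would be the more usual choice.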

4. Numerical Experiments

This section evaluates the proposed CV-SBM through a range of experiments on l1-norm problems in the complex domain. We apply the proposed method to recover a complex-valued random sparse signal x from the noisy measurements y generated by y = Ax + ε, where x ∈ C^n, y ∈ C^m, A ∈ C^(m×n), and ε ∈ C^m. The sparse signal x has L nonzero elements, and the real and imaginary parts of the nonzero elements of x and of the entries of A follow the Gaussian distribution N(0, 1). The noise vector ε is assumed to be i.i.d. zero-mean complex Gaussian noise. The proposed scheme is compared with the following methods: classical OMP [46], CAMP, M-lasso, and the original SBM with the complex-to-real conversion technique [47]. In the following, the original SBM is called RV-SBM. In addition, Section 4.1.2 presents the performance of the proposed method in ISAR imaging.
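The test setup described above can be reproduced along the following lines. This Python sketch is only an illustration: the function names, the random seed, the SNR definition (ratio of the power of Ax to the power of ε), and the MSE definition (mean squared modulus of the error) are assumptions, since the text does not spell them out.

```python
import numpy as np

def make_problem(n=256, m=128, L=32, snr_db=15.0, seed=0):
    """Generate A, a sparse complex x, and noisy measurements y = A x + eps."""
    rng = np.random.default_rng(seed)
    # Real and imaginary parts of A drawn from N(0, 1).
    A = rng.standard_normal((m, n)) + 1j * rng.standard_normal((m, n))
    # Sparse signal: L nonzero entries at random positions.
    x = np.zeros(n, dtype=complex)
    support = rng.choice(n, size=L, replace=False)
    x[support] = rng.standard_normal(L) + 1j * rng.standard_normal(L)
    clean = A @ x
    # Assumed SNR definition: clean-measurement power over noise power.
    noise_power = np.mean(np.abs(clean) ** 2) / 10 ** (snr_db / 10)
    eps = np.sqrt(noise_power / 2) * (
        rng.standard_normal(m) + 1j * rng.standard_normal(m)
    )
    return A, x, clean + eps

def mse(x_hat, x):
    """Assumed MSE definition: mean squared modulus of the error."""
    return np.mean(np.abs(x_hat - x) ** 2)

A, x, y = make_problem()
noise = y - A @ x
realized_snr_db = 10 * np.log10(np.sum(np.abs(A @ x) ** 2) / np.sum(np.abs(noise) ** 2))
print("nonzeros:", np.count_nonzero(x), "realized SNR (dB):", realized_snr_db)
```

The realized SNR of a single draw fluctuates around the target because the noise power is fixed only in expectation.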

The stopping criterion for all algorithms is given as follows:

or

where tol = 2e−4 denotes the tolerance and kmax = 2000 is the maximum number of iterations. All experiments are carried out in MATLAB 2016b on a PC with an Intel i7-7700K CPU @ 4.2 GHz and 32 GB of memory.

4.1. An Illustrative Example

4.1.1. Complex-Valued Random Sparse Signal Recovery

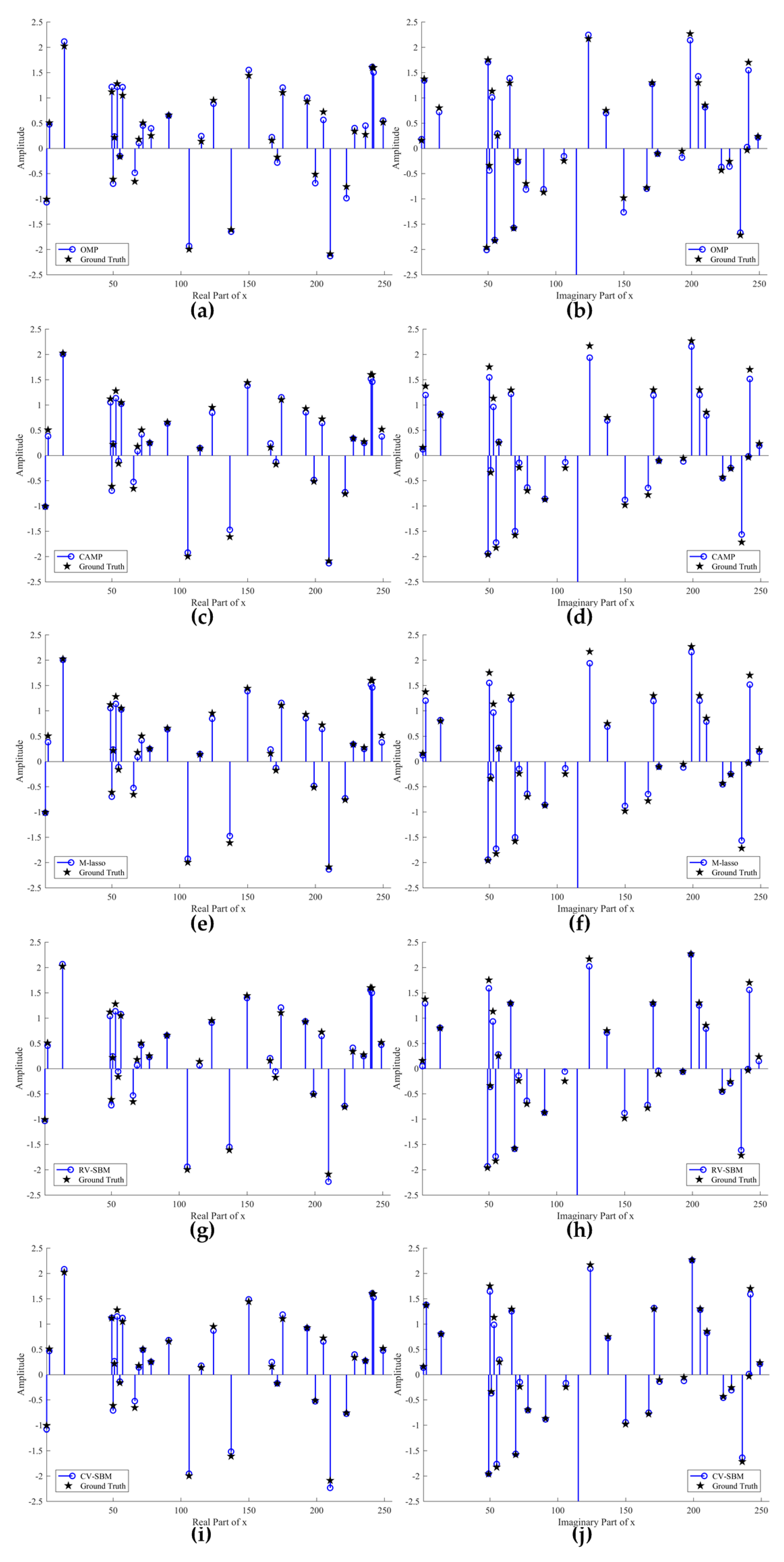

In this subsection, an illustrative example is devised to demonstrate the effectiveness of the proposed method in comparison with OMP, CAMP, M-lasso, and RV-SBM. The signal and measurement dimensions are n = 256 and m = 128, respectively. Moreover, the sparsity level of x is fixed at L = 32 and the signal-to-noise ratio (SNR) is set to 15 dB.

Figure 1 compares the real and imaginary parts of the signals reconstructed by the competing methods and by the proposed CV-SBM. The blue circles represent the recovered signal and the black stars denote the ground truth. Note that the zero-valued points of x are hidden to emphasize the nonzero ones in Figure 1. As shown in Figure 1a,b, OMP accurately reconstructs five points in the real part and nine points in the imaginary part (circle and star coincide). Unsurprisingly, many points lie far from their true values, especially the 150th point in the imaginary part. Figure 1c,d shows the reconstruction result of CAMP, which yields nine well-recovered points in both the real and imaginary parts. Outliers still exist, but fewer than for OMP. In Figure 1e,f, M-lasso accurately recovers eight and nine points in the real and imaginary parts, respectively. CAMP and M-lasso thus behave almost the same, and both are better than OMP. Figure 1g,h gives the recovery results of RV-SBM, whose real part shows eight well-reconstructed points and whose imaginary part shows 11. The proposed technique yields 10 and 15 accurately recovered points, shown in Figure 1i,j, the most among the algorithms. The numbers of accurately reconstructed points are compared in Table 1. In addition, the largest outlier produced by CV-SBM is comparable in size to RV-SBM’s but far smaller than those of the other methods. This demonstrates the effectiveness of CV-SBM for complex sparse signal recovery.

4.1.2. ISAR Imaging with Real Data

In this subsection, CV-SBM is applied to ISAR imaging with real data of the Yak-42 plane and compared with RV-SBM, the range-Doppler (RD) algorithm, and the CS recovery method of [48]. Detailed descriptions of the target and data are provided in [49]. The main radar parameters are as follows: the signal bandwidth is 400 MHz with a carrier frequency of 10 GHz, corresponding to a range resolution of 0.375 m. The pulse repetition frequency is 100 Hz, and 64 pulses within the dwell time [−0.32, 0.32] s are used in this experiment. The four algorithms are applied to motion-compensated data, and the results are shown in Figure 2a–d.
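The quoted range resolution and dwell time follow directly from the bandwidth, the pulse repetition frequency, and the number of pulses. The short Python check below uses the standard relation c / (2B) for the range resolution; the rounded speed of light and the variable names are assumptions of this sketch.

```python
# Speed of light in m/s, rounded (an assumption of this sketch).
C = 3.0e8

bandwidth_hz = 400e6   # signal bandwidth from the text
prf_hz = 100.0         # pulse repetition frequency from the text
n_pulses = 64          # number of pulses from the text

# Range resolution of a pulse-compression radar: c / (2 * B).
range_resolution_m = C / (2 * bandwidth_hz)

# Total dwell time covered by the pulses.
dwell_time_s = n_pulses / prf_hz

print("range resolution (m):", range_resolution_m)
print("dwell time (s):", dwell_time_s)
```

The dwell time of 0.64 s matches the symmetric interval [−0.32, 0.32] s given in the text.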

Figure 2a shows the result of the RD algorithm, which suffers from poor focusing and high side-lobes. In Figure 2b, many strong scatterers are extracted by [48]. However, several strong outliers, marked with red boxes, remain. Figure 2c shows the target’s geometry, and RV-SBM produces fewer outliers than [48]. In Figure 2d, the target’s geometry is clear, and the scatterers extracted by CV-SBM (marked with a red box) are stronger than those in the same area extracted by [48] and RV-SBM. Furthermore, most of the outliers shown in Figure 2b,c are greatly suppressed by the proposed CV-SBM. This demonstrates the effectiveness of CV-SBM in processing real ISAR data.

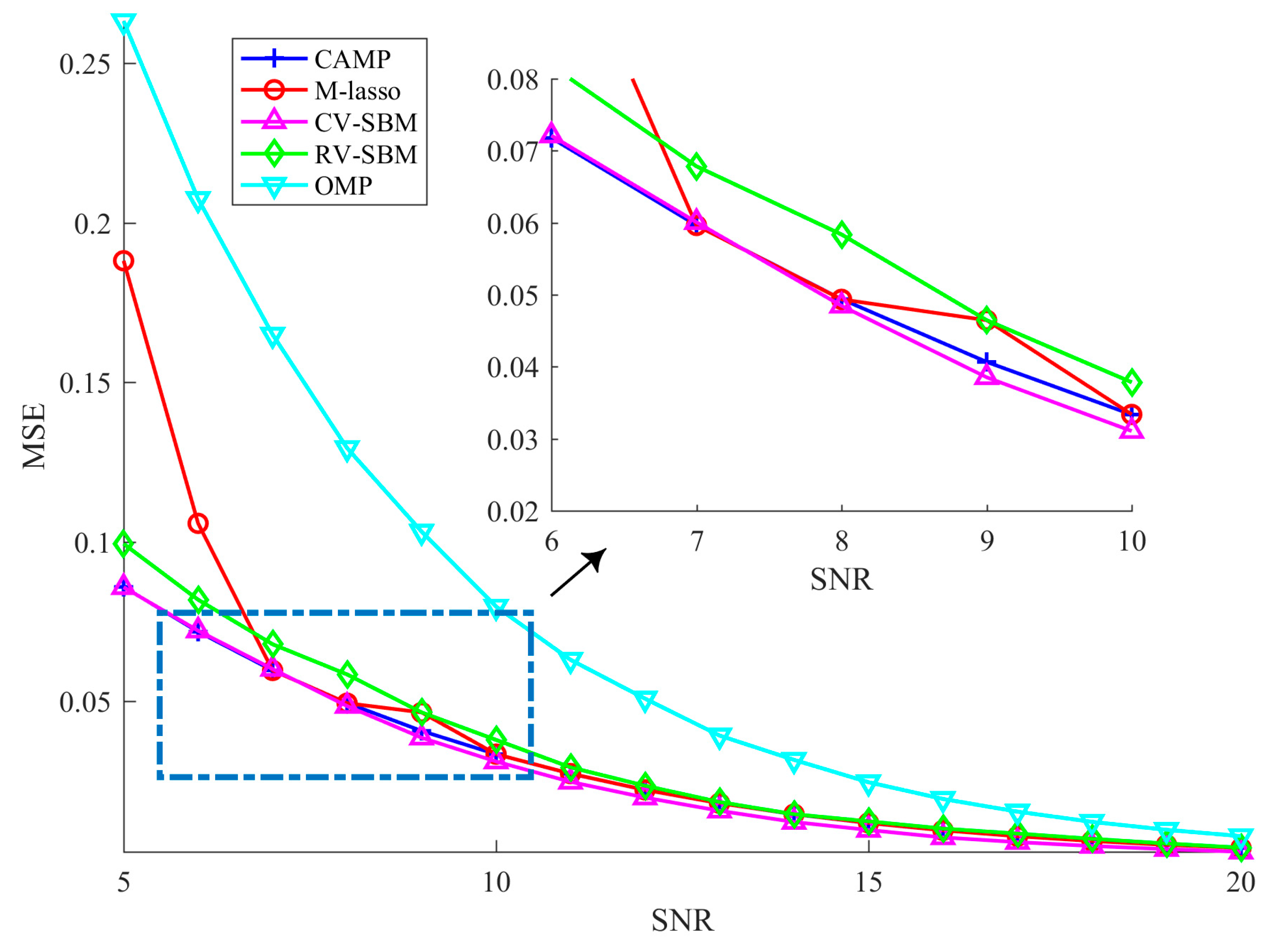

4.2. Robustness Against Measurement Noise

In this subsection, we test the robustness of the proposed technique against measurement noise. The SNR varies from 5 dB to 20 dB, and the other parameters are the same as in the previous subsection. For each SNR, we average the MSE over 100 independent trials, as shown in Figure 3.

As the SNR increases, the MSE of the proposed scheme declines, which implies that CV-SBM is robust to noise. Below 7 dB, the MSEs of CAMP and CV-SBM are almost the same; above 7 dB, the MSE of CV-SBM falls below CAMP’s and becomes the lowest among all the algorithms. The MSEs of both OMP and RV-SBM are higher than CV-SBM’s. In addition, CV-SBM performs better than M-lasso except at an SNR of 7 dB, where the two are approximately the same. This demonstrates that the proposed algorithm is more robust to measurement noise than the other methods.

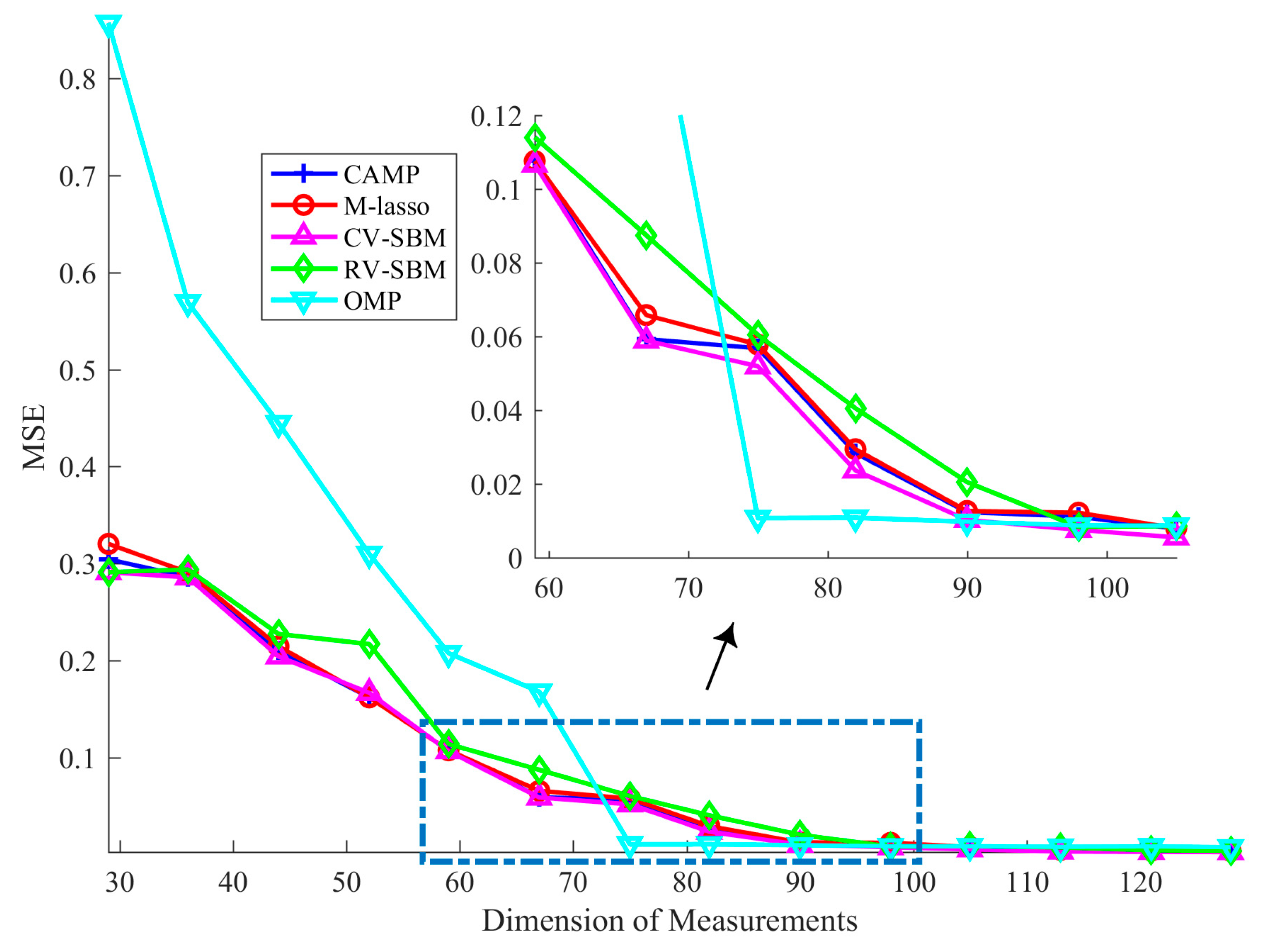

4.3. Influence of the Measurement Dimension

In this subsection, we examine how the dimension of the measurements influences the recovery result. We set n = 256, SNR = 15 dB, and L = 32, and vary m from 29 to 128. As in the previous subsection, we measure the average MSE over 100 independent trials, as shown in Figure 4. As the dimension of the measurements rises, the MSE of CV-SBM decreases, which means that the larger the dimension of the measurements, the better the recovery performance of the proposed method.

In Figure 4, the MSE of CV-SBM is lower than RV-SBM’s and M-lasso’s. Except when the measurement dimension equals 52 or 59, the proposed method also performs better than CAMP. Below a dimension of 75, the MSE of OMP is far worse than CV-SBM’s. Once the dimension exceeds 75, however, the MSE of OMP drops suddenly to 0.01 and then decreases slowly as the dimension grows, matching CV-SBM’s at 90. Beyond 90, the MSE of CV-SBM continues to decline and becomes the lowest among all the algorithms.

4.4. Time Cost Assessment

In this subsection, the computational cost of the proposed method is measured as the dimension of the signal increases. To this end, we vary n from 128 to 1024 and fix SNR = 20 dB, m = 0.5n, and L = 0.125n. For each n, we conduct 20 independent trials and average the CPU time, as shown in Figure 5.

The results show that CV-SBM takes less CPU time than OMP and M-lasso at all tested dimensions. Below a dimension of 512, RV-SBM requires the least time. Above 512, however, CV-SBM requires less CPU time than RV-SBM. This is because the complex-to-real transformation used in RV-SBM expands the sensing matrix A to 2m × 2n, which leads to an inverse matrix with 2n × 2n elements and takes more memory and time during the solution process, while CV-SBM only needs to compute a complex inverse matrix with n × n elements. Nevertheless, CV-SBM takes slightly more time than CAMP at large signal scales, because CAMP is designed specifically for l1-norm problems whereas CV-SBM contains a matrix inversion. Since the gap between CAMP and CV-SBM is small, CV-SBM still has the potential to outperform CAMP.
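The growth of the problem size under the complex-to-real transformation can be made concrete with a small Python sketch. It builds the standard real-valued equivalent of a complex linear system, in which the real and imaginary parts are stacked; the function name and the tiny dimensions are assumptions chosen for illustration, and the exact stacking order used by RV-SBM may differ.

```python
import numpy as np

def complex_to_real(A, x):
    """Real-valued equivalent of y = A x with stacked real/imaginary parts."""
    # [[Re(A), -Im(A)], [Im(A), Re(A)]] has shape (2m, 2n).
    A_real = np.block([[A.real, -A.imag], [A.imag, A.real]])
    # [Re(x); Im(x)] has length 2n.
    x_real = np.concatenate([x.real, x.imag])
    return A_real, x_real

rng = np.random.default_rng(0)
m, n = 4, 8
A = rng.standard_normal((m, n)) + 1j * rng.standard_normal((m, n))
x = rng.standard_normal(n) + 1j * rng.standard_normal(n)

A_real, x_real = complex_to_real(A, x)
y = A @ x                   # complex measurements, length m
y_real = A_real @ x_real    # stacked real measurements, length 2m

print("complex A:", A.shape, "real A:", A_real.shape)
print("element count ratio:", A_real.size / A.size)
```

The real matrix holds four times as many entries as the complex one, and the matrix to be inverted in the x subproblem grows from n × n to 2n × 2n accordingly.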

4.5. Performance Comparison with RV-SBM

The tests above have shown that the proposed CV-SBM performs remarkably well compared with RV-SBM in the same experimental environment. In this subsection, we therefore examine the convergence, time cost, and accuracy of CV-SBM and RV-SBM in experiments with various parameters. In the following experiments, the two main parameters of CV-SBM and RV-SBM are set to λ = 0.005 and μ = 120, and the stopping criterion (the tolerance tol and kmax) varies. The other experimental parameters are as follows: L = 0.125n, m = 0.5n, and the SNR takes the values 10 dB, 15 dB, and 20 dB. For each stopping criterion and SNR, 20 independent trials are carried out, and the average MSE, CPU time, and number of iterations are reported. The average MSE and CPU time of the proposed method are also presented where CV-SBM performs better.

In the first test, we examine CV-SBM and RV-SBM at the small scale n = 256 with tol = 2e−4 and kmax = 2000 fixed, as shown in Table 2. At every SNR, RV-SBM satisfies the stopping criterion of Equation (69) in the vast majority of trials and requires less CPU time and fewer iterations, while the MSE of CV-SBM is always lower. This implies that RV-SBM converges more rapidly, but at the cost of a severe performance loss. CV-SBM converges about five times more slowly than RV-SBM, but the additional iterations allow it to attain better performance. Note that RV-SBM is not five times faster than CV-SBM in average CPU time, because a minority of RV-SBM trials still use all 2000 iterations.

To compare the performance independently of the convergence condition, we reduce the tolerance to tol = 2e−5 and keep the other parameters the same as in the previous experiment (Table 3). In every case in Table 3, both RV-SBM and CV-SBM stop by reaching the maximum number of iterations. The MSE gap between CV-SBM and RV-SBM is extremely narrow but still exists. This illustrates that CV-SBM achieves better performance by exploiting the phase information. Furthermore, as in the first experiment, CV-SBM still requires slightly more time than RV-SBM.

Finally, the large scale n = 512 is considered (Table 4). The tolerance tol and the maximum number of iterations kmax are the same as in Table 3. CV-SBM achieves superior performance in terms of both MSE and time cost in comparison with RV-SBM. Specifically, CV-SBM yields 18.20%, 17.58%, and 26.67% lower MSE and requires 28.75%, 25.59%, and 23.64% less CPU time than RV-SBM at SNRs of 10 dB, 15 dB, and 20 dB, respectively. This indicates that the proposed CV-SBM is well suited to large-scale complex-valued sparse signal recovery.