Author Contributions

Conceptualization, M.S., J.I.H., H.S.C., and Y.S.B.; formal analysis, M.S. and J.I.H.; funding acquisition, M.S., H.S.C., and Y.S.B.; investigation, J.I.H., H.S.C., and S.M.H.; methodology, M.S. and J.-H.K.; project administration, Y.S.B.; resources and supervision, J.-H.K. and Y.S.B.; validation, M.S. and S.M.H.; data curation, software, visualization, and writing – original draft, M.S.; writing – review and editing, M.S., J.-H.K., and Y.S.B.

Figure 1.

Subject wearing the lower limb exoskeleton and main configuration of the system.

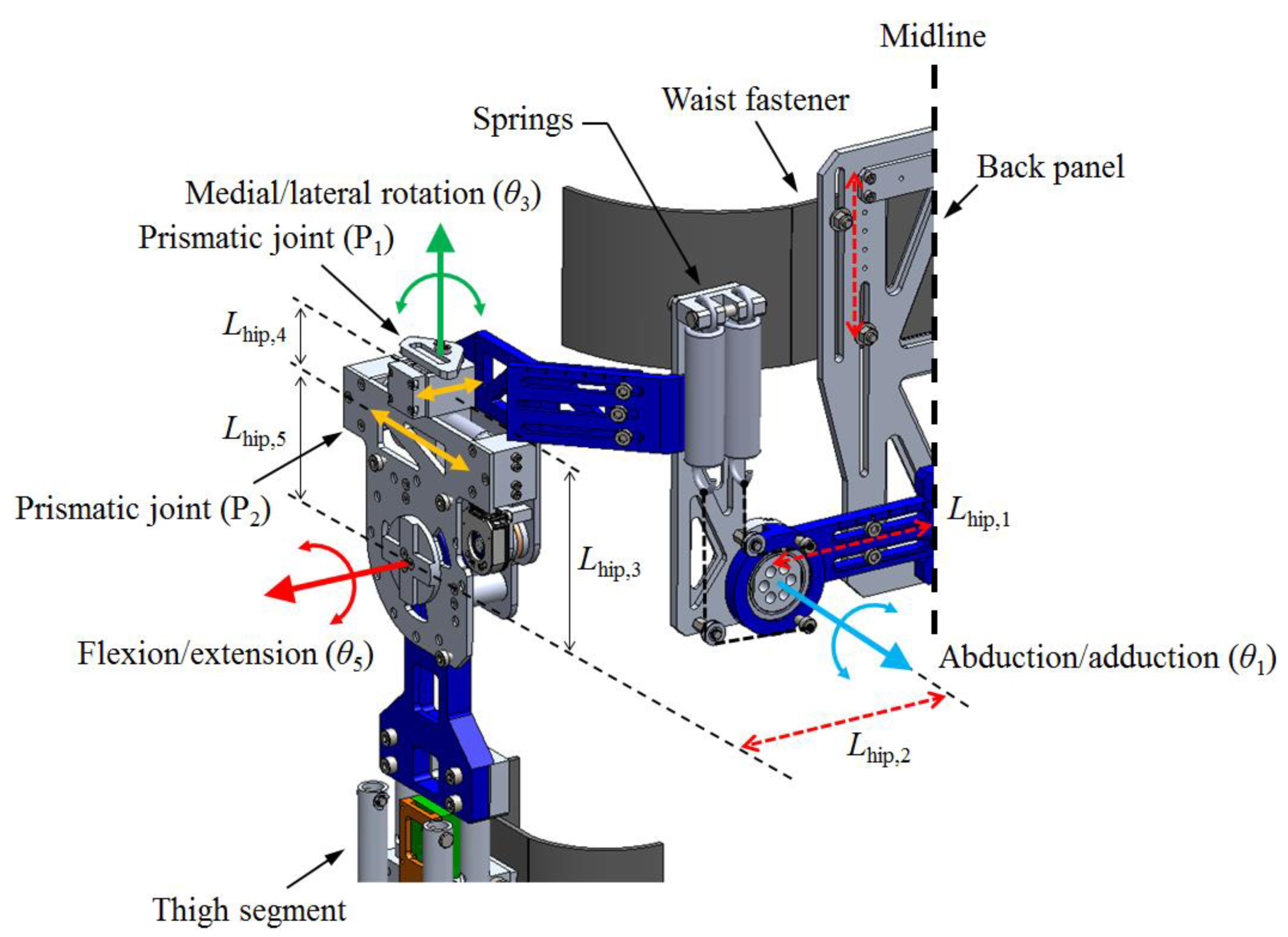

Figure 2.

Hip joint structure for hip flexion/extension, abduction/adduction, and medial/lateral rotation.

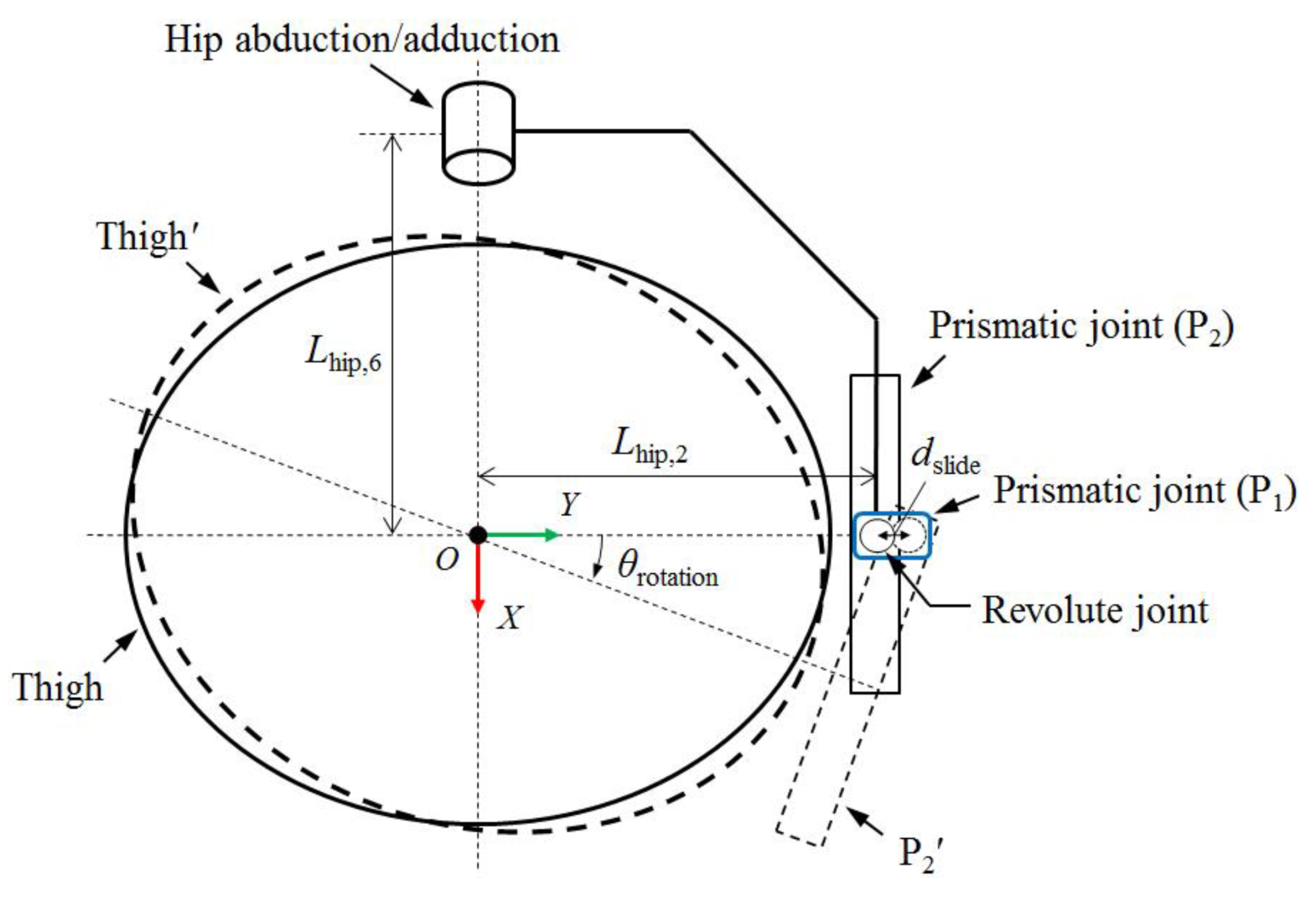

Figure 3.

Schematic of the remote-center rotation of the hip medial/lateral rotation joint in the transverse view.

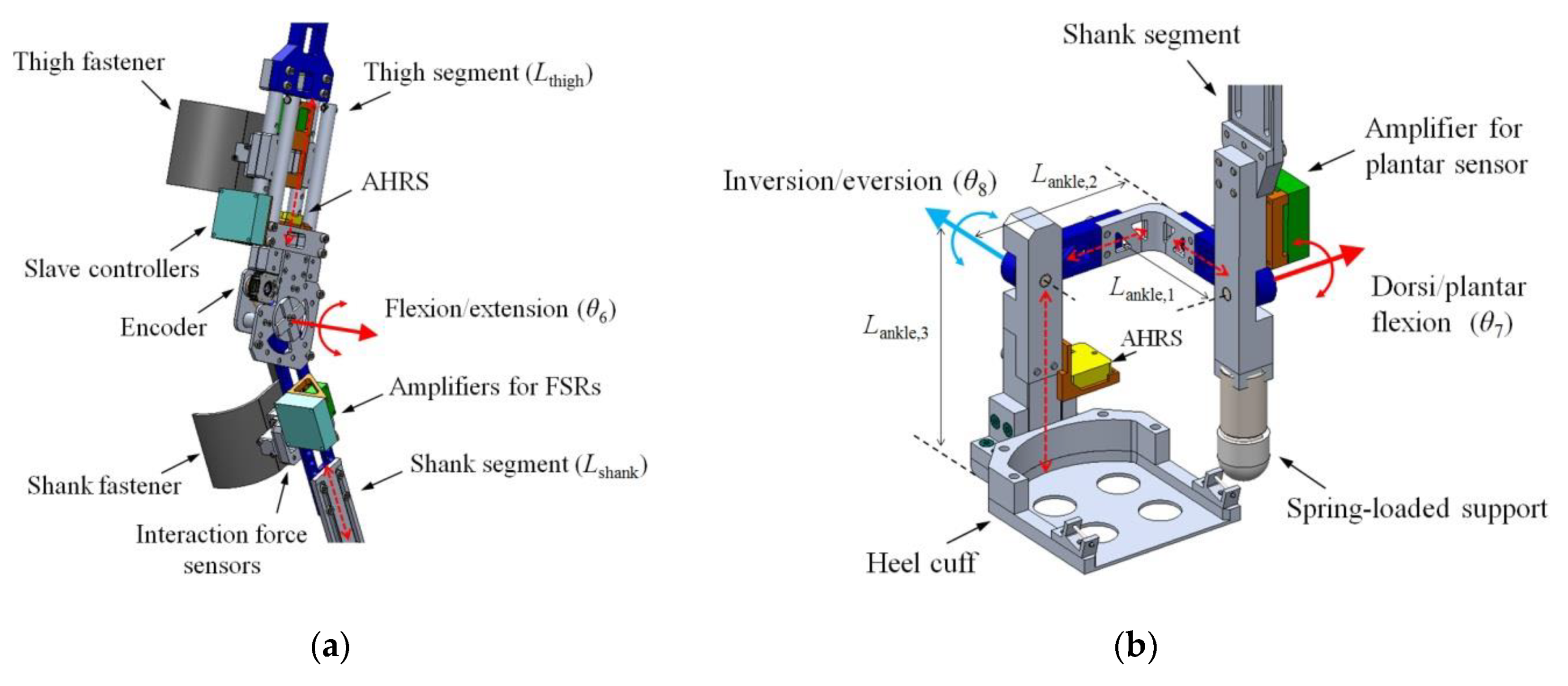

Figure 4.

Configuration of (a) the joint for knee flexion/extension and (b) the joint for ankle dorsi/plantar flexion and inversion/eversion.

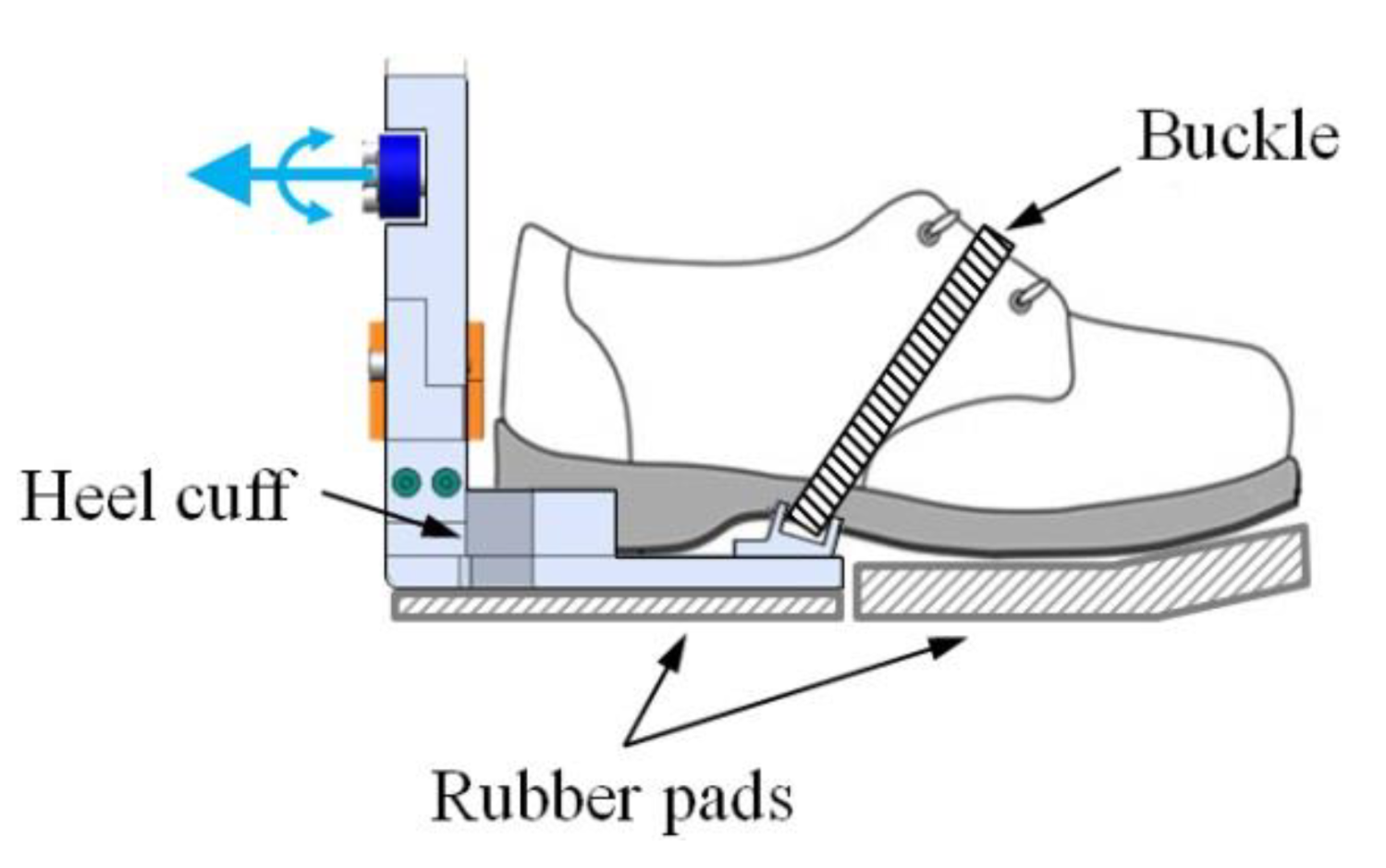

Figure 5.

Foot configuration of the exoskeleton. The forefoot can bend freely to ensure smooth ground contact.

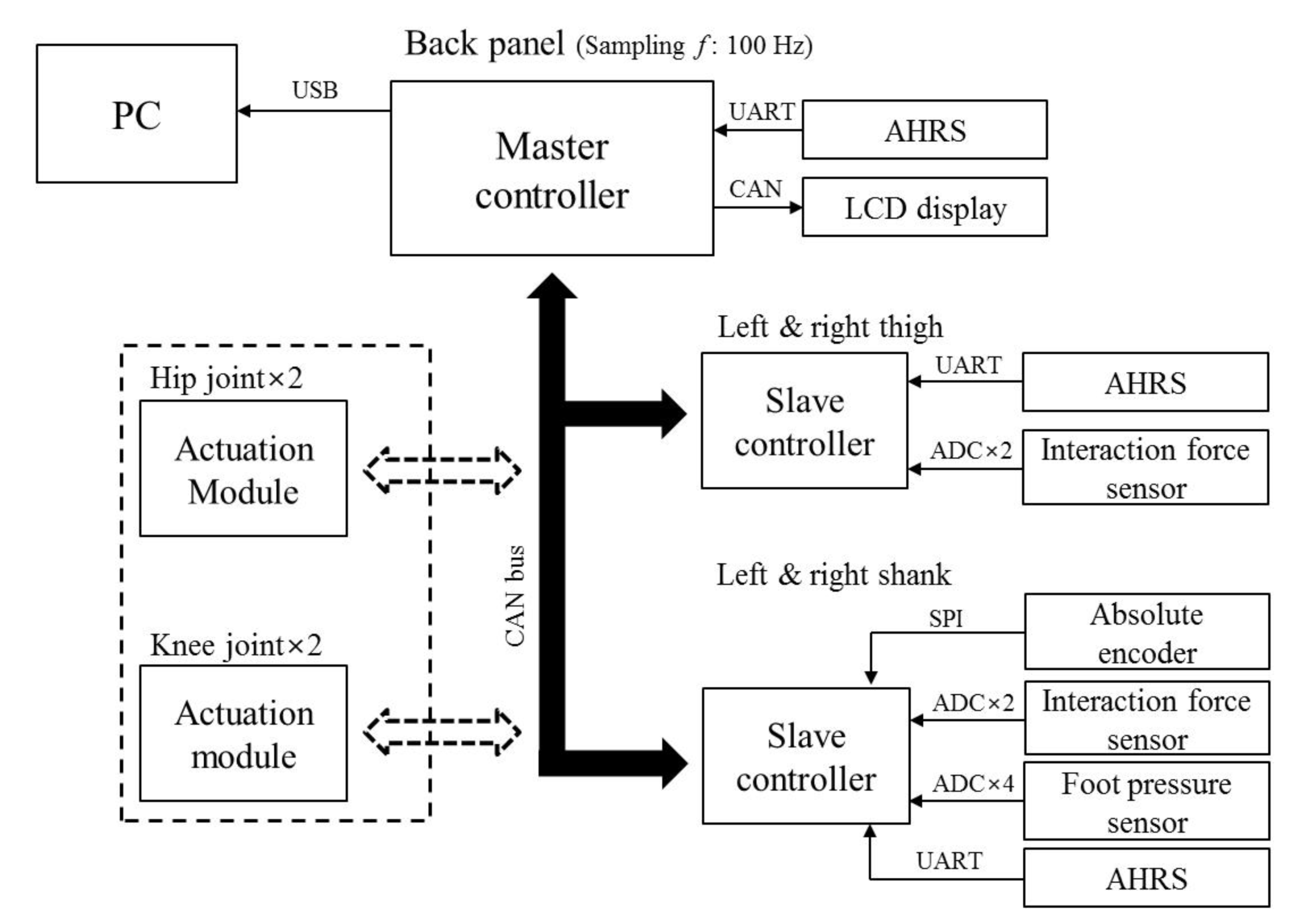

Figure 6.

Schematic of the control system.

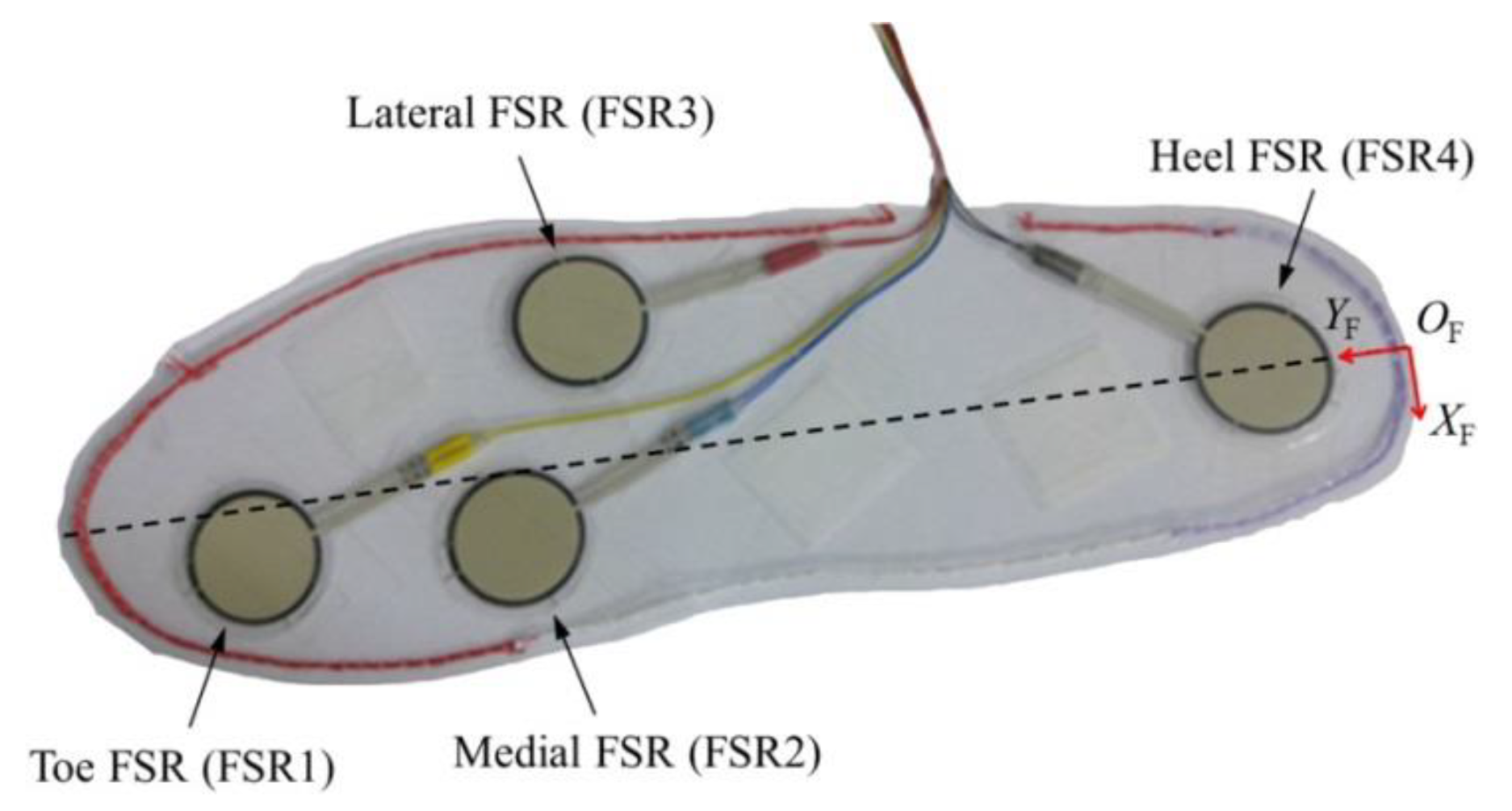

Figure 7.

Insole sensor for measuring plantar pressure distribution.

Figure 8.

Custom-made sensor for measuring the interaction forces on the thigh and shank segments.

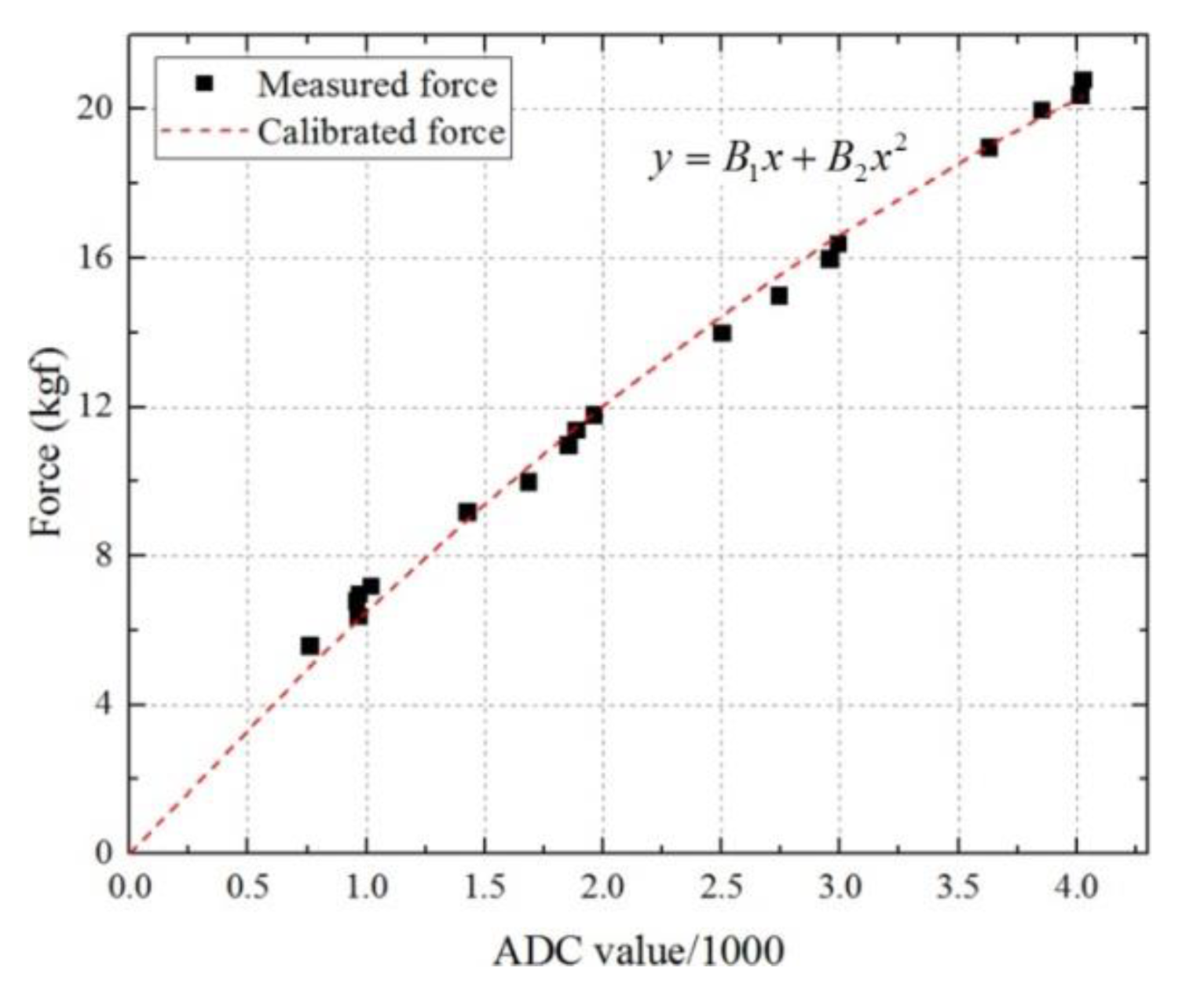

Figure 9.

Calibration curve of the FSR in the range of 0 to 20 kgf. The ADC values divided by 1000 were fitted with a second-order polynomial. The coefficients of this curve, B1 and B2, are 6.97 and −0.47, respectively.
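
The caption gives only two coefficients, so the exact form of the polynomial is not stated here. One plausible reading, and an assumption on our part, is F = B1·x + B2·x² with x = ADC/1000 and no constant term (the curve passes through 0 kgf at 0 counts). Under that assumption, applying the calibration and refitting it by least squares could be sketched as follows; the function names are hypothetical.

```python
import numpy as np

# Coefficients from Figure 9; the form F = B1*x + B2*x**2 is an assumption.
B1, B2 = 6.97, -0.47


def fsr_force_kgf(adc, b1=B1, b2=B2):
    """Convert raw ADC counts to force [kgf], assuming F = b1*x + b2*x**2, x = adc/1000."""
    x = np.asarray(adc, dtype=float) / 1000.0
    return b1 * x + b2 * x**2


def fit_fsr_calibration(adc, force_kgf):
    """Least-squares fit of F = b1*x + b2*x**2 (no constant term) to calibration samples."""
    x = np.asarray(adc, dtype=float) / 1000.0
    design = np.column_stack([x, x**2])  # one column per polynomial term
    (b1, b2), *_ = np.linalg.lstsq(design, np.asarray(force_kgf, dtype=float), rcond=None)
    return b1, b2
```

If the actual calibration includes an offset term, a column of ones would be added to the design matrix.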

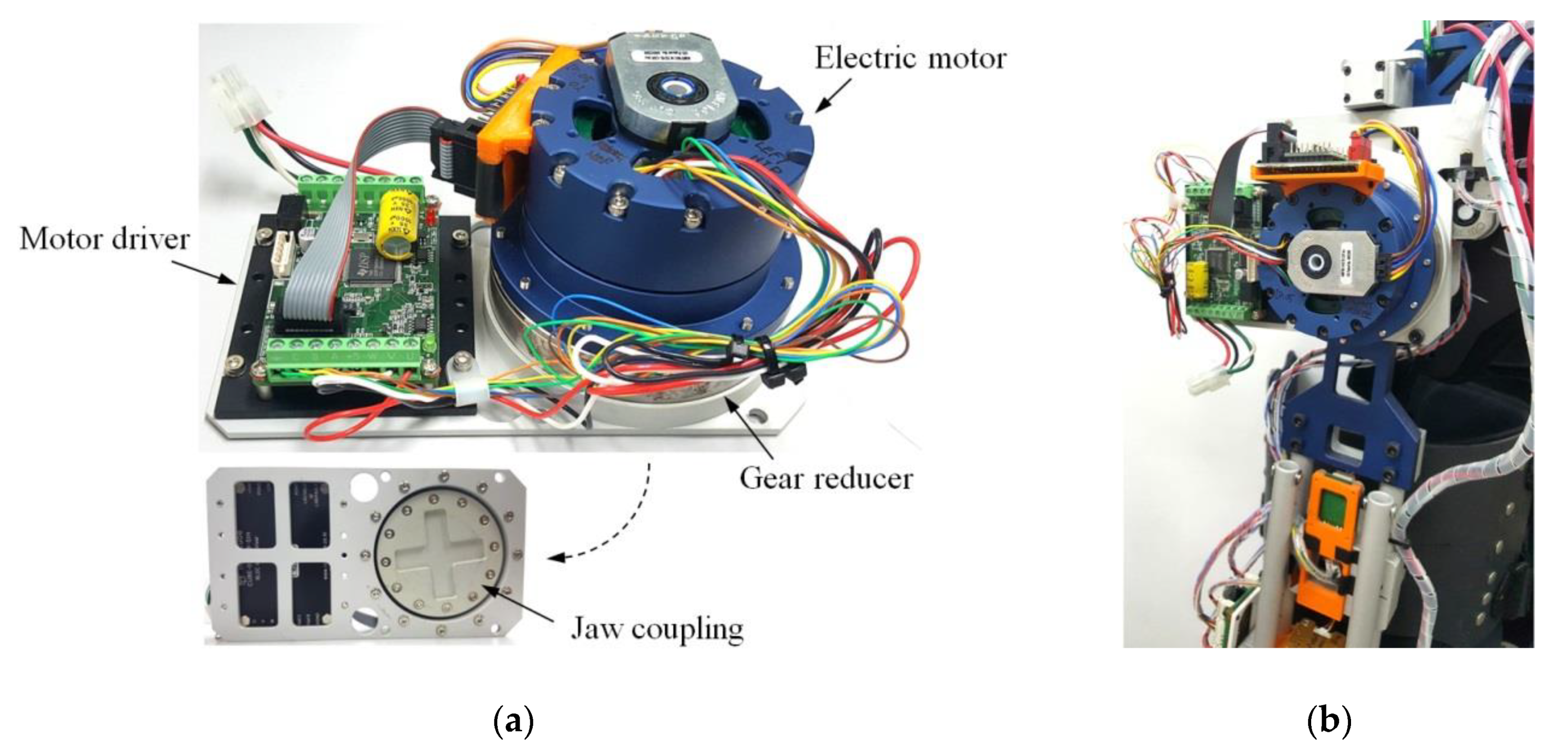

Figure 10.

(a) Composition of the actuation module and (b) the assembly state with the hip joint of the exoskeleton.

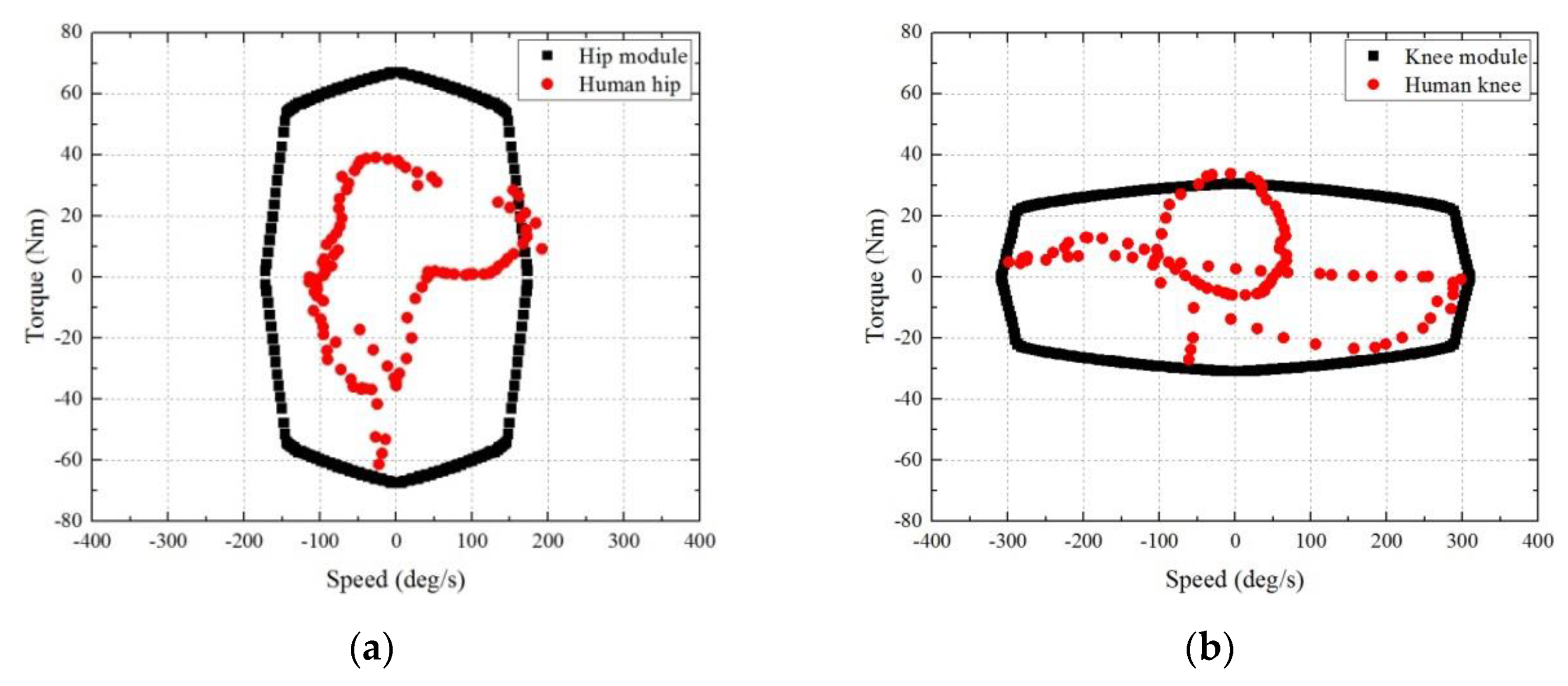

Figure 11.

Comparison of the torque–speed characteristics of the actuation module and the human (a) hip and (b) knee joints during walking. The angular speed of each joint was calculated based on a stride time of 1.1 s.
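
Walking joint angles are commonly reported over a normalized gait cycle, so a stride time is needed to turn them into angular speeds. A minimal sketch, assuming the angles are sampled uniformly from 0% to 100% of the cycle (the caption states only the 1.1 s stride time) and using central differences via NumPy's `gradient`; the function name is hypothetical.

```python
import numpy as np


def joint_speed_from_cycle(angle_deg, stride_time=1.1):
    """Angular velocity [rad/s] of a joint-angle trajectory over one gait cycle.

    angle_deg   -- joint angles in degrees, sampled uniformly with the first
                   and last samples at 0 % and 100 % of the cycle (assumption)
    stride_time -- duration of one stride in seconds
    """
    angle = np.radians(np.asarray(angle_deg, dtype=float))
    if angle.size < 2:
        raise ValueError("need at least two samples")
    dt = stride_time / (angle.size - 1)  # time between consecutive samples
    return np.gradient(angle, dt)        # central differences inside, one-sided at ends
```

Pairing the absolute value of this speed with the joint torque at each sample gives the points of a torque–speed plot.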

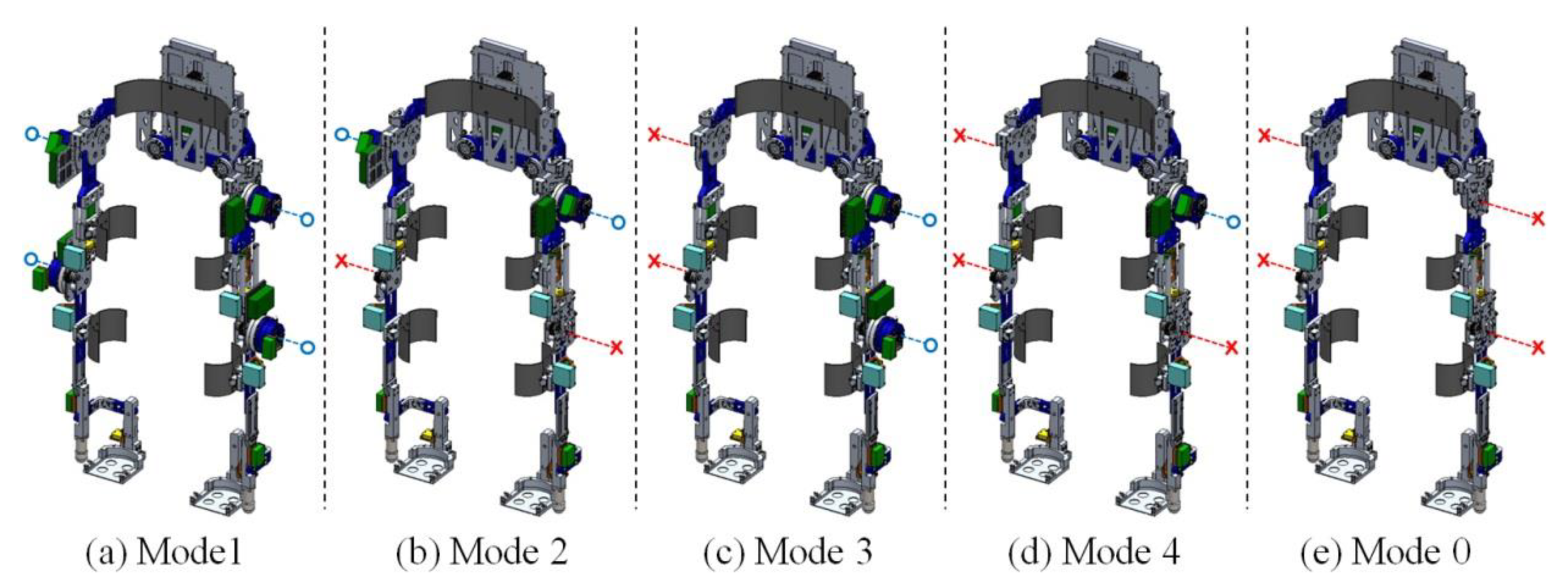

Figure 12.

Configuration of the five modes of the exoskeleton according to the assembly of the actuation modules. A blue circle indicates that an actuation module is assembled to the joint, and a red cross indicates that it is not. The modes can be used to assist (a) the hip and knee joints of both legs; (b) the hip or knee joints of both legs; (c) the hip and knee joints of one leg; and (d) a single joint of the lower limb. (e) Mode 0 cannot assist the wearer's joints with actuation modules, but it can still measure the wearer's motion using the embedded sensors.

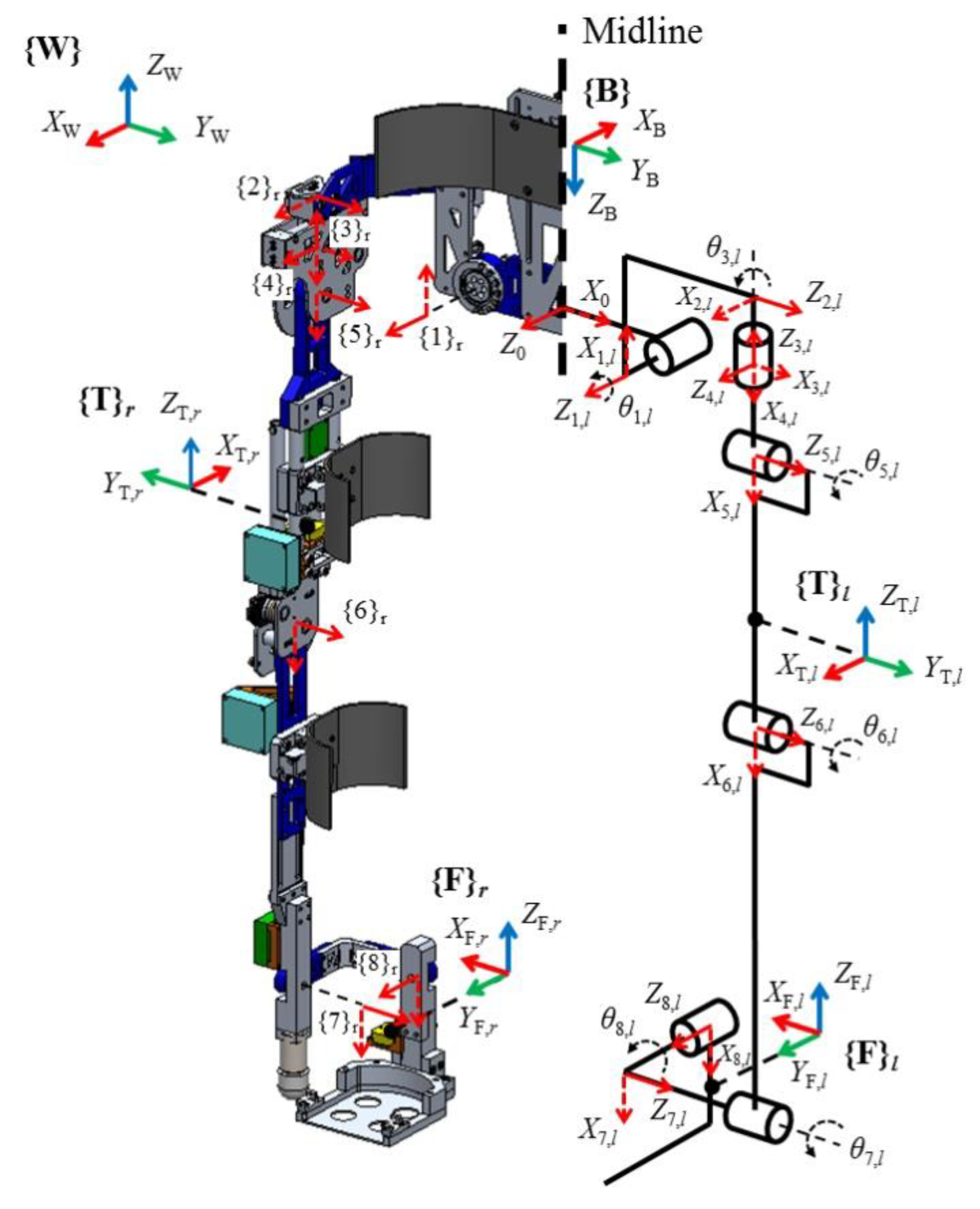

Figure 13.

Kinematic model of the exoskeleton and its frame attachment.

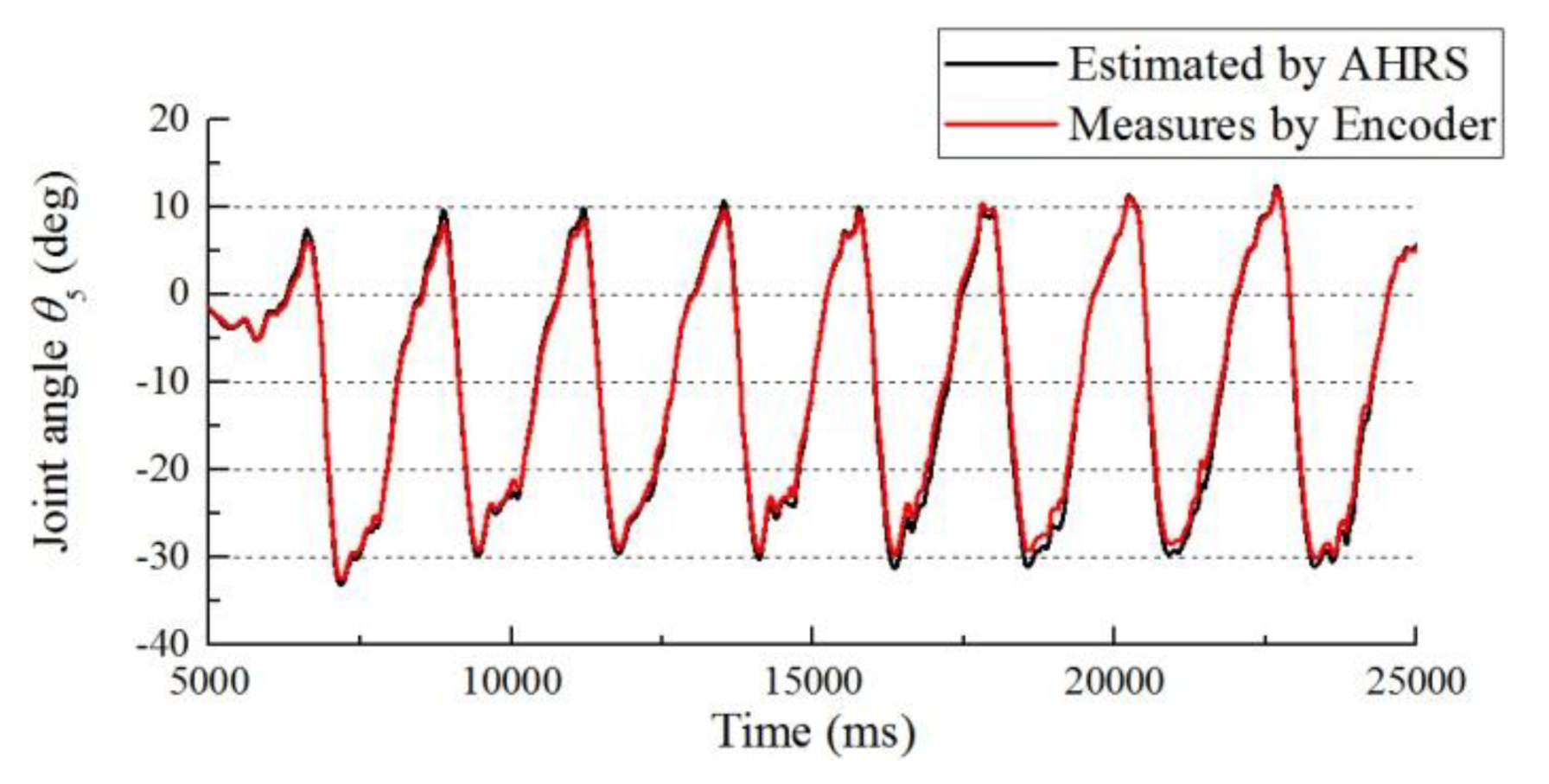

Figure 14.

Joint angle estimation result of θ5 compared with the angle measured by an encoder.

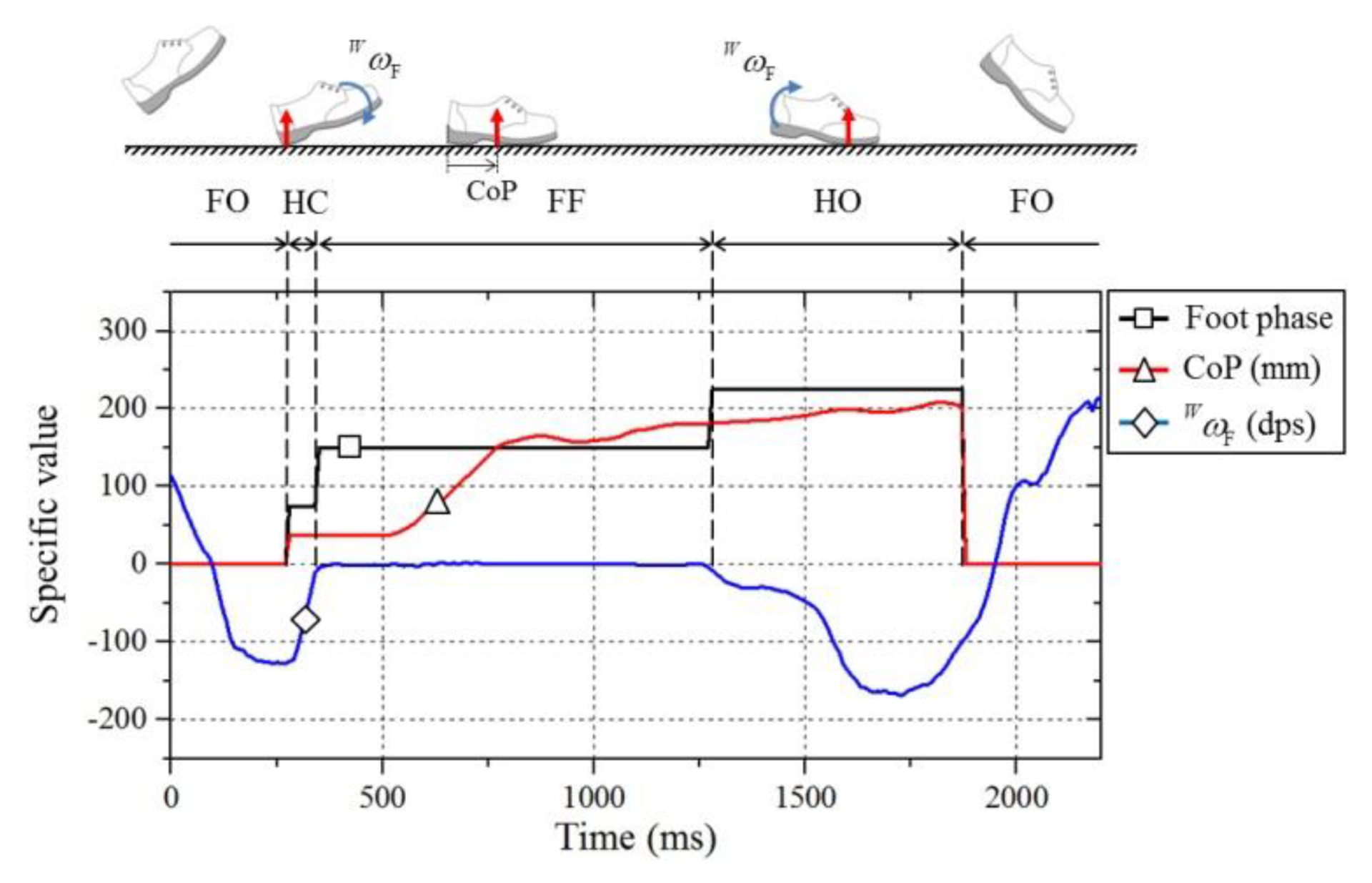

Figure 15.

Result of foot contact phase determination using the center of pressure (CoP) and the angular velocity of the foot (ωF) with respect to frame {W}.

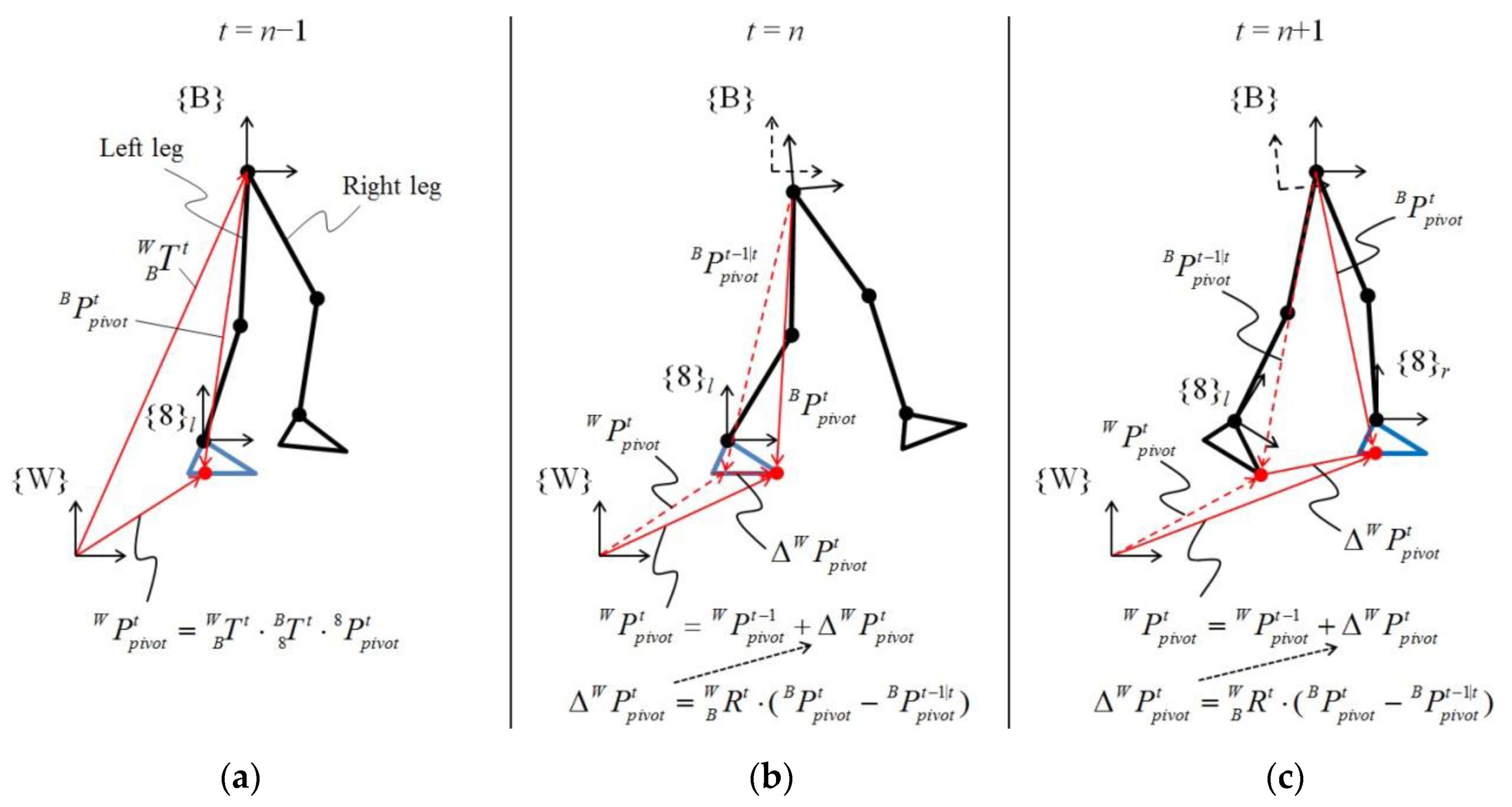

Figure 16.

Calculation of the pivot vector in frame {W} according to the contact phase of the feet. (a) When a foot first contacts the ground, the pivot is set to the CoP of the supporting leg. (b) As the CoP moves during the foot-flat phase, the pivot is continuously updated to follow the CoP. (c) When support switches to the other leg, the position vector between the two feet is used to update the pivot.
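
The three rules in this caption can be read as a form of leg odometry. The sketch below is one hypothetical way to implement them, not the authors' code. It assumes that, at every sample, the kinematic model supplies (i) the vector from the exoskeleton base to each foot's CoP with {W}-aligned axes and (ii) each foot's CoP position relative to a fixed point on its sole, also with {W}-aligned axes, and that the supporting foot does not slip during foot flat. The class, method, and argument names are illustrative.

```python
import numpy as np


class PivotTracker:
    """Hypothetical pivot tracker following the three rules of Figure 16.

    Per-sample inputs (illustrative names, not from the paper):
      support    -- 'L', 'R', or None when neither foot is in contact
      cop_w      -- dict: foot -> vector from the exoskeleton base to that
                    foot's CoP, expressed with {W}-aligned axes
      sole_cop_w -- dict: foot -> CoP position relative to a fixed point on
                    that foot's sole, also with {W}-aligned axes
    """

    def __init__(self, start=(0.0, 0.0, 0.0)):
        self.pivot = np.asarray(start, dtype=float)  # pivot position in {W}
        self.support = None                          # current supporting foot
        self._prev_sole = None                       # last sole CoP of the support foot

    def update(self, support, cop_w, sole_cop_w):
        """Update the pivot; return the base position in {W} (None without contact)."""
        if support is None:
            return None  # hold the pivot; this sketch has no flight-phase estimate
        cop = np.asarray(cop_w[support], dtype=float)
        sole = np.asarray(sole_cop_w[support], dtype=float)
        if self.support is None:
            # (a) First contact: the support-foot CoP becomes the pivot,
            # which keeps its current value in {W}.
            pass
        elif support == self.support:
            # (b) Foot flat: the foot is assumed stationary, so the pivot
            # moves by the CoP displacement along the sole.
            self.pivot = self.pivot + (sole - self._prev_sole)
        else:
            # (c) Support switch: shift the pivot by the vector between the
            # old and new support-foot CoPs at the same instant.
            old = np.asarray(cop_w[self.support], dtype=float)
            self.pivot = self.pivot + (cop - old)
        self.support = support
        self._prev_sole = sole
        # The base lies at the pivot minus the base-to-CoP vector.
        return self.pivot - cop
```

Because each update only adds displacements, any error in the inputs accumulates step by step, which is one reason per-step position errors are a natural metric for this kind of estimate.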

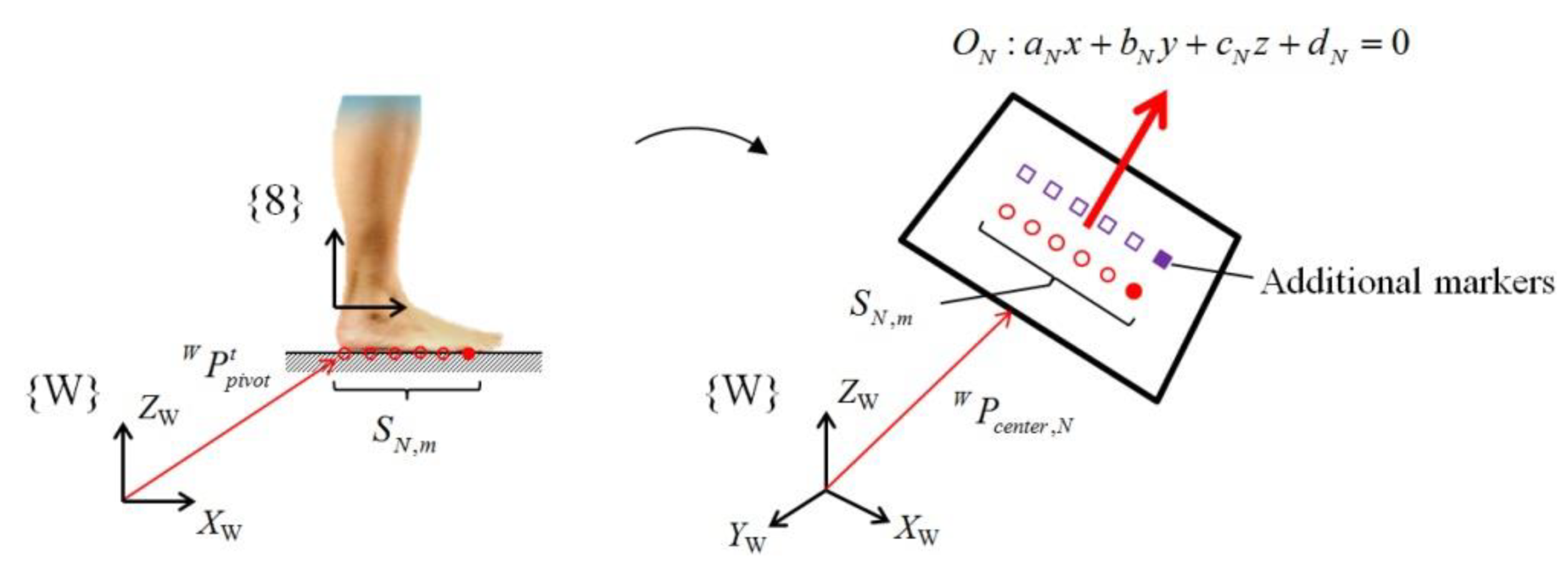

Figure 17.

Modelling of the walking ground as a plane using the collected pivot points of each step.

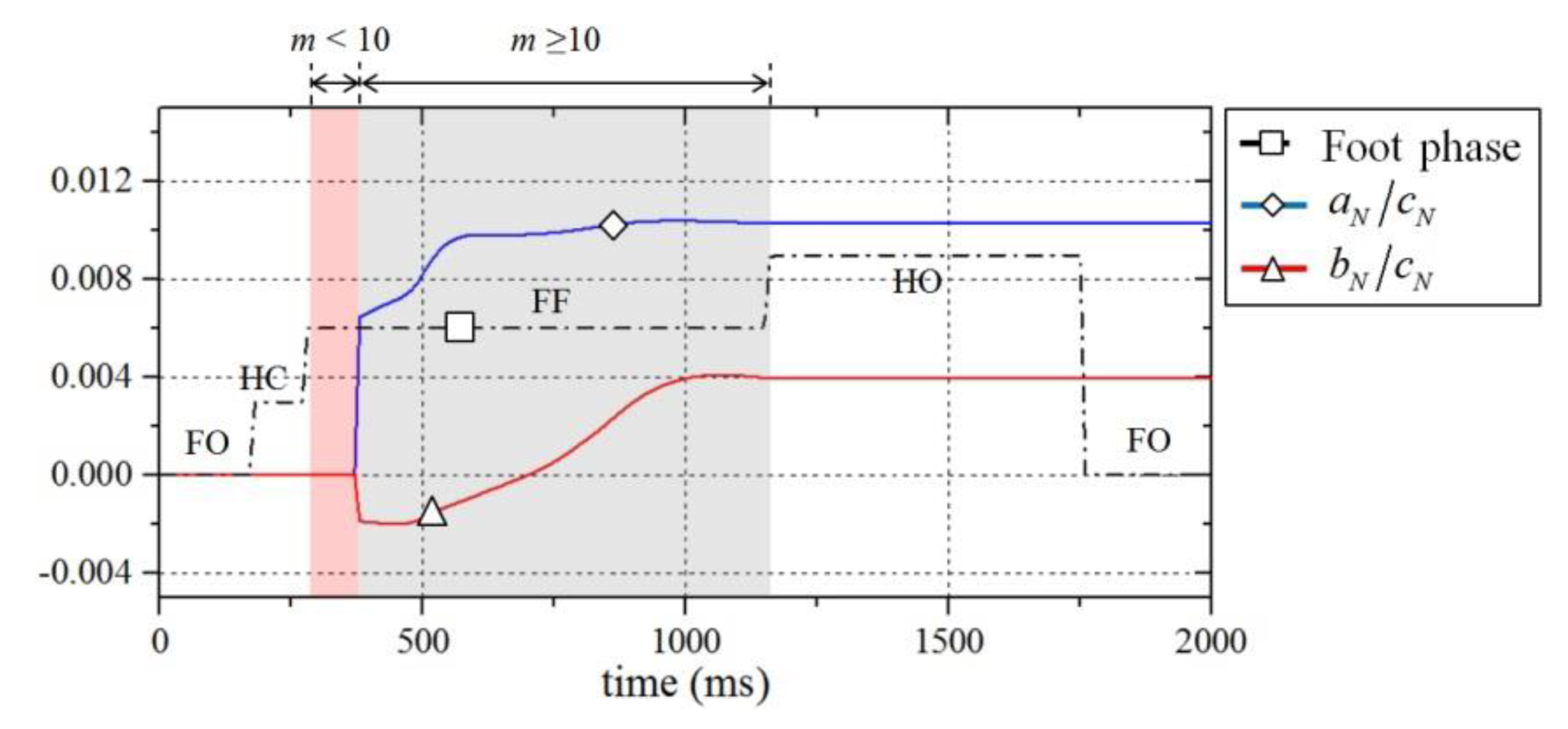

Figure 18.

Updating process of the plane coefficients calculated while the exoskeleton is in contact with the ground. The coefficient cN is a constant equal to −1.
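
Fixing cN = −1 turns the plane equation into an explicit height model, so the remaining coefficients can be obtained by linear least squares over the collected pivot points. The sketch below assumes the plane is written as a·x + b·y + cN·z + d = 0 (the names a, b, and d and the use of ordinary least squares are our assumptions; the captions do not name the solver). With cN = −1 this becomes z = a·x + b·y + d, and the terrain slope is the angle between the plane normal (a, b, −1) and the vertical axis, that is, arctan(√(a² + b²)).

```python
import numpy as np


def fit_ground_plane(pivots):
    """Fit z = a*x + b*y + d to pivot points (N x 3 array, N >= 3, not collinear).

    Equivalent to the plane a*x + b*y + c_N*z + d = 0 with c_N fixed at -1.
    """
    p = np.asarray(pivots, dtype=float)
    design = np.column_stack([p[:, 0], p[:, 1], np.ones(len(p))])
    (a, b, d), *_ = np.linalg.lstsq(design, p[:, 2], rcond=None)
    return a, b, d


def plane_slope_deg(a, b):
    """Angle [deg] between the plane normal (a, b, -1) and the vertical axis."""
    return float(np.degrees(np.arctan(np.hypot(a, b))))
```

Refitting each time a new pivot is added reproduces the kind of coefficient updates plotted in the figure.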

Figure 19.

Result of contact surface estimation for both feet during walking.

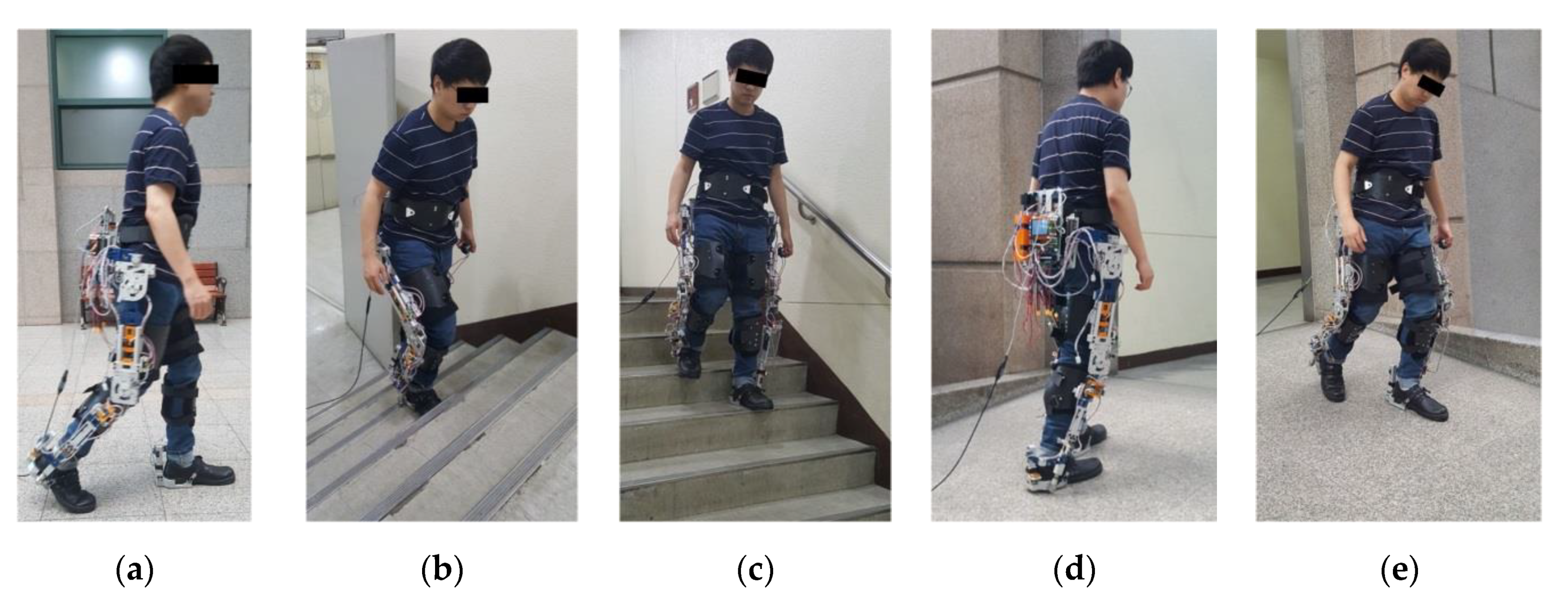

Figure 20.

Five types of ambulation tests, (a) level walk (LW), (b) stair ascent (SA), (c) stair descent (SD), (d) ramp ascent (RA), and (e) ramp descent (RD), conducted on three different terrains to validate the performance of the proposed method. Ramp ascent and descent were conducted on three ramps with different slopes to validate the performance of the slope estimation.

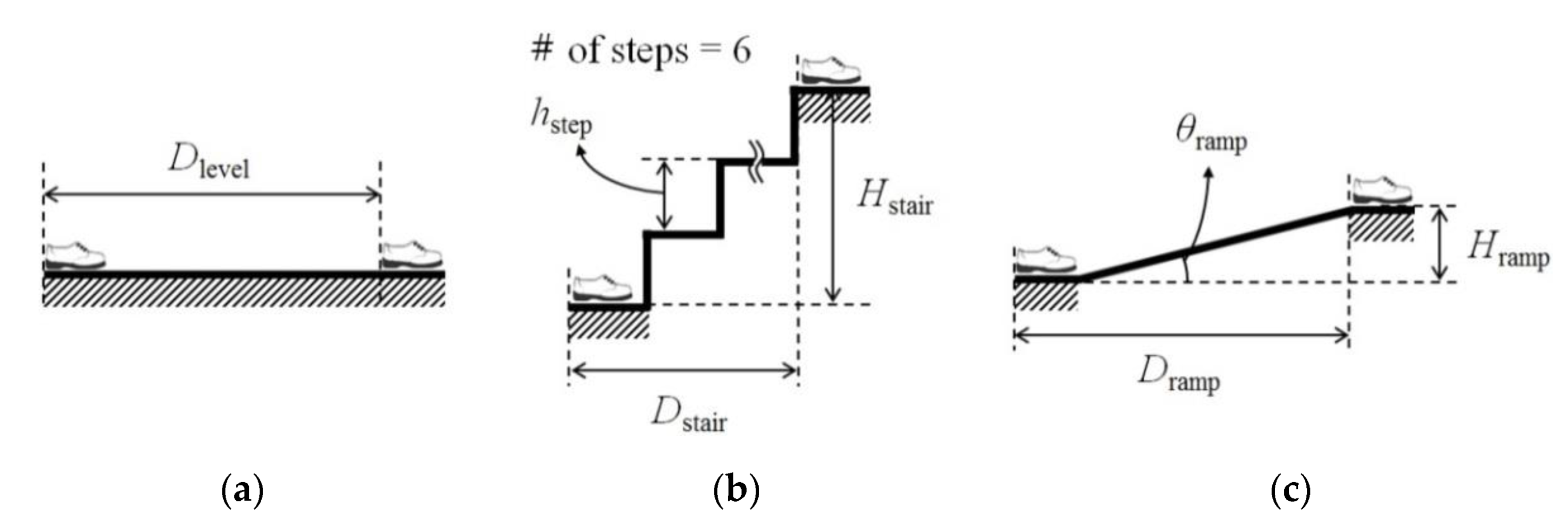

Figure 21.

Terrains used for the tests: (a) level ground, (b) stairs, and (c) ramp. The shoes in the figure indicate the start and end positions of the subject. The height of each step (hstep) of the stairs is 165 mm.

Figure 22.

Visualization of the position data of the exoskeleton in frame {W} for (a) level walk, (b) stair ascent, (c) stair descent, (d) ramp ascent, and (e) ramp descent.

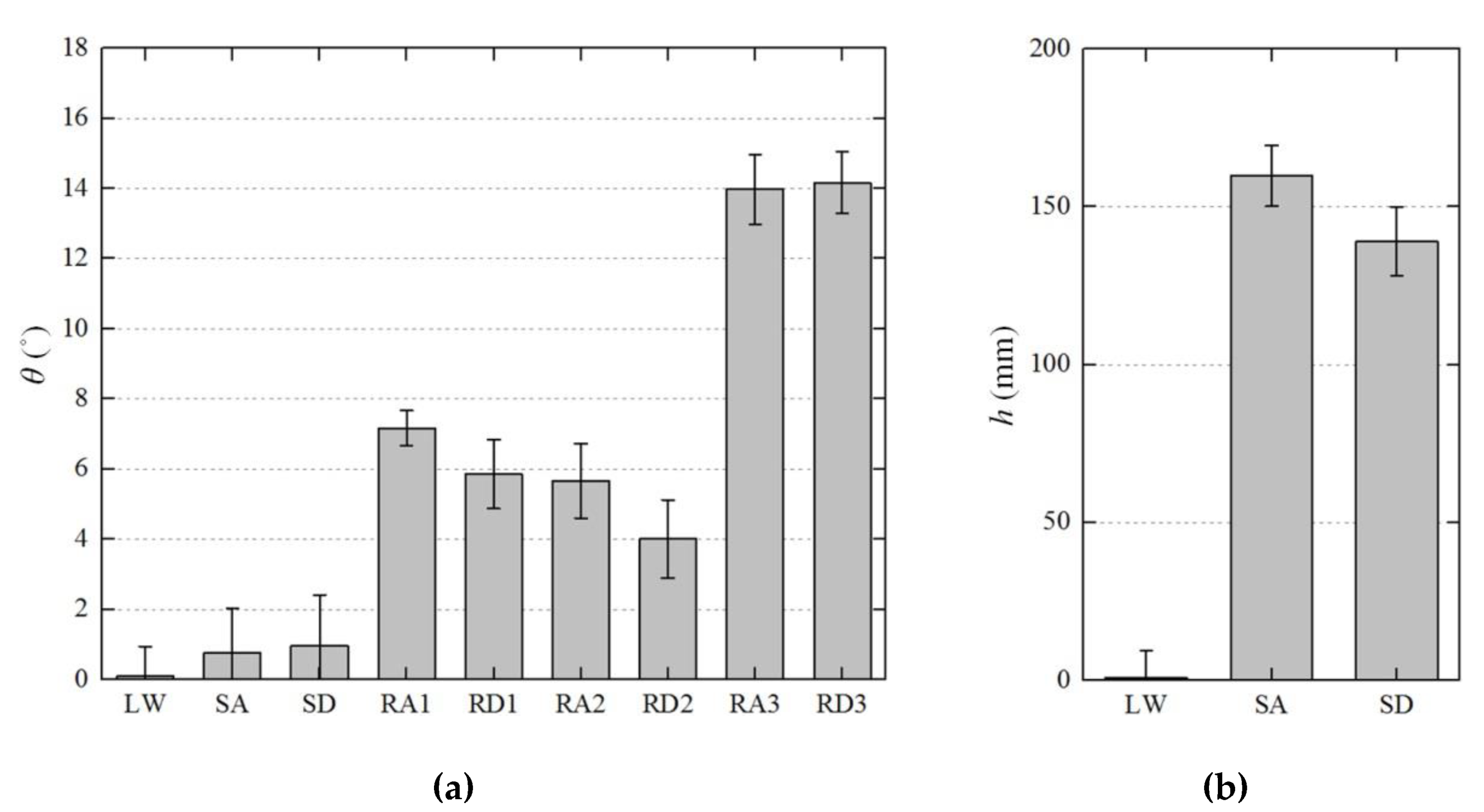

Figure 23.

Average (a) slope and (b) elevation estimation results of all tests for each terrain. Absolute values were used for the stair and ramp descent cases.

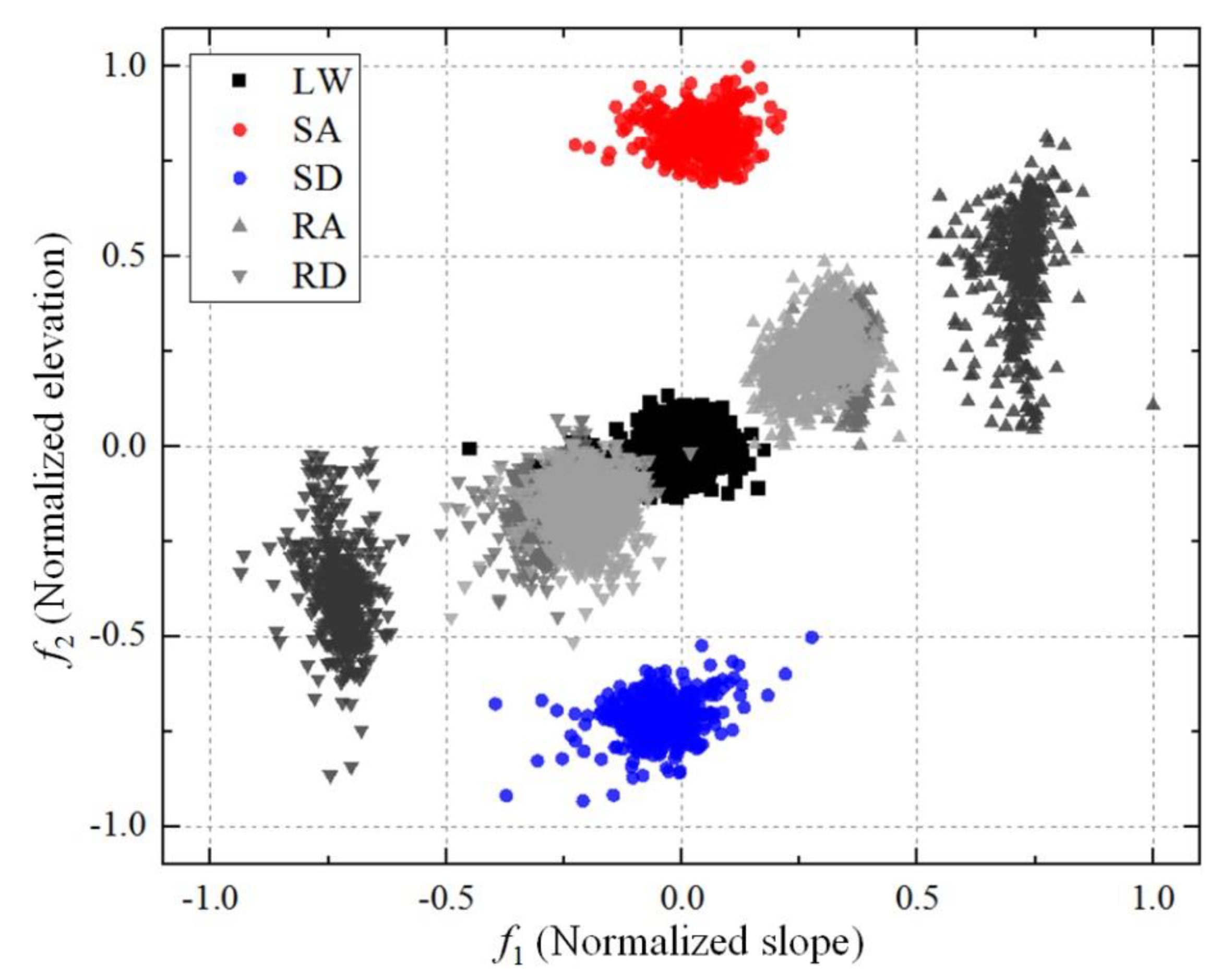

Figure 24.

Normalized terrain slope and elevation results in feature space. The maximum values of the estimated slope and elevation were used for normalization. The samples of RA and RD for different slopes are colored differently (light gray: ramp 1, medium gray: ramp 2, dark gray: ramp 3).

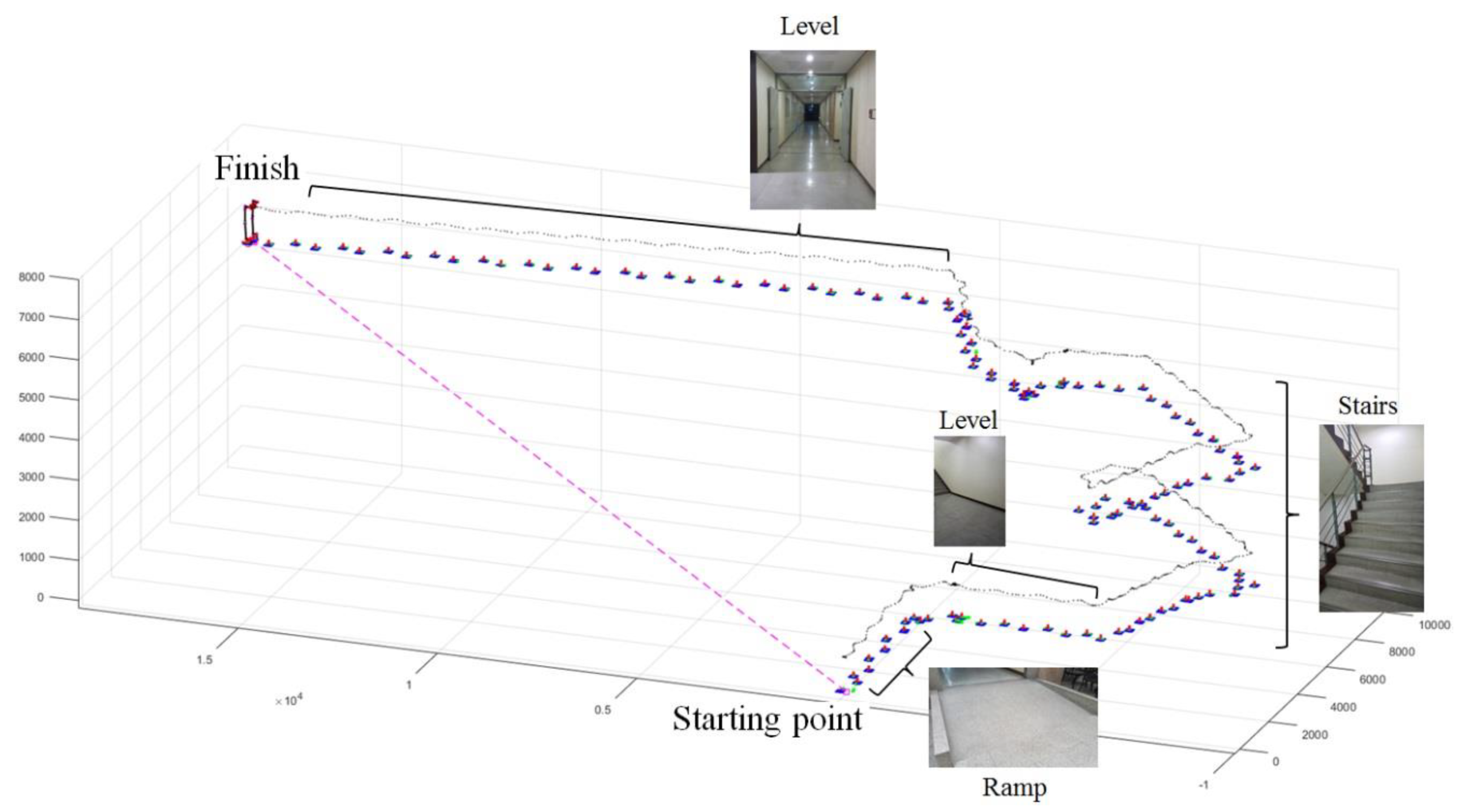

Figure 25.

Test result for the motion of the exoskeleton in a building environment. (A video showing the analysis results of this test is available in Supplementary Materials, Video S2.)

Table 1.

Joint ranges of motion of a human and the exoskeleton.

| Joint Motion | ROM (°) | In Walking (°) | Exoskeleton (°) |
|---|---|---|---|
| Hip flexion/extension | 120/30 | 36/6 | 110/25 |
| Hip adduction/abduction | 35/40 | 7/6 | 20/30 |
| Hip medial/lateral rotation | 30/60 | 10/13 | 20/20 |
| Knee extension/flexion | 10/140 | 0/64 | 10/110 |
| Ankle dorsi/plantar flexion | 20/50 | 11/19 | 20/20 |
| Ankle inversion/eversion | 35/20 | 5/7 | 10/10 |

| Frame i | Left αi−1 (rad) | Left ai−1 (mm) | Left di (mm) | Left θi (rad) | Right αi−1 (rad) | Right ai−1 (mm) | Right di (mm) | Right θi (rad) |
|---|---|---|---|---|---|---|---|---|
| 1 | 0 | Lhip,1 | Lhip,6 | θ1,l + (π/2) | 0 | −Lhip,1 | Lhip,6 | θ1,r + (π/2) |
| 2 | π/2 | Lhip,3 | Lhip,2 | π/2 | π/2 | Lhip,3 | −Lhip,2 | π/2 |
| 3 | π/2 | 0 | −Lhip,4 | θ3,l + (π/2) | π/2 | 0 | −Lhip,4 | θ3,r + (π/2) |
| 4 | π/2 | 0 | 0 | −π/2 | π/2 | 0 | 0 | −π/2 |
| 5 | −π/2 | Lhip,5 | 0 | θ5,l | −π/2 | Lhip,5 | 0 | θ5,r |
| 6 | 0 | Lthigh | 0 | θ6,l | 0 | Lthigh | 0 | θ6,r |
| 7 | 0 | Lshank | −Lankle,2 | θ7,l | 0 | Lshank | Lankle,2 | θ7,r |
| 8 | π/2 | 0 | −Lankle,1 | θ8,l | π/2 | 0 | −Lankle,1 | θ8,r |
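
The columns αi−1, ai−1, di, and θi follow the modified (Craig) Denavit–Hartenberg convention, in which each link transform is RotX(αi−1)·TransX(ai−1)·RotZ(θi)·TransZ(di). The sketch below chains these transforms for the rows above. The link lengths (Lhip,1 to Lankle,2) are not given in this section, so the dictionary keys and any numeric values passed in are hypothetical placeholders; the left/right sign flips mirror the table.

```python
import numpy as np


def mdh_transform(alpha, a, d, theta):
    """Modified (Craig) DH transform from frame i-1 to frame i."""
    ca, sa = np.cos(alpha), np.sin(alpha)
    ct, st = np.cos(theta), np.sin(theta)
    return np.array([
        [ct, -st, 0.0, a],
        [st * ca, ct * ca, -sa, -sa * d],
        [st * sa, ct * sa, ca, ca * d],
        [0.0, 0.0, 0.0, 1.0],
    ])


def forward_kinematics(dh_rows):
    """Chain rows of (alpha_{i-1}, a_{i-1}, d_i, theta_i) into one 4x4 transform."""
    T = np.eye(4)
    for alpha, a, d, theta in dh_rows:
        T = T @ mdh_transform(alpha, a, d, theta)
    return T


def leg_dh_rows(q, L, side="left"):
    """DH rows of the table for one leg.

    q -- dict mapping joint index (1, 3, 5, 6, 7, 8) to its angle [rad]
    L -- dict of hypothetical link lengths [mm] with keys hip1..hip6,
         thigh, shank, ankle1, ankle2
    """
    s = 1.0 if side == "left" else -1.0  # the right leg mirrors three entries
    h = np.pi / 2
    return [
        (0.0, s * L["hip1"], L["hip6"], q[1] + h),
        (h, L["hip3"], s * L["hip2"], h),
        (h, 0.0, -L["hip4"], q[3] + h),
        (h, 0.0, 0.0, -h),
        (-h, L["hip5"], 0.0, q[5]),
        (0.0, L["thigh"], 0.0, q[6]),
        (0.0, L["shank"], -s * L["ankle2"], q[7]),
        (h, 0.0, -L["ankle1"], q[8]),
    ]
```

For example, `forward_kinematics(leg_dh_rows(q, L, "left"))[:3, 3]` would give the position of frame 8 relative to the base frame once real link lengths are substituted.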

Table 3.

Demographic characteristics of the subjects.

| Subject | Gender | Age (y) | Height (m) | Weight (kg) | BMI (kg/m²) | Shoe Size (mm) |
|---|---|---|---|---|---|---|
| S1 | Male | 33 | 1.70 | 68 | 23.5 | 260 |
| S2 | Male | 35 | 1.68 | 74 | 26.2 | 260 |
| S3 | Male | 25 | 1.76 | 67 | 21.6 | 265 |
| S4 | Male | 29 | 1.80 | 74 | 22.8 | 270 |
| S5 | Male | 39 | 1.76 | 88 | 28.4 | 275 |
| S6 | Female | 36 | 1.61 | 60 | 23.1 | 240 |
| S7 | Female | 43 | 1.65 | 70 | 25.7 | 250 |

Table 4.

Dimensions of the terrains for evaluating the performance of the developed method.

| Case | Dcase (mm) | Hcase (mm) | θcase (°) |
|---|---|---|---|
| Level | 10,000 | 0 | 0 |
| Stair | 1900 | 990 | 0 |
| Ramp 1 | 12,770 | 1020 | 5 |
| Ramp 2 | 2460 | 230 | 6.37 |
| Ramp 3 | 3600 | 620 | 14 |

Table 5.

Position error per step and terrain feature estimation error for each test.

| Case | Subject | Position DRMSE (mm) | Position HRMSE (mm) | Position YRMSE (mm) | Terrain θRMSE (°) | Terrain hRMSE (mm) |
|---|---|---|---|---|---|---|
| LW | S1 | 6.15 | 4.18 | 5.93 | 1.30 | 8.90 |
| | S2 | 4.83 | 4.02 | 5.35 | 0.71 | 6.36 |
| | S3 | 2.45 | 2.78 | 3.41 | 0.66 | 11.43 |
| | S4 | 2.11 | 6.67 | 6.77 | 1.10 | 8.01 |
| | S5 | 1.94 | 1.93 | 4.51 | 0.84 | 8.33 |
| | S6 | 10.89 | 5.57 | 3.82 | 0.76 | 8.81 |
| | S7 | 4.00 | 6.14 | 5.46 | 0.69 | 7.38 |
| | Stotal | 5.38 | 4.70 | 5.11 | 0.86 | 8.60 |
| SA | S1 | 4.94 | 11.23 | 12.22 | 1.20 | 13.06 |
| | S2 | 8.48 | 1.90 | 12.75 | 1.75 | 9.62 |
| | S3 | 6.04 | 8.98 | 18.24 | 1.23 | 12.38 |
| | S4 | 17.70 | 8.10 | 16.83 | 1.51 | 10.65 |
| | S5 | 7.98 | 8.81 | 18.59 | 1.79 | 12.54 |
| | S6 | 19.04 | 4.77 | 11.45 | 1.52 | 7.93 |
| | S7 | 10.89 | 3.44 | 10.41 | 1.32 | 10.08 |
| | Stotal | 12.05 | 7.42 | 14.63 | 1.48 | 11.00 |
| SD | S1 | 7.46 | 24.12 | 17.92 | 1.66 | 26.28 |
| | S2 | 5.18 | 14.02 | 15.75 | 1.95 | 21.72 |
| | S3 | 8.79 | 20.59 | 10.62 | 1.41 | 27.09 |
| | S4 | 12.53 | 26.02 | 9.26 | 1.32 | 33.04 |
| | S5 | 4.62 | 19.90 | 8.70 | 2.64 | 26.51 |
| | S6 | 7.50 | 24.01 | 13.36 | 1.59 | 32.49 |
| | S7 | 12.14 | 19.48 | 15.98 | 1.26 | 27.57 |
| | Stotal | 8.89 | 21.55 | 13.51 | 1.74 | 28.12 |
| RA1 | S1 | 11.78 | 8.81 | 14.80 | 1.09 | - |
| | S2 | 15.84 | 13.89 | 7.82 | 0.87 | - |
| | S3 | 16.13 | 6.06 | 11.25 | 0.83 | - |
| | S4 | 12.47 | 2.73 | 9.19 | 1.03 | - |
| | S5 | 8.40 | 9.78 | 6.66 | 1.04 | - |
| | S6 | 11.01 | 14.26 | 9.82 | 0.91 | - |
| | S7 | 4.39 | 2.88 | 9.33 | 0.91 | - |
| | Stotal | 11.96 | 9.49 | 9.94 | 0.94 | - |
| RD1 | S1 | 7.97 | 9.04 | 21.74 | 1.13 | - |
| | S2 | 13.98 | 7.73 | 9.15 | 1.14 | - |
| | S3 | 10.25 | 2.35 | 8.69 | 0.63 | - |
| | S4 | 9.59 | 2.74 | 10.45 | 1.30 | - |
| | S5 | 9.40 | 3.55 | 5.91 | 1.13 | - |
| | S6 | 11.47 | 5.40 | 8.23 | 1.06 | - |
| | S7 | 8.66 | 5.02 | 7.91 | 1.31 | - |
| | Stotal | 10.37 | 5.50 | 10.99 | 1.11 | - |
| RA2 | S1 | 4.47 | 14.84 | 5.21 | 1.05 | - |
| | S2 | 2.66 | 10.46 | 5.18 | 1.33 | - |
| | S3 | 2.45 | 5.90 | 3.07 | 1.15 | - |
| | S4 | 13.81 | 6.87 | 4.62 | 1.55 | - |
| | S5 | 13.06 | 3.84 | 6.40 | 1.32 | - |
| | S6 | 10.21 | 17.86 | 4.35 | 1.14 | - |
| | S7 | 11.78 | 3.31 | 7.83 | 1.19 | - |
| | Stotal | 9.73 | 10.49 | 5.41 | 1.25 | - |
| RD2 | S1 | 5.44 | 15.05 | 3.82 | 1.60 | - |
| | S2 | 7.28 | 10.71 | 2.99 | 1.54 | - |
| | S3 | 11.52 | 6.14 | 4.38 | 1.40 | - |
| | S4 | 11.09 | 1.84 | 9.16 | 1.60 | - |
| | S5 | 14.14 | 5.70 | 6.31 | 1.49 | - |
| | S6 | 7.98 | 10.36 | 8.74 | 1.37 | - |
| | S7 | 14.61 | 4.47 | 3.80 | 1.42 | - |
| | Stotal | 11.03 | 8.37 | 6.19 | 1.50 | - |
| RA3 | S1 | 8.29 | 6.57 | 7.48 | 1.70 | - |
| | S2 | 17.91 | 12.46 | 9.76 | 0.85 | - |
| | S3 | 14.18 | 11.28 | 6.13 | 1.23 | - |
| | S4 | 15.32 | 4.33 | 14.95 | 0.84 | - |
| | S5 | 14.35 | 5.85 | 6.25 | 0.99 | - |
| | S6 | 6.53 | 13.76 | 5.94 | 0.62 | - |
| | S7 | 8.38 | 9.97 | 8.37 | 0.62 | - |
| | Stotal | 12.74 | 9.77 | 8.99 | 0.99 | - |
| RD3 | S1 | 10.38 | 4.94 | 8.37 | 0.78 | - |
| | S2 | 8.86 | 3.82 | 7.01 | 1.39 | - |
| | S3 | 9.07 | 5.06 | 6.02 | 0.93 | - |
| | S4 | 9.93 | 6.81 | 11.92 | 0.59 | - |
| | S5 | 11.42 | 5.53 | 5.73 | 0.60 | - |
| | S6 | 8.44 | 7.40 | 6.89 | 0.95 | - |
| | S7 | 13.59 | 14.25 | 9.46 | 0.75 | - |
| | Stotal | 10.43 | 7.77 | 8.17 | 0.89 | - |

Table 6.

Confusion matrix and classification performance of the results using SVM.

| Actual | Predicted LW | Predicted SA | Predicted SD | Predicted RA | Predicted RD | Precision | Recall | F1-score |
|---|---|---|---|---|---|---|---|---|
| LW | 1770 | 0 | 0 | 1 | 24 | 0.990 | 0.986 | 0.988 |
| SA | 0 | 426 | 0 | 0 | 0 | 1.000 | 1.000 | 1.000 |
| SD | 0 | 0 | 419 | 0 | 1 | 1.000 | 0.998 | 0.999 |
| RA | 1 | 0 | 0 | 1879 | 0 | 0.999 | 0.999 | 0.999 |
| RD | 17 | 0 | 0 | 0 | 1972 | 0.987 | 0.991 | 0.989 |
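
The performance columns follow directly from the confusion matrix: precision divides each diagonal entry by its column sum (all samples predicted as that class), recall divides it by its row sum (all samples of that actual class), and the F1-score is their harmonic mean. The sketch below recomputes them from the counts above; rounding its outputs to three decimals reproduces the values in the table. The function name is illustrative.

```python
import numpy as np

CLASSES = ["LW", "SA", "SD", "RA", "RD"]

# Rows: actual class; columns: predicted class (counts from Table 6).
CM = np.array([
    [1770, 0, 0, 1, 24],
    [0, 426, 0, 0, 0],
    [0, 0, 419, 0, 1],
    [1, 0, 0, 1879, 0],
    [17, 0, 0, 0, 1972],
])


def per_class_metrics(cm):
    """Return per-class precision, recall, and F1 from a confusion matrix."""
    cm = np.asarray(cm, dtype=float)
    tp = np.diag(cm)                  # correctly classified samples per class
    precision = tp / cm.sum(axis=0)   # column sums: all predictions of the class
    recall = tp / cm.sum(axis=1)      # row sums: all actual samples of the class
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1
```

For instance, for LW the precision is 1770/1788 and the recall is 1770/1795, which round to 0.990 and 0.986.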

Table 7.

Classification performance (F1-score) for each subject.

| Case | S1 | S2 | S3 | S4 | S5 | S6 | S7 |
|---|---|---|---|---|---|---|---|
| LW | 0.953 | 0.984 | 0.985 | 0.995 | 0.996 | 1.000 | 0.998 |
| SA | 1.000 | 0.981 | 1.000 | 1.000 | 1.000 | 1.000 | 1.000 |
| SD | 1.000 | 1.000 | 1.000 | 1.000 | 1.000 | 1.000 | 1.000 |
| RA | 1.000 | 0.995 | 1.000 | 0.998 | 1.000 | 1.000 | 1.000 |
| RD | 0.962 | 0.983 | 0.985 | 0.998 | 0.997 | 1.000 | 0.998 |