A Multi-View Stereo Measurement System Based on a Laser Scanner for Fine Workpieces

Abstract

1. Introduction

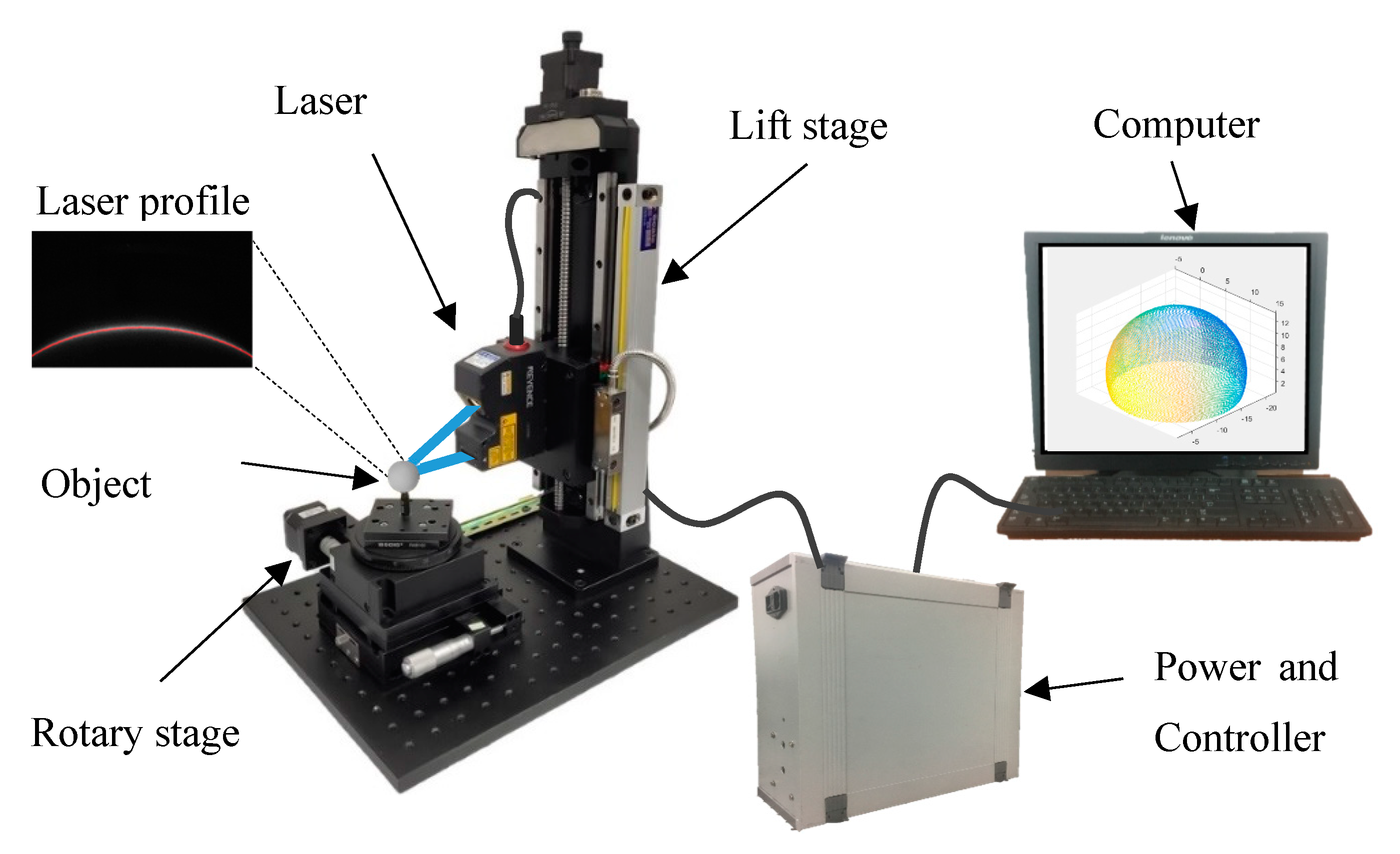

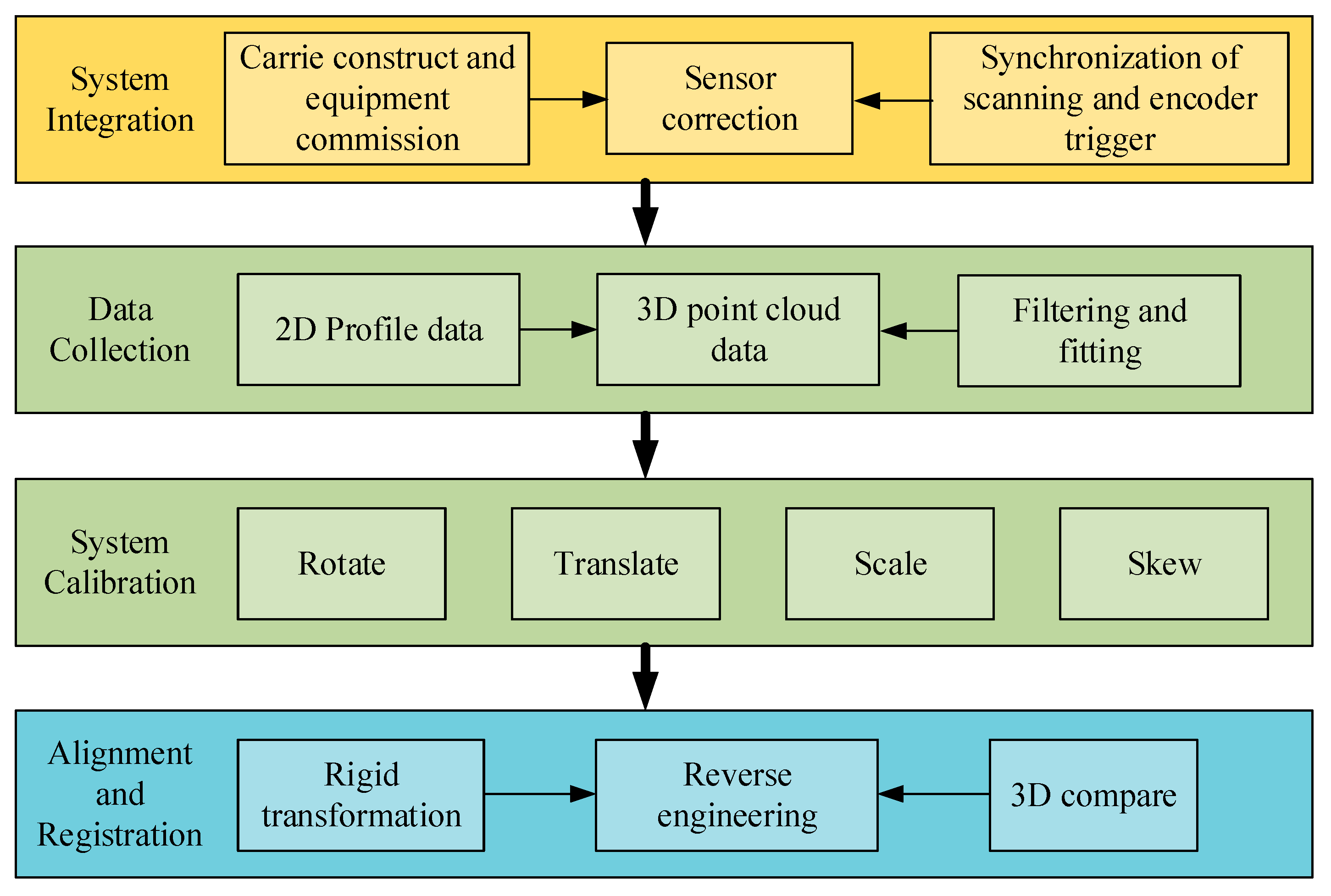

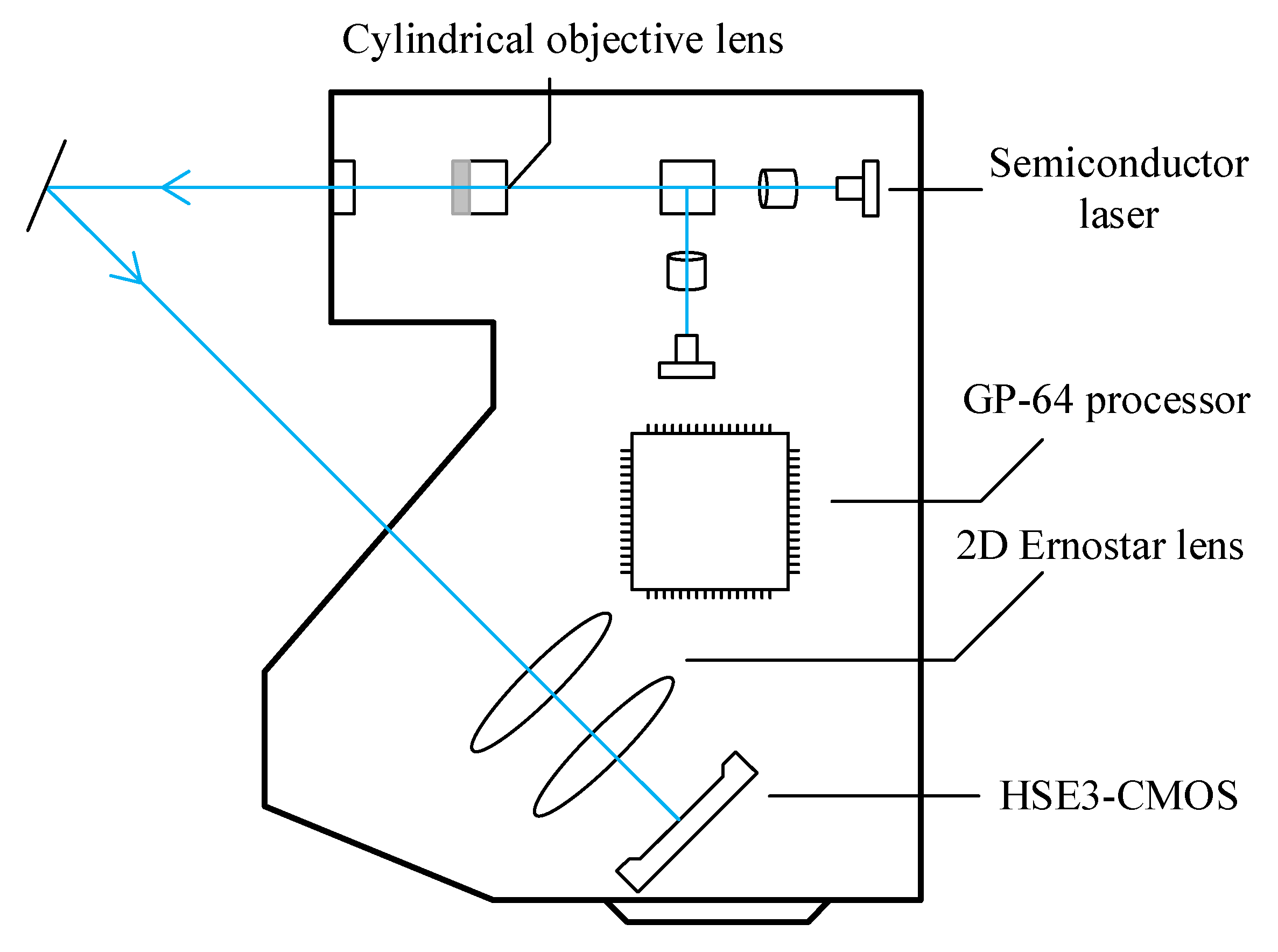

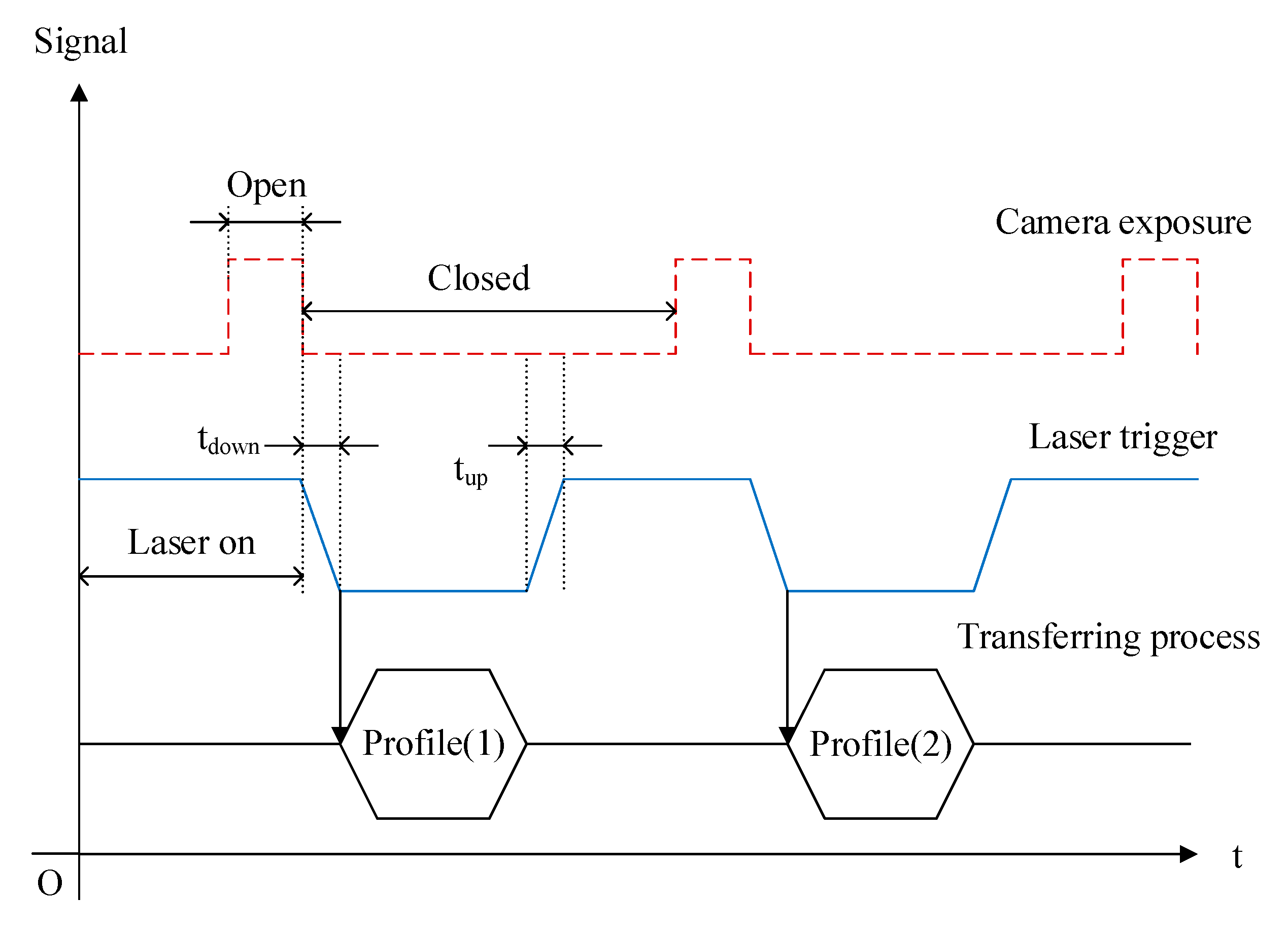

2. Development of the MVS Measurement System

3. Coordinate Calculation and Calibration for the MVS Measuring System

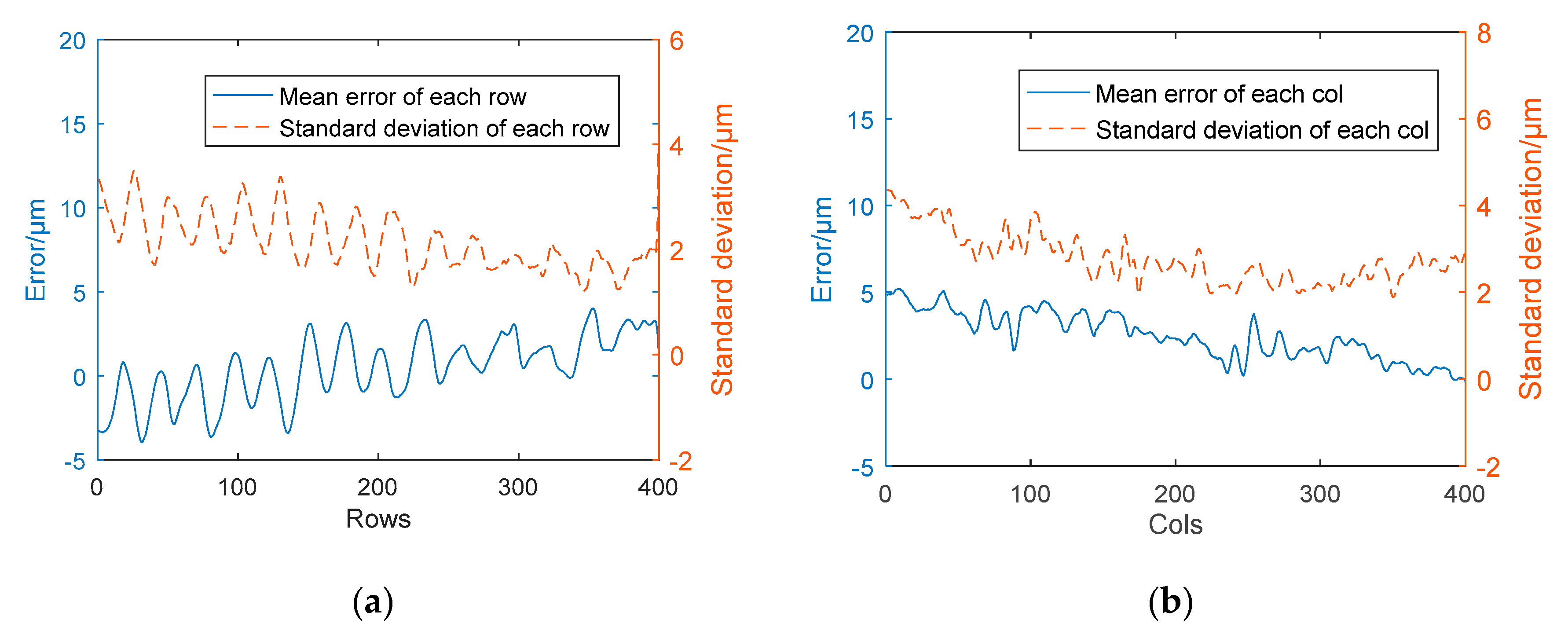

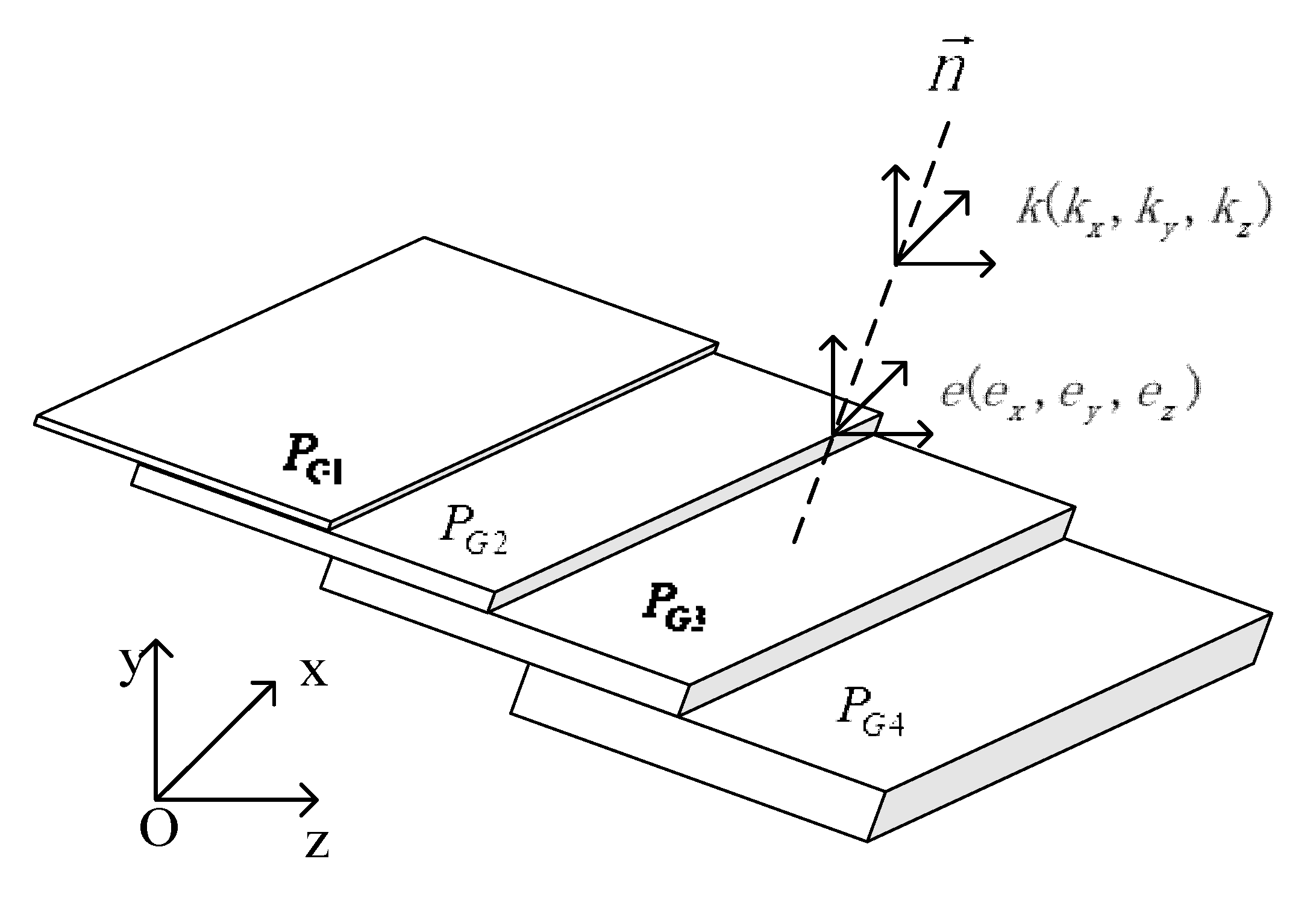

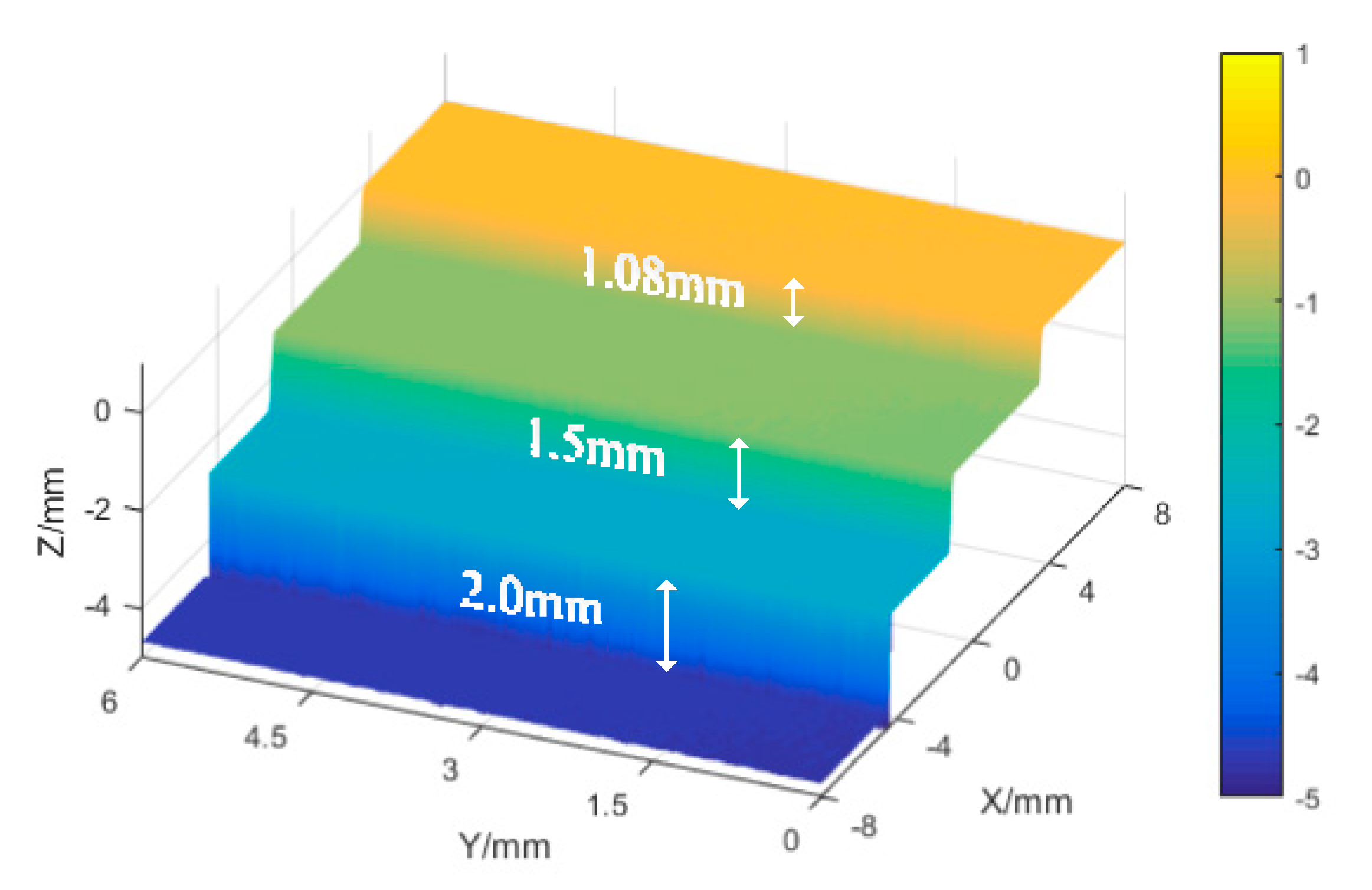

3.1. Gauge Block Ladder Calibration

- Point cloud segmentation: A region-growing method segments the gauge block data into individual levels and filters out isolated outlier points; the segments are then saved in order of height.

- Normal vector calculation: The average normal vector of the whole data set is calculated from the point cloud covariance and taken as the reference direction for the height differences of the gauge block ladder.

- Centroid calculation: The centroid of each point cloud level is calculated. The difference between two adjacent centroids, measured along the normal direction, serves as the measured value of the current level, and the error e is the difference between the standard value and the measured value.
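The normal-vector and centroid steps above can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the authors' implementation: it assumes the levels have already been segmented into separate arrays, it interprets the "average normal vector" as the mean of the per-level covariance normals, and the function names `level_normal`, `average_normal`, and `step_errors` are hypothetical.

```python
import numpy as np

def level_normal(points):
    """Unit normal of one level: eigenvector of the covariance matrix
    that belongs to the smallest eigenvalue."""
    centered = points - points.mean(axis=0)
    _, eigvecs = np.linalg.eigh(centered.T @ centered)  # eigenvalues ascending
    return eigvecs[:, 0]

def average_normal(levels):
    """Mean of the per-level normals (assumed reading of the averaging step)."""
    normals = np.array([level_normal(p) for p in levels])
    signs = np.sign(normals @ normals[0])  # flip normals that point the other way
    signs[signs == 0] = 1.0
    mean = (normals * signs[:, None]).sum(axis=0)
    return mean / np.linalg.norm(mean)

def step_errors(levels, nominal_steps):
    """Measured step heights along the average normal and their errors.

    levels: list of (n_i, 3) arrays, ordered by height.
    nominal_steps: standard height differences between adjacent levels.
    """
    normal = average_normal(levels)
    centroids = np.array([p.mean(axis=0) for p in levels])
    measured = np.abs(np.diff(centroids @ normal))  # adjacent centroid distances
    errors = np.asarray(nominal_steps) - measured   # e = standard - measured
    return measured, errors
```

Calling `step_errors(levels, [1.08, 1.5, 2.0])` with three nominal steps requires four segmented levels and returns one measured value and one error per step.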

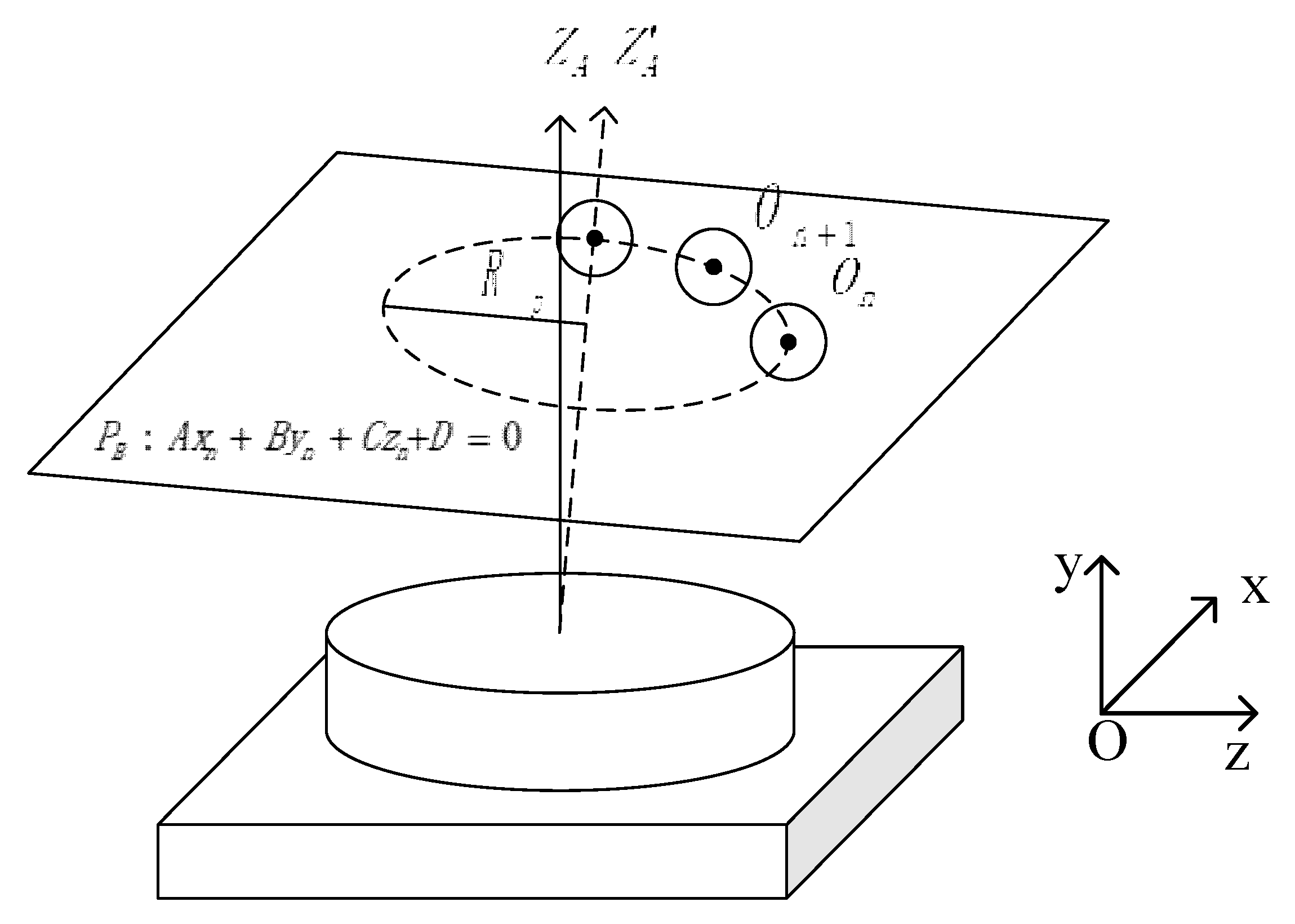

3.2. Turntable Calibration

- At different positions of the rotating table, the sensor acquires the 3D coordinate data of a partial sphere of a given radius. These data are substituted into the equation of a sphere, which yields a system of nonlinear equations. Solving this system gives the sphere-center coordinates at the current position. Repeating the measurement at N positions gives N sets of sphere-center coordinates, which are then fitted together.

- The N sphere centers are substituted into the equation of a plane, which yields a system of N linear equations. Solving this system by the least-squares method gives the fitted plane, which can be regarded as the plane containing the orbit of the sphere center. The normal vector u of this plane follows from the fitted plane coefficients.

- The N sphere centers are projected onto the calibrated rotation trajectory plane to obtain the coordinates of the corresponding projection points. A search algorithm then finds the point in the rotation trajectory plane that has the smallest distance from these projection points; this point is the intersection of the revolution axis and the sphere-center trajectory plane.
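The three steps above can be sketched with NumPy as follows. This is an illustrative sketch under stated assumptions rather than the method used in the paper: the sphere fit uses an algebraic linear least-squares formulation instead of a nonlinear solve, the plane fit uses a singular value decomposition, and the search for the axis point is replaced by a least-squares circle fit in the trajectory plane. The function names `fit_sphere`, `fit_plane`, and `axis_point` are hypothetical.

```python
import numpy as np

def fit_sphere(points):
    """Algebraic least-squares sphere fit; returns (center, radius).

    Uses |p|^2 = 2 p.c + (R^2 - |c|^2), which is linear in c and the constant.
    """
    A = np.hstack([2.0 * points, np.ones((len(points), 1))])
    b = (points ** 2).sum(axis=1)
    sol, *_ = np.linalg.lstsq(A, b, rcond=None)
    center = sol[:3]
    radius = np.sqrt(sol[3] + center @ center)
    return center, radius

def fit_plane(centers):
    """Least-squares plane through the sphere centers.

    Returns a point on the plane (the centroid) and the unit normal u.
    """
    centroid = centers.mean(axis=0)
    _, _, vt = np.linalg.svd(centers - centroid)
    return centroid, vt[-1]  # direction of smallest singular value

def axis_point(centers, origin, normal):
    """Project the centers onto the plane and fit a circle to the projections.

    Returns the circle center in 3D, taken here as the intersection of the
    revolution axis with the trajectory plane.
    """
    helper = np.eye(3)[np.argmin(np.abs(normal))]  # axis least aligned with normal
    e1 = np.cross(normal, helper)
    e1 /= np.linalg.norm(e1)
    e2 = np.cross(normal, e1)
    rel = centers - origin
    uv = np.column_stack([rel @ e1, rel @ e2])     # in-plane coordinates
    A = np.hstack([2.0 * uv, np.ones((len(uv), 1))])
    b = (uv ** 2).sum(axis=1)
    sol, *_ = np.linalg.lstsq(A, b, rcond=None)
    return origin + sol[0] * e1 + sol[1] * e2
```

A typical call sequence is `fit_sphere` on the scan at each turntable position, then `fit_plane` on the stacked centers, then `axis_point` with the returned plane origin and normal. The circle fit needs at least three distinct turntable positions.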

4. Experiments and Discussion

4.1. System Calibration Experiment

4.2. 3D Reconstruction Experiment

5. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Feature | Value |
|---|---|
| LED source (blue) | 405 nm |
| Distance measurement | 60 mm ± 8 mm |
| Spot size | 45 μm |
| Laser output | 10 mW |
| Sampling cycle | 16–32 μs |
| Repeatability (Z-axis) | 0.4 μm |
| Linearity | ±0.1% |
| Profile data interval (X-axis) | 20 μm |

| Ladder Level | Mean Error | RMS |
|---|---|---|
| 1.08 mm | 0.073 μm | 4.963 μm |
| 1.5 mm | 4.456 μm | 3.424 μm |
| 2 mm | 3.665 μm | 2.774 μm |
| Gauge tolerance | 0.30 μm | |
| Accuracy grade | 2 | |

| Deg (°) | Sphere center at this angle (mm) | Center rotated back to 0° (mm) | Distance to 0° center (mm) | RMS (mm) |
|---|---|---|---|---|
| 0 | (4.983, 3.425, −11.961) | (4.983, 3.425, −11.961) | 0 | (0.014, 0.033, 0.021) |
| 60 | (9.061, 3.433, −13.718) | (4.994, 3.420, −11.969) | 0.014 | (0.009, 0.077, 0.027) |
| 120 | (12.644, 3.393, −11.062) | (4.985, 3.410, −12.004) | 0.046 | (0.013, 0.048, 0.011) |
| 180 | (12.125, 3.377, −6.674) | (4.960, 3.437, −11.970) | 0.028 | (0.011, 0.085, 0.024) |
| 240 | (7.999, 3.347, −4.947) | (5.025, 3.421, −11.980) | 0.046 | (0.008, 0.026, 0.024) |
| 300 | (4.442, 3.347, −7.570) | (4.974, 3.390, −11.997) | 0.051 | (0.006, 0.016, 0.026) |

| Fitting Result | | Calibration Evaluation | |
|---|---|---|---|
| Parameter | Value | Parameter | Value |
| Fitted radius (mm) | 9.986 | Axis center (mm) | (8.542, 3.387, −9.322) |
| Total number of points | 80,597 | Shaft angle of axis (°) | 0.713 |
| Average error (mm) | ±0.004 | Diameter (mm) | 20 |
| Maximum error (mm) | 0.229 | Diameter accuracy (μm) | 0.25 |
| RMS σ (mm) | 0.012 | Roundness (μm) | 0.3 |

| Measurement Number | Geomagic x | Geomagic y | Geomagic z | This paper x | This paper y | This paper z |
|---|---|---|---|---|---|---|
| 1 | 5.7094 | 8.0886 | −14.2365 | 5.7088 | 8.0822 | −14.2381 |
| 2 | 5.7056 | 8.0377 | −14.2013 | 5.7058 | 8.0385 | −14.2014 |
| 3 | 5.6926 | 8.0184 | −14.1902 | 5.6934 | 8.0207 | −14.1904 |
| 4 | 5.7042 | 8.0722 | −14.2083 | 5.6958 | 8.0718 | −14.2054 |
| 5 | 5.6992 | 8.0408 | −14.1931 | 5.7025 | 8.0453 | −14.1967 |
| 6 | 5.7065 | 8.0188 | −14.2085 | 5.7083 | 8.0219 | −14.2099 |
| 7 | 5.6922 | 8.0464 | −14.2019 | 5.6924 | 8.0467 | −14.2022 |
| 8 | 5.7018 | 8.0692 | −14.2415 | 5.7051 | 8.0711 | −14.2430 |
| 9 | 5.6876 | 8.0365 | −14.2100 | 5.6871 | 8.0361 | −14.2084 |
| 10 | 5.7039 | 8.0296 | −14.2317 | 5.7045 | 8.0274 | −14.2334 |
| 11 | 5.6822 | 8.0218 | −14.1976 | 5.6818 | 8.0245 | −14.1983 |
| 12 | 5.6928 | 8.0367 | −14.2145 | 5.6925 | 8.0306 | −14.2172 |

| Dimension | Value |
|---|---|
| Average number of points in the MVS point cloud | 49,078 |
| Time spent in each view (s) | 8.5 |
| Average error (mm) | 0.075 |
| RMS (mm) | 0.151 |
| RMS (%) | 0.604 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Song, L.; Sun, S.; Yang, Y.; Zhu, X.; Guo, Q.; Yang, H. A Multi-View Stereo Measurement System Based on a Laser Scanner for Fine Workpieces. Sensors 2019, 19, 381. https://doi.org/10.3390/s19020381
