Blind Estimation of the PN Sequence of A DSSS Signal Using A Modified Online Unsupervised Learning Machine

Abstract

1. Introduction

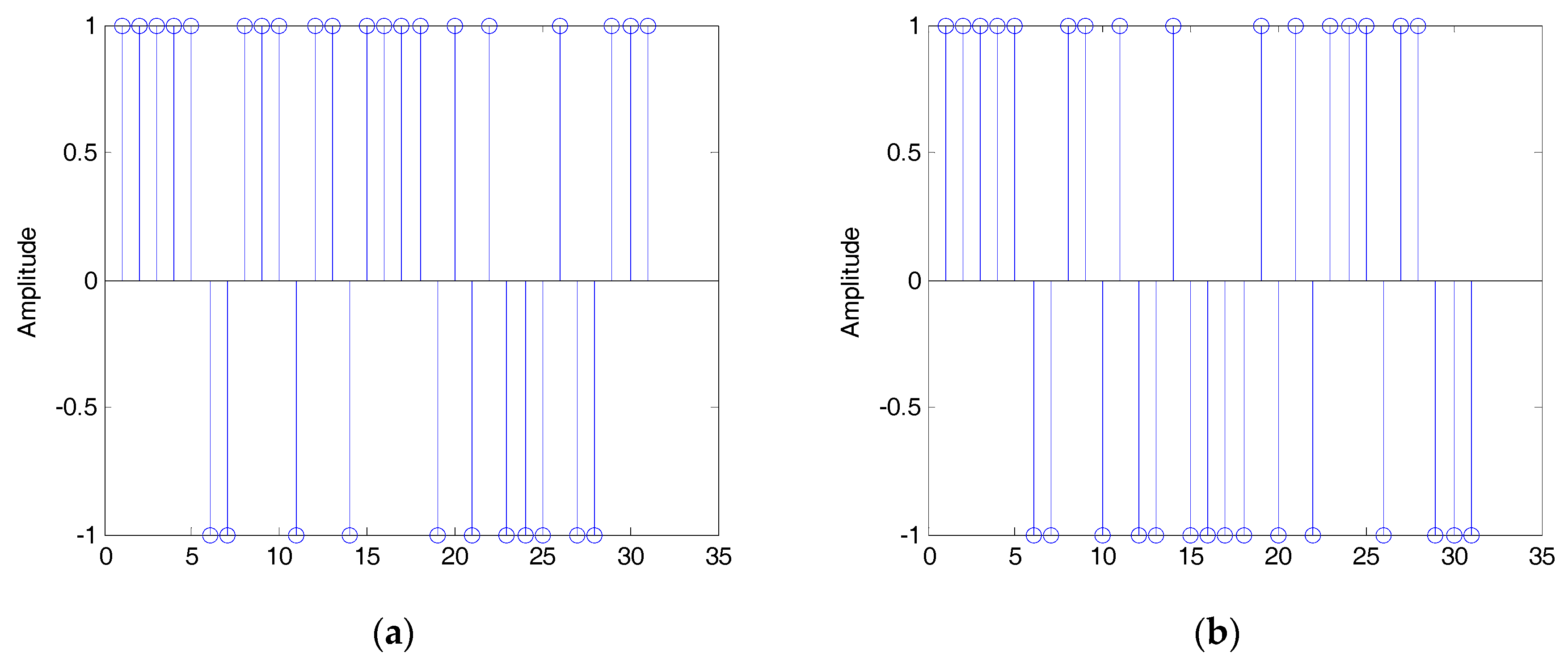

- (a)

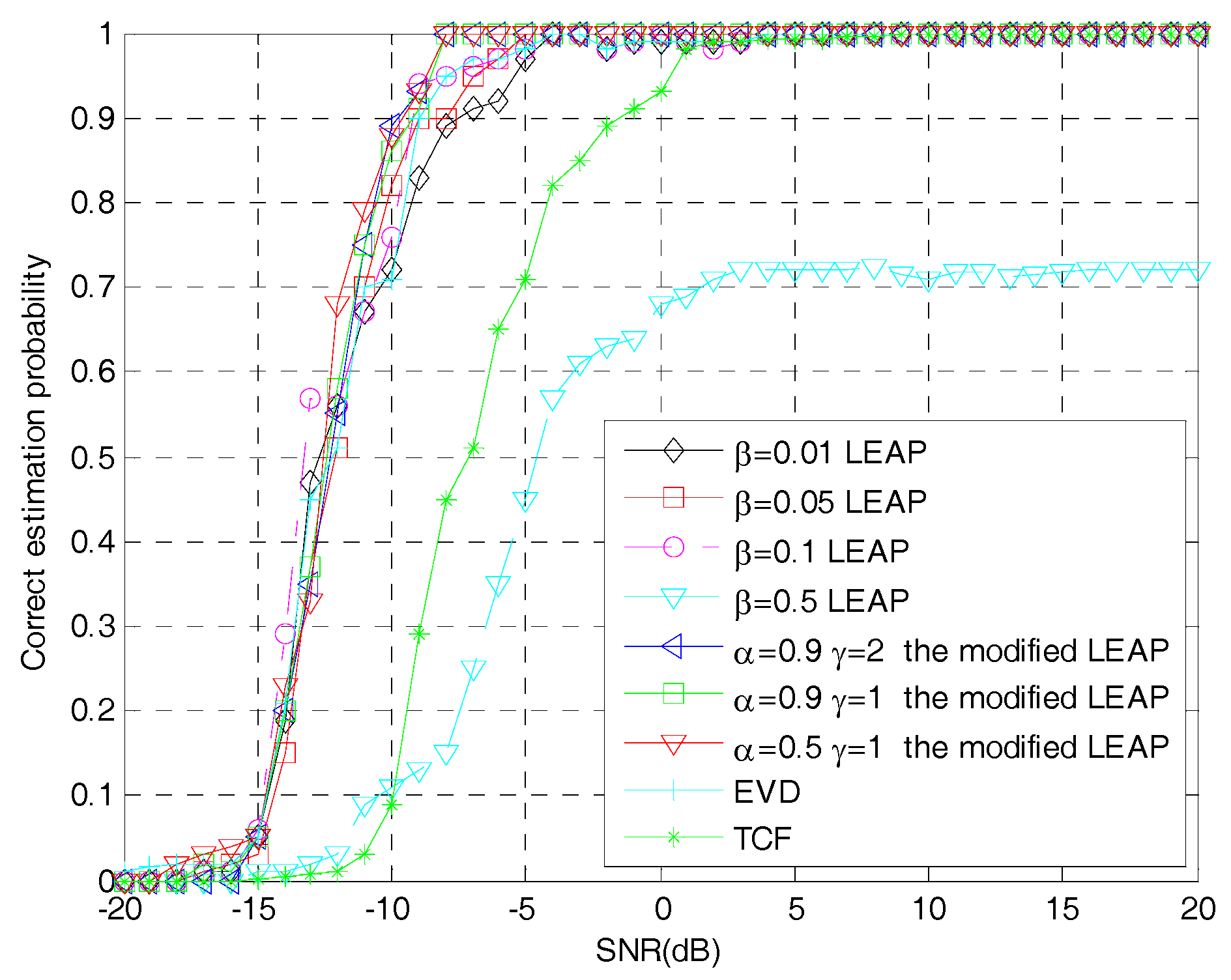

- The LEAP algorithm is applied into the field of the PN sequence estimation of DSSS signals and a modified LEAP algorithm is proposed. Compared to the original LEAP algorithm, the modified LEAP algorithm has a better convergence performance due to its use of variable learning steps rather than a fixed one;

- (b) Because the phase of the estimated eigenvector can be inverted, the resulting estimate of the PN sequence of the DSSS signal may be incorrect. To resolve this ambiguity, a novel approach that makes full use of the correlation characteristics of the PN sequence is proposed.
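The phase ambiguity mentioned in contribution (b) can be illustrated with a minimal sketch. It is not the modified LEAP algorithm: it assumes a batch sample covariance matrix and plain power iteration, a hypothetical 7-chip code, noise-corrupted windows that are already aligned to one PN period, and a known period. The helper names `principal_eigenvector` and `estimate_pn` are illustrative only. The sketch shows that the sign of the principal eigenvector recovers the code only up to a global inversion; the correlation-based disambiguation is not reproduced here.

```python
import random


def principal_eigenvector(matrix, iterations=200, seed=1):
    """Power iteration: unit-norm dominant eigenvector of a symmetric PSD matrix."""
    rng = random.Random(seed)
    n = len(matrix)
    v = [rng.gauss(0.0, 1.0) for _ in range(n)]
    for _ in range(iterations):
        w = [sum(matrix[i][j] * v[j] for j in range(n)) for i in range(n)]
        norm = sum(x * x for x in w) ** 0.5
        v = [x / norm for x in w]
    return v


def estimate_pn(windows):
    """Estimate a +/-1 code from period-aligned windows (assumed synchronized)."""
    n = len(windows[0])
    m = len(windows)
    # Sample covariance matrix of the observation windows.
    cov = [[sum(x[i] * x[j] for x in windows) / m for j in range(n)]
           for i in range(n)]
    v = principal_eigenvector(cov)
    # The chip signs of the eigenvector give the code, up to a global sign.
    return [1 if c >= 0 else -1 for c in v]


# Hypothetical test signal: random +/-1 symbols spread by a short +/-1 code.
rng = random.Random(0)
pn = [1, 1, 1, -1, -1, 1, -1]
windows = []
for _ in range(200):
    symbol = rng.choice([-1, 1])
    windows.append([symbol * c + rng.gauss(0.0, 0.5) for c in pn])

estimate = estimate_pn(windows)
inverted = [-c for c in pn]
# Either the code or its inversion is a valid outcome of the eigen-analysis.
print(estimate == pn or estimate == inverted)
```

Which of the two signs comes out depends only on the arbitrary starting vector of the iteration, so the eigen-analysis alone cannot decide between the code and its inversion.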

2. Basic Theories

2.1. DSSS Signal Model

2.2. The Principle of PCA for PN Sequence Estimation

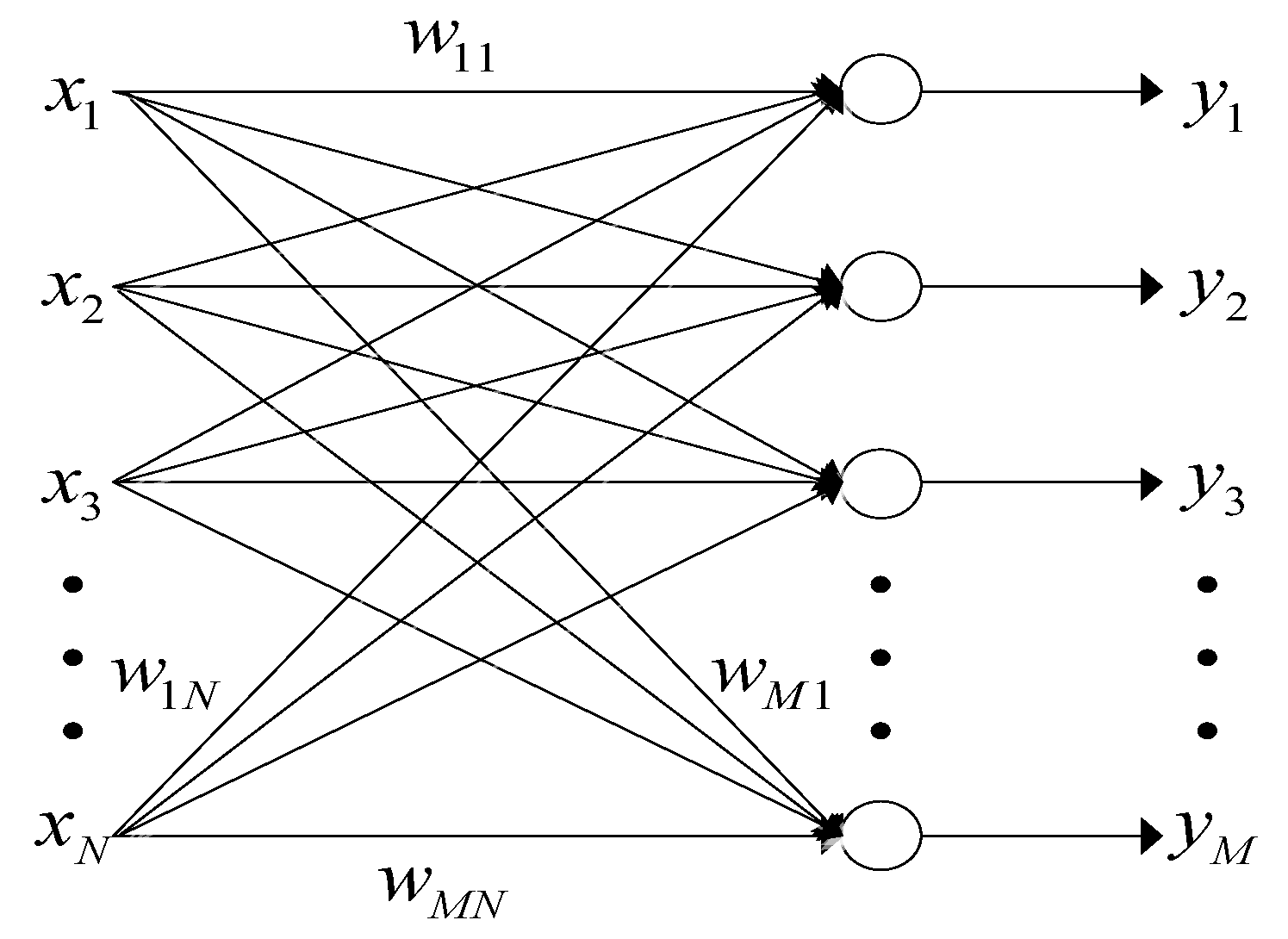

2.3. Mathematical Model of The Modified LEAP

2.4. Asymptotic Stability Analysis of The Modified LEAP

3. PN Sequence Estimation and The Elimination of Phase Ambiguity

4. The Main Steps for PN Sequence Estimation

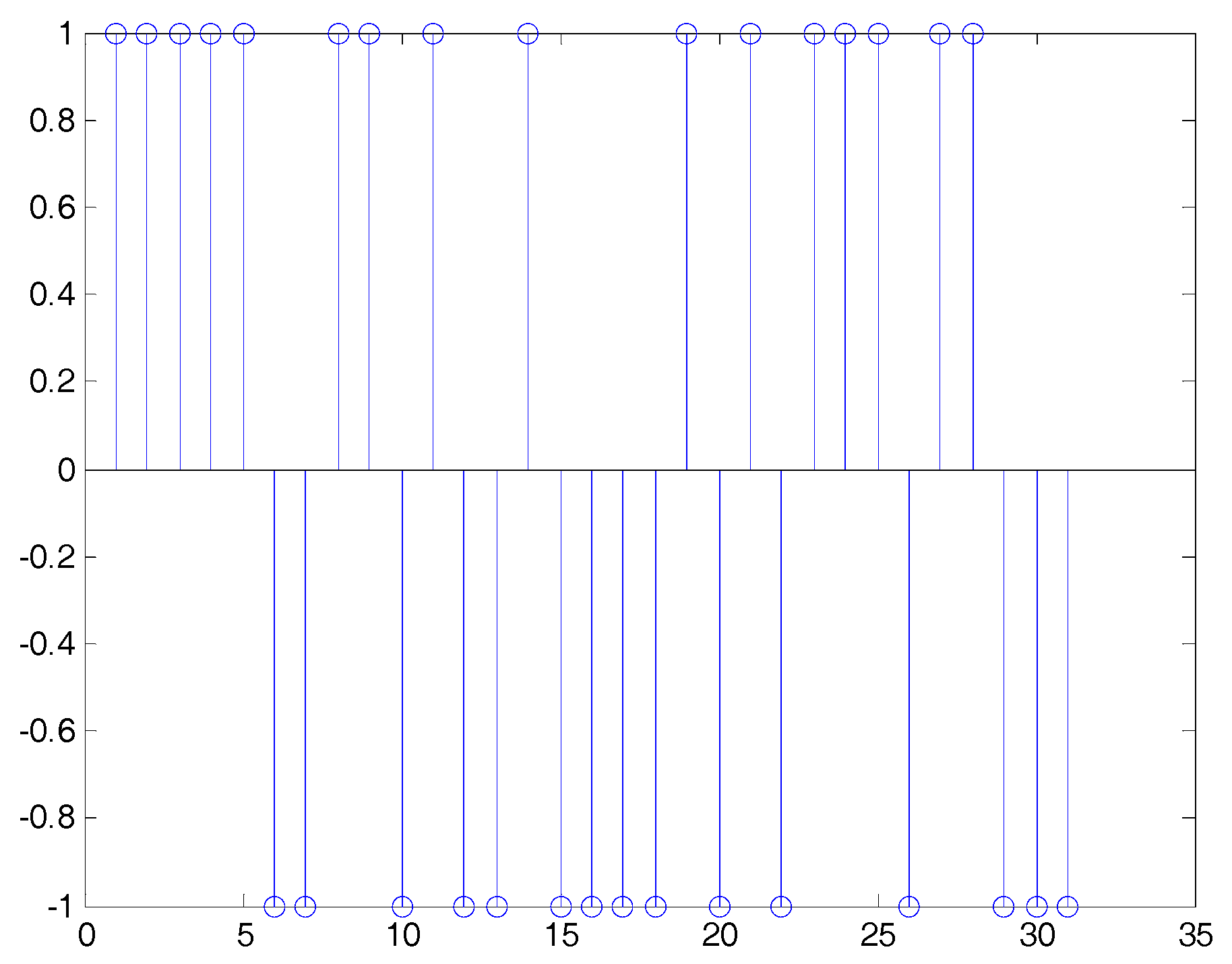

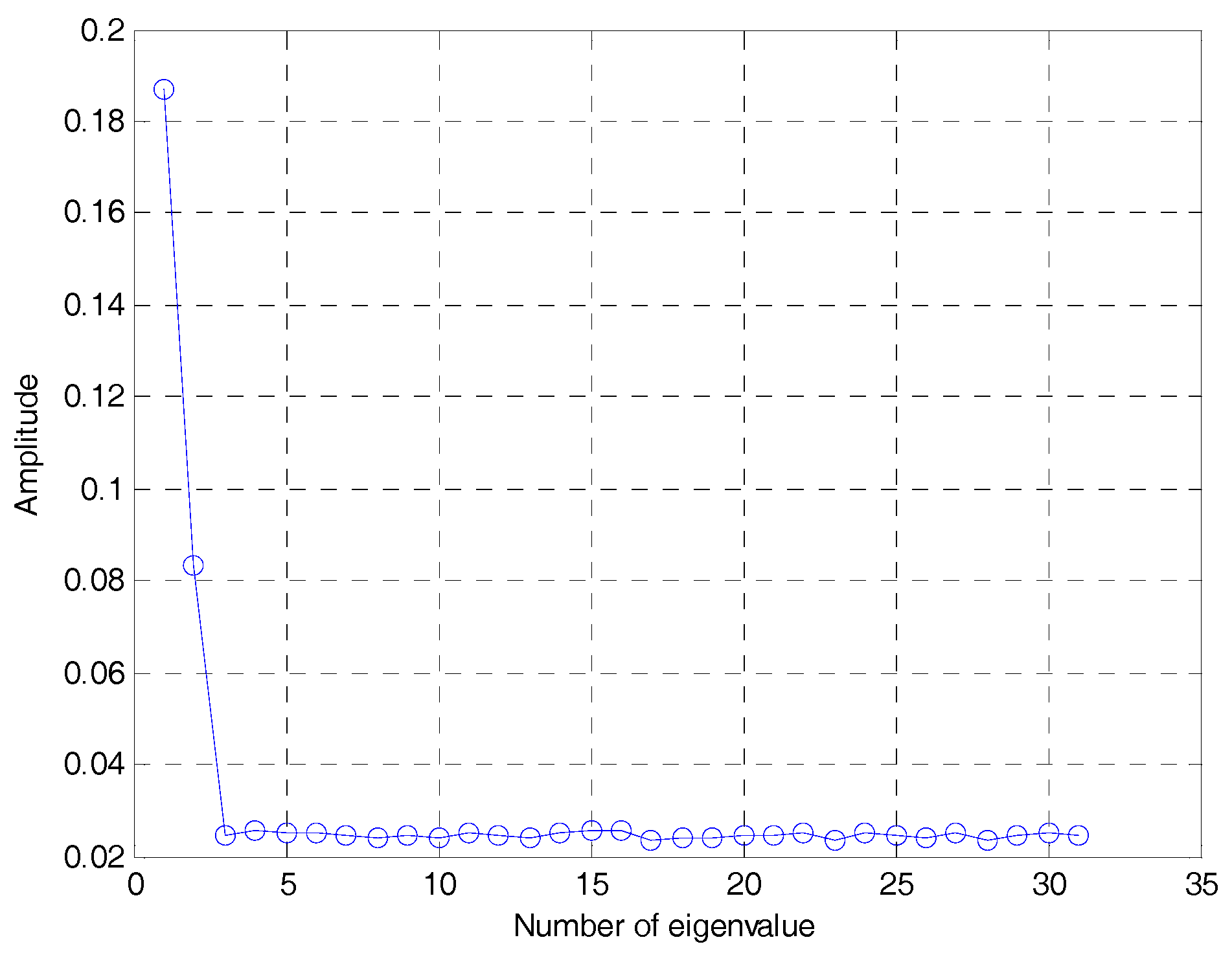

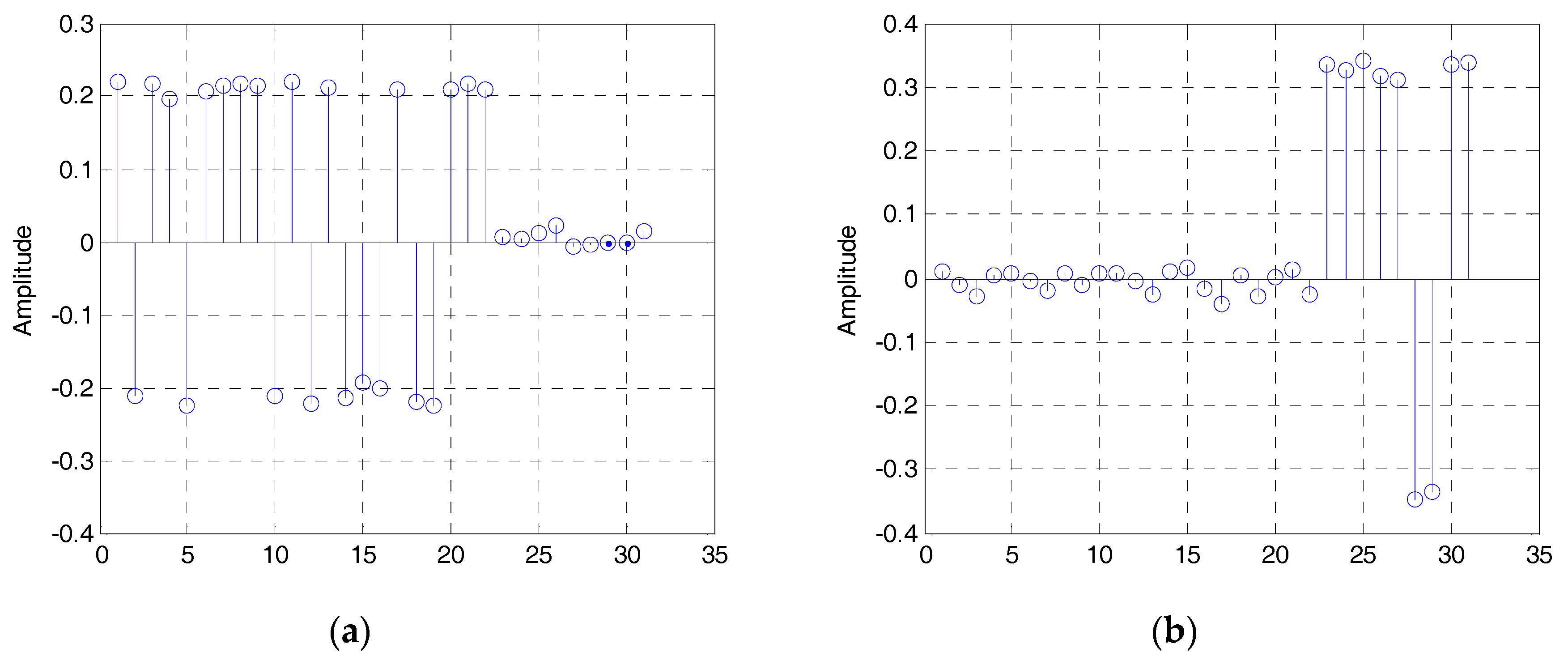

5. Simulations and Analysis

6. Conclusions

7. Patents

Author Contributions

Funding

Conflicts of Interest

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Wei, Y.; Fang, S.; Wang, X.; Huang, S. Blind Estimation of the PN Sequence of A DSSS Signal Using A Modified Online Unsupervised Learning Machine. Sensors 2019, 19, 354. https://doi.org/10.3390/s19020354
