Abstract

Noise estimation for image sensors is a key technique in many image pre-processing applications such as blind de-noising. Existing noise estimation methods for additive white Gaussian noise (AWGN) and Poisson-Gaussian noise (PGN) may underestimate or overestimate the noise level for heavily textured scene images. To cope with this problem, this paper proposes a novel homogenous block-based method to estimate both types of noise. First, the noisy image is transformed into a map of local gray statistic entropy (LGSE), and the weakly textured image blocks are selected as those with the largest LGSE values, taken in descending order. Then, the Haar wavelet-based local median absolute deviation (HLMAD) is presented to compute the local variance of these selected homogenous blocks. After that, the noise parameters are estimated accurately by applying maximum likelihood estimation (MLE) to the local means and variances of the selected blocks. Extensive experiments on synthesized noisy images are conducted, and the results show that the proposed method not only estimates the noise of various scene images at different noise levels more accurately than the compared state-of-the-art methods, but also improves the performance of a blind de-noising algorithm.

1. Introduction

Images captured by charge-coupled device (CCD) image sensors contain many types of noise, which originate from the CCD sensor arrays, the camera electronics, and the analog-to-digital conversion circuits. Noise is a primary concern in imaging systems because it directly degrades image quality. Therefore, image de-noising is a critical step for ensuring high-quality images. Most existing de-noising algorithms assume that the noise level parameters are given. However, this assumption does not hold for real noisy images, because the noise level parameters are generally unknown in practice. Blind de-noising, which requires no prior knowledge of the noise, is therefore an active research topic. Accurately estimating the noise parameters is a challenging issue in blind de-noising; hence, noise level estimation has attracted many studies, and a number of noise estimation algorithms have been developed [1,2,3].

Estimating the CCD noise level function (NLF) amounts to extracting the true noise from a noisy image by finding an approximation of the noise probability density function (PDF). Estimating the full noise PDF is not easy; however, in statistics, if the form of the noise distribution is known, numerical characteristics such as the mathematical expectation, variance, and covariance can describe the noise PDF reliably. The most prevalent noise model for CCD image sensors is the zero-mean additive white Gaussian noise (AWGN) model, for which estimating the noise PDF reduces to estimating a single parameter, the standard deviation. Representative algorithms in this field can be divided into four categories: CCD response-based methods, filter-based methods, statistics-based methods, and block-based methods. In CCD response-based methods, the image noise is assessed in terms of the noise sources introduced by CCD cameras. In [4], based on studies of the CCD Photon Transfer Curve (PTC) and camera noise characteristics, a complete equation describing the different noise sources of an image sensor was established. Irie et al. [5,6] introduced a CCD camera noise model that includes more comprehensive noise sources for CCD image sensors, and developed a robust technique to measure and distinguish those noise sources in CCD camera images. These methods try to separate the image noise components one by one by analyzing the types of CCD sensor noise sources, which presupposes extensive knowledge of CCD noise types and measurement procedures; these requirements are difficult to meet. To address this problem, [7] proposed using the non-linear camera response function (CRF), which transforms real scene light into image pixel values.
Furthermore, the algorithm in [8] uses a pre-measured real-world CRF to transform the CCD camera noise from light space into the image domain, and then estimates the NLF by exploiting a piecewise smooth image noise model. These CCD response-based methods can be extended to study the sources of image sensor noise and to identify the types of image noise. Nevertheless, they are computationally time-consuming and require familiarity with the camera responses.

In filter-based methods, image structures are suppressed with a high-pass filter, and the noise variance is then computed from the filtered image. Among the early filter-based methods, Immerkær [9] adopted a fast Laplacian-based filter to emphasize the noise and suppress image structures, and estimated the noise standard deviation by averaging the absolute filter response over the whole image. To further improve the accuracy of Immerkær's estimate, Yang et al. [10] added an adaptive Sobel edge detector to exclude edges before the Laplacian filtering. A step signal filter model is presented in [11]; this model is a nonlinear combination of polarized and directional derivatives and is used for both edge detection and noise estimation. Filter-based methods are fast and have low computational complexity. However, they tend to overestimate the noise level for richly textured images or at low noise levels.
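To make the filter-based idea concrete, here is a minimal NumPy sketch of the fast Laplacian-based estimator attributed to Immerkær [9] above. It applies the 3 × 3 mask [[1, −2, 1], [−2, 4, −2], [1, −2, 1]], which cancels constant and linear intensity ramps, and converts the mean absolute response into a standard deviation under a Gaussian noise assumption. The function name `immerkaer_sigma` is ours, and border pixels are simply skipped; this is an illustration, not a reference implementation.

```python
import numpy as np


def immerkaer_sigma(img: np.ndarray) -> float:
    """Estimate the AWGN standard deviation with Immerkaer's fast Laplacian-based mask."""
    x = np.asarray(img, dtype=np.float64)
    h, w = x.shape
    # Neighbours of every interior pixel (the valid (h-2) x (w-2) region).
    c = x[1:-1, 1:-1]
    edges = x[:-2, 1:-1] + x[2:, 1:-1] + x[1:-1, :-2] + x[1:-1, 2:]
    corners = x[:-2, :-2] + x[:-2, 2:] + x[2:, :-2] + x[2:, 2:]
    # Mask [[1, -2, 1], [-2, 4, -2], [1, -2, 1]]: zero response on flat and linear regions.
    resp = corners - 2.0 * edges + 4.0 * c
    # For white Gaussian noise, resp has std 6*sigma, so E|resp| = 6*sigma*sqrt(2/pi).
    return float(np.sqrt(np.pi / 2.0) * np.abs(resp).sum() / (6.0 * (w - 2) * (h - 2)))
```

Because the mask also responds to edges and fine texture, running it on a textured image inflates the estimate, which is the overestimation tendency noted above.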

Statistics-based methods observe and measure statistics of noisy images and natural noise-free images, and derive the relationship between the noise standard deviation and those statistics. Zoran and Weiss [12] reported that natural images have scale-invariant statistics and that kurtosis values are higher for the low-frequency components, and they linked the discrete cosine transform (DCT) kurtosis values to the noise standard deviation. Following this DCT kurtosis work, Lyu et al. [13] extended the approach to estimate local noise variance, and Dong et al. [14] developed a new kurtosis model that uses a K-means-based method to estimate the noise level. In practice, DCT-based statistical methods work well for low-level noise but may underestimate the noise variance at high noise levels. The robust median absolute deviation (MAD) noise estimator proposed by Donoho et al. [15,16] is one of the most commonly used noise estimation algorithms because of its efficiency and accuracy.

Unlike conventional methods that process the whole image, block-based methods select homogenous blocks that contain no high-frequency components. Pyatykh et al. [17] applied principal component analysis (PCA) to image blocks and found that the smallest eigenvalue of the image block covariance can be used to estimate the noise variance. Liu et al. [18] suggested that a low-rank criterion is suitable for selecting weakly textured blocks, from which the noise level can then be estimated using iterative PCA. More recently, [19,20] developed sparse representation models of selected homogenous blocks to recover the NLF, and [21,22] designed superpixel-based noise estimation approaches that collect irregularly shaped smooth blocks. Because of their simplicity and efficiency, block-based noise estimation methods are attracting increasing attention. However, extracting homogenous blocks remains challenging, and the quality of the extracted blocks strongly influences estimation performance.
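The PCA principle mentioned for Pyatykh et al. [17] can be illustrated with a short sketch: vectorize all overlapping p × p blocks, form their covariance matrix, and take the square root of its smallest eigenvalue as the noise standard deviation. This shows only the core idea; the published method adds block selection and further corrections that are not reproduced here, and the function name and default block size `p = 5` are hypothetical.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def pca_min_eig_sigma(img: np.ndarray, p: int = 5) -> float:
    """Noise std as the square root of the smallest eigenvalue of the block covariance."""
    x = np.asarray(img, dtype=np.float64)
    # Every overlapping p x p block, flattened to a p*p vector (one row per block).
    blocks = sliding_window_view(x, (p, p)).reshape(-1, p * p)
    cov = np.cov(blocks, rowvar=False)          # (p*p) x (p*p) covariance matrix
    lam_min = np.linalg.eigvalsh(cov)[0]        # eigvalsh returns eigenvalues in ascending order
    return float(np.sqrt(max(lam_min, 0.0)))
```

The idea works because image content in natural blocks tends to occupy a low-dimensional subspace, leaving the smallest principal directions dominated by noise; with a finite number of blocks the smallest sample eigenvalue is biased slightly low.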

Previous studies have shown that entropy can be used for image segmentation and texture analysis [23,24,25], and other studies have reported that entropy is robust to noise [26,27]. However, little research has applied entropy to image sensor noise estimation. Motivated by these properties, this paper proposes the local gray statistic entropy (LGSE) to extract blocks with weak textures. By combining the strengths of block-based methods and the robust MAD-based method, we design a novel noise estimator that uses LGSE for block selection and the Haar wavelet-based local median absolute deviation (HLMAD) for local variance calculation.

This work also addresses Poisson-Gaussian noise (PGN), which is less studied but models real camera noise better. Existing PGN sensor noise estimators can be classified into scatterplot fitting-based methods, least squares fitting-based methods, and maximum likelihood estimation-based methods. Scatterplot fitting-based methods [28,29,30] collect the local means and variances of the selected blocks and fit these scatter points by linear regression. Least squares fitting-based methods [31,32,33] sample weakly textured blocks and estimate the PGN parameters by fitting the sampled data with a least squares approach. Maximum likelihood estimation-based methods [34,35] also extract smooth blocks first, and then maximize a likelihood function to estimate the PGN parameters. All three categories are widely used. In this paper, we use maximum likelihood estimation (MLE) to process the extracted local mean-variance data for PGN estimation.
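As an illustration of the scatterplot fitting category, the sketch below fits the line σ² = a·μ + b through (local mean, local variance) points with ordinary least squares. The helper name is hypothetical, and practical scatterplot methods add block selection and outlier handling before the fit.

```python
import numpy as np


def fit_nlf_scatter(local_means, local_vars):
    """Ordinary least-squares line sigma^2 = a * mu + b through the scatter points."""
    mu = np.asarray(local_means, dtype=np.float64)
    var = np.asarray(local_vars, dtype=np.float64)
    a, b = np.polyfit(mu, var, 1)   # a degree-1 fit returns [slope, intercept]
    return float(a), float(b)
```

For example, points (10, 25), (50, 45), (100, 70), and (200, 120) lie exactly on a line with a = 0.5 and b = 20. Ordinary least squares weights every point equally, which is one reason likelihood-based alternatives are attractive when the variance estimates of brighter blocks are themselves noisier.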

This paper makes four contributions: (1) We analyze the noise sources of an imaging camera and choose simple but effective noise models in the image domain to describe most forms of image sensor noise. (2) Conventional noise estimation methods first eliminate the textures and edges of noisy images. Inspired by this principle, we propose an effective method that uses LGSE to select homogenous blocks without high-frequency details; to the best of our knowledge, this is a novel use of local gray entropy in noise estimation. (3) By comparing traditional noise estimation methods, we find that the local median absolute deviation computes the local standard deviation robustly and that MLE overcomes the weaknesses of scatterplot-based estimation; combining these two methods and their advantages yields a reliable noise estimation method. (4) With these measures, we present a robust image sensor noise level estimation scheme that outperforms several state-of-the-art noise estimation algorithms.

The rest of this paper is organized as follows. Section 2 discusses the noise model of the image sensor in the image domain and presents the AWGN and PGN models. Section 3 describes the proposed LGSE- and HLMAD-based noise estimation algorithm in detail. Section 4 presents experimental results and discussion on synthesized noisy datasets. Finally, Section 5 concludes the paper.

2. Image Sensor Noise Model

Irie et al. [5,6] indicated that the CCD camera noise model includes comprehensive noise sources: reset noise, thermal noise, amplifier noise, flicker noise, circuit readout noise, and photon shot noise. Among these, the reset, thermal, amplifier, flicker, and circuit readout noise are additive in the spatial domain and can generally be grouped into the readout noise. Hence, the total noise of a CCD camera can be defined as the combination of the readout noise (an additive Gaussian process) and the photon shot noise (a multiplicative Poisson process) [5].

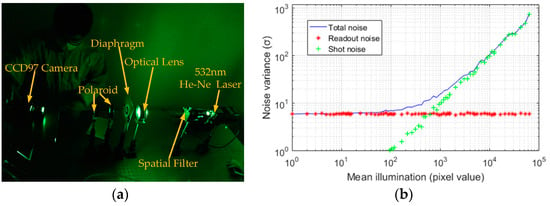

In the CCD noise analysis experiment, we used a 532 nm He-Ne laser to generate the light beam, a CCD97 sensor (produced by e2v, England) in standard mode (without the multiplication gain register) for photo-electric conversion, and a 16-bit data acquisition card (0–65535 DN) to collect the images. A diaphragm was used to adjust the illumination intensity, and a polaroid and an optical lens were used to keep the illumination uniform on the CCD97 light-receiving surface. To ensure the stability of the scene and the uniformity of the light received by the CCD97, a photomultiplier tube served as a wide-range micro-illuminometer to verify that the brightness at the CCD light-receiving surface was stable before the experiment. In addition, averaging the 25 images in each group compensates for the output fluctuation of the He-Ne laser and improves the accuracy of the system test.

To identify and calculate the total noise, readout noise, and shot noise of the image sensor following the measurement approach of [5,6], 100 image groups at different illumination intensities were captured in an optical darkroom; each group contained 25 consecutive images without illumination and 25 consecutive images with illumination. Because the images were captured under uniform illumination, the total noise variance of each group equals the average of the variances of the 25 consecutive illuminated images. The readout noise variance of each group is computed by averaging the variances of the 25 images captured in the dark. The shot noise variance of each group is the difference between the total noise variance and the readout noise variance. From these noise variances, the photon transfer curve (PTC) and its logarithm were computed. The experimental platform and the computed PTC are shown in Figure 1: Figure 1a shows the experimental platform for CCD noise analysis, and Figure 1b shows that the total noise of the image sensor contains signal-independent readout noise (red dots) and signal-dependent shot noise (green dots). Figure 1b also shows that the noise curve is almost flat at low illumination levels, implying that the noise in dark image regions (mostly readout noise) is signal independent, whereas as the illumination increases, the noise becomes increasingly signal dependent because of shot noise.
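The per-group computation described above can be written as a short NumPy sketch. It assumes each group is given as arrays of dark and illuminated frames of shape (25, H, W), computes each frame's spatial variance, and averages over the group. The PTC abscissa used here (mean illuminated level minus mean dark level) and the function name `ptc_point` are our assumptions, since the text does not specify them.

```python
import numpy as np


def ptc_point(dark_frames, light_frames):
    """One PTC point from a group of dark and illuminated frames, each shaped (n, H, W)."""
    dark = np.asarray(dark_frames, dtype=np.float64)
    light = np.asarray(light_frames, dtype=np.float64)
    # Spatial variance of every frame (illumination assumed uniform), averaged over the group.
    total_var = light.var(axis=(1, 2), ddof=1).mean()
    read_var = dark.var(axis=(1, 2), ddof=1).mean()
    # Shot noise variance = total noise variance - readout noise variance.
    shot_var = total_var - read_var
    # Assumed PTC abscissa: mean illuminated level minus mean dark (offset) level.
    signal = light.mean() - dark.mean()
    return float(signal), float(total_var), float(read_var), float(shot_var)
```

Repeating this for all 100 groups and plotting the three variances (or their logarithms) against the signal gives a curve of the kind shown in Figure 1b.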

Figure 1.

Real noise analysis for a charge-coupled device (CCD) image sensor. (a) Experimental platform for noise analysis of the CCD image sensor; (b) the Photon Transfer Curve (PTC) computed according to the measurements of [5,6].

According to this noise analysis of the CCD image sensor, two parametric models are used to describe image sensor noise: the generalized zero-mean additive white Gaussian noise (AWGN) model and the Poisson-Gaussian noise (PGN) model.

2.1. Additive White Gaussian Noise Model

Among the various sources of CCD camera noise, the reset noise, thermal noise, amplifier noise, and circuit readout noise can be regarded as additive spatial and temporal noise. Since all of these noise sources follow a zero-mean white additive Gaussian distribution, their joint noise model simplifies to:

$$I(x,y) = I_0(x,y) + n(x,y), \tag{1}$$

where $(x,y)$ is the pixel location, and $I(x,y)$, $I_0(x,y)$, and $n(x,y)$ denote the original noisy image, the noise-free image, and the random noise image at $(x,y)$, respectively. Generally, the random noise image is assumed to be white additive Gaussian noise with zero mean and unknown variance $\sigma_n^2$:

$$n(x,y) \sim \mathcal{N}(0, \sigma_n^2). \tag{2}$$

In the AWGN model, because images often have rich textures, estimating the unknown variance $\sigma_n^2$ remains a difficult problem for blind de-noising. To solve this problem, this paper proposes a novel noise variance estimation method.

2.2. Poisson-Gaussian Noise Model

For a practical image sensor noise model, the signal-dependent shot noise cannot be ignored. As shown in Figure 1b, the measured shot noise increases significantly with irradiation. Because shot noise follows a Poisson distribution, the actual CCD camera noise can be treated as a mixture of multiplicative Poisson noise and additive white Gaussian noise:

$$I(x,y) = I_0(x,y) + n_m(x,y), \tag{3}$$

where $n_m(x,y)$ is the mixed total noise image at $(x,y)$. In the Poisson-Gaussian mixed noise model, the mixed total noise contains signal-dependent (Poisson) noise and signal-independent (Gaussian) noise, and is modeled as:

$$n_m(x,y) = n_p(x,y) + n_g(x,y), \tag{4}$$

where $n_p(x,y)$ is the Poisson-distributed, signal-dependent component and $n_g(x,y)$ is the zero-mean Gaussian component with variance $b$, as in the AWGN model. Hence, the noise level function becomes:

$$\sigma^2(x,y) = a\,\mu(x,y) + b, \tag{5}$$

where $\sigma^2(x,y)$ is the local total noise variance of the mixed noise model, $\mu(x,y)$ is the expected intensity of the noise-free image, which is approximately equal to the local mean of the noisy image, and $a$ and $b$ represent the Poisson noise parameter and the Gaussian noise variance, respectively. In the PGN model, the main task is to estimate the two parameters $a$ and $b$. We compute the local mean $\mu_i$ of each selected image block and estimate its local total variance $\hat{\sigma}_i^2$; the resulting set of local mean-variance pairs $(\mu_i, \hat{\sigma}_i^2)$ is then used to estimate $a$ and $b$ accurately by MLE.
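The noise level function $\sigma^2 = a\,\mu + b$ can be checked numerically. The sketch below synthesizes Poisson-Gaussian noise with the common scaled-Poisson convention $z = a\,\mathcal{P}(I_0/a) + \mathcal{N}(0, b)$, which has mean $I_0$ and variance $a I_0 + b$. This convention and the helper name `add_pgn` are assumptions for illustration, not necessarily the generator used in the experiments.

```python
import numpy as np


def add_pgn(clean, a, b, rng):
    """Add Poisson-Gaussian noise whose variance is a * clean + b (scaled-Poisson convention)."""
    x = np.asarray(clean, dtype=np.float64)
    # a * Poisson(x / a) has mean x and variance a * x: the signal-dependent part.
    shot = a * rng.poisson(x / a)
    # Zero-mean Gaussian with variance b: the signal-independent part.
    read = rng.normal(0.0, np.sqrt(b), x.shape)
    return shot + read
```

Measuring the sample variance of a constant patch at several intensity levels recovers points on the straight line $a\mu + b$, which is exactly the relationship the estimator later inverts.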

3. Proposed Noise Estimation Algorithm

3.1. Proposed Homogenous Blocks Selection Method

First, a group of image blocks of size $w \times w$ is generated from the input noisy image of size $M \times N$ by sliding a window pixel by pixel, and the group of blocks is expressed as:

$$\{B_i\}_{i=1}^{BN}, \tag{6}$$

where $B_i$ is the matrix of the i-th block and $BN$ is the total number of blocks. The local mean of the nearly noise-free signal is computed by averaging all the noisy pixels in the block:

$$\mu_i = \frac{1}{w^2} \sum_{(x,y) \in \Omega_i} I(x,y), \tag{7}$$

where $\Omega_i$ is the local $w \times w$ window around the center pixel of the i-th block. The proposed homogenous block selection method is introduced as follows.
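A minimal NumPy sketch of block extraction and local means, assuming a square w × w window slid pixel by pixel without padding (so that BN = (M − w + 1)(N − w + 1) in this sketch). `sliding_window_view` returns every block as a read-only view without copying data, and the local means follow from a single reduction. The function name and the default w = 15 (chosen to match the 15 × 15 boxes in Figures 5 and 6) are illustrative.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def extract_blocks(img, w=15):
    """All w x w blocks (pixel-by-pixel sliding, no padding) and their local means."""
    x = np.asarray(img, dtype=np.float64)
    # blocks[r, c] is the w x w block whose top-left pixel is (r, c),
    # i.e. the window centred on pixel (r + w // 2, c + w // 2); no data is copied.
    blocks = sliding_window_view(x, (w, w))
    # Local mean of every block: average of all its noisy pixels.
    means = blocks.mean(axis=(2, 3))
    return blocks, means
```

For a 512 × 512 image and w = 15 this yields 498 × 498 blocks; keeping them as a view avoids allocating all of them explicitly.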

3.1.1. Local Gray Statistic Entropy

In information theory, Shannon entropy [36] effectively reflects the amount of information contained in an event. The local gray statistic method is similar to Shannon entropy, but it focuses on how disordered the gray texture values are. First, given the original noisy image, the local map of normalized pixel grayscale values is calculated as:

where $i$ and $j$ index a location in the local window around $(x, y)$, $I(i, j)$ is the gray level of the original noisy image, $p(i, j)$ is the normalized pixel grayscale value, and $\varepsilon$ is a small constant that prevents the denominator from becoming zero. The proposed LGSE is then defined as follows:

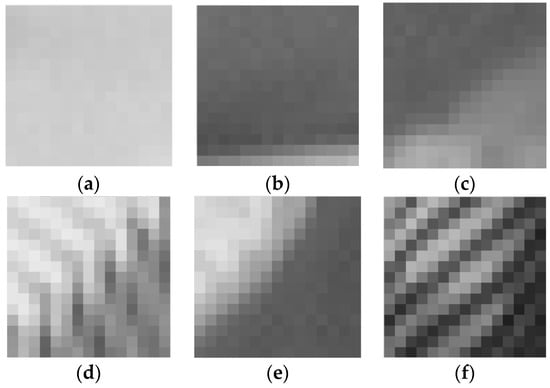

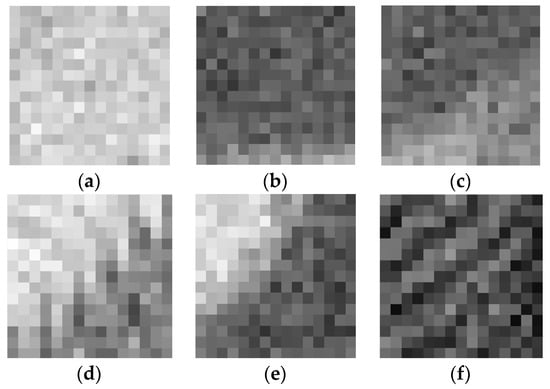

where the LGSE at location $(x, y)$ is the local gray entropy of the 3 × 3 center pixels and all of their neighboring pixels. According to the theory of Shannon entropy, the LGSE reflects the degree of dispersion of the local grayscale texture. When the local gray distribution is uniform, the LGSE is relatively large; when the local gray levels are widely dispersed, the LGSE is smaller. Because the LGSE results from the combined contribution of all pixels in the local window, the local entropy is inherently robust to noise. Figure 2 shows six noise-free blocks with different textures and their LGSE values: the weakly textured blocks have relatively higher LGSE values. Note that "weak texture" here is a generic term that covers not only smooth blocks but also slightly textured ones. Figure 3 shows the blocks of Figure 2 corrupted by strong AWGN, together with their LGSE values. Comparing Figures 2 and 3, weakly textured blocks retain high LGSE values even under strong noise, whereas the LGSE values of strongly textured blocks drop faster. Thus, weakly textured blocks have larger LGSE values, and the proposed LGSE is robust to noise when selecting weakly textured blocks.
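Equations (8) and (9) may define the LGSE differently in detail; the following is only a plausible sketch of a Shannon-style local entropy, under the assumption that the gray values of a block are normalized to sum to one and the entropy is taken in bits. It reproduces the qualitative behavior described above: a uniform block attains the maximum value log₂(w²), and dispersed gray levels lower it. The function name is hypothetical.

```python
import numpy as np


def local_gray_entropy(block, eps=1e-8):
    """Assumed LGSE: Shannon entropy (bits) of a block's gray values normalised to sum to 1."""
    g = np.asarray(block, dtype=np.float64).ravel()
    p = g / (g.sum() + eps)          # normalised grayscale values; eps avoids division by zero
    p = p[p > 0]                      # convention: 0 * log2(0) = 0
    return float(-(p * np.log2(p)).sum())
```

For a 15 × 15 block the maximum of this sketch is log₂ 225 ≈ 7.81, the same range as the values reported in Figures 2 and 3; that agreement is suggestive but does not confirm that the paper uses exactly this definition.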

Figure 2.

Local gray statistic entropy of various noise-free image blocks; weakly textured blocks have relatively larger local gray statistic entropy (LGSE) values: (a) LGSE = 7.8135; (b) LGSE = 7.7972; (c) LGSE = 7.7761; (d) LGSE = 7.7618; (e) LGSE = 7.7158; (f) LGSE = 7.6994.

Figure 3.

Local gray statistic entropy of noisy image blocks corrupted by additive white Gaussian noise (AWGN); weakly textured blocks have larger LGSE values: (a) LGSE = 7.8094; (b) LGSE = 7.7155; (c) LGSE = 7.6537; (d) LGSE = 7.4976; (e) LGSE = 7.3127; (f) LGSE = 7.1362.

3.1.2. Homogenous Blocks Selection Based on LGSE with Histogram Constraint Rule

Dong et al. [33] indicated that the image gray level histogram and the block texture degree are strongly related. Inspired by this observation, and to reduce or even eliminate the disturbance of too many outliers, the gray level histogram-based constraint rule is introduced to first filter out the image blocks with disorderly, rich textures.

In [19,28,30], the estimated noise level functions (NLFs) all show a comb shape in the scatterplots. These comb-shaped NLFs indicate that pixel intensities approaching the highest or lowest value can severely degrade noise estimation, because the brightest or darkest pixels may generate many outliers and ultimately reduce estimation precision. Therefore, we first exclude the blocks whose mean pixel value is too dark (0–15, for a pixel intensity range of 0 to 255) or too bright (240–255) in the gray level histogram:

where the three terms denote, respectively, the mean gray value of the i-th block, the exclusion rule for the darkest or brightest blocks, and the updated i-th block after the darkest or brightest blocks are removed; the residual gray levels are those that remain after this removal. The probability of occurrence of a residual gray level is computed by:

Here, the probability is obtained from the frequency of the residual gray level in the gray level histogram and the total number of pixels with non-zero residual values. Blocks whose gray values occur infrequently may also have high LGSE values, which produces undesirable isolated values. Therefore, rather than processing all gray levels, we only examine the gray levels that occur frequently, and we eliminate the blocks whose frequency of occurrence falls below a threshold. To this end, the joint probability is first introduced as:

where the frequency of each residual gray level is taken relative to the maximum frequency, and a threshold is used to identify and remove blocks whose mean gray value occurs with low frequency. According to Bayes' rule, the conditional probability can be expressed as:

Using Equations (12)–(14), the blocks whose mean-gray-value occurrence probability is smaller than the conditional probability value are eliminated.

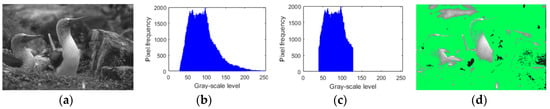

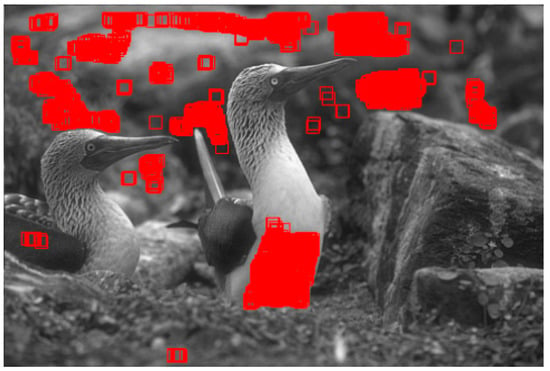

In simple terms, the histogram-based constraint rule arranges the gray levels in descending order of occurrence, keeps the blocks whose mean gray value occurs more frequently than the threshold, and updates the block set accordingly. Specifically, the threshold is set to remove disorderly blocks: blocks whose mean gray values occur infrequently are treated as disorderly, richly textured blocks, and their LGSE values are set to zero. Figure 4 illustrates the whole procedure of the histogram-based constraint rule for eliminating outliers, using the “Birds” image as an example. Figure 4a shows the original “Birds” image, Figure 4b shows its gray level histogram, and Figure 4c shows the gray levels selected by the histogram constraint rule to suppress outliers. In Figure 4d, the green dots cover the residual blocks that remain after the histogram constraint rule is applied.
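The sketch below implements one hypothetical reading of the histogram-based constraint rule (Equations (10)–(14) may differ in detail): drop blocks whose mean gray value lies in 0–15 or 240–255, build the gray level histogram of the image restricted to the residual levels, and keep a block only if the frequency of its rounded mean gray value, relative to the most frequent residual level, is at least a threshold `thr`. The default `thr = 0.1` is a placeholder, not the paper's setting, and the function name is ours.

```python
import numpy as np


def histogram_constraint(img, block_means, thr=0.1, dark=15, bright=240):
    """Hypothetical histogram rule: boolean mask of blocks to keep."""
    g = np.clip(np.rint(np.asarray(block_means, dtype=np.float64)), 0, 255).astype(int)
    # Drop the darkest (0-15) and brightest (240-255) blocks by their mean gray value.
    keep = (g > dark) & (g < bright)
    # Gray level histogram of the 8-bit image, restricted to the residual gray levels.
    levels = np.clip(np.rint(np.asarray(img, dtype=np.float64)), 0, 255).astype(np.int64)
    hist = np.bincount(levels.ravel(), minlength=256).astype(np.float64)
    hist[: dark + 1] = 0.0
    hist[bright:] = 0.0
    if hist.max() == 0:
        return np.zeros_like(keep)
    # Frequency of each block's mean gray level relative to the most frequent level.
    rel = hist / hist.max()
    return keep & (rel[g] >= thr)
```

In a full pipeline, the LGSE of every block outside the returned mask would be set to zero, matching the description above.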

Figure 4.

An example of the histogram-based constraint rule for eliminating outliers. (a) Original “Birds” image; (b) the gray level histogram of (a); (c) the gray levels selected by the histogram constraint rule; (d) the blocks selected according to (c), labeled in green.

The histogram constraint rule is a simple pretreatment that removes undesirable outliers, but it cannot by itself yield reliable weakly textured blocks. To further suppress the influence of textures in the residual blocks, the LGSE-based homogenous block selection method is proposed:

where the three terms denote, respectively, the selected minimum LGSE value, the maximum LGSE value, and the selection ratio that controls weakly textured block selection. Based on noise estimation results for 134 scene images, the selection ratio is set empirically relative to the total number of pixels in the whole image; with this setting, only 10% of the block set is selected as homogenous blocks in our experiments. Figure 5 shows an example of homogenous block selection in the “Birds” image, where the 15 × 15 red boxes outline the finally selected homogenous blocks. The complete homogenous block selection process for “Barbara” and “House” is shown in Figure 6.
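Reading the selection rule as "keep the top fraction of blocks by LGSE" (the text states that 10% of the block set is kept), a rank-based sketch is shown below. Blocks whose LGSE was set to zero by the histogram rule naturally fall to the end of the ranking. The threshold form of Equation (15) may differ in detail, and the function name is hypothetical.

```python
import numpy as np


def select_top_lgse(lgse, ratio=0.10):
    """Flat indices of the blocks with the largest LGSE values (top `ratio` fraction)."""
    lgse = np.asarray(lgse, dtype=np.float64).ravel()
    k = max(1, int(round(ratio * lgse.size)))   # number of homogenous blocks to keep
    order = np.argsort(lgse)[::-1]              # block indices sorted by descending LGSE
    return order[:k]
```

If the LGSE values are stored as a 2-D map, `np.unravel_index(indices, lgse_map.shape)` converts the returned flat indices back to block positions.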

Figure 5.

An example for homogenous blocks selection, and those 15 × 15 red boxes in the “Birds” image have outlined the finally selected homogenous blocks.

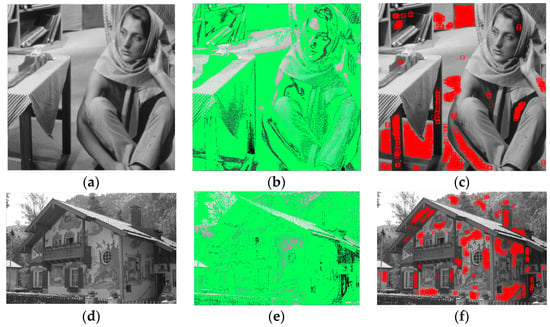

Figure 6.

Two examples of homogenous block selection in the “Barbara” and “House” images. (a) Original “Barbara” image; (b) blocks selected after the histogram constraint in the “Barbara” image, labeled in green; (c) 15 × 15 red boxes outlining the finally selected homogenous blocks in the “Barbara” image; (d) original “House” image; (e) blocks selected after the histogram constraint in the “House” image, labeled in green; (f) 15 × 15 red boxes outlining the finally selected homogenous blocks in the “House” image.

3.2. Haar Wavelet-Based Local Median Absolute Deviation for Local Variance Estimation

In this paper, a robust Haar wavelet-based local median absolute deviation (HLMAD) method is presented to estimate the standard deviation of a noisy image block. The Haar wavelet, one of the simplest and most commonly used wavelet transforms, is well suited to noise detection because of its orthogonality and compact support. At the first scale of the 2-D Haar wavelet analysis, the HH coefficients represent the high-frequency component, which in the selected homogenous blocks is the noise component. The HH sub-band coefficients of the first scale of the 2-D Haar wavelet transform are calculated by:

where $I$ is the original noisy image, $H$ is the high-frequency component obtained in the first step by applying 1-D Haar analysis to each row of the noisy image, and $HH$ contains the high-frequency coefficients of the 2-D Haar wavelet analysis. Because the MAD is a more stable measure of statistical dispersion than the sample variance, it performs better for a wider range of noise distributions; it is also more robust to outliers than the sample standard deviation. Applying the robust MAD estimator of [15,16], the local block standard deviation is derived as:

where $\mathrm{MAD}$ is the median of the absolute deviations of the 2-D Haar high-frequency coefficients $HH$, $k$ is a constant proportionality factor, and $\hat{\sigma}_i$ is the estimated standard deviation of the selected homogenous image block.
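The HLMAD computation can be sketched in NumPy as follows, assuming an orthonormal Haar transform (differences scaled by 1/√2, so white noise keeps its standard deviation in the HH band) and the Gaussian-consistent factor 1/0.6745 that is customary for Donoho's MAD estimator; the paper's exact constant and normalization may differ. Blocks with an odd side length are cropped by one row and one column, and both function names are hypothetical.

```python
import numpy as np


def haar_hh(block):
    """First-scale HH sub-band of an orthonormal 2-D Haar transform."""
    x = np.asarray(block, dtype=np.float64)
    x = x[: x.shape[0] // 2 * 2, : x.shape[1] // 2 * 2]   # crop to even size
    h = (x[:, 0::2] - x[:, 1::2]) / np.sqrt(2.0)          # 1-D Haar along each row -> H
    return (h[0::2, :] - h[1::2, :]) / np.sqrt(2.0)       # then along columns -> HH


def hlmad_sigma(block):
    """Local noise std: median(|HH - median(HH)|) / 0.6745 (MAD with Gaussian scaling)."""
    hh = haar_hh(block).ravel()
    mad = np.median(np.abs(hh - np.median(hh)))
    return float(mad / 0.6745)
```

The HH band cancels constant and planar intensity, so a smooth block contributes almost nothing but noise. A 15 × 15 block yields only 7 × 7 = 49 HH coefficients, so individual block estimates are noisy; aggregating many blocks, for example with the median estimator of Algorithm 1, reduces this spread.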

3.3. Maximum Likelihood Estimation for Multi-Parameter Estimation

By the central limit theorem, the estimated local variances approximately follow a normal distribution when the number of sampling points is sufficient. Therefore, when fitting the NLF model $\sigma^2 = a\mu + b$, the likelihood function of the dataset of selected homogenous blocks can be written as:

where $T$ is the total number of selected blocks, the dataset $D = \{(\mu_i, \hat{\sigma}_i^2)\}_{i=1}^{T}$ is the set of local pixel mean and local total variance pairs, and $\mu_i$ and $\hat{\sigma}_i^2$ are the pixel mean and the estimated noise variance of the i-th block. The log-likelihood function is:

By the principle of maximum likelihood, the two parameters $a$ and $b$ are estimated by maximizing the likelihood of the selected samples:

Here, gradient descent is applied to the negative log-likelihood to solve this maximization problem:

This yields reliable estimates of the two NLF parameters $a$ and $b$.
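The following sketch assumes a specific likelihood, which may differ from Equations (19)–(22): each local variance estimate is Gaussian with mean aμᵢ + b and variance c·(aμᵢ + b)², where c = 2/(n − 1) is the relative variance of a sample variance computed from n pixels. It starts from a least-squares fit and then runs gradient descent with a backtracking (Armijo) line search on the mean negative log-likelihood. The likelihood model, the line search, and all names are our assumptions.

```python
import numpy as np


def _nll(p, mu, var, c):
    """Mean negative log-likelihood (constants dropped) for the NLF sigma^2 = a*mu + b."""
    v = p[0] * mu + p[1]
    if np.any(v <= 0):
        return np.inf
    return float(np.mean(np.log(v) + (var - v) ** 2 / (2.0 * c * v ** 2)))


def _grad(p, mu, var, c):
    v = p[0] * mu + p[1]
    r = (var - v) / v
    dv = (1.0 - r / c - r ** 2 / c) / v        # derivative of each term w.r.t. v_i
    return np.array([np.mean(dv * mu), np.mean(dv)])


def fit_nlf_mle(mu, var, n_pix, iters=500):
    """Estimate (a, b) by gradient descent on an assumed Gaussian likelihood."""
    mu = np.asarray(mu, dtype=np.float64)
    var = np.asarray(var, dtype=np.float64)
    c = 2.0 / (n_pix - 1)                      # relative variance of a sample variance
    a0, b0 = np.polyfit(mu, var, 1)            # least-squares starting point
    p = np.array([max(a0, 1e-6), max(b0, 1e-6)])
    f = _nll(p, mu, var, c)
    for _ in range(iters):
        g = _grad(p, mu, var, c)
        step = 1.0
        while step > 1e-12:                    # backtracking (Armijo) line search
            q = p - step * g
            fq = _nll(q, mu, var, c)
            if fq <= f - 1e-4 * step * float(g @ g):
                break
            step *= 0.5
        else:
            break                              # no descent step found: stop
        p, f = q, fq
    return float(p[0]), float(p[1])
```

The line search is there because the gradient components for a and b differ in scale by roughly the magnitude of μ, so a fixed step size would either crawl or overshoot into negative variances.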

3.4. Proposed Noise Parameter Estimation Algorithm

3.4.1. Proposed Noise Parameter Estimation Algorithm for the AWGN Model

The AWGN model, the most commonly used image sensor noise model, has only one parameter to estimate: the noise standard deviation $\sigma_n$. Based on the methods and theory above, the noise standard deviation estimation algorithm is built as follows. First, we divide the noisy image into a group of blocks by sliding a window pixel by pixel, and compute the mean gray value of each block. The LGSE of each block is calculated, and the LGSE values of the darkest and brightest blocks are excluded. Next, the LGSE values of the residual blocks are sorted in descending order, and the homogenous blocks are extracted by selecting those with the largest LGSE values. Then, the local standard deviation of each selected block is computed by HLMAD, forming a cluster of local standard deviations. Finally, the noise standard deviation is estimated as the median of this cluster:

$$\hat{\sigma}_n = \operatorname{median}(S), \tag{23}$$

where $S = \{\hat{\sigma}_t\}_{t=1}^{T}$ is the cluster of local standard deviations of the $T$ selected blocks and $\hat{\sigma}_n$ is the estimated noise standard deviation for the AWGN model. The complete noise parameter estimation algorithm for the CCD sensor AWGN model is summarized in Algorithm 1.

| Algorithm 1. Proposed noise parameter estimation algorithm for the AWGN model |
|:---|
| Input: Noisy image $I$, window size $w$, block selection ratio. |
| Output: Estimated noise standard deviation $\hat{\sigma}_n$. |
| Step 1: Group a set of blocks by sliding the window pixel by pixel: $\{B_k\}_{k=1}^{BN}$. |
| Step 2: Compute the mean gray value of all pixels in each block. |
| Step 3: for block index k = 1:BN do |
| Compute the LGSE of the block according to (8) and (9). |
| end for |
| Step 4: Exclude the LGSE of the darkest and brightest blocks according to (10) and (11). |
| Step 5: Select homogenous blocks based on the residual LGSE values using (15). |
| Step 6: for homogenous block index t = 1:T do |
| Obtain the local standard deviation $\hat{\sigma}_t$ of the homogenous block by HLMAD according to (16)–(18). |
| end for |
| Step 7: Estimate the noise standard deviation $\hat{\sigma}_n$ with the median estimator (23). |
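The following end-to-end sketch mirrors the steps of Algorithm 1 under explicit assumptions: the LGSE is taken as the Shannon entropy (in bits) of each block's gray values normalized to sum to one, the homogenous blocks are the top 10% by this entropy, HLMAD uses the first-scale HH band of an orthonormal Haar transform with the Gaussian-consistent factor 1/0.6745, and w = 15 is illustrative. None of these details is confirmed by the text above, and the function name is hypothetical.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def estimate_awgn_sigma(img, w=15, ratio=0.10, eps=1e-8):
    """Sketch of Algorithm 1 with assumed LGSE and HLMAD forms."""
    x = np.asarray(img, dtype=np.float64)
    view = sliding_window_view(x, (w, w))                 # Step 1: all w x w blocks
    means = view.mean(axis=(2, 3))                        # Step 2: block mean gray values
    p = view / (view.sum(axis=(2, 3), keepdims=True) + eps)
    safe = np.where(p > 0, p, 1.0)                        # log2(1) = 0 for non-positive p
    lgse = -(np.where(p > 0, p, 0.0) * np.log2(safe)).sum(axis=(2, 3))  # Step 3
    lgse[(means <= 15) | (means >= 240)] = 0.0            # Step 4: darkest/brightest
    flat = lgse.ravel()
    k = max(1, int(round(ratio * flat.size)))
    top = np.argsort(flat)[::-1][:k]                      # Step 5: largest LGSE values
    rows, cols = np.unravel_index(top, lgse.shape)
    m = (w // 2) * 2                                      # even crop for the Haar step
    sigmas = []
    for r, c in zip(rows, cols):                          # Step 6: HLMAD per block
        b = x[r:r + m, c:c + m]
        h = (b[:, 0::2] - b[:, 1::2]) / np.sqrt(2.0)
        hh = (h[0::2, :] - h[1::2, :]) / np.sqrt(2.0)
        sigmas.append(np.median(np.abs(hh - np.median(hh))) / 0.6745)
    return float(np.median(sigmas))                       # Step 7: median estimator
```

Because all normalized blocks are materialized at once, memory grows with (M − w + 1)(N − w + 1)w²; this is fine for small test images, but a practical version would process blocks in chunks.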

3.4.2. Proposed Noise Parameter Estimation Algorithm for the PGN Model

We also address the PGN model, which most closely describes practical image sensor noise. The proposed estimation algorithm for the PGN model largely follows the algorithm for the AWGN model. Compared with Algorithm 1, the PGN algorithm adds the gray level histogram-based constraint rule to remove disorderly blocks first, and computes the local mean of each selected block, yielding the local mean-variance pairs $(\mu_t, \hat{\sigma}_t^2)$ of the selected blocks. Finally, MLE is applied to these pairs to estimate the two PGN parameters $a$ and $b$. The complete noise parameter estimation algorithm for the image sensor PGN model is summarized in Algorithm 2.

| Algorithm 2. Proposed noise parameter estimation algorithm for the PGN model |
|:---|
| Input: Noisy image $I$, window size $w$, block selection ratio. |
| Output: Estimated NLF parameters $a$ and $b$. |
| Step 1: Group a set of blocks by sliding the window pixel by pixel: $\{B_k\}_{k=1}^{BN}$. |
| Step 2: Compute the mean gray value of all pixels in each block. |
| Step 3: for block index k = 1:BN do |
| Compute the LGSE of the block according to (8) and (9). |
| end for |
| Step 4: Exclude the LGSE of the darkest and brightest blocks according to (10) and (11). |
| Step 5: Apply the gray level histogram-based constraint rule to remove disorderly blocks, and exclude the LGSE of low-frequency gray values according to (12)–(14). |
| Step 6: Select homogenous blocks based on the residual LGSE values using (15). |
| Step 7: for homogenous block index t = 1:T do |
| Compute the mean gray value $\mu_t$ of all pixels in the selected block using (7). |
| Obtain the local variance $\hat{\sigma}_t^2$ of the homogenous block by HLMAD according to (16)–(18). |
| end for |
| Step 8: Estimate the two NLF parameters $a$ and $b$ by applying MLE, derived from (19)–(22), to the local mean-variance pairs $(\mu_t, \hat{\sigma}_t^2)$. |

4. Experimental Results

In this section, a series of experiments on synthesized noisy images covering various scenes is conducted to evaluate the performance of the proposed noise estimation algorithm. Several state-of-the-art and classical algorithms are selected for performance comparison. The experiments are performed in MATLAB R2016a (developed by MathWorks, Massachusetts, USA) on a computer with a 3.2 GHz Intel i5-6500 CPU and 16 GB of RAM.

4.1. Test Dataset

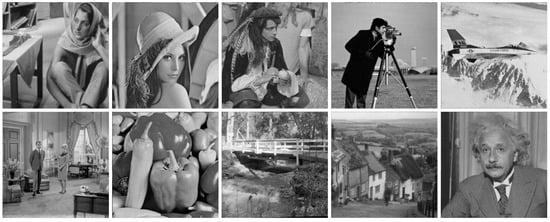

The test dataset consists of 134 images, from which synthesized noisy images were generated by adding AWGN or PGN. The images come from three typical test datasets: classic standard test images (CSTI), the Kodak PCD0992 images [37] and the Berkeley segmentation dataset (BSD) images [38]. The CSTI set contains 10 classic standard test images of size 512 × 512, shown in Figure 7. The Kodak PCD0992 set contains 24 images of size 768 × 512 or 512 × 768, released by the Eastman Kodak Company for unrestricted use; many researchers use them as a standard test suite for image de-noising. The BSD contains 100 test images and 200 training images of size 481 × 321 or 321 × 481; in our experiments, we randomly selected 25 images from the Berkeley test set and 75 images from the Berkeley training set (10 + 24 + 100 = 134 images in total). The test dataset therefore covers a wide variety of scenes, so testing on it shows whether the algorithm is suitable for many scenarios.
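The noisy test images can be synthesized along the following lines. This Python sketch is an assumption-based illustration, not the authors' MATLAB code: AWGN is parameterized by its standard deviation, and PGN by the variance function a·y + b of Foi et al. [28], with b taken as the variance of the Gaussian component (if the second parameter in the experiments denotes a standard deviation, its square should be passed instead). Clipping to the 8-bit range, which real sensors apply, is omitted. The function names are hypothetical.

```python
import numpy as np


def add_awgn(img, sigma, rng):
    """Additive white Gaussian noise with standard deviation sigma."""
    return img.astype(float) + rng.normal(0.0, sigma, img.shape)


def add_pgn(img, a, b, rng):
    """Poisson-Gaussian noise with the assumed variance function a*y + b.

    a * Poisson(y / a) has mean y and variance a*y; the Gaussian term adds
    variance b. The clean image y must be non-negative.
    """
    y = np.clip(img.astype(float), 0.0, None)
    return a * rng.poisson(y / a) + rng.normal(0.0, np.sqrt(b), y.shape)


rng = np.random.default_rng(0)
clean = np.full((512, 512), 100.0)
noisy = add_pgn(clean, 0.5, 10.0, rng)  # sample variance should be near 0.5*100 + 10
print(noisy.mean(), noisy.var())
```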

Figure 7.

Classic standard test images used to synthesize noisy images: “Barbara,” “Lena,” “Pirate,” “Cameraman,” “Warcraft,” “Couple,” “Peppers,” “Bridge,” “Hill” and “Einstein.”

4.2. Results of Noise Estimation

4.2.1. Effects of Parameters

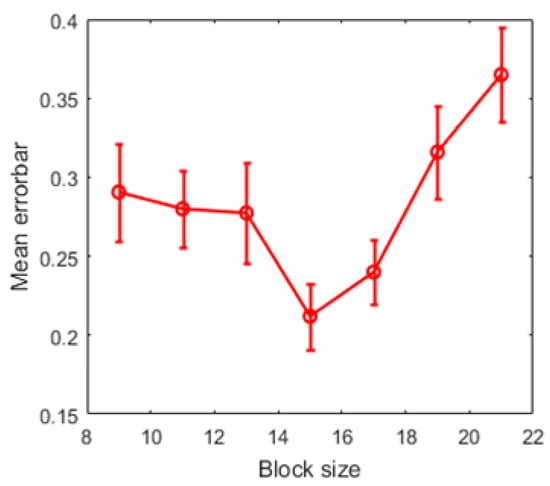

In this part, we compare the performance of the proposed noise estimation algorithm under various parameter configurations. To quantify its accuracy, the mean error of noise level estimation, computed from the added noise variance, the group of estimated noise variances and the average of the estimated noise variances, is used to compare the effects of different parameters. In this parameter selection experiment, the noise level is held fixed. The more accurate the noise estimation algorithm is, the smaller the mean error is.
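The mean-error formula itself did not survive in this section. Purely as an illustration under an assumption, the Python sketch below takes the bar height to be the absolute difference between the average estimated variance and the added variance, and the bar length to be the standard deviation of the individual estimates; the paper's exact definition may differ, and `error_bar` is a hypothetical name.

```python
import numpy as np


def error_bar(true_var, est_vars):
    """Assumed error-bar summary for repeated noise variance estimates.

    Returns (mean error, spread): the absolute bias of the average estimate
    and the standard deviation of the individual estimates.
    """
    est = np.asarray(est_vars, dtype=float)
    mean_error = abs(est.mean() - true_var)
    spread = est.std()
    return float(mean_error), float(spread)


# Three hypothetical estimates of an added variance of 100
print(error_bar(100.0, [98.0, 103.0, 101.0]))
```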

The proposed noise estimation algorithm is block-based, so its performance depends on the block size. In this experiment, all 134 images of the test dataset were processed by the proposed algorithm with different block sizes. The mean error bars for the different block sizes are shown in Figure 8; they indicate which block size is most suitable for these noisy scene images.

Figure 8.

The mean error bars for different block sizes. The block size with the smallest mean error and the shortest error bar gives the proposed algorithm a clear advantage in accuracy and stability.

4.2.2. Comparison to AWGN Estimation Baseline Methods

In this part, four classical and three state-of-the-art noise level estimation methods are included in the comparison experiments to evaluate the proposed AWGN level estimation method. Immerkær’s fast noise variance estimation (FNVE) [9], Khalil’s median absolute deviation (MAD)-based noise estimation [39], Aja-Fernández’s variance mode (VarMode) noise level estimation [40] and Zoran’s discrete cosine transform (DCT)-based noise estimation [12] were chosen as the classical methods. Laligant’s nonlinear noise estimator (NOLSE) [11], Pyatykh’s principal component analysis (PPCA)-based noise estimation [17] and Lyu’s noise variance estimation (EstV) [13] were selected as the state-of-the-art methods.

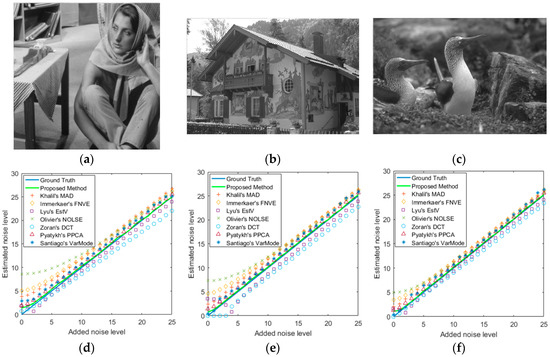

Figure 9 shows three example images (“Barbara”, “House”, “Birds”), one selected from each of the three image datasets, together with the noise level estimates obtained by the compared methods. The original images are shown in the first row, and Figure 9d–f shows the corresponding noise level estimation results. As shown in Figure 9, the DCT-based estimator performs well at low noise levels but underestimates the noise at higher noise levels. The FNVE, MAD, VarMode and NOLSE estimators tend to overestimate the noise level, whereas the EstV estimator underestimates it in every instance. At most noise levels, both the PPCA-based estimator and the proposed AWGN estimator work well, and on these three scenes the proposed method outperforms the existing methods.

Figure 9.

Different AWGN level estimation methods on three images; the proposed method outperforms several other existing noise estimation methods. (a–c) Original images (“Barbara,” “House,” “Birds”); (d–f) the corresponding noise level estimation results.

To further evaluate the proposed method, we extended the experiments to the full test dataset of 134 images. Table 1, Table 2 and Table 3 show the results of different AWGN estimation algorithms on the CSTI images, the Kodak PCD0992 images and the BSD images, respectively. In these tables, the added noise levels are 0, 1, 5, 10, 15, 20 and 25. As shown in Table 1, Table 2 and Table 3, the FNVE, MAD, NOLSE and VarMode estimators overestimate the noise level, because these filter-based methods do not fully account for the effect of image texture, which leaks into the filtered residual and is counted as noise. The DCT and EstV estimators work well at low noise levels, even better than the proposed estimator when the noise level is below five, but seriously underestimate the noise level under high-noise conditions. The reason is that these two methods use band-pass statistical kurtosis to extract noise components and suppress the interference of textures; at higher noise levels, they remove too many noise components along with the textures, which leads to underestimation. Compared with the above methods, the PPCA estimator performs better, and in most cases the proposed AWGN estimation method is superior to the PPCA estimator. Overall, the experimental results indicate that the proposed AWGN estimator is suitable for most noise levels and estimates the noise more accurately than the compared state-of-the-art methods.
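The texture-induced overestimation of filter-based estimators can be reproduced with a short Python sketch of Immerkær’s FNVE [9], the published Laplacian-difference estimator (not the proposed method): the image is filtered with the mask N = [[1, −2, 1], [−2, 4, −2], [1, −2, 1]] and σ = sqrt(π/2) · Σ|I ∗ N| / (6(W − 2)(H − 2)). The function name `fnve_sigma` is hypothetical. On pure noise over a flat background it recovers the noise standard deviation, but a synthetic checkerboard texture passes through the high-pass mask and inflates the estimate.

```python
import numpy as np


def fnve_sigma(img):
    """Immerkaer's fast noise variance estimation (FNVE).

    Applies the mask [[1,-2,1],[-2,4,-2],[1,-2,1]] on the valid region and
    returns sqrt(pi/2) * sum|I * N| / (6 (W-2)(H-2)).
    """
    I = np.asarray(img, dtype=float)
    r = (I[:-2, :-2] - 2 * I[:-2, 1:-1] + I[:-2, 2:]
         - 2 * I[1:-1, :-2] + 4 * I[1:-1, 1:-1] - 2 * I[1:-1, 2:]
         + I[2:, :-2] - 2 * I[2:, 1:-1] + I[2:, 2:])
    return np.sqrt(np.pi / 2.0) * np.abs(r).mean() / 6.0


rng = np.random.default_rng(0)
noise = rng.normal(0.0, 10.0, (256, 256))
yy, xx = np.mgrid[0:256, 0:256]
checker = 20.0 * np.where((yy + xx) % 2 == 0, 1.0, -1.0)  # synthetic texture
print(fnve_sigma(100.0 + noise))            # close to the true sigma of 10
print(fnve_sigma(100.0 + checker + noise))  # far above 10: texture counted as noise
```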

Table 1.

The results of different AWGN estimation algorithms on the classic standard test images (CSTI).

Table 2.

The results of different AWGN estimation algorithms on the Kodak PCD0992 images.

Table 3.

The results of different AWGN estimation algorithms on the Berkeley segmentation dataset (BSD) images.

4.2.3. Comparison to PGN Estimation Baseline Methods

In this part, the proposed PGN estimation method is compared with four representative PGN estimation methods. Foi’s clipped Poisson-Gaussian (CPG) noise fitting algorithm [28], Zabrodina’s scatter-plot regression curve fitting (RCF) estimator [29], Jeong’s simplified Poisson-Gaussian noise (SPGN) estimator [41] and Liu’s principal component analysis (LPCA)-based noise estimator [35] were selected as baseline methods because they are well studied and widely used for assessing new PGN estimators.

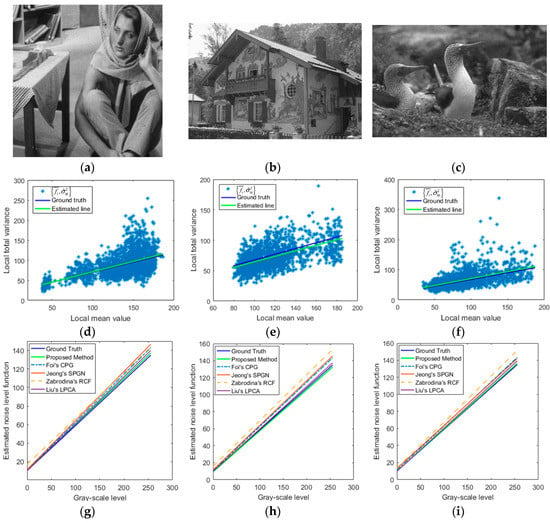

Figure 10 shows three example images and their estimated NLF results. The first row shows the noisy images generated with PGN parameters (0.5, 10). The second row displays the NLFs estimated by the proposed method, and the third row shows the NLFs estimated by the different PGN estimation methods. As Figure 10 shows, the CPG, SPGN and RCF methods overestimate the NLF in all three examples, while both the LPCA-based estimator and the proposed PGN estimator work well; the proposed method performs better than LPCA on “Barbara” and “Birds” at these noise parameters.

Figure 10.

Different Poisson-Gaussian noise (PGN) level estimation methods on three images; the proposed method outperforms several existing PGN estimation methods. (a–c) Noisy images (“Barbara,” “House,” “Birds”) with PGN parameters (0.5, 10); (d–f) results of our parameter estimation algorithm; (g–i) results of the different PGN estimation methods.

To verify the performance of the proposed PGN estimation method, we again performed experiments on the full test dataset of 134 images. The performance of the compared PGN estimation methods on the different image datasets is shown in Table 4, Table 5 and Table 6, for the added PGN parameter pairs (0.1, 1), (0.1, 5), (0.1, 10), (0.5, 1), (0.5, 5) and (0.5, 10). As shown in these tables, the CPG, RCF and SPGN estimators overestimate the NLF parameters. Table 4 and Table 5 show that the proposed PGN estimator works better than the other PGN estimators on the CSTI and Kodak PCD0992 images in all cases. Table 6 shows that the proposed estimator and the LPCA-based estimator give comparable results on the BSD images and are better than the other estimators. Thus, the LPCA-based estimator performs well on BSD images (481 × 321 or 321 × 481), whereas the proposed PGN estimator performs best, or on par with the best, on all three datasets, which suggests that it works more stably across different image sizes. Consequently, compared with these representative PGN estimators, the proposed PGN estimator is more accurate across various scenarios and PGN parameters.

Table 4.

The results of different PGN estimation algorithms on the CSTI images.

Table 5.

The results of different PGN estimation algorithms on the Kodak PCD0992 images.

Table 6.

The results of different PGN estimation algorithms on the BSD images.

4.3. Noise Estimation Tuned for Blind De-Noising

4.3.1. Quantify the Performance of Blind De-Noising

Blind de-noising is a pre-processing application that requires estimated noise parameters. In this part, we show that the proposed AWGN and PGN estimators can improve the performance of existing blind de-noising algorithms. We chose the state-of-the-art de-noising algorithm BM3D [42] to remove the estimated AWGN from noisy images, and the representative PGN de-noising algorithm VST-BM3D [43] to remove the estimated PGN.
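VST-BM3D depends on the estimated PGN parameters through its variance-stabilizing transform. As a hedged Python sketch, assuming the variance model σ²(y) = a·y + b with a zero-mean Gaussian component (so that the noisy value is z = a·p + n with p Poisson and n ~ N(0, b)), the generalized Anscombe transformation (GAT) below maps PGN to approximately unit-variance Gaussian noise, after which a Gaussian denoiser such as BM3D can be run with σ = 1; [43] derives the optimal inverse applied afterwards. The function name `gat_forward` is hypothetical.

```python
import numpy as np


def gat_forward(z, a, b):
    """Generalized Anscombe transform for the assumed NLF a*y + b.

    With z = a*p + n, p ~ Poisson, n ~ N(0, b), the output noise is
    approximately Gaussian with unit variance.
    """
    arg = a * np.asarray(z, dtype=float) + 0.375 * a**2 + b
    return (2.0 / a) * np.sqrt(np.maximum(arg, 0.0))  # clamp negative arguments


rng = np.random.default_rng(0)
a, b, y = 0.5, 10.0, 100.0
z = a * rng.poisson(y / a, 200000) + rng.normal(0.0, np.sqrt(b), 200000)
print(gat_forward(z, a, b).std())     # near 1 with the correct parameters
print(gat_forward(z, a, 40.0).std())  # noticeably below 1 when b is overestimated
```

If a or b is misestimated, the stabilized variance drifts away from 1 and the Gaussian denoiser is run with the wrong noise level, which is why an accurate PGN estimator matters for VST-BM3D.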

To compare the de-noised image with the noise-free image reliably, two image quality assessment metrics were used in this paper: (1) the structural similarity index measurement (SSIM) [44] and (2) the peak signal-to-noise ratio (PSNR). The SSIM is defined as:

$$\mathrm{SSIM}(x, y) = \frac{(2\mu_x\mu_y + C_1)(2\sigma_{xy} + C_2)}{(\mu_x^2 + \mu_y^2 + C_1)(\sigma_x^2 + \sigma_y^2 + C_2)}$$

where $x$ is the de-noised image, $y$ is the noise-free image, $\mu_x$ and $\mu_y$ are their mean values, $\sigma_x$ and $\sigma_y$ are their standard deviations, $\sigma_{xy}$ is their covariance, and $C_1$ and $C_2$ are small positive constants that keep the denominator from becoming zero. The PSNR is written as:

$$\mathrm{PSNR} = 10\log_{10}\frac{255^2}{\mathrm{MSE}}$$

where 255 is the peak gray value of an 8-bit image and MSE is the mean squared error between the de-noised image and the noise-free image:

$$\mathrm{MSE} = \frac{1}{MN}\sum_{i=1}^{M}\sum_{j=1}^{N}\big(x(i,j) - y(i,j)\big)^2$$

where the image size is $M \times N$ and $(i, j)$ is the pixel location.
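A minimal Python sketch of the two metrics follows. PSNR uses the 8-bit peak value 255. The SSIM function evaluates the formula once over the whole image, which is a simplification: the SSIM of [44] computes the same expression in local windows (an 11 × 11 Gaussian window in the original paper) and averages the resulting map, as windowed implementations such as scikit-image’s `skimage.metrics.structural_similarity` do. The constants C_1 = (0.01·255)² and C_2 = (0.03·255)² follow the defaults of [44]; the function names are hypothetical.

```python
import numpy as np


def psnr(x, y, peak=255.0):
    """PSNR in dB between a de-noised image x and a noise-free image y."""
    mse = np.mean((np.asarray(x, float) - np.asarray(y, float)) ** 2)
    return float("inf") if mse == 0 else 10.0 * np.log10(peak**2 / mse)


def ssim_global(x, y, peak=255.0, k1=0.01, k2=0.03):
    """SSIM formula evaluated once over the whole image (no sliding window)."""
    x = np.asarray(x, float)
    y = np.asarray(y, float)
    c1, c2 = (k1 * peak) ** 2, (k2 * peak) ** 2
    mx, my = x.mean(), y.mean()
    vx, vy = x.var(), y.var()
    cov = np.mean((x - mx) * (y - my))  # sigma_xy
    num = (2 * mx * my + c1) * (2 * cov + c2)
    den = (mx**2 + my**2 + c1) * (vx + vy + c2)
    return num / den
```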

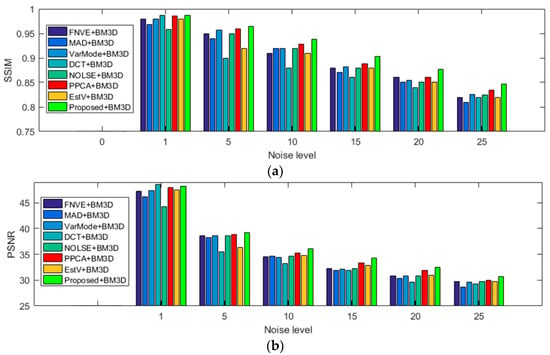

4.3.2. Noise Estimation Tuned for AWGN Blind De-Noising

To quantitatively evaluate how the proposed noise estimation algorithm benefits blind de-noising, all 134 images of the test dataset were used in the AWGN blind de-noising experiment, with added noise levels of 1, 5, 10, 15, 20 and 25. The average SSIM and PSNR values obtained by combining different AWGN estimators with BM3D on the test dataset are shown in Figure 11. Figure 11a shows that the proposed estimator combined with BM3D achieves the highest SSIM values at all noise levels, demonstrating that the proposed AWGN estimator improves the structural similarity of blind de-noising results. Figure 11b shows that the proposed estimator combined with BM3D also achieves the highest PSNR values at all noise levels, indicating that it reduces the distortion of blind de-noising. In summary, the proposed AWGN estimator consistently improves the performance of blind de-noising.

Figure 11.

Structural similarity index measurement (SSIM) and peak signal-to-noise ratio (PSNR) comparison results of different AWGN estimators with BM3D for blind de-noising on the 134 selected images at different noise levels. (a) Comparison results of SSIM; (b) comparison results of PSNR.

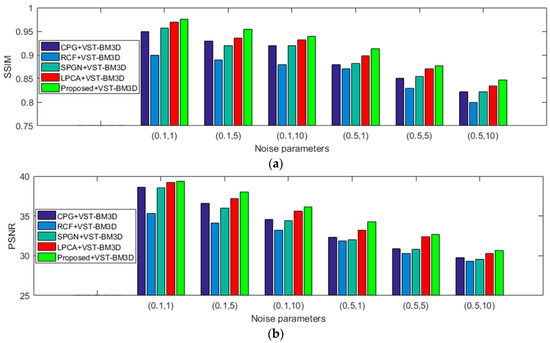

4.3.3. Noise Estimation Tuned for PGN Blind De-Noising

Figure 12 shows the average SSIM and PSNR values of different PGN estimators combined with VST-BM3D for blind de-noising at different noise parameters. The proposed PGN estimator combined with VST-BM3D achieves the largest SSIM and PSNR values at all noise parameters and is superior to LPCA, demonstrating that the proposed PGN estimator can improve the performance of PGN blind de-noising. The above experiments show that the proposed AWGN and PGN estimators not only work stably for various scene images with different noise parameters, but also improve the performance of blind de-noising.

Figure 12.

SSIM and PSNR comparison results of different PGN estimators with VST-BM3D for blind de-noising on the 134 selected images at different noise parameters. (a) Comparison results of SSIM; (b) comparison results of PSNR.

5. Conclusions and Future Work

A novel method based on the properties of LGSE and HLMAD is presented in this paper to estimate the AWGN and PGN parameters of an image sensor at different noise levels. Using the LGSE map, the proposed method can select homogeneous blocks from an image with complex textures and strong noise. The local noise variances of the selected homogeneous blocks are computed efficiently by the HLMAD, and the noise parameters are then estimated accurately by the MLE. Extensive experiments verify that the proposed AWGN and PGN estimation algorithms estimate more accurately than the compared state-of-the-art methods, including FNVE, MAD, VarMode, DCT, NOLSE, PPCA and EstV for the AWGN model, and CPG, RCF, SPGN and LPCA for the PGN model. The experimental results also demonstrate that the proposed method works stably for various scene images at different noise levels and can improve the performance of blind de-noising. In future work, we plan to combine the proposed noise estimators with recent de-noising methods to develop a novel blind de-noising algorithm that removes image sensor noise while effectively preserving fine details in real noisy scene images.

Author Contributions

Y.L. and Z.L. proposed the noise estimation method. Y.L. conducted the experiments and wrote the manuscript under the supervision of Z.L. and B.Q. K.W., W.X. and J.Y. assisted in the database selection and performed the comparison experiments. All the authors have read and revised the final manuscript.

Funding

This work was partially supported by the National Natural Science Foundation of China under Grant No. 61675036, the Chongqing Research Program of Basic Research and Frontier Technology under Grant No. CSTC2016JCYJA0193, the Chinese Academy of Sciences Key Laboratory of Beam Control Fund under Grant No. 2017LBC006, and the Fundamental Research Funds for the Central Universities under Grant No. 2018CDGFTX0016.

Acknowledgments

We would like to express our thanks to anonymous reviewers for their valuable and insightful suggestions, which helped us to improve the quality of this paper.

Conflicts of Interest

The authors declare no conflict of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript, or in the decision to publish the results.

References

1. Riutort-Mayol, G.; Marques-Mateu, A.; Segui, A.E.; Lerma, J.L. Grey level and noise evaluation of a Foveon X3 image sensor: A statistical and experimental approach. Sensors 2012, 12, 10339–10368.

2. Rakhshanfar, M.; Amer, M.A. Estimation of Gaussian, Poissonian-Gaussian, and Processed Visual Noise and its level function. IEEE Trans. Image Process. 2016, 25, 4172–4185.

3. Bosco, A.; Battiato, S.; Bruna, A.; Rizzo, R. Noise reduction for CFA image sensors exploiting HVS behaviour. Sensors 2009, 9, 1692–1713.

4. Reibel, Y.; Jung, M.; Bouhifd, M.; Cunin, B.; Draman, C. CCD or CMOS camera noise characterisation. Eur. Phys. J. Appl. Phys. 2002, 21, 75–80.

5. Irie, K.; McKinnon, A.E.; Unsworth, K.; Woodhead, I.M. A technique for evaluation of CCD video-camera noise. IEEE Trans. Circuits Syst. Video Technol. 2008, 18, 280–284.

6. Irie, K.; McKinnon, A.E.; Unsworth, K.; Woodhead, I.M. A model for measurement of noise in CCD digital-video cameras. Meas. Sci. Technol. 2008, 19, 045207.

7. Faraji, H.; MacLean, W.J. CCD noise removal in digital images. IEEE Trans. Image Process. 2006, 15, 2676–2685.

8. Liu, C.; Szeliski, R.; Kang, S.B.; Zitnick, C.L.; Freeman, W.T. Automatic estimation and removal of noise from a single image. IEEE Trans. Pattern Anal. Mach. Intell. 2008, 30, 299–314.

9. Immerkær, J. Fast noise variance estimation. Comput. Vis. Image Underst. 1996, 64, 300–302.

10. Yang, S.-C.; Yang, S.M. A fast method for image noise estimation using Laplacian operator and adaptive edge detection. In Proceedings of the 3rd International Symposium on Communications, Control and Signal Processing, St Julians, Malta, 12–14 March 2008.

11. Laligant, O.; Truchetet, F.; Fauvet, E. Noise estimation from digital step-model signal. IEEE Trans. Image Process. 2013, 22, 5158–5167.

12. Zoran, D.; Weiss, Y. Scale invariance and noise in natural images. In Proceedings of the IEEE 12th International Conference on Computer Vision, Kyoto, Japan, 29 September–2 October 2009.

13. Lyu, S.; Pan, X.; Zhang, X. Exposing region splicing forgeries with blind local noise estimation. Int. J. Comput. Vis. 2014, 110, 202–221.

14. Dong, L.; Zhou, J.; Tang, Y.Y. Noise level estimation for natural images based on scale-invariant kurtosis and piecewise stationarity. IEEE Trans. Image Process. 2017, 26, 1017–1030.

15. Donoho, D.L.; Johnstone, I.M. Ideal spatial adaptation by wavelet shrinkage. Biometrika 1994, 81, 425–455.

16. Donoho, D.L. De-noising by soft-thresholding. IEEE Trans. Inf. Theory 1995, 41, 613–627.

17. Pyatykh, S.; Hesser, J.; Zheng, L. Image noise level estimation by principal component analysis. IEEE Trans. Image Process. 2013, 22, 687–699.

18. Liu, X.; Tanaka, M.; Okutomi, M. Single-image noise level estimation for blind denoising. IEEE Trans. Image Process. 2013, 22, 5226–5237.

19. Yang, J.; Wu, Z.; Hou, C. Estimation of signal-dependent sensor noise via sparse representation of noise level functions. In Proceedings of the 19th IEEE International Conference on Image Processing, 30 September–3 October 2012; pp. 673–676.

20. Yang, J.; Gan, Z.; Wu, Z.; Hou, C. Estimation of signal-dependent noise level function in transform domain via a sparse recovery model. IEEE Trans. Image Process. 2015, 24, 1561–1572.

21. Wu, C.-H.; Chang, H.-H. Superpixel-based image noise variance estimation with local statistical assessment. EURASIP J. Image Video Process. 2015, 2015, 38.

22. Fu, P.; Li, C.; Cai, W.; Sun, Q. A spatially cohesive superpixel model for image noise level estimation. Neurocomputing 2017, 266, 420–432.

23. Zhu, S.C.; Wu, Y.N.; Mumford, D. Minimax entropy principle and its application to texture modeling. Neural Comput. 1997, 9, 1627–1660.

24. Pham, T.D. The Kolmogorov–Sinai entropy in the setting of fuzzy sets for image texture analysis and classification. Pattern Recognit. 2016, 53, 229–237.

25. Yin, S.; Qian, Y.; Gong, M. Unsupervised hierarchical image segmentation through fuzzy entropy maximization. Pattern Recognit. 2017, 68, 245–259.

26. Shakoor, M.H.; Tajeripour, F. Noise robust and rotation invariant entropy features for texture classification. Multimed. Tools Appl. 2016, 76, 8031–8066.

27. Asadi, H.; Seyfe, B. Signal enumeration in Gaussian and non-Gaussian noise using entropy estimation of eigenvalues. Digit. Signal Process. 2018, 78, 163–174.

28. Foi, A.; Trimeche, M.; Katkovnik, V.; Egiazarian, K. Practical Poissonian-Gaussian noise modeling and fitting for single-image raw-data. IEEE Trans. Image Process. 2008, 17, 1737–1754.

29. Zabrodina, V.; Abramov, S.K.; Lukin, V.V.; Astola, J.; Vozel, B.; Chehdi, K. Blind estimation of mixed noise parameters in images using robust regression curve fitting. In Proceedings of the 19th European Signal Processing Conference, Barcelona, Spain, 29 August–2 September 2011; pp. 1135–1139.

30. Lee, S.; Lee, M.S.; Kang, M.G. Poisson-Gaussian noise analysis and estimation for low-dose X-ray images in the NSCT domain. Sensors 2018, 18, 1019.

31. Lee, M.S.; Park, S.W.; Kang, M.G. Denoising algorithm for CFA image sensors considering inter-channel correlation. Sensors 2017, 17, 1236.

32. Zheng, L.; Jin, G.; Xu, W.; Qu, H.; Wu, Y. Noise Model of a Multispectral TDI CCD imaging system and its parameter estimation of piecewise weighted least square fitting. IEEE Sens. J. 2017, 17, 3656–3668.

33. Dong, L.; Zhou, J.; Tang, Y.Y. Effective and fast estimation for image sensor noise via constrained weighted least squares. IEEE Trans. Image Process. 2018, 27, 2715–2730.

34. Azzari, L.; Foi, A. Indirect estimation of signal-dependent noise with nonadaptive heterogeneous samples. IEEE Trans. Image Process. 2014, 23, 3459–3467.

35. Liu, X.; Tanaka, M.; Okutomi, M. Practical signal-dependent noise parameter estimation from a single noisy image. IEEE Trans. Image Process. 2014, 23, 4361–4371.

36. Bruhn, J.; Lehmann, L.E.; Röpcke, H.; Bouillon, T.W.; Hoeft, A. Shannon entropy applied to the measurement of the electroencephalographic effects of desflurane. Anesthesiology 2001, 95, 30–35.

37. Standard Kodak PCD0992 Test Images. Available online: http://r0k.us/graphics/kodak/ (accessed on 6 March 2018).

38. Martin, D.R.; Fowlkes, C.; Tal, D.; Malik, J. A database of human segmented natural images and its application to evaluating segmentation algorithms and measuring ecological statistics. In Proceedings of the 9th International Conference on Computer Vision, Vancouver, BC, Canada, 7–14 July 2001.

39. Khalil, H.H.; Rahmat, R.O.K.; Mahmoud, W.A. Estimation of noise in gray-scale and colored images using median absolute deviation (MAD). In Proceedings of the 3rd International Conference on Geometric Modeling and Imaging, London, UK, 9–11 July 2008; pp. 92–97.

40. Aja-Fernández, S.; Vegas-Sánchez-Ferrero, G.; Martín-Fernández, M.; Alberola-López, C. Automatic noise estimation in images using local statistics. Additive and multiplicative cases. Image Vis. Comput. 2009, 27, 756–770.

41. Jeong, B.G.; Kim, B.C.; Moon, Y.H.; Eom, I.K. Simplified noise model parameter estimation for signal-dependent noise. Signal Process. 2014, 96, 266–273.

42. Dabov, K.; Foi, A.; Katkovnik, V.; Egiazarian, K. Image denoising by sparse 3-D transform-domain collaborative filtering. IEEE Trans. Image Process. 2007, 16, 2080–2095.

43. Makitalo, M.; Foi, A. Optimal inversion of the generalized Anscombe transformation for Poisson-Gaussian noise. IEEE Trans. Image Process. 2013, 22, 91–103.

44. Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).