An Improved Unauthorized Unmanned Aerial Vehicle Detection Algorithm Using Radiofrequency-Based Statistical Fingerprint Analysis

Abstract

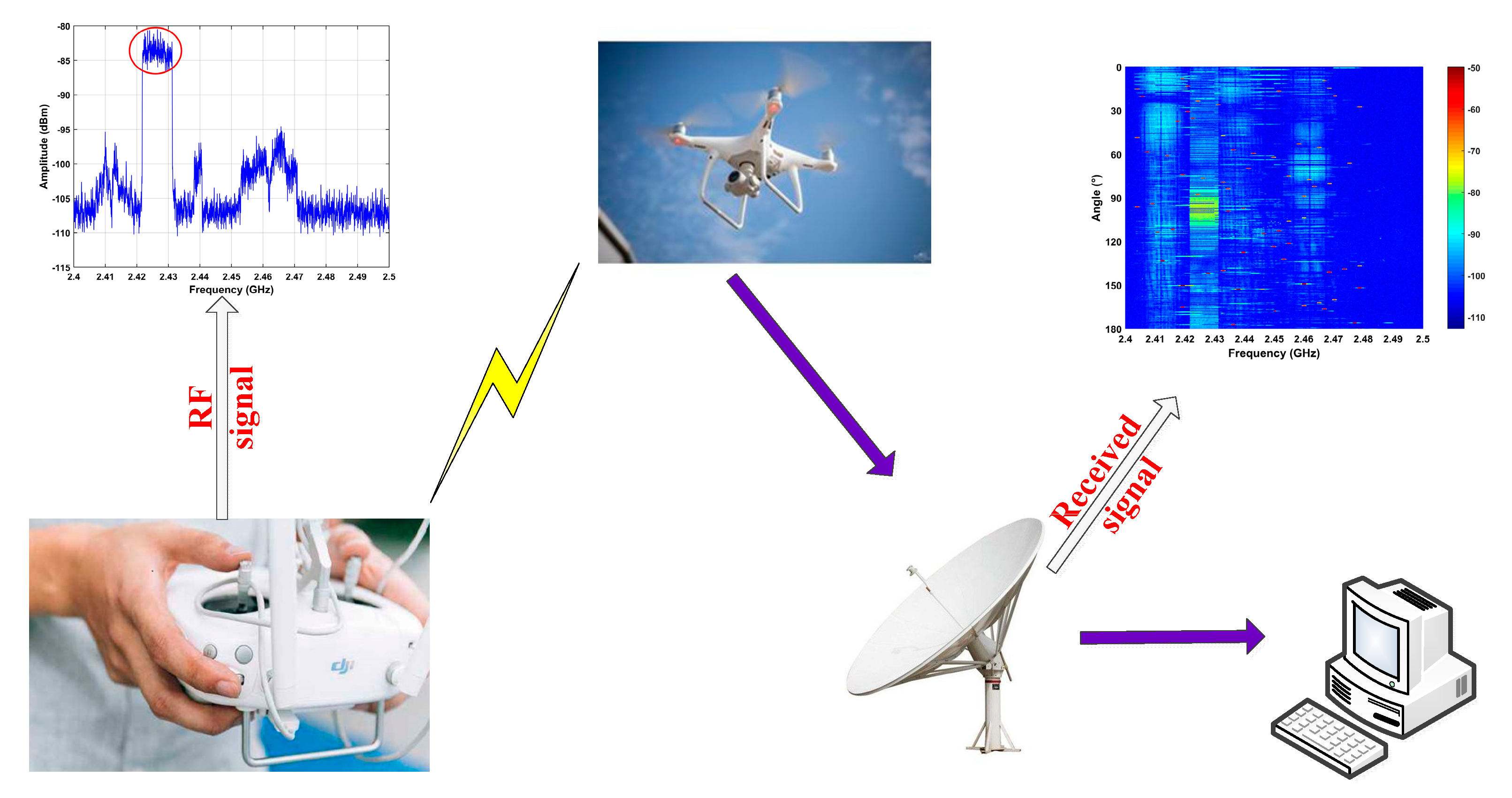

1. Introduction

- (1) Spectrum accumulation (SA) and statistical fingerprint analysis (SFA) techniques are used to provide frequency estimates of RF signals. These estimates are used to determine whether a UAV is present in the detection environment.

- (2) A region of interest (ROI) is defined to reduce the data size, improve system efficiency, and provide accurate azimuth estimates.

- (3) The performance of the proposed algorithm is compared with that of several well-known techniques from the literature. Further, the ability of the proposed algorithm to detect multiple UAVs is evaluated.
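Contribution (1) can be illustrated with a minimal sketch. It assumes the received data are arranged as a matrix of spectral magnitudes with one row per azimuth step and one column per frequency bin. The function names, the tolerance value, and the rule that a UAV is declared when the two estimates agree are illustrative assumptions, not the authors' implementation.

```python
from statistics import pstdev


def argmax(values):
    """Return the index of the largest element (first one on ties)."""
    return max(range(len(values)), key=values.__getitem__)


def estimate_frequencies(spectra, freqs_ghz):
    """Return (tau, beta, delta) for an azimuth-by-frequency magnitude matrix.

    spectra[i][n] is the magnitude at azimuth step i and frequency bin n
    (assumed layout). tau is the SA estimate, beta the SFA estimate, and
    delta the absolute difference between them.
    """
    columns = list(zip(*spectra))                 # one tuple per frequency bin
    accumulated = [sum(col) for col in columns]   # SA: sum over azimuth steps
    spread = [pstdev(col) for col in columns]     # SFA: std over azimuth steps
    tau = freqs_ghz[argmax(accumulated)]
    beta = freqs_ghz[argmax(spread)]
    return tau, beta, abs(beta - tau)


def uav_present(delta, tolerance_ghz=0.001):
    """Hypothetical decision rule: accept when the two estimates agree."""
    return delta <= tolerance_ghz


# Synthetic example: flat background plus one emitter seen at a single azimuth.
freqs = [round(2.40 + 0.01 * k, 2) for k in range(11)]
spectra = [[1.0] * len(freqs) for _ in range(5)]
spectra[2][6] = 9.0
tau, beta, delta = estimate_frequencies(spectra, freqs)
print(tau, beta, uav_present(delta))
```

In this toy setup, a source that is equally strong at every azimuth raises the accumulated spectrum at its bin but leaves the standard deviation there at zero, so the two estimates diverge and the hypothetical rule rejects the detection.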

2. System Model

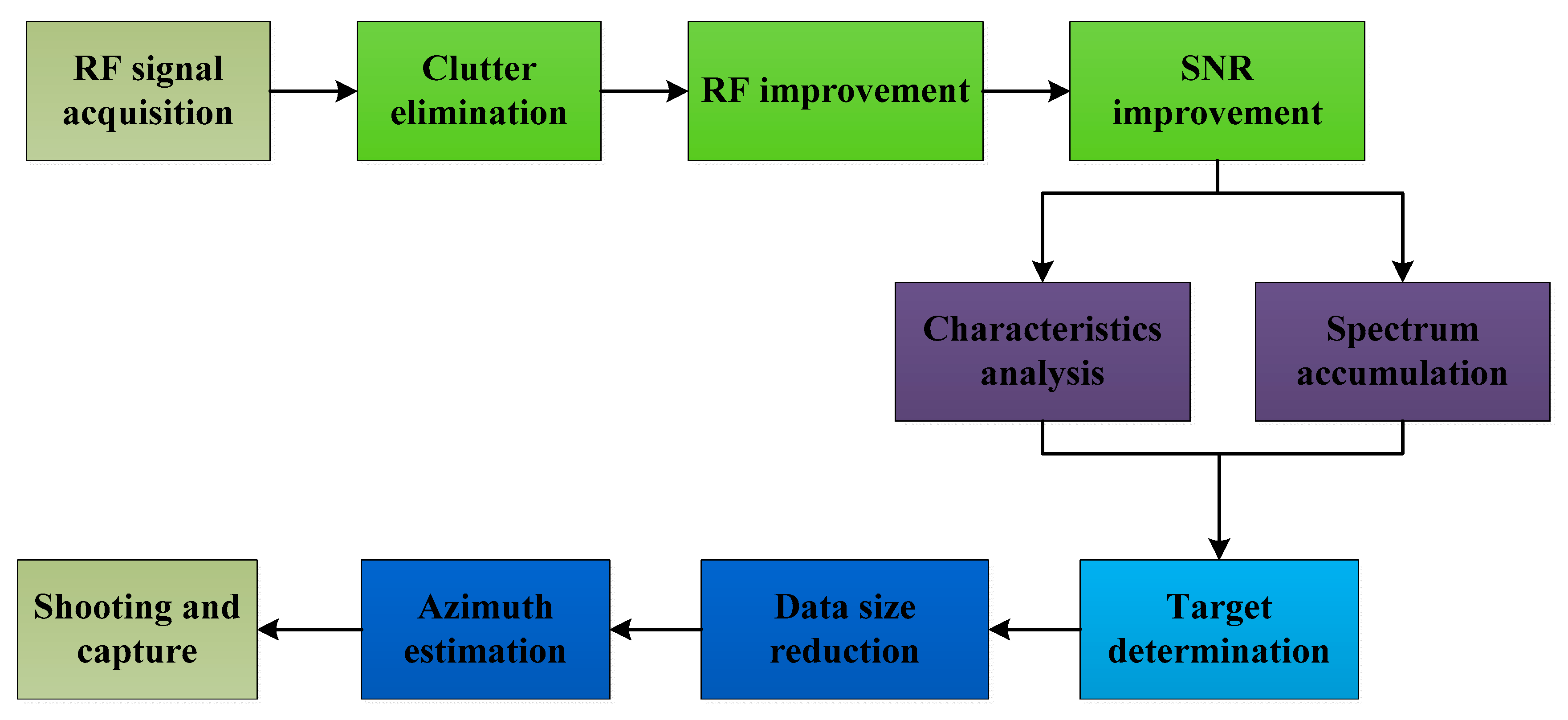

3. Proposed Method

3.1. Clutter Elimination

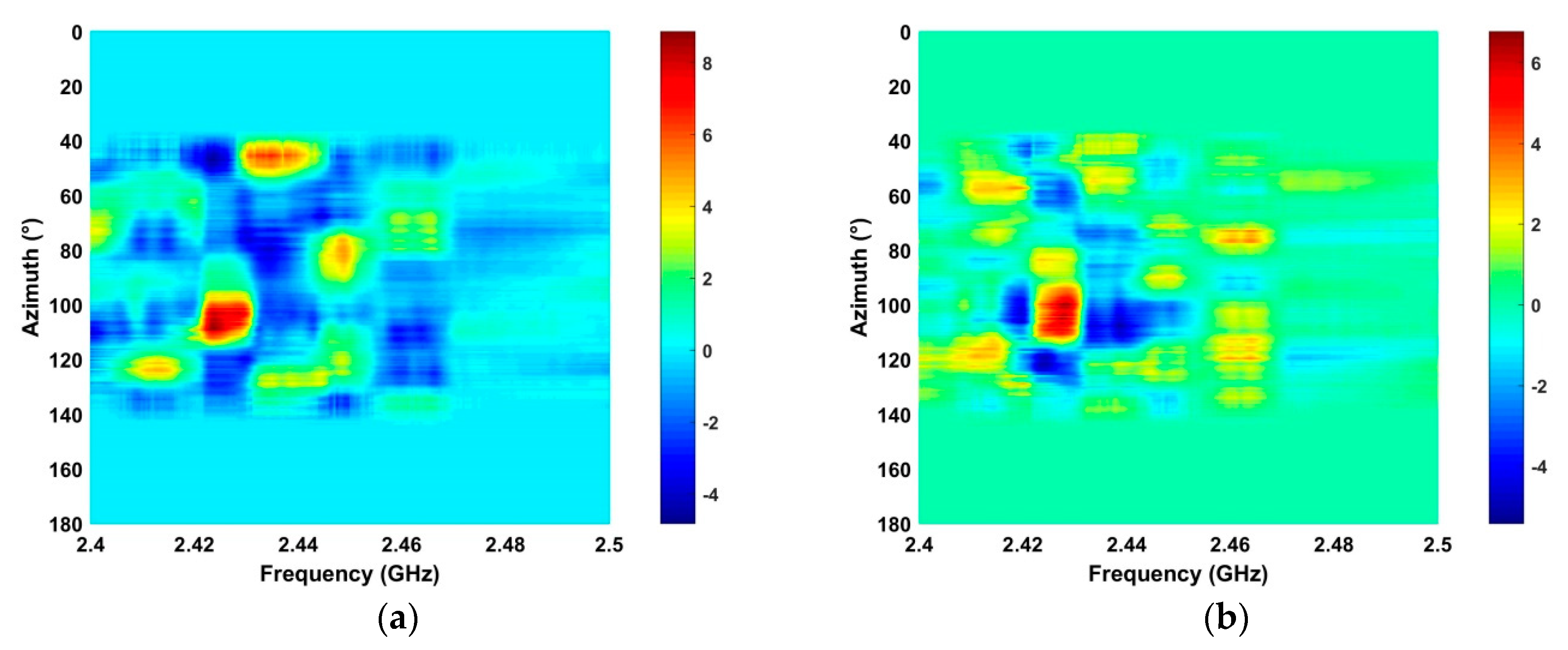

3.2. Signal Improvement

3.3. SNR Improvement

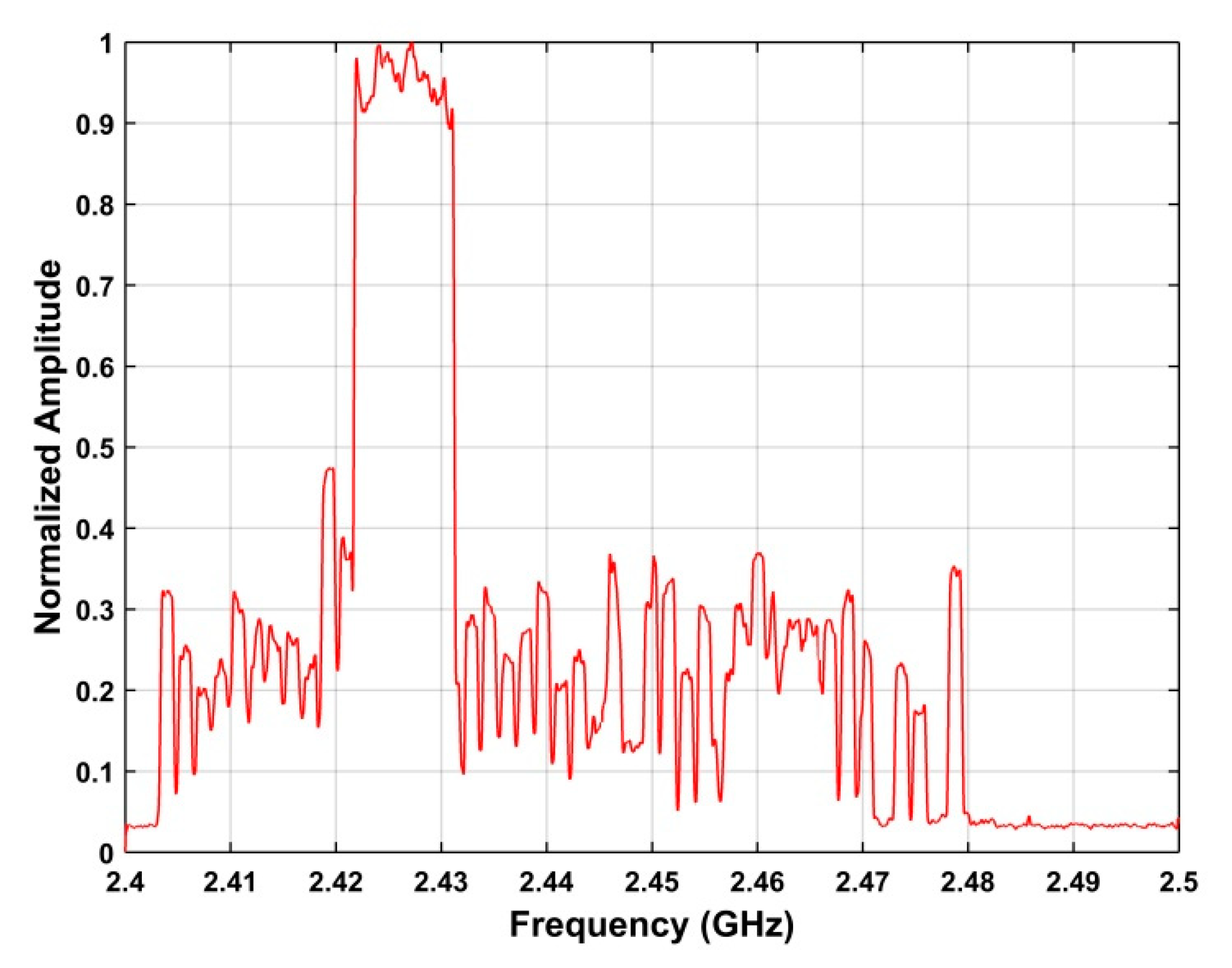

3.4. Spectrum Accumulation

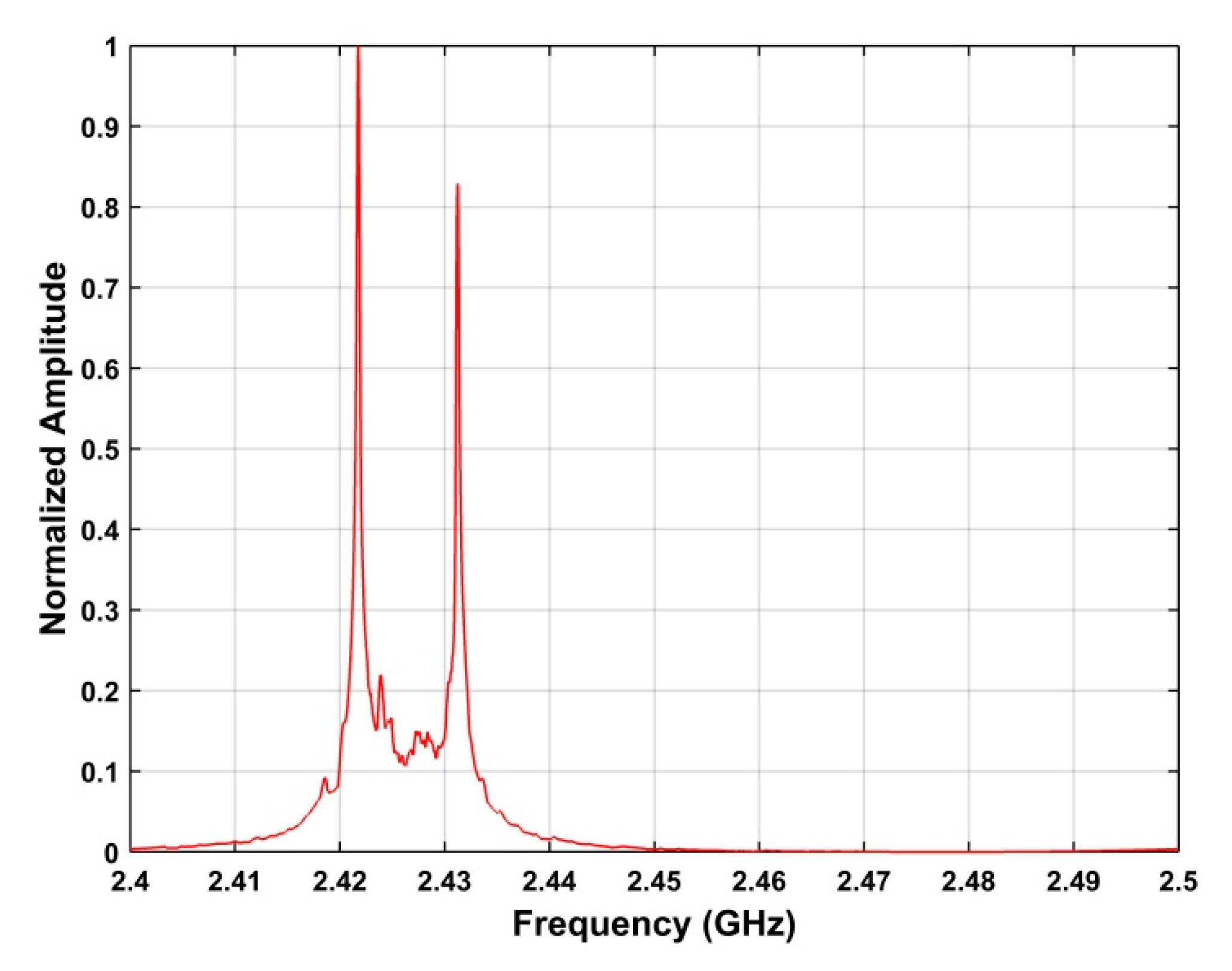

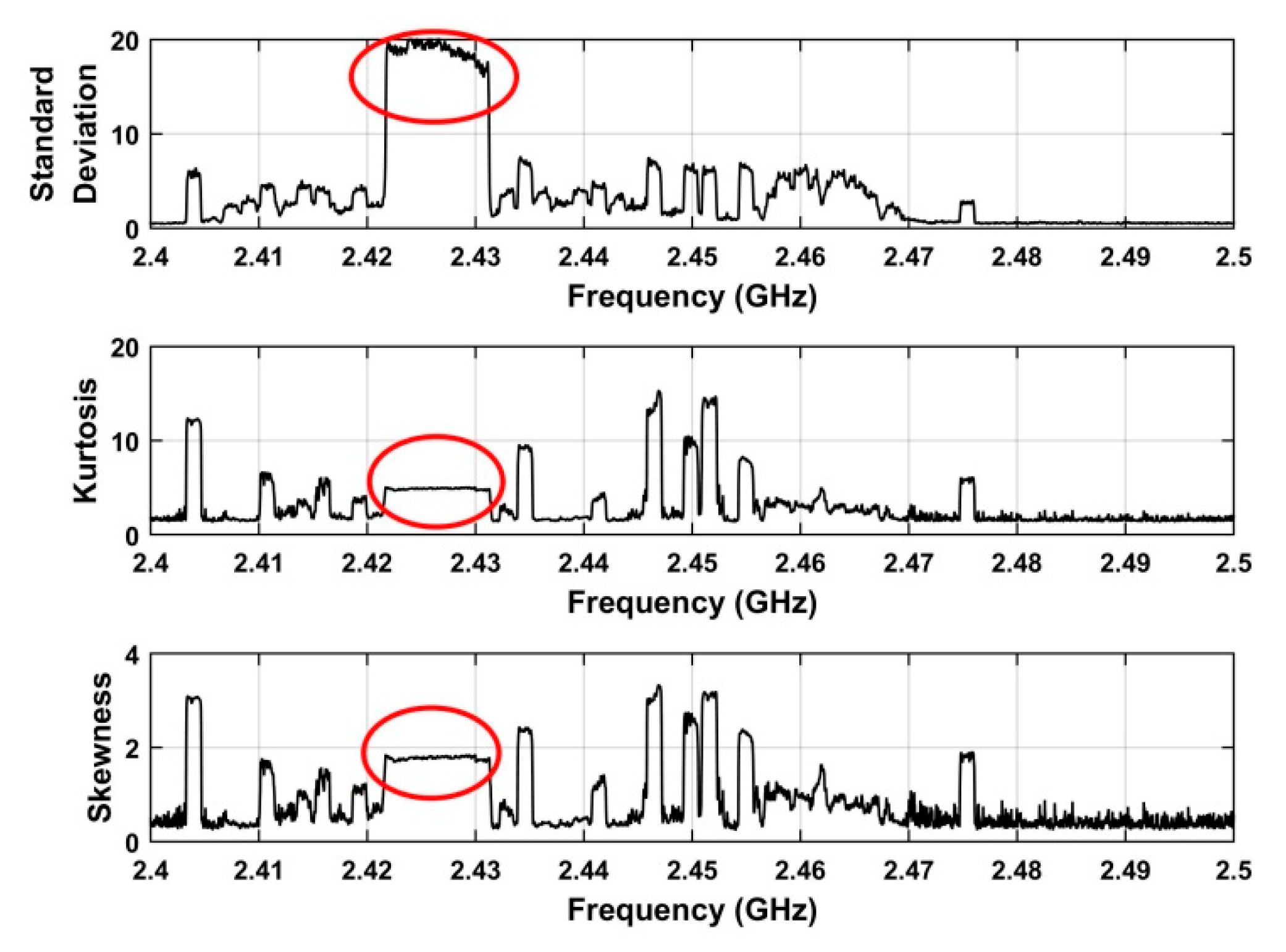

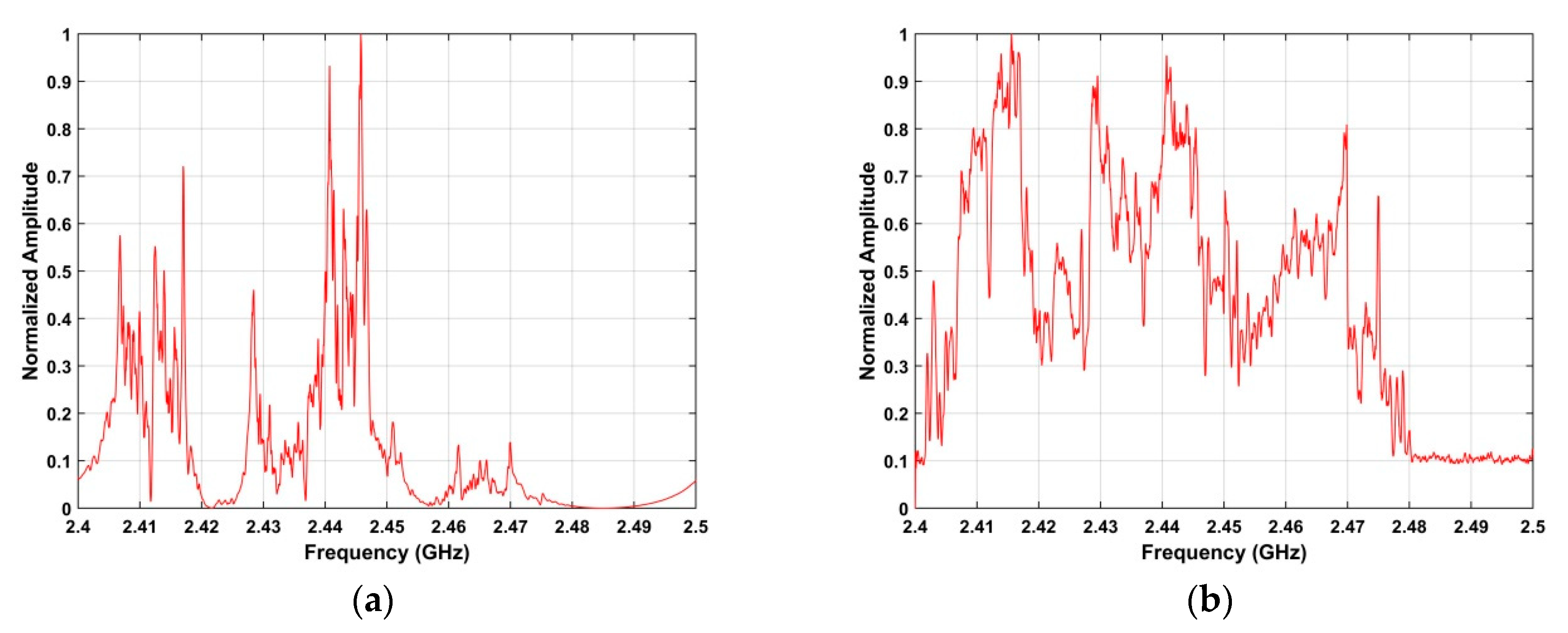

3.5. Statistical Fingerprint Analysis

3.6. UAV Determination

3.7. Data Size Reduction

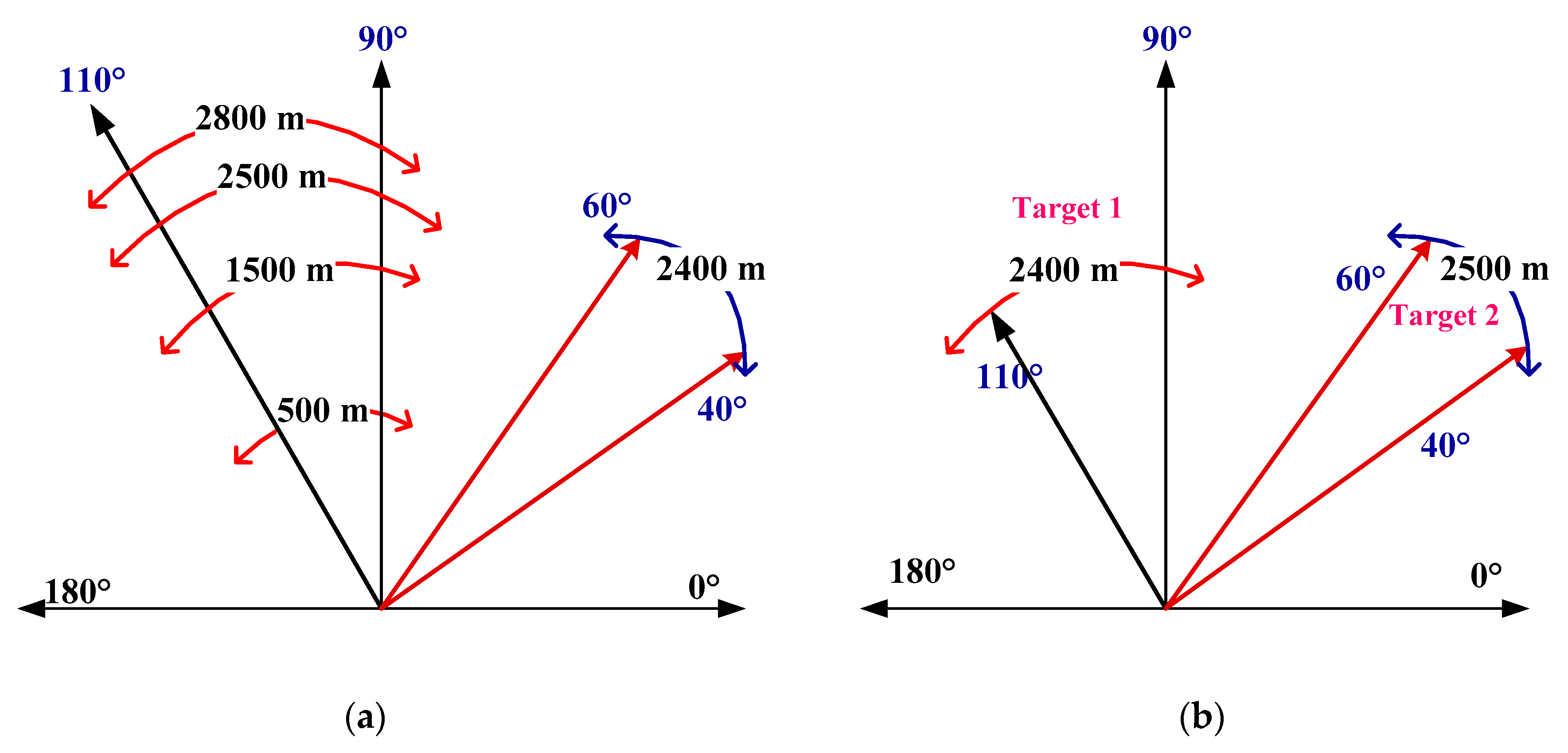

3.8. Azimuth Estimation

4. Results and Discussion

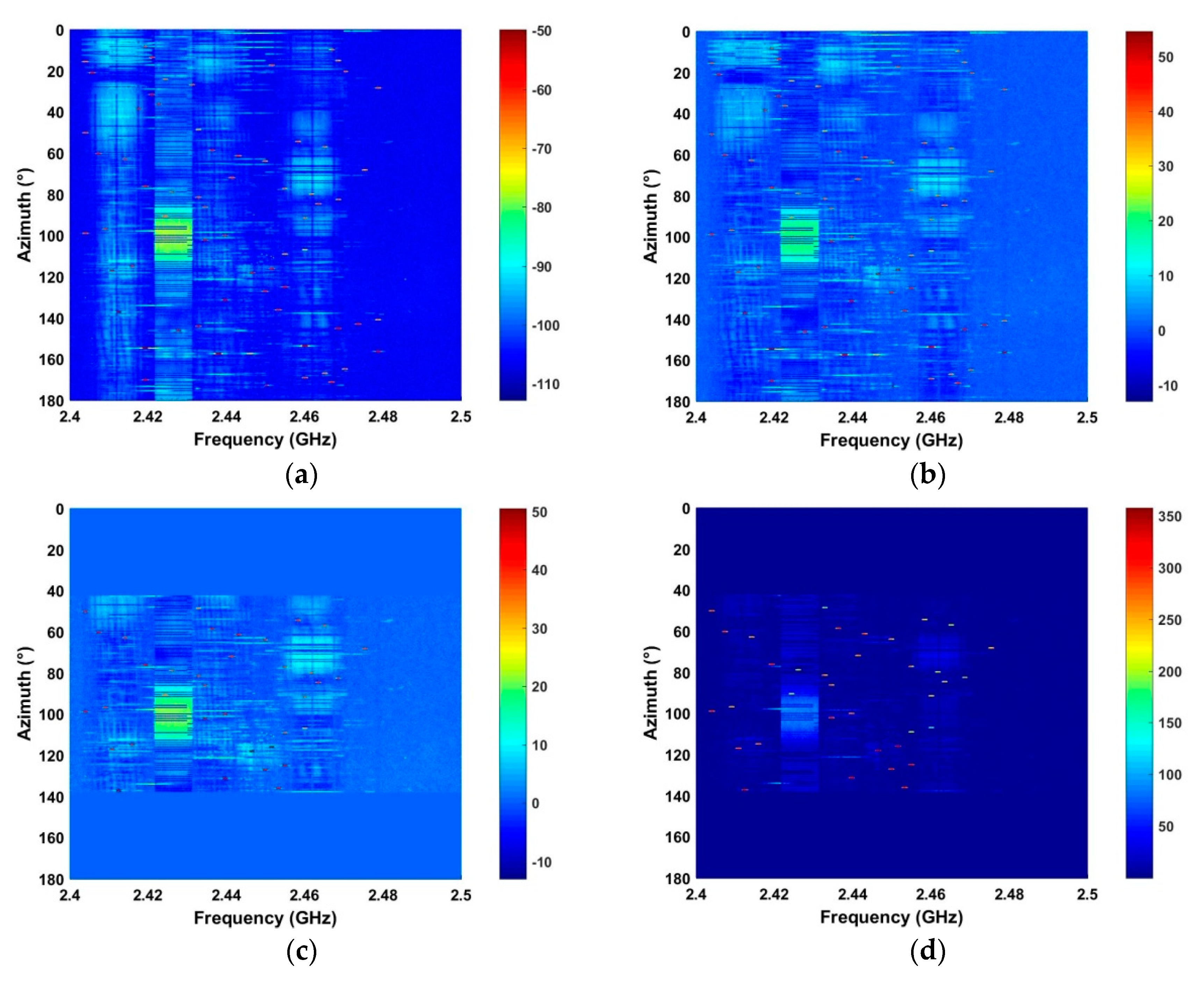

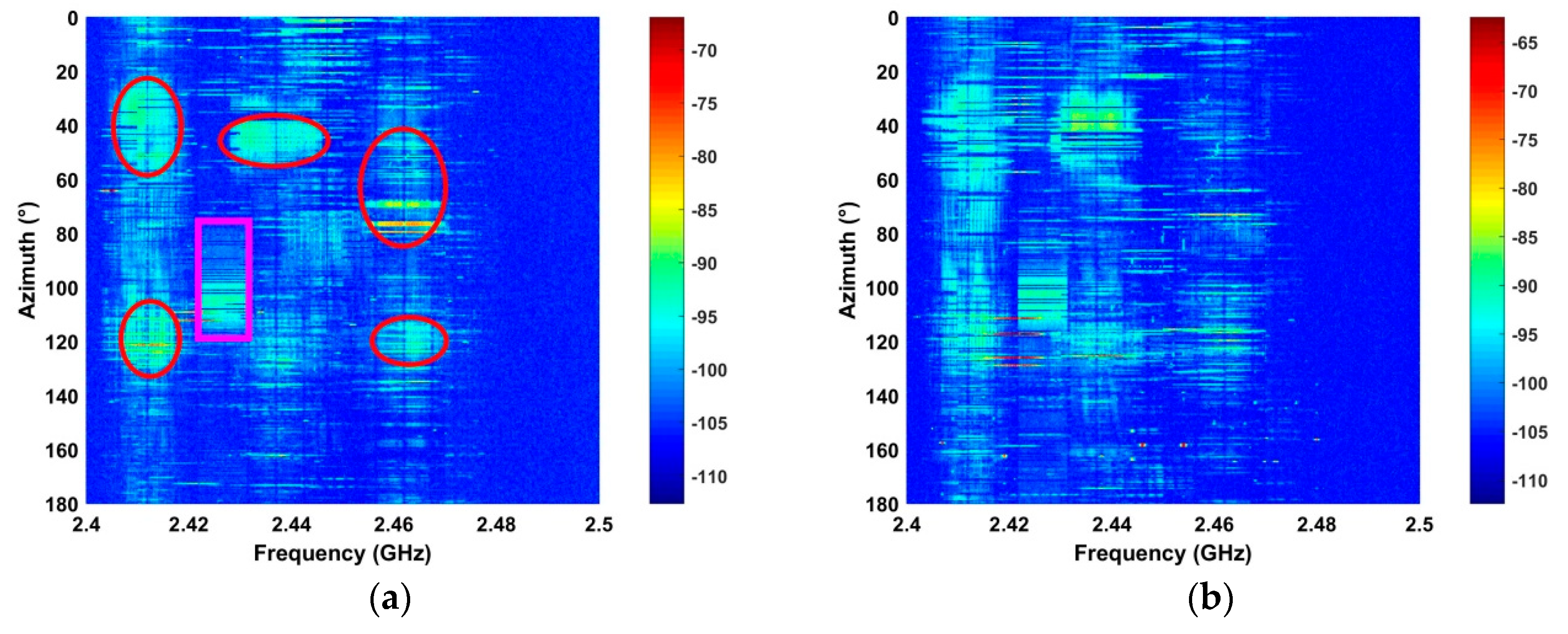

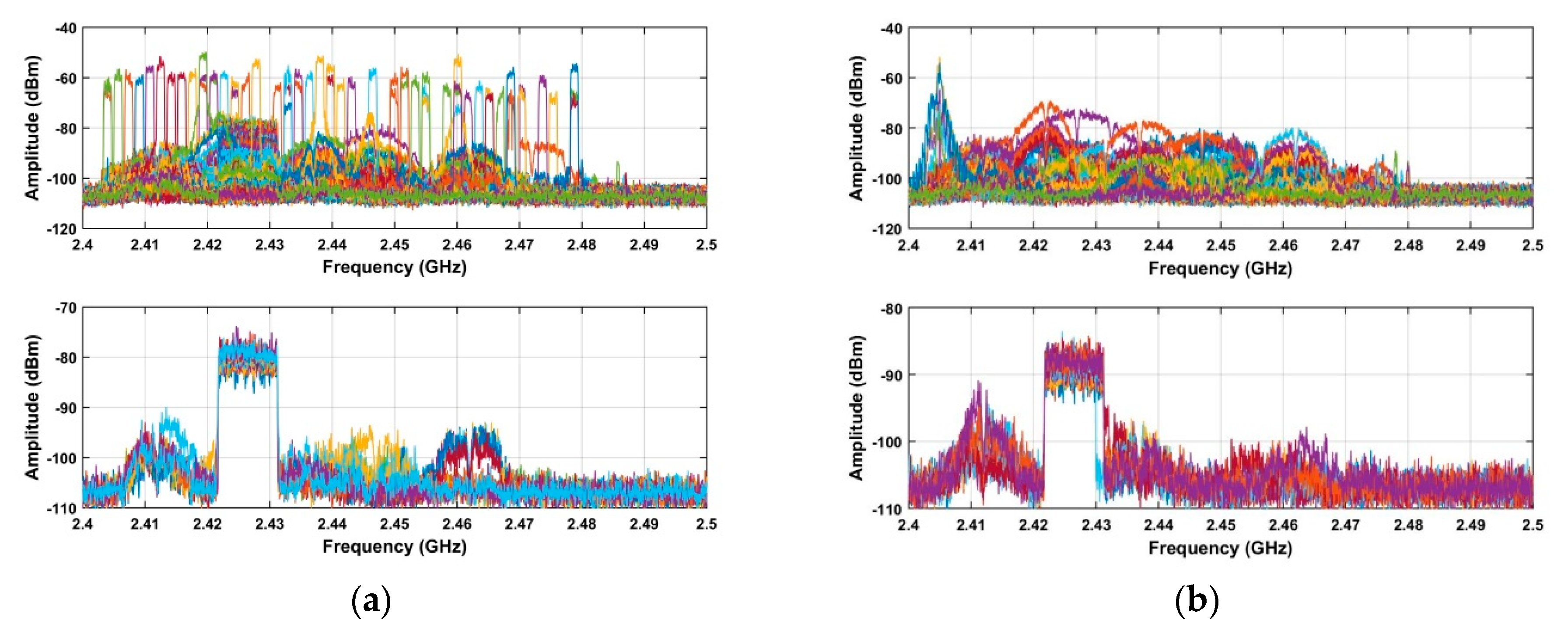

4.1. Clutter Elimination

4.2. Detection Performance in Strong Interference

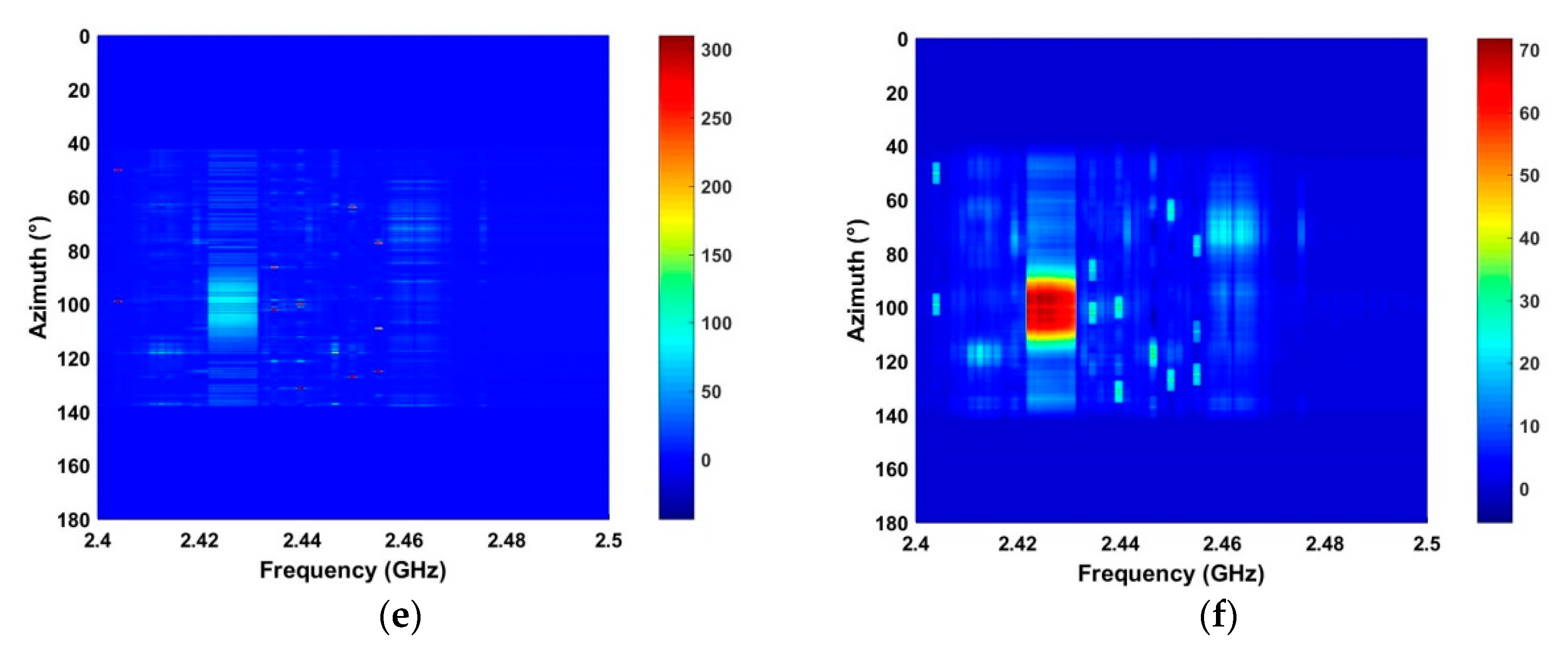

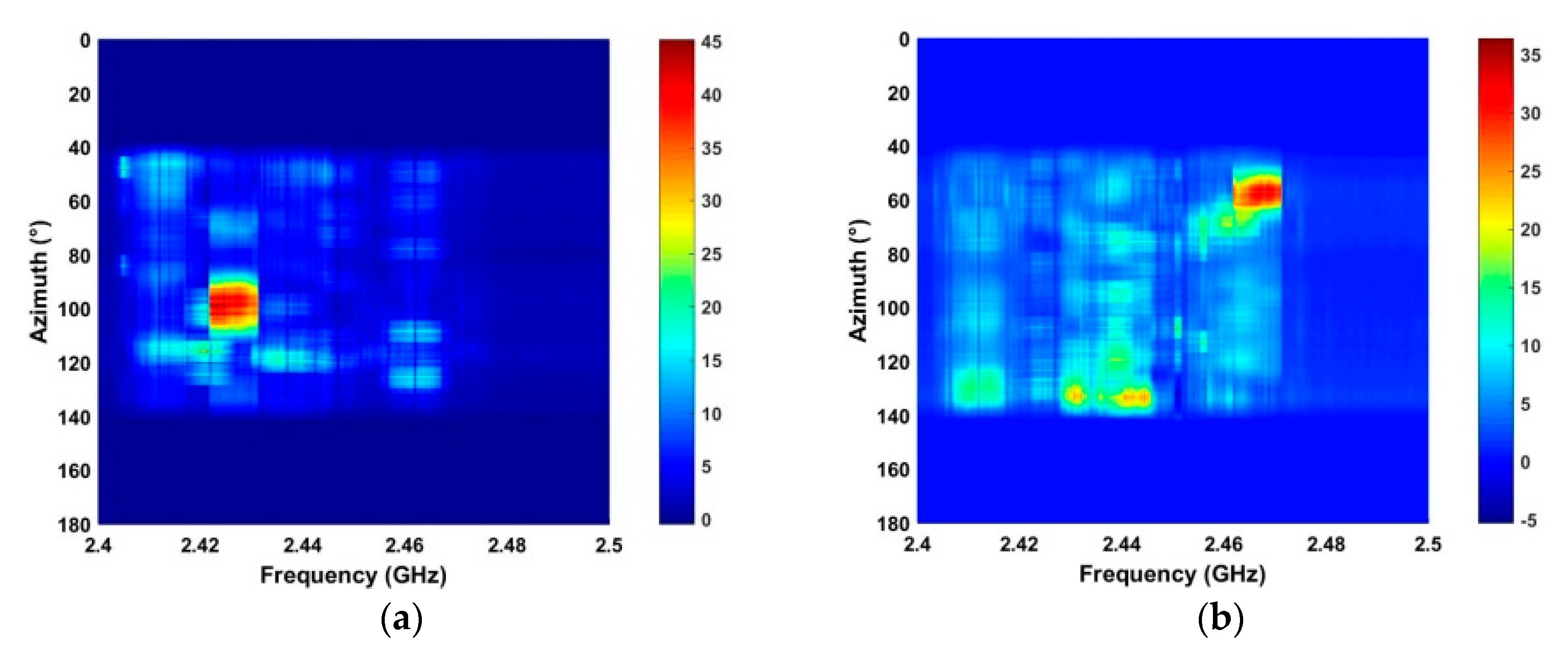

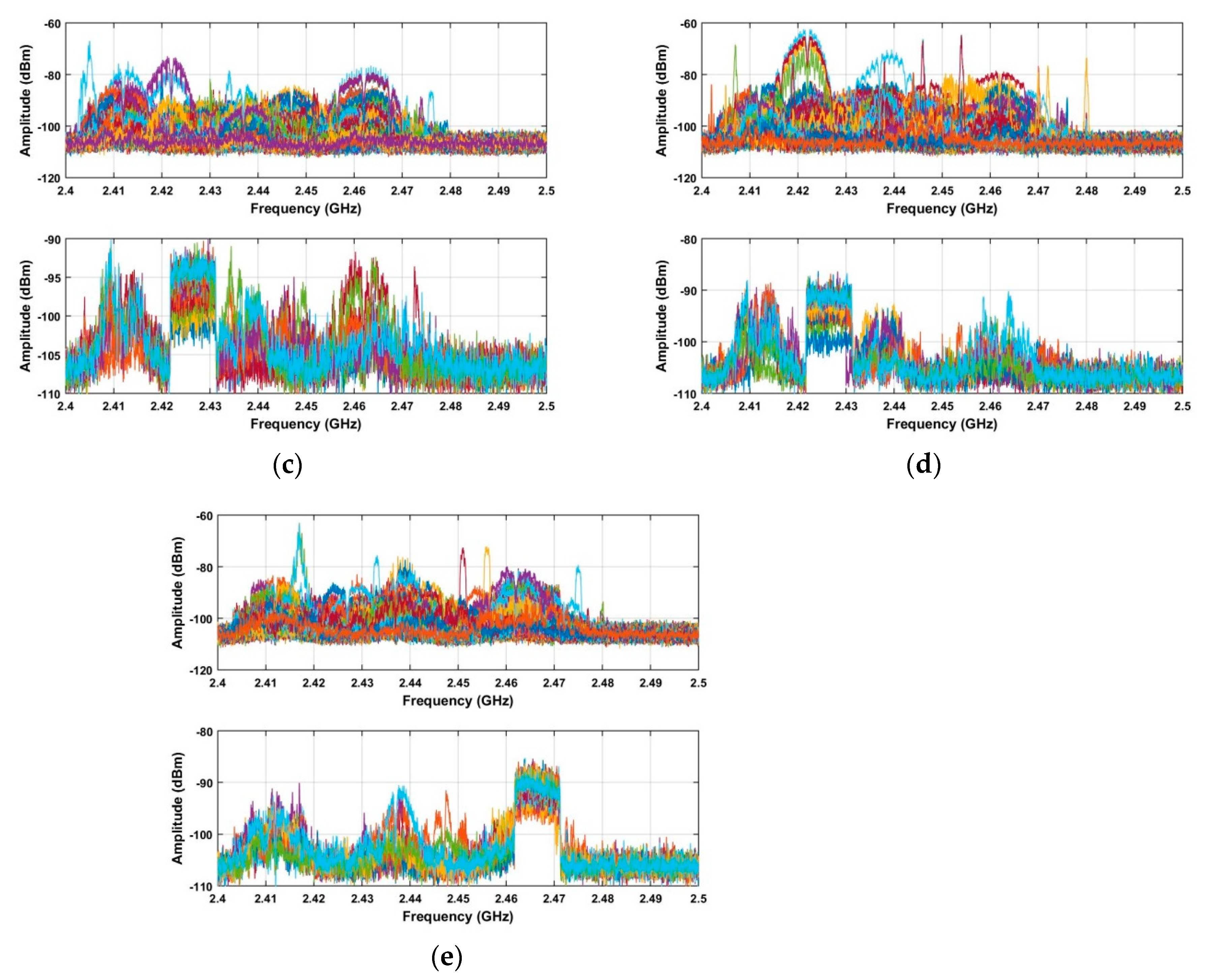

4.3. Frequency and Azimuth Estimation

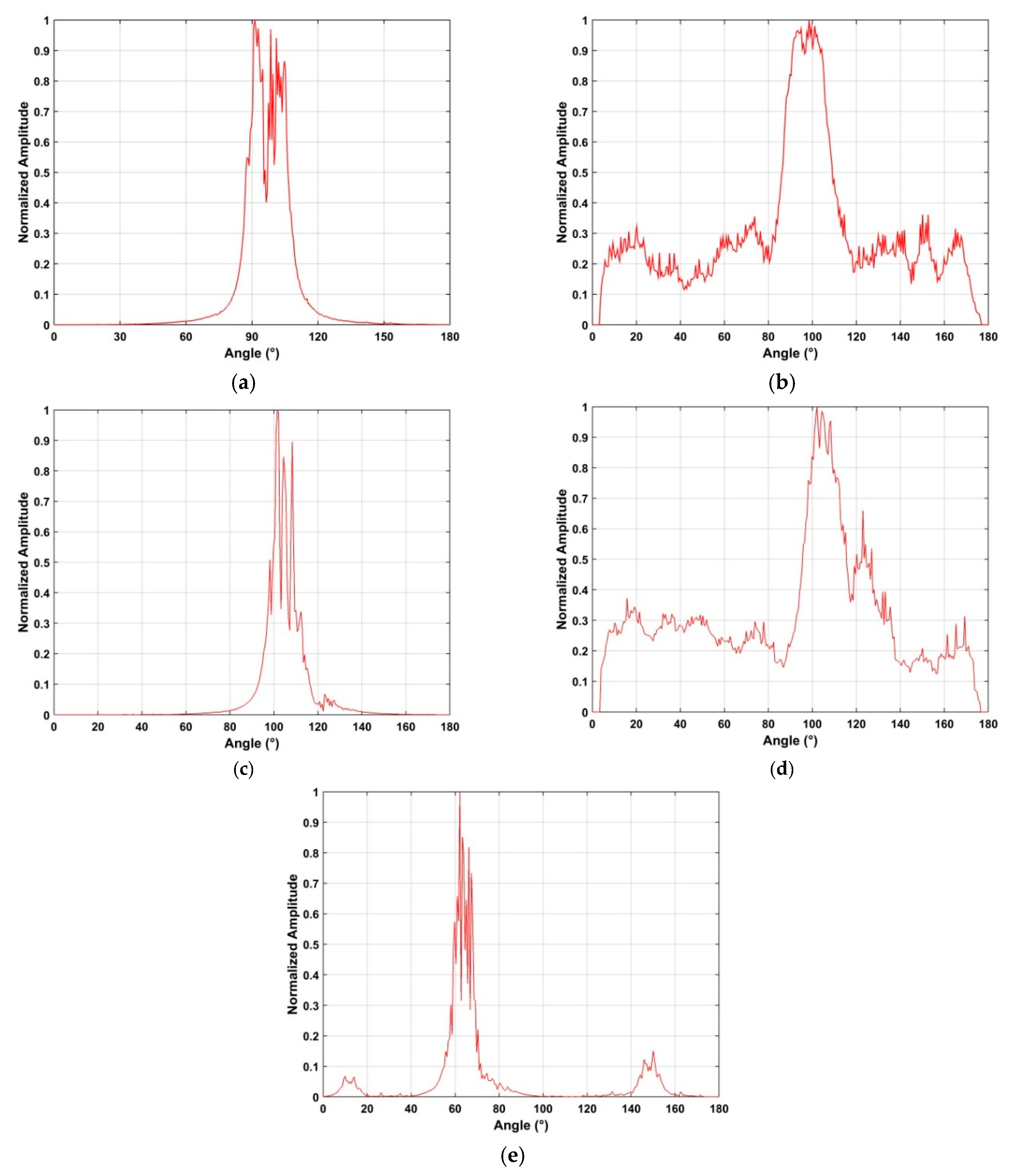

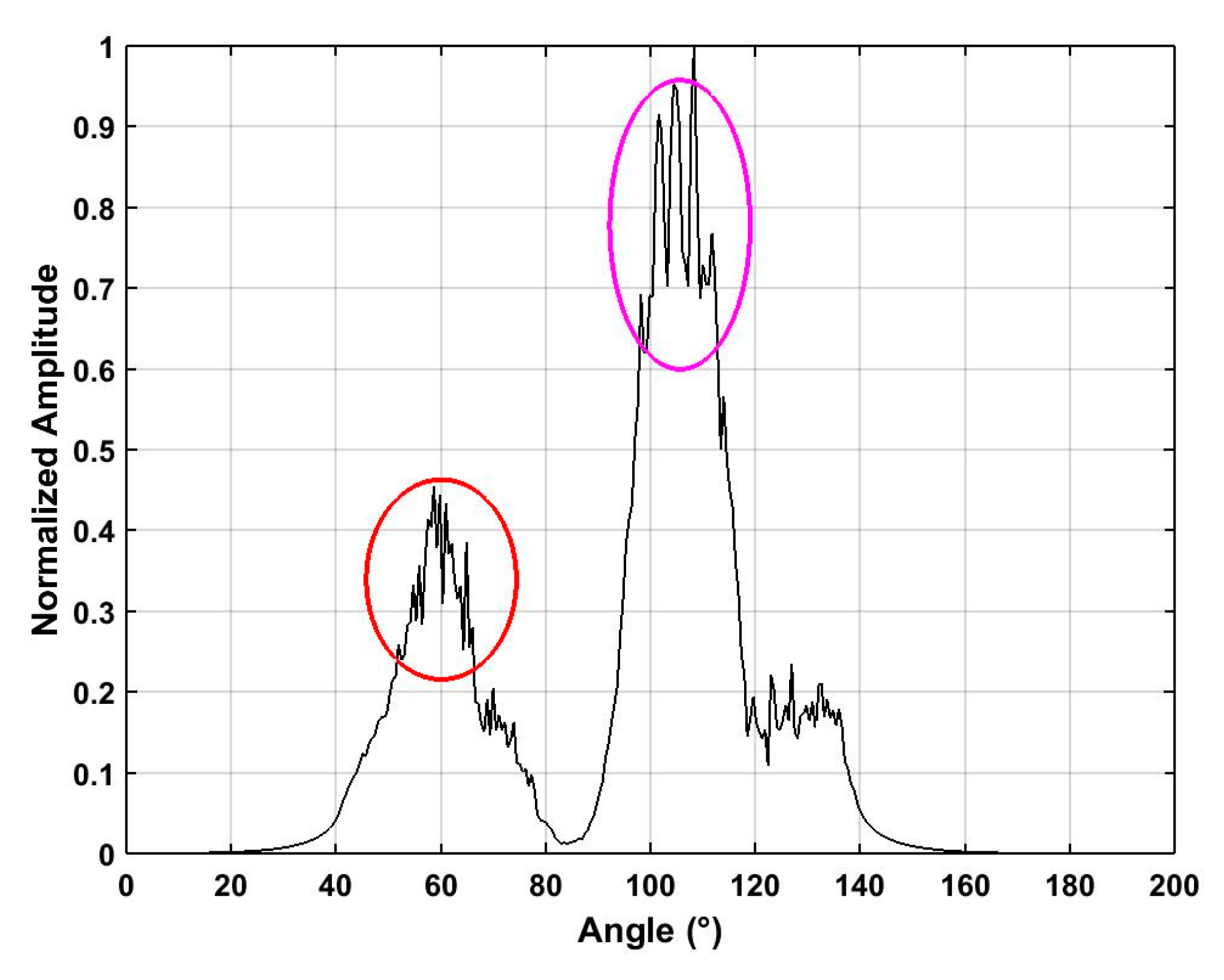

4.4. Detection of Multiple UAVs

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Appendix A

| Variable | Description |
|---|---|
| gmax | maximum gain |
| gnorm[i, n] | normalized gain |
| gmin[i, n] | minimum gain |
| e[i, n] | RF signal power in a window of length w |
| S | diagonal matrix |
| UM×M | unitary matrix |
| VN×N | unitary matrix |
| σi | singular values |
| Mk | kth intrinsic image |
| MUAV | effective RF signals |
| Mnoise | noise |
| n | the number of selected singular values |
| α | index of the peak in P |
| ω | frequency value in the range 2.4 GHz–2.5 GHz |
| | standard deviation of I in the frequency domain |
| υ | index of the peak in |
| β | frequency estimate using the SFA method |
| τ | frequency estimate using the SA method |
| δ | error in the two frequency estimates |
| K | standard deviation of the signals in the ROI in the azimuth direction |
| κ | index of the peak in K |
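The roles of K and κ in the table, together with the ROI of contribution (2), can be sketched as follows. This is a hedged illustration only. It assumes the ROI is a band of frequency bins centred on the estimated frequency, and that K is indexed by azimuth step, with each entry being the standard deviation of that step's ROI samples. The helper names `select_roi` and `estimate_azimuth` are hypothetical.

```python
from statistics import pstdev


def select_roi(spectra, center_bin, half_width):
    """Keep only the frequency bins within half_width of center_bin."""
    lo = max(0, center_bin - half_width)
    hi = min(len(spectra[0]), center_bin + half_width + 1)
    return [row[lo:hi] for row in spectra]


def estimate_azimuth(roi, azimuths_deg):
    """Return the azimuth at the peak of K, plus the K profile itself.

    K[i] is taken here as the standard deviation of the ROI samples at
    azimuth step i (an assumed reading of the variable table); kappa is
    the index of its peak.
    """
    k_profile = [pstdev(row) for row in roi]
    kappa = max(range(len(k_profile)), key=k_profile.__getitem__)
    return azimuths_deg[kappa], k_profile


# Synthetic example: the emitter is visible only at the fourth azimuth step.
azimuths = [0, 45, 90, 135, 180]
spectra = [[1.0] * 11 for _ in azimuths]
spectra[3][6] = 9.0
roi = select_roi(spectra, center_bin=6, half_width=2)
azimuth, k_profile = estimate_azimuth(roi, azimuths)
print(azimuth)
```

Restricting the computation to the ROI shrinks each row from the full band to a few bins, which is the data-size reduction the list item refers to. The width of the band is a free parameter in this sketch.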

| Parameter | Value |
|---|---|
| Gain | 24 dBi |
| Beamwidth | 10° |
| Frequency range | 2.3 GHz–2.7 GHz |
| Azimuth angle | 0°–180° |
| Receiver dynamic range | 72 dB |

| Method | 2500 m | 2800 m |
|---|---|---|
| Proposed | −25.72 | −29.71 |
| ANN | −38.35 | −43.62 |
| HOC | −31.46 | −35.72 |
| CFAR | −29.57 | −33.78 |

| Method | Estimate | Error at 500 m (MHz) | Error at 1500 m (MHz) | Error at 2400 m (MHz) | Error at 2500 m (MHz) | Error at 2800 m (MHz) |
|---|---|---|---|---|---|---|
| Proposed | τ | 0.5 | 0.5 | 0.1 | 0.5 | 0.5 |
| Proposed | β | 0.5 | 0.5 | 0.1 | 0.5 | 0.5 |
| HOC | | 2.3 | 5.7 | 18 | 15 | 37 |
| CFAR | | 4.5 | 19 | 27 | 69 | 91 |

| Method | Error at 500 m (°) | Error at 1500 m (°) | Error at 2400 m (°) | Error at 2500 m (°) | Error at 2800 m (°) |
|---|---|---|---|---|---|
| Proposed | 3.86 | 5.18 | 3.35 | 4.27 | 7.24 |
| HOC | 11.25 | 19.37 | 34.96 | 8.39 | 12.49 |
| CFAR | 7.68 | 9.24 | 13.86 | 9.27 | 11.24 |

| Method | 500 m | 1500 m | 2400 m | 2500 m | 2800 m |
|---|---|---|---|---|---|
| Proposed method | 100 | 100 | 100 | 90 | 90 |
| HOC | 100 | 90 | 60 | 50 | 50 |
| CFAR | 90 | 90 | 70 | 40 | 40 |

| Method | Parameter | Estimate (2400 m) | Error (2400 m) | Estimate (2500 m) | Error (2500 m) |
|---|---|---|---|---|---|
| Proposed | Frequency (GHz) τ | 2.463 | 0.005 | 2.427 | 0.005 |
| Proposed | Frequency (GHz) β | 2.463 | 0.005 | 2.427 | 0.005 |
| Proposed | Azimuth | 65° | 5° | 112° | 12° |
| ANN | Frequency (GHz) | 2.446 | 0.022 | 2.453 | 0.031 |
| ANN | Azimuth | 30° | 30° | 76° | 34° |
| HOC | Frequency (GHz) | 2.439 | 0.029 | 2.412 | 0.120 |
| HOC | Azimuth | 22° | 38° | 56° | 54° |
| CFAR | Frequency (GHz) | 2.436 | 0.102 | 2.484 | 0.062 |
| CFAR | Azimuth | 37° | 23° | 70° | 40° |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Yang, S.; Qin, H.; Liang, X.; Gulliver, T.A. An Improved Unauthorized Unmanned Aerial Vehicle Detection Algorithm Using Radiofrequency-Based Statistical Fingerprint Analysis. Sensors 2019, 19, 274. https://doi.org/10.3390/s19020274
