Abstract

In maritime scenes, visible light sensors installed on ships have difficulty accurately detecting the sea–sky line (SSL) and nearby ships because of the complex environment and the six-degrees-of-freedom motion of the camera. To address this problem, this paper combines camera and inertial sensor data and proposes a novel maritime target detection algorithm based on camera motion attitude. The algorithm comprises three steps: SSL estimation, SSL detection, and target saliency detection. First, we construct the camera motion attitude model by analyzing the camera’s six-degrees-of-freedom motion at sea and estimate the candidate region (CR) of the SSL. Second, we apply an improved edge detection algorithm and a straight-line fitting algorithm to extract the optimal SSL in the CR. Finally, in the region of ship detection (ROSD), an improved visual saliency detection algorithm is applied to extract the target ships. For the experiments, we built a dataset of the SSL and its nearby ships, recorded aboard a real ship and matched with the camera’s motion attitude data, and verified the effectiveness of each model in the algorithm through comparative experiments. The experimental results show that, compared with other maritime target detection algorithms, the proposed algorithm achieves higher accuracy in detecting the SSL and its nearby ships, and provides reliable technical support for the development of vision systems for unmanned ships.

1. Introduction

In recent years, with the continuous development of artificial intelligence (AI), big data, and communication technology, unmanned driving technology has made breakthrough achievements. Unmanned aerial vehicles (UAVs) have gradually moved from the military field into the civil field, and unmanned ground vehicles (UGVs) are continually being tested on public roads around the world. Research on unmanned ships is also developing rapidly. Major research institutions at home and abroad are investing large amounts of manpower, material, and financial resources in the theoretical research, technology research, and development of large-tonnage unmanned merchant ships. The key technologies of unmanned ships mainly include situational awareness, intelligent decision-making, motion control, maritime communication, and shore-based remote control, and situational awareness is the premise of all the others. Advanced sensors are used to obtain situation information around unmanned ships, provide basic data support for complex tasks such as intelligent decision-making and motion control, and ensure the safe autonomous operation of unmanned ships [1].

Currently, ships perceive the maritime environment mainly through two kinds of sensors, namely, radio detection and ranging (RADAR) and the automatic identification system (AIS). These transmit target information to the electronic chart display and information system (ECDIS), which realizes a certain degree of intelligent analysis and decision-making. However, the maritime navigation environment is complex and variable. RADAR and AIS cannot directly reflect the spatial information of detected targets, so situational awareness cannot be established quickly and mariners need to confirm the situation themselves. At the same time, RADAR detection is sensitive to meteorological conditions and to the shape, size, and material of the target, and AIS cannot detect small targets that do not carry AIS equipment or have it switched off. Visible light sensors are intuitive, reliable, informative, and cost-effective [2]. With the continuous development of computer vision technology, visible light cameras are gradually being applied to unmanned ships as important situational awareness sensors, providing a reliable source of information for intelligent decision-making.

The main targets for maritime detection using cameras include ships, rigs, navigation aids, and icebergs. When a maritime target first appears in the field of view of the camera, it appears in the vicinity of the sea–sky line (SSL). As the distance between the camera and the target decreases, the target gradually moves into the sea area of the image. Extracting the SSL and performing maritime target detection in its vicinity can therefore greatly reduce the target detection range as well as the complexity and computational cost of the algorithm. However, a target near the SSL occupies a very small area in the image, usually only a few tens or hundreds of pixels, and is easily overwhelmed by the complex sea–sky background, resulting in missed or false detections [3]. Therefore, this paper proposes an algorithm based on the motion attitude model of a visible light camera for detecting the SSL and its nearby ships.

2. Related Work

In general, maritime target detection technology mainly includes three steps, namely, SSL detection, background removal, and foreground segmentation. Based on the research status at home and abroad in recent years, this paper briefly reviews the algorithms for each of these three steps and then proposes the main technical framework.

2.1. SSL Detection

The SSL is an important feature of maritime images and has been studied extensively. Existing methods fall mainly into two categories. The first category combines edge detection with straight-line fitting: the image is processed by an edge detection operator, and the high-gradient edge pixels are then fitted to a straight line or projected. Liu [4] proposed an SSL detection algorithm based on the fusion of inertial measurement and the Hough transform. The inertial data of the shipboard camera are used to estimate the position of the SSL in successive frames, and the Canny operator and the Hough transform are then used to extract the SSL in the detection region. Wang Bo et al. [5] proposed an SSL detection algorithm based on gradient saliency and region growth. The gradient saliency calculation effectively enhances the characteristics of the SSL and suppresses the influence of complex sea conditions such as clouds and sea clutter. Kim et al. [6] proposed an algorithm that estimates the position of the SSL from the camera pose and fits it using random sample consensus (RANSAC). Fefilatyev et al. [7] used a combination of Gaussian distribution and the Hough transform to select the optimal SSL from five candidate SSLs. Santhalia et al. [8] proposed a Sobel operator edge detection algorithm based on eight directions, which effectively eliminates edge noise and has low computational complexity and strong stability. The second category is based on image segmentation, which extracts the region above the SSL by threshold processing or background modeling. Dai et al. [9] proposed an edge detection algorithm based on local Otsu segmentation, which solves the problem of poor global threshold segmentation. Zhang et al. [10] proposed an SSL extraction algorithm based on discrete cosine transform (DCT) coefficients: the image is divided into non-overlapping 8 × 8 blocks, and the DCT coefficients in each block are calculated to segment the sky and sea areas. Zeng et al. [11] extracted the contour edges using an improved Canny operator with surrounding texture suppression, and then used Hough transform voting to finely detect the horizontal or oblique SSL. Nasim et al. [12] proposed a K-means algorithm to segment the sea scene into clusters and extract the SSL by analyzing the image segments. The above algorithms have achieved good detection results in their respective experiments. However, algorithms of the first category, which extract edges from gradients, cannot balance SSL edge extraction against wave edge suppression, and the accuracy of algorithms of the second category is limited by the image segmentation accuracy.

2.2. Background Removal

In the maritime scene, the sea–sky background is usually segmented by modeling its color, texture, saturation, and other features, and is then subtracted from the original image. Kim et al. [13] used improved mean difference filtering to improve the target signal-to-noise ratio when processing infrared images, and averaged the sea–sky background to remove sea clutter interference. However, this method only worked well for structural clutter similar to the SSL and performed poorly on sea surface interference with strong light reflection. Zeng et al. [14] used a surrounding texture filter instead of the mean-shift filter to improve the mean-shift image segmentation algorithm, and controlled the filter parameters to perform fast region clustering to remove the sea–sky background. However, the texture filter parameters and clustering parameters had to be set manually, which requires prior knowledge. In addition, techniques based on visual saliency models are gradually being applied to maritime target detection. They simulate human visual features, suppress the sea–sky background, and extract the visually salient regions of the image, that is, the regions of human interest. Fang et al. [15] applied the theory of color space and wavelet transform to extract the low frequency, high frequency, hue, saturation, and brightness characteristics of the task water image. A visual attention operator was used to fuse the various features, effectively overcoming background disturbances such as waves, wakes, and onshore buildings. Lou et al. [16] addressed the small target detection problem in color images from the two aspects of stability and saliency. By multiplying the stability and saliency maps pixel by pixel, the noise interference in the background was eliminated. Agrafiotis et al. [17] designed a maritime tracking system by combining a visual saliency model with a Gaussian mixture model (GMM) and used an adaptive online neural network tracker to further refine the tracking results. Liu et al. [18] further enhanced the visual saliency model through a two-scale detection scheme. On the larger scale, the sea surface was removed by the mean-shift filter. On the smaller scale, the target was coarsely extracted from the edges of the salient region, and fine processing of the chroma component was then used to select the output target. The above algorithms achieve background removal by reducing the noise of the sea–sky background and enhancing the salient features of the region of interest. However, when there is strong cloud, wave, or ship wake disturbance in the sea–sky background, the saliency of the disturbance is equal to or higher than that of the target, which causes the visual saliency algorithm to produce large errors. In addition to the above works, Ebadi et al. [19] proposed a modified approximated robust PCA algorithm that can handle moving cameras and takes advantage of the block sparse structure of the pixels corresponding to the moving objects.

2.3. Foreground Segmentation

After the image background is removed, morphological processing can be applied to obtain the maritime target. Westall et al. [20] applied improved morphological processing based on close-minus-open (CMO) techniques to enhance target detection. Fefilatyev [21] used the Otsu algorithm to obtain a global threshold in the region above the SSL and used this threshold to segment the target vessel. Although features such as edges and contours are widely used in target ship detection, it is still difficult to achieve ideal results in complex sea–sky backgrounds with the above algorithms. In addition, Kumar et al. [22] and Selvi et al. [23] made full use of the target ship’s color, texture, shape, and other information, and used a support vector machine to classify the target. Frost et al. [24] applied prior knowledge of ship shape to the level set segmentation algorithm to improve ship detection results. Loomans et al. [25] integrated a multi-scale Histogram of Oriented Gradient (HOG) detector and a hierarchical Kanade-Lucas-Tomasi (KLT) feature point tracker to track ships in the port, and achieved better detection and tracking results. These algorithms are not based on background subtraction but on manually designed ship features. With the continuous development of deep learning technology, feature extraction based on convolutional neural networks is gradually dominating image classification, detection, and segmentation, and is gradually being applied to maritime target detection. Ren et al. [26] proposed an improved Faster R-CNN system to detect small target ships in remote sensing images. Statistical algorithms were used to screen the appropriate anchors, and detection was greatly improved by using jump links and texture information. Yang et al. [27] designed a rotational density pyramid network model to extract the ship’s direction while accurately detecting the target ship. Zhang et al. [28] proposed a scheme combining saliency detection and convolutional neural networks to accurately detect ships of different poses, scales, and shapes in remote sensing images. In addition to the above works, Biondi [29] presented a complete procedure for the automatic estimation of maritime target motion parameters by evaluating the generated Kelvin waves detected in synthetic aperture radar (SAR) images. Graziano et al. [30] proposed a novel technique for ship wake detection using X-band SAR images provided by COSMO/SkyMed and TerraSAR-X. Biondi et al. [31] proposed a new approach in which the micro-motion of ships occupying thousands of pixels was estimated by processing the information given by the sub-pixel tracking generated during the co-registration of several re-synthesized, overlapped time-domain sub-apertures.

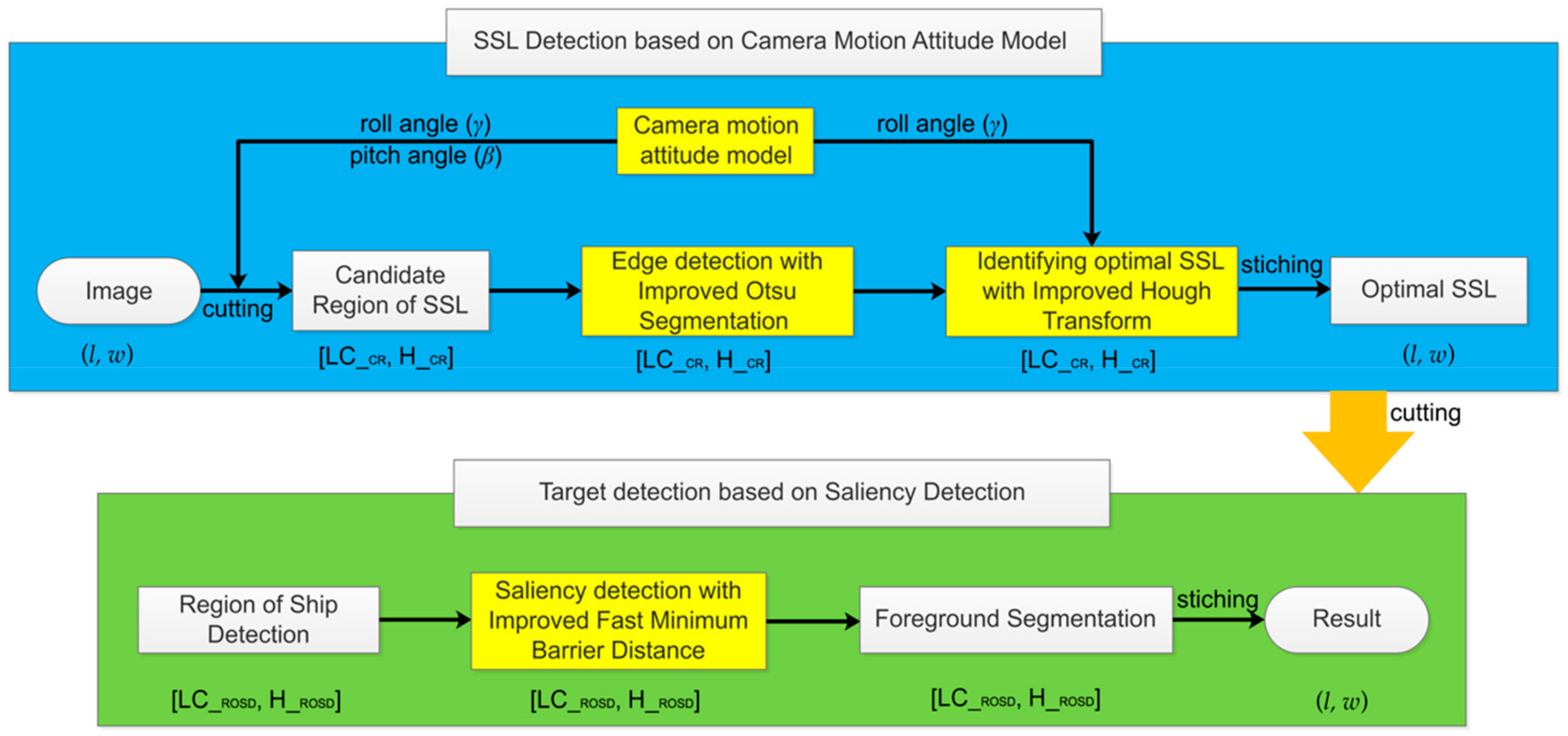

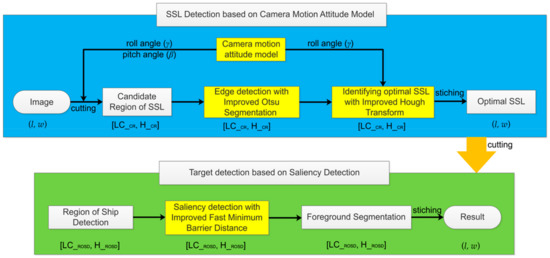

In summary, the above algorithms have achieved good results in their respective research fields, but it is still difficult to achieve high detection accuracy for the SSL and its nearby ships against a complex sea–sky background. To address these problems, we propose the technical framework of this paper, which mainly consists of two stages, as shown in Figure 1. The first stage is SSL detection. After the camera and the inertial sensor acquire data synchronously, we pass the inertial data to the camera motion attitude model to obtain the position of the candidate region (CR) in the image, cut the CR from the original image, and perform edge detection and the Hough transform only in the CR to extract the optimal SSL. The CR with the optimal SSL is then stitched back into the original image. The second stage is ship detection. According to the position of the optimal SSL, we cut the region of ship detection (ROSD) from the image, perform saliency detection and foreground segmentation only on the ROSD, and finally stitch the detection result back into the original image.

Figure 1.

Technical framework of this paper. The roll angle and the pitch angle represent the inertial data, and (l, w) represents the size of the original image. [LC_CR, H_CR] represents the candidate region (CR) of the image, where LC_CR represents the upper left corner of the CR and H_CR represents the height of the CR. [LC_ROSD, H_ROSD] represents the region of ship detection (ROSD) of the image, where LC_ROSD represents the upper left corner of the ROSD and H_ROSD represents the height of the ROSD.

In the remainder of this paper, we describe the camera motion attitude model in Section 3, the SSL detection model in Section 4, and the visual saliency detection model in Section 5. We introduce the dataset used in the experiment and compare experiments with other algorithms in Section 6. Finally, in Section 7, we summarize and draw conclusions.

3. Camera Six-Degrees-of-Freedom Motion Attitude Modeling

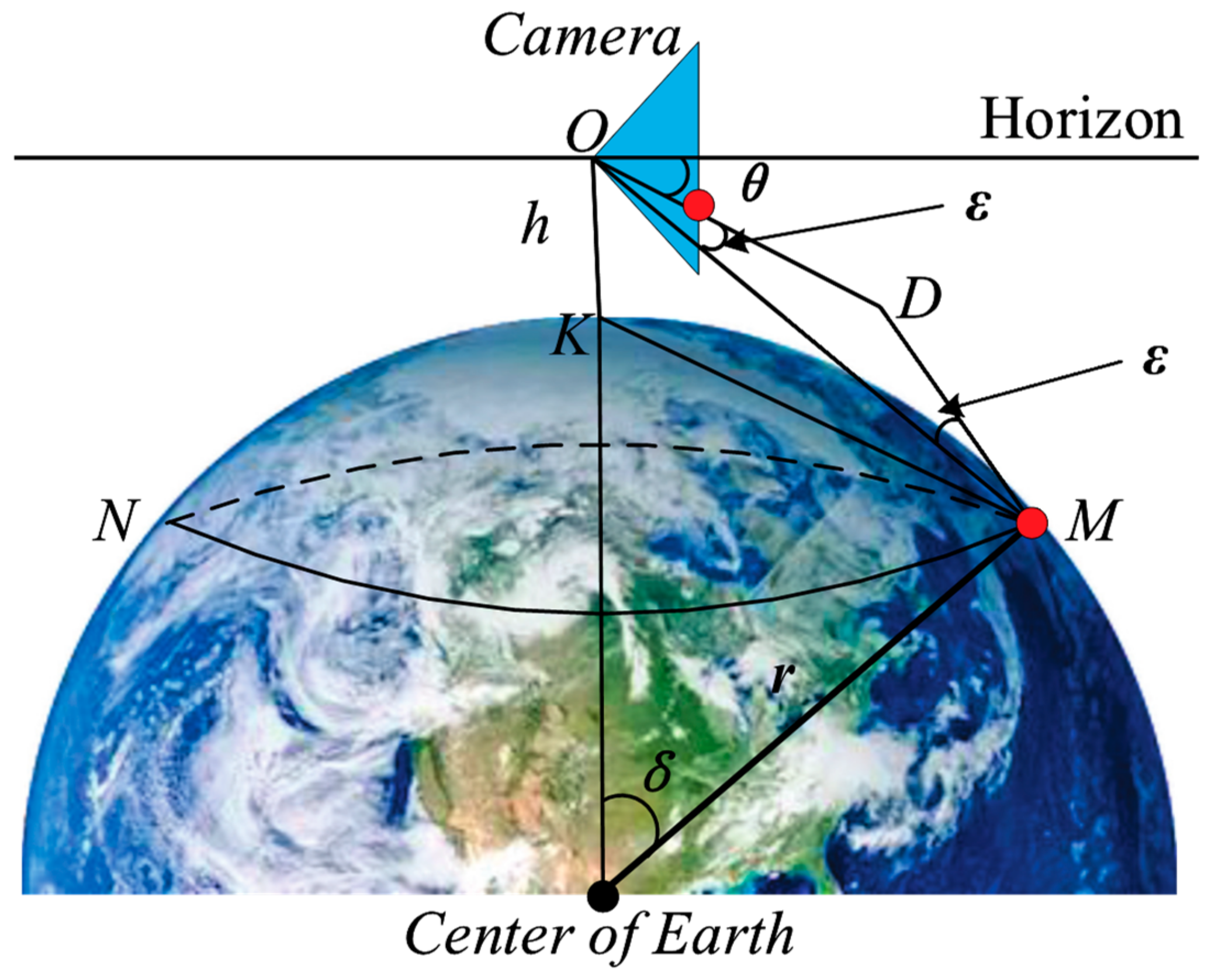

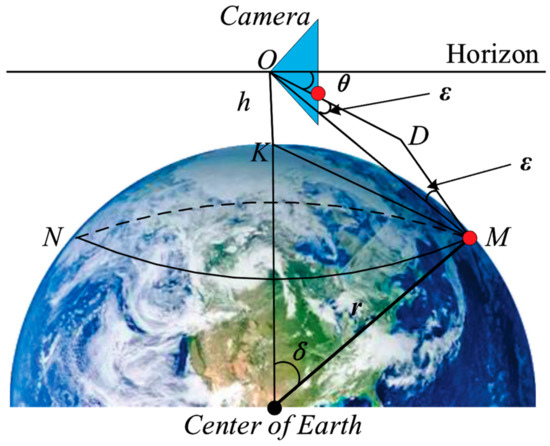

In navigation, a ship at sea sails along a great circle. An observer with an eye height of h sees the farthest sea surface and the sky meet in a circle, which is called the observer’s visible horizon, that is, the SSL. In ship vision, we use cameras instead of human eyes for sea target detection and identification. Assuming that the camera is installed at a height h above sea level, the geometric relationship shown in Figure 2 is obtained by taking into account the curvature of the earth and the atmospheric refraction difference. The circle MN represents the SSL and the blue triangle represents the camera. Before using the camera, we completed its calibration and distortion correction [32]. Therefore, in this analysis, we suppose that the optical axis of the camera is parallel to the horizontal plane, which is called the initial state of the camera motion. The point O is the camera center, the point K is the projection of the point O onto the sea level, and r represents the radius of the earth. The two angles marked in Figure 2 are the central angle of the earth and the atmospheric refraction difference, which in navigation is taken as 1/13 of the central angle. The straight line OM, rather than the arc along the sea surface, represents the actual distance from the camera to the SSL, which is denoted by D. In the triangle ∆OKM, since both angles are small, small-angle approximations can be applied, and D can be obtained by Equation (1). Since 1 nautical mile, which corresponds to one arc minute of a great circle, equals 1852 m in navigation, it can be inferred that r is 6,366,707 m. The position angle of the SSL in the camera can be obtained by Equation (2).

Figure 2.

Geometric relationship between the big circle and the camera position.
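To make the geometry of Figure 2 concrete, the following Python sketch computes the straight-line distance from the camera to the visible horizon and the angle of the horizon below the horizontal optical axis. It is not a reproduction of Equations (1) and (2): refraction is modeled here with the common effective-earth-radius substitution r/(1 − k), and the function names and the coefficient `k` are assumptions introduced for illustration only.

```python
import math

EARTH_RADIUS_M = 6366707.0   # earth radius quoted in the text
NAUTICAL_MILE_M = 1852.0


def horizon_distance_m(h, k=0.0):
    """Straight-line distance (m) from a camera at height h (m) to the horizon.

    k is a hypothetical refraction coefficient: k = 0 gives the purely
    geometric horizon, k > 0 enlarges the effective earth radius.
    """
    r_eff = EARTH_RADIUS_M / (1.0 - k)
    # Length of the tangent from the camera centre to the sphere.
    return math.sqrt(2.0 * r_eff * h + h * h)


def horizon_dip_rad(h, k=0.0):
    """Angle (rad) of the horizon below the horizontal optical axis."""
    r_eff = EARTH_RADIUS_M / (1.0 - k)
    return math.acos(r_eff / (r_eff + h))
```

For example, `horizon_distance_m(20.0) / NAUTICAL_MILE_M` gives the geometric horizon distance in nautical miles for a camera 20 m above the sea; a positive `k` lengthens this distance and reduces the dip.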

In order to simplify the projection relationship of the camera, we assume the sea level as a plane, while ignoring the relative motion of the camera and the ship, so that the camera coordinate system coincides with the ship’s motion coordinate system. Next, we model the camera’s six-degrees-of-freedom motion and the SSL position according to the coordinate system projection method [33].

3.1. Influence of Camera Swaying, Surging, and Yawing Motions on the Position of the SSL

Under the condition of maintaining the initial state, the height h of the camera remains unchanged when the camera only performs swaying, surging, and yawing motions. It can be seen from Equation (1) that the distance to the SSL depends only on h, so these three motions have no effect on the position of the SSL on the imaging plane.

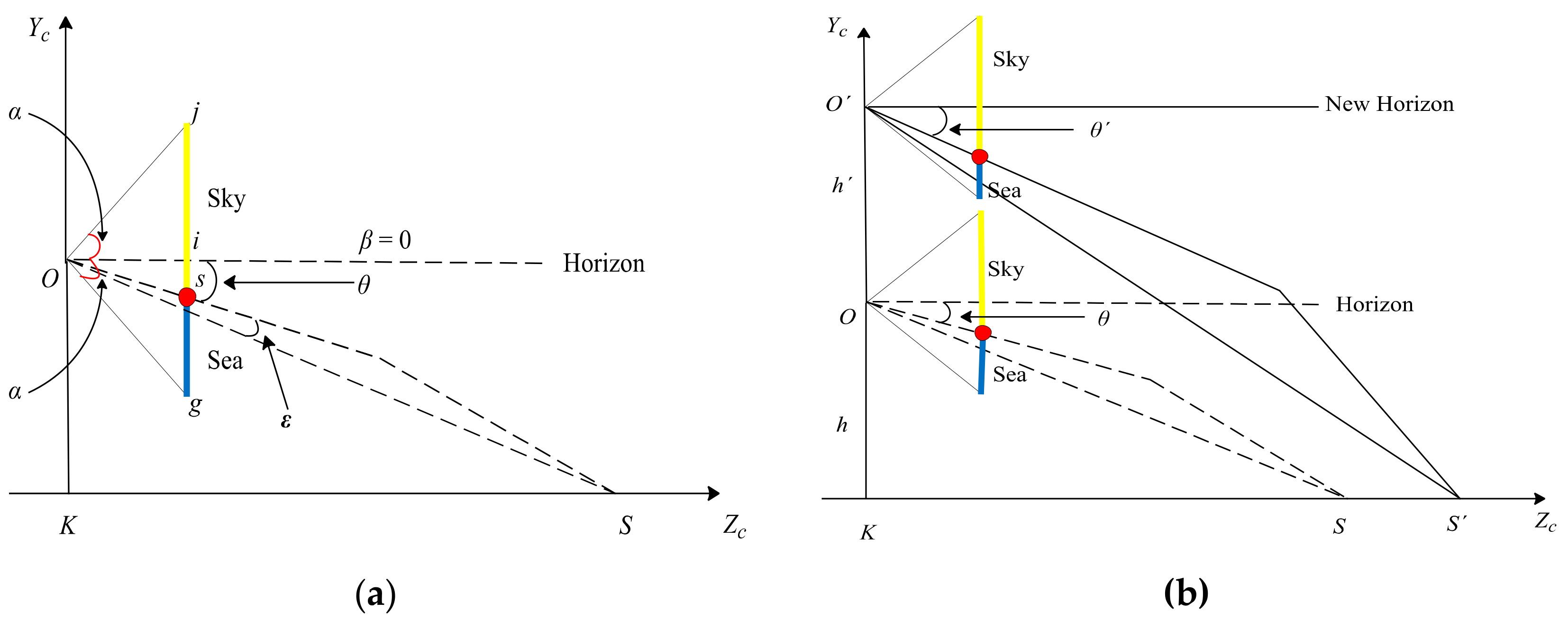

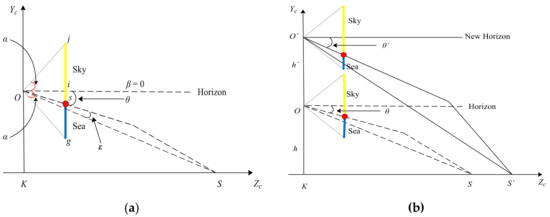

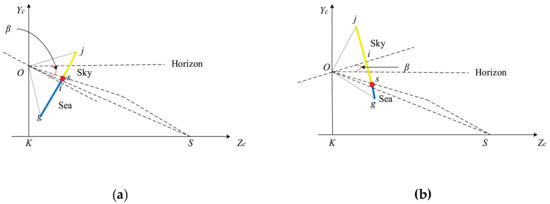

3.2. Influence of Camera Heaving and Pitching Motions on the Position of the SSL

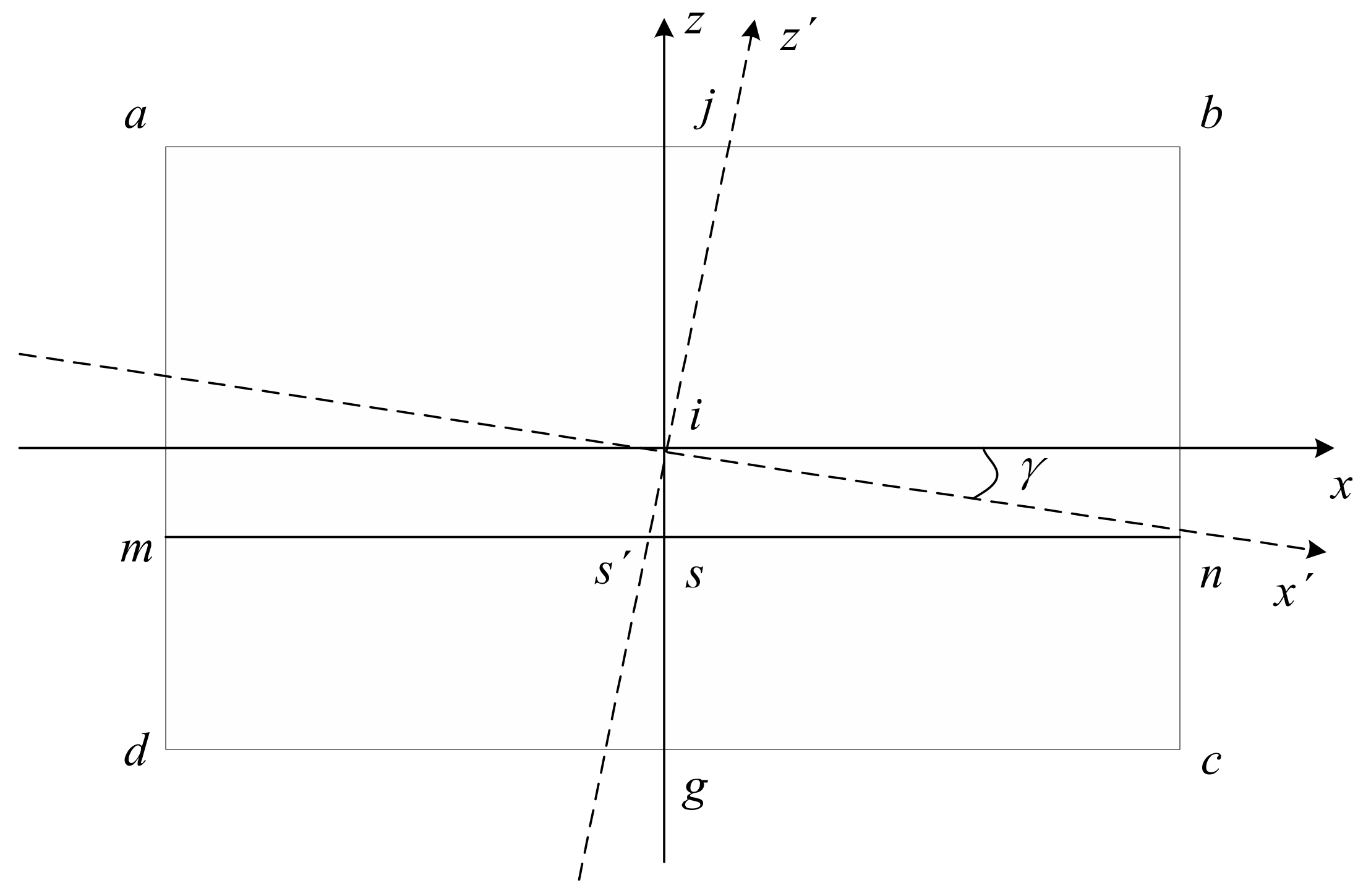

Under the condition of maintaining the initial state, we assume the sea level to be a plane according to Figure 2 and obtain the geometric relationship shown in Figure 3a. The triangle represents the camera. In the imaging plane of the camera, the line js represents the sky area, the line sg represents the sea area, the point s represents the projection point of the SSL, and the point i represents the center point, which is also taken as the origin (0, 0) of the image coordinate system. Given the pitch angle of the camera, the camera’s vertical viewing angle (expressed as twice a half-angle), and the longitudinal width w of the imaging plane, the position of the SSL in the image can be obtained by:

Figure 3.

Geometric relationship between the sea–sky line (SSL) position and the camera position. (a) Geometric relationship under the initial state. (b) Geometric relationship under the camera heaving motion.

3.2.1. Influence of Camera Heaving Motion

Under the condition of maintaining the initial state, when the camera only performs the heaving motion, as shown in Figure 3b, the camera center moves vertically by the heaving height to a new position. According to Equation (1), the position angle and the position of the SSL after the heaving motion can be obtained by:

3.2.2. Influence of Camera Pitching Motion

Under the condition of maintaining the initial state, when the camera only performs the pitching motion, as shown in Figure 4, clockwise rotation of the pitch angle is taken as positive and counterclockwise rotation as negative. Under clockwise rotation, when the pitch angle is smaller than the position angle of the SSL, the SSL is located below the center line of the imaging plane and gradually approaches it as the pitch angle increases. When the pitch angle equals the position angle, the SSL is located on the center line of the imaging plane. When the pitch angle is larger than the position angle, the SSL is located above the center line; as the pitch angle increases, it gradually moves away from the center line and toward the top of the image. When the pitch angle exceeds the position angle by more than half of the vertical viewing angle, the SSL is no longer in the imaging plane, and only the sea area can be seen in the image. Under counterclockwise rotation, the SSL is located below the center line of the imaging plane, and as the magnitude of the pitch angle increases, it gradually moves away from the center line and toward the bottom of the image. When the sum of the pitch magnitude and the position angle exceeds half of the vertical viewing angle, the SSL is no longer in the imaging plane, and only the sky area can be seen in the image. According to the above analysis, the position of the SSL after the pitching motion can be obtained by:

Figure 4.

Geometric relationship between the SSL position and camera pitching motion. (a) Clockwise rotation. (b) Counterclockwise rotation.
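The case analysis above can be sketched with a pinhole camera model. The following Python function is a minimal illustration, not the paper’s Equations (3)–(6): it assumes an ideal pinhole projection, a horizontal optical axis in the initial state, and the sign convention that a positive (clockwise) pitch tilts the optical axis toward the sea. The function and parameter names are hypothetical.

```python
import math


def ssl_offset_after_pitch(position_angle, pitch, half_fov, w):
    """Offset (pixels) of the SSL from the image centre line after pitching.

    position_angle: angle of the SSL below the optical axis in the
                    initial state (rad)
    pitch:          camera pitch angle (rad), positive when the optical
                    axis tilts toward the sea (assumed convention)
    half_fov:       half of the vertical viewing angle (rad)
    w:              image height in pixels
    Returns a positive value when the SSL lies below the centre line, a
    negative value when it lies above, and None when it has left the image.
    """
    relative = position_angle - pitch   # SSL angle below the optical axis
    if abs(relative) > half_fov:
        return None                     # only sea or only sky is visible
    return (w / 2.0) * math.tan(relative) / math.tan(half_fov)
```

The heaving motion can be explored with the same function by recomputing `position_angle` for the changed camera height.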

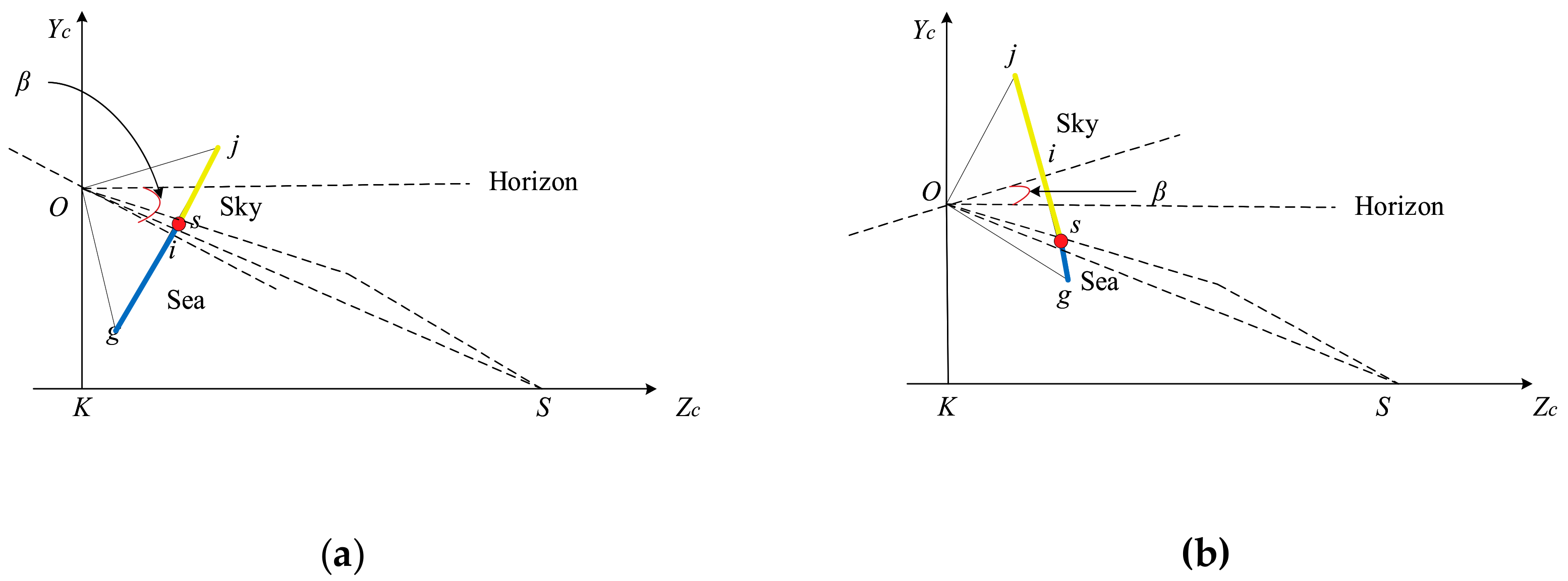

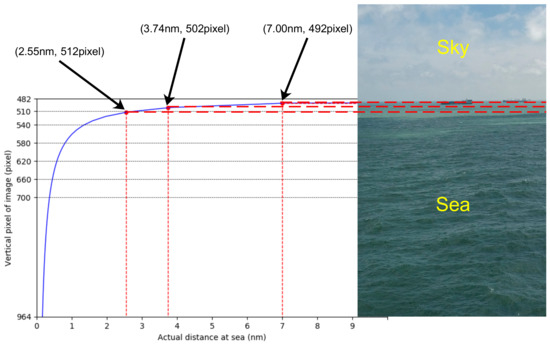

3.3. Influence of Camera Rolling Motion on the Position of the SSL

Under the condition of maintaining the initial state, when the camera only performs the rolling motion, as shown in Figure 5, it is assumed that the camera rotates clockwise by the roll angle (the counterclockwise case is analogous). The rotation defines a new image coordinate system, and the SSL intersects an axis of this coordinate system at a point determined by the SSL position. So, the SSL can be expressed by:

Figure 5.

Geometric relationship between the SSL position and camera rolling motion.

Comprehensive analysis of the relationship between the camera six-degrees-of-freedom motion and the SSL shows that when the camera performs the swaying, surging, and yawing motions, the SSL does not change in the image coordinate system. However, when the camera performs the heaving and pitching motions, the SSL performs a translational motion up and down in the image coordinate system, and when the ship performs the rolling motion, the SSL performs a rotational motion in the image coordinate system. Equations (1)–(7) can be used to obtain the estimation equation of the SSL in the image coordinate system, as shown in Equation (8), where the range of is . It can be seen from Equation (8) that the height change generated by the camera’s heaving motion has less influence on the position of the SSL in the image, and it is also much smaller than the installation height of the camera; so, Equation (8) can be simplified to obtain the final SSL estimation equation, as shown in Equation (9).
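The combined effect of translation and rotation can be illustrated with a short Python sketch. It is a geometric sketch under stated assumptions rather than Equation (9) itself: the image coordinate system has its origin at the image center with the y axis pointing down, a roll rotates the image about the center, and the sign of the roll angle is an assumed convention. The function name is hypothetical.

```python
import math


def ssl_line(offset_px, roll):
    """Slope and intercept of the SSL in centre-origin image coordinates.

    offset_px: perpendicular distance of the SSL from the image centre
               (pixels, positive below the centre), e.g. as produced by
               the heave and pitch analysis
    roll:      camera roll angle (rad); sign convention is an assumption
    Returns (slope, intercept) of the line y = slope * x + intercept.
    """
    # A rotation about the centre keeps the perpendicular distance and
    # tilts the line by the roll angle.
    return math.tan(roll), offset_px / math.cos(roll)
```

With `roll = 0` the function returns a horizontal line at `offset_px`, which matches the pure heave and pitch cases.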

4. Edge Detection and Hough Transform Algorithm for the Detection of the SSL

4.1. Estimating the CR of the SSL

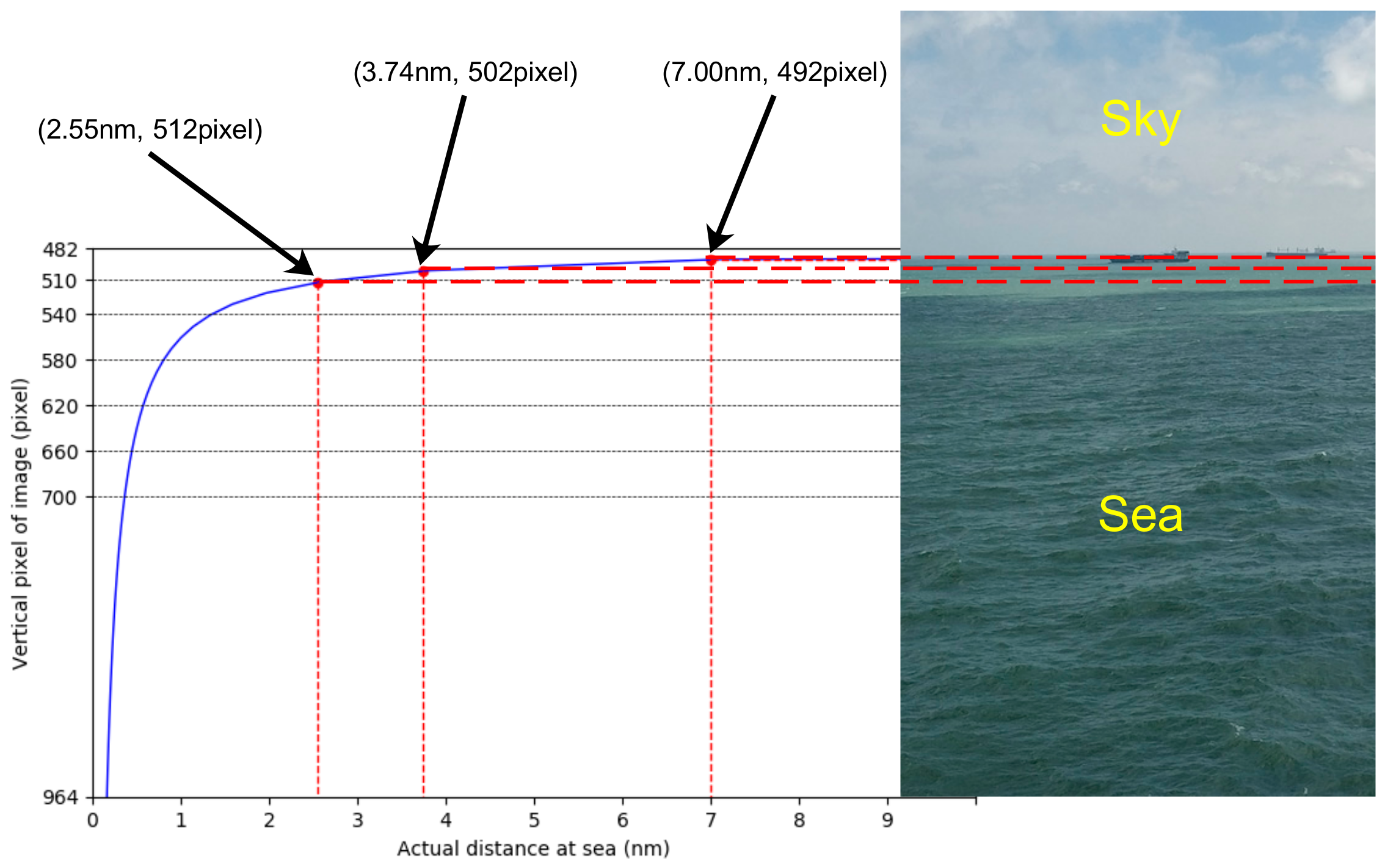

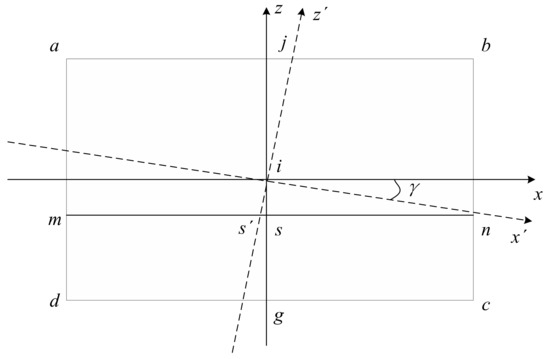

In order to estimate the CR of the SSL, we need to change from the camera coordinate system to the image coordinate system; that is, the coordinate origin moves from the center point to the upper left corner. Then, we explore the relationship between the pixel position on the image and the actual distance at sea. First, we find the position of the SSL on the image in the current coordinate system, as shown in Equation (10), which is expressed in terms of the pixel position on the image. Then, through Equations (1) and (10), we can obtain the relationship between the pixel position and the actual distance at sea, D, as shown in Equation (11). Assuming h = 20 m and camera parameters of w = 964 pixels and a half vertical viewing angle of 3.7°, the relationship between D and the pixel position is obtained as shown in Figure 6, where the horizontal and vertical coordinates represent D and the pixel position, respectively. Since we only want to show the relationship between the SSL and the sea area, the ordinate runs from the center of the image to the bottom, so its range is [482, 964]. From Figure 6, it can be seen that the closer a pixel is to the SSL, the larger the actual distance it represents. All sea areas at a distance of 2.55 nautical miles or more from the camera are represented by only 30 pixels on the image.

Figure 6.

Relationship between the pixel points on the image and the actual distance at sea.
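The trend shown in Figure 6 can be reproduced qualitatively with a flat-sea pinhole sketch. The Python function below is an illustration under simplifying assumptions (flat sea, horizontal optical axis through row w/2, ideal pinhole projection) and does not include the earth curvature and refraction terms of Equation (11), so it should not be used for rows very close to the SSL. The names are hypothetical.

```python
import math


def row_to_distance_m(row, h, half_fov, w):
    """Flat-sea distance (m) of the sea point imaged at a given pixel row.

    row:      pixel row counted from the top of the image
    h:        camera height above the sea (m)
    half_fov: half of the vertical viewing angle (rad)
    w:        image height in pixels
    Rows at or above the centre do not intersect a flat sea and
    return math.inf.
    """
    f_px = (w / 2.0) / math.tan(half_fov)            # focal length in pixels
    depression = math.atan((row - w / 2.0) / f_px)   # angle below the axis
    if depression <= 0.0:
        return math.inf
    return h / math.tan(depression)
```

Comparing the distance covered between two adjacent rows near the image center with that between two adjacent rows near the bottom shows that each pixel represents a larger stretch of sea the closer it is to the SSL.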

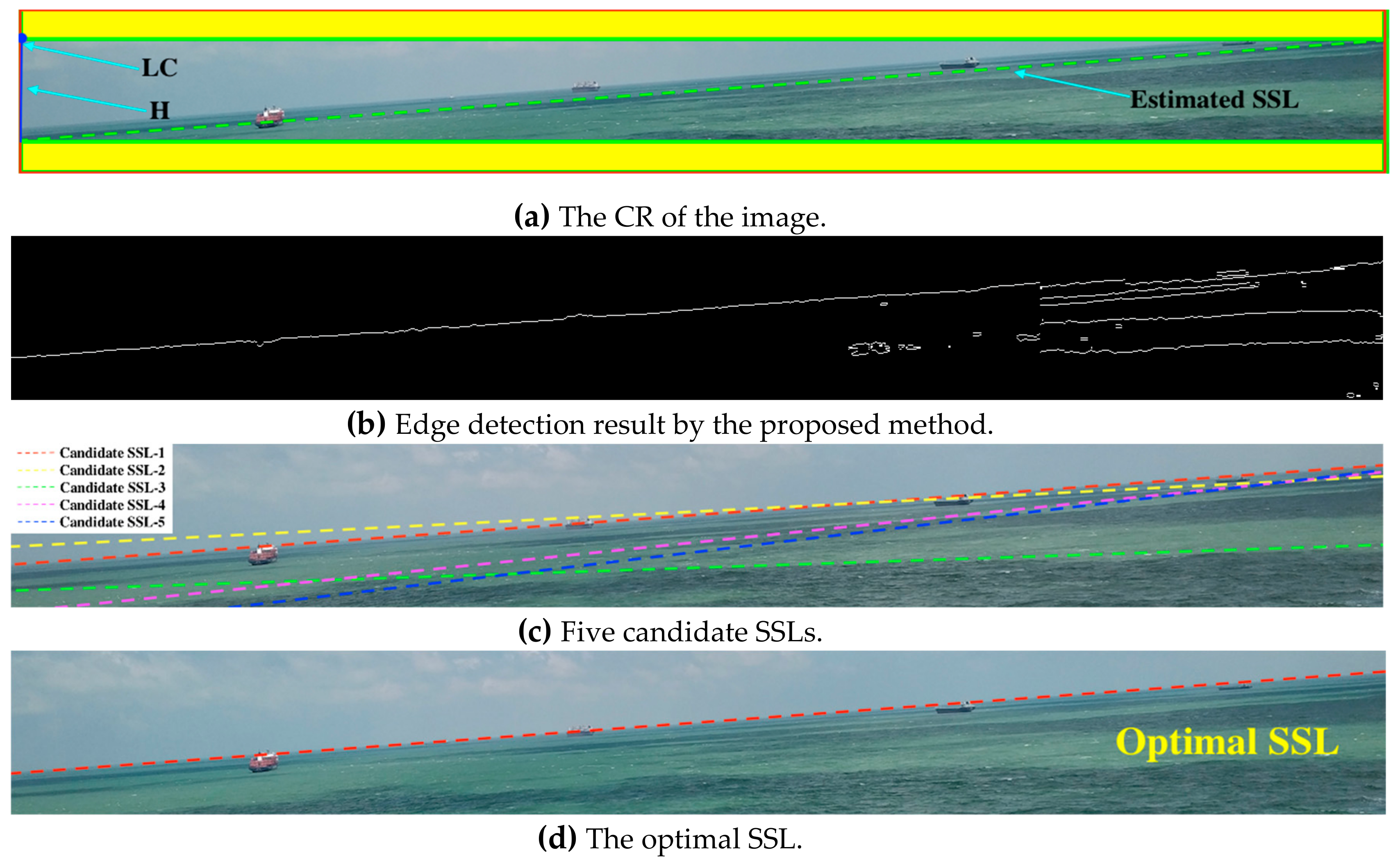

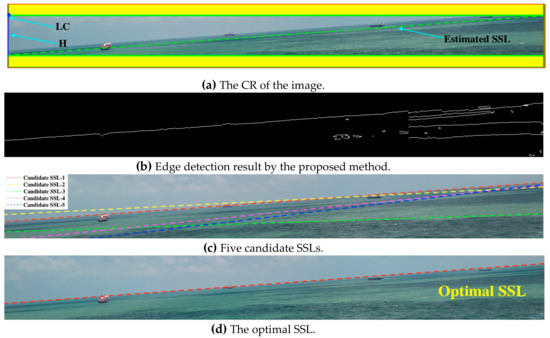

Considering that the SSL is usually a straight line that runs through the entire image and generally has a certain angle of inclination, we use a rectangle to describe the SSL. The upper left corner and the height of the rectangle are represented by LC and H, respectively. In order to reduce the estimation error, we add a yellow area with a height of 30 pixels to the upper and lower sides of the rectangular area as the CR of the SSL. The parameter value can be obtained by Equation (12), as shown in Figure 7a.

Figure 7.

Figures of each stage in the SSL extraction algorithm. In (a), the camera parameters are , , the inertial sensor parameters are ; and the green dotted line represents the estimated SSL obtained by the camera motion attitude model. In (b), . In (c), the clustering parameter is 5. In (d), = 0.4.
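The construction of the CR can be sketched as follows. This Python function is a hedged illustration of the idea behind Equation (12), not its exact form: it assumes an image coordinate system with the origin at the upper left corner, takes the estimated SSL row at the central image column and the estimated tilt as inputs, and returns the top row and height of a full-width rectangle (so the upper left corner LC would be (0, top)). The function and parameter names are hypothetical.

```python
import math


def candidate_region(center_row, roll, l, w, margin=30):
    """Top row and height of the SSL candidate region (CR).

    center_row: estimated SSL row at the central image column
                (pixels from the top)
    roll:       estimated tilt of the SSL (rad)
    l, w:       image width and height in pixels
    margin:     extra rows added above and below the tilted SSL
    The rectangle is clipped to the image.
    """
    # Vertical extent of the tilted line over half the image width.
    half_span = abs(math.tan(roll)) * l / 2.0
    top = max(0, int(math.floor(center_row - half_span - margin)))
    bottom = min(w, int(math.ceil(center_row + half_span + margin)))
    return top, max(0, bottom - top)
```

For a level SSL at row 500 in a 1288 × 964 image, the sketch returns a 60-row band starting at row 470; a tilted SSL enlarges the band so that the whole line stays inside it.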

4.2. Edge Detection in the CR

After acquiring the CR, it is only necessary to process the image edges in the region, which can effectively reduce the calculation amount of the image processing work. In this paper, a novel edge detection algorithm based on the local Otsu segmentation is designed in the CR. The specific algorithm is shown as follows:

- Preprocessing. The CR is converted to grayscale and smoothed with median filtering to remove noise. Median filtering is commonly used to remove salt-and-pepper noise; it smooths strong light reflections on the sea surface well while preserving as much edge information as possible.

- Obtaining a binary map by the local Otsu algorithm. According to the gray information of the CR, the Otsu algorithm automatically selects the threshold that maximizes the variance between the two types of pixel as the optimal threshold. For the whole sea–sky image, besides the sea and the sky, there are many other types of pixels, such as clouds, waves, and strong light reflections. It is difficult to obtain an accurate global threshold for the whole image by Otsu algorithm, and the image segmentation accuracy is poor. In this paper, the CR after median filtering is processed into N adjacent image blocks. The pixel distribution of the sky and the sea region in most image blocks has significant differences. Then, the Otsu algorithm is applied to each image block to obtain a local binary image, and finally the binarized image blocks are spliced back to the CR.

- Edge extraction. For the binary image of the CR, we check each line of pixels in the vertical direction for abrupt changes in pixel value; the positions of these changes form the edges of the binary image, as shown in Equation (13), which obtains the edge image by applying the XOR operation to adjacent pixels of the binary image along each line. The edge extraction effect is shown in Figure 7b.
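The binarization and edge extraction steps listed above can be sketched in plain Python. This is a minimal illustration under assumptions, not the authors’ implementation: the CR is given as a 2-D list of gray values in 0–255, the N adjacent blocks are taken to be vertical strips of equal width, and the median filtering step is omitted. The function names are hypothetical.

```python
def otsu_threshold(pixels):
    """Return the gray level (0-255) that maximizes between-class variance."""
    hist = [0] * 256
    for p in pixels:
        hist[p] += 1
    total = len(pixels)
    sum_all = sum(i * c for i, c in enumerate(hist))
    best_t, best_var = 0, -1.0
    w0, sum0 = 0, 0
    for t in range(256):
        w0 += hist[t]                 # pixels with value <= t
        if w0 == 0:
            continue
        w1 = total - w0               # pixels with value > t
        if w1 == 0:
            break
        sum0 += t * hist[t]
        m0, m1 = sum0 / w0, (sum_all - sum0) / w1
        var = w0 * w1 * (m0 - m1) ** 2
        if var > best_var:
            best_var, best_t = var, t
    return best_t


def local_otsu_edges(gray, n_blocks):
    """Binarize each vertical strip with its own Otsu threshold, then mark
    positions where vertically adjacent binary pixels differ (XOR)."""
    rows, cols = len(gray), len(gray[0])
    binary = [[0] * cols for _ in range(rows)]
    step = -(-cols // n_blocks)       # ceiling division: strip width
    for c0 in range(0, cols, step):
        c1 = min(cols, c0 + step)
        block = [gray[r][c] for r in range(rows) for c in range(c0, c1)]
        t = otsu_threshold(block)
        for r in range(rows):
            for c in range(c0, c1):
                binary[r][c] = 1 if gray[r][c] > t else 0
    edges = [[0] * cols for _ in range(rows)]
    for r in range(rows - 1):
        for c in range(cols):
            edges[r][c] = binary[r][c] ^ binary[r + 1][c]
    return edges
```

Thresholding each strip separately lets a strip containing glare or cloud choose a different threshold from its neighbors, which is the motivation given above for the local variant.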

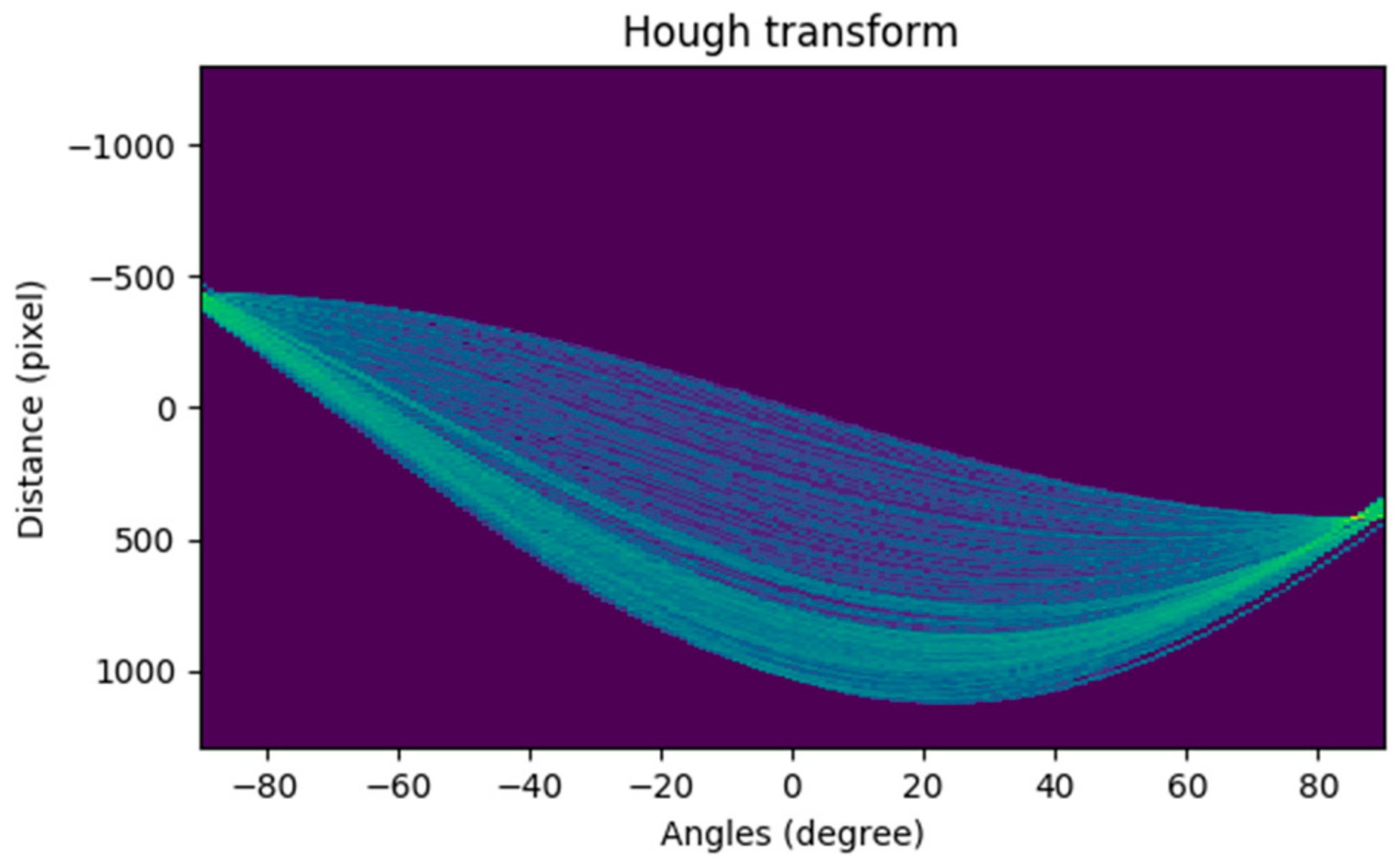

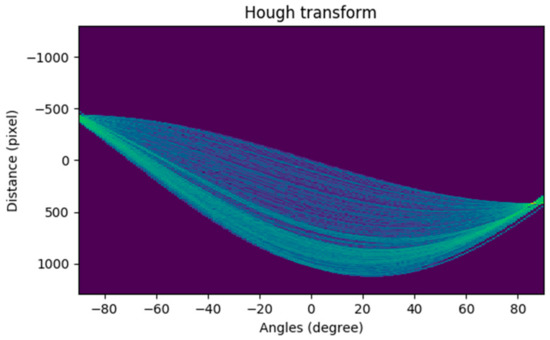

4.3. Identifying the Optimal SSL with Improved Hough Transform

The Hough transform is used to display the edge detection result in the accumulator. As shown in Figure 8, the horizontal coordinate represents the polar angle (θ) and the vertical coordinate represents the polar radius (ρ), and each curve represents a point in the edge image. The brighter a point in the accumulator, the more curves pass through it, which indicates that more points are collinear in the edge image. In this paper, we optimize the SSL extraction algorithm by combining the prediction results calculated by the Hough transform, which are based on the SSL length, with the measurement results provided by the inertial sensor, which are based on the SSL angle. The specific algorithm is as follows:

Figure 8.

Hough space of the edge detection image.
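The voting process that produces an accumulator such as Figure 8 can be sketched with the standard Hough transform. Note that this is the basic voting scheme, not the kernel-based Hough transform [34] used in this paper; the parameterization ρ = x cos θ + y sin θ is the conventional one, and the function names and the dictionary-based accumulator are choices made for this illustration.

```python
import math


def hough_accumulator(edge_points, n_theta=180):
    """Vote in (theta, rho) space for a list of (x, y) edge points.

    Returns a dict mapping (theta_index, rho) to the number of votes,
    where theta = theta_index * pi / n_theta and rho is rounded to
    whole pixels.
    """
    votes = {}
    for x, y in edge_points:
        for k in range(n_theta):
            theta = k * math.pi / n_theta
            rho = int(round(x * math.cos(theta) + y * math.sin(theta)))
            votes[(k, rho)] = votes.get((k, rho), 0) + 1
    return votes


def strongest_line(votes):
    """Return ((theta_index, rho), count) of the accumulator peak."""
    return max(votes.items(), key=lambda kv: kv[1])
```

Each edge point contributes one sinusoidal curve to the accumulator, and the cell crossed by the most curves corresponds to the line supported by the most collinear edge points.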

- Prediction of the SSL using the Hough transform. In order to avoid the sample dispersion problem caused by an excessive voting range in the accumulator, we use the kernel-based Hough transform [34] to smooth the accumulator. First, we compute five clusters of pixels with collinear features; then, we find the best-fitting line and model the uncertainty for each cluster; last, we vote for the main lines using elliptical-Gaussian kernels computed from the lines’ associated uncertainties. Applying the Hough transform to Figure 7b yields the parameters shown in Table 1. In order to present the five candidate SSLs visually, we mark them with five colors in the original image, as shown in Figure 7c.

Table 1. Hough spatial parameters of candidate SSLs ranked top five.

- Measurement results with the inertial sensor. In an ideal state, the roll angle obtained by the inertial sensor is the tilt angle of the SSL, and this tilt angle and the polar angle of the SSL in the Hough space are complementary. Therefore, we can substitute the measured angle into the Hough space to find the SSL. However, the inertial sensor data contain a certain measurement error, so it is necessary to consider the prediction result of the Hough transform and the measurement result of the inertial sensor together.

- Defining the cost function. First, we define the predicted length and angle of each candidate SSL, the measured length and angle, and two influence factors that sum to 1, one weighting the length and the other weighting the angle. The cost function can be obtained from Equation (14). Then, we need to eliminate the effect of the angle’s direction on the cost function: if the measured angle is positive, candidate SSLs with a negative predicted angle are removed, and if the measured angle is negative, those with a positive predicted angle are removed in the same way. Finally, in order to eliminate the dimensional influence between the evaluation metrics, min–max normalization is used to map the result values to [0, 1]; the conversion function is shown in Equation (15).

Assuming a measured length of l = 1288 pixels, a measured angle of 5.0°, and a length influence factor of 0.4, the relevant parameters of the cost function are shown in Table 2. According to Table 2, SSL-1 is the optimal SSL, and it is displayed in the original image as shown in Figure 7d.

Table 2.

The relevant parameters of the cost function.
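The selection step can be sketched as a weighted, normalized cost. The Python code below is one plausible reading of Equations (14) and (15), not their exact form: it assumes that the cost is a weighted sum of the min–max normalized absolute length error and angle error, and that the candidate with the lowest cost is selected. The function names and the candidate values used in any example are hypothetical.

```python
def min_max(values):
    """Map values linearly to [0, 1]; a constant list maps to all zeros."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def select_ssl(candidates, measured_length, measured_angle, weight):
    """Pick the candidate (length, angle) with the lowest weighted cost.

    candidates: list of (predicted_length, predicted_angle) pairs from
                the Hough stage
    weight:     influence factor of the length term; the angle term is
                weighted by (1 - weight)
    """
    # Discard candidates whose tilt direction contradicts the sensor.
    same_sign = [c for c in candidates if c[1] * measured_angle >= 0]
    if not same_sign:
        raise ValueError("no candidate agrees with the measured direction")
    length_err = min_max([abs(c[0] - measured_length) for c in same_sign])
    angle_err = min_max([abs(c[1] - measured_angle) for c in same_sign])
    costs = [weight * le + (1 - weight) * ae
             for le, ae in zip(length_err, angle_err)]
    best = min(range(len(costs)), key=costs.__getitem__)
    return same_sign[best], costs[best]
```

With a weight of 0.4, the angle measured by the inertial sensor contributes more to the decision than the line length, so a long wave crest with the wrong tilt is penalized relative to a slightly shorter line with the right tilt.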

5. Visual Saliency Detection in the ROSD of the SSL

After obtaining the optimal SSL, we extend the rectangle containing the optimal SSL by 30 pixels, cut it from the image, and define it as the ROSD. In the ROSD, the influence of clouds and sea clutter is small. Distant ships lie mainly near the SSL, and the sea–sky background is relatively uniform and connected to the boundary of the region. According to these characteristics of the ROSD, we use the fast minimum barrier distance (FMBD) [35] to measure the connectivity between each pixel and the region boundary. The algorithm operates directly on the original pixels and does not need to compute superpixels of the image through region abstraction [36,37,38,39], which improves the detection performance of the saliency map.

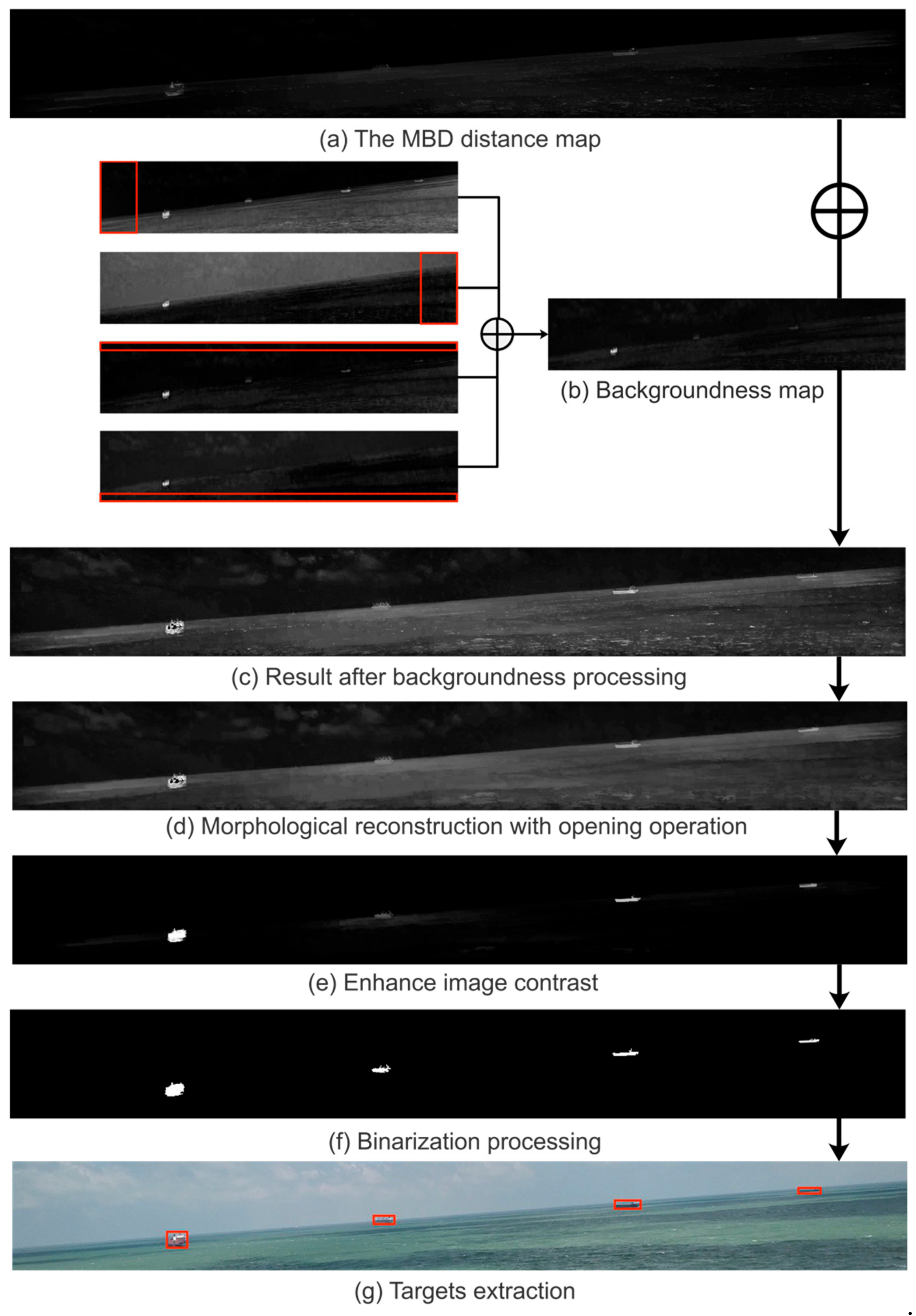

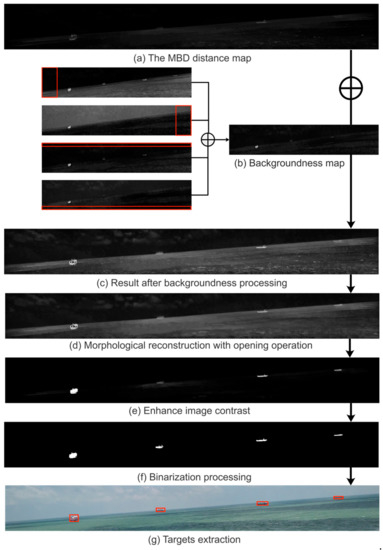

The FMBD algorithm mainly consists of three steps, namely, computing the minimum barrier distance (MBD) map, computing the backgroundness cue, and post-processing. We use the same approach as FMBD in the first two steps, but make appropriate improvements in the post-processing step. The specific algorithm is as follows:

Firstly, we convert the color space of the ROSD from RGB to Lab to better simulate human visual perception. In each channel, we select the one-pixel-wide rows and columns along the upper, lower, left, and right boundaries of the ROSD as the seed set S. Then, the FMBD algorithm is used to calculate the path cost of each pixel in the ROSD to the set S, as shown in Equation (16), which is evaluated for every non-boundary pixel over the paths connecting that pixel to the set S. In this paper, we consider paths formed through the four neighbors of each pixel. The cost function of a path is the difference between the highest and the lowest pixel values on that path.

We scan the ROSD three times: a raster scan, an inverse raster scan, and a second raster scan. Each scan uses half of the four-neighborhood of each pixel; in a raster scan, these are the upper and left neighbors. The path minimization operation is shown in Equation (17), whose terms are the path currently assigned to a neighboring pixel, the edge from that neighbor to the current pixel, and the path obtained by appending this edge to the neighbor’s path. The cost of the extended path is then given by Equation (18), which depends on the maximum and minimum values on the neighbor’s path.

In summary, when a pixel lies in the region of a salient target, its value is close to the maximum pixel value on each path, and its cost is relatively large. When a pixel lies in the background, its value is close to the minimum pixel value on each path, and its cost is relatively small. As a result, the target region is highlighted and the background is darkened, which completes the target saliency detection.
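The three-pass scan described above can be sketched in Python as follows. This is an illustrative, unoptimized version for a single channel, assuming the channel has already been converted to Lab; the function name `mbd_distance_map` and the use of NumPy are choices made here, not details given in the text. Two auxiliary arrays store the highest and lowest values on the path currently assigned to each pixel, so the cost of extending a neighbor's path by one edge can be evaluated in constant time.

```python
import numpy as np


def mbd_distance_map(channel, n_passes=3):
    """Approximate the minimum barrier distance to the border for one channel."""
    img = channel.astype(np.float64)
    h, w = img.shape
    dist = np.full((h, w), np.inf)
    # Seed set S: the one-pixel-wide border has zero distance.
    dist[0, :] = dist[-1, :] = 0.0
    dist[:, 0] = dist[:, -1] = 0.0
    upper = img.copy()  # highest value on the path assigned to each pixel
    lower = img.copy()  # lowest value on the path assigned to each pixel

    for p in range(n_passes):
        forward = p % 2 == 0  # raster, inverse raster, raster
        rows = range(h) if forward else range(h - 1, -1, -1)
        cols = range(w) if forward else range(w - 1, -1, -1)
        # Raster scan uses upper/left neighbors; inverse scan uses lower/right.
        step = -1 if forward else 1
        for y in rows:
            for x in cols:
                for ny, nx in ((y + step, x), (y, x + step)):
                    if not (0 <= ny < h and 0 <= nx < w):
                        continue
                    if not np.isfinite(dist[ny, nx]):
                        continue  # neighbor has no path to S yet
                    hi = max(upper[ny, nx], img[y, x])
                    lo = min(lower[ny, nx], img[y, x])
                    if hi - lo < dist[y, x]:
                        dist[y, x] = hi - lo
                        upper[y, x], lower[y, x] = hi, lo
    return dist
```

Applying the function to the L, a, and b channels and averaging the three maps would give a fused map of the kind described in the caption of Figure 9.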

Second, after obtaining the FMBD distance maps accumulated over the three color channels, we apply the backgroundness cue of the ROSD to enhance the brightness of the saliency map. In the ROSD, the image boundary is sea–sky background. Based on this property, we first select 10% of the area along the upper, lower, left, and right sides of the ROSD as the boundary parts, and then compute the Mahalanobis distance between the color of every pixel and the color mean of each of the four boundary parts. Finally, the maximum of the four boundary maps is subtracted from their sum to obtain a boundary contrast map, which excludes the case where one boundary part contains a foreground region, as shown in Equation (19), whose parameters are the color mean and covariance of each boundary part.
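The backgroundness computation above can be sketched as follows. The function name `boundary_contrast_map`, the small regularization term `eps` added to the covariance, and the normalization of each distance map by its maximum (borrowed from the original FMBD formulation [35]) are assumptions; the input is assumed to be an H × W × 3 array in Lab color space.

```python
import numpy as np


def boundary_contrast_map(image, ratio=0.1, eps=1e-6):
    """Sum of four Mahalanobis distance maps minus their pixel-wise maximum."""
    img = image.astype(np.float64)
    h, w, c = img.shape
    bh = max(1, int(round(h * ratio)))
    bw = max(1, int(round(w * ratio)))
    # Upper, lower, left, and right boundary parts (10% each by default).
    regions = [img[:bh], img[-bh:], img[:, :bw], img[:, -bw:]]
    pixels = img.reshape(-1, c)

    maps = []
    for region in regions:
        samples = region.reshape(-1, c)
        mean = samples.mean(axis=0)
        # eps keeps the covariance invertible for very uniform regions.
        cov = np.cov(samples, rowvar=False) + eps * np.eye(c)
        diff = pixels - mean
        d2 = np.einsum("ij,jk,ik->i", diff, np.linalg.inv(cov), diff)
        d = np.sqrt(np.maximum(d2, 0.0))
        maps.append(d / max(d.max(), eps))  # assumed normalization

    stack = np.stack(maps)
    # Dropping the largest response tolerates one boundary part that
    # happens to contain foreground.
    return (stack.sum(axis=0) - stack.max(axis=0)).reshape(h, w)
```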

Finally, in the post-processing step, the three techniques of the original article are not well suited to ship detection near the SSL, so we make appropriate improvements. First, we replace the original morphological filtering with opening by morphological reconstruction. Specifically, we erode the saliency map n times with the structural element b to obtain the erosion map, and then dilate the erosion map with b. Next, we take the pixel-wise minimum of the dilated map and the original saliency map, and repeat the dilation and minimum steps until the result no longer changes. The result is given by Equation (20), in which the two operators denote morphological dilation and erosion, respectively. Second, the original method applies an image enhancement technique to the center of the image, which tends to suppress small targets near the edges, so this paper removes that step. Third, as in the original article, a sigmoid function is used to increase the contrast between the target and the background region, as shown in Equation (21), where the parameter a controls the contrast level between the target and the background.
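The first and third post-processing steps can be illustrated with the following sketch. A flat 3 × 3 structural element and a sigmoid midpoint of 0.5 are assumed here, since the text does not state them, and the helper names are hypothetical. The reconstruction loop follows the description above: erode n times, then repeatedly dilate and clip by the original map until the result is stable.

```python
import numpy as np


def _neighborhood_stack(img, pad_value):
    # Stack the nine shifted copies that make up each 3 x 3 neighborhood.
    padded = np.pad(img, 1, mode="constant", constant_values=pad_value)
    h, w = img.shape
    return np.stack([padded[dy:dy + h, dx:dx + w]
                     for dy in range(3) for dx in range(3)])


def erode(img):
    return _neighborhood_stack(img, np.inf).min(axis=0)


def dilate(img):
    return _neighborhood_stack(img, -np.inf).max(axis=0)


def opening_by_reconstruction(saliency, n=1):
    """Erode n times, then dilate under the original map until stable."""
    original = saliency.astype(np.float64)
    marker = original.copy()
    for _ in range(n):
        marker = erode(marker)
    while True:
        grown = np.minimum(dilate(marker), original)
        if np.array_equal(grown, marker):
            return grown
        marker = grown


def sigmoid_contrast(saliency, a=10.0, midpoint=0.5):
    """Sigmoid stretch; `a` sets the contrast level (midpoint is assumed)."""
    return 1.0 / (1.0 + np.exp(-a * (saliency - midpoint)))
```

In this sketch, bright structures smaller than the structural element are removed by the erosion and never restored, while larger structures recover their original shape, which is why reconstruction smooths the background without blurring target outlines.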

The saliency feature map obtained by the proposed algorithm has the following characteristics: the target is highlighted, the background is darkened, and the contrast between them is clear. We select an appropriate threshold to binarize the saliency map and use an area threshold to extract the final target ships, eliminating interference from trivially small regions. The target detection process is shown in Figure 9.

Figure 9.

A processing case for target detection. (a) is obtained by fusing the average values of the MBD maps of the three channels L, a, and b. In (b), each red box represents 10% of the image area in the four directions of up, down, left, and right, and (c) represents the average of the four images after adding. (d,e) represent post-processing. (f,g) represent foreground segmentation, where the binarization threshold is 5 times the average intensity of (e), and the area threshold is 100 pixels.
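The foreground segmentation in (f,g) can be sketched as a threshold followed by connected-component filtering. The function name `extract_targets`, the use of 8-connectivity, and the rule that a region is kept when it contains at least `min_area` pixels are assumptions; the defaults mirror the values in the caption (5 times the mean intensity and 100 pixels).

```python
from collections import deque

import numpy as np


def extract_targets(saliency, k=5.0, min_area=100):
    """Return bounding boxes (x_min, y_min, x_max, y_max) of large bright regions."""
    mask = saliency > k * saliency.mean()  # binarization threshold T
    h, w = mask.shape
    seen = np.zeros((h, w), dtype=bool)
    boxes = []
    for y0, x0 in zip(*np.nonzero(mask)):
        if seen[y0, x0]:
            continue
        # Breadth-first search over one 8-connected component.
        seen[y0, x0] = True
        queue = deque([(y0, x0)])
        ys, xs = [], []
        while queue:
            y, x = queue.popleft()
            ys.append(y)
            xs.append(x)
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    ny, nx = y + dy, x + dx
                    if (0 <= ny < h and 0 <= nx < w
                            and mask[ny, nx] and not seen[ny, nx]):
                        seen[ny, nx] = True
                        queue.append((ny, nx))
        if len(ys) >= min_area:  # area threshold S
            boxes.append((int(min(xs)), int(min(ys)),
                          int(max(xs)), int(max(ys))))
    return boxes
```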

6. Experimental Results and Discussion

This paper conducts a real-ship experiment on the “YUKUN”, the dedicated teaching and training ship of Dalian Maritime University, and uses an inertial sensor and a visible light camera for data acquisition. The inertial sensor is the MTi-G-700 MEMS inertial measurement system produced by Xsens of the Netherlands. The measurement range of the roll and pitch angles is [–180°, 180°], and the accuracy of measurement is less than . The camera is the Blackfly U3-13S2C/M-CS from PointGrey, Canada. The chip size is 4.8 × 3.6 mm, and the sensor resolution is 1288 × 964 pixels. The camera focal length during the experiment was 27.82 mm. All experiments in this paper were run on a MacBook Pro with an Intel i5 processor and 8 GB of memory, and were programmed in Python.

6.1. Dataset and Evaluation Indicators

6.1.1. Dataset

For maritime target detection, there is currently no authoritative dataset for verifying the validity of an algorithm, and the few publicly available datasets do not include camera attitude data. Therefore, the images in our dataset were captured by the Blackfly U3-13S2C/M-CS camera installed on the “YUKUN”. The image size is 1288 × 964 pixels. We used a synchronization algorithm for the inertial sensor and the visible light camera to obtain the camera motion attitude data. Detailed information on the experiment images is shown in Table 3.

Table 3.

Detailed information of the experiment images.

6.1.2. Evaluation Metrics

As described in Section 4.1, each SSL can be represented by a rectangle, so we describe the SSL by Equation (22). The ground truth is obtained by manually marking the SSLs in the images. To verify the camera motion attitude model, we compute the difference between the estimated and actual values of LC and H to obtain the model accuracy. When evaluating SSL detection performance, we consider the SSL correctly detected if the difference in LC is less than 5 pixels and the difference in H is less than 10 pixels.
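The acceptance rule above amounts to two tolerance checks. In the following sketch, each SSL is assumed to be summarized by an (LC, H) pair in pixels; the function names and the aggregate detection rate are illustrative and not taken from the text.

```python
def ssl_correct(estimate, truth, lc_tol=5.0, h_tol=10.0):
    """Check an (LC, H) estimate against the manually marked ground truth."""
    lc_error = abs(estimate[0] - truth[0])
    h_error = abs(estimate[1] - truth[1])
    # Both differences must be strictly below their tolerances.
    return lc_error < lc_tol and h_error < h_tol


def ssl_detection_rate(estimates, truths):
    """Fraction of images whose SSL is counted as correctly detected."""
    flags = [ssl_correct(e, t) for e, t in zip(estimates, truths)]
    return sum(flags) / len(flags) if flags else 0.0
```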

In evaluating detection performance, we used the confusion matrix of the classification results, namely, the true positives (TP), false positives (FP), true negatives (TN), and false negatives (FN). Precision and recall were obtained by Equation (23). Intersection over Union (IoU), the ratio of the intersection of the detection result and the ground truth to their union, was also used as an evaluation metric. When the IoU was greater than or equal to 0.5, the detection was marked as a TP; when the IoU was less than 0.5, it was marked as an FP.
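A minimal sketch of this evaluation is given below. It assumes that Equation (23) uses the standard definitions, precision = TP / (TP + FP) and recall = TP / (TP + FN), that boxes are given as (x_min, y_min, x_max, y_max), and that each ground-truth box can be matched by at most one detection; the one-to-one matching rule is an assumption, since the text does not describe how multiple detections of the same ship are handled.

```python
def iou(box_a, box_b):
    """Intersection over Union of two (x_min, y_min, x_max, y_max) boxes."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    return inter / union if union > 0 else 0.0


def precision_recall(detections, ground_truth, iou_thresh=0.5):
    """Greedy one-to-one matching of detections to ground-truth boxes."""
    matched = set()
    tp = fp = 0
    for det in detections:
        best_iou, best_idx = 0.0, None
        for idx, gt in enumerate(ground_truth):
            if idx in matched:
                continue
            value = iou(det, gt)
            if value > best_iou:
                best_iou, best_idx = value, idx
        if best_idx is not None and best_iou >= iou_thresh:
            matched.add(best_idx)
            tp += 1  # IoU >= threshold: true positive
        else:
            fp += 1  # IoU < threshold: false positive
    fn = len(ground_truth) - len(matched)  # unmatched ships are misses
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall
```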

6.2. Experimental Results and Discussion on SSL Detection

The SSL extraction algorithm in this paper mainly includes three models, namely, camera motion attitude model, improved edge detection model, and improved Hough transform model. In order to verify the performance of each model separately, the following three experiments were designed. Some parameter settings in the experiment are shown in Table 4.

Table 4.

Some parameter settings in the test.

- Experiment 1—Verification of the camera motion attitude model

The camera motion attitude model uses the pitch angle and roll angle provided by the inertial sensor to estimate the CR of the SSL in the image, which can effectively narrow the detection range and is of great significance for subsequent algorithms. In this experiment, we used the difference between LC and H of the SSL candidate region and the real region to describe the estimation accuracy.

The model accuracy results of eight experiments are shown in Table 5, covering a total of 2000 images. The results show that the LC estimation error of the camera motion attitude model is 6–13 pixels and the H estimation error is 7–19 pixels. It is therefore reasonable to estimate the rectangular area of the SSL with the camera motion attitude model and then extend the estimated rectangle by 30 pixels above and below to form the CR of the SSL, which effectively ensures that the real SSL lies within the CR.

Table 5.

Location estimated accuracy of camera motion attitude model.

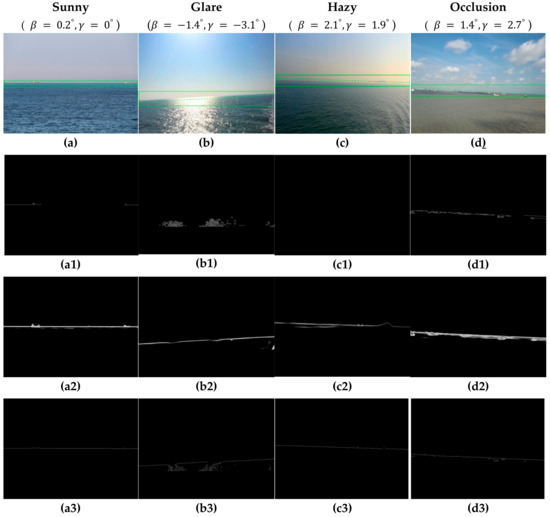

- Experiment 2—Verification of the improved edge detection model

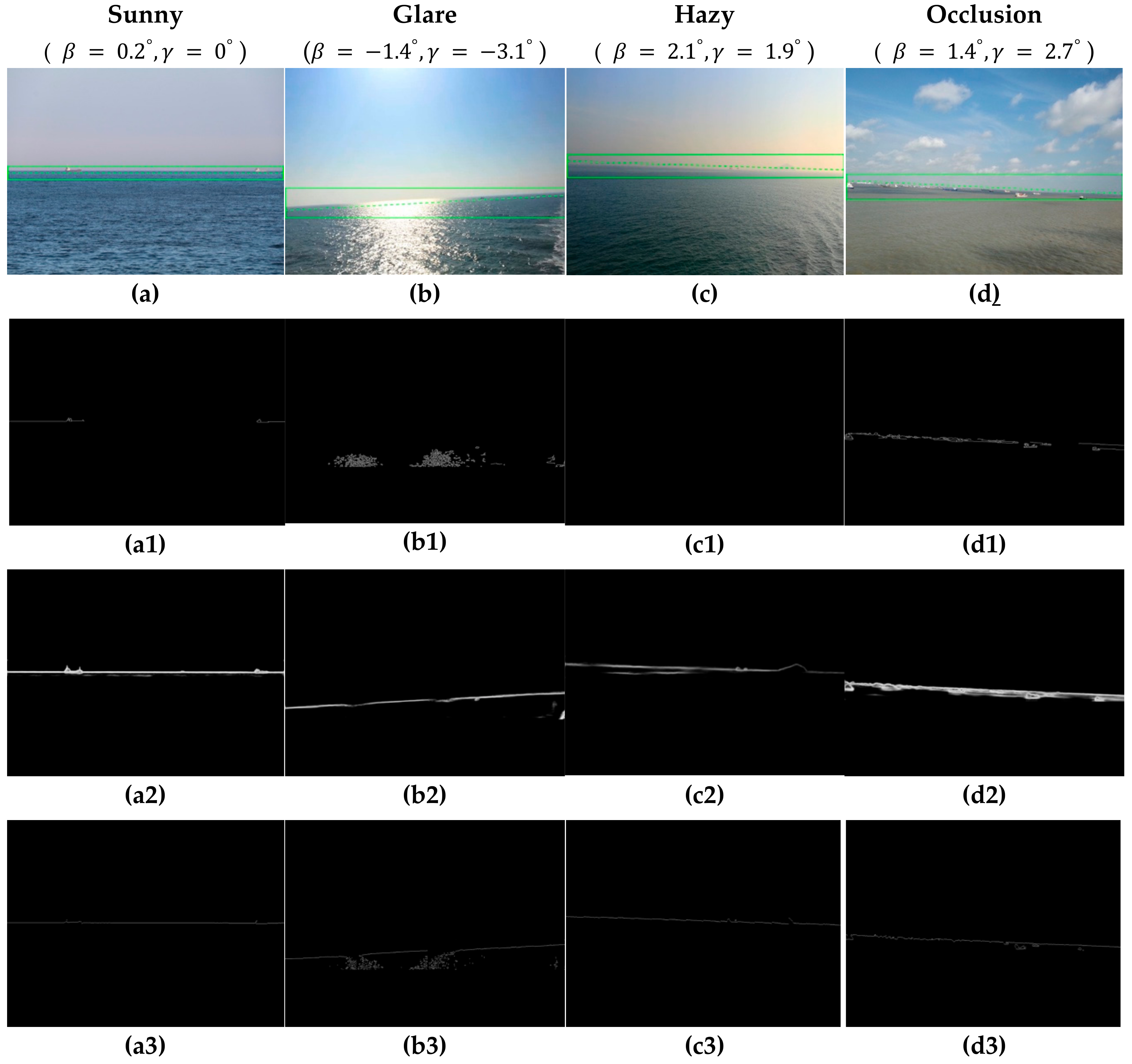

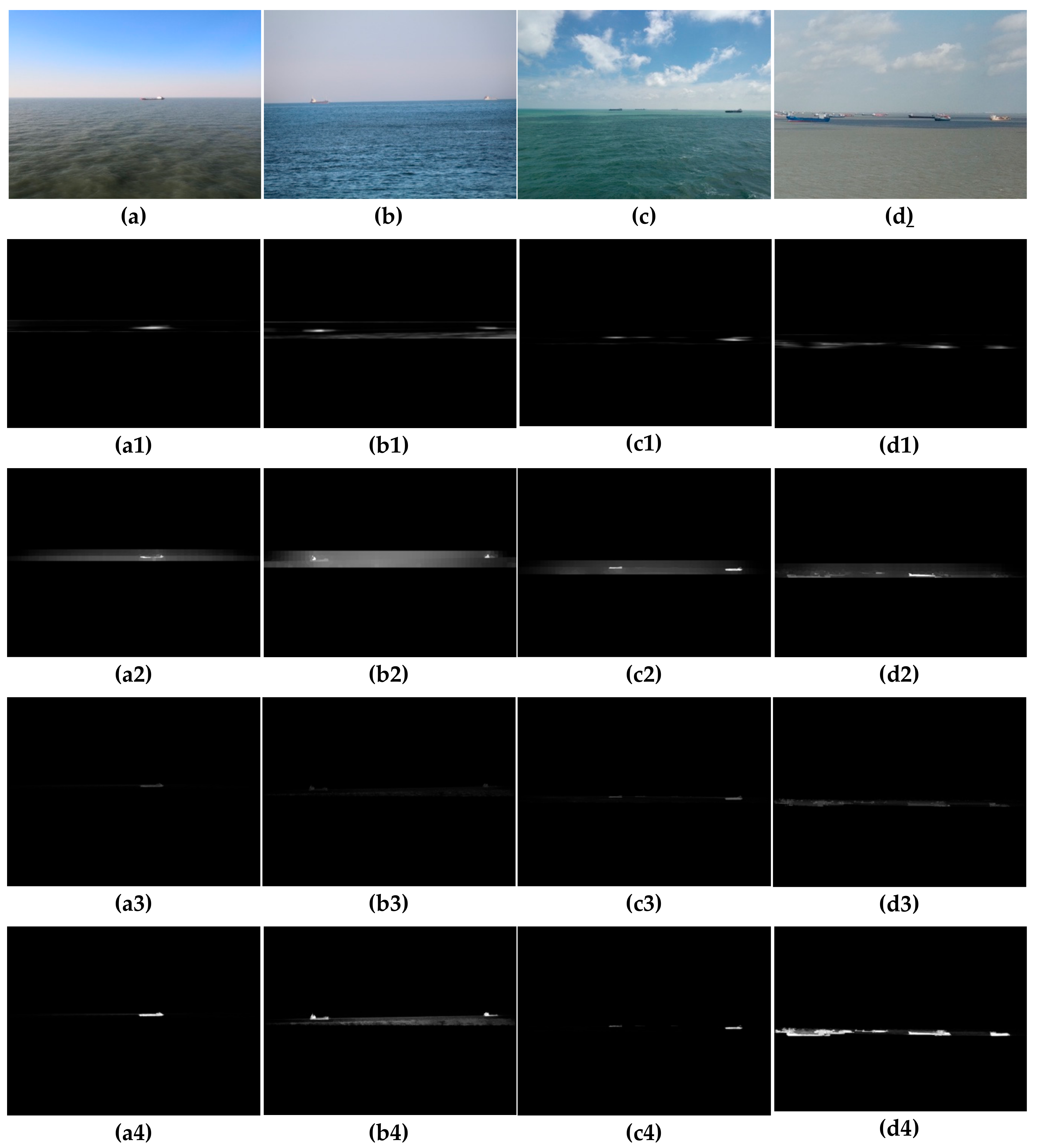

This experiment was carried out on images from the train set under sunny, glare, hazy, and occlusion conditions, and compared the performance of the proposed method with that of the Canny operator and the deep learning-based holistically-nested edge detection (HED) algorithm [40]. To illustrate the detection performance of each algorithm, one image was selected for each of the four conditions, as shown in Figure 10.

Figure 10.

Edge detection results by the three methods. (a–d) Original images with CR. (a1–d1) Edge detection results by Canny. (a2–d2) Edge detection results by HED. (a3–d3) Edge detection results by the proposed method.

First, we used the data provided by the inertial sensor to obtain the CR of the SSL through the camera motion attitude model, as shown in Figure 10a–d, and then processed the image with each of the three algorithms. The Canny operator had the worst SSL detection performance because its threshold was not adaptive. In sunny conditions, only part of the SSL was detected, as shown in Figure 10(a1). Under glare or when the sea–sky background was hazy, the Canny operator failed to detect the edge of the SSL, as shown in Figure 10(b1,c1). When obstacles such as ships or islands blocked the SSL, the Canny operator detected the edges of the obstacles, which degraded its performance, as shown in Figure 10(d1). The HED algorithm performed better under all conditions but was prone to over-detection. In addition to the SSL, it detected sea clutter in Figure 10(c2) and merged the edge of the obstruction into the SSL in Figure 10(d2). The proposed algorithm achieved the best performance: because binarization was performed within adjacent small blocks, over-detection was effectively prevented while the threshold remained adaptive. Although some spots were detected in Figure 10(a3), they did not affect the extraction of the SSL.

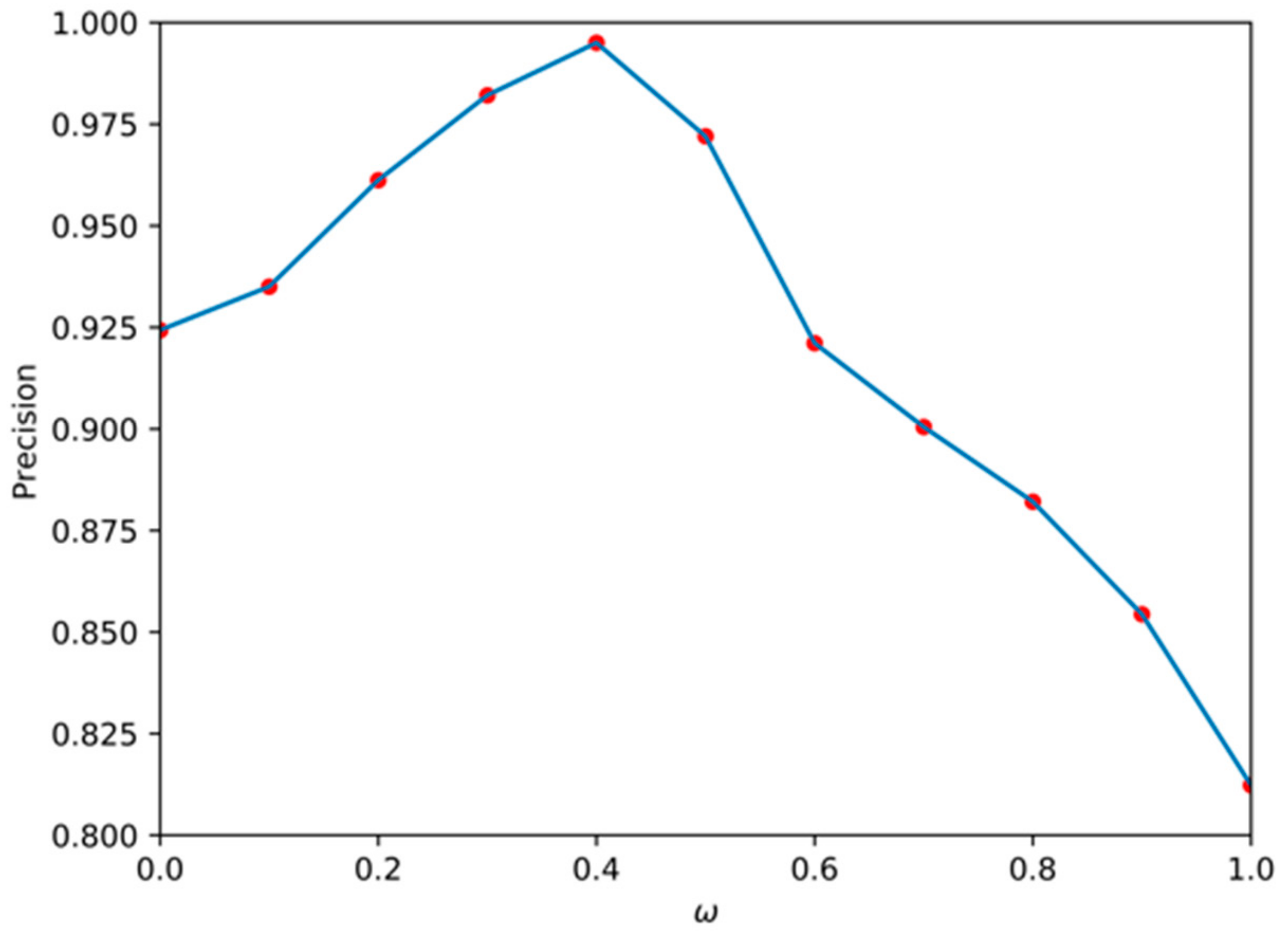

- Experiment 3—Verification of the improved Hough transform model

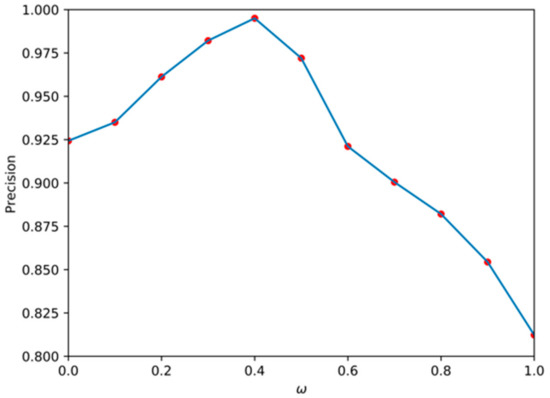

This experiment verified the effect of length and angle on the cost function at the SSL extraction stage. In the train set, we set the weight in the cost function to each value in [0, 0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9, 1.0], found the SSL with the minimum cost for each value, and drew the corresponding SSL. According to the evaluation metrics of the SSL, the average precision (AP) of the SSL for each weight is shown in Figure 11. When the weight was 0.4, the extracted SSL had the highest AP, reaching 99.5%, whereas when only the length factor of the SSL was considered, the AP was the lowest at 81.23%. Therefore, the angle has a greater influence than the length in the cost function used for SSL extraction.

Figure 11.

Relationship between the average precision (AP) of SSL detection and the weight in the cost function.

The three experiments above verified each model of the SSL detection. We then processed the 600 images in the test set with the proposed algorithm and compared the results with Fefilatyev’s method [7] and Zhang’s method [10]. The precision and recall rates are shown in Table 6. All three methods performed well in SSL detection, but the proposed method outperformed the other two, with an average precision (AP) and average recall (AR) on the test set of 99.67% and 100%, respectively.

Table 6.

Precision and recall scores for the three methods.

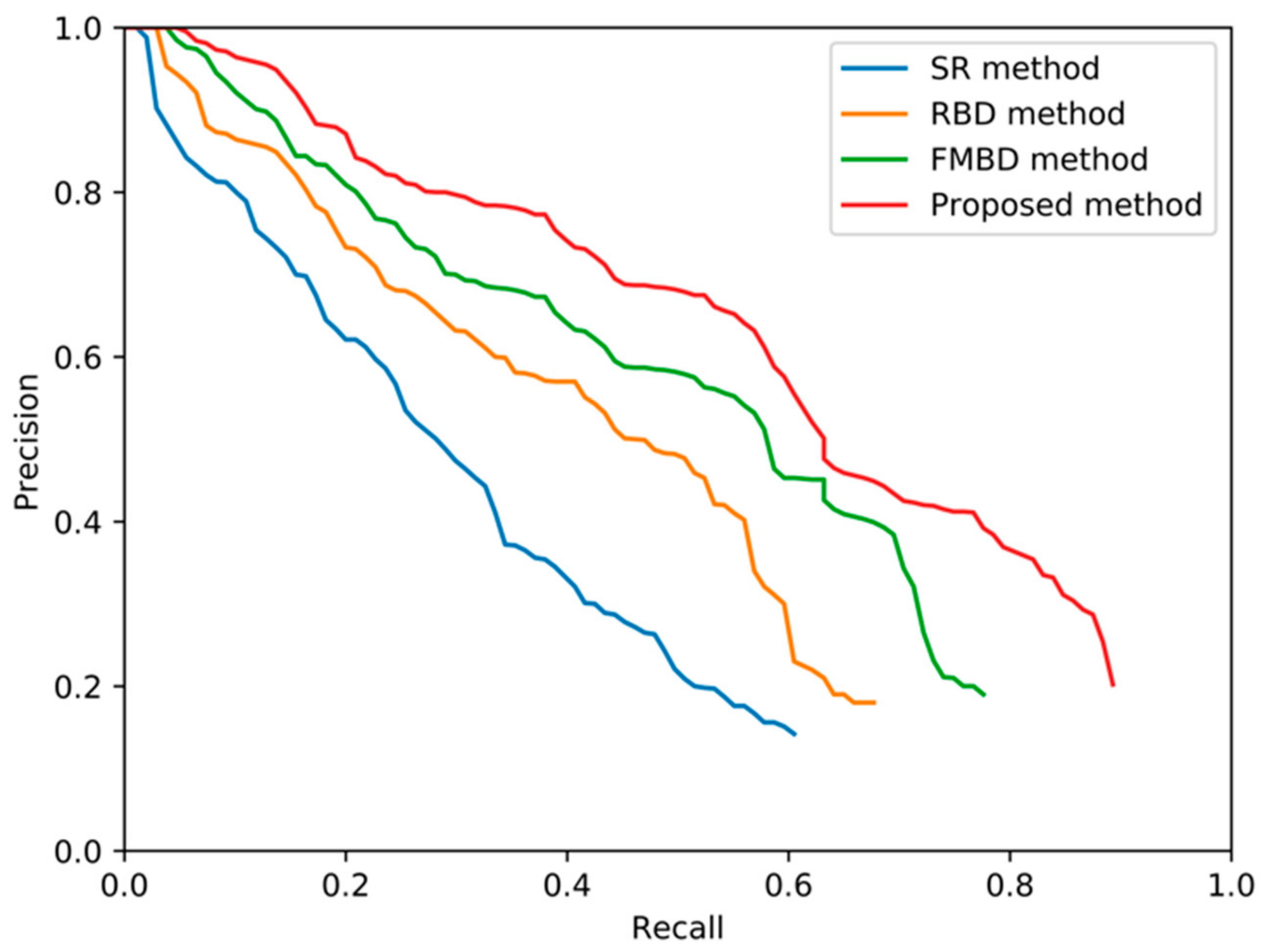

6.3. Experimental Results of Ship Detection in the Train Set

In this experiment, the train set contained a total of 1050 images. To verify the performance of the proposed method, three other saliency detection algorithms, SR [41], RBD, and the traditional FMBD, were used for comparison. The parameters of the proposed method are listed in Table 7.

Table 7.

Experiment parameters of saliency detection.

When evaluating the performance of ship detection, the binarization threshold T and the area threshold S constrain the detections in terms of pixel intensity and the number of connected pixels, respectively. If T and S are too large, the target is submerged in the background. If T and S are too small, false targets appear. Because the target pixel intensity significantly exceeds the average intensity of the saliency map, T is expressed as a multiple of that average intensity. The combinations of T and S are shown in Table 8.

Table 8.

Combination of T and S.

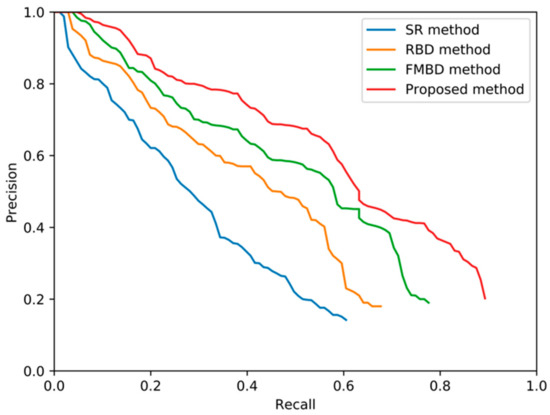

According to the above threshold combination, we can draw the precision-recall graphs of the four detection methods, as shown in Figure 12. It can be seen that the proposed method is superior to the other three saliency detection methods.

Figure 12.

Precision-recall curves of the four object detection methods.

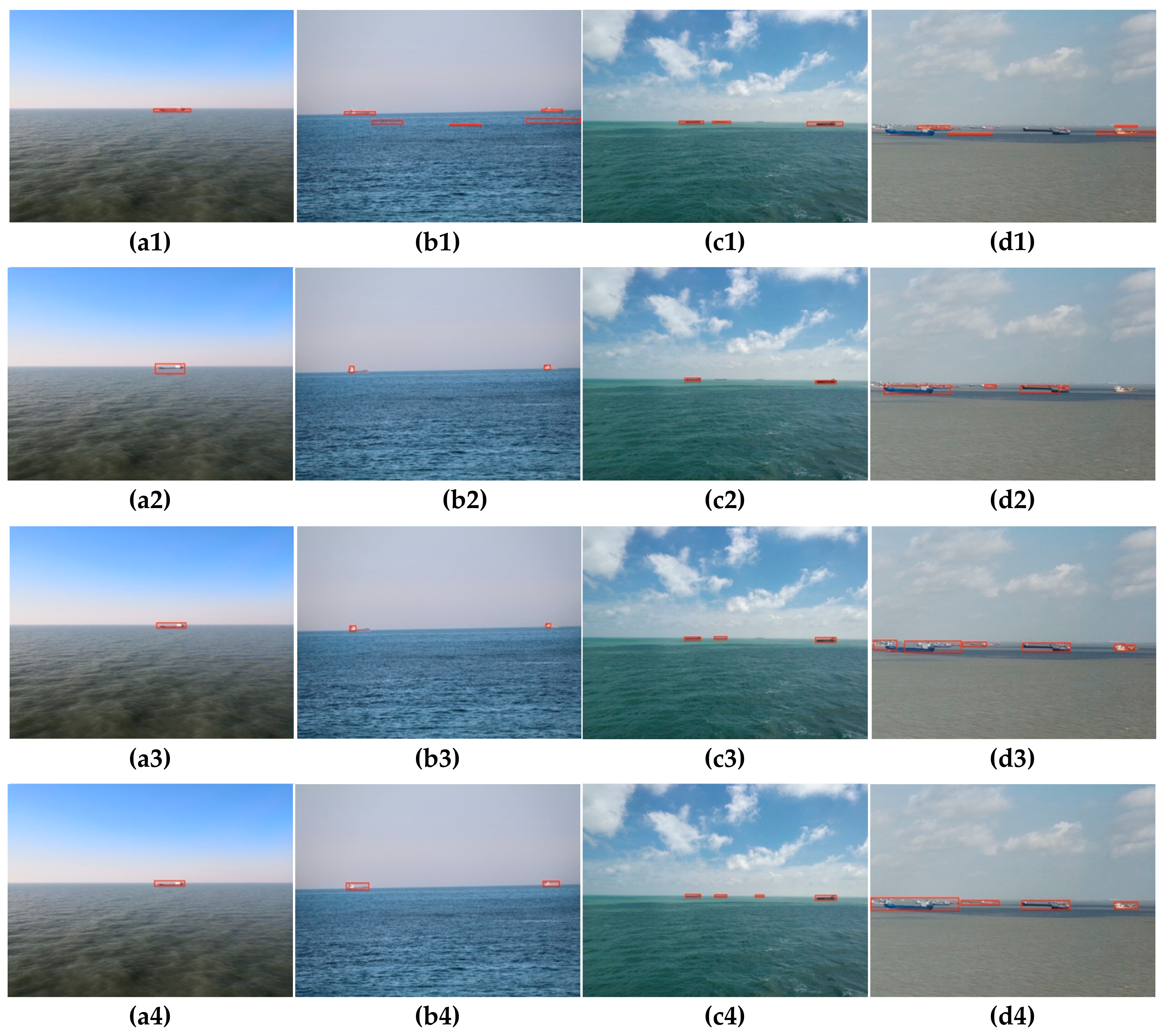

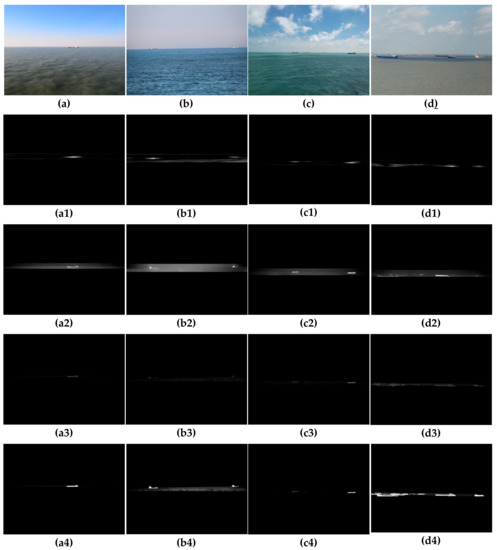

Figure 13 shows the detection performance of the methods on different datasets of the train set. Although the residual spectrum (Figure 13(a1–d1)) obtained by the SR method can detect the ship, it does not accurately indicate the position and shape of the target ship and is prone to false detections. The RBD method achieves better detection results when the number of targets in the image is small, as shown in Figure 13(a2,b2), but it tends to miss targets when there are many, as in Figure 13(c2,d2). The traditional FMBD method (Figure 13(a3–d3)) detects the salient targets well, but the contrast between the target and the background is not strong enough, which hinders subsequent target extraction. The proposed method (Figure 13(a4–d4)) clearly distinguishes the target from the background, accurately detects the shape and position of the target, and gives the best detection performance.

Figure 13.

Saliency detection results by the four methods. (a–d) Original images. (a1–d1) Saliency detection results by SR. (a2–d2) Saliency detection results by RBD. (a3–d3) Saliency detection results by FMBD. (a4–d4) Saliency detection results by the proposed method.

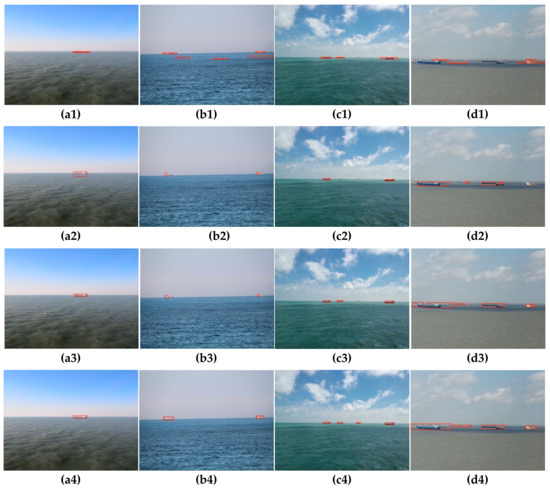

Figure 14 shows the segmentation results of the target ships from the saliency map, where T is 5 times the average intensity of the saliency map and S is set to 100 pixels. Figure 14(a1–d1) shows that the SR method has the worst segmentation result, with many missed and false detections and poor target positioning accuracy. The RBD method performs better than the SR method but still misses some targets, as in Figure 14(c2,d2). The traditional FMBD method gives a good segmentation result, but it still has shortcomings in target positioning accuracy and missed detections, as in Figure 14(a3–c3). The proposed method accurately detects the targets in Figure 14(a4–c4), but it fails to segment the target accurately when the target ship is occluded, as shown in Figure 14(d4). We will focus on this problem in future work.

Figure 14.

Object segmentation results by the four methods. (a1–d1) Object segmentation results by the SR method. (a2–d2) Object segmentation results by the RBD method. (a3–d3) Object segmentation results by the FMBD method. (a4–d4) Object segmentation results by the proposed method.

6.4. Experimental Results of Ship Detection in the Test Set

In this experiment, we verified the proposed target detection method in the test set and compared it with Fefilatyev’s and Zhang’s methods. The precision and recall rates are shown in Table 9. Since Fefilatyev’s method only detects the ship above the SSL, both AP and AR are relatively low. Zhang’s method is relatively good, as the AP and AR reached 59.21% and 73.25%. However, the proposed method achieved the best scores, with an AP and AR of 68.50% and 88.32%, respectively.

Table 9.

Precision and recall scores of the three ship detection methods.

7. Conclusions

This paper proposes a novel maritime target detection algorithm based on the motion attitude of a visible light camera. The camera was fixed on the “YUKUN”, and its motion attitude data were acquired synchronously by the inertial sensor, so that the CR of the SSL in the image could be estimated. Then, the improved local Otsu algorithm was applied to edge detection in the CR, and an improved Hough transform was used to extract the optimal SSL. Finally, the improved FMBD algorithm was used to detect target ships in the vicinity of the SSL. The experimental results show that the proposed algorithm has clear advantages over the other maritime target detection algorithms. In the test set, the detection precision of the SSL reached 99.67%, showing that the algorithm copes effectively with the complex maritime environment. The ship detection precision and recall rates were 68.50% and 88.32%, respectively, so the algorithm improved detection precision while reducing missed ship detections.

The main contributions of this paper are as follows. A camera motion attitude model was constructed by analyzing the six-degrees-of-freedom motion of the camera at sea and was combined with the maritime target detection algorithm, which narrowed the detection range and improved the detection accuracy. The edge detection algorithm was improved: the local Otsu algorithm was used for edge processing in the CR, which coped effectively with the complex maritime environment. The Hough transform algorithm was improved: the length and angle of the SSL were considered simultaneously in the cost function, which effectively improved the accuracy of SSL extraction. Ships in the ROSD were detected by the improved FMBD algorithm: in the post-processing part of the algorithm, opening by morphological reconstruction replaced the previous processing method to smooth the sea–sky background, which effectively improved the saliency detection of the target ships.

Author Contributions

Conceptualization, X.S. and M.P.; Methodology, X.S.; Software, X.S.; Validation, X.S.; Resources, M.P.; Data curation, L.Z.; Writing—original draft preparation, X.S.; Writing—review and editing, X.S., D.W. and L.Z.; Supervision, D.Z.; Funding acquisition, D.W.

Funding

This research was financially supported by the National Natural Science Foundation of China (61772102) and the Fundamental Research Funds for the Central Universities (3132019400).

Conflicts of Interest

The authors declare no conflict of interest.

References

- Porathe, T.; Prison, J.; Yemao, M. Situation awareness in remote control centers for unmanned ships. In Proceedings of the Human Factors in Ship Design & Operation, London, UK, 26–27 February 2014; pp. 93–101.

- Prasad, D.K.; Rajan, D.; Rachmawati, L.; Rajabally, E.; Quek, C. Video Processing from Electro-Optical Sensors for Object Detection and Tracking in a Maritime Environment: A Survey. IEEE Trans. Intell. Transp. Syst. 2017, 18, 1993–2016.

- Yuxing, D.; Weining, L. Detection of sea-sky line in complicated background on grey characteristics. Chin. J. Opt. Appl. Opt. 2010, 3, 253–256.

- Liu, W. Research on the Method of Electronic Image Stabilization for Shipborne Mobile Video; Dalian Maritime University: Dalian, China, 2017; pp. 96–101.

- Wang, B.; Su, Y.; Wan, L. A Sea-Sky Line Detection Method for Unmanned Surface Vehicles Based on Gradient Saliency. Sensors 2016, 16, 543.

- Kim, S.; Lee, J. Small infrared target detection by region-adaptive clutter rejection for sea-based infrared search and track. Sensors 2014, 14, 13210–13242.

- Fefilatyev, S.; Goldgof, D.; Shreve, M. Detection and tracking of ships in open sea with rapidly moving buoy-mounted camera system. Ocean Eng. 2012, 54, 1–12.

- Santhalia, G.K.; Sharma, N.; Singh, S. A Method to Extract Future Warships in Complex Sea-sky background which May Be Virtually Invisible. In Proceedings of the Third Asia International Conference on Modelling & Simulation, Bali, Indonesia, 25–29 May 2009; pp. 533–539.

- Yongshou, D.; Bowen, L.; Ligang, L.; Jiucai, J.; Weifeng, S.; Feng, S. Sea-sky-line detection based on local Otsu segmentation and Hough transform. Opto Electron. Eng. 2018, 45, 180039.

- Zhang, Y.; Li, Q.Z.; Zang, F.N. Ship detection for visual maritime surveillance from non-stationary platforms. Ocean Eng. 2017, 141, 53–63.

- Zeng, W.J.; Wan, L.; Zhang, T.D.; Xu, Y.R. Fast Detection of Sea Line Based on the Visible Characteristics of MaritneImages. Acta Optica Sinica. 2012, 32, 01110011–01110018.

- Boroujeni, N.S.; Etemad, S.; Ali, W.A. Robust horizon detection using segmentation for UAV applications. In Proceedings of the 2012 9th Conference on Computer and Robot Vision, Toronto, ON, Canada, 28–30 May 2012; pp. 28–30.

- Kim, S. High-Speed Incoming Infrared Target Detection by Fusion of Spatial and Temporal Detectors. Sensors 2015, 15, 7267–7293.

- Zeng, W.J.; Wan, L.; Zhang, T.D.; Xu, Y.R. Fast detection of weak targets in complex sea-sky background. Opt. Precis. Eng. 2012, 20, 403–412.

- Fang, J.; Feng, S.; Feng, Y. Image algorithm of ship detection for surface vehicle. Trans. Beijing Inst. Technol. 2017, 37, 1235–1240.

- Lou, J.; Zhu, W.; Wang, H.; Ren, M. Small Target Detection Combining Regional Stability and Saliency in a Color Image. Multimed. Tools Appl. 2017, 76, 14781–14798.

- Agrafiotis, P.; Doulamis, A.; Doulamis, N.; Georgopoulos, A. Multi-sensor target detection and tracking system for sea ground borders surveillance. In Proceedings of the 7th International Conference on PErvasive Technologies Related to Assistive Environments, Rhodes, Greece, 27–30 May 2014.

- Liu, Z.; Zhou, F.; Bai, X.; Yu, X. Automatic detection of ship target and motion direction in visual images. Int. J. Electron. 2013, 100, 94–111.

- Ebadi, S.E.; Ones, V.G.; Izquierdo, E. Efficient background subtraction with low-rank and sparse matrix decomposition. In Proceedings of the 2015 IEEE International Conference on Image Processing, Quebec City, QC, Canada, 27–30 September 2015.

- Westall, P.; Ford, J.J.; O’Shea, P.; Hrabar, S. Evaluation of maritime vision techniques for aerial search of humans in maritime environments. In Proceedings of the Digital Image Computing: Techniques and Applications, Canberra, Australia, 1–3 December 2008; pp. 176–183.

- Fefilatyev, S. Algorithms for Visual Maritime Surveillance with Rapidly Moving Camera. Ph.D. Thesis, University of South Florida, Tampa, FL, USA, 2012.

- Kumar, S.S.; Selvi, M.U. Sea objects detection using color and texture classification. Int. J. Comput. Appl. Eng. Sci. 2011, 1, 59–63.

- Selvi, M.U.; Kumar, S.S. Sea object detection using shape and hybrid color texture classification. In Trends in Computer Science, Engineering and Information Technology; Springer: Berlin/Heidelberg, Germany, 2011; pp. 19–31.

- Frost, D.; Tapamo, J.R. Detection and tracking of moving objects in a maritime environment using level set with shape priors. J. Image Video Process. 2013, 2013, 42.

- Loomans, M.; de With, P.; Wijnhoven, R. Robust automatic ship tracking in harbors using active cameras. In Proceedings of the 20th IEEE International Conference on Image Processing, Melbourne, Australia, 15–18 September 2013; pp. 4117–4121.

- Ren, Y.; Zhu, C.; Xiao, S. Small Object Detection in Optical Remote Sensing Images via Modified Faster R-CNN. Appl. Sci. 2018, 8, 813.

- Yang, X.; Sun, H.; Fu, K.; Yang, J.; Sun, X.; Yan, M.; Guo, Z. Automatic Ship Detection in Remote Sensing Images from Google Earth of Complex Scenes Based on Multiscale Rotation Dense Feature Pyramid Networks. Remote Sens. 2018, 10, 132.

- Zhang, R.; Yao, J.; Zhang, K.; Feng, C.; Zhang, J. S-CNN-based ship detection from high-resolution remote sensing images. In Proceedings of the International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences, Prague, Czech Republic, 18 July 2016; pp. 423–430.

- Biondi, F. Low-Rank Plus Sparse Decomposition and Localized Radon Transform for Ship-Wake Detection in Synthetic Aperture Radar Images. IEEE Geosci. Remote Sens. Lett. 2018, 15, 117–121.

- Graziano, M.D.; Grasso, M.; D’Errico, M. Performance Analysis of Ship Wake Detection on Sentinel-1 SAR Images. Remote Sens. 2017, 9, 1107.

- Biondi, F.; Addabbo, P.; Orlando, D.; Clemente, C. Micro-Motion Estimation of Maritime Targets Using Pixel Tracking in Cosmo-Skymed Synthetic Aperture Radar Data—An Operative Assessment. Remote Sens. 2019, 11, 1637.

- Zhang, Z. A flexible new technique for camera calibration. IEEE Trans. Pattern Anal. Mach. Intell. 2000, 22, 1330–1334.

- Guo, L.; Xu, Y.C.; Li, K.Q.; Lian, X.M. Study on real-time distance detection based on monocular vision technique. J. Image Graph. 2016, 211, 74–81.

- Fernandes, L.A.F.; Oliveira, M.M. Real-time line detection through an improved Hough transform voting scheme. Pattern Recognit. 2008, 41, 299–314.

- Zhang, J.; Sclaroff, S.; Lin, Z.; Shen, X.; Price, B.; Mech, R. Minimum Barrier Salient Object Detection at 80 FPS. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 1404–1412.

- Wei, Y.; Wen, F.; Zhu, W.; Sun, J. Geodesic saliency using background priors. In ECCV; Springer: Berlin/Heidelberg, Germany, 2012; pp. 29–42.

- Zhu, W.; Liang, S.; Wei, Y.; Sun, J. Saliency optimization from robust background detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 2814–2821.

- Jiang, B.; Zhang, L.; Lu, H.; Yang, C.; Yang, M.-H. Saliency detection via absorbing markov chain. In Proceedings of the IEEE International Conference on Computer Vision, Sydney, Australia, 1–8 December 2013.

- Yang, C.; Zhang, L.; Lu, H.; Ruan, X.; Yang, M.-H. Saliency detection via graph-based manifold ranking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Portland, OR, USA, 23–28 June 2013; pp. 3166–3173.

- Xie, S.; Tu, Z. Holistically-Nested Edge Detection. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 3–18.

- Hou, X.; Zhang, L. Saliency Detection: A Spectral Residual Approach. In Proceedings of the 2007 IEEE Conference on Computer Vision and Pattern Recognition, Minneapolis, MN, USA, 17 June 2007; pp. 1–8.

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).