Recognition of Dorsal Hand Vein Based Bit Planes and Block Mutual Information

Abstract

1. Introduction

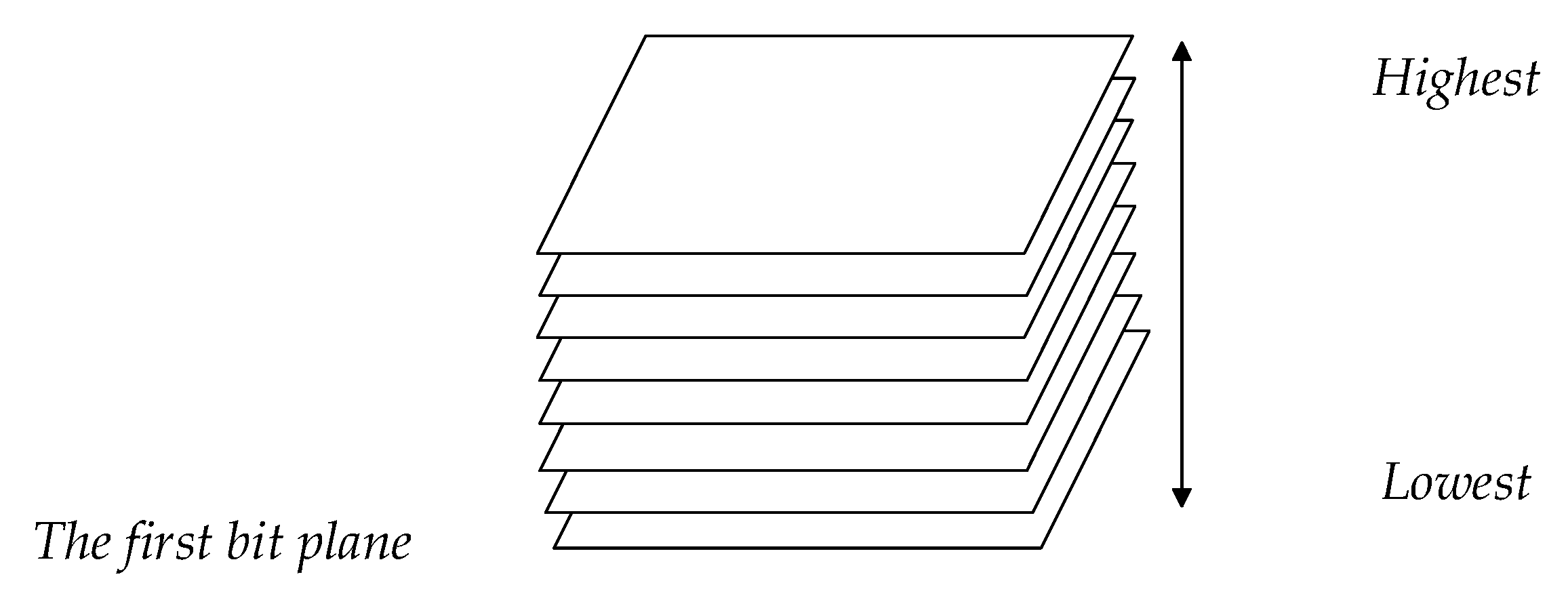

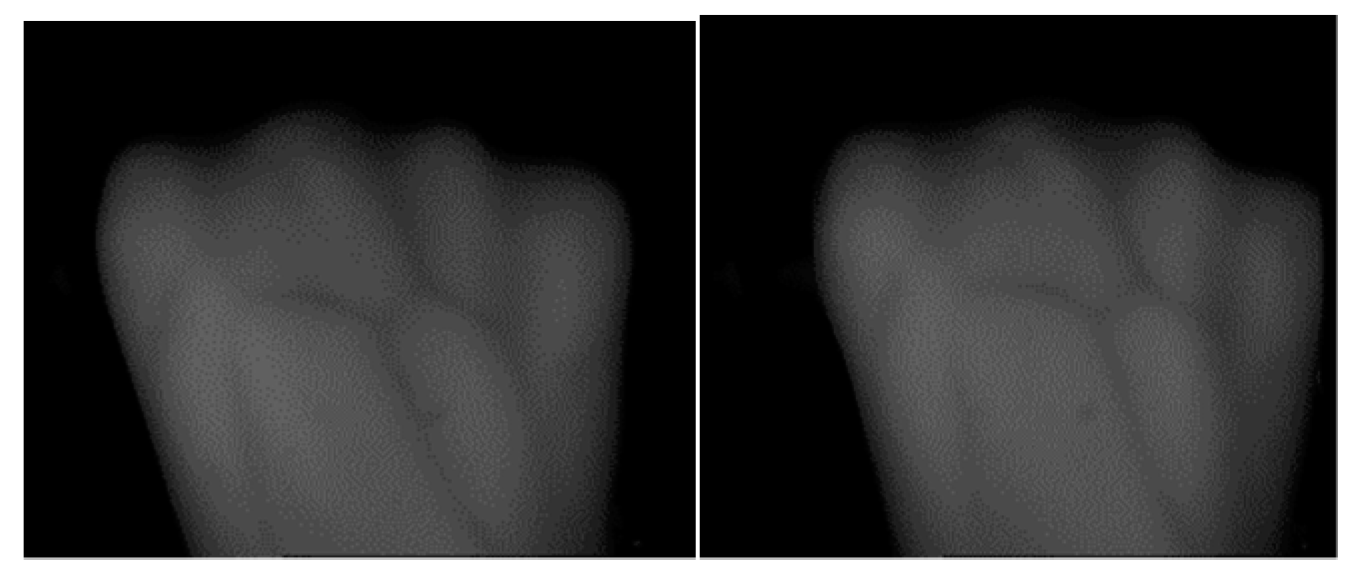

2. Bit Plane Generation

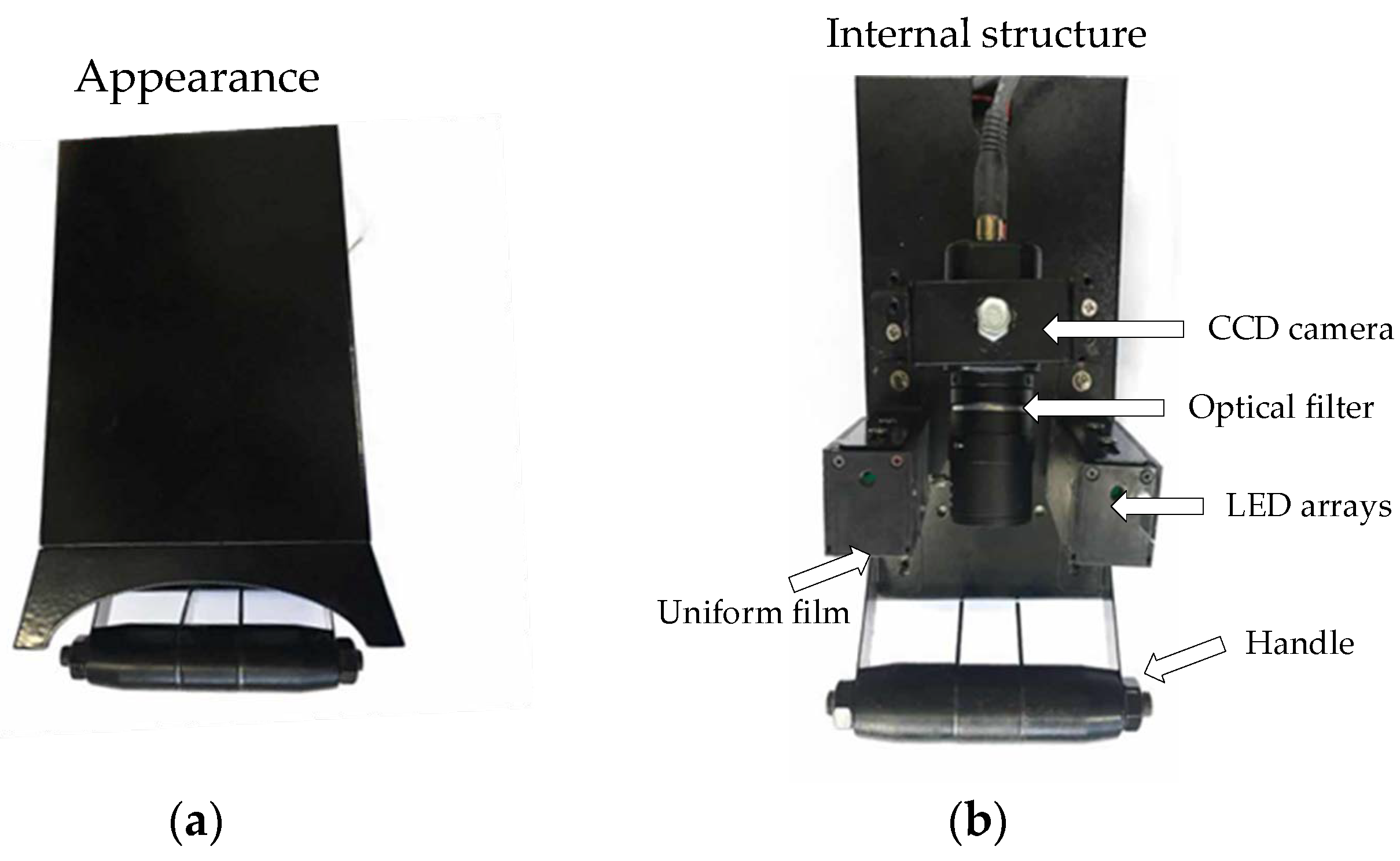

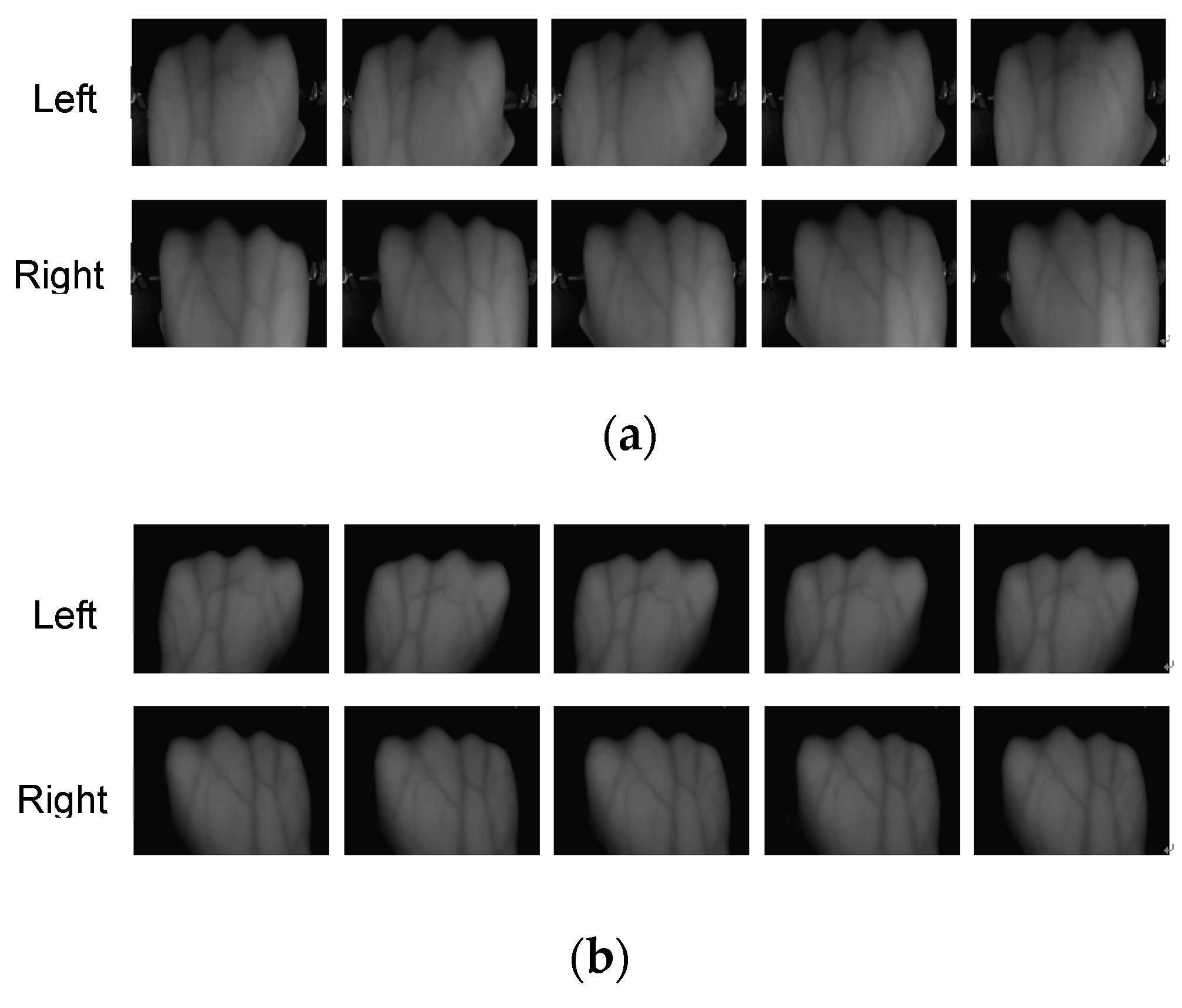

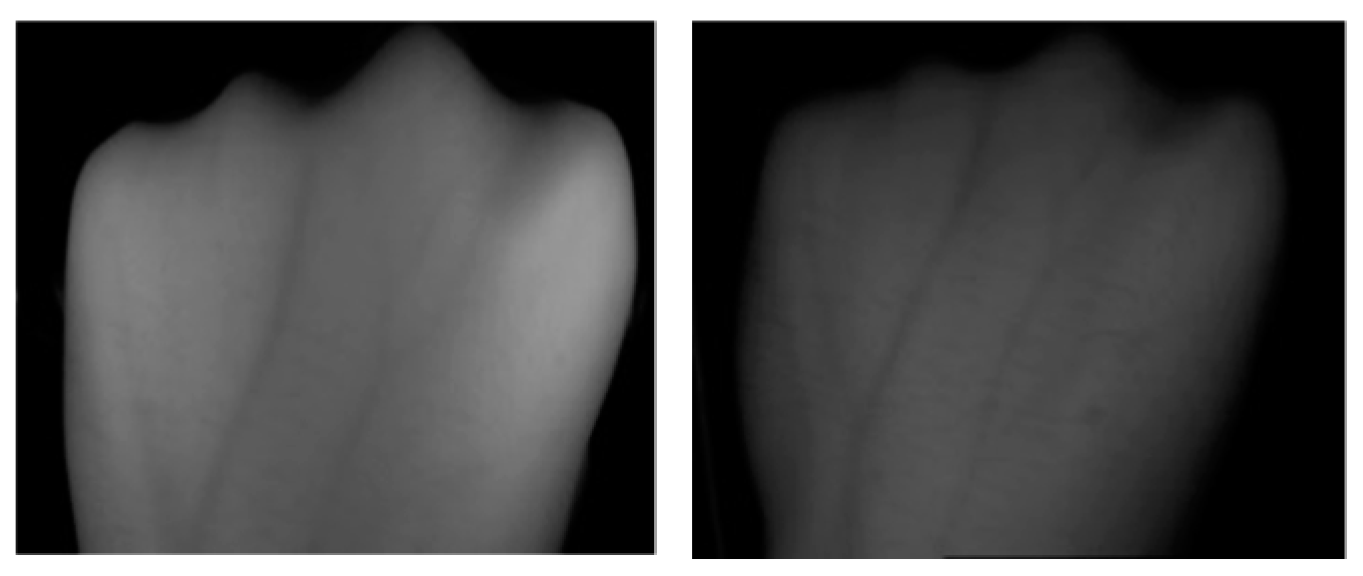

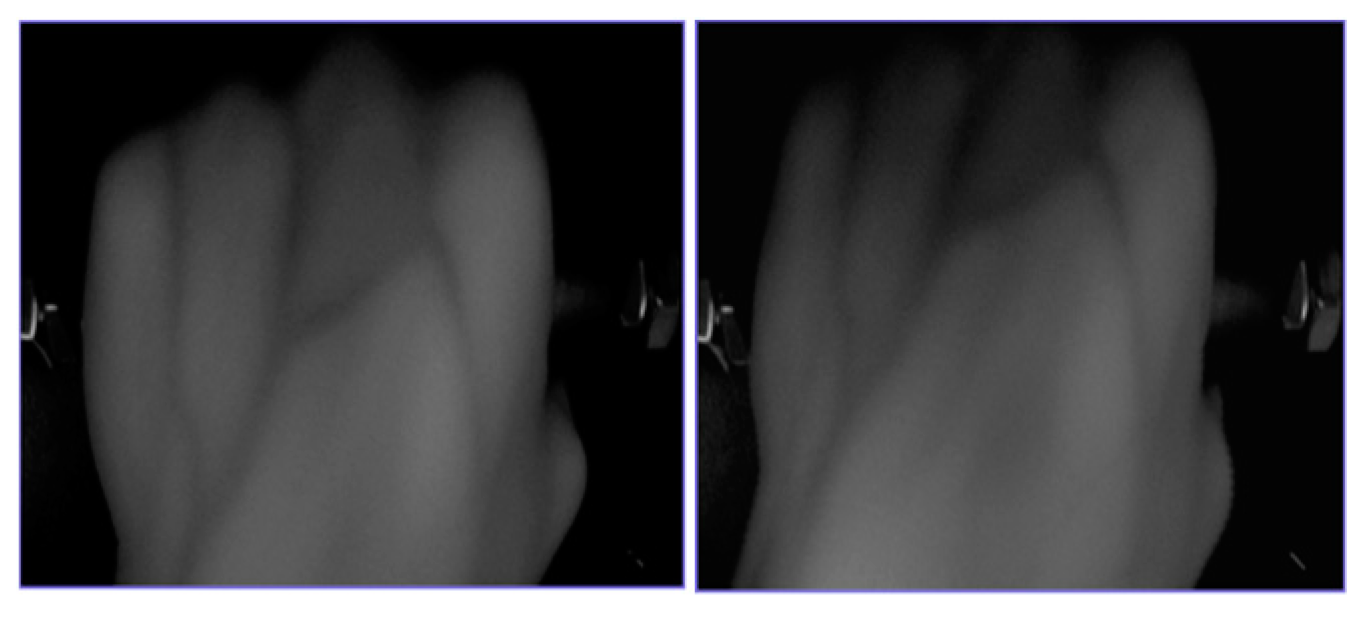
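As a minimal sketch of bit-plane decomposition in general (not necessarily the exact procedure used in this paper), an 8-bit grayscale image can be split into eight binary planes, one per bit position. The function name `bit_planes` and the convention that index 0 is the least significant bit are assumptions made for illustration.

```python
import numpy as np


def bit_planes(image: np.ndarray) -> np.ndarray:
    """Split an 8-bit grayscale image into its eight binary bit planes.

    Returns an array of shape (8, H, W). Index 0 holds the least
    significant bit and index 7 the most significant bit (an assumed
    convention, chosen here for illustration).
    """
    if image.dtype != np.uint8:
        raise ValueError("expected an 8-bit (uint8) grayscale image")
    # One shift amount per plane, broadcast over the image dimensions.
    shifts = np.arange(8, dtype=np.uint8).reshape(8, 1, 1)
    # Shift each pixel right by k bits and keep only the lowest bit.
    return (image[np.newaxis, ...] >> shifts) & 1


# Tiny example image; real input would be a preprocessed vein image.
example = np.array([[0, 255], [5, 128]], dtype=np.uint8)
planes = bit_planes(example)
```

The higher-order planes usually carry most of the visible structure of an image, while the lowest planes are dominated by noise, which is the usual motivation for selecting a subset of planes.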

2.1. Image Acquisition of Dorsal Hand Vein

2.2. Image Preprocessing

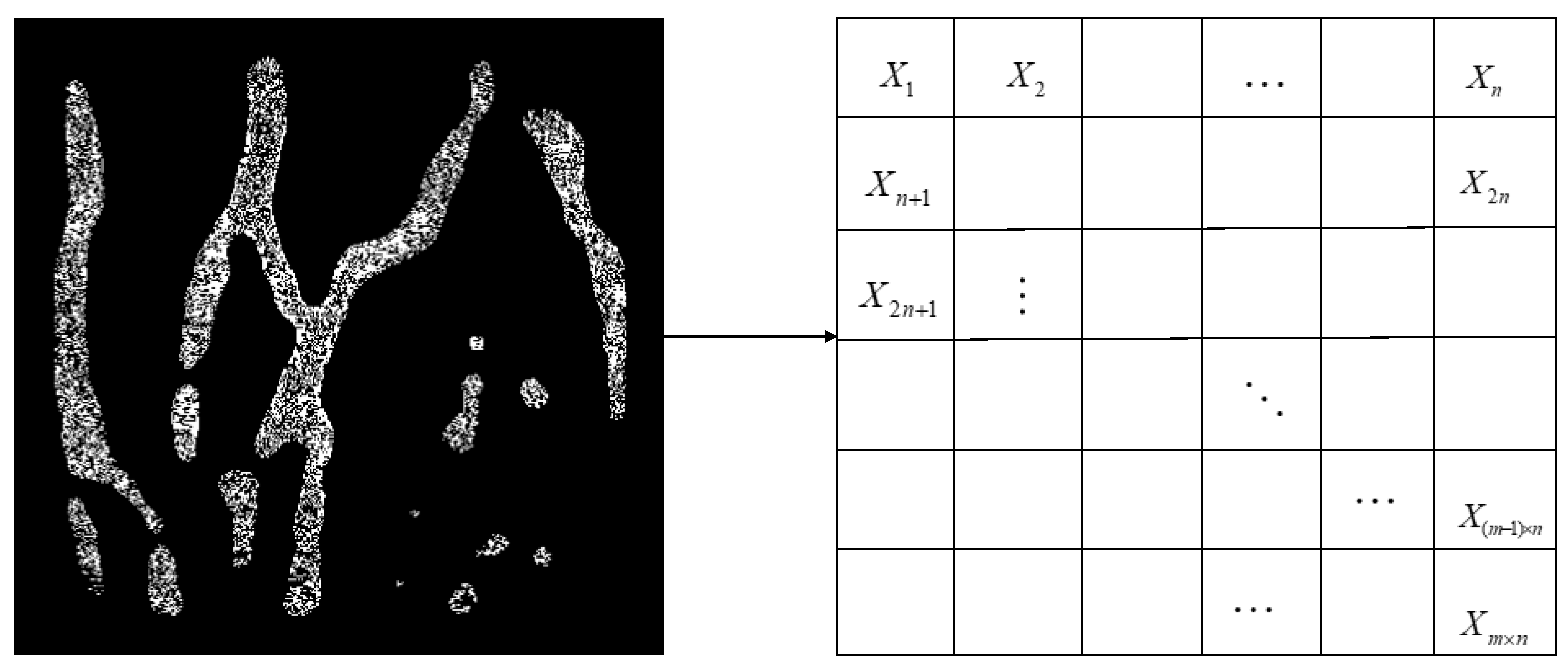

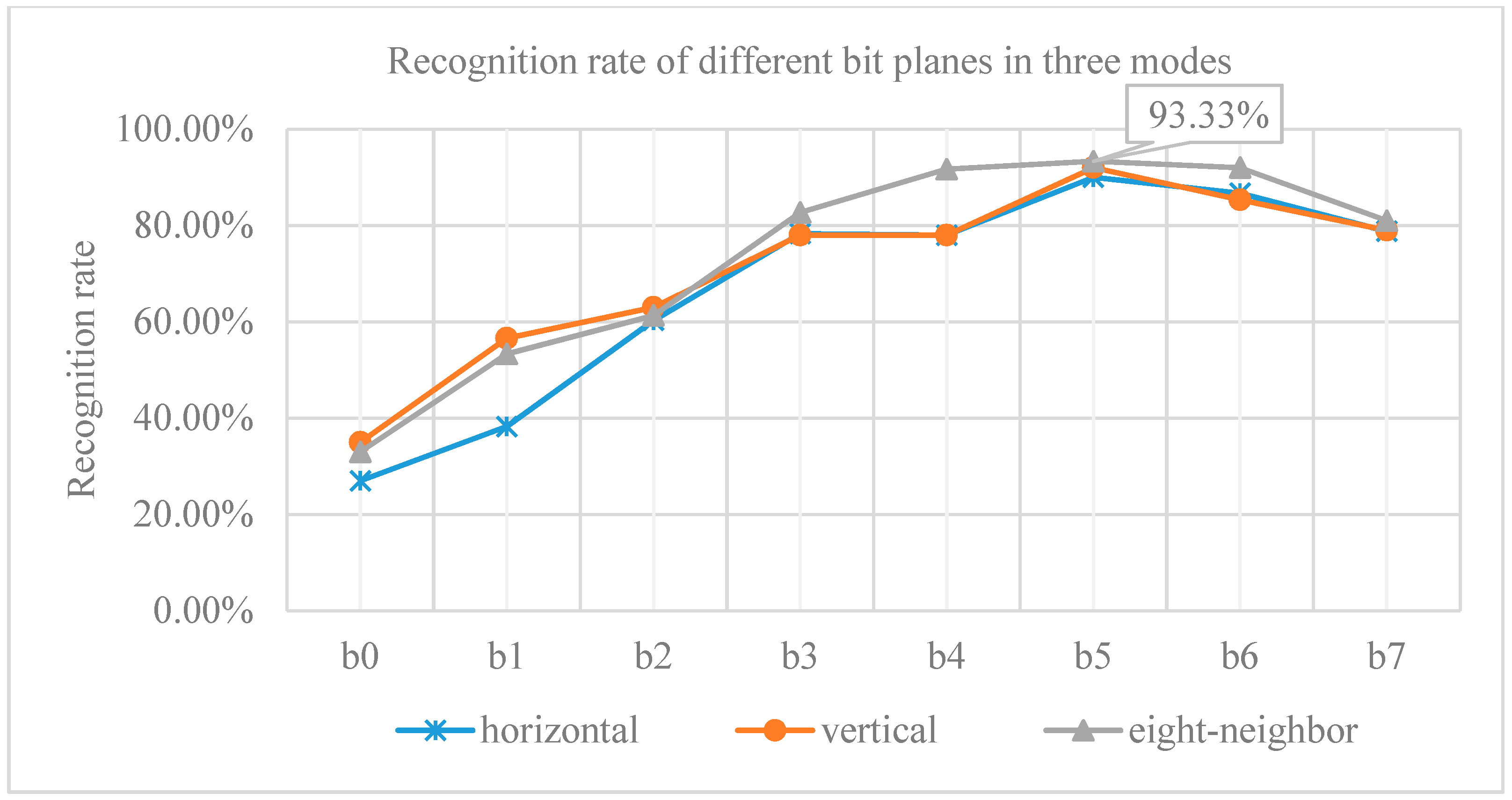

2.3. Selection of Bit Plane

3. Hand Vein Recognition Based on Block Mutual Information

3.1. Mutual Information Calculation

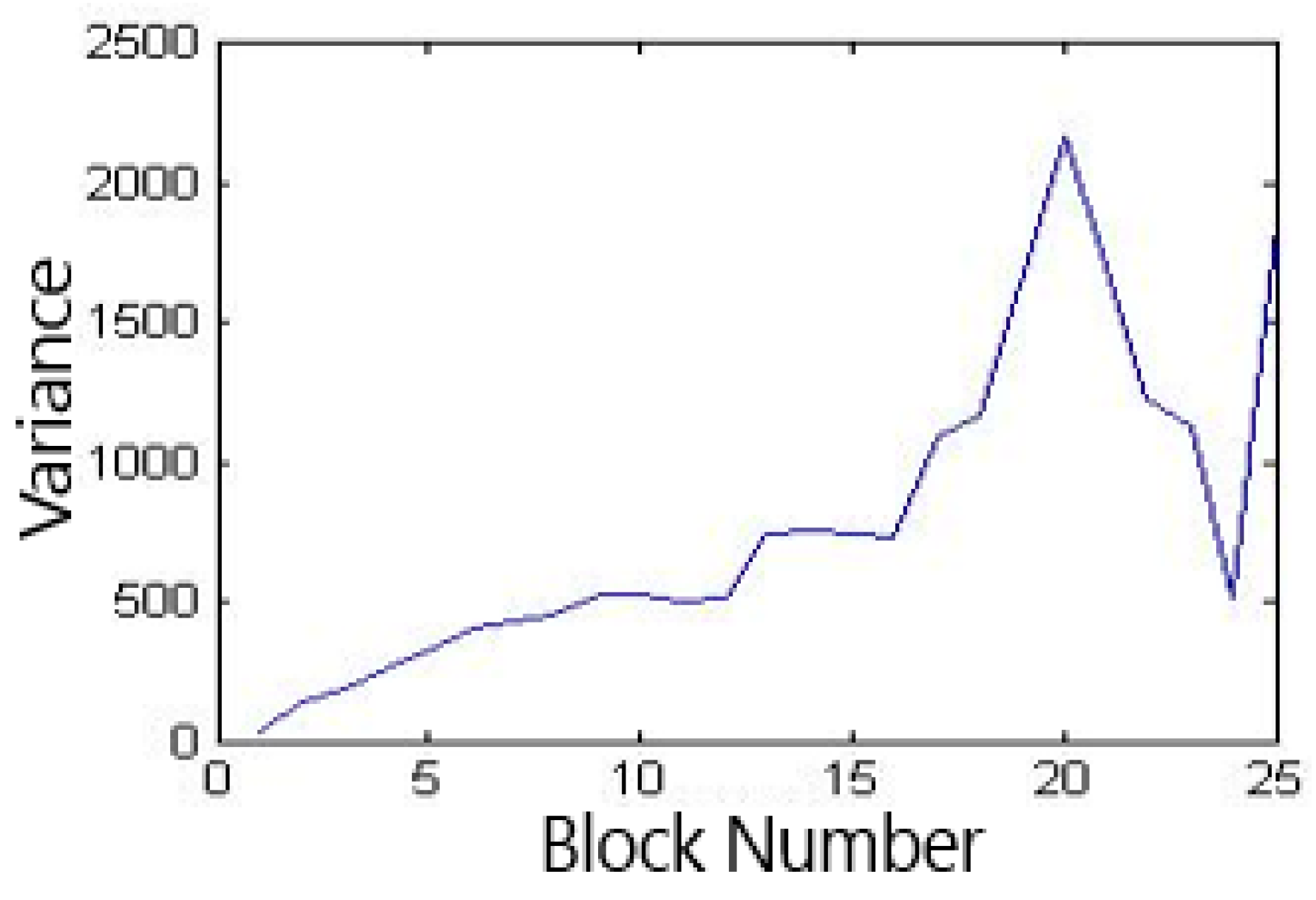
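For orientation, the standard definition of mutual information between two images $A$ and $B$ is $I(A;B) = \sum_{a,b} p(a,b)\,\log_2 \frac{p(a,b)}{p(a)\,p(b)}$, where $p(a,b)$ is the joint gray-level distribution and $p(a)$, $p(b)$ are its marginals. The sketch below estimates it from a joint histogram; the function name `mutual_information`, the bin count, and the use of base-2 logarithms are assumptions for illustration and may differ from the formulation used in this paper.

```python
import numpy as np


def mutual_information(a: np.ndarray, b: np.ndarray, bins: int = 256) -> float:
    """Estimate the mutual information (in bits) of two equal-sized images."""
    if a.shape != b.shape:
        raise ValueError("images must have the same shape")
    # Joint histogram of co-located gray levels, assuming values in [0, 255].
    joint, _, _ = np.histogram2d(
        a.ravel(), b.ravel(), bins=bins, range=[[0, 256], [0, 256]]
    )
    p_ab = joint / joint.sum()             # joint distribution p(a, b)
    p_a = p_ab.sum(axis=1, keepdims=True)  # marginal p(a), shape (bins, 1)
    p_b = p_ab.sum(axis=0, keepdims=True)  # marginal p(b), shape (1, bins)
    nonzero = p_ab > 0                     # skip empty cells to avoid log(0)
    product = p_a @ p_b                    # outer product p(a) * p(b)
    return float(np.sum(p_ab[nonzero] * np.log2(p_ab[nonzero] / product[nonzero])))


# Two gray levels in equal proportion: the image shares 1 bit with itself
# and nothing with a constant image.
half = np.array([[0, 0], [255, 255]], dtype=np.uint8)
mi_self = mutual_information(half, half)
mi_const = mutual_information(half, np.zeros_like(half))
```

Applied to a pair of corresponding blocks rather than to whole images, the same function would give a block-level value.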

3.2. Optimal Number of Blocks

3.3. Block-based Mutual Information Feature Vector Calculation Mode

3.4. Classification Identification

4. Experiment Analysis

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

| Mode | Gray-Normalized | Binary | Gray Image Retaining Only the Contour |
|---|---|---|---|
| Horizontal | 48.30% | 43.30% | 46.33% |
| Vertical | 86.60% | 83.30% | 83.30% |
| Eight-neighborhood | 88.00% | 86.70% | 89.67% |

| Algorithm | Recognition Rate (Single-Device) | Recognition Rate (Cross-Device) |
|---|---|---|
| Ours | >98% | 93.33% |
| LBP | 93.50% | 73.42% |
| PCA | 90.40% | 54.83% |
| SIFT | 97.50% | 86.60% |
| Gaussian distribution based random key-point generation (GDRKG) | 92.30% | 71.38% |
| Improved SIFT | 98.63% | 90.8% |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Wang, Y.; Cao, H.; Jiang, X.; Tang, Y. Recognition of Dorsal Hand Vein Based Bit Planes and Block Mutual Information. Sensors 2019, 19, 3718. https://doi.org/10.3390/s19173718
