Mastcam Image Resolution Enhancement with Application to Disparity Map Generation for Stereo Images with Different Resolutions

Abstract

1. Introduction

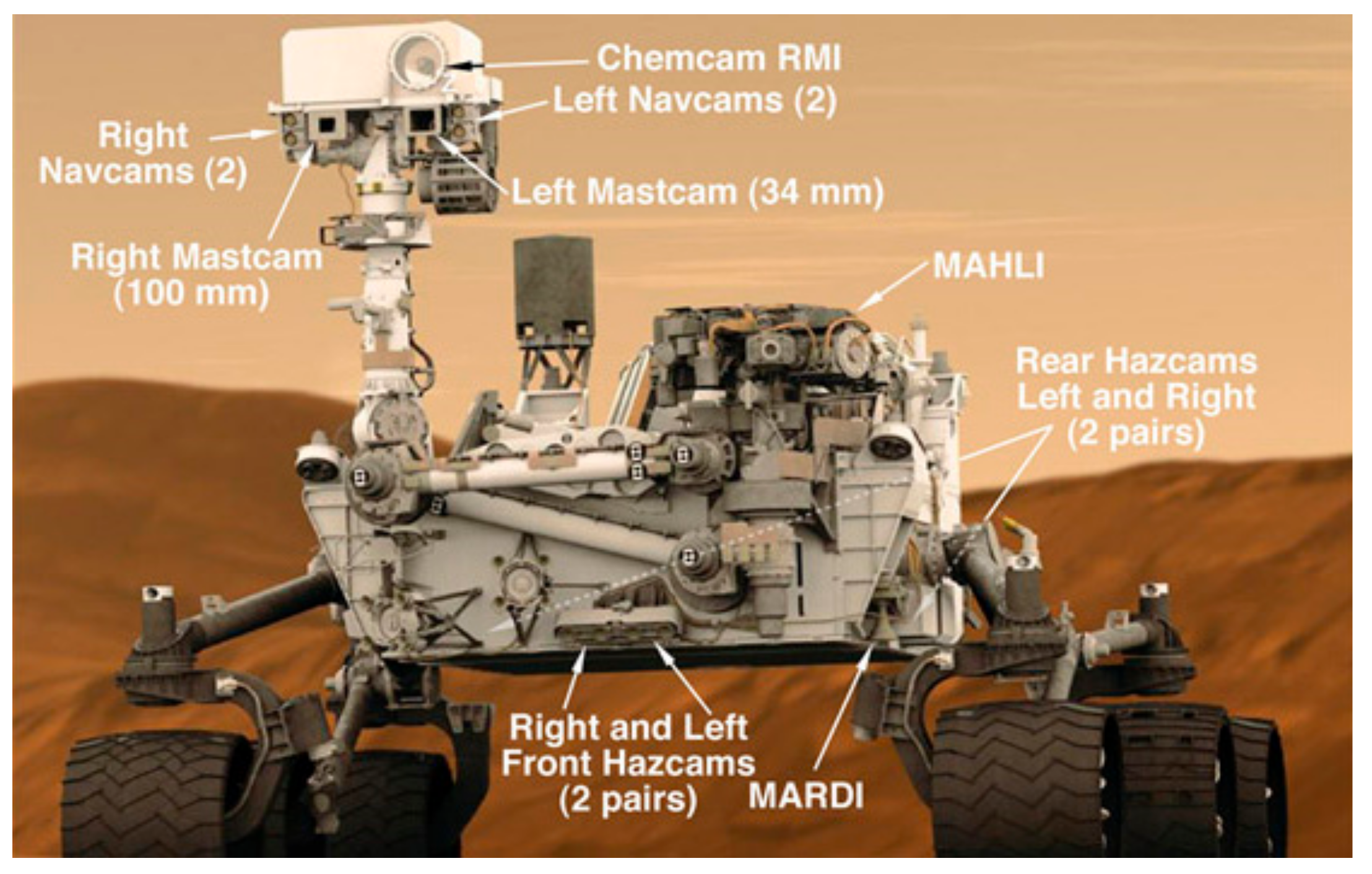

2. Mastcam Imagery for High Resolution Stereo Image and Disparity Map Generation

3. Image Enhancement Methods

3.1. Bicubic Interpolation

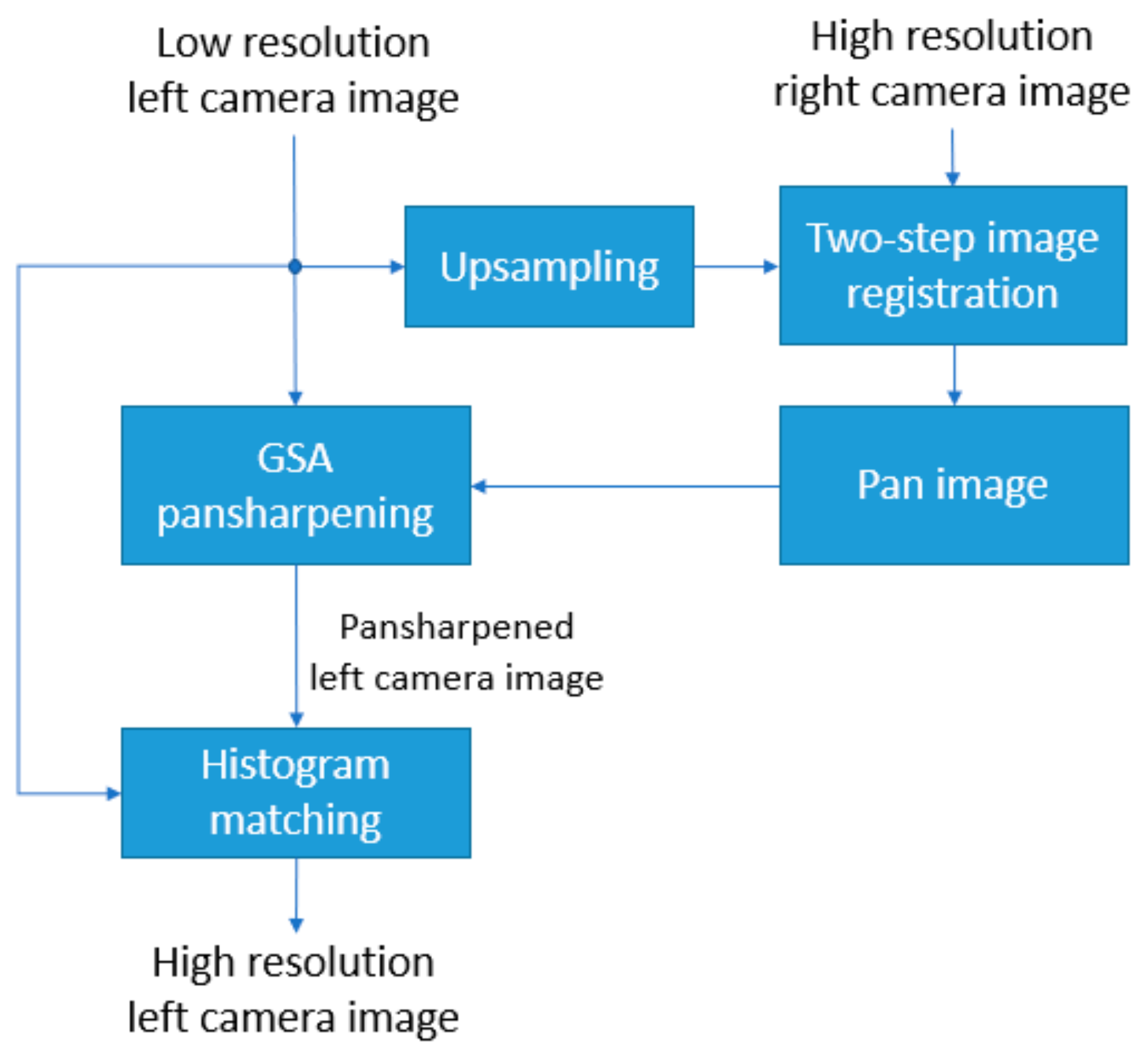

3.2. Pansharpening-Based Image Enhancement Method

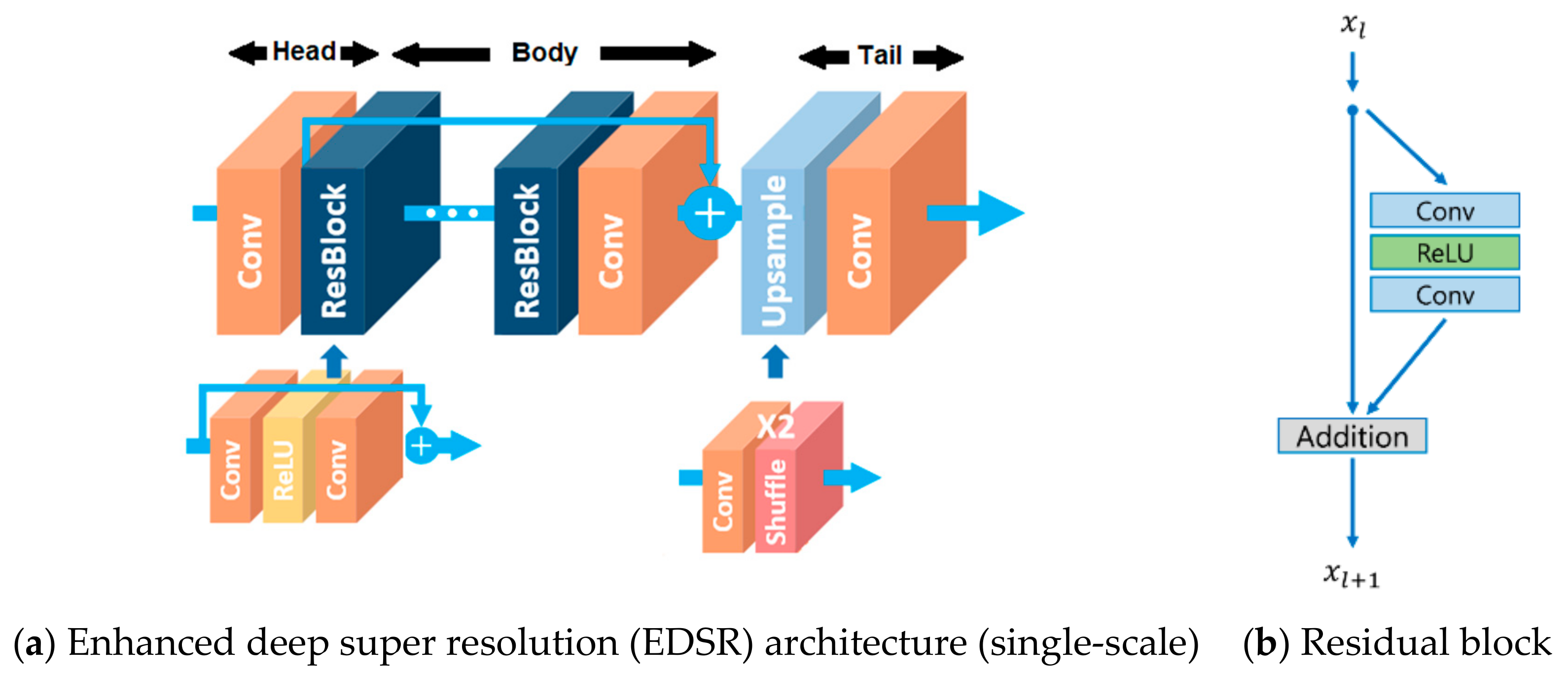

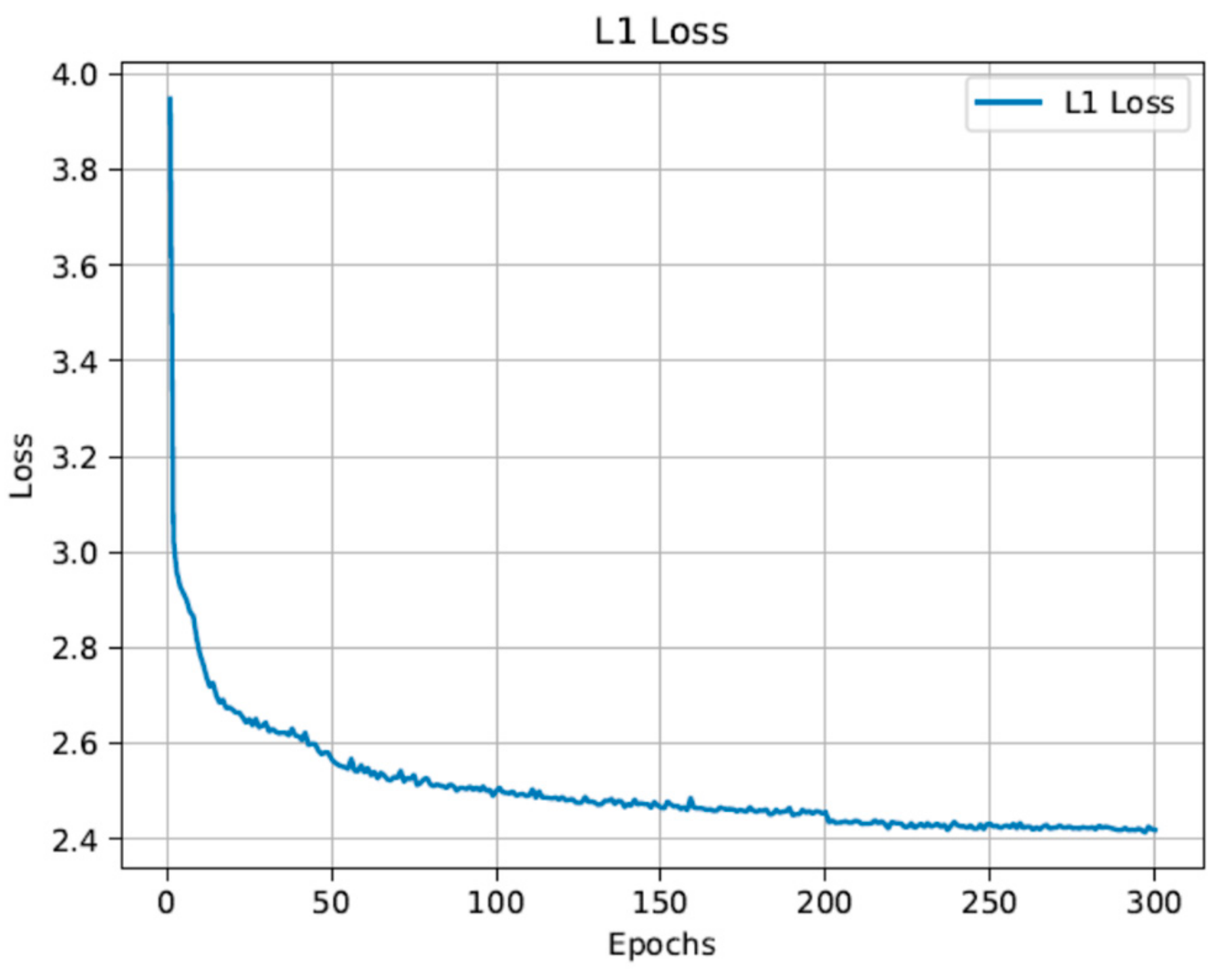

3.3. EDSR (Deep Learning-Based Super Resolution Method)

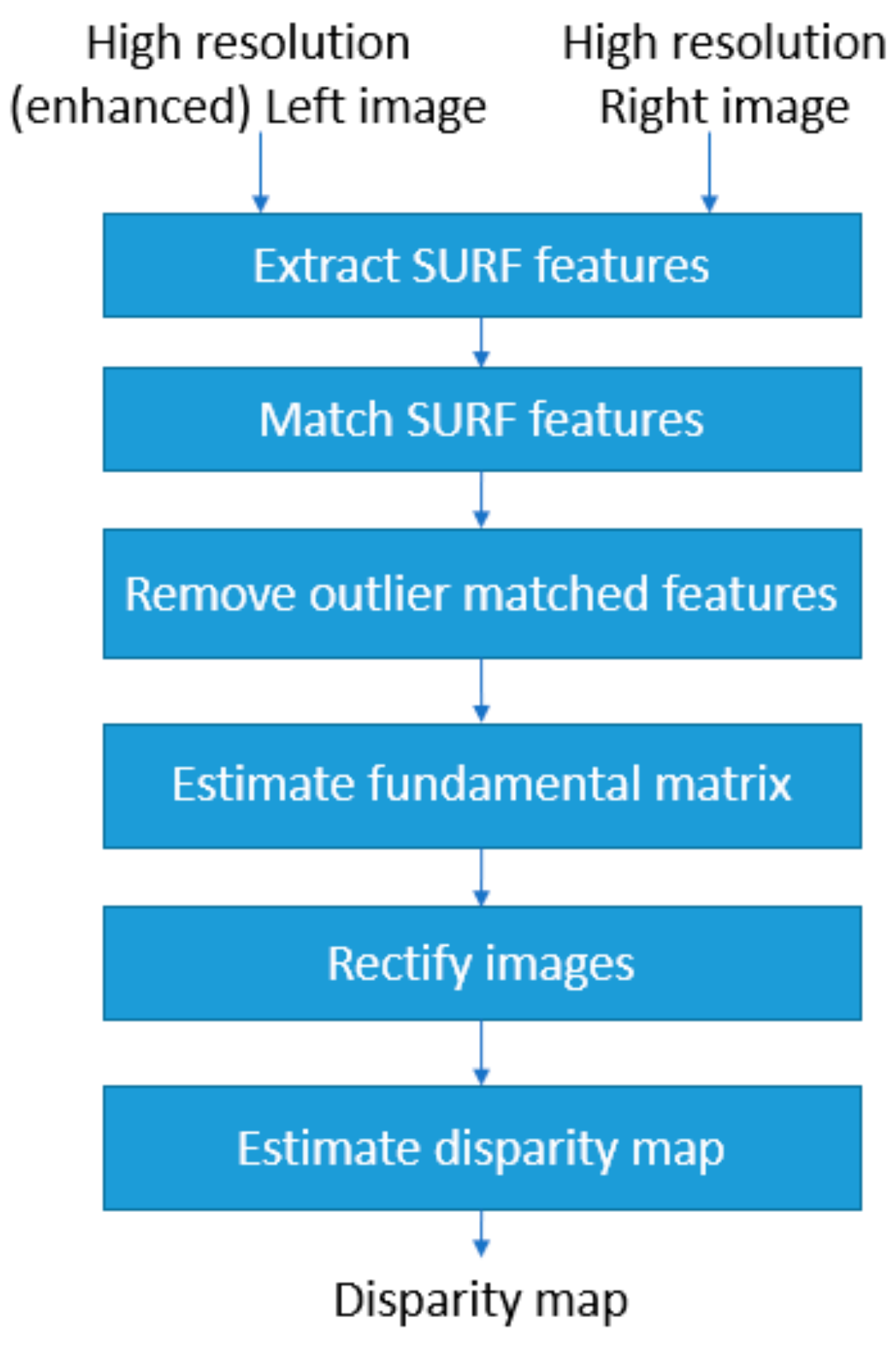

4. Disparity Map Estimation

5. Results and Analyses for a Middlebury Stereo Image Pair

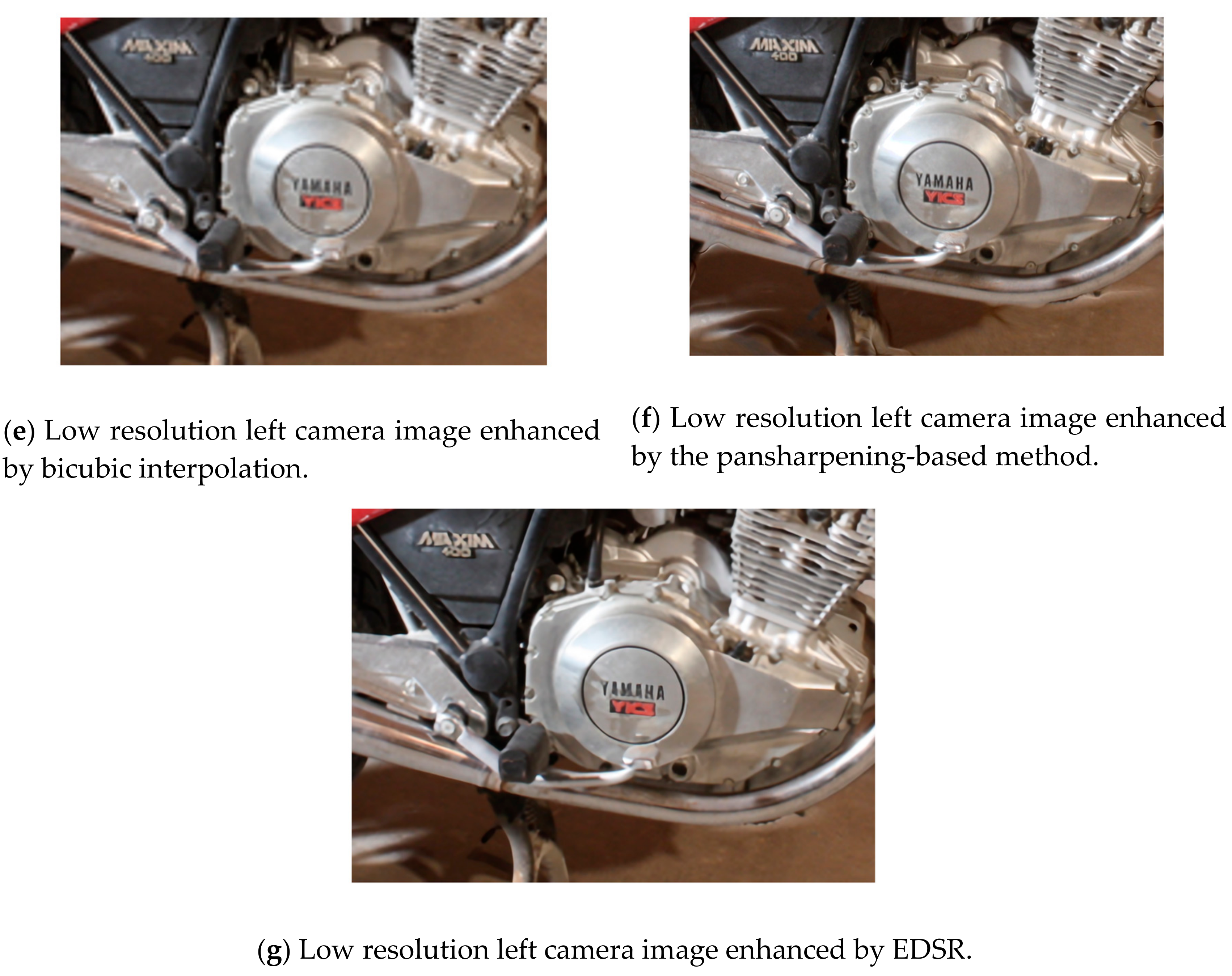

5.1. Low-Resolution Left Camera Image Enhancements

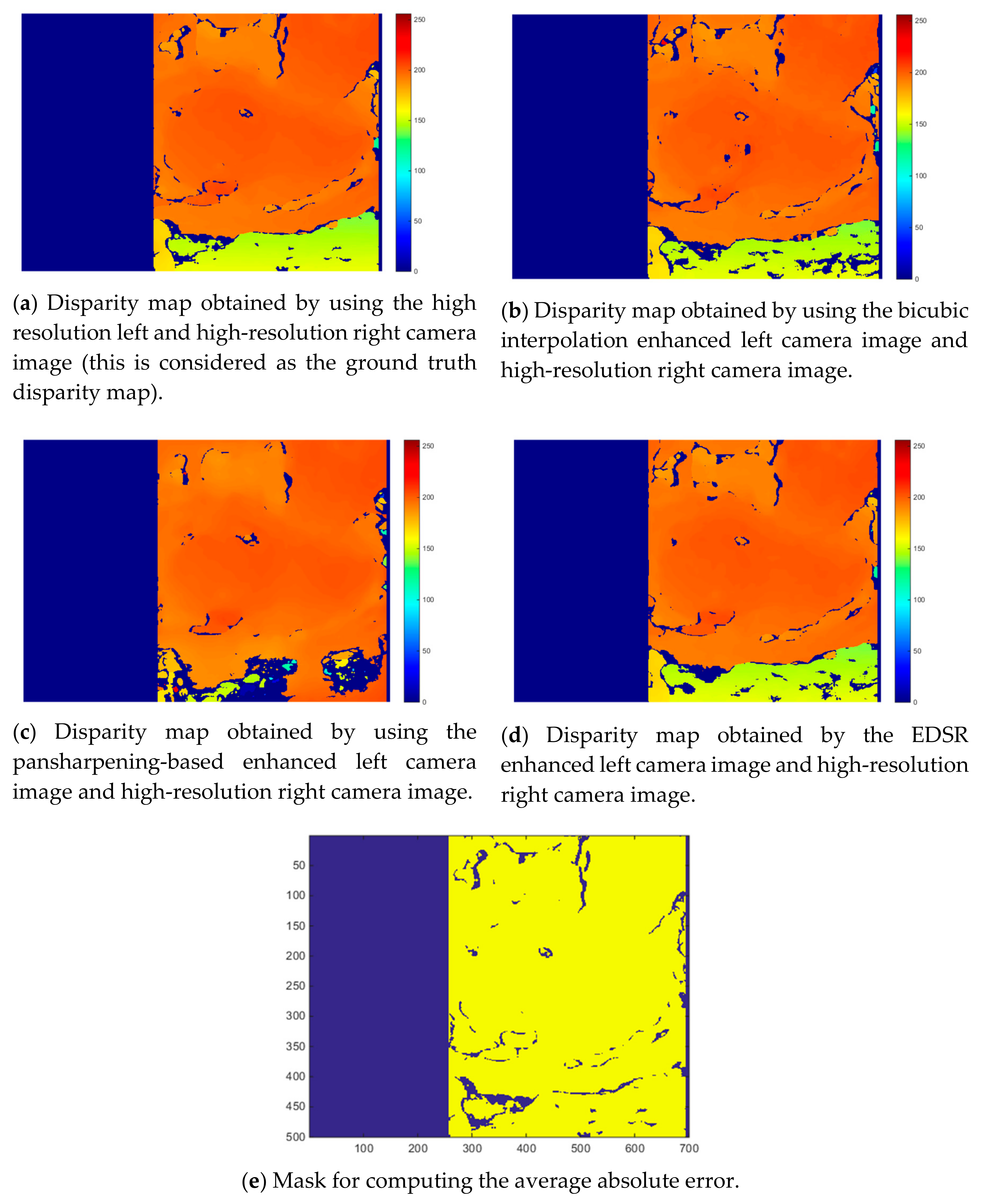

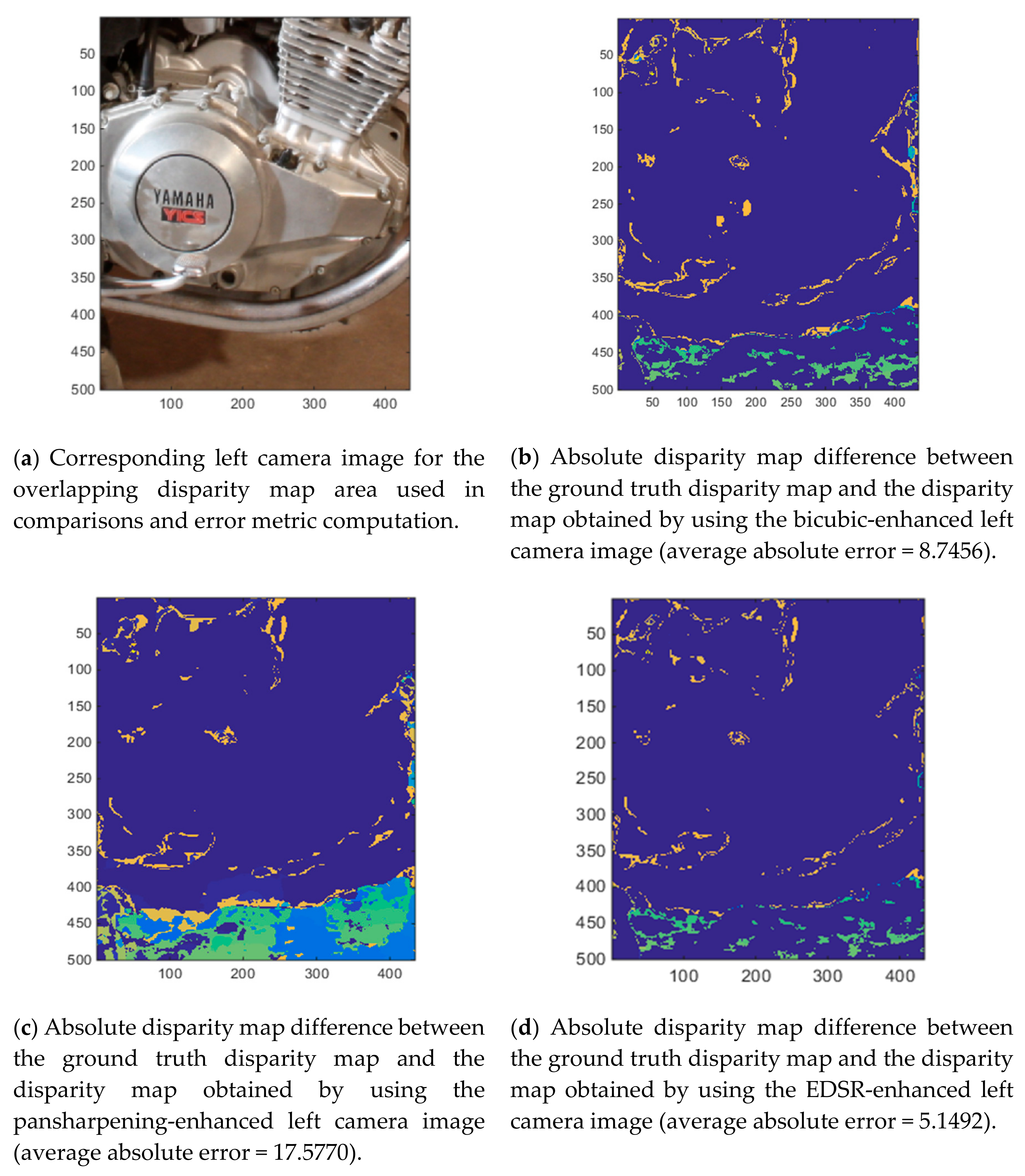

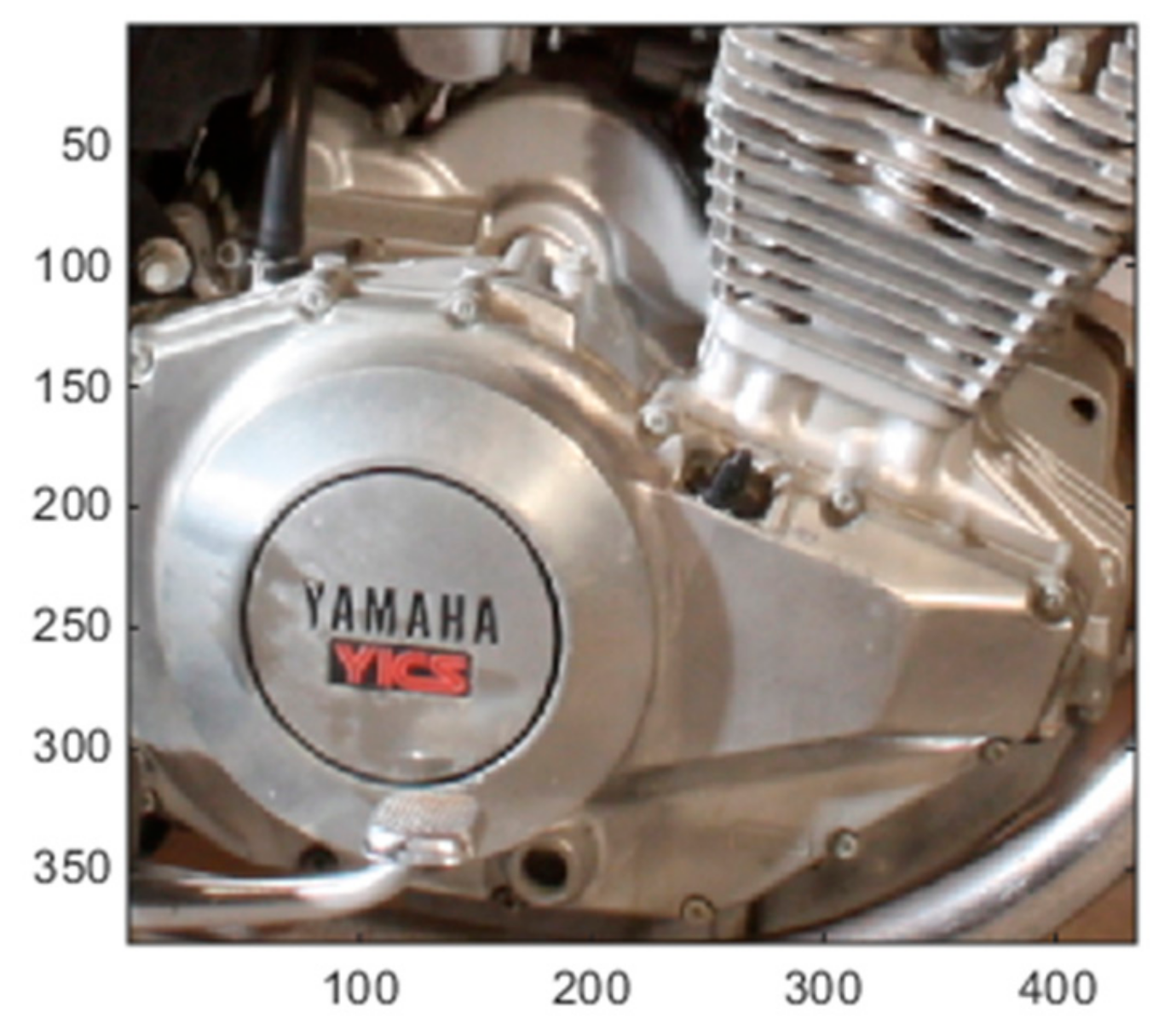

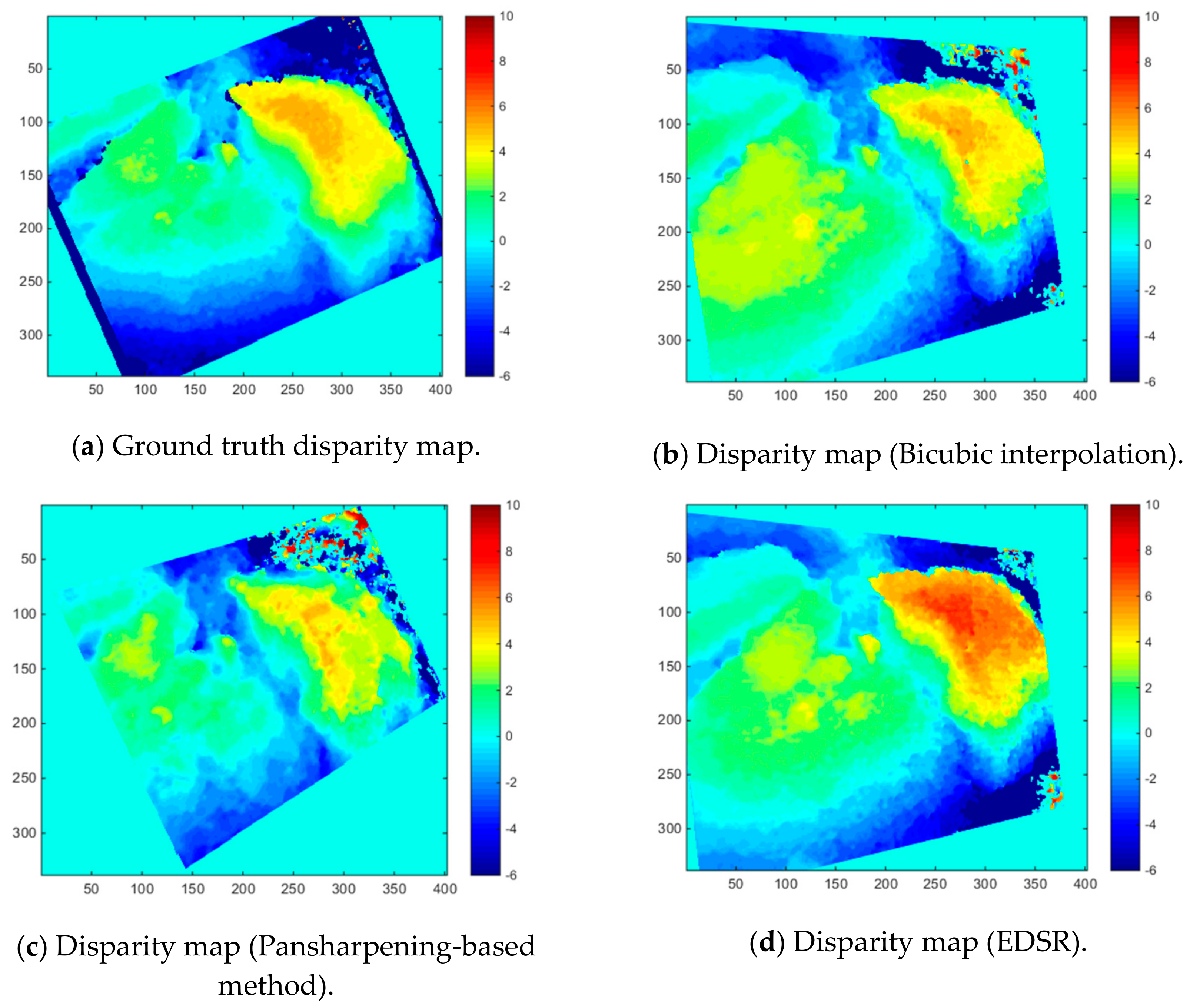

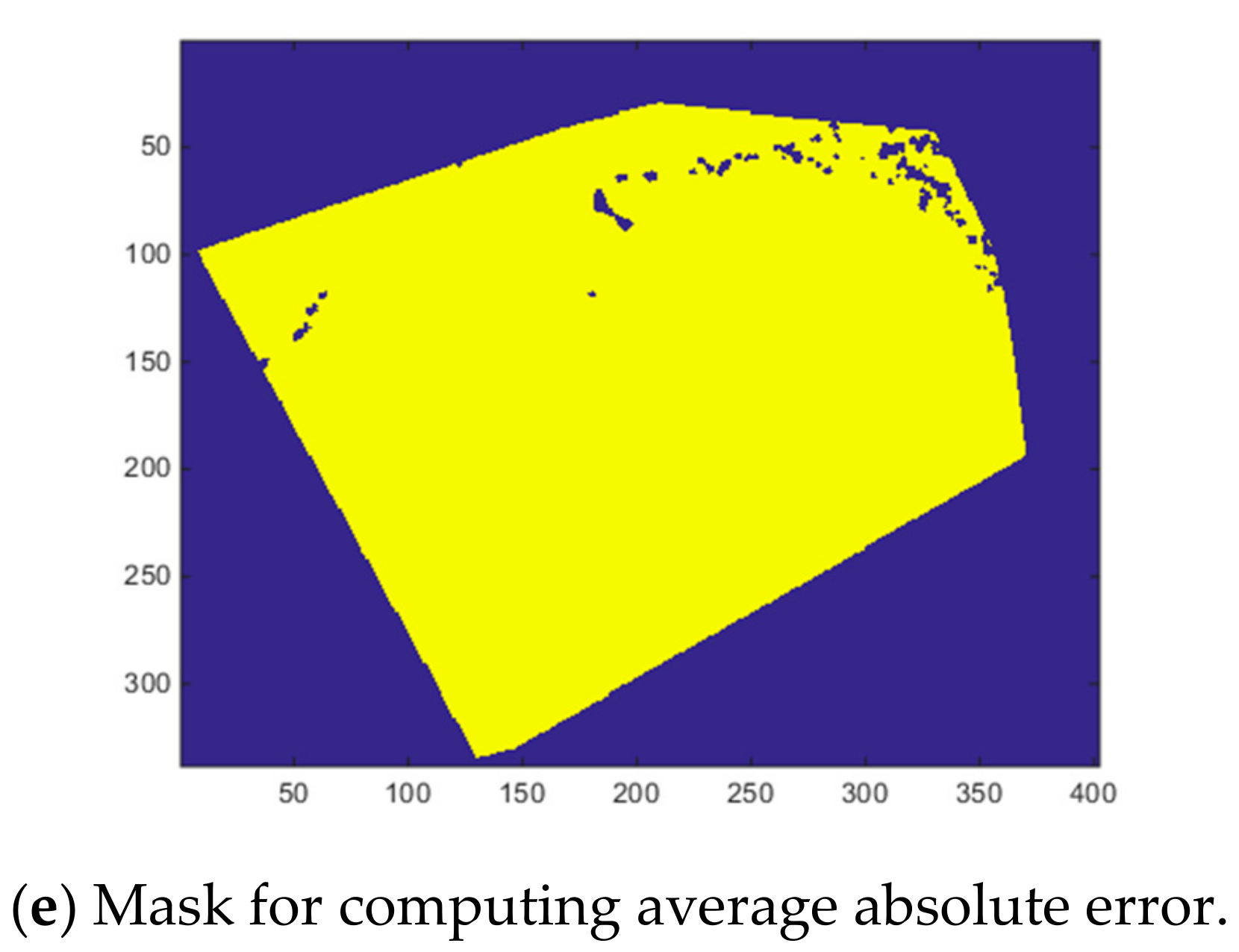

5.2. Disparity Map Estimation

6. Results and Analyses for Mastcam Stereo Image Pairs

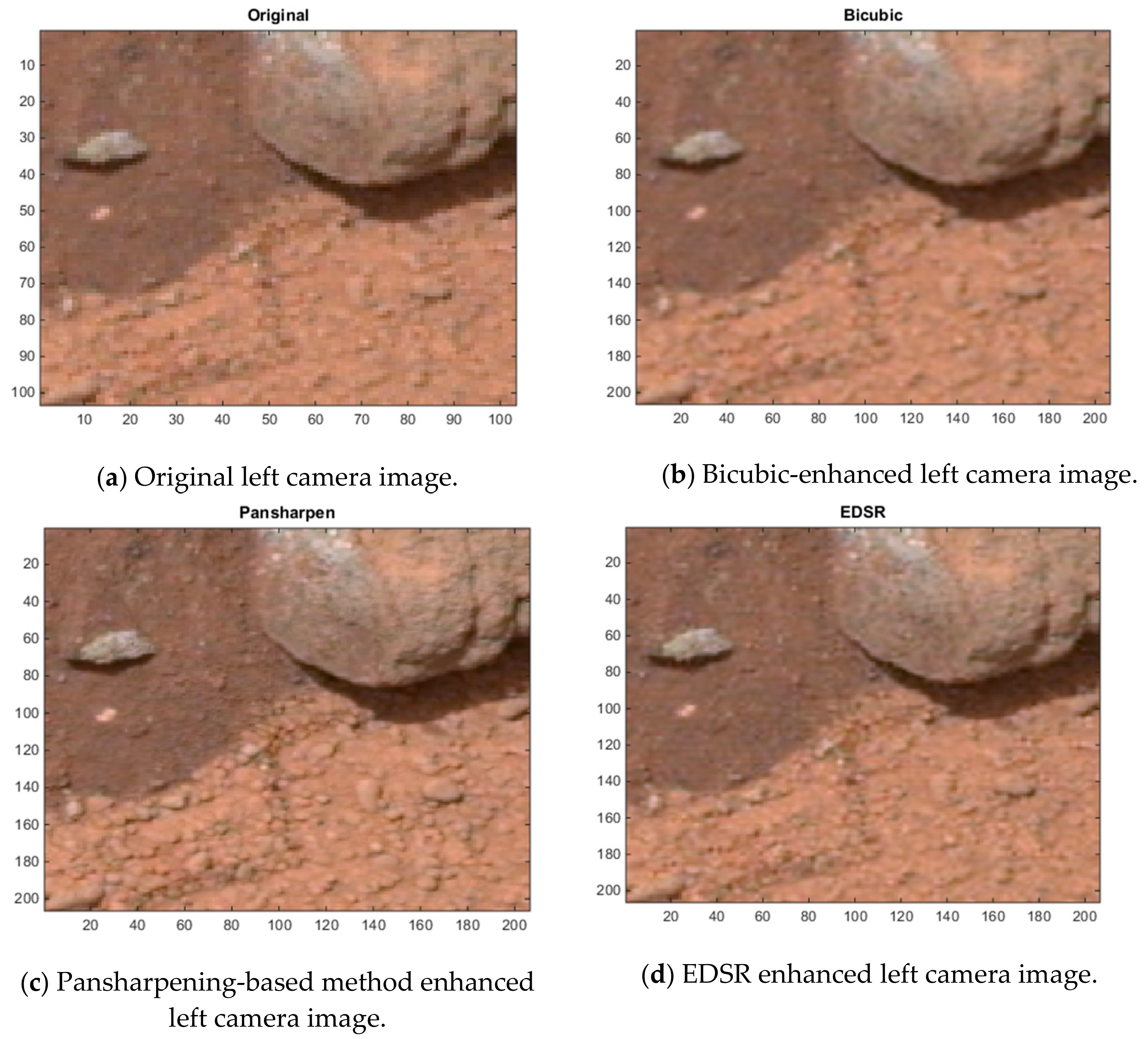

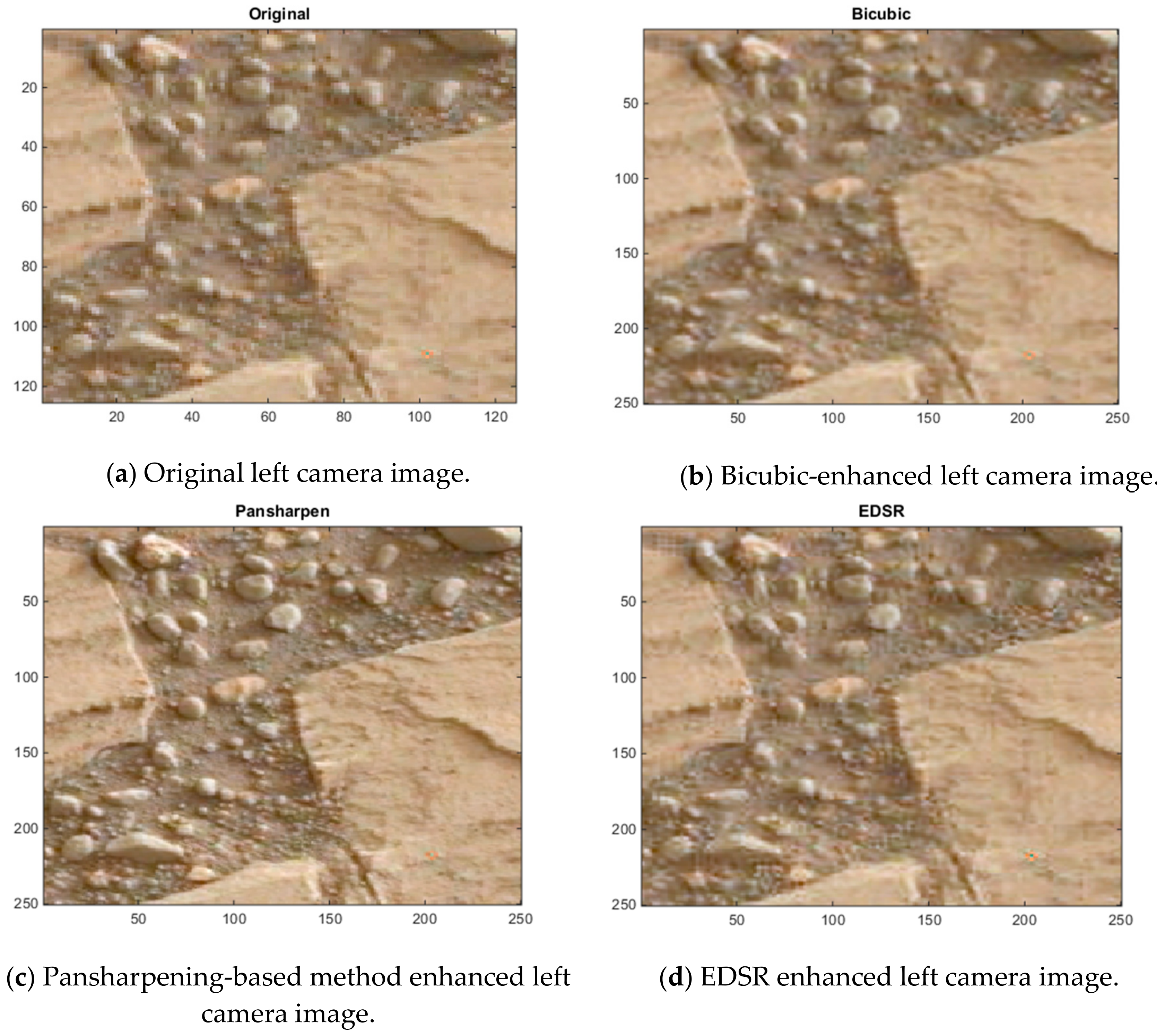

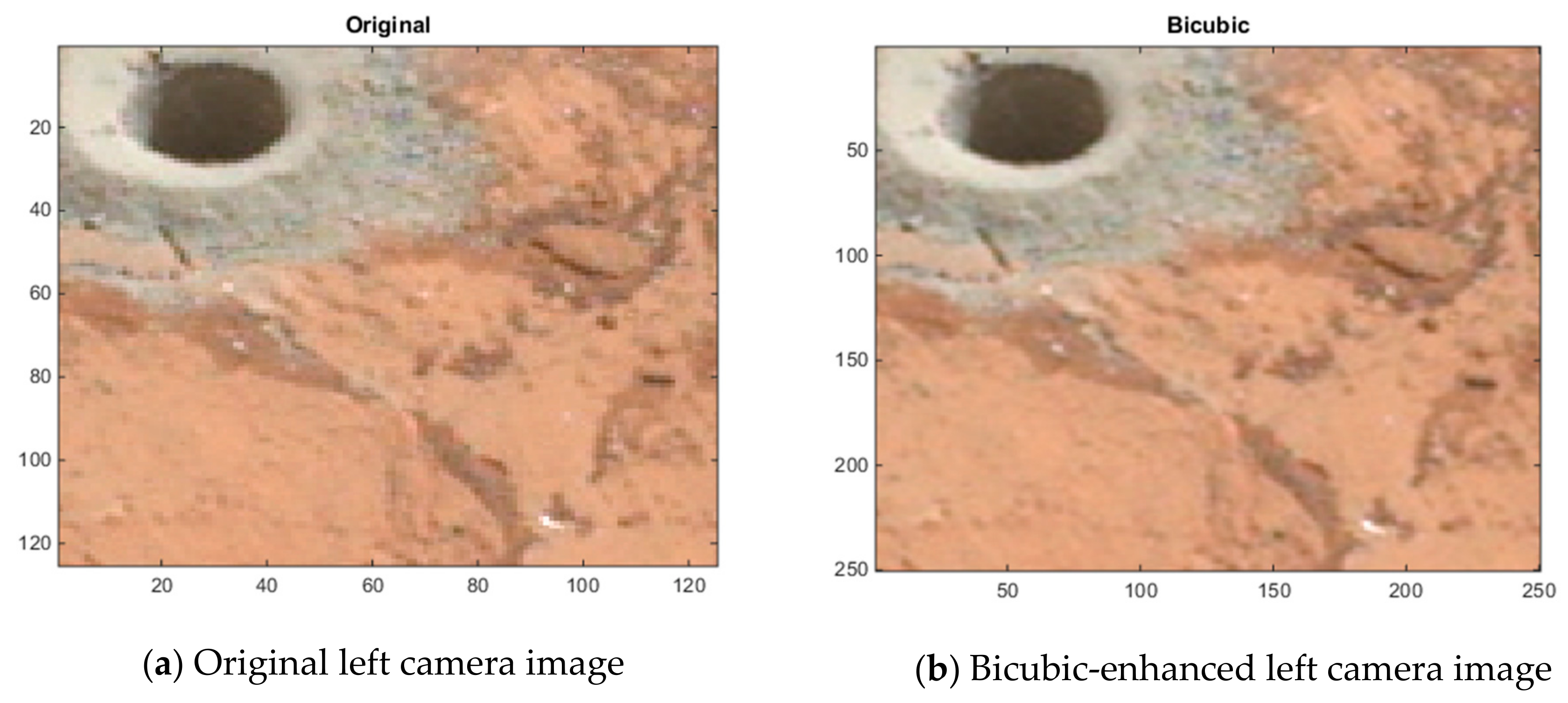

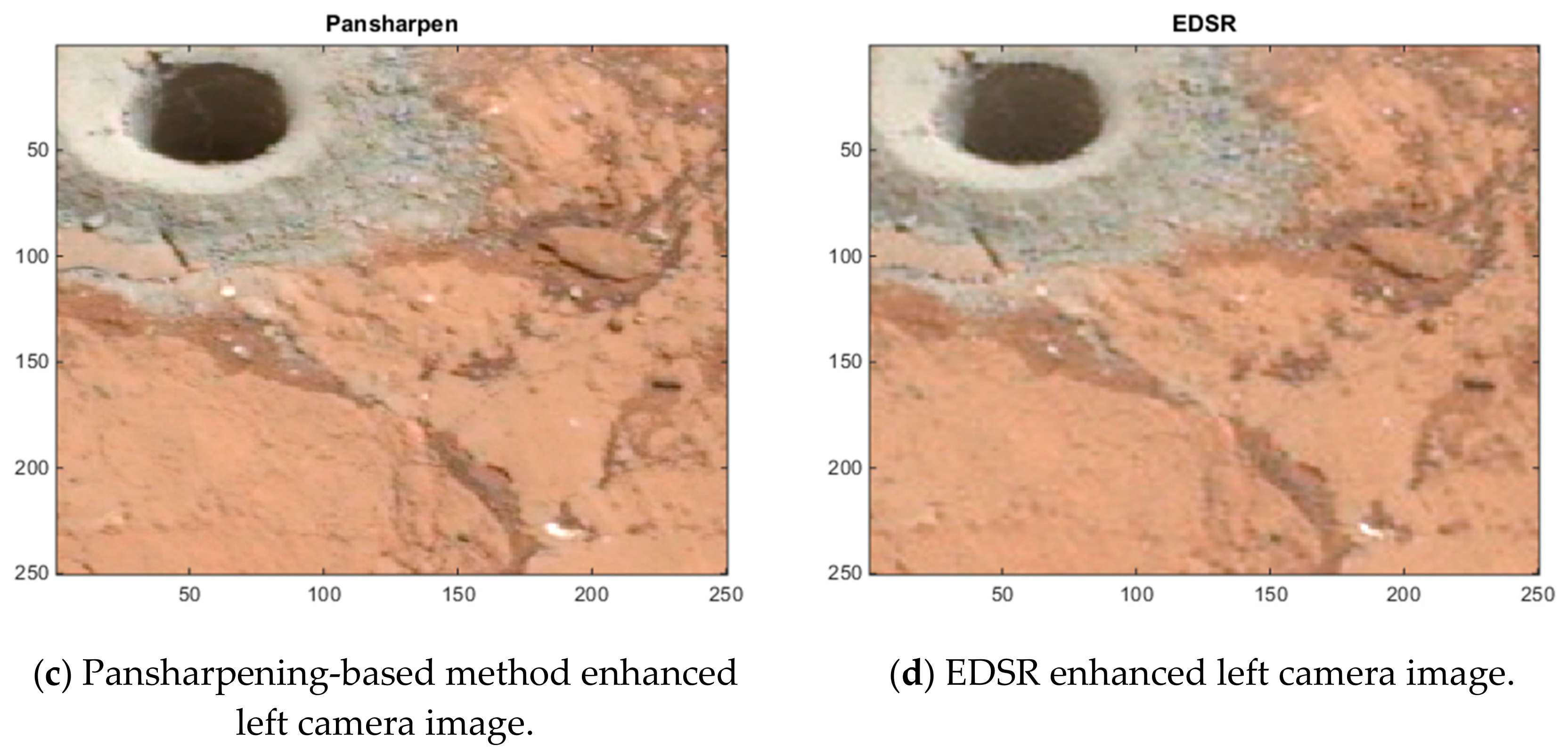

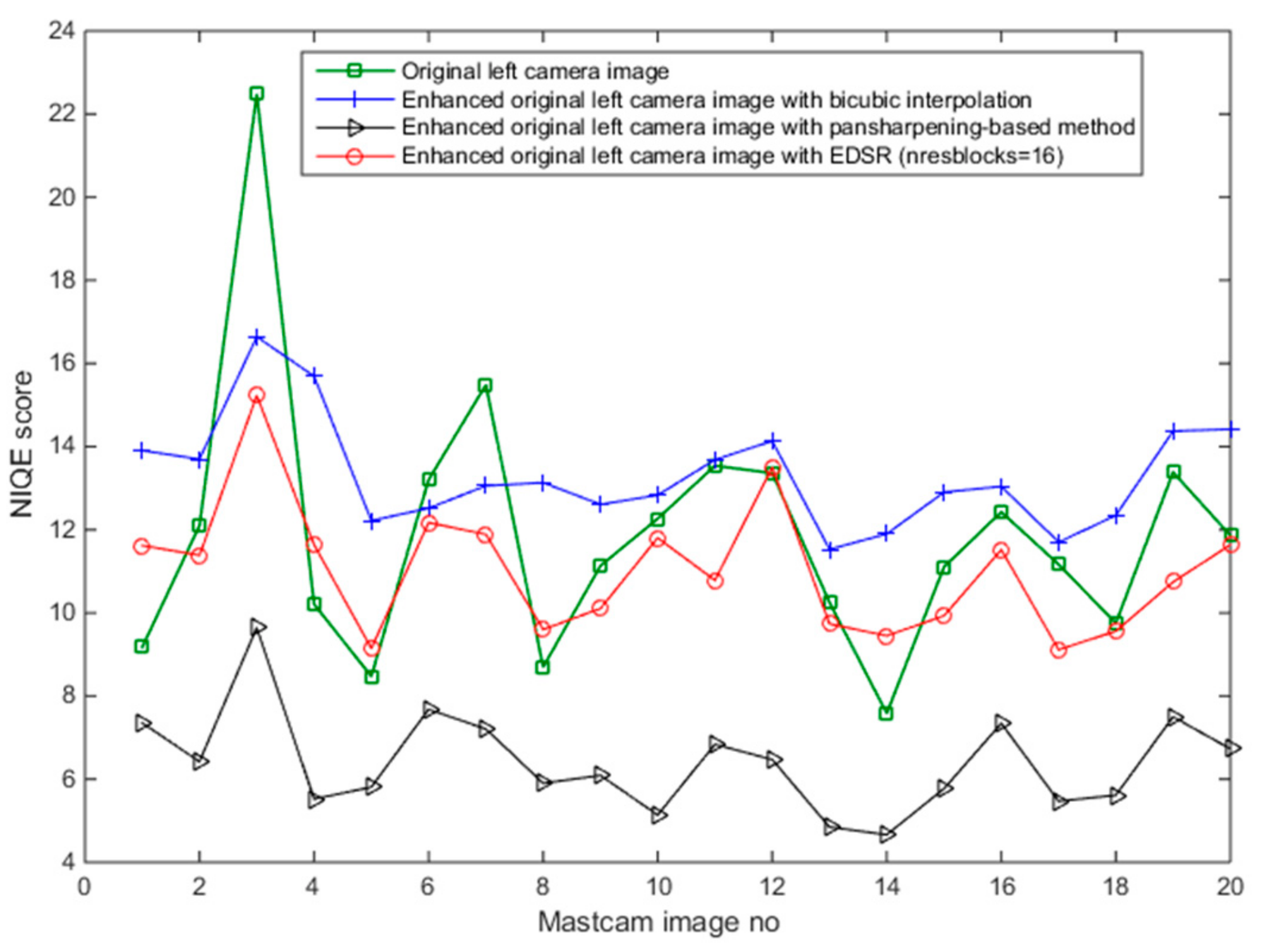

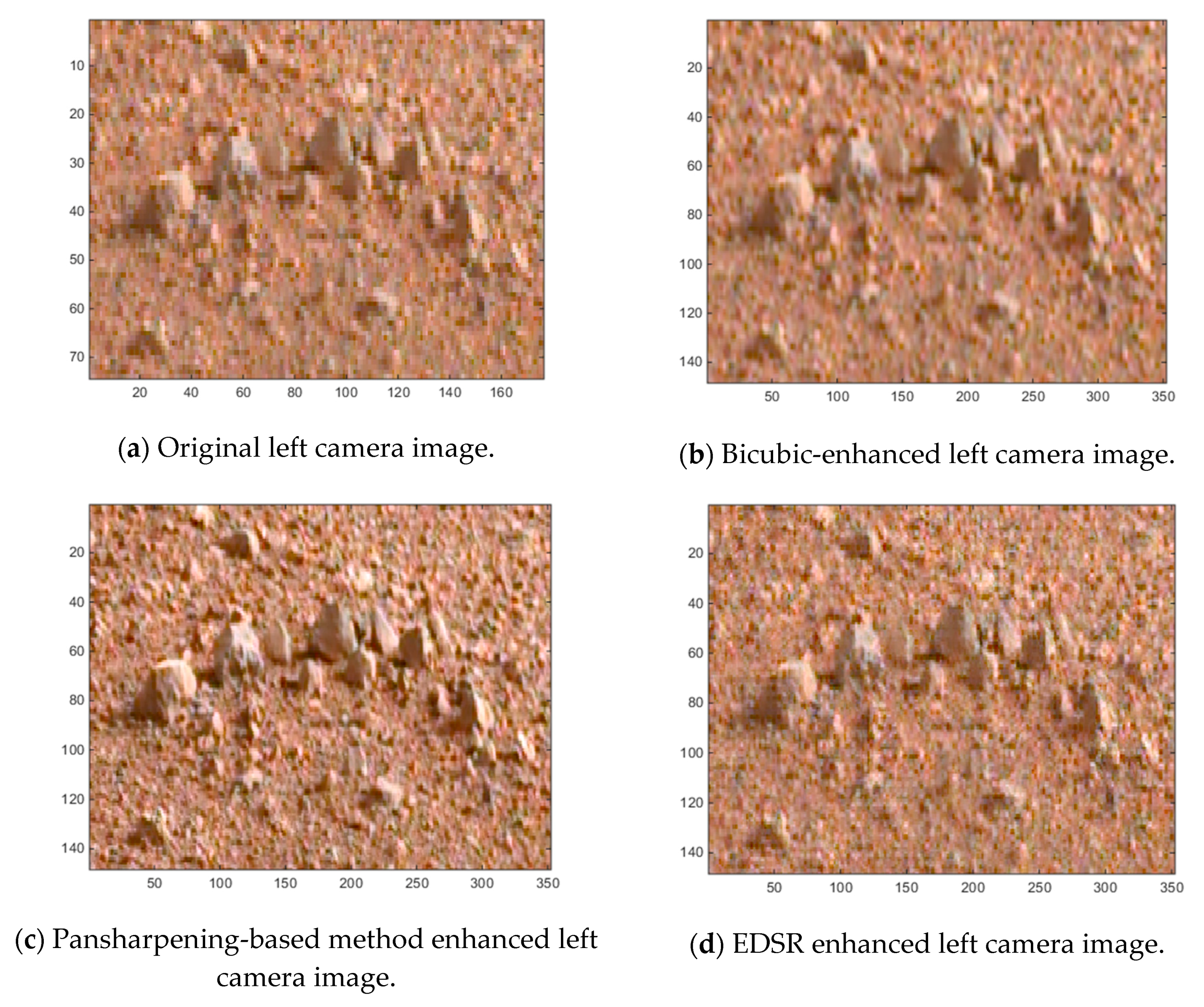

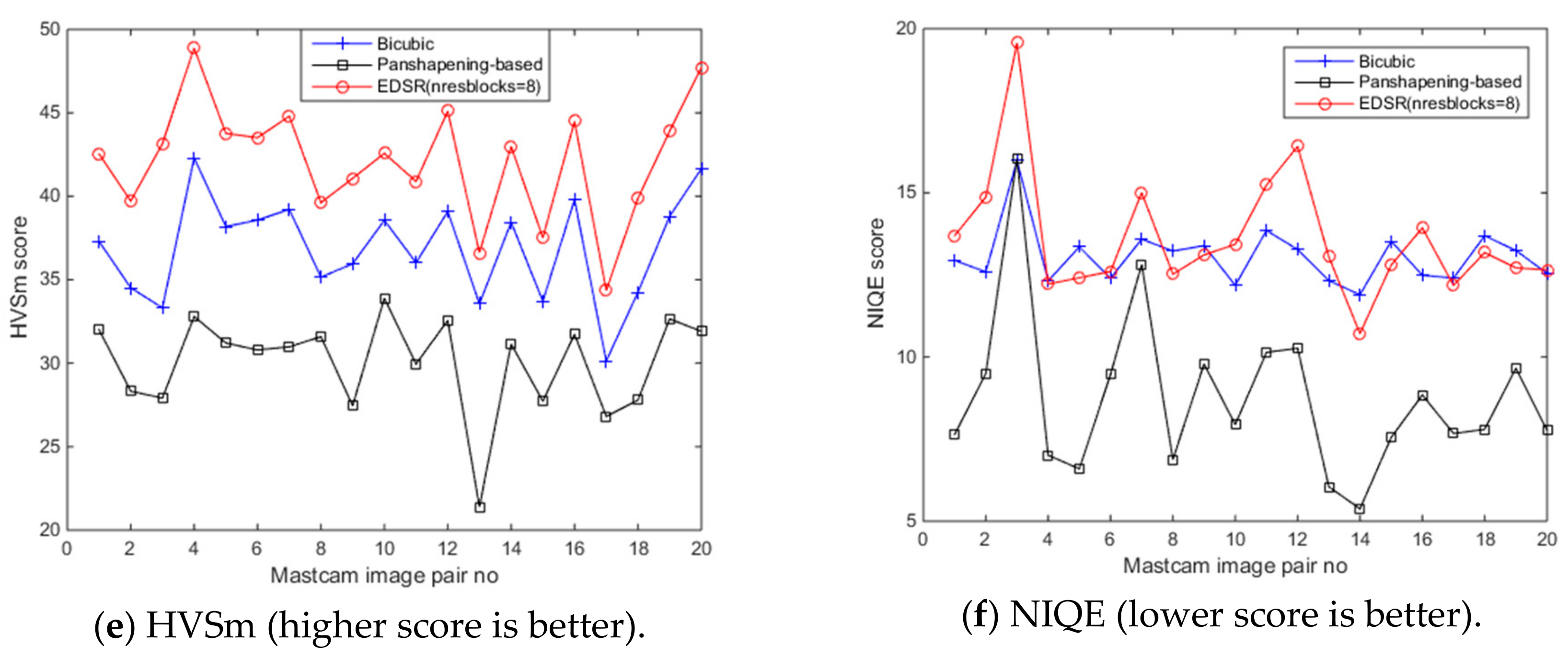

6.1. Original Left Mastcam Image Enhancements

6.2. Disparity Map Estimation Using Enhanced Downsampled Left Mastcam Images

7. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

| Stage | Layers |
|---|---|
| Head | Sequential: (0) Conv2d(3, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1)) |
| Body (16 x ResBlock) | ResBlock body, Sequential: (0) Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1)); (1) ReLU(inplace); (2) Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1)) |
| Tail | Sequential: (0) Upsampler [(0) Conv2d(64, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1)); (1) PixelShuffle(upscale_factor=2)]; (1) Conv2d(64, 3, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1)) |
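As a rough sanity check on the layer listing above, the following sketch counts the trainable parameters implied by those layers and traces the channel change through the pixel-shuffle step. It assumes every convolution carries a bias term (the listing does not say otherwise) and counts only the layers that are printed; the helper names `conv2d_params` and `pixel_shuffle_shape` are illustrative and do not come from the article.

```python
def conv2d_params(in_ch, out_ch, kernel=3, bias=True):
    """Weights plus (optional) biases of one square-kernel 2-D convolution."""
    return in_ch * out_ch * kernel * kernel + (out_ch if bias else 0)


def pixel_shuffle_shape(channels, height, width, r):
    """Output shape of a pixel shuffle: (C*r*r, H, W) becomes (C, H*r, W*r)."""
    if channels % (r * r) != 0:
        raise ValueError("channel count must be divisible by r*r")
    return channels // (r * r), height * r, width * r


N_RESBLOCKS = 16   # "Body: 16 x ResBlock" in the listing
SCALE = 2          # PixelShuffle(upscale_factor=2)

head = conv2d_params(3, 64)                          # RGB -> 64 feature maps
resblock = 2 * conv2d_params(64, 64)                 # conv, ReLU, conv
body = N_RESBLOCKS * resblock
upsampler = conv2d_params(64, 64 * SCALE * SCALE)    # 64 -> 256 channels
tail_conv = conv2d_params(64, 3)                     # back to RGB
total = head + body + upsampler + tail_conv

if __name__ == "__main__":
    print("parameters in listed layers:", total)
    # 256 channels at H x W become 64 channels at 2H x 2W, which is why
    # the final convolution takes 64 input channels.
    print("after pixel shuffle:", pixel_shuffle_shape(256, 100, 120, SCALE))
```

The count covers only the printed layers, so it may differ from the parameter total of a full EDSR implementation that contains layers not shown in the listing.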

| Method | SSIM | RMSE | PSNR | HVS | HVSm |
|---|---|---|---|---|---|
| Bicubic interpolation | 0.8844 | 9.3966 | 28.6328 | 23.2014 | 24.6190 |
| Pansharpening-based method | 0.9215 | 7.7628 | 30.2594 | 25.9768 | 27.0790 |
| EDSR (Deep learning-based) | 0.9443 | 5.8523 | 32.6983 | 28.2295 | 30.5052 |
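For readers who want to reproduce the simpler columns of a table like this one, here is a minimal, dependency-free sketch of RMSE and PSNR between a reference image and an enhanced image. It assumes 8-bit pixel values (peak of 255) and images given as lists of rows; the article does not state how its scores were aggregated over color bands, so this single-image formula need not reproduce the tabulated PSNR exactly from the tabulated RMSE.

```python
import math


def rmse(reference, estimate):
    """Root-mean-square error between two images given as lists of rows."""
    ref = [p for row in reference for p in row]
    est = [p for row in estimate for p in row]
    if not ref or len(ref) != len(est):
        raise ValueError("images must be non-empty and the same size")
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(ref, est)) / len(ref))


def psnr(reference, estimate, peak=255.0):
    """Peak signal-to-noise ratio in dB; `peak` assumes 8-bit data."""
    err = rmse(reference, estimate)
    if err == 0:
        return math.inf  # identical images
    return 20.0 * math.log10(peak / err)


if __name__ == "__main__":
    ref = [[0, 0], [0, 0]]
    est = [[3, 3], [3, 3]]
    print(rmse(ref, est), psnr(ref, est))
```

Lower RMSE and higher PSNR both indicate an enhanced image that is closer to the reference.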

| Method | Average Absolute Error |
|---|---|
| Bicubic interpolation | 8.7456 |
| Pansharpening-based method | 17.5770 |
| EDSR (Deep learning-based method) | 5.1492 |
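The average absolute error reported above can be sketched as the mean per-pixel absolute difference between a reference disparity map and an estimated one. The version below is an illustration under stated assumptions, not the article's evaluation code: maps are lists of rows, and the optional `invalid` marker for pixels without a disparity value is a hypothetical convenience.

```python
def average_absolute_error(reference, estimate, invalid=None):
    """Mean |reference - estimate| over pixels valid in both disparity maps."""
    if len(reference) != len(estimate):
        raise ValueError("maps must have the same number of rows")
    total, count = 0.0, 0
    for ref_row, est_row in zip(reference, estimate):
        if len(ref_row) != len(est_row):
            raise ValueError("rows must have the same length")
        for r, e in zip(ref_row, est_row):
            # Skip pixels flagged as having no disparity in either map.
            if invalid is not None and (r == invalid or e == invalid):
                continue
            total += abs(r - e)
            count += 1
    if count == 0:
        raise ValueError("no valid pixels to compare")
    return total / count


if __name__ == "__main__":
    ground_truth = [[10, 12], [14, 16]]
    estimated = [[11, 12], [10, 16]]
    print(average_absolute_error(ground_truth, estimated))
```

A smaller value means the disparity map computed from the enhanced image is closer to the reference map.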

| Method | SSIM | RMSE | PSNR | HVS | HVSm |
|---|---|---|---|---|---|
| Bicubic interpolation | 0.8600 | 10.6346 | 27.5653 | 22.1325 | 23.5467 |
| Pansharpening-based method | 0.9332 | 7.5230 | 30.5221 | 26.2792 | 27.7185 |
| EDSR (Deep learning-based) | 0.9343 | 6.6221 | 31.6408 | 27.1654 | 29.4234 |

| Method | Average Absolute Error |
|---|---|
| Bicubic interpolation | 6.0159 |
| Pansharpening-based method | 3.2810 |
| EDSR (Deep learning-based method) | 3.2040 |

| Mastcam Image Pair No. | Left Camera Image Filename | Right Camera Image Filename |
|---|---|---|
| 1 | 0013ML0000120000100169E01_DRCX_0PCT.png | 0013MR0000120000100039E01_DRCX_0PCT.png |
| 2 | 0013ML0000120070100176E01_DRCX_0PCT.png | 0013MR0000120070100046E01_DRCX_0PCT.png |
| 3 | 0023ML0001140700100703C00_DRCX_0PCT.png | 0023MR0001140700100600C00_DRCX_0PCT.png |
| 4 | 0150ML0008420000104432E01_DRCX_0PCT.png | 0150MR0008420000201218E01_DRCX_0PCT.png |
| 5 | 0172ML0009240020104881E01_DRCX_0PCT.png | 0172MR0009240020201683E01_DRCX_0PCT.png |
| 6 | 0174ML0009350000105177E01_DRCX_0PCT.png | 0174MR0009350070201948E01_DRCX_0PCT.png |
| 7 | 0183ML0009930000105284E01_DRCX_0PCT.png | 0183MR0009930070202041E01_DRCX_0PCT.png |
| 8 | 0184ML0009250350105335E01_DRCX_0PCT.png | 0184MR0009250350202097E01_DRCX_0PCT.png |
| 9 | 0192ML0010170000105681E01_DRCX_0PCT.png | 0192MR0010170000202484E01_DRCX_0PCT.png |
| 10 | 0269ML0011810000106129E01_DRCX_0PCT.png | 0269MR0011810000203215E01_DRCX_0PCT.png |
| 11 | 0275ML0011960010106210E01_DRCX_0PCT.png | 0275MR0011960010203447E01_DRCX_0PCT.png |
| 12 | 0290ML0012250030106375E01_DRCX_0PCT.png | 0290MR0012250030203531E01_DRCX_0PCT.png |
| 13 | 0300ML0012410000106432E01_DRCX_0PCT.png | 0300MR0012410000203739E01_DRCX_0PCT.png |
| 14 | 0301ML0012530020106447E01_DRCX_0PCT.png | 0301MR0012530020203760E01_DRCX_0PCT.png |
| 15 | 0303ML0012610000106474E01_DRCX_0PCT.png | 0303MR0012610000203818E01_DRCX_0PCT.png |
| 16 | 0308ML0012730400106645E01_DRCX_0PCT.png | 0308MR0012730400204006E01_DRCX_0PCT.png |
| 17 | 0508ML0020000260202787E01_DRCX_0PCT.png | 0508MR0020000260303151E01_DRCX_0PCT.png |
| 18 | 0514ML0020280000202963E01_DRCX_0PCT.png | 0514MR0020280000303241E01_DRCX_0PCT.png |
| 19 | 0803ML0035130050400877E01_DRCX_0PCT.png | 0803MR0035130050500252E01_DRCX_0PCT.png |
| 20 | 0813ML0035700050401024E01_DRCX_0PCT.png | 0813MR0035700050500419E01_DRCX_0PCT.png |

| Metric | EDSR Model (n_resblocks = 4) | EDSR Model (n_resblocks = 8) | EDSR Model (n_resblocks = 16) | EDSR Model (n_resblocks = 32) |
|---|---|---|---|---|
| SSIM | 0.90674 | 0.908093 | 0.902667 | 0.901051 |
| RMSE | 5.670515 | 5.632352 | 5.842843 | 5.898821 |
| PSNR | 32.75032 | 32.79714 | 32.51517 | 32.42496 |
| HVS | 35.16401 | 35.21195 | 34.80805 | 34.75265 |
| HVSm | 42.06746 | 42.14309 | 41.29008 | 41.22219 |
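The comparison in the table above can be checked mechanically. The short sketch below copies the tabulated scores and picks the best `n_resblocks` setting per metric, treating RMSE as lower-is-better and the other four metrics as higher-is-better; the dictionary layout and the `best_setting` helper are illustrative only.

```python
# Scores copied from the table above, keyed by metric and then by n_resblocks.
scores = {
    "SSIM": {4: 0.90674, 8: 0.908093, 16: 0.902667, 32: 0.901051},
    "RMSE": {4: 5.670515, 8: 5.632352, 16: 5.842843, 32: 5.898821},
    "PSNR": {4: 32.75032, 8: 32.79714, 16: 32.51517, 32: 32.42496},
    "HVS": {4: 35.16401, 8: 35.21195, 16: 34.80805, 32: 34.75265},
    "HVSm": {4: 42.06746, 8: 42.14309, 16: 41.29008, 32: 41.22219},
}

LOWER_IS_BETTER = {"RMSE"}  # every other metric here improves as it grows


def best_setting(metric):
    """Return the n_resblocks value with the best score for `metric`."""
    values = scores[metric]
    pick = min if metric in LOWER_IS_BETTER else max
    return pick(values, key=values.get)


best = {metric: best_setting(metric) for metric in scores}

if __name__ == "__main__":
    for metric, setting in best.items():
        print(f"{metric}: best n_resblocks = {setting}")
```

On these numbers, the 8-block model comes out ahead on all five metrics, although the margins over the 4-block model are small.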

| Mastcam Image Pair No. | Groundtruth | Bicubic Interpolation | Pansharpening-Based | EDSR (n_resblocks = 8) |
|---|---|---|---|---|
| 1 | 49 | 46 | 44 | 36 |
| 2 | 201 | 154 | 177 | 134 |
| 3 | 79 | 58 | 69 | 44 |
| 4 | 117 | 107 | 114 | 102 |
| 5 | 200 | 162 | 197 | 159 |
| 6 | 90 | 70 | 86 | 73 |
| 7 | 80 | 71 | 82 | 33 |
| 8 | 223 | 177 | 204 | 176 |
| 9 | 155 | 119 | 159 | 117 |
| 10 | 89 | 71 | 81 | 61 |
| 11 | 97 | 81 | 88 | 68 |
| 12 | 76 | 75 | 77 | 54 |
| 13 | 229 | 165 | 188 | 172 |
| 14 | 176 | 142 | 173 | 139 |
| 15 | 238 | 179 | 215 | 165 |
| 16 | 134 | 115 | 126 | 100 |
| 17 | 344 | 269 | 327 | 277 |
| 18 | 188 | 147 | 176 | 140 |
| 19 | 114 | 104 | 114 | 109 |
| 20 | 116 | 108 | 114 | 98 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Ayhan, B.; Kwan, C. Mastcam Image Resolution Enhancement with Application to Disparity Map Generation for Stereo Images with Different Resolutions. Sensors 2019, 19, 3526. https://doi.org/10.3390/s19163526
