Monocular Vision-Based Pose Determination in Close Proximity for Low Impact Docking

Abstract

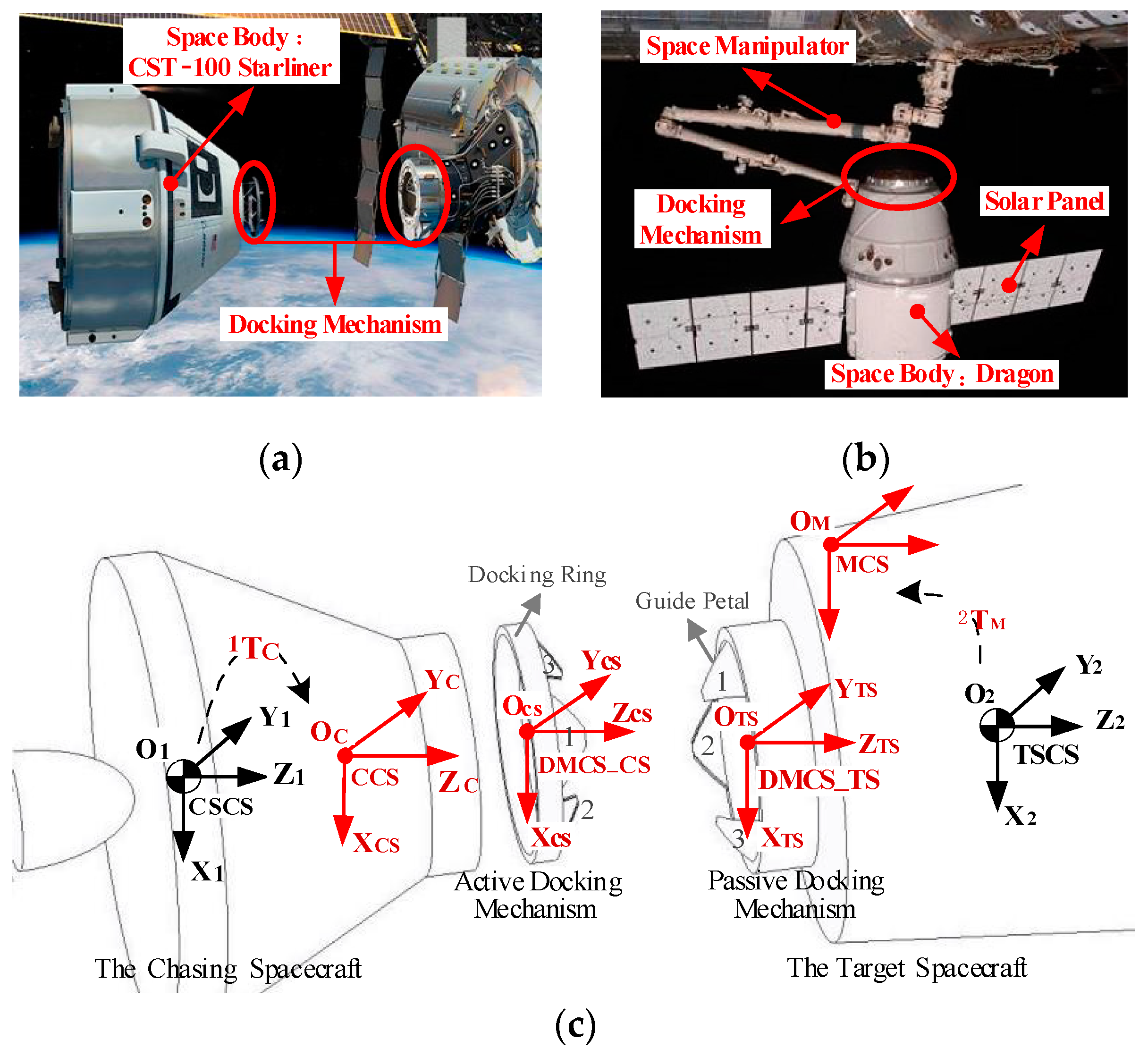

1. Introduction

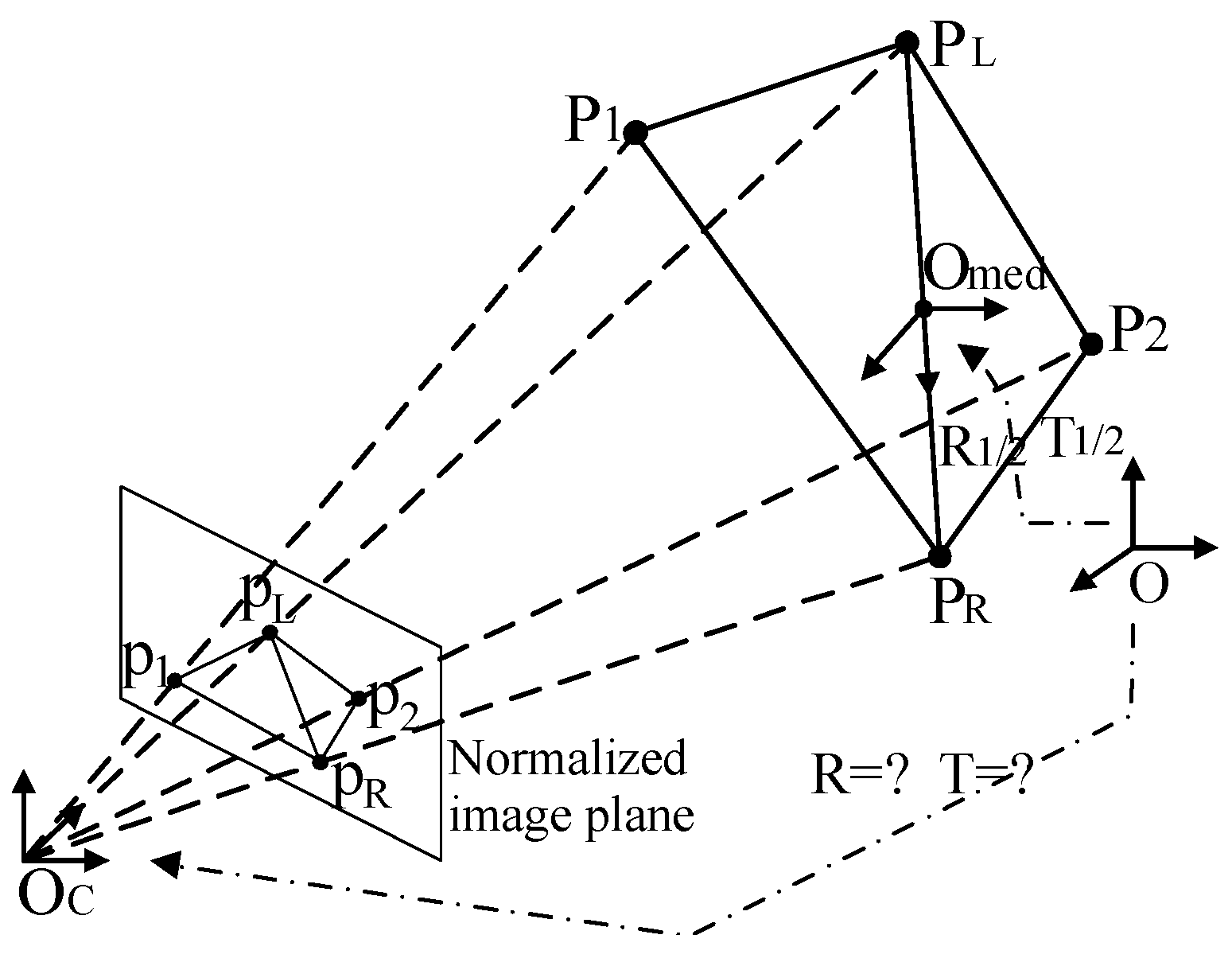

2. Problem Formulation

2.1. Definition of the Coordinate Systems

2.2. Comparison of Different Methods

- As shown in Equations (1) and (2), our proposed method M2 works well for pose measurement of both cooperative and non-cooperative targets, and it is much simpler and more efficient than the existing method M1.

- The measurement accuracy of M2 is higher than that of M1 since .

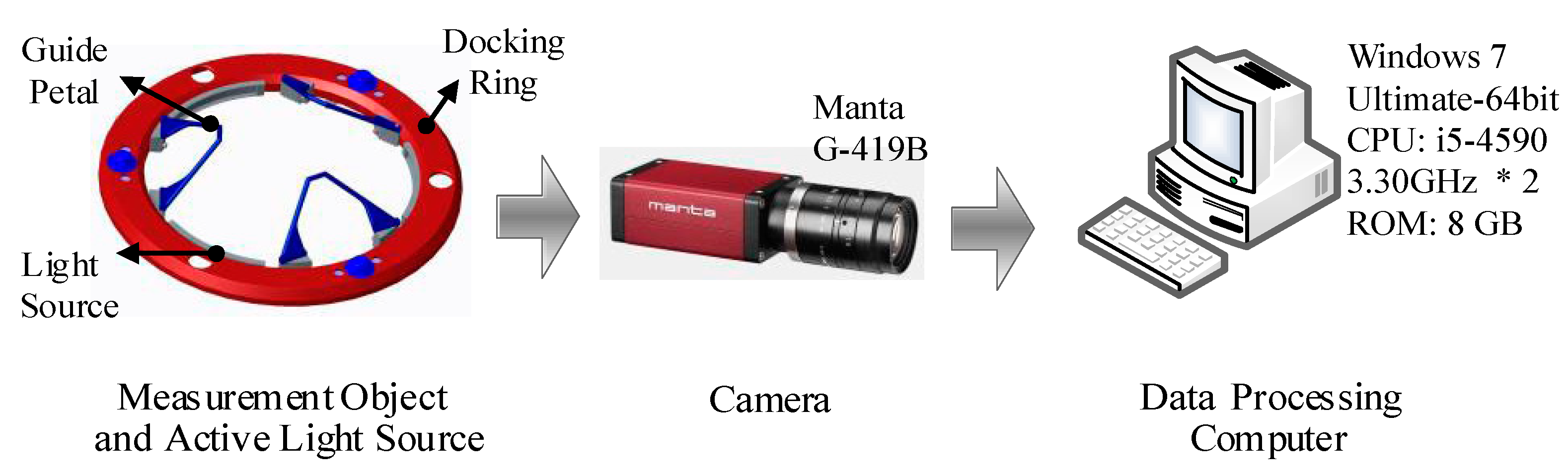

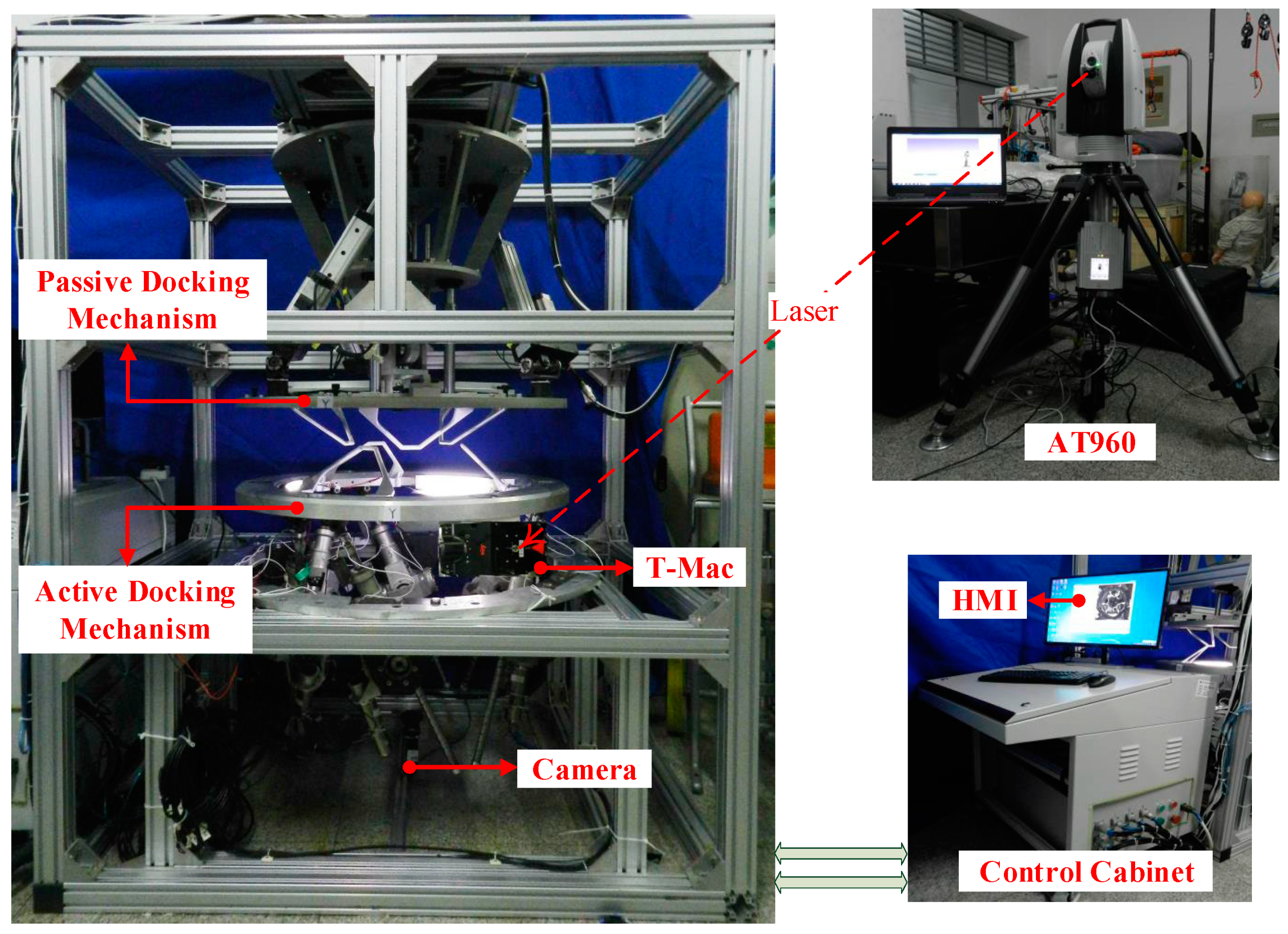

3. Design of the Monocular Vision System

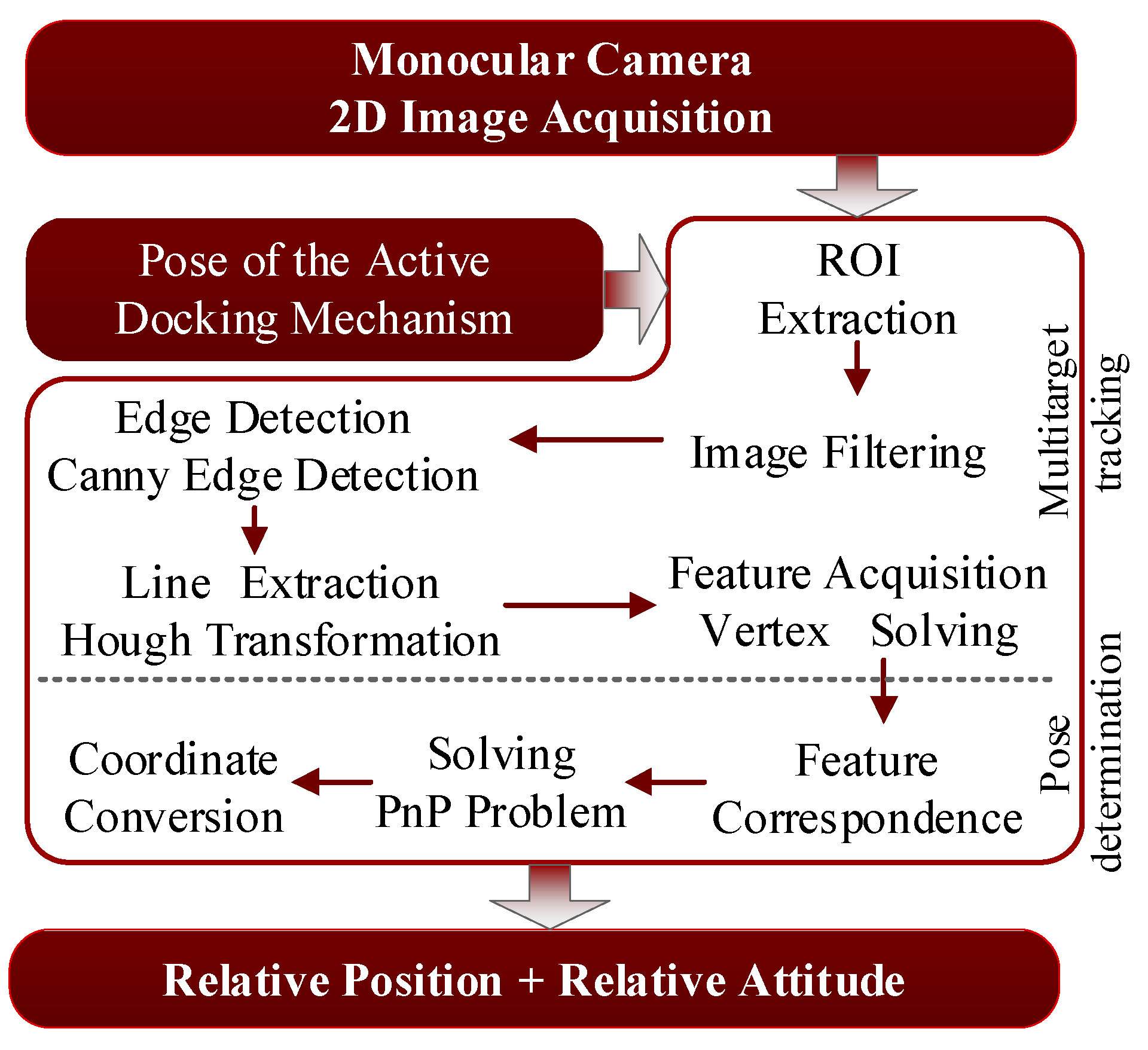

3.1. Architecture of the Monocular Vision System

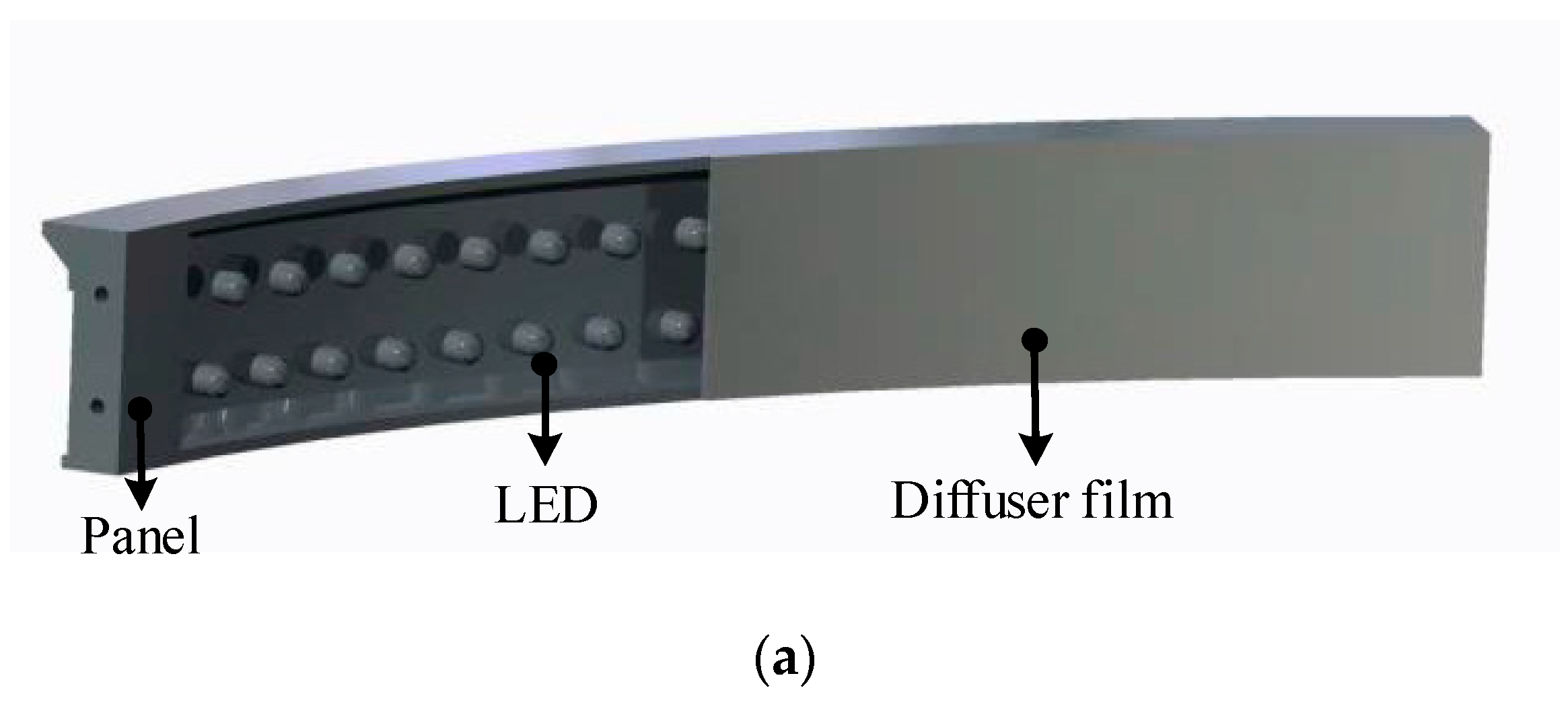

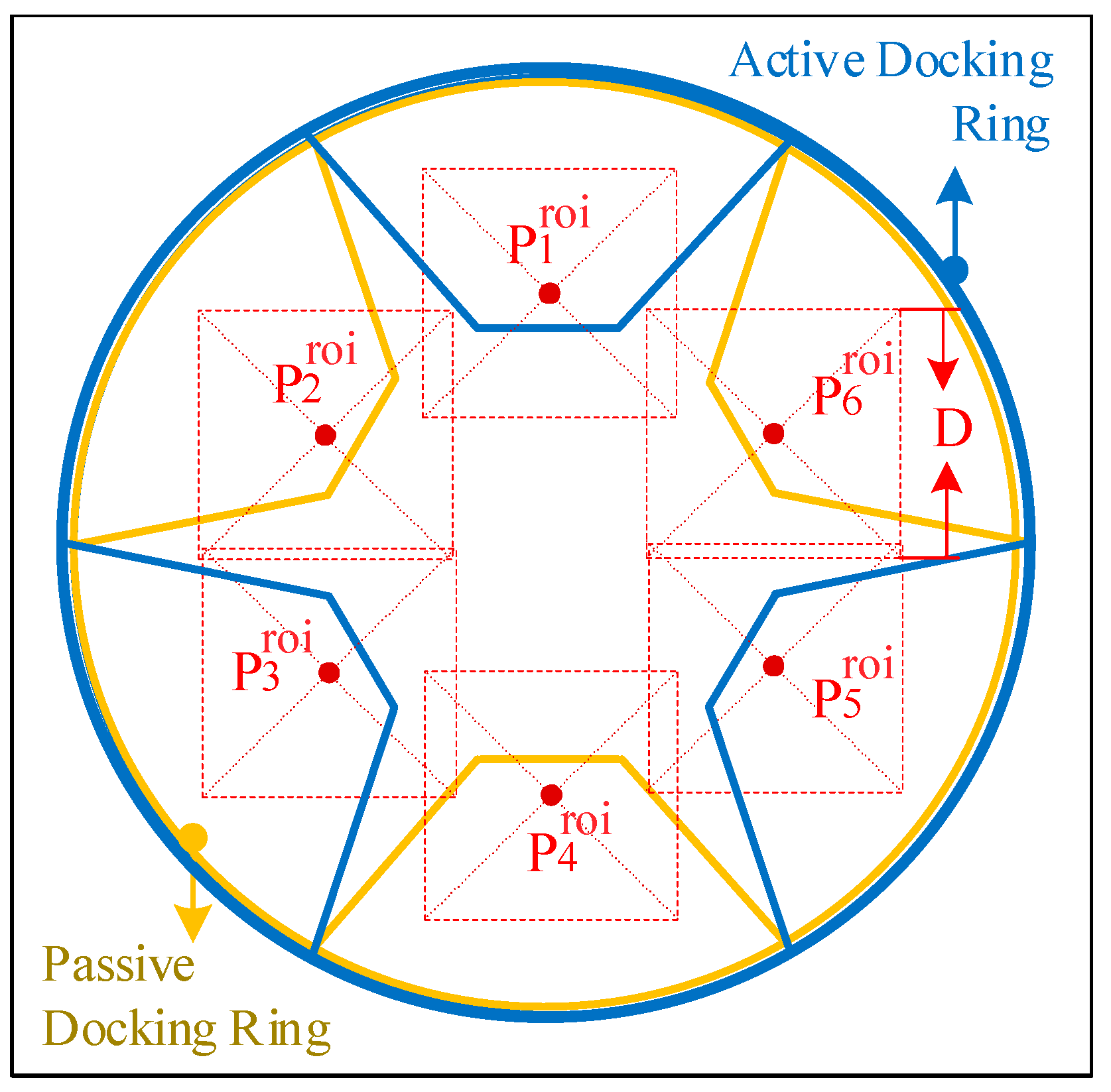

3.2. Design of the Active Light Source

4. Key Algorithms of the Monocular Vision System

4.1. Multitarget Tracking

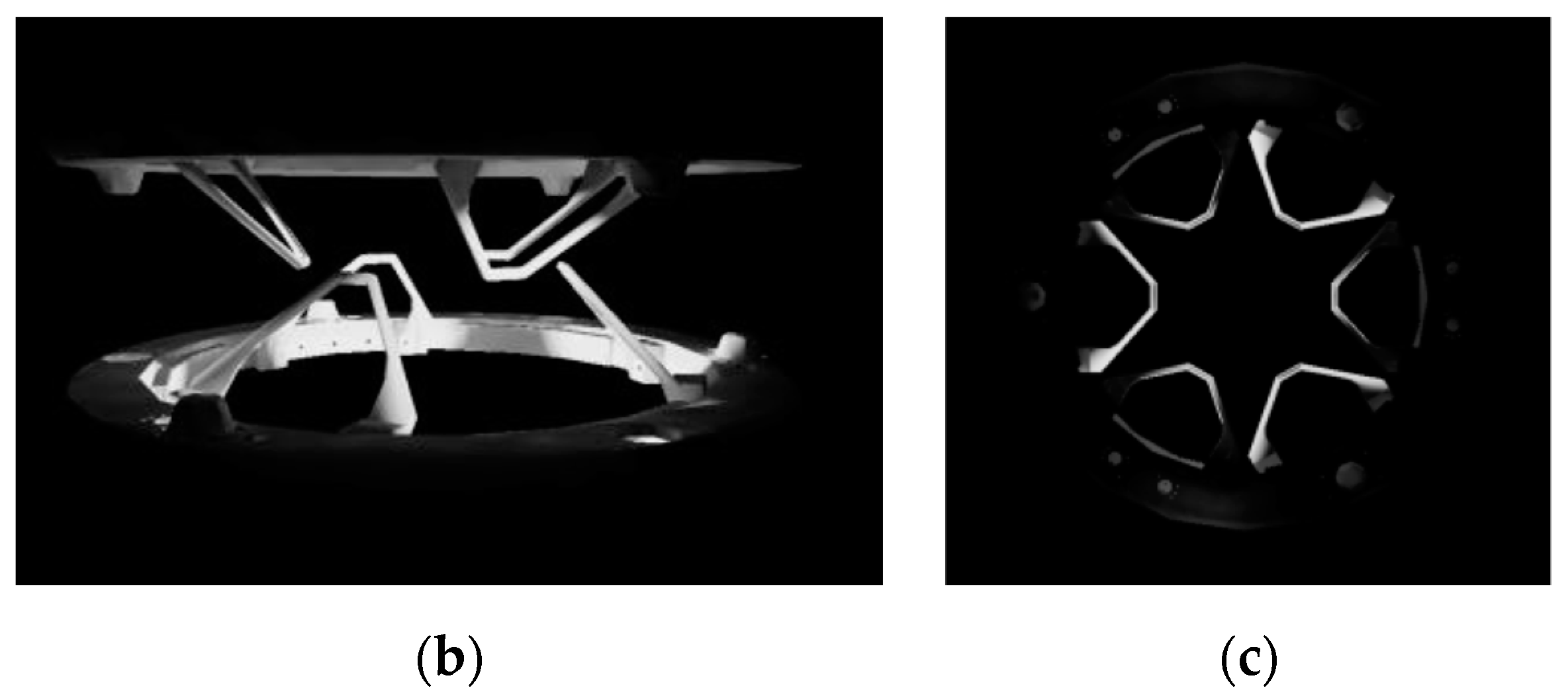

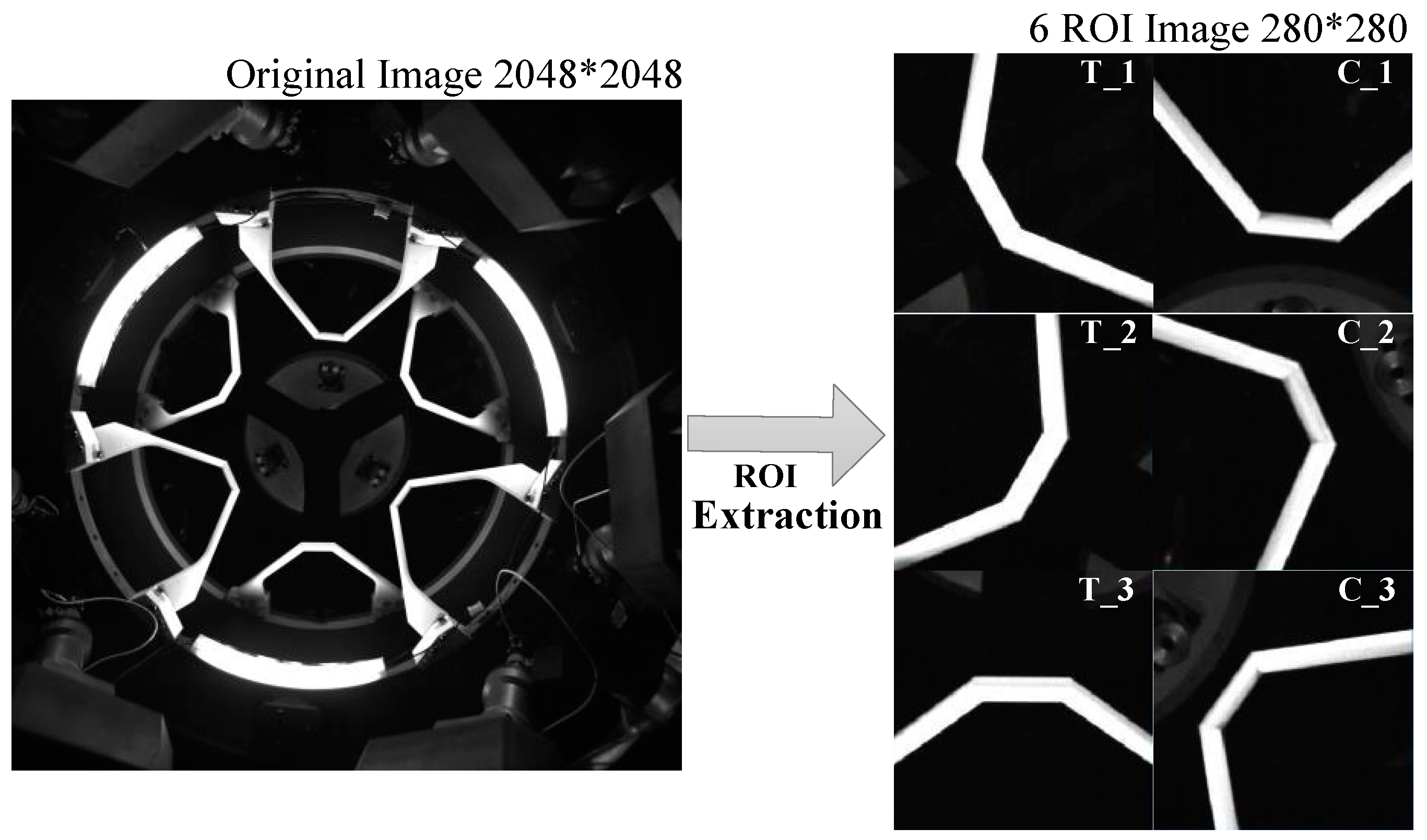

4.1.1. ROI Extraction

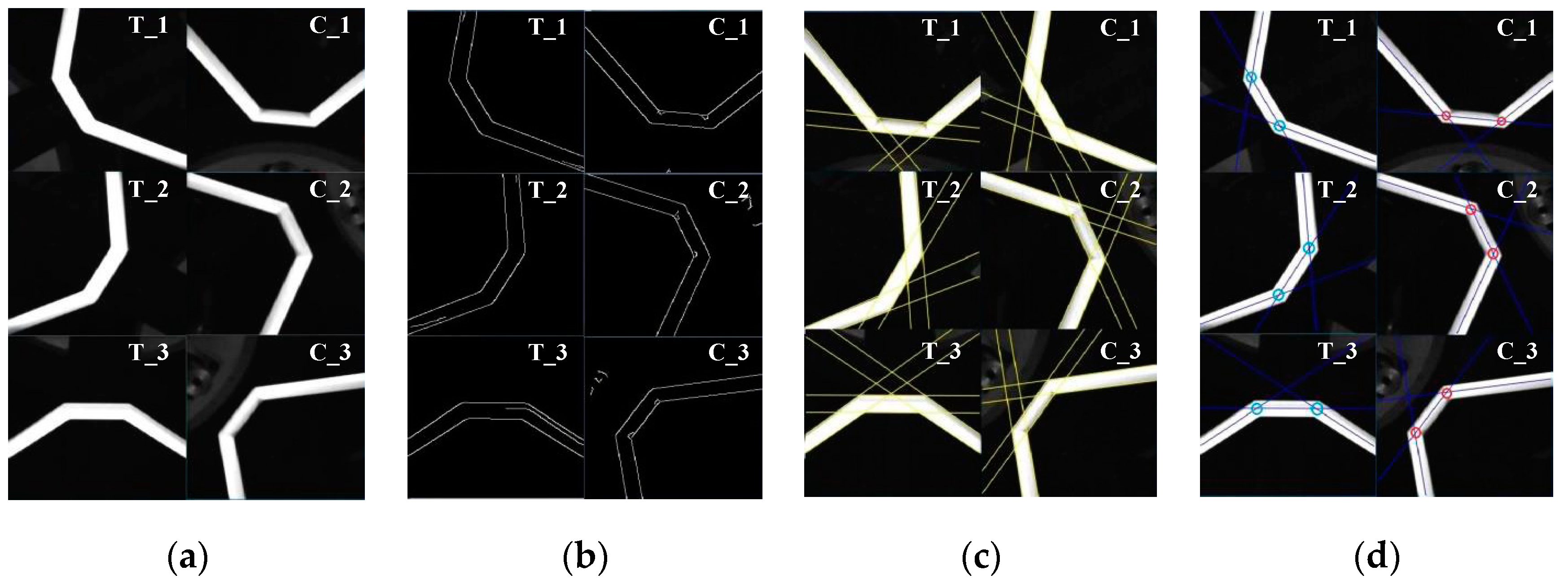

4.1.2. Image Processing
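For bright markers lit by an active light source, a common generic way to track each marker is to crop a region of interest (ROI) around its position in the previous frame, threshold the ROI, and take the intensity-weighted centroid. The sketch below illustrates only that generic idea and is not the processing pipeline of this work. It assumes a grayscale image stored as a list of rows; the functions `extract_roi` and `weighted_centroid`, the ROI half-width and the threshold are all hypothetical.

```python
def extract_roi(image, center, half_size):
    """Return (roi, (row0, col0)): a square window around center, clipped to the image bounds."""
    rows, cols = len(image), len(image[0])
    r, c = center
    r0, r1 = max(0, r - half_size), min(rows, r + half_size + 1)
    c0, c1 = max(0, c - half_size), min(cols, c + half_size + 1)
    roi = [row[c0:c1] for row in image[r0:r1]]
    return roi, (r0, c0)


def weighted_centroid(roi, offset, threshold):
    """Intensity-weighted centroid (row, col) of ROI pixels above threshold, in full-image coordinates."""
    total = sum_r = sum_c = 0.0
    for i, row in enumerate(roi):
        for j, value in enumerate(row):
            if value > threshold:
                w = value - threshold  # subtract the background level so dim pixels weigh less
                total += w
                sum_r += w * i
                sum_c += w * j
    if total == 0:
        return None  # no marker pixel found inside this ROI
    return offset[0] + sum_r / total, offset[1] + sum_c / total


# Hypothetical 10 x 10 frame with one symmetric bright spot centred at (4, 6).
image = [[0] * 10 for _ in range(10)]
image[4][6] = 200
for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
    image[4 + dr][6 + dc] = 100

# Predicted position from the previous frame is (5, 5); search within +/-3 pixels.
roi, offset = extract_roi(image, center=(5, 5), half_size=3)
print(weighted_centroid(roi, offset, threshold=50))  # (4.0, 6.0)
```

Restricting the search to a small ROI keeps the per-frame cost low, which matters when several markers are tracked at once; the trade-off is that a marker moving farther than the half-width between frames is lost and must be re-detected.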

4.2. Pose Determination

4.2.1. Feature Correspondence

4.2.2. Solution of the PnP Problem
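The Perspective-n-Point (PnP) problem asks for the rotation R and translation t of a calibrated camera given n known 3D points and their image projections. As a generic illustration only, the sketch below uses the textbook Direct Linear Transform (DLT), a swapped-in technique and not the optimized solver of this work. It assumes at least six non-coplanar points and a known intrinsic matrix K; the function name `solve_pnp_dlt`, the intrinsics and the marker layout are hypothetical.

```python
import numpy as np


def solve_pnp_dlt(obj_pts, img_pts, K):
    """Estimate (R, t) with img ~ K (R @ X + t) using the Direct Linear Transform.

    obj_pts: (n, 3) points in the target frame; n >= 6 and not all coplanar.
    img_pts: (n, 2) pixel coordinates of the same points.
    K:       (3, 3) camera intrinsic matrix (assumed already calibrated).
    """
    obj_pts = np.asarray(obj_pts, dtype=float)
    img_pts = np.asarray(img_pts, dtype=float)
    # Remove the intrinsics so that the unknown 3x4 matrix is s * [R | t].
    homog = np.hstack([img_pts, np.ones((len(img_pts), 1))])
    norm = (np.linalg.inv(K) @ homog.T).T
    rows = []
    for X, (x, y, _) in zip(obj_pts, norm):
        P = [X[0], X[1], X[2], 1.0]
        rows.append(P + [0.0] * 4 + [-x * p for p in P])
        rows.append([0.0] * 4 + P + [-y * p for p in P])
    # Homogeneous least squares: right singular vector of the smallest singular value.
    _, _, vt = np.linalg.svd(np.array(rows))
    M = vt[-1].reshape(3, 4)
    # The SVD fixes M only up to sign; pick the sign that puts the points in front of the camera.
    if np.mean(obj_pts @ M[2, :3] + M[2, 3]) < 0:
        M = -M
    # Project the left 3x3 block onto the closest rotation matrix and undo the scale s.
    U, S, Vt = np.linalg.svd(M[:, :3])
    D = np.diag([1.0, 1.0, np.sign(np.linalg.det(U @ Vt))])
    R = U @ D @ Vt
    t = M[:, 3] / S.mean()
    return R, t


# Synthetic check with hypothetical intrinsics (pixels) and marker layout (metres).
K = np.array([[800.0, 0.0, 320.0], [0.0, 800.0, 240.0], [0.0, 0.0, 1.0]])
a = np.deg2rad(5.0)
R_true = np.array([[np.cos(a), -np.sin(a), 0.0], [np.sin(a), np.cos(a), 0.0], [0.0, 0.0, 1.0]])
t_true = np.array([0.01, -0.02, 0.5])
markers = np.array([[0.1, 0.0, 0.0], [-0.1, 0.0, 0.0], [0.0, 0.1, 0.0], [0.0, -0.1, 0.0],
                    [0.06, 0.06, 0.04], [-0.06, 0.04, 0.08], [0.04, -0.08, 0.06],
                    [-0.08, -0.06, 0.1]])
proj = (K @ (markers @ R_true.T + t_true).T).T
pixels = proj[:, :2] / proj[:, 2:]
R_est, t_est = solve_pnp_dlt(markers, pixels, K)
print(np.allclose(R_est, R_true, atol=1e-6), np.allclose(t_est, t_true, atol=1e-6))  # True True
```

DLT ignores the orthogonality of R while solving and is sensitive to image noise, so practical systems usually prefer a dedicated O(n) solver such as EPnP, often followed by iterative refinement of the reprojection error.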

4.2.3. Coordinate Conversion

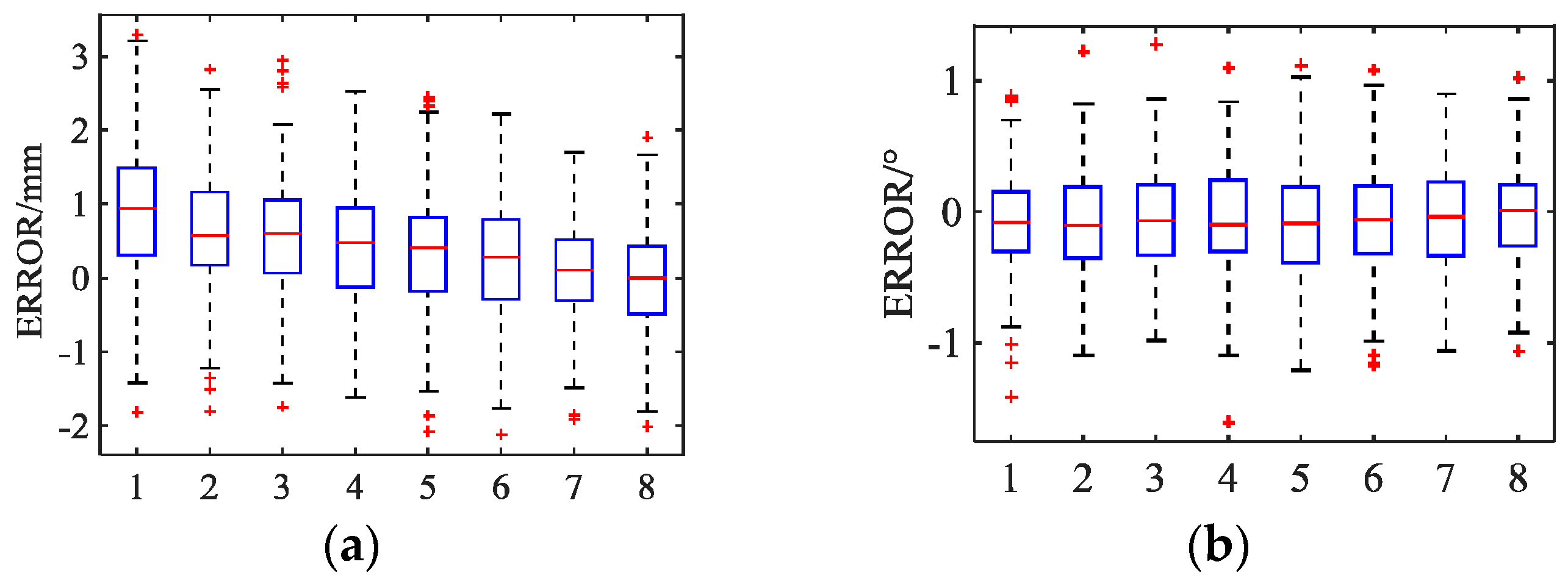

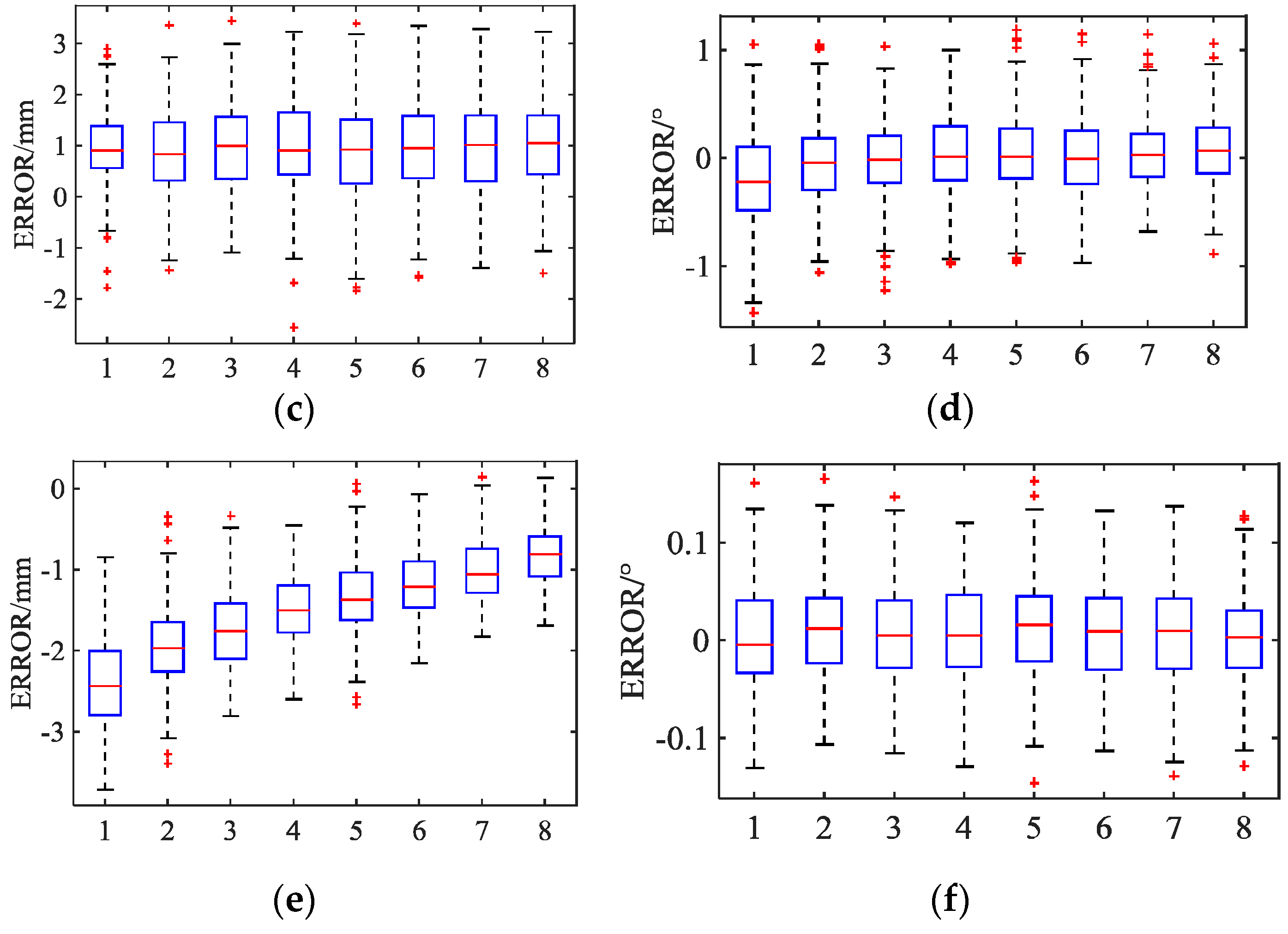

5. Ground-Based Semi-Physical Simulation Experiments

6. Conclusions

- This paper proposed a unified framework for determining the relative pose between two docking mechanisms, which reduces the dependence on artificial beacons or on the specific shape of the target spacecraft, as well as the errors introduced by manufacturing and assembly. Therefore, the novel method can be widely applied to low impact docking.

- The fusion of pose information and the optimization of the PnP problem solution greatly improve the real-time performance and the robustness of pose determination.

- The experiments verified that the method can be used to determine the relative pose between two docking mechanisms in close proximity for low impact docking. Moreover, the measurement accuracy and speed of the proposed method are superior to those of the PXS: the position measurement error is within 3.7 mm, the rotation error around the docking direction is less than 0.16°, and the measurement time is reduced by 85%.
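The figures of merit in the last conclusion can be computed from a measured pose and a reference pose. How the paper aggregates them is not stated here, so the helpers below rest on assumptions: position error as the Euclidean distance in millimetres (the reported 3.7 mm may instead be a per-axis bound), rotation error about the docking (Z) axis with angles wrapped to [−180°, 180°), and time reduction as 1 − t_new/t_ref. All function names and inputs are hypothetical.

```python
import math


def position_error_mm(measured, reference):
    """Euclidean distance between two 3D positions given in millimetres (assumed metric)."""
    return math.dist(measured, reference)


def wrap_deg(angle):
    """Wrap an angle in degrees to the interval [-180, 180)."""
    return (angle + 180.0) % 360.0 - 180.0


def roll_error_deg(measured_rz, reference_rz):
    """Absolute rotation error about the docking (Z) axis, in degrees."""
    return abs(wrap_deg(measured_rz - reference_rz))


def time_reduction(t_reference, t_new):
    """Fractional reduction of measurement time relative to a reference sensor."""
    return 1.0 - t_new / t_reference


# Illustrative inputs only, not measurements from the paper.
print(position_error_mm((1.0, 2.0, 2.0), (0.0, 0.0, 0.0)))  # 3.0
print(round(roll_error_deg(359.9, 0.05), 2))  # 0.15 (the difference wraps across 0°/360°)
print(round(time_reduction(1.0, 0.15), 2))  # 0.85
```

Read this way, an 85% reduction means the proposed method needs about 15% of the reference measurement time. Wrapping matters for the rotation error because a raw difference such as 359.9° − 0.05° would otherwise be reported as a huge error instead of 0.15°.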

Supplementary Materials

Author Contributions

Funding

Conflicts of Interest


| Name | Abbreviation | Origin and Direction |
|---|---|---|
| Camera coordinate system | CCS | OC: the optical center of the camera; +ZC: pointing toward the target; +XC, +YC: parallel to the axes of the image plane coordinate system, respectively. |
| Docking mechanism coordinate system of chasing spacecraft | DMCS_CS | OCS: center of the docking ring; +ZCS: closing direction; +YCS: line of symmetry through petal number 3; +XCS: completes a right-handed coordinate system. |
| Docking mechanism coordinate system of target spacecraft | DMCS_TS | OTS: center of the docking ring; XTS, YTS, ZTS: analogous to XCS, YCS, ZCS. |
| Marks coordinate system | MCS | —— |
| Chasing spacecraft coordinate system | CSCS | O1: center of gravity (CG) of the chasing spacecraft; +Z1: closing direction; +Y1: analogous to +YCS; +X1: completes a right-handed coordinate system. |
| Target spacecraft coordinate system | TSCS | O2: CG of the target spacecraft; X2, Y2, Z2: analogous to X1, Y1, Z1. |
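The coordinate systems in the preceding table are linked by rigid transforms, so the pose of the target docking mechanism in the chasing docking mechanism frame can be obtained by chaining them: T_CS←TS = T_CS←C · T_C←TS, where T_C←TS would come from the PnP solution and T_CS←C from a camera-to-docking-mechanism calibration. The sketch below shows this chaining with 4×4 homogeneous matrices; the helper names, the mounting offset and the pose values are hypothetical and only illustrate the bookkeeping.

```python
import numpy as np


def rot_z(deg):
    """Rotation matrix about the Z axis by deg degrees."""
    a = np.deg2rad(deg)
    c, s = np.cos(a), np.sin(a)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])


def make_T(R, t):
    """4x4 homogeneous transform mapping points from frame B into frame A."""
    T = np.eye(4)
    T[:3, :3] = R
    T[:3, 3] = t
    return T


def invert_T(T):
    """Inverse of a rigid transform, using R^T instead of a general matrix inverse."""
    R, t = T[:3, :3], T[:3, 3]
    return make_T(R.T, -R.T @ t)


# Hypothetical calibration: camera frame (CCS) expressed in the chaser docking frame (DMCS_CS),
# axes parallel, offset 60 mm along X and 20 mm behind the docking ring along Z.
T_cs_c = make_T(np.eye(3), [60.0, 0.0, -20.0])
# Hypothetical PnP output (mm): target docking frame (DMCS_TS) expressed in the camera frame.
T_c_ts = make_T(rot_z(1.5), [-55.0, 3.0, 125.0])

# Chain the transforms: pose of the target docking mechanism in the chaser docking frame.
T_cs_ts = T_cs_c @ T_c_ts
print(T_cs_ts[:3, 3].tolist())  # [5.0, 3.0, 105.0]
```

Using the closed-form inverse of a rigid transform (R^T, −R^T t) keeps the result exactly rigid, which is why `invert_T` avoids a general matrix inverse when converting in the opposite direction.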

| T-Mac | Uncertainty |
|---|---|
| Accuracy of rotation angles | 0.01° = 18 µm/100 mm (0.002″/ft) |
| Accuracy of time stamps | ±5 ms |
| Positional accuracy (for one single coordinate, X, Y or Z) | ±15 µm + 6 µm/m (±0.0006″ + 0.00007″/ft) |
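The rotation entry of the T-Mac table converts an angular uncertainty into a displacement at a given lever arm (small-angle arc length s = r·θ), and the positional entry grows linearly with distance. The check below reproduces the table's conversions; the function names are hypothetical, and reading the "per metre" term as metres of distance from the tracker is an assumption.

```python
import math


def lateral_offset_um(angle_deg, lever_arm_mm):
    """Displacement in µm caused by a small rotation of angle_deg at a lever arm of lever_arm_mm.

    Uses the small-angle arc length s = r * theta, with theta in radians.
    """
    return math.radians(angle_deg) * lever_arm_mm * 1000.0


def tmac_position_uncertainty_um(distance_m):
    """Single-axis T-Mac position uncertainty, +/-(15 µm + 6 µm per metre), as listed in the table."""
    return 15.0 + 6.0 * distance_m


print(round(lateral_offset_um(0.01, 100.0), 1))  # 17.5 (the table rounds this to 18 µm)
print(round(math.radians(0.01) * 12.0, 4))  # 0.0021 inch per foot (table: 0.002″/ft)
print(tmac_position_uncertainty_um(3.0))  # 33.0 µm at an assumed 3 m distance
```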

| Group | X/mm | Y/mm | Z/mm | Rx/° | Ry/° | Rz/° |
|---|---|---|---|---|---|---|
| 1 | −25/0/25 | 110 | −5/0/5 | | | |
| 2 | −25/0/25 | 100 | −2.5/0/2.5 | | | |
| 3 | −25/0/25 | 90 | −2.5/0/2.5 | | | |
| 4 | −20/0/20 | 80 | −2/0/2 | | | |
| 5 | −20/0/20 | 70 | −2/0/2 | | | |
| 6 | −15/0/15 | 60 | −1.5/0/1.5 | | | |
| 7 | −15/0/15 | 50 | −1.5/0/1.5 | | | |
| 8 | −10/0/10 | 40 | −2/0/2 | | | |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Liu, G.; Xu, C.; Zhu, Y.; Zhao, J. Monocular Vision-Based Pose Determination in Close Proximity for Low Impact Docking. Sensors 2019, 19, 3261. https://doi.org/10.3390/s19153261
