Structured Light Three-Dimensional Measurement Based on Machine Learning

Abstract

1. Introduction

2. Methods

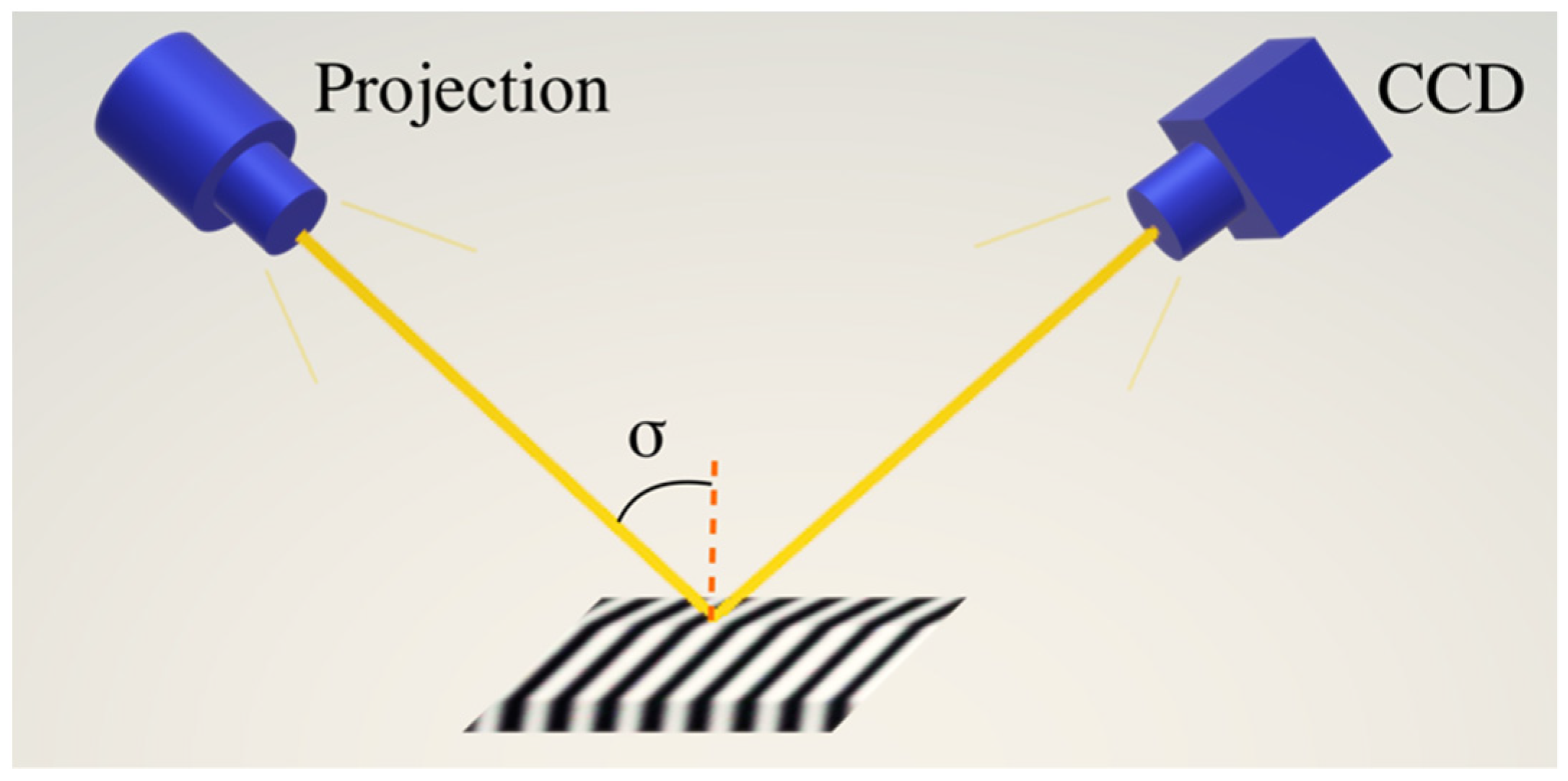

2.1. Structured Light Three-Dimensional Measurement
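Fourier transform profilometry (FTP), introduced by Takeda and Mutoh (1983), is one common way to recover the fringe phase in structured light measurement. The Python sketch below illustrates that general technique only. It is not presented as the procedure used in this work.

The sketch makes these assumptions:

- The deformed fringe image is I(x, y) = a + b cos(2π f0 x + φ(x, y)), with fringes varying along the image columns.
- The carrier frequency is known.
- A simple rectangular band-pass window isolates the +f0 spectral lobe.

The function name `ftp_wrapped_phase` and its parameters `carrier_freq` and `half_width` are hypothetical.

```python
import numpy as np


def ftp_wrapped_phase(fringe, carrier_freq, half_width):
    """Sketch of FTP phase extraction (hypothetical helper, not the authors' code).

    fringe:       2D array; fringes vary along axis 1 (the x direction).
    carrier_freq: assumed-known carrier frequency f0 in cycles per pixel.
    half_width:   half-width of a rectangular band-pass window, cycles per pixel.
    Returns the wrapped phase phi(x, y) in [-pi, pi].
    """
    n = fringe.shape[1]
    # Row-wise 1D FFT: each row's spectrum has a DC term and lobes at +f0 and -f0.
    spectrum = np.fft.fft(fringe, axis=1)
    freqs = np.fft.fftfreq(n)  # frequency of each FFT bin, cycles per pixel
    # Keep only the +f0 lobe; this discards the background a and the conjugate lobe.
    mask = np.abs(freqs - carrier_freq) <= half_width
    analytic = np.fft.ifft(spectrum * mask, axis=1)  # ~ (b/2) exp(i(2*pi*f0*x + phi))
    # Remove the carrier by multiplying with exp(-i*2*pi*f0*x), then take the angle.
    x = np.arange(n)
    baseband = analytic * np.exp(-2j * np.pi * carrier_freq * x)
    return np.angle(baseband)
```

The returned phase is wrapped to [−π, π]. For larger deformations it would still need phase unwrapping (for example row-wise with `numpy.unwrap`) and a phase-to-height conversion, neither of which is shown here.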

2.2. Least Squares Support Vector Machine Regression
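Suykens and Vandewalle formulated least squares support vector machine (LS-SVM) regression by replacing the inequality constraints of the standard SVM with equality constraints. Training then reduces to a single linear system in the bias b and the support values α. This system involves N training samples, a kernel matrix K with K_ij = K(x_i, x_j), and a regularization parameter γ:

$$
\begin{bmatrix} 0 & \mathbf{1}^\top \\ \mathbf{1} & K + \gamma^{-1} I \end{bmatrix}
\begin{bmatrix} b \\ \boldsymbol{\alpha} \end{bmatrix}
=
\begin{bmatrix} 0 \\ \mathbf{y} \end{bmatrix},
\qquad
\hat{y}(\mathbf{x}) = \sum_{i=1}^{N} \alpha_i K(\mathbf{x}, \mathbf{x}_i) + b
$$

The Python sketch below solves that system directly. The following are illustrative assumptions, not the authors' code or any library's API:

- the Gaussian RBF kernel;
- the parameter names `gamma` and `sigma`;
- the helper names `rbf_kernel`, `lssvm_fit` and `lssvm_predict`.

```python
import numpy as np


def rbf_kernel(A, B, sigma):
    """Assumed Gaussian RBF kernel exp(-||a - b||^2 / (2 sigma^2)) between rows of A and B."""
    sq = np.sum(A**2, axis=1)[:, None] + np.sum(B**2, axis=1)[None, :] - 2.0 * A @ B.T
    return np.exp(-np.maximum(sq, 0.0) / (2.0 * sigma**2))  # clamp tiny negative round-off


def lssvm_fit(X, y, gamma, sigma):
    """Solve the LS-SVM regression system for bias b and support values alpha."""
    n = X.shape[0]
    K = rbf_kernel(X, X, sigma)
    # Bordered system [[0, 1^T], [1, K + I/gamma]] [b; alpha] = [0; y]
    A = np.zeros((n + 1, n + 1))
    A[0, 1:] = 1.0
    A[1:, 0] = 1.0
    A[1:, 1:] = K + np.eye(n) / gamma
    rhs = np.concatenate(([0.0], y))
    sol = np.linalg.solve(A, rhs)
    return sol[0], sol[1:]


def lssvm_predict(X_train, alpha, b, X_new, sigma):
    """Evaluate y_hat(x) = sum_i alpha_i K(x, x_i) + b at each row of X_new."""
    return rbf_kernel(X_new, X_train, sigma) @ alpha + b
```

In a structured light setting, X could hold measured phase values (possibly with pixel coordinates) and y the corresponding known heights. That mapping is an assumption for illustration only. Solving the dense (N+1)×(N+1) system costs O(N³), which keeps this direct approach practical only for moderate training sets.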

3. Experiment and Results

3.1. Algorithms and Models

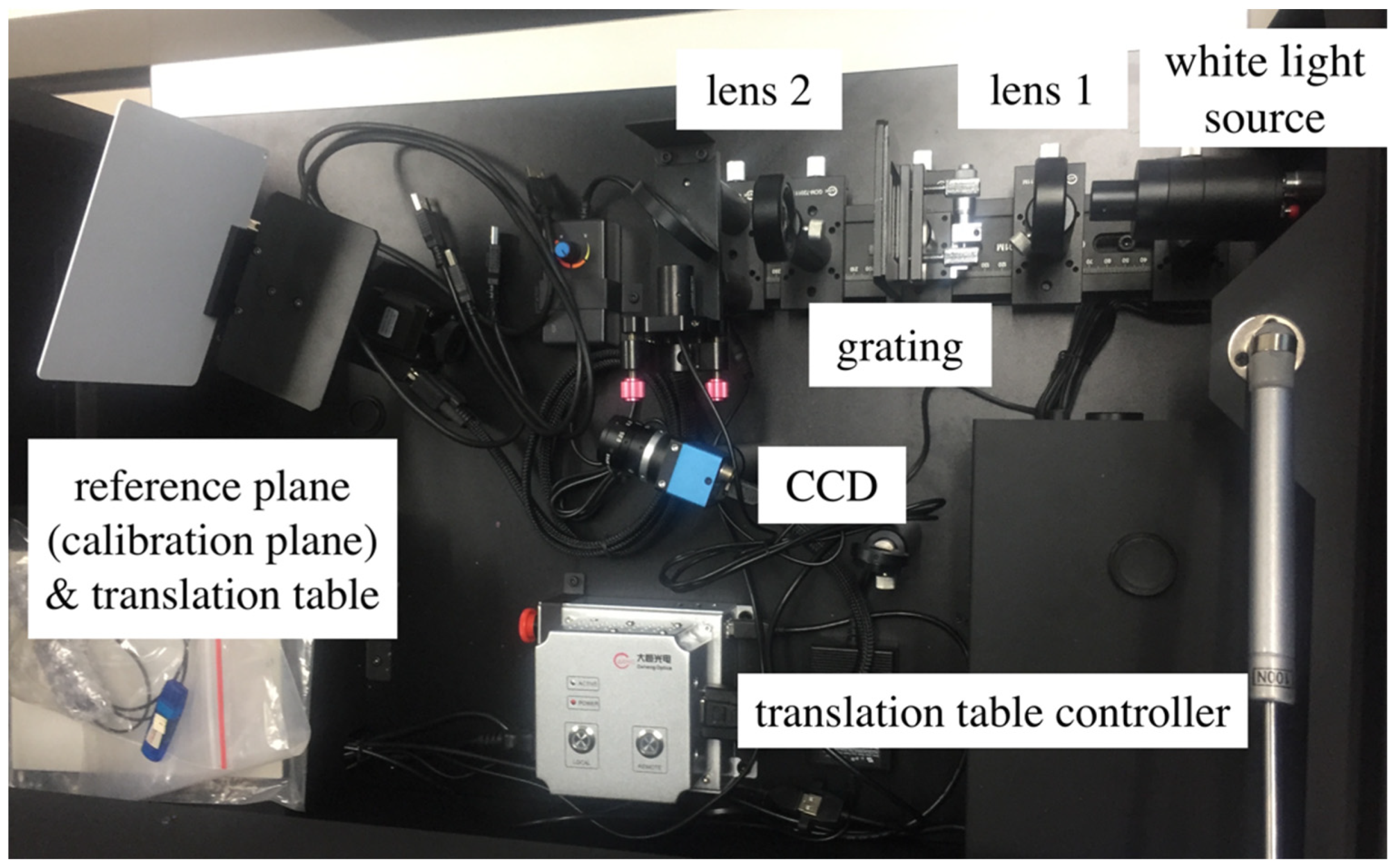

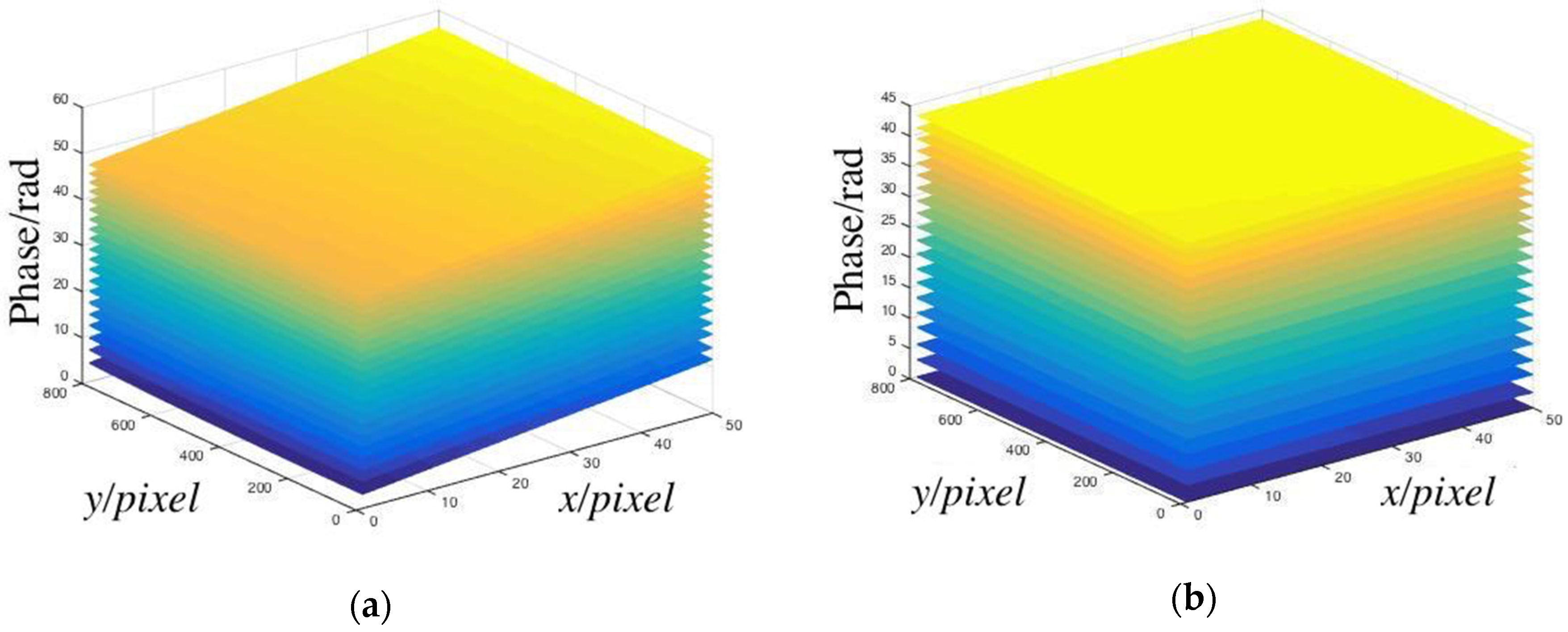

3.2. Calibration

3.3. Measurement and Results

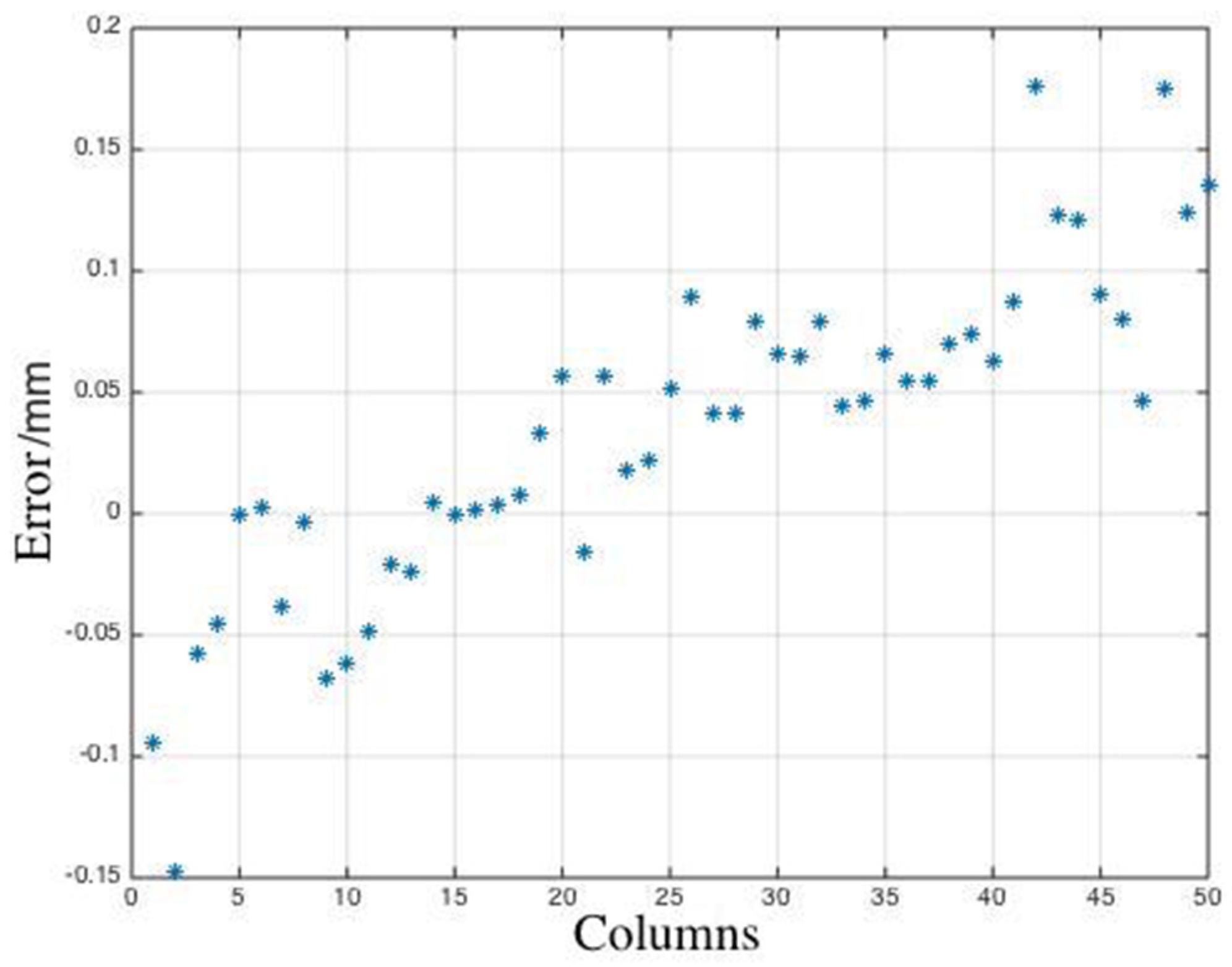

3.3.1. Planar Surface

3.3.2. Curved Surface

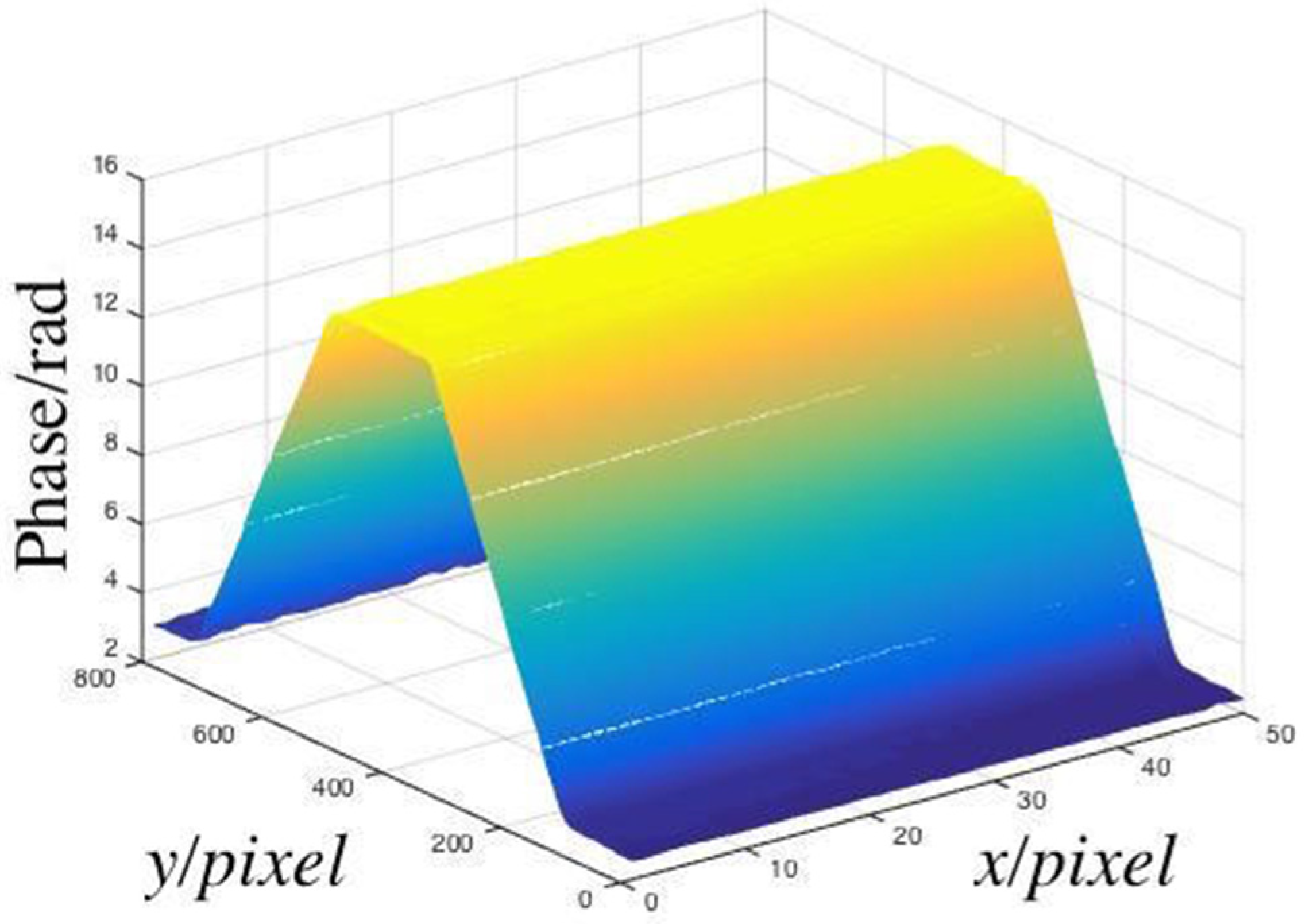

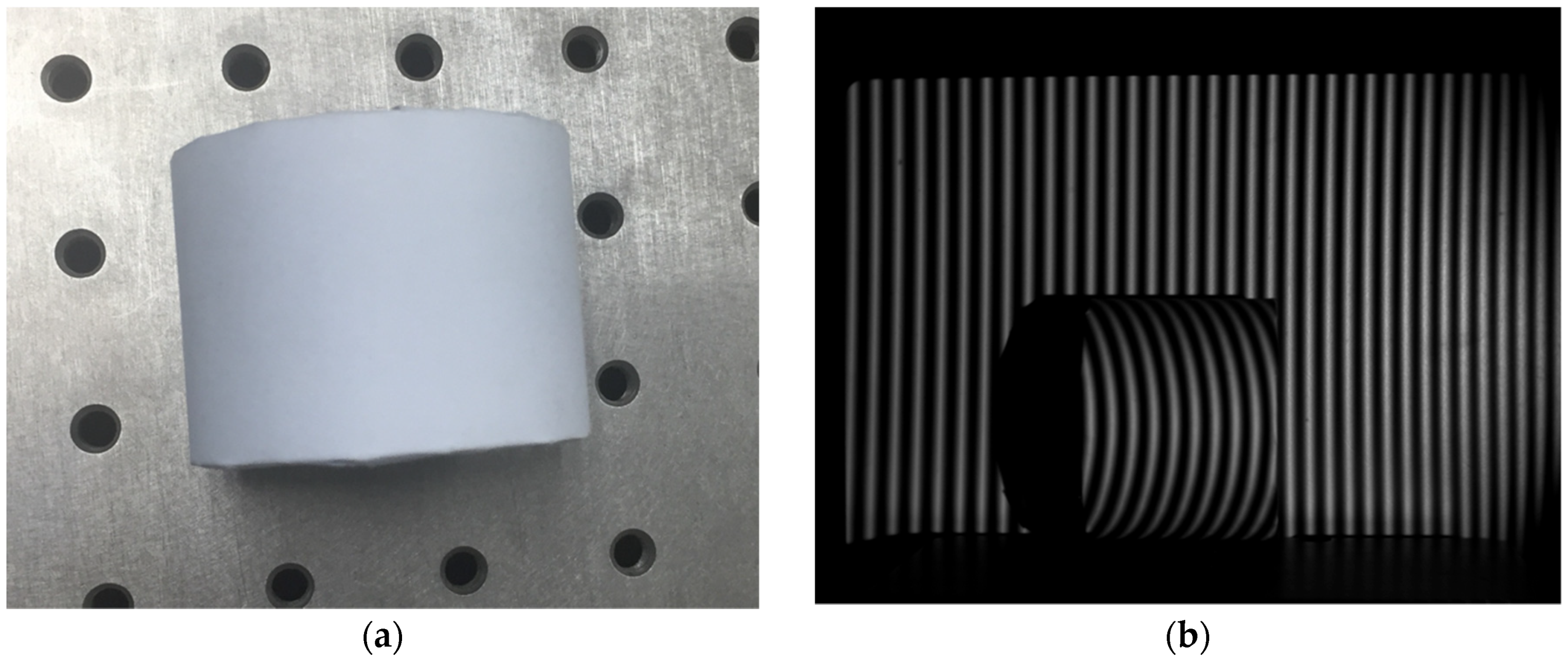

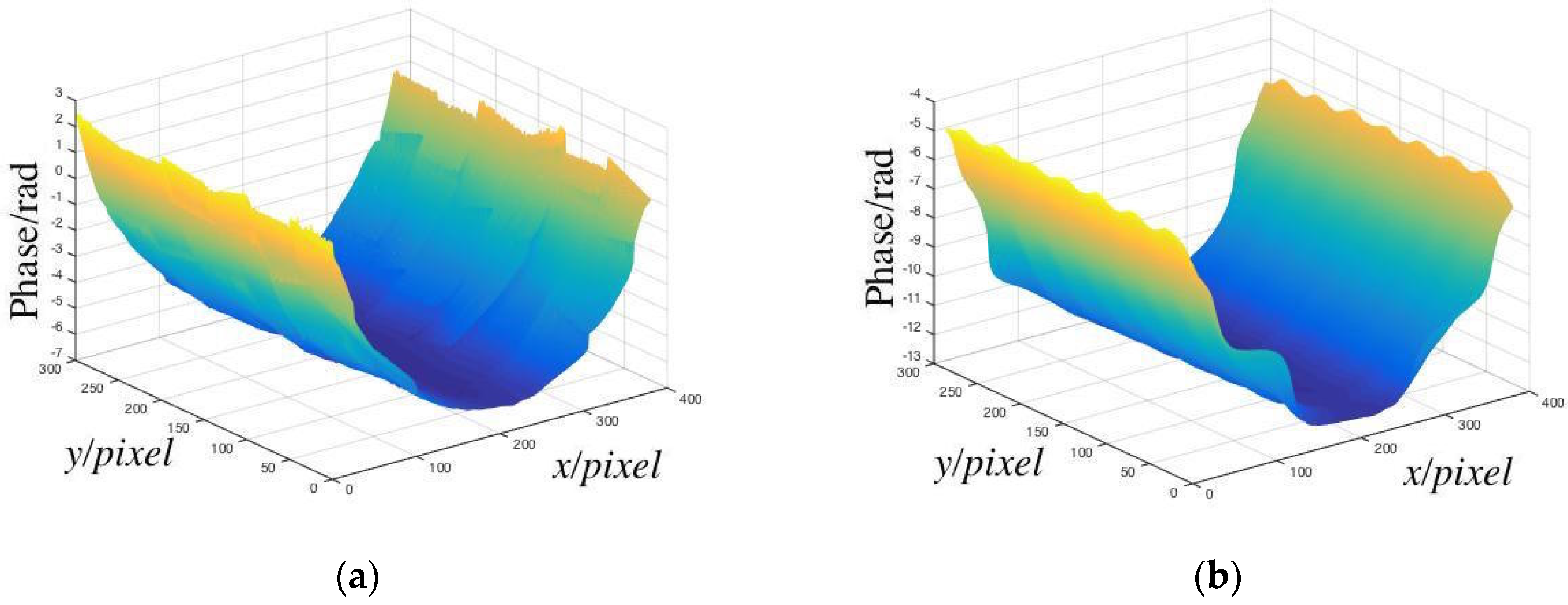

Simple Curved Surface

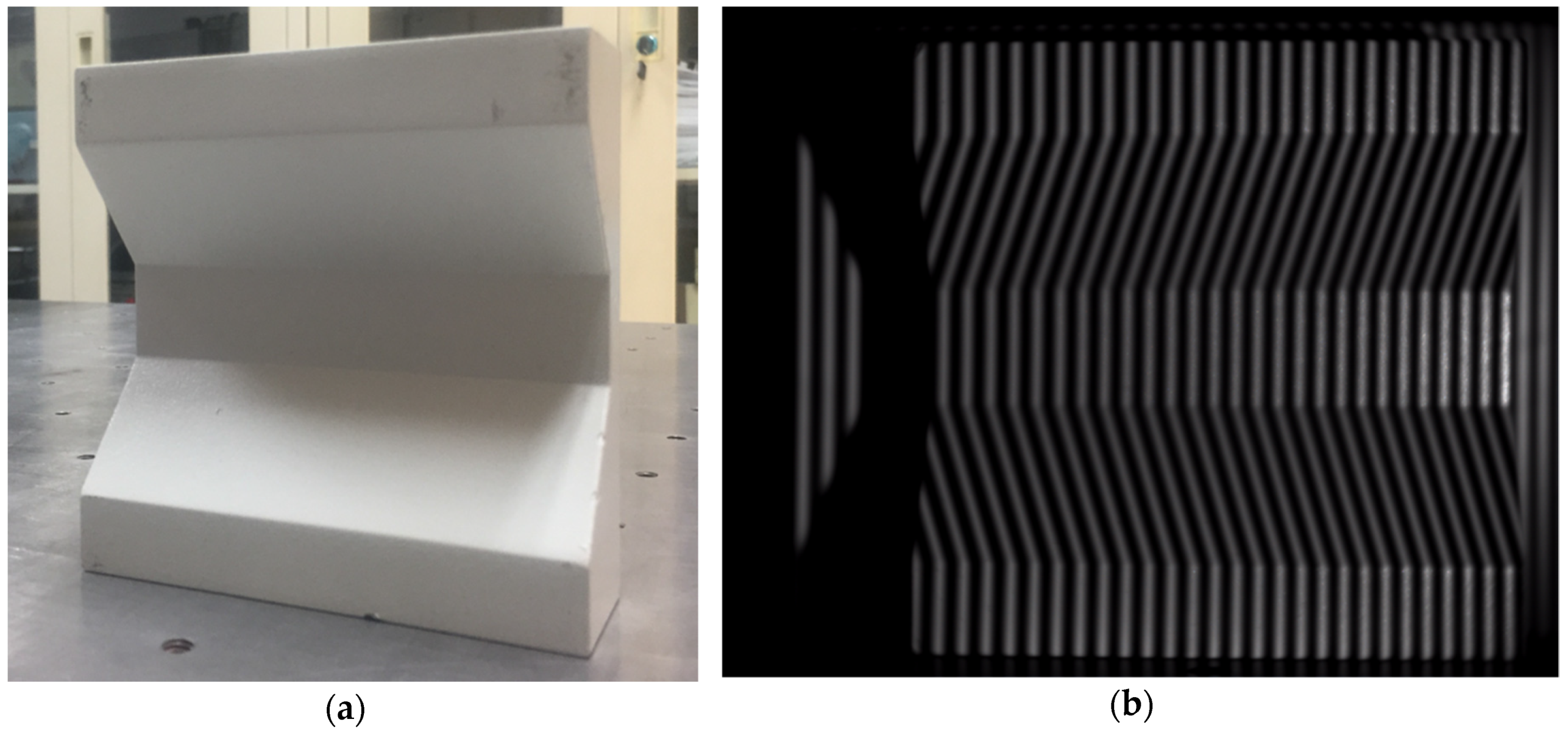

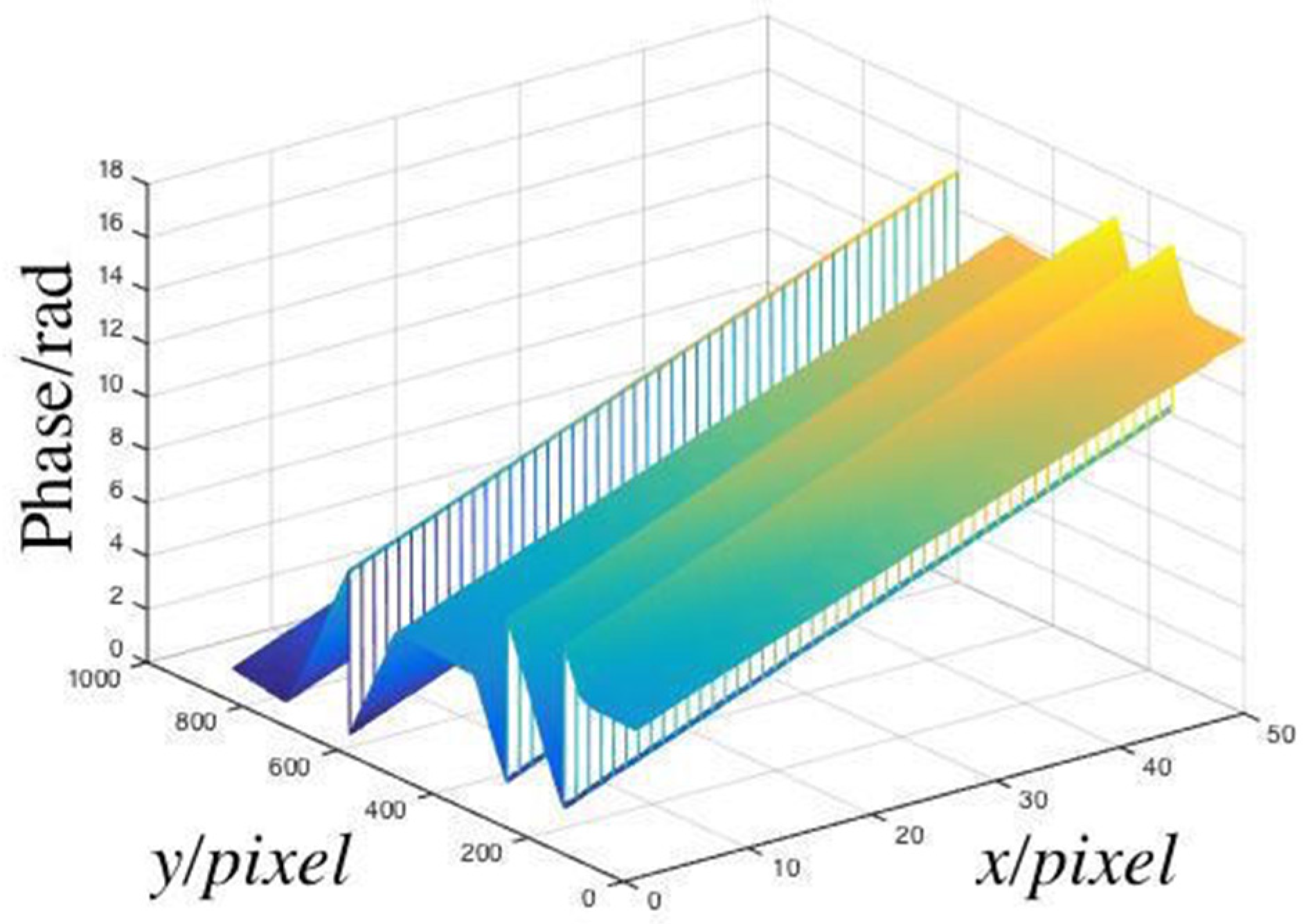

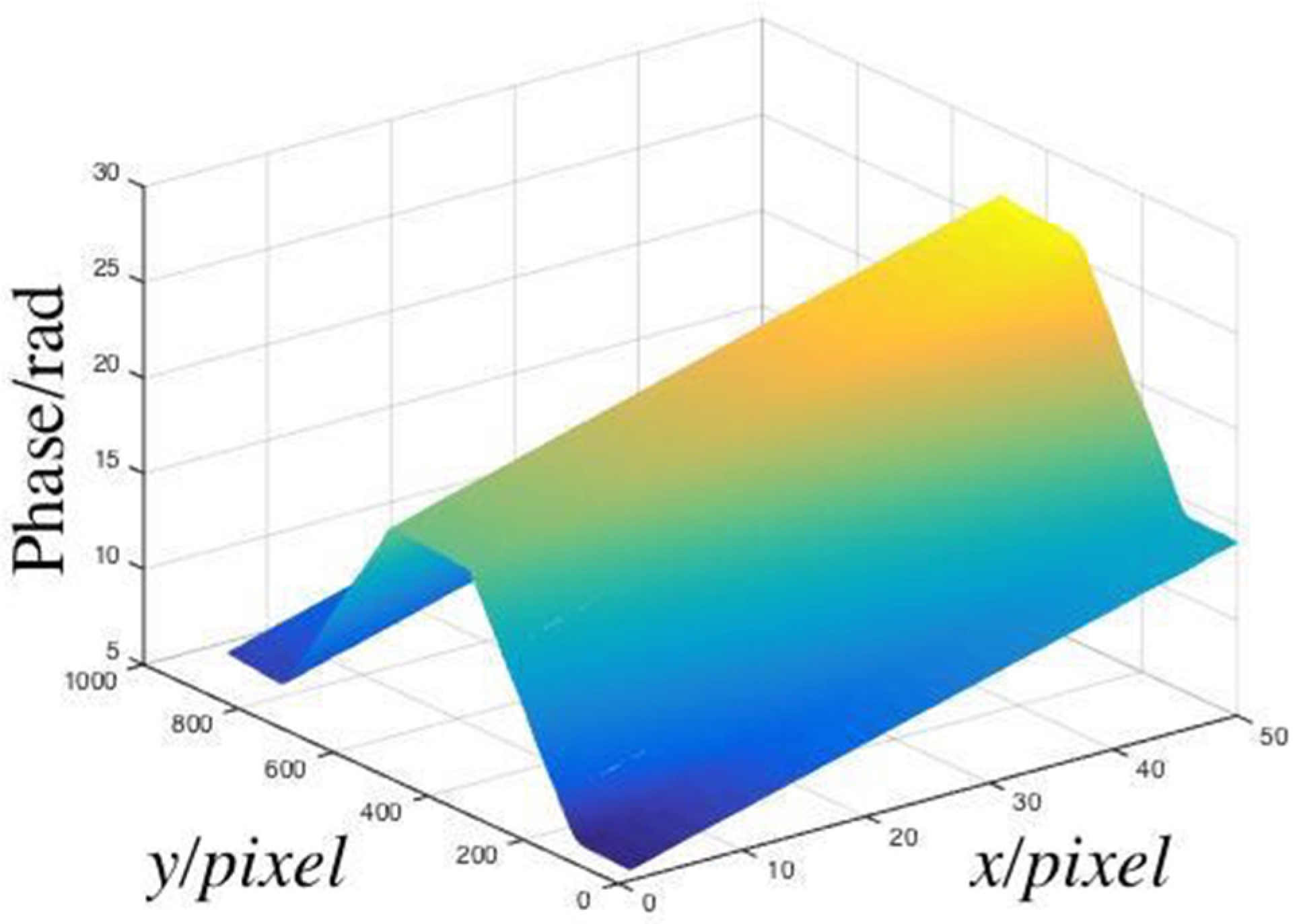

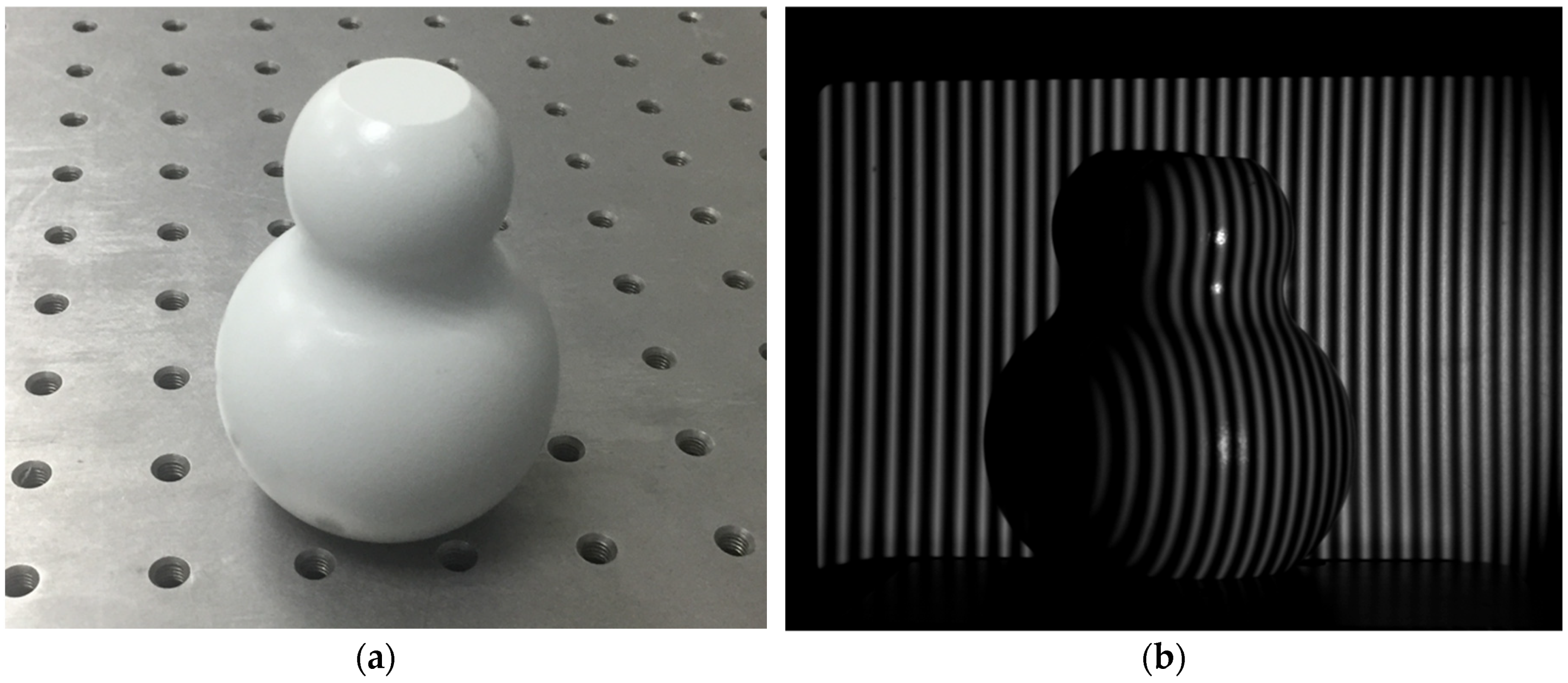

Complex Curved Surface

4. Discussion

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Zhong, C.; Gao, Z.; Wang, X.; Shao, S.; Gao, C. Structured Light Three-Dimensional Measurement Based on Machine Learning. Sensors 2019, 19, 3229. https://doi.org/10.3390/s19143229