Detecting Anomalies of Satellite Power Subsystem via Stage-Training Denoising Autoencoders

Abstract

1. Introduction

- Definition of anomalous behavior: The boundary between normal and anomalous behavior is often imprecise, so it is difficult to define a normal region that encompasses every possible normal behavior. An observation flagged as anomalous that lies close to the boundary may actually be normal, and vice versa.

- Irrelevant features: A high proportion of irrelevant features acts as noise in the input data and masks the true anomalies. The challenge is to choose a subspace of the data that highlights the relevant attributes.

- Bias of scores: Scores based on norms are biased toward high-dimensional subspaces unless they are normalized appropriately. In particular, distances computed in spaces of different dimensionality (and thus distances measured in different subspaces) are not directly comparable.
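The bias of norm-based scores can be illustrated with a small simulation. The sketch below is not from the paper: it assumes every feature is independent unit-variance Gaussian noise, and it uses division by the square root of the dimension as one common normalization (the source does not say which normalization is appropriate). The function names `l2_norm` and `mean_scores` are hypothetical.

```python
import math
import random


def l2_norm(vec):
    """Euclidean (L2) norm of a vector."""
    return math.sqrt(sum(v * v for v in vec))


def mean_scores(dim, n_samples=2000, seed=0):
    """Average raw and dimension-normalized L2 scores of N(0, 1) vectors.

    Illustrative assumption: all features are independent unit-variance
    noise, so no subspace is genuinely "more anomalous" than another.
    """
    rng = random.Random(seed)
    raw_total = 0.0
    for _ in range(n_samples):
        raw_total += l2_norm([rng.gauss(0.0, 1.0) for _ in range(dim)])
    raw = raw_total / n_samples
    # Dividing by sqrt(dim) turns the norm into a root-mean-square value.
    return raw, raw / math.sqrt(dim)


if __name__ == "__main__":
    for dim in (2, 8, 32):
        raw, normalized = mean_scores(dim)
        print(f"dim={dim:3d}  raw={raw:.2f}  normalized={normalized:.2f}")
```

Under these assumptions the raw score grows with the number of dimensions even though the data are equally "normal" in every subspace, while the normalized score stays on a comparable scale.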

2. Background

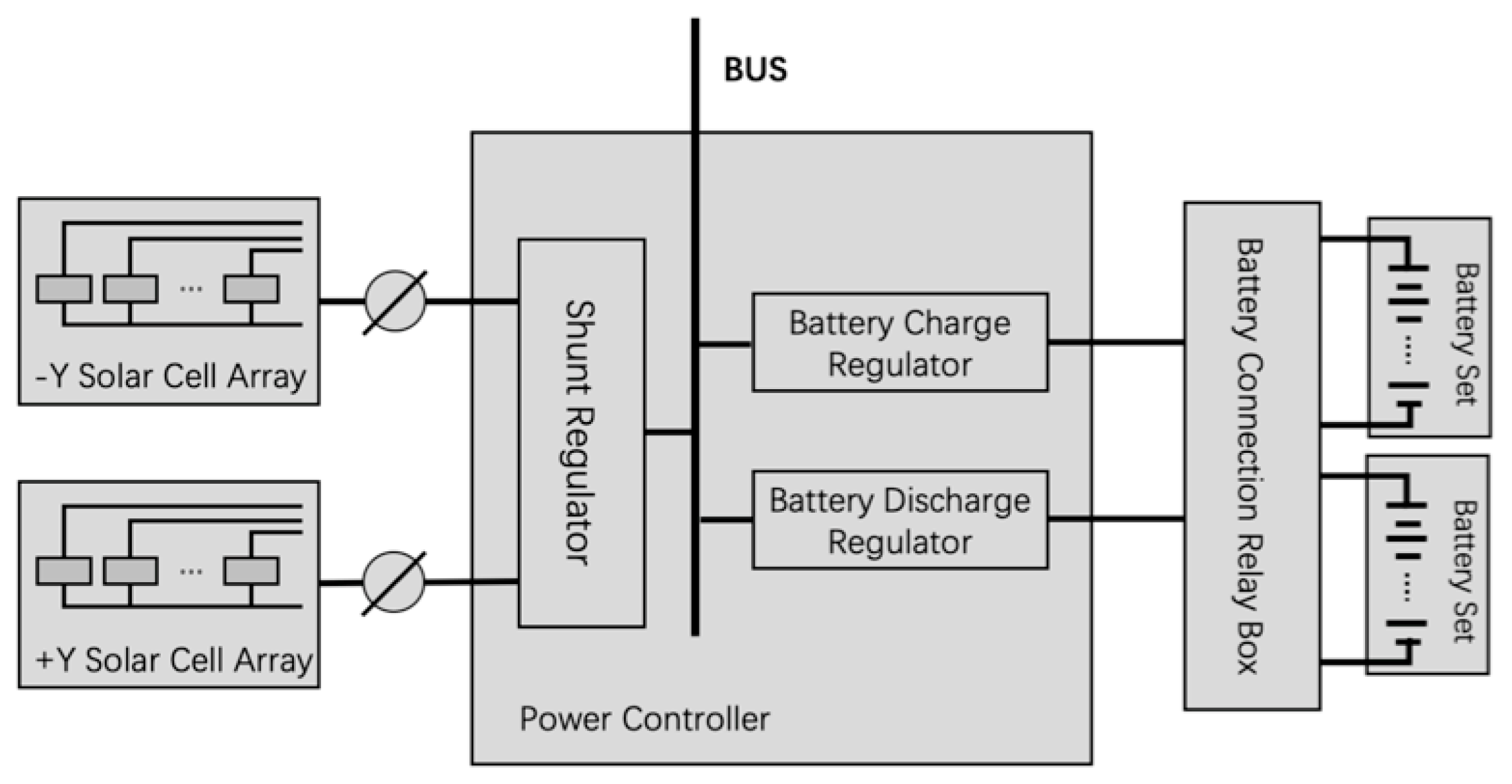

2.1. Satellite Power Subsystem Description

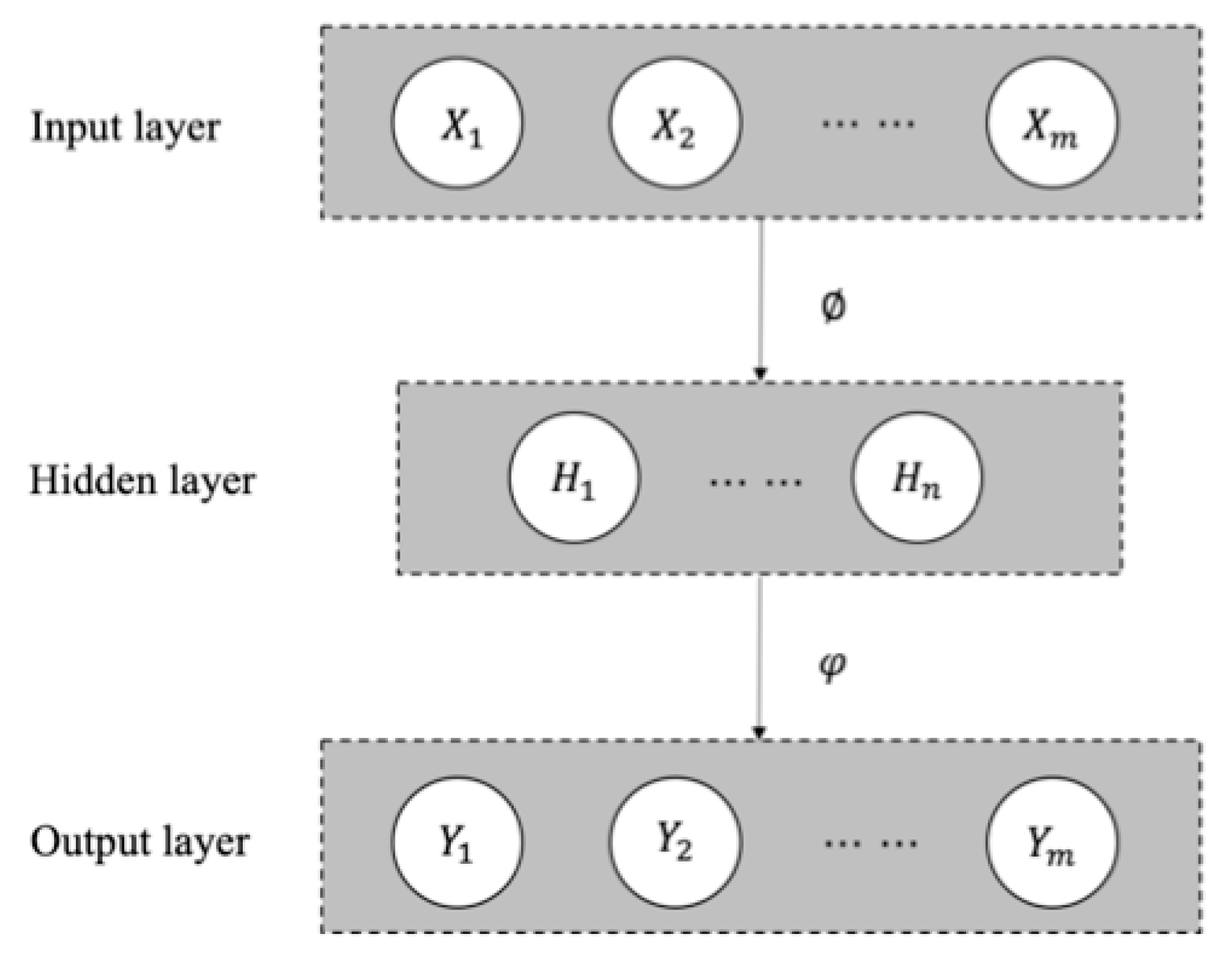

2.2. The Autoencoder

3. Research Methodology

3.1. Data Exploration and Preprocessing

3.2. Stage-Training Denoising Autoencoders

3.3. Performance Evaluation

3.3.1. Computing the Anomaly Scores and Anomaly Threshold

3.3.2. Model Evaluation

4. Experiment and Discussion

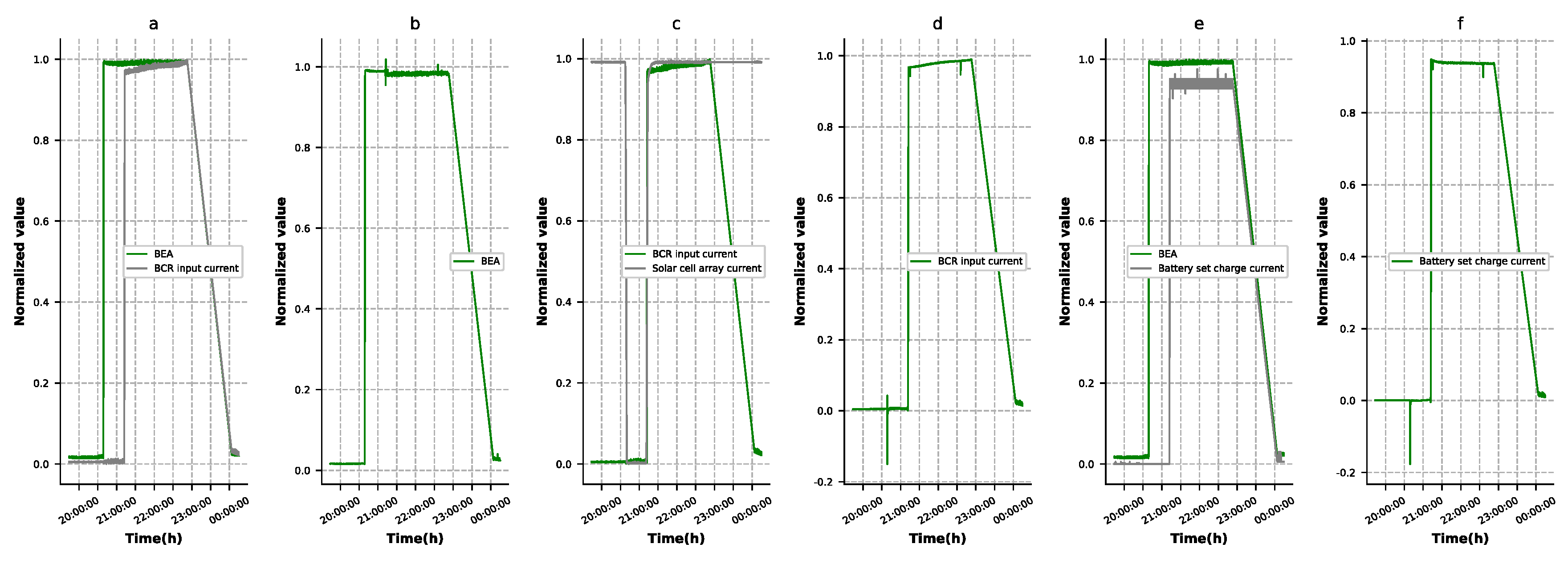

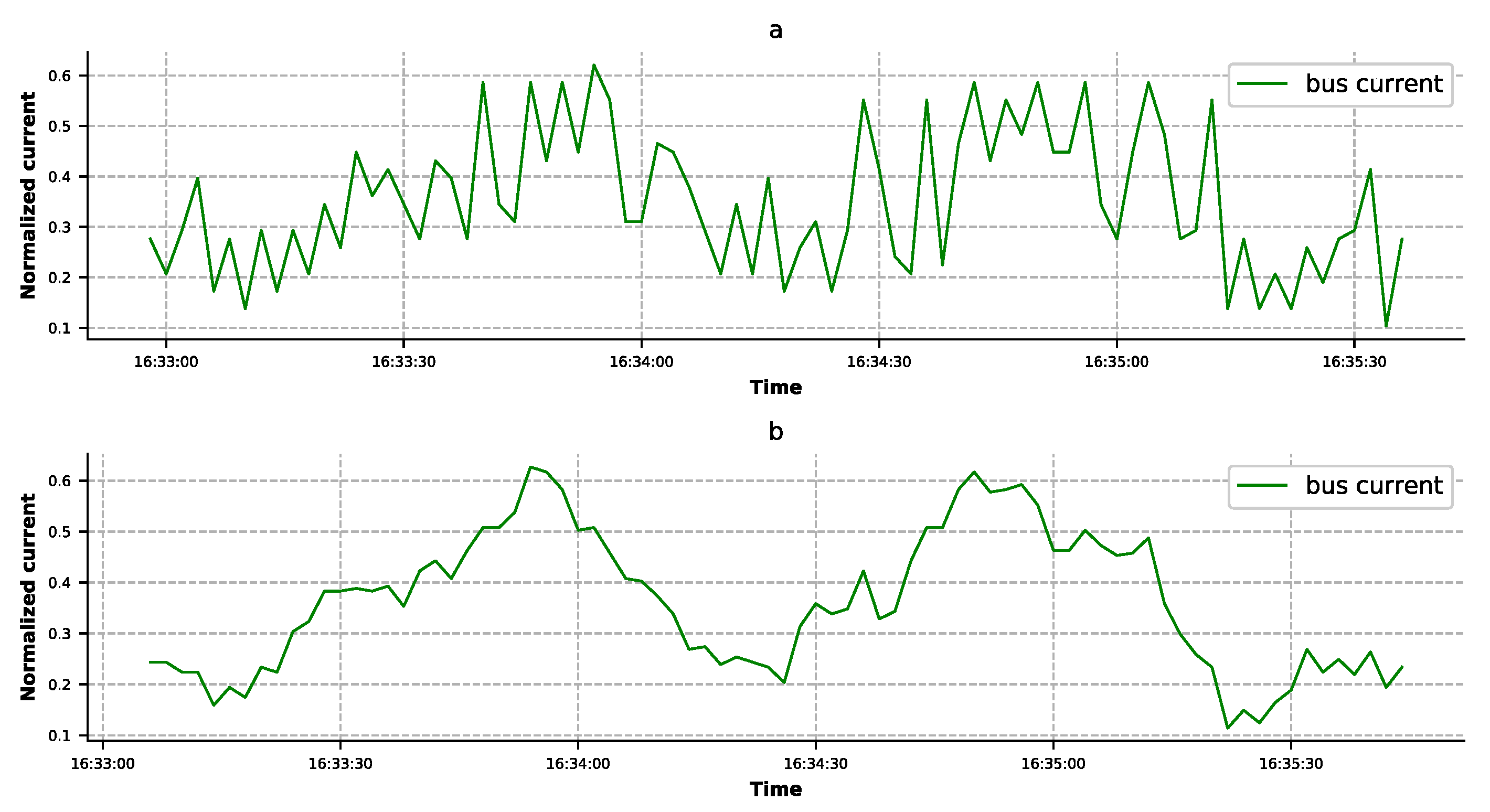

4.1. Data Exploration and Preprocessing of the Telemetry Data

- Bus current and temperature;

- Battery set whole voltage and battery set single voltage;

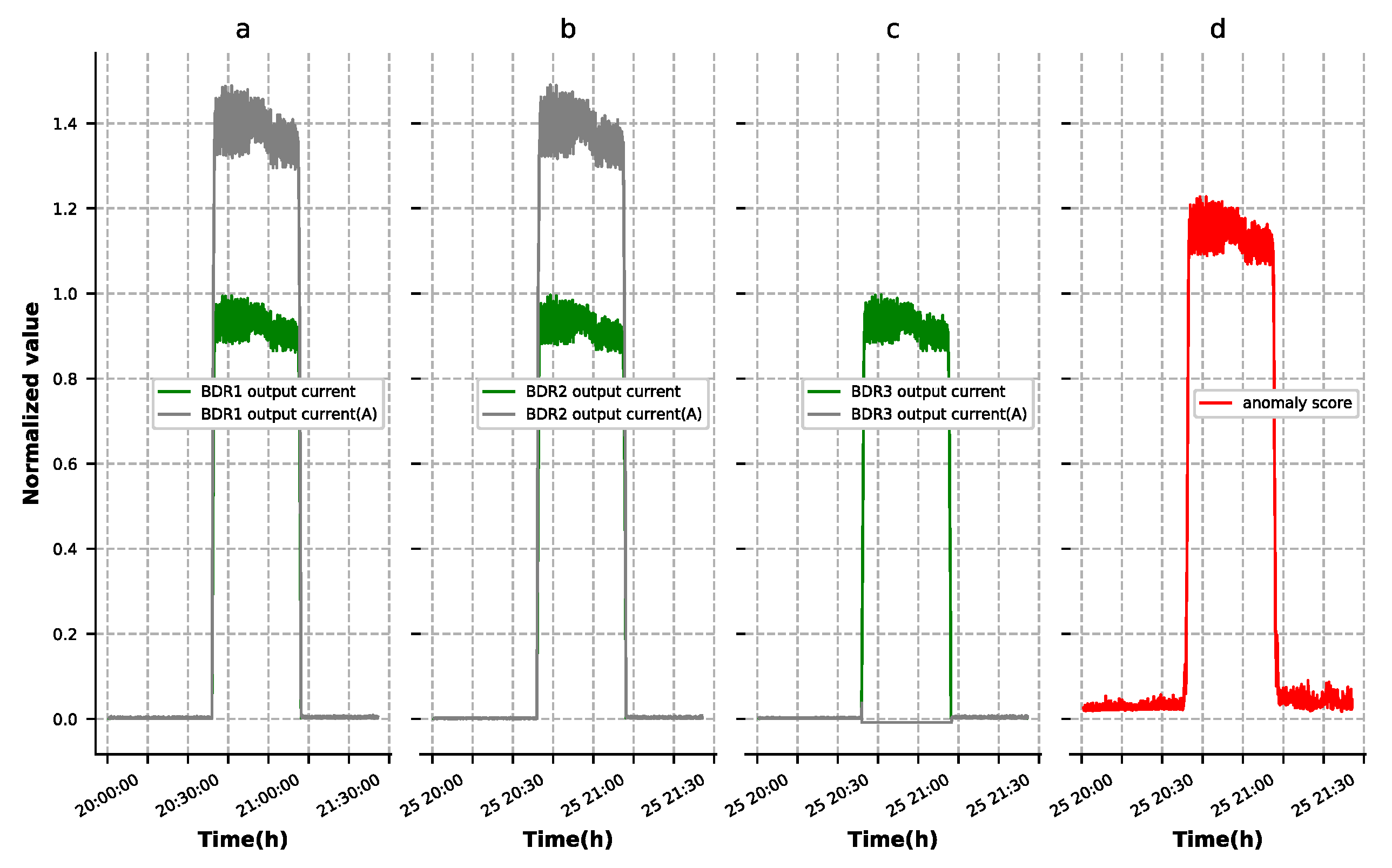

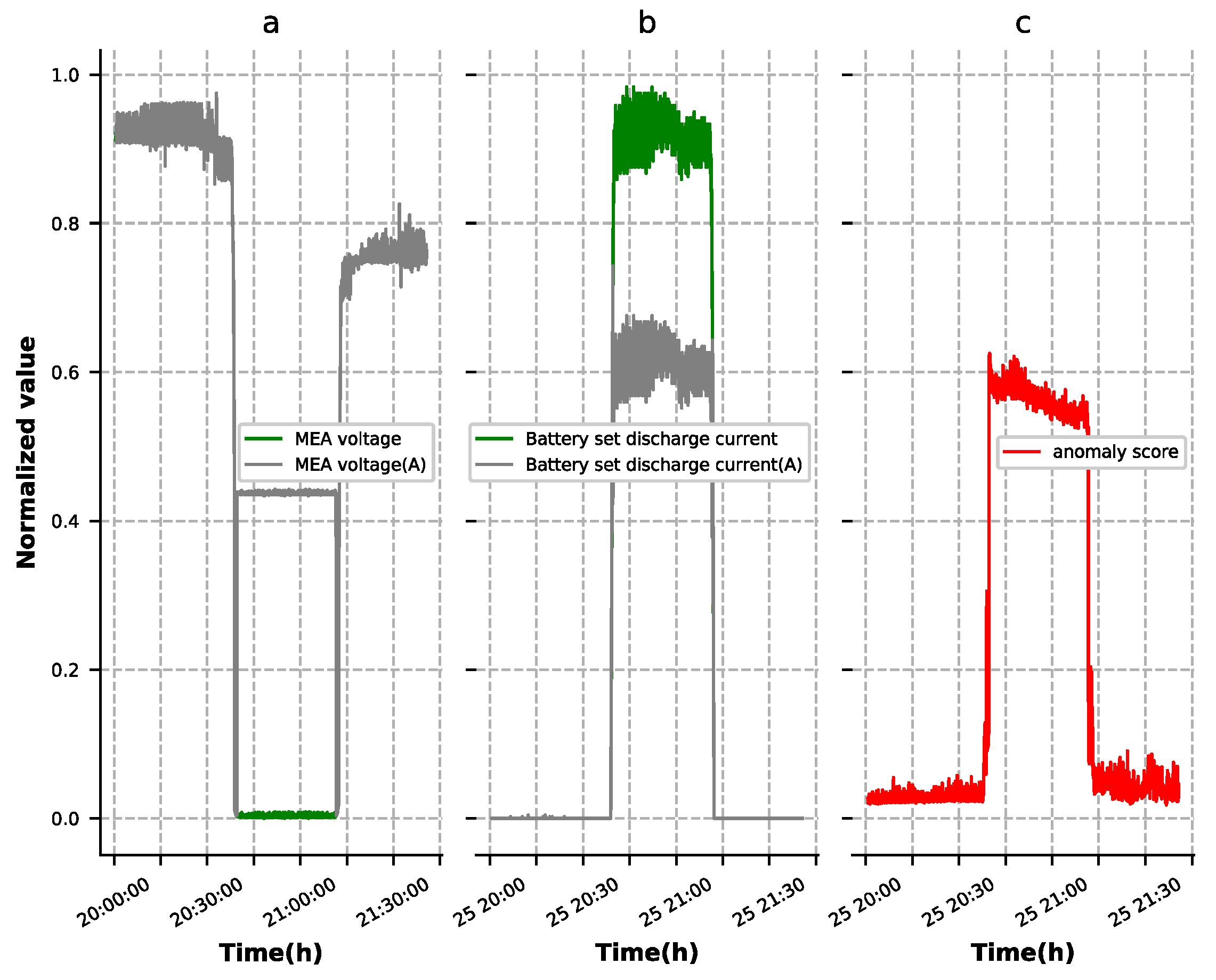

- BDR/BCR output current and battery set discharge current;

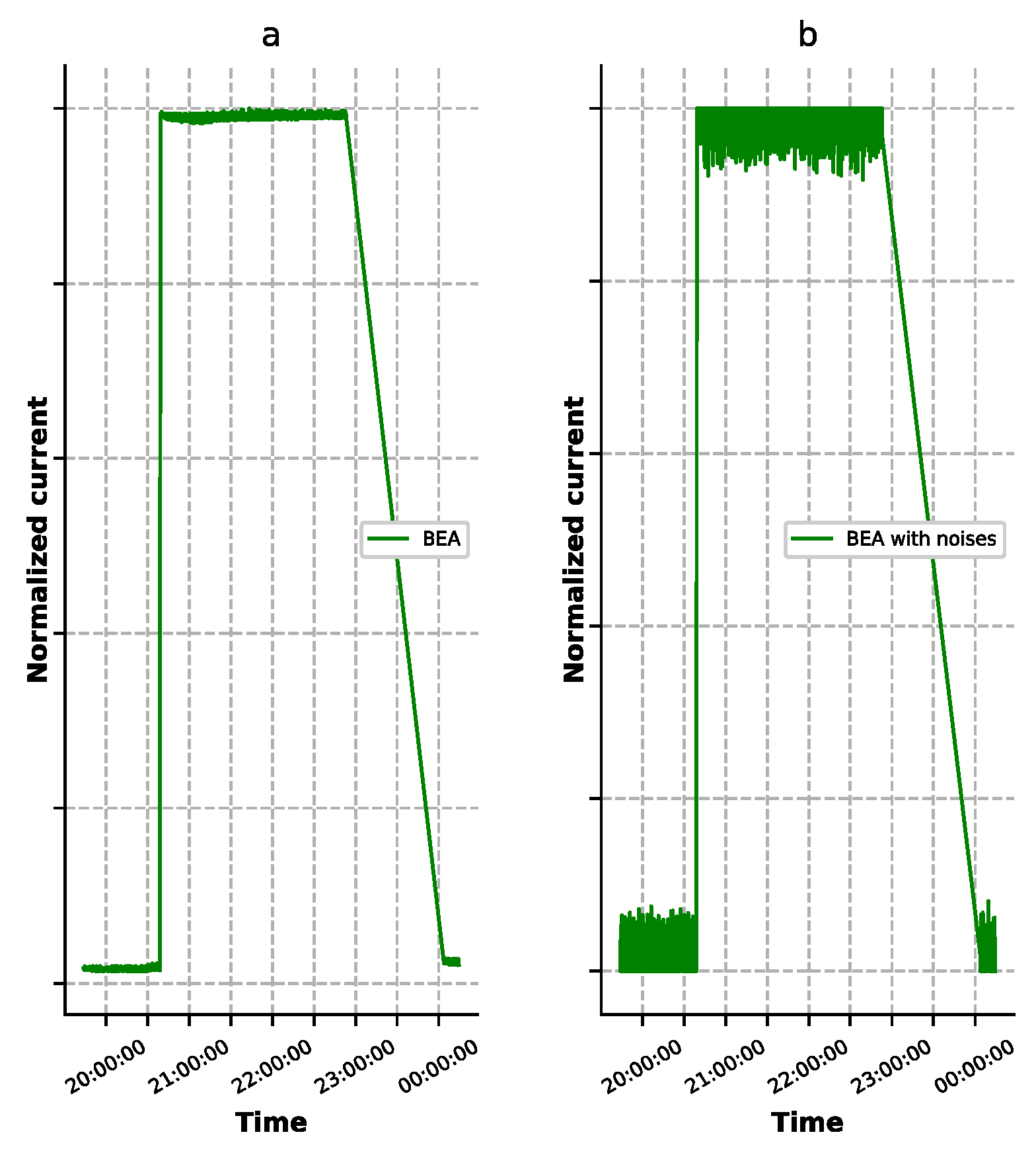

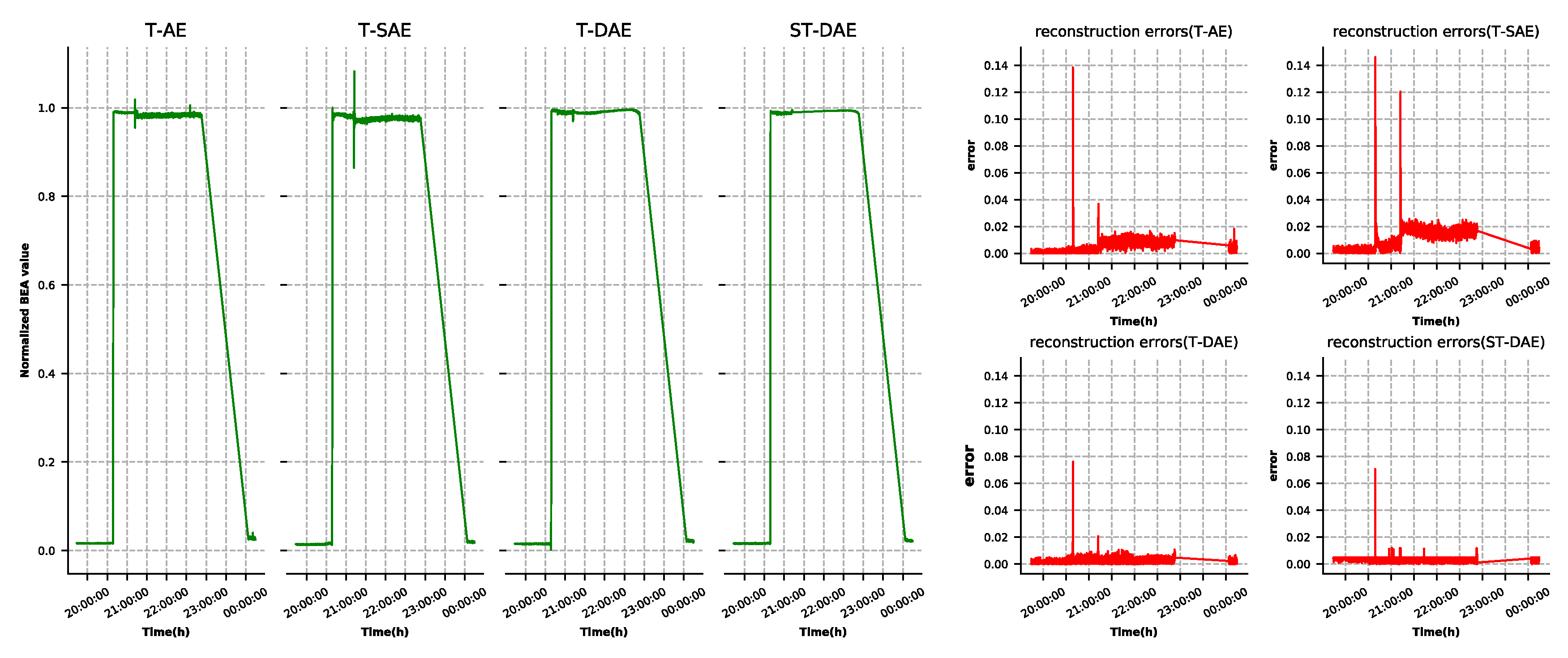

- BEA and solar cell array current;

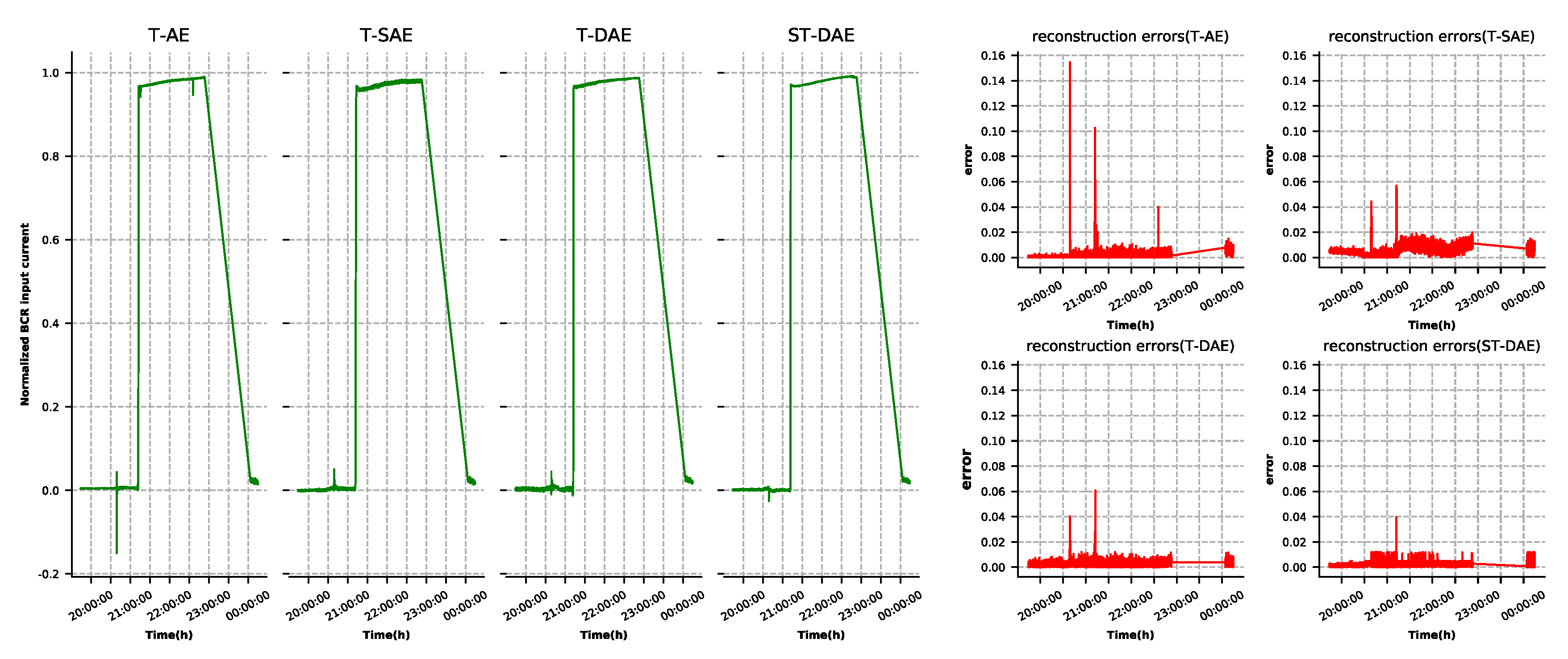

- BCR input current;

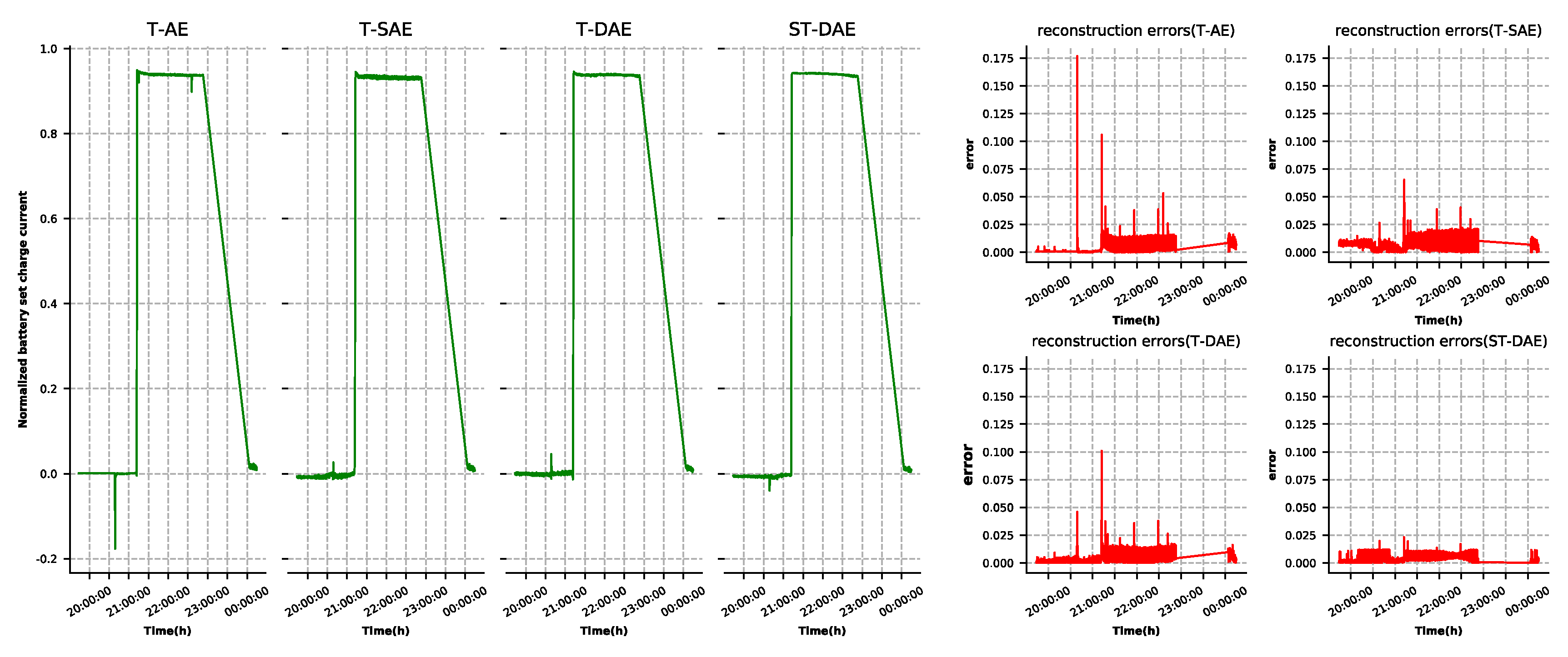

- Battery set charge current;

- MEA voltage.

4.2. Model Training

4.3. Performance Evaluation

4.3.1. Evaluation on Model Reconstruction Capability

4.3.2. Evaluation on Point Anomalies Detection Capability

4.3.3. Evaluation on Contextual Anomalies Detection Capability

5. Conclusion and Future Work

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Autoencoder | Autoencoder Type | Training Method |
|---|---|---|
| T-AE | Common | Traditional |
| T-SAE | Sparse | Traditional |
| T-DAE | Denoising | Traditional |
| ST-DAE | Denoising | Stage-training |

| Sensor Class | Number of Sensors |
|---|---|
| Bus current | 1 |
| Battery set charge current | 1 |
| Battery set discharge current | 1 |
| BCR input current | 2 |
| BDR/BCR output current | 6 |
| Temperature | 4 |
| Solar cell array current | 2 |
| Battery set whole voltage | 2 |
| Battery set single voltage | 11 |
| MEA voltage | 2 |
| BEA | 2 |

| Autoencoder | True Positive | False Negative | False Positive | True Negative | Recall | Precision |
|---|---|---|---|---|---|---|
| T-AE | 14591 | 109 | 186 | 1814 | 0.90700 | 0.94331 |
| T-SAE | 14611 | 89 | 191 | 1809 | 0.90450 | 0.95311 |
| T-DAE | 14690 | 10 | 63 | 1937 | 0.96850 | 0.99486 |
| ST-DAE | 14695 | 5 | 12 | 1988 | 0.99400 | 0.99749 |
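The Recall and Precision columns do not follow from the usual TP-based formulas applied to the column labels (for T-AE, TP / (TP + FN) would be roughly 0.99, not 0.907). They are reproduced, up to rounding in the last digit, if the anomalous class is treated as the class of interest, that is, Recall = TN / (TN + FP) and Precision = TN / (TN + FN) in the table's labeling. This reading is inferred from the numbers and is not stated in the table itself; the sketch below simply checks it, and the name `anomaly_recall_precision` is hypothetical.

```python
# Counts copied from the results table: (TP, FN, FP, TN),
# followed by the reported (recall, precision).
RESULTS = {
    "T-AE": ((14591, 109, 186, 1814), (0.90700, 0.94331)),
    "T-SAE": ((14611, 89, 191, 1809), (0.90450, 0.95311)),
    "T-DAE": ((14690, 10, 63, 1937), (0.96850, 0.99486)),
    "ST-DAE": ((14695, 5, 12, 1988), (0.99400, 0.99749)),
}


def anomaly_recall_precision(tp, fn, fp, tn):
    """Recall and precision with the anomalous class as the class of interest.

    Assumption (inferred, not stated in the source): the table labels the
    normal class as "positive", so detected anomalies are counted under TN.
    """
    recall = tn / (tn + fp)      # share of true anomalies that were detected
    precision = tn / (tn + fn)   # share of anomaly alarms that were correct
    return recall, precision


if __name__ == "__main__":
    for name, (counts, reported) in RESULTS.items():
        recall, precision = anomaly_recall_precision(*counts)
        print(f"{name:7s} recall={recall:.5f} (reported {reported[0]:.5f})  "
              f"precision={precision:.5f} (reported {reported[1]:.5f})")
```

Read this way, ST-DAE misses 12 of the 2000 anomalous samples and raises 5 false alarms on the 14700 normal samples.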

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Jin, W.; Sun, B.; Li, Z.; Zhang, S.; Chen, Z. Detecting Anomalies of Satellite Power Subsystem via Stage-Training Denoising Autoencoders. Sensors 2019, 19, 3216. https://doi.org/10.3390/s19143216