Abstract

For a visual/inertial integrated system, the calibration of extrinsic parameters plays a crucial role in ensuring accurate navigation and measurement. In this work, a novel extrinsic parameter calibration method is developed based on geometrical constraints in the object space and is implemented through a manual swinging motion. The camera and IMU frames are aligned to the system body frame, which is predefined by the mechanical interface. During the swinging motion, a fixed checkerboard provides constraints for calibrating the extrinsic parameters of the camera, whereas the angular velocity and acceleration provide constraints for calibrating the extrinsic parameters of the IMU. We exploit the complementary nature of the camera and IMU: the latter assists in checkerboard corner detection and correction, while the former suppresses the effects of IMU drift. The results of the calibration experiment show that the extrinsic parameter accuracy reaches 0.04° for each Euler angle and 0.15 mm for each position vector component (1σ).

1. Introduction

Visual/inertial integrated systems have been used in many contexts, including indoor [1,2,3], underwater [4], and space [5] environments, as well as in measurement tasks [6,7]. To achieve accurate navigation and measurement, the camera and inertial sensor frames should be aligned to the carrier frame in a process often called extrinsic parameter calibration or alignment. In this process, a coordinate transformation is established between each sensor frame and the system body frame. Without calibrating the extrinsic parameters, the navigation errors will be coupled with the misalignment errors [8].

Previous studies have developed theoretical models of the camera [9] and the inertial measurement unit (IMU) [10,11,12], based on which the extrinsic parameters of visual/inertial integrated systems have been calibrated. These parameters are generally calibrated in three ways. First, these parameters are calibrated based on the corresponding rotation differences. Given that both the camera and IMU can evaluate their own rotations, a calibration method that minimizes the overall matching error of the rotation matrix between the visual and inertial coordinate systems in multiple rotations has been proposed in [13], whereas You et al. [14] developed a calibration method that relies on the angular velocity differences between the IMU and camera in the subsequent rotations. Second, these parameters are calibrated based on the vertical direction constraint. Given that the gravity and vertical line are measured by an IMU and camera in the same direction (i.e., vertical direction), a calibration method that uses this direction as reference has been proposed in [15,16,17]. Third, these parameters are calibrated based on filtering or optimization. In vision-aided inertial navigation, the coordinate transforming relation between the IMU and camera is estimated. The methods developed by Mourikis et al. [18] and Kelly and Sukhatme [19] estimate the extrinsic parameters based on the extended Kalman filter (EKF) and the unscented Kalman filter, respectively. In addition, Kaminer et al. [20] proposed a method based on nonlinear, globally stable filters, while Yang and Shen [21] proposed an optimization-based calibration method.

Most of these methods align the camera frame to the IMU frame. However, in some cases where a high-precision operation is required, the visual/inertial system should be aligned to the system body frame and not to the IMU frame. In addition, when a carrier, such as a spacecraft, is too heavy for a calibration maneuver to be performed, the aforementioned methods cannot fully solve the problem. Previous studies have attempted to address this problem by aligning the sensor frames to the system body frame. For instance, Wendel and Underwood [22] aligned line scanning cameras to the ground vehicle frame and achieved an accuracy of 0.06 m in translation and 1.05° in rotation, while Shi et al. [23] aligned three cameras to the system body frame and achieved translation and rotation accuracies of 0.6 mm and 0.1°, respectively. In both cases, however, only the camera frame is aligned to the body frame, and the accuracy can be further improved. Given that self-integrated and some commercial IMUs lack a mechanical interface, Foxlin and Naimark [24] aligned the IMU to the system body frame defined by a mechanical interface by rolling it on a flat surface and then aligned the camera to the system body frame by using a calibration device. However, the accuracy of the resulting extrinsic parameters is not clearly reported. Pittelkau [25] proposed a method for aligning an inertial sensor assembly of three fiber-optic gyros, two star trackers, and a camera to a spacecraft by using an alignment Kalman filter, but this method has only been validated via simulation.

We present an extrinsic parameter calibration method that uses IMU and camera measurements to align the IMU and camera frames to the system body frame defined by the mechanical interface. This method offers two advantages. First, the sensor frames are aligned to the carrier frame through the mechanical interface without requiring a complex calibration process. Second, this method can serve as a standard calibration step for factory production and user operation. The calibration procedure is simple: a checkerboard is fixed on the wall within the field of view of the camera, the integrated system is fixed on a two-axis turntable, and the turntable is then manually swung around its two axes. The checkerboard remains in the camera’s field of view throughout.

The main contributions of this work are as follows:

- We exploit the complementary nature of the IMU and camera to improve the calibration accuracy. The camera measurements suppress the inertial propagation drift, whereas the motion parameters evaluated by the IMU allow the feature points to be extracted accurately under a smearing effect.

- A method for IMU-aided checkerboard corner correction under motion blur is introduced, which is the key step for improving the accuracy of the camera calibration. We use the IMU to evaluate the motion parameters and use these motion parameters to eliminate the effects of motion blur in the image, as described in Section 2.3. The extrinsic parameters are estimated with an EKF, and the rotation angle is evaluated from the camera measurements to suppress the IMU drift.

- The calibration process can serve as a standard calibration step for factory production and user operation. The turntable is standard equipment for IMU calibration, and the checkerboard is a standard device for camera calibration. The cost is therefore low, and the calibration process is simple and convenient. Our experimental results indicate that the proposed method is valid and achieves an accuracy of 0.04° for each Euler angle and 0.15 mm for each position vector component (1σ). Our method can also align the camera frame to the IMU frame.

2. Calibration Method

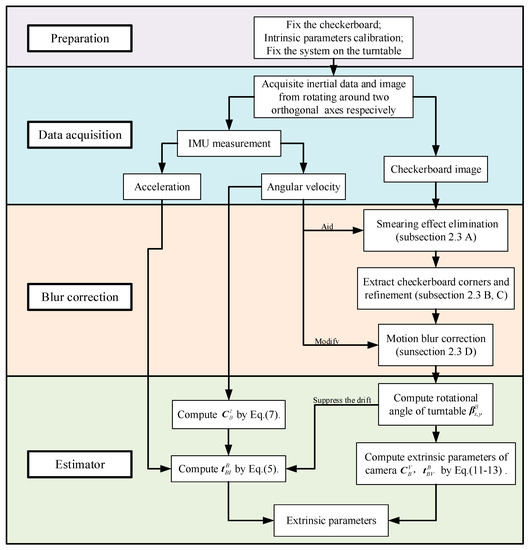

The main idea of the proposed calibration method is shown in Figure 1, which can help understand the part of the calibration. There are four step of this calibration. Firstly, “Preparation”, the following preparations should be performed before the system calibration:

Figure 1.

Extrinsic calibration process.

- The checkerboard should be fixed in an appropriate location that can be observed by the cameras while the system rotates along with the turntable;

- The intrinsic parameters of the camera and IMU should be calibrated;

- The visual/inertial system should be fixed on the turntable.

Second, in the “data acquisition” step, the turntable is controlled manually to rotate around two orthogonal axes, such as the x and y axes, while the camera acquires the checkerboard images and the IMU acquires the inertial data.

Third, in the “blur correction” step, the motion blur that exists in the checkerboard images is handled. The checkerboard corners are detected with the aid of the IMU to achieve higher accuracy.

Finally, in the “estimator” step, the extrinsic parameters are estimated with an EKF.

The main idea of this calibration method is to establish the equations that determine the extrinsic parameters and then solve them. To aid understanding, the basic measurement models of the IMU and camera are described in Section 2.1. Because the calibration operation is a swinging motion, the detailed measurement equations of the IMU and camera under swinging motion are described in Section 2.2. We exploit the complementary nature of the IMU and camera to improve the calibration accuracy; the method of inertial-aided checkerboard corner extraction under motion blur is described in Section 2.3. The extrinsic parameters are estimated with an EKF, as described in Section 2.4, in which the rotation angle of the turntable is evaluated from the camera measurements to suppress the IMU drift.

2.1. Measurement Model

The measurement model for the IMU is formulated as follows (the superscripts and subscripts F, B, I, and V represent the frames of the checkerboard, system body, IMU, and camera, respectively, while F0, B0, I0, and V0 represent the frames that are fixed on the earth and coincide with the frames of the checkerboard, system body, IMU, and camera in their initial poses, respectively).

where is the true specific force excluding gravity, is the true angular velocity, is the gravity expressed in the IMU frame, and are the bias errors of the accelerometer and gyro, respectively, and and are the process white Gaussian noises of the accelerometer and gyro, respectively. Both and show minimal changes within a short period.
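For orientation, an IMU measurement model of the kind described above is commonly written in the following form. This is a sketch in assumed notation: the symbols $\tilde{\mathbf{a}}^{I}$, $\mathbf{a}^{I}$, $\mathbf{g}^{I}$, $\mathbf{b}_a$, $\mathbf{n}_a$, $\tilde{\boldsymbol{\omega}}^{I}$, $\boldsymbol{\omega}^{I}$, $\mathbf{b}_g$, and $\mathbf{n}_g$ are introduced here for illustration, and the sign attached to the gravity term depends on the convention, so it may differ from the authors' equation.

```latex
\begin{aligned}
\tilde{\mathbf{a}}^{I} &= \mathbf{a}^{I} + \mathbf{g}^{I} + \mathbf{b}_a + \mathbf{n}_a, \\
\tilde{\boldsymbol{\omega}}^{I} &= \boldsymbol{\omega}^{I} + \mathbf{b}_g + \mathbf{n}_g .
\end{aligned}
```

Here the tilde marks a measured quantity, $\mathbf{b}_a$ and $\mathbf{b}_g$ are the slowly varying accelerometer and gyro biases, and $\mathbf{n}_a$ and $\mathbf{n}_g$ are zero-mean white Gaussian noises.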

We use the ideal pinhole model as the measurement model for the camera that maps a 3D point to a 2D image as [26]

where is the camera frame optic axis coordinate of point , , and are the scale factors of the u and v axes of the image plane, respectively, is the non-orthogonal factor of the image plane axes, is the pixel coordinate of the camera principal point, is the 3 × 3 rotation matrix, is the 3D translation vector, and is the intrinsic parameter matrix. In the actual situation, the principal point, focal length deviation, distortion, and other error factors should be considered based on the ideal camera model. These parameters can be acquired via an intrinsic calibration, such as by employing Zhang’s method [27].
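The ideal pinhole projection described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the authors' implementation: the function name `project_point` and the layout of the intrinsic matrix `K` as `[[fx, s, u0], [0, fy, v0], [0, 0, 1]]` are chosen here for the example, and lens distortion is ignored.

```python
def project_point(K, R, t, P):
    """Project a 3D point P (checkerboard frame) to pixel coordinates (u, v).

    K is the 3x3 intrinsic matrix [[fx, s, u0], [0, fy, v0], [0, 0, 1]],
    R is a 3x3 rotation matrix and t a 3-vector, all as nested lists.
    """
    # Transform the point into the camera frame: Pc = R * P + t.
    Pc = [sum(R[i][j] * P[j] for j in range(3)) + t[i] for i in range(3)]
    z = Pc[2]  # coordinate along the optical axis
    if z <= 0.0:
        raise ValueError("point is behind the camera")
    # Normalized image coordinates.
    x, y = Pc[0] / z, Pc[1] / z
    fx, s, u0 = K[0]
    _, fy, v0 = K[1]
    # Apply the intrinsic parameters (scale factors, skew, principal point).
    u = fx * x + s * y + u0
    v = fy * y + v0
    return u, v


# Example with hypothetical intrinsics and an identity pose.
K = [[800.0, 0.0, 320.0], [0.0, 800.0, 240.0], [0.0, 0.0, 1.0]]
R = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
t = [0.0, 0.0, 0.0]
u, v = project_point(K, R, t, [0.1, -0.2, 2.0])
```

In practice the distortion model obtained from the intrinsic calibration would be applied to the normalized coordinates before the intrinsic matrix.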

2.2. Extrinsic Parameter Calibration Method for a Visual/Inertial Integrated System

The physical quantities that should be calibrated include the following:

- The rotation matrices and , which relate the system body frame to the camera frame and the system body frame to the IMU frame, respectively.

- The position vectors and , which are derived from the camera and IMU principal points to the origin of the system body frame, respectively.

Given that the IMU can measure angular velocity and acceleration vectors during rotation, constraint equations relating the inertial vectors to the extrinsic parameters of the IMU can be established. When the turntable rotates around only one axis, the position vector component and the Euler angle along the rotation axis cannot be computed. However, when the turntable rotates around two orthogonal axes, the extrinsic parameters of the IMU can be computed. Fortunately, IMU calibration is generally performed by using a turntable with two or three orthogonal axes.

Camera calibration generally involves the use of a checkerboard. In the calibration process, the intrinsic parameters as well as the rotation matrix and position vector between the camera and checkerboard frames can be computed. By turning the turntable, a constraint equation can be established based on the relationship between the extrinsic parameters of the camera and the change in the camera position and attitude. The constraint equations of the visual/inertial system are described as follows.

In general, when the Coriolis acceleration is ignored during rotation, the accelerometer measurement is

where is the radial acceleration, is the tangential acceleration, is the gravitational acceleration expressed in the IMU frame, and is the rotation matrix from the system body frame to the IMU frame, which can be calculated from the Euler angle .

and are skew-symmetric matrices defined as

where is the angular velocity vector, and is the angular acceleration vector.
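The radial and tangential terms above can be illustrated numerically. The following is a minimal sketch, assuming all vectors are expressed in a single frame and the Coriolis term is ignored as in the text; the helper names `skew`, `matvec`, and `lever_arm_acceleration` are hypothetical and not taken from the paper.

```python
def skew(v):
    """Return the skew-symmetric matrix [v x] such that skew(v) @ r = v x r."""
    x, y, z = v
    return [[0.0, -z, y], [z, 0.0, -x], [-y, x, 0.0]]


def matvec(M, v):
    """Multiply a 3x3 matrix (nested lists) by a 3-vector."""
    return [sum(M[i][j] * v[j] for j in range(3)) for i in range(3)]


def lever_arm_acceleration(omega, alpha, r, g):
    """Acceleration sensed at lever arm r for a body rotating about a fixed point.

    omega: angular velocity, alpha: angular acceleration,
    r: position vector from the rotation center to the sensor,
    g: gravity term, all expressed in the same frame.
    """
    W = skew(omega)
    A = skew(alpha)
    radial = matvec(W, matvec(W, r))   # omega x (omega x r)
    tangential = matvec(A, r)          # alpha x r
    return [radial[i] + tangential[i] + g[i] for i in range(3)]


# Rotation at 2 rad/s about z with a 0.1 m lever arm along x, gravity omitted:
# the radial term points toward the axis with magnitude omega^2 * |r| = 0.4.
acc = lever_arm_acceleration([0.0, 0.0, 2.0], [0.0, 0.0, 0.0],
                             [0.1, 0.0, 0.0], [0.0, 0.0, 0.0])
```

Because the lever arm enters linearly, measurements collected at several angular rates and accelerations constrain the position vector, which is the idea the constraint equations build on.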

Therefore, the accelerometer measurement can be rewritten as

where is the gravitational acceleration expressed in the {} frame and can be written as

where is the accelerometer measurement in the initial pose. Therefore, must be initially evaluated to determine . The gyro measurement is formulated as

The integrated system rotates with the two-axis turntable. When the turntable rotates around the x axis, we have

where is the rotation angle of the turntable when rotating around the x axis.

Similarly, when the turntable rotates around the y axis, we have

where is the rotation angle of the turntable when rotating around the y axis.

, , can be computed by integrating the gyro measurement, but an accumulation error is observed. This error is then evaluated based on the camera measurement in the Kalman process.
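The accumulation error mentioned above is easy to reproduce. The sketch below assumes a single rotation axis and simple rectangular integration; the function name `integrate_gyro` and the numbers are illustrative only.

```python
def integrate_gyro(rates, dt, bias_estimate=0.0):
    """Integrate gyro rate samples (rad/s) into a rotation angle history (rad).

    bias_estimate is subtracted from every sample before integration.
    """
    angle = 0.0
    history = []
    for rate in rates:
        angle += (rate - bias_estimate) * dt
        history.append(angle)
    return history


# A stationary turntable observed by a gyro with a 0.01 rad/s bias:
# after 1 s (100 samples at 100 Hz) the integrated angle has drifted by 0.01 rad.
measured = [0.01] * 100
drifting = integrate_gyro(measured, dt=0.01)
corrected = integrate_gyro(measured, dt=0.01, bias_estimate=0.01)
```

The drift grows linearly with time for a constant bias, which is why an independent angle observation from the camera is useful for bounding it.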

The camera measurement can be formulated as

where subscripts x and y indicate that the turntable rotates around the x and y axes, respectively, is the pixel coordinate of each checkerboard corner, and n is the number of checkerboard corners.

The value of can be computed as

can be defined as

and and in Equation (11) can be written as

where is the 3D coordinate of the ith checkerboard corner in the checkerboard frame. In Equations (8) and (9), can be computed based on the rotational angle of the turntable and correspond to the rotation of the turntable around the x and y axes, respectively.

Meanwhile, is determined by the user, and denotes the pixel coordinate of the checkerboard corner in an image. If the number of checkerboard corners is >8, then and can be evaluated by using least squares.

2.3. Checkerboard Corner Extraction Method under Motion Blur

When a digital camera captures an image, the shutter opens for a moment to project light onto the sensor. This brief moment is called the exposure time. Under highly dynamic conditions, the relative pose between the camera and the object changes noticeably during the exposure time, thereby blurring or stretching the generated image [28]. The calibration of the extrinsic parameters requires a rotation, especially for the IMU. Therefore, for the camera measurements, we must extract the checkerboard corners during rotation, and motion blur may be generated in the process. Conventional checkerboard corner detection methods compute a local optimum [29] on static images. Therefore, when noise and motion blur are present in an image, the errors in the extraction results increase markedly.

We utilize inertial data to eliminate the smearing effect and then extract the checkerboard corners with the aid of the IMU data. The corner detection algorithm is developed as follows. The Lucy–Richardson method is used to rectify the blurred image in step A, and the conventional corner detection method is used to find the pixel coordinates of the corners in step B. Given that the checkerboard corners are constrained to lie on a few lines, we add a linear constraint through which the pixel coordinates of the corners are refined in step C. Finally, the IMU measurements are used in step D to correct the detected corner positions for the camera motion.

- A. Pretreatment and smearing effect elimination.

We deblur the image using the Lucy–Richardson method [30,31,32]. The point–spread function (PSF) is a 2D Gaussian model , and and denote the standard deviations in the x and y axes, respectively. If the velocity of the checkerboard expressed in the camera frame coincides with the x axis, then a motion blur is only observed in the x axis. Therefore, the PSF can be written as , and the corresponding covariance matrix is

where , is the scale factor, and is the angular velocity of rotation.

When the angle between the velocity of the checkerboard expressed in the camera frame and the x axis of the camera frame is , the covariance matrix of the PSF can be written as

where . can be evaluated by camera data.
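As an illustration of how a Gaussian PSF covariance changes with the blur direction, the sketch below rotates a diagonal covariance by an angle `theta`. It assumes the standard relation Σ(θ) = R(θ) diag(σx², σy²) R(θ)ᵀ; the function name and arguments are hypothetical, and the authors' exact parameterization may differ.

```python
import math


def rotated_psf_covariance(sigma_x, sigma_y, theta):
    """Covariance of a 2D Gaussian PSF whose principal axis is rotated by theta.

    sigma_x is the standard deviation along the blur direction,
    sigma_y the standard deviation across it, theta is in radians.
    """
    c, s = math.cos(theta), math.sin(theta)
    var_x, var_y = sigma_x ** 2, sigma_y ** 2
    # Expansion of R * diag(var_x, var_y) * R^T with R = [[c, -s], [s, c]].
    sxx = c * c * var_x + s * s * var_y
    sxy = c * s * (var_x - var_y)
    syy = s * s * var_x + c * c * var_y
    return [[sxx, sxy], [sxy, syy]]


# Blur along the x axis (theta = 0) versus blur at 30 degrees.
cov_axis = rotated_psf_covariance(2.0, 1.0, 0.0)
cov_tilted = rotated_psf_covariance(2.0, 1.0, math.radians(30.0))
```

The trace and determinant of the covariance are unchanged by the rotation, so only the orientation of the blur kernel is affected.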

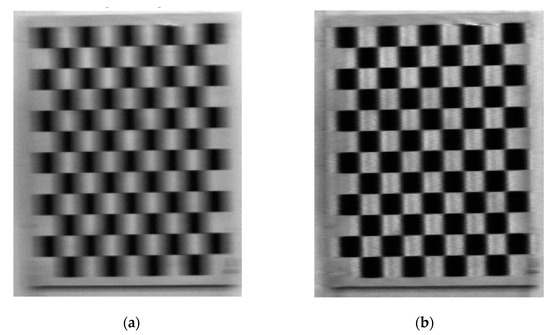

Figure 2 compares the original and deblurred images and shows that the motion blur has been effectively eliminated.

Figure 2.

Image deblurring using the Lucy–Richardson method. (a) Original and (b) deblurred images.
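To make the deblurring step concrete, the following is a minimal one-dimensional sketch of the Lucy–Richardson iteration in plain Python. It is a simplified stand-in for illustration, not the authors' image pipeline: it works on a 1D signal, assumes a known odd-length PSF that sums to one, and uses zero padding at the borders. The function names are hypothetical.

```python
def convolve_same(signal, kernel):
    """Zero-padded convolution that returns an output as long as the input."""
    half = len(kernel) // 2
    n = len(signal)
    out = []
    for i in range(n):
        acc = 0.0
        for k, weight in enumerate(kernel):
            j = i + half - k
            if 0 <= j < n:
                acc += weight * signal[j]
        out.append(acc)
    return out


def richardson_lucy_1d(observed, psf, iterations=30, eps=1e-12):
    """Estimate the sharp signal from a blurred, non-negative observation."""
    psf_flipped = psf[::-1]
    # Start from a flat, strictly positive estimate.
    mean = max(sum(observed) / len(observed), eps)
    estimate = [mean] * len(observed)
    for _ in range(iterations):
        blurred = convolve_same(estimate, psf)
        # Ratio between the observation and the re-blurred estimate.
        ratio = [o / max(b, eps) for o, b in zip(observed, blurred)]
        # Correlate the ratio with the PSF and apply the multiplicative update.
        correction = convolve_same(ratio, psf_flipped)
        estimate = [e * c for e, c in zip(estimate, correction)]
    return estimate


# A single bright pixel smeared by a three-tap kernel, then restored.
sharp = [0.0] * 9
sharp[4] = 1.0
psf = [0.25, 0.5, 0.25]
blurred = convolve_same(sharp, psf)
restored = richardson_lucy_1d(blurred, psf, iterations=30)
```

For real images, a library routine for 2D Richardson–Lucy deconvolution with the motion-dependent PSF would be the more practical choice.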

- B. Corner detection.

This step is the same as that in the conventional method. The corners in the image, including the complete checkerboard, are examined. and denote the x (horizontal) and y (vertical) components of the 2D numerical gradient, and the first and second derivatives are computed as

The gradient direction is evaluated by

where is the weight coefficient.

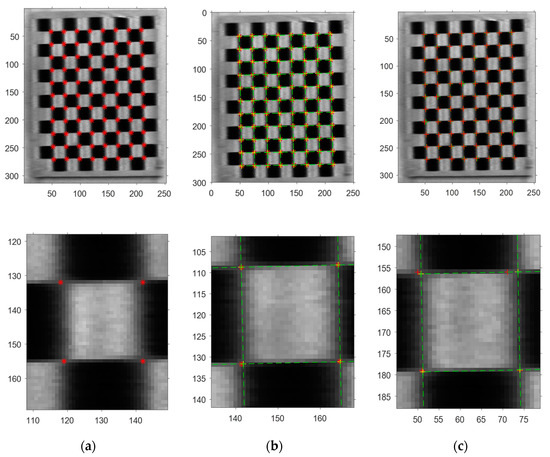

and are computed for each pixel in the picture. Afterward, and are determined—one of which is valid—by minimizing the energy function proposed in [29]. Based on the threshold set in advance and non-maxima suppression, the detection accuracy reaches the pixel level. The results are presented in Figure 3a.

Figure 3.

Image process. The pictures in the bottom row show the details of the pictures presented in the upper row. (a) Roughly detected checkerboard corners (refer to the red “※”); (b) refined results with a linear constraint (refer to the yellow “+”); and (c) results after (refer to the yellow “+”) and before refinement (the red “+”).

- C. Linear constraint refinement.

We can fit the checkerboard lines to the detected corners by using least squares. We assume that the line equation is and that i and j represent the row and column numbers, respectively.

The intersection points of these lines are then computed for the global optimization of the checkerboard corners (Figure 3b; refer to the yellow “+”). Figure 3c compares the results before and after refinement. Some corners detected before the refinement show markedly larger errors due to motion blur.
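The linear-constraint refinement can be sketched as a pair of least-squares line fits followed by an intersection. This is an illustrative sketch under assumptions: rows are modeled as y = a·x + b and columns as x = c·y + d so that neither fit becomes singular for an upright checkerboard, and the function names are hypothetical. The authors' line parameterization may differ.

```python
def fit_line(xs, ys):
    """Least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def refine_corner(row_corners, col_corners):
    """Refine a corner as the intersection of its fitted row and column lines.

    row_corners: (x, y) corners detected along one checkerboard row.
    col_corners: (x, y) corners detected along one checkerboard column.
    """
    # Row line: y = a * x + b.
    a, b = fit_line([p[0] for p in row_corners], [p[1] for p in row_corners])
    # Column line: x = c * y + d (roles of x and y swapped).
    c, d = fit_line([p[1] for p in col_corners], [p[0] for p in col_corners])
    # Solve the two equations simultaneously.
    x = (c * b + d) / (1.0 - a * c)
    y = a * x + b
    return x, y


# Corners lying exactly on y = 0.1 x + 5 and x = -0.1 y + 10.6 meet at (10, 6).
row = [(0.0, 5.0), (10.0, 6.0), (20.0, 7.0)]
col = [(10.6, 0.0), (10.0, 6.0), (9.4, 12.0)]
corner = refine_corner(row, col)
```

Because every corner on a row or column contributes to the fit, a single badly blurred corner is pulled back toward the line defined by its neighbors.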

- D. IMU-aided checkerboard corner modification

At time t, the camera and IMU start collecting data simultaneously. Because of the motion blur in the image, the extracted corners are delayed and correspond to the real data at time . is the exposure time of the camera. We can correct the corner coordinates with the inertial data. The details are presented as follows.

We consider the motion of camera in , and point P in the camera frame can be written as

where

where is at time t.

Equation (19) can be written as

is defined, and Equation (21) is expanded as

By substituting Equation (22) into Equation (12), we have

Given that in this study, we have:

Moreover, given that , we have

When , we can modify the coordinates of the checkerboard corners, thereby eliminating the smearing effect.

The image processing algorithm is summarized in Table 1.

Table 1.

Checkerboard Corner Detection under Motion Blur.

The corner extraction accuracy can be significantly enhanced by using the above checkerboard corner detection algorithm, especially when a smearing effect exists.

2.4. Description of the Estimator

Many methods can be used to solve the equations that determine the extrinsic parameters, such as the EKF [33,34] and genetic algorithms [35]. Here, we utilize an EKF for calibrating the extrinsic parameters. The EKF algorithm is briefly introduced in Table 2. The state vector includes the extrinsic parameters (translation vectors , and Euler angle , ), the IMU bias ( and ), the camera initial parameter ( and ), and the rotation angle (). Meanwhile, the measurement vector includes the accelerometer ( and ) and gyro ( and ) measurements as well as the extracted checkerboard corners (,, , and ).

Table 2.

EKF updating process.
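The way a camera-derived angle suppresses gyro drift inside a Kalman filter can be illustrated with a reduced example. The sketch below is a linear two-state filter (rotation angle and gyro bias) and is only a simplified stand-in for the EKF used here, whose state also contains the extrinsic parameters. All function names, noise values, and the constant-rate motion are assumptions made for the illustration; the 100 Hz and 10 Hz rates mirror the IMU and camera rates used in the simulation.

```python
def kf_predict(x, P, gyro_rate, dt, q_angle, q_bias):
    """Propagate [angle, bias] with a gyro sample; F = [[1, -dt], [0, 1]]."""
    angle, bias = x
    angle = angle + (gyro_rate - bias) * dt
    p00, p01, p10, p11 = P[0][0], P[0][1], P[1][0], P[1][1]
    # Covariance propagation P = F P F^T + Q.
    n00 = p00 - dt * (p10 + p01) + dt * dt * p11 + q_angle
    n01 = p01 - dt * p11
    n10 = p10 - dt * p11
    n11 = p11 + q_bias
    return [angle, bias], [[n00, n01], [n10, n11]]


def kf_update(x, P, z_angle, r):
    """Correct the state with a camera-derived angle; H = [1, 0]."""
    innovation = z_angle - x[0]
    s = P[0][0] + r
    k0, k1 = P[0][0] / s, P[1][0] / s
    x = [x[0] + k0 * innovation, x[1] + k1 * innovation]
    # Covariance update P = (I - K H) P.
    P = [[(1.0 - k0) * P[0][0], (1.0 - k0) * P[0][1]],
         [P[1][0] - k1 * P[0][0], P[1][1] - k1 * P[0][1]]]
    return x, P


def run_bias_demo(true_rate=0.1, true_bias=0.02, duration_s=20.0):
    """Gyro sampled at 100 Hz, camera angle every 10th sample (10 Hz)."""
    dt = 0.01
    x = [0.0, 0.0]
    P = [[1.0, 0.0], [0.0, 1.0]]
    true_angle = 0.0
    for k in range(1, int(round(duration_s / dt)) + 1):
        true_angle += true_rate * dt
        gyro = true_rate + true_bias          # biased, noise-free gyro sample
        x, P = kf_predict(x, P, gyro, dt, 1e-8, 1e-10)
        if k % 10 == 0:
            x, P = kf_update(x, P, true_angle, 1e-4)
    return x


angle_estimate, bias_estimate = run_bias_demo()
```

In this reduced setting the bias estimate moves toward the true bias within the first few camera updates, after which the integrated angle no longer drifts.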

3. Simulation and Real-World Experiment

3.1. Simulation

A simulation test is designed to validate the performance of the extrinsic parameter calibration method. During the simulation, the turntable is assumed to perform swinging motions around the x and y axes. The swinging rule is , where , denotes the angular velocity, and denote the swinging amplitude and frequency, respectively, and and denote the initial phase and swinging center, respectively. The swinging parameters of the simulation are defined in Table 3.

Table 3.

Swinging parameters.
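A swinging motion with an amplitude, frequency, initial phase, and swinging center can be generated as follows. The sinusoidal form used here is an assumption made for illustration, since the exact expression is not reproduced in the text above; the function names and parameter values are hypothetical and are not the values of Table 3.

```python
import math


def swing_angle(t, amplitude, freq_hz, phase, center):
    """Turntable angle (rad) at time t for an assumed sinusoidal swing."""
    return amplitude * math.sin(2.0 * math.pi * freq_hz * t + phase) + center


def swing_rate(t, amplitude, freq_hz, phase):
    """Angular velocity (rad/s): the time derivative of swing_angle."""
    w = 2.0 * math.pi * freq_hz
    return amplitude * w * math.cos(w * t + phase)


# Sample a 0.5 Hz swing of 0.3 rad amplitude at the 100 Hz IMU rate for 2 s.
times = [k * 0.01 for k in range(200)]
angles = [swing_angle(t, 0.3, 0.5, 0.0, 0.0) for t in times]
rates = [swing_rate(t, 0.3, 0.5, 0.0) for t in times]
```

The angular velocity samples are what an ideal gyro aligned with the swing axis would report before bias and noise are added.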

The true values for simulation data of the visual/inertial integrated system are defined in Table 4.

Table 4.

The true values for simulation data.

Given the parameters in Table 3 and Table 4, the true measurements of the gyroscope and accelerometer can be simulated by using the dynamics equation. The true measurements of the camera (pixel coordinates of the checkerboard corners) can be simulated by using the imaging model. When the errors in Table 5 are added to the ideal measurement data, realistic inertial sensor and camera outputs are generated. The sampling rates of the IMU and camera are 100 Hz and 10 Hz, respectively.

Table 5.

Sensor errors.
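Adding sensor errors to ideal measurements and selecting camera epochs at the lower rate can be sketched as below. The constant-bias plus white-Gaussian-noise error model, the function names, and all numerical values are assumptions for illustration and are not the entries of Table 5.

```python
import random


def corrupt(ideal, bias, noise_std, rng):
    """Add a constant bias and white Gaussian noise to ideal samples."""
    return [value + bias + rng.gauss(0.0, noise_std) for value in ideal]


def camera_indices(num_imu_samples, imu_rate_hz=100, camera_rate_hz=10):
    """Indices of IMU samples that coincide with camera frames."""
    step = imu_rate_hz // camera_rate_hz
    return list(range(0, num_imu_samples, step))


rng = random.Random(0)                      # fixed seed for repeatability
ideal_gyro = [0.1] * 1000                   # 10 s of ideal data at 100 Hz
noisy_gyro = corrupt(ideal_gyro, bias=0.02, noise_std=0.001, rng=rng)
frames = camera_indices(len(ideal_gyro))    # 100 camera epochs at 10 Hz
```

Using a seeded generator keeps a simulation run reproducible, which helps when comparing the calibration with and without a given processing step.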

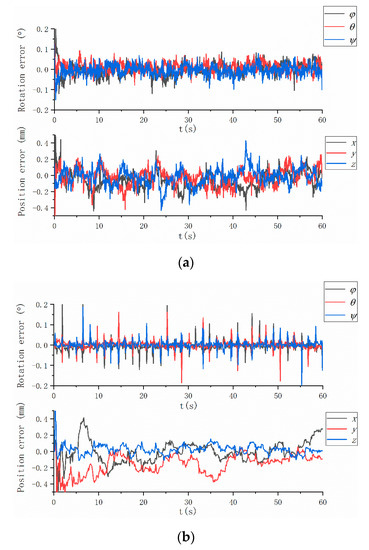

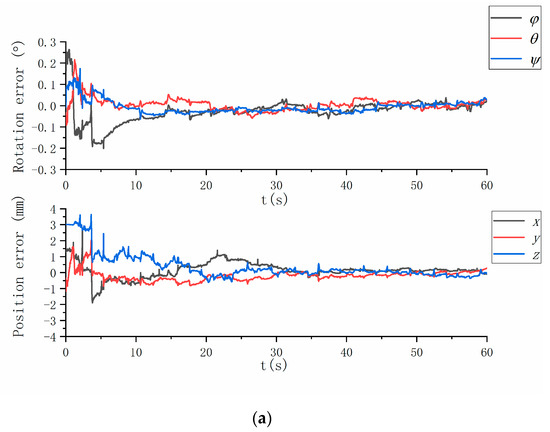

The alignment errors are shown in Figure 4, whereas the alignment error statistics are listed in Table 6. The calibration method can evaluate the extrinsic parameters correctly. The attitude error is <0.03° for each Euler angle, and the position error is <0.10 mm for each position vector component.

Figure 4.

Simulation results: (a) rotation and position errors of MIMU, and (b) rotation and position errors of the camera.

Table 6.

Results and deviation of simulation.

3.2. Real-World Experiment

3.2.1. Experiment Setting

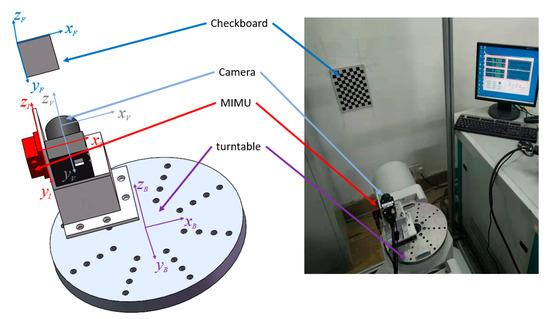

A calibration experiment is conducted to confirm the validity of the proposed method and to evaluate the accuracy of the system. Figure 5 shows the experiment architecture, whereas Table 7 presents the main devices. The system body frame coincides with the turntable frame for the sake of simplicity because the mechanical interface of the turntable frame is clearly defined.

Figure 5.

Calibration architecture.

Table 7.

Major devices involved in the experiment.

The experiment is designed as follows. First, frames {I0}, {V0}, and {B0} are defined to coincide at the initialization time. The system body, camera, and MIMU frames are fixed to the turntable, camera, and MIMU, respectively. Second, the turntable is manually controlled to rotate around its axes, and the visual/inertial integrated system moves along with the turntable.

The intrinsic parameters obtained through Zhang’s method [27] are shown in Table 8. The calibration achieved an accuracy of 0.08 pixel based on 18 images.

Table 8.

Calibration result of intrinsic parameters of camera.

3.2.2. Experiment Results and Discussion

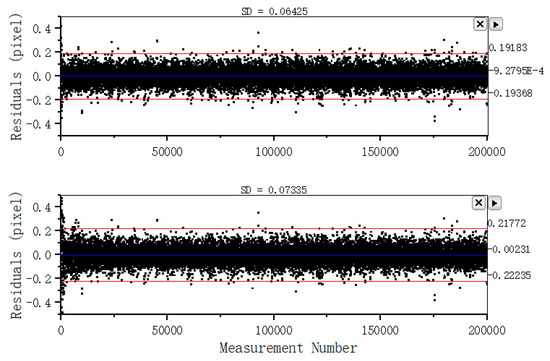

The test results are presented in this section. The extrinsic calibration results before and after the checkerboard corner modification are similar. The standard uncertainty of the modified method (0.15 mm; Table 10) is lower than that of the unmodified method (0.18 mm; Table 9). The camera measurement residuals of the two methods differ, as shown in Figure 6: the 3σ bound of the measurement residuals is approximately 0.193 pixels for the method based on a linear constraint, whereas it is approximately 0.220 pixels for the unmodified method. Thus, the motion blur correction described in Section 2.3 is effective.

Table 9.

Results and deviation of the experiment based on the unmodified corner detection method.

Figure 6.

Measurement residuals with their 3σ bounds. The top and bottom images present the results for methods before and after modification, respectively.
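A 3σ bound of the kind plotted in Figure 6 can be computed from a residual sequence as sketched below. Whether the population or the sample standard deviation was used is not stated, so the use of `statistics.pstdev` is an assumption, and the residual values in the example are made up for illustration.

```python
import statistics


def three_sigma_bound(residuals):
    """Return three times the (population) standard deviation of residuals."""
    return 3.0 * statistics.pstdev(residuals)


def relative_reduction(bound_before, bound_after):
    """Fractional reduction of a bound, e.g. 0.1 means a 10% reduction."""
    return (bound_before - bound_after) / bound_before


example_bound = three_sigma_bound([-0.05, 0.07, -0.02, 0.04, -0.06, 0.03])
# Using the bounds reported in the text (0.220 and 0.193 pixels).
reduction = relative_reduction(0.220, 0.193)
```

With the bounds reported above, the corner modification reduces the 3σ residual bound by roughly 12%.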

The difference between the IMU calibration parameters before and after the modification is not significant. The camera measurements suppress the angle integration error caused by IMU drift and thereby increase the accuracy of the IMU extrinsic parameter calibration. More accurate camera measurements should therefore lead to more accurate IMU extrinsic parameters. However, a comparison of Table 9 and Table 10 shows that the accuracy of the IMU extrinsic parameters is similar before and after the checkerboard corner modification. Three possible reasons may explain this:

Table 10.

Results and deviation of the experiment based on the modified corner detection method.

- For the camera measurements, the rotation angle is computed based on the pixel coordinates of all the checkerboard corners. Involving all the corners in the computation already reduces the effects of motion blur.

- The calibration process is not long, so the effect of the accumulation error is not significant.

- Systematic errors exist, such as the non-orthogonality of the rotation axes and the time delay of data acquisition, which also influence the error level.

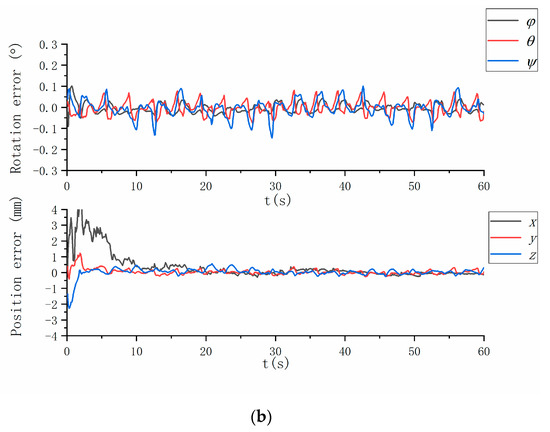

Table 10 and Figure 7 summarize the experiment results. The origin of the MIMU frame is discussed in the sbg-IMU user manual, while that of the camera is the optical center of the lens. Therefore, the translation vector between the MIMU and camera can be roughly evaluated. The results that are evaluated based on the mechanical structure coincide with those that are evaluated by using EKF.

Figure 7.

Results of the calibration experiment: (a) attitude and position errors of MIMU and (b) camera.

The experiment results, the existing problems and possible reasons, the strategies for improving the results, and some directions for future work are presented below:

- The IMU and camera frames are aligned to the system body frame. The standard deviations of the three-axis position error are (0.13, 0.15, 0.11) mm and (0.12, 0.10, 0.13) mm for the MIMU and camera, respectively. Meanwhile, the standard deviations of the three-axis Euler angle error are (0.02°, 0.02°, 0.02°) and (0.03°, 0.04°, 0.02°) for the MIMU and camera, respectively. Comparing the method with corner correction against the method without it, we find that the accuracy of the camera extrinsic parameters is higher with the correction (Table 9 and Table 10) and that the camera measurement residuals are lower (Figure 6). This indicates that the corner correction described in Section 2.3 is effective. The reasons why the difference between the IMU calibration parameters before and after the modification is not significant have been briefly discussed above.

- Three error sources affect the calibration accuracy. First, the calibration errors of the IMU and camera intrinsic parameters affect the accuracy of the measurements. At present, a reasonable choice of camera calibration method ensures that the imaging accuracy reaches the sub-pixel level, and the IMU is factory calibrated. Second, the time delay between the IMU and camera data acquisition affects the calibration accuracy and the stability of the filter. We align the data by their time labels (both the IMU and camera data are time-stamped) and do not estimate the time delay explicitly. Third, we ignore the non-orthogonality of the turntable axes, given that the turntable has been factory calibrated. Further research on a solution that does not require orthogonal axes could be performed.

- The convergence of each parameter can be observed in the EKF process. The experiment results show that the method is valid, and it is not restricted to the Kalman filter. Other estimation or optimization algorithms, such as the particle filter and the Levenberg–Marquardt algorithm, can also be used. Once the calibration parameters are obtained, the complete visual/inertial integrated system is established. Future research may focus on calibration during the navigation process, and the proposed method may serve as a standard calibration step in factory production and user operation.

4. Conclusions

An extrinsic parameter calibration method for a visual/inertial integrated system is developed based on a swinging motion. A checkerboard corner detection algorithm is utilized to detect checkerboard corners under a smearing effect. The extrinsic parameter calibration method is developed based on the imaging model and the dynamic equation. This method is validated by a simulation and a real-world experiment, whose results highlight the effectiveness of the proposed method. This method can also serve as a standard calibration step for visual/inertial systems, especially for integrated visual and inertial navigation systems.

Author Contributions

C.O. proposed the idea of the calibration method and carried out the theoretical derivation; C.O., K.Z. and S.S. performed the experiments and analyzed the data; S.S. and Z.Y. conceived and supervised the experiments; C.O. wrote the paper.

Funding

This research received no external funding.

Acknowledgments

The system calibration was performed at the State Key Laboratory of Precision Measurement Technology and Instruments at Tsinghua University, which is gratefully acknowledged. The authors also thank their teacher for the advice.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Kis, L.; Prohaszka, Z.; Regula, G. Calibration and testing issues of the vision, inertial measurement and control system of an autonomous indoor quadrotor helicopter. Int. J. Mech. Control 2009, 10, 29–38. [Google Scholar]

- Xia, L.; Meng, Q.; Chi, D.; Meng, B.; Yang, H. An Optimized Tightly-Coupled VIO Design on the Basis of the Fused Point and Line Features for Patrol Robot Navigation. Sensors 2019, 19, 2004. [Google Scholar] [CrossRef] [PubMed]

- Gao, M.; Yu, M.; Guo, H.; Xu, Y. Mobile Robot Indoor Positioning Based on a Combination of Visual and Inertial Sensors. Sensors 2019, 19, 1773. [Google Scholar] [CrossRef] [PubMed]

- Rahman, S.; Li, A.Q.; Rekleitis, I. SVIn2: Sonar Visual-Inertial SLAM with Loop Closure for Underwater Navigation. arXiv 2018, arXiv:1810.03200. [Google Scholar]

- Li, S.; Cui, P.; Cui, H. Vision-aided inertial navigation for pinpoint planetary landing. Aerosp. Sci. Technol. 2007, 11, 499–506. [Google Scholar] [CrossRef]

- Martinelli, A. Visual-inertial structure from motion: Observability and resolvability. In Proceedings of the 2013 IEEE/RSJ International Conference on Intelligent Robots and Systems, Tokyo, Japan, 3–7 November 2013; pp. 4235–4242. [Google Scholar]

- Jaakkola, A.; Hyyppä, J.; Kukko, A.; Yu, X.; Kaartinen, H.; Lehtomäki, M.; Lin, Y. A low-cost multi-sensoral mobile mapping system and its feasibility for tree measurements. ISPRS J. Photogramm. Remote Sens. 2010, 65, 514–522. [Google Scholar] [CrossRef]

- Groves, P.D. Principles of GNSS, Inertial, and Multisensor Integrated Navigation Systems; Artech House: Norwood, MA, USA, 2013. [Google Scholar]

- Sturm, P. Pinhole camera model. In Computer Vision: A Reference Guide; Springer: Berlin/Heidelberg, Germany, 2014; pp. 610–613. [Google Scholar]

- El-Diasty, M.; Pagiatakis, S. Calibration and stochastic modelling of inertial navigation sensor errors. J. Glob. Position Syst. 2008, 7, 170–182. [Google Scholar] [CrossRef]

- Syed, Z.; Aggarwal, P.; Goodall, C.; Niu, X.; El-Sheimy, N. A new multi-position calibration method for MEMS inertial navigation systems. Meas. Sci. Technol. 2007, 18, 1897. [Google Scholar] [CrossRef]

- Sifter, D.; Henderson, V. An advanced software mechanization for calibration and alignment of the advanced inertial reference sphere (AIRS). In Proceedings of the 8th Guidance Test Symposium, Holloman Air Force Base, NM, USA, 11–13 May 1977. [Google Scholar]

- Lang, P.; Pinz, A. Calibration of hybrid vision/inertial tracking systems. In Proceedings of the 2nd InerVis: Workshop on Integration of Vision and Inertial Sensors, Barcelona, Spain, 18 April 2005. [Google Scholar]

- You, S.; Neumann, U.; Azuma, R. Hybrid inertial and vision tracking for augmented reality registration. In Proceedings of the IEEE Virtual Reality (Cat. No. 99CB36316), Houston, TX, USA, 13–17 March 1999; pp. 260–267. [Google Scholar]

- Alves, J.; Lobo, J.; Dias, J. Camera-inertial sensor modelling and alignment for visual navigation. Mach. Intell. Robot. Control 2003, 5, 103–112.

- Lobo, J.; Dias, J. Relative pose calibration between visual and inertial sensors. Int. J. Robot. Res. 2007, 26, 561–575.

- Lobo, J.; Dias, J. Vision and inertial sensor cooperation using gravity as a vertical reference. IEEE Trans. Pattern Anal. Mach. Intell. 2003, 25, 1597–1608.

- Mourikis, A.I.; Trawny, N.; Roumeliotis, S.I.; Johnson, A.E.; Ansar, A.; Matthies, L. Vision-aided inertial navigation for spacecraft entry, descent, and landing. IEEE Trans. Robot. 2009, 25, 264–280.

- Kelly, J.; Sukhatme, G.S. Fast Relative Pose Calibration for Visual and Inertial Sensors; Experimental Robotics; Springer: Berlin/Heidelberg, Germany, 2009; pp. 515–524.

- Kaminer, I.; Pascoal, A.; Kang, W. Integrated vision/inertial navigation system design using nonlinear filtering. In Proceedings of the 1999 American Control Conference (Cat. No. 99CH36251), San Diego, CA, USA, 2–4 June 1999; pp. 1910–1914.

- Yang, Z.; Shen, S. Monocular visual–inertial state estimation with online initialization and camera–IMU extrinsic calibration. IEEE Trans. Autom. Sci. Eng. 2017, 14, 39–51.

- Wendel, A.; Underwood, J. Extrinsic parameter calibration for line scanning cameras on ground vehicles with navigation systems using a calibration pattern. Sensors 2017, 17, 2491.

- Shi, S.; Zhao, K.; You, Z.; Ouyang, C.; Cao, Y.; Wang, Z. Error analysis and calibration method of a multiple field-of-view navigation system. Sensors 2017, 17, 655.

- Foxlin, E.; Naimark, L. Miniaturization, calibration & accuracy evaluation of a hybrid self-tracker. In Proceedings of the Second IEEE and ACM International Symposium on Mixed and Augmented Reality, Washington, DC, USA, 7–10 October 2003; pp. 151–160.

- Pittelkau, M.E. Kalman filtering for spacecraft system alignment calibration. J. Guid. Control Dyn. 2001, 24, 1187–1195.

- Hartley, R.; Zisserman, A. Multiple View Geometry in Computer Vision; Cambridge University Press: Cambridge, UK, 2003.

- Zhang, Z. A flexible new technique for camera calibration. IEEE Trans. Pattern Anal. Mach. Intell. 2000, 22, 1330–1334.

- Katsaggelos, A. Digital Image Restoration: Springer Series in Information Sciences; Springer: Berlin/Heidelberg, Germany, 1991.

- Geiger, A.; Moosmann, F.; Car, Ö.; Schuster, B. Automatic camera and range sensor calibration using a single shot. In Proceedings of the 2012 IEEE International Conference on Robotics and Automation, Saint Paul, MN, USA, 14–18 May 2012; pp. 3936–3943.

- Lucy, L.B. An iterative technique for the rectification of observed distributions. Astron. J. 1974, 79, 745.

- Biggs, D.S.; Andrews, M. Acceleration of iterative image restoration algorithms. Appl. Opt. 1997, 36, 1766–1775.

- Hanisch, R.J.; White, R.L.; Gilliland, R.L. Deconvolution of Hubble Space Telescope images and spectra. In Deconvolution of Images and Spectra, 2nd ed.; Academic Press, Inc.: Orlando, FL, USA, 1996; pp. 310–360.

- Bloesch, M.; Burri, M.; Omari, S.; Hutter, M.; Siegwart, R. Iterated extended Kalman filter based visual-inertial odometry using direct photometric feedback. Int. J. Robot. Res. 2017, 36, 1053–1072.

- Welch, G.; Bishop, G. An Introduction to the Kalman Filter; ACM Inc.: Summersville, WV, USA, 1995.

- Rodríguez, J.A.M.; Mejía Alanís, F.C. Binocular self-calibration performed via adaptive genetic algorithm based on laser line imaging. J. Mod. Opt. 2016, 63, 1219–1232.

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).