Abstract

Emotion detection based on computer vision and remote extraction of user signals commonly relies on stimuli where users have a passive role with limited possibilities for interaction or emotional involvement, e.g., images and videos. Predictive models are also trained on a group level, which potentially excludes or dilutes key individualities of users. We present a non-obtrusive, multifactorial, user-tailored emotion detection method based on remotely estimated psychophysiological signals. A neural network learns the emotional profile of a user during the interaction with calibration games, a novel game-based emotion elicitation material designed to induce emotions while accounting for particularities of individuals. We evaluate our method in two experiments, with a mean classification accuracy of 61.6%, which is statistically significantly better than chance-level classification. Our approach and its evaluation present unique circumstances: our model is trained on one dataset (calibration games) and tested on another (evaluation game), while preserving the natural behavior of subjects and using remote acquisition of signals. Results of this study suggest our method is feasible and represents an initiative to move away from questionnaires and physical sensors toward a non-obtrusive, remote-based solution for detecting emotions in a context involving more naturalistic user behavior and games.

1. Introduction

The process of detecting user emotions, an important element of affective computing [1], has wide applications in the field of human–computer interaction (HCI), particularly in games research. One of the most common techniques used to obtain data regarding the emotional state of players in a game is psychophysiological measurement [2]. Physical sensors provide uninterrupted measurement of the user’s physiological signals without affecting the task at hand [3,4,5,6]. A significant amount of psychophysiological information can be read from the human body, including signals such as heart rate (HR), respiratory rate (RR), and facial actions (FA), among others. The combined analysis of those different signals, known as multimodal or multifactorial analysis, is more likely to produce accurate results in the mapping of signals into emotional states [7,8]. The use of physical sensors, despite avoiding interruptions, is usually perceived as uncomfortable and intrusive, since they need to be properly attached to various parts of the user’s body. Additionally, sensors might restrict the player’s movement, e.g., a sensor attached to a finger prevents the use of that finger. Sensors also increase the user’s awareness of being monitored [9,10,11], which affects the results of any emotional modeling.

The use of remote sensing to acquire psychophysiological signals from users, a non-obtrusive data collection approach, is mentioned in the literature as a possible solution for this problem. Facial analysis, for instance, is an unobtrusive approach for emotion detection of players [12]. Advances in computer vision allow detailed inspection of facial activity, making automated facial analysis sufficient to infer emotions of players [13,14]. In that sense, automatically detected facial expressions have been used in the context of games, for example to correlate dimensions of experience [15] and to enhance online games [16,17]. Another common signal used in emotion detection is HR, which can also be acquired using remote, non-obtrusive approaches. Remote photoplethysmography (rPPG) allows the estimation of HR and heart rate variability (HRV) using ordinary RGB cameras [18]. Physiological arousal is connected to emotion regulation [19,20,21], in particular the interactions of the Autonomic Nervous System (ANS). Consequently, physiological signals such as the HR are considered reliable sources of information due to their link to the ANS, as opposed to facial expressions [22], for instance. Despite the potential rPPG has to estimate HR without physical sensors, the natural behavior of users in a gaming context, e.g., movement and facial expressions, considerably impacts estimations [23]. Although these challenges are limiting factors, rPPG has already been applied to emotion detection. Signals such as HR and HRV were used to remotely detect stress [3,24,25], for instance. In the majority of the cases, however, subjects are instructed to stay still [18], which leads to improved accuracy in the estimations. Such behavioral constraints affect the interaction between players and games, making the experience unnatural.

The process of mapping physically or remotely acquired psychophysiological signals, e.g., HR and FA, into emotional states involves the identification of the signals that better predict the emotional states [26], as well as a proper definition of the emotional states themselves [27]. A common approach used to perform such mapping is the application of machine learning. The use of machine learning commonly starts with a group of users being exposed to emotion elicitation materials, e.g., images and videos with known emotional labels such as stress and boredom. Signals from those users, e.g., HR and facial expressions, are then measured during the interaction and used to train a machine learning model according to the labeled elicitation materials. Consequently, predictive models are based on a collective perspective. Since a model is usually trained from data of several users, in practice, it potentially describes the average behavior of the group and excludes the key individualities of each user. Such individualities are the main characteristics that define a person, since people differ in several aspects, such as play and learning styles [28] and personality-based responses to media [29]. It has been suggested that a user-tailored approach, where a model is trained from a single individual instead of a group, is more likely to produce better emotional estimations [30].

In summary, previous work focused on obtrusive, physical sensors to acquire user signals for emotion detection. Even when non-obtrusive approaches are used, e.g., rPPG and facial analysis based on computer vision, models are trained based on emotion elicitation materials with limited interactivity, e.g., images and videos. When exposed to such materials, subjects take a passive role with limited opportunities for interaction. Additionally, such materials do not adapt themselves to account for differences in cultural expectations of subjects, assuming all subjects experience the pre-defined emotional stimuli in the same way. Hence, it is reasonable to believe that individual characteristics of users might be better observed with more personalized and complex emotion elicitation materials such as games. Furthermore, a user-tailored approach is likely to preserve and better account for individual characteristics in a method for emotion detection, as opposed to a group model to detect emotions. Additionally, models created from a group are highly affected by ethnic and gender bias, since it is significantly difficult to obtain data from a group that accurately represents the world population. Such a limitation is considerably mitigated in a user-tailored model, since the approach is, by design, based on the data of a single person who is already a perfect representation of him/herself.

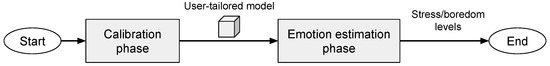

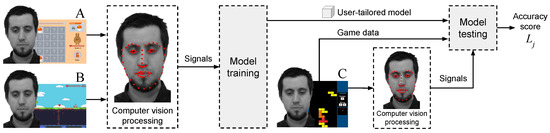

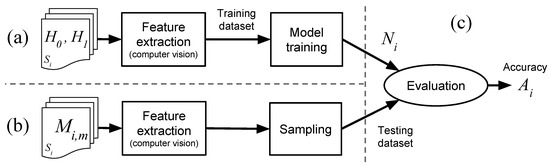

In that light, there is a lack of initiatives focusing on non-obtrusive, user-tailored emotion detection models within the context of games research that are based on game stimuli. This paper presents an approach that aims to fill that gap, providing the HCI and games research community with a process to remotely detect the emotional state of users in a non-obtrusive way. Our approach uses remotely acquired signals, namely HR and FA, to create a user-tailored model, i.e., a trained neural network, able to detect emotional states of boredom and stress of a given subject. The approach is composed of two phases: training (or calibration) and testing, as illustrated in Figure 1. In the training phase, the model is trained using a user-tailored approach, i.e., data from a given subject playing calibration games are used to create a model for that subject. The calibration games are a novel emotion elicitation material introduced by this research. These games are carefully designed to present a difficulty level that constantly progresses over time without a pre-defined stopping point, inducing emotional states of boredom and stress, which accounts for the individualities of each user. The result of the training phase is a user-tailored model, i.e., a trained neural network intended for use on that subject only. Finally, the testing phase is conducted in a game session involving the same subject playing any ordinary, non-calibration game, e.g., Super Mario. During the testing phase, the signals of the subject are remotely acquired and fed into the previously trained model, which then outputs the estimated emotional state of the subject for that particular testing game. Subjects are not instructed on how to behave during the process, which makes the method more generalizable to a context where users behave and interact with games naturally.
Our main contribution is a method for detecting emotional states of stress and boredom of users during gaming sessions using remote acquisition of signals via computer vision, a user-tailored model and emotion elicitation based on a novel game-based calibration phase. The approach is automated and implemented as software without the need for specialized equipment, e.g., sensors. Only a regular video camera and a computer are needed. We present the conception, feasibility test, and systematic evaluation of our method conducted to test the overarching hypothesis: an emotion detection approach that uses remotely acquired signals, i.e., HR and FA, as input of a machine learning model, i.e., a neural network, that is trained on a user-tailored basis (one subject produces one model) using calibration games as emotion elicitation, can achieve an accuracy rate better than chance-level classification. Results are derived from two different experiments with a heterogeneous group of subjects who behaved naturally during the interaction with the games. Previous studies use physical sensors, non-interactive emotion elicitation materials, e.g., images, instructions for subjects to keep still, and highly correlated samples for training and testing any emotion classification model. Our approach and analysis, however, feature unique circumstances: our user-tailored model is trained on one dataset (calibration games) and tested on another (evaluation game), while preserving the natural behavior of subjects and using remote acquisition of signals. This configuration is significantly more challenging than previous work. Our proposed method has use cases in both academia and industry. Regarding the former, researchers of games and HCI can use our approach to detect the emotional state of users during the interaction with digital games and information systems non-obtrusively and without interruptions, e.g., answering questionnaires.
The detection of stress and boredom, for instance, is directly related to the engagement level of users as represented by the Theory of Flow [31]. In industry, the unobtrusive detection of stress and boredom levels can be used to evaluate games, since said emotions are key components in the challenge–reward cycle that makes a game fun and engaging [32]. An evaluation regarding stress and boredom, for instance, allows game developers and publishers to optimize their products before release, leading to higher chances of financial success. Finally, game developers can explore new ways of creating games that detect stress and boredom levels without additional hardware or sensors, allowing games to self-adjust the difficulty level at run-time to improve players’ experience.

Figure 1.

General structure of our proposed method for remote detection of stress and boredom of players during their interaction with games.

2. Remote Detection of Stress and Boredom

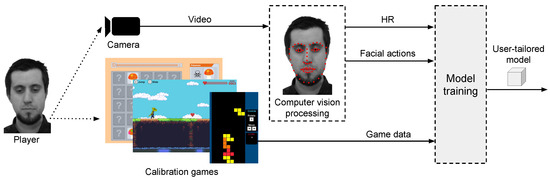

The general structure of our proposed method for remote detection of emotions contains two main phases, as previously mentioned: a calibration phase and an emotion estimation phase. In the calibration phase, the user plays a set of carefully designed games, named calibration games, that act as emotion elicitation materials. During this phase, illustrated in Figure 2, user signals elicited from the interaction with the games, e.g., HR and facial actions, are remotely acquired and used to train a user-tailored model, i.e., a neural network. This model is the foundation for the detection of emotions of that particular user. Finally, in the emotion estimation phase, the user interacts with any ordinary game, e.g., a commercial off-the-shelf (COTS) game, while his/her signals are remotely acquired and fed into the previously trained user-tailored model. The model then outputs the emotional state of stress and boredom for that user in that game.

Figure 2.

Calibration phase composed of emotion elicitation games (calibration games) and remote acquisition of signals from the user. The result of this phase is a user-tailored model applied to detect emotions.

As a consequence of the design of the calibration games (detailed in Section 2.1), which induce particular emotional states, the remotely collected information from the user during the calibration phase contains a detailed variation profile of that user, including changes of psychophysiological signals, throughout the theoretically known emotional states. If a person responds more clearly through one physiological signal than through another, e.g., HR over facial actions, then the variation of that signal is likely to be considered more relevant by the machine learning model during its training. Since the training process is completely based on the signals of a single user, nuances and individual behavior are likely to be registered and learned. The calibration phase needs to be performed only once per person.

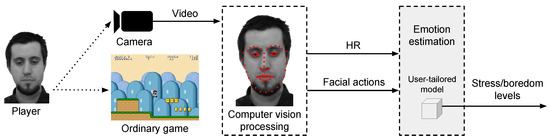

After the calibration phase, the person can play any other ordinary game and be monitored in an emotion estimation phase, as illustrated by Figure 3. As the user plays the game, signals are remotely acquired based on the analysis of the video feed of the interaction. These signals are then used as input to the previously trained user-tailored model of that particular person, which, as a result, produces an estimation of the emotional state regarding stress and boredom for that person in that game. Given that a user has a trained user-tailored model, the emotion estimation phase can be performed for any game as many times as desired.

Figure 3.

Emotion estimation phase. Remotely acquired signals from the player are fed into a user-tailored model that outputs the stress/boredom levels of the player during the interaction with an ordinary game.
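The two phases described above can be illustrated with a minimal sketch. It assumes the per-window feature vectors have already been extracted, uses synthetic data in place of real recordings, and trains a tiny one-hidden-layer network with plain gradient descent. The function names, network size, learning rate, and the synthetic data generator are all assumptions made for illustration; they are not the architecture or training procedure used in this work.

```python
import numpy as np

def train_user_model(X, y, hidden=8, epochs=500, lr=0.1, seed=0):
    """Calibration phase: fit a small network on ONE user's data.

    X: (n_samples, n_features) feature vectors from the calibration games.
    y: labels, 0 = boredom, 1 = stress.
    """
    rng = np.random.default_rng(seed)
    mu, sigma = X.mean(axis=0), X.std(axis=0) + 1e-8
    Xn = (X - mu) / sigma                      # per-user standardization
    W1 = rng.normal(0, 0.5, (X.shape[1], hidden))
    b1 = np.zeros(hidden)
    W2 = rng.normal(0, 0.5, hidden)
    b2 = 0.0
    for _ in range(epochs):
        H = np.tanh(Xn @ W1 + b1)              # hidden activations
        p = 1.0 / (1.0 + np.exp(-(H @ W2 + b2)))
        g = (p - y) / len(y)                   # d(mean cross-entropy)/d(logit)
        gH = np.outer(g, W2) * (1.0 - H ** 2)  # back-propagate through tanh
        W2 -= lr * (H.T @ g)
        b2 -= lr * g.sum()
        W1 -= lr * (Xn.T @ gH)
        b1 -= lr * gH.sum(axis=0)
    return {"mu": mu, "sigma": sigma, "W1": W1, "b1": b1, "W2": W2, "b2": b2}

def predict(model, X):
    """Emotion estimation phase: classify windows from any other game."""
    Xn = (X - model["mu"]) / model["sigma"]
    H = np.tanh(Xn @ model["W1"] + model["b1"])
    return (H @ model["W2"] + model["b2"] > 0).astype(int)

# Synthetic stand-in for one user's sessions (hypothetical data generator).
rng = np.random.default_rng(42)

def fake_session(n):
    y = np.repeat([0, 1], n // 2)
    X = rng.normal(0, 1, (n, 8)) + y[:, None] * 2.0
    return X, y

X_cal, y_cal = fake_session(200)    # calibration games
X_eval, y_eval = fake_session(60)   # a different, ordinary game
model = train_user_model(X_cal, y_cal)
accuracy = (predict(model, X_eval) == y_eval).mean()
print(f"accuracy on the evaluation session: {accuracy:.2f}")
```

The point of the sketch is the data flow: one model per user, fitted only on that user's calibration data and then applied unchanged to data from a different session.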

2.1. Game-Based Emotion Elicitation

Different types of emotion elicitation materials have been mentioned in the literature regarding emotional stimuli. Commonly, emotion elicitation materials are based on images [33,34], videos [30,35] and gamified cognitive tests [24,36]. The use of computer games as emotion elicitation material is less common; however, they have been shown to alter the mean HR of players during stressful periods of gameplay [37,38]. The type of emotion elicitation material also impacts the behavior of subjects. When images and videos are used, for instance, subjects take a passive role with limited possibilities of interaction, which is likely to result in fewer emotional and bodily manifestations. If games are used instead, users can take an active role in the interaction and are more likely to behave in a natural way, e.g., showing facial expressions and moving the body [39]. In a game, users present some degree of body movement, e.g., moving their fingers on controllers. Users are also in charge of actions related to the game mechanics, whose consequences, e.g., wrong/right moves, are likely to produce an emotional reaction associated with movement, e.g., laughter and head tilt. Therefore, it is plausible to believe that games present a more sophisticated interaction through their mechanics, as opposed to the simplistic, one-way interaction between users and images/videos, for instance. Consequently, detecting emotions of users during their interaction with games presents a set of challenges inherently associated with the natural behavior of users in that context. As such, the use of games as emotion elicitation materials is likely to create an emotional connection between users and the stimuli while still portraying the natural behavior expected in a gaming session, resulting in clear and observable changes in body language and psychophysiological signals.

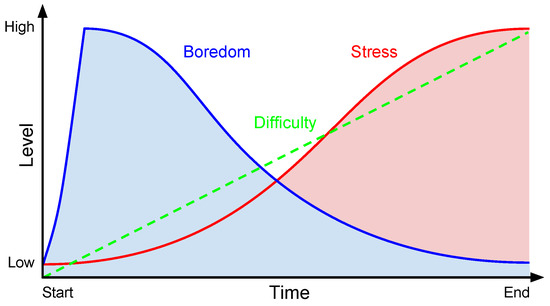

Part of the novelty of our approach relies on the use of games as emotion elicitation materials, which are likely to induce richer emotional reactions, as previously mentioned. In the calibration phase of our method, three games are used to provoke emotional changes in the subjects, so an emotional profile can be learned. We call them calibration games because their goal is to induce an emotional state of boredom and stress in the subject on a user-tailored basis, accounting for different gaming skills and expectations, as illustrated by Figure 4. At the beginning, the difficulty level (green line) is low and the games are highly predictable, without changes or surprises, with a focus on the passage of time and waiting. Such characteristics lead the user to an emotional state of boredom (blue curve) [32,40,41]. The games’ difficulty is then constantly and periodically adjusted, which makes them more challenging as time progresses. At some point in time, which is different for each user depending on gaming skills and personal preferences, the pace of the gameplay and the difficulty level will be overwhelming, leading the user to an emotional state of stress (red curve) [32,39]. As the difficulty level continues to increase, the stress level of the user will also increase. Finally, the difficulty level will increase to the point at which the user is unable to cope with the game. This will lead to consecutive mistakes in the game that will eventually terminate it, e.g., the health bar of the main character reaches zero. Consequently, the calibration games should account for the different expectations and gaming skills of a wide range of users while inducing boredom and stress. Three calibration games were developed for the experiments presented in this paper. They were 2D and casual-themed, and played with a mouse or keyboard in a web browser.
The game mechanics were chosen to prevent the subject from controlling the pace of the game, which ensures that all the subjects experience the same game pace. As previously described, the difficulty of the games increases constantly and the pace is used as a main element to induce particular emotions. Additionally, the mechanics were selected to prevent players from instantly killing the main character by mistake, avoiding an abrupt termination of the interaction.

Figure 4.

Structure of a calibration game. The x-axis shows the progression of time. The y-axis shows the game difficulty and the emotional state users should experience, i.e., stress and boredom.
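The difficulty progression sketched in Figure 4 can be expressed as a simple schedule. The snippet below is a toy model only: the 30-second step, the integer difficulty levels, and the notion of a numeric skill level are hypothetical values chosen for illustration and are not taken from the calibration games themselves.

```python
def difficulty_at(t_seconds, step_every=30.0):
    """Difficulty level at time t: starts at 0 and rises by one level
    every `step_every` seconds, with no upper bound (hypothetical values)."""
    if t_seconds < 0:
        raise ValueError("time must be non-negative")
    return int(t_seconds // step_every)

def time_until_overwhelmed(skill_level, step_every=30.0):
    """First moment at which the difficulty exceeds a player's skill level
    (toy stand-in for the user-dependent onset of stress)."""
    return (skill_level + 1) * step_every

# Every player sees the same schedule, but the point at which it becomes
# overwhelming depends on the player.
for skill in (2, 5):
    t = time_until_overwhelmed(skill)
    print(f"skill {skill}: overwhelmed after {t:.0f} s "
          f"(difficulty {difficulty_at(t)})")
```

Because the schedule has no fixed end point, a more skilled player simply stays in the game longer before reaching the same overwhelmed state.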

A previous analysis [39] conducted on the three calibration games mentioned in this paper, i.e., Mushroom, Platformer and Tetris (see Section 4.1), shows that players’ self-reported emotional states indicate they perceived the games as being boring at the beginning and stressful at the end. This is an essential characteristic of our idea of calibration games, since they are expected to induce emotional states of boredom and stress, as previously mentioned. It has also been confirmed that players present different mean HR [42] and facial activity [39,43] at boring and stressful parts of such calibration games. This strengthens the idea of our calibration games being used as emotion elicitation materials able to evoke psychophysiological reactions that can be used to train a machine learning model for emotion detection.

2.2. Remote Extraction of User Signals and Classification Features

The classification efficiency of a machine learning model is related to the number of features able to accurately discriminate the elements being classified, and the use of more features does not necessarily produce a better model [44] (Chapter 6). Hence, we use a set of features for classification derived from psychophysiological signals that are remotely extracted from a video. They were designed on the basis of previous reports regarding the potential of said signals and features to differentiate emotional states in games. In total, eight features are calculated: seven are related to facial activity, while one is related to HR activity estimated using rPPG. Table 1 presents a description of all the features.

Table 1.

Description of features used for classification.

The facial features are based on 68 facial landmarks automatically detected using Constrained Local Neural Fields (CLNF) [46,47]. They are mostly calculated using the Euclidean distance between those facial landmarks, and they are modeled after facial elements that express a connection with emotional states (see Bevilacqua et al. [43] for a detailed description of the features). This connection is discussed in Section 3 and includes the activity of the corrugator, zygomatic and orbicularis oculi muscles, which are related to positive game events [48], positive emotions [6], and rewarding game events [49], respectively. The detection of stress is also related to blinking rate [33,50], lip movement [50] and lip deformation [33,51], mouth activity [52], and head movement/velocity [33].
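As a rough illustration of a distance-based facial feature, the sketch below computes the Euclidean distance between two landmarks and normalizes it by the distance between the outer eye corners. The landmark indices follow the widely used 68-point annotation scheme and, like the choice of normalization, are assumptions made for this example; the actual feature definitions are those described in Bevilacqua et al. [43].

```python
import numpy as np

def landmark_distance(landmarks, i, j):
    """Euclidean distance between landmarks i and j.

    landmarks: array of shape (68, 2) with (x, y) pixel coordinates.
    """
    return float(np.linalg.norm(landmarks[i] - landmarks[j]))

# Synthetic landmarks for one frame (only the points used here are filled in).
landmarks = np.zeros((68, 2))
landmarks[62] = [50.0, 60.0]   # assumed: inner upper lip, center
landmarks[66] = [50.0, 70.0]   # assumed: inner lower lip, center
landmarks[36] = [30.0, 30.0]   # assumed: outer corner of one eye
landmarks[45] = [70.0, 30.0]   # assumed: outer corner of the other eye

mouth_opening = landmark_distance(landmarks, 62, 66)
eye_span = landmark_distance(landmarks, 36, 45)

# Dividing by a stable facial distance makes the value less sensitive to
# how close the subject sits to the camera.
normalized_opening = mouth_opening / eye_span
print(mouth_opening, eye_span, normalized_opening)
```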

The HR feature is based on remote estimations of HR performed using an established rPPG technique, i.e., Poh et al. [45]. As mentioned in Section 3, HR and its derivatives are well-founded sources of information used for emotion detection [7]. Reports in the literature show the use of HR and its derivatives for continuous arousal monitoring [35], the measurement of confusion [53], the detection of emotional reactions related to digital media [54], the detection of mental and physical stress [55,56], and the measurement of frustration [38]. The rPPG technique proposed by Poh et al. [45] has been reported in the literature as significantly resilient when estimating the HR under challenging conditions. We therefore selected this technique as a suitable way to estimate the HR of users within a context involving games and the natural behavior of players.

The process of extracting and calculating the features is based on a moving window applied to the input video. The moving window has a size of 15 s and a step of one second (93.33% overlap). For each window in the video, the previously mentioned CLNF and rPPG techniques are applied to all frames within that window to detect facial landmarks and collect information regarding pixel values, e.g., the mean value of pixels in the blue channel. Even though all frames within the window are analyzed, only a single, final value is assigned to each feature per window. For the facial features, the final value of a given feature is calculated by aggregating the values of that feature over all the frames within the window, using either the mean or the standard deviation. Empirical tests conducted for this research have shown that features connected to facial regions with fast changes within the window, e.g., eye area and face motion, are better represented by an aggregation using the standard deviation. However, facial features with slower changes, e.g., face area and mouth activity, are better represented by an aggregation using the mean. Consequently, the slower-changing facial features are aggregated using the mean, while the faster-changing ones are aggregated using the standard deviation. The HR feature does not require any aggregation of values, since all frames within the window are used to produce a single value, i.e., the estimation of the mean HR in that window.
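The moving-window aggregation can be sketched as follows, assuming a per-frame value of one feature is already available. The function name, the fixed frame rate, and the synthetic per-frame series are assumptions for illustration; only the 15 s window, the 1 s step, and the choice between mean and standard deviation come from the description above.

```python
import numpy as np

def window_features(per_frame, fps, window_s=15, step_s=1, agg="mean"):
    """Collapse a per-frame series into one value per moving window.

    per_frame: 1-D sequence with one feature value per video frame.
    agg: "mean" for slowly changing features, "std" for fast-changing ones.
    """
    per_frame = np.asarray(per_frame, dtype=float)
    win, step = int(window_s * fps), int(step_s * fps)
    reducer = np.mean if agg == "mean" else np.std
    starts = range(0, len(per_frame) - win + 1, step)
    return np.array([reducer(per_frame[s:s + win]) for s in starts])

fps = 30
series = np.arange(60 * fps)          # 60 s of synthetic per-frame values
means = window_features(series, fps, agg="mean")
stds = window_features(series, fps, agg="std")

# Consecutive windows share 14 of their 15 seconds.
overlap = 1 - 1 / 15
print(len(means), round(100 * overlap, 2))   # 46 windows, 93.33 (% overlap)
```

For a 60 s recording this yields (60 - 15) / 1 + 1 = 46 windows, each contributing a single value per feature.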

3. Background and Related Work

An important aspect of emotion detection relies on physiological signals being linked to emotion regulation [19,20,21]. The ANS, which is related to physiological arousal [19], is subdivided into the Sympathetic and Parasympathetic Nervous System (SNS and PNS, respectively). These systems interact (often antagonistically) to produce variations in physiological signals to prepare a person to react to a situation. The SNS is dominant during physical and psychological stress, triggering the body to alertness, by increasing the HR, for instance. The PNS, on the other hand, is dominant in periods of stability and relative safety, maintaining physiological signals at a lower degree of arousal, e.g., by decreasing the HR. The continuous changes between the SNS and PNS impulses cause, for instance, variations of HR and HRV [21], where HRV refers to beat-to-beat alterations in HR intervals.
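To make the notion of beat-to-beat variation concrete, the sketch below computes the mean HR and two common time-domain HRV measures, SDNN and RMSSD, from a short list of inter-beat intervals. The interval values are made up for the example, and these two measures are given only as familiar instances of HRV; they are not necessarily the ones used in the works cited here.

```python
import numpy as np

def hrv_metrics(ibi_ms):
    """Mean HR (bpm), SDNN (ms) and RMSSD (ms) from inter-beat intervals.

    ibi_ms: sequence of intervals between consecutive heart beats, in ms.
    """
    ibi = np.asarray(ibi_ms, dtype=float)
    mean_hr = 60000.0 / ibi.mean()                  # beats per minute
    sdnn = ibi.std(ddof=1)                          # overall variability
    rmssd = np.sqrt(np.mean(np.diff(ibi) ** 2))     # beat-to-beat variability
    return mean_hr, sdnn, rmssd

# Hypothetical intervals around 800 ms (about 75 beats per minute).
mean_hr, sdnn, rmssd = hrv_metrics([800, 810, 790, 805, 795])
print(f"HR = {mean_hr:.1f} bpm, SDNN = {sdnn:.2f} ms, RMSSD = {rmssd:.2f} ms")
```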

Physiological signals are interesting for emotion detection because suppressing emotions or social masking through physiological signals is impossible [57]. In the games research field, the use of physiological signals to automatically assess different emotional states has been demonstrated [3,4,5,6]. Commonly, the process of emotion detection involves three main steps: emotion elicitation stimuli, feature extraction, and classification. In that configuration, subjects are exposed to emotion elicitation materials which induce changes in psychophysiological signals. The signals are then physically or remotely read and a set of features is derived from them. Finally, the features are used to train a machine learning model able to classify emotions based on the patterns of changes of said signals/features.

Different approaches exist for emotion elicitation stimuli, feature extraction, and classification methodologies regarding emotion detection. The following sections present works and theories related to each one of those elements.

3.1. Extraction and Selection of Psychophysiological Signals

The extraction of psychophysiological signals is usually performed obtrusively, e.g., via physical sensors, or non-obtrusively, e.g., remote sensing based on computer vision.

3.1.1. Physical Readings of Signals

Signals obtained by Electroencephalography (EEG), Electrocardiogram (ECG), Electromyography (EMG), and Galvanic Skin Response (GSR) are widely mentioned in the literature [58]. Readings of HR via ECG, for instance, have been shown to be usable in detecting emotional states [59], including stress [60] and boredom [9]. Mental stress or physical activity can influence the HR due to its connection to the ANS [61], which can be exploited for emotion detection. Facial activity is also mentioned as a source of information for emotion detection. EMG, which measures the electrical activity of facial muscles, has been used to correlate emotions with activity of the zygomatic muscle [6], and game events with activity of the corrugator [48] and of the zygomatic and orbicularis oculi muscles [49]. Finally, non-invasive, watch-like wearable devices have also been demonstrated as sensors to collect physical signals for emotion classification [62]. Rincon et al. [63] demonstrate the use of such a wearable device that was custom-built to detect GSR, temperature, variations in blood flow, and movement. The device is jointly used with automated facial analysis and aims to detect the emotional state of individuals to collectively infer the emotional state of a group of users using a multi-agent approach. Emotion information of individuals using the wristband contributes to the calculation of the emotional state of the group they belong to.

Despite sensors allowing accurate measurement of signals, reports in the literature show that noise is still found in collected data due to sensor motion [64].

3.1.2. Remote Readings of Signals

Contrary to the obtrusiveness of measuring signals using physical sensors, the extraction of signals using computer vision is presented as a viable, non-obtrusive alternative [65]. Remote photoplethysmography (rPPG) [66] is commonly used to estimate the HR of a person from the analysis of a video [67]. The principle of rPPG is the contact-less, camera-based detection and monitoring of the variations in light absorption that happen on the human skin in order to estimate cardiac activities [68]. Such remotely estimated HR measurements have been used in the context of emotion estimation, including the detection of cognitive stress [36], and the inference of stress [3,24,25] and boredom [7]. The use of rPPG, however, is highly affected by the subject’s movement and facial activity, e.g., facial occlusion and talking, which is likely to happen during more natural interactions. Consequently, evaluations of rPPG measurements are commonly performed in circumstances where subjects are instructed to remain still [18,69,70], or to perform artificial tasks intended to mimic natural behavior; examples of such tasks include arbitrary head movements [71], mental arithmetic [36], and simplistic computer–human interactions [72,73]. Aiming to improve the accuracy of the HR estimations, rPPG-based techniques employ different approaches to collect and process the data acquired from a video. Initiatives include tracking of head movement [74], use of pre-defined skin-tone models [75,76], and signal estimation based on blind source separation (BSS) such as independent component analysis (ICA) [45]. Due to the statistical nature of ICA, the BSS-based technique by Poh et al. [45] is significantly resilient to the subject’s motion and changes in illumination conditions, presenting better HR estimations compared to other rPPG techniques when subjects are not performing periodic motion [75], e.g., exercise.
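The basic principle of rPPG can be illustrated with a deliberately simplified sketch: take the mean value of one color channel over a skin region for each frame, and look for the dominant frequency within a plausible HR band. This is not the ICA-based technique of Poh et al. [45], which separates the RGB traces into independent components before the spectral analysis; the single-channel trace, the 0.75 to 4 Hz band, and the synthetic signal below are assumptions for illustration.

```python
import numpy as np

def estimate_hr_bpm(channel_trace, fps, low_hz=0.75, high_hz=4.0):
    """Estimate HR as the strongest spectral peak within a plausible band.

    channel_trace: mean pixel value of a skin region, one value per frame.
    """
    x = np.asarray(channel_trace, dtype=float)
    x = x - x.mean()                                  # remove the DC offset
    spectrum = np.abs(np.fft.rfft(x * np.hanning(len(x)))) ** 2
    freqs = np.fft.rfftfreq(len(x), d=1.0 / fps)
    band = (freqs >= low_hz) & (freqs <= high_hz)     # 45 to 240 bpm
    return 60.0 * freqs[band][np.argmax(spectrum[band])]

# Synthetic 15 s trace at 30 fps: a weak 1.2 Hz pulse (72 bpm) buried in a
# stronger slow illumination drift plus noise.
fps = 30
t = np.arange(15 * fps) / fps
rng = np.random.default_rng(0)
trace = (0.5 * np.sin(2 * np.pi * 1.2 * t)
         + 2.0 * np.sin(2 * np.pi * 0.1 * t)
         + 0.1 * rng.normal(size=t.size))

hr = estimate_hr_bpm(trace, fps)
print(f"estimated HR: {hr:.1f} bpm")
```

With a 15 s window the frequency resolution is 1/15 Hz, i.e., 4 bpm, which bounds how finely a single window can resolve the HR in this simplified form.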

Facial analysis performed manually or automatically is another source of information to extract user signals. When manual analysis is conducted, inspection commonly relies on the Facial Action Coding System (FACS) and its facial action units (AU) being used as signals/features for quantitative emotion detection [77]. The frequency of manually annotated AUs has been reported as an indication of the emotional state of subjects, suggesting more AUs in stressful parts of a game compared to neutral parts [78]. Automated FACS-based analysis has been used to correlate engagement and frustration to facial expressions [79], as well as an estimator of emotional states in games [80]. The use of FACS-based analysis is contested, however, since it is a time-demanding task when performed manually and the decoding of facial expressions has yielded different results due to cultural factors [81]. In that light, automated analysis based on computer vision is presented as an alternative to detect facial features and classify them into facial expressions [82]. Some initiatives focus on detecting the six universal facial expressions [83], while others rely on distances, i.e., Euclidean distance, and angles of facial features to estimate emotional states [84,85,86,87,88,89]. The tracking of head movement and facial activity, e.g., talking and gestures, has also been reported as an important element in emotion detection, arguably because subjects show a neutral face most of the time during the interaction with games [90]. Head movement is mentioned as another element for emotion detection, which can be used to detect gestures and facial expressions [91], or correlated with experience in gaming [92] or stressful situations [33].

3.2. Emotion Classification

Emotion classification based on psychophysiological signals commonly tries to map those signals into emotional states defined by a model. One model mentioned in the literature is the basic emotions proposed by Ekman and Friesen [83]. Constructed from an experiment involving cultural differences, it suggests that particular facial muscular patterns and discrete emotions are universal. The six emotions mentioned in the theory are: happiness, surprise, sadness, fear, anger and disgust. A contrary definition is presented by Russell [93], who defined another model of emotions named Circumplex Model of Affect (CMA). Commonly referred to as Russell’s Arousal–Valence (AV) space, the model is contrary to strictly basic emotion models of affective state, where each emotion emerges from independent neural systems [94]. The model proposes a dimensional approach where all affective states arise from the activation of two fundamental neurophysiological systems: arousal (or alertness) and valence (a pleasure–displeasure continuum). In the field of games research, one of the most mentioned theories regarding emotions is the theory of flow. Flow was originally defined as a phenomenon in which a person experiences a subjective state characterized by an intense level of attention during the execution of an intrinsically motivated activity [95]. It has been used as the foundation for several concepts, including engagement and immersion [96], sense of presence [97] and applicability in game design [31,98,99].

3.2.1. Approaches Based on Physical Contact and Sensors

The first multifactorial analysis approaches were based on obtrusive measurements of signals using physical sensors. Chanel et al. [100], for instance, demonstrate the use of multifactorial input analysis to measure emotions and involvement in a game context. In their study, participants play Tetris in different conditions of difficulty while a variety of sensors, including a respiration belt and an electroencephalogram (EEG) system, monitor them. Classification accuracy ranges from 48% to 55% depending on input signals and classifiers used to create the model.

Vandeput et al. [55] demonstrate the use of HR and HRV to detect mental and physical stress. Three demanding activities (a postural task, a mental task and a task that is a combination of both) are used and, for almost all the HR measures obtained, the demanding activities can be distinguished from the rest period. The authors also point out that mental stress decreased the high frequency components of the HRV interval, i.e., HF, while increasing the low frequency ones, i.e., LF. A similar experiment conducted by Garde et al. [56] involved two tasks: one mentally and physically demanding, i.e., a digital version of the Stroop color word test [101], while the other was only physically demanding. The authors confirmed the findings of Vandeput et al. [55] by showing higher HR, increased LF and decreased HF during the mentally demanding task, compared to the rest period.
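
To make the notion of high and low frequency HRV components concrete, the following is a minimal sketch of how band powers could be computed from a series of inter-beat (RR) intervals. It is not the procedure used in [55] or [56]. The function name `band_powers`, the 4 Hz resampling rate and the band limits (0.04–0.15 Hz for the low frequency band, 0.15–0.40 Hz for the high frequency band) are assumptions based on commonly used conventions, and a plain periodogram stands in for whatever spectral estimator the cited authors applied.

```python
import numpy as np

def band_powers(rr_s, fs=4.0, lf=(0.04, 0.15), hf=(0.15, 0.40)):
    """Return (LF power, HF power) for RR intervals given in seconds.

    The band limits and the resampling rate are assumed conventions,
    not values taken from the studies discussed in the text.
    """
    rr_s = np.asarray(rr_s, dtype=float)
    beat_times = np.cumsum(rr_s)                       # time stamp of each beat
    grid = np.arange(beat_times[0], beat_times[-1], 1.0 / fs)
    rr_even = np.interp(grid, beat_times, rr_s)        # evenly sampled tachogram
    rr_even = rr_even - rr_even.mean()                 # drop the constant offset
    spectrum = np.abs(np.fft.rfft(rr_even)) ** 2 / (fs * len(rr_even))
    freqs = np.fft.rfftfreq(len(rr_even), d=1.0 / fs)
    df = freqs[1] - freqs[0]

    def power(lo, hi):
        # Sum the periodogram bins that fall inside the band.
        mask = (freqs >= lo) & (freqs < hi)
        return float(spectrum[mask].sum() * df)

    return power(*lf), power(*hf)
```

Under these assumptions, a tachogram that oscillates slowly (around 0.1 Hz) yields more power in the low frequency band, whereas a faster oscillation (around 0.25 Hz) yields more power in the high frequency band.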

Grundlehner et al. [35] also use physical sensors to perform real-time continuous arousal monitoring. The authors record and use four signals from subjects to estimate arousal: ECG, respiration, skin conductance and skin temperature. The ECG is used to calculate HRV, which is then applied in the estimation. The data used for emotion-triggering is based on videos, sounds and a cognitively demanding game. A regression analysis is performed to identify the importance of the features in the estimation of arousal. and are not significant, compared to the other signals, e.g., skin conductance, while the standard deviation of HRV presents a significant weight. The arousal prediction matches the hypothesized arousal events marked by the authors in each of the emotion-triggering events. The results, however, were derived with controlled, pre-defined events that are expected to cause reactions, which might be related to arousal. More subtle or dynamic interactions, such as the ones obtained when a subject plays a digital game, might not be identified or detected by the approach proposed by the authors.

Bailenson et al. [30] use a combination of physiological signals (obtained from physical sensors) and facial expressions. Authors use a machine learning model to map the input signals to emotions (sadness or amusement). The training data used for the machine learning model are based on recordings of participants while they watched a video containing different emotion-triggering segments. Physiological signals, among them HR and skin conductance/temperature, are used in conjunction with video frames annotated by professional coders to create a predicting model. Results show a model better at categorizing emotions than measuring their intensities. In addition, the use of facial and physiological information combined is more efficient than using either one alone. Additionally, a person-specific model performs better than a group model, which suggests that person-tailored models might be more suitable for predictions than general-purpose ones.

3.2.2. Approaches Based on Remote, Non-Contact Analysis

Zhou et al. [102] propose a completely non-obtrusive and remote approach for user emotion identification based on a multifactorial analysis. A classifier is trained on a set of psychophysiological signals, among them HR and facial activity, i.e., expressions, blinking, pupil dilation and head movement, in combination with information related to the user interaction, i.e., keyboard/mouse usage and sentiment analysis of the content being read. The accuracy rate the classifier achieves ranges from 56% to 89% depending on the emotional state being identified. However, the authors do not specify how much each input signal contributes to the emotional output. Additionally, it is not possible to infer whether such an approach could be used outside the controlled environment created by the authors, since this would require a simulation of a social network filled with previously defined and known content in order to work.

McDuff et al. [36] also use a camera to remotely measure cognitive stress via HRV. Participants are recorded while resting and performing arithmetic tasks silently. A facial region is automatically detected and used to estimate the blood volume pulse (BVP) based on a PPG signal processed using Independent Component Analysis (ICA). A set of physiological parameters is then derived from the estimated BVP, including HR, HRV, and respiratory rate (RR). Such parameters are used to train a classifier to predict if a user is under cognitive stress. Results show a prediction accuracy of 85% for a model based on Support Vector Machine (SVM). The authors report that the HR was not significantly different during the periods of rest and cognitive stress. Additionally, RR and HRV are reported as the strongest predictors. McDuff et al. [24] perform further investigations but use different cognitive tasks (two cognitively demanding games). A person-independent machine learning model based on HR, HRV, its HF and LF components (along with normalized and combined versions of these signals) and breathing rate is used to classify the stress level of the subjects. The authors report no statistical difference regarding heart and breathing rates in any case. The variations of the HF and LF components, however, are significantly different during the cognitive tasks compared to the rest period; higher LF and lower HF power are found in both cognitive tasks compared to the rest period, which aligns with findings of previous work. The authors also point out that the stress predictions made by the model are consistent with the self-reported answers. The two participants with the highest self-reported stress level show the highest predicted stress level, while the two participants with the lowest self-reported stress level also present the lowest predictions.
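
As an illustration of how an HR value can be derived from a camera-based pulse signal, the sketch below picks the dominant frequency of a one-dimensional trace, e.g., the mean intensity of a facial region over time. It is a deliberate simplification and not the ICA-based pipeline of McDuff et al. [36]: the function name `estimate_hr_bpm` and the 0.7–4.0 Hz search band (42–240 beats per minute) are assumptions made for this example.

```python
import numpy as np

def estimate_hr_bpm(signal, fps, lo_hz=0.7, hi_hz=4.0):
    """Estimate heart rate (beats per minute) from a pulse-like trace.

    Simplified spectral-peak approach; the search band is an assumption.
    """
    x = np.asarray(signal, dtype=float)
    x = x - x.mean()                       # remove the constant offset
    x = x * np.hanning(len(x))             # taper to reduce spectral leakage
    power = np.abs(np.fft.rfft(x)) ** 2
    freqs = np.fft.rfftfreq(len(x), d=1.0 / fps)
    band = (freqs >= lo_hz) & (freqs <= hi_hz)
    peak_hz = freqs[band][np.argmax(power[band])]
    return 60.0 * peak_hz
```

The frequency resolution of such an estimate is the inverse of the window length, so a 20 s window resolves HR only to within about 3 beats per minute.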

Finally, Giannakakis et al. [33] present an approach for the detection of stress/anxiety on the basis of eye blink, mouth/head movements and HR estimations using rPPG. The participants are recorded while performing a set of tasks designed to trigger emotional responses, such as talking in a foreign language, remembering a sad incident, visualizing images/videos and playing a gamified cognitive test, i.e., Stroop test. Facial cues are obtained from an automatically detected region of interest (ROI). These cues are used to extract facial features connected to head movement, mouth and eyes. The extracted features, including HR information estimated using rPPG, are selected and ranked accordingly to maximize a machine learning classification step. Different classifiers are employed, which yield different classification accuracy rates. For each task performed by the subjects, classification accuracy ranges between 80% and 90% taking into account the most efficient classifier. It is noted by the authors that the observation of stressful images and the interaction with the Stroop test appear to be the most consistent across the classifiers employed.

4. Experimental Setup

We have conducted two distinct experiments, henceforth named Study 1 and 2, to systematically evaluate our method for remote detection of emotions. In Study 1, we aimed to test the feasibility of both our proposed method and the use of calibration games as emotion elicitation materials to train an emotion classifier. In Study 2, we aimed to evaluate the accuracy of our method in a more realistic scenario by applying it to estimate the emotional state of subjects during the interaction with a COTS game. Both experiments share common elements, such as the calibration games used for emotion elicitation. The following sections firstly describe such elements, followed by a detailed description of the unique elements of each study, including their setup and the profile of the subjects involved.

4.1. Calibration Games

Three games (source code available at: https://fernandobevilacqua.com/link/phd-experiment1) were used as stimuli elicitation materials in both experiments. They are illustrated in Figure 5. They were named Mushroom, Platformer, and Tetris due to their mechanics. The Mushroom game follows the puzzle genre and the main goal is to correctly sort poisonous from non-poisonous mushrooms. The distinction is made by the color pattern found on the upper part of the mushrooms. At each round, a set of mushrooms is presented to the player who has a certain time to correctly identify and sort them, dragging and dropping the good ones into a creature (the monster that feeds on the mushrooms) and the bad ones into the trash bin. At the upper part of the screen, the game shows an illustration of a poisonous mushroom that must be identified among the presented mushrooms for that round, along with a bar that shows the progression of time, i.e., time remaining to identify the mushrooms of the current round. At each round, a new illustration of a poisonous mushroom is presented along with a new set of mushrooms to be identified and sorted. The player does not have to remember previously presented poisonous mushrooms, just the currently presented poisonous one for the active round. As a consequence, a poisonous mushroom in a previous round could be a non-poisonous mushroom in future rounds, and vice versa. If the player performs a wrong action, e.g., drags a good mushroom into the trash or a bad one into the monster, a buzz sound is played, visual feedback informs about the mistake, and the health bar of the monster is decreased. Correct actions are rewarded with a pleasant sound, a visual feedback, and an increase in the health bar of the monster. If the player correctly identifies all mushrooms of the round before the time bar reaches the end, the player must wait until a new round is presented. 
If there are still mushrooms left to identify when the available time for the current round ends, each remaining mushroom is treated as a wrong action, i.e., a buzz sounds and visual feedback highlights all pieces that were not sorted. At the beginning of the game, rounds present few mushrooms and considerable time is available for sorting all pieces. As the subject plays the game and the time progresses, rounds present more mushrooms and less time is given to the sorting task. Eventually, mistakes will decrease the health bar of the monster towards zero, ending the game.

Figure 5.

Screenshots of the three calibration games used in the experiments. From left to right: Mushroom, Platformer, and Tetris. Reproduced from Bevilacqua et al. [23].

The Platformer game follows the endless runner platform genre and the main goal is to avoid obstacles while collecting hearts. The main character, i.e., a dinosaur, is displayed slightly to the left of the screen and the player is unable to move it horizontally. The player can make the character jump over or slide under obstacles. Platforms with different heights are presented to the player, moving from right to left; however, they never become disconnected, i.e., the player does not need to jump from one platform to another, just follow the provided slope up or down. When the main character collides with an obstacle, a sound related to hurting is played and the content of the health bar is decreased. If the character collects a heart, a pleasant sound is played and the content of the health bar increases. At the beginning of the game, the speed of the main character, i.e., how fast the platforms move towards the left, is very low, few obstacles are presented, several hearts are available, and there are few variations regarding the heights of the platforms. As time progresses, the speed of the platforms increases, more obstacles and more varied platform heights are presented, and fewer hearts are available. As the speed of the platforms and the number of obstacles increase, the player commits more mistakes, eventually leading the health bar of the main character to reach zero, which ends the game.

Finally, the Tetris game follows the genre of the original Tetris game. Differently from the original version, in our game, the player is not presented with a visual indication of the next piece to enter the screen. Additionally, the player is not able to use any key to speed up the movement of the pieces. They can only be moved to the right/left and rotated. At the beginning of the game, the speed at which the pieces fall is very low, so players must wait until pieces reach the bottom of the screen or fit into already established pieces. As time progresses, the speed at which the pieces fall increases every minute, so the player has less time to react to new pieces and plan their trajectory. Eventually, the pieces move too fast for the skill level of the player (which is different for each subject based on gaming experience, for instance), leading to mistakes and the piling up of pieces. As in the original version of the Tetris game, when a piece reaches the upper part of the screen the game ends.

The three calibration games used the same seed for any calculation of randomness, i.e., random number generation. Consequently, the same sequence of game elements was guaranteed to all subjects, e.g., same sequence of mushrooms in the Mushroom game, same pattern of platforms and obstacles in the Platformer, and same sequence of pieces in Tetris.
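
The effect of a fixed seed can be illustrated with a short sketch. It is written in Python for illustration only and is not taken from the source code of the calibration games; the function name `piece_sequence`, the piece labels and the seed value are hypothetical.

```python
import random

# Hypothetical labels for the seven Tetris pieces.
PIECES = ["I", "J", "L", "O", "S", "T", "Z"]

def piece_sequence(seed, count):
    """Return a reproducible sequence of pieces for a given seed."""
    rng = random.Random(seed)   # private generator, independent of global state
    return [rng.choice(PIECES) for _ in range(count)]

# Two sessions created with the same (hypothetical) seed see the same pieces.
session_a = piece_sequence(seed=1234, count=10)
session_b = piece_sequence(seed=1234, count=10)
```

Because each session builds its own generator from the same seed, the order of game elements does not depend on how long a subject plays or on any other source of randomness in the program.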

4.2. Equipment and Data Collection

In both experiments, subjects were seated alone in a room in front of a computer, while being recorded by a camera and measured by a heart rate sensor. The camera was attached to a tripod placed in front of the subjects at a distance of approximately 0.6 m; the camera was tilted slightly up. A spotlight, tilted 45° up, placed at a distance of 1.6 m from the subject and 45 cm higher than the camera level, was used for illumination; no other light source was active during the experiment. Figure 6 illustrates the setup.

Figure 6.

Experiment setup. On the left, an image illustrating the position of the equipment, including the angle of the external light source; on the right, an image highlighting the position and angle of the video camera.

During the whole experiment, subjects were recorded using a Canon Legria HF R606 video camera (Canon Inc., Tokyo, Japan). All videos were recorded in color with 24-bit RGB, i.e., three channels of color with eight bits each, and a frame rate of 50 frames per second (50p). Video files had a resolution of 1920 × 1080 pixels and used the AVCHD-HD format (encoded using MPEG-4 AVC). At the same time, the HR of subjects was measured with a frequency of 1 Hz by a TomTom Runner Cardio watch (TomTom International BV, Amsterdam, The Netherlands). The watch was placed on the left arm like a regular wrist watch at a distance of about 7 cm from the wrist. The use of such a device was unobtrusive; therefore, subjects could still freely use both hands to play the games.

After playing each calibration game, subjects answered a questionnaire to provide self-reported emotional states related to stress and boredom. Questions were presented as a 5-point Likert scale contextualized regarding the beginning and the end parts of the games, e.g., how stressful was this game at the beginning (1: not stressful at all, 5: extremely stressful). After playing all games, subjects also answered a questionnaire about demographics and gaming experience. The questions were based on the Video Game Experience Questionnaire [103] and the Survey of Spatial Representation and Activities [104].

4.3. Participants

In both experiments, subjects were recruited through advertisement material spread on social media channels and on the campus of the University of Skövde. Subjects were students, staff members, and inhabitants of the community/city. All participants gave their informed and written consent to participate in the experiments, which were approved by the research ethics adviser at the University of Skövde, Sweden and conducted in accordance with the ethical recommendations of the Swedish Research Council [105]. There were, for Study 1 and 2 respectively, subjects (50% female) aged 22 to 59 years old (mean 35.4 ± 10.79) and subjects (38.7% female) aged 19 to 66 years old (mean 27.2 ± 7.2). The participants of Study 1 and Study 2 are different; there is no overlap of subjects between the two experiments. The population of subjects in both experiments presented a diversity of gender and gaming experience, as shown in Table 2 and Table 3, which present, for subjects of Study 1 and 2, the self-reported levels of skill at playing video games and the number of hours per week subjects played any type of video game over the last year, respectively.

Table 2.

Self-reported levels of skill at playing video games for subjects in Study 1 () and Study 2 ().

Table 3.

Self-reported number of hours per week subjects in Study 1 () and Study 2 () played any type of video game over the last year.

The diversity in gaming experience, gender and age of the subjects in both of our experiments provides heterogeneous data that allow a more realistic and broad analysis of our proposed method. It is important to highlight that, in a model trained on a group basis, i.e., data from several subjects are used to train a model that is evaluated on other subjects, the wide range of ages and gaming experiences of our population of subjects could create outliers in the statistical examination of the training and testing phases. This is not the case here, since our method uses a user-tailored approach, i.e., the emotion classifier is trained and evaluated on the data of a single subject, not a group. Consequently, all statistical analysis conducted in the training and testing phases is based on each subject, which means that a given subject is evaluated with a model trained on the data from that same subject. In summary, the answers given by a particular subject are the baseline for the analysis of that subject.

4.4. Configuration of Study 1

Materials and Procedures

Each participant was recorded for approximately 25 min, during which they played three games, i.e., Mushroom, Platformer and Tetris. Subjects played those three games in a randomized order. The first two games were followed by a 138 s rest period, during which subjects listened to calm classical music. Participants received instructions from a researcher informing them that they should play three games, answer a questionnaire after each game and rest. In addition, they were told that their gaming performance was not being analyzed, that they should not give up in the middle of the games and that they should remain seated during the whole process. As previously mentioned, subjects answered a questionnaire after playing each game.

4.5. Configuration of Study 2

4.5.1. Materials and Procedures

Participants were recorded for an average of 45 min during two (uninterrupted) parts of the experiment, i.e., the calibration and testing phase, as illustrated by Figure 7. In the calibration part, aimed at gathering data for training a user-tailored model, subjects played three calibration games (described in Section 4.1). Subjects played those calibration games in a randomized order. Similarly to Study 1, after playing each game, subjects answered a questionnaire and rested for a period of 138 s. In the testing part, aimed at gathering data to test the accuracy of the user-tailored model, subjects played seven levels of an evaluation game, i.e., Infinite Mario (described in Section 4.5.2). Subjects answered a questionnaire after each level of Infinite Mario to provide a self-reported assessment of experienced stress and boredom. The questionnaire had two questions, one concerning stress and the other concerning boredom; both used a 5-point Likert scale to assess the level of stress/boredom players experienced during the level played, e.g., 1 being not bored at all, 5 being extremely bored.

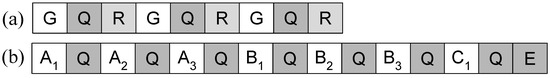

Figure 7.

Two (uninterrupted) parts of the experiment. (a) calibration part; (b) testing part. G: calibration game, Q: questionnaire about game/level, R: resting, ABC: levels of Infinite Mario, E: demographic questionnaire.

The levels of Infinite Mario in the testing part were organized in three batches, A, B and C, containing 3, 3 and 1 levels, respectively. The levels in batches A and B were designed to present an increase in difficulty within the batch; therefore, levels A and B were expected to be less difficult/challenging than levels A and B, for instance. Similarly, the levels in batch B were designed to be more challenging than the levels in batch A, also following an increase in difficulty. Consequently, levels B were expected to be slightly more difficult/challenging than levels A. This pattern was intended to mimic the balance curve of a commercial game, where levels, and game parts, commonly tend to increase their difficulty as the game story progresses. In order to ensure that the subjects would experience some level of boredom during the testing phase, which is required for the evaluation of the proposed method, levels B and C were designed using a particular set of changes, including the use of Mario’s auto-scrolling camera mechanics. In such a configuration, the player has no control of the speed of the level. After each level was played, the subjects were required to answer a questionnaire about how boring/stressful the game level had been. The order in which the levels were played was not randomized among the subjects during the testing phase of the experiment. As a consequence, all the subjects played the evaluation game in the same order: levels A to A, followed by levels B to B, and finally level C.

After subjects finished playing the last level in the testing part, i.e., level C, they answered a final questionnaire about their age and gaming experience/profile. Before starting the experiment, participants received the following instructions from a researcher: they should play a few games, answer questionnaires after each game and rest when instructed. They were also told that their gaming performance was not being evaluated, that they should not give up in the middle of the games, that a time limit existed for the levels to prevent them from playing too long, and that they should remain seated during the whole process.

4.5.2. Evaluation Game: Infinite Mario

The game used in the evaluation phase of the experiment is a modified version of Markus Persson’s Infinite Mario, a public domain clone of Nintendo’s platform game Super Mario Bros (the version of the game used in the experiment is an HTML5, web-based version built by Robert Kleffner, available at: https://github.com/robertkleffner/mariohtml5; Robert ported to HTML5 the original Java version created by Markus Persson; source code of both versions, Robert’s and Markus’, are in the public domain; the source code of the final version used in this experiment is available at: https://fernandobevilacqua.com/link/phd-experiment2). In the case of this experiment, the game is played with a keyboard in a web browser. Infinite Mario has been widely mentioned in the literature, including studies involving the modeling of player experience [92,106,107] and detection of affective states [108].

The gameplay in Super Mario, and consequently in Infinite Mario as well, consists of controlling the main character, Mario, along the level. Mario can move left or right, jump, run, duck, and throw fireballs (if the power-up Flower has been collected). The objective of the game is to complete each level, which is accomplished by traversing it from left to right until the “end of level” checkpoint is reached. Mario can be in three different states: small, big, and power-up. If Mario is small, any interaction with enemies that is different from landing on top of them after a jump results in Mario getting killed immediately. If Mario is big, the same “wrong” interaction with enemies causes Mario to be hurt and transform into the small state. If Mario is in the power-up state, the “wrong” interaction with enemies causes Mario to be hurt and transform into the big state. Consequently, keeping Mario in the big or power-up state is a strategic advantage that prevents Mario from being killed, which is likely to calm players, i.e., induce a relaxed emotional state. On the other hand, keeping Mario in the small state is less beneficial, since mistakes are fatal, thus likely causing players to feel anxious/stressed in such conditions. Along the level, Mario might encounter enemies, which can be killed or ignored. Mario can kill enemies by jumping and landing on top of them, which is rewarded with score points. Some enemies, e.g., Koopa Troopa (a sort of turtle), leave a shell behind when killed by Mario. The shell can be picked up by Mario and carried around, serving as a weapon when released. The levels might also contain terrain obstacles of varying sizes, e.g., gaps that must be jumped over. If Mario falls into a gap, he dies immediately. Mario can also find collectable items, i.e., coins and power-ups, or interactable items, e.g., blocks. Mario collects items by touching them, e.g., a collected coin results in score points. 
Collectable items might be visible in the level or hidden inside interactable items, e.g., blocks. Mario interacts with blocks by bumping into them from below, e.g., jumping and hitting Mario’s head on the bottom of a block destroys it. A destroyed block might give a collectable item as a reward, e.g., coin, Mushroom (Mario transitions to big state) or Flower (Mario transitions to power-up state).
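
The three states of Mario and the transitions described above can be summarized as a small state machine. The sketch below is illustrative only and is not taken from the source code of Infinite Mario; the names `MarioState`, `on_wrong_enemy_contact` and `on_item` are hypothetical. It also assumes that collecting a Mushroom while in the power-up state leaves the state unchanged, a detail the description above does not cover.

```python
from enum import Enum

class MarioState(Enum):
    DEAD = 0
    SMALL = 1
    BIG = 2
    POWER_UP = 3

def on_wrong_enemy_contact(state):
    """Any enemy contact other than landing on top of the enemy."""
    if state is MarioState.POWER_UP:
        return MarioState.BIG      # hurt: power-up -> big
    if state is MarioState.BIG:
        return MarioState.SMALL    # hurt: big -> small
    return MarioState.DEAD         # small Mario is killed immediately

def on_item(state, item):
    """Collecting a power-up item ('mushroom' or 'flower')."""
    if state is MarioState.DEAD:
        return state
    if item == "flower":
        return MarioState.POWER_UP
    if item == "mushroom" and state is MarioState.SMALL:
        return MarioState.BIG
    return state                   # assumption: no downgrade from power-up
```

Under this reading, a player in the power-up state can absorb two mistakes before a third one becomes fatal, which is the strategic advantage mentioned in the text.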

During gameplay, information about Mario, the score and the current level is displayed at the top of the screen. This information includes the number of lives Mario has left to complete the level, the level score, number of coins collected (collecting 100 coins results in an extra life), name of the current level, and the amount of time available to complete the level (constantly ticking down). When the time remaining to complete the level reaches the 60 s mark, a hurry up sound is played, then the background music starts to play in a faster tempo. Unless informed otherwise, all the levels of Infinite Mario in the experiment start with three lives and 200 s of available time. Every time Mario dies, the time remaining to complete the level is reset to its initial value, e.g., 200 s.
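
The timing rules of a level can be expressed as a small clock object. The following is a minimal sketch under stated assumptions and does not reproduce the game's code: the class name `LevelClock` and its members are hypothetical, the hurry-up state is assumed to be cleared when the timer is reset after a death, and what happens when the timer reaches zero is intentionally left out because it is not described here.

```python
from dataclasses import dataclass, field

@dataclass
class LevelClock:
    initial_time_s: float = 200.0   # default time available per level
    hurry_mark_s: float = 60.0      # remaining time that triggers the hurry-up sound
    lives: int = 3
    remaining_s: float = field(init=False, default=0.0)
    hurry_active: bool = field(init=False, default=False)

    def __post_init__(self):
        self.remaining_s = self.initial_time_s

    def tick(self, dt_s):
        """Advance the clock; return True when the hurry-up mark is reached."""
        self.remaining_s = max(0.0, self.remaining_s - dt_s)
        if not self.hurry_active and self.remaining_s <= self.hurry_mark_s:
            self.hurry_active = True    # sound plays once, music speeds up
            return True
        return False

    def on_death(self):
        """Mario dies: one life is lost and the timer returns to its initial value."""
        self.lives -= 1
        self.remaining_s = self.initial_time_s
        self.hurry_active = False       # assumption: tempo returns to normal
```

With the default of 200 s, the hurry-up mark is reached after 140 s of play; a level configured with a shorter initial time reaches it correspondingly earlier.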

Originally, Infinite Mario procedurally generates all its gameplay content, e.g., level design and position of items/enemies. This behavior was not desired for the experiment, since all the subjects should experience the same Mario levels. Additionally, subjects should feel stressed and bored in the game at some point, so that the proposed emotion detection method can be properly evaluated when such moments are detected. As a consequence, Infinite Mario was adapted and tweaked to serve as a suitable evaluation game in the experiment. The procedural content generation was constrained by a seed, and a set of parameters was introduced to control the creation of the content, e.g., length of the level, amount and width of terrain obstacles, such as gaps and platforms, availability of coins and power-ups, among others. This ensured that all the subjects experienced exactly the same levels.

Previous works using Infinite Mario [106,107] have shown a correlation between anxiety and (1) difficulty of jumping, e.g., overcoming obstacles, and (2) gap width. There is also a correlation between boredom and the width of gaps, i.e., the wider the gap, the less boring the level. Based on those findings and the guidance provided by game design experts, the Mario levels used in the experiment were adjusted according to the description presented in Table 4. Column Level refers to the level name/number. Column Type refers to the overall visual representation of the level. Possible types are Overground (open sky and green landscape), Underground (closed ceiling, dirt-like environment), and Castle (closed ceiling with bricks resembling the interior of a castle). Each level type features different background music and visual elements, as illustrated in Figure 8. Column Emotion refers to the expected emotional state most subjects will experience. Finally, column Adjustments refers to the constraints used to generate the level content.

Table 4.

Levels of Infinite Mario and adjustments made to induce a particular emotional state.

Figure 8.

Screenshots from Infinite Mario. From left to right, level types Overground, Underground and Castle, respectively.

Level is an introduction to the game to familiarize the subjects with the mechanics, e.g., move, jump, collect items. Levels and were designed to be regular Mario levels with a compelling and enjoyable challenge scale. Levels and were designed to be more stressful by including more enemies and several gaps that were wider than usual. The absence of power-ups, the number of challenges, i.e., enemies and wide gaps, and the fact that Mario is continuously in the small state should force the subjects to better time actions, e.g., jump, and constantly pay attention to the surroundings. These levels also use the Castle type, which is usually associated with “boss levels” in Super Mario (commonly more challenging). Finally, levels and have an available time of 80 s to be finished, a considerably lower value compared to 200 s in other levels. As a consequence, after 20 s of gameplay, the hurry up sound is played and the background music starts to play faster, a configuration that is likely to cause an emotional state of stress. In contrast, levels and were designed to be more boring. These levels include an auto-scrolling camera mechanic, which enables the camera to automatically traverse the level independently of Mario’s movements. The speed of the auto-scrolling camera was adjusted to a constant but slow pace. Additionally, the reduced number of interactable/collectable items, the existence of only a few terrain obstacles, as well as the absence of gaps, power-ups and enemies are likely to cause an emotional state of boredom. Furthermore, levels and are very similar visually, which might cause subjects to perceive level as a repetition of level . In that case, subjects might perceive level as even more boring, since the level topology is already known and the player is unable to move the camera at a faster pace.

As previously mentioned, the levels were adjusted and play-tested by game design experts. This ensured that the content of all levels and the constraints/modifications applied to them did not affect the subject’s perception of playing a clone of Mario. For instance, the order in which the levels were played, i.e., repeating the pattern of an overground, then an underground, and finally a castle level, was kept as an important element. It mimics the expected world progression of the original Mario game, where the final level of a particular world is usually a castle level with a boss. Finally, particular attention was paid to making the difficulty of the Infinite Mario levels as different as possible from the linear difficulty progression present in the three calibration games. The aim was to make Infinite Mario as similar to Super Mario as possible while respecting the content constraints mentioned previously.

5. Analysis and Procedures

The following sections describe how the data gathered in both studies, including the video recordings and the self-reported emotional states, were processed and analyzed to evaluate our proposed method.

5.1. Study 1

The aim of Study 1 is to evaluate the feasibility of using calibration games as emotion elicitation materials to train a neural network able to classify emotional states of stress and boredom. In this study, subjects played three calibration games, which were used both to train and evaluate an emotion classifier based on our proposed method. Additionally, Study 1 aims to assess how different features influence the classification process, for instance, whether the combined use of HR and FA features is better than the use of FA only. The assessment also included the use of HR data from a physical sensor as a classification feature, so that the impact on classification accuracy of using a physical sensor instead of rPPG could be checked.

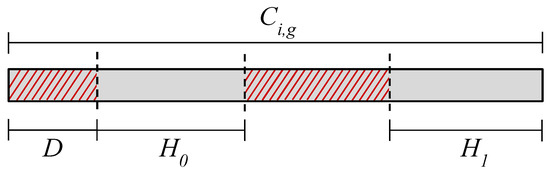

5.1.1. Data Pre-Processing

The pre-processing of video recordings involved the extraction of the parts containing the interaction with the games and the discarding of noisy frames. The process is illustrated in Figure 9. First, the periods in which the subjects played each of the available games were extracted from the video recordings. This resulted in three videos per subject, denoted as where s is the s-th subject and represents the game. Then, the initial D = 45 s of any given video were ignored because we assumed this period might not be ideal for emotion analysis, for two reasons. First, during that period, subjects are less likely to be in their usual neutral emotional state. They are more likely to be stimulated by the excitement of the initial contact with a game soon to be played, which interferes with any feelings of boredom. Second, subjects need a basic understanding of and experimentation with the game in order to assess whether it is boring or not. In our understanding, this is less likely to happen during the initial 45 s of gameplay than afterwards. After the removal of D seconds, the remainder of each video was divided into three segments, from which the first and the last were selected as and , respectively. Since calibration games were designed to be boring at the beginning and stressful at the end, segments and are more likely to represent the moments subjects perceived the games as being boring and stressful, respectively.

Figure 9.

Extraction of video segments and containing boring and stressful interactions, respectively, in the calibration games. Initial D seconds of any video are ignored and the remainder is divided into three segments, from which the first and the last ones are selected. Stripes highlight discarded video segments.
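The segment selection illustrated in Figure 9 can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name `select_segments` is hypothetical, the three segments are assumed to be of equal length (the text only states that the remainder is divided into three segments), and segments are expressed as start and end times in seconds rather than as video frames.

```python
def select_segments(duration_s, skip_s=45.0):
    """Return the (boring, stressful) segments of one gameplay video.

    Each segment is a (start, end) tuple in seconds. The initial
    `skip_s` seconds are ignored, the remainder is split into three
    segments (assumed here to be of equal length), and the first and
    last ones are kept; the middle segment is discarded.
    """
    if duration_s <= skip_s:
        raise ValueError("video is shorter than the ignored initial period")
    third = (duration_s - skip_s) / 3.0
    boring = (skip_s, skip_s + third)             # first segment
    stressful = (duration_s - third, duration_s)  # last segment
    return boring, stressful


# Hypothetical 345 s recording of one calibration game.
boring, stressful = select_segments(345.0)
print(boring, stressful)
```

With a 345 s recording, 300 s remain after the initial period is ignored, so each segment spans 100 s.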

The pre-processing of the recordings resulted in six video segments per subject: three segments (one per game) and three segments (one per game). A given game i contains 20 pairs of and video segments (20 segments , one per subject, and 20 segments , one per subject). Regarding all the subjects and games, there are 60 pairs of and video segments (3 games × 20 subjects, resulting in 60 segments and 60 segments ). Subject 9 had problems playing the Platformer game; therefore, all segments and from that subject were discarded. Consequently, there are 57 pairs of and video segments in total after the pre-processing (3 games × 19 subjects).

The emotion elicitation design of the calibration games, where and represent boring and stressful interactions, respectively, is used to label samples to train and test the model. Samples from the part were labeled as boredom and samples from the part were labeled as stress. This process takes into account the levels of boredom and stress reported by the subject, with the aim of ensuring a correct labeling of the samples, based on video segments that are more likely to accurately reflect the emotional state self-reported by the subjects.

5.1.2. Features Extraction and Calculation

Features used in the classification model were extracted remotely via the analysis of the video segments of each subject. In total, nine features, denoted to , were used in the process. Detailed information regarding how features to were extracted, calculated, and aggregated, as well as the reasoning for their use, is presented in Section 2.2. Feature , similarly to feature , represents HR activity; however, it is calculated on the basis of the HR measurements performed by a physical sensor, i.e., a watch, worn by subjects. It is important to highlight that feature is not part of our proposed method for remote detection of emotions. It was used as a classification feature in Study 1 to evaluate to what extent an HR measurement that is not based on an rPPG estimation, i.e., one from a physical sensor in this case, affects the emotion classification.

5.1.3. Training and Evaluation of an Emotion Classifier

The classification procedure uses the previously mentioned features to and a neural network trained to identify two emotional states: boredom and stress. Both the training and evaluation of the neural network are performed in a user-tailored fashion: data from a given subject are used to train and evaluate the emotion classification of that given subject . Figure 10 illustrates the process.

Figure 10.

Iteration of a 3-fold Leave-One-Session-Out Cross-Validation performed on the gaming session of a given subject with three games, i.e., A, B and C. Data of two calibration games, e.g., A and B, are used to train the machine learning model, while data of the third calibration game, e.g., C, are used to evaluate the model.

Leave-One-Session-Out Cross-Validation (LOSOCV) is used to evaluate each trained user-tailored model, as illustrated in Figure 10. In LOSOCV, the data from one session instance are left out and a model is constructed on the data from all other session instances. In the present study, a given subject played three calibration games, e.g., A, B and C; thus, the data from one calibration game are left out and a model is trained on the data of the other two calibration games for that subject . This is repeated for all three calibration games of that subject . Consequently, the use of LOSOCV produces three models per subject, resulting in three measurements of classification accuracy per subject, denoted , where represents each evaluated model. The final classification accuracy for a given subject , named , is calculated as the mean of the values obtained from the iterations in the LOSOCV. In other words, each subject contributes a single classification accuracy value , which is the mean classification accuracy of the subject’s three models in the LOSOCV iterations.
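The LOSOCV procedure for a single subject can be sketched as follows. The sketch is not the classifier used in this work: a one-feature threshold rule (`train_threshold`, `predict_threshold`) stands in for the neural network, and the sample values, which loosely resemble HR readings, are invented for illustration. All function names are hypothetical.

```python
from statistics import mean


def loso_cv(sessions, train_fn, predict_fn):
    """Leave-one-session-out evaluation for one subject.

    `sessions` maps a game name to a list of (feature, label) samples.
    Returns the accuracy of each fold and their mean.
    """
    fold_accuracy = {}
    for held_out, test_samples in sessions.items():
        # Train on every session except the held-out one.
        train_samples = [s for game, samples in sessions.items()
                         if game != held_out for s in samples]
        model = train_fn(train_samples)
        correct = sum(predict_fn(model, x) == y for x, y in test_samples)
        fold_accuracy[held_out] = correct / len(test_samples)
    return fold_accuracy, mean(fold_accuracy.values())


def train_threshold(samples):
    """Stand-in model: midpoint between the two class means."""
    boredom = [x for x, y in samples if y == "boredom"]
    stress = [x for x, y in samples if y == "stress"]
    return (mean(boredom) + mean(stress)) / 2.0


def predict_threshold(threshold, x):
    return "stress" if x > threshold else "boredom"


# Invented samples for one subject and three calibration games.
sessions = {
    "A": [(60, "boredom"), (64, "boredom"), (80, "stress"), (84, "stress")],
    "B": [(62, "boredom"), (66, "boredom"), (78, "stress"), (82, "stress")],
    "C": [(70, "boredom"), (74, "boredom"), (76, "stress"), (90, "stress")],
}
fold_accuracy, subject_accuracy = loso_cv(sessions, train_threshold,
                                          predict_threshold)
print(fold_accuracy, subject_accuracy)
```

Each fold yields one accuracy value, and the subject contributes the mean of the three values, mirroring the aggregation described above.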

In the training process of each model, which is performed three times per user, the hyperparameters of each neural network, e.g., number of neurons, are optimized using random search [109]. A 10-fold cross-validation repeated three times is applied, which divides the dataset into 10 subsets, each of which is left out in turn while the model is trained on all the others. The process is repeated three times and the final metric for the model is the mean over the repeats. The area under the ROC curve (AUC) is used as the metric to select the best model.
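A minimal sketch of random search combined with a repeated 10-fold cross-validation is given below. It only illustrates the control flow. The search space, the number of sampled configurations (`n_iter`), and the scoring function `toy_score` are assumptions made for the example; in the procedure described above, the scoring step would train a neural network on the training indices and return the AUC on the validation indices.

```python
import random
from statistics import mean


def repeated_kfold(n_samples, k=10, repeats=3, seed=0):
    """Yield (training, validation) index lists for repeated k-fold CV."""
    rng = random.Random(seed)
    for _ in range(repeats):
        indices = list(range(n_samples))
        rng.shuffle(indices)
        folds = [indices[i::k] for i in range(k)]
        for i, validation in enumerate(folds):
            training = [j for f, fold in enumerate(folds) if f != i
                        for j in fold]
            yield training, validation


def random_search(space, score_fn, n_samples, n_iter=20, seed=0):
    """Sample `n_iter` random configurations and keep the best mean score."""
    rng = random.Random(seed)
    best_params, best_score = None, float("-inf")
    for _ in range(n_iter):
        params = {name: rng.choice(values) for name, values in space.items()}
        # Same splits for every configuration, so scores are comparable.
        scores = [score_fn(params, training, validation)
                  for training, validation in repeated_kfold(n_samples,
                                                             seed=seed)]
        candidate = mean(scores)
        if candidate > best_score:
            best_params, best_score = params, candidate
    return best_params, best_score


def toy_score(params, training, validation):
    # Placeholder for "train on `training`, return AUC on `validation`".
    return (1.0 - abs(params["neurons"] - 8) / 32
            - abs(params["learning_rate"] - 0.01))


# Hypothetical search space; the actual hyperparameters are not listed here.
space = {"neurons": [2, 4, 8, 16, 32], "learning_rate": [0.001, 0.01, 0.1]}
splits = list(repeated_kfold(40))
best_params, best_score = random_search(space, toy_score, n_samples=40)
print(len(splits), best_params, best_score)
```

With 10 folds and three repeats, every candidate configuration is scored on 30 train/validation splits, and the mean of those scores decides which configuration is kept.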

As previously mentioned, the calibration games were perceived as boring at the beginning and stressful at the end. As a consequence, it is assumed that the subject’s emotional state in and is boredom and stress, respectively. Based on this assumption, training and evaluation data obtained from the video segments in and were labeled as boredom and stress, respectively.

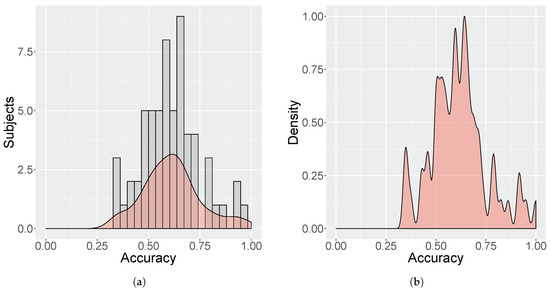

5.1.4. Analysis

In order to test the effectiveness of the neural network in classifying samples as either boredom or stress, all of the trained neural networks were evaluated in conjunction. As described in the previous section, each subject’s model was evaluated using LOSOCV, which produced a classification accuracy for any given subject . The minimum, maximum and mean values of were calculated as a metric for accuracy. In order to better contextualize the classification results, the same process was also applied to the other three metrics obtained during the LOSOCV evaluation: Precision, Recall and F1 score (F1 score should not be confused with , the mouth outer facial feature used in the model). Precision accounts for the correctly predicted positive observations of the total predicted positive observations, e.g., of all the samples classified as stress, how many were indeed labeled as stress. Recall accounts for the correctly predicted positive observations of all the available observations in a class, e.g., of all the available samples labeled as boredom (or stress), how many were actually classified as such. Finally, the F1 score is the harmonic mean of Precision and Recall.
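For reference, the four metrics can be computed from the predictions of one evaluation fold as sketched below. The function name `binary_metrics` and the example labels are hypothetical, stress is arbitrarily treated as the positive class, and the F1 score is computed with its usual definition as the harmonic mean of Precision and Recall.

```python
def binary_metrics(y_true, y_pred, positive="stress"):
    """Accuracy, precision, recall and F1 for a two-class problem."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    tn = sum(t != positive and p != positive for t, p in pairs)

    accuracy = (tp + tn) / len(pairs)
    # Guard against empty denominators, e.g., no sample predicted positive.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


# Invented labels: one stress sample and one boredom sample are misclassified.
y_true = ["stress"] * 4 + ["boredom"] * 4
y_pred = ["stress", "stress", "stress", "boredom",
          "stress", "boredom", "boredom", "boredom"]
metrics = binary_metrics(y_true, y_pred)
print(metrics)
```

In this example there are three true positives, one false positive and one false negative, so all four metrics equal 0.75.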

In order to better understand the contribution of each feature for the classification process, the training/evaluation process mentioned previously was also performed using different feature sets. Each of these different feature sets was evaluated in an independent test, denoted . Table 5 shows tests and the corresponding feature sets used in the process.

Table 5.

Tests and their respective feature sets.

Tests MULTI_R and MULTI_G use a multifactorial set of features for their neural network, where facial and HR information is used in combination. The difference between MULTI_R and MULTI_G is that the former uses rPPG estimated HR, while the latter uses the HR obtained from the physical sensor. Test FACE uses a set of features based solely on facial information. Finally, tests HR_R and HR_G use only HR information as a feature. Similar to MULTI_R and MULTI_G, tests HR_R and HR_G use HR readings from rPPG estimations and a physical sensor, respectively.

Since the calibration games used in the experiment induce emotional states of boredom and stress, this difference should enable a trained neural network to properly classify evaluation samples as either boredom or stress. Additionally, the use of a multifactorial approach, where facial analysis and HR information are used in combination instead of either one alone, is expected to produce better classification results [8]. Based on those expectations, the following hypotheses are stated:

- : a user-tailored neural network using a multifactorial feature set, i.e., facial and HR features, performs with greater accuracy than a user-tailored neural network using facial features only;

- : a user-tailored neural network using a multifactorial feature set, i.e., facial and HR features, performs with greater accuracy than a user-tailored neural network using HR features only.

Hypotheses and were tested by performing a Wilcoxon Signed Ranks test on all values of the two competing tests . As previously mentioned, the use of LOSOCV produces 57 accuracy measurements per test .
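To make the comparison of two competing tests concrete, a Wilcoxon Signed Ranks test on paired accuracy values can be sketched as below. Several choices are assumptions made for this example rather than details of the analysis: the p-value is two-sided, it uses the normal approximation with a tie correction and no continuity correction, pairs with zero difference are dropped, and the accuracy values (in percent) are invented. In practice, a library routine such as `scipy.stats.wilcoxon` would normally be preferred.

```python
import math


def wilcoxon_signed_rank(a, b):
    """Two-sided Wilcoxon signed-rank test for paired samples.

    Returns (W, p), where W = min(W+, W-) and p comes from the
    normal approximation with a correction for tied ranks.
    """
    diffs = [x - y for x, y in zip(a, b) if x != y]  # drop zero differences
    n = len(diffs)
    if n == 0:
        raise ValueError("all paired differences are zero")

    # Rank the absolute differences, giving tied values their average rank.
    order = sorted(range(n), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * n
    tie_term = 0
    i = 0
    while i < n:
        j = i
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        average_rank = (i + j) / 2.0 + 1.0
        for k in range(i, j + 1):
            ranks[order[k]] = average_rank
        t = j - i + 1
        tie_term += t ** 3 - t
        i = j + 1

    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    w_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)
    w = min(w_plus, w_minus)

    mean_w = n * (n + 1) / 4.0
    var_w = n * (n + 1) * (2 * n + 1) / 24.0 - tie_term / 48.0
    z = (w - mean_w) / math.sqrt(var_w)
    p = math.erfc(abs(z) / math.sqrt(2.0))  # two-sided p-value
    return w, p


# Invented paired accuracies (percent) for two competing feature sets.
multifactorial = [70, 65, 80, 75, 60, 90, 85, 72]
face_only = [60, 66, 70, 70, 58, 80, 86, 65]
w, p = wilcoxon_signed_rank(multifactorial, face_only)
print(w, p)
```

The normal approximation is coarse for a sample as small as the one in this example; it is used here only to keep the sketch free of external dependencies.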

5.2. Study 2

The aim of Study 2 is to validate the proposed method for remote detection of emotions on a larger scale compared to Study 1. In Study 1, subjects interacted with three calibration games; in the training (or calibration) phase, two of those calibration games were used to train the emotion classifier, while the third calibration game was used in the testing phase. In Study 2, our method is evaluated using the same two phases, i.e., training (or calibration) and testing; however, the three previously described calibration games are used to train the emotion classifier, while a fourth game, i.e., Infinite Mario, is used for evaluation. During the training phase, the model is trained by applying a user-tailored approach, i.e., data from subject playing three calibration games (Mushroom, Platformer and Tetris) are used to create model . The result of the training phase is a user-tailored model, i.e., model , a trained neural network for use on subject . The testing phase occurs in a game session involving subject playing the non-calibration game Infinite Mario. During the testing phase, the signals of subject are remotely acquired and fed into the previously trained model , which outputs the estimated emotional state of subject for that particular testing game. In summary, the aim of this experiment is to answer the following research question: how accurate is an emotion detection approach that uses remotely acquired signals, i.e., heart rate and facial actions, as input to a machine learning model, i.e., a neural network, that is trained on a user-tailored basis (one subject produces one model) using calibration games as emotion elicitation? The overall goal of this experiment is to analyze the emotion classification accuracy during the testing phase, which is more similar to a real gaming session involving a commercial game, for instance. The following sections present a detailed explanation of the experiment, including how data were processed and analyzed.

5.2.1. Data Preprocessing